Abstract

Enabled by the remarkable dexterity of the human hand, specialized haptic exploration is a hallmark of object perception by touch. Haptic exploration normally takes place in a spatial world that is three-dimensional; nevertheless, stimuli of reduced spatial dimensionality are also used to display spatial information. This paper examines the consequences of full (three-dimensional) versus reduced (two-dimensional) spatial dimensionality for object processing by touch, particularly in comparison with vision. We begin with perceptual recognition of common human-made artefacts, then extend our discussion of spatial dimensionality in touch and vision to include faces, drawing from research on haptic recognition of facial identity and emotional expressions. Faces have often been characterized as constituting a specialized input for human perception. We find that contrary to vision, haptic processing of common objects is impaired by reduced spatial dimensionality, whereas haptic face processing is not. We interpret these results in terms of fundamental differences in object perception across the modalities, particularly the special role of manual exploration in extracting a three-dimensional structure.

Keywords: perception, haptics, face processing, inversion, object recognition

1. Haptic object recognition and the role of exploration

Some 25 years ago, we showed that people are highly adept at recognizing real three-dimensional common objects by touch [1]. At the time, most of the research on touch focused on characterizing this modality in terms of its sensitivity and acuity (e.g. spatial and temporal thresholds). In terms of spatial processing, lay-persons and scientists alike considered touch as no more than a highly inferior form of vision. The demonstration that real three-dimensional objects were recognized so effectively highlighted the human haptic system as a complex information processor and showed that cognitive processing associated with touch, including object recognition, deserved considerably more attention.

In this initial study, it was also apparent that the perceiver was actively involved in information acquisition: people not only held the objects in their hands, but they also rubbed, tapped and manipulated them. This led us to systematically investigate how the hand movements that occurred during object identification were related to the diagnostic properties of objects—attributes that were critical to placing the objects in a known category. Ultimately, we identified a set of haptic ‘exploratory procedures’, that is, stereotyped hand movements that are linked to specific object properties [2]. For example, to learn about an object's surface texture, people execute a procedure called ‘lateral motion’, where the hand moves tangentially relative to the surface of the object. An exploratory procedure is not just the preferred means of exploration, when a particular object property is sought; in fact, it is the most accurate way to acquire information about that property.

A procedure called contour following, in which the fingers move along the edges or curved surface of an object, proved to be optimal, as well as necessary, for extracting precise shape. However, contour following tends to be quite slow relative to other procedures, and the precision with which edge information can be extracted in this way is limited. Thus, the sense of touch is well suited to acquiring information about material properties of objects, but not about precise geometry [3–5].

The ecological reality, however, is that shape is the principal basis for object categorization, particularly at the basic level [6], where categories are distinguished by their shape and associated function-related motor movements (e.g. chair and table). Any perceptual system that aims to categorize objects must extract shape information. The field of vision has seen much controversy with respect to the nature of the shape representations that mediate object recognition and whether they are perspective-independent [7] or whether perspective-specific views are stored (e.g. [8]). Regardless, both theories share the assumption that geometric information is critical. The documented difficulty of extracting precise geometry by means of haptic exploration then leads to a conundrum: if shape is essential for object categorization, how can objects be named so quickly and accurately by touch in the absence of vision, as previously demonstrated [1]? Because structural differences are maximized at the boundaries between object categories (at least at the basic level), precise contour extraction often proves unnecessary. People attempting to recognize objects by touch often begin with an exploratory procedure called enclosure (grasping), which provides structural information that may by itself be sufficient to determine the object's identity [9].

Up to this point, touch has been characterized as both active and purposive, and when coupled with appropriate manipulation, as highly informative. We use our remarkably dextrous hands to manipulate, thereby permitting us to position our exploring effectors in three-dimensional space so as to contact the surfaces and contours of objects that interest us, wherever they may lie (within reach, of course). However, this only becomes possible when objects afford free and unrestricted exploration in the three-dimensional world. We now turn to the main topic of this paper, the role of spatial dimensionality in object perception. We will draw two contrasts—between the modalities of vision and touch, and between non-face objects and facial stimuli—in assessing whether, and how, the reduction of dimensionality in a stimulus object affects perception. Our foregoing review of haptic exploration clearly predicts that dimensionality matters: to the extent that reduced dimensionality impedes free exploration that would extract shape information, haptic object processing should be compromised. The effects go beyond peripheral stimulus analysis, however: objects rendered as raised lines may afford the extraction of contour, but central processes that convert these two-dimensional contours to three-dimensional shapes appear not to function through the haptic channel.

2. Spatial dimensionality and object processing in vision versus touch

A distinction that has attained some prominence in haptic research is made between objects that are three-dimensional and can be explored freely, and two-dimensional (flat) objects or their raised depictions, which preclude unconstrained dextrous exploration for purposes of perception. Research on visual object perception tends to present stimuli as two-dimensional displays (e.g. photographs and line drawings); research that uses stereo viewing or real, physical scenes and objects has been relatively specialized. In current studies of haptic object perception, however, this balance is essentially reversed. This difference between the research predilections across sensory modalities is not merely pragmatic; it stems from underlying and fundamental differences between the two perceptual domains.

Visual perception begins by transforming light from the three-dimensional physical world into a two-dimensional retinal image. As a result, early and mid-level processes (e.g. grouping and parsing) are devoted in large part to reconstructing that three-dimensional world. In marked contrast, haptic object perception originates through exploratory contacts within that same three-dimensional world. Objects at different depth planes are encountered by appropriate joint angles, and muscle/tendon/joint receptors accordingly signal such differences in depth. If, however, a two-dimensional object depiction, which is rendered as raised surfaces or edges, is presented for haptic exploration, the same receptors now register that they reside on a single depth plane.

The efficiency with which visual processes recover three-dimensional representations from the two-dimensional retinal projection allows objects depicted in two-dimensional pictures to be named remarkably fast and accurately. A single view can be recognized under an exposure of approximately 100 ms or less (e.g. [10,11]). The most important type of cue to an object's identity in a two-dimensional display is edge information; surface characteristics such as colour, brightness and texture are of limited value [12–15].

While visual processing of real objects intrinsically originates with two-dimensional images, touch takes place inherently within the three-dimensional world. When we began our study of haptic object recognition, this fundamental modality difference was known, but its importance to haptic perception was overlooked. An important observation in our early work was that raised-line pictures of familiar, common objects (e.g. comb and carrot) that were manually explored were extremely difficult to recognize, even by subjects who had visual experience and when lengthy exploration times (i.e. 1.5 min) were permitted [16]. The low recognition rate was all the more striking, given that real common objects are haptically recognized quickly and essentially without error [1].

Such poor recognition of raised-line object depictions challenged a naive model that we called visual mediation. It proposed that haptically discernible lines can be transferred to something like a ‘mind's eye’ and interpreted by the same processes that occur with mid-level vision [17]. However, there is a fundamental disconnect here: even when the lines and their spatial relationships are demonstrably encoded by touch, the object itself is not recognized. In fact, raised-line drawings that are unrecognizable without vision can be drawn by the same subjects, rendering them immediately known [18]! This phenomenon clearly demonstrates that the problem is not sensory but central.

At least one source of central limitation probably resides in the demands for spatio-temporal integration that are intrinsic to the recognition of raised-line drawings by touch. The two-dimensional nature of these depictions dictates sequential exploration. Studies of visual object recognition show that piecemeal viewing of an object over time can dramatically interfere with object naming, even when exploration of the object's contours is under the observer's control. For example, Loomis et al. [19] yoked visual exposure of an object to haptic exploration with the finger. Recognition then averaged only 38 per cent after almost 120 s of exploration, equivalent to the rate achieved with haptic exploration alone. That vision and touch led to comparably low performance, once spatio-temporal demands were equated, implicates those demands as a core difficulty, at a central level, in recognizing two-dimensional objects by touch.

The importance of access to three-dimensional structure in haptic recognition of common objects can be seen by a summary of several manipulations that successively reduced access to spatial information by constraining the exploring hand [20]. Naming accuracy decreased, and response time increased, successively across the following conditions: bare hand (approx. 100% accuracy in 3 s), gloved hand, index finger only, hand splinted to preclude flexion, index finger splinted, index finger with rigid sheath and hand holding a rigid probe with spherical tip. The accuracy and time for the last two conditions were near those typically observed for two-dimensional depictions. Thus, two-dimensional objects can be seen as the lowest extreme on a continuum representing access to three-dimensional structure, where free exploration of real objects with the bare hand represents the optimum.

To summarize, full three-dimensional information affords rapid and accurate access to object identities by touch. If exploration of three-dimensional objects is constrained to impede access to structure, recognition rates decline dramatically. Similarly, reduced dimensionality in the portrayal of haptically perceived objects severely constrains exploration. Moreover, the sequential pick-up of two-dimensional contours necessitates a process of spatio-temporal integration, which is arduous and prone to failure in vision as well as touch. As a result, dimensional reduction has a strong negative impact on haptic object recognition.

3. Spatial dimensionality: orientation dependence of object representations in vision and touch

As we have noted, our purpose here is to examine the consequences of reduced spatial dimensionality for object processing by touch, particularly in comparison with vision. In essence, our goal is to assess what in statistics would be characterized as a dimensionality × modality interaction: is there an effect of stimulus spatial dimensionality, and does it vary with modality? In this section, we add another variable: the orientation of objects within a spatial ‘frame of reference’. Frames of reference establish the coordinates within which objects and their contours are localized. Accordingly, they carry constraints on the spatial transformations that require changes in coordinate descriptions. From a body of studies on mental spatial transformations, beginning with the classic work of Shepard & Cooper (see [21]), it is well known that coordinate changes impose cognitive demands. Thus, the impact of rotations or perspective shifts on accuracy and/or response time is indicative of the frame of reference in which an object is represented.

As noted above, theories of visual object recognition have provided two opposing views of object representation, perspective-invariant volumetric primitives versus stored views. Effects of perspective shift and rotation have been used to test the theories. A review of the rather complex results is beyond the limits of this paper (e.g. [22–24]). However, it is noteworthy that the literature has tended not to contrast two- and three-dimensional object presentations.

Haptic perception presents an interesting context in which to ask about the orientation specificity of object representations, particularly objects of contrasting dimensionality. There is a fundamental difference between two-dimensional object depictions and three-dimensional objects pertaining to the stability of frames of reference under haptic exploration. A three-dimensional object that falls within the scale of the hand can be grasped, lifted, rotated and moved at will in the world, as typically occurs during manual exploration. When explored, it has a time-varying description in three-dimensional coordinates relative to the body or the world, but the object-centred description is stable.

In contrast, a two-dimensional representation is stable only within the coordinate frame of its depiction. If the viewer cannot achieve a representation within that frame, independent of his/her body, haptic identification should suffer when the depiction is moved about (like a normal object). Scocchia et al. [25] found that raised-line drawings were named less effectively under haptic exploration when the subject's head was turned to direct gaze 90° away from the vertical axis of the depicted orientation, suggesting an orientation-specific representation of the depiction relative to the viewer's head.

Perhaps because of the ease with which hand-held objects can be re-oriented, few studies have asked whether three-dimensional common objects are more readily named by touch when in a canonical orientation than when rotated. In one study, subjects oriented common objects, so they would be optimally informative for learning and memory [26]. The preferred orientation was upright (if there was a base plane), with the longer axis either along the frontal or sagittal plane. Although the result indicates a favoured view for prospective memory judgements, perceptual (specifically, haptic) identification rates for different orientations—of primary interest here—were not tested for common objects (also see evidence for orientation dependence in haptic memory tasks in [27–29]).

We note some evidence of orientation independence in three-dimensional object representation in our study, where common objects were queried by name for a yes/no response [9]. In one such task, the object was placed in the subject's palm with an uninformative surface facing downward; in a variant, a stable base surface of the object was placed on the table between the two hands, which then moved to enclose it. The sequence of exploratory activity was remarkably similar across the two situations, offering little evidence that the mode of presentation to the hand, including object orientation, markedly affects the process of basic-level naming of familiar three-dimensional objects.

Further data are needed to assess whether real, common objects are haptically represented in rotation-invariant ways, and whether departures from canonical orientation have greater effect on two-dimensional drawings than three-dimensional objects. In §5, we will return to orientation-specific representations, but in the stimulus domain of faces, where it has been more systematically addressed.

4. Spatial dimensionality: face processing in vision and touch

Next, we revisit the issues raised above regarding the role of dimensional structure in object recognition, but specifically in relation to face processing. Faces constitute a class of objects that have been of longstanding interest in the study of perceptual identification, both because of their social significance and the evidence that they are processed by distinct visual mechanisms. At a subordinate level, faces can be categorized in terms of personal identity. At a basic level, they are commonly classified in terms of the emotion expressed, as evidenced by the occurrence of common names for universal and distinct emotion categories (sadness, happiness, etc.) and by the clear structural cues that are invariant within emotion categories and that differ among them [30].

Until quite recently, it was not appreciated that live faces could also be recognized by touch, both at the level of personal identity [31] and emotion [32]. Subsequently, this capability has been extended to the recognition of rigid three-dimensional facemasks and two-dimensional facial depictions extracted from photographs. Figure 1 shows examples from these three stimulus sets used in our research.

Figure 1.

Examples of facial stimuli expressing happiness, as portrayed by a single actor. (a–c) Live face, facemask, two-dimensional line drawing.

A review of similarities and differences between vision and touch with respect to face processing is beyond the scope of this paper (see [33]). Here, we ask whether reduced spatial dimensionality has an impact on the haptic identification of faces as individuals and as affective expressions, as occurs with other natural objects and human-made artefacts. This question is raised in the context of a variety of findings in visual perception that point to fundamental differences in processing faces versus other common objects. Altering the contrast of pictures of faces [34] or inverting them [35,36] disrupts the recognition of identity; however, these manipulations have relatively little effect when non-face objects are involved. Double dissociations in face/object recognition have also been demonstrated: object agnosics can be spared deficits in face recognition [37], while some prosopagnosics are impaired when recognizing faces, but not non-face objects. (However, the idea that face processing involves specialized channels has been challenged, particularly by Gauthier et al. [38,39], who have suggested that face processing differs from that of other stimuli in that it involves greater expertise.)

In addition to the considerable neural and behavioural evidence for distinctiveness of face processing, faces are relatively unique physical objects with respect to their dimensionality and category structure. The basic shape of the head approximates a sphere, from which distinguishing features of identity and emotion protrude (cf. Marr's [40] idea of the 2-1/2D sketch, representing the depth and local orientation of points on a visible surface). The three-dimensional variation relative to the frontal plane of the head is limited, whereas three-dimensional variation in non-face objects is essentially unconstrained. Moreover, the categorical structure of faces differs from that of non-face objects. An important part of facial processing, identity recognition, involves subordinate-level categorization, where fine structural discriminations are made. If one considers emotional face categories to be basic level, the structural cues that distinguish them still tend to be less salient than those that differentiate basic-level non-face categories, like table and chair.

Given the uniqueness of facial structure, along with documented processing differences, it is not obvious whether faces will parallel the effects we reviewed above for non-face objects; that is, reduced spatial dimensionality impairs haptic perceptual recognition, but not visual. As was reviewed in §2, recognition of common non-face objects declines as access to three-dimensional structure is reduced by constraining exploration. The limited structural variation intrinsic to facial features may similarly constrain access to information content in the third dimension. We know that common non-face objects with minimal three-dimensional structure are not as affected as others by being reduced to two-dimensional depictions [16]. If reducing spatial dimensionality does not severely affect the information content in faces, its impact on face processing should not be as great as on identifying other common objects.

We begin our review of dimensionality effects in face identification with vision, where the effectiveness of two-dimensional displays found with non-face objects is clearly replicated. With visual exposure to two-dimensional facial displays, most people have remarkable capacities to recognize individuals and to process basic emotions.

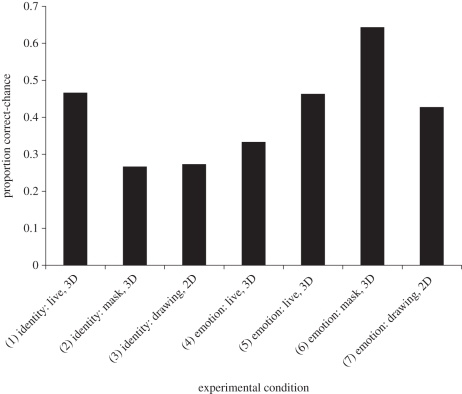

To assess whether two-dimensional depictions enable face processing through touch as effectively as three-dimensional, we turn to a series of studies in which facial identity or emotion is identified, conducted by us and collaborators. As the studies vary with respect to the number of response alternatives and, hence, the level of chance performance, we show the increment in accuracy relative to chance in figure 2. Although performance was significantly above chance in all cases, accuracy does not compare with the essentially error-free naming of non-face objects (where the number of response alternatives makes chance essentially zero).

Figure 2.

Adjusted performance for seven studies where face identity or emotion was reported after subjects explored live faces, rigid facemasks or raised-line drawings (2D, two-dimensional; 3D, three-dimensional). (1) [31]; (2) [41]; (3) [42]; (4) [32]; (5) [43]; (6) [44] and (7) [45].

With respect to facial identity, after only brief training people are able to haptically identify individuals portrayed in three-dimensional live faces [31] and three-dimensional rigid facemasks [41] at levels well above chance (match-to-sample task with three alternatives). McGregor et al. [42] measured the ability to identify two-dimensional raised-line drawings of the eyes, nose and mouth outlines transferred from facial photographs. After initial exposure to six unique face/name pairings, recognition in a naming test trial (six-alternative choice) achieved only 33 per cent accuracy; accuracy improved to 52 per cent after five blocks of training with feedback. When adjusted for chance, this improvement appears fairly similar to levels achieved with the three-dimensional live face and facemask stimuli (direct statistical comparisons are not possible, given the different tasks). If so, the effects of reducing spatial dimensionality on haptic recognition of facial identity would appear to differ from those observed with non-face common objects, which are recognized far better as three-dimensional objects than as two-dimensional raised-line depictions, even when material cues are precluded (93% versus 30% for three- and two-dimensional objects, respectively [46]).
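The chance adjustment referred to above can be illustrated with a short Python sketch. It assumes that the 'increment in accuracy relative to chance' is simply accuracy minus the guessing rate 1/n for an n-alternative task; the exact formula is not stated here, so this is an assumption, and a normalized variant is included for comparison. The function names are illustrative only. The inputs are the 33 and 52 per cent naming accuracies reported for the six-alternative task.

```python
def increment_over_chance(accuracy, n_alternatives):
    """Accuracy minus the guessing rate 1/n (assumed form of the adjustment)."""
    return accuracy - 1.0 / n_alternatives


def normalized_over_chance(accuracy, n_alternatives):
    """Alternative (also an assumption): rescale so chance maps to 0 and perfect to 1."""
    chance = 1.0 / n_alternatives
    return (accuracy - chance) / (1.0 - chance)


# Six-alternative naming of raised-line faces: before and after training.
initial = increment_over_chance(0.33, 6)
trained = increment_over_chance(0.52, 6)
print(f"increment over chance: initial {initial:.3f}, trained {trained:.3f}")
print(f"normalized: initial {normalized_over_chance(0.33, 6):.3f}, "
      f"trained {normalized_over_chance(0.52, 6):.3f}")
```

Either form places tasks with different numbers of response alternatives on a common scale; they differ only in whether the remaining headroom above chance is taken into account.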

With respect to the haptic recognition of primary facial emotions, there is also little evidence of a decrement in performance for two-dimensional depictions relative to three-dimensional stimuli (live faces [32,43]; three-dimensional facemasks [44]; two-dimensional facemasks [45]). These studies asked subjects to classify facial emotions into the six categories thought to be universal [30]: anger, disgust, fear, happiness, sadness and surprise (in the two-dimensional study, neutral was also included as an alternative). Contrary to the hypothesis that dimensionality reduction might be detrimental, as occurs with common objects, performance was slightly more accurate with the two-dimensional faces than with either live face study, although one must be cautious about comparisons given the use of different tasks and actors. (Actor differences, in particular, are likely to be the basis for the different levels of performance in the two studies with live actors, conditions 4 and 5 in figure 2.)

Before concluding that spatial dimensionality has no effect on haptic face processing, however, we consider two other studies by our collaborators, one using live face displays [47] and the other using two-dimensional raised-line depictions [48]. Both compared performance when exploration was restricted either to the upper face (eyes/brows/forehead/nose) or to the lower face (nose/mouth/jaw/chin). For identifying faces visually, the eye region appears to be most informative (e.g. [49,50]). For processing emotion, however, the mouth region appears to be more important [51,52]. Consistent with the latter findings, in both studies where touch was restricted, exploration of the lower region of the face produced significantly more accurate emotion identification.

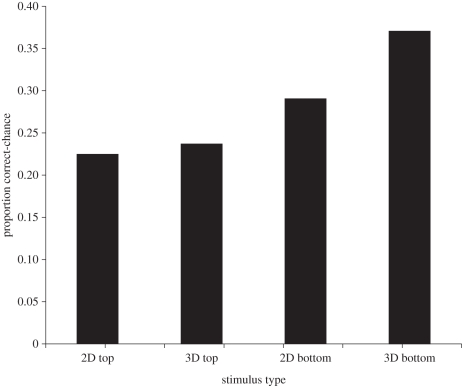

These studies offer us the opportunity to examine whether any advantage accruing from three-dimensionality varies with the intrinsic information content of the stimulus. Figure 3 shows the average proportion correct (adjusted for different guessing rates owing to the inclusion of neutral faces in the two-dimensional part-face study) for two- and three-dimensional top-only and bottom-only faces portraying the six canonical emotions. The decrement in accuracy for two-dimensional faces relative to three-dimensional was substantially greater for the more informative lower face region, and a t-test (using a pooled error term from the two studies) indicates that the effect of dimensionality for the lower face was significant (t(38) = 2.35, p < 0.05, two-tailed).

Figure 3.

Adjusted accuracy for identifying facial emotion from part-faces presented as two-dimensional (2D) raised-line drawings versus three-dimensional (3D) live faces. Three- and two-dimensional data are from [47] and [48], respectively.
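As a quick arithmetic check on the reported test for the lower face region, the following Python sketch computes the two-tailed p-value for a t statistic of 2.35 with 38 degrees of freedom. It integrates the Student's t density numerically with Simpson's rule so that only the standard library is needed; the function names, the integration limit and the step count are choices made for this illustration, not part of the original analysis.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df, upper=60.0, steps=20000):
    """Two-tailed p-value: twice the upper-tail area from |t| to `upper`.

    Uses Simpson's rule (steps must be even); the area beyond `upper`
    is negligible for the values considered here.
    """
    a = abs(t)
    h = (upper - a) / steps
    total = t_pdf(a, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(a + i * h, df)
    return 2 * total * h / 3


p = two_tailed_p(2.35, 38)
print(f"two-tailed p for t(38) = 2.35: {p:.3f}")
```

The resulting value falls below 0.05, in agreement with the significance level reported for the lower-face comparison.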

These findings moderate the conclusions from the whole-face data, which showed little effect of spatial dimensionality. The part-face data suggest that the reduction of information inherent in rendering faces in two dimensions does impair classification of facial emotion when the most critical information is isolated. Nonetheless, the effects are small relative to those found with haptic perception of common objects other than faces, as described previously.

On the whole, although our cross-experimental comparisons are somewhat speculative at this point, they suggest a different pattern for face and non-face objects with respect to the effects of spatial dimensionality. Faces offer no counterpart to the striking difference in efficacy of haptic identification between two-dimensional versus three-dimensional non-face objects. On this basis, should we conclude that this is another example of a distinct pattern of processing that faces exhibit relative to other object classes? The empirical difference is present: reduced dimensionality impairs haptic perception less in faces than other objects. However, the caveats raised above must be considered: faces, in comparison with most other common objects, may be less informative as three-dimensional stimuli, so that relatively little information is lost by reduction to two-dimensional.

We will return to the interpretation of dimensionality effects in faces versus non-face objects in the final discussion. First, we consider another variable that might differentiate faces from other non-face objects in two and three dimensions: the effects of orientation variation.

5. Spatial dimensionality: orientation dependence of facial representations in touch and vision

Although we have noted that persuasive research on the orientation specificity of haptically accessed representations of common objects other than faces is currently lacking (§3), extant data allow us to address this question specifically with respect to faces. The issue is of particular interest with face stimuli, given the substantial body of research on inversion effects. Since Yin's [35] classic demonstration that inversion (more specifically, rotation 180° in the picture plane) impairs face recognition, the visual presentation of inverted faces has been a common manipulation to test whether they are processed ‘configurally’ or feature by feature. Visual faces rendered in two dimensions are known to be disrupted by inversion more than two-dimensional portrayals of common objects (e.g. [36]). To our knowledge, comparable visual data on three-dimensional faces are lacking; however, we would expect typical inversion effects.

Data from our own work have assessed whether canonical orientation affects haptic face recognition, as it does vision; moreover, these studies allow us to compare the effects of inversion on two- and three-dimensional stimuli, in tasks where an individual person is to be identified by name, or where an emotional category is identified. The stimuli were two-dimensional raised-line drawings of critical features (abstracted from photographs), three-dimensional facemasks, or live faces. In the case of the emotion-recognition tasks, the stimuli were either live actors making emotional expressions or facemasks that were made from laser-scanned expressions of the same actors. The orientation is defined as upright if the facial stimulus is aligned with the face of the observer, whether the stimulus is presented in the frontal plane or ground plane. Figure 4 shows the relative effect of inversion, measured as the decrement in accuracy for inverted faces relative to upright, in proportion to upright (i.e. (upright-inverted)/upright). The inversion effects shown are statistically significant for all conditions, with the exception of the classification of emotional expressions on facemasks, where the null effect shows that the features used to identify facial emotions were as available in inverted as in upright depictions. There is clearly no tendency for the inversion effect to be greater for two- than three-dimensional faces.

Figure 4.

Decrement from inversion ((upright-inverted)/upright) for five studies where face identity or emotion was reported after subjects explored live faces, rigid facemasks or raised-line drawings (2D, two-dimensional; 3D, three-dimensional). The studies are (1) [42]; (2) [53]; (3) [45]; (4) [43] and (5) [44].
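The inversion measure plotted in figure 4, (upright-inverted)/upright, can be sketched in a few lines of Python. The accuracy values below are hypothetical and chosen only to show the arithmetic; they are not data from any of the studies cited, and the function name is illustrative.

```python
def inversion_decrement(upright, inverted):
    """Proportional loss in accuracy for inverted relative to upright faces."""
    if upright <= 0:
        raise ValueError("upright accuracy must be positive")
    return (upright - inverted) / upright


# Hypothetical accuracies (proportion correct), for illustration only.
example = inversion_decrement(upright=0.60, inverted=0.45)
print(f"inversion decrement: {example:.2f}")

# A value of zero means inverted faces are identified as well as upright ones.
no_effect = inversion_decrement(upright=0.50, inverted=0.50)
print(f"no inversion effect: {no_effect:.2f}")
```

Expressing the decrement in proportion to upright accuracy allows conditions with different baseline performance to be compared on one scale.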

An intriguing finding emerging from these studies is that the inversion effect tends to be invariant over manipulations that strongly influence baseline accuracy. In the study in which subjects learned to name two-dimensional raised-line facial drawings over five blocks with feedback, the decrement in performance attributable to inversion remained essentially constant. Moreover, across three different types of stimulus display (two-dimensional drawings, three-dimensional facemasks and live faces), while inverted faces were generally processed for emotional cues less well than upright faces, inversion did not moderate the relative accuracy with which different emotions are recognized. This suggests that if inversion affects basic processes that extract facial structure and relate it to stored categories, it does so at a level that is insensitive to both information content in the stimulus (e.g. intrinsic emotion distinctiveness) and robustness of categorical information (e.g. level of learning).

In §4, we found no systematic performance decrement in the haptic processing of either facial identity or emotion when spatial dimensionality was reduced from three to two dimensions. This section highlighted the finding that whether faces were two- or three-dimensional, the canonical orientation led to better haptic recognition of both identity and emotion. Moreover, there is no systematic variation in the magnitude of the inversion effect across studies using two- and three-dimensional stimulus displays. The inversion studies thus offer additional evidence that dimensional reduction has little effect on haptic face processing. Effects of spatial dimensionality on haptically presented faces tend to resemble those obtained with visually presented objects.

6. Summary and conclusions

This paper evaluates the effects of reducing the spatial dimensionality of stimuli portraying objects on perceptual processing by both haptic and visual modalities. We have characterized our question as concerning an interaction between dimensionality and modality. The issue of how dimensional reduction affects perception is particularly relevant to touch because of the purposive nature of haptic exploration, which is afforded by dextrous manipulation in real three-dimensional space. Thus, objects rendered in full three-dimensional space are particularly appropriate for haptic perception.

The data we described for objects other than faces confirm the interaction of interest: haptic identification performance is notably superior for three-dimensional objects, as opposed to two-dimensional raised-line depictions; in contrast, two-dimensional object depictions are recognized very well visually, even with very brief presentations. We have argued that two-dimensional depictions present a disadvantage to haptic observers for two reasons: they preclude exploratory procedures that extract volume directly, and the contour extraction that they do afford is temporally extended, necessitating spatio-temporal integration and imposing a heavy memory load. When people try to identify real objects, but under exploratory constraints that limit access to three-dimensional structure, performance can decline to the level observed with two-dimensional objects.

We next turned to faces as a potentially special case, given differences in the visual processing of faces and non-face objects. Is the interaction between dimensionality and modality maintained? In contrast to the effect of reducing spatial dimensionality on the haptic perception of other common objects, our data indicate surprisingly little effect on haptic face processing of either identity (subordinate level) or emotion (basic level). Only when exploration is restricted to a highly informative region (i.e. the mouth area for emotion recognition) is there some evidence of a decrement in perceiving two-dimensional faces.

Orientation effects extend the picture. Although rarely considered with respect to the haptic perception of non-face common objects, they are a matter of considerable interest within the context of haptic face recognition. Decrements in visual face processing under inversion are well documented, and similar effects have been shown for haptic recognition of facial identity and emotion. Despite the speculation that two-dimensional depictions might be more bound to fixed frames of reference (§2), we found little evidence of a greater inversion effect for haptically perceived two-dimensional (cf. three-dimensional) faces.

We now return to the issue of whether differential effects of dimensional reduction for non-face objects versus faces constitute evidence that faces are somehow a specialized channel. On this point, we are cautious. We note that people are not experts at haptic (cf. visual) face processing; in fact, few people routinely explore faces haptically, and it would be useful to obtain systematic data on face exploration as has been done for other domains [2]. Thus, the differences between faces and other objects may reflect familiarity. Moreover, as has been emphasized, dimensional reduction might not have as great an effect on geometric information in faces as in most other objects, and in touch as in vision, the categorical distinctions across both facial emotions and identities are subtle. Hence, our findings with regard to dimensionality are provocative, but by no means unequivocal in their implications.

The finding that haptic categorization of faces appears to be spared, in large part, from the negative consequences of reducing spatial dimensionality is of interest from an application perspective. Reduced-dimensionality (two-dimensional) displays may serve as a powerful way to portray faces, both their personal identity and their emotional content, to low-vision populations. This is supported by evidence that haptic recognition of facial emotions portrayed in two dimensions can be rapidly trained [54].

References

- 1. Klatzky R. L., Lederman S. J., Metzger V. 1985. Identifying objects by touch: an ‘expert system’. Percept. Psychophys. 37, 299–302 (doi:10.3758/BF03211351)

- 2. Lederman S. J., Klatzky R. L. 1987. Hand movements: a window into haptic object recognition. Cognitive Psychol. 19, 342–368 (doi:10.1016/0010-0285(87)90008-9)

- 3. Klatzky R. L., Lederman S. J., Matula D. E. 1993. Haptic exploration in the presence of vision. J. Exp. Psychol. Hum. Percept. Perform. 19, 726–743 (doi:10.1037/0096-1523.19.4.726)

- 4. Klatzky R. L., Lederman S. J., Reed C. 1987. There's more to touch than meets the eye: the salience of object attributes for haptics with and without vision. J. Exp. Psychol. Gen. 116, 356–369 (doi:10.1037//0096-3445.116.4.356)

- 5. Lederman S. J., Klatzky R. L. 1997. Relative availability of surface and object properties during early haptic processing. J. Exp. Psychol. Hum. Percept. Perform. 23, 1680–1707 (doi:10.1037/0096-1523.23.6.1680)

- 6. Rosch E. 1978. Principles of categorization. In Cognition and categorization (eds Rosch E., Lloyd B.), pp. 27–48 Hillsdale, NJ: Erlbaum

- 7. Biederman I. 1987. Recognition by components: a theory of human image understanding. Psychol. Rev. 94, 115–145 (doi:10.1037/0033-295X.94.2.115)

- 8. Tarr M. J., Bülthoff H. H. 1995. Is human object recognition better described by geon-structural-descriptions or by multiple-views? J. Exp. Psychol. Hum. Percept. Perform. 21, 1494–1505 (doi:10.1037/0096-1523.21.6.1494)

- 9. Lederman S. J., Klatzky R. L. 1990. Haptic classification of common objects: knowledge-driven exploration. Cognitive Psychol. 22, 421–459 (doi:10.1016/0010-0285(90)90009-S)

- 10. Potter M. 1976. Short-term conceptual memory for pictures. J. Exp. Psychol. Hum. Learn. Mem. 2, 509–522 (doi:10.1037/0278-7393.2.5.509)

- 11. Subramaniam S., Biederman I., Madigan S. 2000. Accurate identification but no priming and chance recognition memory for pictures in RSVP sequences. Vis. Cogn. 7, 511–535 (doi:10.1080/135062800394630)

- 12. Biederman I., Ju G. 1988. Surface vs. edge-based determinants of visual recognition. Cognitive Psychol. 20, 38–64 (doi:10.1016/0010-0285(88)90024-2)

- 13. Joseph J. E., Proffitt D. R. 1996. Semantic versus perceptual influences of color in object recognition. J. Exp. Psychol. Learn. Mem. Cogn. 22, 407–429 (doi:10.1037/0278-7393.22.2.407)

- 14. Humphrey G. K., Goodale M. A., Jakobson L. S., Servos P. 1994. The role of surface information in object recognition: studies of a visual form agnosic and normal subjects. Perception 23, 1457–1481 (doi:10.1068/p231457)

- 15. Wurm L. H., Legge G. E., Isenberg L. M., Luebker A. 1993. Color improves object recognition in normal and low-vision. J. Exp. Psychol. Hum. Percept. Perform. 19, 899–911 (doi:10.1037/0096-1523.19.4.899)

- 16. Lederman S. J., Klatzky R. L., Chataway C., Summers C. 1990. Visual mediation and the haptic recognition of two-dimensional pictures of common objects. Percept. Psychophys. 47, 54–64 (doi:10.3758/BF03208164)

- 17. Klatzky R. L., Lederman S. J. 1987. The intelligent hand. In The psychology of learning and motivation vol. 21 (ed. Bower G.), pp. 121–151 San Diego, CA: Academic Press

- 18. Wijntjes M. W. A., Lienen T., van Verstijnen I. M., Kappers A. M. L. 2008. Look what I've felt: unidentified haptic line drawings are identified after sketching. Acta Psychol. 128, 255–263 (doi:10.1016/j.actpsy.2008.01.006)

- 19. Loomis J., Klatzky R. L., Lederman S. J. 1991. Similarity of tactual and visual picture recognition with limited field of view. Perception 20, 167–177 (doi:10.1068/p200167)

- 20. Lederman S. J., Klatzky R. L. 2004. Haptic identification of common objects: effects of constraining the manual exploration process. Percept. Psychophys. 66, 618–628 (doi:10.3758/BF03194906)

- 21. Shepard R., Cooper L. 1982. Mental images and their transformations. Cambridge, MA: MIT Press

- 22. Biederman I., Kalocsai P. 1997. Neurocomputational bases of object and face recognition. Phil. Trans. R. Soc. Lond. B 352, 1203–1219 (doi:10.1098/rstb.1997.0103)

- 23. Hayward W. G., Tarr M. J. 1997. Testing conditions for viewpoint invariance in object recognition. J. Exp. Psychol. Hum. Percept. Perform. 23, 1511–1521 (doi:10.1037/0096-1523.23.5.1511)

- 24. Leek E. C. 1998. Effects of stimulus orientation on the identification of common polyoriented objects. Psychon. B. Rev. 5, 650–658 (doi:10.3758/BF03208841)

- 25. Scocchia L., Stucchi N., Loomis J. M. 2009. The influence of facing direction on the haptic identification of two-dimensional raised pictures. Perception 38, 606–612 (doi:10.1068/p5881)

- 26. Woods A. T., Moore A., Newell F. N. 2008. Canonical views in haptic object perception. Perception 37, 1867–1878 (doi:10.1068/p6038)

- 27. Lawson R. 2009. A comparison of the effects of depth rotation on visual and haptic three-dimensional object recognition. J. Exp. Psychol. Hum. Percept. Perform. 35, 911–930 (doi:10.1037/a0015025)

- 28. Newell F. N., Ernst M. O., Tjan B. S., Bülthoff H. H. 2001. Viewpoint dependence in visual and haptic object recognition. Psychol. Sci. 12, 37–42 (doi:10.1111/1467-9280.00307)

- 29. Lacey S., Peters A., Sathian K. 2007. Cross-modal object recognition is viewpoint-independent. PLoS ONE 9, 1–6 (doi:10.1371/journal.pone.0000890)

- 30. Ekman P., et al. 1987. Universals and cultural differences in the judgment of facial expression of emotions. J. Pers. Soc. Psychol. 53, 712–717 (doi:10.1037/0022-3514.53.4.712)

- 31. Kilgour A. R., Lederman S. J. 2002. Face recognition by hand. Percept. Psychophys. 64, 339–352 (doi:10.3758/BF03194708)

- 32. Lederman S. J., Klatzky R. L., Abramowicz A., Salsman K., Kitada R., Hamilton C. 2007. Haptic recognition of static and dynamic expressions of emotion in the live face. Psychol. Sci. 18, 158–164 (doi:10.1111/j.1467-9280.2007.01866.x)

- 33. Lederman S. J., Klatzky R. L., Kitada R. 2010. Haptic face processing and its relation to vision. In Multisensory object perception in the primate brain (eds Kaiser J., Naumer M.), pp. 273–300 New York, NY: Springer

- 34. Subramaniam S., Biederman I. 1997. Does contrast reversal affect object identification? Invest. Ophthalmol. Vis. Sci. 38, 998

- 35. Yin R. K. 1969. Looking at upside down faces. J. Exp. Psychol. 81, 141–145 (doi:10.1037/h0027474)

- 36. Tanaka J. W., Farah M. J. 1993. Parts and wholes in face recognition. Q. J. Exp. Psychol. 46A, 225–245

- 37. Behrmann M., Moscovitch M., Winocur G. 1994. Intact visual imagery and impaired visual perception in a patient with visual agnosia. J. Exp. Psychol. Hum. Percept. Perform. 20, 1068–1087 (doi:10.1037/0096-1523.20.5.1068)

- 38. Gauthier I., Curran T., Curby K. M., Collins D. 2003. Perceptual interference supports a non-modular account of face processing. Nat. Neurosci. 6, 428–432 (doi:10.1038/nn1029)

- 39. McGugin R. W., Gauthier I. 2010. Perceptual expertise with objects predicts another hallmark of face perception. J. Vis. 10, 1–12 (doi:10.1167/10.4.15)

- 40. Marr D. 1982. Vision. San Francisco, CA: Freeman (doi:10.1002/neu.480130612)

- 41. Kilgour A. R., Kitada R., Servos P., James T. W., Lederman S. J. 2005. Haptic face identification activates ventral occipital and temporal areas: an fMRI study. Brain Cogn. 59, 246–257 (doi:10.1016/j.bandc.2005.07.004)

- 42. McGregor T. A., Klatzky R. L., Hamilton C., Lederman S. J. 2010. Haptic classification of facial identity in 2D displays: configural vs. feature-based processing. IEEE Trans. Haptics 3, 48–55 (doi:10.1109/TOH.2009.49)

- 43. Direnfeld E. 2007. Haptic classification of live facial expressions of emotion: a face-inversion effect. Undergraduate honours thesis (available from present authors on request), Queen's University, Kingston, Ontario

- 44. Baron M. 2008. Haptic classification of facial expressions of emotion on 3-D facemasks and the absence of a haptic face-inversion effect. Undergraduate honours thesis (available from present authors on request), Queen's University, Kingston, Ontario

- 45. Lederman S. J., Klatzky R. L., Rennert-May E., Lee J. H., Ng K., Hamilton C. 2008. Haptic processing of facial expressions of emotion in 2-D raised-line drawings. IEEE Trans. Haptics 1, 27–38 (doi:10.1109/TOH.2008.3)

- 46. Klatzky R. L., Loomis J., Lederman S. J., Wake H., Fujita N. 1993. Haptic identification of objects and their depictions. Percept. Psychophys. 54, 170–178 (doi:10.3758/BF03211752)

- 47. Abramowicz A. 2006. Haptic identification of emotional expressions portrayed statically vs. dynamically on live faces: the effect of eliminating eye/eyebrow/forehead vs. mouth/jaw/chin regions. Undergraduate honours thesis, Queen's University, Kingston, Ontario

- 48. Ho E. 2006. Haptic and visual identification of facial expressions of emotion in 2-D raised-line depictions: relative importance of mouth versus eyes + eyebrow regions. Undergraduate honours thesis, Queen's University, Kingston, Ontario

- 49. Keating C. F., Keating E. G. 1982. Visual scan patterns of rhesus monkeys viewing faces. Perception 11, 211–219 (doi:10.1068/p110211)

- 50. Leder H., Candrian G., Huber O., Bruce V. 2001. Configural features in the context of upright and inverted faces. Perception 30, 73–83 (doi:10.1068/p2911)

- 51. Bassili J. N. 1979. Emotion recognition: the role of facial movement and the relative importance of upper and lower areas of the face. J. Pers. Soc. Psychol. 37, 2049–2058 (doi:10.1037/0022-3514.37.11.2049)

- 52. Calvo M. G., Nummenmaa L. 2008. Detection of emotional faces: salient physical features guide effective visual search. J. Exp. Psychol. Gen. 137, 471–494 (doi:10.1037/a0012771)

- 53. Kilgour A. R., deGelder B., Lederman S. J. 2004. Haptic face recognition and prosopagnosia. Neuropsychologia 42, 707–712 (doi:10.1016/j.neuropsychologia.2003.11.021)

- 54. Abramowicz A., Klatzky R. L., Lederman S. J. 2010. Learning and generalization in haptic classification of 2D raised-line drawings of facial expressions of emotion by sighted and adventitiously blind observers. Perception 39, 1261–1275 (doi:10.1068/p6686)