Abstract

Problem addressed

Family medicine residency programs require innovative means to assess residents’ competence in “soft” skills (eg, patient-centred care, communication, and professionalism) and to identify residents who are having difficulty early enough in their residency to provide remedial training.

Objective of program

To develop a method to assess residents’ competence in various skills and to identify residents who are having difficulty.

Program description

The Competency-Based Achievement System (CBAS) was designed to measure competence using 3 main principles: formative feedback, guided self-assessment, and regular face-to-face meetings. The CBAS is resident driven and provides a framework for meaningful interactions between residents and advisors. Residents use the CBAS to organize and review their feedback, to guide their own assessment of their progress, and to discern their future learning needs. Advisors use the CBAS to monitor, guide, and verify residents’ knowledge of and competence in important skills.

Conclusion

By focusing on specific skills and behaviour, the CBAS enables residents and advisors to make formative assessments and to communicate their findings. Feedback indicates that the CBAS is a user-friendly and helpful system to assess competence.

Résumé

Problème à l’étude

Les programmes de résidence en médecine familiale ont besoin de méthodes innovatrices pour évaluer la compétence des résidents dans les habiletés « molles » (p. ex. les soins centrés sur le patient, la communication et le professionnalisme) et pour identifier assez tôt dans leur résidence ceux qui éprouvent des difficultés afin de leur fournir une formation de rattrapage.

Objectif du programme

Développer une méthode permettant d’évaluer la compétence des résidents relativement à différentes habiletés et identifier les résidents qui éprouvent des difficultés.

Description du programme

Le Competency-Based Achievement System (CBAS) a été créé pour mesurer la compétence en utilisant 3 principes majeurs : une rétroaction formatrice, une auto-évaluation assistée et des rencontres individuelles régulières. Le CBAS est géré par les résidents et il fournit un cadre permettant des interactions significatives entre résidents et moniteurs. Les résidents se servent du CBAS pour organiser et réviser leur rétroaction, pour les guider dans l’évaluation qu’ils font de leur progrès et pour découvrir leurs besoins éventuels de formation. Les moniteurs utilisent le CBAS pour faire le suivi des résidents, les guider et vérifier leurs connaissances et compétences dans diverses habiletés.

Conclusion

En insistant sur des habiletés et comportements spécifiques, le CBAS permet aux résidents et aux moniteurs d’effectuer des évaluations formatrices et de partager leurs observations. La rétroaction indique que le CBAS est un moyen d’évaluer la compétence qui est utile et facile à utiliser.

Competence in family medicine includes knowing what to do, when and how to do it, and whether to do it at all. A valid and reliable means of assessing the competence of family physicians preparing to enter practice is essential to ensure patient safety. There are 2 important areas of concern when teaching residents and assessing their competence in family medicine: 1) assessment of residents’ development of “soft skills,” such as patient-centred care and communication, and 2) identification of those residents who are having difficulty early enough in their residency to provide remedial training.

Accurate assessment of competence requires continuing, serial, and direct observation of workplace behaviour and monitoring of progress based on feedback. This highlights the inadequacy of multiple-choice examinations or one-time demonstrations of skill (eg, objective structured clinical examinations [OSCEs]) to assess competence. These methods of assessment do not reveal the realities of being competent in practice.

Our work at the Department of Family Medicine at the University of Alberta in Edmonton builds upon the global movement toward competency-based medical education [1] by providing not only a means of assessing competence, but also a system to support the development of competence. Our work draws from existing pilot programs in other areas of health care [2–4] that suggest competency-based curricula and assessments lead to greater success in producing skilled and effective physicians when compared with traditional rotation and time-based programs.

The College of Family Physicians of Canada (CFPC) has also adopted a competency-based program. The skills family medicine residents need to learn and demonstrate have been determined from the results of a series of surveys conducted by the CFPC in which practising family physicians indicated the competency and skill domains they thought were most important for family physicians entering independent practice [5]. The CFPC established separate working groups to develop guidelines for both curriculum development and competency-based assessment to ensure that residents were prepared for family practice. The main challenge of implementing a competency-based framework in medical education lies in the difficulty of assessing competence. How do you assess competence? How do you know when someone has attained competence or is progressing toward it?

The most effective ways of measuring competence have yet to be clearly identified [6]. Several medical education researchers suggest such methods as multiple-choice questionnaires, OSCEs, in-training evaluation reports (ITERs), and logs [7,8]; others build on that list by suggesting observer ratings [9]. The concern about all these proposed methods is that they are limited to generating a checklist of competencies. Checklists provide only a summary evaluation. They do not give students useful information about what they are doing right or how they could improve before being evaluated. Information should be about residents’ performance and it must be specific to their progress toward clinical competence and to the development of a habitual approach to improving and using their skills and knowledge [10].

To provide this kind of information, we developed an innovative approach to assessing not only the achievement of the many dimensions of competence in family medicine but also the progress toward that achievement. Our work was guided by the principles of high-quality feedback and the CFPC’s mandate to move to competency-based residency education [5]. For residents, we wanted a system to guide their learning using formative feedback; and for their advisors and preceptors, we wanted a system that would be resident driven, so that residents would recognize when feedback was being given, would be able to act upon that feedback, and could help themselves to progress toward competence by soliciting feedback in areas where they needed it.

Drawing from work at the University of Maastricht in the Netherlands [2], the Cleveland Clinic Lerner College of Medicine in the United States [3], and other competency-based medical programs [4], our team determined the stages and requirements for a valid, reliable, and cost-effective system of evaluating competence using documented formative feedback. We called the system the Competency-Based Achievement System (CBAS). The key feature of the CBAS is that assessment goes both ways. Rather than clinical rotation-based summative evaluations from preceptors passing judgment on the knowledge and abilities of the residents they supervise, both advisors and residents review cumulative evidence of residents’ demonstrated skills and competence in a variety of clinical settings. After reviewing this evidence, advisors and residents come to a mutual understanding of residents’ strengths and weaknesses. The intent of the CBAS is to facilitate student-centred learning by giving residents a system for guided self-assessment.

Program description

The CBAS was designed to measure competence using 3 main principles: best practices in formative feedback, guided self-assessment, and regular face-to-face meetings between residents and advisors.

The CBAS uses FieldNotes as the primary tool for collecting evidence of progress toward competence. FieldNotes are forms in a prescription-sized pad on which immediate feedback about directly observed events can be noted. FieldNotes are intended to be a qualitative account of feedback [11,12] and a check-in on progress. FieldNotes can serve as reminders of verbal feedback and act as memory prompts in place of detailed discussions of residents’ performance. Progress levels indicated on FieldNotes give residents assessments of their learning in real-time workplace settings. The contents of FieldNotes must be discussed by observers and residents in a timely fashion, ideally at the time FieldNotes are created or within a few days.

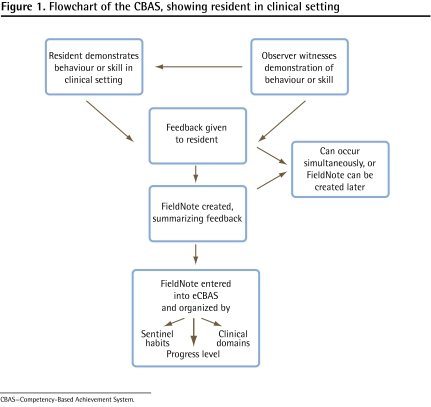

FieldNotes can be about diagnoses or management decisions, presentations, team interactions, charts or letters, patient interactions, procedures, and any other aspect of practising medicine. Observers could be preceptors, advisors, nurses, patients, peers, or other people on the scene. Residents are expected to get 1 FieldNote per clinical day and 1 FieldNote per clinical call-back day in a first-year family medicine rotation; more FieldNotes than this are a bonus for residents and are encouraged. Our program requires that FieldNotes be completed in eCBAS, an online electronic workbook (Box 1). Administrative support is available to residents to ensure that paper-based FieldNotes are entered into eCBAS. Residents use the FieldNotes in eCBAS to assess their progress toward competence as they proceed through the residency program and to identify gaps in their knowledge (Figure 1).
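
The FieldNote expectation described above is simple arithmetic, and a short sketch may make it concrete. The Python below is illustrative only and is not part of eCBAS: the function names and the sample rotation figures are made up, and it assumes that clinical days and clinical call-back days are counted separately.

```python
def expected_fieldnotes(clinical_days: int, callback_days: int) -> int:
    """Minimum FieldNotes expected: 1 per clinical day plus 1 per call-back day."""
    if clinical_days < 0 or callback_days < 0:
        raise ValueError("day counts must be non-negative")
    return clinical_days + callback_days


def fieldnote_shortfall(collected: int, clinical_days: int, callback_days: int) -> int:
    """Number of FieldNotes still needed to meet the expectation (0 if it is met)."""
    return max(0, expected_fieldnotes(clinical_days, callback_days) - collected)


# Hypothetical rotation: 16 clinical days and 4 call-back days.
print(expected_fieldnotes(16, 4))      # 20
print(fieldnote_shortfall(17, 16, 4))  # 3
print(fieldnote_shortfall(25, 16, 4))  # 0, because extra FieldNotes are a bonus
```

The shortfall never goes below zero, which mirrors the point that FieldNotes beyond the expectation are encouraged rather than required.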

Box 1. Information about the eCBAS (electronic workbook).

The eCBAS was

- developed in response to user demand for electronic FieldNote entry
- built as a custom configuration of Microsoft SharePoint

The eCBAS acts as a repository and sorting tool for FieldNotes. Its contents are not individually evaluated unless a FieldNote requires program attention.

CBAS: Competency-Based Achievement System.

Figure 1.

Flowchart of the CBAS, showing resident in clinical setting

CBAS—Competency-Based Achievement System.

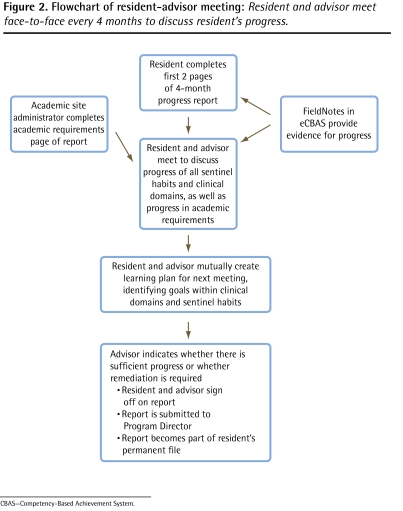

Residents and advisors have scheduled, structured, face-to-face meetings every 4 months to review residents’ progress (Figure 2). In preparation for these meetings, residents review all FieldNotes to assess their progress in sentinel habits (Box 2) and clinical domains (Box 3). Residents record the assessment of their progress on their 4-month progress report forms. Residents and advisors review the reports together. In-training evaluation reports for all rotations use the same sentinel habits and clinical domains organizational structure as the 4-month progress reports, and they are further support for residents’ self-assessments.

Figure 2.

Flowchart of resident-advisor meeting: Resident and advisor meet face-to-face every 4 months to discuss resident’s progress.

CBAS—Competency-Based Achievement System.

Box 2. Sentinel habits: Common skills and habits that make a good physician.

Residents collect feedback through FieldNotes and have discussions with their advisors about progress in the following sentinel habits:

- Incorporates patient context in determining care and treatment
- Generates relevant hypotheses
- Uses best practice to manage patient care
- Selects appropriate focus in clinical encounters
- Applies key features for all procedures
- Demonstrates respect and responsibility
- Uses clear and timely verbal and written communication
- Helps others learn
- Promotes effective practice quality
- Seeks guidance and feedback

Box 3. Clinical domains of family medicine.

Residents collect feedback through FieldNotes and have discussions with their advisors about progress in the following clinical domains:

- Maternity and newborn care
- Care of children and adolescents
- Care of adults
- Care of elderly people
- Palliative and end-of-life care
- Behavioural medicine and mental health
- Surgical and procedural skills
- Care of vulnerable and underserviced people
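
One way to picture the review step is as a sorting exercise: each FieldNote is filed under a sentinel habit and a clinical domain, and the resident reads through each pile in date order before the 4-month meeting. The following is a minimal Python sketch of that idea and does not describe how eCBAS itself is implemented. The `FieldNote` fields, the `group_notes` helper, the progress-level labels, and the sample notes are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class FieldNote:
    """One piece of documented feedback (all field names are hypothetical)."""
    observed_on: date
    observer: str
    sentinel_habit: str
    clinical_domain: str
    progress_level: str  # placeholder label; the article does not list the levels
    feedback: str


def group_notes(notes, key):
    """Group FieldNotes by one attribute, for example 'sentinel_habit'."""
    groups = defaultdict(list)
    for note in notes:
        groups[getattr(note, key)].append(note)
    # Sort each group by date so that progress over time is easy to read.
    return {name: sorted(items, key=lambda n: n.observed_on)
            for name, items in groups.items()}


# Made-up sample notes, for illustration only.
notes = [
    FieldNote(date(2010, 9, 14), "preceptor", "Seeks guidance and feedback",
              "Care of adults", "level B", "Asked for input on the plan."),
    FieldNote(date(2010, 8, 3), "nurse", "Demonstrates respect and responsibility",
              "Care of elderly people", "level A", "Explained each step to the patient."),
    FieldNote(date(2010, 7, 20), "advisor", "Seeks guidance and feedback",
              "Maternity and newborn care", "level A", "Requested observation of a visit."),
]

by_habit = group_notes(notes, "sentinel_habit")
by_domain = group_notes(notes, "clinical_domain")

for habit, items in by_habit.items():
    print(habit, [n.observed_on.isoformat() for n in items])
```

Grouping the same notes twice, once by habit and once by domain, reflects the two organizing categories shared by the 4-month progress reports and the in-training evaluation reports.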

After residents have completed their portion of the 4-month progress report, they meet with their advisors to discuss the report. If the advisor agrees that the resident is accurate in his or her self-assessment, the 4-month progress report is left as completed by the resident. If the advisor believes that there are inaccuracies in the self-assessment or opportunities for additional insight, the resident and advisor mutually negotiate a more accurate progress report.

The final stage of the meeting is used to establish a learning action plan for the next 4 months. Together, advisors and residents identify possible opportunities for learning during upcoming scheduled clinical experiences or rotations. This learning action plan is recorded on the 4-month progress report, along with any comments on a resident’s progress. After the first meeting, each subsequent meeting begins with a review of the previous meeting’s learning action plan.

Sentinel habits

In response to requests to make the categories on the FieldNotes more relevant to daily clinical practice, we derived the key skills of a good physician from the CFPC’s skill dimensions and the CanMEDS–family medicine roles. These key skills need to be learned and repeated until they become habitual behaviour. We have labeled these skills “sentinel habits,” and they are used in the CBAS to guide the evaluation of progress toward competence.

Evaluation of the CBAS

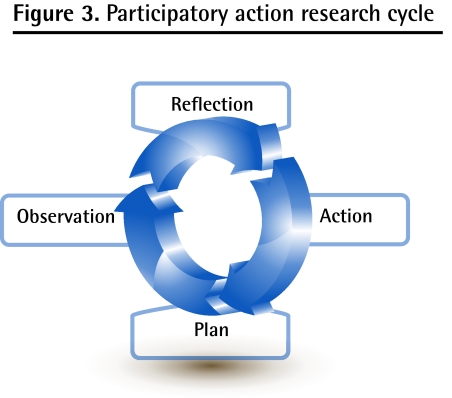

The CBAS was developed using participatory action research (PAR), and the PAR process was followed throughout pilot implementation to continuously monitor the system. In PAR, the members of a community of interest are directly involved in reflection on a problem, in planning ways of addressing the problem, and in the action and observation that follows planning (Figure 3). The action is researched, changed, and researched again within the research process by participants [13]. Table 1 summarizes each PAR cycle during development and implementation of the CBAS.

Figure 3.

Participatory action research cycle

Table 1. Summary of each PAR cycle* during development and implementation of the CBAS

| PAR CYCLE | PARTICIPANTS | REFLECTION | PLAN AND ACTION | OBSERVATION |
|---|---|---|---|---|
| 1 | Program directors, advisors, CBAS team | Organization system needed for FieldNotes | Paper-based CBAS portfolio organized by CFPC’s skill dimensions and 99 priority topics | System is workable, but electronic version would be preferred by some |
| 2 | Advisors, program directors, CBAS team | Clear way to direct resident learning needed within system | Electronic system developed; “stacking” created (ie, self-determined topic stacks for targeted learning) | Faculty development in use of the CBAS needed; resident training needed; range of responses to “stacking” |
| 3 | Advisors, program directors, residents, CBAS team | Resident training will need to be competency based | Pilot implementation of the CBAS at 3 pilot sites | More faculty development needed; residents expect feedback to follow best practices as explained to them |
| 4 | Advisors, program directors, residents, CBAS team | Increase the CBAS’s value to learning | FieldNotes more clearly tied to summative evaluation through new 4-month report (version 1) | Stacking is confusing; tie between skill dimensions and CanMEDS-FM and workplace assessment not clear; a clearer understanding of progress is required |
| 5 | Advisors, program directors, residents, CBAS team | Find “missing link” between skill dimensions and CanMEDS-FM and workplace | Development of sentinel habits; introduction of progress levels on FieldNotes; match ITERs to sentinel habits | Saturation appears to have been reached on FieldNotes; advisors and residents actively using eCBAS (electronic workbook); sentinel habits appear to be useful and intuitive |
| 6 | Advisors, program directors, residents, CBAS team | Validate the CBAS | Full CBAS implementation; new 4-month report (version 2) | Data collection under way |

CanMEDS-FM: CanMEDS–family medicine; CBAS: Competency-Based Achievement System; CFPC: College of Family Physicians of Canada; ITERs: in-training evaluation reports; PAR: participatory action research.

*All data were collected through focus groups and interviews.

Throughout the PAR cycles, our participants included 34 residents, 3 program directors, 8 advisors, and the 4 members of the CBAS team, which was made up of education experts in our Department of Family Medicine. All participation was voluntary; all participants gave signed consent; and the program was approved by the University of Alberta Human Research Ethics Board. Changes to the system were made based on user feedback. These changes were then evaluated to ensure they responded to users’ comments. Considerable changes were made as a result of pilot feedback (Table 2).

Table 2. Changes to the CBAS program in response to feedback

| ORIGINAL COMPONENT | USER FEEDBACK | RESPONSE TO FEEDBACK |
|---|---|---|
| Paper-based portfolio | An electronic filing system was needed, as well as online access | Development of eCBAS (electronic workbook) |
| Stacking (ie, open-ended method of categorizing FieldNotes) | Too open; residents wanted more guidance and direction | Stacking became optional; FieldNotes were now categorized by clinical domains and sentinel habits |
| Guiding principles: CFPC’s skill dimensions | Too abstract; residents failed to see a connection between the higher-level skill dimensions and their own day-to-day experiences in clinical practice | Identification of sentinel habits (the common skills and habits that make a good physician) |
| Expectation of 1 FieldNote per clinical half-day | Residents found it difficult to get FieldNotes in many rotations, and found many FieldNotes did not give good feedback | New expectation of 1 FieldNote per clinical day in first-year family medicine rotation, and 1 FieldNote per clinical call-back day. FieldNotes acquired beyond this were a bonus and were welcome |
| Residents to enter every FieldNote in eCBAS | Too time-consuming; many residents believed that this component was useless busywork | Electronic FieldNotes were strongly encouraged; paper FieldNotes were given to support staff for manual entry |
| Focus on FieldNotes and FieldNotes reviews to guide learning | Not enough of a link to assessment | Emphasis was on regularly scheduled meetings between residents and advisors; FieldNotes could be used for review and as documentation but were not the focus. Progress levels created for each FieldNote |

CBAS: Competency-Based Achievement System; CFPC: College of Family Physicians of Canada.

We held focus groups to discuss the system. Comments from these groups revealed that, in the pilot implementation and in the first stages of full implementation, the CBAS was proving useful. Advisors and program directors had positive responses about the CBAS, and program directors indicated that they found that the CBAS gave more complete information than previous evaluation methods had. Before the CBAS, in-training evaluation reports and progress reports were in the form of checklists, which gave little information to program directors on residents’ progress.

Residents’ responses were more cautiously positive. Some residents at one of the pilot sites asked to have the CBAS discontinued. Residents at the 2 other pilot sites saw potential in the CBAS and appreciated the quick response to requests for changes. End-user focus group data revealed the need for faculty development in giving good formative feedback and the need for continuous education for residents in using formative feedback to develop competence.

Focus groups with program directors revealed that the CBAS had proven to be an effective way of identifying residents having difficulty. The emphasis on regular use of FieldNotes and more frequent face-to-face meetings allowed residents who were having difficulty to be recognized and helped. The “stacking” (self-determined topic stacks for targeted learning) feature of the CBAS was used successfully to help residents focus on areas in which they needed further experience and more knowledge. Residents in need of remediation also benefited from the learning action plans embedded in the 4-month progress reports.

Discussion

The CBAS is unique among existing competency-based assessment systems owing to its focus on authentic workplace-based assessments. Unlike other systems [4–6], all formative feedback in the CBAS comes from direct observation of clinical practice and behaviour during encounters. The CBAS does not rely on summative examinations, checklists, or OSCEs. All summative evaluations are derived directly from the actual behaviour and knowledge exhibited by residents in their daily practice.

The transition to the CBAS as the primary assessment method in our program has met with success from the perspective of program directors. The new 4-month progress reports give more detailed information about residents’ progress. Residents are seeing the connection between the formative feedback they receive during their clinical days and the summative assessments of progress they get about every 4 months. This connection between learning and progress is much clearer than with previous assessments.

Most important, the focus groups indicated that residents now recognized good formative feedback. This was both the greatest success and the greatest challenge of the CBAS. Whereas the residents in our program previously complained about not receiving feedback, they now complained about not receiving good-enough feedback. Our residents are no longer satisfied with hearing “Good job!” They want to know why it is a good job. The feedback process is now clear to our residents, and they want the best constructive feedback they can get.

Limitations

The main limitation in evaluating the research portion of this study was the difficulty of recruiting participants. While many residents participated in the focus groups, not all residents shared their opinions within the PAR framework. Also, data collection during evaluation of the pilot implementation focused primarily on program directors and residents; advisors did not participate fully. We will address this limitation in our future research.

Future directions

As of July 2010, the CBAS was fully implemented across our entire residency program. We continue to evaluate and solicit feedback from users within the PAR framework and to make changes to the CBAS based on end-user feedback (from residents, advisors, program directors, program and site administrators, and off-service preceptors). This process will continue for the next several years as we validate the CBAS and expand it to other programs and specialties. We also intend to follow our CBAS-trained residents as they begin to practise in order to determine whether they are better able to assess themselves as practising physicians.

Conclusion

The CBAS is designed to sample certain skills and behaviour, which allows for formative assessment. The feedback gathered facilitates meaningful discussion around residents’ progress and allows residents to become more aware of how to direct their learning and practise guided self-assessment.

The CBAS approach to assessment incorporates PAR into each stage of development, implementation, monitoring, and readjustment. Our research shows that including all CBAS users makes this dynamic process easy to use and useful for assessing competence.

Finally, there is a feeling of empowerment among our users as they realize that they can decide how competency-based assessment will happen for them. We think this will further improve the perception of a positive learning environment in our residency program.

EDITOR’S KEY POINTS

The Department of Family Medicine at the University of Alberta in Edmonton created the Competency-Based Achievement System (CBAS) to assess residents’ competence during their preparation to practise.

Through formative feedback, guided self-assessment, and regular face-to-face meetings, the CBAS provides a framework for meaningful communication between residents and advisors.

Comments from those who have implemented the CBAS indicate that it is a user-friendly and helpful method to assess a resident’s progress.

POINTS DE REPÈRE DU RÉDACTEUR

Le département de médecine familiale de l’université de l’Alberta à Edmonton a créé le Competency-Based Achievement System (CBAS) pour évaluer la compétence des résidents durant leur préparation à la pratique.

Au moyen d’une rétroaction formatrice, d’une autoévaluation assistée et de rencontres individuelles, le CBAS assure une communication significative entre les résidents et leurs moniteurs.

Les commentaires de ceux qui ont fait l’essai du CBAS indiquent que cette méthode d’évaluation des progrès des résidents est utile et facile à utiliser.

Footnotes

This article has been peer reviewed.

Cet article a fait l’objet d’une révision par des pairs.

Contributors

All the authors contributed to concept and design of the program; data gathering, analysis, and interpretation; and preparing the manuscript for submission.

Competing interests

None declared

References

- 1. Carraccio C, Wolfsthal SD, Englander R, Ferentz K, Martin C. Shifting paradigms: from Flexner to competencies. Acad Med. 2002;77(5):361–7. doi: 10.1097/00001888-200205000-00003.

- 2. Van Tartwijk J, Driessen E, van der Vleuten C, Stokking K. Factors influencing the introduction of portfolios. Qual High Educ. 2007;13(1):69–79.

- 3. Dannefer EF, Henson LC. The portfolio approach to competency-based assessment at the Cleveland Clinic Lerner College of Medicine. Acad Med. 2007;82(5):493–502. doi: 10.1097/ACM.0b013e31803ead30.

- 4. Carraccio C, Englander R. Evaluating competence using a portfolio: a literature review and web-based application to the ACGME competencies. Teach Learn Med. 2004;16(4):381–7. doi: 10.1207/s15328015tlm1604_13.

- 5. College of Family Physicians of Canada. Defining competence for the purposes of certification by the College of Family Physicians of Canada. The new evaluation objectives in family medicine. Mississauga, ON: College of Family Physicians of Canada; 2009. Available from: www.cfpc.ca/uploadedFiles/Education/Defining%20Competence%20Complete%20Document%20bookmarked.pdf. Accessed 2010 Jul 15.

- 6. Ten Cate O, Scheele F. Competency-based postgraduate training: can we bridge the gap between theory and clinical practice? Acad Med. 2007;82(6):542–7. doi: 10.1097/ACM.0b013e31805559c7.

- 7. Smith SR, Goldman RE, Dollase RH, Taylor JS. Assessing medical students for non-traditional competencies. Med Teach. 2007;29(7):711–6. doi: 10.1080/01421590701316555.

- 8. Sherbino J, Bandiera G, Frank J. Assessing competence in emergency medicine trainees: an overview of effective methodologies. CJEM. 2008;10(4):365–71. doi: 10.1017/s1481803500010381.

- 9. Shumway JM, Harden RM. AMEE guide no. 25: the assessment of learning outcomes for the competent and reflective physician. Med Teach. 2003;25(6):569–84. doi: 10.1080/0142159032000151907.

- 10. Epstein RM, Hundert EM. Defining and assessing professional competence. JAMA. 2002;287(2):226–35. doi: 10.1001/jama.287.2.226.

- 11. Donoff MG. The science of in-training evaluation. Facilitating learning with qualitative research. Can Fam Physician. 1990;36:2002–6.

- 12. Donoff MG. Field notes. Assisting achievement and documenting competence. Can Fam Physician. 2009;55:1260–2. e100–2. (Eng), (Fr).

- 13. Reason P, Bradbury H, editors. Handbook of action research: participative inquiry and practice. Thousand Oaks, Calif: Sage; 2001.