Abstract

“Normal hearing” is typically defined by threshold audibility, even though everyday communication relies on extracting key features of easily audible sound, not on sound detection. Anecdotally, many normal-hearing listeners report difficulty communicating in settings where there are competing sound sources, but the reasons for such difficulties are debated: Do these difficulties originate from deficits in cognitive processing, or from differences in peripheral, sensory encoding? Here we show that listeners with clinically normal thresholds exhibit very large individual differences on a task requiring them to focus spatial selective auditory attention to understand one speech stream when there are similar, competing speech streams coming from other directions. These individual differences in selective auditory attention ability are unrelated to age, reading span (a measure of cognitive function), and minor differences in absolute hearing threshold; however, selective attention ability correlates with the ability to detect simple frequency modulation in a clearly audible tone. Importantly, we also find that selective attention performance correlates with physiological measures of how well the periodic, temporal structure of sounds above the threshold of audibility is encoded in early, subcortical portions of the auditory pathway. These results suggest that the fidelity of early sensory encoding of the temporal structure in suprathreshold sounds influences the ability to communicate in challenging settings. Tests like these may help tease apart how peripheral and central deficits contribute to communication impairments, ultimately leading to new approaches to combat the social isolation that often ensues.

Keywords: auditory processing disorder, frequency following response, individual differences, auditory scene analysis, informational masking

Making sense of conversations in busy restaurants or streets is a challenge, as competing sound sources add up to create a confusing cacophony. Central to communicating in such environments is selective attention, the process that enables listeners to filter out unwanted events and focus on a desired source (1). By listening for an object with a particular attribute (for instance, a source straight ahead), a listener can suppress competing sound sources and bring the desired object into attentional focus (2–4).

Many listeners with clinically normal hearing complain of difficulties with selective auditory attention (5). Because such difficulties manifest in complex everyday tasks, they are often assumed to arise from central processing deficits (6, 7). However, there is no real consensus: Are “normal-hearing” listeners who have trouble conversing in ordinary social settings suffering from a central deficit, or is there a peripheral cause (8, 9)? Reflecting this ongoing debate, clinical diagnoses use a range of labels to describe normal-hearing listeners who cannot communicate easily when there are competing sound sources; there is no standard procedure for identifying these listeners and no mechanistic explanation for their difficulties (7, 10).

A spate of recent studies suggests that hearing-impaired listeners are relatively insensitive to the fine spectrotemporal detail in suprathreshold sounds and that this impedes the ability to understand a source in a noisy background (11–13). Given that normal hearing is defined simply on the basis of the quietest sounds a listener can hear at each acoustic frequency, the fidelity with which the auditory system encodes suprathreshold sounds may also differ across listeners with clinically normal hearing (14). Such differences could in turn explain why some normal-hearing listeners have problems in selective auditory attention tasks.

We recently showed that the clarity of the acoustic scene impacts the ability to direct selective auditory attention (15). We reasoned that by distorting the fine spectrotemporal structure of sound, echoes and reverberation would interfere with the ability of listeners to use the suprathreshold source attribute of perceived location to select a desired talker from amid streams of competing talkers, even if the acoustic distortion were not enough to interfere with the intelligibility of the speech streams (16–18). Consistent with our expectations, we found that reverberant energy interfered with spatial selective auditory attention, even though listeners still understood words from the scene. Importantly, our listeners, who all had clinically normal auditory thresholds, differed dramatically in their ability to focus attention on speech from a known direction in the presence of similar, competing speech streams (15). This result hints that differences across normal-hearing listeners in the fidelity of the sensory representation of suprathreshold sounds may help explain differences in the ability to focus attention on a desired sound stream in a complex setting.

Results

Here, we first recruited additional subjects and tested them on our original selective auditory attention task, verifying our earlier results showing large individual differences in selective auditory attention ability (15). We then ran additional tests with a subset of our listeners to explore whether the individual differences could be attributed to differences in basic sensory encoding of fine timing in acoustic signals. We show that spatial selective attention ability correlates with sensitivity to frequency modulation in a sinusoidal stimulus, a measure of perceptual acuity for the fine spectrotemporal structure of suprathreshold sound (12). Importantly, we also find that selective attention ability correlates with an objective physiological measure of the fidelity with which fine spectrotemporal detail of audible speech is encoded subcortically, suggesting that the difficulties some listeners have with selective auditory attention reflect poor encoding at early sensory stages of the auditory system.

Spatial Selective Attention Task.

Forty-two normal-hearing adults, aged 18–55, were tested on our selective attention task (15); an additional 8 did not complete the task as directed, and their data are not included here. All listeners had audiometric thresholds of 20 dB hearing level (HL) or better at octave frequencies from 250 Hz to 8 kHz and less than 10 dB of threshold asymmetry between the left and right ears. We designed our task both to mimic everyday listening situations (requiring listeners to focus spatial attention to make sense of a target signal) and to emphasize coding of fine spectrotemporal details in stimuli.

Three simultaneous streams of speech were simulated from three different directions relative to the head: one from 15° to the left, one from directly ahead (azimuth 0°), and one from 15° to the right. Each stream consisted of four spoken digits; the onsets of the four digits were time aligned across the three spatial streams. Listeners were instructed to report the digits from the center stream, ignoring the left and right masker streams.

Because the three speech streams consisted of simultaneous digits spoken by the same male talker, the only feature distinguishing target from maskers was direction. Moreover, because all three sources were close to midline, the only reliable spatial cue was the interaural time difference (ITD), which depends on fine comparisons of the timing of the signals reaching the left and right ears (emphasizing encoding of suprathreshold detail in the input sounds). Thus, this task requires listeners to make use of subtle spectrotemporal detail to compute the location of the target and masker sounds, which then can be used to select the target from the three-source mixture. Three levels of reverberation were simulated (anechoic, intermediate reverberation, and high reverberation), to titrate the difficulty of the task.
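To make the ITD cue concrete, the sketch below estimates the delay between two ear signals by finding the lag that maximizes their cross-correlation. It is a textbook illustration under stated assumptions (sampled left/right signals, a lag search window of ±1 ms, and the hypothetical helper name `estimate_itd`), not the processing used in this study or a model of binaural neurons. For scale, Woodworth's spherical-head approximation, ITD ≈ (a/c)(θ + sin θ), gives roughly 130 µs for a source 15° off midline, assuming a head radius a of about 8.75 cm and c = 343 m/s; that is only about six samples at a 48-kHz sampling rate.

```python
import numpy as np


def estimate_itd(left, right, fs, max_itd_s=1e-3):
    """Return the lag (in seconds) that maximizes the left/right cross-correlation.

    Positive values mean the right-ear signal lags the left-ear signal,
    i.e., the source is toward the left. Assumes max_itd_s * fs >= 1.
    """
    max_lag = int(round(max_itd_s * fs))
    lags = np.arange(-max_lag, max_lag + 1)
    # Trim max_lag samples at each end so np.roll's wrap-around never enters the sum.
    core = slice(max_lag, -max_lag)
    xcorr = [np.dot(left[core], np.roll(right, -lag)[core]) for lag in lags]
    return lags[int(np.argmax(xcorr))] / fs
```

In use, a broadband signal copied to the right ear with a 6-sample delay at 48 kHz yields an estimate of 6/48,000 s (125 µs). Reverberation adds delayed, decorrelated copies of the signal at each ear, which flattens and smears this cross-correlation peak.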

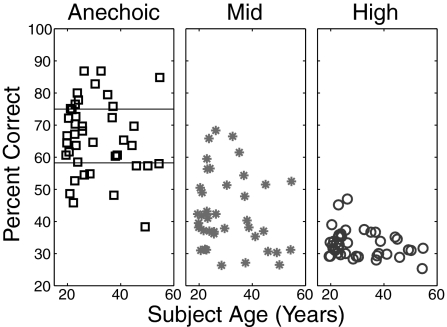

We find that reverberant energy interferes with the ability to report the center target (compare panels in Fig. 1). Confirming our previous results, we see large individual differences, with performance in the easiest, anechoic condition ranging from less than 40% to almost 90% correct. Moreover, these individual differences were not explained by factors that have previously been linked to individual differences, such as age (Fig. 1), differences in working memory ability, or slight differences in pure tone threshold found in our normal-hearing subject population (19–22).

Fig. 1.

The ability to perform a spatial selective auditory attention task varies greatly across listeners, an effect that is uncorrelated with age. In addition, performance is adversely affected by the addition of reverberant energy (compare results across panels). Individual data points plot the percentage of target digits correctly reported by an individual listener as a function of the listener's age. Each panel shows results for a different level of reverberant energy. Listeners from the top quartile (above the Upper horizontal line in the Left panel) and bottom quartile (below the Lower horizontal line in the Left panel) in the anechoic condition were recruited for additional testing.

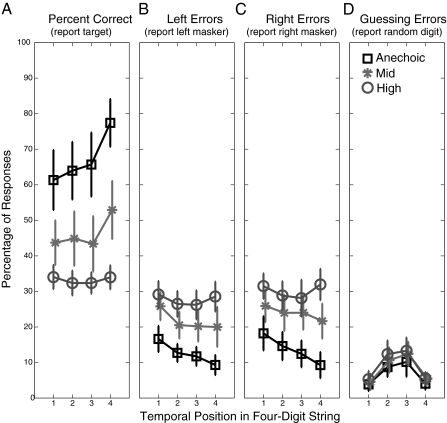

As in other studies of spatial selective auditory attention, the likelihood of a correct response tends to increase from digit to digit, reflecting an enhancement in attentional focus across time (Fig. 2A) (23). Most incorrect responses correspond to reports of one of the masker digits. Indeed, in the highest reverberation condition, subjects seem to select randomly among the three competing digits, reporting the target, the left masker, and the right masker with nearly equal probability (compare high-reverberation results, plotted as circles; Fig. 2 A–C). Guessing errors (reports of digits not actually present in one of the competing streams) are relatively rare, particularly for the first and last digits in the sequence where primacy and recency effects reduce recall errors (24) (Fig. 2D), suggesting that memory limits have little influence on performance. This pattern of errors confirms that the addition of reverberant energy does not render the speech streams unintelligible, but instead cripples the ability of the subjects to focus spatial selective attention on the target words, presumably by degrading fine spectrotemporal structure important for computing spatial location.

Fig. 2.

The likelihood of answering correctly tends to increase with digit position, reflecting a “build-up of attention.” As reverberant energy increases, listeners become more likely to report left or right masker digits. Overall, the rate of guessing errors (reporting a digit not in the mixture) is low. (A) Across-subject average percentage correct (±SEM), corresponding to reports of the target, for the three levels of reverberant energy. (B) Across-subject average percentage of reports of left masker digits (±SEM). (C) Across-subject average percentage of reports of right masker digits (±SEM). (D) Across-subject average percentage of guessing errors (±SEM).
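The error analysis in Fig. 2 amounts to labeling each reported digit by its source and tallying the labels at each digit position. A minimal sketch follows, assuming position-matched scoring (the i-th response is compared with the i-th digit of each stream, target first) and digits stored as integers; both assumptions, and the helper names, are illustrative rather than taken from ref. 15.

```python
from collections import Counter

CATEGORIES = ("target", "left", "right", "guess")


def classify_trial(response, target, left, right):
    """Label each reported digit by the stream it matches (assumed position-matched)."""
    labels = []
    for i, digit in enumerate(response):
        if digit == target[i]:
            labels.append("target")
        elif digit == left[i]:
            labels.append("left")
        elif digit == right[i]:
            labels.append("right")
        else:
            labels.append("guess")  # digit not present at this position in any stream
    return labels


def percentages_by_position(trials, n_positions=4):
    """Percentage of each response category at each digit position across trials.

    trials: list of (response, target, left, right) tuples of digit sequences.
    """
    counts = [Counter() for _ in range(n_positions)]
    for response, target, left, right in trials:
        for pos, label in enumerate(classify_trial(response, target, left, right)):
            counts[pos][label] += 1
    n_trials = len(trials)
    return [{cat: 100.0 * c[cat] / n_trials for cat in CATEGORIES} for c in counts]
```

Averaging these per-listener percentages across subjects, separately for each reverberation level, would give curves of the kind plotted in Fig. 2 A–D.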

To examine the causes of the large differences observed, we recruited 17 listeners from the top (n = 9) and bottom (n = 8) quartiles of performance in the anechoic condition on the spatial attention task (henceforth, the “top-quartile” and “bottom-quartile” listeners; Fig. 1, Left) to perform additional tests. Although this selection of subjects is not ideal, focusing the additional tests on some of the best and worst subjects allowed us to efficiently explore whether there is any relationship between spatial selective attention ability and other measures of performance. The additional tests were designed to reveal whether differences in selective auditory attention ability arise because individuals differ in their sensitivity to the fine spectrotemporal structure of sound and whether any such differences in spectrotemporal sensitivity are due to differences in early sensory encoding in the auditory system.

Frequency Modulation Detection Task.

To investigate whether listeners who have difficulty focusing spatial selective auditory attention differ in basic auditory abilities, we measured sensitivity to frequency modulation (FM) using an adaptive threshold procedure. At typical detection thresholds, small FM excursions are thought to be perceptible because they cause subtle differences in the timing of neural spikes in the auditory periphery (12). Given that our spatial selective attention task depends on computing ITDs from fine timing differences of the signals reaching the left and right ears, we hypothesized that individual differences in spatial selective attention might be related to differences in FM detection threshold.

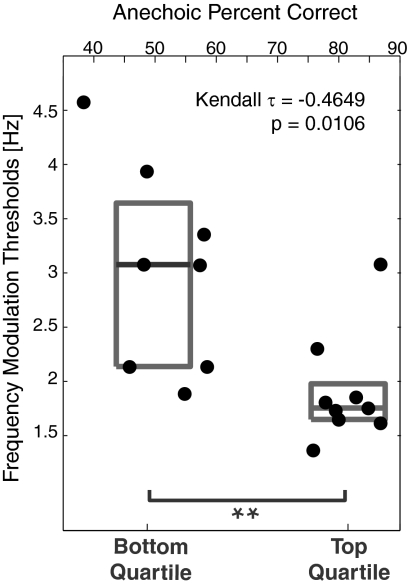

Listeners identified which of three intervals contained a modulated, rather than pure-tone, sinusoid (12). We find that, as a group, the top-quartile listeners in our selective attention task have lower FM thresholds than the bottom-quartile listeners [one-way ANOVA with factor of listener group: F(1,15) = 9.58, P = 0.0074; Fig. 3]. We further tested the relationship between basic temporal sensitivity and selective attention performance at the individual-listener level using rank correlations, calculated as the Kendall τ (which does not assume that measures are normally distributed). We find a significant negative rank correlation between individual subject performance on the spatial selective attention task in the anechoic condition (the condition in which individual differences were greatest) and the just-noticeable frequency excursion in the FM detection task (Kendall τ = −0.4649, P = 0.0106; Fig. 3). In other words, the greater the percentage of target digits a particular listener is able to report in the attention task, the smaller the FM excursion that he or she can detect. Because our subject pool included only good and bad listeners from our spatial selective attention task, we cannot use these results to quantify the relationship between performance on the attention task and on the FM task for the general population (e.g., using regression analysis). Nonetheless, these results show that individuals who have difficulty using fine ITDs to focus spatial selective auditory attention on a desired talker amid a mixture of talkers also struggle to detect small within-channel timing fluctuations in response to a simple sound.
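For readers unfamiliar with the Kendall τ, the sketch below computes it by counting concordant and discordant pairs of listeners. It uses the tie-corrected τ-b variant, which reduces to the classic definition when there are no ties; whether the original analysis used τ-b is an assumption, the function name is hypothetical, and the P value (which requires the null distribution of τ) is not computed here.

```python
import math
from itertools import combinations


def kendall_tau_b(x, y):
    """Kendall tau-b: (concordant - discordant) / sqrt((n0 - ties_x) * (n0 - ties_y))."""
    concordant = discordant = ties_x = ties_y = 0
    for i, j in combinations(range(len(x)), 2):
        dx = x[i] - x[j]
        dy = y[i] - y[j]
        if dx == 0 and dy == 0:
            ties_x += 1  # a pair tied in both variables counts toward both tie terms
            ties_y += 1
        elif dx == 0:
            ties_x += 1
        elif dy == 0:
            ties_y += 1
        elif (dx > 0) == (dy > 0):
            concordant += 1  # both variables order the pair the same way
        else:
            discordant += 1
    n0 = len(x) * (len(x) - 1) // 2  # total number of pairs
    return (concordant - discordant) / math.sqrt((n0 - ties_x) * (n0 - ties_y))
```

Because only the ordering of values matters, τ is unaffected by any monotonic rescaling of either measure. A negative τ between percent correct and FM threshold, as reported above, means that listeners who ranked higher on the attention task tended to rank lower (better) on FM threshold.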

Fig. 3.

The smallest detectable frequency modulation (FM) excursion is related to spatial selective attention performance in the easiest, anechoic condition, both at the group level and on an individual subject basis. FM thresholds are summarized by gray boxes for bottom- and top-quartile selective attention listener groups (25th to 75th percentiles; gray lines show median). Threshold FM is plotted as a function of the percentage correct on the spatial attention task in the anechoic condition for each listener (individual data points; top abscissa).

Measurement of Subcortical Encoding of Suprathreshold Detail.

We then measured the fidelity of physiological encoding of the temporal structure of a periodic sound for the same top-quartile and bottom-quartile listeners. Specifically, we recorded scalp voltages in response to presentations of the syllable /dah/, an approach that has been used in a number of recent studies to characterize the precision of early sensory encoding in subcortical auditory areas (25, 26). The steady-state vowel portion of the syllable is monotonized to have a repetition rate of 100 Hz, which, upon playback, gives rise to phase-locked responses in subcortical portions of the auditory pathway at the harmonics of 100 Hz, up to about 2 kHz (the frequency following response, FFR) (27). The strength of the FFR reflects the reliability with which periodic structure in a suprathreshold acoustic input is encoded physiologically, as observed on the scalp. We hypothesized that the strength of the FFR would relate to performance on our spatial attention task, which depends on fine temporal processing to compute source direction.

Each listener completed one FFR session, in which the syllable /dah/ was presented in quiet and in broadband noise at a signal-to-noise ratio (SNR) of 10 dB. For each subject in both quiet and noise, we estimated the phase locking value (PLV) (28, 29), a measure of the consistency of the evoked signal's phase at a given frequency, for frequencies spanning the first five harmonics of the input syllable.
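The PLV at a frequency f is the magnitude of the across-trial average of unit phasors, PLV(f) = |(1/N) Σₖ exp(iφₖ(f))|, so it is near 1 when every trial has the same phase at f and near 0 (about √(π/4N) for N trials) when phases are random. The sketch below is a simplified illustration: it takes each trial's phase from a single Hann-windowed FFT bin rather than the multitaper estimate implied by ref. 29, and the function name and array layout are assumptions.

```python
import numpy as np


def phase_locking_value(epochs, fs, freqs):
    """Across-trial PLV at each frequency in freqs.

    epochs: array (n_trials, n_samples), each row time-locked to stimulus onset.
    Returns values between 0 (random phase across trials) and 1 (identical phase).
    """
    n_samples = epochs.shape[1]
    spectra = np.fft.rfft(epochs * np.hanning(n_samples), axis=1)
    bin_freqs = np.fft.rfftfreq(n_samples, d=1.0 / fs)
    bins = [int(np.argmin(np.abs(bin_freqs - f))) for f in freqs]
    phasors = spectra[:, bins]
    unit = phasors / np.abs(phasors)  # keep each trial's phase, discard its amplitude
    return np.abs(unit.mean(axis=0))
```

Because amplitude is discarded, the PLV measures the consistency of the response's timing rather than its size, which is why it serves here as an index of how reliably periodic structure is encoded.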

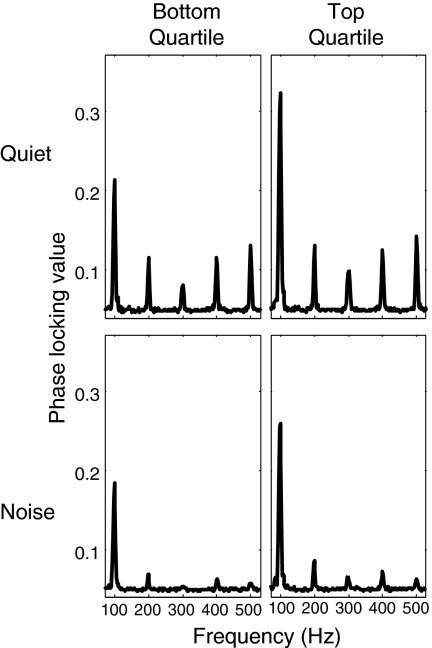

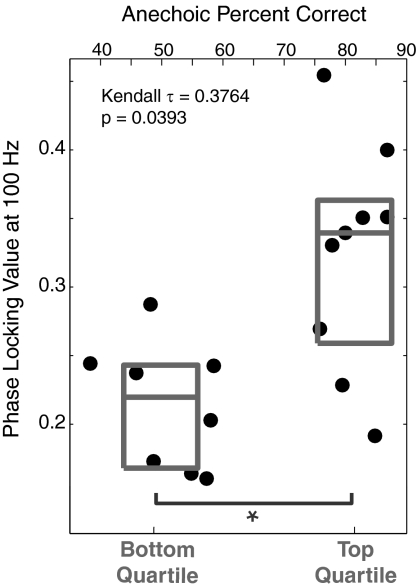

The PLV is strong at the harmonics of 100 Hz (Fig. 4). We find that for each of the first five harmonics of 100 Hz, noise significantly reduces the fidelity of the physiological encoding of the temporal structure of the input signal [100 Hz: F(1,48) = 37.5810, P = 7.9e-07; 200 Hz: F(1,48) = 46.4595, P = 7.0e-08; 300 Hz: F(1,48) = 36.9554, P = 9.5e-07; 400 Hz: F(1,48) = 40.7489, P = 3.2e-07; 500 Hz: F(1,48) = 94.1444, P = 3.4e-12; all P values corrected as described in Methods]. Although the listening condition × listener group interaction was not significant for any harmonic, the PLV was greater for the top-quartile listeners than the bottom-quartile listeners at 100 Hz [F(1,15) = 10.0799, P = 0.03 after corrections; there were no significant differences in the PLV strength between the listener groups at the other four harmonics]. Extending this finding, we also find a significant positive rank correlation between the strength of the PLV at the fundamental frequency of 100 Hz and performance on our selective attention task in the anechoic condition (Kendall τ = 0.3764, P = 0.0393; Fig. 5). As with the FM-detection analysis, because we tested only top- and bottom-quartile listeners from the spatial selective attention task, we cannot use standard regression techniques to try to quantify the relationship between the FFR strength and spatial selective attention performance at the individual subject level. Still, we find that the strength with which subcortical portions of the auditory pathway encode the fundamental frequency of an acoustic input is related to how well individual listeners can direct spatial selective auditory attention in a complex scene.

Fig. 4.

The neural phase locking to the harmonics of an acoustic input with a 100-Hz pitch is stronger in quiet than in the presence of acoustic noise at all harmonics and stronger for our top-quartile listeners than our bottom-quartile listeners at 100 Hz. Each panel plots the phase locking value as a function of frequency. (Upper) Results in quiet. (Lower) Results in noise. (Left) Bottom-quartile listeners in our attention task. (Right) Top-quartile listeners in our attention task.

Fig. 5.

The strength with which the spectrotemporal structure of a periodic input signal is encoded subcortically is related to spatial selective attention performance in the easiest, anechoic condition, both at the group level and on an individual subject basis. The strength of the 100-Hz PLVs in quiet is summarized by gray boxes for bottom- and top-quartile selective attention listener groups (25th to 75th percentiles; gray lines show median). The 100-Hz PLV strength in quiet is plotted as a function of the percentage correct on the spatial attention task in the anechoic condition for each listener (individual data points; top abscissa).

Discussion

Our results reveal large intersubject differences in how effectively normal-hearing listeners are able to focus selective attention on a target speech stream amid competing masker streams that differ from the target only in spatial position. These individual differences in spatial selective auditory attention correlate with the ability to detect changes in the spectrotemporal content of a simple, suprathreshold tone and, importantly, with an objective physiological measure of the fidelity of subcortical encoding of temporal structure in suprathreshold signals. These results underscore that the thresholds of audibility often used to define “normal hearing” in the clinic do not capture differences in basic sensory encoding that have important consequences for communicating in everyday situations.

A number of past studies find that the precision of the FFR is reduced in a wide range of special populations (e.g., nonmusicians compared with musicians, speakers of nontonal languages compared with speakers of tonal languages, children with learning disabilities or autism spectrum disorder compared with children without such difficulties, etc.) (26, 30–35). Some of these studies also demonstrate a correlation between subcortical encoding and performance on simple auditory tasks, like the ability to understand a target when “energetic masking” is the main limitation on performance (e.g., refs. 30, 34).

Our approach differs from past studies relating individual differences in subcortical auditory encoding to perceptual abilities in two key ways. First, there is no reason, a priori, to expect large individual differences in the subject population we recruited (who have no known hearing or perceptual deficits) in either perceptual abilities or in physiological responses; however, we find significant variations in the ability to selectively attend to a desired speech stream, which are related to differences in the physiological encoding of acoustic temporal structure. Second, our spatial selective attention task tests how well listeners can use spatial cues to distinguish a target speech stream from otherwise identical, intelligible masking streams, rather than the ability to understand target speech degraded by masking signals that overlap with the target in time and frequency (“energetic masking”) (2–4). In our selective attention task, even at the highest level of reverberation, listeners fail because they report the wrong words (from the competing streams), not because target speech in a sound mixture is unintelligible. Thus, performance in our attention task is limited by the ability to focus spatial attention on a target and filter out competing, intelligible streams, not by the audibility of the target speech stream; our task emphasizes encoding the suprathreshold fine timing cues used to compute ITDs, which are important for selective attention in real-world settings. Here, testing normal-hearing listeners across a large range of ages, we find a relationship between robustness of subcortical encoding and the ability to direct spatial selective auditory attention using the suprathreshold attribute of talker location.

In animal models, noise exposure that does not cause permanent threshold shifts nonetheless reduces the number of spiral ganglion neurons encoding input acoustic signals (36). Such a change in the redundancy with which sound is encoded in the periphery could explain the individual differences we observed. For instance, temporal coding at the level of the cochlear nucleus is often more precise than it is at the level of the auditory nerve, presumably because information from multiple auditory nerve fibers is integrated to achieve a less noisy sensory representation (37). With fewer independent spiral ganglion neurons to transmit sound to the cochlear nucleus, suprathreshold sound reaching a noise-exposed ear may be coded less robustly in the brainstem, even if detection thresholds are unchanged. Taken together, these results highlight the importance of educating listeners about the dangers of noise exposure, especially given the ubiquity of personal music players and other devices that can damage hearing.

Our results shed light on the importance of peripheral auditory coding for auditory perception in all types of complex social settings, from the boardroom to the football field. Individuals with poorer peripheral encoding (like our bottom-quartile listeners) may struggle to communicate or even withdraw entirely in such settings (38). Such listeners are likely to be particularly vulnerable when faced with everyday challenges that listeners with more robust auditory peripheral coding can handle relatively gracefully, from listening in reverberant or noisy rooms to dealing with the normal effects of aging on auditory processing (39–46). Our results suggest an approach for teasing apart peripheral and central factors that contribute to hearing difficulties in daily life. For instance, a portion of the listeners who have been diagnosed with auditory processing disorder or King-Kopetzky syndrome (10, 47, 48) may have deficits in subcortical auditory function that could be identified and diagnosed using objective measures like those reported here.

Methods

The selective attention task methods were identical to those of ref. 15.

In the FM detection task, listeners indicated which of three 750-Hz tones (interstimulus interval 750 ms) contained 2-Hz frequency modulation (12). The frequency excursion was varied adaptively using a two-down, one-up procedure (step size 1 Hz) to estimate the 70.7%-correct FM threshold. Individual thresholds were estimated by averaging the excursions at the last 12 reversals of each run and then averaging across six runs.
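The stimulus and adaptive rule described above can be sketched as follows. The FM tone assumes that "excursion" means the peak deviation of the instantaneous frequency from 750 Hz, so the modulation index is excursion/2 Hz. The tone duration, starting excursion, minimum excursion, total reversals per run, and trial cap are not given in the text, so those defaults are hypothetical, as are the function names. The `respond` callback stands in for one three-interval trial and returns whether the listener chose the modulated interval.

```python
import numpy as np


def fm_tone(fs, duration_s, fc=750.0, fm=2.0, excursion_hz=0.0):
    """Sinusoid at fc whose instantaneous frequency swings +/- excursion_hz at rate fm."""
    t = np.arange(int(round(duration_s * fs))) / fs
    # Phase is the integral of fc + excursion_hz * cos(2*pi*fm*t).
    return np.sin(2 * np.pi * fc * t + (excursion_hz / fm) * np.sin(2 * np.pi * fm * t))


def two_down_one_up(respond, start_hz=20.0, step_hz=1.0, min_hz=1.0,
                    n_reversals=16, n_average=12, max_trials=1000):
    """Adaptive track converging near 70.7% correct.

    Returns the mean excursion at the last n_average reversals of one run.
    Start, minimum, reversal count, and trial cap are hypothetical defaults.
    """
    excursion = start_hz
    n_correct = 0
    last_direction = None
    reversals = []
    trials = 0
    while len(reversals) < n_reversals:
        trials += 1
        if trials > max_trials:
            raise RuntimeError("track did not reach the requested number of reversals")
        if respond(excursion):
            n_correct += 1
            if n_correct < 2:
                continue  # need two correct in a row before making the task harder
            n_correct = 0
            direction = -1  # harder: smaller excursion
        else:
            n_correct = 0
            direction = +1  # easier: larger excursion
        if last_direction is not None and direction != last_direction:
            reversals.append(excursion)  # excursion at which the track changed direction
        last_direction = direction
        excursion = max(min_hz, excursion + direction * step_hz)
    return sum(reversals[-n_average:]) / n_average
```

A two-down, one-up rule makes the task harder only after two consecutive correct responses, so it settles where P(correct)² ≈ 0.5, that is, at about 70.7% correct. A listener's threshold would then be the mean of six such runs.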

Physiological responses were measured at the Cz electrode (BioSemi EEG system), referenced to the earlobe. Subjects watched a silent, subtitled movie while the 100-Hz monotonized syllable /dah/ was presented 4,000 times each in quiet and in a broadband noise (SNR 10 dB) (25, 26). In half of the trials, the acoustic signal was inverted. Trials were randomly ordered, with a randomly jittered interstimulus interval to prevent spectrotemporal artifact. Epochs containing eye blinks were rejected (electrodes above and below the eye; a minimum of 1,800 clean trials remained for each subject and condition after eye-blink rejection). Bootstrapping yielded Gaussian-distributed estimates of the PLV referenced to the stimulus (28, 29) at 100, 200, 300, 400, and 500 Hz. Two hundred independent PLV estimates were averaged for each combination of quiet/noise and signal polarity, each from 400 draws (with replacement) of clean trials; positive and negative polarity PLVs were then averaged.
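The bootstrap described in the preceding paragraph can be sketched as follows. This is a simplified reading of the description: each trial's phase comes from a single rectangular-window FFT bin rather than the multitaper estimate implied by ref. 29, and the function names and epoch layout are hypothetical. Fixing the number of draws at 400 for every subject and condition is one plausible way to keep the upward bias of the PLV (which shrinks as more trials are averaged) comparable across subjects with different numbers of clean trials.

```python
import numpy as np


def unit_phasors(epochs, fs, freq):
    """Per-trial unit phasor exp(i * phase) at the FFT bin nearest freq."""
    n_samples = epochs.shape[1]
    k = int(round(freq * n_samples / fs))  # rfft bin index for freq
    z = np.fft.rfft(epochs, axis=1)[:, k]
    return z / np.abs(z)


def bootstrap_plv(phasors, n_boot=200, n_draws=400, rng=None):
    """Mean of n_boot PLVs, each from n_draws trials sampled with replacement."""
    rng = np.random.default_rng() if rng is None else rng
    draws = rng.integers(0, len(phasors), size=(n_boot, n_draws))
    return float(np.mean(np.abs(phasors[draws].mean(axis=1))))


def polarity_averaged_plv(pos_epochs, neg_epochs, fs, freq,
                          n_boot=200, n_draws=400, rng=None):
    """Bootstrap each stimulus polarity separately, then average the two PLVs."""
    rng = np.random.default_rng() if rng is None else rng
    plv_pos = bootstrap_plv(unit_phasors(pos_epochs, fs, freq), n_boot, n_draws, rng)
    plv_neg = bootstrap_plv(unit_phasors(neg_epochs, fs, freq), n_boot, n_draws, rng)
    return 0.5 * (plv_pos + plv_neg)
```

Averaging PLV magnitudes, rather than pooling phasors across polarities, means the half-cycle phase shift produced by inverting the stimulus does not cancel the response at the fundamental.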

Mixed-effects ANOVAs were run on the PLV (one per harmonic) with fixed-effect factors of listening condition (quiet or noise), listener group, and signal polarity; a random-effect factor of subject; and the listening condition × listener group interaction. F ratios were calculated using the method of restricted maximum likelihood. Corresponding P values used conservative lower bounds on the degrees of freedom (df) (49). Mauchly sphericity tests (50) found no significant nonsphericity at any frequency (P > 0.5), so no adjustments to F ratios or df were made. Results were Bonferroni corrected for multiple comparisons (five harmonics).
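With one ANOVA per harmonic (five tests), the Bonferroni correction amounts to multiplying each P value by 5 and capping the result at 1, which is equivalent to comparing uncorrected P values against 0.05/5 = 0.01. A minimal sketch, with a hypothetical function name and toy inputs that are not the study's values:

```python
def bonferroni(p_values, n_tests=None):
    """Bonferroni-adjusted P values: multiply by the number of tests, capped at 1."""
    m = len(p_values) if n_tests is None else n_tests
    return [min(1.0, p * m) for p in p_values]
```

For example, `bonferroni([0.004, 0.3], n_tests=5)` gives approximately `[0.02, 1.0]`.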

Acknowledgments

Nina Kraus and Trent Nicol helped set up the FFR recording apparatus at Boston University. This work was sponsored by grants from the National Institutes of Health (National Institute on Deafness and Other Communication Disorders R01 DC009477) and a National Security Science and Engineering Faculty Fellowship (both to B.G.S.-C.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

References

- 1.Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annu Rev Neurosci. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205. [DOI] [PubMed] [Google Scholar]

- 2.Kidd G, Jr, Arbogast TL, Mason CR, Gallun FJ. The advantage of knowing where to listen. J Acoust Soc Am. 2005;118:3804–3815. doi: 10.1121/1.2109187. [DOI] [PubMed] [Google Scholar]

- 3.Darwin CJ, Brungart DS, Simpson BD. Effects of fundamental frequency and vocal-tract length changes on attention to one of two simultaneous talkers. J Acoust Soc Am. 2003;114:2913–2922. doi: 10.1121/1.1616924. [DOI] [PubMed] [Google Scholar]

- 4.Shinn-Cunningham BG. Object-based auditory and visual attention. Trends Cogn Sci. 2008;12:182–186. doi: 10.1016/j.tics.2008.02.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Best V, Marrone N, Mason CR, Kidd G, Jr, Shinn-Cunningham BG. Effects of sensorineural hearing loss on visually guided attention in a multitalker environment. J Assoc Res Otolaryngol. 2009;10:142–149. doi: 10.1007/s10162-008-0146-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Conway AR, Cowan N, Bunting MF. The cocktail party phenomenon revisited: The importance of working memory capacity. Psychon Bull Rev. 2001;8:331–335. doi: 10.3758/bf03196169. [DOI] [PubMed] [Google Scholar]

- 7.Saunders GH, Haggard MP. The clinical assessment of “Obscure Auditory Dysfunction” (OAD) 2. Case control analysis of determining factors. Ear Hear. 1992;13:241–254. doi: 10.1097/00003446-199208000-00006. [DOI] [PubMed] [Google Scholar]

- 8.Colflesh GJ, Conway AR. Individual differences in working memory capacity and divided attention in dichotic listening. Psychon Bull Rev. 2007;14:699–703. doi: 10.3758/bf03196824. [DOI] [PubMed] [Google Scholar]

- 9.Akeroyd MA. Are individual differences in speech reception related to individual differences in cognitive ability? A survey of twenty experimental studies with normal and hearing-impaired adults. Int J Audiol. 2008;47(Suppl 2):S53–S71. doi: 10.1080/14992020802301142. [DOI] [PubMed] [Google Scholar]

- 10.Dawes P, Bishop D. Auditory processing disorder in relation to developmental disorders of language, communication and attention: A review and critique. Int J Lang Commun Disord. 2009;44:440–465. doi: 10.1080/13682820902929073. [DOI] [PubMed] [Google Scholar]

- 11.Lorenzi C, Gilbert G, Carn H, Garnier S, Moore BC. Speech perception problems of the hearing impaired reflect inability to use temporal fine structure. Proc Natl Acad Sci USA. 2006;103:18866–18869. doi: 10.1073/pnas.0607364103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Strelcyk O, Dau T. Relations between frequency selectivity, temporal fine-structure processing, and speech reception in impaired hearing. J Acoust Soc Am. 2009;125:3328–3345. doi: 10.1121/1.3097469. [DOI] [PubMed] [Google Scholar]

- 13.Goverts ST, Houtgast T. The binaural intelligibility level difference in hearing-impaired listeners: The role of supra-threshold deficits. J Acoust Soc Am. 2010;127:3073–3084. doi: 10.1121/1.3372716. [DOI] [PubMed] [Google Scholar]

- 14.Lorenzi C, Debruille L, Garnier S, Fleuriot P, Moore BC. Abnormal processing of temporal fine structure in speech for frequencies where absolute thresholds are normal. J Acoust Soc Am. 2009;125:27–30. doi: 10.1121/1.2939125. [DOI] [PubMed] [Google Scholar]

- 15.Ruggles D, Shinn-Cunningham B. Spatial selective auditory attention in the presence of reverberant energy: Individual differences in normal-hearing listeners. J Assoc Res Otolaryngol. 2011;12:395–405. doi: 10.1007/s10162-010-0254-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Hopkins K, Moore B. The contribution of temporal fine structure information to the intelligibility of speech in noise. J Acoust Soc Am. 2008;123:3710. doi: 10.1121/1.3037233. [DOI] [PubMed] [Google Scholar]

- 17.MacDonald EN, Pichora-Fuller MK, Schneider BA. Effects on speech intelligibility of temporal jittering and spectral smearing of the high-frequency components of speech. Hear Res. 2010;261:63–66. doi: 10.1016/j.heares.2010.01.005. [DOI] [PubMed] [Google Scholar]

- 18.Lavandier M, Culling JF. Speech segregation in rooms: Monaural, binaural, and interacting effects of reverberation on target and interferer. J Acoust Soc Am. 2008;123:2237–2248. doi: 10.1121/1.2871943. [DOI] [PubMed] [Google Scholar]

- 19.Singh G, Pichora-Fuller MK, Schneider BA. The effect of age on auditory spatial attention in conditions of real and simulated spatial separation. J Acoust Soc Am. 2008;124:1294–1305. doi: 10.1121/1.2949399. [DOI] [PubMed] [Google Scholar]

- 20.Tun PA, McCoy S, Wingfield A. Aging, hearing acuity, and the attentional costs of effortful listening. Psychol Aging. 2009;24:761–766. doi: 10.1037/a0014802. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21. Rönnberg J, Rudner M, Foo C, Lunner T. Cognition counts: A working memory system for ease of language understanding (ELU). Int J Audiol. 2008;47(Suppl 2):S99–S105. doi: 10.1080/14992020802301167.

- 22. Arlinger S, Lunner T, Lyxell B, Pichora-Fuller MK. The emergence of cognitive hearing science. Scand J Psychol. 2009;50:371–384. doi: 10.1111/j.1467-9450.2009.00753.x.

- 23. Best V, Ozmeral EJ, Kopco N, Shinn-Cunningham BG. Object continuity enhances selective auditory attention. Proc Natl Acad Sci USA. 2008;105:13174–13178. doi: 10.1073/pnas.0803718105.

- 24. Jahnke JC. Primacy and recency effects in serial-position curves of immediate recall. J Exp Psychol. 1965;70:130–132. doi: 10.1037/h0022013.

- 25. Wible B, Nicol T, Kraus N. Correlation between brainstem and cortical auditory processes in normal and language-impaired children. Brain. 2005;128:417–423. doi: 10.1093/brain/awh367.

- 26. Russo N, Nicol T, Trommer B, Zecker S, Kraus N. Brainstem transcription of speech is disrupted in children with autism spectrum disorders. Dev Sci. 2009;12:557–567. doi: 10.1111/j.1467-7687.2008.00790.x.

- 27. Smith JC, Marsh JT, Brown WS. Far-field recorded frequency-following responses: Evidence for the locus of brainstem sources. Electroencephalogr Clin Neurophysiol. 1975;39:465–472. doi: 10.1016/0013-4694(75)90047-4.

- 28. Lachaux JP, Rodriguez E, Martinerie J, Varela FJ. Measuring phase synchrony in brain signals. Hum Brain Mapp. 1999;8:194–208. doi: 10.1002/(SICI)1097-0193(1999)8:4<194::AID-HBM4>3.0.CO;2-C.

- 29. Thomson DJ. Spectrum estimation and harmonic analysis. Proc IEEE. 1982;70:1055–1096.

- 30. Hornickel J, Skoe E, Nicol T, Zecker S, Kraus N. Subcortical differentiation of stop consonants relates to reading and speech-in-noise perception. Proc Natl Acad Sci USA. 2009;106:13022–13027. doi: 10.1073/pnas.0901123106.

- 31. Banai K, et al. Reading and subcortical auditory function. Cereb Cortex. 2009;19:2699–2707. doi: 10.1093/cercor/bhp024.

- 32. Russo NM, et al. Deficient brainstem encoding of pitch in children with Autism Spectrum Disorders. Clin Neurophysiol. 2008;119:1720–1731. doi: 10.1016/j.clinph.2008.01.108.

- 33. Wong PC, Skoe E, Russo NM, Dees T, Kraus N. Musical experience shapes human brainstem encoding of linguistic pitch patterns. Nat Neurosci. 2007;10:420–422. doi: 10.1038/nn1872.

- 34. Bidelman GM, Krishnan A, Gandour JT. Enhanced brainstem encoding predicts musicians’ perceptual advantages with pitch. Eur J Neurosci. 2011;33:530–538. doi: 10.1111/j.1460-9568.2010.07527.x.

- 35. Krishnan A, Gandour JT, Bidelman GM. The effects of tone language experience on pitch processing in the brainstem. J Neurolinguist. 2010;23:81–95. doi: 10.1016/j.jneuroling.2009.09.001.

- 36. Kujawa SG, Liberman MC. Adding insult to injury: Cochlear nerve degeneration after “temporary” noise-induced hearing loss. J Neurosci. 2009;29:14077–14085. doi: 10.1523/JNEUROSCI.2845-09.2009.

- 37. Oertel D, Bal R, Gardner SM, Smith PH, Joris PX. Detection of synchrony in the activity of auditory nerve fibers by octopus cells of the mammalian cochlear nucleus. Proc Natl Acad Sci USA. 2000;97:11773–11779. doi: 10.1073/pnas.97.22.11773.

- 38. Gatehouse S, Noble W. The Speech, Spatial and Qualities of Hearing Scale (SSQ). Int J Audiol. 2004;43:85–99. doi: 10.1080/14992020400050014.

- 39. Darwin CJ, Hukin RW. Effects of reverberation on spatial, prosodic, and vocal-tract size cues to selective attention. J Acoust Soc Am. 2000;108:335–342. doi: 10.1121/1.429468.

- 40. Deroche M, Culling JF. Effects of reverberation on perceptual segregation of competing voices by difference in fundamental frequency. J Acoust Soc Am. 2008;123:2978.

- 41. Grose JH, Mamo SK, Hall JW 3rd. Age effects in temporal envelope processing: Speech unmasking and auditory steady state responses. Ear Hear. 2009;30:568–575. doi: 10.1097/AUD.0b013e3181ac128f.

- 42. Grose JH, Mamo SK. Processing of temporal fine structure as a function of age. Ear Hear. 2010;31:755–760. doi: 10.1097/AUD.0b013e3181e627e7.

- 43. Pichora-Fuller MK, Singh G. Effects of age on auditory and cognitive processing: Implications for hearing aid fitting and audiologic rehabilitation. Trends Amplif. 2006;10:29–59. doi: 10.1177/108471380601000103.

- 44. Helfer KS, Freyman RL. Aging and speech-on-speech masking. Ear Hear. 2008;29:87–98. doi: 10.1097/AUD.0b013e31815d638b.

- 45. Ross B, Fujioka T, Tremblay KL, Picton TW. Aging in binaural hearing begins in mid-life: Evidence from cortical auditory-evoked responses to changes in interaural phase. J Neurosci. 2007;27:11172–11178. doi: 10.1523/JNEUROSCI.1813-07.2007.

- 46. Clinard CG, Tremblay KL, Krishnan AR. Aging alters the perception and physiological representation of frequency: Evidence from human frequency-following response recordings. Hear Res. 2010;264:48–55. doi: 10.1016/j.heares.2009.11.010.

- 47. Badri R, Siegel JH, Wright BA. Auditory filter shapes and high-frequency hearing in adults who have impaired speech in noise performance despite clinically normal audiograms. J Acoust Soc Am. 2011;129:852–863. doi: 10.1121/1.3523476.

- 48. Stephens D, Zhao F. The role of a family history in King Kopetzky Syndrome (obscure auditory dysfunction). Acta Otolaryngol. 2000;120:197–200. doi: 10.1080/000164800750000900.

- 49. Pinheiro J, Bates D. Mixed-Effects Models in S and S-PLUS. New York: Springer; 2000.

- 50. Box GEP. Problems in the analysis of growth and wear curves. Biometrics. 1950;6:362–389.