Abstract

In the context of large-scale multiple hypothesis testing, the hypotheses often possess certain group structures based on additional information such as Gene Ontology in gene expression data and phenotypes in genome-wide association studies. It is hence desirable to incorporate such information when dealing with multiplicity problems to increase statistical power. In this article, we demonstrate the benefit of considering group structure by presenting a p-value weighting procedure which utilizes the relative importance of each group while controlling the false discovery rate under weak conditions. The procedure is easy to implement and shown to be more powerful than the classical Benjamini–Hochberg procedure in both theoretical and simulation studies. By estimating the proportion of true null hypotheses, the data-driven procedure controls the false discovery rate asymptotically. Our analysis on one breast cancer dataset confirms that the procedure performs favorably compared with the classical method.

Keywords: Adaptive procedure, Benjamini–Hochberg procedure, Group structure, Positive regression dependence

1. INTRODUCTION

Ever since the seminal work of Benjamini and Hochberg (1995), the concept of false discovery rate (FDR) and the FDR controlling Benjamini–Hochberg (BH) procedure have been widely adopted to replace traditional methods, like family-wise error rate (FWER), in fields such as bioinformatics where a large number of hypotheses are tested. For example, in gene expression microarray experiments or brain image studies, each gene or brain location is associated with one hypothesis. Usually there are tens of thousands of them. The more conservative family-wise error rate controlling procedures often have extremely low power as the number of hypotheses gets large. Under the FDR framework, the power can be increased.

In many cases, there is prior information that a natural group structure exists among the hypotheses, or the hypotheses can be divided into subgroups based on the characteristics of the problem. For example, for gene expression data, Gene Ontology (The Gene Ontology Consortium 2000) provides a natural stratification among genes based on three ontologies. In genome-wide association study, each marker might be tested for association with several phenotypes of interest; or tests might be conducted assuming different genetic models (Sun et al. 2006). In clinical trials, hypotheses are commonly divided into primary and secondary based on the relative importance of the features of the disease (Dmitrienko, Offen, and Westfall 2003). Ignorance of such group structures in data analysis can be dangerous. Efron (2008) pointed out that applying multiple comparison treatments such as FDR to the entire set of hypotheses may lead to overly conservative or overly liberal conclusions within any particular subgroup of the cases.

In multiple hypothesis testing, utilizing group structure can be achieved by assigning weights for the hypotheses (or p-values) in each group. Such an idea of using group information and weights has been adopted by several authors. Efron (2008) considered the Separate-Class model where the hypotheses are divided into distinct groups, and showed the legitimacy of such separate analysis for FDR methods. Benjamini and Hochberg (1997) analyzed both the p-value weighting and the error weighting methods and evaluated different procedures. Genovese, Roeder, and Wasserman (2006) investigated the merit of multiple testing procedures using weighted p-values and claimed that their weighted Benjamini–Hochberg procedure controls the FWER and FDR while improving power. Wasserman and Roeder (2006) further explored their p-value weighting procedure by introducing an optimal weighting scheme for FWER control. Roeder et al. (2006) considered linkage study to weight the p-values and showed their procedure improved power considerably when the linkage study is informative. Although in clinical trials, Finner and Roter (2001) pointed out that FDR control is hardly used, it is still potentially interesting to explore possible applications of FDR with group structures in clinical trials settings. Other notable publications include Storey, Taylor, and Siegmund (2004) and Rubin, van der Laan, and Dudoit (2005).

Very few results, however, have been published so far on proper p-value weighting schemes for procedures that control the FDR. In this paper, we will present the Group Benjamini–Hochberg (GBH) procedure, which offers a weighting scheme based on a simple Bayesian argument and utilizes the prior information within each group through the proportion of true nulls among the hypotheses. Our procedure controls the FDR not only for independent hypotheses but also for p-values with certain dependence structures. When the proportion of true null hypotheses is unknown, we show that by estimating it in each group, the data-driven GBH procedure offers asymptotic FDR control for p-values under weak dependence. This extends the results of both Genovese, Roeder, and Wasserman (2006) and Storey, Taylor, and Siegmund (2004).

When the information on group structure is less apparent, an alternative is to apply techniques such as clustering to assign groups. It can be a good strategy when we have spatially clustered hypotheses, that is, if one hypothesis is false, the nearby hypotheses are more likely to be false. For example, Quackenbush (2001) pointed out that in microarray studies, genes that are contained in a particular pathway or respond to a common environmental challenge, should show similar patterns of expression. Clustering methods are useful for identifying such gene expression patterns in time or space.

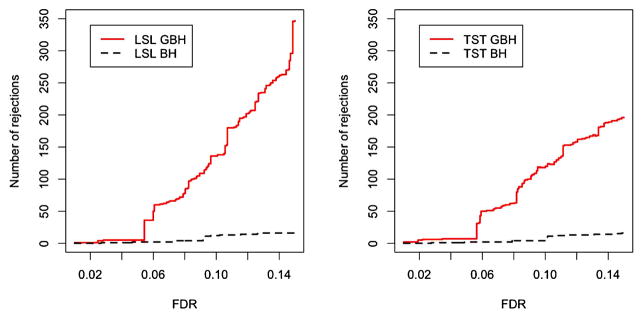

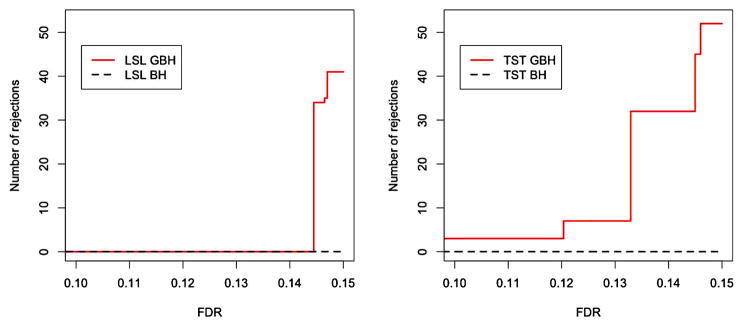

Our simulation results indicate that when the proportions of true nulls in each group are different, the GBH procedure is more powerful than the BH procedure while keeping the FDR controlled at the desired level. The GBH procedure also works well for situations where the number of signals is small among the hypotheses. Therefore, the procedure could be applied to microarray or genome-wide association studies where a large number of genes are monitored but only a few among them are actually differentially expressed or associated with disease. We apply our procedure to the analysis of a well-known breast cancer microarray dataset using two different grouping methods. The results indicate that the GBH procedure is able to identify more genes than the BH procedure by putting more focus on the potentially important groups. Figure 1 shows the advantage of the GBH procedure over the BH procedure under k-means clustering for two methods of estimating the true null hypotheses in each group.

Figure 1.

Breast cancer study, 24,184 genes. Plot shows the number of signals detected by GBH and BH procedures versus prespecified FDR level. Left panel: adaptive LSL GBH procedure. Right panel: adaptive TST GBH procedure. Details for LSL and TST approaches are in Section 2.3. Genes are assigned into six groups using k-means clustering. Data from van’t Veer et al. (2002). The online version of this figure is in color.

The rest of the paper is organized as follows. After a brief review of the FDR framework and the classical BH procedure, we present our GBH procedure in Section 2.2 and investigate our weighting scheme from both practical and Bayesian perspectives. Comparison of the classical BH and the GBH procedures in terms of expected number of rejections is discussed in Section 2.4. After discussing the data-driven GBH procedure in Section 2.3, we prove its asymptotic FDR control property in Section 3. Simulation studies of the BH and GBH procedures for normal random variables are reported in Section 4, including both independent and positive regression dependent cases. In Section 5, we show an application of the GBH procedure on a breast cancer dataset, using both the Gene Ontology grouping and k-means clustering strategies. The proofs for the main theorems are included in the Appendix.

2. THE GBH PROCEDURE

In this section, we introduce the Group Benjamini–Hochberg (GBH) procedure. It takes advantage of the proportion of true null hypotheses, which represents the relative importance of each group. We first examine the case where the proportions are known and then discuss data-driven procedures where the proportions are estimated based on the data.

2.1 Preliminaries

We first review the FDR framework and the classical BH procedure. Consider the problem of testing N hypotheses Hi vs HAi, i ∈ IN = {1, …, N} among which n0 are null hypotheses and n1 = N − n0 are alternatives (signals). Let V be the number of null hypotheses that are falsely rejected (false discoveries) and R be the total number of rejected hypotheses (discoveries). Benjamini and Hochberg (1995) introduced the FDR, which is defined as the expected ratio of V and R when R is positive, that is,

| (2.1) |

where R ∨ 1 ≡ max(R, 1). They also proposed the BH procedure which focuses on the ordered p-values P(1) ≤ ··· ≤ P(N) from N hypothesis tests. Given a level α ∈ (0, 1), the BH procedure rejects all hypotheses of which P(i) ≤ P(k), where

| (2.2) |

Benjamini and Hochberg (1995) proved that for independent hypotheses, the BH procedure controls the FDR at level π0α where π0 = n0/N is the proportion of true null hypotheses. Hence, the BH procedure actually controls the FDR at a more stringent level. One can therefore increase the power by first estimating the unknown parameter π0 using, say, π̂0, and then applying the BH procedure on the weighted p-values π̂0Pi, i = 1, …, N at level α. Such a data-driven method is referred to as an adaptive procedure.

2.2 The GBH Procedure for the Oracle Case

When group information is taken into consideration, we assume that the N hypotheses can be divided into K disjoint groups with group sizes ng, g = 1, …, K. Let Ig be the index set of the gth group. The index set IN of all hypotheses satisfies

| (2.3) |

where Ig,0 = {i ∈ Ig : Hi is true} consists of indices for null hypotheses and Ig,1 = {i ∈ Ig : Hi is false} is for the alternatives. Let ng,0 = |Ig,0| and ng,1 = ng − ng,0 be the number of null and alternative hypotheses in group g, respectively. Then πg,0 = ng,0/ng and πg,1 = ng,1/ng are the corresponding proportions of null and alternative hypotheses in group g. Let

| (2.4) |

be the overall proportion of null hypotheses. In this section, we consider the so-called “oracle case,” where πg,0 ∈ [0, 1] is assumed to be given for each group. The case for unknown πg,0 is discussed in Section 2.3.

Definition 1 (The GBH procedure for the oracle case)

For each p-value in group g, calculate the weighted p-values . Let if πg,0 = 1. If πg,0 = 1 for all g, accept all the hypotheses and stop. Otherwise go to the next step.

Pool all the weighted p-values together and let be the corresponding order statistics.

-

Compute

If such a k exists, reject the k hypotheses associated with ; otherwise do not reject any of the hypotheses.

The GBH procedure weights the p-values for each group depending on the corresponding proportion of true null hypotheses in the group, that is, πg,0. This idea is intuitively appealing because for any group with a small πg,0, more rejections are expected and vice versa. The weight πg,0/πg,1 differentiates groups by (relatively) enlarging p-values in groups with larger πg,0, therefore larger power is expected after applying the BH procedure on the pooled weighted p-values.

Benjamini and Yekutieli (2001) introduced the concept of positive regression dependence on subsets (PRDS) and proved that the BH procedure controls the FDR for p-values with such property. Finner, Dickhaus, and Rosters (2009, p. 603) argued that the PRDS property implies

| (2.5) |

for any j ∈ IN, i ∈ ∪g Ig,0 and t ∈ (0, jα/N]. Examples of distribution satisfying the PRDS property include multivariate normal with nonnegative correlations and (absolute) multivariate t-distribution. It is worth pointing out that independence is a special case of PRDS; see Benjamini and Yekutieli (2001) and Finner, Dickhaus, and Rosters (2007 and Finner, Dickhaus, and Rosters (2009) for details.

For the oracle case, the following theorem guarantees that the GBH procedure controls the FDR rigorously for p-values with the PRDS property (hence provides FDR control for independent p-values as well).

Theorem 1

Assume the hypotheses satisfy (2.3) and the proportion of trull null hypotheses, πg,0 ∈ [0, 1], is known for each group, then the GBH procedure controls the FDR at level α for p-values with the PRDS property.

Genovese, Roeder, and Wasserman (2006) analyzed the method of p-value weighting for independent p-values and proved FDR control of their procedure with a general set of weights. Some of the arguments in the proof of the above theorem can be implied by theorem 1 in Genovese, Roeder, and Wasserman (2006, p. 513). Nevertheless, we not only extend the result to p-values with the PRDS property, but also make up a small gap in their proof of FDR control (Genovese, Roeder, and Wasserman 2006, p. 514, first equation). Furthermore, the GBH procedure makes use of the information (i.e., πg,0) embedded within each group, and provides a quasi-optimal way of assigning weights. Its advantage can be understood in two perspectives.

The GBH Procedure Works Well for Data With Sparse Signals

In many cases of multiple hypothesis testing, there tends to be a strong assumption that there are few signals, that is, most of the N hypotheses are true nulls. In microarray studies, for instance, majority of the genes are not related to certain disease, therefore we have the situation in which the πg,0 of each group will be close to 1. Our weighting strategy performs well in such settings. For example, suppose we have two groups of p-values with π1,0 = 0.9 and π2,0 = 0.99. According to the GBH procedure, we are going to multiply 0.9/0.1 = 9 to the first group and 0.99/0.01 = 99 to the second group. Performing multiple comparison procedure on the combined weighted p-values means we put more attention on the p-values from the first group rather than the second one. As a result, more signals are expected. In the extreme case where one of the proportions is 1, say, π1,0 = 1 and π2,0 ∈ (0, 1), according to the GBH procedure, all the p-values in the first group are rescaled to ∞, therefore no rejection (signal) would be reported in that group and our full attention would be focused on the second group. This is consistent with the fact that the first group contains no signal.

The GBH Procedure Has a Bayesian Interpretation

From the Bayesian point of view, the weighting scheme, πg,0/πg,1, can be interpreted as follows. Let Hg,i be a hypothesis in group g such that Hg,i = 0 with probability πg,0 and Hg,i = 1 with probability πg,1 = 1 − πg,0. Let Pg,i be the corresponding p-value and has a conditional distribution

The “Bayesian FDR” (Efron and Tibshirani 2002) of Hg,i for Pg,i ≤ p is

| (2.6) |

If Ug follows a uniform distribution, the above equation becomes

Note that the above equation is an increasing function of [Fg(p)]−1(πg,0/πg,1)p, therefore ranking the Bayesian FDR is equivalent to focusing on the quantity

| (2.7) |

Then the ideal weight for the p-values in group g should be [Fg(p)]−1(πg,0/πg,1), which can be viewed as two sources of influence on the p-values. If Fg = F for all g, the first influence is through [F(p)]−1, which can be regarded as the p-value effect. The other influence is the relative importance of the groups, that is, πg,0/πg,1. In practice, Fg is usually unknown and hard to estimate, especially when the number of alternatives is small. Hence, we just focus on the group effect in the ideal weight. Note that the weight we choose, that is, πg,0/πg,1 is not an aggressive one, since the cut-off point for the original p-values is big for important groups with small πg,0/πg,1, which implies that the ideal weight for groups with small πg,0/πg,1 is relatively smaller.

2.3 The Adaptive GBH Procedure

As mentioned in the previous sections, knowledge of the proportion of true null hypotheses, that is, π0, can be useful in improving the power of FDR-controlling procedures. Such information, however, is not available in practice. Estimating the unknown quantity using observed data is then a natural idea, which brings us to the adaptive procedure.

Definition 2 (The adaptive GBH procedure)

For each group, estimate πg,0 by π̂g,0.

Apply the GBH procedure in Definition 1, with πg,0 replaced by π̂g,0.

Various estimators of π0 were proposed by Schweder and Spjøtvoll (1982) and Storey (2002) and Storey, Taylor, and Siegmund (2004) based on the tail proportion of p-values, and by Efron et al. (2001) based on the mixture densities of null and alternative distribution of hypotheses. Jin and Cai (2007) estimated π0 based on the empirical characteristic function and Fourier analysis. Meinshausen and Rice (2006) and Genovese and Wasserman (2004) provided consistent estimators of π0 under certain conditions.

The adaptive GBH procedure does not require a specific estimator of πg,0, therefore people may choose their favorite estimator in practice. We take the following two examples to illustrate the practical use of the adaptive GBH procedure.

Example 1 [Least-Slope (LSL) method]

The least-slope (LSL) estimator proposed by Benjamini and Hochberg (2000) performs well in situations where signals are sparse. Hsueh, Chen, and Kodell (2003) compared several methods including Schweder and Spjøtvoll (1982), Storey (2002), and the LSL estimator, and found that the LSL estimator gives the most satisfactory empirical results.

Definition 3 (Adaptive LSL GBH procedure)

For p-values in each group g, starting from i = 1, compute lg,i = (ng + 1 − i)/(1 − Pg,(i)), where Pg,(i) is the ith order statistics in group g. As i increases, stop when lg,j > lg,j−1 for the first time.

- For each group, compute the LSL estimator of πg,0

(2.8) Apply the GBH procedure at level α with πg,0 replaced by .

The LSL estimator is asymptotically related to the estimator proposed by Schweder and Spjøtvoll (1982). It is also conservative in the sense that it overestimates πg,0 in each group.

Example 2 [The Two-Stage (TST) method]

Benjamini, Krieger, and Yekutieli (2006) proposed the TST adaptive BH procedure and showed that it offers finite-sample FDR control for independent p-values.

Definition 4 (Adaptive TST GBH procedure)

For p-values in each group g, apply the BH procedure at level α′ = α/(1 + α). Let rg,1 be the number of rejections.

- For each group, compute the TST estimator of πg,0

(2.9) Apply the GBH procedure at level α′ with πg,0 replaced by .

The TST method applies the BH procedure in the first step and uses the number of rejected hypotheses as an estimator of the number of alternatives.

Both the LSL and TST methods are straightforward to implement in practice and in the next section we show both of them have good asymptotic properties. Our simulation and real data analysis show that they outperform the adaptive BH procedure, in which the group structure of the data is not considered.

Remark 1

We should point out that in applications, the adaptive GBH procedure does not rely on which estimator people choose. The performance, however, does depend on the distribution of signal among groups. If there is no significant difference in the proportions of signals among hypotheses for different groups, the adaptive GBH procedure degenerates to uni-group case. As long as the groups are dissimilar in terms of true null proportion and the estimator of πg,0 can detect (not necessarily fully detect) the proportion of true null hypotheses for each group, the adaptive GBH procedure is expected to outperform the adaptive BH procedure.

2.4 Comparison of the GBH and BH Procedures

In previous sections, we show that the GBH procedure controls the FDR for the finite sample case when the πg,0’s are known. It is of interest to compare the performance of GBH with that of the BH procedure. In this section, we are going to compare the expected number of rejections for the two procedures.

Benjamini and Hochberg (1995) showed that the BH procedure controls the FDR at level π0α. In order to compare the BH and GBH procedures at the same α level, we consider the following rescaled p-values:

| (2.10) |

where πg,0 ∈ (0, 1). Note that π0 = πg,0(1 − π0)/πg,1 when πg,0 = π0 for all g.

For group g, let Dg be the distribution of p-values such that

| (2.11) |

where Ug and Fg are the distribution functions for p-values under the null and alternative hypotheses. Let D̃g(t) be the empirical cumulative distribution function of p-values in group g, that is,

| (2.12) |

It is proved in Lemma 2 that under weak conditions D̃g(t) converges uniformly to Dg(t).

For the uni-group case, in the framework of (2.10), it has been proved by several authors (Benjamini and Hochberg 1995; Storey 2002; Genovese and Wasserman 2002; Genovese, Roeder, and Wasserman 2006) that the threshold of the BH procedure can be written as

where is the empirical cumulative distribution function of the p-values, and the procedure rejects any hypothesis with a p-value less than or equal to TBH. We can extend this result to the framework of GBH. For notation purpose define where ag = πg,0(1 − π0)/(1 − πg,0). Let GN (a, t) be the empirical distribution of the weighted p-values, that is,

| (2.13) |

Note that N · GN (a, t) is the number of rejections for the (oracle) GBH procedure with respect to the threshold t on the weighted p-values. When π0 < 1, where π0 defined in (2.4) is the overall proportion of null hypotheses, it can be shown that the threshold of the GBH procedure is equivalent to

where c(a) = {t : 0 ≤ t ≤ maxg ag}.

For any fixed threshold t ∈ c(a), let

[RBH(t)] and

[RBH(t)] and

[RGBH(t)] be the expected number of rejections of the BH and GBH procedure, respectively. The following theorem provides a sufficient condition for

[RGBH(t)] be the expected number of rejections of the BH and GBH procedure, respectively. The following theorem provides a sufficient condition for

[RBH(t)] ≤

[RBH(t)] ≤

[RGBH(t)].

[RGBH(t)].

Lemma 1

Let Ug and Fg be the distributions of p-values under the null and alternative hypotheses in group g. Assume Ug = U and Fg = F for all g. If U ~ Unif[0, 1] and x ↦ F(t/x) is convex for x ≥ t̃, where

, then

[RBH(t)] ≤

[RBH(t)] ≤

[RGBH(t)].

[RGBH(t)].

Take the classical normal mean model for an example. Suppose we observe Xi = θ + Zi, where . Consider the multiple testing problem

The distribution of p-values under alternative is F(u) = 1 − Φ[Φ−1 (1 − u) − θA], where Φ is the standard Normal distribution function. It can be shown that x ↦ F(t/x) is convex if

| (2.14) |

where φ is the standard Normal density function. Note that t/t̃ is the threshold of the unscaled p-values for rejecting the corresponding hypotheses in one group, therefore t/t̃ is small. Since the right-hand side of (2.14) is a decreasing function of t/t̃, (2.14) becomes θA ≤ 4.12 when t/t̃ ≤ 0.05 and θA ≤ 5.33 when t/t̃ ≤ 0.01. This suggests the convexity is true for most of the cases.

For adaptive procedures, πg,0 is replaced by its estimator π̂g,0. Let where âg = π̂g,0(1 − π̂0)/(1 − π̂g,0) and π̂0 = Σg ng π̂0/N. To conduct the BH procedure adaptively, we first estimate π0 by π̂0 and then perform the BH procedure at level α/π̂0. The corresponding threshold of the adaptive BH procedure is

| (2.15) |

and the threshold of the adaptive GBH procedure is

| (2.16) |

where

| (2.17) |

Remark 2

Both (2.15) and (2.16) depend on the data, hence they are no longer fixed. In the next section we are going to prove that T̂BH and T̂GBH converges in probability to some fixed and , respectively. Theorem 4 in Section 3.1 demonstrates that and therefore the adaptive GBH procedure rejects more than the adaptive BH procedure asymptotically.

3. GBH ASYMPTOTICS

In many applications of multiple hypothesis testing, not only are the proportions of true null hypotheses unknown, but the number of hypotheses is also very large. It is hence applicable to analyze the behavior of the GBH procedure for large N. In this section, we focus on the asymptotic property of the adaptive GBH procedure.

Genovese, Roeder, and Wasserman (2006) and Storey, Taylor, and Siegmund (2004) proved some useful results for asymptotic FDR control using empirical process argument for the BH procedure. We extend them further in the setting of the GBH procedure. We first discuss the case where we have consistent estimator of the proportion of true null hypotheses, then move on to a more general case.

3.1 Adaptive GBH With Consistent Estimator of πg,0

When N → ∞ and the number of groups K is finite, we assume the following condition is satisfied in every group

| (3.1) |

By the construction, Σg πg = 1 and πg,0 + πg,1 = 1. The following lemma shows that (2.12) converges uniformly to (2.11) under the above condition.

Lemma 2

Under (3.1), let Ug(t) and Fg(t) be continuous functions. For any t ≥ 0, if the p-values satisfy

| (3.2) |

| (3.3) |

Then .

Storey, Taylor, and Siegmund (2004) described weak dependence as any type of dependence in which Conditions (3.2) and (3.3) are satisfied. Weak dependence contains the case of independent p-values, but for p-values with the PRDS property, these conditions are not necessarily true. An example is given in Section 4.

In this section, we focus on the case when we have consistent estimator of πg,0 in every group, that is,

| (3.4) |

Recall that , where âg = π̂g,0 (1 − π̂0)/(1 − π̂g,0). Under the above condition, we have . Let be the limiting distribution of the weighted p-values for all groups and let B(a, t) = t/G(a, t). Then define

The following theorem establishes the asymptotic equivalence of (2.16) and , and thus implies asymptotic FDR control of the adaptive GBH procedure.

Theorem 2

Suppose Conditions (3.1) through (3.4) are satisfied for all groups. Suppose further that Ug(t) = t for 0 ≤ t ≤ 1 in every group. If t ↦ B(a, t) has a nonzero derivative at and limt↓0 B(a, t) ≠ α, then and FDR(T̂GBH) ≤ α + o(1).

Note that the statement of the theorem has a similar flavor as theorem 2 in Genovese, Roeder, and Wasserman (2006, p. 515). But our assumption is weaker and more importantly, the π̂g,0’s are estimated based on the data.

Similarly, for the adaptive BH procedure, we define the distribution of all p-values as C(t) = π0U(t) + (1 − π0)F(t), where U(t) and F(t) are continuous functions. Let be such that

The following theorem illustrates that asymptotically the adaptive GBH procedure has more expected number of rejections than the adaptive BH procedure. Note that RBH(·) and RGBH(·) denote the number of rejections of the BH and GBH procedures, respectively.

Theorem 3

Under Conditions (3.1) through (3.4). Assume in each group Ug(t) = U(t) = t, 0 ≤ t ≤ 1 and Fg(t) = F(t), where x ↦ F(t/x) is convex for x ≥ ming ag. Assume further that both B(a, t) and t/C(t/π0) are increasing in t. If π0 ≥ α and limt↓0 t/C(t/π) ≤ α, then , and therefore

Remark 3

Sometimes the assumption that all the alternative hypotheses across different groups follow the same distribution may not be appropriate. The condition Fg(t) = F(t) in the above theorem is necessary to establish Theorem 3. However, that assumption is not a necessity in establishing Theorem 2 and Theorem 4, where we show FDR control for the adaptive GBH procedure.

3.2 Discussion for Inconsistent Estimator of πg,0

For a general estimator of πg,0, let π̂g,0 ∈ (0, 1] be an estimator of πg,0 such that

| (3.5) |

where the latter condition means at least one ζg is less than 1 among all groups. Let where ρg = ζg/(1 − ζg) and ρg = ∞ when ζg = 1. Then, we have . Let be the limiting distribution of the weighted p-values for all groups. Denote and define

| (3.6) |

Theorem 4

Suppose Conditions (3.1) through (3.3) and (3.5) are satisfied for all groups. Suppose further that Ug(t) = t for 0 ≤ t ≤ 1 and ζg ≥ bgπg,0 for some bg > 0 in every group. If t ↦ B(ρ, t) has a nonzero derivative at and limt↓0 B(ρ, t) ≠ α, then and FDR(T̂GBH) ≤ α/ming{bg} + o(1). In particular, FDR(T̂GBH) ≤ α + o(1) when bg ≥ 1 for all groups.

The theorem generalizes the result in Theorem 2 and indicates that the adaptive GBH procedure controls the FDR at level α not only for consistent estimators of πg,0’s, but also for asymptotically conservative estimators.

Remark 4

For the TST estimator in (2.9), note that

where T̂0 is the threshold for the BH procedure in the first step. Following from Theorem 4, , where t0 satisfies t0/(πg,0t0 + πg,1Fg(t0)) = α′. Since Fg(t0) ≤ 1, it can be shown that t0 ≤ (1 − πg,0)α′/(1 − α′πg,0). Then,

Therefore, by Theorem 4 the adaptive TST GBH procedure controls FDR at level α′/(1 − α′) = α asymptotically.

Remark 5

As ng → ∞, the LSL estimator defined in (2.8) can be viewed as a special case of the estimator π̂g,0(λ) proposed by Schweder and Spjøtvoll (1982). For fixed λ, π̂g,0(λ) satisfies

under Conditions (3.2) and (3.3). Therefore, π̂g,0(λ) is asymptotically conservative and by Theorem 4 the FDR is controlled asymptotically at α for π̂g,0(λ).

4. SIMULATION STUDIES

For simplicity, assume the hypotheses are divided into two groups. Without loss of generality, assume there are n observations in each group. Consider the following model, let

| (4.1) |

be the ith test statistic in group g, where Zgi and Z0 are independent standard Normal random variables. Note that Cov(Tgu, Tgv) = ξg, for u, v ∈ {1, …, n}, u ≠ v and the model satisfies the PRDS property discussed in Section 2.2 when 0 ≤ ξg ≤ 1. Similar dependence structures were considered in Finner, Dickhaus, and Roters (2007) and Benjamini, Krieger, and Yekutieli (2006). Note that when ξg > 0, Conditions (3.2) and (3.3) are not satisfied for large N due to the extra Z0 term.

Consider the hypothesis testing problem with two groups H0 : θj = 0 vs Ha : θj > 0, for j = 1, …, 2n. In this section, we compare the performances of the BH and GBH procedures for both oracle and adaptive cases. For the adaptive BH procedure, we compute the (either LSL or TST) estimator π̂0 for all p-values and then apply the BH procedure at level α/π̂0.

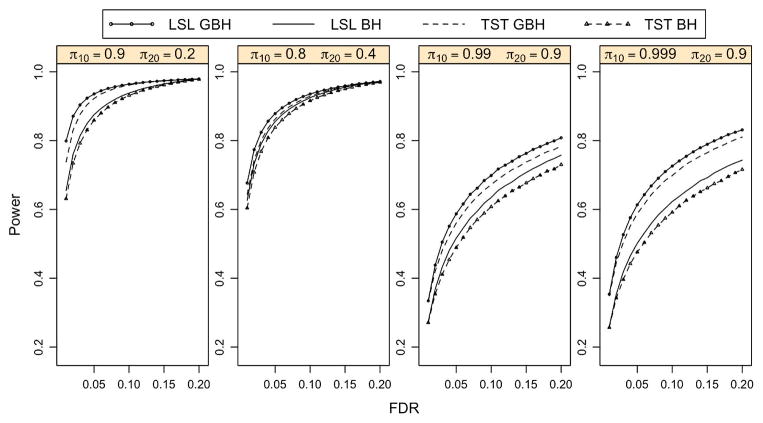

Four combinations of πg,0’s are considered: (1) π1,0 = 0.9 vs π2,0 = 0.2; (2) π1,0 = 0.8 vs π2,0 = 0.4; (3) π1,0 = 0.99 vs π2,0 = 0.9; (4) π1,0 = 0.999 vs π2,0 = 0.9. In each case, we generate ng = 10,000 test statistics for each of the two groups based on (4.1). In every group, ngπg,0 of the hypotheses are null and the rest are alternatives with corresponding θ = 3 in one group and θ = 5 in the other group. Other combinations of n and θ’s are also considered and the results are similar. Since we have the information about which hypothesis is from the alternative, the power for the two procedures can be obtained, which is the proportion of true rejections among the false null hypotheses. The power of the BH and GBH procedures is evaluated in pairs based on 200 iterations for each of the 20 FDR levels between 0.01 and 0.2. The results for the oracle and adaptive cases are as follows.

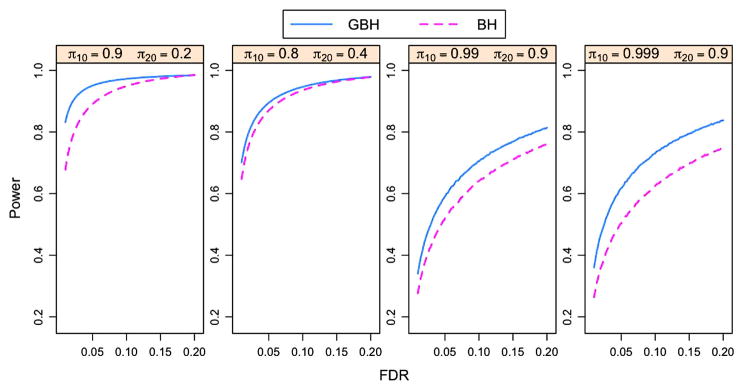

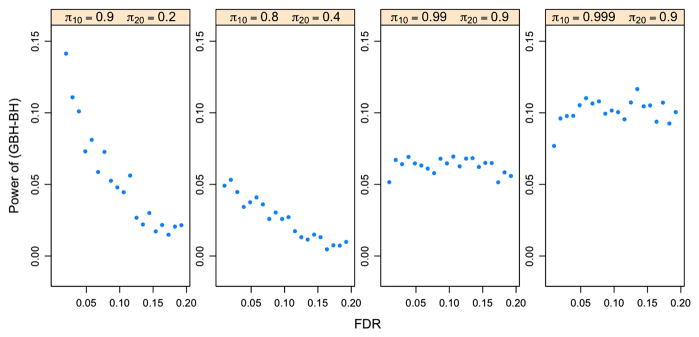

For the oracle case with independent p-values, Figure 2 indicates that the GBH procedure outperforms the BH procedure in all four cases, especially when πg,0’s are close to 1 (the last two panels). The more the groups differ in πg,0, the larger the difference is obtained in the power of the two procedures. This is also true for p-values with the PRDS property. Figure 3 shows the power difference between the GBH and BH procedures for p-values under model (4.1) with ξ1 = ξ2 = 0.5. All points being above zero indicates the GBH procedure outperforms the BH procedure for all four cases.

Figure 2.

Power curves of the oracle BH and GBH procedures for independent p-values. The p-values are generated based on model (4.1) with ξ1 = ξ2 = 0 and n = 10,000 for each group. Each panel corresponds to one combination of πg,0’s for two groups. The online version of this figure is in color.

Figure 3.

Power differences of the oracle BH and GBH procedures for p-values with the PRDS property. The p-values are generated based on model (4.1) with ξ1 = ξ2 = 0.5 and n = 10,000 for each group. Each panel corresponds to one combination of πg,0’s for two groups. The online version of this figure is in color.

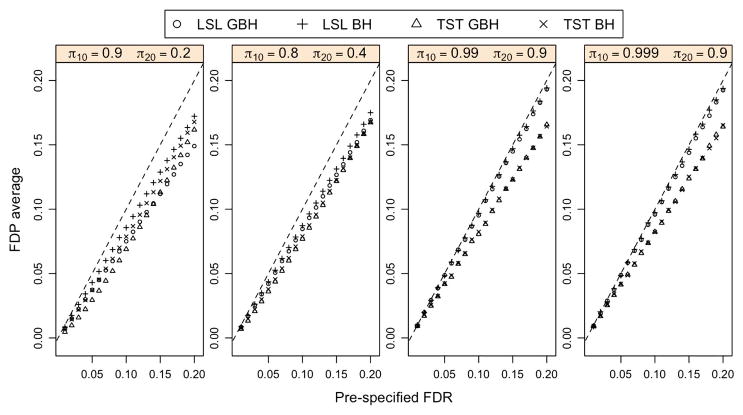

For the adaptive case with independent p-values, we estimate the unknown πg,0’s using either the TST or LSL method introduced in Section 2.3. Figure 4 indicates that the average of the false discovery proportion (FDP) is controlled at a prespecified FDR level for both the BH and GBH procedures with either the TST or LSL method. The power improvement of the adaptive GBH over the adaptive BH procedure is shown in Figure 5. Both the TST GBH and the LSL GBH procedures are more powerful than the corresponding adaptive BH procedures.

Figure 4.

Comparison of the average FDP and the prespecified FDR for the adaptive BH and GBH procedures. The dash line is the 45-degree line. The p-values are generated based on model (4.1) with ξ1 = ξ2 = 0 and n = 10,000 for each group. Each point of the FDP is the average of 200 iterations. The online version of this figure is in color.

Figure 5.

Power curves of the adaptive BH and GBH procedures for independent p-values. The p-values are generated based on model (4.1) with ξ1 = ξ2 = 0 and n = 10,000 for each group. Each panel corresponds to one combination of πg,0’s for two groups. The online version of this figure is in color.

We also analyze the performance of the adaptive GBH procedure for weighting scheme other than πg,0/πg,1. According to (2.6), when Ug is uniform, the Bayesian FDR is [πg,0/Dg(p)]p, where Dg(p) is the distribution function of p-values in group g. It’s therefore natural to consider the weight π̂g,0/D̃g(p), where D̃g is the empirical distribution, as pointed out by a referee. Although this weight takes into consideration of the distribution of p-values in each group, the power of the adaptive procedure using this weight is often low in the situation where we have sparse signals and estimating the alternative distribution is difficult.

5. APPLICATIONS

van’t Veer et al. (2002) used microarrays to study the primary breast tumors of 78 young patients, of which 44 developed cancer in less than 5 years and the other 34 were cancer free during that period. In total 24,184 genes were monitored and p-values were obtained for each gene by comparing the mean ratio of log10 intensities. A fraction of the data is listed in Table 1.

Table 1.

Part of the breast cancer dataset in van’t Veer et al. (2002)

| Gene ID | Developed cancer in 5 years

|

Cancer-free in 5 years

|

p-value | ||||||

|---|---|---|---|---|---|---|---|---|---|

| patient 1 | patient 2 | … | patient 44 | patient 45 | patient 46 | … | patient 78 | ||

| AA000990 | 0.080 | 0.130 | … | 0.136 | −0.513 | −0.098 | … | −0.015 | 0.7937 |

| AA001113 | −0.159 | −0.087 | … | −0.116 | 0.190 | −0.204 | … | 0.082 | 0.4897 |

| AA001360 | −0.018 | −0.024 | … | −0.255 | 0.114 | −0.042 | … | 0.200 | 0.1224 |

| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ |

NOTE: The entries are adjusted log10(red/green) ratios from cDNA microarrays. The p-values were calculated based on a two-sample t-test for each gene.

In order to apply the GBH procedure which makes use of the group structure, we need to stratify the genes first. Here we consider two grouping strategies.

5.1 Grouping Using Gene Ontology (GO)

The GO project (The Gene Ontology Consortium 2000) provides detailed annotations for a gene product’s biology. It consists of three ontologies, namely Biological Process, Molecular Function, and Cellular Component, each representing a key concept in Molecular Biology. The GO terms are classified into one of the three ontologies. Based on the GO terms, one can construct a top-down tree diagram, in which the higher nodes represent more general biological concepts.

The tree structure provides the idea of GO grouping which can be summarized as follows. After choosing one of the three ontologies, say Biological Process, some higher nodes are selected as ancestors according to the generic GO slim file, which contains the broad overview of each ontology without the detail of each GO terms (available at http://www.geneontology.org/GO.slims.shtml). Next, for those genes with GO IDs, we trace them upward to the nodes we have chosen. Genes that share common ancestors are then grouped together. The biggest concern for GO grouping in our case is that the mapping rate is low. Even though the GO consortium updates their data base on a daily basis, not every gene in our data has a GO ID. For our case, 9492 of the 24,184 genes have the annotation information for Biological Process, therefore the mapping rate for our data is 9492/24,184 ≈ 39%.

However, we may still use the remaining 9492 genes to see the difference of using group information in multiple hypothesis testings. We first divide the genes into four groups with respect to Biological Process, that is, (1) Cell communication; (2) Cell growth and/or maintenance; (3) Development; and (4) Multifunction. The results for the adaptive BH and GBH procedures are listed in Table 2. For simplicity, we just report the results for the LSL method.

Table 2.

Comparison of the adaptive LSL GBH and the adaptive LSL BH procedures for GO grouping. FDR level = 0.15

| Group | # of genes | LSL BH | LSL GBH | ||

|---|---|---|---|---|---|

| (1) Cell communication | 593 | 0.995 | 0 | 3 | |

| (2) Cell growth/maintenance | 4142 | 0.987 | 0 | 13 | |

| (3) Development | 434 | 0.989 | 0 | 0 | |

| (4) Multifunction | 4323 | 0.983 | 0 | 25 | |

| Total | 9492 | 0 | 41 |

At FDR level 0.15, Table 2 indicates that the adaptive GBH procedure focuses more on groups with smaller estimated πg,0’s, that is, groups (2) and (4), and is able to discover genes that are not detectable using the adaptive BH procedure. In fact, as shown in Figure 6, using either the LSL or TST method, the adaptive BH procedure cannot detect any signals when the FDR level is less than 0.15.

Figure 6.

Breast cancer study, 9492 genes. The plots show the number of genes detected by the adaptive BH and GBH procedures using Gene Ontology grouping. Left panel: the LSL method. Right panel: the TST method. Data from van’t Veer et al. (2002). The online version of this figure is in color.

Even though the mapping rate for this dataset is low, the idea of GO grouping could be a good choice if the data were collected in terms of GO identities; or the mapping between the GO ID and other gene IDs (e.g., GenBank Accession Number) was more complete. Then each group may correspond to different biological processes or genetic functions within the tumor and the GBH method can help us to find more signals among desired groups.

5.2 Grouping Using k-Means Clustering

Another grouping idea is to apply clustering. Here we choose k-means clustering with initial points satisfying maximum separation rule based on all the 78 samples. Note that we are not just clustering the p-values. Unlike GO grouping, k-means clustering makes use of the whole dataset and we do not have to worry about the mapping rate. Although we do have the difficulties regarding cluster analysis, for example, the choice of initial points, number of clusters, and the interpretation of each cluster, we use it as an illustrative example to compare the performances of the adaptive BH and GBH procedures.

In order to have a reliable estimator for each group, six clusters are selected such that within each cluster there are at least 200 genes. Table 3 shows the results for the two procedures using the LSL method at FDR level 0.1. Most of the additional discoveries found by the adaptive GBH procedure come from the first cluster, which is expected to contain more signals because the estimated πg,0 is relatively smaller than the others. Gene-annotation enrichment analysis confirms that those 109 genes selected by the GBH procedure in the first cluster are closely associated with cell cycle, mitosis, chromosome segregation, and phosphoprotein, which are common factors related to breast cancer. Similar analyses on the four and five-cluster cases indicate that the number of genes detected by the adaptive GBH procedure is 145 and 226, respectively. Out of those genes, 94 of them are overlapping with the six-cluster case. Comparing with an average of eight genes discovered by random grouping, which assigns groups randomly with the same group sizes as the above three cases, clustering and using the GBH procedure is advantageous in our case.

Table 3.

Comparison of the adaptive LSL GBH and the adaptive LSL BH procedures for k-means grouping. FDR level = 0.1

| Cluster | # of genes | LSL BH | LSL GBH | ||

|---|---|---|---|---|---|

| 1 | 1904 | 0.871 | 4 | 109 | |

| 2 | 214 | 0.991 | 0 | 0 | |

| 3 | 1368 | 0.999 | 0 | 0 | |

| 4 | 2458 | 0.969 | 1 | 19 | |

| 5 | 7058 | 0.999 | 4 | 2 | |

| 6 | 11,164 | 0.996 | 3 | 6 | |

| Total | 24,184 | 12 | 136 |

For comparison of the two procedures over a range of FDR levels, Figure 1 shows the increment in the number of signals detected by the adaptive GBH over BH procedure for both the LSL and TST methods. This indeed shows that by applying the GBH procedure, more signals can be detected.

6. SUMMARY

We have presented a new approach of p-value weighting procedure GBH for controlling the FDR when the hypotheses are believed to have some group structure. We prove that it controls the FDR for hypotheses with the positive regression dependence property when the proportions of true null hypotheses πg,0’s are known in each group. The weighting scheme (πg,0/πg,1) for the p-values in each group makes it possible to focus on groups that are expected to have more signals.

By estimating πg,0 for each group, we propose the adaptive GBH procedure and show that it controls the FDR asymptotically under weak dependence. We demonstrate the benefit of the adaptive GBH over BH by two methods of estimating πg,0, namely the LSL (Benjamini and Hochberg 2000) and the TST (Benjamini, Krieger, and Yekutieli 2006) estimators. As we have pointed out, the choice of the estimator for πg,0 in general does not affect the performance of the adaptive GBH procedure. In practice, people may choose the estimator based on their own preference.

Acknowledgments

James Hu and Harrison Zhou’s research is supported in part by NSF grant DMS-0645676. Hongyu Zhao’s research is supported in part by NSF grant DMS-0714817 and NIH grant GM59507.

APPENDIX: PROOFS

Proof of Theorem 1

The proof is based on the proof of theorem 4.1 in Finner, Dickhaus, and Roters (2009). Let ϕ = (ϕ1, …, ϕN) be the multiple testing procedure. ϕi = 0 means retaining Hi and ϕi = 1 means rejecting Hi. The FDR for oracle GBH is

Note that if πg,0 = 0 or πg,0 = 1 for some g, that group doesn’t contribute to the FDR because Ig,0 = ∅ if πg,0 = 0 and if πg,0 = 1 (we treat πg,0/πg,1 as ∞). Let η = {g : πg,0 ∈ (0, 1)}. Then

Using the proof of theorem 4.1 in Finner, Dickhaus, and Rosters (2009), we have

Proof of Lemma 1

For the unweighted case, the expected number of rejections of BH procedure for a given threshold t, where t ≤ t̃ = (1 − π0) maxg πg,0/πg,1 is

Similarly, the expected number of rejections of GBH procedure for t ≤ t̃ is

Let εg = ngπg,1/Σg ngπg,1 and xg = πg,0 (1 − π0)/πg,1. Now that x ↦ F(t/x) is convex for all x ≥ t̃. We have F(t/Σgεgxg) ≤ Σg εgF(t/xg), that is,

Therefore,

[RBH(t)] ≤

[RBH(t)] ≤

[RGBH(t)] for t ≤ t̃.

[RGBH(t)] for t ≤ t̃.

Proof of Lemma 2

Consider the estimator of Dg(t) defined in (2.12). Under (3.1), for any t ≥ 0,

where and by Glivenko–Cantelli Theorem. Therefore, .

Proof of Theorem 2

Theorem 4 generalizes this theorem. See the proof of Theorem 4.

Proof of Theorem 3

Under the conditions that Ug(t) = U(t) = t and Fg(t) = F(t) for all g, in the proof of Lemma 2, we show that G(a, t) ≥ C(t/π0) for all 0 ≤ t ≤ ming ag. Since G(a, π0) ≤ π0 = t/C(t/π0)|t=π0 and both G(a, t) and t/C(t/π0) are increasing, we have G(a, t) ≥ C(t/π0) for all 0 ≤ t ≤ maxg ag. Deduce B(a, t) ≤ t/C(t/π0) for t ∈ c(a). Therefore . Conditions limt↓0 t/C(t/π) ≤ α and π0 ≥ α guarantee that .

Note that both G(a, t) and t/C(t/π0) are continuous, we have

Since , deduce . For the adaptive BH procedure, the number of rejections RBH(T̂BH) = Σi∈IN{Pi ≤ T̂BH/π̂0} = N · CN (T̂BH/π̂0), and

where

by Glivenko–Cantelli Theorem and

by continuous mapping theorem. Therefore

. Analogously one can show that

. A more generalized argument is shown in the proof of Theorem 4. By dominant convergence theorem we have

and

. Therefore

[RBH(T̂BH)]/

[RBH(T̂BH)]/

[RGBH(T̂GBH)] ≤ 1 + o(1).

[RGBH(T̂GBH)] ≤ 1 + o(1).

Proof of Theorem 4

The proof applies Glivenko–Cantelli Theorem as in Storey, Taylor, and Siegmund (2004) and Genovese, Roeder, and Wasserman (2006). Let S = c(â) ∪ c(ρ). For any t ∈ S, we have

Note that for t ≥ 0,

| (A.1) |

where the last step is implied by Lemma 2. On the other hand,

Since Dg is continuous on [0, +∞) and limt→∞ Dg(t) = 1 is finite, Dg is uniform continuous. By continuous mapping theorem, . Therefore . So we have .

Let . According to (2.16) and (3.6), T̂GBH = supt∈c(â){t : BN (â, t) ≤ α} and , where . Note that the assumption limt↓0 B(π, t) ≠ α implies .

We first show . For any ξ > 0, note that B(ρ, t) is increasing for t ≥ maxg ρg, therefore , otherwise it contradicts with being the supremum. Fix δ > 0, for any δ′ ≥ δ, let . Then

where ε1 = Σg |ng,0/Nâg − πgπg,0/ρg|/(Σg πgπg,0/ρg), and ε2 = supt∈S |GN (â, t) − G(ρ, t)|/(Σg πgπg,0t′/ρg). Since and infδ′≥δ B(ρ, t′) > α, it can be derived that Pr(∩δ′≥δ {BN (â, t′) > α}) → 1 which implies .

On the other hand, since B(ρ, t) has a nonzero derivative at , it must be positive, otherwise cannot be the supremum of all t such that B(ρ, t) ≤ α. Thus, t ↦ B(ρ, t) is an increasing function and for any ξ > 0, . For any δ > 0, let ,

where and B(ρ, t°) < α. Then Pr(BN (â, t°) < α) → 1. Deduce . Combine this and previous result we get .

Next, we prove FDR(T̂GBH) ≤ α/ming{bg} + o(1). Let

be the empirical distribution of p-values under null hypothesis for adaptive GBH procedure. Note that implies for any δ > 0. Since , deduce Pr(T̂GBH > 0) → 1. On the other hand, the assumption ζ̂ < 1 rules out the situation where T̂GBH/âg → 0 for all groups. Therefore Pr(Σg Σi∈Ig {Pi ≤ T̂GBH/âg} ≥ 1) → 1. Then the false discovery proportion (FDP) is

where HN (â, T̂GBH) satisfies

By Condition (3.2), Glivenko–Cantelli Theorem implies . Therefore,

| (A.2) |

Now that and by (3.1),

| (A.3) |

Combine (A.2) and (A.3) we have

| (A.4) |

On the other hand,

where by (A.1) and by continuous mapping theorem. Therefore,

| (A.5) |

Since and ζ̄ < 1, we have . By (A.4) and (A.5),

By dominated convergence theorem,

| (A.6) |

Note that ζg ≥ bgπg,0 for some bg > 0. Deduce ρg ≥ bgπg,0/(1 − ζg). Since Ug(t) ≤ t for all t ≥ 0, we have

Hence, FDR(T̂GBH) ≤ α/ming{bg} + o(1).

Contributor Information

James X. Hu, Email: xing.hu@yale.edu, Department of Statistics, Yale University, New Haven, CT 06511.

Hongyu Zhao, Email: hongyu.zhao@yale.edu, Department of Epidemiology and Public Health, Yale University, New Haven, CT 06511.

Harrison H. Zhou, Email: huibin.zhou@yale.edu, Department of Statistics, Yale University, New Haven, CT 06511.

References

- Benjamini Y, Hochberg Y. Controlling the False Discovery Rate: A Practical and Powerful Approach to Multiple Testing. Journal of the Royal Statistical Society, Ser B. 1995;57:289–300. [Google Scholar]

- Benjamini Y, Hochberg Y. Multiple Hypothesis Testing With Weights. Scandinavian Journal of Statistics. 1997;24:407–418. [Google Scholar]

- Benjamini Y, Hochberg Y. On the Adaptive Control of the False Discovery Rate in Multiple Testing With Independent Statistics. Journal of Educational and Behavioral Statistics. 2000;25:60–83. [Google Scholar]

- Benjamini Y, Yekutieli D. The Control of the False Discovery Rate in Multiple Testing Under Dependency. The Annals of Statistics. 2001;29:1165–1188. [Google Scholar]

- Benjamini Y, Krieger MA, Yekutieli D. Adaptive Linear Step-Up Procedures That Control the False Discovery Rate. Biometrika. 2006;93(3):491–507. [Google Scholar]

- Dmitrienko A, Offen WW, Westfall HP. Gatekeeping Strategies for Clinical Trials That Do Not Require All Primary Effects to Be Significant. Statistics in Medicine. 2003;22:2387–2400. doi: 10.1002/sim.1526. [DOI] [PubMed] [Google Scholar]

- Efron B. Simultaneous Inference: When Should Hypothesis Testing Problems be Combined? The Annals of Applied Statistics. 2008;2 (1):197–223. [Google Scholar]

- Efron B, Tibshirani R. Empirical Bayes Methods and False Discovery Rates for Microarrays. Genetic Epidemiology. 2002;23:70–86. doi: 10.1002/gepi.1124. [DOI] [PubMed] [Google Scholar]

- Efron B, Tibshirani R, Storey JD, Tusher V. Empirical Bayes Analysis of a Microarray Experiment. Journal of the American Statistical Association. 2001;96:1151–1160. [Google Scholar]

- Finner H, Roters M. On the False Discovery Rate and Expected Type 1 Errors. Biometrical Journal. 2001;43(8):985–1005. [Google Scholar]

- Finner H, Dickhaus T, Roters M. Dependency and False Discovery Rate: Asymptotics. The Annals of Statistics. 2007;35:1432–1455. [Google Scholar]

- Finner H, Dickhaus T, Roters M. On the False Discovery Rate and an Asymptotically Optimal Rejection Curve. The Annals of Statistics. 2009;37(2):596–618. [Google Scholar]

- Genovese CR, Wasserman L. A Stochastic Process Approach to False Discovery Control. The Annals of Statistics. 2002;32:1035–1061. [Google Scholar]

- Genovese CR, Wasserman L. A Stochastic Process Approach to False Discovery Control. The Annals of Statistics. 2004;32:1035–1061. [Google Scholar]

- Genovese CR, Roeder K, Wasserman L. False Discovery Control With P-Value Weighting. Biometrika. 2006;93:509–524. [Google Scholar]

- Hsueh H, Chen J, Kodell R. Comparison of Methods for Estimating the Number of True Null Hypotheses in Multiplicity Testing. Journal of Biopharmaceutical Statistics. 2003;13(4):675–689. doi: 10.1081/BIP-120024202. [DOI] [PubMed] [Google Scholar]

- Jin J, Cai T. Estimating the Null and the Proportion of Non-Null Effects in Large-Scale Multiple Comparisons. Journal of the American Statistical Association. 2007;102:495–506. [Google Scholar]

- Meinshausen N, Rice J. Estimating the Proportion of False Null Hypotheses Among a Large Number of Independently Tested Hypotheses. The Annals of Statistics. 2006;34(1):373–393. [Google Scholar]

- Quackenbush J. Computational Analysis of Microarray Data. Nature Review Genetics. 2001;2:418–427. doi: 10.1038/35076576. [DOI] [PubMed] [Google Scholar]

- Roeder K, Bacanu SA, Wasserman L, Devlin B. Using Linkage Genome Scans to Improve Power of Association in Genome Scans. The American Journal of Human Genetics. 2006;78(2):243–252. doi: 10.1086/500026. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rubin D, van der Laan M, Dudiot S. Technical report. University of California, Berkeley, Division of Biostatistics; 2005. Multiple Testing Procedures Which Are Optimal at a Simple Alternative; p. 171. [Google Scholar]

- Schweder T, Spjøtvoll E. Plots of P-Values to Evaluate Many Tests Simultaneously. Biometrika. 1982;69:493–502. [Google Scholar]

- Storey JD. A Direct Approach to False Discovery Rates. Journal of the Royal Statistical Society, Ser B. 2002;64:479–498. [Google Scholar]

- Storey JD, Taylor JE, Siegmund D. Strong Control, Conservative Point Estimation, and Simultaneous Conservative Consistency of False Discovery Rates: A Unified Approach. Journal of the Royal Statistical Society, Ser B. 2004;66:187–205. [Google Scholar]

- Sun L, Craiu RV, Paterson AD, Bull SB. Stratified False Discovery Control for Large-Scale Hypothesis Testing With Application to Genome-Wide Association Studies. Genetic Epidemiology. 2006;30(6):519–530. doi: 10.1002/gepi.20164. [DOI] [PubMed] [Google Scholar]

- The Gene Ontology Consortium. Gene Ontology: Tool for the Unification of Biology. Nature Genetics. 2000;25:25–29. doi: 10.1038/75556. [DOI] [PMC free article] [PubMed] [Google Scholar]

- van’t Veer LJ, et al. Gene Expression Profiling Predicts Clinical Outcome of Breast Cancer. Nature. 2002;415:530–536. doi: 10.1038/415530a. [DOI] [PubMed] [Google Scholar]

- Wasserman L, Roeder K. “Weighted Hypothesis Testing,” technical report. Carnegie Mellon University, Dept. Statistics; 2006. [Google Scholar]