Abstract

We investigated the effects of systematic changes in levels of treatment integrity by altering errors of commission during error-correction procedures as part of discrete-trial training. We taught 3 students with autism to receptively identify nonsense shapes under 3 treatment integrity conditions (0%, 50%, or 100% errors of commission). Participants exhibited higher levels of performance during perfect implementation (0% errors). For 2 of the 3 participants, performance was low and showed no differentiation in the remaining conditions. Findings suggest that 50% commission errors may be as detrimental to teaching outcomes as 100% commission errors.

Keywords: treatment integrity, errors of commission, discrete-trial teaching, procedural fidelity

Treatment integrity has been conceptualized as consistent and accurate implementation of a treatment protocol in the manner in which it was designed (Gresham, 1989). Numerous applied studies provide evidence that client gains are best achieved when treatment integrity is high (e.g., DiGennaro, Martens, & Kleinmann, 2007; DiGennaro, Martens, & McIntyre, 2005). However, in each of these studies, client gains were simply correlated with observed integrity levels. The degree to which the treatment was implemented with fidelity was not systematically manipulated.

Some studies included parametric analyses in which differing levels of integrity were experimentally manipulated to demonstrate a functional relation between treatment integrity and client outcomes. For example, several studies demonstrated that behavior-reduction strategies were most effective when time-out procedures were implemented with 100% integrity (Northup, Fisher, Kahng, Harrell, & Kurtz, 1997; Rhymer, Evans-Hampton, McCurdy, & Watson, 2002). Similarly, Noell, Gresham, and Gansle (2002) and Wilder, Atwell, and Wine (2006) found that lower levels of treatment integrity impeded the acquisition of academic-related skills (i.e., mathematics performance and compliance with directives, respectively).

Although the studies mentioned above offer scientifically rigorous examples of the importance of treatment integrity considerations, all of these studies exclusively examined integrity errors of omission (i.e., not implementing components of a protocol). Recent discussions have expanded the concept of treatment integrity to include errors of commission (i.e., a violation of integrity due to implementation of procedures that are not prescribed by the treatment protocol; St. Peter Pipkin, Vollmer, & Sloman, 2010; Vollmer, Roane, Ringdahl, & Marcus, 1999; Worsdell, Iwata, Hanley, Thompson, & Kahng, 2000). These studies produced inconsistent results when behavior-reduction strategies featured commission errors. For example, Vollmer et al. (1999) demonstrated that omission and commission errors during implementation of differential reinforcement of alternative behavior reduced treatment efficacy if a higher rate of reinforcement was delivered for problem behavior. However, Worsdell et al. (2000) documented therapeutic outcomes during functional communication training despite commission errors (i.e., reinforcement of problem behavior). In another study, commission errors were found to be more detrimental than omission errors in both a human operant task and with children with disabilities in an educational setting (St. Peter Pipkin et al., 2010). Based on the mixed results of these studies, it is clear that additional research is needed to investigate the impact of commission errors on responding. To date, no study has exclusively examined errors of commission during instruction. Using the parametric analyses employed by Wilder et al. (2006) to examine the effects of omission errors on preschoolers' compliance to adult directives, we examined the impact of commission errors during discrete-trial instruction on the acquisition of receptive identification of nonsense shapes.

METHOD

Participants and Setting

We recruited three boys (Alek, Justin, and Salvatore) to participate in this study from a private school for individuals with autism. All of the participants were 8 years old and had been diagnosed with autism. Alek had also been diagnosed with a variant of Landau-Kleffner syndrome and a seizure disorder. During their enrollment, participants received discrete-trial instruction and were accustomed to delayed reinforcement through the use of a token economy. The study took place in the students' classrooms. Each classroom had six students, one teacher, and two to three teaching assistants.

Response Measurement, Interobserver Agreement, and Procedural Fidelity

The dependent variable was the percentage of discrete trials with accurate participant responding (i.e., percentage accuracy), which we defined as placement of the correct target stimulus into the experimenter's hand within 5 s of the discriminative stimulus. Percentage accuracy was calculated by dividing the total number of correct responses by the total number of trials and converting this ratio to a percentage. Data were collected by the first author (a doctoral-level behavior analyst) using paper and a pencil during one to three 5-min sessions 3 to 5 days per school week. The number of sessions varied due to the schedules of the classrooms and the experimenter. The participants did not receive classroom instruction by the first author outside experimental sessions. An independent second observer simultaneously collected data on participant behavior and the experimenter's implementation of discrete-trial training (i.e., procedural fidelity) during 57% (Alek), 35% (Justin), and 41% (Salvatore) of sessions. For participant behavior, an agreement was scored when both observers scored student performance identically (i.e., as correct or incorrect). Agreement was calculated as the number of agreements divided by agreements plus disagreements, multiplied by 100%. Mean percentage agreement was 99.6% (range, 90% to 100%) for Alek, 99.4% (range, 90% to 100%) for Justin, and 99.8% (range, 98% to 100%) for Salvatore. Procedural fidelity data were collected using a task analysis of discrete-trial teaching, error correction, and consequence manipulation (i.e., appropriate implementation of errors of commission). Procedural fidelity was 99.8% (range, 95% to 100%) for Alek and 100% for both Justin and Salvatore.
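The two calculations described above can be illustrated with a minimal sketch. The function names and the sample trial records are hypothetical and are not drawn from the study's data; the sketch assumes each trial is scored simply as correct (True) or incorrect (False).

```python
def percentage_accuracy(trials):
    """Correct responses divided by total trials, as a percentage."""
    if not trials:
        raise ValueError("at least one trial is required")
    return 100.0 * sum(trials) / len(trials)


def percentage_agreement(primary, secondary):
    """Trial-by-trial agreement: agreements / (agreements + disagreements) x 100."""
    if len(primary) != len(secondary) or not primary:
        raise ValueError("both observers must score the same, nonempty set of trials")
    agreements = sum(p == s for p, s in zip(primary, secondary))
    return 100.0 * agreements / len(primary)


# Hypothetical 10-trial session (True = correct, False = incorrect).
observer_1 = [True, True, False, True, False, True, True, True, False, True]
observer_2 = [True, True, False, True, True, True, True, True, False, True]

print(percentage_accuracy(observer_1))               # 70.0
print(percentage_agreement(observer_1, observer_2))  # 90.0
```

In this made-up session the observers disagree on one trial out of 10, which yields 90% agreement, the lower bound of the ranges reported for Alek and Justin.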

Design and Procedure

A combined multielement and nonconcurrent multiple baseline design across participants was used to evaluate the effects of differing levels of treatment integrity (i.e., commission errors) on participants' performance during discrete-trial instruction of receptive identification of nonsense shapes.

Baseline

The experimenter presented three different cards horizontally on a tabletop, each depicting a different nonsense shape. Subsequent to appropriate attending (i.e., hands on lap or table and eye contact with the experimenter), the experimenter instructed the participants to “find [shape],” at which time the experimenter simultaneously extended her hand to receive a card. We allowed participants up to 5 s to exhibit a response. The experimenter did not deliver programmed consequences (i.e., tokens, social praise, and error correction) contingent on performance. Each session consisted of 10 consecutive trials of the same shape. The location of shapes on the tabletop was varied after each trial and was determined a priori by computer randomization.
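The a priori randomization of card locations could be generated along the following lines. This is a sketch under assumptions: the text does not say what software was used, the function name and seed are hypothetical, and the sketch reads "varied after each trial" as meaning that no two consecutive trials share the same left-to-right arrangement.

```python
import itertools
import random


def card_layouts(shapes, n_trials=10, seed=None):
    """Return one left-to-right card arrangement per trial.

    Assumption: consecutive trials never repeat the same arrangement.
    """
    rng = random.Random(seed)
    arrangements = list(itertools.permutations(shapes))
    layouts = []
    for _ in range(n_trials):
        # Exclude the previous arrangement so the layout changes every trial.
        options = [a for a in arrangements if not layouts or a != layouts[-1]]
        layouts.append(rng.choice(options))
    return layouts


# Print a 10-trial layout sheet for the three nonsense shapes.
for trial, layout in enumerate(card_layouts(["kiki", "bouba", "manoo"], seed=1), start=1):
    print(trial, layout)
```

Fixing the seed makes the layout sheet reproducible, so it can be printed before the session and followed by the experimenter.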

Consequence manipulation

The purpose of this phase was to examine the effects of treatment integrity manipulation on participant performance. Participants received instruction as described in baseline; however, the experimenter rewarded unprompted correct responses with a token and social praise. In addition, least-to-most prompting was used as an error-correction procedure. Specifically, when participants made errors, the experimenter provided a gestural prompt to the correct shape followed by physical guidance at the hand if the gestural prompt did not evoke correct responding. Correct responding with the prompt was followed by a neutral statement only (e.g., “that's [shape]”). Thus, prompted correct responses were not followed by praise and a token. The consequence manipulation consisted of implementing discrete-trial teaching at three different integrity levels (i.e., 100%, 50%, and 0% commission errors). Each integrity level was associated with one nonsense shape (kiki: 100% errors; bouba: 50% errors; manoo: 0% errors). A commission error consisted of reinforcing an incorrect response by providing a token and social praise before the error-correction procedure was implemented. Thus, errors were reinforced as prescribed by each condition (see below) followed by error correction using least-to-most prompting. During the 100% errors of commission condition (i.e., kiki), every incorrect response was followed by an error of commission. During 50% errors of commission (i.e., bouba), every other incorrect response was rewarded with a token and social praise.¹ Commission errors were not made during the 0% errors of commission condition (i.e., manoo). That is, this condition was associated with perfect integrity levels. Each session consisted of 10 consecutive trials of one shape associated with that condition. The order of conditions was counterbalanced across sessions.
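The way the programmed error rate plays out within a session can be sketched as follows. The function names and the sample session are hypothetical. The sketch also assumes that, in the 50% condition, the alternation starts with the first incorrect response of a session; the text does not specify whether the first or the second error was reinforced, or whether the alternation carried over across sessions.

```python
def commission_errors(responses, condition):
    """Flag which incorrect responses are followed by a token and praise.

    responses: list of booleans, True = unprompted correct response.
    condition: programmed percentage of commission errors (0, 50, or 100).
    Returns one boolean per trial; True marks a commission error.
    """
    if condition not in (0, 50, 100):
        raise ValueError("condition must be 0, 50, or 100")
    flags = []
    incorrect_seen = 0
    for correct in responses:
        if correct:
            flags.append(False)  # a correct response cannot produce a commission error
            continue
        incorrect_seen += 1
        if condition == 100:
            flags.append(True)
        elif condition == 50:
            # Assumption: the 1st, 3rd, 5th, ... incorrect responses are reinforced.
            flags.append(incorrect_seen % 2 == 1)
        else:
            flags.append(False)
    return flags


def obtained_percentage(responses, flags):
    """Commission errors as a percentage of incorrect responses."""
    incorrect = responses.count(False)
    return 100.0 * sum(flags) / incorrect if incorrect else 0.0


# Hypothetical session with 7 incorrect responses in 10 trials.
session = [False, False, True, False, False, True, False, False, True, False]
flags = commission_errors(session, condition=50)
print(round(obtained_percentage(session, flags), 1))  # 57.1
```

Whenever a session contains an odd number of incorrect responses, the obtained percentage cannot equal 50% exactly, so small deviations from the programmed level are to be expected under this kind of schedule.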

RESULTS AND DISCUSSION

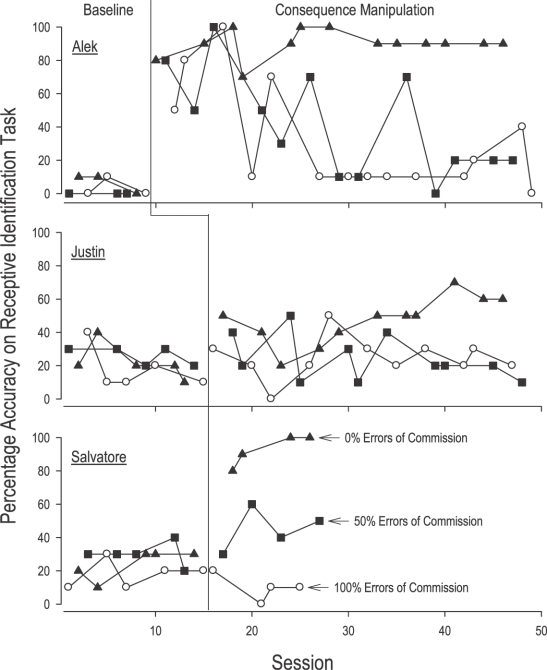

Figure 1 presents the participants' percentage accuracy across all sessions. During baseline, performances were generally low across all conditions. During the consequence manipulation, Alek's performance across the three conditions was initially undifferentiated. However, after five sessions in each condition, his percentage accuracy was high and stable during the 0% errors of commission condition (M = 90%) and low (M = 42%) during the 50% errors of commission condition. By the end of the study, his performance in the 50% errors condition did not differ from performance during the 100% errors condition (M = 31%). Like Alek, Justin's performance was initially undifferentiated, but after approximately five sessions, he exhibited a higher percentage accuracy when the experimenter made no commission errors (M = 47%). Performance during the 50% and 100% errors conditions was similar (M = 24% in both conditions). We observed differential outcomes immediately for Salvatore. His percentage accuracy was highest during the 0% commission errors condition (M = 92%). He averaged 45% and 10% accuracy during the 50% and 100% commission errors conditions, respectively.

Figure 1. Percentage accuracy on the receptive identification task during discrete-trial teaching across baseline and consequence manipulation conditions for each participant.

These findings replicate previous research and suggest that relatively higher degrees of treatment integrity yield better acquisition (Noell et al., 2002; Wilder et al., 2006). That is, commission errors appear to degrade performance just as omission errors do. The degrading levels of accuracy for Alek imply that persistent low integrity could adversely affect performance even for those students who show initial progress under suboptimal conditions. Most important, however, the present study suggests that making some commission errors contingent on incorrect responses may be just as detrimental as making many commission errors during discrete-trial instruction.

Several limitations exist that should be addressed in future research. First, all of the participants had previous exposure to errorless teaching strategies that were not used in this study. The degree to which this affected performance is unknown, but we suspect that Justin's relatively lower performance even under optimal training conditions may have been influenced by the type of teaching strategy we selected. Second, the assignment of one shape to each integrity condition was not counterbalanced across participants; thus, faster acquisition in one condition may have been due to the specific instructional target associated with that condition. Finally, it is unlikely that practitioners who work in applied settings will exhibit error patterns similar to those that we programmed in this preliminary study. That is, the procedural errors we examined may not be representative of those typically committed in applied settings, and, as a result, the ecological validity of our findings may be compromised. It will be valuable, then, for future studies to examine the effects of other types of procedural fidelity errors during discrete-trial instruction. Moreover, research suggests that practitioners are unlikely to implement procedures with 100% accuracy in practice settings (e.g., DiGennaro et al., 2007). Thus, future studies should examine the relation between decrements in treatment integrity and student outcomes to identify the level of integrity needed to prevent declines in student performance. These results could inform practitioners about acceptable criterion levels for staff training and ongoing evaluation.

Acknowledgments

Florence D. DiGennaro Reed and Derek D. Reed are now at the Department of Applied Behavioral Science, University of Kansas.

Footnotes

¹ Actual percentages of errors of commission were 52%, 52%, and 53% for Alek, Justin, and Salvatore, respectively. Obtained percentages deviated slightly from programmed percentages because student performance dictated how many commission errors would be possible in 10 trials.

REFERENCES

- DiGennaro F.D, Martens B.K, Kleinmann A.E. A comparison of performance feedback procedures on teachers' treatment implementation integrity and students' inappropriate behavior in special education classrooms. Journal of Applied Behavior Analysis. 2007;40:447–461. doi: 10.1901/jaba.2007.40-447.

- DiGennaro F.D, Martens B.K, McIntyre L.L. Increasing treatment integrity through negative reinforcement: Effects on teacher and student behavior. School Psychology Review. 2005;34:220–231.

- Gresham F.M. Assessment of treatment integrity in school consultation and prereferral intervention. School Psychology Review. 1989;18:37–50.

- Noell G.H, Gresham F.M, Gansle K.A. Does treatment integrity matter? A preliminary investigation of instructional implementation and mathematics performance. Journal of Behavioral Education. 2002;11:51–67.

- Northup J, Fisher W, Kahng S, Harrell R, Kurtz P. An assessment of the necessary strength of behavioral treatments for severe behavior problems. Journal of Developmental and Physical Disabilities. 1997;9:1–16.

- Rhymer K.N, Evans-Hampton T.N, McCurdy M, Watson T.S. Effects of varying levels of treatment integrity on toddler aggressive behavior. Special Services in the Schools. 2002;18:75–81.

- Pipkin C.S, Vollmer T.R, Sloman K.N. Effects of treatment integrity failures during differential reinforcement of alternative behavior: A translational model. Journal of Applied Behavior Analysis. 2010;43:47–70. doi: 10.1901/jaba.2010.43-47.

- Vollmer T.R, Roane H.S, Ringdahl J.E, Marcus B.A. Evaluating treatment challenges with differential reinforcement of alternative behavior. Journal of Applied Behavior Analysis. 1999;32:9–23.

- Wilder D.A, Atwell J, Wine B. The effects of varying levels of treatment integrity on child compliance during treatment with a three-step prompting procedure. Journal of Applied Behavior Analysis. 2006;39:369–373. doi: 10.1901/jaba.2006.144-05.

- Worsdell A.S, Iwata B.A, Hanley G.P, Thompson R.H, Kahng S. Effects of continuous and intermittent reinforcement for problem behavior during functional communication training. Journal of Applied Behavior Analysis. 2000;33:167–179. doi: 10.1901/jaba.2000.33-167.