Sir,

Negative studies play a very important part in evidence-based medicine, so their methodological and statistical quality should be strong enough for them to be considered truly negative.[1,2] In many articles published in medical journals, a study is considered positive if the P value is less than 0.05 and negative if it is more than 0.05. The P value has an important place in research, but it has well-recognized limitations, and its misuse is not uncommon. Conclusions based on observed P values should be drawn only after considering factors such as the study design, the primary objectives and the sample size of the study.[3] A non-significant P value indicates only that the study has “failed to disprove the null hypothesis”. It does not allow one to conclude that the “null hypothesis is true”, in other words, that both groups are equal or comparable (absence of evidence is not evidence of absence). Suppose that two interventions for the same disease are compared in two groups and the difference between the groups is not statistically significant (P > 0.05). In this case, it cannot be concluded that both interventions are equally effective or that one intervention can be used in place of the other.[4] The present study was done to critically analyze the negative studies published in Indian medical journals and to determine whether the conclusions reported in these studies are accurate.
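To make “absence of evidence is not evidence of absence” concrete, the sketch below computes the approximate power of a two-sided two-proportion z-test using the usual normal approximation. The cure rates (60% vs 75%) and the group size (30 per group) are hypothetical, chosen only for illustration, and the function name `two_proportion_power` is ours. With these assumptions a real 15-percentage-point difference would give P > 0.05 in roughly three trials out of four.

```python
from statistics import NormalDist


def two_proportion_power(p1: float, p2: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided two-proportion z-test (normal approximation).

    Hypothetical helper for illustration only.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    p_bar = (p1 + p2) / 2
    # Standard error of the difference under H0 (pooled) and under H1 (unpooled)
    se0 = (2 * p_bar * (1 - p_bar) / n_per_group) ** 0.5
    se1 = (p1 * (1 - p1) / n_per_group + p2 * (1 - p2) / n_per_group) ** 0.5
    diff = abs(p2 - p1)
    # Probability that the observed difference lands beyond either critical value
    return z.cdf((diff - z_crit * se0) / se1) + z.cdf((-diff - z_crit * se0) / se1)


# Hypothetical scenario: true cure rates 60% vs 75%, 30 patients per group
power = two_proportion_power(0.60, 0.75, 30)
print(f"power = {power:.2f}")  # about 0.23
print(f"chance of P > 0.05 despite a real difference = {1 - power:.2f}")
```

Under these assumptions, a “negative” result from such a trial says little about whether the interventions are equivalent.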

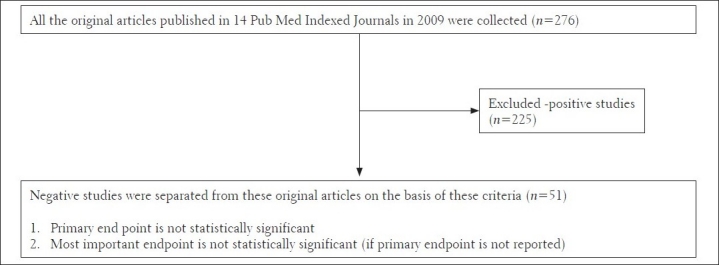

All the PubMed-indexed journals subscribed to by the central library of our institute were taken into consideration. These journals were Annals of Indian Academy of Neurology (AIAN), Indian Journal of Orthopedics (IJOrtho), Indian Journal of Critical Care Medicine (IJCCM), Indian Journal of Dermatology, Venereology and Leprology (IJDVL), Indian Journal of Nephrology (IJN), Indian Journal of Dermatology (IJD), Indian Journal of Ophthalmology (IJO), Indian Journal of Urology (IJU), Indian Journal of Anesthesia (IJA), Indian Journal of Psychiatry (IJPsy), Indian Pediatrics (IP), Indian Journal of Medical Research (IJMR), Indian Journal of Medical Science (IJMS) and Indian Journal of Community Medicine (IJCM). All the original articles published in these journals in 2009 were evaluated by the first author (JK) to identify negative studies. A study was considered negative if (1) its primary outcome was not statistically significant or (2), when no primary outcome was reported, its most important outcome was not statistically significant. The most important outcome was taken to be either the outcome for which a sample size calculation was done or the outcome that came first in the abstract. To check this classification, a random sample of 50 of the 276 studies was assessed by the second author (κ = 0.87). All the negative studies were then carefully assessed by the first author to analyze the statements made in their conclusions.
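Agreement between the two authors is summarized above by a kappa statistic, presumably Cohen's kappa, κ = (p_o − p_e)/(1 − p_e), where p_o is the observed agreement and p_e the agreement expected by chance (the letter does not name the variant, so this is an assumption). A minimal sketch for two raters classifying studies as “negative” or “not negative” follows; the counts are hypothetical and are not the letter's data, which are not reported, and `cohens_kappa` is an illustrative name.

```python
def cohens_kappa(both_yes: int, only_rater1: int, only_rater2: int, both_no: int) -> float:
    """Cohen's kappa for a 2x2 agreement table (hypothetical helper)."""
    n = both_yes + only_rater1 + only_rater2 + both_no
    observed = (both_yes + both_no) / n          # p_o: proportion of agreement
    r1_yes = (both_yes + only_rater1) / n        # rater 1's rate of "negative"
    r2_yes = (both_yes + only_rater2) / n        # rater 2's rate of "negative"
    # p_e: agreement expected if both raters classified independently at these rates
    expected = r1_yes * r2_yes + (1 - r1_yes) * (1 - r2_yes)
    return (observed - expected) / (1 - expected)


# Hypothetical counts for 50 double-checked studies (NOT the letter's data)
print(round(cohens_kappa(8, 2, 1, 39), 2))  # 0.81
```

Kappa corrects raw agreement (94% in this made-up table) for the agreement expected by chance, which is high when most studies fall in one category.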

Of the 276 studies published in these journals in 2009, 51 (18.5%) were negative studies. Of these 51 negative studies, 39 (76.5%) were prospective studies, 9 (17.6%) were clinical trials and 3 (5.9%) were retrospective studies. Proportions were measured in 24 (47.1%) studies and means/medians in 27 (52.9%). P values were calculated in all 51 studies, whereas a sample size calculation was reported in only 7 (13.7%). Contingency-table tests (chi-square and Fisher's exact test) were used in 21 (41.2%) studies, t tests (paired or unpaired) in 17 (33.3%), analysis of variance (ANOVA) in 7 (13.7%), the Mann-Whitney test in 3 (5.9%) and the Kruskal-Wallis test in 3 (5.9%). In 14 (27.5%, 95% CI 17.1-40.9%) of the 51 negative studies, it was concluded that the groups were “equal/comparable”; in 36 (70.6%, 95% CI 57.0-81.3%) it was stated that there was “no statistical significant difference”; and in only one study was it stated that the study “failed to disprove the null hypothesis”.
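The letter does not state how the 95% confidence intervals for these proportions were computed. As an assumption, the Wilson score interval sketched below reproduces the first interval (17.1-40.9% for 14 of 51) and agrees with the second (36 of 51) to within rounding. `wilson_ci` is an illustrative name, not a library function.

```python
from statistics import NormalDist


def wilson_ci(successes: int, n: int, confidence: float = 0.95) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (no continuity correction)."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # 1.96 for 95%
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * ((p * (1 - p) / n + z**2 / (4 * n**2)) ** 0.5) / denom
    return centre - half, centre + half


# Counts reported in the letter (out of 51 negative studies)
for label, k in [("equal/comparable", 14), ("no significant difference", 36)]:
    lo, hi = wilson_ci(k, 51)
    print(f"{label}: {k}/51 = {100 * k / 51:.1f}% (95% CI {100 * lo:.1f}-{100 * hi:.1f}%)")
```

The Wilson interval is chosen here because, unlike the simple Wald interval, it behaves well for small samples and proportions far from 50%.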

This study shows that in more than a quarter of the negative studies (14 of 51), the conclusions were incorrect because non-significant P values were used to claim that the null hypothesis was true. Such errors reflect poor knowledge of research methodology not only among researchers but also among the peer reviewers and editors of the journals. Investigators who wish to show that interventions are equivalent should use an appropriate study design, such as an equivalence or non-inferiority design.[5,6] This study also highlights the importance of adequately reporting the parameters needed to check the validity of a study, such as sample size calculations. The underreporting of these parameters has already been explored in a few studies of Western and Indian journals.[7–9] It further highlights the importance of statistical review: all submitted manuscripts should be sent for statistical review, and every journal should have a statistical editor. The conclusions of such articles may become the basis for using one intervention in place of another. To prevent this, readers who identify such incorrect conclusions should write a letter to the editor of the journal concerned so that the errors are communicated to other readers.
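The difference between “no significant difference” and a demonstrated non-inferiority can be shown with a confidence interval for the difference in cure rates. A minimal sketch, assuming a hypothetical trial (45/60 cured with a new drug, 48/60 with the standard drug), a hypothetical non-inferiority margin of 10 percentage points, and a simple Wald interval (real trials should follow the CONSORT extension for non-inferiority and equivalence trials and pre-specify the margin and method). Here the conventional test gives P ≈ 0.51, yet the interval still allows the new drug to be nearly 20 percentage points worse, so non-inferiority is not shown. `risk_difference_ci` is an illustrative name.

```python
from statistics import NormalDist


def risk_difference_ci(x_new: int, n_new: int, x_std: int, n_std: int, confidence: float = 0.95):
    """Wald CI for p_new - p_std; returns (difference, lower, upper, standard error)."""
    p_new, p_std = x_new / n_new, x_std / n_std
    diff = p_new - p_std
    se = (p_new * (1 - p_new) / n_new + p_std * (1 - p_std) / n_std) ** 0.5
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return diff, diff - z * se, diff + z * se, se


# Hypothetical trial: 45/60 cured with the new drug, 48/60 with the standard drug
diff, lower, upper, se = risk_difference_ci(45, 60, 48, 60)
p_value = 2 * NormalDist().cdf(-abs(diff) / se)  # conventional test of "no difference"
margin = 0.10  # hypothetical non-inferiority margin, fixed before the trial

print(f"difference = {diff:.3f}, 95% CI {lower:.3f} to {upper:.3f}, P = {p_value:.2f}")
# Non-inferiority requires the whole CI to lie above -margin, not merely P > 0.05
print("non-inferior" if lower > -margin else "non-inferiority NOT shown")
```

An equivalence claim would be stricter still, requiring the entire interval to lie between −margin and +margin.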

Figure 1. QUOROM chart

References

1. Williams HC, Seed P. Inadequate size of ‘negative’ clinical trials in dermatology. Br J Dermatol. 1993;128:317–26. doi: 10.1111/j.1365-2133.1993.tb00178.x.

2. Keen HI, Pile K, Hill CL. The prevalence of under-powered randomized clinical trials in rheumatology. J Rheumatol. 2005;32:2083–8.

3. Lesaffre E. Use and misuse of the P value. Bull NYU Hosp Jt Dis. 2008;66:146–9.

4. Cohen HW. P values: use and misuse in medical literature. Am J Hypertens. 2011;24:18–23. doi: 10.1038/ajh.2010.205.

5. Piaggio G, Elbourne DR, Altman DG, Pocock SJ, Evans SJ; CONSORT Group. Reporting of noninferiority and equivalence randomized trials: an extension of the CONSORT statement. JAMA. 2006;295:1152–60. doi: 10.1001/jama.295.10.1152.

6. Costa LJ, Xavier AC, del Giglio A. Negative results in cancer clinical trials: equivalence or poor accrual? Control Clin Trials. 2004;25:525–33. doi: 10.1016/j.cct.2004.08.001.

7. Hebert RS, Wright SM, Dittus RS, Elasy TA. Prominent medical journals often provide insufficient information to assess the validity of studies with negative results. J Negat Results Biomed. 2002;1:1. doi: 10.1186/1477-5751-1-1.

8. Jaykaran, Saxena D, Yadav P, Kantharia ND, Solanky P. Quality of reporting of descriptive and inferential statistics in negative studies published in Indian Medical Journals. J Pharm Negat Results. 2011. In press.

9. Jaykaran, Saxena D, Yadav P, Kantharia ND. Most of the inconclusive studies published in Indian Medical Journals are underpowered. Indian Pediatr. 2011. In press.