The Challenge

Continuous improvement of graduate medical education programs is the objective of the Common Program Requirement1 for an annual program evaluation. Although the Common Program Requirements include guidelines outlining the who, what, and how of the evaluation, the expectations for a thorough evaluation appear to be unclear: “Evaluation of Program” is one of the most common citations by Residency Review Committees (RRCs).

What Is Known

Process Evaluation and Strategies

In contrast to researchers, whose goal is finding new, generalizable knowledge, evaluators focus on determining the value or effectiveness of a specific program, event, or activity. The annual program evaluation is a form of “process” evaluation, designed to support ongoing improvement. Process evaluation focuses on the degree to which program activities, materials, and procedures support achievement of program goals, including resident or fellow performance.2 The evaluator (program director, designated institutional officer [DIO], educator, or faculty member) is responsible for leading a systematic, comprehensive, objective, and fair evaluation process3 that yields accurate and useful information for continuous improvement to all stakeholders.4

Rip Out Action Items

The Plan-Do-Study-Act Program Evaluation Cycle:

- Plan:
  - Identify problem areas noted by external sources, such as the Institutional Review Committee and the RRC.
  - Identify existing evaluation information by Common Program Requirement and present it in a blueprint.
  - Identify information gaps per the blueprint and gather data.
- Do:
  - Collate and present data annually to all faculty and resident representatives.
  - Identify and act on 2 to 3 target deficiencies, approve an action plan, and document.
- Study:
  - Report findings throughout the year and at annual faculty and resident meetings.
  - Continue to collect findings and monitor progress.
- Act:
  - Actively engage the faculty and residents in the improvement of the 2 to 3 action items.
  - Repeat the process.

Common Program Requirements:

The annual review is essentially a program level Practice-Based Learning and Improvement process for programs to actively engage in continuous improvement, based on constant self-evaluation.1 The principles of evaluation and improvement science (Plan-Do-Study-Act) can be useful when looking at one's education efforts for residents and fellows.4 One way to conduct the annual review is to reframe the Common and specialty-specific Program Requirements, using the who, what, how paradigm; this serves to deconstruct the evaluation process and support a stepwise approach to process evaluation.

Who: Residents and faculty confidentially evaluate the program. Other stakeholders (eg, patients, staff) may evaluate the program from their respective vantage points. The program director has primary responsibility for the overall evaluation process. The findings are reviewed by the institution's Internal Review Committee, Graduate Medical Education Committee, and DIO.5

What: Prior RRC citations, Accreditation Council for Graduate Medical Education (ACGME) and institution survey results, the curriculum, resident performance, faculty development efforts, alumni performance, and program quality are the focal evaluation areas.

How: A systematic, written evaluation designed to identify deficiencies. It results in an approved action or improvement plan, with ongoing follow-up by the program director or education committee and written documentation at each step.

How Can You Start TODAY

Identify the information you already have about your program specific to the Common Program Requirements.

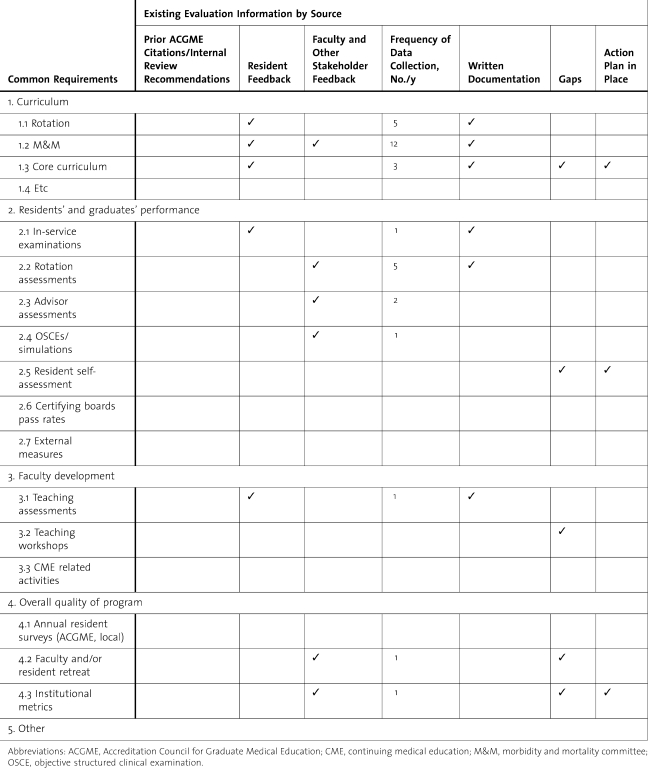

List the information in an evaluation blueprint by requirements, with who provides the information, the number of times per year, and whether written documentation is available (table).

Identify unneeded redundancies and information gaps by Common Program Requirement topic and source. For example, your annual faculty retreat may include a review of the overall quality of the program, yet no residents attend the retreat and the minutes do not document the program's strengths or deficiencies with associated action plans.

Prioritize the actions needed to rectify issues found on the ACGME resident and program faculty surveys, prior internal review recommendations, and RRC citations.

Present preliminary findings to the department chair and/or leadership; discuss findings and infrastructure needed to create and sustain a robust evaluation process.

TABLE. Program Evaluation Blueprint
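The blueprint steps described above (list what you have, then look for redundancies and gaps) can be sketched as a small data structure. This is only an illustrative sketch, not the authors' tool: the class name `BlueprintEntry`, the helper functions, and the sample rows are all hypothetical, and the topics are drawn loosely from the focal evaluation areas named earlier.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class BlueprintEntry:
    """One row of a hypothetical evaluation blueprint."""
    requirement: str     # Common Program Requirement topic
    source: str          # who provides the information
    times_per_year: int  # how often the information is collected
    documented: bool     # whether written documentation is available


def find_gaps(entries, required_topics):
    """Return topics with no information at all, or no written documentation."""
    gaps = {}
    for topic in required_topics:
        rows = [e for e in entries if e.requirement == topic]
        if not rows:
            gaps[topic] = "no information collected"
        elif not any(e.documented for e in rows):
            gaps[topic] = "no written documentation"
    return gaps


def find_redundancies(entries):
    """Return (topic, source) pairs listed more than once in the blueprint."""
    counts = Counter((e.requirement, e.source) for e in entries)
    return sorted(pair for pair, n in counts.items() if n > 1)


# Hypothetical sample rows, for illustration only.
blueprint = [
    BlueprintEntry("Program quality", "Faculty retreat", 1, False),
    BlueprintEntry("Resident performance", "Faculty evaluations", 2, True),
    BlueprintEntry("Resident performance", "Faculty evaluations", 4, True),
]
topics = ["Program quality", "Resident performance", "Alumni performance"]

gaps = find_gaps(blueprint, topics)
redundancies = find_redundancies(blueprint)
```

In this sketch the faculty retreat row surfaces as a documentation gap, mirroring the retreat example above, while a topic with no rows at all is flagged as uncollected. Whether a repeated topic and source pair is truly an unneeded redundancy remains a judgment for the program.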

What Can You Do LONG TERM

Form or use an existing Education Review Committee that is explicitly charged with program evaluation.

Present evaluation blueprint and associated data to the Education Review Committee.

Identify gaps or deficiencies from the blueprint.

Develop targeted action plan focused on 2 to 3 deficiencies as the primary focus for the year with metrics for progress.

Seek key leadership support and resources; document faculty input and approval for action plan, especially for those areas noted by the internal review committee and ACGME.

Continue evaluation data collection; implement new data collection tools and procedures as needed. Evaluation methods or tools that are overly time consuming or otherwise not feasible are rarely sustainable.

Before collecting any information, consider what difference that information will make in the evaluation of your program. What will you do if the data are positive, if they are mixed, or if they are negative?

If the results won't change what you are doing, then perhaps you should ask a different question and/or gather the data in a different way.

Seek to use existing venues/data collection strategies to address gap areas. Elaborate data collection processes are rarely sustainable.

Consider bringing in outside help, if needed, for assistance, consultation, or a fresh perspective (eg, another program director, the DIO, an education specialist, and/or someone who specializes in program evaluation).

Provide plan updates and data on action-plan progress to the faculty and residents throughout the year. Modify plan as needed.

Present full data set and findings annually to residents, faculty, and other stakeholders. Demonstrate that target deficiencies have been addressed or are improving. Maintain permanent documentation of the findings.

Repeat the process.
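The long-term steps above call for 2 to 3 target deficiencies, each with a metric for progress, and for showing that each is addressed or improving. One possible way to keep such a record is sketched below. The `ActionItem` class, its status labels, and the sample numbers are assumptions made for illustration; they do not come from the Common Program Requirements.

```python
from dataclasses import dataclass, field


@dataclass
class ActionItem:
    """A hypothetical record for one target deficiency in the action plan."""
    deficiency: str
    metric: str
    baseline: float
    target: float
    readings: list = field(default_factory=list)  # values recorded during the year

    def record(self, value):
        """Add a new measurement of the metric."""
        self.readings.append(value)

    def status(self):
        """Classify progress using the most recent reading."""
        if not self.readings:
            return "no data"
        latest = self.readings[-1]
        # The direction of improvement is inferred from baseline versus target.
        higher_is_better = self.target >= self.baseline
        if higher_is_better:
            met, improved = latest >= self.target, latest > self.baseline
        else:
            met, improved = latest <= self.target, latest < self.baseline
        if met:
            return "addressed"
        return "improving" if improved else "not improving"


# Hypothetical example: no residents attended last year's program review.
item = ActionItem(
    deficiency="No resident participation in annual program review",
    metric="Resident representatives attending the review",
    baseline=0,
    target=2,
)
item.record(1)  # a mid-year update
```

Here `item.status()` reports "improving" after the first reading and would report "addressed" once a reading reaches the target. Keeping the readings gives a written trail to present at the annual meeting.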

Resources for Further Information

Rossi PH, Lipsey MW, Freeman HE. Evaluation: A Systematic Approach. 7th ed. Newbury Park, CA: Sage Publications, Inc; 2003.

Institute for Healthcare Improvement. Plan-do-study-act (PDSA) worksheet. http://www.ihi.org/IHI/Topics/Improvement/ImprovementMethods/Tools/Plan-Do-Study-Act+%28PDSA%29+Worksheet.htm. Accessed May 31, 2011.

ACGME. All RC notable practices. http://www.acgme.org/acWebsite/notablepractices/default.asp?SpecID=14. Accessed June 4, 2011.

Footnotes

Deborah Simpson, PhD, is Associate Dean and Professor, Medical College of Wisconsin. Monica Lypson, MD, MHPE, is Assistant Dean of Graduate Medical Education and Associate Professor of Internal Medicine at the University of Michigan. Both authors are associate editors for the Journal of Graduate Medical Education.

References

- 1. ACGME. Common Program Requirements: effective July 1, 2011. http://www.acgme-2010standards.org/pdf/Common_Program_Requirements_07012011.pdf. Accessed May 18, 2011.

- 2. Department of Education. Evaluation primer: an overview of educational evaluation. http://www2.ed.gov/offices/OUS/PES/primer1.html. Accessed May 22, 2011.

- 3. American Evaluation Association. The program evaluation standards: summary form. http://www.eval.org/evaluationdocuments/progeval.html. Accessed May 22, 2011.

- 4. Institute for Healthcare Improvement. How to improve. http://www.ihi.org/IHI/Topics/Improvement/ImprovementMethods/HowToImprove/. Accessed May 18, 2011.

- 5. ACGME. Institutional Requirements: effective July 1, 2007. http://www.acgme.org/acWebsite/irc/irc_IRCpr07012007.pdf. Accessed May 31, 2011.