Summary

The fusiform face area (FFA) and the superior temporal sulcus (STS) are suggested to process facial identity and facial expression information, respectively. We recently demonstrated a functional dissociation between the FFA and the STS, as well as correlated sensitivity of the STS and the amygdala to facial expressions, using an interocular suppression paradigm (Jiang and He, 2006). In the current event-related brain potential (ERP) study, we investigated the temporal dynamics of facial information processing. Observers viewed neutral, fearful, and scrambled face stimuli, either visibly or rendered invisible through interocular suppression. Relative to scrambled face stimuli, intact visible faces elicited larger positive P1 (110–130 ms) and larger negative N1 or N170 (160–180 ms) potentials at posterior occipital and bilateral occipito-temporal regions, respectively, with the N170 amplitude significantly greater for fearful than for neutral faces. Invisible intact faces generated a stronger signal than scrambled faces at 140–200 ms over posterior occipital areas, whereas invisible fearful faces (compared to neutral and scrambled faces) elicited a significantly larger negative deflection starting at 220 ms along the STS. These results provide further evidence for cortical processing of facial information without awareness and elucidate the temporal sequence of automatic facial expression information extraction.

Keywords: face, facial expression, awareness, event-related potential (ERP)

INTRODUCTION

Facial information plays a critical role in human social interaction, and face perception is one of the most highly developed visual skills in humans. When we see a face, at least two main types of information are processed. On the one hand, a face is registered as a face and identified as belonging to a unique individual, establishing general facial category and facial identity information. On the other hand, facial expressions are evaluated in terms of their potential relevance to rewarding or aversive events or their social significance (Posamentier and Abdi, 2003). The relative ease and speed with which facial identity and facial expression information are processed suggests that a highly specialized system or systems are responsible for face perception, be it domain specific (Kanwisher et al., 1997) or general (Gauthier et al., 1999).

Bruce and Young (1986) proposed an influential model of face perception in which separate functional routes were posited for the recognition of facial identity and facial expression. More recently, Haxby and colleagues (2000) further suggested two functionally and neuroanatomically distinct pathways for the visual analysis of faces: one codes changeable facial properties (such as expression, lip movement and eye gaze) and involves the superior temporal sulcus (STS), whereas the other codes invariant facial properties (such as identity) and involves the lateral fusiform gyrus (FG) (Haxby et al., 2000). These models share the idea of distinct functional modules for the visual analysis of facial identity and expression.

Although evidence from behavioral and neuropsychological studies of patients with impaired face perception following brain damage, and from studies of nonhuman primates, supports the existence of two neural systems for facial processing (Campbell et al., 1990; Hasselmo et al., 1989; Heywood and Cowey, 1992; Humphreys et al., 1993; Tranel et al., 1988; Young et al., 1995), recent brain imaging studies have yielded less convergent findings. The functional roles of the FG and the STS therefore remain unclear, especially in relation to the emotional processing of faces (Fairhall and Ishai, 2007; Ishai et al., 2005; LaBar et al., 2003; Narumoto et al., 2001; Vuilleumier et al., 2001).

In a recent fMRI experiment, we examined the loci and pathways for processing invariant and changeable facial properties with and without influence from a conscious representation of facial information (Jiang and He, 2006). In the experiment, we presented participants with faces depicting neutral and fearful expressions. These images were either presented binocularly (visible) or dichoptically with strong suppression noise, and thus rendered invisible due to interocular suppression (see Fig. 1 in Jiang & He, 2006). With this paradigm, we found that the right fusiform face area (FFA), the right superior temporal sulcus (STS), and the amygdala responded strongly to visible faces. However, when face images were rendered invisible, activity in the FFA to both neutral and fearful faces was greatly reduced, though still measurable; activity in the STS was robust to invisible fearful faces but not to invisible neutral faces. The results in the invisible condition support the existence of dissociable neural systems specialized for processing facial identity and expression information (Jiang and He, 2006), and provide further insight into the automatic processing of facial expression information under interocular suppression (Pasley et al., 2004; Williams et al., 2004).

Interestingly, in the visible condition, the differentiation between neutral and fearful faces suggested a coupling between activity in the amygdala and the FFA rather than the STS (see also Fairhall and Ishai, 2007), given that both the FFA and the amygdala showed significantly greater responses to fearful than neutral faces. However, in the invisible condition, activity in the amygdala was highly correlated with that of the STS but not the FFA (Jiang and He, 2006). This strong correlation between STS and amygdala activity, combined with the finding that the STS only responded to invisible fearful faces but not neutral faces, suggests a possible connection between the STS and the amygdala that may contribute to the processing of facial expression information even in the absence of awareness. However, due to the limited temporal resolution of fMRI, we could not further investigate the temporal dynamics of facial information processing with and without influence from a conscious representation, and we could not distinguish whether facial expression information was communicated from the amygdala to the STS or vice versa.

In the current study, we recorded event-related brain potentials (ERPs) to examine the dynamics of facial information processing (and in particular, facial expression information) while observers viewed neutral, fearful, and scrambled face stimuli, either visibly or rendered invisible through interocular suppression (see Fig. 1). In brief, we found that 1) in the visible condition, intact faces, compared with scrambled face stimuli, evoked significantly larger P1 and N1 (N170) components at posterior occipital and bilateral occipito-temporal regions respectively, with facial expression (fearful faces vs. neutral faces) reflected by a stronger N1 (N170) response; 2) in the invisible condition, face-related components were significantly reduced compared with the visible condition. There remained small but significant responses to faces versus scrambled faces between 140 ms and 200 ms after stimulus onset in posterior occipital areas, and more importantly, a facial expression specific response was evident starting at 220 ms and was associated with activity in the STS.

Figure 1.

Sample stimuli and procedure used in the current study. (A) In the invisible condition, the intact face images with neutral and fearful expressions and the scrambled face images presented to the non-dominant eye can be completely suppressed from awareness by dynamic Mondrian patterns presented to the dominant eye, due to interocular suppression. The suppression effectiveness was verified by objective behavioral experiments. (B) The visible condition was identical to the invisible condition except that the Mondrian patterns were not presented; instead, the same image (either an intact face or a scrambled face) was presented to both eyes.

The current study thus provides further evidence for cortical processing of facial information in the absence of awareness and sheds new light on the temporal sequence of facial expression information extraction.

MATERIALS AND METHODS

Participants

Eighteen healthy observers (7 male) participated in the current ERP experiment. Observers had normal or corrected-to-normal vision and provided written, informed consent in accordance with procedures and protocols approved by the human subjects review committee of the University of Minnesota. They were naive to the purpose of the experiment.

Stimuli and procedure

Stimuli selected from the NimStim face stimulus set (The Research Network on Early Experience and Brain Development) were configured as described below using the MATLAB software package (The MathWorks, Inc.) and displayed on a 19-in. Mitsubishi Diamond Pro monitor (1024 × 768 at 100 Hz) using the Psychophysics Toolbox (Brainard, 1997; Pelli, 1997). The images presented to the two eyes were displayed side-by-side on the monitor and perceptually fused using a mirror stereoscope mounted to the monitor. A central point (0.45°×0.45°) was always presented to each eye serving as the fixation. A frame (8.1°×10.8°) that extended beyond the outer border of the stimulus was presented to facilitate stable convergence of the two eyes’ images. The viewing distance was 36.8 cm.
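The stimulus sizes above are given in degrees of visual angle. As a rough illustration of what those angles mean on the screen, the sketch below converts them to physical extents at the stated 36.8 cm viewing distance. It assumes that this distance is the effective optical path through the mirror stereoscope and that each stimulus is centered on the line of sight; the function name `size_from_visual_angle` is hypothetical and not part of the authors' software.

```python
import math

VIEWING_DISTANCE_CM = 36.8  # viewing distance stated in the text


def size_from_visual_angle(angle_deg: float, distance_cm: float) -> float:
    """Physical extent (cm) that subtends angle_deg at distance_cm.

    Assumes the extent is centered on the line of sight, so each half
    subtends half of the angle.
    """
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


# Frame (8.1 x 10.8 deg) and fixation point (0.45 deg) from the text.
frame_width_cm = size_from_visual_angle(8.1, VIEWING_DISTANCE_CM)
frame_height_cm = size_from_visual_angle(10.8, VIEWING_DISTANCE_CM)
fixation_cm = size_from_visual_angle(0.45, VIEWING_DISTANCE_CM)

print(f"frame: {frame_width_cm:.2f} x {frame_height_cm:.2f} cm")
print(f"fixation: {fixation_cm:.2f} cm")
```

Converting further to pixels would require the visible width of the display in centimeters, which is not reported here.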

In conventional binocular rivalry, two different images are displayed dichoptically to the two eyes, and the observer’s percept alternates between one image and the other. When random Mondrian images are flashed continuously (at around 10 Hz) to one eye, a static low-contrast image presented to the other eye can be completely suppressed for an extended duration, a technique known as continuous flash suppression (Tsuchiya and Koch, 2005). Pilot data showed that the spectral power of the early ERP components to faces was around 10 Hz; we therefore used 20 Hz dynamic noise in the current study to reduce the noise contribution to critical ERP components while still achieving effective interocular suppression.
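To make the timing of the 20 Hz noise concrete, the following sketch works out a frame schedule for a 100 Hz display (the refresh rate reported above) and a 500 ms stimulus presentation (the trial duration used in this study). It assumes that each Mondrian pattern is simply held for a whole number of video frames; how the authors actually scheduled the patterns is not described, and the function names are hypothetical.

```python
REFRESH_HZ = 100   # monitor refresh rate from the text
NOISE_HZ = 20      # Mondrian update rate from the text
TRIAL_MS = 500     # stimulus duration per trial from the text


def frames_per_pattern(refresh_hz: int, noise_hz: int) -> int:
    """Number of video frames each noise pattern stays on screen."""
    if refresh_hz % noise_hz != 0:
        raise ValueError("noise rate must divide the refresh rate evenly")
    return refresh_hz // noise_hz


def pattern_schedule(refresh_hz: int, noise_hz: int, duration_ms: int) -> list[int]:
    """Index of the Mondrian pattern shown on every frame of one trial."""
    hold = frames_per_pattern(refresh_hz, noise_hz)
    n_frames = duration_ms * refresh_hz // 1000
    return [frame // hold for frame in range(n_frames)]


schedule = pattern_schedule(REFRESH_HZ, NOISE_HZ, TRIAL_MS)
print(frames_per_pattern(REFRESH_HZ, NOISE_HZ))  # frames per pattern
print(len(schedule), len(set(schedule)))         # frames and patterns per trial
```

Under these assumptions each pattern lasts 5 frames (50 ms), and one trial spans 50 frames containing 10 distinct patterns.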

Observers viewed the face images (neutral, fearful, and scrambled faces) through a mirror stereoscope. The scrambled face stimuli were constructed by segmenting the face images into an 18 × 24 grid of square elements and randomly rearranging the elements. The visible and invisible conditions were run in separate blocks. In the invisible condition, dynamic Mondrian patterns (20 Hz) were presented to the subject’s dominant eye, and the face stimuli were simultaneously presented to the other eye for the same period, rendering the face images invisible. The faces and the Mondrian patterns subtended 6.7°×9.4°, and the stimuli in each trial were presented for 500 ms, followed by a randomized intertrial interval (ITI) of 300 ms to 700 ms in which only the fixation and the outer frame were presented. The contrast of the face images was adjusted for each individual participant to ensure that the images were effectively suppressed and truly invisible for the length of the experiment (Jiang and He, 2006; Tsuchiya et al., 2006). The observers were asked to perform a moderately demanding fixation task (detecting a slight change in the contrast of the fixation point) throughout each run to facilitate stable fixation and to help maintain their attention on the center of the display. The fixation point changed at random time points (on average once per second), independent of the face stimulus presentation. During each trial of the invisible condition, observers were also asked to press another key if they saw any faces or any parts of faces other than the Mondrian patterns; trials with such reports were discarded from further analysis. On average, 6.21% of the invisible trials were excluded. The visible condition was exactly the same as the invisible condition except that the Mondrian patterns were replaced with the same face stimulus presented to the other eye, so that observers could tell whether a neutral, fearful, or scrambled face stimulus was presented during the block.
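The grid scrambling described above can be sketched as follows. This is a minimal illustration rather than the authors' MATLAB code: it assumes the grid has 18 columns and 24 rows (matching the taller-than-wide stimulus), represents the image as a list of pixel rows, and uses a hypothetical function name `scramble_image`.

```python
import random


def scramble_image(image, n_cols=18, n_rows=24, rng=None):
    """Cut a grayscale image (list of pixel rows) into n_rows x n_cols cells
    and return a copy with the cells randomly rearranged."""
    rng = rng or random.Random()
    height, width = len(image), len(image[0])
    if height % n_rows or width % n_cols:
        raise ValueError("image size must be a multiple of the grid size")
    cell_h, cell_w = height // n_rows, width // n_cols

    # Destination cells in row-major order, and a shuffled list of source cells.
    cells = [(r, c) for r in range(n_rows) for c in range(n_cols)]
    sources = cells[:]
    rng.shuffle(sources)

    out = [[0] * width for _ in range(height)]
    for (dst_r, dst_c), (src_r, src_c) in zip(cells, sources):
        for dy in range(cell_h):
            src_row = image[src_r * cell_h + dy]
            dst_row = out[dst_r * cell_h + dy]
            # Copy one pixel row of the source cell into the destination cell.
            dst_row[dst_c * cell_w:(dst_c + 1) * cell_w] = \
                src_row[src_c * cell_w:(src_c + 1) * cell_w]
    return out


# Toy 36 x 48 pixel "image" (2 x 2 pixel cells) with a unique value per pixel.
toy = [[y * 36 + x for x in range(36)] for y in range(48)]
scrambled = scramble_image(toy, rng=random.Random(0))
```

Because the cells are only moved, never altered, the scrambled image keeps the same set of pixel values as the intact face while its spatial configuration is destroyed.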

In both conditions, neutral, fearful, and scrambled face stimuli were presented randomly with one block consisting of 144 trials. Each observer took part in 3 or 4 visible blocks and 9 or 12 invisible blocks. After the ERP session, a subset of the observers also performed a two-alternative forced-choice (2AFC) test to check the effectiveness of the suppression (see below).

ERP data recording and analysis

Electroencephalograms (EEG) were recorded from 64 scalp electrodes embedded in a NeuroScan Quik-Cap. Electrode positions included the standard 10–20 system locations and additional intermediate positions (Fig. 2). Four bipolar facial electrodes, positioned on the outer canthi of each eye and in the inferior and superior areas of each orbit, monitored horizontal and vertical EOG (HEOG and VEOG), respectively. The skin resistance of each electrode was adjusted to less than 5 kΩ. EEG was continuously recorded at a sampling rate of 1000 Hz using CPz as an online reference. The signal was amplified using Synamps amplifiers and bandpass filtered online at 0.05–200 Hz. The EEG signal was epoched from 200 ms before to 1000 ms after stimulus onset, and the pre-stimulus interval was used for baseline correction. Prior to averaging, epochs were screened for eye blinks and other artifacts. Epochs contaminated by eye blinks, eye movements, or muscle potentials exceeding ±50 μV at any electrode were excluded from averaging. Overall, fewer than 10% of the epochs were excluded from analyses. Averaged ERPs were baseline corrected by subtracting the mean amplitude of the baseline interval and were re-referenced offline to the mean of all electrodes.
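The epoching steps described above can be illustrated with a small, self-contained sketch. It is not the authors' NeuroScan pipeline: it assumes that the ±50 μV criterion is applied to baseline-corrected epochs, it runs on synthetic two-channel data invented purely for illustration, and all function names are hypothetical.

```python
from statistics import fmean

SFREQ_HZ = 1000            # sampling rate from the text
PRE_MS, POST_MS = 200, 1000  # epoch limits from the text
REJECT_UV = 50.0           # rejection threshold from the text


def extract_epoch(eeg, onset, pre, post):
    """Cut [onset - pre, onset + post) from each channel and subtract
    the mean of the pre-stimulus interval (baseline correction)."""
    epoch = []
    for channel in eeg:
        segment = channel[onset - pre:onset + post]
        baseline = fmean(segment[:pre])
        epoch.append([v - baseline for v in segment])
    return epoch


def is_clean(epoch, threshold_uv=REJECT_UV):
    """True if no sample on any channel exceeds the threshold."""
    return all(abs(v) <= threshold_uv for channel in epoch for v in channel)


def average_erp(eeg, onsets, sfreq_hz=SFREQ_HZ):
    """Average clean epochs and re-reference to the mean of all channels."""
    pre, post = PRE_MS * sfreq_hz // 1000, POST_MS * sfreq_hz // 1000
    n_samples, n_ch, n_t = len(eeg[0]), len(eeg), pre + post
    epochs = [extract_epoch(eeg, o, pre, post) for o in onsets
              if o - pre >= 0 and o + post <= n_samples]
    kept = [e for e in epochs if is_clean(e)]
    if not kept:
        raise ValueError("no clean epochs left to average")
    erp = [[fmean(e[ch][t] for e in kept) for t in range(n_t)]
           for ch in range(n_ch)]
    for t in range(n_t):  # average reference at every time point
        ref = fmean(erp[ch][t] for ch in range(n_ch))
        for ch in range(n_ch):
            erp[ch][t] -= ref
    return erp, len(kept), len(epochs)


# Synthetic data: channel 0 steps from 1 to 4 uV for 100 ms after each onset;
# channel 1 is flat except for a 200 uV artifact inside the second epoch.
n = 4000
onsets = [500, 2000, 3000]
ch0 = [1.0] * n
for o in onsets:
    for t in range(o, o + 100):
        ch0[t] = 4.0
ch1 = [0.0] * n
ch1[2100] = 200.0
erp, n_kept, n_total = average_erp([ch0, ch1], onsets)
print(n_kept, "of", n_total, "epochs kept")
```

In this toy case the second epoch is rejected because of the artifact, and the 3 μV evoked step on channel 0 is split between the two channels by the average reference.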

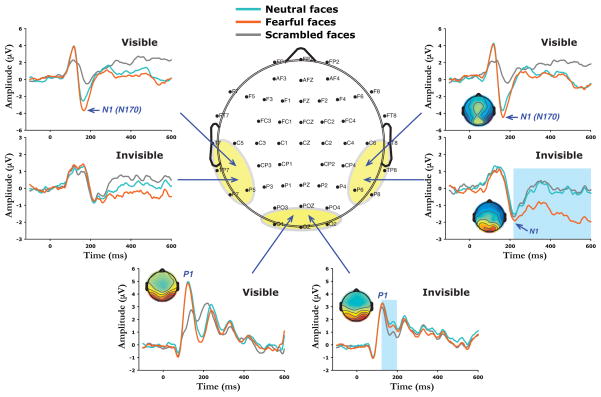

Figure 2.

Depiction of the 64-channel scalp electrode montage and the grand average ERPs evoked by visible and invisible face images. Relative to scrambled faces, neutral and fearful faces elicited both larger P1 and larger N1 (N170) components at posterior occipital regions (waveforms averaged from electrodes OZ, POZ, O1, and O2 are shown in the lower center) and bilateral temporal regions (waveforms averaged from electrodes P6/P5, P8/P7, TP8/TP7, and T8/T7 are shown in the upper right and upper left), respectively, with the amplitude of the N1 larger for fearful than for neutral faces. However, when face images were rendered invisible, face detection (intact faces vs. scrambled faces) was reflected only in activity at 140–200 ms over posterior occipital areas (lower center, highlighted in blue), while fearful faces (compared to neutral and scrambled faces) produced a significantly larger negative deflection starting at 220 ms (N1) along superior temporal regions (upper right, highlighted in blue). The small insets show the voltage topographies for the P1 and N1 components.

Both peak amplitudes and latencies were measured to examine effects associated with face type and awareness state conditions. Peak latencies were measured relative to stimulus onset. ERP peak amplitudes and latencies for face stimuli were subjected to repeated measures ANOVAs with face type and awareness state as within-subjects factors. To isolate the electrophysiological activity related to the processes of face detection and facial expression discrimination, difference waves were obtained by subtracting ERPs to the scrambled face stimuli from ERPs to the neutral face stimuli, and by subtracting ERPs to the neutral face stimuli from ERPs to the fearful face stimuli, respectively.
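As an illustration of the two measurements described above, the sketch below computes a difference wave and then picks the peak amplitude and latency within a time window. The waveforms are synthetic triangles invented for the example (they are not data from this study), and the function names are hypothetical.

```python
def difference_wave(erp_a, erp_b):
    """Pointwise difference erp_a - erp_b of two equally sampled ERPs."""
    if len(erp_a) != len(erp_b):
        raise ValueError("ERPs must have the same number of samples")
    return [a - b for a, b in zip(erp_a, erp_b)]


def peak_in_window(wave, times_ms, t_min, t_max, polarity):
    """Return (amplitude, latency_ms) of the most positive (polarity > 0)
    or most negative (polarity < 0) sample with t_min <= t <= t_max."""
    candidates = [(v, t) for v, t in zip(wave, times_ms) if t_min <= t <= t_max]
    if not candidates:
        raise ValueError("window contains no samples")
    pick = max if polarity > 0 else min
    return pick(candidates, key=lambda pair: pair[0])


def triangle(t, center, half_width, amplitude):
    """Synthetic triangular deflection, used only to build toy waveforms."""
    return amplitude * max(0.0, 1.0 - abs(t - center) / half_width)


# Latencies relative to stimulus onset, 1 ms per sample (1000 Hz).
times_ms = list(range(-200, 1000))
neutral = [triangle(t, 170, 40, -4.0) for t in times_ms]    # toy N170
scrambled = [triangle(t, 170, 40, -2.0) for t in times_ms]  # smaller toy N170

detection_wave = difference_wave(neutral, scrambled)  # neutral minus scrambled
amplitude, latency = peak_in_window(detection_wave, times_ms, 160, 180, polarity=-1)
print(amplitude, latency)
```

The same two functions would apply to the expression contrast by passing the fearful and neutral ERPs to `difference_wave` instead.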

Objective measures of the suppression effectiveness

Since the interpretation of the study critically depends on the suppressed images being truly invisible, we also checked the suppression effectiveness in a criterion-free way. Six of the participants completed a two-alternative forced-choice (2AFC) task following the ERP experiment. The experimental context (contrast, luminance, viewing angle, etc.) was made to be fully comparable with that in the ERP experiment. The same set of intact and scrambled face images were used in this behavioral measurement. Each trial consisted of two successive temporal intervals (500 ms each, with a 500-ms blank gap between them). The intact face image (either a neutral or fearful expression) could be presented randomly in the first or the second interval, and the scrambled face was presented in the other interval. Observers pressed one of two buttons to indicate whether the intact face was presented in the first or the second interval. Each observer underwent 100 trials, and all performed at chance level (50%). To further test the possibility that the face stimuli could be fused with the Mondrian pattern during the initial brief period of stimulus presentation (Wolfe, 1983), but remain invisible due to the masking effect of the subsequent dynamic Mondrian patterns, we performed the same 2AFC experiment but with a brief presentation duration (100 ms). Observers still performed at chance level under this condition. Therefore, these 2AFC measurements confirmed that the suppressed images were truly invisible during the presentation.
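One way to quantify "chance level" in a 100-trial 2AFC task is an exact binomial test against 50% correct. The sketch below is an assumption about how such a check could be run, since the statistical test used for the 2AFC data is not stated; the trial counts passed to the function are hypothetical examples, not the observers' scores.

```python
from math import comb


def binomial_two_sided_p(k: int, n: int, p: float = 0.5) -> float:
    """Exact two-sided binomial p-value for k successes in n trials.

    Sums the probabilities of all outcomes that are no more likely
    than the observed one under the null success rate p.
    """
    probs = [comb(n, i) * p**i * (1 - p)**(n - i) for i in range(n + 1)]
    observed = probs[k]
    # Small tolerance so outcomes tied with the observed one are included.
    total = sum(q for q in probs if q <= observed * (1 + 1e-9))
    return min(1.0, total)


N_TRIALS = 100  # trials per observer, from the text

for correct in (50, 55, 60, 61):  # hypothetical numbers of correct responses
    p_value = binomial_two_sided_p(correct, N_TRIALS)
    print(f"{correct}/{N_TRIALS} correct: p = {p_value:.4f}")
```

With 100 trials, scores between 40 and 60 correct do not differ significantly from chance at the 0.05 level under this test, which gives a sense of the resolution of the behavioral check.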

RESULTS AND DISCUSSION

ERP responses to visible and invisible face images

ERP responses to face stimuli were characterized by an early positive component (P1, peaking at 110–130 ms after stimulus onset) over the posterior occipital cortex (Fig. 2, lower center), followed by a negative wave (N1 or N170, peaking at 160–180 ms after stimulus onset) over bilateral temporal regions (Fig. 2, upper right and upper left). When face images were rendered invisible, the face stimuli elicited positive (P1) and negative (N1) components similar to those in the visible condition (Fig. 2).

However, amplitudes of the ERP components were significantly reduced for the invisible fearful and neutral face presentations compared with the visible condition (P1: F(1, 17) = 9.56, p < 0.007; N1: F(1, 17) = 14.1, p < 0.002). It should also be noted that although the peak latency of the P1 component did not differ significantly between the visible and invisible conditions (120 ms vs. 126 ms), the peak latency of the negative ERP component (N1) was significantly delayed in the invisible compared with the visible condition (225 ms vs. 173 ms, F(1, 17) = 96.2, p < 0.001).
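The F statistics reported here have 1 and 17 degrees of freedom, as expected for a two-level within-subject contrast across 18 observers. For such a contrast, the repeated-measures F equals the square of the paired t statistic. The sketch below demonstrates this identity on randomly generated amplitudes; the numbers are hypothetical and the function names are illustrative, not the authors' analysis code.

```python
import random
from math import sqrt
from statistics import fmean, stdev


def paired_t_squared(cond_a, cond_b):
    """Square of the paired-samples t statistic."""
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    t = fmean(diffs) / (stdev(diffs) / sqrt(n))
    return t * t


def rm_anova_f(cond_a, cond_b):
    """F and degrees of freedom for a one-factor, two-level
    repeated-measures ANOVA (condition x subject error term)."""
    n = len(cond_a)
    data = [cond_a, cond_b]
    grand = fmean(cond_a + cond_b)
    cond_means = [fmean(c) for c in data]
    subj_means = [fmean(pair) for pair in zip(cond_a, cond_b)]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_error = sum(
        (data[j][i] - cond_means[j] - subj_means[i] + grand) ** 2
        for j in range(2) for i in range(n)
    )
    df_cond, df_error = 1, n - 1
    return (ss_cond / df_cond) / (ss_error / df_error), (df_cond, df_error)


# Hypothetical N1 amplitudes (uV) for 18 observers; NOT data from this study.
rng = random.Random(0)
visible = [rng.gauss(-6.0, 2.0) for _ in range(18)]
invisible = [v + rng.gauss(2.0, 1.0) for v in visible]

f_value, df = rm_anova_f(visible, invisible)
t_squared = paired_t_squared(visible, invisible)
print(df, round(f_value, 3), round(t_squared, 3))
```

Both routes give the same value up to floating-point error, so either can be used to check a reported F(1, 17) against per-subject condition means.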

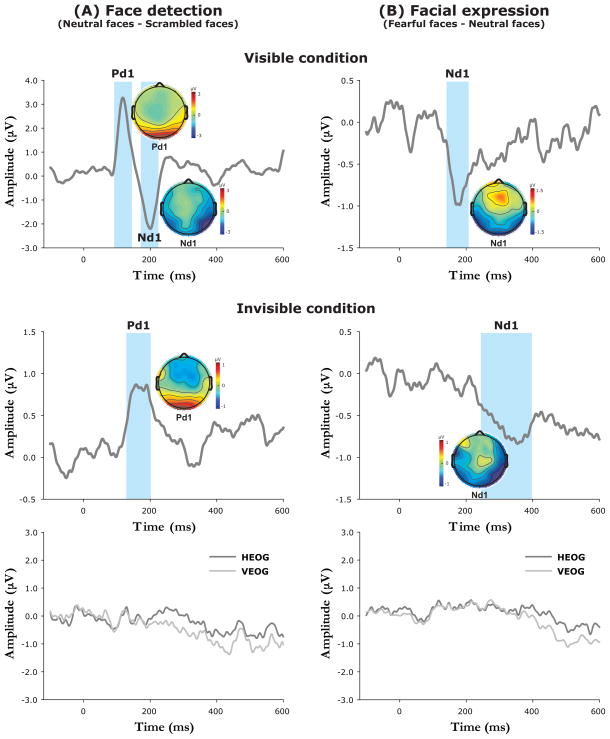

Differentiating intact faces from scrambled faces

To better isolate the electrophysiological activity related to the processing of face detection, difference waves were obtained by subtracting ERPs to the scrambled face stimuli from ERPs to the neutral face stimuli. Difference waves and voltage topographies of each difference-wave component are plotted in Fig. 3A. As can be seen from Fig. 2 and Fig. 3A, within the visible condition both the neutral and the fearful faces (relative to the scrambled face stimuli) elicited a larger P1 component (Pd1, neutral faces: F(1, 17) = 15.9, p < 0.001; fearful faces: F(1, 17) = 15.1, p < 0.001) and a larger N1 (N170) component (Nd1, neutral faces: F(1, 17) = 24.6, p < 0.001; fearful faces: F(1, 17) = 38.0, p < 0.001). Interestingly, when face images were rendered invisible, differential brain responses to intact faces versus scrambled faces were reflected only in positive activity (140–200 ms) over posterior occipital sites following the peak of the P1 component (Pd1, neutral faces: F(1, 17) = 13.5, p < 0.002; fearful faces: F(1, 17) = 10.1, p < 0.006). In contrast with the visible condition, the neutral and scrambled face stimuli were not distinguished from each other in the N1 component (F(1, 17) = 0.09, p > 0.7) in the invisible condition.

Figure 3.

Difference waves and voltage topographies for distinctive face processes. (A) The difference-wave components for early face detection (neutral faces vs. scrambled faces) were significant at Pd1 and Nd1 components at posterior occipital and bilateral temporal regions in the visible condition whereas only Pd1 was significant in the invisible condition. (B) The difference-wave components specific to the neural encoding of facial expression (fearful faces vs. neutral faces) were indexed by an Nd1 component at lateral superior temporal sites in both the visible and invisible conditions. Moreover, the difference waves obtained from HEOG and VEOG in the invisible condition (bottom panels) show that there was no significant effect of eye movements at or before the latencies of the Pd1 and Nd1 components.

Processing of facial expression

To better isolate the brain response related to facial expression processing, difference waves were obtained by subtracting ERPs to the neutral face stimuli from ERPs to the fearful face stimuli (Fig. 3B). While in the visible condition the fearful faces were not differentiated from the neutral faces in the P1 component (F(1, 17) = 0.005, p > 0.9), the fearful faces evoked a significantly stronger response than the neutral faces in the N1 (N170) component (Nd1, F(1, 17) = 7.16, p < 0.02). Following the N1 component, there was no difference between the neutral and the fearful faces (F(1, 17) = 1.42, p > 0.2). When face images were rendered invisible, the affective component of facial expression (fearful faces vs. neutral faces) yielded a significantly larger negative deflection (N1) along lateral superior temporal areas commencing 220 ms after stimulus onset (Nd1, F(1, 17) = 7.64, p < 0.02). The differentiation of the N1 (Nd1) component between face detection (Fig. 3A) and facial expression processing (Fig. 3B) in the visible and invisible conditions is consistent with the pattern of our fMRI results for the STS, which evidenced comparable responses to the neutral and fearful faces in the visible condition, but responses to the fearful faces only in the invisible condition. The fact that this response pattern was evident only for the electrodes close to the lateral superior temporal regions supports the idea that the N1 effect originated from the STS.

However, it is also possible that the ERP amplitude differences for the two face processes (face detection and facial expression) in the invisible condition were due to more eye movements for neutral face stimuli than for scrambled face stimuli (Pd1 in Fig. 3A), or more eye movements for fearful face stimuli than for neutral face stimuli (Nd1 in Fig. 3B). To test this possibility, we extracted data from the HEOG and VEOG channels and calculated the difference waves for both face detection and facial expression processing. As illustrated in Fig. 3 (bottom panels), the HEOG and VEOG waveforms did not show a significant effect at or before the latencies of the P1 (Pd1) and N1 (Nd1) components (p > 0.2). These results suggest that the observed effects of face detection and facial expression processing under interocular suppression are not simply due to increased eye movements for neutral or fearful face stimuli, but indeed reflect cortical processing of invisible facial information.

GENERAL DISCUSSION

In the current study, we investigated the temporal dynamics of facial information extraction. Recent ERP and MEG experiments have shown that face specific processing can occur as early as 170 ms (N170/M170 component), associated with face detection and categorization (Anaki et al., 2007; Bentin et al., 1996; Itier and Taylor, 2004a), and/or emotional expression recognition (Blau et al., 2007; Miyoshi et al., 2004). The N170/M170 component has been demonstrated to be a face-selective response in the human brain, though its function, characteristics, and source origins are still controversial (Batty and Taylor, 2003; Harris and Nakayama, 2007, 2008; Itier et al., 2006; Itier and Taylor, 2004a, b; Sagiv and Bentin, 2001; Xu et al., 2005).

Consistent with previous studies, we found that both neutral and fearful faces evoked strong P1 and N1 (N170) responses compared with scrambled face stimuli. Furthermore, the N1 (N170) amplitude, which is associated with activity in bilateral temporal regions, was significantly larger for fearful than neutral faces. By rendering face images invisible through interocular suppression, our data show that intact faces were differentiated from scrambled face stimuli between 140 ms and 200 ms (P1) over posterior occipital regions, whereas encoding of facial expression (fearful faces vs. neutral faces) started just after 220 ms (N1) and could be observed in the superior temporal sites (STS) only. Although it is unclear whether the ERP component sensitive to intact face versus scrambled face stimuli reflects early visual processing of general objects or a face-specific response (Itier and Taylor, 2004a), the facial expression related activity in the STS clearly supports cortical processing of facial information in the absence of awareness. Indeed, it has been shown that when upright and inverted faces were paired with identical suppression noise in a modified binocular rivalry paradigm, upright faces took less time to gain dominance compared to inverted faces (Jiang et al., 2007). This observation was replicated and extended to emotional faces (i.e., fearful faces became dominant faster than neutral faces) (Yang et al., 2007). These findings suggest that suppressed face images are processed to the level where the brain can tell an upright (or emotional) face from an inverted (or neutral) face, suggesting that facial information (both face detection and facial expression) can indeed be processed to some extent in the absence of explicit awareness.

How did information arrive at the STS when face images were suppressed interocularly? It is possible that interocular suppression is incomplete at early cortical areas and that facial information leaks through at the site of interocular competition. This idea is consistent with the current view that binocular rivalry may be a process involving multistage competition (Blake and Logothetis, 2002; Freeman, 2005; Nguyen et al., 2003; Tong et al., 1998) and that the magnocellular pathway may be less vulnerable to rivalry competition (He et al., 2004). To investigate this possibility, we extracted the data in the OFA from our previous neuroimaging study (Jiang and He, 2006), since the OFA is believed to be the first stage in face processing models (Calder and Young, 2005; Haxby et al., 2000). Compared with the visible condition, interocular suppression eliminated activity to faces in the OFA, whereas amygdala activity was robust in both the visible and invisible conditions (see Fig. 3 in Jiang & He, 2006). The fact that the OFA did not show a significant response to the invisible faces casts some doubt on this cortical-leaking possibility. However, it is still possible that activity in the OFA to invisible faces reflects an early visual process on a time course that is too short to be revealed by fMRI.

Alternatively, invisible facial information may travel through subcortical pathways to the amygdala and bypass the cortical site of interocular suppression to eventually reach the STS. Indeed, it has been reported that during suppressed periods of binocular rivalry, emotional faces still generate a greater response in the amygdala relative to neutral faces and non-face objects (Pasley et al., 2004; Williams et al., 2004), and our previous fMRI results also showed that amygdala activity was less dependent on the conscious states of face perception (Jiang and He, 2006). Moreover, a recent intracranial recording study found that specific responses to fearful faces, compared with neutral faces, were first recorded in the amygdala as early as 200 ms after stimulus onset, and then spread to occipito-temporal visual regions including the STS (Krolak-Salmon et al., 2004). Collectively, our current data, in conjunction with the strong correlation between activity in the STS and the amygdala found in our fMRI study (Jiang and He, 2006), suggest that the signal related to fearful faces in the STS was modulated by the amygdala (Krolak-Salmon et al., 2004; Morris et al., 1998; Pessoa et al., 2002).

In summary, the current results provide further evidence for cortical processing of facial information in the absence of awareness and suggest that different neural subsystems for encoding facial information can be better revealed when faces are rendered invisible to observers, possibly due to the reduced modulatory effects from conscious representation. We suggest that cortical and sub-cortical visual pathways jointly contribute to facial information processing, conveying the fine detail and the crude emotion information of faces, respectively.

Acknowledgments

This research was supported by the James S. McDonnell Foundation, the National Institutes of Health Grants R01 EY015261-01, P50 MH072850, and P30 NS057091, and the National Institute of Child Health & Human Development Grant T32 HD007151. Y.J. was also supported by the Eva O. Miller Fellowship, the Neuroengineering Fellowship, and the Doctoral Dissertation Fellowship from the University of Minnesota.


References

- Anaki D, Zion-Golumbic E, Bentin S. Electrophysiological neural mechanisms for detection, configural analysis and recognition of faces. Neuroimage. 2007;37:1407–1416. doi: 10.1016/j.neuroimage.2007.05.054. [DOI] [PubMed] [Google Scholar]

- Batty M, Taylor MJ. Early processing of the six basic facial emotional expressions. Brain Res Cogn Brain Res. 2003;17:613–620. doi: 10.1016/s0926-6410(03)00174-5. [DOI] [PubMed] [Google Scholar]

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. J Cogn Neurosci. 1996;8:551–565. doi: 10.1162/jocn.1996.8.6.551. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Blake R, Logothetis NK. Visual competition. Nat Rev Neurosci. 2002;3:13–21. doi: 10.1038/nrn701. [DOI] [PubMed] [Google Scholar]

- Blau VC, Maurer U, Tottenham N, McCandliss BD. The face-specific N170 component is modulated by emotional facial expression. Behav Brain Funct. 2007;3:7. doi: 10.1186/1744-9081-3-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brainard DH. The Psychophysics Toolbox. Spat Vis. 1997;10:433–436. [PubMed] [Google Scholar]

- Calder AJ, Young AW. Understanding the recognition of facial identity and facial expression. Nat Rev Neurosci. 2005;6:641–651. doi: 10.1038/nrn1724. [DOI] [PubMed] [Google Scholar]

- Campbell R, Heywood CA, Cowey A, Regard M, Landis T. Sensitivity to eye gaze in prosopagnosic patients and monkeys with superior temporal sulcus ablation. Neuropsychologia. 1990;28:1123–1142. doi: 10.1016/0028-3932(90)90050-x. [DOI] [PubMed] [Google Scholar]

- Fairhall SL, Ishai A. Effective Connectivity within the Distributed Cortical Network for Face Perception. Cereb Cortex. 2007;17:2400–2406. doi: 10.1093/cercor/bhl148. [DOI] [PubMed] [Google Scholar]

- Freeman AW. Multistage model for binocular rivalry. J Neurophysiol. 2005;94:4412–4420. doi: 10.1152/jn.00557.2005. [DOI] [PubMed] [Google Scholar]

- Gauthier I, Tarr MJ, Anderson AW, Skudlarski P, Gore JC. Activation of the middle fusiform ‘face area’ increases with expertise in recognizing novel objects. Nat Neurosci. 1999;2:568–573. doi: 10.1038/9224. [DOI] [PubMed] [Google Scholar]

- Harris A, Nakayama K. Rapid face-selective adaptation of an early extrastriate component in MEG. Cereb Cortex. 2007;17:63–70. doi: 10.1093/cercor/bhj124. [DOI] [PubMed] [Google Scholar]

- Harris A, Nakayama K. Rapid adaptation of the m170 response: importance of face parts. Cereb Cortex. 2008;18:467–476. doi: 10.1093/cercor/bhm078. [DOI] [PubMed] [Google Scholar]

- Hasselmo ME, Rolls ET, Baylis GC. The role of expression and identity in the face-selective responses of neurons in the temporal visual cortex of the monkey. Behav Brain Res. 1989;32:203–218. doi: 10.1016/s0166-4328(89)80054-3. [DOI] [PubMed] [Google Scholar]

- Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends Cogn Sci. 2000;4:223–233. doi: 10.1016/s1364-6613(00)01482-0.

- He S, Carlson TA, Chen X. Parallel pathways and temporal dynamics in binocular rivalry. In: Alais D, Blake R, editors. Binocular rivalry and perceptual ambiguity. MIT Press; Boston, MA: 2004.

- Heywood CA, Cowey A. The role of the ‘face-cell’ area in the discrimination and recognition of faces by monkeys. Philos Trans R Soc Lond B Biol Sci. 1992;335:31–37; discussion 37–38. doi: 10.1098/rstb.1992.0004.

- Humphreys GW, Donnelly N, Riddoch MJ. Expression is computed separately from facial identity, and it is computed separately for moving and static faces: neuropsychological evidence. Neuropsychologia. 1993;31:173–181. doi: 10.1016/0028-3932(93)90045-2.

- Ishai A, Schmidt CF, Boesiger P. Face perception is mediated by a distributed cortical network. Brain Res Bull. 2005;67:87–93. doi: 10.1016/j.brainresbull.2005.05.027.

- Itier RJ, Herdman AT, George N, Cheyne D, Taylor MJ. Inversion and contrast-reversal effects on face processing assessed by MEG. Brain Res. 2006;1115:108–120. doi: 10.1016/j.brainres.2006.07.072.

- Itier RJ, Taylor MJ. N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cereb Cortex. 2004a;14:132–142. doi: 10.1093/cercor/bhg111.

- Itier RJ, Taylor MJ. Source analysis of the N170 to faces and objects. Neuroreport. 2004b;15:1261–1265. doi: 10.1097/01.wnr.0000127827.73576.d8.

- Jiang Y, Costello P, He S. Processing of invisible stimuli: advantage of upright faces and recognizable words in overcoming interocular suppression. Psychol Sci. 2007;18:349–355. doi: 10.1111/j.1467-9280.2007.01902.x.

- Jiang Y, He S. Cortical responses to invisible faces: dissociating subsystems for facial-information processing. Curr Biol. 2006;16:2023–2029. doi: 10.1016/j.cub.2006.08.084.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Krolak-Salmon P, Henaff MA, Vighetto A, Bertrand O, Mauguiere F. Early amygdala reaction to fear spreading in occipital, temporal, and frontal cortex: a depth electrode ERP study in human. Neuron. 2004;42:665–676. doi: 10.1016/s0896-6273(04)00264-8.

- LaBar KS, Crupain MJ, Voyvodic JT, McCarthy G. Dynamic perception of facial affect and identity in the human brain. Cereb Cortex. 2003;13:1023–1033. doi: 10.1093/cercor/13.10.1023.

- Miyoshi M, Katayama J, Morotomi T. Face-specific N170 component is modulated by facial expressional change. Neuroreport. 2004;15:911–914. doi: 10.1097/00001756-200404090-00035.

- Morris JS, Friston KJ, Buchel C, Frith CD, Young AW, Calder AJ, Dolan RJ. A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain. 1998;121(Pt 1):47–57. doi: 10.1093/brain/121.1.47.

- Narumoto J, Okada T, Sadato N, Fukui K, Yonekura Y. Attention to emotion modulates fMRI activity in human right superior temporal sulcus. Brain Res Cogn Brain Res. 2001;12:225–231. doi: 10.1016/s0926-6410(01)00053-2.

- Nguyen VA, Freeman AW, Alais D. Increasing depth of binocular rivalry suppression along two visual pathways. Vision Res. 2003;43:2003–2008. doi: 10.1016/s0042-6989(03)00314-6.

- Pasley BN, Mayes LC, Schultz RT. Subcortical discrimination of unperceived objects during binocular rivalry. Neuron. 2004;42:163–172. doi: 10.1016/s0896-6273(04)00155-2.

- Pelli DG. The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat Vis. 1997;10:437–442.

- Pessoa L, Kastner S, Ungerleider LG. Attentional control of the processing of neutral and emotional stimuli. Brain Res Cogn Brain Res. 2002;15:31–45. doi: 10.1016/s0926-6410(02)00214-8.

- Posamentier MT, Abdi H. Processing faces and facial expressions. Neuropsychol Rev. 2003;13:113–143. doi: 10.1023/a:1025519712569.

- Sagiv N, Bentin S. Structural encoding of human and schematic faces: holistic and part-based processes. J Cogn Neurosci. 2001;13:937–951. doi: 10.1162/089892901753165854.

- Tong F, Nakayama K, Vaughan JT, Kanwisher N. Binocular rivalry and visual awareness in human extrastriate cortex. Neuron. 1998;21:753–759. doi: 10.1016/s0896-6273(00)80592-9.

- Tranel D, Damasio AR, Damasio H. Intact recognition of facial expression, gender, and age in patients with impaired recognition of face identity. Neurology. 1988;38:690–696. doi: 10.1212/wnl.38.5.690.

- Tsuchiya N, Koch C. Continuous flash suppression reduces negative afterimages. Nat Neurosci. 2005;8:1096–1101. doi: 10.1038/nn1500.

- Tsuchiya N, Koch C, Gilroy LA, Blake R. Depth of interocular suppression associated with continuous flash suppression, flash suppression, and binocular rivalry. J Vis. 2006;6:1068–1078. doi: 10.1167/6.10.6.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30:829–841. doi: 10.1016/s0896-6273(01)00328-2.

- Williams MA, Morris AP, McGlone F, Abbott DF, Mattingley JB. Amygdala responses to fearful and happy facial expressions under conditions of binocular suppression. J Neurosci. 2004;24:2898–2904. doi: 10.1523/JNEUROSCI.4977-03.2004.

- Wolfe JM. Influence of spatial frequency, luminance, and duration on binocular rivalry and abnormal fusion of briefly presented dichoptic stimuli. Perception. 1983;12:447–456. doi: 10.1068/p120447.

- Xu Y, Liu J, Kanwisher N. The M170 is selective for faces, not for expertise. Neuropsychologia. 2005;43:588–597. doi: 10.1016/j.neuropsychologia.2004.07.016.

- Yang E, Zald DH, Blake R. Fearful expressions gain preferential access to awareness during continuous flash suppression. Emotion. 2007;7:882–886. doi: 10.1037/1528-3542.7.4.882.

- Young AW, Aggleton JP, Hellawell DJ, Johnson M, Broks P, Hanley JR. Face processing impairments after amygdalotomy. Brain. 1995;118(Pt 1):15–24. doi: 10.1093/brain/118.1.15.