Abstract

How does the brain translate information signaling potential rewards into motivation to get them? Motivation to obtain reward is thought to depend on the midbrain [particularly the ventral tegmental area (VTA)], the nucleus accumbens (NAcc), and the dorsolateral prefrontal cortex (dlPFC), but it is not clear how the interactions among these regions relate to reward-motivated behavior. To study the influence of motivation on these reward-responsive regions and on their interactions, we used dynamic causal modeling to analyze functional magnetic resonance imaging (fMRI) data from humans performing a simple task designed to isolate reward anticipation. The use of fMRI permitted the simultaneous measurement of multiple brain regions while human participants anticipated and prepared for opportunities to obtain reward, thus allowing characterization of how information about reward changes physiology underlying motivational drive. Furthermore, we modeled the impact of external reward cues on causal relationships within this network, thus elaborating a link between physiology, connectivity, and motivation. Specifically, our results indicated that dlPFC was the exclusive entry point of information about reward in this network, and that anticipated reward availability caused VTA activation only via its effect on the dlPFC. Anticipated reward thus increased dlPFC activation directly, whereas it influenced VTA and NAcc only indirectly, by enhancing intrinsically weak or inactive pathways from the dlPFC. Our findings of a directional prefrontal influence on dopaminergic regions during reward anticipation suggest a model in which the dlPFC integrates and transmits representations of reward to the mesolimbic and mesocortical dopamine systems, thereby initiating motivated behavior.

Introduction

Motivation translates goals into action. The initiation and organization of motivated behavior is thought to depend on the mesolimbic and mesocortical dopamine systems of the brain (Salamone et al., 2007), that is, the projections from the ventral tegmental area (VTA) to the nucleus accumbens (NAcc) and the prefrontal cortex (PFC) (Berridge and Robinson, 1998; Wise, 2004). Dopamine in the NAcc and PFC is indeed critical to the normal function of these regions (Goldman-Rakic, 1998; Durstewitz et al., 2000; Hazy et al., 2006; Alcaro et al., 2007). However, much remains unknown about how interactions among these regions relate to motivated behavior. VTA neurons respond to anticipated reward, but from where do they get information about reward cues in the environment? What dynamic changes in network function, triggered by reward anticipation, occur during motivation?

The VTA, NAcc, and PFC are each strongly implicated in motivation to obtain reward: VTA neurons respond to reward cues and increase their activity before goal-directed behavior (Ljungberg et al., 1992; Schultz, 1998; Fiorillo et al., 2003). The NAcc is essential for translating motivational drive into motor behavior (for review, see Goto and Grace, 2005). The PFC, specifically the dorsolateral prefrontal cortex (dlPFC), is involved in the representation and integration of goals and reward information (Miller and Cohen, 2001; Wagner et al., 2001; Watanabe and Sakagami, 2007).

Physiological relationships among the VTA, NAcc, and PFC are similarly thought to be crucial for executing reward-motivated behavior. These include dopamine release in the NAcc following VTA activation (Fields et al., 2007; Roitman et al., 2008) and modulation of VTA responsivity by both the NAcc (Grace et al., 2007) and PFC (Gariano and Groves, 1988; Svensson and Tung, 1989; Gao et al., 2007). Importantly, studies of the role of the PFC in driving VTA activity have been conducted in anesthetized rodent models, thus imposing two important constraints on extant evidence. First, because rodents lack an expanded prefrontal cortex, the physiological role of dlPFC in modulating VTA remains unknown (Frankle et al., 2006). Second, and more fundamentally, characterization of physiological interactions in anesthetized animals cannot address the relationship between physiology and motivated behavior. Despite their well-documented interactions, the dynamics of the network linking dlPFC, VTA, and NAcc have yet to be investigated during motivated behavior. Specifically, it is unclear where information signaling potential reward enters this network and how it affects the relationships among these reward-responsive regions (but see Bromberg-Martin et al., 2010).

To investigate how this network supporting motivated behavior responds to information about potential rewards, we used functional magnetic resonance imaging (fMRI) to measure activations in the dlPFC, VTA, and NAcc during a rewarded reaction time task. This task allowed us to isolate activations associated with motivation, which occurred after the presentation of reward-informative cues and before the execution of goal-directed behavior. Using dynamic causal modeling (DCM), an analysis technique optimized to model causal relationships in fMRI data (Friston et al., 2003), we identified where reward information enters and how it modulates this dopamine-dependent system.

Materials and Methods

Subjects and behavioral task.

The data analyzed in this study were originally collected to examine the anticipation of either gaining or losing monetary rewards for either oneself or a charity, as described in detail in a previous report (Carter et al., 2009). Twenty young adults completed the original study. Four subjects were excluded due to poor data acquisition (i.e., signal drop out, poor coverage), one was excluded due to a Beck Depression Inventory score indicating depression, and three were excluded due to insufficient activation in at least one of the regions of interest (see below, Dynamic causal modeling), leaving 12 subjects in the data reported here (age, 23.9 years; SD, 3.8 years; six males).

To experimentally manipulate subjects' motivational state, we used a modified monetary incentive delay task (Knutson et al., 2001; Carter et al., 2009). The current analyses used only the data acquired while participants were anticipating monetary gains for themselves, resulting in 20 trials per condition. During these trials, initial cues marked the start of the trial and indicated whether individuals could earn either $4 (cue, figure on a red background) or $0 (cue, figure on a yellow background) for a fast reaction time to an upcoming target. After a variable delay (4–4.5 s), a response target (target, a white square) appeared, indicating that participants were to press a button using their right index finger as quickly as possible. Participants earned the amount indicated by the cue if they responded in time or earned nothing otherwise. Using information about response times on previous trials in the same condition, an adaptive algorithm set reaction time thresholds so that subjects won ∼65% of the time.

fMRI data acquisition.

A 3T GE Signa MRI scanner was used to acquire blood oxygen level-dependent (BOLD) contrast images. Each of the two runs comprised 416 volumes (TR, 1 s; TE, 27 ms; flip angle, 77°; voxel size, 3.75 × 3.75 × 3.75 mm) of 17 axial slices positioned to provide coverage of the midbrain, while also including the striatum and dlPFC (for image of coverage, see Carter et al., 2009). This restricted volume, which sacrificed superior parietal cortex, permitted a TR of 1 s for increased sensitivity in regions of interest where susceptibility artifact can be problematic. The GE Signa EPI sequence automatically passes images through a Fermi filter with a transition width of 10 mm and radius of one-half the matrix size, which results in an effective smoothing kernel of 4.8 mm FWHM. At the beginning of the scanning session, we collected localizer images to identify the participant's head position within the scanner. Additionally, we acquired inversion recovery spoiled gradient recalled (IR-SPGR) high-resolution whole-volume T1-weighted images (voxel size, 1 × 1 × 1 mm) and 17 IR-SPGR images, coplanar with the BOLD contrast images, for use with registration, normalization, and anatomical specification of regions of interest (see below).

fMRI preprocessing.

Images were skull stripped using the BET tool of FSL (Smith, 2002). Preprocessing was performed using SPM8 software (http://www.fil.ion.ucl.ac.uk/spm). Images from separate runs from each subject were concatenated, and then realigned using a fourth-degree B spline, with the mean image as a reference. Images were then smoothed using a 4 mm Gaussian kernel, yielding a cumulative effective smoothing kernel of 6.25 mm FWHM. This 4 mm kernel was previously tested against 2, 6, and 8 mm kernels, for subcortical and medial temporal regions of interest, and gave both maximum Z scores and more limited spatial extent of activations, appropriate to the anatomy of these regions. For within-subject analysis, images were coregistered in two steps: first, IR-SPGR whole-volume T1-weighted images were coregistered to the 17 slice IR-SPGR images using a normalized mutual information function. Functional data were then coregistered to the resliced T1 images. For between-subject analyses, high-resolution structural images were normalized to MNI space and normalization parameters were applied to coregistered functional images.
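The cumulative smoothing figure can be checked with a short calculation. The sketch below assumes that the scanner's Fermi filter can be approximated as a Gaussian kernel of 4.8 mm FWHM and that successive Gaussian kernels combine in quadrature; the function name is illustrative and not part of any analysis package.

```python
import math


def combined_fwhm(*fwhm_mm):
    """Combine successive Gaussian smoothing kernels (FWHMs add in quadrature)."""
    return math.sqrt(sum(f * f for f in fwhm_mm))


scanner_fwhm = 4.8  # effective smoothing from the Fermi filter (mm), assumed Gaussian
applied_fwhm = 4.0  # Gaussian kernel applied during preprocessing (mm)

total = combined_fwhm(scanner_fwhm, applied_fwhm)
print(f"cumulative FWHM: {total:.2f} mm")
```

Under these assumptions the result agrees with the 6.25 mm cumulative kernel reported above.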

The first (within-subject) level statistical models were analyzed using a general linear model (GLM). Regressors included 2 task-related regressors of interest, 13 task-related regressors of no interest, 6 motion regressors of no interest, and 3 session effects as covariates of no interest. The task-related effects were modeled with 15 columns in the design matrix that represented the anticipation and outcome possibilities for self, charity, and control. Both cue and outcome periods were modeled with 1 s boxcars at cue and outcome onset. This allowed for isolation of neural activity related to processing of the cue and preparation to execute motivated behavior. Task-related regressors of interest modeled the cue/anticipation periods for $4 and $0 gain trials. Task-related regressors of no interest included the outcome regressors for $4 and $0 self trials, as well as both cue and outcome regressors for charity and control trials. The β estimates were then calculated using a general linear model with a canonical hemodynamic response basis function. Contrast images for $4–$0 cue regressors were computed for each subject and entered into a between-subject random-effects analysis. Statistical thresholds were set to p < 0.01, false discovery rate (FDR) corrected, with a cluster extent of six voxels for the group-level GLM analyses (Genovese et al., 2002). For construction of DCMs, an additional first-level analysis was run that included a column with the combined anticipation periods for $4 and $0 gain trials.
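To illustrate how a 1 s boxcar at cue onset becomes a GLM regressor, the following sketch convolves a boxcar with a double-gamma hemodynamic response function sampled at the 1 s TR. The HRF parameters (response shape 6, undershoot shape 16, ratio 1/6) are a commonly used default and are assumed here rather than taken from the text; the cue onsets and run length are hypothetical, and this is not the SPM implementation.

```python
import math


def canonical_hrf(t):
    """Double-gamma HRF (assumed shapes 6 and 16, undershoot ratio 1/6)."""
    if t < 0:
        return 0.0
    response = t ** 5 * math.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * math.exp(-t) / math.gamma(16)
    return response - undershoot / 6.0


def build_regressor(onsets_s, n_volumes, tr_s=1.0, duration_s=1.0, hrf_length_s=32):
    """Convolve boxcars starting at each onset with the HRF, sampled once per TR."""
    n_box = max(1, int(round(duration_s / tr_s)))
    boxcar = [0.0] * n_volumes
    for onset in onsets_s:
        start = int(round(onset / tr_s))
        for k in range(start, min(start + n_box, n_volumes)):
            boxcar[k] = 1.0
    hrf = [canonical_hrf(i * tr_s) for i in range(int(hrf_length_s / tr_s))]
    regressor = [0.0] * n_volumes
    for i, b in enumerate(boxcar):
        if b == 0.0:
            continue
        for j, h in enumerate(hrf):
            if i + j < n_volumes:
                regressor[i + j] += b * h
    return regressor


cue_onsets = [10.0]  # hypothetical cue onset, in seconds
regressor = build_regressor(cue_onsets, n_volumes=60)
peak_volume = max(range(len(regressor)), key=regressor.__getitem__)
print("predicted BOLD peak at volume", peak_volume)
```

With these assumed parameters the predicted response peaks about 5 s after cue onset, which is why a brief boxcar at the cue can capture activity during the anticipation period that follows.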

Dynamic causal modeling.

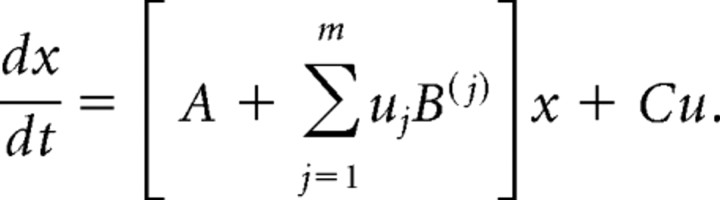

All DCM analyses were conducted in DCM8 as implemented by SPM8. Dynamic causal modeling uses generative models of brain responses to infer the hidden activity of brain regions during different experimental contexts (Friston et al., 2003). A DCM is composed of a system of nodes that interact via unidirectional connections. Experimental manipulations are treated as perturbations in the system, which operate either by directly influencing the activity of one or more nodes (driving inputs), or by influencing the strength of connection between nodes (modulatory input). The latter effect of exogenous input represents how the coupling between two regions varies in response to experimental manipulations.

DCM represents the hidden neuronal population dynamics in each region with a state variable x. For inputs u, the state equation for DCM is:

$$\frac{dx}{dt} = \left(A + \sum_{j=1}^{m} u_j B^{(j)}\right) x + C u$$

Matrix A represents the strengths of context-independent or intrinsic connections, matrix B represents the modulation of context-dependent pathways, and matrix C represents the driving input to the network. The state equation is transformed into a predicted BOLD signal by a biophysical forward model of hemodynamic responses (Friston et al., 2000; Stephan et al., 2007). Model parameters are estimated using variational Bayes under the Laplace approximation, with the objective of maximizing the negative free energy as an approximation to the log model evidence, a measure of the balance between model fit and model complexity.
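A toy simulation can make the roles of the three matrices concrete. The sketch below Euler-integrates the bilinear form dx/dt = (A + u_mod B) x + C u_drive for three regions. The off-diagonal values are borrowed loosely from the averaged estimates in Table 1 purely for illustration; the self-connection of −1, the step size, and the constant inputs are assumptions, and the code omits the hemodynamic forward model and is not the SPM estimation scheme.

```python
# Region order: 0 = dlPFC, 1 = VTA, 2 = NAcc.
# Entry [i][j] is the influence of region j on region i.

SELF_DECAY = -1.0  # assumed self-connection; not a value reported in the text

A = [
    [SELF_DECAY, 0.18, 0.16],  # VTA->dlPFC, NAcc->dlPFC
    [0.01, SELF_DECAY, 0.22],  # dlPFC->VTA, NAcc->VTA
    [0.07, 0.26, SELF_DECAY],  # dlPFC->NAcc, VTA->NAcc
]
B = [
    [0.0, 0.0, 0.0],
    [0.20, 0.0, 0.0],  # modulatory input strengthens dlPFC->VTA
    [0.21, 0.0, 0.0],  # modulatory input strengthens dlPFC->NAcc
]
C = [0.09, 0.0, 0.0]  # driving input enters at the dlPFC only


def simulate(A, B, C, u_drive, u_mod, dt=0.1, n_steps=300):
    """Euler-integrate dx/dt = (A + u_mod * B) x + C * u_drive from x = 0."""
    n = len(C)
    x = [0.0] * n
    for _ in range(n_steps):
        dx = [
            sum((A[i][j] + u_mod * B[i][j]) * x[j] for j in range(n)) + C[i] * u_drive
            for i in range(n)
        ]
        x = [xi + dt * dxi for xi, dxi in zip(x, dx)]
    return x


low = simulate(A, B, C, u_drive=1.0, u_mod=0.0)   # cue present, no modulation
high = simulate(A, B, C, u_drive=1.0, u_mod=1.0)  # cue present, modulation on
print("VTA state without modulation:", round(low[1], 4))
print("VTA state with modulation:   ", round(high[1], 4))
```

In this toy system the driving input reaches the VTA and NAcc only through the dlPFC, so switching on the modulatory input raises their activity without any direct input to either region.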

Selection of volumes of interest.

Volumes of interest (VOIs) were defined by taking the intersection of anatomical boundaries and significant functional activations. For the NAcc and VTA, anatomical boundaries were hand drawn using AFNI software on high-resolution anatomical images in individual space (afni.nimh.nih.gov/afni). The NAcc was drawn according to the procedure outlined by Breiter et al. (1997). The ventral tegmental area was drawn in the sagittal section and was identified with the following boundaries: the superior boundary was the most superior horizontal section containing the superior colliculus. The inferior boundary was the most inferior horizontal section containing the red nucleus. The lateral boundaries were drawn in the sagittal plane as vertical lines connecting the center of the colliculus and the peak of curvature of the interpeduncular fossa. The anterior boundary was clearly visible as CSF, and the posterior boundary was a horizontal line bisecting the red nucleus in the axial plane. For the dlPFC, anatomical boundaries were defined on the MNI template (fmri.wfubmc.edu/cms) as the intersection of the left BA 46 and medial frontal gyrus, and back-transformed into individual space.

The objective of DCM is to formulate and compare different possible mechanisms by which an established effect (local response) may have arisen. How this effect is initially detected and established, before the DCM analysis, depends on the particular question that is being asked. In the context of a GLM analysis, as in our case, this is usually done by requiring that there is a certain degree of activation in each region considered. Here, we operationalized this by requiring that, for each subject, each region showed an activation at the level of p < 0.05, uncorrected, with a cluster extent threshold of >3 voxels in subcortical/midbrain structures and 5 voxels in cortical structures. This criterion eliminated three subjects from the DCM analysis (two for NAcc; one for dlPFC). The time series for both the VTA and the NAcc were extracted from the peak voxel within each subject's VOI, following the study by Adcock et al. (2006). For the dlPFC, the VOI was an 8 mm sphere around each individual's peak activation. Mean coordinates ([x y z]) in MNI space for the three VOIs were as follows: left NAcc, [−8 14 −1]; right NAcc, [12 14 −3]; left VTA, [−2 −15 −13]; right VTA, [3 −17 −12]; dlPFC, [−44 38 19].

Construction of DCMs.

To answer the questions of where information about potential reward enters the system and how the system is modulated by reward anticipation, the model space included all possible configurations of driving input and several possible combinations of context-sensitive connections. Driving inputs represent cue information about all reward types (high and low), while modulatory inputs represent only high reward cues. All models assume reciprocal intrinsic connections between all regions due to the evidence from rodent literature of either monosynaptic pathways or direct functional relationships between the VTA, NAcc, and PFC (see Introduction). To reduce the number of models, we used a simplifying assumption that the bidirectional VTA–NAcc connections are modulated by the reward context. This is a reasonable assumption given the strong evidence that these two regions are critical for supporting a motivational state (for review, see Haber and Knutson, 2010). The context sensitivity of all connections to and from the dlPFC was systematically varied, resulting in 16 models. In addition, we crossed these models with all 7 possible combinations of driving input configurations. In total, 112 models per subject were fitted using a variational Bayes scheme, and posterior means [MAP (maximum a posteriori)] and posterior variances were estimated for each connection of each model.
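The size of the model space follows from simple counting: four connections to and from the dlPFC can each be context sensitive or not (2^4 = 16 patterns), and driving input can enter any non-empty subset of the three regions (2^3 − 1 = 7 configurations). A minimal sketch of that enumeration, with variable names chosen for illustration only:

```python
from itertools import combinations, product

regions = ["dlPFC", "VTA", "NAcc"]

# Connections whose context sensitivity was varied (source -> target).
varied = [("dlPFC", "VTA"), ("dlPFC", "NAcc"), ("VTA", "dlPFC"), ("NAcc", "dlPFC")]
# Connections assumed to be context sensitive in every model.
always_modulated = [("VTA", "NAcc"), ("NAcc", "VTA")]

# Every non-empty subset of regions is a possible driving-input configuration.
driving_configs = [
    set(subset) for k in range(1, len(regions) + 1) for subset in combinations(regions, k)
]

# Every on/off pattern over the varied connections is a modulation pattern.
modulation_patterns = [
    [conn for conn, on in zip(varied, flags) if on] + always_modulated
    for flags in product([False, True], repeat=len(varied))
]

models = [
    {"driving": d, "modulated": m} for d in driving_configs for m in modulation_patterns
]
print(len(driving_configs), len(modulation_patterns), len(models))
```

Grouping `models` by their `driving` entry yields the seven families of 16 models used for family-level inference.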

Bayesian model selection.

Bayesian model selection (BMS), in combination with family level inference and Bayesian model averaging, was used to determine the most likely model structure given our data (Stephan et al., 2009, 2010; Penny et al., 2010). In a first comparison step, the model evidence, which balances model fit and complexity, was computed for each model using the negative free-energy approximation to the log-model evidence. Models were compared at the group level using a novel random-effects BMS procedure (Stephan et al., 2009). The models were assessed using the exceedance probability, the probability that a given model explains the data better than all other models. In a second level of comparison, we used family-level inference using Gibbs sampling on seven families (Penny et al., 2010), with all models in each family sharing a different driving input configuration. Models in the winning family were then subjected to the random-effects BMS procedure. Finally, we analyzed the winning family using Bayesian model averaging, a procedure that provides a measure of the most likely parameter values for an entire family of models across subjects (Stephan et al., 2009, 2010). Parameter significance was assessed by the fraction of samples in the posterior density that were greater than zero (posterior densities were sampled with 10,000 points), and parameters were considered significant at a posterior probability threshold of 95%.
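The sampling-based significance criterion can be sketched as follows. This example assumes, for illustration only, that a parameter's posterior density is Gaussian; the averaged posterior produced by Bayesian model averaging need not be, and the means and SDs used here are illustrative values rather than reported results.

```python
import random


def posterior_prob_positive(mean, sd, n_samples=10_000, seed=0):
    """Fraction of posterior samples above zero, assuming a Gaussian posterior."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n_samples) if rng.gauss(mean, sd) > 0.0)
    return hits / n_samples


THRESHOLD = 0.95

strong = posterior_prob_positive(mean=0.20, sd=0.07)  # illustrative values
weak = posterior_prob_positive(mean=0.03, sd=0.06)    # illustrative values

for label, p in [("strong", strong), ("weak", weak)]:
    verdict = "significant" if p >= THRESHOLD else "not significant"
    print(f"{label}: P(parameter > 0) = {p:.3f} ({verdict})")
```

A parameter whose mean lies several SDs from zero clears the 95% threshold, whereas one within about one SD of zero does not.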

Results

Behavioral data

To assess subjects' motivational state, we examined reaction times to target stimuli. An algorithm calibrated response time thresholds separately within each condition so that participants were successful ∼65% of the time [$4: mean (M), 64%; SD, 7%; $0: M, 63%; SD, 6%], to ensure equivalent reinforcement rates across conditions. For all included participants, reaction times to the target in $4 gain trials (M, 201 ms; SD, 42 ms) were faster than in $0 gain trials (M, 226 ms; SD, 61 ms; p < 0.001), signifying that participants were more motivated to perform in the $4 condition. Behavioral data for the loss and charity trials not included in this analysis can be found in the study by Carter et al. (2009).

GLM analyses

To identify neural substrates of motivation to obtain reward, we used a GLM analysis of the period beginning with the presentation of the cue (either $4 or $0); hereafter, we refer to this period of anticipation of the opportunity to obtain reward as “reward motivation.” GLM analyses of the contrast $4 > $0 during this period revealed that reward motivation was associated with significant activations (p < 0.01, FDR-corrected, 6 voxel cluster extent threshold) in all of the regions of interest used for the DCM analysis: bilaterally in the VTA, NAcc, and dlPFC. Additional significant activations were observed bilaterally in the midbrain (surrounding the VTA), dorsal striatum, ventral striatum (surrounding the NAcc), posterior parietal cortex, inferior parietal lobule, insula, ventrolateral prefrontal cortex, cerebellum, and ventral visual stream, as well as in the left hippocampus.

DCM analysis

We used DCM with BMS and model space partitioning to examine a network consisting of VTA, NAcc, and dlPFC during reward motivation. Our DCMs estimate the strength of driving inputs, whereby information signaling an upcoming opportunity to obtain reward directly influences neural activity in a region; intrinsic connectivity, whereby regions influence each other in the absence of reward information; and modulatory inputs, whereby information signaling the opportunity for future reward changes the strength of coupling between regions (Friston et al., 2003). We used BMS to compare the relative evidence of alternate DCMs that varied both the location of driving inputs into the system as well as the pattern of modulatory inputs, as specified below. We then applied the recently developed approach of model space partitioning, which allowed us to compare families of DCMs that varied with respect to driving input, factoring out all other differences in the models (Penny et al., 2010).

Using random-effects BMS, we compared 112 models that differed both in where information signaling potential reward entered the network and how this information influenced connectivity (Fig. 1). We tested a subset of the full model space that included all possible combinations of driving inputs, full intrinsic connectivity, and varied all combinations of possible modulatory connections between all regions except the connections between the VTA and NAcc (for details, see Materials and Methods). The exceedance probabilities (the probability that a model explains the data better than all others considered) of the top eight models together summed to 81% (Fig. 2). These top eight models all shared the feature of driving input to the dlPFC.

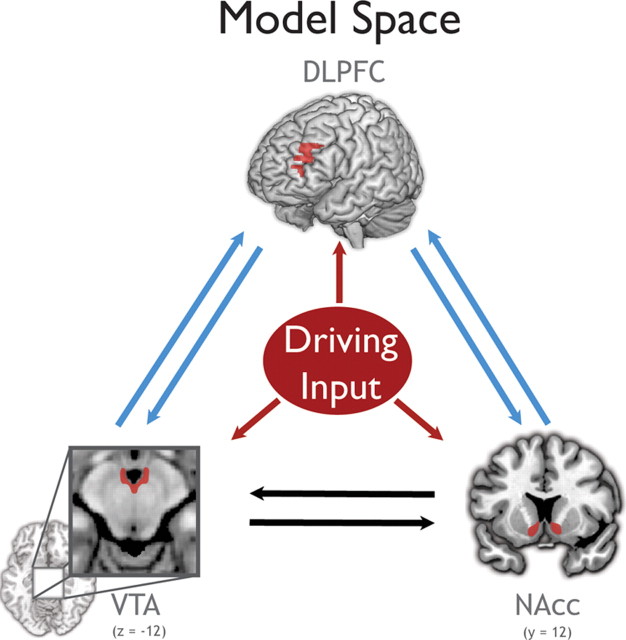

Figure 1.

The full tested model space. Sixteen models that systematically varied the context sensitivity of the connections represented by blue arrows were constructed. The black arrows represent connections that were allowed to be context sensitive in all models. These 16 models were crossed with all seven possible combinations of driving inputs (red). Driving inputs represent cue information about all reward types (high and low), while modulatory inputs represent only high reward cues.

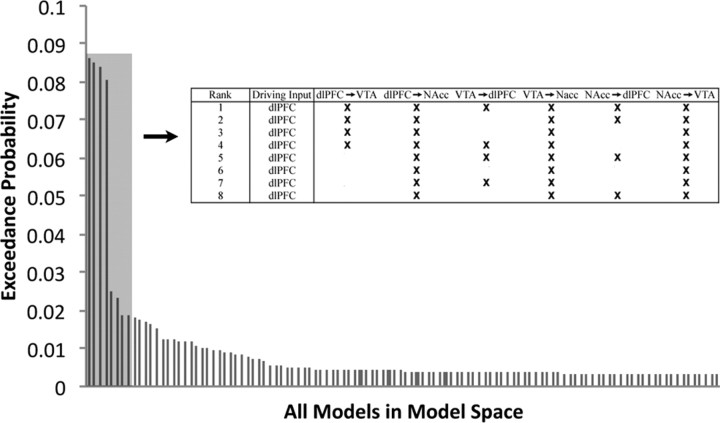

Figure 2.

Bayesian model selection results for the full model space. The Bayesian model selection indicates the most likely model for the full model space. The top eight models account for 81% of the exceedance probability (the exceedance probabilities for all 112 models sum to 1). All eight of the best models have the driving input solely at the dlPFC. Context-sensitive connections for the top eight models are shown above in the inset.

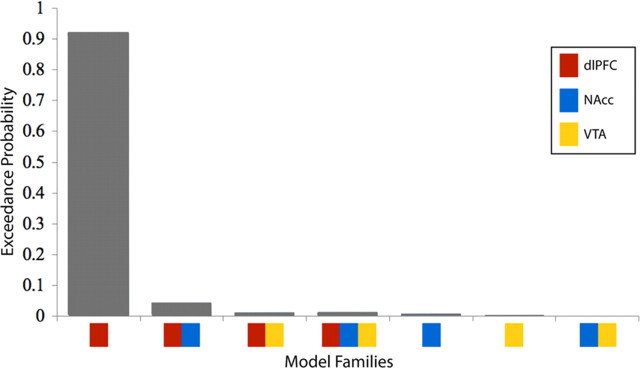

We next used model space partitioning to compare families of models defined by their driving input configuration. This analysis determined the most likely target of driving input, regardless of modulatory connectivity. We grouped our 112 models into 7 families of 16 models, each with the same driving input configuration, and compared these families using Bayesian family level inference. This analysis yielded very strong evidence (exceedance probability of 0.93) that the family of models with the driving input solely to the dlPFC provided a better fit to the data (i.e., had higher evidence) than the six other families considered, including those where there were driving inputs to both the dlPFC and other regions (Fig. 3). Because exceedance probabilities across families sum to 1, the relative probabilities of families are more informative than the absolute probability (Penny et al., 2010). In the present study, the ratio of the exceedance probability of the best to second best family was 21. The equivalent ratio for the expected posterior probability, the expected likelihood of obtaining a model in a particular family from any randomly selected subject, was 3.3. Thus, the exceedance probability of 0.93 observed here represents very strong evidence that reward information was best modeled as entering the system at the dlPFC.
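The notion of an exceedance probability can be illustrated by sampling. In random-effects BMS the frequencies of models (or families) in the population are described by a Dirichlet distribution, and the exceedance probability of a family is the probability that its frequency is larger than that of every other family. The sketch below estimates this by Monte Carlo; the Dirichlet parameters are hypothetical and are not the values estimated in this study, and the code does not reproduce the Gibbs sampling scheme used for family-level inference.

```python
import random


def exceedance_probabilities(alpha, n_samples=20_000, seed=0):
    """Monte Carlo estimate of P(frequency k is the largest) under Dirichlet(alpha)."""
    rng = random.Random(seed)
    wins = [0] * len(alpha)
    for _ in range(n_samples):
        # Independent gamma draws, once normalised, are Dirichlet distributed;
        # normalisation does not change which entry is largest, so it is skipped.
        draws = [rng.gammavariate(a, 1.0) for a in alpha]
        wins[draws.index(max(draws))] += 1
    return [w / n_samples for w in wins]


# Hypothetical Dirichlet parameters for seven families (first family favoured).
alpha = [6.0, 2.0, 1.5, 1.5, 1.0, 1.0, 1.0]
xp = exceedance_probabilities(alpha)

ranked = sorted(xp, reverse=True)
print("exceedance probabilities:", [round(p, 3) for p in xp])
print("best / second-best ratio:", round(ranked[0] / ranked[1], 1))
```

Because exactly one family wins in each sample, the estimates necessarily sum to 1, which is why the ratio between the best and second-best family is the more informative summary.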

Figure 3.

Exceedance probabilities for each family of models sharing a driving input configuration. The ratio of exceedance probabilities of the first to second best family is 21, indicating with very high certainty that reward information enters the modeled network solely at the dlPFC.

The models with driving inputs to the dlPFC were then compared with one another using BMS within the winning family. As anticipated, no single superior model within this group was determined. Notably, the top four models within the winning family, summing to 69% of the exceedance probability, all had modulatory connections from dlPFC to VTA, indicating that the influence of the dlPFC on the VTA was also important for determining model fit.

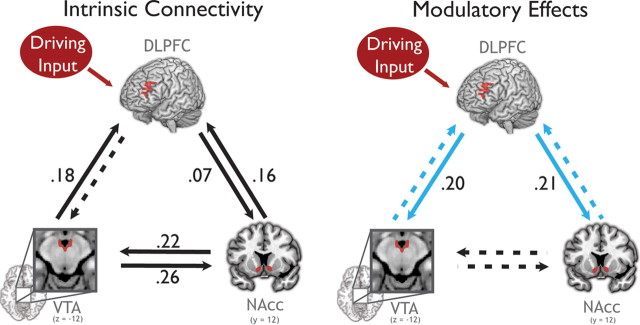

To determine how reward information entering the dlPFC affects network dynamics across subjects, we used Bayesian model averaging to compute the weighted average of modulatory changes in connectivity for the winning family of models (Stephan et al., 2009) (Fig. 4, Table 1). Parameter estimates were considered significant at a posterior probability threshold of 95% that the posterior mean is different from zero. At baseline, intrinsic connection strengths were strongest from the VTA to NAcc and NAcc to VTA. These connections were not significantly modulated by reward motivation even though all of the models tested allowed for the expression of context-dependent modulation of these connections. However, we emphasize that all intrinsic connections were included in all models, so the finding of strong intrinsic connectivity is independent of our constraints on the model space. This lack of significant modulation is unexpected given the role of these regions in supporting motivation.

Figure 4.

Connectivity determined by Bayesian model averaging of models in the winning family, which all shared driving inputs solely to the dlPFC (Occam's window, 11.8 models; SD, 5.3). All solid connections are significant at a posterior probability threshold of 95% that the posterior mean is larger than zero. The dotted connections are not significant. Driving inputs represent cue information about all reward types (high and low), while modulatory inputs represent only high reward cues. Left, The intrinsic (baseline) connectivity for each connection. Right, Modulation of connectivity during reward motivation. Modulation of the blue connections was varied in the model space. Connection strengths are indicated on each arrow (in hertz). Only the connections from dlPFC to VTA and NAcc are significantly modulated by reward motivation.

Table 1.

Means and SDs of all the parameter estimates for the averaged model

| Connection | Intrinsic: Mean | Intrinsic: SD | Modulatory: Mean | Modulatory: SD |
|---|---|---|---|---|
| VTA→NAcc | 0.26* | 0.07 | 0.07 | 0.07 |
| VTA→dlPFC | 0.18* | 0.07 | 0.03 | 0.06 |
| NAcc→VTA | 0.22* | 0.07 | 0.08 | 0.07 |
| NAcc→dlPFC | 0.16* | 0.06 | 0.04 | 0.06 |
| dlPFC→VTA | 0.01 | 0.07 | 0.20* | 0.07 |
| dlPFC→NAcc | 0.07* | 0.07 | 0.21* | 0.07 |
| Driving input | 0.09* | 0.02 | | |

The starred values indicate significant parameters.

Reward motivation induced significant increases in connection strength only in the connections from the dlPFC to the NAcc and to the VTA. This is not an artifact of the fact that driving input entered the dlPFC; in fact, it has been suggested that, when a driving input to a region and a modulatory input to its efferents are correlated (as they are here), the constraints on the prior variances of the driving and modulatory connections result in an underestimation of the strength of the modulatory effect (SPM listserv, 022036). The modulatory effect of reward motivation in these two connections from dlPFC was very strong: the connection from the dlPFC to the VTA increased from zero, and the modulatory effect from the dlPFC to the NAcc was 290% of the intrinsic connection strength. Thus, reward motivation engaged previously weak or inactive pathways from the dlPFC to the VTA and NAcc, without significantly altering connectivity throughout the rest of the system.
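As a rough check on these figures, the following arithmetic uses the rounded posterior means from Table 1 and assumes that the effective coupling during high-reward trials is the sum of the intrinsic (A) and modulatory (B) terms, as in the bilinear model:

$$
\frac{B_{\mathrm{dlPFC}\to\mathrm{NAcc}}}{A_{\mathrm{dlPFC}\to\mathrm{NAcc}}} \approx \frac{0.21}{0.07} = 3.0
$$

$$
A_{\mathrm{dlPFC}\to\mathrm{VTA}} + B_{\mathrm{dlPFC}\to\mathrm{VTA}} \approx 0.01 + 0.20 = 0.21, \qquad
A_{\mathrm{dlPFC}\to\mathrm{NAcc}} + B_{\mathrm{dlPFC}\to\mathrm{NAcc}} \approx 0.07 + 0.21 = 0.28
$$

The rounded values give a ratio of about 300%; the 290% quoted above presumably reflects the unrounded estimates.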

Discussion

We investigated the impact of reward motivation on the dynamics of mesolimbic and mesocortical dopaminergic regions in a network comprising VTA, NAcc, and dlPFC. Importantly, our task structure permitted us to isolate neural activity concurrent with onset of information signaling potential reward and distinct from processing related to reward outcomes, allowing the observation of motivation preceding the execution of goal-directed behavior. Our results indicate that, during a simple rewarded reaction time task, information about expected reward entered this network solely at the dlPFC. This reward information increased the modulation by the dlPFC of the VTA and the NAcc, structures that are known to influence the physiology and plasticity of networks supporting motivated behavior, attention, and memory throughout the brain. Together, these findings suggest that, in response to goal-relevant information, the PFC harnesses these modulatory pathways to generate physiological states that correspond to expectancy and motivation.

To characterize the network of brain regions involved in motivation, we used DCM. Importantly, DCM does not assume that temporal precedence is necessary for causality. Because the lag between neural activity and BOLD activation can theoretically vary across brain regions, due to vascular factors, DCM is particularly appropriate for detecting network interactions in BOLD data. In addition, DCM allows for inference about causal interactions between regions that depend on the experimental context. These inferences can be tested across a theoretically unlimited model space, here allowing us to test among all possible driving input configurations.

Our DCM analysis of the VTA–NAcc–dlPFC network during reward motivation indicated that the driving input was exclusively to the dlPFC. This means that, in this behavioral context and within the modeled network, information signaling potentially available reward entered the dopamine system at the dlPFC and not at the other regions in the model. Furthermore, driving input unique to the dlPFC appeared to be the feature of the models that was most important for determining model fit. Our findings demonstrate that reward cues directly increased dlPFC activation, and only influenced activation in the VTA or NAcc indirectly, via connections from the dlPFC.

In addition to the regions modeled in our analysis, previous research has identified other candidate regions, such as the medial prefrontal cortex, orbitofrontal cortex, and habenula (Staudinger et al., 2009; Bromberg-Martin et al., 2010) that could plausibly initiate motivated behavior. The question of how these regions interact with this network, especially the dlPFC, is an important avenue of future research. However, it is important to note that, if any of these regions were driving the modeled network via efferents to the VTA or NAcc, one would expect to see this influence expressed in our data as a driving input to the VTA or NAcc. Thus, our finding of a unique driving input to the dlPFC indicates that, in this behavioral context, information signaling potential reward entered the modeled network neither via subcortical relays nor other prefrontal cortical inputs to the VTA, but rather via the dlPFC.

In addition to demonstrating PFC modulation of VTA in awake animals during motivated behavior, the current findings are, to our knowledge, the first demonstration of a prefrontal influence on the VTA in humans or nonhuman primates. Bayesian model averaging revealed strong modulation of the VTA by the dlPFC specifically during reward motivation; this dlPFC-to-VTA pathway was not engaged intrinsically. Moreover, there was a nearly threefold increase in connectivity strength from the dlPFC to the NAcc during reward motivation. Conversely, intrinsic VTA–NAcc connectivity was significant, but was not modulated by reward. This result could indicate that connectivity between the VTA and NAcc is always strong regardless of the level of motivation. However, based on prior research showing that reward information has an effect on VTA modulation of NAcc (Bakshi and Kelley, 1991; Ikemoto and Panksepp, 1999; Parkinson et al., 2002), this interpretation is unlikely to be correct. More plausible is that changes in VTA–NAcc connectivity existed, but because their effect size was small relative to that of dlPFC connectivity, they did not contribute significantly to the model evidence, further suggesting that dlPFC modulation was highly influential for the function of this network.

The finding that there was no increase in the connection strength from the VTA to the dlPFC may seem to conflict with physiology literature demonstrating dopaminergic modulation of the PFC (Williams and Goldman-Rakic, 1995; Durstewitz et al., 2000; Gao and Goldman-Rakic, 2003; Paspalas and Goldman-Rakic, 2004; Seamans and Yang, 2004; Wang et al., 2004; Gao et al., 2007). Although we found a modest intrinsic influence of the VTA on the PFC, this influence did not change with motivational state. However, we do not believe our findings contradict this literature, as the two modulatory influences may contribute to separate, but strongly interacting, behavioral processes. We believe the modulatory role of the PFC over the VTA contributes to goal-directed, instrumental components of behavior, whereas the modulatory role of the VTA over the PFC may be especially important to other behavioral processes, such as updating or task switching, which were not manipulated in our paradigm. Thus, our findings, in the context of the previous literature, suggest that paradigms that evoke motivated executive behaviors would reveal bidirectional modulations between the VTA and dlPFC.

Building on the wealth of previous research outlining the influence of midbrain dopamine on target regions, the current findings suggest a model in which the dlPFC integrates information about potential reward and implements goal-directed behavior by tuning mesolimbic dopamine projections. This interpretation is consonant with evidence from the rodent literature showing that the PFC is the only cortical region that projects to dopamine neurons in the VTA (Beckstead et al., 1979; Sesack and Pickel, 1992; Sesack and Carr, 2002; Frankle et al., 2006). The findings fill a critical gap in this literature: stimulation of the PFC has been shown to regulate the firing patterns of dopamine neurons in rodents (Gariano and Groves, 1988; Svensson and Tung, 1989; Gao et al., 2007), and multisite recordings demonstrate phase-coherence between the PFC and the VTA that mediates slow-oscillation burst firing (Gao et al., 2007), but there has been no demonstration that these physiological relationships are driven by motivation and goal-directed behavior. Furthermore, the absence of an expanded frontal cortex in the rodent makes an appropriate rodent correlate of primate dlPFC unclear. Although there is evidence in primates for excitatory projections from the PFC to midbrain dopamine neurons (Williams and Goldman-Rakic, 1998; Frankle et al., 2006), the functional significance of these relatively sparse projections has been questioned. Our findings showing a physiological relationship between prefrontal cortex and VTA in humans thus fill a second critical gap in the extant literature on human (and nonhuman primate) motivation.

Within the PFC, dlPFC is well situated to orchestrate motivated behavior because of its role in planning and goal maintenance. Primate physiology studies have demonstrated that, while both the orbitofrontal cortex and the dlPFC encode reward information, only dlPFC activity predicts which behaviors a monkey will execute (Wallis and Miller, 2003). Furthermore, the dlPFC maintains goal-relevant information during working memory (Levy and Goldman-Rakic, 2000; Wager and Smith, 2003; Owen et al., 2005), updates this information as goals dynamically change during task switching (Dove et al., 2000; Kimberg et al., 2000; MacDonald et al., 2000; Rushworth et al., 2002; Crone et al., 2006; Sakai, 2008; Savine et al., 2010), and arbitrates between conflicting goals during decision making (MacDonald et al., 2000; McClure et al., 2004, 2007; Ridderinkhof et al., 2004; Boettiger et al., 2007; Hare et al., 2009). These previous findings suggest a role for the dlPFC in implementing behavioral goals, but they do not characterize the nature and direction of interactions between dlPFC and other regions supporting motivated behavior. Computational and neuroimaging work has posited a role for the dlPFC in modulating the striatum in the context of instructed reward learning (Doll et al., 2009; Li et al., 2011). Our results corroborate these recent findings and further implicate the dlPFC in initiating motivated behavior, via the novel demonstration of a directed influence on the VTA. Transcranial magnetic stimulation of the dlPFC changes the valuation of both cigarettes (Amiaz et al., 2009) and food (Camus et al., 2009), and also induces dopamine release in the striatum (Pogarell et al., 2006; Ko et al., 2008); however, these results do not reveal how dlPFC activation affects network activation or dynamics. The current findings directly demonstrate dlPFC influence over not only the NAcc but also the VTA during reward-motivated behavior, as postulated by prior work.

In summary, we found that motivation to obtain reward is instantiated by a transfer of information from the dlPFC to the NAcc and VTA; we saw no evidence of the reverse. These findings show that the dlPFC can orchestrate the dynamics of this neuromodulatory network in a contextually appropriate manner. Furthermore, by suggesting an anatomical source for information about expected reward that activates dopaminergic regions, the findings also shed light on the fundamental question of how dopamine neurons define value. Finally, because of the widespread effects of VTA activation and resultant dopamine release, this interaction represents a candidate mechanism whereby dorsolateral prefrontal cortex modulates physiology and plasticity throughout the brain to support goal-directed behavior.

Footnotes

This work was supported by NIMH Grant 70685 (S.A.H.), NINDS Grant 41328 (S.A.H.), National Alliance for Research on Schizophrenia and Depression (R.A.A.), and The Dana Foundation (R.A.A.). R.M.C. is supported by NIH Fellowship NIH51156. S.A.H. was supported by an Incubator Award from the Duke Institute for Brain Sciences. R.A.A. is supported by the Alfred P. Sloan Foundation and The Esther A. and Joseph Klingenstein Fund Foundation. We gratefully acknowledge K. E. Stephan's help with methodological considerations related to the DCM analyses.

The authors declare no competing financial interests.

References

- Adcock RA, Thangavel A, Whitfield-Gabrieli S, Knutson B, Gabrieli JD. Reward-motivated learning: mesolimbic activation precedes memory formation. Neuron. 2006;50:507–517. doi: 10.1016/j.neuron.2006.03.036.

- Alcaro A, Huber R, Panksepp J. Behavioral functions of the mesolimbic dopaminergic system: an affective neuroethological perspective. Brain Res Rev. 2007;56:283–321. doi: 10.1016/j.brainresrev.2007.07.014.

- Amiaz R, Levy D, Vainiger D, Grunhaus L, Zangen A. Repeated high-frequency transcranial magnetic stimulation over the dorsolateral prefrontal cortex reduces cigarette craving and consumption. Addiction. 2009;104:653–660. doi: 10.1111/j.1360-0443.2008.02448.x.

- Bakshi VP, Kelley AE. Dopaminergic regulation of feeding behavior. 1. Differential effects of haloperidol microinfusion into 3 striatal subregions. Psychobiology. 1991;19:223–232.

- Beckstead RM, Domesick VB, Nauta WJ. Efferent connections of the substantia nigra and ventral tegmental area in the rat. Brain Res. 1979;175:191–217. doi: 10.1016/0006-8993(79)91001-1.

- Berridge KC, Robinson TE. What is the role of dopamine in reward: hedonic impact, reward learning, or incentive salience? Brain Res Brain Res Rev. 1998;28:309–369. doi: 10.1016/s0165-0173(98)00019-8.

- Boettiger CA, Mitchell JM, Tavares VC, Robertson M, Joslyn G, D'Esposito M, Fields HL. Immediate reward bias in humans: fronto-parietal networks and a role for the catechol-O-methyltransferase 158Val/Val genotype. J Neurosci. 2007;27:14383–14391. doi: 10.1523/JNEUROSCI.2551-07.2007.

- Breiter HC, Gollub RL, Weisskoff RM, Kennedy DN, Makris N, Berke JD, Goodman JM, Kantor HL, Gastfriend DR, Riorden JP, Mathew RT, Rosen BR, Hyman SE. Acute effects of cocaine on human brain activity and emotion. Neuron. 1997;19:591–611. doi: 10.1016/s0896-6273(00)80374-8.

- Bromberg-Martin ES, Matsumoto M, Hikosaka O. Dopamine in motivational control: rewarding, aversive, and alerting. Neuron. 2010;68:815–834. doi: 10.1016/j.neuron.2010.11.022.

- Camus M, Halelamien N, Plassmann H, Shimojo S, O'Doherty J, Camerer C, Rangel A. Repetitive transcranial magnetic stimulation over the right dorsolateral prefrontal cortex decreases valuations during food choices. Eur J Neurosci. 2009;30:1980–1988. doi: 10.1111/j.1460-9568.2009.06991.x.

- Carter RM, Macinnes JJ, Huettel SA, Adcock RA. Activation in the VTA and nucleus accumbens increases in anticipation of both gains and losses. Front Behav Neurosci. 2009;3:21. doi: 10.3389/neuro.08.021.2009.

- Crone EA, Wendelken C, Donohue SE, Bunge SA. Neural evidence for dissociable components of task-switching. Cereb Cortex. 2006;16:475–486. doi: 10.1093/cercor/bhi127.

- Doll BB, Jacobs WJ, Sanfey AG, Frank MJ. Instructional control of reinforcement learning: a behavioral and neurocomputational investigation. Brain Res. 2009;1299:74–94. doi: 10.1016/j.brainres.2009.07.007.

- Dove A, Pollmann S, Schubert T, Wiggins CJ, von Cramon DY. Prefrontal cortex activation in task switching: an event-related fMRI study. Brain Res Cogn Brain Res. 2000;9:103–109. doi: 10.1016/s0926-6410(99)00029-4.

- Durstewitz D, Seamans JK, Sejnowski TJ. Neurocomputational models of working memory. Nat Neurosci. 2000;3(Suppl):1184–1191. doi: 10.1038/81460.

- Fields HL, Hjelmstad GO, Margolis EB, Nicola SM. Ventral tegmental area neurons in learned appetitive behavior and positive reinforcement. Annu Rev Neurosci. 2007;30:289–316. doi: 10.1146/annurev.neuro.30.051606.094341.

- Fiorillo CD, Tobler PN, Schultz W. Discrete coding of reward probability and uncertainty by dopamine neurons. Science. 2003;299:1898–1902. doi: 10.1126/science.1077349.

- Frankle WG, Laruelle M, Haber SN. Prefrontal cortical projections to the midbrain in primates: evidence for a sparse connection. Neuropsychopharmacology. 2006;31:1627–1636. doi: 10.1038/sj.npp.1300990.

- Friston KJ, Mechelli A, Turner R, Price CJ. Nonlinear responses in fMRI: the Balloon model, Volterra kernels, and other hemodynamics. Neuroimage. 2000;12:466–477. doi: 10.1006/nimg.2000.0630.

- Friston KJ, Harrison L, Penny W. Dynamic causal modelling. Neuroimage. 2003;19:1273–1302. doi: 10.1016/s1053-8119(03)00202-7.

- Gao M, Liu CL, Yang S, Jin GZ, Bunney BS, Shi WX. Functional coupling between the prefrontal cortex and dopamine neurons in the ventral tegmental area. J Neurosci. 2007;27:5414–5421. doi: 10.1523/JNEUROSCI.5347-06.2007.

- Gao WJ, Goldman-Rakic PS. Selective modulation of excitatory and inhibitory microcircuits by dopamine. Proc Natl Acad Sci U S A. 2003;100:2836–2841. doi: 10.1073/pnas.262796399.

- Gariano RF, Groves PM. Burst firing induced in midbrain dopamine neurons by stimulation of the medial prefrontal and anterior cingulate cortices. Brain Res. 1988;462:194–198. doi: 10.1016/0006-8993(88)90606-3.

- Genovese CR, Lazar NA, Nichols T. Thresholding of statistical maps in functional neuroimaging using the false discovery rate. Neuroimage. 2002;15:870–878. doi: 10.1006/nimg.2001.1037.

- Goldman-Rakic PS. The cortical dopamine system: role in memory and cognition. Adv Pharmacol. 1998;42:707–711. doi: 10.1016/s1054-3589(08)60846-7.

- Goto Y, Grace AA. Dopaminergic modulation of limbic and cortical drive of nucleus accumbens in goal-directed behavior. Nat Neurosci. 2005;8:805–812. doi: 10.1038/nn1471.

- Grace AA, Floresco SB, Goto Y, Lodge DJ. Regulation of firing of dopaminergic neurons and control of goal-directed behaviors. Trends Neurosci. 2007;30:220–227. doi: 10.1016/j.tins.2007.03.003.

- Haber SN, Knutson B. The reward circuit: linking primate anatomy and human imaging. Neuropsychopharmacology. 2010;35:4–26. doi: 10.1038/npp.2009.129.

- Hare TA, Camerer CF, Rangel A. Self-control in decision-making involves modulation of the vmPFC valuation system. Science. 2009;324:646–648. doi: 10.1126/science.1168450.

- Hazy TE, Frank MJ, O'Reilly RC. Banishing the homunculus: making working memory work. Neuroscience. 2006;139:105–118. doi: 10.1016/j.neuroscience.2005.04.067.

- Ikemoto S, Panksepp J. The role of nucleus accumbens dopamine in motivated behavior: a unifying interpretation with special reference to reward-seeking. Brain Res Brain Res Rev. 1999;31:6–41. doi: 10.1016/s0165-0173(99)00023-5.

- Kimberg DY, Aguirre GK, D'Esposito M. Modulation of task-related neural activity in task-switching: an fMRI study. Brain Res Cogn Brain Res. 2000;10:189–196. doi: 10.1016/s0926-6410(00)00016-1.

- Knutson B, Adams CM, Fong GW, Hommer D. Anticipation of increasing monetary reward selectively recruits nucleus accumbens. J Neurosci. 2001;21:RC159 (1–5). doi: 10.1523/JNEUROSCI.21-16-j0002.2001.

- Ko JH, Monchi O, Ptito A, Bloomfield P, Houle S, Strafella AP. Theta burst stimulation-induced inhibition of dorsolateral prefrontal cortex reveals hemispheric asymmetry in striatal dopamine release during set shifting task: a TMS-[(11)C]raclopride PET study. Eur J Neurosci. 2008;28:2147–2155. doi: 10.1111/j.1460-9568.2008.06501.x.

- Levy R, Goldman-Rakic PS. Segregation of working memory functions within the dorsolateral prefrontal cortex. Exp Brain Res. 2000;133:23–32. doi: 10.1007/s002210000397.

- Li J, Delgado MR, Phelps EA. How instructed knowledge modulates the neural systems of reward learning. Proc Natl Acad Sci U S A. 2011;108:55–60. doi: 10.1073/pnas.1014938108.

- Ljungberg T, Apicella P, Schultz W. Responses of monkey dopamine neurons during learning of behavioral reactions. J Neurophysiol. 1992;67:145–163. doi: 10.1152/jn.1992.67.1.145.

- MacDonald AW, 3rd, Cohen JD, Stenger VA, Carter CS. Dissociating the role of the dorsolateral prefrontal and anterior cingulate cortex in cognitive control. Science. 2000;288:1835–1838. doi: 10.1126/science.288.5472.1835.

- McClure SM, Laibson DI, Loewenstein G, Cohen JD. Separate neural systems value immediate and delayed monetary rewards. Science. 2004;306:503–507. doi: 10.1126/science.1100907.

- McClure SM, Ericson KM, Laibson DI, Loewenstein G, Cohen JD. Time discounting for primary rewards. J Neurosci. 2007;27:5796–5804. doi: 10.1523/JNEUROSCI.4246-06.2007.

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annu Rev Neurosci. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- Owen AM, McMillan KM, Laird AR, Bullmore E. N-back working memory paradigm: a meta-analysis of normative functional neuroimaging studies. Hum Brain Mapp. 2005;25:46–59. doi: 10.1002/hbm.20131.

- Parkinson JA, Dalley JW, Cardinal RN, Bamford A, Fehnert B, Lachenal G, Rudarakanchana N, Halkerston KM, Robbins TW, Everitt BJ. Nucleus accumbens dopamine depletion impairs both acquisition and performance of appetitive pavlovian approach behaviour: implications for mesoaccumbens dopamine function. Behav Brain Res. 2002;137:149–163. doi: 10.1016/s0166-4328(02)00291-7.

- Paspalas CD, Goldman-Rakic PS. Microdomains for dopamine volume neurotransmission in primate prefrontal cortex. J Neurosci. 2004;24:5292–5300. doi: 10.1523/JNEUROSCI.0195-04.2004.

- Penny WD, Stephan KE, Daunizeau J, Rosa MJ, Friston KJ, Schofield TM, Leff AP. Comparing families of dynamic causal models. PLoS Comput Biol. 2010;6:e1000709. doi: 10.1371/journal.pcbi.1000709.

- Pogarell O, Koch W, Popperl G, Tatsch K, Jakob F, Zwanzger P, Mulert C, Rupprecht R, Moller HJ, Hegerl U, Padberg F. Striatal dopamine release after prefrontal repetitive transcranial magnetic stimulation in major depression: preliminary results of a dynamic [123I] IBZM SPECT study. J Psychiatr Res. 2006;40:307–314. doi: 10.1016/j.jpsychires.2005.09.001.

- Ridderinkhof KR, van den Wildenberg WP, Segalowitz SJ, Carter CS. Neurocognitive mechanisms of cognitive control: the role of prefrontal cortex in action selection, response inhibition, performance monitoring, and reward-based learning. Brain Cogn. 2004;56:129–140. doi: 10.1016/j.bandc.2004.09.016.

- Roitman MF, Wheeler RA, Wightman RM, Carelli RM. Real-time chemical responses in the nucleus accumbens differentiate rewarding and aversive stimuli. Nat Neurosci. 2008;11:1376–1377. doi: 10.1038/nn.2219.

- Rushworth MF, Passingham RE, Nobre AC. Components of switching intentional set. J Cogn Neurosci. 2002;14:1139–1150. doi: 10.1162/089892902760807159.

- Sakai K. Task set and prefrontal cortex. Annu Rev Neurosci. 2008;31:219–245. doi: 10.1146/annurev.neuro.31.060407.125642.

- Salamone JD, Correa M, Farrar A, Mingote SM. Effort-related functions of nucleus accumbens dopamine and associated forebrain circuits. Psychopharmacology (Berl). 2007;191:461–482. doi: 10.1007/s00213-006-0668-9.

- Savine AC, Beck SM, Edwards BG, Chiew KS, Braver TS. Enhancement of cognitive control by approach and avoidance motivational states. Cogn Emot. 2010;24:338–356. doi: 10.1080/02699930903381564.

- Schultz W. Predictive reward signal of dopamine neurons. J Neurophysiol. 1998;80:1–27. doi: 10.1152/jn.1998.80.1.1.

- Seamans JK, Yang CR. The principal features and mechanisms of dopamine modulation in the prefrontal cortex. Prog Neurobiol. 2004;74:1–58. doi: 10.1016/j.pneurobio.2004.05.006.

- Sesack SR, Carr DB. Selective prefrontal cortex inputs to dopamine cells: implications for schizophrenia. Physiol Behav. 2002;77:513–517. doi: 10.1016/s0031-9384(02)00931-9.

- Sesack SR, Pickel VM. Prefrontal cortical efferents in the rat synapse on unlabeled neuronal targets of catecholamine terminals in the nucleus accumbens septi and on dopamine neurons in the ventral tegmental area. J Comp Neurol. 1992;320:145–160. doi: 10.1002/cne.903200202.

- Smith SM. Fast robust automated brain extraction. Hum Brain Mapp. 2002;17:143–155. doi: 10.1002/hbm.10062.

- Staudinger MR, Erk S, Abler B, Walter H. Cognitive reappraisal modulates expected value and prediction error encoding in the ventral striatum. Neuroimage. 2009;47:713–721. doi: 10.1016/j.neuroimage.2009.04.095.

- Stephan KE, Weiskopf N, Drysdale PM, Robinson PA, Friston KJ. Comparing hemodynamic models with DCM. Neuroimage. 2007;38:387–401. doi: 10.1016/j.neuroimage.2007.07.040.

- Stephan KE, Penny WD, Daunizeau J, Moran RJ, Friston KJ. Bayesian model selection for group studies. Neuroimage. 2009;46:1004–1017. doi: 10.1016/j.neuroimage.2009.03.025.

- Stephan KE, Penny WD, Moran RJ, den Ouden HE, Daunizeau J, Friston KJ. Ten simple rules for dynamic causal modeling. Neuroimage. 2010;49:3099–3109. doi: 10.1016/j.neuroimage.2009.11.015.

- Svensson TH, Tung CS. Local cooling of pre-frontal cortex induces pacemaker-like firing of dopamine neurons in rat ventral tegmental area in vivo. Acta Physiol Scand. 1989;136:135–136. doi: 10.1111/j.1748-1716.1989.tb08640.x.

- Wager TD, Smith EE. Neuroimaging studies of working memory: a meta-analysis. Cogn Affect Behav Neurosci. 2003;3:255–274. doi: 10.3758/cabn.3.4.255.

- Wagner AD, Maril A, Bjork RA, Schacter DL. Prefrontal contributions to executive control: fMRI evidence for functional distinctions within lateral prefrontal cortex. Neuroimage. 2001;14:1337–1347. doi: 10.1006/nimg.2001.0936.

- Wallis JD, Miller EK. Neuronal activity in primate dorsolateral and orbital prefrontal cortex during performance of a reward preference task. Eur J Neurosci. 2003;18:2069–2081. doi: 10.1046/j.1460-9568.2003.02922.x.

- Wang M, Vijayraghavan S, Goldman-Rakic PS. Selective D2 receptor actions on the functional circuitry of working memory. Science. 2004;303:853–856. doi: 10.1126/science.1091162.

- Watanabe M, Sakagami M. Integration of cognitive and motivational context information in the primate prefrontal cortex. Cereb Cortex. 2007;17:i101–i109. doi: 10.1093/cercor/bhm067.

- Williams GV, Goldman-Rakic PS. Modulation of memory fields by dopamine D1 receptors in prefrontal cortex. Nature. 1995;376:572–575. doi: 10.1038/376572a0.

- Williams SM, Goldman-Rakic PS. Widespread origin of the primate mesofrontal dopamine system. Cereb Cortex. 1998;8:321–345. doi: 10.1093/cercor/8.4.321.

- Wise RA. Dopamine, learning and motivation. Nat Rev Neurosci. 2004;5:483–494. doi: 10.1038/nrn1406.