Abstract

We study the effects of network topology on the response of networks of coupled discrete excitable systems to an external stochastic stimulus. We extend recent results that characterize the response in terms of spectral properties of the adjacency matrix by allowing distributions in the transmission delays and in the number of refractory states and by developing a nonperturbative approximation to the steady state network response. We confirm our theoretical results with numerical simulations. We find that the steady state response amplitude is inversely proportional to the duration of refractoriness, which reduces the maximum attainable dynamic range. We also find that transmission delays alter the time required to reach steady state. Importantly, neither delays nor refractoriness impact the general prediction that criticality and maximum dynamic range occur when the largest eigenvalue of the adjacency matrix is unity.

Networks of coupled excitable systems describe many engineering, biological, and social applications. Recent studies of how such networks respond to an external, stochastic stimulus have provided insight on information processing in sensory neural networks.1, 2 In agreement with recent experiments,3 these studies showed that the dynamic range of neural tissue is maximized in the critical regime, which is precisely balanced between growth and decay of propagating excitation. This regime was studied theoretically for directed random Erdős-Rényi networks in Ref. 1, where it was found to be characterized by a network mean degree equal to one. However, other studies4, 7 showed that this condition does not specify criticality for other network topologies. In this paper, extending recent results, we present a general framework for studying the effects of network topology on the response to a stochastic stimulus. With this framework, we derive a requirement for criticality and maximum dynamic range that holds for a wide variety of network topologies. Moreover, we show that this prediction holds when refractory states and transmission time delays are included in the network dynamics, although other aspects of the response do depend on these properties.

INTRODUCTION

Many applications involve networks of coupled excitable systems. Two prominent examples are the spread of information through neural networks and the spread of disease through human populations. The collective dynamics of such systems often defy naive expectations based on the dynamics of their individual components. For example, the collective response of a neural network can encode sensory stimuli which span more than 10 orders of magnitude in intensity, while the response of a single neuron typically encodes a much smaller range of stimulus intensities. Likewise, the collective properties of social contact networks determine when a disease becomes an epidemic.

Recently, a framework to study the response of a network of coupled excitable systems to a stochastic stimulus of varying strength has been proposed. The Kinouchi-Copelli model1 considers the response of an undirected Erdős-Rényi random network of coupled discrete excitable systems to a stochastic external stimulus. A mean-field analysis of this model predicted1 that the maximum dynamic range (the range of stimuli over which the network’s response varies significantly) occurs in the critical regime where an excited neuron excites, on average, one other neuron. This criterion can be stated as the mean out-degree of the network being one, 〈dout〉=1, where the out-degree of a node dout is defined as the expected number of nodes an excited node excites in the next time step (Ref. 1 refers to this quantity as the branching ratio).

Subsequent studies explored this system on networks with power-law degree distributions and hypercubic lattice coupling, and with a varying number of loops,2, 4, 5, 6, 7 showing that the criterion for criticality based on the network mean degree does not hold for networks with a heterogeneous degree distribution. However, these studies (except Ref. 2) do not take into account features that are commonly found in real networks, such as community structure, correlations between the in- and out-degree of a given node, or correlations between the degrees of the two nodes at the ends of a given edge.8 Furthermore, they do not consider the effect of transmission delays or a distribution in the number of refractory states.

In a recent report,2 we presented an analysis of the Kinouchi-Copelli model that accounts for a complex network topology. We found that the general criterion for criticality is that the largest eigenvalue of the network adjacency matrix is one, λ=1, rather than 〈dout〉=1. While this improved criterion successfully takes into account various structural properties of networks, our analysis did not address the effect of delays or multiple refractory states, and was based on perturbative approximations to the network response. In this paper, we will extend the results of Ref. 2 by developing a nonperturbative analysis that accounts for distributions in the transmission delays and number of refractory states.

This paper is organized as follows. In Sec. 2, we describe previous related work and the standard Kinouchi-Copelli model. In Sec. 3, we present the model to be analyzed and derive a governing equation for its dynamics. In Sec. 4, we present our main theoretical results. In Sec. 5, we apply our results to estimate the dynamic range of excitable networks. In Sec. 6, we present numerical experiments to validate our results. We discuss our results in Sec. 7.

BACKGROUND

In this section, we describe the Kinouchi-Copelli model1 and other relevant previous work. In order to focus on the effects of network topology, the dynamics of the excitable systems are taken to be as simple as possible. The model considers N coupled excitable elements. Each element i can be in one of m + 1 states, $x_i$. The state $x_i=0$ is the resting state, $x_i=1$ is the excited state, and there may be additional refractory states $x_i=2,3,\dots,m$. At discrete times t=0,1,… the states of the elements are updated as follows: (i) If element i is in the resting state, $x_i=0$, it can be excited by another excited element j, $x_j=1$, with probability $A_{ij}$, or independently by an external process with probability η; (ii) the elements that are excited or in a refractory state, $x_i\ge 1$, will deterministically make a transition to the next refractory state if one is available, or return to the resting state otherwise (i.e., $x_i\to x_i+1$ if $1\le x_i<m$, and $x_i\to 0$ if $x_i=m$).

For a given value of the external stimulation probability η, which is interpreted as the stimulus strength, the network response F is defined in Ref. 1 as

$$F=\langle f_t\rangle_t, \tag{1}$$

where $\langle\cdot\rangle_t$ denotes an average over time and $f_t$ is the fraction of excited nodes at time t. Of interest is the dependence of the response F(η) on the topology of the network encoded by the connection probabilities $A_{ij}$. In particular, it is found that, depending on the network A, the network response can be of three types:1, 2 quiescent, in which the network activity is zero for vanishing stimulus, $\lim_{\eta\to 0}F=0$; active, in which there is self-sustained activity for vanishing stimulus, $\lim_{\eta\to 0}F>0$; and critical, in which the response is still zero for vanishing stimulus but is characterized by sporadic long lasting avalanches of activity that cause a much slower decay in the response, compared with the quiescent case, as the stimulus is decreased. Recent experiments3 suggest that cultured and acute cortical slices operate naturally in the critical regime. Therefore, the network properties that characterize this regime are of particular importance.

In Ref. 1, the response F was theoretically analyzed as a function of the external stimulation probability, η, using a mean-field approximation in which connection strengths were considered uniform, $A_{ij}=\langle d\rangle/N$ for all i,j. It was shown that the critical regime is achieved at the value $\langle d\rangle=1$, with the network being quiescent (active) if $\langle d\rangle<1$ ($\langle d\rangle>1$). For more general networks (i.e., $A_{ij}$ not constant), $\langle d\rangle$ is defined as the mean degree $\langle d\rangle=\langle d^{in}\rangle=\langle d^{out}\rangle$, where $d_i^{in}=\sum_j A_{ij}$ and $d_i^{out}=\sum_j A_{ji}$ are the in- and out-degrees of node i, respectively, and $\langle\cdot\rangle$ is an average over nodes. Such critical branching processes result in avalanches of excitation with power-law distributed sizes. Cascades of neural activity with power-law size and duration distributions have been observed in brain tissue cultures,3, 9, 10, 11, 12 awake monkeys,10, 13 and anesthetized rats.14, 15, 16 While $\langle d\rangle=1$ successfully predicts the critical regime for Erdős-Rényi random networks,1 it does not result in criticality in networks with a more heterogeneous degree distribution.4, 7 Perhaps more importantly, previous theoretical analyses1, 4, 7 are not able to take into account features that are commonly found in real networks, such as community structure, correlations between the in- and out-degree of a given node, or correlations between the degrees of the two nodes at the ends of a given edge.8 We will generalize the mean-field criterion $\langle d\rangle=1$ to account for complex interaction topologies encoded in the matrix A as well as refractoriness and transmission delays.

GENERALIZED KINOUCHI-COPELLI MODEL

Description of the model

We will analyze a generalized version of the Kinouchi-Copelli model which includes possibly heterogeneous distributions of delays and refractory periods. The model is as follows:

There are N excitable elements, labeled i=1,…,N.

At discrete times t=0,1,…, each element i can be in one of $m_i+1$ states, $x_i^t\in\{0,1,\dots,m_i\}$. The state $x_i^t=0$ is the resting state, $x_i^t=1$ is the excited state, and there may be additional refractory states $x_i^t=2,3,\dots,m_i$.

If element i is in the resting state at time t, $x_i^t=0$, it can be excited in the next time step, $x_i^{t+1}=1$, by another excited element j with delay $\tau_{ij}$ (i.e., if $x_j^{t-\tau_{ij}}=1$) with probability $A_{ij}$, or independently by an external stimulus with probability η.

The elements that are excited or in a refractory state, $x_i^t\ge 1$, will deterministically make a transition to the next refractory state if one is available, or return to the resting state otherwise (i.e., $x_i^{t+1}=x_i^t+1$ if $1\le x_i^t<m_i$, and $x_i^{t+1}=0$ if $x_i^t=m_i$).

The coupling network, encoded by the matrix with entries Aij, is allowed to have complex topology.
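The update rules above can be sketched as a direct simulation. The following is a minimal illustration for small networks, not the authors' code: the function name `simulate`, the dense N×N random draw per step, and the convention that `tau[i, j]` is an integer delay on the link from j to i are all assumptions made for this sketch.

```python
import numpy as np

def simulate(A, m, tau, eta, T, seed=0):
    """Simulate the generalized model; return the fraction of excited nodes per step.

    A[i, j]   : probability that excited node j excites resting node i
    m[i]      : last refractory state of node i (states are 0..m[i])
    tau[i, j] : integer transmission delay on the link j -> i (assumed convention)
    """
    rng = np.random.default_rng(seed)
    N = A.shape[0]
    x = np.zeros(N, dtype=int)                       # all nodes start at rest
    # history[d] holds the excitation indicator d steps in the past
    history = np.zeros((int(tau.max()) + 1, N), dtype=bool)
    cols = np.arange(N)[None, :]
    f = np.empty(T)
    for t in range(T):
        history = np.roll(history, 1, axis=0)
        history[0] = (x == 1)
        f[t] = history[0].mean()
        # sigma[i, j] is True if node j was excited at time t - tau[i, j]
        sigma = history[tau, cols]
        fired_by_neighbor = (rng.random((N, N)) < A * sigma).any(axis=1)
        fired_by_stimulus = rng.random(N) < eta
        resting = (x == 0)
        x_next = np.where(x < m, x + 1, 0)           # advance or return to rest
        x_next[resting] = (fired_by_neighbor | fired_by_stimulus)[resting].astype(int)
        x = x_next
    return f
```

With η=1 and no coupling, each node cycles deterministically through its states, so the time average of `f` approaches 1/(1+m), which gives a quick sanity check of the update rule.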

Model dynamics

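By considering a large ensemble of realizations of the above stochastic process on the same network, we can define the probability that node i is in state x at time t as $p_i^t(x)$. The probabilities evolve in one time step by

$$p_i^{t+1}(1)=p_i^t(0)\,q_i^t, \tag{2}$$

$$p_i^{t+1}(2)=p_i^t(1), \tag{3}$$

$$\vdots$$

$$p_i^{t+1}(m_i)=p_i^t(m_i-1), \tag{4}$$

$$p_i^{t+1}(0)=p_i^t(0)\,(1-q_i^t)+p_i^t(m_i), \tag{5}$$

and we also have the normalization condition

$$\sum_{x=0}^{m_i}p_i^t(x)=1, \tag{6}$$

where $q_i^t$ in Eq. 2 is the rate of transitions from the ready to the excited state, given by

$$q_i^t=1-(1-\eta)\,E\Big[\prod_j\big(1-A_{ij}\,\sigma_j^{t-\tau_{ij}}\big)\Big], \tag{7}$$

where $\sigma_j^t$ is one if node j is excited at time t and zero otherwise, and E[·] denotes an ensemble average. Assuming that the neighbors of node i being excited are independent events, we obtain, letting $p_i^t\equiv p_i^t(1)$,

$$q_i^t=1-(1-\eta)\prod_j\big(1-A_{ij}\,p_j^{t-\tau_{ij}}\big). \tag{8}$$

We note that the assumption of independence is reasonable if there are few short loops in the network and has been successfully used in similar situations.17, 18 However, this assumption is violated if the number of bidirectional links is significant and, therefore, we will restrict our attention to purely directed networks. Inserting the expression above in Eq. 2, eliminating $p_i^t(j)$ in terms of $p_i^{t-j+1}$ for j=2,…,$m_i$, and using Eq. 6 to eliminate $p_i^t(0)$, we obtain the governing equation for the dynamics of $p_i^t$

$$p_i^{t+1}=\Big(1-\sum_{l=0}^{m_i-1}p_i^{t-l}\Big)\Big[1-(1-\eta)\prod_j\big(1-A_{ij}\,p_j^{t-\tau_{ij}}\big)\Big]. \tag{9}$$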

In the following, we will analyze the response of the network by studying solutions of this equation as a function of η.
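As an illustration of how such a governing equation can be explored numerically, the sketch below iterates the excitation probabilities forward in time. It assumes the map has the form $p_i^{t+1}=(1-\sum_{l=0}^{m_i-1}p_i^{t-l})[1-(1-\eta)\prod_j(1-A_{ij}p_j^{t-\tau_{ij}})]$, starts from zero activity, and uses the hypothetical name `iterate_mean_field`; none of these choices are prescribed by the text.

```python
import numpy as np

def iterate_mean_field(A, m, tau, eta, T):
    """Iterate the excitation probabilities for T steps and return the final p.

    A[i, j], m[i], tau[i, j] follow the model description; tau and m are integers.
    """
    N = A.shape[0]
    depth = max(int(tau.max()), int(m.max()) - 1) + 1
    hist = np.zeros((depth, N))                  # hist[d] stores p at time t - d
    cols = np.arange(N)[None, :]
    lags = np.arange(depth)[:, None]
    refractory_mask = lags < m[None, :]          # lag l counts for node i if l <= m_i - 1
    for _ in range(T):
        delayed = hist[tau, cols]                # delayed[i, j] = p_j at time t - tau_ij
        q = 1.0 - (1.0 - eta) * np.prod(1.0 - A * delayed, axis=1)
        p_rest = 1.0 - (hist * refractory_mask).sum(axis=0)
        p_new = p_rest * q
        hist = np.roll(hist, 1, axis=0)
        hist[0] = p_new
    return hist[0]
```

For an uncoupled node with m=1 the map reduces to p → η(1−p), whose fixed point η/(1+η) is a convenient check. Note that for η=1 the iteration started from zero does not settle but cycles, mirroring the deterministic cycling of the underlying model.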

ANALYSIS

In this section we study the solutions of Eq. 9 and the associated network response. In Sec. 4A, we develop a nonperturbative approximation to the steady state response of the network. In Sec. 4B, we analyze limiting cases of the steady-state response that give us additional qualitative insight. In Sec. 4C, we study the effect of a distribution in the transmission time delays on the time scale of relaxation to the steady state solutions. We then discuss in Sec. 4D how our results relate to previous work.

Steady-state response

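First, we will study steady-state solutions to Eq. 9. To find those, we set $p_i^t=p_i$ in Eq. 9, which becomes

$$p_i=(1-m_ip_i)\Big[1-(1-\eta)\prod_j(1-A_{ij}p_j)\Big]. \tag{10}$$

Proceeding as in Ref. 2, by assuming $A_{ij}p_j$ is small, we replace $\prod_j(1-A_{ij}p_j)$ by $\exp(-\sum_jA_{ij}p_j)$ to get

$$p_i=(1-m_ip_i)\Big[1-(1-\eta)\exp\Big(-\sum_jA_{ij}p_j\Big)\Big]. \tag{11}$$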

The assumption that Aijpj is small is motivated as follows. If the weights Aij are not very different from each other and each node has many incoming connections (such as in neural networks, where the number of synapses per neuron is estimated30 to be of the order of 10000), then near the onset of self-sustained activity, one should have ∑jAij~1 (the mean-field prediction of Ref. 1, which we refine here, states that the node average of ∑jAij is one at criticality), implying Aij is small. The quantity Aijpj is even smaller, especially for low levels of activity where pj is small.

To proceed further, we find it convenient to define an alternative network response as

$$S=\langle S^t\rangle_t, \tag{12}$$

where

$$S^t=\frac{\sum_{i,j}A_{ij}\,\sigma_j^t}{\sum_{i,j}A_{ij}}, \tag{13}$$

and $\sigma_j^t=1$ if node j is excited at time t and 0 otherwise. The variable $S^t$ can be interpreted as proportional to the number of excited nodes weighted by their out-degree $d_j^{out}=\sum_iA_{ij}$. In terms of the probabilities $p_i$, S is

$$S=\frac{\sum_{i,j}A_{ij}\,p_j}{\sum_{i,j}A_{ij}}, \tag{14}$$

and can be interpreted as the fraction of links that successfully transmit an excitation. This is analogous to the interpretation of F in Eq. 1 as the fraction of excited nodes. In principle, the definitions of $S^t$ and S preclude their use in comparing directly against commonly used measures of activity because knowledge of the matrix A is required to estimate them. However, we note that in all the numerical experiments discussed below, S and F were found to be nearly identical. To develop a nonperturbative approximation to S, we solve Eq. 11 for $p_i$ in terms of $\sum_jA_{ij}p_j$. Multiplying the resulting expression by $A_{ki}$ and summing over i, we obtain

$$\sum_iA_{ki}\,p_i=\sum_iA_{ki}\,\frac{1-(1-\eta)\exp\big(-\sum_jA_{ij}p_j\big)}{1+m_i\big[1-(1-\eta)\exp\big(-\sum_jA_{ij}p_j\big)\big]}. \tag{15}$$

Now, we use the fact that the largest eigenvalue of A, λ, is typically much larger than the second eigenvalue,19, 20 and thus Ap≈su, where s is a scalar to be determined and u is the right eigenvector of A corresponding to λ. The validity of this approximation will be discussed in Sec. 6C. With this substitution, the previous equation reduces to

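$$s\,u_k=\sum_iA_{ki}\,\frac{1-(1-\eta)e^{-su_i}}{1+m_i\big[1-(1-\eta)e^{-su_i}\big]}. \tag{16}$$

Noting that

$$S=\frac{\sum_{i,j}A_{ij}\,p_j}{\sum_{i,j}A_{ij}}\approx\frac{s\langle u\rangle}{\langle d\rangle},$$

where $\langle u\rangle=N^{-1}\sum_iu_i$, we substitute $s=S\langle d\rangle/\langle u\rangle$ into Eq. 16 yielding

$$\frac{S\langle d\rangle}{\langle u\rangle}\,u_k=\sum_iA_{ki}\,\frac{1-(1-\eta)\exp\big(-S\langle d\rangle u_i/\langle u\rangle\big)}{1+m_i\big[1-(1-\eta)\exp\big(-S\langle d\rangle u_i/\langle u\rangle\big)\big]},$$

which may now be summed over k, simplified, and solved for S, yielding the scalar equation

$$S=\frac{1}{\langle d\rangle}\left\langle d^{out}\,\frac{1-(1-\eta)\exp\big(-S\langle d\rangle u/\langle u\rangle\big)}{1+m\big[1-(1-\eta)\exp\big(-S\langle d\rangle u/\langle u\rangle\big)\big]}\right\rangle. \tag{17}$$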

We note that in the notation above, the outer average 〈·〉 corresponds to a sum over the index i in Eq. 16. Given the adjacency matrix A, Eq. 17 can be solved numerically to obtain the response as a function of η. We call Eq. 17 the “nonperturbative approximation” because its derivation does not rely on a perturbative truncation of the product term of Eq. 10, and we will numerically test its validity in Sec. 6, where we will find that Eq. 17 can be a good approximation for all values of η. In order to gain theoretical insight into how some features of the network topology and the distribution of the number of refractory states affect the response, we will use Eq. 17 to obtain analytical expressions for the response in various limits.
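A scalar self-consistency equation of this kind can be solved by simple fixed-point iteration. The sketch below assumes the equation has the form $S=\langle d\rangle^{-1}\langle d^{out}g/(1+mg)\rangle$ with $g=1-(1-\eta)\exp(-S\langle d\rangle u/\langle u\rangle)$; the helper names, the dense eigendecomposition (suitable only for small matrices), and the choice to start the iteration from S=1 so that it descends to the largest solution are assumptions of this example.

```python
import numpy as np

def leading_eig(M):
    """Largest (real part) eigenvalue of M and its right eigenvector, made nonnegative."""
    vals, vecs = np.linalg.eig(M)
    k = np.argmax(vals.real)
    return vals[k].real, np.abs(vecs[:, k].real)

def response_rhs(S, eta, d_out, u, m):
    """Right-hand side of the assumed scalar equation for the response S."""
    d_mean = d_out.mean()
    g = 1.0 - (1.0 - eta) * np.exp(-S * d_mean * u / u.mean())
    return np.mean(d_out * g / (1.0 + m * g)) / d_mean

def solve_response(A, m, eta, tol=1e-12, max_iter=100000):
    """Iterate S <- rhs(S) from S = 1; the right-hand side is increasing in S."""
    _, u = leading_eig(A)
    d_out = A.sum(axis=0)                  # d_j^out = sum_i A_ij
    S = 1.0
    for _ in range(max_iter):
        S_next = response_rhs(S, eta, d_out, u, m)
        converged = abs(S_next - S) < tol
        S = S_next
        if converged:
            break
    return S
```

Convergence is geometric away from the critical point but becomes slow when λ is close to 1 and η is very small, in which case a bracketing root finder may be preferable.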

Perturbative approximations

While the nonperturbative approximation developed in the last section provides information for all ranges of stimulus, it is useful to consider perturbative approximations, for example, to determine the transition point from quiescent to active behavior. We will obtain an approximation to S which is valid for small η and S. To do this, we expand the right hand side of Eq. 16 to second order in s and first order in η (as we will see, expanding to second order in s is necessary to treat the η=0 case) obtaining

$$s\,u_k=\sum_iA_{ki}\Big[\eta+s\,u_i-\Big(m_i+\frac12\Big)s^2u_i^2\Big]. \tag{18}$$
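The expansion can be checked term by term. As a sketch, write the summand of the steady-state relation as $g_i/(1+m_ig_i)$ with $g_i=1-(1-\eta)e^{-su_i}$ (the shorthand $g_i$ is introduced here for illustration only) and drop terms of order $\eta s$ and higher:

```latex
\begin{aligned}
g_i &= 1-(1-\eta)\Big(1-s u_i+\tfrac12 s^2u_i^2-\dots\Big)
     \approx \eta+s u_i-\tfrac12 s^2u_i^2,\\
\frac{g_i}{1+m_i g_i} &\approx g_i-m_i g_i^2
     \approx \eta+s u_i-\Big(m_i+\tfrac12\Big)s^2u_i^2 .
\end{aligned}
```

The combination $m_i+\tfrac12$ that appears in the quadratic term is what carries the dependence on refractoriness into the small-stimulus results.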

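Multiplying by the left eigenvector entry $v_k$ and summing over k we obtain, using $\sum_kA_{ki}v_k=\lambda v_i$ and rearranging,

$$\eta\,\langle v\rangle=s\,(\lambda^{-1}-1)\langle uv\rangle+s^2\Big\langle\Big(m+\frac12\Big)vu^2\Big\rangle. \tag{19}$$

In terms of $S=s\langle u\rangle/\langle d\rangle$, this equation becomes

$$\eta=S\,\frac{(\lambda^{-1}-1)\langle d\rangle\langle uv\rangle}{\langle u\rangle\langle v\rangle}+S^2\,\frac{\langle d\rangle^2\big\langle(m+\frac12)vu^2\big\rangle}{\langle u\rangle^2\langle v\rangle}. \tag{20}$$

To find the transition from no activity, S=0, to self-sustained activity, S>0, for vanishing stimulus, we let η→0+ in the previous equation to find

$$S=\begin{cases}0, & \lambda<1,\\[1ex] \dfrac{(1-\lambda^{-1})\,\langle u\rangle\langle uv\rangle}{\langle d\rangle\big\langle(m+\frac12)vu^2\big\rangle}, & \lambda\ge 1,\end{cases} \tag{21}$$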

where the solution S=0 was chosen for λ<1 to satisfy $S\ge 0$. This equation shows that the transition from a quiescent network to one with self-sustained activity has, if the response is interpreted as an order parameter, the signatures of a second order (continuous) phase transition. In addition, while the eigenvalue λ determines when this transition occurs (at λ=1), its associated eigenvectors u and v determine the significance of the observed response past the transition. If $\langle vu^2\rangle\gg\langle uv\rangle\langle u\rangle$, for example, the response might be initially too small to be of importance. One aspect that was not considered in Ref. 2 is how the distribution of refractory periods affects the response. If the refractory periods $m_i$ are strongly positively correlated with the product $v_iu_i^2$, they can significantly increase the term $\langle mvu^2\rangle$ in the denominator, decreasing the response. This can be intuitively understood by noting that this amounts to preferentially increasing the refractory period of the nodes that are more likely to be active (as measured by the approximation $p_i\propto u_i$ valid close to the critical regime), thus removing them from the available nodes for longer times.

The response for small stimulus and response in Eq. 21 agrees with the perturbative expression derived for F directly from Eq. 9 in Ref. 2 if mi=1 and λ→1 and confirms the findings in Ref. 2 that the critical point is determined by λ=1. Henceforth, we will refer to networks with λ<1 as quiescent, to networks with λ>1 as active, and to networks with λ=1 as critical.

The behavior of the system for high stimulation is also of interest. When η=1, node i cycles deterministically through its $m_i+1$ available states, and so $p_i=(1+m_i)^{-1}$. The question is how this behavior changes as η decreases from 1. This information can be extracted directly from Eq. 11 by linearizing around the solution η=1 and $p_i=(1+m_i)^{-1}$. Setting η=1-δη and $p_i=(1+m_i)^{-1}-\delta p_i$ with δη≪1 and $\delta p_i\ll 1$, we obtain

$$\delta p_i=\frac{\delta\eta}{(1+m_i)^2}\exp\Big(-\sum_j\frac{A_{ij}}{1+m_j}\Big). \tag{22}$$

Thus, the response of the nodes to a decreased stimulus depends on a combination of their refractory period (which determines the prefactor $(1+m_i)^{-2}$) and decays exponentially with the number of expected excitations from its neighbors. In terms of the aggregate response S, Eq. 22 becomes, after multiplying by $A_{ki}$, summing over k and i, and normalizing,

$$\delta S=\frac{\delta\eta}{\langle d\rangle}\left\langle\frac{d_i^{out}}{(1+m_i)^2}\exp\Big(-\sum_j\frac{A_{ij}}{1+m_j}\Big)\right\rangle. \tag{23}$$

Dynamics near the critical regime

As in Ref. 2, we will study the transition from no activity to self-sustained activity in the limit of vanishing stimulus by linearizing Eq. 9 around $p_i^t=0$ for η=0. Assuming $p_i^t$ is small, we obtain to first order

$$p_i^{t+1}=\sum_jA_{ij}\,p_j^{t-\tau_{ij}}. \tag{24}$$

Assuming exponential growth, $p_i^t=\alpha^tw_i$, we obtain

$$\alpha\,w_i=\sum_jA_{ij}\,\alpha^{-\tau_{ij}}\,w_j. \tag{25}$$

The critical regime, determined as the boundary between no activity and self-sustained activity as η→0, i.e., between the solution $p_i^t=0$ being stable and unstable, can be found by setting α=1, obtaining

$$w_i=\sum_jA_{ij}\,w_j. \tag{26}$$

This implies that the onset of criticality occurs when λ=1 and in this case w=u. This conclusion agrees with the results in Ref. 2 and those in Sec. 4B. Although the critical regime is not affected by the presence of delays or refractory states, the rate of growth (decay) α of perturbations for the active (quiescent) regime depends on the distribution of delays. To illustrate this, we consider the case when the network deviates slightly from the critical state, so that the largest eigenvalue of A is λ=1+δλ and has right eigenvector u, Au=(1+δλ)u. Expecting the solution w to Eq. 25 to be close to u, we set $w_i=u_i+\delta u_i$ and α=1+μ, where the rate of growth μ is assumed to be small. Inserting these in Eq. 25, we get to first order

$$\mu\,u_i+\delta u_i=\delta\lambda\,u_i+\sum_jA_{ij}\,\delta u_j-\mu\sum_j\hat{A}_{ij}\,u_j, \tag{27}$$

where the entries of the matrix $\hat{A}$ are given by $\hat{A}_{ij}=A_{ij}\tau_{ij}$. To eliminate the term δu, we left-multiply by the left eigenvector of A, v, satisfying $v^TA=(1+\delta\lambda)v^T$. Canceling small terms, we get

$$\mu=\frac{\delta\lambda}{1+v^T\hat{A}u/v^Tu}. \tag{28}$$

If the delay is constant, $\tau_{ij}=\tau$, we obtain

$$\mu=\frac{\delta\lambda}{1+\tau}, \tag{29}$$

and in this particular case, a more general result can be obtained from Eq. 25, which implies $\alpha=\lambda^{1/(1+\tau)}$.
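The dependence of the growth rate on the delays can be illustrated numerically. The sketch below assumes the first-order result takes the form $\mu=\delta\lambda\,v^Tu/(v^Tu+v^T\hat{A}u)$ with $\hat{A}_{ij}=A_{ij}\tau_{ij}$; the function names and the use of a dense eigendecomposition are choices made for this example only.

```python
import numpy as np

def leading_eig(M):
    """Largest (real part) eigenvalue of M and its right eigenvector, made nonnegative."""
    vals, vecs = np.linalg.eig(M)
    k = np.argmax(vals.real)
    return vals[k].real, np.abs(vecs[:, k].real)

def growth_rate(A, tau):
    """First-order growth rate mu of small perturbations near the critical state."""
    lam, u = leading_eig(A)          # right eigenvector: A u = lam u
    _, v = leading_eig(A.T)          # left eigenvector:  v^T A = lam v^T
    A_hat = A * tau                  # elementwise product A_ij * tau_ij
    return (lam - 1.0) * (v @ u) / (v @ u + v @ A_hat @ u)
```

For a constant delay τ this evaluates to δλ/(1+τλ), which agrees with δλ/(1+τ) to first order in δλ and with the exact rate $\lambda^{1/(1+\tau)}-1$ when δλ is small.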

Relation to previous results

Here, we will briefly discuss how our results for the critical regime agree with previous work in particular cases. Correlations between degrees at the ends of a randomly chosen edge (assortative mixing by degree8) can be measured by the correlation coefficient

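$$\rho=\frac{\langle d_i^{in}\,d_j^{out}\rangle_e}{\langle d_i^{in}\rangle_e\,\langle d_j^{out}\rangle_e}, \tag{30}$$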

defined in Ref. 19, with $\langle\cdot\rangle_e$ denoting an average over edges. The correlation coefficient ρ is greater than one if the correlation between the in-degree and out-degree of nodes at the ends of a randomly chosen edge is positive, less than one if the correlation is negative, and one if there is no correlation. For a large class of networks, the largest eigenvalue may be approximated19 by $\lambda\approx\rho\langle d^{in}d^{out}\rangle/\langle d\rangle$. In the absence of correlations, when ρ=1, the largest eigenvalue can be approximated by $\lambda\approx\langle d^{in}d^{out}\rangle/\langle d\rangle$. If there are no correlations between $d^{in}$ and $d^{out}$ at a node (node degree correlations) or if the degree distribution is sufficiently homogeneous, then $\langle d^{in}d^{out}\rangle\approx\langle d\rangle^2$ and the approximation reduces to $\lambda\approx\langle d\rangle$. This is the situation that was considered in Ref. 1, and thus they found that the critical regime was determined by $\langle d\rangle=1$. In the case of Refs. 4, 7, with more heterogeneous degree distributions, $\lambda\approx\langle d^{in}d^{out}\rangle/\langle d\rangle$ applied, which accounts for their observation that the critical regime did not occur at $\langle d\rangle=1$.
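The degree-based estimate of the largest eigenvalue is easy to compare against a direct computation. The following sketch uses hypothetical helper names and a dense eigenvalue solver, so it is meant for small test matrices rather than the networks studied here.

```python
import numpy as np

def largest_eigenvalue(A):
    """Largest real part among the eigenvalues of A (the Perron root if A >= 0)."""
    return np.max(np.linalg.eigvals(A).real)

def degree_estimate(A):
    """Estimate <d_in d_out> / <d>, i.e., the approximation for rho = 1."""
    d_in = A.sum(axis=1)             # d_i^in  = sum_j A_ij
    d_out = A.sum(axis=0)            # d_i^out = sum_j A_ji
    return np.mean(d_in * d_out) / np.mean(d_in)
```

For a rank-one matrix $A_{ij}\propto a_ib_j$ the estimate coincides with the true eigenvalue, while for a matrix with homogeneous degrees both reduce to the mean degree. Deviations between the two on a given network indicate edge degree correlations of the kind measured by ρ.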

The situation encountered here is analogous to what occurs in the analysis of the transition to chaos in Boolean networks18 and in the transition to synchronization in networks of coupled oscillators,21 where it is found that, instead of the mean degree, the largest eigenvalue is what determines the transition between different collective dynamical regimes.

Other previous studies in random networks have also investigated spectral properties of A to gain insight on the stability of dynamics in neural networks22 and have shown how λ could be changed by modifying the distribution of synapse strengths.23 In addition, it has been shown recently that the largest eigenvalues in the spectrum of the connectivity matrix may affect learning efficiency in recurrent chaotic neural networks.24

DYNAMIC RANGE

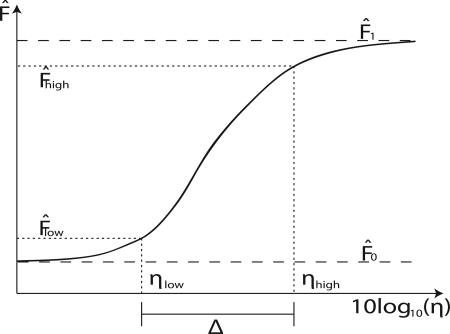

We have studied the response of the network to stimuli of varying strengths. In particular, we studied in detail the response close to the critical regime. As has been previously noted,1 this regime corresponds to the point where the dynamic range Δ is maximized. In our context, the dynamic range can be defined as the range of stimulus η that results in significant changes in response S. Typically, the dynamic range is given in decibels and measured using arbitrary thresholds just above the baseline ($S_0$) and below the saturation ($S_{\max}$) values, respectively, as illustrated in Fig. 1 for the active network case ($S_0>0$). More precisely, the values of stimulus $\eta_{low}$ ($\eta_{high}$) corresponding to a low (high) threshold of activity $S_{low}$ ($S_{high}$) are found and the dynamic range is calculated as

$$\Delta=10\log_{10}\frac{\eta_{high}}{\eta_{low}}. \tag{31}$$

Using our approximations to the response as a function of stimulus η, we can study the effect of network topology on the dynamic range. The first approximation is based on the analysis of Sec. 4A. Using Eq. 17, the values of η corresponding to a given stimulus threshold can be found numerically and the dynamic range calculated.
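Given a response curve sampled at a set of stimulus values, the dynamic range can be read off by inverting the curve at the two thresholds. The sketch below assumes Δ is ten times the base-10 logarithm of the ratio of the two stimulus values, that the sampled response increases with η, and that linear interpolation in log η is accurate enough; the function name and arguments are illustrative.

```python
import numpy as np

def dynamic_range(eta, response, r_low, r_high):
    """Dynamic range in dB between the stimuli that produce r_low and r_high.

    eta and response must be increasing arrays of equal length.
    """
    log_eta = np.log10(eta)
    # invert the response curve by interpolating log10(eta) against the response
    log_eta_low = np.interp(r_low, response, log_eta)
    log_eta_high = np.interp(r_high, response, log_eta)
    return 10.0 * (log_eta_high - log_eta_low)
```

As a check, a response that scales as $\eta^{1/2}$ with thresholds $10^{-2}$ and 1 spans stimuli from $10^{-4}$ to 1, that is, 40 dB, whereas a linear response with the same thresholds spans only 20 dB.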

Figure 1.

Schematic illustration of the definition of dynamic range in the active network case. The baseline and saturation values are $S_0$ and $S_{\max}$, respectively. Two threshold values, denoted by $S_{low}$ and $S_{high}$, respectively, are used to determine the range of values of η defined as the dynamic range Δ.

Another approximation that gives theoretical insight into the effects of network topology and the distribution of refractory states on the dynamic range can be developed as in Ref. 2, by using the perturbative approximations developed in Sec. 4B. In order to satisfy the restrictions under which those approximations were developed, we will use a small lower threshold, $S_{low}=F^*\ll 1$, and $S_{high}=S_{\max}$. Taking the upper threshold to be $S_{\max}$ is reasonable if the response decreases quickly from $S_{\max}$, so that the effect of the network on the dynamic range is dependent mostly on its effect on $\eta_{low}$. Whether or not this is the case can be established numerically or theoretically from Eq. 22, and we find it is so in our numerical examples when the $m_i$ are not large (see Fig. 5). Taking $\eta_{high}=1$ and $\eta_{low}=\eta^*$ we have

$$\Delta=-10\log_{10}\eta^*. \tag{32}$$

The stimulus level $\eta^*$ can be found in terms of $F^*$ by solving Eq. 20 and keeping the leading order terms in $F^*$, obtaining

$$\eta^*\approx\begin{cases}F^*\,\dfrac{(1-\lambda)\langle d\rangle\langle uv\rangle}{\lambda\langle u\rangle\langle v\rangle}, & \lambda<1,\\[2ex] (F^*)^2\,\dfrac{\langle d\rangle^2\big\langle(m+\frac12)vu^2\big\rangle}{\langle u\rangle^2\langle v\rangle}, & \lambda=1.\end{cases} \tag{33}$$

This equation shows that as η→0 the response scales as $S\sim\eta$ for the quiescent curves (λ<1) and as $S\sim\eta^{1/2}$ for the critical curve (λ=1). We highlight that these scaling exponents for both the quiescent and critical regimes are precisely those derived in Ref. 1 for random networks, attesting to their robustness to the generalization of the criticality criterion to λ=1, the inclusion of time delays, and heterogeneous refractory periods. This is particularly important because these exponents could be measured experimentally.1 Using this approximation for $\eta^*$ in Eq. 32, we obtain an analytical expression for the dynamic range valid when the lower threshold $F^*$ is small. Of particular theoretical interest is the maximum achievable dynamic range $\Delta_{\max}$ for a given topology. It can be found by setting λ=1 in Eq. 33 and inserting the result in Eq. 32, obtaining

$$\Delta_{\max}=\Delta_0-10\log_{10}\frac{\langle d\rangle^2\big\langle(m+\frac12)vu^2\big\rangle}{\langle u\rangle^2\langle v\rangle}, \tag{34}$$

where $\Delta_0=-20\log_{10}(F^*)>0$ depends on the threshold $F^*$ but is independent of the network topology or the distribution of refractory states. The second term of Eq. 34 suggests that a positive correlation between refractoriness m and eigenvector entries u and v will decrease dynamic range, whereas a negative correlation will increase dynamic range. This prediction may be investigated in more depth in future publications. The second term of Eq. 34 also suggests that an overall increase in the number of refractory states will lead to an overall decrease in dynamic range. This is in contrast with the result of Ref. 25, which found that there exists an m>0 which maximizes dynamic range in two-dimensional arrays of neurons. We note that the assumption of independence used in deriving Eq. 8 is not valid for a two-dimensional array.

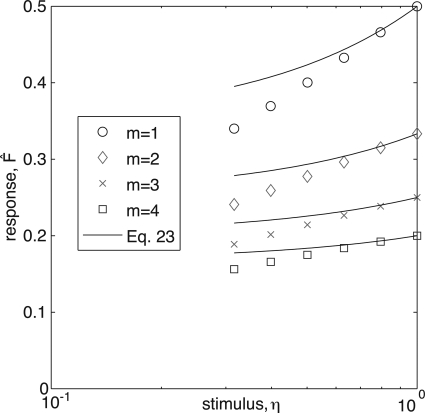

Figure 5.

(Color online) Simulation data (symbols) compare reasonably with the prediction of the perturbative approximation close to saturation, Eq. 23, for different refractory states. δη was chosen to be the difference between $\eta=10^{0}$ and $\eta=10^{-0.1}$, corresponding to the two rightmost data points of each simulation.

NUMERICAL EXPERIMENTS

We tested our theoretical results from Sec. 4 by comparing their predictions to direct simulation of our generalized Kinouchi-Copelli model described in Sec. 3. Simulation parameters were chosen specifically to test the validity of Eqs. 17, 23, 28, 34. All simulations, except where indicated, were run with $N=10^4$ nodes for $T=10^5$ time steps, over a range of η from $10^{-5}$ to 1.

Construction of networks

We created networks in three steps: first, we created binary directed networks, Aij∈{0,1}, with particular degree distributions as described below, forbidding bidirectional links and self-connections; second, we assigned a weight to each link, drawn from a uniform distribution between 0 and 1; third, we calculated λ for the resulting network and multiplied A by a constant to rescale λ to the targeted eigenvalue.27 The two classes of topology considered for simulations were directed Erdős-Rényi random networks and directed scale-free networks with power law degree distributions, where we set the power law exponent to γ=2.5, and enforced a minimum degree of 10 and a maximum degree of 1000. Erdős-Rényi networks28 were constructed by linking any pair of nodes with probability p=15∕N, and scale-free networks were constructed by first generating in-degree and out-degree sequences drawn from the power law distribution described above, assigning those target degrees to N nodes, and then connecting them using the configuration model.29 In some cases, an additional fourth step was used to change the assortativity coefficient ρ, defined in Eq. 30, of a critical (i.e., with λ=1) scale-free network, making this network more assortative (disassortative) by choosing two links at random, and swapping their destination connections only if the resulting swap would increase (decrease) ρ. This swapping allows for the degree of assortativity (and thereby, λ) to be modified while preserving the network’s degree distribution.8, 19
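The three construction steps can be sketched for the Erdős-Rényi case as follows. This is an illustration under stated assumptions rather than the procedure actually used: the function name `build_network` is hypothetical, bidirectional pairs are resolved by always dropping one fixed member of the pair (the text does not say how such links were forbidden), and a dense eigenvalue solver is used, which is practical only for networks much smaller than $N=10^4$.

```python
import numpy as np

def build_network(N, mean_degree, target_lambda, seed=0):
    """Weighted directed random network rescaled to a target largest eigenvalue."""
    rng = np.random.default_rng(seed)
    # step 1: binary directed links, each present with probability mean_degree / N
    mask = rng.random((N, N)) < mean_degree / N
    np.fill_diagonal(mask, False)                 # forbid self-connections
    both = np.triu(mask & mask.T, k=1)            # pairs linked in both directions
    mask &= ~both                                 # assumption: keep only one direction
    # step 2: link weights drawn uniformly between 0 and 1
    A = mask * rng.random((N, N))
    # step 3: rescale so that the largest eigenvalue equals the target
    lam = np.max(np.linalg.eigvals(A).real)
    return A * (target_lambda / lam)
```

For example, `build_network(200, 15, 1.0)` returns a critical network in the sense used here. For large sparse networks, a sparse matrix representation and an iterative eigenvalue solver would be the natural replacement for the dense calls.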

Results of numerical experiments

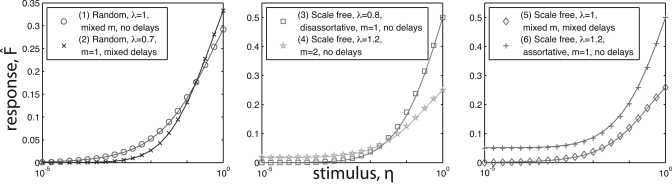

We first demonstrate the ability of the nonperturbative approximation to predict aggregate network behavior in a variety of conditions. Fig. 2 shows several simulations (symbols) with the predicted behavior of Eq. 17 overlaid (lines). The cases considered in Fig. 2 include different combinations of topology, assortativity, largest eigenvalue λ, delays, and number of refractory states. The number of refractory states $m_i$ was either constant, $m_i=m$, or chosen randomly with equal probability among $\{1,2,\dots,m_{\max}\}$. Similarly, the delays $\tau_{ij}$ were either constant, $\tau_{ij}=\tau$, or chosen with uniform probability among $\{0,1,\dots,\tau_{\max}\}$. The predictions capture the behavior of the simulations, with particularly good agreement for networks with neutral assortativity, ρ=1. In the assortative and disassortative cases shown [cases (3) and (6) in Fig. 2], low and high stimulus simulations are well captured by the prediction, while a small deviation can be observed for intermediate values of η [e.g., in case (6) in the right panel of Fig. 2, the crosses have a small systematic error around $\eta=10^{-2}$]. In Sec. 6C, we will discuss why Eq. 17, which assumes Ap≈su, works so well. In particular, we will discuss why this approximation is expected to work well for small and large η.

Figure 2.

(Color online) Semi-log plots of data from six simulations (symbols) testing a variety of situations in order to show the robustness of Eq. 17 (lines) to various sets of conditions: (1) random network; λ=1; mixed refractory states, $m_i\in\{1,2,3\}$; no delays; (2) random network; λ=0.7; no refractory states, $m_i=m=1$; mixed delays, $\tau_{ij}\in\{0,1,2,3\}$; (3) scale-free network; λ=0.8; disassortative rewiring; no refractory states, $m_i=m=1$; no delays; (4) scale-free network; λ=1.2; uniform refractory states, $m_i=m=2$; no delays; (5) scale-free network; λ=1.0; mixed refractory states, $m_i\in\{1,2,3,4\}$; mixed delays, $\tau_{ij}\in\{0,1,2\}$; (6) scale-free network; λ=1.2; uniform refractory states, $m_i=m=1$; no delays. The plots show excellent agreement between the prediction and simulation at many points in parameter space.

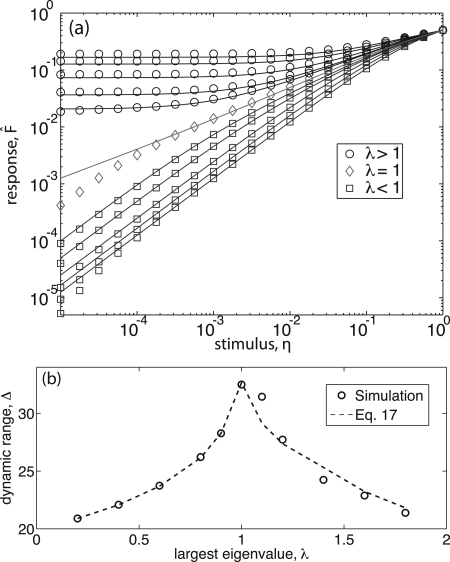

As reported previously,2 we find in our simulations that networks with λ=1 show critical dynamics and exhibit maximum dynamic range. This applies to random networks, scale-free networks, and scale-free networks with modified assortativity. Networks with λ<1 exhibit no self-sustained activity in the absence of stimulus, whereas networks with λ>1 exhibit self-sustained activity. Furthermore, in all numerical experiments, with distributed refractory states and various time delays, the criticality of networks at λ=1 was preserved as predicted above. Typical results in Fig. 3a show the response as a function of stimulus η for scale-free networks with γ=2.5, refractory states $m_i=m=1$, and no time delays, with λ ranging from 0.2 to 1.8. Each symbol in the figure is generated by a single simulation on a single network realization. Lines show the response obtained from numerical solution of Eq. 17. We note that the simulations with λ=1 show a deviation from the theoretically predicted critical curve for values of η less than $10^{-4}$. We believe this is because, for such low values of η, a much longer time average than the one we use would be required. For example, with $\eta=10^{-5}$ we expect that, using $10^5$ time steps, a given node will not be excited externally with probability $e^{-1}\approx 0.37$. This might be especially important in the critical regime, where activity is mostly determined by sporadic avalanches propagated by hubs.

Figure 3.

(Color online) Simulation data for scale-free networks of $10^4$ nodes (symbols) and numerical solution of Eq. 17 (lines). (a) Stimulus vs response predictions agree well in the regime where Ap≈su, as discussed in Sec. 6C. Eigenvalues range from 0.2 to 0.9 (blue squares), exactly 1.0 (red diamonds), and from 1.1 to 1.8 (black circles). (b) Dynamic range predictions capture maximization at λ=1 as well as the non-critical behavior.

Figure 3b shows the dynamic range Δ calculated using F*=10⁻² directly from the simulation (circles) and using Eq. 17 (dashed line). As demonstrated in Ref. 2, the dynamic range is maximized when λ=1. We note that in Ref. 2, the dynamic range was estimated using a perturbative approximation and, as a consequence, our prediction had a systematic error in the λ>1 regime [cf. Fig. 1b in Ref. 2]. The nonperturbative approximation Eq. 17 results in a much better prediction.

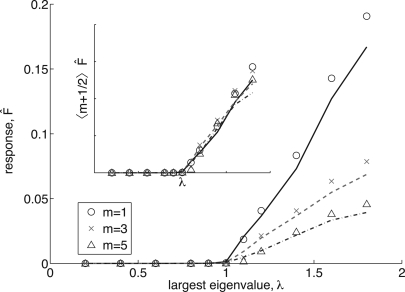

Figure 4 shows the transition that occurs at λ=1 when η→0 for experiments with a varying number of refractory states, m = 1, 3, and 5. Symbols indicate the results of direct simulation using η=10⁻⁵, and the lines correspond to Eq. 17, which describes the simulation results well. We found that for this particular network, the perturbative approximation (21) only gives correct results very close to the transition at λ=1, and its quantitative predictions degrade quickly away from it. [A similar situation can be observed in Fig. 2b of Ref. 2.] However, we found that the perturbative approximation is still useful to predict the effect of the refractory states. Equation 21 predicts that the response should scale as 〈m+1∕2〉⁻¹. The inset shows how, after multiplication by 〈m+1∕2〉, the response curves collapse into a single curve. Figure 4 also depicts a linear relationship between F_{η→0} and λ for λ>1. Making a connection with the theory of nonequilibrium phase transitions, in which the order parameter scales as F_{η→0} ∼ (λ−λc)^β, we derive λc=1 and the critical exponent β=1.

Figure 4.

(Color online) Phase transitions of F_{η→0} for different numbers of refractory states m, for simulations (symbols) and Eq. 17 (lines). Inset: Eq. 21 predicts that phase transitions should scale by 〈m+1∕2〉⁻¹, confirmed by rescaling data from the larger plot accordingly.
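To make the rescaling concrete, the following sketch evaluates the factor 〈m+1∕2〉 for the three uniform cases shown in Fig. 4 and for one mixed case. It assumes that the angle brackets denote an average of mi+1∕2 over nodes; the helper name `refractory_factor` is hypothetical.

```python
import numpy as np

def refractory_factor(m_values):
    """Return <m + 1/2>, taken here as the mean of m_i + 1/2 over nodes."""
    return float(np.mean(np.asarray(m_values, dtype=float) + 0.5))

# Uniform refractory states, as in the three curves of Fig. 4.
for m in (1, 3, 5):
    factor = refractory_factor([m])
    print(f"m = {m}: <m + 1/2> = {factor:.1f}, "
          f"predicted relative response = {1.0 / factor:.3f}")

# A mixed case: equal numbers of nodes with m_i = 1, 2, and 3.
print("mixed {1,2,3}:", refractory_factor([1, 2, 3]))
```

Multiplying each response curve by its own factor is what produces the collapse shown in the inset.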

Figure 5 shows the response close to η=1 calculated for various values of m from the simulation (symbols), and from Eq. 23 (solid lines). Equation 23 describes well the slope of the response close to η=1. An important observation is that as m grows, the relative slope at η=1 decreases. Therefore, if the typical refractory period m is large, the response saturates [e.g., reaching 90% of its maximum] for smaller values of η.

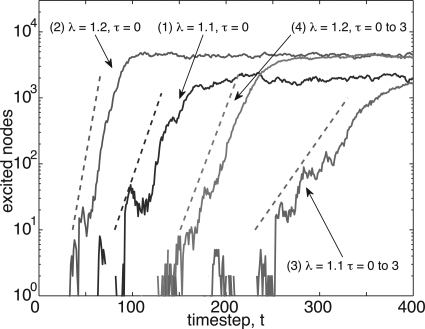

Transmission delays, as in the analogous system of gene regulatory networks,18 do not modify steady state response. However, delays modify the time scale of relaxation to steady state. We quantified this modification in the growth rate in Eq. 28, which determines the growth rate of perturbations from an almost critical quiescent network in terms of a matrix determined from the distribution of delays. In Fig. 6, we show time series (solid lines) for the initial growth in the number of excited nodes within four active networks with and without time delays. For comparison, we show the slope that results from the corresponding growth rates obtained from Eq. 28 (dotted lines). The timesteps shown on the horizontal axis have been shifted to display multiple results together, but not rescaled or distorted. As shown in Fig. 6, Eq. 28 is helpful in quantifying the growth rate of signals within the network in the regime during which growth is exponential. In this limited regime, simulation data compare well with time series of excitations and capture the growth rate’s dependence on eigenvalue and time delays. We note here that Eq. 28 predicts the growth rate of , and, therefore, the growth rate of both ft and . Here, we have chosen to show the growth in the number of excited nodes (proportional to ft) which is more experimentally accessible than .

Figure 6.

(Color online) Time series (solid lines) for initial growth of signals within four active networks, with growth rates from Eq. 28 shown (dotted lines). The timesteps shown on the horizontal axis have been shifted to display multiple results together, but not rescaled or distorted. In less than 100 timesteps, all networks tested exhausted the exponential growth regime. N = 100 000 nodes and η=10⁻⁶, for (1) λ=1.1, τ=0, (2) λ=1.2, τ=0, (3) λ=1.1, τ∈{0,1,2,3}, and (4) λ=1.2, τ∈{0,1,2,3}.
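One way to compare a simulated time series with a predicted growth rate is to fit a straight line to the logarithm of the excitation counts over the exponential regime. The sketch below is illustrative only: the time series is synthetic, the per-step growth factor of 1.1 is an arbitrary stand-in rather than a value obtained from Eq. 28, and `fit_growth_rate` is a hypothetical helper.

```python
import numpy as np

def fit_growth_rate(counts):
    """Least-squares slope of log(counts) versus time step."""
    t = np.arange(len(counts))
    slope, _intercept = np.polyfit(t, np.log(counts), 1)
    return slope

# Synthetic stand-in for a simulated time series: pure exponential growth
# with a hypothetical per-step growth factor of 1.1.
true_rate = np.log(1.1)
counts = 5.0 * np.exp(true_rate * np.arange(40))

fitted_rate = fit_growth_rate(counts)
print(f"fitted rate: {fitted_rate:.6f}, true rate: {true_rate:.6f}")
```

With real simulation data, the fit would need to be restricted to the window in which growth is still exponential, before saturation sets in.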

Validity of the approximation Ap ∝ u

Here, we will address the question of the validity of our approximation Ap∝u, which was used to develop the nonperturbative approximation Eq. 17. First, we note that when η and p are small, the linear analysis of Sec. 4C and Ref. 2 shows that p∝u, and, therefore, the approximation Ap∝u is justified in this regime. As η grows, and for situations where p is not small, one should expect deviations of p from being parallel to u. However, we note that because pi measures how active node i is, it should still be highly correlated with the in-degree of node i. Because in many situations the in-degree is also correlated with the entries of the eigenvector u, we expect that in those cases p remains correlated with u. After multiplication by A, the approximation can only become better. For the class of networks in which the ratio between the largest eigenvalue λ and the next largest eigenvalue scales as (which include Erdős-Rényi and other networks),20 we expect that Ap∝u should be a good approximation.
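The claim that multiplication by A can only improve the alignment with u can be illustrated numerically. The sketch below makes a simplifying assumption that differs from the directed networks studied here: it uses a symmetric (undirected) random adjacency matrix, for which the improvement is guaranteed because the leading eigenvalue has the largest magnitude and the eigenvectors are orthogonal. The network size, link density, and noise level are arbitrary choices.

```python
import numpy as np

def alignment(v, u):
    """Cosine of the angle between v and u (1.0 means exactly parallel)."""
    return abs(v @ u) / (np.linalg.norm(v) * np.linalg.norm(u))

rng = np.random.default_rng(0)
n = 200
# Hypothetical undirected random network: symmetric 0/1 adjacency matrix.
upper = np.triu(rng.random((n, n)) < 0.1, k=1).astype(float)
A = upper + upper.T

# Leading eigenvector u of A (eigh returns eigenvalues in ascending order).
eigvals, eigvecs = np.linalg.eigh(A)
u = eigvecs[:, -1]
if u.sum() < 0:
    u = -u  # fix the arbitrary sign so that entries are positive

# A vector p that is only roughly parallel to u: u plus positive noise.
p = u + 0.5 * u.mean() * rng.random(n)

cos_before = alignment(p, u)
cos_after = alignment(A @ p, u)
print(f"alignment of p with u:  {cos_before:.4f}")
print(f"alignment of Ap with u: {cos_after:.4f}")
```

For directed, non-symmetric matrices the same tendency is expected when the leading eigenvalue is well separated from the rest of the spectrum, but this sketch does not establish it.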

Another reason why the approximation Ap∝u works well outside the small-stimulus regime is that the errors introduced by this approximation vanish exactly when η=1. To see this, note that for η=1, because each node cycles deterministically through its mi+1 available states, we have pi=1∕(1+mi), which gives a response that agrees exactly with the result of setting η=1 in Eq. 17. Thus, even though the assumption Ap∝u may become less accurate as η grows, the importance of the error it introduces decreases and eventually vanishes at η=1.
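The deterministic cycling at η=1 is easy to verify directly. The sketch below follows a single node, assuming the state convention 0 = resting, 1 = excited, and 2 through mi = refractory, so that there are mi+1 states in total; the function name is illustrative.

```python
def excited_fraction(m, steps):
    """Fraction of time steps a node spends excited when eta = 1.

    States: 0 = resting, 1 = excited, 2..m = refractory (m + 1 states in total).
    """
    state = 0
    excited_steps = 0
    for _ in range(steps):
        if state == 0:
            state = 1          # eta = 1: a resting node is excited with certainty
        elif state < m:
            state += 1         # advance through the refractory states
        else:
            state = 0          # last state reached: return to rest
        excited_steps += state == 1
    return excited_steps / steps

for m in (1, 2, 3, 4):
    steps = 1000 * (m + 1)     # a whole number of cycles
    print(m, excited_fraction(m, steps), 1 / (1 + m))
```

For each m the measured fraction coincides with 1∕(1+m), independently of the network, which is why the network approximation plays no role at η=1.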

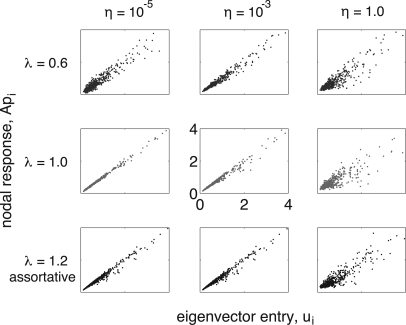

To illustrate how the assumption Ap≈su works in some particular examples, Fig. 7 compares normalized Api and ui for a variety of eigenvalues and stimulus levels. Good agreement between them (characterized by a high correlation) indicates that the assumption of Sec. 4A is valid, whereas more noisy agreement for some cases indicates that the assumption Ap∝u is invalid (although, as discussed above, this does not necessarily imply that the nonperturbative approach will fail). Low stimulus levels in quiescent networks (top left panel) show relatively low correlation for short simulations, but the correlation improves with more timesteps as relative nodal response increases at well connected nodes and decreases at poorly connected nodes. Assortative networks (bottom panels) show slightly lower correlation as well, corroborating the results shown in Fig. 2 where the predictive power of Eq. 17 is slightly diminished for the assortative network. As expected, correlation between Ap and u entries is worst at η=1 (right panels), but we reiterate that for η=1 this error does not affect the predictions of Eq. 17.

Figure 7.

(Color online) Plots of normalized Api vs sui for scale-free networks, with eigenvalues 0.6 (blue, top row), 1.0 (red, middle row), and 1.2 with assortative mixing (black, bottom row) at stimulus levels η=10⁻⁵, 10⁻³, and 1 for the left, middle, and right columns, respectively. Agreement is very good for critical and active cases, with more noise in the quiescent case due to less incoming stimuli over the duration of the simulation.

DISCUSSION

In this paper, we studied a generalized version of the Kinouchi-Copelli model in complex networks. We developed a nonperturbative treatment [Eq. 17] that allows us to find the response of the network for a given value of the stimulus given a matrix of excitation transmission probabilities A. Our approach includes the possibility of heterogeneous distributions of excitation transmission delays and numbers of refractory states. An important assumption in our theory is that there are many incoming links to every node, which allows us to transform the product in Eq. 9 into an exponential. This assumption is very reasonable for neural networks, where the number of synapses per neuron is estimated30 to be of the order of 10 000. In addition, in order to obtain a closed equation, we assumed Ap∝u. As discussed in Sec. 6C, this approximation works well in the regime when the response and stimulus are small. Furthermore, the error introduced by this approximation becomes smaller as the probability of stimulus increases and eventually vanishes for η=1. The result is that Eq. 17 predicts the response satisfactorily for all values of η. While we validated our predictions using scale-free networks with various correlation properties, we did not test them in topologies in which mean-field theories have been shown to fail, such as periodic hypercubes and branching tree networks.6, 26 This study is left to future research.
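The role of the many-incoming-links assumption can be seen in a small numerical comparison. The sketch below assumes that the product in question has the form Π(1−a_j), where a_j is the small probability that incoming link j excites the node in a given step; this form, the helper name, and the chosen values are assumptions for illustration, since Eq. 9 is not reproduced in this section.

```python
import numpy as np

def compare_product_and_exponential(a):
    """Return prod(1 - a_j) and its approximation exp(-sum(a_j))."""
    a = np.asarray(a, dtype=float)
    return float(np.prod(1.0 - a)), float(np.exp(-a.sum()))

rng = np.random.default_rng(1)
for k in (10, 100, 10_000):
    # Hypothetical per-link excitation probabilities whose sum is close to 1.
    a = rng.uniform(0.0, 2.0 / k, size=k)
    exact, approx = compare_product_and_exponential(a)
    print(f"k = {k:>6}: product = {exact:.5f}, exponential = {approx:.5f}, "
          f"relative error = {abs(approx - exact) / exact:.2e}")
```

For a fixed total input, the relative error shrinks as the number of incoming links k grows, because each individual term becomes smaller.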

Our theory describes how the introduction of additional refractory states modifies the network response by modifying Eq. 17. In addition, their effect is captured by the perturbative approximations of Sec. 4B, which, although valid in principle only for very small responses, we have found to successfully predict the effect of a distribution in the number of refractory states for a larger range of response values.

We studied the effect of time delays on the time scale needed to reach a steady-state response and found that Eq. 28 determines the growth rate of perturbations from a quiescent, almost critical network. The temporal characteristics of the response could be important in the study of sensory systems, in which the stimulus level might be constantly changing in time. Additionally, delays may be important in studying the phenomenon of synchronization and propagation of wavefronts, which we do not study here. Synchronization in epidemic models similar to the model considered here has been well-described in the absence of time delays,31 and synchronization in Rulkov neurons has been shown to be affected subtly by time delays.32 However, the effect of time delays on synchronization in our model remains an open line of inquiry.

An important practical question regarding the application of our theory to neuroscience is how our results can be made compatible with the presence of excitatory and inhibitory connections in neural networks. Considering one excited neuron, and after excitatory and inhibitory connections are taken into account, the important quantity that determines the future activity of the network is how many other neurons are expected to be excited by the originally excited neuron. This number might depend on the overall balance of excitatory and inhibitory connections, but it must be a positive number. The Kinouchi-Copelli model we are using, and similar models used successfully by neuroscientists to model neuronal avalanches,3 have therefore considered only excitatory neurons, while adjusting the probabilities of excitation transmission to account for different balances of excitatory and inhibitory neurons. Nevertheless, we believe a generalization of the Kinouchi-Copelli model that accounts for inhibitory connections should be investigated in the future.

Another important issue is the generality of our findings for more biologically realistic excitable systems. We conjecture that the effect of network topology on the dynamic range of networks of continuous-time, continuous-state coupled excitable systems such as coupled ordinary differential equation (ODE) neuron models33 is qualitatively similar to its effect on the class of discrete-time and discrete-state dynamical systems studied here. However, this remains open to investigation.

ACKNOWLEDGMENTS

We thank Dietmar Plenz for useful discussions. The work of Daniel B. Larremore was supported by the NSF’s Mentoring Through Critical Transition Points (MCTP) Grant No. DMS-0602284. The work of Woodrow L. Shew was supported by the Intramural Research Program of the National Institute of Mental Health. The work of Edward Ott was supported by ONR Grant No. N00014-07-1-0734. The work of Juan G. Restrepo was supported by NSF Grant No. DMS-0908221.

References

- Kinouchi O. and Copelli M., Nat. Phys. 2, 348 (2006). 10.1038/nphys289

- Larremore D. B., Shew W. L., and Restrepo J. G., Phys. Rev. Lett. 106, 058101 (2011). 10.1103/PhysRevLett.106.058101

- Shew W. L., Yang H., Petermann T., Roy R., and Plenz D., J. Neurosci. 29(49), 15595 (2009). 10.1523/JNEUROSCI.3864-09.2009

- Copelli M. and Campos P. R. A., Eur. Phys. J. B 56, 273 (2007). 10.1140/epjb/e2007-00114-7

- Ribeiro T. L. and Copelli M., Phys. Rev. E 77, 051911 (2008). 10.1103/PhysRevE.77.051911

- Assis V. R. V. and Copelli M., Phys. Rev. E 77, 011923 (2008). 10.1103/PhysRevE.77.011923

- Wu A., Xu X. J., and Wang Y. H., Phys. Rev. E 75, 032901 (2007). 10.1103/PhysRevE.75.032901

- Newman M. E. J., Phys. Rev. E 67, 026126 (2003). 10.1103/PhysRevE.67.026126

- Beggs J. M. and Plenz D., J. Neurosci. 23, 11167 (2003).

- Shew W. L., Yang H., Yu S., Roy R., and Plenz D., J. Neurosci. 31, 55 (2011). 10.1523/JNEUROSCI.4637-10.2011

- Pasquale V., Massobrio P., Bologna L. L., Chiappalone M., and Martinoia S., Neuroscience 153(4), 1354 (2008). 10.1016/j.neuroscience.2008.03.050

- Tetzlaff C., Okujeni S., Egert U., Wörgötter F., and Butz M., PLoS Comput. Biol. 6(12), e1001013 (2010). 10.1371/journal.pcbi.1001013.g001

- Petermann T., Thiagarajan T. C., Lebedev M., Nicolelis M., Chialvo D. R., and Plenz D., Proc. Natl. Acad. Sci. U.S.A. 106, 15921–15926 (2009). 10.1073/pnas.0904089106

- Gireesh E. D. and Plenz D., Proc. Natl. Acad. Sci. U.S.A. 105, 7576 (2008). 10.1073/pnas.0800537105

- Ribeiro T. L., Copelli M., Caixeta F., Belchior H., Chialvo D. R., Nicolelis M. A. L., and Ribeiro S., PLoS ONE 5(11), e14129 (2010). 10.1371/journal.pone.0014129.g001

- Hahn G., Petermann T., Havenith M. N., Yu S., Singer W., Plenz D., and Nikolić D., J. Neurophys. 104, 3312 (2010). 10.1152/jn.00953.2009

- Restrepo J. G., Ott E., and Hunt B. R., Phys. Rev. Lett. 100, 058701 (2008). 10.1103/PhysRevLett.100.058701

- Pomerance A., Ott E., Girvan M., and Losert W., Proc. Natl. Acad. Sci. 106, 20 (2009). 10.1073/pnas.0900142106

- Restrepo J. G., Ott E., and Hunt B. R., Phys. Rev. E 76, 056119 (2007). 10.1103/PhysRevE.76.056119

- Chauhan S., Girvan M., and Ott E., Phys. Rev. E 80, 056114 (2009). 10.1103/PhysRevE.80.056114

- Restrepo J. G., Ott E., and Hunt B. R., Phys. Rev. E 71, 036151 (2005). 10.1103/PhysRevE.71.036151

- Gray R. T. and Robinson P. A., Neurocomputing 70, 1000 (2007).

- Rajan K. and Abbott L. F., Phys. Rev. Lett. 97, 188104 (2006). 10.1103/PhysRevLett.97.188104

- Sussillo D. and Abbott L. F., Neuron 63, 544 (2009). 10.1016/j.neuron.2009.07.018

- Copelli M. and Kinouchi O., Physica A 349, 431 (2005). 10.1016/j.physa.2004.10.043

- Gollo L. L., Kinouchi O., and Copelli M., PLoS Comput. Biol. 5(6), e1000402 (2009). 10.1371/journal.pcbi.1000402.g001

- This is almost certainly not the method by which real biological networks tune their connectivity, but provides a theoretical starting point by which we can assess the properties of networks with varying λ, without commenting on how real networks may achieve such connectivities.

- Erdős P. and Rényi A., Publ. Math. 6, 290 (1959).

- Newman M. E. J., SIAM Rev. 45, 167 (2003). 10.1137/S003614450342480

- Braitenberg V. and Schüz A., Cortex: Statistics and Geometry of Neuronal Connectivity (Springer-Verlag, Berlin, 1991).

- Girvan M., Callaway D., Newman M. E. J., and Strogatz S. H., Phys. Rev. E 65, 031915 (2002). 10.1103/PhysRevE.65.031915

- Wang Q., Perc M., Duan Z., and Chen G., Phys. Rev. E 80, 026206 (2009). 10.1103/PhysRevE.80.026206

- Izhikevich E., The Geometry of Excitability and Bursting (MIT, Cambridge, MA, 2007).