Abstract

PURPOSE

We compared use of a new diabetes dashboard screen with use of a conventional approach of viewing multiple electronic health record (EHR) screens to find data needed for ambulatory diabetes care.

METHODS

We performed a usability study that combined a quantitative time study with qualitative analysis of information-seeking behaviors. While being recorded with Morae Recorder software and using “think-aloud” interview methods, 10 primary care physicians first searched the EHR record of one simulated patient for 10 diabetes data elements using a conventional approach, and then searched the record of a second simulated patient using a new diabetes dashboard. We measured time, number of mouse clicks, and accuracy. Two coders analyzed think-aloud and interview data using grounded theory methodology.

RESULTS

The mean time needed to find all data elements was 5.5 minutes using the conventional approach vs 1.3 minutes using the diabetes dashboard (P <.001). Physicians correctly identified 94% of the data requested using the conventional method, vs 100% with the dashboard (P <.01). The mean number of mouse clicks was 60 for conventional searching vs 3 clicks with the diabetes dashboard (P <.001). A common theme was that in everyday practice, if physicians had to spend too much time searching for data, they would either proceed without it or repeat the test.

CONCLUSIONS

Using a patient-specific diabetes dashboard improves both the efficiency and accuracy of acquiring data needed for high-quality diabetes care. Usability analysis tools can provide important insights into the value of optimizing physician use of health information technologies.

Keywords: Decision support, electronic health record, medical records, diabetes, dashboard, information retrieval, workflow, office automation, technology, informatics

INTRODUCTION

Although electronic health records (EHRs) hold great promise for improving clinical care, they sometimes function more as data repositories than as dynamic patient care tools. Recommended improvements in EHR decision support include improving the human-computer interface and summarizing patient-level information.1 The combination of dense, poorly organized information in the EHR, high demand for this information, time constraints, multitasking, and frequent interruptions creates cognitive overload for physicians.2 Succinctly presenting relevant information helps physicians deal with this phenomenon of “too much information.”1,3 Organizing relevant information can also prompt physicians to meet quality standards for patients with chronic conditions.4 Lastly, highly usable decision support tools may help to mitigate physician dissatisfaction with the EHR, as well as satisfy Centers for Medicare & Medicaid Services (CMS) criteria for Meaningful Use decision support.5–8

There are substantial obstacles to creating clinically useful decision support tools, however. Software developers and clinician users must design these tools collaboratively, and this type of collaboration requires a large commitment of resources. For example, the developers of a decision support tool for adjusting medication dosages in patients with renal impairment estimated that it took 924.5 hours and $48,668 to create 94 alerts for 62 drugs.9 Physician members of that team had the largest time commitment and cost, at 414.25 hours and $25,902, respectively.

Substantial improvements in the ease of obtaining needed information, quality of care, or cost of care could offset the development time and cost of decision support tools. To investigate this issue, we studied a new diabetes dashboard that summarized the current information needed to care for a patient with diabetes, including the patient’s status on quality indicators such as a hemoglobin A1c (HbA1c) level of less than 9%. Our primary objective was to quantify the time clinicians saved by using the diabetes dashboard rather than the conventional method of searching through the chart to retrieve information. Our secondary objectives included quantifying the reduction in mouse clicks with the new dashboard and identifying any improvement in information retrieval accuracy. Finally, we included a qualitative evaluation based on physician interviews because physician attitudes can be a stumbling block for many EHR implementations.7

METHODS

Dashboard Development

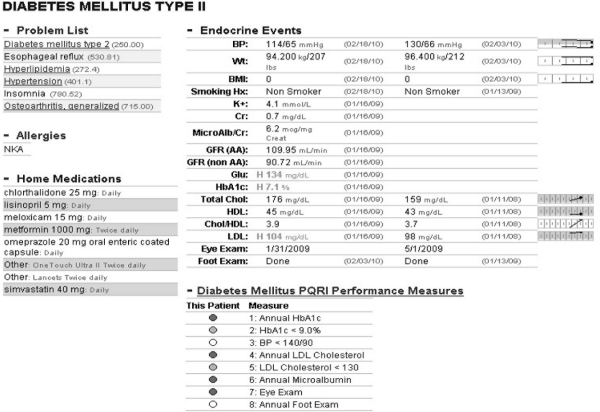

In 2007, University of Missouri Health System (UMHS) family physicians collaborated with Cerner Corporation to develop a diabetes dashboard that would be generated automatically by the EHR and would summarize patient-level data important for diabetes care (Figure 1). Development began with a focus group of family physicians and continued through a series of iterative design steps targeting clinician needs. The process followed user-centered design, in which users are involved throughout design and development through iterative feedback and evaluation activities.10 Involving intended users early in the design process has several advantages: expectations more closely match functionality, users develop a sense of ownership by suggesting features that reflect their needs, rapid early iterations between software versions shorten development time, and less redesign is needed after implementation.10

Figure 1.

Diabetes dashboard screen.

Once a patient’s record is open in the EHR, users can access the diabetes dashboard with 2 mouse clicks. The dashboard was designed using Tufte’s principles for the visual display of quantitative information, including displaying high-density, clinically relevant information in a single visual plane and using sparklines, or word-sized graphics.11
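To make the idea of a word-sized graphic concrete, the following is a minimal Python sketch of a text sparkline. It assumes a hypothetical series of HbA1c values; the function name `sparkline` and the 8-level block-character scale are illustrative choices, not the dashboard’s actual rendering code.

```python
# Minimal text sparkline (illustrative sketch; not the dashboard's renderer).
BARS = "▁▂▃▄▅▆▇█"  # 8 bar heights, lowest to highest


def sparkline(values: list[float]) -> str:
    """Map each value to one block character scaled between the series min and max."""
    if not values:
        return ""
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        # A flat series is drawn at mid-height.
        return BARS[len(BARS) // 2] * len(values)
    last = len(BARS) - 1
    return "".join(BARS[round((v - lo) / span * last)] for v in values)


# Hypothetical HbA1c history (%), oldest to newest.
print(f"HbA1c {sparkline([9.4, 8.7, 8.1, 7.6])} 7.6%")  # prints: HbA1c █▅▃▁ 7.6%
```

Scaling each series to its own range keeps the graphic small enough to sit beside a number on the same line, which is the point of a sparkline; the trade-off is that absolute values must still be printed alongside it.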

Study Design

We conducted this usability evaluation 2 weeks before systemwide introduction of the diabetes dashboard to UMHS clinicians. We used a mobile usability laboratory, including a laptop loaded with the EHR program, data for 2 simulated patients, Morae Recorder software (version 2.0.1, TechSmith Corporation, Okemos, Michigan), and a video camera.12 Faculty and graduate students from the University of Missouri’s Information Experience Laboratory conducted the simulation. Participants were 10 UMHS family and general internal medicine physicians with outpatient practices. In addition to seeking specialty variation, we purposefully sampled to maximize variation in sex, years in practice, and experience with the EHR.

We directly observed and audio- and video-recorded physicians while they searched the EHR for clinical data elements in each of 2 simulated charts of patients with diabetes. These simulated patients, here called Patient A and Patient B, were constructed specifically for this study, had similar amounts of clinical data, and appeared in the EHR exactly as actual patient charts would appear on any normal clinic day for these physicians. For example, smoking status and foot examination data were contained within clinic visit notes, while laboratory data were on a separate pathology tab. The charts were constructed so that some data were harder to find than other data; for example, although HbA1c and low-density lipoprotein cholesterol values were contained on an initial pathology screen displaying the last 200 laboratory values, physicians had to expand this default range to include older laboratory data to find the urine microalbumin-creatinine ratio. This need to expand the default range to access older laboratory values is common in routine EHR use.

Physicians accessed the EHR as they normally would and were directed to the chart of each test patient in turn. For both patients, the physicians were asked to find and record the specific values of 10 data elements important for diabetes care (Table 1). For Patient A, the physicians had to use a conventional search through multiple portions of the EHR, including screens for vital signs, laboratory values, medications, and clinic visit notes. For Patient B, the diabetes dashboard function was enabled. As the dashboard was completely new to physicians, they watched a 90-second video about how to access the dashboard before using it for Patient B.

Table 1.

Ten Diabetes Care Data Elements Used for Physician Searches

| Data element |
| --- |
| Date of last HbA1c level |
| Value of last HbA1c level |
| Date of last LDL cholesterol level |
| Value of last LDL cholesterol level |
| Value of last blood pressure |
| Value of last urine microalbumin-creatinine ratio |
| Date of last foot examination |
| Date of last eye examination |
| Smoking status |
| Daily use of aspirin |

HbA1c=hemoglobin A1c; LDL=low-density lipoprotein.
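The core job of a summary screen like the dashboard is to pull the most recent value of each element in Table 1 into one view. The following minimal Python sketch illustrates that idea under stated assumptions: the element keys, the `Observation` record, and the sample chart are hypothetical, and the sketch does not describe how the Cerner EHR actually generates the dashboard. Because HbA1c and LDL cholesterol each supply both a date and a value, the 10 data elements map onto 8 underlying observations.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional

# Hypothetical keys for the 8 observations behind Table 1's 10 data elements
# (HbA1c and LDL cholesterol each supply both a date and a value).
ELEMENTS = (
    "hba1c", "ldl", "blood_pressure", "urine_acr",
    "foot_exam", "eye_exam", "smoking_status", "daily_aspirin",
)


@dataclass(frozen=True)
class Observation:
    element: str    # one of ELEMENTS
    recorded: date  # date the result or finding was documented
    value: str      # value as displayed, e.g. "7.6%" or "former smoker"


def summarize(chart: List[Observation]) -> Dict[str, Optional[Observation]]:
    """Return the most recent observation for each element, or None if none exists."""
    latest: Dict[str, Optional[Observation]] = {e: None for e in ELEMENTS}
    for obs in chart:
        if obs.element not in latest:
            continue  # data the summary does not display
        current = latest[obs.element]
        if current is None or obs.recorded > current.recorded:
            latest[obs.element] = obs
    return latest


# Hypothetical chart data.
chart = [
    Observation("hba1c", date(2007, 1, 10), "8.1%"),
    Observation("hba1c", date(2007, 6, 2), "7.6%"),
    Observation("smoking_status", date(2006, 11, 3), "former smoker"),
]
summary = summarize(chart)
print(summary["hba1c"].value)  # prints 7.6%
print(summary["eye_exam"])     # prints None
```

Returning `None` for a missing element, rather than omitting it, mirrors the value of a summary screen: an empty slot (such as no documented eye examination) is itself clinically informative.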

For both simulated patients, we asked physicians to verbally describe their actions and strategies while searching, a technique termed think-aloud interviewing,13 and to record the values for the 10 diabetes data elements with pencil and paper on a data sheet designed for the study. Although physicians were given a list of data elements to find, they were free to search for them in any order. As part of the think-aloud method, physicians announced when they found each data point (for example, “found smoking status”) and were prompted to comment on their search experience.

At the end of the session, physicians participated in a short, semistructured interview about their experience. We asked about their attitudes toward the new diabetes dashboard, ease of obtaining data, normal practice patterns, and how the new dashboard might be used in their daily clinical work.

Measurements

The primary outcome was time to obtain all 10 data elements without vs with the dashboard. We measured total time on task, which included time to write down each answer, as well as actual time on task (time spent finding the information, excluding writing time). Secondary outcomes were the number of mouse clicks required to find data elements and the percentage of correct responses. We used Morae recordings to compute the time to find each data point and the number of mouse clicks.12 We judged data accuracy by comparing physicians’ written data sheets with the master list of chart data. Recordings of physician think-aloud and post-task interviews were transcribed for qualitative analysis.
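The derived time and click measures can be illustrated with a minimal Python sketch. It assumes a hypothetical, simplified event log from one recorded session; the `Event` fields and event labels are ours and are not Morae’s export format. The physician’s think-aloud “found” announcements mark when each element was located, and each click is attributed to the next element announced, which is one plausible convention rather than necessarily the one used in the study.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Event:
    t: float         # seconds since the start of the task
    kind: str        # hypothetical labels: "click", "found", "write_start", "write_end", "end"
    label: str = ""  # data element named in a "found" announcement


def session_times(events: List[Event]) -> Dict[str, float]:
    """Total time on task, writing time, and actual time on task (total minus writing)."""
    ordered = sorted(events, key=lambda e: e.t)
    total = next(e.t for e in ordered if e.kind == "end")  # assumes one "end" event
    writing = 0.0
    started: Optional[float] = None
    for e in ordered:
        if e.kind == "write_start":
            started = e.t
        elif e.kind == "write_end" and started is not None:
            writing += e.t - started
            started = None
    return {"total_s": total, "writing_s": writing, "actual_s": total - writing}


def clicks_per_element(events: List[Event]) -> Dict[str, int]:
    """Attribute each click to the next data element the physician announces finding."""
    counts: Dict[str, int] = {}
    pending = 0
    for e in sorted(events, key=lambda e: e.t):
        if e.kind == "click":
            pending += 1
        elif e.kind == "found":
            counts[e.label] = pending
            pending = 0
    return counts


# Hypothetical session: 2 clicks to reach HbA1c, LDL on the same screen.
events = [
    Event(0.0, "click"), Event(2.0, "click"), Event(5.0, "found", "hba1c"),
    Event(6.0, "write_start"), Event(10.0, "write_end"),
    Event(12.0, "found", "ldl"),
    Event(13.0, "write_start"), Event(16.0, "write_end"),
    Event(20.0, "end"),
]
print(session_times(events))       # {'total_s': 20.0, 'writing_s': 7.0, 'actual_s': 13.0}
print(clicks_per_element(events))  # {'hba1c': 2, 'ldl': 0}
```

Under this convention, an element located on a screen the physician has already opened receives 0 clicks, which is the pattern that per-element click counts on a shared screen would show.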

Analysis

For the primary outcome measure (time to find all data) and for the secondary time and mouse click measures, we computed descriptive statistics for both the standard EHR search (Patient A) and the diabetes dashboard (Patient B) methods. We compared means using 2-tailed t tests for paired samples. For accuracy of the data found, we used χ2 tests for binomial proportions.
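For readers less familiar with the paired design, the statistic behind a 2-tailed paired t test can be written as follows. Each physician i contributes one measurement without the dashboard (x_{i,A}, Patient A) and one with it (x_{i,B}, Patient B); the symbols are ours, not the authors’. The test compares t with a t distribution on n − 1 degrees of freedom, so with n = 10 physicians there are 9.

```latex
d_i = x_{i,A} - x_{i,B}, \qquad
\bar{d} = \frac{1}{n}\sum_{i=1}^{n} d_i, \qquad
s_d = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(d_i - \bar{d}\right)^2}

t = \frac{\bar{d}}{s_d / \sqrt{n}}, \qquad \mathrm{df} = n - 1
```

Pairing removes between-physician variation (for example, general EHR speed) from the comparison, because each physician serves as his or her own control.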

We used grounded theory to analyze transcripts of the interviews and think-aloud content.14 Two coders, one a usability expert (J.L.M.) and one a physician researcher (R.J.K.), independently coded the transcripts line by line and then reached consensus on codes and themes. The study was approved by the University of Missouri Health Sciences Institutional Review Board, which granted a waiver of documentation of consent for all participating physicians. Simulated patient charts were used for this study.

RESULTS

As planned, the 10 physicians were demographically diverse. Fifty percent were younger than 45 years of age, and 50% were women. Forty percent had fewer than 10 years of clinical experience, 50% had fewer than 5 years of EHR experience, and 60% had fewer than 5 years of experience with PowerChart (Cerner Corporation, North Kansas City, Missouri), the EHR used at the study site. Sixty percent considered themselves average EHR users, while 40% considered themselves above-average users.

Quantitative Results

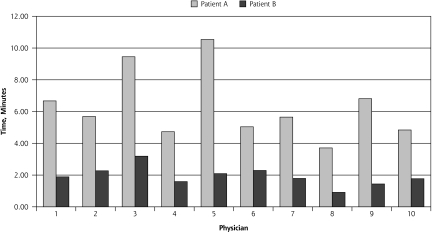

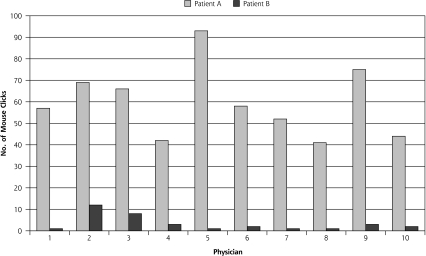

The mean total time on task to find all 10 data elements was significantly shorter with the diabetes dashboard than without it: 1.9 minutes (SD = 0.6) vs 6.3 minutes (SD = 2.2) (P <.001). Similarly, mean actual time on task (total time minus writing time) was significantly shorter with the dashboard: 1.3 minutes (SD = 0.6) vs 5.5 minutes (SD = 2.1) (P <.001) (Figure 2). The dashboard also required far fewer mouse clicks on average: 3 clicks (SD = 4) vs 60 clicks (SD = 16) (P <.001) (Figure 3).

Figure 2.

Actual time on task for Patient A (conventional electronic health record search) and Patient B (dashboard search).

Figure 3.

Total number of mouse clicks for Patient A (conventional electronic health record search) and Patient B (dashboard search).

Physicians essentially clicked 3 times to obtain all data from 1 screen using the dashboard, whereas the conventional search was much more complex. The participants searched through several clinical notes, some notes more than once. Supplemental Table 1, available online at http://www.annfammed.org/cgi/content/full/9/5/398/DC1, provides the order of screens visited for each physician. Most physicians chose to scroll through the 4 sections of the Pathology screen instead of using the buttons for the specific sections. Supplemental Figure 1, available online at http://www.annfammed.org/cgi/content/full/9/5/398/DC1, presents screenshots of typical screens visited by the physicians while searching for data.

Using the dashboard also reduced errors. Of the 100 data elements sought for each simulated patient (10 physicians each searching for 10 elements), there were 3 instances of recording incorrect data and 3 instances in which a data element could not be found. All 6 errors occurred with Patient A using the conventional EHR search (94% accuracy), compared with none for Patient B using the dashboard (100% accuracy) (P <.01).

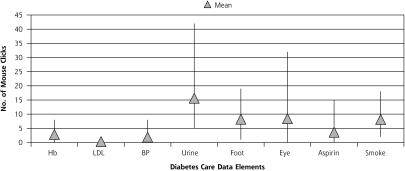

As in actual patient charts, some items in the simulated charts were much more difficult to find than others. As shown in Figure 4, for Patient A the urine microalbumin-creatinine ratio, which was dated before the default range, required the highest average number of mouse clicks (mean = 15.6; range, 5–42), and the low-density lipoprotein cholesterol level required the fewest (mean = 0.3; range, 0–1) (P <.01), likely because it was located on the same screen as the HbA1c value.

Figure 4.

Number of mouse clicks needed to find each data element.

Aspirin = daily use of aspirin; BP = value of last blood pressure; Eye = date of last eye examination; Foot = date of last foot examination; Hb = hemoglobin A1c level; LDL = low-density lipoprotein cholesterol level; Smoke = smoking status; Urine = value of last urine microalbumin-creatinine ratio.

Qualitative Results

Several themes emerged from analysis of the think-aloud and semistructured interviews. For 9 of 10 physicians, date of last foot examination, date of last eye examination, and smoking status were the most difficult items to find without the dashboard. Physicians indicated that they had to sift through clinic notes or appointment lists to find them:

Finding the information on the last eye exam is the most difficult. The only way that I got any hint about that was to go to the appointment list [where] I saw she had the ophthalmology appointment last year sometime. The foot exam is a little bit easier, but I still needed to look for 4 to 5 clinic notes to find that. It’s just hidden in there.

When physicians were asked how long they would look for a particular piece of data for an actual visit with a patient with diabetes, answers ranged from 30 seconds to 2 minutes. Some physicians stated that for each patient with diabetes on their schedule, they spent 5 to 30 minutes finding data, especially for a new patient:

If I knew the patient was coming, even though they were new, I probably take 10 or 15 minutes the night before or the morning before the visit.

We questioned participants about what they would do if they could not find information needed for an actual encounter with a patient with diabetes. Physicians had varied strategies, including ordering a new test, asking the patient or nurse about the information, or requesting records from a previous physician.

I just quit looking…I might ask the patient, you know. If they don’t remember when was the last exam, I probably just have to repeat it.

If someone is transferring care from a doctor in town… sometimes I’ll get the record. But usually I just say, “Oh, forget it.” It’s just too much hassle to get the information, so I just say to the patient, “I’d like to get fresh labs on you.”

Physician opinion about the new dashboard was overwhelmingly positive. Most thought that it was well organized and would save them time:

I am pleased to hear that this screen will be available. I don’t know how to do it yet, but to be able to import this whole screen into one of my notes, that would save me lots and lots of time that I spend right now trying to find it. Also, I like it organized by chronic conditions.

How soon can we get this? Can we get this in production this evening? I really appreciate you doing this.

Suggestions for improving the dashboard included adding alerts generated when something is due and providing the capability to import dashboard elements into notes. Physicians also suggested reorganizing some of the data and adding immunization information.

DISCUSSION

Our results quantify benefits that can be attained with a decision support tool. These benefits might offset the additional development costs of using user-centered design methods.9,10 The diabetes dashboard is a system intervention that makes it easier for clinicians to find information. Physicians frequently cite the EHR as a source of increased workload15; our intervention, however, significantly decreased physician time and mouse clicks. Using user-centered design principles, we developed a diabetes dashboard that physicians quickly learned to use, that increased efficiency, and that contained the data needed for high-quality diabetes care.10 Clinicians can see at a glance which laboratory tests patients need and what their trends have been over the last 2 years. The approximately 5 minutes saved per patient by using the dashboard is worth $6.59 for a physician with a salary and benefits package of $180,000 per year. Multiplied by the number of patients with diabetes seen by all physicians in a practice or health system, this figure could translate into a substantial cost benefit. Two major questions remain unanswered: (1) in routine practice, were physicians already consistently clicking through to view all the data contained on the dashboard, or did they skip locating the information during a busy clinic day; and (2) does having all the clinical information available in a single view improve quality of care? Additional investigation is therefore needed to determine not only whether the diabetes dashboard’s time savings lead to increased productivity and revenue, but more importantly, whether having information more easily available improves the quality of patient care, safety, and outcomes.
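The dollar figure above follows from a simple proportion, but the text does not state how many working hours per year the $180,000 package was spread over. The following minimal Python sketch back-solves that denominator from the stated numbers (it comes out to about 2,276 hours per year) and then recomputes the per-patient value. The 2,276-hour figure is our inference, not the authors’ stated assumption; readers can substitute their own annual hours. The function names are hypothetical.

```python
def value_of_time(minutes: float, annual_compensation: float, annual_hours: float) -> float:
    """Dollar value of clinician time, assuming compensation accrues evenly per working hour."""
    return annual_compensation / annual_hours * minutes / 60


def implied_annual_hours(minutes: float, annual_compensation: float, dollars: float) -> float:
    """Back-solve the working hours per year implied by a stated dollar value of time."""
    return annual_compensation * minutes / 60 / dollars


hours = implied_annual_hours(5, 180_000, 6.59)
print(round(hours))                                # prints 2276
print(round(value_of_time(5, 180_000, 2276), 2))  # prints 6.59
```

Scaling to a practice is then a multiplication of the per-patient value by the number of diabetes visits, which is why the denominator assumption matters: a larger assumed number of annual hours lowers the value of each saved minute proportionally.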

The diabetes dashboard also led to fewer errors. In addition, our interview data suggest that it may reduce costs, as physicians stated that they often repeated tests whose results they could not find easily. By making documentation easier, the tool may also shorten the interval between the patient visit and completion of electronic documentation, thereby improving documentation accuracy. These outcomes, in addition to clinical outcomes, are areas for future study.

The physicians in our sample enthusiastically and immediately embraced the new dashboard. They found it easy to use after watching a 90-second video, heralding it as exactly what they needed. According to the UMHS Chief Medical Information Officer, this was one of the best-received technology introductions in the 8-year history of using the EHR (personal communication, Michael LeFevre, MD, MSPH; June 29, 2011).

Regarding the items that were especially difficult to find during conventional searching, there is nothing inherently more difficult about finding one laboratory value than another. We arbitrarily made the urine microalbumin-creatinine ratio the oldest laboratory result and the low-density lipoprotein cholesterol level the most recent. Thus, the difficulty in finding the older urine test (Figure 4) relates to the process of expanding the default range to find older results. This problem can be particularly prominent when the system default limits the results displayed to a set number, such as the most recent 200 results. When users need to look further back, they must change the settings, which was clearly easier for some of our physicians than for others. Further user training or different default settings might have made this task easier for some users; however, expanding default settings may slow system performance and require users to sift through more data.
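The effect of a fixed-size default display can be shown with a minimal Python sketch. It models a results view as the most recent `limit` entries (200 by default, matching the number described in the text) and checks whether a given test appears; the function names, test labels, and chart contents are hypothetical, and the sketch does not reproduce PowerChart’s actual behavior.

```python
from datetime import date, timedelta
from typing import List, Tuple

Result = Tuple[date, str]  # (result date, test name)


def default_view(results: List[Result], limit: int = 200) -> List[Result]:
    """Newest-first list truncated to `limit` entries, like a fixed-size default display."""
    return sorted(results, key=lambda r: r[0], reverse=True)[:limit]


def is_visible(results: List[Result], test_name: str, limit: int = 200) -> bool:
    """True if at least one result for `test_name` falls inside the displayed window."""
    return any(name == test_name for _, name in default_view(results, limit))


# Hypothetical chart: one older urine microalbumin-creatinine ratio,
# followed by 200 more recent results for other tests.
results = [(date(2006, 3, 1), "urine_acr")]
results += [(date(2007, 1, 1) + timedelta(days=i), "other_lab") for i in range(200)]

print(is_visible(results, "urine_acr"))             # prints False
print(is_visible(results, "urine_acr", limit=201))  # prints True
```

The trade-off noted above is visible in the `limit` parameter: raising it surfaces older results such as the urine test, but every increase also lengthens the list the user must scan and the data the system must retrieve.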

There are several limitations to our study. Although our primary care clinics have seen improvements in quality measures for diabetes, these improvements cannot be attributed solely to use of the dashboard, because our efforts to improve diabetes care have been multifactorial, including a nearly simultaneous introduction of enhanced patient registry functions. We used a single EHR product in this study; however, these findings can likely be extrapolated to other EHR systems and certainly to users of the multinational PowerChart EHR. Additionally, Patient A was always presented before Patient B, so the order of the 2 search methods was not counterbalanced. Average writing time also differed slightly (52 seconds for Patient A vs 36 seconds for Patient B); however, the differences between the 2 simulated patients persisted after we subtracted writing times.

We made the decision to use a mobile usability laboratory and simulated patient charts, rather than studying physicians caring for patients in their offices, an approach that had both limitations and strengths. Because of the somewhat artificial nature of the task, that is, “find these 10 data elements relevant to diabetes care” rather than “provide care for this patient with diabetes,” we decided not to perform a cognitive task order analysis. Using the simulated patient records and mobile usability laboratory nonetheless allowed us to create charts that were comparable in data quantity and complexity, to get precise time and mouse click measurements, and to interview physicians about their experience immediately after the task.

Since the introduction of the diabetes dashboard, designers have refined documentation templates to facilitate documentation of eye and foot examinations so that these examinations are identified as meeting quality metrics on the dashboard. Additional features have been added to the dashboard, including a link to a diabetes treatment algorithm and a link that automatically calculates and displays the patient’s Framingham risk score.16 As requested by physicians in the qualitative interviews, data from the dashboard can now be directly imported into a progress note, likely improving both quality and ease of documentation. Summary dashboards have been developed and released for several other ambulatory chronic conditions, and inpatient dashboards have been developed for use in the intensive care unit and neonatal intensive care unit.

The importance of electronic decision support tools to improve knowledge management is reflected in the CMS Meaningful Use incentive program, which includes incorporating decision support rules in the EHR.8 Two-thirds of US Medicare beneficiaries aged 65 years or older have multiple chronic conditions.17 The need to simultaneously manage and coordinate the care of multiple conditions is becoming increasingly important. Systems and summary pages that can dynamically render only the relevant data elements based on a patient’s problem list are the next step in patient-level data summarization.

Our study quantifies the improved efficiency and accuracy of information retrieval with a diabetes dashboard and suggests that there may be an associated reduction in costs. User-centered design led to a decision support tool that physicians found intuitive and easy to use and that they readily and immediately embraced. Ultimately, this decision support tool helps to ensure that needed information is readily available at the time clinical decisions are made.

Acknowledgments

Anindita Paul, Yunhui Lu, Xin Wang, Said Alghenaimi, and Ngoc Vo assisted with data collection and Morae Recorder analysis.

Footnotes

This project was presented at the North American Primary Care Research Group Annual Conference, November 2008, Puerto Rico.

Disclaimer: The content of this article is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality.

Conflicts of interest: Dr Kochendorfer has received support as a consultant and speaking honoraria from Cerner Corporation, Kansas City, Missouri. The other authors report no conflicts of interest.

Funding support: This project was supported by grant R18HS017035 from the Agency for Healthcare Research and Quality.

References

- 1. Sittig DF, Wright A, Osheroff JA, et al. Grand challenges in clinical decision support. J Biomed Inform. 2008;41(2):387–392.

- 2. Kirsh D. A few thoughts on cognitive overload. Intellectica. 2000;30(1):19–51.

- 3. Rose AF, Schnipper JL, Park ER, Poon EG, Li Q, Middleton B. Using qualitative studies to improve the usability of an EMR. J Biomed Inform. 2005;38(1):51–60.

- 4. Garg AX, Adhikari NK, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA. 2005;293(10):1223–1238.

- 5. Edsall RL, Adler KG. User satisfaction with EHRs: report of a survey of 422 family physicians. Fam Pract Manag. 2008;15(2):25–32.

- 6. Edsall RL, Adler KG. The 2009 EHR user satisfaction survey: responses from 2,012 family physicians. Fam Pract Manag. 2009;16(6):10–16.

- 7. Miller RH, Sim I. Physicians’ use of electronic medical records: barriers and solutions. Health Aff (Millwood). 2004;23(2):116–126.

- 8. Eligible professional meaningful use core measures. Baltimore, MD: US Department of Health & Human Services, Centers for Medicare & Medicaid Services; 2010. Updated Nov 7, 2010. https://www.cms.gov/EHRIncentivePrograms/30_Meaningful_Use.asp. Accessed August 22, 2011.

- 9. Field TS, Rochon P, Lee M, et al. Costs associated with developing and implementing a computerized clinical decision support system for medication dosing for patients with renal insufficiency in the long-term care setting. J Am Med Inform Assoc. 2008;15(4):466–472.

- 10. Norman DA, Draper SW, eds. User Centered System Design: New Perspectives on Human-Computer Interaction. Hillsdale, NJ: L. Erlbaum Associates; 1986.

- 11. Tufte ER. The Visual Display of Quantitative Information. Cheshire, CT: Graphics Press; 2001.

- 12. Morae software, version 2.0.1. Okemos, MI: TechSmith Corporation; 2007.

- 13. Lundgrén-Laine H, Salanterä S. Think-aloud technique and protocol analysis in clinical decision-making research. Qual Health Res. 2010;20(4):565–575.

- 14. Charmaz K. Grounded theory: objectivist and constructivist methods. In: Denzin NK, Lincoln YS, eds. Handbook of Qualitative Research. Thousand Oaks, CA: Sage Publications; 2000:509–535.

- 15. Mitchell E, Sullivan F. A descriptive feast but an evaluative famine: systematic review of published articles on primary care computing during 1980–97. BMJ. 2001;322(7281):279–282.

- 16. Wilson PW, D’Agostino RB, Levy D, Belanger AM, Silbershatz H, Kannel WB. Prediction of coronary heart disease using risk factor categories. Circulation. 1998;97(18):1837–1847.

- 17. Thorpe KE, Howard DH. The rise in spending among Medicare beneficiaries: the role of chronic disease prevalence and changes in treatment intensity. Health Aff (Millwood). 2006;25(5):w378–w388.