Abstract

Background

Within many health care disciplines, research networks have emerged to connect researchers who are physically separated, to facilitate sharing of expertise and resources, and to exchange valuable skills. A multicentre research network committed to studying difficult cancer pain problems was launched in 2004 as part of a Canadian initiative to increase palliative and end-of-life care research capacity. Funding was received for 5 years to support network activities.

Methods

Mid-way through the 5-year granting period, an external review panel provided a formal mid-grant evaluation. Concurrently, an internal evaluation of the network by survey of its members was conducted. Based on feedback from both evaluations and on a review of the literature, we identified several components believed to be relevant to the development of a successful clinical cancer research network.

Results

These common elements of successful clinical cancer research networks were identified: shared vision, formal governance policies and terms of reference, infrastructure support, regular and effective communication, an accountability framework, a succession planning strategy to address membership change over time, multiple strategies to engage network members, regular review of goals and timelines, and a balance between structure and creativity.

Conclusions

In establishing and conducting a multi-year, multicentre clinical cancer research network, network members were led to reflect on the factors that contributed most to the achievement of network goals. Several specific factors were identified that seemed to be highly relevant in promoting success. These observations are presented to foster further discussion on the successful design and operation of research networks.

Keywords: Clinical cancer research, research network, multicentre, network evaluation, collaboration

1. INTRODUCTION

Canada is characterized by a small population dispersed across a huge geographic area. In Canada and other countries, research networks have emerged within many health care disciplines to connect researchers who are physically separated and thus to facilitate sharing of expertise and resources and exchange of valuable skills1. At the same time, research networks may serve to increase research involvement, to build research capacity, and to develop a research culture2. Other benefits to participation in a research network may include access to large and diverse patient populations for clinical trial research1, increased funding opportunities1, access to support staff who can facilitate the research projects, opportunities for professional development, and mentorship by experienced researchers3.

The Team for Difficult Cancer Pain was established in 2004 in response to a funding announcement by the Cancer Institute of the Canadian Institutes of Health Research of $16.5 million over 5 years to support the development of palliative and end-of-life care research networks. Ten theme-based, multicentre, New Emerging Team Grants (up to $300,000 annually for 5 years) were awarded to address the long-term issue of capacity-building within the field of palliative and end-of-life care research. Our team was successful in its application, and as a result, a research network of investigators committed to studying difficult cancer pain problems was launched.

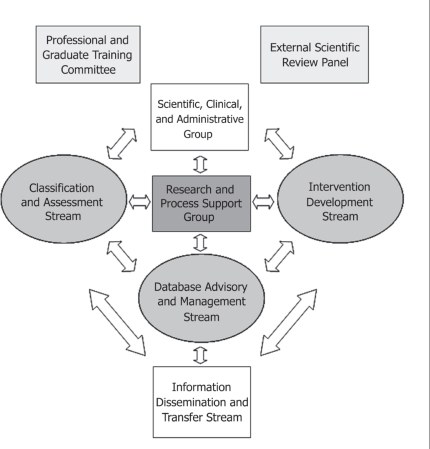

The network consisted of 4 research “streams,” a Professional and Graduate Training Committee, and a central infrastructure support group, including the lead investigator of the network (Figure 1). Principal investigators led individual projects in partnership with a multidisciplinary complement of colleagues. In essence, the network was structured to support a range of interdependent research activities from pain assessment tools to pain interventions, knowledge transfer, and development of an Internet-based education initiative to increase palliative care research capacity in the country. Mid-way through the 5-year grant period, feedback on the network’s progress was provided by members through an internal review and by two internationally recognized leaders in cancer pain who conducted an external review.

FIGURE 1.

Organizational structure of the Canadian Institutes of Health Research Team for Difficult Cancer Pain. The original network consisted of 32 Canadian professionals from medicine, nursing, psychology, statistics, pharmacology, and pharmacy, plus 5 internationally recognized cancer pain researchers acting as consultants or collaborators.

Having now reached the end of the network’s 5-year funding period, we reflected on the lessons learned during that time, and herein, we provide suggestions for establishing a successful clinical cancer research network. Our report has two sections: methods and results of a pre-planned mid-grant network evaluation; and end-of-grant reflections on lessons learned about relevant elements of successful clinical cancer research networks.

2. MID-GRANT EVALUATION

2.1. Methods and Results

External evaluation of the network was described in the original grant. An External Scientific Review Panel was created to provide external accountability for the network by evaluating its performance with respect to the achievement of its goals and to the scientific merit of its research program. Two internationally recognized leaders in cancer pain (external to the network) agreed to sit on the review panel. Mid-way through the 5-year granting period, the panel was asked to provide a formal mid-grant evaluation. The panel was provided with a series of documents summarizing network activities to that date and was asked to evaluate the organization, timeliness, productivity, and overall achievements of the network at that point relative to the network’s original goals and objectives. Questions arising from the review were addressed in writing by the lead investigator of the network, and a detailed discussion of the network’s achievements and planned activities for the remaining term occurred in an ensuing teleconference.

At the same time that the external review was proceeding, we sought to undertake an internal evaluation of the network by its members. We reviewed the literature to learn which methods, if any, had been used for internal evaluations of the functioning and productivity of other research networks. Although the literature on research networks is modest in size, we were able to find reports characterizing research network evaluation2,4–8. We adapted the Cancer Research Network evaluation survey (described at http://jncimono.oxfordjournals.org/content/2005/35/26/suppl/DC1) for use within our network. Surveys were sent to 34 network members by e-mail and were returned in a way that ensured anonymity.

2.2. Key Findings

2.2.1. External Review

Table I summarizes outcomes from the external evaluation of the network. The review panel indicated that overall network productivity was good, but with significant variability in productivity between streams. For the streams that were behind schedule in their planned activities, it was recommended either that effort be made to advance activities or that the streams be abandoned and their resources directed elsewhere. Other suggestions for improvement included developing concrete processes for moving ideas through to proposals and then to study implementation, and expanding the Database stream into an Analysis stream in which all data would be collected and analyzed in a consistent manner. All team members were involved to a greater or lesser extent in unrelated non-network activities. Therefore, attention was needed to ensure that all papers, grants, and other measures of productivity that were attributed to the network were indeed directly related to network activities, so as to provide an unambiguous picture of true productivity.

TABLE I.

Feedback from external review panel

| Type of feedback | Comment |
|---|---|
| Positive feedback | The network’s overall strategic direction is appropriate |
| | Productivity is good, especially since network members are not reimbursed for their involvement |
| | The research topics chosen for study are important issues to address |
| | Team members work collaboratively |
| Suggestions for improvement | Abandon unproductive streams/projects and focus resources on more productive streams/projects |
| | Establish governance policies/guidelines for the development of new proposals and allocation of resources |
| | Revisit project lists on a regular basis to define planned activities and specify timelines |
| | Be mindful of maintaining integrity when reporting measures of productivity |
| | Expand the Database Group to an Analysis Group, and develop defined processes for data collection and consistent data analysis by dedicated data analysts |

2.2.2. Internal Review

In the internal evaluation, 11 network members (32%) returned surveys. Although the response rate was low, many respondents were invested in the network through their roles or their network involvement—3 were stream leaders, 2 were investigators, and 1 was a co-applicant—and 5 of the 11 respondents were involved in multiple network projects or reported spending 6 or more hours per week on network activities.

Most respondents (64%–73%) felt that the network principal investigator and stream leaders were effective at fostering cooperation, making decisions to support network goals, and providing opportunities to express ideas. Similarly, most respondents (73%–100%) indicated that the infrastructure team was effective at keeping the team informed, fostering engagement, and providing support. To stay informed of network activities, 82% of respondents reported using newsletters and e-mail messages; 73% of respondents used conference calls; and 36% and 27%, respectively, used face-to-face meetings and informal conversations. Almost all respondents (90%) felt as informed as they needed to be about activities within their stream of interest, and 60% of respondents felt as informed as they needed to be about activities in other streams. The most common barrier to participating in network activities was the substantial commitment of the respondents to other clinical and research activities.

Qualitative comments from survey respondents indicated that the network was well-organized, had good communication strategies, and was succeeding at fostering cooperation and facilitating collaborative research. Respondents suggested reserving conference calls for specific project-related discussions (as opposed to general updates), and welcomed more face-to-face meetings to improve collaboration between streams. A few respondents indicated a need for more mentoring of junior investigators, who were not always sure when and how to get involved.

3. END-OF-GRANT REFLECTIONS

3.1. Lessons Learned

At the end of the 5-year term of the research grant, 34 peer-reviewed manuscripts had been published or were in press, 8 manuscripts were under review or in preparation, and 38 oral presentations had been given at regional, national, or international meetings. Several network members went on to obtain competitive grant funding beyond the original team grant, consistent with the network’s goal of building sustained research capacity in palliative care.

But what have we learned about establishing a successful clinical cancer research network?

Based on feedback from the internal and external reviews, and on a review of the literature on research networks, we formulated several conclusions on how a new clinical cancer research network could best be positioned for success. We suggest that research networks be developed with attention to several core components, as summarized in Table II and described in the subsections that follow. These components were important to our palliative care research team, but they are also likely relevant to other clinical cancer research networks, and they should be considered at the initial stages of network planning. Although barriers to high-functioning networks have been described in the literature1,9–11, our focus here is on applying strategies to support the development of a successful clinical cancer research network.

TABLE II.

Common elements of successful research networks

| Common elements of successful research networks | References |
|---|---|
| A shared vision | 9–12 |
| Formal governance policies and terms of reference | 10–13 |
| An infrastructure team dedicated to the goals and activities of the network | 11,8–16 |
| Regular and effective communication | 10,11,8,16–18 |
| A framework for holding members accountable | 1,4 |
| A succession planning strategy to address membership change over time | — |
| Multiple strategies to engage network members | 11,18,19 |
| Regular review of goals and timelines | 9,11,16 |
| A balance between structure and creativity | — |

3.2. Recommendations for Establishing a Successful Multicentre Clinical Research Network

3.2.1. Articulate a Shared Vision

The team should be built with individuals who demonstrate a variety of strengths—and who all believe strongly in the proposed outcomes and vision of the network. A high level of “buy-in” from team members and stakeholders is critical at the beginning stages of the process. If buy-in is mediocre at the beginning of the endeavor, motivation may wane as the project proceeds, even if everything is going smoothly. The project leader needs to continuously share the vision with partners, to embrace iterative learning to keep goals on track, and to address waning motivation with personal contact.

3.2.2. Develop Governance Policies and Terms of Reference

Many networks come together as a result of burgeoning relationships in a research community. Preexisting personal and professional relationships often help to provide the initial basis of trust20. Network members may have already informally discussed their common goals and research priorities. However, candid dialogue at the beginning of the project should take place to determine how participants are going to be rewarded, what the benefits of belonging to the network are (for example, support for individual research projects), and how academic achievements, publication guidelines, seed funding, collaborative opportunities, and other aspects of the network will be handled. The network should establish policy guidelines for data sharing and management of intellectual property that establish how samples and primary data will be transferred between members, how data analyses will be released into the public domain, when intellectual property protection should be sought, and how intellectual property is managed. (The reader is referred to Chokshi et al.21 for more information on this topic.)

The network should be transparent about how and why decisions are made, and should consider how best to engage members in the activities and development of the network2. We went to extra effort to support transparency through regular team teleconferences and newsletters. Our external review panel suggested developing clear processes for how ideas are translated into proposals and how proposals are put forward for study implementation.

Reviewing the literature and reflecting on our own experience, we conclude that no single particular governance structure will reliably translate into success. Each network needs to develop its own structure in the context of its stakeholders, funding, research environment, personalities, and other key factors.

3.2.3. Establish an Infrastructure Team

Certain elements of infrastructure are critical to the success of the network11,15. The infrastructure team should be dedicated to the activities outlined in the goals and objectives of the endeavor. A project manager who is skilled at identifying and allocating resources, establishing timelines, and facilitating smooth processes from an operational perspective is probably essential. Depending on the size of the team and the objectives of the endeavor, the infrastructure support team may also include administrators who provide general, research, and grant-related support, and a lab manager, if appropriate.

3.2.4. Communicate Frequently

Productivity and team building hinge on regular and effective communication. Communication of vision, updates, projects, opportunities, successes, strategies, and barriers to overcome is a responsibility shared by all team members. The beginning stages of teambuilding are crucial, because this is the time when a sense of trust is established and tested, when partners are rewarded for engaging productively, and when a sense of team identity is forged. The importance of face-to-face meetings in developing trust and understanding between network members has been emphasized in the literature15,17. In actuality, our research network had only a few face-to-face meetings, both as a whole and within streams. However, our lack of face-to-face meetings was not detrimental to our productivity, and in fact, in our experience, having face-to-face meetings within streams was, surprisingly, not a predictor of success. We speculate that deep pre-existing interpersonal relationships contributed to a smaller-than-expected need for face-to-face meetings.

To avoid miscommunication, we do recommend that network members be explicit with each other regarding the anticipated nature of each person’s role—for example, as a consultant, collaborator or co-investigator, or mentor—in the various projects. At the same time, we recognize that roles may change over time20. Expectations of collaborators—that is, the nature of their involvement and the responsibilities to which they are committing, among other aspects—must be clear and agreed-on by all parties22.

3.2.5. Develop an Accountability Framework

One aspect of successful teams that has been underemphasized in the biomedical literature is an accountability framework about which all team members are aware at the outset of the project. Clear expectations and adherence to those expectations will promote equal performance by partners and a sense of fairness for all parties.

An accountability framework should start with effective communication among stakeholders so that proposed outcomes, milestones, timelines, tasks, and delegation for completion are agreed a priori. A concrete plan should be established in the event that milestones are not reached. In this manner, all stakeholders will be aware of their role in each project, the requirements to be met at particular times, and the action that will be taken if performance expectations are not met.

In hindsight, a brief discussion at the end of each of our network’s projects would have been beneficial. This type of interaction offers an opportunity to explore specific strategies that worked, barriers encountered, and what the team would or could have done differently.

3.2.6. Establish a Succession-Planning Strategy

A community of researchers is highly dynamic, and the composition of a research network will inevitably change over time. The interest of some members wanes, other members move on to new positions, and new members are recruited. Networks should have a plan in place to deal with attrition and a succession plan for vacated roles. Implementing a change of this nature may include steps that reflect granting, national, and local perspectives. It is helpful to know in advance the type of documentation that will be needed before team members leave the network, because collecting documentation in a timely fashion after the fact may be challenging. Succession planning for signing authority for research accounts needs to be established ahead of time.

3.2.7. Devise Multiple Strategies to Engage Network Members

Several reports describe a lack of member participation as one of the key challenges faced by research networks1,3,11,13,19. Research engagement is important to address, because researchers who are more fully engaged in network activities are more likely to work together to promote the ongoing success of the network and its activities. Multiple strategies should be implemented to encourage participation by busy clinicians with competing commitments.

Our network did not provide any salary support for collaborators (that is, participation in network projects was entirely voluntary and occurred on top of the members’ regular clinical, academic, teaching, and administrative responsibilities), and so it was important that research topics be of personal interest and clinically relevant to network members. The perceived “ownership” of the project by members—and the involvement of influential study “champions”—has been shown to improve project participation11.

The research team should provide a means for ongoing publicity for its members—for example, authorship or mention in local or professional society newsletters11. Achievements should be openly celebrated and disseminated to the group. We suggest that the core team would be prudent to identify some early “wins” for team members (easily achieved, but with a sense of significant achievement) that can be shared with the rest of the group and emulated for further success. The first network studies (or studies for initiating new network members) should ideally be short, interesting, of low burden to the participating site, and able to provide immediate positive feedback of results. These kinds of studies build momentum for ongoing network participation11. Opportunities for authorship (based on continued participation), and particularly for meriting first authorship, provide motivation to network members to remain involved.

Members should be made aware of opportunities for participation11. Results of our own internal evaluation indicated that some members did not know how to participate. In particular, a desire for more mentoring of junior investigators was expressed by some respondents during the internal evaluation. Our network did not establish a formal mentorship program, but we did explicitly plan to position investigators to be authors on manuscripts, and informal mentoring was provided on how to achieve and merit authorship. In addition, our development of an online palliative care research course was specifically offered to improve mentorship and to provide resources to new researchers.

The Internet literature describes “lurkers” as individuals who follow online discussion forums but who rarely or never contribute to the discussion23,24. We propose that networks may also have lurkers: people who remain informed of network activities, but who show little overt evidence of their interest. We discovered that lurkers are often quite proud of their team and that they become actively engaged when the opportunity seems right to them. Networks should look for ways to encourage lurkers to be more active and should not seek to have them leave the team in the absence of compelling reasons to do so. In our network and the many other networks we have encountered, much of the productivity and leadership arises in association with a small core group of collaborators. The larger network membership, including many lurkers, remains an important constituency to expand the activity base, to assist with succession planning, and to enhance the range of ideas and innovation.

As a final observation, we recommend building an evaluation process into the network at the beginning. That evaluation process can include steps similar to those that we undertook (external review, survey), but may be complemented by additional metrics—for example, project timelines from inception to completion, number of team members engaged in specific projects, and comparisons with similar consortia and their progress, among others.
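The additional metrics mentioned above could be tracked with very little tooling. The following is a minimal sketch only, not a description of how our network recorded its activities: the `Project` record, its field names, and the example projects are all hypothetical and are introduced purely for illustration. The only figures taken from this report are the 11 surveys returned out of 34 sent.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional, Set


@dataclass
class Project:
    """Hypothetical record for one network project (illustrative only)."""
    name: str
    stream: str
    started: date
    completed: Optional[date]  # None while the project is still open
    members: Set[str]


def days_to_completion(projects):
    """Days from inception to completion, for completed projects only."""
    return {
        p.name: (p.completed - p.started).days
        for p in projects
        if p.completed is not None
    }


def engagement_counts(projects):
    """Number of distinct members engaged in each project."""
    return {p.name: len(p.members) for p in projects}


def response_rate(returned, sent):
    """Survey response rate as a whole-number percentage."""
    return round(100 * returned / sent)


# Fictional example data; names and dates are placeholders, not network records.
example_projects = [
    Project("pilot-a", "assessment", date(2005, 1, 10), date(2005, 4, 10),
            {"member-1", "member-2", "member-3"}),
    Project("pilot-b", "intervention", date(2005, 3, 1), None,
            {"member-2", "member-4"}),
]

timelines = days_to_completion(example_projects)
engagement = engagement_counts(example_projects)
survey_rate = response_rate(11, 34)  # surveys returned / surveys sent
```

Keeping open projects out of the timeline summary, rather than assigning them a provisional end date, avoids understating how long slower streams take. Comparisons with similar consortia would require agreed definitions of project start and completion, which this sketch does not attempt.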

3.2.8. Review Goals and Timelines Regularly

It is helpful to periodically revisit goals, because, understandably, goals may change over time with changes in the research climate, network composition, and so on. We posit that recognizing when to change course and to reallocate resources is more important than trying to meet all original goals, which may turn out to no longer be attainable or desirable.

Within our network, each stream demonstrated productivity in the form of peer-reviewed publications and other measures (for example, an online distance education course on palliative care research methods that was developed by our Professional and Graduate Training Committee). However, it was not possible to predict which streams would have higher productivity, or when. Feedback from the external review panel indicated that revisiting and revising planned activities, and their timelines for completion, can help to sustain momentum.

3.2.9. Strike a Balance Between Structure and Creativity

The network needs sufficient structure and accountability to assure early wins and continued productivity. However, at the same time, it also requires sufficient openness to unanticipated ideas, collaborations, or leadership. While paying attention to leadership roles and responsibilities, a high-functioning network will be open to innovation from all network members and will focus on opportunities for productivity together.

4. SUMMARY

In establishing and conducting a multi-year, multicentre clinical cancer research network, network members were led to reflect on the factors that contributed most to the achievement of network goals. Although research networks have inherent challenges, they offer the opportunity for geographically separated individuals to work together toward common objectives. We identified several specific factors that seemed to be highly relevant in promoting success. We offer our observations in the hope of fostering further discussion on the successful design and operation of research networks.

5. ACKNOWLEDGMENTS

The authors acknowledge the ongoing support of the Institute of Cancer Research of the Canadian Institutes of Health Research (CIHR), and specifically, the leadership and encouragement of Dr. Judith Bray, Assistant Director of the Cancer Institute. Funding was provided by CIHR New Emerging Team grant PET69772. Dr. Greta Cummings is supported by a New Investigator Award (CIHR) and a Population Health Investigator Award (Alberta Heritage Foundation for Medical Research). Many colleagues contributed substantially to the success of this research team in its early phases, particularly Drs. Kim Fisher and Penny Brasher.

6. CONFLICT OF INTEREST DISCLOSURES

The authors have no financial conflicts of interest to declare.

7. REFERENCES

1. El Ansari W, Maxwell AE, Mikolajczyk RT, Stock C, Naydenova V, Krämer A. Promoting public health: benefits and challenges of a Europeanwide research consortium on student health. Cent Eur J Public Health. 2007;15:58–65. doi: 10.21101/cejph.a3418.

2. Vanderheyden L, Verhoef M, Scott C, Pain K. Evaluability assessment as a tool for research network development: experiences of the complementary and alternative medicine education and research network of Alberta, Canada. Can J Prog Eval. 2006;21:63–82.

3. Bakken S, Lantigua RA, Busacca LV, Bigger JT. Barriers, enablers, and incentives for research participation: a report from the Ambulatory Care Research Network (ACRN). J Am Board Fam Med. 2009;22:436–45. doi: 10.3122/jabfm.2009.04.090017.

4. Greene SM, Hart G, Wagner EH. Measuring and improving performance in multicenter research consortia. J Natl Cancer Inst Monogr. 2005;(35):26–32. doi: 10.1093/jncimonographs/lgi034.

5. Popp JK, L’Heureux LN, Dolinski CM, et al. How do you evaluate a network? A Canadian Child and Youth Health Network experience. Can J Prog Eval. 2005;20:123–50.

6. Clement S, Pickering A, Rowlands G, Thiru K, Candy B, de Lusignan S. Towards a conceptual framework for evaluating primary care research networks. Br J Gen Pract. 2000;50:651–2.

7. Fenton E, Harvey J, Sturt J. Evaluating primary care research networks. Health Serv Manage Res. 2007;20:162–73. doi: 10.1258/095148407781395955.

8. Wagner EH, Greene SM, Hart G, et al. Building a research consortium of large health systems: the Cancer Research Network. J Natl Cancer Inst Monogr. 2005;(35):3–11. doi: 10.1093/jncimonographs/lgi032.

9. Marshall JC, Cook DJ. Investigator-led clinical research consortia: the Canadian Critical Care Trials Group. Crit Care Med. 2009;37(suppl 1):S165–72. doi: 10.1097/CCM.0b013e3181921079.

10. Williams RL, Johnson SB, Greene SM, et al. Signposts along the NIH roadmap for reengineering clinical research: lessons from the Clinical Research Networks initiative. Arch Intern Med. 2008;168:1919–25. doi: 10.1001/archinte.168.17.1919.

11. Kutner JS, Main DS, Westfall JM, Pace W. The practice-based research network as a model for end-of-life care research: challenges and opportunities. Cancer Control. 2005;12:186–95. doi: 10.1177/107327480501200309.

12. Lang JE, Anderson L, LoGerfo J, et al. The Prevention Research Centers Healthy Aging Research Network. Prev Chronic Dis. 2006;3:A17.

13. Wright LL. How to develop and manage a successful research network. Semin Pediatr Surg. 2002;11:175–80. doi: 10.1053/spsu.2002.33740.

14. Willson DF, Dean JM, Newth C, et al. Collaborative Pediatric Critical Care Research Network (CPCCRN). Pediatr Crit Care Med. 2006;7:301–7. doi: 10.1097/01.PCC.0000227106.66902.4F.

15. Green LA, White LL, Barry HC, Nease DE Jr, Hudson BL. Infrastructure requirements for practice-based research networks. Ann Fam Med. 2005;3(suppl 1):S5–11. doi: 10.1370/afm.299.

16. Winstein C, Pate P, Ge T, et al. The physical therapy clinical research network (PTClinResNet): methods, efficacy, and benefits of a rehabilitation research network. Am J Phys Med Rehabil. 2008;87:937–50. doi: 10.1097/PHM.0b013e31816178fc.

17. Griffiths F, Wild A, Harvey J, Fenton E. The productivity of primary care research networks. Br J Gen Pract. 2000;50:913–15.

18. Lasker RD, Weiss ES, Miller R. Partnership synergy: a practical framework for studying and strengthening the collaborative advantage. Milbank Q. 2001;79:179–205. doi: 10.1111/1468-0009.00203.

19. Green LA, Niebauer LJ, Miller RS, Lutz LJ. An analysis of reasons for discontinuing participation in a practice-based research network. Fam Med. 1991;23:447–9.

20. Carey TS, Howard DL, Goldmon M, Roberson JT, Godley PA, Ammerman A. Developing effective interuniversity partnerships and community-based research to address health disparities. Acad Med. 2005;80:1039–45. doi: 10.1097/00001888-200511000-00012.

21. Chokshi DA, Parker M, Kwiatkowski DP. Data sharing and intellectual property in a genomic epidemiology network: policies for large-scale research collaboration. Bull World Health Organ. 2006;84:382–7. doi: 10.2471/BLT.06.029843.

22. Jenerette CM, Funk M, Ruff C, Grey M, Adderley–Kelly B, McCorkle R. Models of inter-institutional collaboration to build research capacity for reducing health disparities. Nurs Outlook. 2008;56:16–24. doi: 10.1016/j.outlook.2007.11.001.

23. Alley J, Greenhaus K. Turning lurkers into learners: increasing participation in your online discussions. Learn Lead Technol. 2007;35:18–21.

24. Nummenmaa M, Nummenmaa L. University students’ emotions, interest and activities in a web-based learning environment. Br J Educ Psychol. 2008;78:163–78. doi: 10.1348/000709907X203733.