Abstract

Objective

To examine how attributes affecting sustainability differ across VHA organizational components and by staff characteristics.

Subjects

Surveys of 870 change team members and 50 staff interviews within the VA’s Mental Health System Redesign initiative.

Methods

A one-way ANOVA with a Tukey post-hoc test examined differences in sustainability by VISN, job classification, and tenure from staff survey data of the Sustainability Index. Qualitative interviews used an iterative process to identify “a priori” and “in vivo” themes. A simple stepwise linear regression explored predictors of sustainability.

Results

Sustainability differed across VISN and staff tenure. Job classification differences existed for: 1) Benefits and Credibility of the change and 2) staff involvement and attitudes toward change. Sustainability barriers were: staff and institutional resistance, and non-supportive leadership. Facilitators were: commitment to veterans, strong leadership, and use of QI Tools. Sustainability predictors were outcomes tracking, regular reporting, and use of PDSA cycles.

Conclusions

Creating homogeneous implementation and sustainability processes across a national health system is difficult. Despite the VA’s best evidence-based implementation efforts, there was significant variance. Locally tailored interventions might better support sustainability than “one-size-fits-all” approaches. Further research is needed to understand how participation in a QI collaborative affects sustainability.

Keywords: Sustainability, Job Classifications, Veterans Administration, Mental Health, Organizational Change

The implementation of an organizational change does not guarantee that it will be sustained. Although most changes are sustained at least briefly,1,2 sustaining a change is often more difficult than implementing it. A few studies indicate that up to 70% of changes are not sustained.3,4 A change that is not sustained directly wastes invested resources, carries the cost of missed opportunities, and affects an organization’s ability to implement change in the future. However, we still have much to learn about the attributes contributing to an organization’s ability to successfully sustain change.

Some organizational attributes that facilitate or impede change sustainability have been identified. These attributes can be separated into factors related to (1) the process of the change, (2) the staff involved in implementing and leading change, and (3) organizational support for the change. Process attributes include the credibility of the change and the likelihood that the change will benefit staff, clients, and other stakeholders.2,5–7 Adaptability of the change is another process attribute: processes must evolve in response to shifts in the organizational environment.2,6,8 If the change is not consistent with the rest of the organizational environment, it is unlikely to be maintained. Finally, a system to effectively and continuously monitor and share the effects of the change must be in place from the onset of the project.9–12

Staff attributes include frontline staff involvement, staff attitudes toward the change process, and support of leadership in the change process. Specifically, a change idea that is generated locally, based on articulated staff needs and supported by an internal champion, enhances staff positive attitudes and involvement in the change process.2,7,13,14 Intrinsic staff motivation to participate in the change increases the quality and likelihood of sustaining that change.15,16 In turn, such participation may provide sufficient evidence for staff to believe in the credibility and benefits of the change. Parker et al.17 classified this quality improvement style as a local participatory approach because it adapts the processes to the organizational environment.

Uninvolved and non-supportive leadership are significant barriers to implementing and sustaining change6,18; while strong, involved and committed leaders—including executive, senior, mid-level, and clinical—can inspire a culture of change.5,9,10,19–21 Supportive leaders create a coherent vision for organizational transformation. They articulate the benefits and credibility of the change, and empower employees to fully participate in the change process by providing training to master newly required skills.22–27 As champions, effective leaders enhance the implementation and sustainability of change by breaking down organizational barriers and aligning changes with the organization’s strategic aims.2,7,23,28 Leaders also allocate organizational resources (e.g., staff, facilities, and equipment) and change policies, procedures, and job descriptions to create an infrastructure to support change sustainability.7,12,29 How these attributes affect sustainability or differ by staff characteristics is not well understood.

The Veterans’ Health Administration (VHA) component of the Department of Veterans’ Affairs (VA) has made remarkable strides in quality and performance over the past fifteen years.30–40 As efforts to improve care and sustain improved performance continue, VHA has supported state-of-the-art implementation studies and efforts under three different initiatives: QUERI1, VERC2, and System Redesign3. The Mental Health System Redesign (MHSR) Collaborative was designed on the IHI Breakthrough Series model adopted by the VHA to employ evidence-based change implementation techniques. This initiative began in May 2008 and ended in June 2009. Its purpose was to “launch, sustain and spread Systems Redesign improvements made in Inpatient and Outpatient processes throughout the care continuum.”4 Participants engaged in quality improvement training that included aim identification, PDSA cycles, change teams, managing resistance, data collection and interpretation, and process mapping. All VA Medical Centers (VAMC) created a national team to select and implement a process improvement project focused on topics such as improving access, managing flow through inpatient and outpatient settings, clinical care (e.g., depression follow-up, homeless access, suicide monitoring, evidence-based psychotherapy), and MH/Primary Care Integration. Teams reported progress through monthly phone calls and at four face-to-face learning collaborative sessions where they received feedback and additional training. Change teams received telephone coaching during the intervention. The learning collaborative model and tools helped teams prepare for and implement change by focusing on effective attributes, including the importance of leadership support and identification of strategies to support improvement.41

National VA-wide systems redesign (SR) initiatives provide an excellent opportunity to understand the key attributes of sustaining implementation across many facilities. The MHSR initiative also framed several important questions about the attributes associated with sustained change. Did this evidence-based, well-planned initiative result in high scores on the Sustainability Index? Did staff perceptions about the impact of process improvement or organizational change team characteristics predict Sustainability Index scores? Was a large organization like VHA able to achieve similar SI scores across its organizational divisions and components of personnel? Could we identify, through a qualitative approach, new variables specific to VA health care settings that should be investigated as predictors of sustainability?

In this research, we used a mixed methods approach to examine how staff perceptions of the factors that affect the sustainability of change differed across Veteran Integrated Service Networks (VISN) and by job function and VA tenure within the VA Mental Health Systems Redesign (MHSR) initiative.

Methods

Study Population

Participation was open to all change team members within the Mental Health System Redesign (MHSR) initiative. A master list of change team members by VA facility (n=870) was created from contact information on required change project reports and through the internal VA mail system. E-mail invitations asked respondents to voluntarily complete a brief 30-item online survey that measured MHSR sustainability efforts in their organization. Follow-up e-mails and phone calls were made to increase the overall survey response rate. Responses from each facility were used to create the composite sustainability score.

University of Wisconsin-Madison (UW) researchers functioned as outside evaluators and conducted 50 semi-structured, qualitative telephone interviews with staff at VISN 2 and VISN 12. These networks provided a wide variation of administrative attributes, as well as geographic and demographic diversity. Staff were randomly selected, voluntarily agreed to participate in an interview, and were assured that neither their identity nor their responses would be shared with anyone at the VA. The in-depth interviews were not taped and explored staff perceptions about participating in and sustaining the MHSR projects.

Outcome Measures

The online survey assessed staff perceptions of sustainability of the change project, level of facility participation in the project, and demographic information. The British National Health Service Sustainability Index (SI) model5, which measures ten factors that affect sustainability, was used to assess sustainability propensity.42 Table 1 provides an overview of the SI dimensions and the measurement intent of each factor within the SI model. The model uses a multi-attribute utility algorithm to estimate the likelihood that a change will be sustained. The SI measures the process, staff, and organizational attributes previously identified. In turn, SI scores are used to guide changes in sustainability efforts. A total SI score of less than 55 suggests a need to develop an action plan to increase the likelihood that change will be sustained.43 While used extensively within the BNHS, the SI’s application to measure sustainability of organizational change within the U.S. health care system has been limited.
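The additive scoring idea described above can be illustrated with a short sketch. The weights below are hypothetical placeholders, not the published SI weights, and the factor keys are shorthand names introduced only for this example; the only value taken from the text is the action-plan threshold of 55.

```python
# Hypothetical weights for the four statements of each factor, ordered from
# the highest-rated statement to the lowest. The real SI uses its own
# factor-specific weights, which are not reproduced here.
HYPOTHETICAL_WEIGHTS = (10.0, 6.0, 3.0, 0.0)

# Shorthand keys for the ten SI factors (names are illustrative only).
SI_FACTORS = (
    "benefits", "credibility", "adaptability", "monitoring",
    "staff_involvement", "staff_attitudes", "senior_leadership",
    "clinical_leadership", "strategic_fit", "infrastructure",
)

# From the text: a total below 55 suggests developing an action plan.
ACTION_PLAN_THRESHOLD = 55


def total_si_score(responses):
    """Sum the weight of the chosen statement (0 = highest) for each factor."""
    missing = [f for f in SI_FACTORS if f not in responses]
    if missing:
        # Totals are only meaningful when all ten factors are answered.
        raise ValueError(f"unanswered factors: {missing}")
    return sum(HYPOTHETICAL_WEIGHTS[responses[f]] for f in SI_FACTORS)


def needs_action_plan(score):
    """Flag totals that fall below the action-plan threshold."""
    return score < ACTION_PLAN_THRESHOLD


# Example respondent: second-level statement everywhere except one factor.
example = {factor: 1 for factor in SI_FACTORS}
example["senior_leadership"] = 0
score = total_si_score(example)
print(score, needs_action_plan(score))
```

Requiring a response for every factor before computing a total mirrors a complete-case approach to scoring.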

Table 1.

Understanding the British National Health Service Sustainability Index Model

| SI Dimension | SI Factor | Measurement Intent of SI Question |
|---|---|---|
| Process | Benefits beyond helping patients | Change improves efficiency and makes jobs easier |
| | Credibility of the Benefits | Evidence supports the benefits of the change, which are obvious to all key stakeholders |
| | Adaptability of improved process | Assesses staff perceptions that the change can continue in the face of changes in staff, leadership, or organization structures |
| | Effectiveness of the System | Examines the presence of a system to continually monitor the impact of change |
| Staff | Staff involvement | Focuses on the engagement of staff at the start of the change process and ensuring that training is received for the newly required skills |
| | Staff attitudes | Seeks to reduce resistance to change through empowerment and belief by staff that the change will be sustained |
| | Senior Leadership Engagement | Effort and responsibility by management to sustain the change; involves staff sharing information about the change process and seeking advice from senior leaders |
| | Clinical leadership engagement | Similar to senior leadership engagement, but the focus is on key clinical leaders |
| Organization | Fit with organization’s strategic aims & culture | Examines prior history in sustaining change and whether improvement goals are consistent with agency goals |
| | Infrastructure for Sustainability | Explores whether resources (staff, facilities, job descriptions, money, etc.) are available to help sustain change |

Demographic questions included gender, race, ethnicity, and information about job function (administration/management, clinical, clerical/reception, technical/data analysis, quality improvement, and other); employment status (full time, part time, contract and other); and facility and VA tenure. Respondents were asked about the organization's participation in and experience with the VA MHSR Initiative and its related sustainability efforts. The frequency of coach calls and change team meetings was measured using a five-item Likert scale (weekly or less, monthly or less, quarterly or less, whenever we could find the time, or do not know). To assess continual outcome tracking, respondents were asked if the change project outcomes were still being tracked (Yes or No). If yes, respondents noted the frequency of change team meetings to review outcomes using the same five-item scale as above. Ongoing use of PDSA Change Cycle or other process improvement techniques, participation on a change team, involvement in a facility or VISN level system redesign project, and regular communication of MHSR results with facility leadership were measured using a three-item Likert scale (Yes, No, or Do not know).

Qualitative interview guides were developed for staff and leadership. The staff interview guide consisted of 28 questions grouped into six categories. Questions focused on change projects, leadership, sustainability efforts, and effect of the change project, demographics, and general comments. The leadership interview guide held 12 questions asking about general impressions and involvement, how project results were shared, resource availability to support change efforts, and the project’s impact. Interviews were anonymous and could be terminated at any time.

Analysis

A mixed methods approach was used to analyze the results. We used a one-way ANOVA with a Tukey post-hoc test to examine differences in sustainability scores across the VISN and by job classification and tenure. The analysis used a simple stepwise linear regression to explore predictor variables for sustainability. Survey questions related to the impact of process improvement on system redesign, facility change team characteristics, and respondent demographics were included in an exploratory analysis to see which factors predicted the overall sustainability propensity score. The analysis for the overall SI score and the sub-scales of process, staff, and organization was limited to those respondents who answered all ten questions in the sustainability model.
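To make the group comparison concrete, the following is a minimal sketch of the F statistic behind a one-way ANOVA, written with the standard library only. The function name and the scores are hypothetical and are not study data; the sketch does not compute a p-value or the Tukey post-hoc comparisons, which would normally come from a statistics package.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric groups."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    # Between-group variation: how far each group mean sits from the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group variation: spread of observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical total SI scores for three job categories (illustration only).
scores_by_job = {
    "administration/management": [66.0, 70.5, 61.2, 68.3],
    "clinician": [55.1, 59.8, 57.4, 60.2],
    "clinician administrator": [58.0, 61.5, 57.9, 59.6],
}
f_stat, df_b, df_w = one_way_anova_f(list(scores_by_job.values()))
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

A larger F means the group means differ more than the within-group spread would suggest; the significance level then depends on the F distribution with the two degrees of freedom shown.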

The qualitative analysis of the interviews combined a content analysis approach with qualitative inquiry, allowing us to discover and quantify respondents’ experiences and perceptions. We used an iterative process to identify “a priori” themes based on the SI and to create “in vivo” themes as they emerged during the coding.44 Two analysts coded each interview independently and then discussed variations until consensus was reached. After coding all interviews, we used the constant comparative method to combine similar themes with limited data under more general themes.44 The analysis was completed using N-VIVO-7, which allowed us to count the number of respondents who addressed each theme and the density of each theme (number of overall mentions). Theme density is a valid proxy for a theme’s level of importance.45,46 The final step in the analysis was to run N-VIVO code reports (participant N and density) of salient themes and coding matrices related to significant survey findings. For instance, successive reports explored the intersection of position (leadership vs. staff or clinical vs. administrative) with perceived: (1) processes for implementation, (2) outcomes and benefits of the change, (3) positive and negative supports for change, (4) the role of staff resistance, and (5) the role of leadership. We also ran the same matrices for the intersections of these factors with tenure at the VA. Descriptive statistics were then used to analyze the results.
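The two counts used in the code reports, participant N and density, can be sketched as follows. This is an illustration only: the coded segments and the function name are hypothetical, and it does not reproduce N-VIVO's own reporting.

```python
from collections import Counter

# Hypothetical coded segments: one (respondent_id, theme) pair per coded mention.
coded_segments = [
    ("R01", "staff resistance"),
    ("R01", "staff resistance"),
    ("R01", "leadership support"),
    ("R02", "staff resistance"),
    ("R03", "leadership support"),
    ("R03", "use of QI tools"),
]


def theme_report(segments):
    """Return {theme: (participant_n, density)} for coded interview segments."""
    # Density: every mention counts, including repeats by the same respondent.
    density = Counter(theme for _, theme in segments)
    # Participant N: each respondent counts once per theme, so deduplicate pairs.
    participants = Counter(theme for _, theme in set(segments))
    return {theme: (participants[theme], density[theme]) for theme in density}


report = theme_report(coded_segments)
for theme, (n, mentions) in sorted(report.items()):
    print(f"{theme}: participant N = {n}, density = {mentions}")
```

Keeping the two counts separate distinguishes a theme raised briefly by many respondents from one raised repeatedly by a few.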

Anonymity was promised to all persons completing the survey or who were interviewed. No identifiable data or information was shared with any VA employees or officials. The UW-Madison Health Sciences Institutional Review Board approved the research as exempt.

Key Findings

Participant Characteristics

Surveys were sent to 870 VA personnel, resulting in 427 (49.1%) responses that were further broken down across VISNs and by job classification and tenure. Staff demographics are in Table 2. Respondents were categorized into one of four job classifications [administration/management (n=151, 35.6%), clinical administrator/manager (n=84, 19.8%), clinician (n=137, 32.3%), and other non-clinical or administrative staff (n=51, 12.0%)] and one of four VA tenure categories [less than 5 years (n=104), 5 to 12 years (n=88), 12 to 21 years (n=90), and greater than 21 years (n=88)]. On average, respondents had worked for the VA for approximately 14 years. The majority of the respondents were female and worked full-time at the VA. Demographics from the qualitative interviews are also in Table 2.

Table 2.

Respondent Demographics

| Employee Demographics | % (N) | Mean | SD |
|---|---|---|---|
| Sex | | | |
| Male | 21.7 (92) | | |
| Female | 48.3 (205) | | |
| Refused/No Response | 29.9 (127) | | |
| Race | | | |
| American Indian/Alaska Native | 0.9 (4) | | |
| Asian | 3.1 (13) | | |
| Black or African American | 9.7 (41) | | |
| More than one Race | 3.1 (13) | | |
| Native Hawaiian or Other Pacific Islander | 0.9 (4) | | |
| White | 47.2 (200) | | |
| Refused to Answer | 11.6 (49) | | |
| No Response | 23.6 (100) | | |
| Average Tenure in Years | | | |
| Facility Tenure | | 12.0 | 9.8 |
| VA Tenure (Staff Survey) | | 13.7 | 10.1 |
| Job Classification | | | |
| Administration/Management | 35.6 (151) | | |
| Clinician Only¹ | 32.3 (137) | | |
| Clinical Administrator/Management² | 19.8 (84) | | |
| Other Non Clinical or Administrative Staff³ | 12.0 (51) | | |
| Qualitative Interview Demographics | | | |
| VA Tenure in Years | | | |
| 0 to 10 Years | 22 (11) | | |
| 11 to 20 Years | 28 (14) | | |
| 20 or more Years | 24 (12) | | |
| No Response | 26 (13) | | |
| Job Classification | | | |
| Senior Leadership | 24 (12) | | |
| Line Staff⁴ | 76 (38) | | |

¹ Includes clinicians, RNs, occupational therapists, and social workers

² Includes respondents who are both clinicians and managers

³ Includes clerical/reception, quality improvement, information technology, and other support staff

⁴ Staff positions include 25 clinical (including 12 nurses), 15 managers, and 2 in both categories

Results by VISN

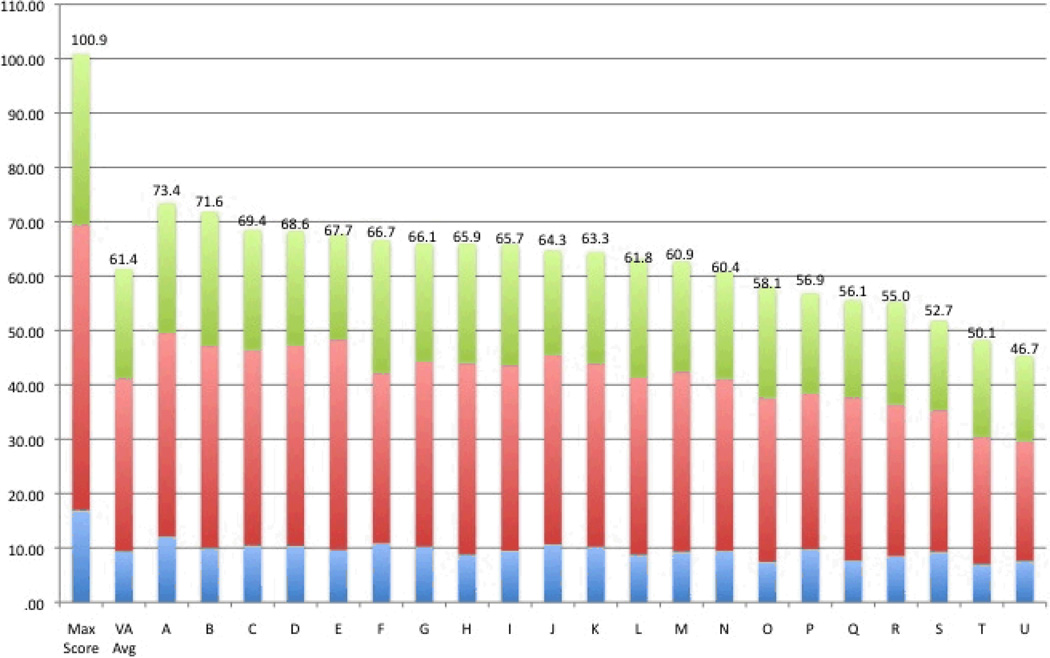

The overall average sustainability score across all VISNs was 61.4 (range: 46.7 to 73.4) out of a total attainable score of 100.9 points (see Figure 1). The difference in the total sustainability scores was significant (p=0.047) across the VISNs. Four of the individual sustainability questions differed, at least marginally, across VISNs: 1) adaptability of the change process (p=0.017), 2) fit with the organizational strategic plan (p=0.061), 3) senior leadership engagement (p=0.073), and 4) credibility of the benefits (p=0.099). When exploring differences by sustainability categories, we found marginally significant differences across VISNs in the process of sustaining change (p=0.069) and in perceptions of how staff were engaged (p=0.057).

Figure 1.

Variation in Total Sustainability Score by VISN

Influence of Tenure

We then examined how job classification and VA tenure might influence results. Only staff involvement (p=0.086) showed a marginally significant difference across tenure categories. Staff involvement measures the perception that staff were involved in the change process from the start and were adequately trained to sustain the new process. Using the question’s maximum score of 11.5, we computed the average response by tenure category as a percent of maximum to examine differences. The results were as follows: less than 5 years (μ=6.32, 55.0%), 5 to 12 years (μ=5.61, 48.8%), 12 to 21 years (μ=6.98, 60.7%), and greater than 21 years (μ=7.02, 61.0%). This appears to indicate that long-term VA staff (tenure greater than 12 years) perceived that they were slightly more involved in the change process from initiation. Results may be driven by other factors within the VA, including the change leader and the strength of the change team’s social interaction.
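The percent-of-maximum figures can be checked with a few lines of arithmetic. The sketch below uses only the means and the maximum score of 11.5 reported in the text; the variable names are introduced for illustration.

```python
# Maximum attainable score for the staff involvement question (from the text).
MAX_STAFF_INVOLVEMENT = 11.5

# Mean staff involvement score by VA tenure category (from the text).
mean_by_tenure = {
    "less than 5 years": 6.32,
    "5 to 12 years": 5.61,
    "12 to 21 years": 6.98,
    "greater than 21 years": 7.02,
}

# Express each mean as a percentage of the maximum, rounded to one decimal.
percent_of_max = {
    tenure: round(100 * m / MAX_STAFF_INVOLVEMENT, 1)
    for tenure, m in mean_by_tenure.items()
}

for tenure, pct in percent_of_max.items():
    print(f"{tenure}: {pct}% of maximum")
```

Scaling by the maximum puts the tenure groups on a common 0 to 100 scale, which makes the gap between the lowest group (5 to 12 years) and the two longest-tenure groups easier to see.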

Differences by Job Classification

A significant difference in perceptions of sustainability existed across staff from different job classifications. Table 3 summarizes the results by job category. Five individual sustainability questions were significantly different across job classifications: Benefits (p=0.001), Credibility (p=0.009), Staff Involvement (p=0.024), Staff Attitudes (p=0.011), and Infrastructure (p=0.018). The adaptability of the change showed a marginally significant difference by job class (p=0.060). When the questions were aggregated into sub-categories and an overall score, job classifications differed significantly for the Process (p=0.029) and Organization (p=0.050) categories of SI scores and marginally for the total Sustainability Score (p=0.075).

Table 3.

Differences in Sustainability Scores by Job Category

The N of Cases, F, and p value columns report the overall ANOVA results; the remaining columns report mean sustainability scores by job category.

| Sustainability Index Factor | N of Cases | F | p value | Administration/Management | Clinician | Clinician Administrator/Management | Other Non-Clinical or Admin Staff | Overall |
|---|---|---|---|---|---|---|---|---|
| Benefits beyond helping patients | 378 | 5.57 | 0.001 | 5.61 | 4.44#*, ^ | 4.33*, ^ | 5.79 | 4.98 |
| Credibility of the Benefits | 373 | 3.94 | 0.009 | 5.94 | 4.93* | 4.89* | 5.72 | 5.36 |
| Adaptability of Improved Process | 374 | 2.49 | 0.060 | 5.22 | 4.50# | 5.14 | 5.11 | 4.96 |
| Effectiveness of the System | 370 | 0.87 | 0.456 | 4.97 | 4.57 | 4.97 | 4.93 | 4.83 |
| Staff Involvement and Training | 373 | 3.19 | 0.024 | 7.17 | 5.58* | 6.69 | 6.72 | 6.51 |
| Staff Attitudes | 373 | 3.77 | 0.011 | 5.76 | 4.37* | 4.32# | 5.96 | 5.03 |
| Senior Leadership Engagement | 367 | 1.06 | 0.365 | 10.21 | 8.97 | 9.38 | 9.21 | 9.53 |
| Clinical Leadership Engagement | 368 | 0.25 | 0.858 | 11.08 | 10.59 | 11.03 | 10.59 | 10.86 |
| Fit with the organization aims and culture | 364 | 1.52 | 0.208 | 4.83 | 4.36 | 4.18 | 4.64 | 4.52 |
| Infrastructure for Sustainability | 369 | 3.38 | 0.018 | 5.31 | 4.50 | 4.21#, ^ | 5.80 | 4.87 |
| Process SI Score | 346 | 3.03 | 0.029 | 21.75 | 18.93* | 19.27 | 21.38 | 20.24 |
| Staff SI Score | 346 | 1.645 | 0.153 | 34.22 | 29.66 | 31.30 | 31.07 | 31.76 |
| Organization SI Score | 346 | 2.63 | 0.050 | 10.10 | 8.99 | 8.33# | 10.25 | 9.36 |
| Total Sustainability Score | 346 | 2.32 | 0.075 | 66.07 | 57.57# | 58.91 | 62.70 | 61.36 |

*As compared to the administrative/management mean, the difference is significant at p < 0.05

#As compared to the administrative/management mean, the difference is significant at p < 0.10

^As compared to the other non-clinical or admin staff mean, the difference is significant at p < 0.10

Clinicians’ perspectives of sustainability most often differed from those of managers. In each instance, managers’ perceptions were higher than clinicians’. Significant differences included benefits beyond helping patients (Δ = 1.17, p = 0.011), credibility of the benefits (Δ = 1.01, p = 0.020), staff involvement (Δ = 1.59, p = 0.014), staff attitudes (Δ = 1.39, p = 0.042), and the process SI sub-score (Δ = 2.83, p = 0.038). Clinicians’ overall SI score (Δ = 8.50, p = 0.067) and perceptions about adaptability (Δ = 0.72, p = 0.057) were also marginally lower than managers’. Clinicians’ opinions about the benefits of the change were lower than those of other non-clinical or administrative staff (Δ = −1.34, p = 0.062). In each case, the clinicians, who are on the front line implementing change, were less sure that changes would be sustained than managers or other support staff.

Clinician administrators also had significantly lower scores than other managers. They were less sure that the change has benefits beyond helping patients (Δ = 1.29, p = 0.014) and less convinced of the credibility of the benefits (Δ = 1.06, p = 0.037) than others in leadership. Their attitude toward change was also marginally lower (Δ = 1.44, p = 0.075). Clinician administrators were skeptical that the infrastructure for sustainability (Δ = 1.11, p = 0.095) and the resources for change, as captured in the organization SI category score (Δ = 1.76, p = 0.069), were present in the organization. Clinician administrators also rated the benefits beyond helping patients (Δ = 1.46, p = 0.054) and the infrastructure for sustainability (Δ = 1.60, p = 0.062) lower than other support staff did. Even though clinician administrators hold a dual role, these differences reinforce the premise that clinicians have lower opinions about sustainability propensity in the organization than their peers in leadership or other support staff.

These perception differences warranted further exploration. The sustainability index allows a respondent to choose from one of four statements related to each of the ten factors. Each statement has an associated weight used in the calculation of the overall SI score. We explored the differences by examining the response distribution for each factor across the four job categories (Table 4). The results show significant differences in the responses for factors related to benefits (χ² = 33.19, p < 0.001) and staff attitudes (χ² = 24.99, p = 0.003). For each of these factors, administrators were more likely to assign the highest possible rating; however, clinicians and clinician administrators more often chose the lowest rating. The results also show a marginally significant difference in the response distribution for the credibility of the benefits and staff involvement factors. Although it was not possible to discern why staff members chose a particular answer, the qualitative interviews provide some insights into why these differences in perceptions exist.

Table 4.

Difference in Staff Responses to the Sustainability Index Factors by Job Category

The Responses columns report the number of responses by job category; the Percent columns report the percent by job category.

| SI Factor / Job Category | Responses: Highest Level Rating | Responses: Second Level Rating | Responses: Third Level Rating | Responses: Lowest Level Rating (0) | Total | Percent: Highest Level Rating | Percent: Second Level Rating | Percent: Third Level Rating | Percent: Lowest Level Rating (0) | Chi-Square | P Value |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Benefits beyond helping patients | | | | | | | | | | 33.19 | 0.000 |
| Administration Management | 51 | 66 | 1 | 17 | 135 | 37.8% | 48.9% | 0.7% | 12.6% | | |
| Clinician Only | 33 | 50 | 5 | 34 | 122 | 27.0% | 41.0% | 4.1% | 27.9% | | |
| Clinician Administrator | 16 | 44 | 0 | 20 | 80 | 20.0% | 55.0% | 0.0% | 25.0% | | |
| Non Clinical or Admin Staff | 18 | 15 | 4 | 5 | 42 | 42.9% | 35.7% | 9.5% | 11.9% | | |
| Credibility of the Benefits | | | | | | | | | | 15.76 | 0.072 |
| Administration Management | 32 | 65 | 27 | 8 | 132 | 24.2% | 49.2% | 20.5% | 6.1% | | |
| Clinician Only | 19 | 49 | 37 | 16 | 121 | 15.7% | 40.5% | 30.6% | 13.2% | | |
| Clinician Administrator | 14 | 31 | 22 | 13 | 80 | 17.5% | 38.8% | 27.5% | 16.3% | | |
| Non Clinical or Admin Staff | 13 | 14 | 9 | 5 | 41 | 31.7% | 34.1% | 22.0% | 12.2% | | |
| Adaptability of Improved Process | | | | | | | | | | 10.25 | 0.330 |
| Administration Management | 75 | 44 | 8 | 6 | 133 | 56.4% | 33.1% | 6.0% | 4.5% | | |
| Clinician Only | 53 | 39 | 17 | 12 | 121 | 43.8% | 32.2% | 14.0% | 9.9% | | |
| Clinician Administrator | 44 | 24 | 7 | 4 | 79 | 55.7% | 30.4% | 8.9% | 5.1% | | |
| Non Clinical or Admin Staff | 23 | 13 | 4 | 2 | 42 | 54.8% | 31.0% | 9.5% | 4.8% | | |
| Effectiveness of the System | | | | | | | | | | 6.03 | 0.737 |
| Administration Management | 75 | 31 | 17 | 7 | 130 | 57.7% | 23.8% | 13.1% | 5.4% | | |
| Clinician Only | 61 | 24 | 25 | 10 | 120 | 50.8% | 20.0% | 20.8% | 8.3% | | |
| Clinician Administrator | 46 | 16 | 13 | 4 | 79 | 58.2% | 20.3% | 16.5% | 5.1% | | |
| Non Clinical or Admin Staff | 23 | 11 | 7 | 1 | 42 | 54.8% | 26.2% | 16.7% | 2.4% | | |
| Staff Involvement and Training | | | | | | | | | | 16.15 | 0.064 |
| Administration Management | 53 | 38 | 25 | 17 | 133 | 39.8% | 28.6% | 18.8% | 12.8% | | |
| Clinician Only | 32 | 28 | 26 | 34 | 120 | 26.7% | 23.3% | 21.7% | 28.3% | | |
| Clinician Administrator | 30 | 22 | 12 | 15 | 79 | 38.0% | 27.8% | 15.2% | 19.0% | | |
| Non Clinical or Admin Staff | 15 | 7 | 12 | 8 | 42 | 35.7% | 16.7% | 28.6% | 19.0% | | |
| Staff Attitudes | | | | | | | | | | 24.99 | 0.003 |
| Administration Management | 40 | 35 | 29 | 29 | 133 | 30.1% | 26.3% | 21.8% | 21.8% | | |
| Clinician Only | 30 | 19 | 20 | 52 | 121 | 24.8% | 15.7% | 16.5% | 43.0% | | |
| Clinician Administrator | 19 | 16 | 10 | 34 | 79 | 24.1% | 20.3% | 12.7% | 43.0% | | |
| Non Clinical or Admin Staff | 12 | 15 | 7 | 7 | 41 | 29.3% | 36.6% | 17.1% | 17.1% | | |
| Senior Leadership Engagement | | | | | | | | | | 8.09 | 0.525 |
| Administration Management | 69 | 24 | 27 | 11 | 131 | 52.7% | 18.3% | 20.6% | 8.4% | | |
| Clinician Only | 52 | 22 | 25 | 19 | 118 | 44.1% | 18.6% | 21.2% | 16.1% | | |
| Clinician Administrator | 40 | 9 | 15 | 15 | 79 | 50.6% | 11.4% | 19.0% | 19.0% | | |
| Non Clinical or Admin Staff | 19 | 7 | 7 | 7 | 40 | 47.5% | 17.5% | 17.5% | 17.5% | | |
| Clinical Leadership Engagement | | | | | | | | | | 4.33 | 0.888 |
| Administration Management | 78 | 19 | 30 | 5 | 132 | 59.1% | 14.4% | 22.7% | 3.8% | | |
| Clinician Only | 67 | 20 | 22 | 10 | 119 | 56.3% | 16.8% | 18.5% | 8.4% | | |
| Clinician Administrator | 47 | 10 | 16 | 5 | 78 | 60.3% | 12.8% | 20.5% | 6.4% | | |
| Non Clinical or Admin Staff | 23 | 6 | 7 | 4 | 40 | 57.5% | 15.0% | 17.5% | 10.0% | | |
| Fit with the organization aims and culture | | | | | | | | | | 9.13 | 0.426 |
| Administration Management | 57 | 48 | 17 | 8 | 130 | 43.8% | 36.9% | 13.1% | 6.2% | | |
| Clinician Only | 42 | 45 | 17 | 13 | 117 | 35.9% | 38.5% | 14.5% | 11.1% | | |
| Clinician Administrator | 27 | 23 | 16 | 12 | 78 | 34.6% | 29.5% | 20.5% | 15.4% | | |
| Non Clinical or Admin Staff | 16 | 15 | 6 | 3 | 40 | 40.0% | 37.5% | 15.0% | 7.5% | | |
| Infrastructure for Sustainability | | | | | | | | | | 14.35 | 0.110 |
| Administration Management | 44 | 27 | 44 | 15 | 130 | 33.8% | 20.8% | 33.8% | 11.5% | | |
| Clinician Only | 29 | 21 | 49 | 20 | 119 | 24.4% | 17.6% | 41.2% | 16.8% | | |
| Clinician Administrator | 16 | 14 | 35 | 14 | 79 | 20.3% | 17.7% | 44.3% | 17.7% | | |
| Non Clinical or Admin Staff | 19 | 6 | 10 | 7 | 42 | 45.2% | 14.3% | 23.8% | 16.7% | | |
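As an illustration of how the response distributions in Table 4 relate to the reported test statistics, the sketch below computes a Pearson chi-square statistic of independence from the Benefits beyond helping patients counts. It assumes the reported value came from a standard test of independence on the 4 × 4 table of job category by rating level; the function name is introduced for this example, and no p-value is computed.

```python
# Response counts for "Benefits beyond helping patients" from Table 4.
# Columns: highest, second, third, and lowest level rating.
observed = {
    "Administration Management": [51, 66, 1, 17],
    "Clinician Only": [33, 50, 5, 34],
    "Clinician Administrator": [16, 44, 0, 20],
    "Non Clinical or Admin Staff": [18, 15, 4, 5],
}


def chi_square_independence(table):
    """Return (statistic, degrees_of_freedom) for a rows-by-columns count table."""
    rows = list(table.values())
    row_totals = [sum(r) for r in rows]
    col_totals = [sum(c) for c in zip(*rows)]
    grand_total = sum(row_totals)
    stat = 0.0
    for r, row in enumerate(rows):
        for c, obs in enumerate(row):
            # Expected count if job category and rating were independent.
            expected = row_totals[r] * col_totals[c] / grand_total
            stat += (obs - expected) ** 2 / expected
    df = (len(rows) - 1) * (len(col_totals) - 1)
    return stat, df


stat, df = chi_square_independence(observed)
print(f"chi-square = {stat:.2f}, df = {df}")
```

Under that assumption the statistic should land close to the 33.19 reported for this factor, with 9 degrees of freedom. Several expected counts in the third-level column are small, so the chi-square approximation should be read with some caution.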

Qualitative Interviews

Table 5 summarizes the qualitative interviews into five major themes: initial outcomes and sustainability, impact of the change process, problem solving strategies, perspectives on leadership, and thoughts about the change process. Informants reported more positive than negative effects of the change process. Veterans were the greatest beneficiaries. “That’s our concept…the veteran decides what and where he wants to go…. It’s so wonderful that veterans are getting a say in their healthcare.” Staff benefited personally and professionally. “The impact on me – was stimulating, good to have a break from the clinical, meeting others.” Finally, the organization also benefited from the change initiative in “team spirit – and the unit has been positively affected, [as have the] facility measures;” and in growing the organization’s skills, “Team members were empowered with idea making. We used skills of the team and took them a step further.”

Table 5.

Results from the Qualitative Interviews: Staff Perceptions about Change

| | N of Respondents | % of Total |
|---|---|---|
| Initial Outcomes and Sustainability (N = 37) | | |
| Positive Results | 27 | 73% |
| Negative Results | 4 | 11% |
| Mixed Results | 6 | 16% |
| Impact of the Change Process (N = 37) | | |
| Benefits to: | | |
| Veterans | 26 | 70% |
| Staff | 15 | 41% |
| Organization | 14 | 38% |
| Self | 15 | 41% |
| Negative Consequences for: | | |
| Veterans | 1 | 3% |
| Staff | 5 | 14% |
| Organization | 1 | 3% |
| Problem Solving Strategies (N = 46) | | |
| Use of Quality Improvement Tools | 35 | 76% |
| Data and Evidences | 22 | 48% |
| PDSA | 18 | 39% |
| Flowcharting | 19 | 41% |
| Strong Communication/Collaboration | 28 | 61% |
| Institutional-Structural Changes | 28 | 61% |
| Positive Leadership | 21 | 46% |
| Learning Organization | 17 | 37% |
| Perspective on Leadership* (N = 50) | | |
| Involved, supportive, or inspiring | 30 | 60% |
| Uninvolved or unaware, micromanaging, and/or not supportive | 21 | 42% |
| Thoughts about the Change Process (N = 50) | | |
| Embedded staff resistance (others resisted change) | 26 | 52% |
| Supported and contributed to the change project | 20 | 40% |
| Change fatigue, top down approach or skepticism challenged implementation and sustainability | 18 | 36% |
| I resisted change | 4 | 8% |
| Skeptical of top-down, but favored locally driven, change efforts | 3 | 6% |

*One respondent expressed both positive and negative perspectives.

Respondents (n = 46) attributed the success of their system redesign project to problem-solving strategies (e.g., effective communication, data, PDSA, or flowcharting). Communication creates a shared mission: “You must get buy-in: Everyone must understand why you’re doing it, the value of the project. You must relate the project to them.” It is also important to keep staff engaged in multiple ways, such as at lunch and during staff meetings: “we went over what we’re doing and sent e-mails to inform everyone about what we were doing.” Modifications to job descriptions and training support an infrastructure for change: “We taught new staff and built change into people’s jobs.” Other quality improvement tools (PDSA and flowcharting) drove the change process: “We do performance improvement planning very well. Most know of the PDSA. With the systems redesign, the flow process identifies the gaps and the follow-up …[and] had us look at the redesign [process].”

Despite higher levels of success than failure, respondents talked more about factors challenging rather than facilitating sustainability (N=30, 60% vs. N=22, 44%). Staff resistance and low leadership support were the most cited implementation challenges. Staff resistance (of others) was described as embedded in a culture of, “It’s always been done this way,” and a “Mind-numbing standstill – huge resistance to change, things stay the same.” Administrative staff and leaders were more likely to describe greater resistance among clinicians, “They want more control over their schedule, not to have their grids open for others to fill.” Clinicians acknowledged that their initial resistance relaxed, “He wanted [our] open slots so they could put patients in those slots. I opposed that, while I valued the idea,” and “…[we], took our time to address the issues.”

Low leadership support was cited as a major barrier to change (40% of respondents), “Often senior management goals are different than front line staff.” Respondents were far more likely to identify strongly involved leadership (60%) as a contributor to change project success, “He monitored [activities], [and] shared information at manager meetings so they were aware of the issues we were addressing and could move thru the continuum.” Despite these differences, the respondents were divided in their general perceptions about leadership support. Compared to administrators, clinicians (48% vs. 40%) were more likely to cite leadership’s lack of involvement as a challenge to sustainability. These findings are consistent with survey analyses. Unlike the surveys, the interview data did not show any differences by tenure at the VA.

Exploratory Analysis of the SI Data

The exploratory analysis found that three model variables were significant predictors of sustainability. The model intercept for the sustainability score is 5.71 and the adjusted R-square for the model is 0.32 (F=32.69, p<0.001). The predictors are: 1) tracking outcomes over time (β=10.74, p<0.001); 2) regular reporting of system redesign results to leadership (β=9.59, p<0.001); and 3) ongoing use of PDSA Change Cycles or other rapid performance improvement techniques to improve the agency (β=6.67, p<0.001). Persons answering Yes to all three questions have a higher sustainability score than those who had mixed responses. The administrative job classification was also in the final model (β=2.21, p=0.436). The results appear to support earlier findings that organizational leaders have a stronger belief that change will be sustained.
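To make the reported model concrete, the sketch below applies the intercept and coefficients from the paragraph above to a single respondent. It assumes the coefficients are unstandardized and that each predictor is coded 1 for Yes and 0 for No; neither the coding nor the variable names come from the source, and the function is hypothetical.

```python
# Intercept and coefficients as reported in the exploratory analysis.
# Assumption: each predictor is a yes/no item coded 1 (Yes) or 0 (No) and the
# coefficients are unstandardized. The keys are illustrative names only.
INTERCEPT = 5.71
COEFFICIENTS = {
    "tracks_outcomes_over_time": 10.74,
    "reports_results_to_leadership": 9.59,
    "uses_pdsa_or_rapid_improvement": 6.67,
    "administrative_job_classification": 2.21,  # in the model, p=0.436
}


def predicted_sustainability_score(**answers):
    """Predicted score for one respondent given True/False answers."""
    unknown = set(answers) - set(COEFFICIENTS)
    if unknown:
        raise KeyError(f"Unknown predictors: {sorted(unknown)}")
    # Add the coefficient of every predictor answered Yes to the intercept.
    return INTERCEPT + sum(
        COEFFICIENTS[name] for name, yes in answers.items() if yes
    )


all_yes = predicted_sustainability_score(
    tracks_outcomes_over_time=True,
    reports_results_to_leadership=True,
    uses_pdsa_or_rapid_improvement=True,
)
mixed = predicted_sustainability_score(
    tracks_outcomes_over_time=True,
    reports_results_to_leadership=False,
    uses_pdsa_or_rapid_improvement=False,
)
print(f"All three Yes: {all_yes:.2f}")
print(f"Outcome tracking only: {mixed:.2f}")
```

Under these assumptions, a respondent answering Yes to all three items has a predicted score of 5.71 + 10.74 + 9.59 + 6.67 = 32.71, compared with 16.45 for a respondent who reports outcome tracking only.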

Discussion

VHA is well on its way to employing evidence-based strategies to implement change.12,47 The time is ripe to adopt a systematic approach to understand the factors that aid or impede sustaining these change initiatives. The multi-attribute British National Health Service Sustainability Index (SI) revealed significant variance in scores across VHA regional networks (VISNs) for a national VA mental health system redesign initiative. Despite a standardized, expert-led implementation, the variance in the sustainability scores signaled significant heterogeneity among the VISNs’ approaches to implementing and sustaining change. These cross-VISN differences suggest opportunities to improve future change initiatives. For example, the British National Health Service uses a specific facility’s SI results to select and tailor interventions for its specific needs (e.g., staff attitudes). Likewise, this strategy could be used to tailor interventions for a specific VA facility or VISN.

Other studies have identified attributes associated with sustainability,2,7,10,23 but this appears to be the first study to explore variance in sustainability by staff characteristics. Our analysis demonstrates that staff perceptions vary not only across organizations but also by job category and tenure. Differences in SI scores by job function provide insights into intra-organizational factors that affect sustainability. We also discovered different perceptions about leadership support for the change process. Clinicians or clinical managers were more likely than administrators or other non-support staff to rate attributes associated with sustainability lower. These results may indicate that clinicians were not actively aware of the changes or were not offered opportunities to participate in the change process within their agency and, as a result, do not believe in the benefits of the change. For example, it is a leadership responsibility to help staff understand that everyone benefits from the change process.48 When combined with findings from the qualitative analysis, our results suggest that tailoring approaches to change initiatives for different groups might be more effective in engaging their support. Disagreement among VA staff about sustainability supports the theory that efforts to implement change should address differences across the four levels of change (e.g., individual vs. group/team) within organizations and may inform future efforts to sustain change.49

A learning collaborative initiative that engages and empowers staff at the local level might be a promising approach to tailoring future initiatives.50 Engaging staff at the local level might increase participation, ownership, and support for the change process. This local participatory approach fosters buy-in, fits the change to the local environment, focuses implementation efforts, and rewards staff, all of which are key attributes of sustainability.16,17 These efforts represent a critical component of quality improvement teams and a learning collaborative51,52 and are key to sustaining change.53

Research on the useful features of a learning collaborative and their relationship to improvement supports findings from the exploratory analysis.51 For example, participants were more likely to find the use of PDSA cycles helpful. The use of PDSA was higher for participants who improved, although the difference was not statistically significant. However, the monthly exchange of reports (i.e., regular MHSR leadership reports) was higher in organizations showing significant improvement.51 In light of this research, our exploratory analysis suggests that regular reports might enhance implementation and sustainability of quality improvement projects.

Future research should explore how specific interventions (e.g., sustainability planning) affect staff perceptions about the change process and its sustainability.7,15,29 Ongoing efforts to enhance personal growth or individual commitment might overcome indifferent leadership and staff resistance and help contribute to a culture of change that supports sustainability in future system redesign initiatives.49

This study had several limitations. Staff perceptions about sustainability were captured at the end of the Mental Health System Redesign initiative and were not measured over time. The retrospective reflection on an organization’s propensity to sustain change may not be consistent with actual sustainability of the changes. Completion of the survey after the intervention may not account for changes in staff perceptions about sustainability over time. While 49% of staff responded, the response rate varied across VISNs. This degree of variation may have influenced the VISN-specific results. The qualitative interviews were conducted in only two of the twenty-three VISNs. While the sample included managers and staff, the issues and concerns raised are relevant to the environment within these two VISNs and may not reflect efforts to sustain change in another VISN. Our findings, based on surveys and interviews that were non-identifiable by VA managers and leaders, might be different had they been shared with immediate supervisors and managers.

Conclusion

Our results highlight the difficulty in creating a homogeneous implementation and sustainability process across a national health system. Despite the VHA’s best evidence-based implementation efforts, the significant variance must be understood and addressed in future projects. Particularly interesting is whether the SI could be used to diagnose and address sustainability problems in real time. While these results are informative, we still know very little about how a readiness to sustain change is related to actual sustainability of improvement or how participation in a quality improvement learning collaborative influences staff perceptions about and longitudinal changes in sustainability propensity within organizations—or ultimately in how changes are sustained in a healthcare system that must address evolving challenges.

Acknowledgements

We are indebted to Anna Wheelock for her hard work and dedication to this study. We are grateful to JoAnn Kirchner, PhD, Director of the Mental Health QUERI, Little Rock VAMC, and Mary Schonn, PhD, for their general assistance and support. We would like to thank Andrea Gianopoulos for her editorial assistance. We thank Mary Schonn, PhD, Transformation Lead, Improving Veterans Mental Health, for her comments on an earlier version of the manuscript. We also appreciate the many employees of the Veterans Administration who took the time to participate in this study.

Funding/Support: This material is based upon work supported by the Department of Veterans Affairs, Veterans Health Administration, Office of Research and Development, Mental Health QUERI (RRP 09-161). Dr Ford’s work was also supported in part by grant 5 R01 DA020832 from the National Institute on Drug Abuse.

Footnotes

"The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of the Department of Veterans Affairs or the United States government."

Mental Health Systems Redesign (http://www.hsrd.research.va.gov/for_researchers/cyber_seminars/archives/qip-052609.pdf)

Veteran Engineering Research Center (http://www.queri.research.va.gov/meetings/verc/Woodbridge.ppt)

British National Health Service Sustainability Tool (http://www.institute.nhs.uk/sustainability_model/introduction/find_out_more_about_the_model.html)

Contributor Information

James H. Ford, II, Assistant Scientist, Center for Health Enhancement System Studies, University of Wisconsin – Madison.

Dean Krahn, Chief, Mental Health Service, William S. Middleton Memorial Veterans Hospital, Madison, Wisconsin; Professor (CHS), Department of Psychiatry, University of Wisconsin, Madison, WI.

Meg Wise, Associate Scientist, Center for Health Enhancement System Studies, University of Wisconsin – Madison.

Karen Anderson Oliver, Health Systems Specialist, William S. Middleton Memorial Veterans Hospital, Madison, Wisconsin.

References

- 1. Beery WL, Senter S, Cheadle A, et al. Evaluating the legacy of community health initiatives. Am J Eval. 2005;26(2):150–165.

- 2. Scheirer MA. Is sustainability possible? A review and commentary on empirical studies of program sustainability. Am J Eval. 2005;26(3):320–347.

- 3. Beer M, Nohria N. Cracking the code of change. Harv Bus Rev. 2000 May–June;:15–23.

- 4. Strebel P. Why do employees resist change? Harv Bus Rev. 1996;74(3):86–94.

- 5. Boss RW, Dunford BB, Boss AD, McConkie ML. Sustainable change in the public sector: the longitudinal benefits of organization development. J Appl Behav Sci. 2010;46(4):436–472.

- 6. Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q. 2004;82(4):581–629. doi: 10.1111/j.0887-378X.2004.00325.x.

- 7. Johnson K, Hays C, Center H, Daley C. Building capacity and sustainable prevention innovations: a sustainability planning model. Eval Program Plann. 2004;27(2):135–149.

- 8. Hagedorn H, Hogan M, Smith JL, et al. Lessons learned about implementing research evidence into clinical practice. J Gen Intern Med. 2006;21 S2:S21–S24. doi: 10.1111/j.1525-1497.2006.00358.x.

- 9. Bateman N. Sustainability: the elusive element of process improvement. Int J Oper Product Manage. 2005;25(3):261–276.

- 10. Bray P, Cummings DM, Wolf M, Massing MW, Reaves J. After the collaborative is over: what sustains quality improvement initiatives in primary care practices? Jt Comm J Qual Patient Saf. 2009;35(10):502-3AP. doi: 10.1016/s1553-7250(09)35069-2.

- 11. Jacobs RL. Institutionalizing organizational change through cascade training. J Eur Ind Train. 2002;26:177–182.

- 12. Solberg L. Lessons for non-VA care delivery systems from the US Department of Veterans Affairs Quality Enhancement Research Initiative: QUERI series. Implement Sci. 2009;4:9. doi: 10.1186/1748-5908-4-9.

- 13. O'Loughlin J, Renaud L, Richard L, Sanchez Gomez L, Paradis G. Correlates of the sustainability of community-based heart health promotion interventions. Prev Med. 1998;27(05):702–712. doi: 10.1006/pmed.1998.0348.

- 14. Savaya R, Spiro S, Elran-Barak R. Sustainability of social programs. Am J Eval. 2008;29(4):478–493.

- 15. Davies B, Edwards N. The action cycle: sustain knowledge use. In: Strauss S, Tetroe J, Graham ID, editors. Knowledge Translation in Health Care: Moving from Evidence to Practice. Oxford: Wiley-Blackwell; 2009. pp. 165–173.

- 16. Parker LE, de Pillis E, Altschuler A, Rubenstein LV, Meredith LS. Balancing participation and expertise: a comparison of locally and centrally managed health care quality improvement within primary care practices. Qual Health Res. 2007;17(9):1268–1279. doi: 10.1177/1049732307307447.

- 17. Parker LE, Kirchner JAE, Bonner LM, et al. Creating a quality-improvement dialogue: utilizing knowledge from frontline staff, managers, and experts to foster health care quality improvement. Qual Health Res. 2009;19(2):229–242. doi: 10.1177/1049732308329481.

- 18. Huq Z, Martin TN. Workforce cultural factors in TQM/CQI implementation in hospitals. Health Care Manage Rev. 2000;25(3):80–93. doi: 10.1097/00004010-200007000-00009.

- 19. Gollop R, Whitby E, Buchanan D, Ketley D. Influencing skeptical staff to become supporters of service improvement: a qualitative study of doctors’ and managers’ views. Qual Saf Health Care. 2004;13(2):108–114. doi: 10.1136/qshc.2003.007450.

- 20. Savaya R, Elsworth G, Rogers P. Projected sustainability of innovative social programs. Eval Rev. 2009;33(2):189–205. doi: 10.1177/0193841X08322860.

- 21. Wang A, Wolf M, Carlyle R, Wilkerson J, Porterfield D, Reaves J. The North Carolina experience with the diabetes health disparities collaboratives. Jt Comm J Qual Saf. 2004;30(7):396–404. doi: 10.1016/s1549-3741(04)30045-6.

- 22. Black JS, Gregersen HB. Leading Strategic Change: Breaking Through the Brain Barrier. Upper Saddle River, NJ: Pearson Education, Inc.; 2002.

- 23. Buchanan D, Fitzgerald L, Ketley D, et al. No going back: a review of the literature on sustaining organizational change. Int J Manage Rev. 2005;7(3):189–205.

- 24. Kotter JP, Schlesinger LA. Choosing strategies for change. Harv Bus Rev. 1979;57(2):106–114.

- 25. Kotter JP. Leading Change. Boston, MA: Harvard Business Press; 1996.

- 26. Kotter JP, Cohen DS. The Heart of Change: Real-Life Stories of How People Change Their Organization. Boston, MA: Harvard Business School Press; 2002.

- 27. Shediac-Rizkallah MC, Bone LR. Planning for the sustainability of community-based health programs: conceptual frameworks and future directions for research, practice and policy. Health Educ Res. 1998;13(1):87–108. doi: 10.1093/her/13.1.87.

- 28. Weiner BJ, Shortell SM, Alexander J. Promoting clinical involvement in hospital quality improvement efforts: the effects of top management, board, and physician leadership. Health Serv Res. 1997;32(4):491–510.

- 29. Sridharan S, Go S, Zinzow H, Gray A, Gutierrez Barrett M. Analysis of strategic plans to assess planning for sustainability of comprehensive community initiatives. Eval Program Plann. 2007;30(1):105–113. doi: 10.1016/j.evalprogplan.2006.10.006.

- 30. Bonello RS, Fletcher CE, Becker WK, et al. An intensive care unit quality improvement collaborative in nine Department of Veterans Affairs hospitals: reducing ventilator-associated pneumonia and catheter-related bloodstream infection rates. Jt Comm J Qual Patient Saf. 2008;34(11):639–645. doi: 10.1016/s1553-7250(08)34081-1.

- 31. Cleeland CS, Reyes-Gibby CC, Schall M, et al. Rapid improvement in pain management: the Veterans Health Administration and the Institute for Healthcare Improvement collaborative. Clin J Pain. 2003;19(5):298–305. doi: 10.1097/00002508-200309000-00003.

- 32. Fremont AM, Joyce G, Anaya HD, et al. An HIV Collaborative in the VHA: do advanced HIT and one-day sessions change the collaborative experience? Jt Comm J Qual Patient Saf. 2006;32(6):324–336. doi: 10.1016/s1553-7250(06)32042-9.

- 33. Hammermeister KE, Johnson R, Marshall G, Grover FL. Continuous assessment and improvement in quality of care. A model from the Department of Veterans Affairs cardiac surgery. Ann Surg. 1994;219(3):281–290. doi: 10.1097/00000658-199403000-00008.

- 34. Khuri SF, Daley J, Henderson W, et al. The Department of Veterans Affairs' NSQIP: the first national, validated, outcome-based, risk-adjusted, and peer-controlled program for the measurement and enhancement of the quality of surgical care. National VA Surgical Quality Improvement Program. Ann Surg. 1998;228(4):491–507. doi: 10.1097/00000658-199810000-00006.

- 35. Lukas CVD, Meterko MM, Mohr D, et al. Implementation of a clinical innovation: the case of advanced clinic access in the Department of Veterans Affairs. J Ambulatory Care Manage. 2008;31(2):94–108. doi: 10.1097/01.JAC.0000314699.04301.3e.

- 36. Neily J, Mills PD, Young-Xu Y, et al. Association between implementation of a medical team training program and surgical mortality. JAMA. 2010;304(15):1693–1700. doi: 10.1001/jama.2010.1506.

- 37. Nolan K, Schall MW, Erb F, Nolan T. Using a framework for spread: the case of patient access in the Veterans Health Administration. Jt Comm J Qual Patient Saf. 2005;31(6):339–347. doi: 10.1016/s1553-7250(05)31045-2.

- 38. Piette JD, Weinberger M, Kraemer FB, McPhee SJ. Impact of automated calls with nurse follow-up on diabetes treatment outcomes in a Department of Veterans Affairs health care system. Diabetes Care. 2001;24(2):202–208. doi: 10.2337/diacare.24.2.202.

- 39. Stetler C, McQueen L, Demakis J, Mittman B. An organizational framework and strategic implementation for system-level change to enhance research-based practice: QUERI Series. Implement Sci. 2008;3:30. doi: 10.1186/1748-5908-3-30.

- 40. Weeks WB, Mills PD, Waldron J, Brown SH, Speroff T, Coulson LR. A model for improving the quality and timeliness of compensation and pension examinations in VA facilities. J Healthc Manag. 2003;48(4):252–262.

- 41. Wilson T, Berwick DM, Cleary PD. What do collaborative improvement projects do? Experience from seven countries. Jt Comm J Qual Saf. 2003;29(2):85–93. doi: 10.1016/s1549-3741(03)29011-0.

- 42. Davies B, Tremblay D, Edwards N. Sustaining evidence based practice systems and measuring the impacts. In: Bick D, Graham ID, editors. Evaluating the Impact of Implementing Evidence Based Practice. Oxford: Wiley-Blackwell; 2010. pp. 165–188.

- 43. Maher L, Gustafson D, Evans A. Sustainability. Leicester, England: British National Health Service Modernization Agency; 2004.

- 44. Strauss AL. Qualitative Analysis for Social Scientists. Cambridge: Cambridge University Press; 1987.

- 45. Pennebaker JW. Telling stories: the health benefits of narrative. Lit Med. 2000;19(1):3–18. doi: 10.1353/lm.2000.0011.

- 46. Pennebaker JW, Francis ME. Cognitive, emotional, and language processes in disclosure. Cogn Emot. 1996;10(6):601–626.

- 47. Bowman C, Sobo E, Asch S, Gifford A. Measuring persistence of implementation: QUERI series. Implement Sci. 2008;3(1):21. doi: 10.1186/1748-5908-3-21.

- 48. Ham C, Kipping R, McLeod H. Redesigning work processes in health care: lessons from the National Health Service. Milbank Q. 2003;81(3):415–439. doi: 10.1111/1468-0009.t01-3-00062.

- 49. Ferlie EW, Shortell SH. Improving the quality of health care in the United Kingdom and the United States: a framework for change. Milbank Q. 2001;79(2):281–315. doi: 10.1111/1468-0009.00206.

- 50. Roosa M, Scripa JS, Zastowny TR, Ford JH II. Using a NIATx based local learning collaborative for performance improvement. Eval Program Plann. In press.

- 51. Nembhard IM. Learning and improving in quality improvement collaboratives: which collaborative features do participants value most? Health Serv Res. 2009;44(2p1):359–378. doi: 10.1111/j.1475-6773.2008.00923.x.

- 52. Mills PD, Weeks WB. Characteristics of successful quality improvement teams: lessons from five collaborative projects in the VHA. Jt Comm J Qual Saf. 2004;30(3):105–115. doi: 10.1016/s1549-3741(04)30017-1.

- 53. Oldham J. Achieving large system change in health care. JAMA. 2009;301(9):965–966. doi: 10.1001/jama.2009.228.