1. INTRODUCTION

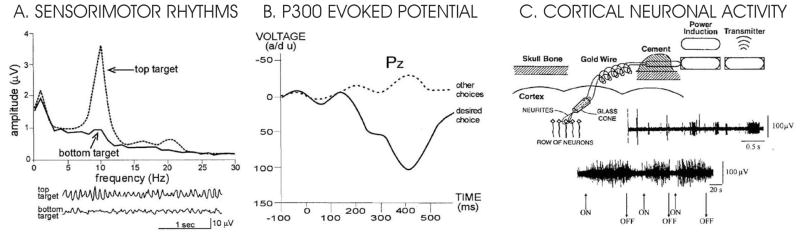

Brain activity produces electrical signals that are detectable on the scalp, on the cortical surface, or within the brain. Brain-computer interfaces (BCIs) translate these signals into outputs that allow the user to communicate without the participation of peripheral nerves and muscles [36] (see Figure 1). Because they do not depend on neuromuscular control, BCIs can provide options for communication and control for people with devastating neuromuscular disorders such as amyotrophic lateral sclerosis (ALS), brainstem stroke, cerebral palsy, and spinal cord injury. The central purpose of BCI research and development is to enable these users to convey their wishes to caregivers, to use word-processing programs and other software, or even to control a robotic arm or a neuroprosthesis. More recently, there has been speculation that BCIs could be useful to people with lesser, or even no, motor impairments.

Figure 1.

Basic design and operation of any BCI system. Signals from the brain are acquired by electrodes on the scalp or in the head and processed to extract specific signal features that reflect the user’s intent. These features are translated into commands to operate a device. The user must develop and maintain good correlation between his or her intent and the signal features employed by the BCI, and the BCI must select and extract features that the user can control and must translate those features into device commands (adapted from [36]).

It has been known since the pioneering work of Hans Berger over 80 years ago that the electrical activity of the brain can be recorded non-invasively by electrodes on the surface of the scalp [23]. Berger observed that a rhythm of about 10 Hz was prominent on the posterior scalp and was reactive to light. He called it the alpha rhythm. This and other observations by Berger showed that the electroencephalogram (EEG) could serve as an index of the gross state of the brain. Despite Berger’s careful work, many scientists were initially skeptical, and there were suggestions that the EEG might represent some sort of artifact. However, subsequent work demonstrated conclusively that the EEG is produced by brain activity [23].

Electrodes on the surface of the scalp are at some distance from brain tissue and are separated from it by the coverings of the brain, the skull, subcutaneous tissue, and the scalp. As a result, the signal is considerably degraded, and only the synchronized activity of large numbers of neural elements can be detected. This limits the resolution with which brain activity can be monitored. Scalp electrodes also pick up activity from sources other than the brain, including environmental noise (e.g., 50- or 60-Hz activity from power lines) and biological noise (e.g., activity from the heart, skeletal muscles, and eyes). Nevertheless, since the time of Berger, many studies have used the EEG very effectively to gain insight into brain function. Many of these studies have used averaging to separate EEG from superimposed electrical noise.
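Why averaging helps can be shown with a small simulation. The sketch below assumes that the brain response is identical on every trial and that the superimposed noise is independent from trial to trial; under those assumptions the residual noise in the average falls roughly as 1/sqrt(N) for N trials. The waveform, noise level, and trial counts are hypothetical illustration values, not parameters from the studies cited.

```python
import numpy as np


def residual_noise_after_averaging(n_trials, noise_sd=10.0, n_samples=500, seed=0):
    """Average n_trials noisy copies of one fixed waveform; return the RMS residual noise."""
    rng = np.random.default_rng(seed)
    t = np.linspace(0.0, 1.0, n_samples)
    # Hypothetical brain response: a 5-unit peak centred at 0.3 s.
    signal = 5.0 * np.exp(-((t - 0.3) ** 2) / (2 * 0.02 ** 2))
    # Every trial is the same signal plus independent noise (the key assumption).
    trials = signal + rng.normal(0.0, noise_sd, size=(n_trials, n_samples))
    average = trials.mean(axis=0)
    return np.sqrt(np.mean((average - signal) ** 2))


for n in (1, 16, 64, 256):
    print(n, round(residual_noise_after_averaging(n), 2))
```

With a noise standard deviation of 10, the printed residual should drop from about 10 for a single trial toward about 10/16 for 256 trials. Real EEG noise is neither stationary nor independent across trials, so actual gains are usually smaller.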

There are two major paradigms used in EEG research. Evoked potentials are transient waveforms (i.e., brief perturbations in the ongoing activity) that are phase-locked to an event such as a visual stimulus. They are typically analyzed by averaging many similar events in the time domain. The EEG also contains oscillatory features. Although these oscillatory features may occur in response to specific events, they are usually not phase-locked and are typically studied by spectral analysis. Historically, most EEG studies have examined phase-locked evoked potentials. Both of these major paradigms have been applied in BCIs [36].
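The difference between the two paradigms can be illustrated with synthetic data. In the sketch below each trial contains a hypothetical evoked potential at a fixed latency plus a 10 Hz rhythm whose phase is random from trial to trial. Averaging in the time domain preserves the phase-locked component but largely cancels the rhythm, whereas averaging single-trial power spectra preserves the rhythm. The sampling rate, amplitudes, and frequencies are assumptions chosen for illustration.

```python
import numpy as np

fs = 250                       # sampling rate in Hz (assumed)
t = np.arange(fs) / fs         # one 1-s epoch per trial
rng = np.random.default_rng(1)
n_trials = 200

# Hypothetical evoked potential: same latency and polarity on every trial.
evoked = 4.0 * np.exp(-((t - 0.3) ** 2) / (2 * 0.03 ** 2))

trials = np.empty((n_trials, t.size))
for k in range(n_trials):
    phase = rng.uniform(0.0, 2 * np.pi)   # random phase: the rhythm is NOT phase-locked
    rhythm = 4.0 * np.sin(2 * np.pi * 10 * t + phase)
    trials[k] = evoked + rhythm + rng.normal(0.0, 1.0, t.size)

# Time-domain averaging keeps the evoked potential but cancels the 10 Hz rhythm.
erp = trials.mean(axis=0)

# Spectral analysis: average the power spectrum of each trial instead.
freqs = np.fft.rfftfreq(t.size, d=1 / fs)
mean_trial_power = (np.abs(np.fft.rfft(trials, axis=1)) ** 2).mean(axis=0)
power_of_average = np.abs(np.fft.rfft(erp)) ** 2

i10 = np.argmin(np.abs(freqs - 10))
print("ERP peak latency (s):", t[np.argmax(erp)])
print("10 Hz power, mean of single-trial spectra:", mean_trial_power[i10])
print("10 Hz power, spectrum of the averaged ERP:", power_of_average[i10])
```

The ERP peak should appear near 0.3 s, and the 10 Hz power taken from single-trial spectra should exceed that of the averaged waveform by well over an order of magnitude.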

The term brain-computer interface can be traced to Vidal, who devised a BCI system based on visual evoked potentials [34]. His users viewed a diamond-shaped red checkerboard illuminated with a xenon flash. By attending to different corners of the flashing checkerboard, they could generate right, up, left, or down commands. This enabled them to move through a maze presented on a graphic terminal. An IBM 360 mainframe digitized the data, and an XDS Sigma 7 computer controlled the experimental events. The users first provided data to train a stepwise linear discriminant function. These users then navigated the maze online in real time. Thus, Vidal [34] used signal processing techniques to realize real-time analysis of the EEG with minimal averaging. The waveforms shown by Vidal [34] suggest that his BCI used EEG activity in the time frame of the N100-P200 components (N and P indicate negative and positive peaks, and the numbers indicate the approximate latency in msec).
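The calibration step (train a stepwise linear discriminant on recorded data, then apply it online) can be sketched as follows. This is not a reconstruction of Vidal's procedure. It is a simplified, forward-only variant that greedily adds the feature that most reduces the least-squares error of regressing the class labels on the selected features; classical stepwise methods also remove features and use statistical entry and exit thresholds, which are replaced here by a fixed feature count (an assumption made for brevity). The data are synthetic.

```python
import numpy as np


def forward_stepwise_lda(X, y, max_features=5):
    """Greedy forward selection for a least-squares linear discriminant.

    X: (n_trials, n_features); y: class labels coded -1/+1.
    With an intercept, least-squares regression onto the labels gives a weight
    vector proportional to Fisher's two-class discriminant direction.
    """
    n, p = X.shape
    selected = []
    for _ in range(min(max_features, p)):
        best_j, best_rss = None, np.inf
        for j in range(p):
            if j in selected:
                continue
            A = np.column_stack([np.ones(n), X[:, selected + [j]]])
            w, *_ = np.linalg.lstsq(A, y, rcond=None)
            rss = np.sum((y - A @ w) ** 2)
            if rss < best_rss:           # keep the feature that most reduces the error
                best_j, best_rss = j, rss
        selected.append(best_j)
    A = np.column_stack([np.ones(n), X[:, selected]])
    w, *_ = np.linalg.lstsq(A, y, rcond=None)
    return selected, w


def predict(X, selected, w):
    """Sign of the discriminant: +1 or -1 for each trial."""
    return np.sign(w[0] + X[:, selected] @ w[1:])


# Synthetic calibration data: 20 features, only features 2 and 7 carry class information.
rng = np.random.default_rng(0)
y = np.repeat([-1.0, 1.0], 100)
X = rng.normal(size=(200, 20))
X[:, 2] += 1.5 * y
X[:, 7] -= 1.0 * y
selected, w = forward_stepwise_lda(X, y, max_features=3)
print(selected, np.mean(predict(X, selected, w) == y))
```

Selection should pick feature 2 first and feature 7 second. Accuracy here is measured on the calibration data itself; an online test on new data is the meaningful check.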

Vidal’s achievement was an interesting proof-of-principle demonstration. At the time, it was far from practical, given that it depended on a time-shared system with limited processing capacity. Vidal’s system [34] also included online removal of non-EEG (i.e., ocular) artifacts. Somewhat earlier, Dewan [6] instructed users to explicitly use eye movements in order to modulate their brain waves. He showed that subjects could learn to transmit Morse code messages using EEG activity associated with eye movements.

The fact that both the Dewan and Vidal BCIs depended on eye movements made them somewhat less interesting from scientific or clinical points of view, since they required actual muscle control (i.e., eye movements) and simply used EEG to reflect the resulting gaze direction.

2. VARIETIES OF BCI SIGNALS

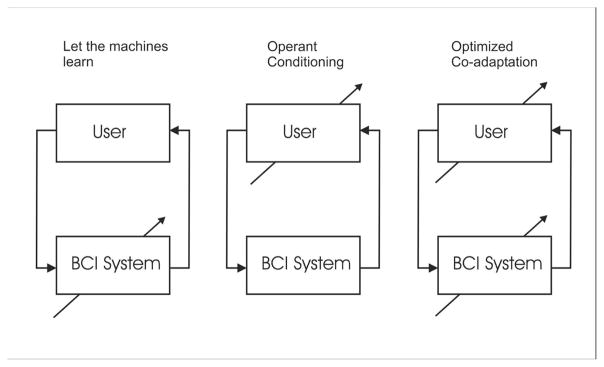

Farwell and Donchin [7] reported the first use of a P300-based spelling device (see Figure 2b). The P300 is a positive potential occurring around 300 msec after an event that is significant to the subject. It is considered a “cognitive” potential since it is generated in tasks in which subjects attend to and discriminate stimuli. Farwell and Donchin’s [7] users viewed a 6 × 6 matrix of the letters of the alphabet and several other symbols. They focused attention on the desired selection as the rows and columns of the matrix were repeatedly flashed to elicit visual evoked potentials. Farwell and Donchin [7] found that their users were able to spell the word “brain” with the P300 spelling device. In addition, they did an offline comparison of detection algorithms and found that stepwise linear discriminant analysis (SWLDA) was generally best. The fact that the P300 potential reflects attention rather than simply gaze direction implied that this BCI did not depend on muscle (i.e., eye-movement) control. Thus, it represented a significant advance. Several groups have further developed this BCI method [13].
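In a row/column matrix speller, the attended symbol is the one whose row and column both elicit a P300. A minimal sketch of that decision rule, assuming some classifier (for example, SWLDA) has already produced a score for the EEG epoch following each flash: scores are summed per row and per column over repeated flashes, and the symbol at the intersection of the best row and best column is selected. The matrix layout, the code numbering for rows and columns, and the simulated scores are all hypothetical.

```python
import numpy as np

# Hypothetical 6 x 6 layout (letters, digits and "_" for space).
MATRIX = np.array([list("ABCDEF"), list("GHIJKL"), list("MNOPQR"),
                   list("STUVWX"), list("YZ1234"), list("56789_")])


def select_character(flash_codes, scores, matrix=MATRIX):
    """Return the symbol at the intersection of the highest-scoring row and column.

    flash_codes: codes 0-5 denote rows 0-5, codes 6-11 denote columns 0-5.
    scores: classifier output for the EEG epoch after each flash
            (larger means more P300-like).
    """
    totals = np.zeros(12)
    for code, score in zip(flash_codes, scores):
        totals[code] += score            # accumulate evidence over repeated flashes
    row = int(np.argmax(totals[:6]))
    col = int(np.argmax(totals[6:]))
    return str(matrix[row, col])


# Simulated session: the user attends to "B" (row 0, column 1 -> code 7).
rng = np.random.default_rng(0)
codes, scores = [], []
for _ in range(10):                       # 10 sequences, each flashing all 12 rows/columns
    for code in rng.permutation(12):
        is_target = code in (0, 7)
        codes.append(int(code))
        scores.append(rng.normal(2.0 if is_target else 0.0, 1.0))
print(select_character(codes, scores))
```

Summing over repetitions is what lets a noisy single-epoch classifier reach usable accuracy; fewer repetitions trade accuracy for speed.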

Figure 2.

Present-day human BCI systems. A and B are non-invasive, and C is invasive. A. Sensorimotor rhythm BCI. Scalp EEG is recorded over sensorimotor cortex. Users control the amplitude of rhythms to move a cursor to a target on the screen. B. P300 BCI. A matrix of possible choices is presented on a screen and scalp EEG is recorded while these choices flash in succession. C. Cortical neuronal BCI. Electrodes implanted in cortex detect action potentials of single neurons. Users learn to control neuronal firing rate to move a cursor on a screen. (adapted from [36]).

Wolpaw et al. [38] reported the first use of sensorimotor rhythms (SMRs) for cursor control (see Figure 2a). Sensorimotor rhythms are EEG rhythms that change with movement or the imagination of movement and are spontaneous in the sense that they do not require specific stimuli for their occurrence. People learned to vary their SMRs to move a cursor to hit one of two targets located on the top or bottom edge of a video screen. Cursor movement was controlled by SMR amplitude (measured by spectral analysis). A distinctive feature of this task is that it required the user to rapidly switch between two states in order to select a particular target. The rapid bidirectional nature of the Wolpaw et al. [38] paradigm made it distinct from prior studies that produced long-term unidirectional changes in brain rhythms (e.g., users were required to maintain an increase in the size of an EEG rhythm for minutes at a time). In a series of subsequent studies, this group showed that the signals that controlled the cursor were actual EEG activity and also that covert muscle activity did not mediate this EEG control [18,31].
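The chain from SMR amplitude to cursor movement can be sketched as a band-amplitude estimate followed by a linear translation. This is a minimal illustration under stated assumptions: a Hann-windowed FFT periodogram stands in for whatever spectral method a given system uses, and the 8–12 Hz band, sampling rate, gain, and offset are hypothetical values. The offset plays the role of a reference level (for instance, a running mean of recent amplitudes), so that amplitudes above it move the cursor one way and amplitudes below it move the cursor the other way.

```python
import numpy as np


def band_amplitude(segment, fs, band=(8.0, 12.0)):
    """Square root of the mean Hann-windowed periodogram power inside `band` (Hz)."""
    segment = segment - segment.mean()
    spectrum = np.abs(np.fft.rfft(segment * np.hanning(segment.size))) ** 2
    freqs = np.fft.rfftfreq(segment.size, d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return float(np.sqrt(spectrum[in_band].mean()))


def cursor_step(amplitude, offset, gain):
    """Linear translation: the sign of (amplitude - offset) sets the direction of movement."""
    return gain * (amplitude - offset)


fs = 160                                   # assumed sampling rate (Hz)
t = np.arange(fs) / fs                     # 1-s segment -> 1 Hz frequency resolution
rng = np.random.default_rng(0)
high_mu = 3.0 * np.sin(2 * np.pi * 10 * t) + rng.normal(0.0, 1.0, t.size)
low_mu = 0.5 * np.sin(2 * np.pi * 10 * t) + rng.normal(0.0, 1.0, t.size)
a_high, a_low = band_amplitude(high_mu, fs), band_amplitude(low_mu, fs)
offset = (a_high + a_low) / 2              # e.g., a running mean of recent amplitudes
print(cursor_step(a_high, offset, gain=1.0), cursor_step(a_low, offset, gain=1.0))
```

Because the mapping is linear, the user's task reduces to moving the rhythm's amplitude above or below the offset, which is the rapid bidirectional switching the paradigm required.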

These initial SMR results were subsequently replicated by others [21,24] and extended to multidimensional control [37]. Together, these P300 and SMR BCI studies showed that non-invasive EEG recording of brain signals can serve as the basis for communication and control devices.

A number of laboratories have explored the possibility of developing brain-computer interfaces using single-neuron activity detected by microelectrodes implanted in cortex [12,30] (see Figure 2c). Much of this research has been done in non-human primates. However, there have been trials in human users [12]. Other studies have shown that recordings of electrocorticographic (ECoG) activity from the surface of the brain can also provide signals for a BCI [15]. To date, these studies have indicated that invasive recording methods could also serve as the basis for BCIs. At the same time, important issues concerning their suitability for long-term human use remain to be resolved.

Earlier studies demonstrating operant conditioning of single units in motor cortex of primates [9], the hippocampal theta rhythm of dogs [2], or the sensorimotor rhythm in humans [29] showed that brain activity could be trained with operant techniques. However, these studies are not demonstrations of BCI systems for communication and control, since they required subjects to increase brain activity for periods of many minutes. Thus, they showed that brain activity could be tonically altered in a single direction by training. However, communication and control devices require that the user be able to select from at least two distinct alternatives (i.e., there must be at least one bit of information per selection). Effective communication and control devices require users to rapidly switch between multiple alternatives.
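The idea of information per selection can be made concrete with a measure widely used in the BCI literature (see, e.g., [36]): for N equally likely choices selected with accuracy P, and errors spread evenly over the N − 1 wrong choices, the bits per selection are B = log2 N + P log2 P + (1 − P) log2[(1 − P)/(N − 1)]. A minimal sketch of that formula, valid for N of at least 2:

```python
import math


def bits_per_selection(n_choices, accuracy):
    """Bits per selection, assuming N equally likely choices and errors spread
    evenly over the N - 1 wrong choices (0 * log2(0) is taken as 0)."""
    n, p = n_choices, accuracy
    bits = math.log2(n)
    if p > 0:
        bits += p * math.log2(p)
    if p < 1:
        bits += (1 - p) * math.log2((1 - p) / (n - 1))
    return bits


print(bits_per_selection(2, 1.0))   # perfect binary choice: 1 bit
print(bits_per_selection(2, 0.5))   # chance performance on 2 choices: 0 bits
print(bits_per_selection(36, 0.9))  # e.g., a 6 x 6 matrix at 90% accuracy
```

Multiplying bits per selection by selections per minute gives an information transfer rate in bits per minute. A tonic, single-direction change held for minutes offers no choice between alternatives and so conveys no information in this sense.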

In addition to electrophysiological measures, researchers have also demonstrated the feasibility of BCIs based on magnetoencephalography (MEG) [20], functional magnetic resonance imaging (fMRI) [28], and functional near-infrared spectroscopy (fNIR) [4]. Present technology for recording MEG and fMRI is both expensive and very bulky, making these technologies unlikely candidates for practical applications in the near term. fNIR is potentially cheaper and more compact. However, both fMRI and fNIR are based on changes in cerebral blood flow, which is an inherently slow response [11]. Currently, electrophysiological features represent the most practical signals for BCI applications.

3. BCI SYSTEM DESIGN

Communication and control applications are interactive processes that require the user to observe the results of their efforts in order to maintain good performance and to correct mistakes. For this reason, actual BCIs need to run in real time and to provide real-time feedback to the user. While many early BCI studies satisfied this requirement [24,38], later studies have often been based on offline analyses of pre-recorded data [1]. For example, in the Lotte et al. [16] review of studies evaluating BCI signal classification algorithms, most had used offline analyses. Indeed, the current popularity of BCI research is probably due in part to the ease with which offline analyses can be performed on publicly available data sets. While such offline studies may help to guide actual online BCI investigations, there is no guarantee that offline results will generalize to online performance. The user’s brain signals are often affected by the BCI’s outputs, which are in turn determined by the algorithm that the BCI is using. Thus, it is not possible to predict results exactly from offline analyses that cannot take those effects into account.

Blankertz et al. [3] note several trends in the results of a BCI data classification competition. Most of the winning entries used linear classifiers, the most popular being Fisher’s discriminant and linear support vector machines (SVMs). The winning entries for data sets with multichannel oscillatory features often used common spatial patterns (CSP). In their review of the literature on BCI classifiers, Lotte et al. [16] conclude that SVMs are particularly efficient. They attribute this to their regularization property and their immunity to the curse of dimensionality. They also conclude that combinations of classifiers seem to be very efficient. Lotte et al. [16] also note that there is a lack of comparisons of classifiers within the same study using otherwise identical parameters.
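To make two of the techniques named above concrete, the sketch below computes common spatial patterns for two classes of multichannel trials, uses the log-variance of the spatially filtered signals as features, and separates the classes with Fisher's linear discriminant. It is a textbook-style illustration, not the method of any particular competition entry. It assumes the trials are already band-pass filtered and stored as NumPy arrays of shape (trials, channels, samples); the four-channel synthetic data and all parameter values are hypothetical.

```python
import numpy as np


def csp_filters(trials_a, trials_b, n_pairs=1):
    """Common spatial patterns for two classes.

    trials_*: arrays of shape (n_trials, n_channels, n_samples), assumed band-pass filtered.
    Returns an array of shape (2 * n_pairs, n_channels); each row is a spatial filter.
    The first n_pairs rows favour class b, the last n_pairs rows favour class a.
    """
    def mean_cov(trials):
        covs = []
        for x in trials:
            x = x - x.mean(axis=1, keepdims=True)
            c = x @ x.T
            covs.append(c / np.trace(c))                   # trace-normalised covariance
        return np.mean(covs, axis=0)

    ca, cb = mean_cov(trials_a), mean_cov(trials_b)
    evals, evecs = np.linalg.eigh(ca + cb)
    whitener = evecs @ np.diag(evals ** -0.5) @ evecs.T    # whitens the composite covariance
    _, vecs = np.linalg.eigh(whitener @ ca @ whitener.T)   # eigenvalues in ascending order
    filters = vecs.T @ whitener
    return np.vstack([filters[:n_pairs], filters[-n_pairs:]])


def log_variance_features(filters, trials):
    """Log-variance of each spatially filtered signal, one feature row per trial."""
    return np.array([np.log(np.var(filters @ x, axis=1)) for x in trials])


def fisher_lda(feat_a, feat_b):
    """Fisher's discriminant: weights and midpoint threshold (class a if f @ w > threshold)."""
    ma, mb = feat_a.mean(axis=0), feat_b.mean(axis=0)
    sw = np.cov(feat_a, rowvar=False) + np.cov(feat_b, rowvar=False)
    w = np.linalg.solve(sw, ma - mb)
    return w, float(w @ (ma + mb) / 2)


# Synthetic 4-channel data: class a has extra variance on channel 0, class b on channel 1.
rng = np.random.default_rng(0)
A = rng.normal(size=(40, 4, 200)) * np.array([3.0, 1.0, 1.0, 1.0])[None, :, None]
B = rng.normal(size=(40, 4, 200)) * np.array([1.0, 3.0, 1.0, 1.0])[None, :, None]
W = csp_filters(A, B)
fa, fb = log_variance_features(W, A), log_variance_features(W, B)
w, threshold = fisher_lda(fa, fb)
accuracy = (np.mean(fa @ w > threshold) + np.mean(fb @ w <= threshold)) / 2
print(W.shape, accuracy)
```

CSP reduces many channels to a few variance-discriminative signals, which keeps the subsequent linear classifier small; as the text notes for offline analyses generally, accuracy computed on the training trials, as here, overstates online performance.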

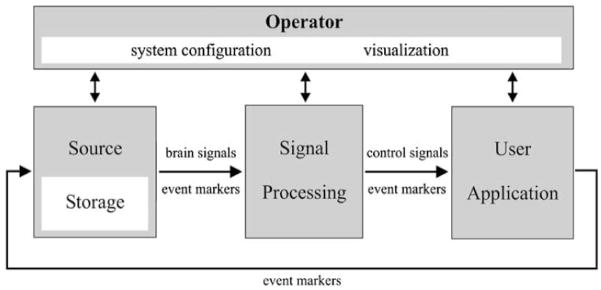

Muller and Blankertz [21] have advocated a machine learning approach to BCI in which a statistical analysis of a calibration measurement is used to train the system. The goal of this approach is to develop a “zero-training” method that provides effective performance from the first session. They contrast this approach with one based on training users to control specific features of brain signals [38]. A system that can be used without extensive training is appealing, since it requires less initial effort on the part of both the BCI user and the system operator. The operation of such a system is based upon the as-yet-uncertain premise that the user can repeatedly and reliably maintain the specified correlations between brain signals and intent. Figure 3 presents three different conceptualizations of where adaptation might take place to establish and maintain good BCI performance. In the first, the BCI adapts to the user; in the second, the user adapts to the BCI; and in the third, user and system adapt to each other.
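When the BCI adapts to the user, the translation weights can be updated after every trial rather than fixed after a single calibration. The sketch below uses the least-mean-squares (LMS) rule as one simple example of such online adaptation; the text does not specify an algorithm, so the rule, the learning rate, and the simulated drift in the user's signals are all assumptions for illustration.

```python
import numpy as np


def lms_update(weights, features, target, learning_rate=0.01):
    """One least-mean-squares step toward reducing (target - weights @ features) ** 2."""
    error = target - weights @ features
    return weights + learning_rate * error * features


rng = np.random.default_rng(0)
true_w = np.array([0.8, -0.5, 0.2])            # hypothetical user-specific mapping
w = np.zeros(3)
for trial in range(2000):
    if trial == 1000:
        true_w = np.array([0.4, -0.9, 0.2])     # simulated drift in the user's signals
    x = rng.normal(size=3)                      # feature vector on this trial
    target = true_w @ x + rng.normal(0.0, 0.1)  # desired output, e.g. cursor velocity
    w = lms_update(w, x, target)
print(np.round(w, 2))
```

The weights should end close to the post-drift mapping [0.4, -0.9, 0.2]. A fixed calibration would have stayed at the pre-drift values; the price of continuous adaptation is that the system becomes a moving target for a user who is simultaneously adapting, which is the mutual-adaptation case in Figure 3.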

Figure 3.

Three approaches to BCI design. The arrows indicate the element that adapts: the BCI, the user, or both adapt to optimize and maintain BCI performance (adapted from [17]).

A number of BCI systems are designed to detect the user’s performance of specific cognitive tasks. Curran et al. [5] suggest that cognitive tasks such as navigation and auditory imagery might be more useful in driving a BCI than motor imagery. However, sensorimotor rhythm-based BCIs may have several advantages over systems that depend on complex cognitive operations. For example, the structures involved in auditory imagery are also likely to be driven by auditory sensory input. Wolpaw and McFarland [37] note that, with extended practice, users report that motor imagery is no longer necessary to operate a sensorimotor rhythm-based BCI. As is typical of many simple motor tasks, performance becomes automatized with extended practice. Automatized performance may be less likely to interfere with mental operations that users might wish to engage in concurrently with BCI use. For example, composing a manuscript is much easier if the writer does not need to think extensively about each individual keystroke.

As noted above, EEG recordings may be contaminated by non-brain activity such as line noise and muscle activity (see Fatourechi et al. [8] for a review). Activity recorded from the scalp represents the superposition of many signals, some of which originate in the brain and some of which originate elsewhere. These include potentials generated by the retinal dipoles (i.e., eye movements and eye blinks) and the facial muscles. It is noteworthy that mental effort is often associated with changes in eye blink rate and muscle activity [35]. BCI users might generate these artifacts without being aware of what they are doing, simply by making facial expressions associated with effort.
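Because scalp activity is a superposition, one common way to reduce ocular contamination, when a separate electro-oculogram (EOG) channel is recorded, is to estimate by least squares how strongly the EOG propagates to each EEG channel and subtract that fraction. The sketch below is a minimal version of this regression approach, assuming a single EOG reference and linear, instantaneous propagation; it is not the specific method of Vidal [34] or of the studies reviewed in [8], and the synthetic signals and coefficients are hypothetical.

```python
import numpy as np


def remove_eog(eeg, eog):
    """Subtract the least-squares projection of an EOG reference from each EEG channel.

    eeg: (n_channels, n_samples); eog: (n_samples,).
    Assumes contamination is linear and instantaneous: eeg = brain + b * eog.
    Returns the cleaned EEG and the estimated propagation coefficient b per channel.
    """
    eog = eog - eog.mean()
    eeg = eeg - eeg.mean(axis=1, keepdims=True)
    b = eeg @ eog / (eog @ eog)
    return eeg - np.outer(b, eog), b


rng = np.random.default_rng(0)
n = 5000
brain = rng.normal(0.0, 1.0, size=(2, n))            # synthetic "brain" activity
eog = np.cumsum(rng.normal(0.0, 0.5, n))              # slow, drifting eye signal
eeg = brain + np.array([[0.3], [0.1]]) * eog          # frontal channel more contaminated
cleaned, b = remove_eog(eeg, eog)
print(np.round(b, 2))
```

A known limitation of this design is that the EOG channel itself picks up some brain activity, so regression can also remove a portion of genuine frontal EEG.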

Facial muscles can generate signals with energy in the spectral bands used as features in an SMR-based BCI [18]. Muscle activity can also modulate SMR activity. For example, a user could move his/her right hand in order to desynchronize the mu rhythm over the left hemisphere. This sort of mediation of the EEG by peripheral muscle movements was a concern during the early days of BCI development. As noted earlier, Dewan [6] trained users to send Morse code messages using occipital alpha rhythms modulated by voluntary movements of eye muscles. For this reason, Vaughan et al. [33] recorded EMG from 10 distal limb muscles while BCI users used central mu or beta rhythms to move a cursor to targets on a video screen. EMG activity was very low in these well-trained users. Most importantly, the correlations between target position and EEG activity could not be accounted for by EMG activity. Similar studies have been done with BCI users moving a cursor in two dimensions [37]. These studies show that SMR modulation does not require actual movements or muscle activity.
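The question of whether target-related EEG changes "could be accounted for by EMG activity" can be framed as a partial correlation: correlate the EEG feature with target position after linearly removing the EMG measure from both. The sketch below illustrates that logic on synthetic per-trial values; it is an assumption-laden illustration, not the analysis performed by Vaughan et al. [33]. In the first case the EEG feature tracks the target independently of EMG; in the second, an apparent EEG effect is entirely carried by EMG that tracks the target.

```python
import numpy as np


def partial_corr(x, y, z):
    """Correlation of x and y after linearly regressing z (plus an intercept) out of both."""
    Z = np.column_stack([np.ones_like(z), z])
    rx = x - Z @ np.linalg.lstsq(Z, x, rcond=None)[0]
    ry = y - Z @ np.linalg.lstsq(Z, y, rcond=None)[0]
    return float(np.corrcoef(rx, ry)[0, 1])


rng = np.random.default_rng(0)
n = 400
target = rng.choice([-1.0, 1.0], size=n)        # target position (top/bottom) per trial

# Case 1: EEG feature tracks the target; EMG is unrelated to the target.
emg = rng.normal(0.0, 1.0, n)
eeg = 0.8 * target + 0.2 * emg + rng.normal(0.0, 1.0, n)
r1, pr1 = float(np.corrcoef(target, eeg)[0, 1]), partial_corr(target, eeg, emg)

# Case 2: the apparent EEG effect is entirely carried by EMG that tracks the target.
emg2 = 0.8 * target + rng.normal(0.0, 0.5, n)
eeg2 = emg2 + rng.normal(0.0, 0.5, n)
r2, pr2 = float(np.corrcoef(target, eeg2)[0, 1]), partial_corr(target, eeg2, emg2)

print(round(r1, 2), round(pr1, 2))   # correlation survives removing EMG
print(round(r2, 2), round(pr2, 2))   # correlation largely disappears
```

The contrast between the two cases is what such control analyses look for: a target-related EEG correlation that remains after EMG is partialled out cannot be explained by that EMG measure.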

4. BCI APPLICATIONS

There have been several recent BCI spelling systems based on different EEG signals, including the mu rhythm [22,26] and the P300 [31]. Both of the mu-rhythm systems used machine learning paradigms that minimized training. Users of the mu-based systems reportedly averaged 2.3–7 characters/min [22] and 2.85–3.38 characters/min [26]. The P300 system averaged 3.66 selections/min [31]. As noted by Townsend et al. [31], the reported rate depends upon how it is computed, and authors often do not provide details. Omitting the time between trials increased Townsend et al.’s [31] result from 3.66 to 5.92 selections/min. In any case, these various systems perform within a similar general range. At current rates, only users with limited options could benefit from their use.
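The effect of what is counted can be seen with simple arithmetic. The timing values below are hypothetical and are not taken from the cited studies; they only show how the same system yields different reported rates depending on whether pauses between selections are included.

```python
def selections_per_minute(selection_time_s, pause_time_s, include_pauses=True):
    """Selections per minute from the time spent on each selection and the pause after it."""
    seconds_per_selection = selection_time_s + (pause_time_s if include_pauses else 0.0)
    return 60.0 / seconds_per_selection


# Hypothetical timing: 12 s of flashing per selection followed by a 3-s pause.
print(selections_per_minute(12.0, 3.0))                        # 4.0 (counting pauses)
print(selections_per_minute(12.0, 3.0, include_pauses=False))  # 5.0 (ignoring pauses)
```

Here, excluding a 3-s pause raises the reported rate by 25% without any change in the system. Selections are also not the same as correct characters: errors and their corrections reduce the effective character rate further.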

BCI systems have also been developed for control applications. For example, several groups have shown that human subjects could use EEG to drive a simulated wheelchair [10,14]. Bell et al. [1] showed that the P300 could be used to select between complex commands to a partially autonomous humanoid robot. For a review of the use of BCI for robotic and prosthetic devices, see McFarland and Wolpaw [19].

Several commercial concerns have recently produced inexpensive devices that are purported to measure EEG. Both Emotiv and Neurosky have developed products with a limited number of electrodes that do not use conventional gel-based recording technology [27]. These devices are intended to provide input for video games. It is not clear to what extent these devices use actual EEG activity as opposed to scalp muscle activity or other non-brain signals. Given the well-established prominence of EMG activity in signals recorded from the head, it seems likely that such signals account for much of the control produced by these devices [27].

5. PROSPECTS AND CONCLUSIONS

In a review of the use of BCI technology for robotic and prosthetic devices, McFarland and Wolpaw [19] concluded that the major problem currently facing BCI applications is providing fast, accurate, and reliable control signals. This is true for other uses of BCI as well. Current BCI systems that have been shown to operate using actual brain activity can provide communication and control options that are of practical value mainly for people who are severely limited in their motor skills and thus have few other options. Widespread use of BCI technology by individuals with little or no disability is unlikely in the short term. This would require much greater speed and accuracy than has so far been demonstrated in the scientific literature.

Both non-invasive and invasive methods could benefit from improvements in recording methods. Current invasive methods have not yet dealt adequately with the need for long-term performance stability. The brain’s complex reaction to an implant is still imperfectly understood and can impair long-term performance. Current non-invasive (i.e., EEG) electrodes require a certain level of skill in the person placing them and need periodic attention to maintain sufficiently good contact with the skin. More convenient and stable electrodes are under development. Improved methods for extracting key EEG features, for translating those features into device control, and for training users should also help to improve BCI performance.

Recent developments in computer hardware have provided compact portable systems that are extremely powerful. Use of digital electronics has also led to improvements in the size and performance of EEG amplifiers. Thus, it is no longer necessary to use a large time-shared mainframe as was the case with Vidal [34]. Standard laptops can easily handle the vast majority of real-time BCI protocols. There have also been improvements in signal processing and machine learning algorithms. Coupled with the discovery of new EEG features for BCI use and the development of new paradigms for user training, these improvements are gradually increasing the speed and reliability of BCI communication and control. These developments can be facilitated by the BCI2000 software platform [25].

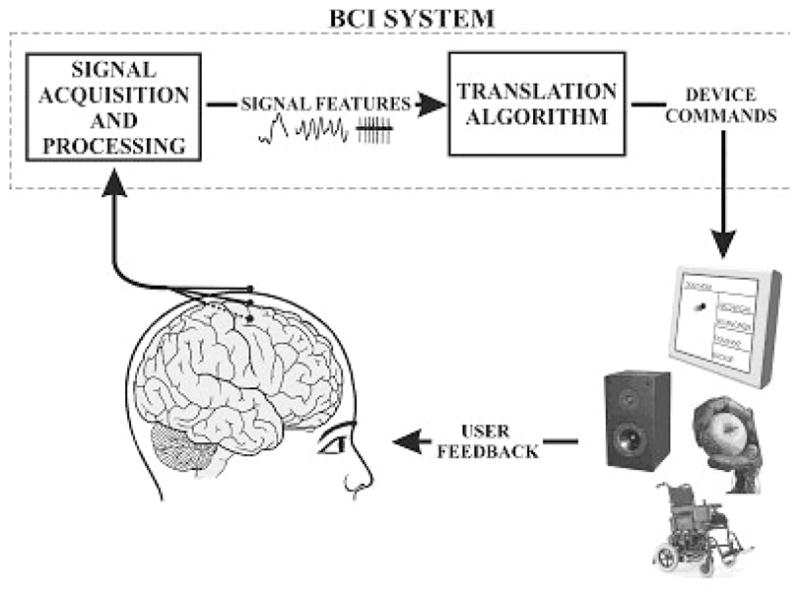

BCI2000 is a general-purpose research and development system that can incorporate, alone or in combination, any brain signals, signal processing methods, output devices, and operating protocols. BCI2000 consists of a general standard for creating interchangeable modules designed according to object-oriented principles (see Figure 5). These modules are a source module for signal acquisition, a signal processing module, and a user application. Configuration and coordination of these three modules is accomplished by a fourth, operator module. To date, several different source modules, signal processing modules, and user applications have been created for the BCI2000 standard (see http://www.bci2000.org/BCI2000/Home.html).
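The division of labor among the four modules can be sketched in a few lines. The classes and method names below are hypothetical and do not reproduce BCI2000's actual interfaces; they only mirror the structure described here and in the Figure 5 caption: an operator module configures the others, data flow from source to signal processing to application, and application state is passed back to the source (here simply logged alongside the data). The simulated signals and weights are illustrative.

```python
import numpy as np


class SourceModule:
    """Acquires blocks of (here simulated) EEG and logs state returned by the application."""
    def __init__(self, n_channels, block_size, seed=0):
        self.n_channels, self.block_size = n_channels, block_size
        self.rng = np.random.default_rng(seed)
        self.log = []                                   # state stored alongside the data

    def acquire(self):
        return self.rng.normal(size=(self.n_channels, self.block_size))

    def record(self, state):
        self.log.append(state)


class SignalProcessingModule:
    """Extracts features (mean power per channel) and translates them linearly."""
    def __init__(self, weights):
        self.weights = np.asarray(weights, dtype=float)

    def process(self, block):
        features = np.mean(block ** 2, axis=1)
        return float(self.weights @ features)


class ApplicationModule:
    """Turns the control signal into feedback, here a one-dimensional cursor position."""
    def __init__(self):
        self.position = 0.0

    def update(self, control):
        self.position += control
        return {"cursor_position": self.position}


class OperatorModule:
    """Configures the other three modules and runs the processing loop."""
    def __init__(self, config):
        self.source = SourceModule(config["n_channels"], config["block_size"])
        self.processing = SignalProcessingModule(config["weights"])
        self.application = ApplicationModule()

    def run(self, n_blocks):
        for _ in range(n_blocks):
            block = self.source.acquire()                 # source -> signal processing
            control = self.processing.process(block)      # signal processing -> application
            state = self.application.update(control)      # application -> back to source
            self.source.record(state)
        return self.application.position


bci = OperatorModule({"n_channels": 2, "block_size": 50, "weights": [0.5, -0.5]})
print(bci.run(n_blocks=20))
```

Because each module only depends on the narrow interface of its neighbor, any one of them (a different amplifier, a different feature extractor, a speller instead of a cursor) can be swapped without touching the others, which is the point of the interchangeable-module standard.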

Figure 5.

BCI2000 design. BCI2000 consists of four modules: operator, source, signal processing, and application. The operator module deals with system configuration and online presentation of results to the investigator. During operation, information is communicated from source to signal processing to user application and back to source (adapted from [25]).

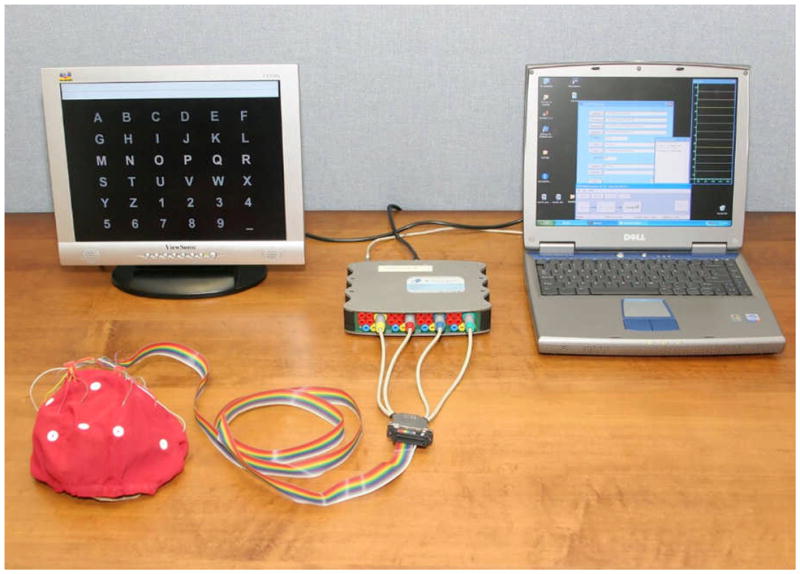

We have recently begun to develop a system for home use by individuals with severe motor impairments [32]. The basic hardware for this system is shown in Figure 4 and consists of a laptop computer with 16-channel EEG acquisition, a second screen that is placed in front of the user, and an electrode cap. Software for this system is provided by the BCI2000 general-purpose system [25]. The initial users of this system are individuals with late-stage ALS who have little or no voluntary movement and have found conventional assistive communication devices to be inadequate for their needs. We use the P300-based matrix speller for these applications due to its relatively high throughput for spelling and simplicity of use. Currently, a 49-year-old scientist with ALS has used this BCI system on a daily basis for approximately 3 years. He found it superior to his eye-gaze system (i.e., a letter selection device based upon direction of eye gaze) and uses the BCI 4–6 h/day for e-mail and other important purposes [32].

Figure 4.

Hardware used in our current home BCI system. It consists of a 16-channel electrode cap for signal recording, a solid-state amplifier, a laptop, and an additional monitor that provides the user’s display.

How far BCI technology will develop and how useful it will become depends on future research developments. At present, it is apparent that BCIs can serve the basic communication needs of people whose severe motor disabilities prevent them from using conventional augmentative communication devices, all of which require some muscle control.

Acknowledgments

This work was supported in part by grants from NIH (HD30146 (NCMRR, NICHD) and EB00856 (NIBIB & NINDS)) and the James S. McDonnell Foundation. We thank Chad Boulay and Peter Brunner for their comments on this manuscript.

Contributor Information

Dennis J. McFarland, Email: mcfarlan@wadsworth.org, Laboratory of Neural Injury and Repair, Wadsworth Center, New York State Department of Health, Albany, New York 12201-0509

Jonathan R. Wolpaw, Email: wolpaw@wadsworth.org, Laboratory of Neural Injury and Repair, Wadsworth Center, New York State Department of Health, Albany, New York 12201-0509

References

- 1. Bell CJ, Shenoy P, Chalodhorn R, Rao RPN. Control of a humanoid robot by a noninvasive brain-computer interface in humans. Journal of Neural Engineering. 2008;5:214–220. doi: 10.1088/1741-2560/5/2/012.

- 2. Black AH, Young GA, Batenchuk C. Avoidance training of hippocampal theta waves in flaxedilized dogs and its relation to skeletal movement. Journal of Comparative and Physiological Psychology. 1970;70:15–24. doi: 10.1037/h0028393.

- 3. Blankertz B, Muller K-R, Krusienski DJ, Schalk G, Wolpaw JR, Schlogl A, Pfurtscheller G, Millan J, Schroder M, Birbaumer N. The BCI competition III: Validating alternative approaches to actual BCI problems. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2006;14:153–159. doi: 10.1109/TNSRE.2006.875642.

- 4. Coyle SM, Ward TE, Markham CM. Brain-computer interface using a simplified functional near-infrared spectroscopy system. Journal of Neural Engineering. 2007;4:219–226. doi: 10.1088/1741-2560/4/3/007.

- 5. Curran E, Sykacek P, Stokes M, Roberts SJ, Penny W, Johnsrude I, Owen A. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2003;12:48–54.

- 6. Dewan EM. Occipital alpha rhythm eye position and lens accommodation. Nature. 1967;214:975–977. doi: 10.1038/214975a0.

- 7. Farwell LA, Donchin E. Talking off the top of your head: toward a mental prosthesis utilizing event-related brain potentials. Electroencephalography and Clinical Neurophysiology. 1988;70:510–523. doi: 10.1016/0013-4694(88)90149-6.

- 8. Fatourechi M, Bashashati A, Ward RK, Birch GE. EMG and EOG artifacts in brain computer interface systems: a survey. Clinical Neurophysiology. 2007;118:480–494. doi: 10.1016/j.clinph.2006.10.019.

- 9. Fetz EE. Operant conditioning of cortical unit activity. Science. 1969;163:955–958. doi: 10.1126/science.163.3870.955.

- 10. Galan F, Nuttin M, Lew E, Ferrez PW, Vanacker G, Philips J, Millan JdR. A brain-actuated wheelchair: Asynchronous and non-invasive Brain-computer interfaces for continuous control of robots. Clinical Neurophysiology. 2008;119:2159–2169. doi: 10.1016/j.clinph.2008.06.001.

- 11. He B, Liu Z. Multimodal functional neuroimaging: integrating functional MRI and EEG/MEG. IEEE Reviews in Biomedical Engineering. 2008;1:23–40. doi: 10.1109/RBME.2008.2008233.

- 12. Hochberg LR, Serruya MD, Friehs GM, Mukand JA, Saleh M, Caplan AH, Branner A, Penn RD, Donoghue JP. Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature. 2006;442:164–171. doi: 10.1038/nature04970.

- 13. Krusienski DJ, Sellers EW, McFarland DJ, Vaughan TM, Wolpaw JR. Toward enhanced P300 speller performance. Journal of Neuroscience Methods. 2008;167:15–21. doi: 10.1016/j.jneumeth.2007.07.017.

- 14. Leeb R, Friedman D, Muller-Putz GR, Scherer R, Slater M, Pfurtscheller G. Self-paced (Asynchronous) BCI control of a Wheelchair in virtual environments: A case study with a tetraplegic. Computational Intelligence and Neuroscience. 2007:79642. doi: 10.1155/2007/79642.

- 15. Leuthardt EC, Schalk G, Wolpaw JR, Ojemann JG, Moran DW. A brain-computer interface using electrocorticographic signals in humans. Journal of Neural Engineering. 2004;1:63–71. doi: 10.1088/1741-2560/1/2/001.

- 16. Lotte F, Congedo M, Lecuyer A, Lamarche F, Arnaldi B. A review of classification algorithms for EEG-based brain-computer interfaces. Journal of Neural Engineering. 2007;4:1–13. doi: 10.1088/1741-2560/4/2/R01.

- 17. McFarland DJ, Krusienski DJ, Wolpaw JR. Brain-computer interface signal processing at the Wadsworth Center: mu and sensorimotor beta rhythms. Progress in Brain Research. 2006;159:411–419. doi: 10.1016/S0079-6123(06)59026-0.

- 18. McFarland DJ, Sarnacki WA, Vaughan TM, Wolpaw JR. Brain-computer interface (BCI) operation: signal and noise during early training sessions. Clinical Neurophysiology. 2005;116:56–62. doi: 10.1016/j.clinph.2004.07.004.

- 19. McFarland DJ, Wolpaw JR. Brain-computer interface operation of robotic and prosthetic devices. Computer. 2008;41:48–52.

- 20. Mellinger J, Schalk G, Braun C, Preissl H, Rosenstiel W, Birbaumer N, Kubler A. An MEG-based brain-computer interface (BCI). Neuroimage. 2007;36:581–593. doi: 10.1016/j.neuroimage.2007.03.019.

- 21. Muller K-R, Blankertz B. Towards noninvasive brain-computer interfaces. IEEE Signal Processing Magazine. 2006;23:125–128.

- 22. Muller KR, Tangermann M, Dornhege G, Krauledat M, Curio GT, Blankertz B. Machine learning for real-time single-trial EEG-analysis: From brain-computer interfacing to mental state monitoring. Journal of Neuroscience Methods. 2008;167:82–90. doi: 10.1016/j.jneumeth.2007.09.022.

- 23. Niedermeyer E. Historical aspects. In: Niedermeyer E, Lopes da Silva F, editors. Electroencephalography: Basic principles, clinical applications, and related fields. 5th ed. Philadelphia: Lippincott Williams and Wilkins; 2005. pp. 1–15.

- 24. Pfurtscheller G, Flotzinger D, Kalcher J. Brain-computer interface - a new communication device for handicapped persons. Journal of Microcomputer Applications. 1993;16:293–299.

- 25. Schalk G, McFarland DJ, Hinterberger T, Birbaumer N, Wolpaw JR. BCI2000: A general-purpose brain-computer interface (BCI) system. IEEE Transactions on Biomedical Engineering. 2004;51:1034–1043. doi: 10.1109/TBME.2004.827072.

- 26. Scherer R, Muller GR, Neuper C, Graimann B, Pfurtscheller G. An asynchronously controlled EEG-based virtual keyboard: Improvement of the spelling rate. IEEE Transactions on Biomedical Engineering. 2004;51:979–984. doi: 10.1109/TBME.2004.827062.

- 27. Singer E. Brain games. Technology Review. 2008 July/August:82–84.

- 28. Sitaram R, Caria A, Veit R, Gaber T, Rota G, Kuebler A, Birbaumer N. fMRI Brain-Computer interface: A tool for Neuroscientific research and treatment. Computational Intelligence and Neuroscience. 2007:25487. doi: 10.1155/2007/25487.

- 29. Sterman MB, MacDonald LR, Stone RK. Biofeedback training of sensorimotor EEG in man and its effect on epilepsy. Epilepsia. 1974;15:395–416. doi: 10.1111/j.1528-1157.1974.tb04016.x.

- 30. Taylor DA, Helms Tillery S, Schwartz AB. Direct cortical control of 3D neuroprosthetic devices. Science. 2002;296:1829–1832. doi: 10.1126/science.1070291.

- 31. Townsend G, LaPallo BK, Boulay CB, Krusienski DJ, Frye GE, Hauser CK, Schwartz NE, Vaughan TM, Wolpaw JR, Sellers EW. A novel P300-based brain-computer interface stimulus presentation paradigm: Moving beyond rows and columns. Clinical Neurophysiology. 2010;121:1109–1120. doi: 10.1016/j.clinph.2010.01.030.

- 32. Vaughan TM, McFarland DJ, Schalk G, Sarnacki WA, Krusienski DJ, Sellers EW, Wolpaw JR. The Wadsworth BCI research and development program: At home with BCI. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2006;14:229–233. doi: 10.1109/TNSRE.2006.875577.

- 33. Vaughan TM, Miner LA, McFarland DJ, Wolpaw JR. EEG-based communication: analysis of concurrent EMG activity. Electroencephalography and Clinical Neurophysiology. 1998;107:428–433. doi: 10.1016/s0013-4694(98)00107-2.

- 34. Vidal JJ. Real-time detection of brain events in EEG. Proceedings of the IEEE. 1977;65:633–641.

- 35. Whitham EM, Lewis T, Pope KJ, Fitzgibbon SP, Clark CR, Loveless S, DeLosAngeles D, Wallace AK, Broberg M, Willoughby JO. Thinking activates EMG in scalp electrical recordings. Clinical Neurophysiology. 2008;119:1166–1175. doi: 10.1016/j.clinph.2008.01.024.

- 36. Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM. Brain-computer interfaces for communication and control. Clinical Neurophysiology. 2002;113:767–791. doi: 10.1016/s1388-2457(02)00057-3.

- 37. Wolpaw JR, McFarland DJ. Control of a two-dimensional movement signal by a non-invasive brain-computer interface. Proceedings of the National Academy of Sciences. 2004;101:17849–17854. doi: 10.1073/pnas.0403504101.

- 38. Wolpaw JR, McFarland DJ, Neat GW, Forneris CA. An EEG-based brain-computer interface for cursor control. Electroencephalography and Clinical Neurophysiology. 1991;78:252–259. doi: 10.1016/0013-4694(91)90040-b.