Abstract

Defocus blur is nearly always present in natural images: Objects at only one distance can be perfectly focused. Images of objects at other distances are blurred by an amount depending on pupil diameter and lens properties. Despite the fact that defocus is of great behavioral, perceptual, and biological importance, it is unknown how biological systems estimate defocus. Given a set of natural scenes and the properties of the vision system, we show from first principles how to optimally estimate defocus at each location in any individual image. We show for the human visual system that high-precision, unbiased estimates are obtainable under natural viewing conditions for patches with detectable contrast. The high quality of the estimates is surprising given the heterogeneity of natural images. Additionally, we quantify the degree to which the sign ambiguity often attributed to defocus is resolved by monochromatic aberrations (other than defocus) and chromatic aberrations; chromatic aberrations fully resolve the sign ambiguity. Finally, we show that simple spatial and spatio-chromatic receptive fields extract the information optimally. The approach can be tailored to any environment–vision system pairing: natural or man-made, animal or machine. Thus, it provides a principled general framework for analyzing the psychophysics and neurophysiology of defocus estimation in species across the animal kingdom and for developing optimal image-based defocus and depth estimation algorithms for computational vision systems.

Keywords: optics, sensor sampling, Bayesian statistics, depth perception, auto-focus

In a vast number of animals, vision begins with lens systems that focus and defocus light on the retinal photoreceptors. Lenses focus light perfectly from only one distance, and natural scenes contain objects at many distances. Thus, defocus information is nearly always present in images of natural scenes. Defocus information is vital for many natural tasks: depth and scale estimation (1, 2), accommodation control (3, 4), and eye growth regulation (5, 6). However, little is known about the computations visual systems use to estimate defocus in images of natural scenes. The computer vision and engineering literatures describe algorithms for defocus estimation (7, 8). However, they typically require simultaneous multiple images (9–11), special lens apertures (11, 12), or light with known patterns projected onto the environment (9). Mammalian visual systems usually lack these advantages. Thus, these methods cannot serve as plausible models of defocus estimation in many visual systems.

Although defocus estimation is but one problem faced by vision systems, few estimation problems have broader scope. Vision scientists have developed models for how defocus is used as a cue to depth and have identified stimulus factors that drive accommodation (biological autofocusing). Neurobiologists have identified defocus cues and transcription factors that trigger eye growth, a significant contributor to the development of near-sightedness (5). Comparative physiologists have established accommodation's role in predatory behavior across the animal kingdom, in species as diverse as the chameleon (13) and the cuttlefish (14). Engineers have developed methods for autofocusing camera lenses and automatically estimating depth from defocus across an image. However, there is no widely accepted formal theory for how defocus information should be extracted from individual natural images. The absence of such a theory constitutes a significant theoretical gap.

Here, we describe a principled approach for estimating defocus in small regions of individual images, given a training set of natural images, a wave-optics model of the lens system, a sensor array, and a specification of noise and processing inefficiencies. We begin by considering a vision system with diffraction- and defocus-limited optics, a sensor array sensitive only to one wavelength of light, and sensor noise consistent with human detection thresholds. We then consider more realistic vision systems that include human monochromatic aberrations, human chromatic aberrations, and sensors similar to human photoreceptors.

The defocus of a target region is defined as the difference between the lens system's current power and the power required to bring the target region into focus,

$$\Delta D = D_{\text{focus}} - D_{\text{target}} \tag{1}$$

where $\Delta D$ is the defocus, $D_{\text{focus}}$ is the current power, and $D_{\text{target}}$ is the power required to image the target sharply, expressed in diopters (1/m). The goal is to estimate $\Delta D$ in each local region of an image.
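As a worked illustration of this definition, take the 40-cm focus distance used later in Methods and an assumed target at 1 m (the target distance is chosen here only for the arithmetic). Writing the current power as $D_{\text{focus}}$, the power needed for the target as $D_{\text{target}}$, and their difference as $\Delta D$:

$$D_{\text{focus}} = \frac{1}{0.4\ \text{m}} = 2.5\ \text{D}, \qquad D_{\text{target}} = \frac{1}{1\ \text{m}} = 1.0\ \text{D}, \qquad \Delta D = 2.5 - 1.0 = +1.5\ \text{D}$$

The positive value corresponds to an eye focused in front of (nearer than) the target; a target at 0.25 m would instead give $\Delta D = 2.5 - 4.0 = -1.5$ D.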

Estimating defocus, like many visual estimation tasks, suffers from the “inverse optics” problem: It is impossible to determine with certainty, from the image alone, whether image blur is due to defocus or some property of the scene (e.g., fog). Defocus estimation also suffers from a sign ambiguity: Under certain conditions, point targets at the same dioptric distances nearer or farther than the focus distance are imaged identically. However, numerous constraints exist that can make a solution possible. Previous work has not taken an integrative approach that capitalizes on all of these constraints.

Results

The information for defocus estimation is jointly determined by the properties of natural scenes, the optical system, the sensor array, and sensor noise. The input from a natural scene can be represented by an idealized image  that gives the radiance at each location

that gives the radiance at each location  in the plane of the sensor array, for each wavelength

in the plane of the sensor array, for each wavelength  . An optical system degrades the idealized image and can be represented by a point-spread function

. An optical system degrades the idealized image and can be represented by a point-spread function  , which gives the spatial distribution of light across the sensor array produced by a point target of wavelength

, which gives the spatial distribution of light across the sensor array produced by a point target of wavelength  and defocus

and defocus  . The sensor array is represented by a wavelength sensitivity function

. The sensor array is represented by a wavelength sensitivity function  and a spatial sampling function

and a spatial sampling function  for each class of sensor

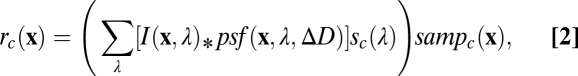

for each class of sensor  . Combining these factors gives the spatial pattern of responses in a given class of sensor,

. Combining these factors gives the spatial pattern of responses in a given class of sensor,

|

where  represents 2D convolution in

represents 2D convolution in  . Noise and processing inefficiencies then corrupt these sensor responses. The goal is to estimate defocus from the noisy sensor responses in the available sensor classes.

. Noise and processing inefficiencies then corrupt these sensor responses. The goal is to estimate defocus from the noisy sensor responses in the available sensor classes.
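The sensor-response computation described above (blur each wavelength plane with its point-spread function, weight by the sensor's wavelength sensitivity, sum over wavelength, then sample) can be sketched in a few lines of NumPy. This is a minimal sketch, not the authors' code: the function name `sensor_response`, the array layout, and the use of circular (FFT-based) convolution are all assumptions made for illustration.

```python
import numpy as np

def sensor_response(image, psfs, sensitivity, sample_mask):
    """Noise-free response pattern of one sensor class (hypothetical helper).

    image       : (n_wavelengths, H, W) idealized radiance, one plane per wavelength
    psfs        : (n_wavelengths, H, W) point-spread functions, centered at [H//2, W//2]
    sensitivity : (n_wavelengths,) wavelength sensitivity of this sensor class
    sample_mask : (H, W) array of 0/1 marking where this sensor class samples
    """
    # Move each PSF center to the array origin, then convolve via the FFT.
    # Assumption: circular convolution is acceptable for the sketch.
    otfs = np.fft.fft2(np.fft.ifftshift(psfs, axes=(-2, -1)))
    blurred = np.real(np.fft.ifft2(np.fft.fft2(image) * otfs))
    # Weight each wavelength plane by the sensitivity and sum over wavelength.
    weighted = np.tensordot(sensitivity, blurred, axes=(0, 0))
    # Spatial sampling by the sensor mosaic.
    return weighted * sample_mask
```

Noise and thresholding are deliberately left out; they would be applied to the returned array.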

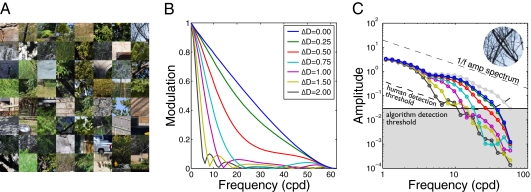

The first factor determining defocus information is the statistical structure of the input images from natural scenes. These statistics must be determined by empirical measurement. The most accurate method would be to measure full radiance functions $I(\mathbf{x}, \lambda)$ with a hyperspectral camera. However, well-focused, calibrated digital photographs were used as approximations to full radiance functions. This approach is sufficiently accurate for the present purposes (SI Methods and Fig. S1); in fact, it is preferred because hyperspectral images are often contaminated by motion blur. Eight hundred 128 × 128 pixel input patches were randomly sampled from 80 natural scenes containing trees, shrubs, grass, clouds, buildings, roads, cars, etc.; 400 were used for training and the other 400 for testing (Fig. 1A).

Fig. 1.

Natural scene inputs and the effect of defocus in a diffraction- and defocus-limited vision system. (A) Examples of natural inputs. (B) Optical effect of defocus. Curves show one-dimensional modulation transfer functions (MTFs), the radially averaged Fourier amplitude spectra of the point-spread functions. (C) Radially averaged amplitude spectra of the top-rightmost patch in A. Circles indicate the mean amplitude in each radial bin. Light gray circles show the spectrum of the idealized natural input. The dashed black curve shows the human neural detection threshold.

The next factor is the optical system, which is characterized by the point-spread function. The term for the point-spread function in Eq. 2 can be expanded to make the factors determining its form more explicit,

$$\mathrm{psf}(\mathbf{x}, \lambda, \Delta D) \propto \left| \mathcal{F}\left\{ A(\mathbf{z}) \exp\left[ i \frac{2\pi}{\lambda} W(\mathbf{z}, \lambda, \Delta D) \right] \right\} \right|^2 \tag{3}$$

where $\mathcal{F}$ denotes the Fourier transform, $A(\mathbf{z})$ specifies the shape, size, and transmittance of the pupil aperture, and $W(\mathbf{z}, \lambda, \Delta D)$ is a wave aberration function, which depends on the position $\mathbf{z}$ in the plane of the aperture, the wavelength of light, the defocus level, and other aberrations introduced by the lens system (15). The aperture function determines the effect of diffraction on the image quality. The wave aberration function determines degradations in image quality not attributable to diffraction. A perfect lens system (i.e., limited only by diffraction and defocus) converts light originating from a point on a target object to a converging spherical wavefront. The wave aberration function describes how the actual converging wavefront differs from a perfect spherical wavefront at each point in the pupil aperture. The human pupil is circular and its minimum diameter under bright daylight conditions is ∼2 mm (16); this pupil shape and size are assumed throughout the paper. Note that a 2-mm pupil is conservative because defocus information increases (depth-of-focus decreases) as pupil size increases.
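For the diffraction- and defocus-limited case, the point-spread function can be computed numerically from the pupil aperture and the wave aberration function. The sketch below assumes a circular 2-mm pupil, 570-nm light, and the standard paraxial defocus wavefront error (defocus in diopters times squared pupil radius, divided by two); that expression, the function name `defocus_psf`, and the grid size and padding are assumptions for illustration, not details given in the text.

```python
import numpy as np

def defocus_psf(defocus_d, wavelength_m=570e-9, pupil_diameter_m=2e-3,
                n=256, pad=4):
    """Normalized PSF of a diffraction- and defocus-limited system (sketch).

    The returned array is centered at [n//2, n//2]; its angular sampling
    depends on n, pad, and wavelength and is not calibrated here.
    """
    # Pupil-plane grid, exactly symmetric about zero, spanning pad pupil diameters.
    step = pad * pupil_diameter_m / n
    coords = (np.arange(n) - n // 2) * step
    zx, zy = np.meshgrid(coords, coords)
    r2 = zx ** 2 + zy ** 2
    # Aperture function: uniform transmittance inside a circular pupil.
    aperture = (r2 <= (pupil_diameter_m / 2.0) ** 2).astype(float)
    # Assumed paraxial defocus wavefront error, in meters.
    wave_aberration = defocus_d * r2 / 2.0
    # Generalized pupil function: aperture times the aberration phase factor.
    pupil = aperture * np.exp(1j * 2.0 * np.pi * wave_aberration / wavelength_m)
    # PSF is the squared magnitude of the Fourier transform of the pupil function.
    field = np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(pupil)))
    psf = np.abs(field) ** 2
    return psf / psf.sum()
```

With a symmetric aperture and no other aberrations, `defocus_psf(0.5)` and `defocus_psf(-0.5)` coincide, which is the sign ambiguity discussed in the text.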

The next factor is the sensor array. Two sensor arrays were considered: an array having a single sensor class sensitive only to 570 nm and an array having two sensor classes with the spatial sampling and wavelength sensitivities of the human long-wavelength (L) and short-wavelength (S) cones (17). (A system sensitive only to one wavelength will be insensitive to chromatic aberrations.) Human foveal cones sample the retinal image at ∼128 samples/degree; this rate is assumed throughout the paper. Thus, the 128 × 128 pixel input patches have a visual angle of 1 degree.

The last factor determining defocus information is the combined effect of photon noise, system noise, and processing inefficiencies. We represent this factor in our algorithm by applying a threshold determined from human psychophysical detection thresholds (18). (For the analyses that follow, we found that applying a threshold has a nearly identical effect to adding noise.)

The proposed computational approach is based on the well-known fact that defocus affects the spatial Fourier spectrum of sensor responses. Here, we consider only amplitude spectra (19), although the approach can be generalized to include phase spectra. There are two steps to the approach: (i) Discover the spatial frequency filters that are most diagnostic of defocus and (ii) determine how to use the filter responses to obtain continuous defocus estimates. A perfect lens system attenuates the amplitude spectrum of the input image equally in all orientations. Hence, to estimate the spatial frequency filters it is reasonable, although not required, to average across orientation. Fig. 1B shows how spatial frequency amplitudes are modulated by different magnitudes of defocus (i.e., modulation transfer functions). Fig. 1C shows the effect of defocus on the amplitude spectrum of a sampled retinal image patch; higher spatial frequencies become more attenuated as defocus magnitude increases. The gray boundary represents the detection threshold imposed on our algorithm. For any given image patch, the shape of the spectrum above the threshold would make it easy to estimate the magnitude of defocus of that patch. However, the substantial variation of local amplitude spectra in natural images makes the task difficult. Hence, we seek filters tuned to spatial frequency features that are optimally diagnostic of the level of defocus, given the variation in natural image patches.

To discover these filters, we use a recently developed statistical learning algorithm called accuracy maximization analysis (AMA) (20). As long as the algorithm does not get stuck in local minima, it finds the Bayes-optimal feature dimensions (in rank order) for maximizing accuracy in a given identification task (see http://jburge.cps.utexas.edu/research/Code.html for Matlab implementation of AMA). We applied this algorithm to the task of identifying the defocus level, from a discrete set of levels, of sampled retinal image patches. The number of discrete levels was picked to allow accurate continuous estimation (SI Methods). Specifically, a random set of natural input patches was passed through a model lens system at defocus levels between 0 and 2.25 diopters, in 0.25-diopter steps, and then sampled by the sensor array. Each sampled image patch was then converted to a contrast image by subtracting and dividing by the mean. Next, the contrast image was windowed by a raised cosine (0.5 degrees at half height) and fast-Fourier transformed. Finally, the log of its radially averaged squared amplitude (power) spectrum was computed and normalized to a mean of zero and vector magnitude of 1.0. [The log transform was used because it nearly equalizes the standard deviation (SD) of the amplitude in each radial bin (Fig. S2). Other transforms that equalize variability, such as frequency-dependent gain control, yield comparable performance.] Four thousand normalized amplitude spectra (400 natural inputs × 10 defocus levels) constituted the training set for AMA.
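The preprocessing steps listed above (contrast image, raised-cosine window, Fourier transform, radially averaged log power spectrum, normalization) can be sketched as follows. This is an illustrative reading of the description, not the published implementation: the function name, the integer radial binning in cycles per patch, and the omission of the detection threshold are assumptions, and a square patch with an even side length is assumed.

```python
import numpy as np

def normalized_log_spectrum(patch):
    """Radially averaged, normalized log power spectrum of a square patch (sketch)."""
    patch = np.asarray(patch, dtype=float)
    n = patch.shape[0]  # assumed even, e.g. 128 pixels = 1 degree
    # Contrast image: subtract and divide by the mean.
    contrast = (patch - patch.mean()) / patch.mean()
    # Radial raised-cosine window reaching half height at radius n/4,
    # i.e. full width at half height = half the patch (0.5 degrees for a 1-degree patch).
    ax = np.arange(n) - n // 2
    xx, yy = np.meshgrid(ax, ax)
    r = np.hypot(xx, yy)
    window = np.where(r <= n / 2, 0.5 * (1.0 + np.cos(np.pi * r / (n / 2))), 0.0)
    # Power spectrum with zero frequency shifted to index [n//2, n//2].
    power = np.abs(np.fft.fftshift(np.fft.fft2(contrast * window))) ** 2
    # After fftshift the same grid gives frequency radius in cycles per patch.
    freq_radius = np.rint(r).astype(int)
    radial_power = np.array([power[freq_radius == k].mean()
                             for k in range(1, n // 2 + 1)])
    # Log transform, then normalize to zero mean and unit vector magnitude.
    log_spec = np.log(radial_power)
    log_spec = log_spec - log_spec.mean()
    return log_spec / np.linalg.norm(log_spec)
```

For a 128 × 128 patch this returns a 64-element vector, one value per radial frequency bin from 1 to 64 cycles per degree.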

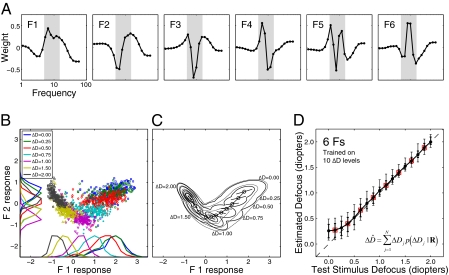

Fig. 2A shows the six most useful defocus filters for a vision system having diffraction- and defocus-limited optics and sensors that are sensitive only to 570 nm light. The filters have several interesting features. First, they capture most of the relevant information; additional filters add little to overall accuracy. Second, they provide better performance than filters based on principal components analysis or matched templates (Fig. S3). Third, they are relatively smooth and hence could be implemented by combining a few simple, center-surround receptive fields like those found in retina or primary visual cortex. Fourth, the filter energy is concentrated in the 5–15 cycles per degree (cpd) frequency range, which is similar to the range known to drive human accommodation (4–8 cpd) (21–23).

Fig. 2.

Optimal filters and defocus estimation. (A) The first six AMA filters. Filter energy is concentrated in a limited frequency range (shaded area). (B) Filter responses to amplitude spectra in the training set (1.25, 1.75, and 2.25 diopters not plotted). Symbols represent joint responses from the two most informative filters. Marginal distributions are shown on each axis. (C) Gaussian fits to filter responses. Thick lines are iso-likelihood contours on the maximum-likelihood surface determined from fits to the response distributions at trained defocus levels. Thin lines are iso-likelihood contours on interpolated response distributions (SI Methods). Circles indicate interpolated means separated by a d′ (i.e., Mahalanobis distance) of 1. Line segments show the direction of principal variance and ±1 SD. (D) Defocus estimates for test stimuli. Circles represent the mean defocus estimate for each defocus level. Error bars represent 68% (thick bars) and 90% (thin bars) confidence intervals. Boxes indicate defocus levels not in the training set. The equal-sized error bars at both trained and untrained levels indicate that the algorithm outputs continuous estimates.

The AMA filters encode information in local amplitude spectra relevant for estimating defocus. However, the Bayesian decoder built into the AMA algorithm can be used only with the training stimuli, because that decoder needs access to the mean and variance of each filter's response to each stimulus (20). In other words, AMA finds only the optimal filters.

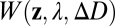

The next step is to combine (pool) the filter responses to estimate defocus in arbitrary image patches, having arbitrary defocus. We take a standard approach. First, the joint probability distribution of filter responses to natural image patches is estimated for the defocus levels in the training set. For each defocus level, the filter responses are fit with a Gaussian by calculating the sample mean and covariance matrix. Fig. 2B shows the joint distribution of the first two AMA filter responses for several levels of defocus. Fig. 2C shows contour plots of the fitted Gaussians. Second, given the joint distributions (which are six dimensional, one dimension for each filter), defocus estimates are obtained with a weighted summation formula

$$\Delta \hat{D} = \sum_{j=1}^{N} \Delta D_j \, p(\Delta D_j \mid \mathbf{R}) \tag{4}$$

where $\Delta D_j$ is one of the N trained defocus levels, and $p(\Delta D_j \mid \mathbf{R})$ is the posterior probability of that defocus level given the observed vector of filter responses $\mathbf{R}$. The response vector is given by the dot product of each filter with the normalized, logged amplitude spectrum. The posterior probabilities are obtained by applying Bayes’ rule to the fitted Gaussian probability distributions (SI Methods). Eq. 4 gives the Bayes optimal estimate when the goal is to minimize the mean-squared error of the estimates and when N is sufficiently large, which it is in our case (SI Methods).
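The pooling rule described above (fit a Gaussian to the filter responses at each defocus level, apply Bayes' rule, and take the posterior-weighted sum of the levels) might be sketched as below. The function names are hypothetical, and a flat prior over defocus levels is an assumption of this sketch; the text defers the details of the posterior computation to SI Methods.

```python
import numpy as np

def fit_gaussians(responses_by_level):
    """Sample mean and covariance of filter responses at each defocus level.

    responses_by_level : list of (n_patches, n_filters) arrays, one per level.
    """
    return [(r.mean(axis=0), np.cov(r, rowvar=False)) for r in responses_by_level]

def log_gaussian(x, mean, cov):
    """Log density of a multivariate Gaussian at x."""
    diff = x - mean
    _, logdet = np.linalg.slogdet(cov)
    maha = diff @ np.linalg.solve(cov, diff)
    return -0.5 * (maha + logdet + len(x) * np.log(2.0 * np.pi))

def estimate_defocus(response, levels, gaussians):
    """Posterior-weighted sum of defocus levels for one response vector."""
    log_like = np.array([log_gaussian(response, m, c) for m, c in gaussians])
    # Assumption: flat prior over levels, so the posterior is the normalized likelihood.
    posterior = np.exp(log_like - log_like.max())
    posterior /= posterior.sum()
    return float(np.dot(levels, posterior))
```

Because the estimate is a weighted average over levels, it can fall between the levels at which the Gaussians were fit, which is what allows continuous output from a discrete set of levels.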

Defocus estimates for the test patches are plotted as a function of defocus in Fig. 2D for our initial case of a vision system having perfect optics and a single class of sensor. None of the test patches were in the training set. Performance is quite good. Precision is high and bias is low once defocus exceeds ∼0.25 diopters, roughly the defocus detection threshold in humans (21, 24). Precision decreases at low levels of defocus because a modest change in defocus (e.g., 0.25 diopters) does not change the amplitude spectra significantly when the base defocus is zero; more substantial changes occur when the base defocus is nonzero (24, 25) (Fig. 1C). The bias near zero occurs because in vision systems having perfect optics and sensors sensitive only to a single wavelength, positive and negative defocus levels of identical magnitude yield identical amplitude spectra. Thus, the bias is due to a boundary effect: Estimation errors can be made above but not below zero.

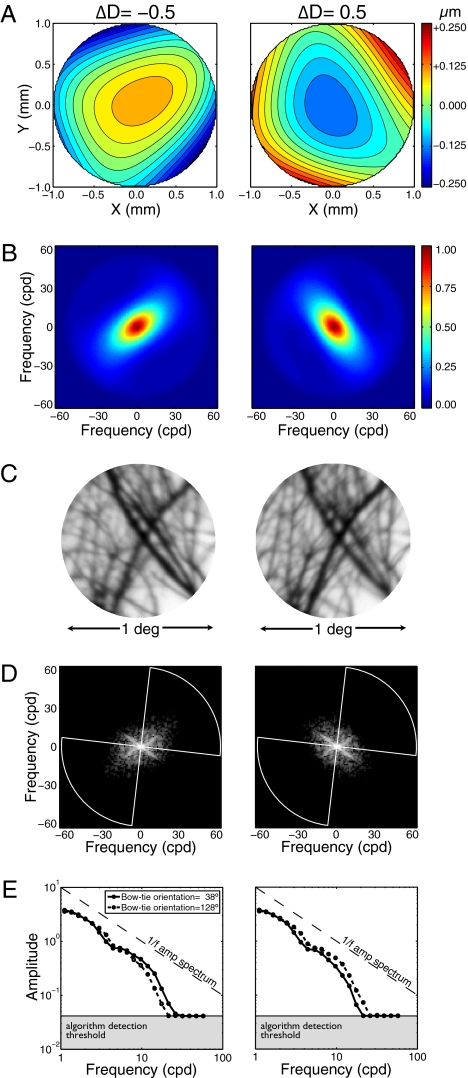

Now, consider a biologically realistic lens system having monochromatic aberrations (e.g., astigmatic and spherical). Although such lens systems reduce the quality of the best-focused image, they can introduce information useful for recovering defocus sign (26). To examine this possibility, we changed the optical model to include the monochromatic aberrations of human eyes. Aberration maps for two defocus levels are shown for the first author's right eye (Fig. 3A). At the time the first author's optics were measured, he had 20/20 acuity and 0.17 diopters of astigmatism, and his higher-order aberrations were about equal in magnitude to his astigmatism (Table S1). Spatial frequency attenuation due to the lens optics now differs as a function of the defocus sign. When focused behind the target (negative defocus), the eye's 2D modulation transfer function (MTF) is oriented near the positive oblique; when focused in front (positive defocus), the MTF has the opposite orientation (Fig. 3B). Image features oriented orthogonally to the MTF's dominant orientation are imaged more sharply. This effect is seen in the sampled retinal image patches (Fig. 3C) and in their corresponding 2D amplitude spectra (Fig. 3D).

Fig. 3.

Effect of defocus sign in a vision system with human monochromatic aberrations. (A) Wavefront aberration functions of the first author's right eye for −0.5 and +0.5 diopters of defocus (x and y represent location in the pupil aperture). Color indicates wavefront errors in micrometers. (B) Corresponding 2D MTFs. Orientation differences are due primarily to astigmatism. Color indicates transfer magnitude. (C) Image patch defocused by −0.5 and +0.5 diopters. Relative sharpness of differently oriented image features changes as a function of defocus sign. (D) Logged 2D-sampled retinal image amplitude spectra. The spectra were radially averaged within two “bowties” (one shown, white lines) that were centered on the dominant orientations of the negatively and positively defocused MTFs (SI Methods). (E) Thresholded bowtie amplitude spectra. Curves show the bowtie amplitude spectra at the dominant orientations of the negatively and positively defocused MTFs (solid and dashed curves, respectively).

Many monochromatic aberrations in human optics contribute to the effect of defocus sign on the MTF, but astigmatism—the difference in lens power along different lens meridians—is the primary contributor (27). Interestingly, astigmatism is deliberately added to the lenses in compact disc players to aid their autofocus devices.

To examine whether orientation differences can be exploited to recover defocus sign, optimal AMA filters were relearned for vision systems having the optics of specific eyes and the same single-sensor array as before. There were two procedural differences: (i) Instead of averaging radially across all orientations, the spectra were radially averaged in two orthogonal “bowties” (Fig. 3D) centered on the MTF's dominant orientation (SI Methods) for each sign of defocus (Fig. 3E). (ii) The same training natural inputs were passed through the optics at defocus levels ranging from −2.25 to 2.25 diopters in 0.25-diopter steps, yielding 7,600 thresholded spectra (400 natural inputs × 19 defocus levels).
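One way to restrict radial averaging to an orientation "bowtie" is to build a boolean mask over the 2D spectrum and average only the bins it selects. The sketch below is an assumption-laden illustration: the bowtie half-width (22.5 degrees here), the orientation convention, and the function name are not specified in the text, which defers the details to SI Methods.

```python
import numpy as np

def bowtie_mask(n, center_deg, half_width_deg=22.5):
    """Boolean mask selecting an orientation bowtie in an fftshifted n x n spectrum."""
    ax = np.arange(n) - n // 2
    fx, fy = np.meshgrid(ax, ax)
    theta = np.degrees(np.arctan2(fy, fx))
    # Orientation is defined modulo 180 degrees, so both wedges of the bowtie match.
    diff = (theta - center_deg + 90.0) % 180.0 - 90.0
    mask = np.abs(diff) <= half_width_deg
    # Exclude the zero-frequency (DC) bin.
    mask[n // 2, n // 2] = False
    return mask
```

Two masks centered 90 degrees apart, for example `bowtie_mask(128, 45.0)` and `bowtie_mask(128, 135.0)`, select disjoint sets of frequency bins; averaging the power spectrum within each mask, binned by radial frequency, would yield the two bowtie spectra.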

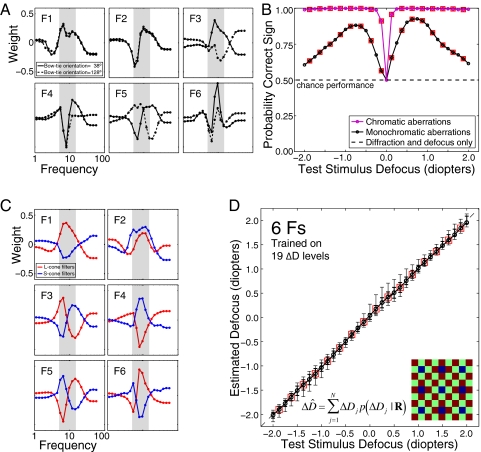

The filters for the first author's right eye (Fig. 4A) yield estimates of defocus magnitude similar in accuracy to those in Fig. 2D (Fig. S4A). Importantly, the filters now extract information about defocus sign. Fig. 4B (black curve) shows the proportion of test stimuli where the sign of the defocus estimate was correct. Although performance was well above chance, a number of errors occurred. Similar performance was obtained with “standard observer” optics (28); better performance was obtained with the first author's left eye, which has more astigmatism. Thus, a vision system with human monochromatic aberrations and a single sensor class can estimate both the magnitude and the sign of defocus with reasonable accuracy.

Fig. 4.

Optimal filters and defocus estimates for vision systems with human monochromatic or chromatic aberrations. (A) Optimal filters for a vision system with the optics of the first author's right eye and a sensor array sensitive only to 570 nm light. Solid lines show filter sensitivity to orientations in the “bowtie” centered on the dominant orientation of the negatively defocused MTF (Fig. 3 D and E). Dotted lines show filter sensitivities to the other orientations. (B) Defocus sign identification. The black curve shows performance for a vision system with the first author's monochromatic aberrations. The magenta curve shows performance for a system sensitive to chromatic aberration. (C) Optimal filters for the system sensitive to chromatic aberrations. Red curves show L-cone filters. Blue curves show S-cone filters. Inset in D shows the rectangular mosaic of L (red), M (green), and S (blue) cones used to sample the retinal images (57, 57, and 14 samples/degree, respectively). M-cone responses were not used in the analysis. (D) Defocus estimates using the filters in C. Error bars represent the 68% (thick bars) and 90% (thin bars) confidence intervals on the estimates. Boxes mark defocus levels not in the training set. Error bars at untrained levels are as small as at trained levels, indicating that the algorithm makes continuous estimates.

Finally, consider a vision system with two sensor classes, each with a different wavelength sensitivity function. In this vision system, chromatic aberrations can be exploited. It has long been recognized that chromatic aberrations provide a signed cue to defocus (29, 30). The human eye's refractive power changes by ∼1 diopter between 570 and 445 nm (31), the peak sensitivities of the L and S cones. Typically, humans focus the 570-nm wavelength of broadband targets most sharply (32). Therefore, when the eye is focused on or in front of a target, the L-cone image is sharper than the S-cone image; the opposite is true when the lens is focused sufficiently behind the target. Chromatic aberration thus introduces sign information in a manner similar to astigmatism. Whereas astigmatism introduces a sign-dependent statistical tendency for amplitudes at some orientations to be greater than others, chromatic aberration introduces a sign-dependent tendency for one sensor class to have greater amplitudes than the other.
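The sign cue from chromatic aberration can be illustrated with a toy calculation. The sketch assumes that the S-cone image is defocused by exactly 1 diopter more (in the positive direction) than the L-cone image, and that the image with the smaller defocus magnitude is the sharper one; both are simplifications, and the function name is hypothetical.

```python
def sharper_cone(defocus_l_d, chromatic_offset_d=1.0):
    """Return which cone class ('L' or 'S') receives the sharper image (toy model).

    defocus_l_d        : signed defocus of the L-cone (570 nm) image, in diopters
    chromatic_offset_d : assumed extra power at the S-cone peak wavelength, in diopters
    """
    # Assumption: short wavelengths see more refractive power, shifting defocus positively.
    defocus_s_d = defocus_l_d + chromatic_offset_d
    # Assumption: blur grows with defocus magnitude, so smaller magnitude means sharper.
    return "L" if abs(defocus_l_d) <= abs(defocus_s_d) else "S"
```

Under these assumptions the L-cone image is sharper whenever the L-cone defocus is zero or positive, and the S-cone image becomes sharper once the L-cone defocus falls below −0.5 diopters, matching the qualitative pattern described in the text.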

Optimal AMA filters were learned again, this time for a vision system with diffraction, defocus, chromatic aberrations, and sensors with spatial sampling and wavelength sensitivities similar to human cones. In humans, S cones have ∼1/4 the sampling rate of L and medium wavelength (M) cones (33). We sampled the retinal image with a rectangular cone mosaic similar to the human cone mosaic. For simplicity, M-cone responses were not used in the analysis. The amplitude spectra from L and S sensors were radially averaged because the optics are again radially symmetric. Optimal filters are shown in Fig. 4C. Cells with similar properties (i.e., double chromatically opponent, spatial-frequency bandpass receptive fields tuned to the same frequency) have been reported in primate early visual cortex (34, 35). Such cells would be well suited to estimating defocus (30).

A vision system sensitive to chromatic aberration yields unbiased defocus estimates with high precision (∼ ±1/16 diopters) over a wide range (Fig. 4D). Sensitivity to chromatic aberrations also allows the sign of defocus to be identified with near 100% accuracy (Fig. 4B, magenta curve). The usefulness of chromatic aberrations is due to at least three factors. First, the ∼1-diopter defocus difference between the L- and S-cone images produces a larger signal than the difference due to the monochromatic aberrations in the analyzed eyes (Fig. S5; compare with Fig. 3E). Second, natural L- and S-cone input spectra are more correlated than the spectra in the orientation bowties (Fig. S6); the greater the correlation between spectra is, the more robust the filter responses are to variability in the shape of input spectra. Third, small defocus changes are easier to discriminate in images that are already somewhat defocused (21, 24). Thus, when the L-cone image is perfectly focused, S-cone filters are more sensitive to changes in defocus, and vice versa. In other words, chromatic aberrations ensure that at least one sensor will always be in its “sweet spot”.

How sensitive are these results to the assumptions about the spatial sampling of L and S cones? To find out, we changed our third model vision system so that both L and S cones had full resolution (i.e., 128 samples/degree each). We found similar filters and only a small performance benefit (Fig. S7). Thus, defocus estimation performance is robust to large variations in the spatial sampling of human cones.

Some assumptions implicit in our analysis were not fully consistent with natural scene statistics. One assumption was that the statistical structure of natural scenes is invariant with viewing distance (36). Another was that there is no depth variation within image patches, which is not true of many locations in natural scenes. Rather, defocus information was consistent with planar fronto-parallel surfaces displaced from the focus distance. Note, however, that the smaller the patch is (in our case, 0.5 degrees at half height), the less the effect of depth variation. Nonetheless, an important next step is to analyze a database of luminance-range images so that the effect of within-patch depth variation can be accounted for. Other aspects of our analysis were inconsistent with the human visual system. For instance, we used a fixed 2-mm pupil diameter. Human pupil diameter increases as light level decreases; it fluctuates slightly even under steady illumination. We tested how well the filters in Fig. 4 can be used to estimate defocus in images obtained with other pupil diameters. The filters are robust to changes in pupil diameter (Fig. S4 A and B). Importantly, none of these details affect the qualitative findings or main conclusions.

We stress that our aim has been to show how to characterize and extract defocus information from natural images, not to provide an explicit model of human defocus estimation. That problem is for future work.

Our results have several implications. First, they demonstrate that excellent defocus information (including sign) is available in natural images captured by the human visual system. Second, they suggest principled hypotheses (local filters and filter response pooling rules) for how the human visual system should encode and decode defocus information. Third, they provide a rigorous benchmark against which to evaluate human performance in tasks involving defocus estimation. Fourth, they demonstrate the potential value of this approach for any organism with a visual system. Finally, they demonstrate that it should be possible to design useful defocus estimation algorithms for digital imaging systems without the need for specialized hardware. For example, incorporating the optics, sensors, and noise of digital cameras into our framework could lead to improved methods for autofocusing.

Defocus information is even more widely available in the animal kingdom than binocular disparity. Only some sighted animals have visual fields with substantial binocular overlap, but nearly all have lens systems that image light on their photoreceptors. Our results show that sufficient signed defocus information exists in individual natural images for defocus to function as an absolute depth cue once pupil diameter and focus distance are known. In this respect, defocus is similar to binocular disparity, which functions as an absolute depth cue once pupil separation and fixation distance are known. Defocus becomes a higher precision depth cue as focus distance decreases. Perhaps this is why many smaller animals, especially those without consistent binocular overlap, use defocus as their primary depth cue in predatory behavior (13, 14). Thus, the theoretical framework described here could guide behavioral and neurophysiological studies of defocus and depth estimation in many organisms.
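The relation between signed defocus and absolute distance can be made concrete with a short sketch. The sign convention (defocus equals focus vergence minus object vergence, in diopters), the function names, and the ±0.1-diopter uncertainty are assumptions chosen for illustration; they are not values taken from the analysis.

```python
def distance_from_defocus(defocus_diopters, focus_distance_m):
    """Object distance (m) implied by a signed defocus value.

    Assumed convention: defocus = 1/focus_distance - 1/object_distance,
    so positive defocus means the object lies beyond the focus distance.
    """
    object_vergence = 1.0 / focus_distance_m - defocus_diopters
    if object_vergence <= 0:
        raise ValueError("defocus implies an object at or beyond optical infinity")
    return 1.0 / object_vergence


def distance_interval(defocus_diopters, focus_distance_m, defocus_error=0.1):
    """Distance range spanned by a hypothetical +/- defocus_error (diopters)."""
    near = distance_from_defocus(defocus_diopters - defocus_error, focus_distance_m)
    far = distance_from_defocus(defocus_diopters + defocus_error, focus_distance_m)
    return near, far


if __name__ == "__main__":
    # Same dioptric uncertainty, two focus distances: the interval in meters
    # is far narrower when the eye is focused near.
    for focus in (0.4, 2.0):
        near, far = distance_interval(0.0, focus)
        print(f"focus {focus} m: object between {near:.3f} m and {far:.3f} m")
```

Because distance is the reciprocal of vergence, a fixed dioptric error maps to a smaller distance error at near focus, which is one way to see why defocus becomes a higher precision depth cue as focus distance decreases.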

In conclusion, we have developed a method for rigorously characterizing the defocus information available to a vision system by combining a model of the system's wave optics, sensor sampling, and noise with a Bayesian statistical analysis of the sensor responses to natural images. This approach should be widely applicable to other vision systems and other estimation problems, and it illustrates the value of natural scene statistics and statistical decision theory for the analysis of sensory and perceptual systems.

Methods

Natural Scenes.

Natural scenes were photographed with a tripod-mounted Nikon D700 14-bit SLR camera (4,256 × 2,836 pixels) fitted with a Sigma 50-mm prime lens. Scenes were those commonly viewed by researchers at the University of Texas at Austin. Details on camera parameters (aperture, shutter speed, ISO), on camera calibration, and on our rationale for excluding very low contrast patches from the analysis are in SI Methods.

Optics.

All three wave-optics models assumed a focus distance of 40 cm (2.5 diopters), a single refracting surface, and the Fraunhofer approximation, which implies that at or near the focal plane the optical transfer function (OTF) is given by the cross-correlation of the generalized pupil function with its complex conjugate (15). The wavefront aberration functions of the first author's eyes were measured with a Shack–Hartmann wavefront sensor and expressed as 66 coefficients on the Zernike polynomial series (Table S1). The coefficients were scaled to the 2-mm pupil diameter used in the analysis from the 5-mm diameter used during wavefront aberration measurement (37).
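As a minimal sketch of the pupil-function calculation described above, the following computes the OTF of a circular pupil with pure defocus at a single wavelength. It is an illustration under stated assumptions, not the models used in the analysis: the function name, grid size, and 550-nm wavelength are hypothetical choices, and the measured Zernike aberrations and chromatic aberration are omitted.

```python
import numpy as np


def defocused_otf(defocus_diopters, pupil_diameter_m=2e-3,
                  wavelength_m=550e-9, n=256, pad=2):
    """OTF of a circular pupil with defocus only (monochromatic sketch)."""
    radius = pupil_diameter_m / 2.0
    # Grid is `pad` times wider than the pupil so that the FFT-based
    # (circular) autocorrelation does not wrap around.
    coords = np.linspace(-pad * radius, pad * radius, n, endpoint=False)
    x, y = np.meshgrid(coords, coords)
    r2 = x**2 + y**2
    aperture = (r2 <= radius**2).astype(float)
    # Wavefront error (m) for a defocus of dD diopters: W = dD * r^2 / 2.
    wavefront = defocus_diopters * r2 / 2.0
    pupil = aperture * np.exp(1j * 2.0 * np.pi * wavefront / wavelength_m)
    # Autocorrelation theorem: the inverse FFT of |FFT(P)|^2 is the
    # autocorrelation of the generalized pupil function P.
    psf = np.abs(np.fft.fft2(pupil)) ** 2
    otf = np.fft.ifft2(psf)
    otf = otf / otf[0, 0]          # unit gain at zero frequency
    return np.fft.fftshift(otf)    # zero frequency at the array center


if __name__ == "__main__":
    mtf_focused = np.abs(defocused_otf(0.0))
    mtf_blurred = np.abs(defocused_otf(1.0))
    print("summed MTF, in focus:", mtf_focused.sum())
    print("summed MTF, 1 diopter defocus:", mtf_blurred.sum())
```

In this sketch a shift across the full pupil diameter corresponds to the diffraction cutoff, pupil diameter divided by wavelength, which for a 2-mm pupil at 550 nm is roughly 3,600 cycles per radian (about 63 cycles/deg).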

A refractive defocus correction was applied to each model vision system before analysis began to ensure 0-diopter targets were focused best. Details on this process, and on how the dominant MTF orientations in Fig. 3 were determined, are in SI Methods.

Sensor Array Responses.

To account for the effect of chromatic aberration on the L- and S-cone sensor responses in the third vision system, we created polychromatic point-spread functions for each sensor class. See SI Methods for details.

Noise.

To account for the effects of sensor noise and subsequent processing inefficiencies, a detection threshold was applied at each frequency (e.g., Fig. 1C); amplitudes below the threshold were set equal to the threshold amplitude. The threshold was based on interferometric measurements that bypass the optics of the eye (18) under the assumption that the limiting noise determining the detection threshold is introduced after the image is encoded by the photoreceptors.
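The thresholding step can be sketched in a few lines. The function and argument names are hypothetical, and the threshold values in the example are placeholders rather than the interferometric measurements cited above.

```python
import numpy as np


def apply_detection_threshold(amplitude, threshold):
    """Raise every amplitude that falls below the threshold to the threshold.

    `amplitude` and `threshold` are sampled at the same spatial frequencies;
    a scalar threshold is broadcast across all frequencies.
    """
    amplitude = np.asarray(amplitude, dtype=float)
    threshold = np.broadcast_to(np.asarray(threshold, dtype=float),
                                amplitude.shape)
    return np.maximum(amplitude, threshold)


if __name__ == "__main__":
    spectrum = [0.5, 0.05, 0.2]   # placeholder amplitudes
    limits = [0.1, 0.1, 0.3]      # placeholder thresholds
    print(apply_detection_threshold(spectrum, limits))
```

Clamping rather than zeroing keeps the thresholded spectrum from carrying any information below the detection limit while leaving supra-threshold amplitudes unchanged.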

Accuracy Maximization Analysis.

AMA was used to estimate optimal filters for defocus estimation. See SI Methods for details on the logic of AMA.

Estimating Defocus.

Given an observed filter response vector, a continuous defocus estimate was obtained by computing the expected value of the posterior probability distribution over a set of discrete defocus levels (Eq. 4). Details of this computation, of likelihood distribution estimation, and of likelihood distribution interpolation are in SI Methods.
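The posterior-mean readout can be sketched as follows. This is an illustration only: it assumes, for simplicity, that the likelihood of the filter responses at each defocus level is Gaussian with a known mean and covariance, and it omits the likelihood interpolation described in SI Methods. All names are hypothetical.

```python
import numpy as np


def posterior_mean_defocus(response, levels, means, covs, prior=None):
    """Expected defocus under the posterior over discrete defocus levels.

    response : filter response vector, shape (k,)
    levels   : discrete defocus levels in diopters, shape (m,)
    means    : per-level mean response vectors, m entries of shape (k,)
    covs     : per-level response covariances, m entries of shape (k, k)
    prior    : prior over levels; flat if omitted
    """
    response = np.asarray(response, dtype=float)
    levels = np.asarray(levels, dtype=float)
    if prior is None:
        prior = np.full(levels.shape, 1.0 / levels.size)
    log_post = np.log(np.asarray(prior, dtype=float))
    for j, (mu, cov) in enumerate(zip(means, covs)):
        diff = response - np.asarray(mu, dtype=float)
        cov = np.atleast_2d(np.asarray(cov, dtype=float))
        _, logdet = np.linalg.slogdet(cov)
        # Gaussian log-likelihood up to a constant shared by all levels.
        log_post[j] += -0.5 * (diff @ np.linalg.solve(cov, diff) + logdet)
    log_post -= log_post.max()             # guard against underflow
    posterior = np.exp(log_post)
    posterior /= posterior.sum()
    return float(levels @ posterior), posterior


if __name__ == "__main__":
    levels = [-1.0, 0.0, 1.0]
    means = [[-1.0, 0.0], [0.0, 0.0], [1.0, 0.0]]
    covs = [np.eye(2)] * 3
    estimate, posterior = posterior_mean_defocus([0.6, 0.1], levels, means, covs)
    print(estimate, posterior)
```

Taking the posterior mean rather than the most probable level is what lets a discrete set of defocus levels yield a continuous estimate.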

Acknowledgments

We thank Austin Roorda for measuring the first author's monochromatic aberrations, and Larry Thibos for helpful discussions on physiological optics. We thank David Brainard and Larry Thibos for comments on an earlier version of the manuscript. This work was supported by National Institutes of Health (NIH) Grant EY11747 to WSG.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1108491108/-/DCSupplemental.

References

- 1. Held RT, Cooper EA, O'Brien JF, Banks MS. Using blur to affect perceived distance and size. ACM Trans Graph. 2010;29(2):19.1–19.16. doi: 10.1145/1731047.1731057.

- 2. Vishwanath D, Blaser E. Retinal blur and the perception of egocentric distance. J Vis. 2010;10:26:1–16. doi: 10.1167/10.10.26.

- 3. Kruger PB, Mathews S, Aggarwala KR, Sanchez N. Chromatic aberration and ocular focus: Fincham revisited. Vision Res. 1993;33:1397–1411. doi: 10.1016/0042-6989(93)90046-y.

- 4. Kruger PB, Mathews S, Katz M, Aggarwala KR, Nowbotsing S. Accommodation without feedback suggests directional signals specify ocular focus. Vision Res. 1997;37:2511–2526. doi: 10.1016/s0042-6989(97)00056-4.

- 5. Wallman J, Winawer J. Homeostasis of eye growth and the question of myopia. Neuron. 2004;43:447–468. doi: 10.1016/j.neuron.2004.08.008.

- 6. Diether S, Wildsoet CF. Stimulus requirements for the decoding of myopic and hyperopic defocus under single and competing defocus conditions in the chicken. Invest Ophthalmol Vis Sci. 2005;46:2242–2252. doi: 10.1167/iovs.04-1200.

- 7. Pentland AP. A new sense for depth of field. IEEE Trans Patt Anal Mach Intell. 1987;9:523–531. doi: 10.1109/tpami.1987.4767940.

- 8. Wandell BA, El Gamal A, Girod B. Common principles of image acquisition systems and biological vision. Proc IEEE. 2002;90(1):5–17.

- 9. Pentland AP, Scherock S, Darrell T, Girod B. Simple range cameras based on focal error. J Opt Soc Am A. 1994;11:2925–2934.

- 10. Watanabe M, Nayar SK. Rational filters for passive depth from defocus. Int J Comput Vis. 1997;27:203–225.

- 11. Zhou C, Lin S, Nayar S. Coded aperture pairs for depth from defocus and defocus blurring. Int J Comput Vis. 2011;93(1):53–69.

- 12. Levin A, Fergus R, Durand F, Freeman W. Image and depth from a conventional camera with a coded aperture. ACM Trans Graph. 2007;26(3):70.1–70.9.

- 13. Harkness L. Chameleons use accommodation cues to judge distance. Nature. 1977;267:346–349. doi: 10.1038/267346a0.

- 14. Schaeffel F, Murphy CJ, Howland HC. Accommodation in the cuttlefish (Sepia officinalis). J Exp Biol. 1999;202:3127–3134. doi: 10.1242/jeb.202.22.3127.

- 15. Goodman JW. Introduction to Fourier Optics. 2nd Ed. New York: McGraw-Hill; 1996.

- 16. Wyszecki G, Stiles WS. Color Science: Concepts and Methods, Quantitative Data and Formulas. New York: Wiley; 1982.

- 17. Stockman A, Sharpe LT. The spectral sensitivities of the middle- and long-wavelength-sensitive cones derived from measurements in observers of known genotype. Vision Res. 2000;40:1711–1737. doi: 10.1016/s0042-6989(00)00021-3.

- 18. Williams DR. Visibility of interference fringes near the resolution limit. J Opt Soc Am A. 1985;2:1087–1093. doi: 10.1364/josaa.2.001087.

- 19. Field DJ, Brady N. Visual sensitivity, blur and the sources of variability in the amplitude spectra of natural scenes. Vision Res. 1997;37:3367–3383. doi: 10.1016/s0042-6989(97)00181-8.

- 20. Geisler WS, Najemnik J, Ing AD. Optimal stimulus encoders for natural tasks. J Vis. 2009;9(13):17:1–16. doi: 10.1167/9.13.17.

- 21. Walsh G, Charman WN. Visual sensitivity to temporal change in focus and its relevance to the accommodation response. Vision Res. 1988;28:1207–1221. doi: 10.1016/0042-6989(88)90037-5.

- 22. Mathews S, Kruger PB. Spatiotemporal transfer function of human accommodation. Vision Res. 1994;34:1965–1980. doi: 10.1016/0042-6989(94)90026-4.

- 23. Mackenzie KJ, Hoffman DM, Watt SJ. Accommodation to multiple-focal-plane displays: Implications for improving stereoscopic displays and for accommodative control. J Vis. 2010;10(8):22:1–20. doi: 10.1167/10.8.22.

- 24. Wang B, Ciuffreda KJ. Foveal blur discrimination of the human eye. Ophthalmic Physiol Opt. 2005;25:45–51. doi: 10.1111/j.1475-1313.2004.00250.x.

- 25. Charman WN, Tucker J. Accommodation and color. J Opt Soc Am. 1978;68:459–471. doi: 10.1364/josa.68.000459.

- 26. Wilson BJ, Decker KE, Roorda A. Monochromatic aberrations provide an odd-error cue to focus direction. J Opt Soc Am A Opt Image Sci Vis. 2002;19(5):833–839. doi: 10.1364/josaa.19.000833.

- 27. Porter J, Guirao A, Cox IG, Williams DR. Monochromatic aberrations of the human eye in a large population. J Opt Soc Am A Opt Image Sci Vis. 2001;18:1793–1803. doi: 10.1364/josaa.18.001793.

- 28. Autrusseau F, Thibos LN, Shevell S. Chromatic and wavefront aberrations: L-, M-, and S-cone stimulation with typical and extreme retinal image quality. Vision Res. 2011, in press. doi: 10.1016/j.visres.2011.08.020.

- 29. Fincham EF. The accommodation reflex and its stimulus. Br J Ophthalmol. 1951;35:381–393. doi: 10.1136/bjo.35.7.381.

- 30. Flitcroft DI. A neural and computational model for the chromatic control of accommodation. Vis Neurosci. 1990;5:547–555. doi: 10.1017/s0952523800000705.

- 31. Thibos LN, Ye M, Zhang X, Bradley A. The chromatic eye: A new reduced-eye model of ocular chromatic aberration in humans. Appl Opt. 1992;31:3594–3600. doi: 10.1364/AO.31.003594.

- 32. Thibos LN, Bradley A. Modeling the refractive and neuro-sensor systems of the eye. In: Mouroulis P, editor. Visual Instrumentation: Optical Design and Engineering Principles. New York: McGraw-Hill; 1999. pp. 101–159.

- 33. Packer O, Williams DR. Light, the retinal image, and photoreceptors. In: Shevell SK, editor. The Science of Color. 2nd Ed. Amsterdam: Elsevier; 2003. pp. 41–102.

- 34. Hubel DH, Wiesel TN. Receptive fields and functional architecture of monkey striate cortex. J Physiol. 1968;195:215–243. doi: 10.1113/jphysiol.1968.sp008455.

- 35. Johnson EN, Hawken MJ, Shapley R. The spatial transformation of color in the primary visual cortex of the macaque monkey. Nat Neurosci. 2001;4:409–416. doi: 10.1038/86061.

- 36. Ruderman DL. The statistics of natural images. Network. 1994;5:517–548.

- 37. Campbell CE. Matrix method to find a new set of Zernike coefficients from an original set when the aperture radius is changed. J Opt Soc Am A Opt Image Sci Vis. 2003;20:209–217. doi: 10.1364/josaa.20.000209.
