Abstract

Spike-timing-dependent plasticity (STDP) modifies the weight (or strength) of synaptic connections between neurons and is considered to be crucial for generating network structure. It has been observed in physiology that, in addition to spike timing, the weight update also depends on the current value of the weight. The functional implications of this feature are still largely unclear. Additive STDP gives rise to strong competition among synapses, but due to the absence of weight dependence, it requires hard boundaries to secure the stability of weight dynamics. Multiplicative STDP with linear weight dependence for depression ensures stability, but it lacks sufficiently strong competition required to obtain a clear synaptic specialization. A solution to this stability-versus-function dilemma can be found with an intermediate parametrization between additive and multiplicative STDP. Here we propose a novel solution to the dilemma, named log-STDP, whose key feature is a sublinear weight dependence for depression. Due to its specific weight dependence, this new model can produce significantly broad weight distributions with no hard upper bound, similar to those recently observed in experiments. Log-STDP induces graded competition between synapses, such that synapses receiving stronger input correlations are pushed further in the tail of (very) large weights. Strong weights are functionally important to enhance the neuronal response to synchronous spike volleys. Depending on the input configuration, multiple groups of correlated synaptic inputs exhibit either winner-share-all or winner-take-all behavior. When the configuration of input correlations changes, individual synapses quickly and robustly readapt to represent the new configuration. We also demonstrate the advantages of log-STDP for generating a stable structure of strong weights in a recurrently connected network. These properties of log-STDP are compared with those of previous models. 
Through long-tail weight distributions, log-STDP achieves both stable synaptic dynamics and robust competition among synapses, which are crucial for spike-based information processing.

Introduction

Modifications of the strength (or weight) of synaptic connections between neurons that occur in an activity-dependent manner are hypothesized to play an active role in generating the structure of neuronal networks [1]–[7]. The importance of the relative timing between pre- and postsynaptic spikes for the weight modification, known as spike-timing-dependent plasticity (STDP), has been demonstrated in many brain areas and across many species [8]–[10]. Many models have been proposed to investigate the functional implications of STDP; see [11] for a review. Owing to its time scale, STDP can capture fine temporal correlations between incoming spike trains to select some synaptic input pathways [1], [12]–[16]. However, which features of STDP are both biologically realistic and functionally appropriate remains unclear.

In this paper, we propose a novel STDP rule, termed log-STDP, that can produce long-tail distributions of synaptic strengths similar to those reported in recent experiments. Pyramidal cells in the rat visual cortex exhibit lognormal-like distributions for the amplitudes of excitatory postsynaptic potentials (EPSPs) [17]. Electrophysiological measurements in the barrel cortex of mice also revealed rare large-amplitude responses in addition to more frequent medium- and small-amplitude responses [18]. In addition to their long-tail character, the observed distributions also exhibit a couple of outliers many times (e.g., 20) stronger than the mean. Similar long-tail distributions have also been observed by two-photon imaging of dendritic spines in the hippocampal CA1 of young rats [19], where the spine size may be positively correlated with the strength of synapse [20]. These findings led us to investigate the conditions under which STDP can generate such long-tail weight distributions in an activity-dependent manner. While a learning rule leading to lognormal weight distributions was formulated in terms of firing rates [21], spike-based mechanisms have not been examined theoretically. A recent numerical study [22] made use of spread weight distributions obtained using STDP, but did not investigate the underlying dynamics. Here we focus on the conditions allowing STDP to produce long-tail weight distributions.

Moreover, we study the functional implications of log-STDP in terms of synaptic specialization. We focus on how STDP can achieve both a stable weight distribution and effective selection of synaptic input pathways, which we refer to as the stability-versus-function “dilemma”. Additive STDP (add-STDP) can rapidly and efficiently select synaptic pathways by splitting synaptic weights into a bimodal distribution of weak and strong synapses [1], [14], [23]. However, because the resulting weight dynamics are unstable, hard bounds are required to keep the weight distribution stable. Moreover, even for uncorrelated inputs, add-STDP can split a unimodal weight distribution in a way that does not meaningfully represent the input statistics. In contrast, weight-dependent update rules can generate stable unimodal distributions [24]–[26]. Weight dependence is supported by experimental observations [27], which have been used to fit the multiplicative STDP (mlt-STDP) proposed by van Rossum et al. [24]. On the downside, weight dependence weakens the competition among synapses and may lead to only weakly skewed weight distributions. Narrow unimodal weight distributions are functionally less interesting than either bimodal or spread distributions with significant positive skewness [22]. Gütig et al. showed that an intermediate parametrization between add-STDP and the multiplicative STDP of Rubin et al. [25] provides a solution to the dilemma [15]; we will refer to their “non-linear temporally asymmetric” model as nlta-STDP. However, their model relies on a “soft” upper bound for synaptic weights and thus is not naturally reconcilable with long-tail weight distributions. We will examine the advantages of log-STDP for 1) representing the statistical properties of input spike trains (i.e., spike-time correlations) [15], [28]–[30] and 2) the reorganization of existing circuitry to adapt to a new input configuration [2], [31]. In doing so, we will compare log-STDP with the “extreme” cases of add-STDP and mlt-STDP, as well as nlta-STDP.

Results

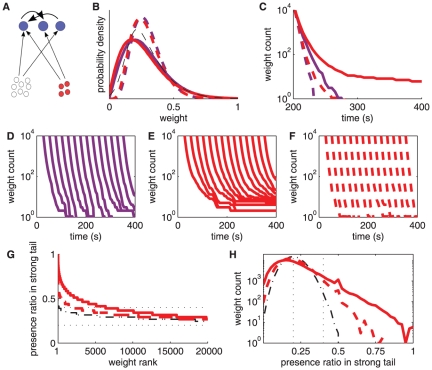

We first explain how we derived the novel model of log-STDP. Then, we study the synaptic dynamics for a single neuron whose plastic synapses are stimulated by an arbitrary number of input spike trains, as illustrated in Fig. 1A. Finally, we examine how the results for a single neuron extend to the case of a recurrent network.

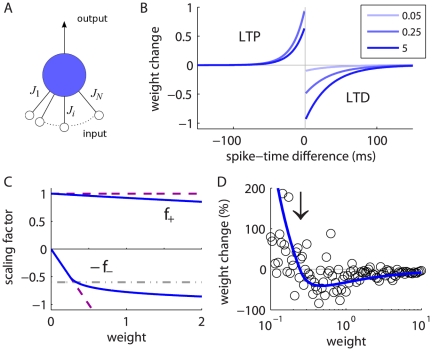

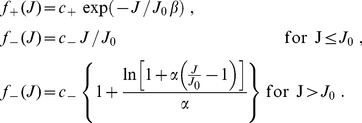

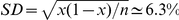

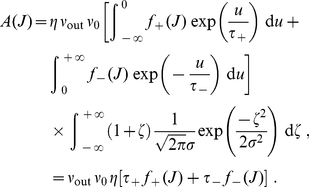

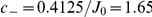

Figure 1. Single neuron equipped with STDP-plastic synapses.

A: Single neuron excited by a number of input spike trains, each through a synapse with its own synaptic strength. B: Potentiation (LTP) and depression (LTD) curves of the learning window in (4). Darker curves indicate stronger values for the weight (light, medium, and dark blue) in (6). In the top left quadrant for LTP, the two curves in lighter blue are superimposed, since potentiation is quasi-constant for small weights. C: Scaling functions for LTP and for LTD in log-STDP (blue solid curve) in (6); mlt-STDP similar to van Rossum et al.'s model [24] (pink dashed line); and add-STDP similar to Song et al.'s model [1] (gray dashed-dotted curve for depression and pink dashed curve for potentiation). D: Weight change (in percent of the original weight) resulting from 20 successive modifications induced by log-STDP with random pairing of pre- and postsynaptic spikes (within a bounded range of spike-time differences, in ms). In qualitative agreement with experimental measurements [19], smaller weights experience large fluctuations whereas larger weights exhibit less variability. The blue solid curve shows the mean expected modification, and the vertical arrow marks a reference weight.

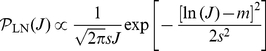

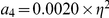

Toy plasticity model producing lognormal weight distribution

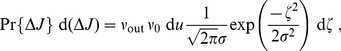

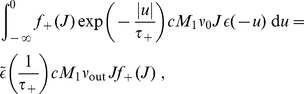

Following previous studies [24], [29], [32], we use the Fokker-Planck formalism to study the probability density  of a population of weights

of a population of weights  that are modified by many plasticity updates. Denoting by

that are modified by many plasticity updates. Denoting by  and

and  the first and second stochastic moments of the weight updates (or drift and diffusion terms, resp.), the stationary solution of the Fokker-Planck equation is the following distribution:

the first and second stochastic moments of the weight updates (or drift and diffusion terms, resp.), the stationary solution of the Fokker-Planck equation is the following distribution:

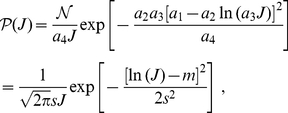

| (1) |

where  is a normalization factor. We observe that there exists a family of functions

is a normalization factor. We observe that there exists a family of functions  and

and  for which the expression in (1) is exactly a lognormal distribution, namely

for which the expression in (1) is exactly a lognormal distribution, namely

|

(2) |

with parameters  and

and  , the latter being related to the spread of the distribution. Typical examples for

, the latter being related to the spread of the distribution. Typical examples for  and

and  are represented in green in Fig. 2A (solid and dashed curves, resp.). The key features here are the decreasing log-like saturating profile for

are represented in green in Fig. 2A (solid and dashed curves, resp.). The key features here are the decreasing log-like saturating profile for  which crosses the x-axis, and the linearly increasing function for

which crosses the x-axis, and the linearly increasing function for  . Note that these conditions need only be satisfied around the crossing value to obtain a close-to-lognormal distribution. Details can be found in Methods with explicit expressions for

. Note that these conditions need only be satisfied around the crossing value to obtain a close-to-lognormal distribution. Details can be found in Methods with explicit expressions for  and

and  in (22). However, we cannot regard this fictive plasticity model, hereafter referred to as ‘toy model’, as biologically realistic. A first reason is that the mean weight update in the case of uncorrelated inputs is

in (22). However, we cannot regard this fictive plasticity model, hereafter referred to as ‘toy model’, as biologically realistic. A first reason is that the mean weight update in the case of uncorrelated inputs is  , which diverges as the weight

, which diverges as the weight  approaches 0. Another reason is that an STDP rule cannot be explicitly derived from this model. For STDP,

approaches 0. Another reason is that an STDP rule cannot be explicitly derived from this model. For STDP,  and

and  cannot be freely chosen, but are tied to each other. Nevertheless, from this toy model we design a biologically realistic STDP rule that is also inspired by the experimentally-inspired mlt-STDP proposed by van Rossum et al. [24].

cannot be freely chosen, but are tied to each other. Nevertheless, from this toy model we design a biologically realistic STDP rule that is also inspired by the experimentally-inspired mlt-STDP proposed by van Rossum et al. [24].
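As a worked illustration of why a log-like drift combined with a linearly increasing diffusion yields a lognormal density, the steps can be sketched as follows. The symbols ($J$ for the weight, $A$ for the drift, $B$ for the diffusion, $\mathcal{N}$ for the normalization, and the constants $a$, $b$, $J^*$, $s$) and the Fokker-Planck convention are assumptions made for this sketch; the explicit toy-model expressions in (22) may be parametrized differently.

```latex
% Assumed convention for the Fokker-Planck equation:
%   \partial_t P = -\partial_J [A(J) P] + \tfrac{1}{2}\,\partial_J^2 [B(J) P].
% Zero probability flux at stationarity gives A P = \tfrac{1}{2}\,(B P)', hence
P(J) \;=\; \frac{\mathcal{N}}{B(J)}\,
           \exp\!\left[\int^{J} \frac{2A(J')}{B(J')}\,\mathrm{d}J'\right].

% Hypothetical choice: log-like decreasing drift, linearly increasing diffusion.
A(J) = -a \,\ln\!\frac{J}{J^*}, \qquad B(J) = b\,J, \qquad a, b > 0 .

% The integral in the exponent is then elementary:
\int^{J} \frac{2A(J')}{B(J')}\,\mathrm{d}J'
  \;=\; -\frac{2a}{b}\int^{J} \frac{\ln (J'/J^*)}{J'}\,\mathrm{d}J'
  \;=\; -\frac{a}{b}\,\ln^{2}\!\frac{J}{J^*} \;+\; \text{const},

% so the stationary density is lognormal:
P(J) \;\propto\; \frac{1}{J}\,
      \exp\!\left[-\frac{\left(\ln J - \ln J^*\right)^{2}}{2 s^{2}}\right],
\qquad s^{2} = \frac{b}{2a}.
```

Under these assumptions the drift crosses zero at $J^*$, which sets the location of the distribution, while the ratio $b/a$ of diffusion to drift strength sets its spread. The drift diverges logarithmically as $J \to 0$, in line with the first objection raised against the toy model.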

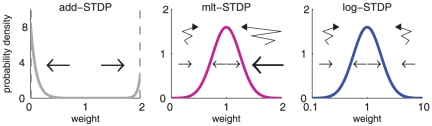

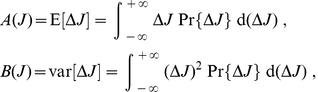

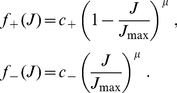

Figure 2. Comparison between the toy model and our new model of log-STDP.

A: Plot of the drift (solid curves, left y-axis) and diffusion (dashed-dotted curves, right y-axis) functions that describe the first and second moments of the weight dynamics, cf. (1). Comparison between log-STDP (blue curves), parameterized as in (3), (4) and (6), and the toy model (green curves), parameterized as in (22). B: Solutions of the Fokker-Planck equation for the curves plotted in A. The x-axis has a log scale. Left inset: log scales for both axes.

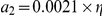

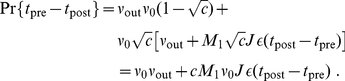

STDP model capable of generating long-tail weight distributions

Here we present the mathematical description of ‘log-STDP’. In this phenomenological model, the change in the synaptic weight induced by a pair of pre- and postsynaptic spikes at their respective times is given by

(3)

where the learning rate determines the speed of learning. The Gaussian white noise describes the variability observed in physiology; it has zero mean and a fixed variance. Here, we treat the case where all spike pairs contribute to STDP. Depending on the relative timing of the spike pair, the learning window represented in Fig. 1B leads to potentiation (LTP) or depression (LTD), respectively:

(4)
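The update described by (3) and (4) can be sketched in a few lines. This is only an illustration under stated assumptions: an exponential learning window, noise that multiplies the whole update, the sign convention `lag_ms = t_pre - t_post`, and placeholder values for the time constants, learning rate and noise level. All function and parameter names are hypothetical.

```python
import math
import random


def learning_window(weight, lag_ms, f_plus, f_minus,
                    tau_plus=17.0, tau_minus=34.0):
    """Weight-dependent exponential STDP window (assumed form).

    lag_ms = t_pre - t_post. A negative lag (pre before post) gives LTP,
    a non-negative lag gives LTD. f_plus and f_minus are callables that
    scale LTP and LTD as a function of the current weight.
    """
    if lag_ms < 0.0:
        return f_plus(weight) * math.exp(lag_ms / tau_plus)
    return -f_minus(weight) * math.exp(-lag_ms / tau_minus)


def weight_update(weight, lag_ms, f_plus, f_minus,
                  eta=0.1, sigma=0.6, rng=random):
    """Weight change for one spike pair, with multiplicative Gaussian noise.

    eta is the learning rate and sigma the standard deviation of the
    zero-mean white noise; both values are placeholders.
    """
    zeta = rng.gauss(0.0, sigma)
    return eta * (1.0 + zeta) * learning_window(weight, lag_ms, f_plus, f_minus)


if __name__ == "__main__":
    # Weight-independent scaling, i.e. an additive-like rule.
    constant = lambda w: 1.0
    print(weight_update(1.0, -10.0, constant, constant, sigma=0.0))  # LTP, positive
    print(weight_update(1.0, +10.0, constant, constant, sigma=0.0))  # LTD, negative
```

Passing the scaling functions as arguments keeps the update rule separate from the weight dependence, so the same sketch covers additive, multiplicative and log-like variants.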

The shape of the weight distribution produced by STDP can be adjusted via the scaling functions  in (4) that determine the weight dependence. These functions are involved in the drift term

in (4) that determine the weight dependence. These functions are involved in the drift term  and noise term

and noise term  that determine the synaptic dynamics and particularly the stationary weight distribution in (1). For a general model of STDP described by (3) and (4)

that determine the synaptic dynamics and particularly the stationary weight distribution in (1). For a general model of STDP described by (3) and (4)  and

and  are given by:

are given by:

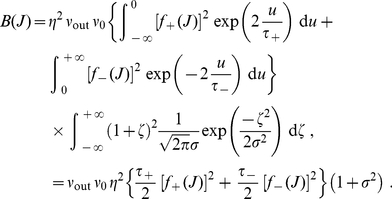

|

(5) |

where  is the variance of the white noise

is the variance of the white noise  . The derivation of (5) neglects input-output correlations. This is a good approximation when a neuron is stimulated by many uncorrelated inputs. In this case, the neuron model does not play a significant role in the synaptic dynamics. Details can be found in Methods (‘STDP dynamics for uncorrelated inputs’). Here the idea is to obtain similar dynamics for the toy model and the STDP rule, such that the latter produces lognormal-like weight distributions. To do so, we match the functions

. The derivation of (5) neglects input-output correlations. This is a good approximation when a neuron is stimulated by many uncorrelated inputs. In this case, the neuron model does not play a significant role in the synaptic dynamics. Details can be found in Methods (‘STDP dynamics for uncorrelated inputs’). Here the idea is to obtain similar dynamics for the toy model and the STDP rule, such that the latter produces lognormal-like weight distributions. To do so, we match the functions  (solid curves) and

(solid curves) and  (dashed curves) for our novel model (blue) and the toy model (green) represented in Fig. 2A. In particular, we focus on the profile of

(dashed curves) for our novel model (blue) and the toy model (green) represented in Fig. 2A. In particular, we focus on the profile of  around its crossing point with the x-axis to infer the shapes of the LTP and LTD curves. From (5),

around its crossing point with the x-axis to infer the shapes of the LTP and LTD curves. From (5),  relates to the difference

relates to the difference  . To obtain the log-like profile of

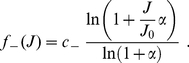

. To obtain the log-like profile of  in the toy model, several possibilities can be imagined. An option is increasing LTP and linear LTD, somewhat similar to the ‘power-law’ STDP model proposed by Morrison et al. [26]. However, we will focus on the “converse” solution with almost constant LTP and sublinear LTD. This leads to the following expressions that are represented in Fig. 1C:

in the toy model, several possibilities can be imagined. An option is increasing LTP and linear LTD, somewhat similar to the ‘power-law’ STDP model proposed by Morrison et al. [26]. However, we will focus on the “converse” solution with almost constant LTP and sublinear LTD. This leads to the following expressions that are represented in Fig. 1C:

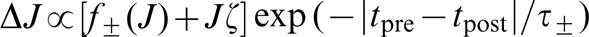

|

(6) |
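A minimal sketch of scaling functions with the qualitative shape described for (6): nearly constant LTP with a slow exponential decay, and LTD that is linear up to a reference weight and grows logarithmically beyond it. The exact functional form, the names `j0`, `alpha`, `beta`, `c_plus`, `c_minus`, and all default values are assumptions for illustration.

```python
import math


def f_plus(weight, c_plus=1.0, j0=1.0, beta=50.0):
    """LTP scaling: close to c_plus near j0, decaying slowly for large weights."""
    return c_plus * math.exp(-weight / (j0 * beta))


def f_minus(weight, c_minus=0.5, j0=1.0, alpha=5.0):
    """LTD scaling: linear up to j0, logarithmic (sublinear) growth above it.

    The two branches have the same value and the same slope at j0, so the
    curve is smooth there. Larger alpha means stronger saturation.
    """
    if weight <= j0:
        return c_minus * weight / j0
    return c_minus * (1.0 + math.log1p(alpha * (weight / j0 - 1.0)) / alpha)


if __name__ == "__main__":
    for w in (0.5, 1.0, 2.0, 10.0):
        print(w, round(f_plus(w), 4), round(f_minus(w), 4))
```

With this assumed form, a very small `alpha` makes the upper branch approach the linear continuation `c_minus * weight / j0`, whereas a very large `alpha` flattens it toward the constant `c_minus`.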

LTD discriminates between the ranges of small weights and large weights. The weight dependence for LTD in log-STDP is similar to mlt-STDP [24] for small weights, i.e., it increases linearly with the weight. However, the LTD curve becomes sublinear for large weights, and a dedicated parameter determines the degree of the log-like saturation. This choice is motivated by examining the sole effect of changing LTD for “large” weights compared with the classic model of mlt-STDP. In practice, we choose the function for LTP to be roughly constant over the range of interest, such that the exponential decay controlled by a second parameter only shows for sufficiently large weights. Note that, in the range of large weights, log-STDP coincides with mlt-STDP in one limit of these two parameters, and it tends toward add-STDP in another.

Noise scheme

Before studying the dynamics induced by log-STDP, we discuss the role of noise in our model in the light of previous models. Our model involves two sources of noise in the STDP dynamics, via the white noise (through its variance) and the learning rate in (3). The learning rate rescales the weight updates, which matters when input spike trains are random to a large degree. As can be seen in (5), the relative order of magnitude of the drift and noise terms crucially depends on the learning rate [29]. Because the white-noise term in (3) is modulated multiplicatively, its effect depends on the weight via the scaling functions. For log-STDP with quasi-constant LTP and sublinear LTD, the noise experienced by a strong weight is proportionally weaker than that experienced by a weaker weight; see Fig. 1D. In this sense, log-STDP qualitatively resembles the model of activity-dependent plasticity used by Yasumatsu et al. [19] to explain the observed fluctuations of spine volumes. In contrast, the original model proposed by van Rossum et al. [24] involves an STDP noise that increases linearly with the weight for both LTP and LTD. Further details are discussed in Methods (‘Baseline parameters for log-STDP’).
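The statement that strong weights fluctuate proportionally less than weak ones can be checked with a small Monte Carlo sketch in the spirit of Fig. 1D: apply 20 successive updates with random spike-time differences and compare the spread of the percent change for a small and a large initial weight. The scaling functions, the multiplicative-noise update, the ±50 ms lag range and every numerical value below are assumptions chosen for illustration.

```python
import math
import random
import statistics

TAU_PLUS, TAU_MINUS = 17.0, 34.0   # ms, hypothetical time constants
J0, ALPHA, BETA = 1.0, 5.0, 50.0   # hypothetical shape parameters
C_PLUS, C_MINUS = 1.0, 0.5         # hypothetical amplitudes


def f_plus(w):
    # Quasi-constant LTP with slow exponential decay (assumed form).
    return C_PLUS * math.exp(-w / (J0 * BETA))


def f_minus(w):
    # Linear LTD below J0, log-like saturating LTD above it (assumed form).
    if w <= J0:
        return C_MINUS * w / J0
    return C_MINUS * (1.0 + math.log1p(ALPHA * (w / J0 - 1.0)) / ALPHA)


def percent_change(w0, rng, n_updates=20, eta=0.1, sigma=0.6, max_lag=50.0):
    """Percent change of a weight after n_updates random spike pairings."""
    w = w0
    for _ in range(n_updates):
        lag = rng.uniform(-max_lag, max_lag)   # t_pre - t_post, in ms
        if lag < 0.0:
            dw = f_plus(w) * math.exp(lag / TAU_PLUS)
        else:
            dw = -f_minus(w) * math.exp(-lag / TAU_MINUS)
        # Multiplicative Gaussian noise on each update; weights stay non-negative.
        w = max(0.0, w + eta * (1.0 + rng.gauss(0.0, sigma)) * dw)
    return 100.0 * (w - w0) / w0


def spread(w0, n_trials=500, seed=0):
    """Standard deviation of the percent change over repeated trials."""
    rng = random.Random(seed)
    return statistics.pstdev([percent_change(w0, rng) for _ in range(n_trials)])


if __name__ == "__main__":
    for w0 in (0.2, 1.0, 5.0):
        print(w0, round(spread(w0), 1))
```

Because the absolute size of the updates grows at most logarithmically with the weight under these assumptions, the relative spread shrinks as the initial weight increases.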

Compared to the study by van Rossum et al. [24], we use a relatively fast learning rate and a weaker noise level in our version of mlt-STDP (and log-STDP, etc.). The original model of van Rossum et al. assumes that the variability observed in the weight updates [27] originates from STDP only. There, the intrinsic variability of single synapses and measurement noise are neglected. This means that STDP updates may not be as noisy as proposed by van Rossum et al., which motivates the use of a smaller noise level here. Note that, interestingly, plasticity-independent variability has recently been reported to be proportionally larger for weak than for strong synapses [18]. This is in line with the greater stability of strong weights in our model, which arises from the weight dependence of the noise.

A last point concerns spike-pair restrictions: all pairs of pre- and postsynaptic spikes contribute to STDP in the present study, which implies more updates and thus more noise in the synaptic dynamics. Consequently, even though individual updates in our version of mlt-STDP are less noisy than in the original model of van Rossum et al. [24], the global noise experienced by the synaptic weights during ongoing spiking activity is comparable in both models and leads to spread distributions.

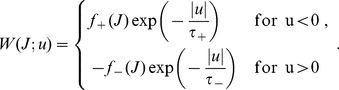

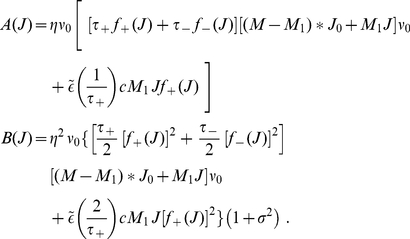

Predicting the stable weight distribution

Our theoretical framework allows us to evaluate the weight distribution produced by an arbitrary weight-dependent STDP model, by combining (1) and (5). In this section, we focus on the case of uncorrelated input spike trains, for which (5) is valid. However, the theoretical prediction may not be reliable when the synaptic dynamics does not have a stable fixed point. For example, add-STDP requires taking into account the effect of input-output spike-time correlations to obtain a bimodal distribution of weights [24], [32]. Such theoretical refinements will be discussed later.

In this study, a reference weight is chosen such that LTP and LTD in log-STDP (roughly) balance each other for uncorrelated inputs. It corresponds to the intersection of the drift (solid curve) and the x-axis in Fig. 2A. Therefore, this weight will also be referred to as the ‘fixed point’ of the dynamics in the following. In the absence of noise and for slow learning, the weights cluster around the fixed point when it is stable (negative slope of the drift). Otherwise, the weight distribution spreads around the fixed point.

The noise term (dashed curves in Fig. 2A) can be interpreted graphically, to some extent, from the LTP and LTD curves in Fig. 1C. When they are farther apart, the resulting noise is stronger. In log-STDP, because depression increases sublinearly (blue solid curve for LTD in Fig. 1C), noise is weaker than for mlt-STDP, for which depression increases linearly (pink dashed curve). Figure S1 provides a qualitative comparison of the relationship between the LTP and LTD curves (column A) and the drift and noise terms (column B) for different STDP models, as well as the resulting weight distributions (column C).
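Combining a stationary Fokker-Planck solution with drift and noise terms can be sketched numerically. The sketch below makes several assumptions that are not taken from the text: exponential learning windows, uncorrelated Poisson-like pairing so that the drift is proportional to the window integral and the noise term to the integral of the squared window, the standard stationary form `P = N / B * exp(integral of 2A/B)`, and hypothetical scaling functions and parameter values. The common rate factor is omitted because it cancels after normalization.

```python
import math

TAU_PLUS, TAU_MINUS = 17.0, 34.0   # ms, hypothetical time constants
J0, ALPHA, BETA = 1.0, 5.0, 50.0   # hypothetical shape parameters
C_PLUS = 1.0
# Choose the LTD amplitude so that LTP and LTD balance at J0 (drift = 0 there).
C_MINUS = C_PLUS * math.exp(-1.0 / BETA) * TAU_PLUS / TAU_MINUS


def f_plus(w):
    return C_PLUS * math.exp(-w / (J0 * BETA))


def f_minus(w):
    if w <= J0:
        return C_MINUS * w / J0
    return C_MINUS * (1.0 + math.log1p(ALPHA * (w / J0 - 1.0)) / ALPHA)


def drift(w, eta=0.1):
    # First moment: learning rate times the integral of the learning window.
    return eta * (f_plus(w) * TAU_PLUS - f_minus(w) * TAU_MINUS)


def diffusion(w, eta=0.1, sigma=0.6):
    # Second moment: integral of the squared window, inflated by the noise variance.
    return (eta ** 2) * (1.0 + sigma ** 2) * 0.5 * (
        f_plus(w) ** 2 * TAU_PLUS + f_minus(w) ** 2 * TAU_MINUS)


def trapezoid(values, grid):
    return sum(0.5 * (values[k] + values[k - 1]) * (grid[k] - grid[k - 1])
               for k in range(1, len(grid)))


def stationary_density(grid, eta=0.1, sigma=0.6):
    """Normalized density on the grid from the assumed stationary solution."""
    ratio = [2.0 * drift(w, eta) / diffusion(w, eta, sigma) for w in grid]
    log_p, integral = [], 0.0
    for k, w in enumerate(grid):
        if k > 0:
            integral += 0.5 * (ratio[k] + ratio[k - 1]) * (w - grid[k - 1])
        log_p.append(integral - math.log(diffusion(w, eta, sigma)))
    peak = max(log_p)                       # subtract the peak to avoid overflow
    dens = [math.exp(v - peak) for v in log_p]
    norm = trapezoid(dens, grid)
    return [d / norm for d in dens]


if __name__ == "__main__":
    grid = [0.01 * k for k in range(1, 1001)]
    dens = stationary_density(grid)
    print("mode:", grid[dens.index(max(dens))])
    print("mean:", trapezoid([w * d for w, d in zip(grid, dens)], grid))
```

In this sketch, changing `ALPHA`, `sigma` or `eta` and re-running shows how the saturation of LTD, the noise level and the learning rate reshape the tail of the predicted distribution.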

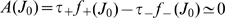

As a first control, we verify that the stationary distributions in Fig. 2B are similar for the toy model and log-STDP, even though we only roughly match the drift and noise terms in Fig. 2A. The tail of strong weights vanishes slightly faster for log-STDP than for the toy model (see inset with a log-log plot) because of the weaker noise for large weights, cf. the dashed curves in Fig. 2A. The comparison with mlt-STDP (pink solid curve) in Fig. 3A shows the influence of sublinear LTD. The weight distribution is more skewed and the tail of large weights extends further for log-STDP (blue solid curve); see also Fig. 3B with log-scaled axes. Even though the difference between log-STDP and mlt-STDP may not look dramatic in Fig. 3A and B, we will show later that the underlying dynamics are clearly different, especially in the case of correlated inputs. The weight distribution for add-STDP (gray dashed-dotted curve) is spread because our choice of parameters leads to strong noise in the synaptic dynamics (especially the fast learning rate). Note that, in contrast to Fig. 3B, add-STDP can also lead to a bimodal distribution clustered at each bound or even a unimodal distribution located at the upper bound, e.g., for weaker LTD than used here. Then, the value of the upper bound on the weights may critically affect the resulting distribution.

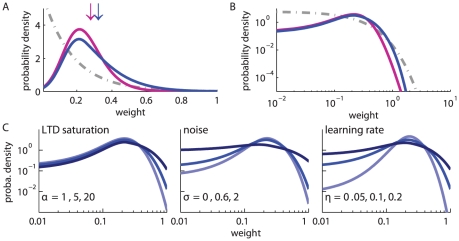

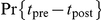

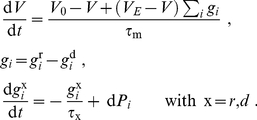

Figure 3. Theoretical predictions of weight distribution shaped by STDP.

A: Resulting weight distribution for log-STDP (blue solid curve) with the saturation for LTD set by the corresponding parameter in (6); mlt-STDP inspired by the model of van Rossum et al. [24] (pink solid curve) in (27); and add-STDP [1], [14] (gray dashed-dotted curve) in (26). Log-STDP and mlt-STDP are parameterized to obtain roughly the same equilibrium value for the mean weight (arrows); without noise and with very slow learning, the resulting narrow distribution would be centered around the fixed point. The curves are evaluated using (1) and (5) with the same learning rate and noise level in (3). B: Similar to A with log-scaled axes. C: Effect of the parameters in log-STDP. Comparison between the predicted weight distributions with the baseline parameters in (6) (medium blue curve in B) and two variants with the parameter change indicated in each plot (darker curves correspond to larger values).

The toy model is sufficiently simple to obtain an analytical expression for the spread of the resulting distribution, see (24) in Methods. Because of the proximity between the dynamics induced by the toy model and log-STDP, we can predict the effect of the parameters in log-STDP on the stationary weight distribution. These trends are illustrated in Fig. 3C (log-log plots), which compares the weight distributions for the baseline parameters (medium blue curve; same as Fig. 3B) and two variants for a given parameter, a smaller value and a larger value (lighter and darker blue curves, resp.). For a larger value of the saturation parameter, LTD has a more pronounced saturating log-like profile and the tail of strong weights extends further. Both stronger noise, with a larger variance of the white noise, and a faster learning rate strengthen the shuffling of the weights, which results in more widely spread distributions.

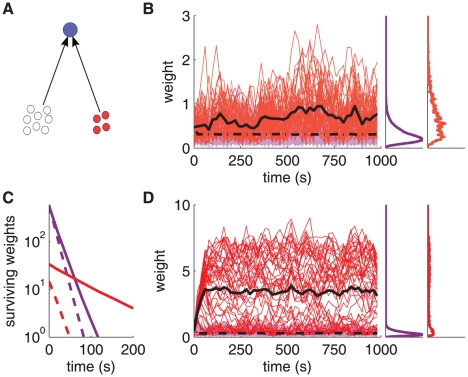

Continuous shuffling of synaptic weights

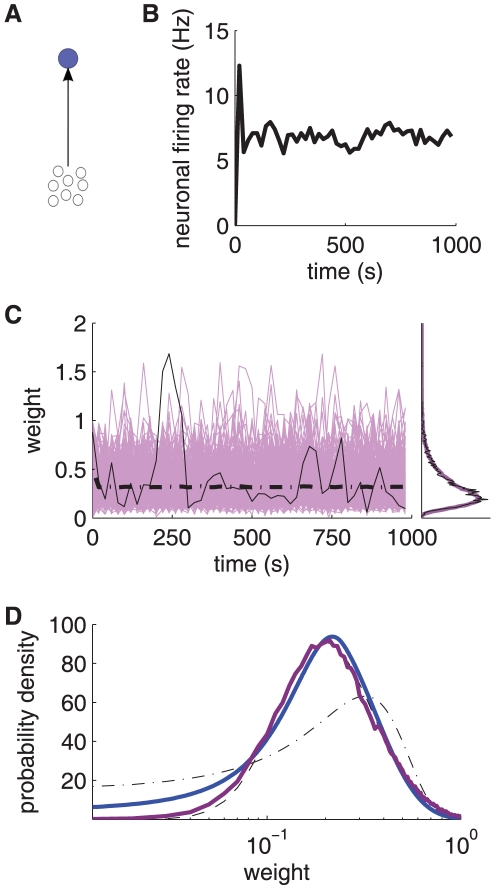

Rapid adaptation to the external world is enhanced when weights experience a certain degree of noise. With log-STDP, synapses are shuffled because of the plasticity-intrinsic noise  and random input spikes in a highly dynamical manner, even after the synaptic population reaches the equilibrium state. To show this, we conduct numerical simulations of an integrate-and-fire neuron (parameters are given in Methods) with  synapses, each receiving uncorrelated (Poisson) spike trains with input firing rate  Hz (Fig. 4A). The output neuronal firing rate, hereafter denoted by  , stabilizes between 6 and 8 Hz (Fig. 4B). The evolution of synaptic weights is displayed in Fig. 4C, which shows that individual synaptic weights are constantly shuffled by STDP (cf. black thin trace) within the stable weight distribution (right inset). The simulated mean weight (black thick dashed-dotted trace) stabilizes around  , which is actually larger than the fixed point  : this is mainly due to the lower bound enforced on the weight at  , which prevents the weights from spreading downward. (The solution of the Fokker-Planck equation takes this into account via the boundary condition at zero.) In Fig. 4D, the resulting weight distribution (purple curve) is satisfactorily predicted by the expression in (1) (blue curve), except for small weights. The latter discrepancy arises from the finite size of the weight updates. Two fits using linear regression on the simulated weights (black thin curves) confirm that their distribution is closer to lognormal (dashed curve) than Gaussian (dashed-dotted curve). Figure S2 provides comparisons between the simulated and predicted distributions when varying the parameters  and  . Those simulation results agree with the predictions in Fig. 3C.
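As a rough illustration of the lognormal-versus-Gaussian comparison, the sketch below fits both distributions to a sample of weights by matching moments and compares their log-likelihoods. This is a swapped-in technique, not the linear-regression fit used for Fig. 4D, and the weight sample is synthetic stand-in data with hypothetical parameters rather than simulation output.

```python
import numpy as np

def gaussian_loglik(x):
    """Log-likelihood of x under a Gaussian with the sample mean and std."""
    mu, sigma = x.mean(), x.std()
    return np.sum(-0.5 * np.log(2 * np.pi * sigma**2)
                  - (x - mu) ** 2 / (2 * sigma**2))

def lognormal_loglik(x):
    """Log-likelihood of x under a lognormal fitted on log(x); x must be > 0."""
    logx = np.log(x)
    mu, sigma = logx.mean(), logx.std()
    return np.sum(-logx - 0.5 * np.log(2 * np.pi * sigma**2)
                  - (logx - mu) ** 2 / (2 * sigma**2))

# Stand-in for simulated weights (hypothetical parameters, fixed seed).
rng = np.random.default_rng(0)
weights = rng.lognormal(mean=-3.0, sigma=0.8, size=3000)

ll_gauss = gaussian_loglik(weights)
ll_logn = lognormal_loglik(weights)
print("Gaussian log-likelihood :", ll_gauss)
print("lognormal log-likelihood:", ll_logn)
print("lognormal fits better   :", ll_logn > ll_gauss)
```

Applied to recorded weights, the same comparison would indicate which of the two families describes the distribution better, under the assumption that all weights are strictly positive.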

Figure 4. Strong shuffling of individual weights within the stable distribution.

A: Schematic diagram of the neuron (top blue filled circle) stimulated by a pool of 3000 uncorrelated inputs (bottom open circles). B: Evolution of the neuronal output firing rate. C: Evolution of synaptic weights in the case of uncorrelated inputs. The purple traces represent a portion of the input weights recorded every 20 s, the black thin trace corresponds to an individual weight, and the black thick dashed-dotted trace indicates the mean weight over the  inputs. Right inset: The mean weight histogram averaged over the learning epoch is plotted in purple and the spot histogram at time 1000 s is represented by the black thin line. D: Comparison between the simulated weight distribution (purple thick curve; it corresponds to the purple curve in C) and the analytical prediction (blue thick curve; same as Fig. 3) with a log scale for the weights (x-axis). The black thin curves represent a Gaussian fit (dashed-dotted) and a lognormal fit (dashed) of the simulated weight distribution, obtained using linear regression. The same baseline parameters as in Fig. 3 have been used here.


Representation of input spike-time correlations in the weight structure

The temporal “antisymmetry” (i.e., LTP versus LTD) of the learning window has been shown to favor correlated inputs, therefore generating weight specialization [1], [14], [15]. In order to examine how an input correlation structure is encoded in the weight structure by STDP, we consider the configuration in Fig. 5A that involves a small group of correlated inputs (bottom red circles) among many other uncorrelated inputs (bottom open circles). The correlated group consists of  input spike trains that have instantaneous pairwise spike-time correlations with strength  . The mean firing rate is the same for uncorrelated and correlated inputs, namely  Hz. Details about the input generation can be found in Methods (‘Generating correlated spike trains’). Only a few tens of inputs take part in the volleys of correlated spikes, which are embedded in the synaptic bombardment of the total  inputs. In comparison, in the absence of any other stimulation, the coincident spiking of more than 500 inputs is necessary to trigger an output spike. In this sense, we consider “weak” spike-time correlations in a physiologically plausible range.
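One simple way to build such inputs is sketched below. It is an assumption for illustration and not necessarily the procedure described in Methods: a shared train of reference events is drawn at the input rate, each input joins each event independently with probability `frac_sync`, and independent background spikes make up the rest so that the mean rate is preserved. The function name, the 10 Hz rate and the 1 ms bin are hypothetical choices.

```python
import numpy as np

def correlated_poisson(n_inputs, rate_hz, frac_sync, duration_s,
                       dt=1e-3, seed=0):
    """Binned spike trains with instantaneous pairwise correlations.

    Returns (spikes, sync): boolean arrays of shape (n_inputs, n_bins).
    `sync` marks the spikes that belong to shared synchronous events.
    """
    rng = np.random.default_rng(seed)
    n_bins = int(round(duration_s / dt))
    p_bin = rate_hz * dt  # spike probability per bin for each input
    # Shared reference events, drawn at the same rate as each input.
    events = rng.random(n_bins) < p_bin
    # Each input takes part in each event with probability frac_sync.
    joins = rng.random((n_inputs, n_bins)) < frac_sync
    sync = events[None, :] & joins
    # Independent background spikes supply the remaining rate.
    background = rng.random((n_inputs, n_bins)) < (1.0 - frac_sync) * p_bin
    return sync | background, sync

spikes, sync = correlated_poisson(n_inputs=20, rate_hz=10.0,
                                  frac_sync=0.2, duration_s=100.0)
mean_rate = spikes.sum() / (20 * 100.0)
frac = sync.sum() / spikes.sum()
print("mean rate (Hz):", mean_rate)
print("fraction of spikes in synchronous events:", frac)
```

In this construction `frac_sync` is the fraction of each input's spikes that falls in synchronous events; how it maps onto the correlation strength used in the paper is not specified here.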

Figure 5. Input spike-time correlations lead to robust weight specialization.

A: Schematic diagram of the neuron (top blue filled circle) stimulated by a pool of 2950 uncorrelated inputs (bottom open circles) and a pool of 50 correlated inputs (bottom red filled circles). B: Evolution of the synaptic weights for the configuration in A with weak correlation ( ). The correlated group is favored (red traces, mean in black thick solid line), synonymous with a greater chance of appearing in the tail of the distribution compared to uncorrelated inputs (purple traces, mean in black thick dashed-dotted line). Right insets: normalized time-averaged histograms. C: Survival time of the weights from uncorrelated (purple curves) and correlated (red curves) inputs in the top 20% of the distribution. Comparison between log-STDP (solid curves) and mlt-STDP (dashed curves). The y-axis indicates the number of synapses present in the top 20% at each counting round (performed every 20 s) from 100 s until the time on the x-axis. The data are averages over 20 trials. D: Similar to B with stronger correlation ( ). The weights from correlated inputs are pushed out of the main body of the distribution and saturate to a much larger value than the mean (black thick dashed-dotted line), roughly 30 times larger here.

When the inputs are only weakly correlated ( , meaning that 20% of the spikes are involved in synchronous events for each input), the weight distribution remains unimodal, as illustrated in Fig. 5B. Nevertheless, weights from correlated inputs are found more often in the tail of the distribution (red traces). In Fig. 5C, the weights from correlated inputs (red solid curve) survive for a longer time in the top 20% of the distribution compared to uncorrelated inputs (purple solid curve). The mean dwell time for both groups of inputs is given in Table 1. (Note that the “survival” here does not consider the history of the weights between the checks that are performed every 20 s. Nevertheless, this measure captures well the comparative trends in the persistence of strong weights for the different STDP models.) Weights from uncorrelated inputs are subject to shuffling only, whereas weights from correlated inputs also experience (weak) potentiation. Although the inputs remain correlated, the temporary weight structure is not robustly sustained and is erased by the noisy STDP dynamics.
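The survival measure described for Fig. 5C can be sketched as follows, assuming that weight snapshots are stored as an array with one row per counting round and one column per synapse. The function name and the toy data are hypothetical. As in the text, only the snapshots at the counting rounds are considered, not the history in between.

```python
import numpy as np

def survival_mask(snapshots, top_fraction=0.2):
    """Mark synapses that stayed in the top fraction at every round so far.

    snapshots: array of shape (n_rounds, n_synapses), one row per
    counting round. Returns a boolean array of the same shape.
    """
    snapshots = np.asarray(snapshots, dtype=float)
    # Per-round threshold separating the top fraction of the population.
    thresholds = np.quantile(snapshots, 1.0 - top_fraction,
                             axis=1, keepdims=True)
    in_top = snapshots >= thresholds
    # A synapse survives at round k only if it was in the top at rounds 0..k.
    return np.logical_and.accumulate(in_top, axis=0)

# Toy data: 3 counting rounds, 10 synapses.
snapshots = np.array([
    [0, 1, 2, 3, 4, 5, 6, 7, 8, 9],
    [0, 1, 2, 3, 4, 5, 6, 20, 7, 21],
    [0, 1, 2, 3, 4, 5, 6, 7, 8, 9],
], dtype=float)
mask = survival_mask(snapshots)
counts = mask.sum(axis=1)
print(counts)  # number of survivors at each round
```

To obtain separate curves for correlated and uncorrelated inputs, the mask columns can be summed per group, for example `mask[:, group_indices].sum(axis=1)`, while the threshold is still computed over the whole population.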

Table 1. Mean dwell time of the input weights in the top 20% of the distribution.

| | uncorrelated inputs | correlated inputs |
| --- | --- | --- |
| log-STDP | 9.0 s | 78.4 s |
| mlt-STDP | 5.2 s | 11.6 s |

The dwell times correspond to the simulation for a single neuron and weak input correlation in Fig. 5A–C.

In contrast, stronger input correlations ( , meaning that 50% of the input spikes correspond to synchronous events) can potentiate the corresponding weights to a value many times larger than the mean. In Fig. 5D, the mean weight for the 50 correlated inputs is  (with the strongest weights up to 10), as compared to  for the 2950 uncorrelated inputs. Here the drift clearly overpowers the noise to extract those weights from the main body of the distribution. Strongly potentiated weights are inhomogeneous and experience relative stability despite the noise (see the black trace of an individual weight). This occurs even for identical synaptic delays, meaning that the weight potentiation is not all-or-nothing, but rather gradual.

When synaptic inputs involve multiple correlated groups, log-STDP can sort the corresponding mean weights in increasing order of their correlation strengths; see Fig. S3 for an illustrative example. Both the slowly increasing LTD and decaying LTP contribute to this effect. The trends shown here are in agreement with previous results using the almost-additive version of nlta-STDP and the Poisson neuron model [30], which examined in depth the potentiation for several input pools with distinct correlation levels and different degrees of weight dependence. Note that, in that previous study, nlta-STDP incorporated single-spike plasticity contributions in order to sort the mean weights of the input groups depending on their correlation strengths between the lower and upper weight bounds. Here, however, log-STDP may produce a multimodal weight distribution, but the global mean of the distribution is kept small (around  ). Therefore, the weights from strongly correlated inputs are pushed to the tail of strong synapses while the majority of weights remain in the main body of weak synapses. The emerging distribution may thus be highly skewed.

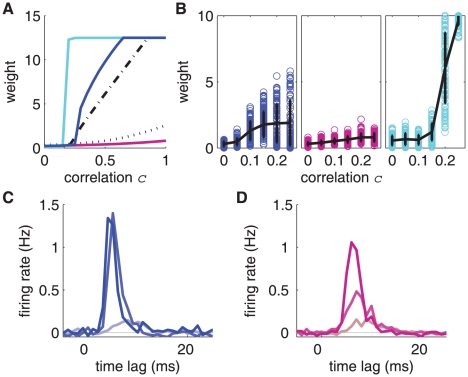

Sensitivity to input correlations

Now we examine in more detail how log-STDP is sensitive to input correlations. For any STDP model, potentiated weights imply stronger input-output correlations and, in turn, larger LTP induced by STDP. This self-reinforcing potentiation mechanism may be blocked when the weight dependence is “too” strong, though. Because of its sublinear profile for LTD and the resulting spread weight distribution, log-STDP exhibits an enhanced potentiation capability compared to mlt-STDP. Using the Poisson neuron model, we can evaluate how the equilibrium mean weight for the correlated inputs depends upon the input correlation  . This provides a qualitative prediction for the behavior of the integrate-and-fire neuron, for which a full calculation is beyond the scope of this paper. Figure 6A illustrates the predicted effect of input correlations for several STDP models; see (21) in Methods for details on the calculations. Log-STDP (blue curve) exhibits a rather steep curve for the fixed point, indicating graded but strong potentiation when input correlations increase. For comparison, we examine the model recently proposed by Hennequin et al. [22], which has a roughly piecewise profile for LTD with a slower increase for  than  (the details are provided in Supporting Information). Because of this change in curvature, this model behaves similarly to log-STDP (black dashed-dotted curve). The nlta-STDP model proposed by Gütig et al. [15] is also sensitive to input correlations. In the parameter range where nlta-STDP can induce strong potentiation ( in (28) in Methods), the equilibrium weight always exhibits a sharp step from the lower to the upper bound (cyan curve). Outside this parameter range, nlta-STDP resembles mlt-STDP, meaning weak competition. In other words, potentiation for nlta-STDP is rather all-or-nothing. In contrast to these three models, mlt-STDP (pink curve) and power-law STDP proposed by Morrison et al. [26] (black dotted curve) appear far less sensitive to input correlations. LTD in both models increases linearly with the weight, which strongly counterbalances LTP. The weak potentiation of correlated inputs by mlt-STDP explains the only minor increase of stability for the tail of the distribution in Fig. 5C (thick dashed curves) and Table 1. The weight distributions corresponding to the five STDP models are illustrated in Fig. S1 (column C). Although the predictions in Fig. 6A do not include noise, simulations in Fig. 6B for log-STDP (blue), mlt-STDP (pink) and nlta-STDP (cyan) agree with the trends. Namely, log-STDP exhibits a gradual potentiation of correlated inputs, which is intermediate between the weak increase for mlt-STDP and the all-or-nothing behavior for nlta-STDP. The number of correlated inputs also plays a role here: a larger correlated group induces stronger potentiation (as indicated by (18) in Methods), as does stronger correlation.

Figure 6. Sensitivity to input correlations.

A: Theoretical equilibrium weights plotted as a function of the input correlation. Comparison of log-STDP (blue), mlt-STDP (pink), nlta-STDP (cyan), Hennequin et al.'s model [22] (dashed-dotted black) and ‘power-law’ STDP of Morrison et al. [26] (dotted black). The parameters for the Poisson neuron and the last two models are the same as in Fig. S1. The curves are estimated using the zeros of (20), which is based on the Poisson neuron and also neglects the effect of noise. B: Simulated potentiated weights as a function of the input correlation for the same configuration as in Fig. 5B and D. Comparison of log-STDP (blue), mlt-STDP (pink) and nlta-STDP (cyan). The respective mean weights and standard deviations are represented by the black curves and error bars. The results are taken from 10 simulations. C & D: Post-stimulus time histograms of the output neuron after training (averaged over 100 s), where the stimuli are the input correlated events. Comparison between C log-STDP (blue) and D mlt-STDP (pink) for  ,  and  (from darker to lighter color, resp.). For log-STDP, the neuronal response is reliably and precisely triggered by correlated events (more than 80% occurrence) for  , whereas mlt-STDP yields 70% for  and only 50% for  .

The presence of strong weights also affects the neuronal output firing rate. The simulation for log-STDP in Fig. 5D corresponds to  Hz (black solid line in Fig. S3C). In comparison, the baseline firing rate for uncorrelated inputs stabilizes around  Hz in Fig. 4B. The larger total incoming weight in Fig. 5D alone does not explain the gap in the firing rate. Rather, this significant increase arises because input correlated events effectively cause the neuron to fire. This is confirmed by the post-stimulus time histogram of the output neuron in Fig. 6C, where correlated events are taken as the reference stimulus. The stronger the input correlations (indicated by darker color), the more strongly some weights are potentiated and the more reliably each correlated event drives the output firing. For  , the neuronal response is locked to each input correlated event with log-STDP. In Fig. 6D, mlt-STDP (darker to lighter pink) leads to a weaker and later-in-time histogram, especially for  (medium pink). The corresponding neuronal firing rate is then  Hz, almost unchanged compared to about 7 Hz for uncorrelated inputs. These results clarify that the neuronal response is robustly and precisely driven in a broader range of input correlations for log-STDP than for mlt-STDP. Note that the good overall reliability of the neuronal response even when weights are weakly potentiated (especially for mlt-STDP) is partly related to the integrate-and-fire neuron model. The difference between log-STDP and mlt-STDP is much clearer when using a Poisson neuron as shown in Fig. S5, for which the output firing probability linearly increases with the synaptic weights.
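A post-stimulus time histogram of this kind can be computed along the following lines, taking the times of the input correlated events as the stimuli. This is a minimal sketch: the function name, the 50 ms window and the 5 ms bins are hypothetical choices, and the toy spike times stand in for simulation output.

```python
import numpy as np

def psth(output_spikes, stimulus_times, window_s=0.05, bin_s=0.005):
    """Mean number of output spikes per stimulus in each post-stimulus bin.

    Returns (edges, rate): bin edges in seconds relative to the stimulus
    and the spike count per bin averaged over all stimuli.
    """
    output_spikes = np.sort(np.asarray(output_spikes, dtype=float))
    n_bins = int(round(window_s / bin_s))
    edges = np.linspace(0.0, window_s, n_bins + 1)
    counts = np.zeros(n_bins)
    for t0 in stimulus_times:
        # Select output spikes inside the window that follows this stimulus.
        lo = np.searchsorted(output_spikes, t0, side="left")
        hi = np.searchsorted(output_spikes, t0 + window_s, side="right")
        lags = output_spikes[lo:hi] - t0
        counts += np.histogram(lags, bins=edges)[0]
    return edges, counts / len(stimulus_times)

# Toy data: 10 stimuli, 8 of them followed by an output spike 12 ms later.
stimuli = np.arange(1.0, 11.0)
outputs = stimuli[:8] + 0.012
edges, rate = psth(outputs, stimuli)
print(edges)
print(rate)
```

When at most one output spike follows each event within a bin, the height of a bin can be read as the fraction of correlated events that trigger a response at that latency.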

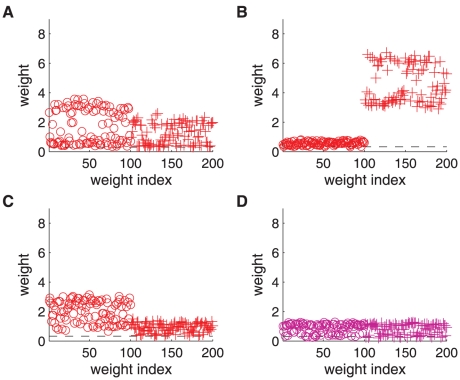

Now we show how the sensitivity to input correlations for log-STDP and mlt-STDP (Figs. 6C and D) affects the resulting synaptic competition. When two identical correlated groups (with no correlation between each other) excite a neuron, a desirable outcome is the specialization to only one of them while discarding the other. This is important to select functional pathways in a consistent manner, without “mixing” spiking information. Add-STDP and nlta-STDP can perform such a ‘symmetry breaking’, whereas mlt-STDP cannot do so [2], [15]. Because of its sensitivity to input spike-time correlations shown in Fig. 6C, we expect log-STDP to be capable of symmetry breaking, at least when input correlations are sufficiently strong. For the baseline parameters ( ) and strong correlations ( ), the first correlated group slightly dominates (circles), but does not completely repress the other group (pluses) in Fig. 7A. However, with very strong correlations ( ) in Fig. 7B, the second group clearly takes over the driving of the neuronal firing, and the red group is at the level of uncorrelated inputs (black dashed line). With still  , but tuning LTD closer to mlt-STDP with  , we obtain a similar situation to that in Fig. 7A, with no clear winner (not shown). In such winner-share-all cases, either group may slightly and temporarily dominate the other group during the simulation (and roles may swap over time), but both groups coexist in the tail of strong weights. In contrast, winner-take-all can be obtained for  as in Fig. 7A when using a more pronounced saturating LTD ( ), as illustrated in Fig. 7C. Altogether, stronger saturation for LTD and, to a lesser extent, stronger potentiation (i.e., higher values for  and  in our model, resp.) favor a winner-take-all behavior. In contrast, the same simulation as Fig. 7B with mlt-STDP not only shows weakly potentiated weights, but also fails to separate the two input groups through the learning dynamics; only a winner-share-all behavior occurs (Fig. 7D).

Figure 7. Competition between two identical strongly correlated input pools.

The configuration is similar to Fig. 5 with two groups of 100 correlated inputs each with the same strength  , in addition to 2800 uncorrelated inputs. A: Winner-share-all for  and log-STDP with  . B: Winner-take-all for  and log-STDP with  . C: Winner-take-all for  and log-STDP with  . D: Winner-share-all for  and mlt-STDP. The circles and pluses indicate the weight strengths averaged over 300 s of simulation (after the initial development of the structure) for the two correlated groups, respectively. The dashed line indicates the fixed point  of the weight dynamics.

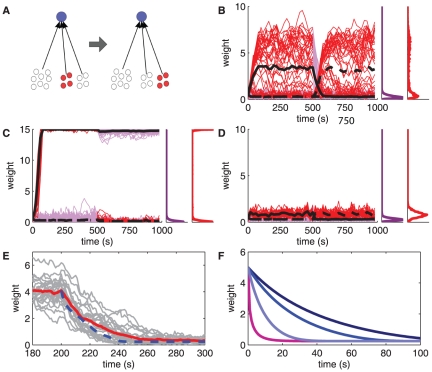

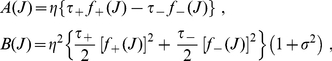

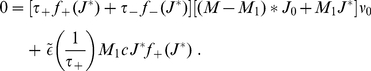

Remodeling of synaptic pathways

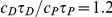

The external world to which the brain has to adapt keeps changing over time. When the input configuration changes significantly, a desirable behavior for a neuron with plastic synapses is to forget the previously learned weight structure and readapt. To compare the performance of the different STDP models, we consider a neuron receiving inputs from a large uncorrelated pool and two small pools (either uncorrelated or correlated) of 50 inputs. As illustrated in Fig. 8A, the two pools switch their correlation strengths at 500 s: before 500 s the first (second) group is strongly correlated (uncorrelated), while after 500 s the second (first) group is strongly correlated (uncorrelated). The restructuring process proceeds quite efficiently with mlt-STDP (Fig. 8D), but not with add-STDP (Fig. 8C). Because of unstable weight dynamics, add-STDP may fail to forget the previously learned structure [31]. The strong weights clustered at the upper bound then drive the neuronal output (even without input correlations), which prevents the second correlated group from being learned. The stronger the upper bound, the more difficult it is for the neuron to readapt. In contrast, even though mlt-STDP manages to readapt, the weight specialization remains weak, as explained in the previous section. Because of its well-balanced dynamics, log-STDP successfully combines the strong points of add-STDP and mlt-STDP. As shown in Fig. 8B, log-STDP rapidly selects the input pathway from the second group when it starts to show strong correlations, while rapidly weakening the pathway from the first group. Note that similar results can be obtained with nlta-STDP.

Figure 8. Remodeling of synaptic weights when the input configuration changes.

A: Schematic representation similar to Fig. 5A for two groups of 50 inputs each, which exhibit strong spike-time correlations ( , represented by the bottom red filled circles) only between 0 and 500 s for the first group and between 500 and 1000 s for the second group. B,C,D: Comparison of the evolution of the synaptic weights for B log-STDP (baseline parameters); C add-STDP with a ratio between depression and potentiation equal to  and an upper bound set to 15; and D mlt-STDP where depression is linearly increasing with the current value of the weight strength (  and  ). These three plots are similar to Fig. 5B, except that red traces indicate weights coming from correlated inputs only when correlation is turned on (purple otherwise). The black thick solid and dashed curves represent the mean weights for the first and second correlated groups, respectively. E: Decay of potentiated weights back to the baseline equilibrium value ( ) after input correlation is switched off. Simulated weights are represented by gray traces and their mean by the red thick curve. The theoretical prediction in (7) is plotted in dashed-dotted blue. F: Comparison of the predicted decay of potentiated weights for mlt-STDP (pink) and log-STDP for  ,  and  (light to dark blue, resp.). The curves correspond to (7), where  is calculated using the Poisson neuron model.

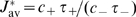

After the correlation switch at 500 s, the potentiated weights from the first correlated group return to their baseline equilibrium value, close to the fixed point […]. In a similar simulation to that in Fig. 8B, the weights stronger than 1 at 500 s are represented by the gray traces in Fig. 8E. Their decay is driven by the drift […], which is affected by the weight dependence [31]. Neglecting noise, we can use the expression in (5) to approximate the trajectory of the mean weight (black curve)

| (7) |

By integrating this formula and using the simulated firing rate for […], we obtain the blue dashed-dotted curve, which satisfactorily predicts the decaying mean weight. From (7), it is clear that a weaker drift […] leads to a longer decay time. In Fig. 8F, a more pronounced saturating LTD (i.e., larger values for […]) increases the decay time, up to several tens of seconds. In comparison, mlt-STDP (pink curve) forgets the learned structure after a much shorter period. (The trajectory for mlt-STDP is exponential [31], but a simple analytical result cannot be derived for log-STDP. The Poisson neuron model was used to evaluate […].)
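The link between a weaker restoring drift and a longer decay time can be illustrated with a toy integration. The sketch below is not equation (7): it assumes a purely deterministic restoring drift toward an equilibrium weight `w_eq`, linear for the mlt-STDP-like case and logarithmically saturating for the log-STDP-like case. The functional form, the saturation parameter `alpha`, the rate constant, the starting weight and the tolerance are all hypothetical choices made for illustration, and time is in arbitrary units.

```python
import math

def drift_mlt(w, rate=1.0, w_eq=1.0):
    """Linear restoring drift (assumed mlt-STDP-like): exponential relaxation."""
    return -rate * (w - w_eq)

def drift_log(w, alpha, rate=1.0, w_eq=1.0):
    """Sublinear restoring drift (assumed log-STDP-like): saturates for large w."""
    x = (w - w_eq) / w_eq
    if x <= 0:
        # Below equilibrium the toy model falls back to the linear drift.
        return -rate * (w - w_eq)
    return -rate * w_eq * math.log1p(alpha * x) / alpha

def decay_time(drift, w_start=3.0, w_eq=1.0, tol=0.1, dt=1e-3, t_max=1e3):
    """Forward-Euler time needed for w to come within tol of w_eq."""
    w, t = w_start, 0.0
    while w - w_eq > tol and t < t_max:
        w += dt * drift(w)
        t += dt
    return t

# Decay time for the linear drift and for increasing saturation (hypothetical alphas).
t_mlt = decay_time(drift_mlt)
t_log = {a: decay_time(lambda w, a=a: drift_log(w, a)) for a in (1.0, 5.0, 20.0)}
print(t_mlt, t_log)
```

In this toy setting the linear drift gives the shortest relaxation, and the decay time grows with `alpha` because `log1p(alpha * x) / alpha` decreases with `alpha` for any fixed `x > 0`. This mirrors the qualitative ordering described for Fig. 8F without reproducing its values.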

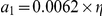

Emergence and persistence of a weight structure in a recurrently connected network

In order to assess whether the interesting dynamics produced by log-STDP for a single neuron also hold in the case of a recurrent network, we first reproduce a previous result of network self-organization [33]. The goal for STDP is to split the initially homogeneous distribution of both input and recurrent weights. As shown in Fig. 7, such a symmetry breaking requires strong competition. As illustrated in Fig. S6, log-STDP produces a clear weight structure that represents the input correlation configuration, even though the potentiation is weaker than in Fig. 5D. Here log-STDP performs as well as an almost-additive version of the nlta-STDP model in terms of competition.

Following the results in Fig. 5C, we now evaluate whether log-STDP favors the stability of strong weights in a network. As illustrated in Fig. 9A, the network neurons have plastic recurrent connections (thick arrows) and fixed input connections (thin arrows) from two pools of inputs, here 2900 with no correlation (open circles) and 100 with correlations (red filled circles). To compensate for the partial connectivity (10% for all connections), all inputs have a higher firing rate equal to 10 Hz and the input weights have been scaled up ([…]) in order to obtain neuronal firing rates in the same range as in the case of a single neuron (Fig. S7). Even without input correlation, recurrent excitatory connections induce (positive) spike-time correlations. The cross-correlograms between neurons are symmetric [33], which results in both LTP and LTD. Due to a net LTD effect, the weight distribution in Fig. 9B is slightly shifted toward smaller values (purple thick solid curve), compared to the case of feed-forward connections (black thin dashed curve). Here input correlations have a small effect on the weight distribution, as indicated by the red solid curve in Fig. 9B to be compared with the purple solid curve. The resulting interneuronal correlations are weak and comparable to the situation in Fig. 5B.

Figure 9. Stability of the emerging structure of strong weights in a recurrently connected network.

A: Schematic representation of the network with plastic recurrent connections (thick arrows) and fixed input connections (thin arrows). The network neurons (top blue filled circles) are excited by one pool of 2900 uncorrelated inputs (bottom open circles), and one pool of 100 inputs (bottom red filled circles) whose spike trains may be correlated. B: Time-averaged distributions of the recurrent weights over the learning epoch. Comparison of log-STDP (solid curves) and mlt-STDP (dashed curves) when the small group (red filled circles in A) is uncorrelated (purple) and correlated (red). C: Survival of strong synapses (top 20%) of the distribution over time. The color coding is similar to B. As in Fig. 5C, checks are performed every 5 s and the y-axis indicates the number of surviving synapses from 200 s until the time on the x-axis, cf. (8). D,E,F: Similar curves to B with different starting times […]. Comparison of D log-STDP with no correlation; E log-STDP with correlations; and F mlt-STDP with correlations. G: Ratio of presence in the top 20% at each check (every 5 s between 200 and 395 s) for the initially strongest at […] s, cf. (9). Comparison between log-STDP (solid curve) and mlt-STDP (dashed curve) for correlated inputs. The weight indices (x-axis) are sorted. The two horizontal dotted lines indicate 20% and 40%, respectively. H: Distributions of the presence ratio corresponding to G, with a log-scaled y-axis.

However, these input correlations do affect the fine structure of recurrent connections for log-STDP. To show this, we first examine the “survival” of the potentiated synapses in the top of the distribution, as in Fig. 5C. Figure 9C represents the survival of the strongest synapses from time 200 s onwards, checks being performed every 5 s. The curves correspond to the number of weights that are present in the top 20% of the distribution at each check from […] to […], in a similar fashion to Fig. 5C. Formally, we denote by […] the set of weight indices in the top 20% of the whole population at time […] (roughly […] among the total […] synapses). The curves in Fig. 9C correspond to the number of weights in

| (8) |

where […] is a multiple of 5 s. When the small pool of 100 inputs has no correlation, the number of surviving synapses in […] decreases to zero (purple solid curve). In contrast, correlated inputs allow strong synapses to survive for a longer time (red solid curve) and a few even persist until the end of the simulation. Figure 9D and E show similar curves for different starting times […]. For uncorrelated inputs, the survival time is comparable for all […] and no structure emerges. However, input correlations build up a structure (Fig. 9E), which grows larger over time.
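The survival count described above (weights present in the top 20% at every check since the starting time) amounts to a running intersection of index sets. The following is a minimal sketch under stated assumptions: the function names, the `snapshots` layout (one list of weights per check) and the toy data are hypothetical and are not taken from the simulations of Fig. 9.

```python
def top_fraction(weights, fraction=0.2):
    """Return the set of indices of the largest `fraction` of the weights."""
    k = int(round(fraction * len(weights)))
    order = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
    return set(order[:k])

def survival_counts(snapshots, fraction=0.2):
    """Count the indices found in the top fraction at every check so far."""
    survivors = None
    counts = []
    for weights in snapshots:
        tail = top_fraction(weights, fraction)
        # Running intersection: a weight survives only if it never left the tail.
        survivors = tail if survivors is None else survivors & tail
        counts.append(len(survivors))
    return counts

# Toy data: 10 weights observed at 3 successive checks (top 20% = 2 weights).
snapshots = [
    [9, 8, 1, 2, 3, 0, 0, 0, 0, 0],  # tail = {0, 1}
    [9, 1, 8, 2, 3, 0, 0, 0, 0, 0],  # tail = {0, 2}, survivors = {0}
    [1, 9, 8, 2, 3, 0, 0, 0, 0, 0],  # tail = {1, 2}, no survivor left
]
print(survival_counts(snapshots))  # [2, 1, 0]
```

By construction the counts can only decrease over time, which is why a curve that stays above zero until the end of the simulation indicates a persistent set of strong weights. Changing the starting time corresponds to dropping the leading snapshots before calling `survival_counts`.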

Compared to log-STDP, the weights are shuffled more quickly with mlt-STDP and no structure develops. This is illustrated in Fig. 9C by the thick dashed curves, to be compared with the thick solid curves. The survival time of strong weights for correlated inputs with mlt-STDP (red dashed curve) is even shorter than that for uncorrelated inputs with log-STDP (purple solid curve). The mean dwell time for the 6000 weights that last the longest in the top 20% is given in Table 2. Note that only a few recurrent weights persist in the tail for a long time compared to the input weights of a single neuron (leading to smaller values compared to Table 1), because the correlations between network neurons are quite weak here.

Table 2. Mean dwell time for the 6000 recurrent weights that last the longest in the top 20% of the distribution.

| | without input correlation | with input correlation |
| --- | --- | --- |
| log-STDP | 7.2 s | 9.0 s |
| mlt-STDP | 4.5 s | 5.0 s |

The dwell times correspond to the simulations of the recurrent network in Fig. 9C. Because roughly two thirds of the […] initial weights in the tail (top 20%) at 200 s disappear at the following counting round, only the 6000 weights with the longest dwell times are taken into account here.

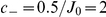

Finally, we assess the persistence of weights in the strong tail in another manner. Because input correlations are sustained here, it makes sense to check how many times each weight appears in the strong tail. The repeated presence of weights in the tail implies some consistency for an emerged weight structure, even though some weights get repressed and pushed out at some times. We thus calculate for each weight […] the ratio of presence in the strong tail between 200 and 395 s (40 checks), namely

| (9) |

where […] is the characteristic function, valued 1 when its argument is true. The […] highest ratios […] are plotted in Fig. 9G in rank order for log-STDP (red solid curve) and mlt-STDP (red dashed curve) when inputs have correlations. The (smoothed) histograms of […] for the whole population are represented in Fig. 9H. As expected, we find more weights with a higher ratio […] for log-STDP than for mlt-STDP, meaning that the tail of strong weights is more stable over time. In the extreme case where the synaptic dynamics is very noisy, the weights in the strong tail are as if chosen by a random draw of […] weights among the total […]. Here it corresponds to the average presence ratio of 20% (lower horizontal dotted line in Fig. 9G) and the standard deviation […], as a random draw of a portion […] of elements within the whole pool […] checks. We set a significance threshold for the ratios […] at three times the standard deviation above the mean (the upper horizontal dotted line in Fig. 9G indicates 40%). For a random draw every 5 s (thin dashed-dotted curve), only 130 weights among the total […] have a ratio […]. With mlt-STDP, 2142 weights satisfy […], but only 46 weights […]. This is much lower than the figures for log-STDP, for which about a third of the tail, namely 6351, have […] and 1075 weights