Abstract

Objectives

Many technologies intended for patient use are never developed or evaluated with principles of user-centered design. In this review, we explore different approaches to assessing usability and acceptability, drawn from selected exemplar studies in the health sciences literature.

Data sources

Peer-reviewed research manuscripts were selected from Medline and other data sources accessible through pubmed.gov. We also present a framework for developing patient-centered technologies that we recently employed.

Conclusions

While there are studies utilizing principles of user-centered design, many more do not report formative usability testing results and may only report post-hoc satisfaction surveys. Consequently, adoption by user groups may be limited.

Implications for Nursing Practice

We encourage nurses in practice to look for and examine usability testing results prior to considering implementation of any patient-centered technology.

Keywords: Usability, Acceptability, Patient-centered, Informatics

Computer technologies are increasingly available for patients with cancer. However, many technologies intended for patient use are never developed or evaluated according to established principles of user-centered design. This systematic design approach focuses on users’ perspectives and experiences throughout the software development life cycle, with the goal of identifying user needs and ensuring the development of a user-friendly application.1 While the principles of user-centered design are common in other fields (e.g., the aviation, nuclear power, and automotive industries), these practices may be overlooked in health care, where end users have often been required to adapt to the interface rather than the interface being adapted to the end users.2, 3 Too often, computer applications have been developed by programmers and project staff who merely assume that they understand the end users’ needs.4 This can result in a product that does not adequately meet the needs of its intended audience. Poor design and testing of the user interface may both confound and alter the process of data collection and the delivery of interventions.5 It may also deter adoption by potential users, including other researchers, clinicians, and patients. Further, as computer applications for oncology patients incorporate more sophisticated features (longitudinal use, home use, interfaces for mobile devices, delivery of educational interventions based on screening results, etc.), it becomes imperative that patient interactions with these features are carefully evaluated during the design and deployment phases to ensure a high degree of usability and application fidelity.

By identifying the relevant end users and including them in every stage of development, from the initial needs analysis to the usability testing of prototypes, developers make a well-used and successful product more likely.4 This can be achieved by following the principles of user-centered design, an approach that seeks to understand who the user is (or may be) and, just as importantly, the context in which the product is used. The field combines elements of numerous disciplines, and its application can range from employing a full user-research team throughout the product creation process to simply taking advantage of quick, informal tools such as card sorting.

Rubin and Chisnell6 defined three foundations for user-centered design: an early focus on users and tasks, empirical measurement of products, and iterative design. Whether the product in question is a television remote control or an electronic medical records system, user-centered design is meant to ensure that products have a high degree of usability. By taking advantage of this deliberate approach to design, patient-centered technologies can be made more usable and thus more effective adjuncts to clinical cancer care.

How is usability testing typically conducted? The basic process is to observe actual or potential users as they interact with an application with little or no assistance. Testing can be conducted in a laboratory, in a participant’s environment (for example, their home or office), or remotely.5 Usability testing need not be complex or costly: the prominent usability scholar Jakob Nielsen has claimed that with three to five users, a development team will find most of the problems.7, 8 Multiple techniques may be involved in usability testing, and they can be applied in settings ranging from a high-tech usability laboratory to a patient’s home (Table 1).
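Nielsen’s claim rests on a simple probabilistic model described by Nielsen and Landauer:7 if each test user independently uncovers a given problem with probability L, the expected share of problems found by n users is 1 − (1 − L)^n. The sketch below assumes L = 0.31, the average Nielsen reported across his own projects;8 real values vary by application and user group, so this is an illustration rather than a sample-size rule.

```python
def proportion_found(n_users: int, detect_prob: float = 0.31) -> float:
    """Expected share of usability problems uncovered by n independent test users.

    Assumes every problem has the same per-user detection probability
    (detect_prob), a simplification of the Nielsen-Landauer model.
    The default of 0.31 is an assumed average, not a universal constant.
    """
    if not 0.0 <= detect_prob <= 1.0:
        raise ValueError("detect_prob must be between 0 and 1")
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    return 1.0 - (1.0 - detect_prob) ** n_users


if __name__ == "__main__":
    # Show how the expected share of problems found grows with each added user.
    for n in (1, 3, 5, 10, 15):
        print(f"{n:>2} users: {proportion_found(n):.0%} of problems found")
```

Under the assumed L of 0.31, five users are expected to surface roughly 84% of problems, which is the basis of the “test with five users” heuristic; with a smaller L, many more users would be needed to reach the same coverage.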

Table 1.

Usability techniques defined and typical testing locations.

| Technique | Description | Location |
|---|---|---|
| Card Sorting | Allows users to provide input on grouping content. They are asked to sort note cards that list content areas in a way that makes sense to them. Conducted during planning stages. | Flexible |
| Contextual Inquiry | Visit typical users in the environment where they would interact with the product. Used to gain insight on how the product should be designed to meet the needs of the audience. Conducted during planning stages. | Participant’s environment (home, office or anywhere they’d interact with the technology) |
| Participatory Design | Including users and other stakeholders in the process of planning the initial design. May start with initial exploration of the problem(s) being addressed by the potential product. May involve paper mockups of screens etc. Conducted during planning stages. | Flexible – often a conference room with access to white boards. |
| Eye Tracker | Device used to track and record eye movements when viewing an interface. Good for determining whether users are examining the UI in ways that may not have been expected or if they are missing elements. Typically done after an initial version of the UI is complete. | Usability Lab |
| Paper Prototypes | Simple method for getting user feedback via paper-based wireframes. Conducted during design/testing phase. | Flexible |
| Remote Testing | Indirect usability testing – software may be used to record a participant’s actions. Done when potential participants are not easily accessible. Conducted during design/testing phase. | Flexible, but special software may be needed. |
| Task-based Testing | Provide participants with list of key tasks and track steps taken and success rate. Can be used with Think Aloud. Conducted during design/testing phase. | Flexible – any location where they can access the technology. |
| Think Aloud (Talk Aloud/Cognitive Walkthrough) | Participants are told about the product and a set of tasks. They are asked to “think aloud” to verbally describe what they’re thinking, doing and feeling. Conducted during design/testing phase. May be repeated as the design is refined. | Flexible – any location where a version of the product can be accessed. |
| Heuristic Evaluation | Review of the interface based on a set of common usability principles. Typically each element of a user interface is evaluated on relevant heuristics and any concerns are ranked with an indication of their severity. Conducted during design/testing phase. Easy to do and often done iteratively. | Flexible. |
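Card sorting results are often summarized as a pairwise similarity score: the share of participants who placed two cards in the same group. The sketch below computes that score from a few sorts; the card labels and data are hypothetical and invented for illustration, and real studies often go on to cluster the resulting matrix.

```python
from collections import Counter
from itertools import combinations

# Hypothetical card sorts from three participants. Each sort is a list of
# groups, and each group is a set of card labels; labels are illustrative only.
sorts = [
    [{"Side effects", "Medications"}, {"Appointments", "Contact nurse"}],
    [{"Side effects", "Medications", "Contact nurse"}, {"Appointments"}],
    [{"Side effects"}, {"Medications", "Appointments", "Contact nurse"}],
]


def co_occurrence(sorts):
    """Return {(card_a, card_b): share of participants who grouped the pair together}.

    Assumes each participant places every card in exactly one group.
    Pairs never grouped together are simply absent from the result.
    """
    counts = Counter()
    for groups in sorts:
        for group in groups:
            # Sorting gives each unordered pair a single canonical key.
            for pair in combinations(sorted(group), 2):
                counts[pair] += 1
    return {pair: count / len(sorts) for pair, count in counts.items()}


if __name__ == "__main__":
    similarity = co_occurrence(sorts)
    # Pairs grouped together most often may belong together in the navigation.
    for (a, b), share in sorted(similarity.items(), key=lambda item: -item[1]):
        print(f"{a} + {b}: {share:.0%}")
```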

In this review, we explore different approaches to assessing usability and acceptability, drawn from selected studies in the health sciences literature that explore computer technologies for patients with cancer. We also present a framework for developing patient-centered technologies that we recently employed and make recommendations for other researchers.

Methods

We conducted a broad search of the biomedical literature from Medline and other sources using pubmed.gov, which is developed and maintained by the National Center for Biotechnology Information (NCBI) at the U.S. National Library of Medicine (NLM), part of the National Institutes of Health (NIH).9 The search was limited to the last five years and to studies related to usability and acceptability testing of computer applications for patients with cancer. Permutations of the following keywords were used: ‘oncology, cancer, acceptability, usability’. Articles with stated research objectives or study aims involving usability and acceptability testing were compiled into a preliminary list (n=25). We reviewed this list more closely and ranked articles on a scale of 1–5, with 5 representing the most detailed explanations of formative testing procedures. A total of 7 studies received a rank of 5. These studies were given a more in-depth review, and three were selected as exemplars based on the diversity of their usability frameworks, application designs, and testing procedures.

Exemplar Studies

Reichlin and colleagues10 explored the acceptability and usability of an interactive web-based game intended to help men with prostate cancer in their treatment decision process. The stated objective was to gain user feedback on an alpha version of the application regarding the usability and feasibility of the game, and to guide future iterations. Specifically, the researchers evaluated 1) whether users would accept the game as a decision aid; 2) whether users could navigate it easily as intended; and 3) whether it increased confidence and participation in decision making. The application was designed so that users could state their preferences regarding possible side effects associated with four typical treatments. The graphical interface, which was designed with participatory input from prior unpublished work, involved two rounds of play, each with a computer-guided orientation round. Two different metaphors were used in this decision support aid: simulated playing cards (Figure 1) to help patients rate side effects of different treatments, and a slot machine to communicate the probabilities of different side effects.

Figure 1. Simulated playing cards for rating side effects.10

Source: http://www.jmir.org/2011/1/e4/, (c); distributed under Creative Commons Attribution License

With respect to application design, the researchers reported involving users (n=13) throughout the design and build process. The sample comprised men with a recent diagnosis of localized prostate cancer who had already made a treatment decision. Participants were first observed using the game and were then given an investigator-developed survey on the usability and acceptability of the application. Following the survey, the men participated in one of three focus groups. Survey results indicated that participants found the game to be an effective method of providing information on treatments and side effects. Respondents also identified three features that needed improvement: the display of time periods for side effects, the use of the slot-machine ‘spinner’, and the display of the final, highest-ranked treatments.

Analysis of focus group data found that participants appreciated being able to generate lists of questions for their clinicians. In general, participants reported that the game was acceptable and useful in the decision-making process. They also wanted a greater level of detail about side effects, a greater range of treatment options, more transparency about the data underlying the probabilities presented, and more data on longer-term outcomes. The researchers also highlighted participants’ desire for personalization, that is, for the application to elicit user data (age, marital status, physical health) and use these data within the application.

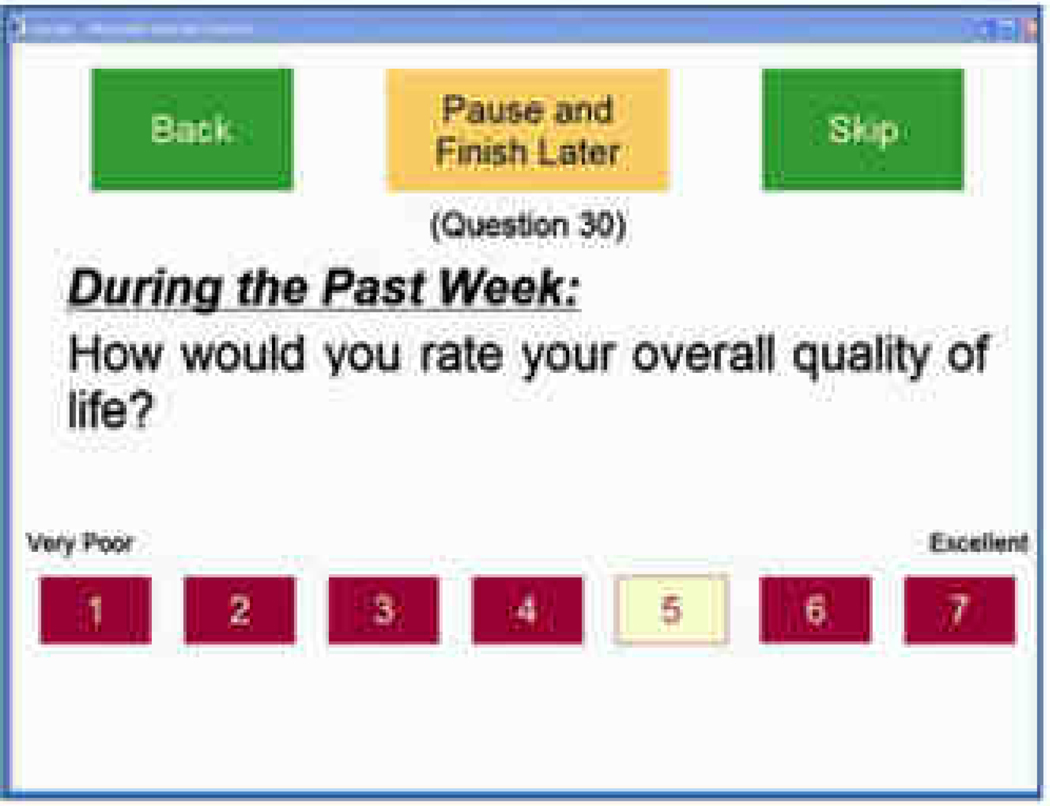

Noting the importance of ensuring usability for patients with limited computer experience and with vision and dexterity issues, Fromme, Kenworthy-Heinige, and Hribar11 reported the development process for a tablet computer application designed to help patients report symptom and quality of life information. The team began by gathering user requirements from participating oncologists; chief among these was a high degree of ease of use, given the limited computer literacy and the functional limitations of the target audience. During the user-testing phase, they observed men with prostate cancer (n=7) interacting with the application and then interviewed them. The application was later assessed with a larger group of patients (n=60) with diverse cancer types; these sessions were not observed and no interviews were conducted, but an ‘ease-of-use’ survey was administered. The graphical web interface for displaying symptom and quality of life questions was based on National Cancer Institute guidelines (now adopted by all of the national institutes),12 along with heuristic evaluations drawn from the literature. The researchers provide a thorough discussion of how vision issues guided their selection of font sizes (18–27 point), their choice of a sans-serif font, and their use of primary colors for navigational elements to counter age-related changes in the lens and cornea. In line with this approach, the researchers used larger boxes in place of radio buttons, given the small target area of native HTML radio button elements, and color-coded the selected response options (Figure 2). Other design considerations included displaying only one question at a time, so that the largest font sizes and interface elements could be used without requiring scrolling, and having the survey automatically advance to the next question after each response. To simplify the interface further, the researchers disabled the browser’s navigational controls and added a pause button.

Figure 2. Example survey item, reproduced with permission.11

Direct observation using ‘talk aloud’ approaches and subsequent interviews with the participants (n=7) revealed issues related to delays in the conditional coloring of response boxes after an answer was selected, screen visibility, tablet settings (the stylus button accidentally brought up context menus), and the tablet itself switching from portrait to landscape orientation. Results from the 8-item survey given to the larger sample (n=60) indicated high ease-of-use scores overall; although patients over 65 had statistically significantly lower scores, their reported ease of use was still quite high.

Stoddard and colleagues13 reported on efforts to test and refine a smoking cessation website created by the National Cancer Institute (NCI). The original prototype was designed using NCI recommendations,12 with content developed and edited by communication experts for a 6th-grade reading level. The authors made revisions based on feedback from other usability experts at NCI and also developed a formal usability testing plan. The first stage of this plan involved identifying a list of critical tasks that most users would need to perform, such as knowing where to find information about medications on the website and how to print copies. Five participants, all current smokers interested in quitting, took part in usability tests, which were videotaped and conducted in a private room with an adjoining observation room. As participants used the website and completed critical tasks, they were encouraged to use the ‘talk aloud’ approach. Two rounds of usability testing took place. In the first round, participants were assessed on whether each of nine tasks could be completed within one minute, and they were also given an internally constructed 10-item satisfaction survey. The overall task completion rate in the first round was 42.3%, and none of the tasks involving finding specific information was completed successfully. The website was then modified, including moving or eliminating information and editing labels and navigation elements. In a subsequent round of testing with seven new participants, the overall task completion rate increased more than two-fold to 89%, which was deemed acceptable for meeting the site’s overall testing goals. Responses to the 10-item satisfaction survey after the second round showed increases in satisfaction on many of the questions.
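Task completion rates like those reported by Stoddard and colleagues can be computed from raw session records in a few lines. This is a minimal sketch under assumed data: the participant-by-task timings are hypothetical, and we assume a task counts as completed only when it is finished within the one-minute limit used in their first round.

```python
from typing import List, Optional

TIME_LIMIT_S = 60.0  # one-minute criterion, mirroring the first round described above

# Hypothetical data: rows are participants, columns are tasks; each value is the
# time in seconds needed to finish the task, or None if it was abandoned.
results: List[List[Optional[float]]] = [
    [35.0, None, 72.0],
    [20.0, 48.0, None],
    [None, 55.0, 40.0],
]


def completed(seconds: Optional[float], limit: float = TIME_LIMIT_S) -> bool:
    """A task counts as completed only if it was finished within the time limit."""
    return seconds is not None and seconds <= limit


def task_rates(results: List[List[Optional[float]]]) -> List[float]:
    """Completion rate for each task across all participants."""
    n_tasks = len(results[0])
    return [
        sum(completed(row[t]) for row in results) / len(results)
        for t in range(n_tasks)
    ]


def overall_rate(results: List[List[Optional[float]]]) -> float:
    """Share of all participant-task attempts that were completed."""
    attempts = [completed(seconds) for row in results for seconds in row]
    return sum(attempts) / len(attempts)


if __name__ == "__main__":
    print("Per-task rates:", [f"{r:.0%}" for r in task_rates(results)])
    print(f"Overall rate: {overall_rate(results):.1%}")
```

Per-task rates show which specific tasks need redesign, while the overall rate summarizes a round. Applied to the reported figures, 89% / 42.3% ≈ 2.1, consistent with the two-fold increase described above.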

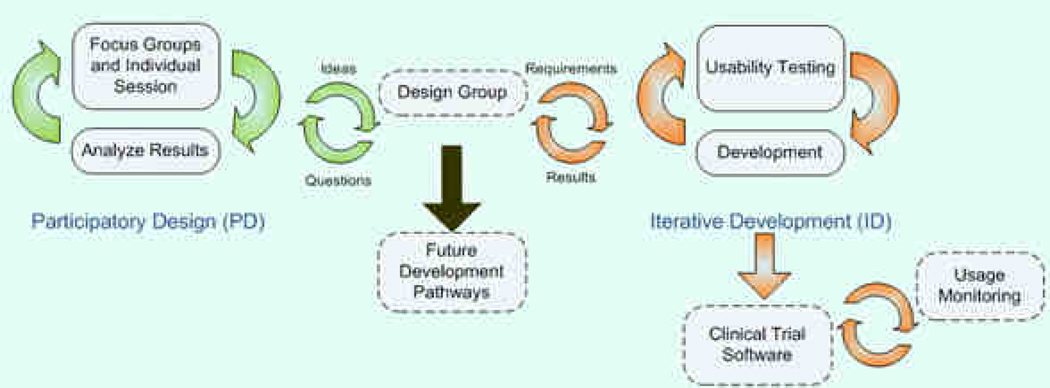

Within our own research, we have focused on developing and evaluating the Electronic Self Report Assessment – Cancer (ESRA-C) computer platform, which is designed for use by patients throughout the ambulatory services of a comprehensive cancer center. We have reported elsewhere that both patient and clinician users reported high acceptability and that the application significantly increased discussion of symptom and quality of life issues in clinic visits.14, 15 We recently completed data collection in a second randomized clinical trial examining the impact of ESRA-C on patients’ verbal reports of problematic issues and on symptom distress levels at the end of therapy. Because the new intervention was substantially more complex at the patient level, we focused on systematic development and testing with a fully patient-centered approach, making few assumptions about patient needs and requests. The design process for this application included needs assessment, analysis, design/testing/development, and application release, as suggested by Mayhew.16 Interaction with participants reflects the testing phase of this lifecycle, and by virtue of iterative development, we were able to identify new user needs.

Figure 3 describes the two iterative methods that were used to further develop ESRA-C and extend it: participatory design (PD)17 and iterative development (ID). The cycle on the left used focus groups, individual interviews, and mock-up prototypes to assess the ESRA-C content and experience, particularly the reporting and viewing of patient-centered assessment data. We also assessed how the users wanted to integrate their assessments into a personal health record and how they preferred to control access to this information. Findings from focus groups and individual interviews were brought back to a subset of investigators comprising the Design Group who evaluated the findings of the PD process in light of architectural, technical, and budget concerns, and determined which needs could be addressed with the patient-oriented ESRA-C, and which must be deferred for future development.

Figure 3. Iterative Development Model for ESRA-C.

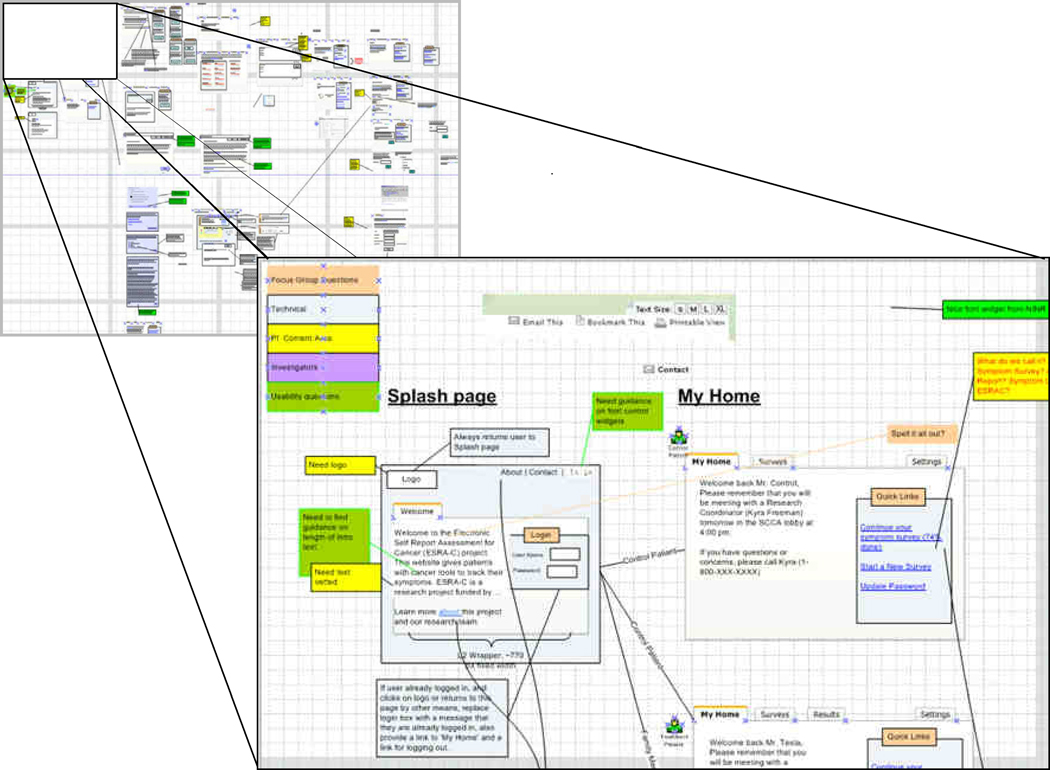

The cycle on the right used an iterative process of development and usability testing to evaluate solutions proposed to meet the project requirements. We began this process concurrently with the PD process, as our previous experience with the clinician-oriented ESRA-C15 gave us several areas with which to begin. We iteratively integrated feedback from both the usability sessions and the needs assessment activities into new mockups of ESRA-C for use in subsequent usability testing sessions and into a storyboard (Figure 4) depicting how the user would move through the application. Data were captured for later analysis with an external video camera and a Tobii T60 eye tracker.18 The results have been analyzed, incorporated into the newest version of ESRA-C, and will be published elsewhere.

Figure 4. Enlarged view of usability testing in the ESRA-C storyboard.

A number of key findings and implications for practice emerged from our review of these three exemplar studies and from our own research experience. A summary of the exemplar studies is given in Table 2, followed by a discussion of implications for clinical practice.

Table 2.

Summary of Exemplar Patient-centered Technology Usability Studies

| Authors | Application | Methods & Sample | Relevant Findings |
|---|---|---|---|
| Reichlin et al.10 | Decision support aid: web-based interactive game for men with prostate cancer to aid in treatment decision making. | Alpha version tested with direct observation, a survey, and focus groups with men who had already made a treatment decision (n=13). | Application reported as acceptable and useful; usability problems identified with some navigation elements; desire for sourcing of statistical information and for personalization. |
| Fromme, et al11 | Screening application: tablet computer application for administering a 30-item quality of life survey. | Assessed user requirements from oncologists, built the application, and conducted individual usability tests (n=7) along with semi-structured interviews. Also administered ‘ease-of-use’ surveys to participants (n=60). | High degree of usability found; lower in older patients but still quite high overall. Modifications required included faster color indication of response option selection. Difficulties with tablet and stylus. |
| Stoddard et al13 | Information search and retrieval application: website for smoking cessation. | Original application designed with usability experts and based on NCI guidelines. Defined critical tasks and observed participants (n=5) attempting them, using the ‘talk aloud’ approach. Modifications were made and another round of testing took place (n=7). Also administered a 10-item satisfaction survey. | Less than half of the tasks were completed successfully; after revisions, almost 90% of tasks were completed successfully by a new group. Initial designs were based on input from usability experts, but many problems were found when the site was tested with patients. |

Issues to Consider

As oncology nurses consider employing, adapting, or creating patient-centered technologies, we recommend considering three main issues: a) participatory involvement of users at early stages, b) adherence to design guidance, and c) the benefits of multiple approaches to evaluation.

Participatory involvement

The exemplar studies are noteworthy for engaging patients in the design and build process, capturing not only their input on potentially useful features during the planning stages but also their evaluations of working prototypes. This involvement is central to user-centered design. Fromme, et al11 provided a very clear description of user involvement in the design process. For content experts, even with usability experts on the development team, it can be very easy to assume that ‘we know’ what the patient would want in the application and how easy it is to use. In finding that more than half of the critical task attempts failed with an original application designed with input from content and usability experts, Stoddard and colleagues13 emphasized how reliance on experts can lead to over-estimating the ease of use for the target audience.

Adherence to design guidance

While designs need evaluation through usability testing, in many cases there are established evidence-based guidelines. Fromme, et al11 and Stoddard, et al13 provided clear examples of applying published recommendations to user interface design. In both cases, the researchers cited guidelines from the National Cancer Institute (NCI).12 These guidelines are available online and provide excellent overviews on various usability methods, as well as downloadable templates.

Benefits of multiple approaches to evaluation

It is not uncommon for investigators to assess usability by administering post-hoc surveys that elicit measures on ‘satisfaction’ and ‘acceptability’ of the computer application. Velikova and colleagues19 made a coherent argument concerning potential ceiling effects with such measures and asserted that measuring satisfaction may not be an appropriate sole outcome measure for patients with cancer. The studies we reviewed are noteworthy in employing multiple approaches to assessing usability rather than solely administering a post-hoc survey and for involvement of patients early in the development process. Fromme, et al11 incorporated observation of critical tasks, structured interview, and survey techniques. These researchers extended their testing beyond the application itself and examined the usability of the physical device, finding several limitations of the tablet and stylus. Stoddard, et al13 reported in detail on identifying and quantifying critical tasks. The researchers worked this assessment into a round of iterative development to assess the impact of design modifications and then used a second sample of naïve users for re-testing.
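A high mean satisfaction score can hide problems when most respondents cluster at the top of the scale. As a minimal sketch of one way to check for this, the code below reports the share of respondents at the maximum score. The ratings are hypothetical, and the 15% threshold is a commonly used rule of thumb that we assume here; it is not drawn from Velikova and colleagues.

```python
from typing import Sequence

# Rule-of-thumb threshold (an assumption, not taken from this review): more than
# 15% of respondents at the maximum score is often read as a possible ceiling effect.
CEILING_THRESHOLD = 0.15

# Hypothetical 1-5 satisfaction ratings from ten respondents.
ratings = [5, 5, 4, 5, 5, 5, 4, 5, 5, 3]


def ceiling_share(scores: Sequence[float], max_score: float) -> float:
    """Share of respondents who gave the highest possible score."""
    if not scores:
        raise ValueError("scores must not be empty")
    return sum(1 for s in scores if s >= max_score) / len(scores)


if __name__ == "__main__":
    share = ceiling_share(ratings, max_score=5)
    print(f"{share:.0%} at ceiling; possible ceiling effect: {share > CEILING_THRESHOLD}")
```

When a large share of respondents sit at the ceiling, the survey cannot distinguish a good design from a better one, which is one reason to pair satisfaction measures with observation and task-based testing.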

Conclusions and Recommendations

User-centered design principles involve participatory input and iterative design cycles.20 In the studies reviewed here, and in our own research experience, involving users in the design and testing process yielded important insights into the applications and into ways to refine them to make them more user-friendly. Failure to identify important design flaws can not only decrease diffusion and adoption rates but also threaten the integrity of the research data being collected. Another key practice implication was the use of design guidance during the design process. In Table 4 we list selected usability resources that we have found especially useful. We suggest that researchers consider adopting multiple approaches to assessing usability. These approaches can help validate findings and alert researchers to new concerns. Particularly relevant is the need for iterative development, an important part of successful application design.

Table 4.

Selected Usability Resources

| Resource |
|---|
| Usability.gov Guidelines: www.usability.gov/guidelines |
| Website and books by Jakob Nielsen: www.useit.com |
| Stephen Krug’s book “Don’t Make Me Think” about usability principles.21 |
| Diverse articles on usability testing: www.boxesandarrows.com |
| IBM’s site on user-centered design: www-01.ibm.com/software/ucd/ucd.html |
| European Union funded site dedicated to promoting usability: www.usabilitynet.org |

In summary, we recommend that nurses and investigators with clinical oncology expertise collaborate with representative end users, developers, and vendors early in the research process, and build sufficient time into project timelines to secure participant involvement in the design and testing phases. Conducting usability evaluations does not need to be a large, costly undertaking with eye trackers and screen recording software. Rather, consider working with paper-based mockups with some representative target users, and iteratively move toward interviewing and observing target users as they work with rough prototypes on the computer. Build evaluation steps into your research, include the results in research reports, and, above all, involve the user.

Acknowledgements

Special thanks to Justin McReynolds and Greg Whitman for assistance with development and testing of Electronic Self Report Assessment – Cancer (ESRA-C) and to Dr. William Lober for conceptualizing and illustrating the development model for ESRA-C.

Funding: This work was supported in part by the National Institutes of Health, National Institute of Nursing Research NR008726 (Berry, PI).

Footnotes


Work completed at the University of Washington, Seattle, WA

Contributor Information

Seth Wolpin, Department of Biobehavioral Nursing and Health Systems, University of Washington School of Nursing, Seattle, WA.

Mark Stewart, Department of Biobehavioral Nursing and Health Systems, University of Washington School of Nursing, Seattle, WA.

References

- 1. Kinzie MB, Cohn WF, Julian MF, Knaus WA. A user-centered model for web site design: needs assessment, user interface design, and rapid prototyping. J Am Med Inform Assoc. 2002 Jul–Aug;9(4):320–330. doi: 10.1197/jamia.M0822.

- 2. Zhang J. Human-centered computing in health information systems. Part 1: analysis and design. J Biomed Inform. 2005 Feb;38(1):1–3. doi: 10.1016/j.jbi.2004.12.002.

- 3. Johnson CM, Johnson TR, Zhang J. A user-centered framework for redesigning health care interfaces. J Biomed Inform. 2005 Feb;38(1):75–87. doi: 10.1016/j.jbi.2004.11.005.

- 4. Samaras GM, Horst RL. A systems engineering perspective on the human-centered design of health information systems. J Biomed Inform. 2005 Feb;38(1):61–74. doi: 10.1016/j.jbi.2004.11.013.

- 5. Kushniruk A. Evaluation in the design of health information systems: application of approaches emerging from usability engineering. Comput Biol Med. 2002 May;32(3):141–149. doi: 10.1016/s0010-4825(02)00011-2.

- 6. Rubin J, Chisnell D. Handbook of Usability Testing: How to Plan, Design, and Conduct Effective Tests. 2nd ed. Indianapolis, IN: John Wiley and Sons, Inc; 2008.

- 7. Nielsen J, Landauer TK. A mathematical model of the finding of usability problems. In: Proceedings of the INTERACT '93 and CHI '93 Conference on Human Factors in Computing Systems. Amsterdam, The Netherlands: ACM; 1993.

- 8. Nielsen J. Why you only need to test with 5 users. 2000. http://www.useit.com/alertbox/20000319.html. Accessed 3/3/2011.

- 9. National Library of Medicine. PubMed Help and FAQs. http://www.ncbi.nlm.nih.gov/books/NBK3827/#pubmedhelp.FAQs. Accessed 2/16/2011.

- 10. Reichlin L, Mani N, McArthur K, Harris AM, Rajan N, Dacso CC. Assessing the acceptability and usability of an interactive serious game in aiding treatment decisions for patients with localized prostate cancer. J Med Internet Res. 2011;13(1):e4. doi: 10.2196/jmir.1519.

- 11. Fromme EK, Kenworthy-Heinige T, Hribar M. Developing an easy-to-use tablet computer application for assessing patient-reported outcomes in patients with cancer. Support Care Cancer. 2010 May 29; doi: 10.1007/s00520-010-0905-y.

- 12. Usability.gov: Your guide for developing usable & useful Web sites. http://usability.gov/guidelines/index.html. Accessed 2/20/2011.

- 13. Stoddard JL, Augustson EM, Mabry PL. The importance of usability testing in the development of an internet-based smoking cessation treatment resource. Nicotine Tob Res. 2006 Dec;8 Suppl 1:S87–S93. doi: 10.1080/14622200601048189.

- 14. Wolpin S, Berry D, Austin-Seymour M, et al. Acceptability of an Electronic Self-Report Assessment Program for patients with cancer. Comput Inform Nurs. 2008 Nov–Dec;26(6):332–338. doi: 10.1097/01.NCN.0000336464.79692.6a.

- 15. Berry DL, Blumenstein BA, Halpenny B, et al. Enhancing Patient-Provider Communication With the Electronic Self-Report Assessment for Cancer: A Randomized Trial. J Clin Oncol. 2011 Jan 31; doi: 10.1200/JCO.2010.30.3909.

- 16. Mayhew DJ. The Usability Engineering Lifecycle: A Practitioner’s Handbook for User Interface Design. San Francisco: Academic Press; 1999.

- 17. Muller M. Participatory design: the third space in HCI. In: Jacko J, Sears A, editors. The Human-Computer Interaction Handbook. Mahwah (NJ): Lawrence Erlbaum Associates; 2003.

- 18. Tobii T60 & T120 Eye Tracker. 2011. http://www.tobii.com/en/analysis-and-research/global/products/hardware/tobii-t60t120-eye-tracker/. Accessed 2/10/2011.

- 19. Velikova G, Brown JM, Smith AB, Selby PJ. Computer-based quality of life questionnaires may contribute to doctor-patient interactions in oncology. Br J Cancer. 2002 Jan 7;86(1):51–59. doi: 10.1038/sj.bjc.6600001.

- 20. Gould JD, Lewis C. Designing for usability: key principles and what designers think. Commun ACM. 1985;28(3):300–311.