Abstract

Although the roles of shape and pigmentation cues in face categorization have been studied in detail, the time-course of their processing has remained elusive. We measured participants’ hand movements via the computer mouse en route to male or female responses (gender task) or young or old responses (age task) on the screen. Participants were presented with male and female faces (gender task) or with young and old faces (age task) that were typical, shape-atypical, or pigmentation-atypical. Before settling into correct categorizations, the processing of atypical cues led hand trajectories to deviate toward the opposite gender or age category. A temporal analysis of these trajectory deviations revealed dissociable dynamics in shape and pigmentation processing. Pigmentation had a privileged, early role during gender categorization, preceding shape effects by approximately 50 ms and preceding pigmentation effects in age categorization by 100 ms. In age categorization, however, pigmentation and shape began to exert their influence simultaneously. Pigmentation also had a more dominant influence than shape throughout both gender and age categorization. The results reveal the time-course of shape and pigmentation processing in gender and age categorization.

A glimpse of another’s face reveals a person’s identity, emotional state, and the social categories to which he or she belongs. The latter type of perception—social categorization—is important because it bears cognitive, affective, and behavioral consequences (Macrae & Bodenhausen, 2000). Although the outcomes of social categorization have been studied in detail, the real-time process by which these categorizations are made has remained less clear.

Studies have investigated the contribution of various perceptual cues to recognizing a face’s category memberships, such as gender or age. Individual facial features, for example, convey reliable information about a face’s gender (Brown & Perrett, 1993) and about its age (Berry & McArthur, 1986). With advances in statistical face modeling, recent work has been able to investigate the dissociable contributions of two distributed patterns of cues, shape and pigmentation, to face perception (e.g., Burt & Perrett, 1995; Hill, Bruce, & Akamatsu, 1995; Russell, Biederman, Nederhouser, & Sinha, 2007). Shape refers to all structural variation in the face, including differences in its internal features and three-dimensional contours. Pigmentation, on the other hand, refers to the surface reflectance properties of the face.¹ This includes the texture and coloring of the skin, eyes, and mouth. Using statistical face modeling, all human variation in a face’s gender and age can be defined by these two fundamental dimensions: shape and pigmentation.²

Early work emphasized the role of shape cues, mirroring trends in the object recognition literature (e.g., Biederman, 1987; Biederman & Ju, 1988). However, later studies showed that in more fine-tuned discriminations, pigmentation can play an important role (Price & Humphreys, 1989). When face perception studies examined shape and pigmentation cues together, both types of cues were found to have important, independent roles in recognizing category memberships, such as gender and age (e.g., Burt & Perrett, 1995; Hill et al., 1995). In fact, these studies often showed evidence for a stronger utilization of pigmentation than of shape. Regardless of which cue might be more important, however, both must be integrated to form coherent perceptions. To date, face perception studies have primarily focused on the influence of perceptual cues on categorical judgments and response times, rather than on the temporally extended processing that gives rise to those outcomes. Thus, it remains unclear how shape and pigmentation cues are integrated over hundreds of milliseconds to form ultimate categorizations.

Recently, studies examining the real-time categorization process showed evidence for temporally dynamic integration. Both the masculine and feminine cues on a particular face, for example, are dynamically integrated over time to form ultimate gender categorizations (Freeman, Ambady, Rule, & Johnson, 2008; see also Dale, Kehoe, & Spivey, 2007). Such dynamic integration was found to underlie race categorization as well (Freeman, Pauker, Apfelbaum, & Ambady, 2010). These findings are consistent with a dynamic interactive model of social categorization (Freeman & Ambady, 2011), proposing that the biases of another person’s facial cues (e.g., shape: 90% masculine, 10% feminine; pigmentation: 70% masculine, 30% feminine) converge the moment they become available during visual processing to weigh in on multiple partially active category representations (e.g., 80% male, 20% female). These parallel, partially active category representations then continuously compete over time to settle onto ultimate categorizations (e.g., ~100% male, ~0% female). It is this continuous competition that is theorized to permit the vast array of facial cues to integrate over time and form stable categorical perceptions.
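As a purely illustrative toy, the sketch below reproduces the numerical example above in Python. It assumes (i) that cue biases are combined by an equal-weight average, which turns 90% and 70% masculine cues into 80% male activation, and (ii) that competition can be caricatured as repeatedly raising each category's activation to a power greater than 1 and renormalizing. Both rules, and the names `initial_activation`, `compete`, and `gain`, are hypothetical simplifications, not the equations of the dynamic interactive model.

```python
from typing import Dict, List

def initial_activation(cue_biases: List[float]) -> Dict[str, float]:
    """Equal-weight average of each cue's 'male' bias (an assumption)."""
    male = sum(cue_biases) / len(cue_biases)
    return {"male": male, "female": 1.0 - male}

def compete(activation: Dict[str, float], gain: float = 1.5,
            steps: int = 30) -> List[Dict[str, float]]:
    """Toy competition (hypothetical): sharpen both activations with an
    exponent > 1 and renormalize, so the stronger category progressively
    suppresses the weaker one. Returns the state after every step."""
    male, female = activation["male"], activation["female"]
    history = [{"male": male, "female": female}]
    for _ in range(steps):
        m, f = male ** gain, female ** gain
        male, female = m / (m + f), f / (m + f)
        history.append({"male": male, "female": female})
    return history

# Shape 90% masculine, pigmentation 70% masculine (example values from the text).
start = initial_activation([0.9, 0.7])
states = compete(start)
print(round(start["male"], 2))       # 0.8
print(round(states[-1]["male"], 3))  # 1.0, i.e., ~100% male
```

The point of the toy is only qualitative: a partially active 80/20 state is not the end point, and continued competition drives the system toward a stable categorization.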

Although prior work showed that facial cues are simultaneously integrated over time, the particular temporal profile of shape and pigmentation cues’ integration was not explored. Traditional accounts have posited that shape cues would be integrated into face-category representations earlier than pigmentation cues, because the visual system is thought to progressively shift from extracting coarse features of a stimulus to more fine-grained details (Marr, 1982; Sugase, Yamane, Ueno, & Kawano, 1999). According to this coarse-to-fine view, the brain’s initial processing of visual input entails a first wave of achromatic and low spatial frequency information (i.e., shape), with chromatic and high spatial frequency information (i.e., pigmentation) available only later in processing (Delorme, Richard, & Fabre-Thorpe, 2000). Thus, shape cues should be integrated earlier than pigmentation cues. On the other hand, monkey neurophysiological work shows that neurons in the temporal cortex exhibit a sensitivity to pigmentation cues just as early as shape cues (e.g., Edwards, Xiao, Keysers, Földiák, & Perrett, 2003). In fact, the neurons that exhibit the earliest responses to face stimuli are those that are most sensitive to pigmentation. Such findings suggest that pigmentation processing would emerge simultaneously with shape processing, if not earlier. Behavioral work also suggests a strong, privileged role of pigmentation processing for fine-tuned discriminations involving within-category variation, such as gender and age (Hill et al., 1995; Price & Humphreys, 1989). Thus, whereas some research predicts an earlier role of shape cues, other research predicts no earlier advantage of shape cues or, perhaps, even an earlier role of pigmentation cues.

In the present study, our aim was to distinguish between these two competing possibilities by measuring the time course of shape and pigmentation processing in gender and age categorization. To do so, we exploited the temporal sensitivity of hand movements traveling toward potential responses on a screen. This hand-tracking technique provides a continuous index of perceivers’ categorical hypotheses about a face as categorization unfolds over time (Freeman, Dale, & Farmer, 2011; Spivey, Grosjean, & Knoblich, 2005). Electrophysiological evidence shows that the evolving categorical hypotheses about a face during online categorization are immediately and continuously shared with the motor cortex to guide relevant hand movement over time (Freeman, Ambady, Midgley, & Holcomb, 2011). Moreover, hand movement has been shown to be tightly yoked to ongoing changes in the firing of neuronal population codes within the motor cortex (Paninski, Fellows, Hatsopoulos, & Donoghue, 2004), and these population codes are yoked to the ongoing processing of a perceptual decision (Cisek & Kalaska, 2005). Together, these findings suggest that the dynamics of hand movement provide temporally sensitive information about an evolving categorization of the face.

For example, in one series of studies, participants categorized faces’ gender by moving the computer mouse from the bottom-center of the screen to the top-left or top-right corners, which were marked male and female (Freeman et al., 2008). When categorizing gender-atypical faces (with cues that partially overlapped with the opposite gender), participants’ mouse movements continuously deviated toward the opposite-gender response. For instance, when categorizing a feminized male, participants’ mouse movements traveled closer to the female response than when categorizing a typical male face. This indicated that gender categorization involves partially active representations of both categories that dynamically compete to achieve a stable categorization. This competition permitted the masculine and feminine facial cues to integrate over time and settle onto a correct categorization.

In the present study, we separately manipulated the shape and pigmentation of a face’s gender (or age) so that, in some conditions, one of these cues partially overlapped with the opposite gender (or age), while the other cue was kept constant. If participants are asked to categorize such faces in a mouse-tracking paradigm, the online integration of atypical shape or pigmentation cues should lead mouse trajectories to deviate toward the opposite gender or age category. Then, by applying a temporally fine-grained analysis to these deviations, we could reveal the time course of shape and pigmentation processing.

Method

Participants

Forty-four undergraduates participated in exchange for $10. Participants were randomly assigned to gender or age categorization. One participant did not follow instructions correctly, leaving 21 participants in the age condition and 22 participants in the gender condition.

Stimuli

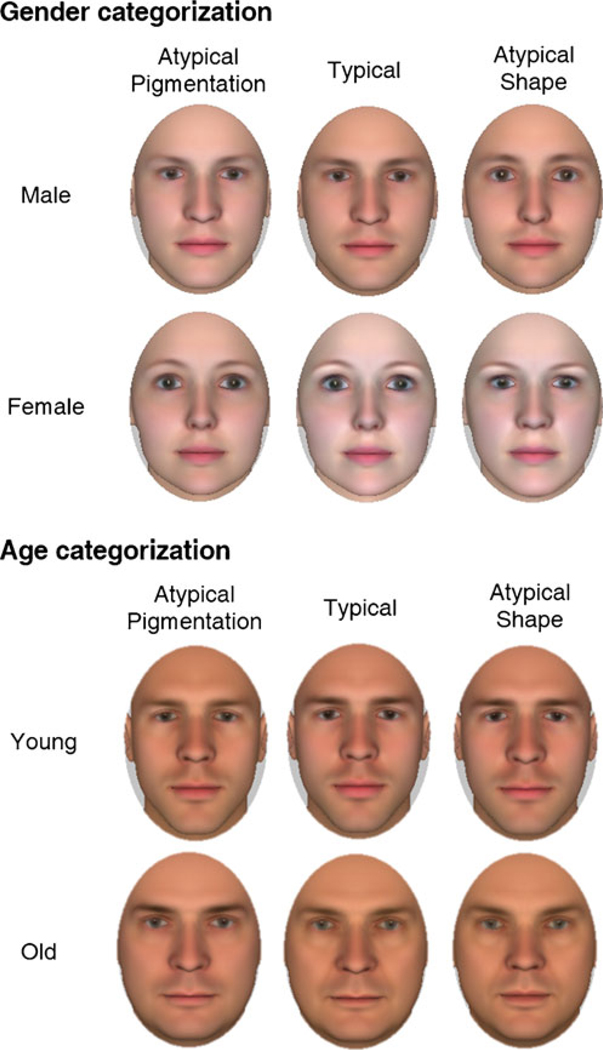

To manipulate shape and pigmentation information, we used FaceGen Modeler. This software allows shape and pigmentation cues to be independently manipulated, using a morphable face model based on anthropometric parameters of the human population (Blanz & Vetter, 1999). All the stimuli were Caucasian.

For the gender categorization task, 20 unique male faces and 20 unique female faces were semi-randomly generated at the anthropometric male and female means, respectively. These faces composed the typical condition. For the shape-atypical condition, the pigmentation of the 40 typical faces was kept constant, but the shape was morphed 25% toward the opposite gender. For the pigmentation-atypical condition, the shape of the 40 typical faces was kept constant, but the pigmentation was morphed 25% toward the opposite gender.³ All gender-task stimuli were generated at an age of 21 years. For the age categorization task, 20 unique young faces and 20 unique old faces (half male, half female) were semi-randomly generated at the anthropometric 21-year-old and 55-year-old means, respectively. These faces composed the typical condition. For the shape-atypical condition, the pigmentation of the 40 typical faces was kept constant, but the shape was morphed 25% toward the opposite age category. For the pigmentation-atypical condition, the shape of the 40 typical faces was kept constant, but the pigmentation was morphed 25% toward the opposite age category (see Footnote 3). Participants were instructed to consider the “young” and “old” labels as relative and as a way to dichotomize age only for the purposes of the task.
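The logic of the three conditions can be sketched with a toy linear face space in which each face is a pair of independent parameter vectors, one for shape and one for pigmentation. The sketch assumes that a "25% morph toward the opposite category" moves one vector a quarter of the way toward the opposite category's mean while copying the other vector unchanged. The `Face` class, `make_atypical`, and the example coordinates are hypothetical and do not reproduce FaceGen Modeler's internal algorithm (which, per Footnote 3, is scaled by population standard deviations).

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Face:
    shape: List[float]         # hypothetical shape coefficients
    pigmentation: List[float]  # hypothetical pigmentation coefficients

def toward(a: List[float], b: List[float], amount: float) -> List[float]:
    """Move vector `a` the fraction `amount` of the way toward vector `b`."""
    return [x + amount * (y - x) for x, y in zip(a, b)]

def make_atypical(face: Face, opposite_mean: Face, cue: str,
                  amount: float = 0.25) -> Face:
    """Morph one cue toward the opposite category; keep the other constant."""
    if cue == "shape":
        return Face(toward(face.shape, opposite_mean.shape, amount),
                    list(face.pigmentation))
    if cue == "pigmentation":
        return Face(list(face.shape),
                    toward(face.pigmentation, opposite_mean.pigmentation, amount))
    raise ValueError(f"unknown cue: {cue!r}")

# Toy two-dimensional category means (invented for illustration).
male_mean = Face(shape=[1.0, 0.5], pigmentation=[0.8, 0.2])
female_mean = Face(shape=[-1.0, -0.5], pigmentation=[-0.8, -0.2])

shape_atypical = make_atypical(male_mean, female_mean, "shape")
pigment_atypical = make_atypical(male_mean, female_mean, "pigmentation")
print(shape_atypical)  # Face(shape=[0.5, 0.25], pigmentation=[0.8, 0.2])
```

Applied to the 40 typical faces of a task, the two manipulations yield 40 shape-atypical and 40 pigmentation-atypical faces, for 120 faces per task.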

Faces were cropped so as to preserve only the internal face (see Fig. 1). The 120 faces in each task were presented three times across the experiment to maximize the signal-to-noise ratio of trajectory data.

Fig. 1.

Sample stimuli

Procedure

To begin each trial, participants clicked a START button at the bottom-center of the screen (resolution = 1,024 × 768 pixels), which was then replaced by a face. Targets were presented in a randomized order. In the gender condition, targets were categorized by clicking either a MALE or a FEMALE response, located in the top-left and top-right corners of the screen. In the age condition, the responses were young and old. Which response appeared on the left or right was counterbalanced across participants. While participants categorized targets, we recorded the x- and y-coordinates of the mouse (sampling rate ≈ 70 Hz). To ensure that trajectories captured categorization as it unfolded, we encouraged participants to initiate movement early. As in previous research (e.g., Freeman et al., 2010), if participants initiated movement later than 400 ms following face presentation, a message appeared after the trial encouraging them to start moving earlier on future trials (the trial was not discarded). If a response was not made within 3,000 ms, a “time-out” message appeared; if a response was incorrect, a red “X” appeared. To record and analyze mouse trajectories, we used the freely available MouseTracker software package: http://mousetracker.jbfreeman.net (Freeman & Ambady, 2010).
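A minimal sketch of the 400-ms initiation check follows. It assumes each trial is stored as a list of (time in ms, x, y) samples and that movement counts as initiated at the first sample that departs from the starting position by more than a pixel tolerance. The tolerance, the sample format, and the function names are hypothetical; MouseTracker's own definition may differ.

```python
from typing import List, Optional, Tuple

Sample = Tuple[float, float, float]  # (time_ms, x_px, y_px)

def initiation_time(samples: List[Sample],
                    tolerance_px: float = 0.0) -> Optional[float]:
    """Time of the first sample that has left the starting position."""
    _, x0, y0 = samples[0]
    for t, x, y in samples[1:]:
        if abs(x - x0) > tolerance_px or abs(y - y0) > tolerance_px:
            return t
    return None  # the cursor never moved

def late_start(samples: List[Sample], limit_ms: float = 400.0) -> bool:
    """True if the 'start moving earlier' message should follow this trial."""
    it = initiation_time(samples)
    return it is None or it > limit_ms

# Hypothetical trial sampled every 14 ms (~70 Hz): the cursor rests until
# the 11th sample, then moves upward 5 px per sample.
trial = [(14.0 * i, 512.0, 740.0 - max(0, i - 10) * 5.0) for i in range(60)]
print(initiation_time(trial))  # 154.0
print(late_start(trial))       # False
```

Consistent with the procedure, a late start only triggers feedback; it does not remove the trial from analysis.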

Results

A time-out occurred on 0.4% of the trials; these trials were discarded. Prior to analysis, each trajectory was plotted and checked for aberrant movements (e.g., looping). Aberrant movements were detected in 3.1% of the trajectories, and these were discarded.

Accuracy, initiation time, and response time

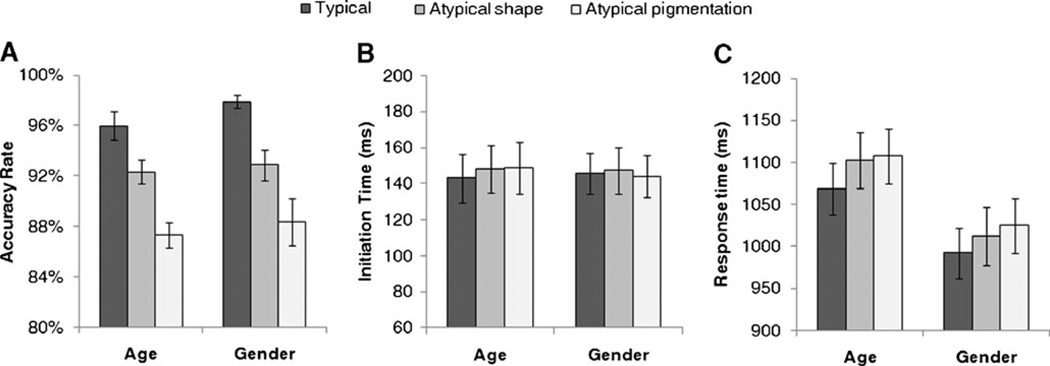

For each participant, mean accuracy rates, initiation times (when the mouse initially moved), and response times (when the response was clicked) were computed by averaging across each condition’s 120 trials (Fig. 2). These were then submitted to separate 3 (target-type: typical, shape-atypical, pigmentation-atypical) × 2 (task: gender, age) mixed-model ANOVAs.

Fig. 2.

a Accuracy rates, b initiation times, and c response times plotted as a function of categorization task and cue typicality

The ANOVA for accuracy rates revealed a significant main effect of target-type, F(2, 82) = 53.75, p < .0001 (Fig. 2a). For both the gender and age tasks, typical targets were categorized more accurately than shape-atypical targets and pigmentation-atypical targets, and shape-atypical targets were categorized more accurately than pigmentation-atypical targets (all p’s < .05). The main effect of task, F(1, 41) = 0.79, p = .38, and the interaction, F(2, 82) = 0.31, p = .73, were not significant. For subsequent analyses, incorrect trials were discarded.

Mouse movement was initiated early, with initiation times at an average of 146 ms (SE = 13 ms) following face presentation. No effects were significant in the ANOVA for initiation times, F’s < 1.05, p’s > .35. This indicates that mouse movements were initiated early after stimulus onset and that their timing did not reliably differ across conditions (Fig. 2b).

The ANOVA for response times revealed a significant main effect of target-type, F(2, 82) = 19.61, p < .0001 (Fig. 2c). For both the gender and age tasks, typical targets were categorized more quickly than shape-atypical targets and pigmentation-atypical targets (p’s < .05). However, response times for shape-atypical and pigmentation-atypical targets did not differ (p’s > .16). The main effect of task was marginally significant, with response times for gender categorization shorter than those for age categorization, F(1, 41) = 3.34, p = .08. The interaction was not significant, F(2, 82) = 0.75, p = .48. Due to the positive skew common to response time distributions, we log-transformed the data and reran the analysis. This had a negligible effect on the results.

Trajectory time-course

Trajectories’ coordinates were converted into a standard coordinate space: top-left = [−1, 1.5] and bottom-right = [1, 0]. For comparison, all trajectories were remapped rightward (leftward trajectories were mirrored by inverting their x-coordinates). In this configuration, higher x-coordinates indicate more proximity to the correct category, whereas lower x-coordinates indicate more proximity to the incorrect category. When participants must process atypical shape or pigmentation cues, trajectories should deviate more toward the incorrect category during categorization (Freeman et al., 2008). The span of milliseconds in which trajectories for shape-atypical or pigmentation-atypical targets have lower x-coordinates than do trajectories for typical targets would thus indicate the epoch when shape or pigmentation cues are being processed.
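The coordinate conversion and rightward remapping can be sketched as two small functions. The sketch assumes raw samples are screen pixels with the origin at the top-left and y growing downward, and that the standard space's corners correspond to the corners of the 1,024 × 768 display; the function names are hypothetical and MouseTracker may anchor the space differently (e.g., to the start button and response boxes).

```python
from typing import List, Tuple

Point = Tuple[float, float]

def to_standard_space(points: List[Point], width: float = 1024.0,
                      height: float = 768.0) -> List[Point]:
    """Linearly map pixel samples so the top-left corner becomes (-1, 1.5)
    and the bottom-right corner becomes (1, 0). Screen y grows downward,
    so the vertical axis is flipped."""
    return [(2.0 * x / width - 1.0, 1.5 * (height - y) / height)
            for x, y in points]

def remap_rightward(points: List[Point], response_side: str) -> List[Point]:
    """Mirror trajectories that ended on the left response (x -> -x)."""
    if response_side == "left":
        return [(-x, y) for x, y in points]
    return list(points)

# A hypothetical leftward trajectory from bottom-center to the top-left corner.
raw = [(512.0, 768.0), (256.0, 384.0), (0.0, 0.0)]
std = to_standard_space(raw)
print(std)  # [(0.0, 0.0), (-0.5, 0.75), (-1.0, 1.5)]
print(remap_rightward(std, "left"))
```

After remapping, every correct trajectory ends at x = 1, so a lower x at a given moment can be read directly as attraction toward the opposite category.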

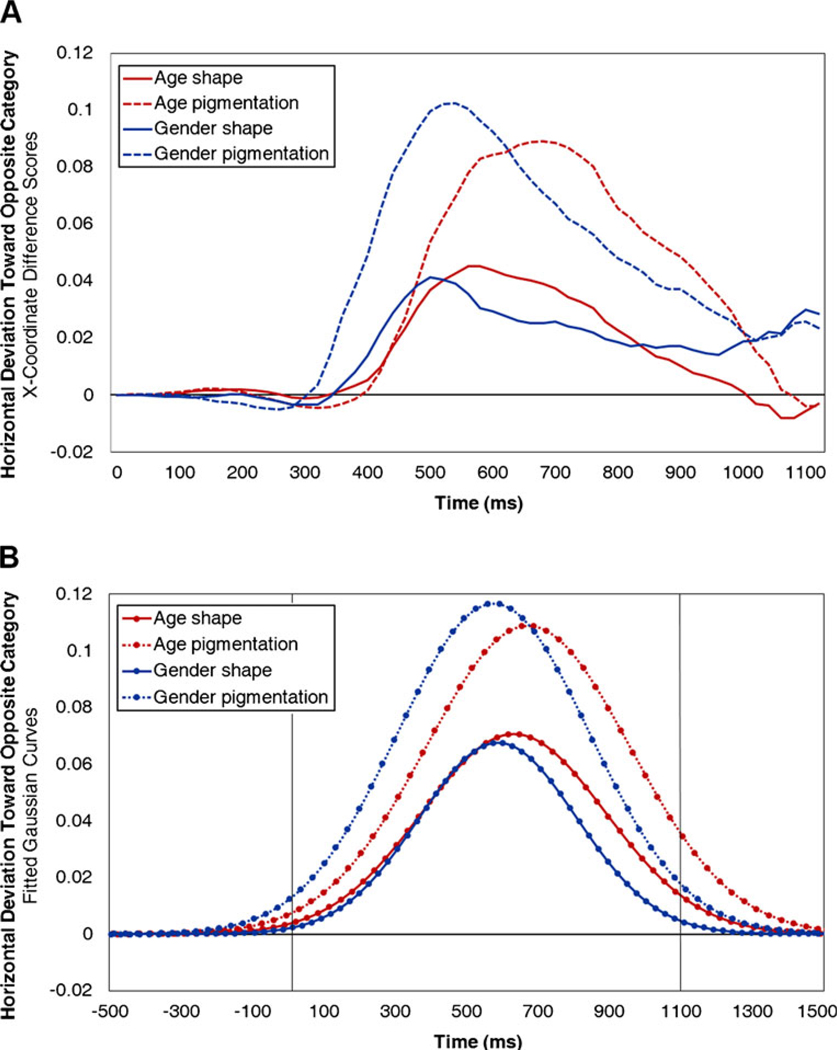

Trajectories’ x-coordinates were averaged into equally spaced 20-ms time bins, beginning with 1 ms (face onset) and ending 1,100 ms thereafter. We chose 1,100 ms because it was approximately the largest of the mean response times (see Fig. 2c). If a trajectory terminated prior to the last time bin, it was excluded from averaging in the remaining bins for which there was no corresponding data. To index the effects of shape and pigmentation cues in the time-course of categorization, we computed x-coordinate difference scores: [typical − shape-atypical] and [typical − pigmentation-atypical] (Fig. 3a). Scores greater than zero thus indicate a deviation toward the opposite gender or age category due to processing of atypical shape or pigmentation cues. Several features of Fig. 3a are noteworthy. The pigmentation effects for both gender and age appear to be substantially larger than the shape effects. Moreover, in gender categorization, the pigmentation effect appears to begin earlier than the shape effect. However, in age categorization, the two effects appear to begin at the same time.
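The binning and difference-score steps can be sketched as follows, assuming each trial is a list of (time in ms, x) pairs already in the remapped standard space. For simplicity the bins here start at 0 ms rather than 1 ms, and `bin_trajectory`, `condition_mean`, and `difference_scores` are hypothetical names. Bins after a trajectory ends are left empty (`None`) so that finished trials drop out of the average, as described above.

```python
from statistics import mean
from typing import List, Optional, Sequence, Tuple

BIN_MS = 20
END_MS = 1100
N_BINS = END_MS // BIN_MS  # 55 bins of 20 ms

def bin_trajectory(samples: Sequence[Tuple[float, float]]) -> List[Optional[float]]:
    """Average x within each 20-ms bin; bins with no samples stay None."""
    sums, counts = [0.0] * N_BINS, [0] * N_BINS
    for t, x in samples:
        k = int(t // BIN_MS)
        if 0 <= k < N_BINS:
            sums[k] += x
            counts[k] += 1
    return [s / c if c else None for s, c in zip(sums, counts)]

def condition_mean(trials) -> List[Optional[float]]:
    """Per-bin mean across trials, skipping trials with no data in a bin."""
    binned = [bin_trajectory(tr) for tr in trials]
    out = []
    for k in range(N_BINS):
        vals = [b[k] for b in binned if b[k] is not None]
        out.append(mean(vals) if vals else None)
    return out

def difference_scores(typical, atypical) -> List[Optional[float]]:
    """[typical - atypical]; positive = deviation toward the opposite category."""
    return [t - a if t is not None and a is not None else None
            for t, a in zip(typical, atypical)]

# Synthetic example: the atypical trajectory lags 0.05 units behind.
times = range(0, 1000, 14)  # ~70 Hz; both trajectories end before 1,000 ms
typical_trials = [[(t, t / 1000) for t in times]]
atypical_trials = [[(t, t / 1000 - 0.05) for t in times]]
diffs = difference_scores(condition_mean(typical_trials),
                          condition_mean(atypical_trials))
```

In the synthetic example every bin up to 1,000 ms holds a difference of about 0.05, and the last five bins are `None` because neither trajectory has data there.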

Fig. 3.

Time-course plots. Horizontal mouse trajectory deviation toward the opposite gender or age category (x-coordinate difference scores [typical − atypical]) is plotted as a function of time. a Actual time-courses. b Time-courses fitted to Gaussian curves

Because the horizontal-deviation time-courses had a roughly Gaussian shape, we fit them to Gaussian functions for statistical comparison. For each of the four conditions, we fit every participant’s x-coordinate difference scores (in 20-ms bins) to a single Gaussian function of time (0–1,100 ms), using an iterative nonlinear optimization algorithm (Nelder–Mead). Three parameters of the fitted Gaussian curves, namely time-position (M), duration (SD), and strength (maximum height), were then averaged across participants to generate mean Gaussian curves (Fig. 3b). The Ms and SEs for all parameters and conditions appear in Table 1. Each parameter was submitted to a 2 (cue: shape, pigmentation) × 2 (task: gender, age) mixed-model ANOVA.
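A sketch of the per-participant fit follows. It uses SciPy's Nelder–Mead implementation (`scipy.optimize.minimize` with `method="Nelder-Mead"`) to minimize the sum of squared errors between the difference scores and a three-parameter Gaussian. The least-squares objective, the starting values, and the tolerances are assumptions, because the paper does not report them. Strength is taken as the Gaussian's amplitude, which equals its maximum height.

```python
import numpy as np
from scipy.optimize import minimize

def gaussian(t, amp, mu, sd):
    """Gaussian curve with peak height `amp` at time `mu` and width `sd`."""
    return amp * np.exp(-((t - mu) ** 2) / (2.0 * sd ** 2))

def fit_gaussian(times, scores):
    """Least-squares fit of a single Gaussian by Nelder-Mead.

    Returns (time_position, duration, strength) = (mu, |sd|, amp)."""
    times = np.asarray(times, dtype=float)
    scores = np.asarray(scores, dtype=float)
    # Assumed starting values: observed peak height and location, 200-ms width.
    x0 = [scores.max(), times[np.argmax(scores)], 200.0]

    def sse(params):
        amp, mu, sd = params
        return float(np.sum((scores - gaussian(times, amp, mu, sd)) ** 2))

    result = minimize(sse, x0, method="Nelder-Mead",
                      options={"xatol": 1e-4, "fatol": 1e-12, "maxiter": 5000})
    amp, mu, sd = result.x
    return mu, abs(sd), amp

# Noise-free synthetic curve with parameters resembling the
# gender-pigmentation column of Table 1.
bin_centers = np.arange(10, 1100, 20)  # centers of the 55 20-ms bins
scores = gaussian(bin_centers, 0.12, 575.0, 270.0)
mu, sd, amp = fit_gaussian(bin_centers, scores)
print(round(mu), round(sd), round(amp, 2))  # should be close to 575 270 0.12
```

With real, noisy difference scores the fit will not be exact, and the starting values may need to be chosen per participant.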

Table 1.

Means (and SEs) of the fitted Gaussian curve parameters for the horizontal-deviation time-course effects

| Parameter | Age shape | Age pigmentation | Gender shape | Gender pigmentation |
|---|---|---|---|---|
| Time-position (M) | 632 ms (35 ms) | 671 ms (21 ms) | 588 ms (34 ms) | 575 ms (20 ms) |
| Duration (SD) | 258 ms (31 ms) | 286 ms (23 ms) | 221 ms (31 ms) | 270 ms (22 ms) |
| Strength (max height) | 0.07 (0.01) | 0.11 (0.02) | 0.06 (0.01) | 0.12 (0.01) |

In the ANOVA for time position, the main effect of cue was not significant, F(1, 41) = 0.27, p = .61, ηp² = .01. The main effect of task, however, was significant, F(1, 41) = 5.02, p < .05, ηp² = .11, with gender effects occurring earlier than age effects. The interaction was not significant, F(1, 41) = 1.06, p = .31, ηp² = .03. The ANOVA for duration revealed a marginally significant effect of cue, F(1, 41) = 3.44, p = .07, ηp² = .08, with pigmentation effects trending toward a longer duration than shape effects. Neither the main effect of task nor the interaction was significant, F’s < 0.70, p’s > .40, ηp²’s < .02. The ANOVA for strength revealed a significant main effect of cue, F(1, 41) = 30.20, p < .0001, ηp² = .42, with pigmentation cues bearing substantially stronger effects than shape cues. Neither the main effect of task nor the interaction was significant, F’s < 1.61, p’s > .20, ηp²’s < .04.
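For an F test with df_effect and df_error degrees of freedom, partial eta squared can be recovered from the F statistic with the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The short check below applies it to three of the F values reported above; the dictionary layout and function name are only for illustration.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)

# (F, df_effect, df_error, reported partial eta squared) from the ANOVAs above
reported = {
    "time position: task": (5.02, 1, 41, 0.11),
    "duration: cue": (3.44, 1, 41, 0.08),
    "strength: cue": (30.20, 1, 41, 0.42),
}
for label, (f, d1, d2, eta) in reported.items():
    print(f"{label}: computed {partial_eta_squared(f, d1, d2):.2f}, "
          f"reported {eta:.2f}")
```

Each computed value rounds to the reported one, which is a quick consistency check on the test statistics.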

To provide estimates of the onset of shape and pigmentation effects, we determined the time bin where the difference in x-coordinates between typical and shape-atypical or pigmentation-atypical trials exceeded a value of 0.01 and maintained this minimum difference for at least 40 ms. The value of 0.01 was chosen because, as can be seen in Fig. 3, this amount of difference corresponds to the early portions of the shape and pigmentation effects. Other similar threshold values were also tested, and the results did not substantially change. These onset times were submitted to a cue × task mixed-model ANOVA. The main effects of cue, F(1, 41) = 0.17, p = .68, ηp² < .01, and task, F(1, 41) = 2.54, p = .12, ηp² = .06, were not significant. There was, however, a significant cue × task interaction, F(1, 41) = 4.18, p < .05, ηp² = .09. In gender categorization, the pigmentation effect (M = 332 ms, SE = 20 ms) emerged significantly earlier than the shape effect (M = 379 ms, SE = 26 ms), t(21) = 2.56, p < .05, r = .49. In age categorization, however, the pigmentation effect (M = 432 ms, SE = 40 ms) emerged simultaneously with the shape effect (M = 400 ms, SE = 36 ms), t(20) = 0.37, p = .92, r = .08.
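The onset criterion can be sketched as a scan over one participant's binned difference scores. The sketch assumes 20-ms bins, reports onset as the start time of the first qualifying bin, and interprets "maintained this minimum difference for at least 40 ms" as at least two consecutive supra-threshold bins. These are interpretive assumptions, and `onset_time` is a hypothetical helper.

```python
from typing import Optional, Sequence

def onset_time(diffs: Sequence[Optional[float]], threshold: float = 0.01,
               min_ms: int = 40, bin_ms: int = 20) -> Optional[int]:
    """Start time (ms) of the first bin whose difference score exceeds
    `threshold` and stays above it for at least `min_ms`; None if never."""
    need = max(1, -(-min_ms // bin_ms))  # ceil(min_ms / bin_ms) bins in a row
    run = 0
    for k, d in enumerate(diffs):
        if d is not None and d > threshold:
            run += 1
            if run >= need:
                return (k - need + 1) * bin_ms
        else:
            run = 0  # the run was broken; start counting again
    return None

# Synthetic scores: a one-bin blip at bin 15 (not sustained), then a
# sustained rise from bin 17 onward.
diffs = [0.0] * 15 + [0.02, 0.0, 0.012, 0.015, 0.03] + [0.0] * 5
print(onset_time(diffs))  # 340
```

Re-running the scan with nearby thresholds (for example 0.008 or 0.012) is one way to check, as described above, that onset estimates do not hinge on the exact cutoff.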

Discussion

During categorization, the processing of atypical shape or pigmentation cues led participants’ hands to deviate more toward the opposite gender or age category, thereby providing a time-course of shape and pigmentation processing in gender and age categorization. Consistent with prior work (Burt & Perrett, 1995; Hill et al., 1995), the effect of pigmentation cues on categorization was substantially stronger than the effect of shape cues, for both gender and age categorization. More important, pigmentation processing had a temporally privileged role in gender categorization, with pigmentation cues exerting effects approximately 50 ms earlier than shape cues (and 100 ms earlier than pigmentation cues in age categorization). In age categorization, however, pigmentation cues began exerting effects simultaneously with shape cues.

The particular time courses of shape and pigmentation processing in face perception have remained unclear. Traditionally, the view has been that face perception is dominated by the utilization of shape cues (e.g., Biederman, 1987; Biederman & Ju, 1988) and that their utilization precedes any utilization of pigmentation cues due to the coarse-to-fine nature of processing in the visual system (Marr, 1982; Sugase et al., 1999). Specifically, early visual processing has been thought to involve achromatic and low spatial frequency information (shape), with chromatic and high spatial frequency information (pigmentation) available only later (Delorme et al., 2000). However, more recent work has found evidence for a strong role of pigmentation processing in fine-tuned facial discriminations involving within-category variation, such as gender and age (Hill et al., 1995; Price & Humphreys, 1989). Moreover, neurophysiological work shows that temporal cortex neurons begin showing sensitivity to pigmentation cues just as early as shape cues, if not earlier (e.g., Edwards et al., 2003).

The present hand-trajectory data provide real-time behavioral evidence in support of the latter account. They suggest that pigmentation indeed is important in fine-tuned facial discriminations and, further, that its sensitivity emerges either simultaneously with shape sensitivity (in age categorization) or earlier than shape sensitivity (in gender categorization). These results, therefore, do not support the claim that pigmentation processing should occur later than shape processing (Delorme et al., 2000) and, instead, are consistent with more recent neurophysiological work (e.g., Edwards et al., 2003). For instance, a recent study in humans showed that the earlier N170 electrophysiological component was sensitive to race pigmentation only, whereas the later N250 component was sensitive to both race pigmentation and shape (Balas & Nelson, 2010). Consistent with such results, the present work provides behavioral evidence that pigmentation may have a simultaneous or earlier onset of processing than shape in driving the process of social categorization.

There are several implications of this work. First, it bolsters growing evidence that pigmentation plays an important role in the perception of faces (e.g., Russell, Sinha, Biederman, & Nederhouser, 2006) and in social categorization in particular (Hill et al., 1995). Moreover, according to a dynamic interactive model (Freeman & Ambady, 2011), the finding that pigmentation and shape processing weigh in on face-category representations with different temporal dynamics would bear numerous implications for other parallel processes. The earlier processing of pigmentation in gender categorization, for example, would result in a primacy of pigmentation in triggering stereotypes tied to the male and female categories (e.g., male, aggressive; female, docile). It would also have a fundamental role in shaping how other category memberships (e.g., race, age, emotion) are simultaneously perceived, and even in shaping high-level cognitive states related to the categorization process (e.g., goals, top-down attention). Future work could examine such implications.

It is also important to note limitations of the present research. First, although shape and pigmentation were manipulated independently using a statistical face model, they may be minimally interdependent in terms of actual perception (see Footnote 2). Thus, the reported time-courses of shape and pigmentation could be slightly confounded with one another, and future work could attempt to disentangle these better. Second, shape and pigmentation may be processed differently when 2-D faces like those used in the present study are perceived, relative to perceiving 3-D faces in the real world. It will be helpful to examine both 2-D and 3-D faces in future studies.

In sum, we exploited perceivers’ hand movements to gain insight into the time-course of shape and pigmentation processing in social categorization. Pigmentation had a privileged, early onset in gender categorization and a simultaneous onset with shape in age categorization. Pigmentation also had a more dominant influence overall. These results inform recent theoretical debates about the time-course of shape and pigmentation processing in face perception. Although shape-atypical and pigmentation-atypical faces did not differ in final response times, hand movements revealed these cues’ dissociable dynamics across the time-course of social categorization.

Acknowledgments

This work was supported by NIH Fellowship F31-MH092000 to J.B.F. and NSF Research Grant BCS-0435547 to N.A.

Footnotes

1. We refer to all of a face’s surface reflectance properties as pigmentation. Sometimes researchers also refer to these properties as color or texture.

2. It should be noted that whereas shape and pigmentation cues may be made independent through a statistical face model, these cues can sometimes minimally overlap in perception. This is because the shading on the surface of an object or face is a product of its three-dimensional shape, and thus pigmentation cues may contain some minimal information related to three-dimensional shape. This should pose minimal problems in the present study.

3. Note that because the morphing algorithm is based on standard deviations of human population parameters, the variation captured in a 25% morph along the shape dimension is equivalent to the variation captured in a 25% morph along the pigmentation dimension with respect to how these cues vary in the human population. Equal statistical variation along these two dimensions, however, may not be equal perceptually.

Contributor Information

Jonathan B. Freeman, Email: jon.freeman@tufts.edu, Department of Psychology, Tufts University, 490 Boston Avenue, Medford, MA 02155, USA.

Nalini Ambady, Department of Psychology, Tufts University, 490 Boston Avenue, Medford, MA 02155, USA.

References

- Balas B, Nelson CA. The role of face shape and pigmentation in other-race face perception: An electrophysiological study. Neuropsychologia. 2010;48:498–506. doi: 10.1016/j.neuropsychologia.2009.10.007.

- Berry DS, McArthur LZ. Perceiving character in faces: The impact of age-related craniofacial changes on social perception. Psychological Bulletin. 1986;100:3–18.

- Biederman I. Recognition-by-components: A theory of human image understanding. Psychological Review. 1987;94:115–147. doi: 10.1037/0033-295X.94.2.115.

- Biederman I, Ju G. Surface vs. edge-based determinants of visual recognition. Cognitive Psychology. 1988;20:38–64. doi: 10.1016/0010-0285(88)90024-2.

- Blanz V, Vetter T. A morphable model for the synthesis of 3D faces. Paper presented at SIGGRAPH '99; Los Angeles; August 1999.

- Brown E, Perrett DI. What gives a face its gender? Perception. 1993;22:829–840. doi: 10.1068/p220829.

- Burt DM, Perrett DI. Perception of age in adult Caucasian male faces: Computer graphic manipulation of shape and colour information. Proceedings of the Royal Society B. 1995;259:137–143. doi: 10.1098/rspb.1995.0021.

- Cisek P, Kalaska JF. Neural correlates of reaching decisions in dorsal premotor cortex: Specification of multiple direction choices and final selection of action. Neuron. 2005;45:801–814. doi: 10.1016/j.neuron.2005.01.027.

- Dale R, Kehoe C, Spivey MJ. Graded motor responses in the time course of categorizing atypical exemplars. Memory & Cognition. 2007;35:15–28. doi: 10.3758/bf03195938.

- Delorme A, Richard G, Fabre-Thorpe M. Ultra-rapid categorisation of natural scenes does not rely on color cues: A study in monkeys and humans. Vision Research. 2000;40:2187–2200. doi: 10.1016/s0042-6989(00)00083-3.

- Edwards R, Xiao D, Keysers C, Földiák P, Perrett D. Color sensitivity of cells responsive to complex stimuli in the temporal cortex. Journal of Neurophysiology. 2003;90:1245–1256. doi: 10.1152/jn.00524.2002.

- Freeman JB, Ambady N. MouseTracker: Software for studying real-time mental processing using a computer mouse-tracking method. Behavior Research Methods. 2010;42:226–241. doi: 10.3758/BRM.42.1.226.

- Freeman JB, Ambady N. A dynamic interactive theory of person construal. Psychological Review. 2011;118:247–279. doi: 10.1037/a0022327.

- Freeman JB, Ambady N, Rule NO, Johnson KL. Will a category cue attract you? Motor output reveals dynamic competition across person construal. Journal of Experimental Psychology: General. 2008;137:673–690. doi: 10.1037/a0013875.

- Freeman JB, Pauker K, Apfelbaum EP, Ambady N. Continuous dynamics in the real-time perception of race. Journal of Experimental Social Psychology. 2010;46:179–185.

- Freeman JB, Ambady N, Midgley KJ, Holcomb PJ. The real-time link between person perception and action: Brain potential evidence for dynamic continuity. Social Neuroscience. 2011;6:139–155. doi: 10.1080/17470919.2010.490674.

- Freeman JB, Dale R, Farmer TA. Hand in motion reveals mind in motion. Frontiers in Psychology. 2011;2:59. doi: 10.3389/fpsyg.2011.00059.

- Hill H, Bruce V, Akamatsu S. Perceiving the sex and race of faces: The role of shape and colour. Proceedings of the Royal Society B. 1995;261:367–373. doi: 10.1098/rspb.1995.0161.

- Macrae CN, Bodenhausen GV. Social cognition: Thinking categorically about others. Annual Review of Psychology. 2000;51:93–120. doi: 10.1146/annurev.psych.51.1.93.

- Marr D. Vision. San Francisco: Freeman; 1982.

- Paninski L, Fellows MR, Hatsopoulos NG, Donoghue JP. Spatiotemporal tuning of motor cortical neurons for hand position and velocity. Journal of Neurophysiology. 2004;91:515–532. doi: 10.1152/jn.00587.2002.

- Price CJ, Humphreys GW. The effects of surface detail on object categorization and naming. Quarterly Journal of Experimental Psychology. 1989;41A:797–828. doi: 10.1080/14640748908402394.

- Russell R, Sinha P, Biederman I, Nederhouser M. Is pigmentation important for face recognition? Evidence from contrast negation. Perception. 2006;35:749–759. doi: 10.1068/p5490.

- Russell R, Biederman I, Nederhouser M, Sinha P. The utility of surface reflectance for the recognition of upright and inverted faces. Vision Research. 2007;47:157–165. doi: 10.1016/j.visres.2006.11.002.

- Spivey MJ, Grosjean M, Knoblich G. Continuous attraction toward phonological competitors. Proceedings of the National Academy of Sciences. 2005;102:10393–10398. doi: 10.1073/pnas.0503903102.

- Sugase Y, Yamane S, Ueno S, Kawano K. Global and fine information coded by single neurons in the temporal visual cortex. Nature. 1999;400:869–873. doi: 10.1038/23703.