Abstract

Prior studies have demonstrated that the posterior superior temporal sulcus (pSTS) is involved in analyzing the intentions underlying actions and is sensitive to the context within which actions occur. However, it is debated whether the pSTS is actually sensitive to the goals underlying actions, or whether previous findings instead reflect the allocation of visual attention towards unexpected events. In addition, little is known about whether the pSTS is specialized for reasoning about the actions of social agents or is sensitive to the actions of both animate and inanimate entities. Here, using functional magnetic resonance imaging, we investigated activation in response to passive viewing of successful and unsuccessful animate and inanimate goal-directed actions. Activation in the right pSTS was stronger in response to failed actions compared to successful actions, suggesting that the pSTS plays a role in encoding the goals underlying actions. Activation in the pSTS did not differentiate between animate and inanimate actions, suggesting that the pSTS is sensitive to the goal-directed actions of both animate and inanimate entities.

Keywords: biological motion, goal-directed action, animacy, social perception, superior temporal sulcus

Understanding that goals and intentions motivate behavior allows us to infer intentions from actions and predict the behavior of others. The ability of human infants to infer intentions from observed physical actions (Meltzoff, 1995; Woodward, 1998; Csibra et al., 1999; Csibra, 2008; Southgate et al., 2008; Hamlin et al., 2009; Sommerville and Crane, 2009) and the failure to do so in autism (Pelphrey et al., 2005a; Klin and Jones, 2006) suggest that the tendency to use intentions to predict and interpret behavior is an early-emerging ability that is fundamental to correctly interpreting the social world.

Cognitive neuroscientists have begun to elucidate the neural structures for representing human action in adults. The right posterior superior temporal sulcus (pSTS) has been identified as playing an important role in detecting, predicting and reasoning about social actions and the intentions underlying actions (see review by Allison et al., 2000). In particular, the pSTS responds strongly to a range of human motion, including point-light displays of biological motion (Bonda et al., 1996; Grossman et al., 2000; Grossman and Blake, 2002; Beauchamp et al., 2003; Saygin et al., 2004), body motion (Pelphrey et al., 2003, 2004b) and motion of the hand (Pelphrey et al., 2004a, 2005b) and face (Puce et al., 1998; Pelphrey et al., 2005b). In addition to its role in the perception of biological motion, the pSTS has been implicated in reasoning about the intentions underlying actions. For instance, this region exhibits increased activation when participants observe a human actor perform a reaching-to-grasp movement in a manner that is incongruent with an implied goal (i.e. reaching away from an object to empty space), compared to an action that is congruent with an implied goal (i.e. reaching toward an object) (Pelphrey et al., 2004a). A subsequent study confirmed that activity in the pSTS varies as a function of situational constraints: the pSTS responds more strongly to actions perceived as implausible than to actions perceived as plausible, given the context within which the action occurs (Brass et al., 2007). These studies were interpreted to suggest that the pSTS is not only sensitive to biological motion, but is also involved in reasoning about the appropriateness of biological motion given an actor's goals and the structure of the surrounding environment. However, an alternative interpretation is that the pSTS is involved in the allocation of visual attention towards unexpected events (Corbetta and Shulman, 2002).

To date, conclusions about the sensitivity of the pSTS to goal-directed actions have been drawn by comparing clearly goal-directed actions (e.g. reaching towards an object) with implausible, nonsensical actions (e.g. reaching away from the object towards empty space). Because these implausible actions are very difficult to interpret, increased pSTS activity in response to nonsensical motions may reflect the allocation of visual attention towards unexpected events, rather than the encoding of the goals underlying actions. In other words, events such as reaching towards empty space (Pelphrey et al., 2004a) are nonsensical and so may be perceived as surprising without any appeal to goals. Such an interpretation would be consistent with data from Corbetta and Shulman (2002) suggesting that the pSTS plays a role in shifting and reorienting spatial attention towards unexpected events.

One way to examine whether the pSTS is sensitive to the goals underlying actions or is involved in the allocation of attention is to compare pSTS activity in response to two forms of clearly goal-directed action, such as failed and successful actions. Both failed and successful actions (such as failing to reach an object vs successfully reaching an object) are plausible, in that in both cases the actor is interacting with objects in the environment in a meaningful way. The failed event can only be interpreted as ‘surprising’ if the observer has encoded the goal underlying the failed action. Thus, an increase in pSTS activity in response to failed vs successful actions would support the interpretation that the pSTS is sensitive to the goals underlying actions.

A second remaining question is whether the pSTS is sensitive to the actions of both animate (i.e. living entities with agency) and inanimate (i.e. non-living entities without agency) forms of motion. The lack of inanimate motion controls in previous studies has left open the possibility that the pSTS may also be sensitive to the relationship between inanimate motion and the structure of the surrounding environment. In other words, it is unknown whether the pSTS is specialized for reasoning about the actions of social agents or whether its sensitivity extends more broadly to the actions of both animate and inanimate entities.

There is evidence that the pSTS is engaged by inanimate forms whose motion trajectory mirrors that of an animate agent. Pelphrey et al. (2003) examined the pSTS response to motion that can be conceptualized as varying along a continuum from animate to inanimate. The stimuli, ranging from most to least animate, consisted of an animation of a walking human figure; a walking robot composed of a torus, cylinders and a sphere; a moving grandfather clock with a swinging pendulum; and the disjointed motion of the same elements that composed the robot, spatially rearranged. Interestingly, pSTS activation was stronger in response to both the walking human figure and the walking robot than to the grandfather clock and the disjointed figure, suggesting that the pSTS is sensitive to the overall biological form of the observed motion rather than to superficial characteristics of the stimulus that might convey its animate nature (e.g. a human body). However, contrasting pSTS activation in response to the animated human and the robot does not provide the strongest test of whether the pSTS is involved in the perception of both animate and inanimate actions. On a continuum ranging from clearly animate to clearly inanimate motion, the walker and the robot arguably lie fairly close together. Studies have shown that an animated human avatar can elicit less activation in brain regions sensitive to animacy than a more veridical human form, such as a video recording of actual human motion (Perani et al., 2001; Mar et al., 2007; Moser et al., 2007). In addition, the movement of the robot in Pelphrey et al. (2003) was clearly self-propelled, which is a well-known cue to animacy (Premack, 1990). It is unknown whether more veridical animate and inanimate forms of motion would elicit differential pSTS activation.

In contrast to the evidence presented above, data from the developmental literature provide support for the existence of specialized neural systems for reasoning about the actions of animate, but not inanimate, agents. For instance, a near-infrared spectroscopy study of 5-month-old infants reported an increase in oxyhemoglobin at posterior temporal sensors, located roughly over the pSTS, in response to social but not nonsocial stimuli (Lloyd-Fox et al., 2009). In addition, while infants interpret human action in terms of underlying goals, they do not readily extend this interpretation to similar forms of inanimate motion. For example, 18-month-old infants imitate the goal-directed actions of a human actor, but not those of an inanimate device (Meltzoff, 1995). Infants under 12 months of age attribute goal-directedness to a reaching-to-grasp motion made by a human hand, but not to one made by an inanimate claw (Woodward, 1998) or by a human hand whose surface properties are obscured by a metallic glove (Guajardo and Woodward, 2004). Finally, a recent study demonstrated that while 8-month-old infants interpret the failed actions of humans in terms of their underlying goals, they do not extend this interpretation to the motion of inanimate objects (Hamlin et al., 2009). Together, these studies suggest that infants are able to represent the goals underlying actions and tend to apply principles of psychological reasoning to the behavior of animate, but not inanimate, entities.

The pSTS may support such a mechanism for detecting and reasoning about the intentionality of social agents. But what features constitute an animate social agent? Pelphrey et al. (2003) showed that an obviously inanimate form, such as an arrangement of spheres and cylinders that ‘walks’ in a human-like way, strongly activates the pSTS, suggesting that exhibiting biological motion may be sufficient for the attribution of social intentions. However, the developmental studies of Woodward (1998) and Hamlin et al. (2009) showed that infants do not attribute goal-directedness to inanimate actions carried out by a device whose characteristics and motion paths are similar to those of the animate agent, as in the case of the human hand and the inanimate claw. Because Pelphrey et al. (2003) did not examine goal-directed actions, it is unclear whether observers would have attributed goal-directedness to the actions of the robot.

The present study aimed to investigate (i) whether the pSTS responds more strongly to failed than to successful actions and (ii) whether the pSTS responds more strongly to animate than to inanimate goal-directed actions. Adult subjects underwent functional magnetic resonance imaging (fMRI) while viewing successful animate, failed animate, successful inanimate and failed inanimate goal-directed actions. Given the role of the pSTS in reasoning about the relationship between goal-directed actions and the structure of the surrounding environment (Pelphrey et al., 2004a), we predicted that the pSTS would show increased activity in response to failed vs successful actions. However, if the pSTS is specialized for reasoning about the goal-directed actions of animate, but not inanimate, agents, then we would expect a differential response to failed vs successful animate actions and no differential response to failed vs successful inanimate actions.

METHODS

Subjects and stimuli

Fifteen subjects (8 female, mean age 22 years, all right-handed), with normal vision and no history of neurological or psychiatric illness, participated in the study. All subjects gave written, informed consent and the protocol was approved by the Yale Human Investigations Committee and carried out in accordance with the Declaration of Helsinki (1975/1983).

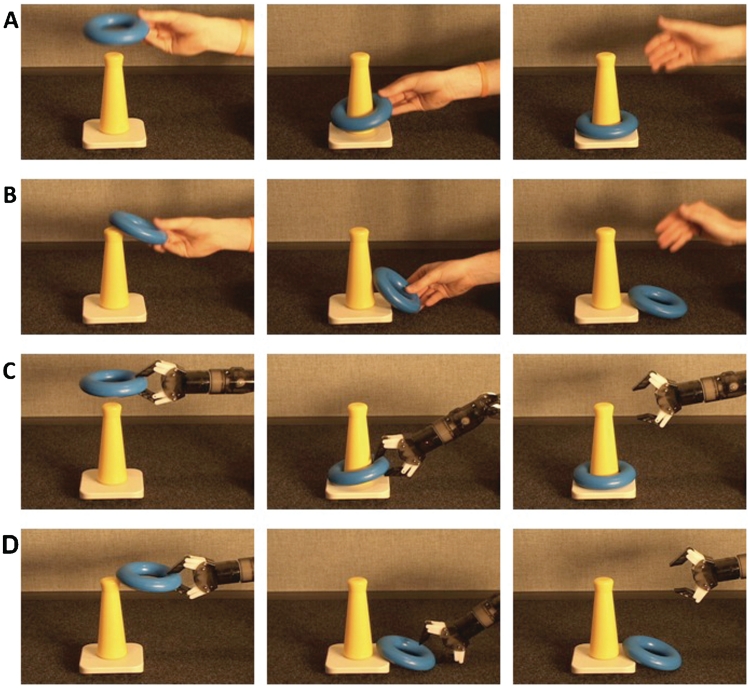

Stimuli consisted of four movies, one per condition, recorded from a live presentation (adapted from Hamlin et al., 2009): successful animate motion, failed animate motion, successful inanimate motion and failed inanimate motion. In the successful animate motion condition, a human hand successfully places a ring on a cone. In the failed animate motion condition, the actor attempts, but fails, to place the ring on the cone. The inanimate conditions were nearly identical except that the actions were performed by an inanimate robot instead of a human hand (see Figure 1 for sample still frames). The robot was chosen as an inanimate agent because it lacked cues suggesting animacy, such as human surface features, self-propelledness or the ability to react contingently to changes in the environment (for a review, see Biro et al., 2007).

Fig. 1.

Experimental conditions. (A) Successful animate goal-directed action. (B) Failed animate goal-directed action. (C) Successful inanimate goal-directed action. (D) Failed inanimate goal-directed action.

Each movie was presented five times per run, for six runs, for a total of 120 trials (30 trials per condition). Each movie lasted 3 s and trials were separated by a 12-s intertrial interval. Movies were presented in pseudo-random order such that the same movie was never repeated more than twice in a row. Subjects were instructed to pay attention to the stimuli at all times.
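The trial schedule described above can be sketched as follows. This is a minimal illustration, not the authors' presentation script: the movie labels, the fixed random seed and the use of rejection sampling (reshuffling until the repetition constraint holds) are assumptions, and the onsets ignore any lead-in time before the first trial. "Never repeated more than twice in a row" is read here as at most two consecutive presentations of the same movie.

```python
import random

# Hypothetical labels for the four movies (one per condition).
MOVIES = ["animate_success", "animate_fail", "inanimate_success", "inanimate_fail"]
REPS_PER_RUN = 5   # each movie shown five times per run
N_RUNS = 6
MOVIE_S = 3.0      # movie duration (s)
ITI_S = 12.0       # intertrial interval (s)


def longest_repeat(seq):
    """Length of the longest stretch of identical consecutive items in seq."""
    if not seq:
        return 0
    longest = current = 1
    for prev, cur in zip(seq, seq[1:]):
        current = current + 1 if cur == prev else 1
        longest = max(longest, current)
    return longest


def make_run_order(rng, max_repeats=2):
    """Reshuffle one run's trials until no movie occurs more than max_repeats times in a row."""
    trials = [movie for movie in MOVIES for _ in range(REPS_PER_RUN)]
    while True:
        rng.shuffle(trials)
        if longest_repeat(trials) <= max_repeats:
            return list(trials)


def movie_onsets(n_trials):
    """Onset (s) of each movie, assuming trials start at t = 0 and each lasts movie + ITI."""
    return [i * (MOVIE_S + ITI_S) for i in range(n_trials)]


rng = random.Random(2010)  # fixed seed so the schedule is reproducible
runs = [make_run_order(rng) for _ in range(N_RUNS)]
total_trials = sum(len(run) for run in runs)  # 4 movies x 5 reps x 6 runs = 120
```

Because each shuffle draws a uniformly random ordering, accepting only orderings that satisfy the constraint leaves every permissible ordering equally likely; with 20 trials per run the loop typically needs only a few attempts. Each run then contains 20 × 15 s = 300 s of trials.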

Imaging acquisition and preprocessing

Data were acquired using a 3.0 T Siemens TRIO scanner. Scanning was performed using an 8-channel head coil for 10 subjects and a 12-channel head coil for five subjects. Functional images were collected using a standard echo planar pulse sequence [parameters: repetition time (TR) = 2 s, echo time (TE) = 25 ms, flip angle α = 90°, field of view (FOV) = 220 mm, matrix = 64², slice thickness = 4 mm, 34 slices]. Two sets of structural images were collected for registration: coplanar images, acquired using a T1 FLASH sequence (TR = 300 ms, TE = 2.47 ms, α = 60°, FOV = 220 mm, matrix = 256², slice thickness = 4 mm, 34 slices); and high-resolution images, acquired using a 3D MP-RAGE sequence (TR = 2530 ms, TE = 3.34 ms, α = 7°, FOV = 256 mm, matrix = 256², slice thickness = 1 mm, 176 slices).
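As a worked check of the acquisition parameters, the in-plane resolution follows from dividing the field of view by the matrix size. The sketch below assumes square FOVs and square matrices (64 × 64 and 256 × 256) and no gap between slices; neither assumption is stated explicitly in the text.

```python
def voxel_size_mm(fov_mm, matrix, slice_thickness_mm):
    """In-plane voxel size is FOV / matrix; through-plane size is the slice thickness."""
    in_plane = fov_mm / matrix
    return (in_plane, in_plane, slice_thickness_mm)


# Parameters as reported above (square FOV and square matrix assumed).
epi = voxel_size_mm(220, 64, 4)        # functional EPI: ~3.4 x 3.4 x 4 mm voxels
coplanar = voxel_size_mm(220, 256, 4)  # T1 FLASH coplanar images
mprage = voxel_size_mm(256, 256, 1)    # 3D MP-RAGE: 1-mm isotropic voxels

# Through-plane coverage of the functional acquisition, assuming no inter-slice gap.
epi_coverage_mm = 34 * 4
```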

Analyses were performed using the FMRIB Software Library (FSL, http://www.fmrib.ox.ac.uk/fsl/). Non-brain voxels were removed using FSL's Brain Extraction Tool (BET). The first two volumes (4 s) of each functional dataset were discarded to allow for MR equilibration. Data were corrected for interleaved slice acquisition (slice-timing correction) and for head motion using FSL's MCFLIRT linear realignment tool. Images were spatially smoothed with a Gaussian kernel of 5 mm full width at half maximum (FWHM). Each time series was high-pass filtered (cutoff 0.01 Hz, i.e. a 100-s period) to remove low-frequency drift. Functional images were registered to the coplanar images, which were in turn registered to the high-resolution anatomical images and normalized to the Montreal Neurological Institute's MNI152 template.
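For reference, the smoothing and filtering settings can be translated into the parameters that Gaussian-kernel and temporal-filter routines typically take. The FWHM-to-sigma and frequency-to-period conversions below are standard; the last step, expressing the high-pass cutoff as a sigma in volumes, follows the convention commonly described for FEAT's use of `fslmaths -bptf` and should be treated as an assumption to check against the FSL documentation.

```python
import math

TR_S = 2.0          # repetition time (s)
FWHM_MM = 5.0       # spatial smoothing kernel FWHM (mm)
HP_CUTOFF_HZ = 0.01 # high-pass cutoff frequency (Hz)
N_DISCARDED = 2     # initial volumes dropped

# A Gaussian's FWHM = 2 * sqrt(2 * ln 2) * sigma, so sigma = FWHM / ~2.3548.
sigma_mm = FWHM_MM / (2.0 * math.sqrt(2.0 * math.log(2.0)))

# A 0.01 Hz cutoff removes fluctuations slower than a 100-s period.
hp_period_s = 1.0 / HP_CUTOFF_HZ

# Assumed FEAT convention for fslmaths -bptf: sigma (in volumes) = cutoff period / (2 * TR).
hp_sigma_vols = hp_period_s / (2.0 * TR_S)

discarded_s = N_DISCARDED * TR_S  # seconds of data dropped for equilibration
```

The 100-s cutoff period is well above the 15-s trial period (3-s movie plus 12-s interval), so the filter is unlikely to attenuate the task-related signal.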

fMRI data analysis

Whole-brain voxel-wise regression analyses were performed using FSL's FEAT. First-level analyses were computed for each subject. The model included explanatory variables for the two factors of interest, effector (animate, inanimate) and outcome (failed, successful), as well as for the interaction between effector and outcome. Each explanatory variable was modeled as a boxcar function with a value of 1 for the duration of the movie in a given condition, convolved with a single-gamma hemodynamic response function (HRF). A repeated-measures analysis of variance was conducted with effector (animate, inanimate) and outcome (failed, successful) as within-subject factors. Given our a priori hypotheses, we were mainly interested in regions exhibiting a main effect of effector (animate–inanimate), a main effect of outcome (failed–successful), or an interaction between effector and outcome.
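The following is a minimal sketch of how a first-level regressor and the factorial contrasts could be built. The text specifies only a single-gamma HRF. The 6-s mean lag and 3-s standard deviation are the values we understand to be FEAT's default gamma settings, but they are an assumption here. The 0.1-s sampling grid, the 32-s kernel length and the condition ordering are also assumptions. The cell-means coding (one regressor per condition, with the main effects and interaction expressed as contrast weights) is one equivalent way to parameterize the 2 × 2 design, not necessarily the exact FEAT setup used.

```python
import math

TR_S = 2.0  # repetition time (s)
DT_S = 0.1  # fine time grid for building regressors before sampling at each TR (assumption)


def gamma_hrf(t, mean_lag=6.0, sd=3.0):
    """Gamma-density HRF parameterized by mean lag and SD (assumed values)."""
    if t <= 0.0:
        return 0.0
    shape = (mean_lag / sd) ** 2  # k = 4 for a 6-s mean lag and 3-s SD
    scale = sd ** 2 / mean_lag    # theta = 1.5 s
    return t ** (shape - 1) * math.exp(-t / scale) / (math.gamma(shape) * scale ** shape)


def condition_regressor(onsets_s, duration_s, n_vols, kernel_len_s=32.0):
    """Boxcar (1 while the movie is on screen) convolved with the gamma HRF, sampled once per TR."""
    n_fine = int(round(n_vols * TR_S / DT_S))
    boxcar = [0.0] * n_fine
    for onset in onsets_s:
        start = int(round(onset / DT_S))
        stop = min(n_fine, int(round((onset + duration_s) / DT_S)))
        for i in range(start, stop):
            boxcar[i] = 1.0
    kernel = [gamma_hrf(j * DT_S) * DT_S for j in range(int(kernel_len_s / DT_S))]
    convolved = [
        sum(boxcar[i - j] * kernel[j] for j in range(min(i + 1, len(kernel))))
        for i in range(n_fine)
    ]
    step = int(round(TR_S / DT_S))
    return [convolved[v * step] for v in range(n_vols)]


# Contrast weights over four condition regressors, in this (assumed) order:
# [animate-successful, animate-failed, inanimate-successful, inanimate-failed]
CONTRASTS = {
    "outcome (failed - successful)": [-1, 1, -1, 1],
    "effector (animate - inanimate)": [1, 1, -1, -1],
    "effector x outcome": [-1, 1, 1, -1],  # (AF - AS) - (IF - IS)
}
```

Calling `condition_regressor` with the onsets of one condition's trials yields that condition's column of the design matrix. Each weight vector sums to zero, and the three vectors are mutually orthogonal.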

Group-level analyses were performed using a mixed effects model, with the random effects component of variance estimated using FSL's FLAME 1 + 2 procedure (Beckmann et al., 2003). Clusters were defined as contiguous sets of voxels with z (Gaussianized t) > 2.3 and then thresholded using Gaussian random field theory (cluster probability P < 0.05) to correct for multiple comparisons (Worsley et al., 1996).
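The cluster-forming step can be illustrated with a toy flood fill. This sketch covers only the definition of clusters as contiguous voxels with z > 2.3. The neighbourhood definition (26-connectivity here) is an assumption, and the Gaussian random field step, which assigns each cluster a corrected probability based on its size and the image smoothness, is not implemented.

```python
from collections import deque
from itertools import product

# 26-connectivity: voxels touching by a face, edge or corner count as contiguous (assumption).
NEIGHBOUR_OFFSETS = [d for d in product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]


def form_clusters(zmap, z_threshold=2.3):
    """Group suprathreshold voxels into contiguous clusters.

    zmap maps (i, j, k) voxel indices to z values. Returns a list of clusters,
    each a list of voxel indices, sorted from largest to smallest.
    """
    supra = {v for v, z in zmap.items() if z > z_threshold}
    seen, clusters = set(), []
    for seed in supra:
        if seed in seen:
            continue
        seen.add(seed)
        queue, members = deque([seed]), []
        while queue:  # breadth-first flood fill from the seed voxel
            v = queue.popleft()
            members.append(v)
            for di, dj, dk in NEIGHBOUR_OFFSETS:
                n = (v[0] + di, v[1] + dj, v[2] + dk)
                if n in supra and n not in seen:
                    seen.add(n)
                    queue.append(n)
        clusters.append(members)
    return sorted(clusters, key=len, reverse=True)


def cluster_peak(cluster, zmap):
    """Voxel with the highest z value in a cluster, as reported in peak-coordinate tables."""
    return max(cluster, key=lambda v: zmap[v])
```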

RESULTS

Main effect of outcome: failed vs successful goal-directed actions

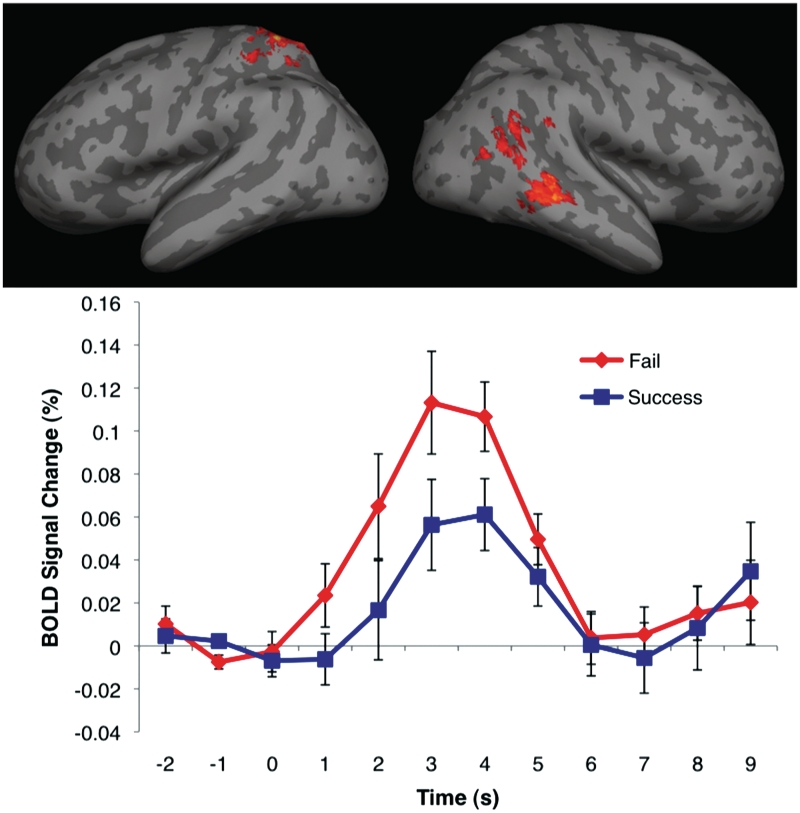

Peak coordinates and Z-scores of significant clusters from the failed vs successful contrast are given in Table 1. Regions of the right temporal cortex, including the pSTS and adjacent angular gyrus and the anterior STS and adjacent middle temporal gyrus, responded more strongly to failed than to successful actions. Active clusters were also found in the left superior parietal lobule (Figure 2).

Table 1.

Peak coordinates (in MNI space) from FAILED vs SUCCESSFUL action contrast

| Region | x (mm) | y (mm) | z (mm) | Z-score |
|---|---|---|---|---|
| Left superior parietal lobule | −24 | −50 | 66 | 3.74 |
| Right anterior STS | 62 | −36 | −4 | 3.35 |
| Right pSTS | 62 | −52 | 12 | 3.08 |

Fig. 2.

Top panel: activation map from FAILED vs SUCCESSFUL contrast, displayed on a cortical surface representation. In all images, the color bar ranges from z = 2.3 (dark red) to 3.8 (bright yellow). Bottom panel: average BOLD signal change time courses from the activated voxels in the right pSTS from epochs corresponding to failed and successful actions. Error bars indicate standard error of BOLD signal at a given time point. Movie presentation begins at 0 s and ends at 3 s.

Main effect of effector: animate vs inanimate goal-directed actions

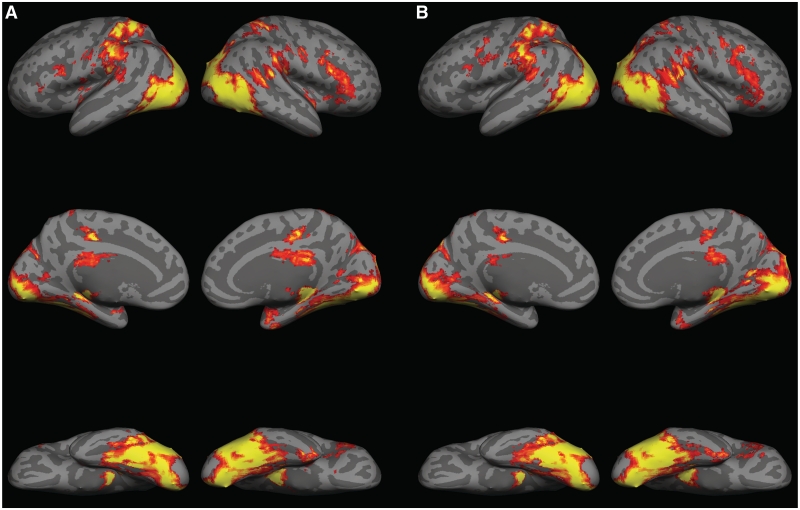

No voxel clusters responded significantly more strongly to movies with animate than with inanimate motion. Regions of the right pSTS, right inferior frontal gyrus, bilateral middle temporal gyrus, bilateral fusiform gyrus, bilateral lateral occipital cortex, bilateral cingulate cortex, bilateral thalamus and bilateral superior parietal lobule were strongly active in response to both animate and inanimate conditions compared to baseline (Figure 3).

Fig. 3.

(A) Activation map for ANIMATE vs BASELINE contrast, displayed on a cortical surface representation. (B) Activation map for INANIMATE vs BASELINE contrast.

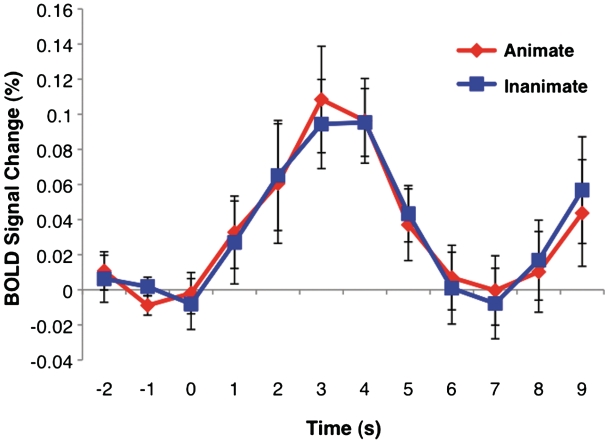

As our experimental focus was on the pSTS, we also conducted an anatomical region-of-interest (ROI) analysis, which may be more sensitive than the whole-brain voxel-based analysis to small but consistent differences between animate and inanimate movement. The pSTS was defined on the Montreal Neurological Institute's MNI152 standard brain as the crux where the right STS divides into a posterior continuation sulcus and an ascending sulcus. The ROI was defined as a 5-mm-radius sphere centered on a visually identified coordinate at this anatomical landmark in each individual's brain. For each subject, the fMRI signal was averaged across all voxels within this region, separately for animate and inanimate trials. Two-tailed paired-sample t-tests were used to compare percent signal change between the two conditions at each time point from 2 s before trial onset to 9 s after trial onset. No difference was found at any time point [t(14) > 0.046, P > 0.05] (Figure 4).
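The ROI analysis can be sketched as follows, under stated assumptions. The sketch uses a 2-mm isotropic voxel grid, as in the standard 2-mm MNI152 template; the grid actually used is not stated. It also assumes that ROI signals have already been converted to percent signal change, and it uses the tabulated two-tailed critical value t(14) = 2.145 instead of computing exact P-values. All function names are hypothetical.

```python
import math
import statistics

T_CRIT_DF14 = 2.145  # two-tailed critical t for alpha = 0.05, df = 14 (standard t tables)


def sphere_offsets(radius_mm=5.0, voxel_mm=2.0):
    """Integer voxel offsets whose centres lie within radius_mm of the sphere centre."""
    r = int(radius_mm // voxel_mm)
    return [
        (i, j, k)
        for i in range(-r, r + 1)
        for j in range(-r, r + 1)
        for k in range(-r, r + 1)
        if (i * i + j * j + k * k) * voxel_mm ** 2 <= radius_mm ** 2
    ]


def roi_mean(volume, centre, offsets):
    """Average signal over the ROI voxels; volume maps (i, j, k) -> value."""
    values = [volume[(centre[0] + i, centre[1] + j, centre[2] + k)] for i, j, k in offsets]
    return sum(values) / len(values)


def paired_t(a, b):
    """Paired-samples t statistic and degrees of freedom for matched lists a and b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    return statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n)), n - 1


def timecourse_tests(animate, inanimate, t_crit=T_CRIT_DF14):
    """animate[s][t], inanimate[s][t]: % signal change for subject s at time point t.

    Returns (t, df, significant) for each time point; t_crit must match df.
    """
    results = []
    for t_idx in range(len(animate[0])):
        t_stat, df = paired_t([s[t_idx] for s in animate], [s[t_idx] for s in inanimate])
        results.append((t_stat, df, abs(t_stat) > t_crit))
    return results
```

On a 2-mm grid, a 5-mm-radius sphere contains 81 voxels. With 15 subjects, each test has 14 degrees of freedom, which matches the hard-coded critical value.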

Fig. 4.

Anatomical ROI analysis results: average BOLD signal change time course from epochs corresponding to animate and inanimate actions. Error bars indicate standard error of BOLD signal at a given time point. Movie presentation begins at 0 s and ends at 3 s.

Finally, we examined the uncorrected z-statistic map for the main effect of effector (animate–inanimate), thresholded at Z > 2.56, to check whether our null result for the pSTS might reflect a Type II error. This analysis revealed activation in the right lateral occipital cortex, a region inferior to the right pSTS (peak coordinates: x = 50, y = −62 and z = 4). No activation was observed in the pSTS.

Interaction between effector and outcome

No significant interaction effects were found.

DISCUSSION

pSTS response to failed vs successful goal-directed actions

The current study provides further support for the role of the pSTS in perceiving goal-directed actions by examining another aspect of the context within which goal-directed motion is perceived: failed vs successful goal-directed actions. Our results demonstrate greater activation in the right pSTS during passive viewing of failed vs successful goal-directed actions. This result is relevant to the debate over whether the pSTS is sensitive to the goals underlying actions or is involved in the allocation of visual attention towards unexpected events. Unlike previous studies contrasting goal-directed actions with nonsensical, implausible actions (Pelphrey et al., 2004a; Vander Wyk et al., 2009), all of the actions in the current study were clearly goal-directed and therefore consistent with the subjects' expectations. The critical manipulation was that both the animate hand and the inanimate robotic device sometimes succeeded and sometimes failed. A failure can only be viewed as an unexpected event if the observer has encoded the goal underlying the failed action, supporting the view that the pSTS is sensitive to the goals underlying actions. However, the involvement of the pSTS in studies of both goal representation and attentional reorienting to unexpected events suggests that a common cognitive process, such as comparing internal predictions with actual events, may underlie both of these tasks (Decety and Lamm, 2007; Mitchell, 2008). Nonetheless, it is interesting to note that although the pSTS responded more strongly to failed than to successful actions, viewing successful actions also activated the pSTS relative to baseline (Figure 2). Unlike the failed actions, the successful actions were unsurprising in the sense that they unfolded as expected given the structure of the surrounding environment. Thus, the increase in pSTS activation in response to successful goal-directed actions relative to baseline suggests that the pSTS is sensitive to the intentions underlying actions, rather than reflecting a more domain-general mechanism for directing attention towards unexpected or surprising events. However, future studies are needed to examine directly whether the pSTS is involved in domain-general or domain-specific aspects of social cognition, and why it plays a role in cognitive processes as different as reorienting attention and goal attribution.

Parietal response to failed vs successful actions

In addition to observing the expected differences in the pSTS, we also found greater activation in the left superior parietal lobule in response to failed vs successful actions. The superior parietal lobule has previously been implicated in the control of visually guided reaching. For instance, studies of visually guided reaching in monkeys revealed that neurons in the superior parietal lobule supply frontal motor and premotor areas with the visual input required for the control of reaching (Johnson et al., 1993), and are involved in visually encoding the features and spatial location of the target to be reached and the direction of movement of the animal's hand (Johnson et al., 1996; Galletti et al., 1997). Human studies confirm these electrophysiological observations of reach-related activity in the monkey by demonstrating significant activation of the superior parietal lobule in the hemisphere contralateral to the reaching hand (Kertzman et al., 1997). In the present study, we observed activity in the left superior parietal lobule when subjects viewed failed actions, which, in the animate conditions, were made by an actor's right hand. These results suggest that the superior parietal lobule may play a role not only in executing visually guided reaches, but also in observing and predicting the outcomes of visually guided reaching.

pSTS response to animate vs inanimate goal-directed actions

Our findings demonstrate that the pSTS is not preferentially engaged by goal-directed actions carried out by an obviously animate agent (human arm/hand) compared with an inanimate device (robotic arm/claw). To check whether this null result might reflect a Type II error, we examined the uncorrected Z-statistic maps for the animate vs inanimate contrast. While we did not observe any activation in the pSTS, we did observe increased activation in the right lateral occipital cortex in response to animate compared with inanimate actions. This region of activation is similar to that reported in a study of cortical responses to hand movements (Pelphrey et al., 2005b), which found activation in a region inferior to the right pSTS, near the extrastriate body area (EBA; Downing et al., 2001). The EBA is known to be involved in the visual perception of human body parts (Grossman and Blake, 2002). To compare our results with past fMRI studies, we tabulated peak coordinates of EBA activations from 17 studies of human body perception (Table 2). The area of the lateral occipital cortex activated by animate compared with inanimate actions in the present study (peak coordinates: x = 50, y = −62 and z = 4) lies within two standard deviations of the average peak coordinates from these studies on each axis (average peak coordinates: x = 48.9, y = −69.8, z = 0.5; standard deviation: x = 4.2, y = 4.1, z = 5.9). Thus, while the pSTS did not respond differentially to animate and inanimate actions, even when examined with a less stringent statistical threshold or with a focused ROI analysis, the right lateral occipital cortex may discriminate between actions performed by a human and those performed by a non-human.

Table 2.

Peak coordinates (in MNI space) of the right extrastriate body area (EBA) from studies of human body perception

| Authors | Year | x (mm) | y (mm) | z (mm) |
|---|---|---|---|---|
| Aleong and Paus | 2010 | 55 | −68 | −3 |
| Chan et al. | 2004 | 46.5 | −71.1 | −2.7 |
| David et al. | 2007 | 52.5 | −72.3 | 1.6 |
| Downing et al. | 2001 | 51 | −71 | 1 |
| Downing et al. | 2006 | 48.5 | −72.1 | −3.8 |
| Downing et al. | 2007 | 51.5 | −69.1 | −0.4 |
| Grossman and Blake | 2002 | 41 | −68.2 | 7.9 |
| Kontaris et al. | 2009 | 47.1 | −64.5 | −11.7 |
| Lamm and Decety | 2008 | 53.9 | −66.8 | 8.3 |
| Myers and Sowden | 2008 | 49.5 | −66.2 | 1.9 |
| Peelen and Downing | 2005 | 48.5 | −70 | −3.7 |
| Peelen et al. | 2006 | 48.5 | −72.1 | −2.7 |
| Peelen et al. | 2007 | 48.5 | −68.1 | −0.3 |
| Pelphrey et al. | 2009 | 39 | −83 | 15 |
| Saxe et al. | 2006 | 54 | −66 | 3 |
| Taylor et al. | 2007 | 49 | −67.3 | 0.3 |
| Taylor et al. | 2010 | 47.5 | −70.1 | −2.1 |
| Mean MNI coordinates | | 48.9 | −69.8 | 0.5 |
| Standard deviation | | 4.2 | 4.1 | 5.9 |
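The summary rows of Table 2 can be recomputed directly from the tabulated peaks. The sketch below uses the sample standard deviation (n − 1 denominator), which reproduces the reported values. It then expresses the present study's peak (x = 50, y = −62, z = 4) as a distance from the mean in standard-deviation units on each axis. Treating the three axes independently is a simplification.

```python
import statistics

# (x, y, z) peaks in MNI mm, transcribed from Table 2 in row order.
EBA_PEAKS = [
    (55, -68, -3), (46.5, -71.1, -2.7), (52.5, -72.3, 1.6), (51, -71, 1),
    (48.5, -72.1, -3.8), (51.5, -69.1, -0.4), (41, -68.2, 7.9), (47.1, -64.5, -11.7),
    (53.9, -66.8, 8.3), (49.5, -66.2, 1.9), (48.5, -70, -3.7), (48.5, -72.1, -2.7),
    (48.5, -68.1, -0.3), (39, -83, 15), (54, -66, 3), (49, -67.3, 0.3), (47.5, -70.1, -2.1),
]
PRESENT_PEAK = (50, -62, 4)  # animate > inanimate peak in right lateral occipital cortex


def axis_summary(points):
    """(mean, sample SD) for each coordinate axis."""
    return [(statistics.mean(axis), statistics.stdev(axis)) for axis in zip(*points)]


summary = axis_summary(EBA_PEAKS)
z_scores = [(p - m) / s for p, (m, s) in zip(PRESENT_PEAK, summary)]

for name, (m, s), z in zip("xyz", summary, z_scores):
    print(f"{name}: mean = {m:.1f}, SD = {s:.1f}, present peak is {z:+.2f} SD from the mean")
```

On this measure, the present peak lies within two standard deviations of the mean on every axis. The largest deviation is along y, where the present peak is about 1.9 SD more anterior than the mean.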

The current results replicate and extend previous findings of no difference in pSTS response to an animated human walker and a walking robot (Pelphrey et al., 2003) by using more veridical animate and inanimate forms than those used in that study. There are at least two possible explanations for this lack of differentiation between animate and inanimate goal-directed action in the pSTS, and both have intriguing implications for the representational abilities of the pSTS and for understanding the relationship between detecting animate agents and attributing goals to observed actions.

One possibility is that the pSTS is engaged by the actions of both animate and inanimate entities. The current data support the idea that reasoning about motion and the structure of the surrounding environment may not be domain-specific in the pSTS. Instead, the pSTS may be involved in reasoning about the relationship between any action, animate or inanimate, and the structure of the surrounding environment. Future studies should investigate this claim further using even more disparate forms of animate and inanimate motion. While the robot arm used in the current study was clearly inanimate, it was similar in overall form to a human arm, and its motion therefore resembled biological motion. Future studies using inanimate motion with no resemblance to a biological form, or motion that would be biomechanically impossible for a human to perform, would provide an even stronger test of whether the pSTS is sensitive to the motion of both animate and inanimate entities.

The current results also shed light on the relationship between animacy and reasoning about intentionality. A central question in both social neuroscience and developmental psychology concerns the conditions under which people invoke psychological principles, such as intentionality, to explain observed actions. One position is that an agent must first be categorized as animate before its behavior can be interpreted in terms of goals (Meltzoff, 1995; Woodward, 1998). Entities are categorized on the basis of cues signaling animacy, such as human surface features, self-propelled motion and contingent reactivity (for a review, see Biro et al., 2007). Under this hypothesis, psychological interpretations of behavior may be extended to inanimate objects only insofar as they exhibit traits characteristic of animate entities.

An alternative view of the relationship between animacy and intentionality is that the detection of animacy and the processes involved in goal attribution operate independently. Under this view, interpreting an action as goal-directed does not follow from detecting cues signaling animacy, but rests on the successful application of a central principle used to reason about goal-directed behavior: the principle of rational action (Csibra et al., 1999, 2003; Gergely and Csibra, 2003; Csibra, 2007). The principle of rational action states that (i) the function of actions is to bring about future goal states, and (ii) goals are achieved through the most rational action available, given the constraints of the environment. According to Biro et al. (2007), a psychological interpretation is warranted when observed actions satisfy this principle, such that ‘an action can be explained by a goal state if it appears to be the most efficient action toward the goal state that is available within the constraints of reality’ (pp. 305–306).

A further possibility is that the principle of rational action may itself serve as a cue to animacy. In other words, any action that is perceived as rational and efficient, given situational constraints, may be interpreted as having been initiated by an animate agent. Such a heuristic may be a functional evolutionary adaptation: before the advent of machines and mechanical devices, actions executed in a rational manner were likely produced by an animate agent. In addition, interpreting goal-directed action as produced by an animate source may confer an advantage, as contingent, rational movement is likely to be a better diagnostic marker of animacy than featural or motion cues, which can be distorted (e.g. camouflage masking the features of a predator, or natural forces such as wind creating the appearance of self-propulsion) or unfamiliar (e.g. a previously unencountered predator).

Given that the inanimate device used in the current study lacked characteristics typical of animate agents (such as human surface features, self-propelledness and contingent reactivity), our data appear to support the view that goal attribution operates independently of the initial classification of an agent as animate. Subjects showed increased activation in the pSTS, a region known to be involved in goal attribution, in response to both animate and inanimate failed goal-directed actions compared to successful goal-directed actions. In addition, no differential pSTS response was observed to animate vs inanimate actions, suggesting that observing the goal-directed motion of the robot may have served as a cue to animacy.

Despite the concordance between the current results and those of Pelphrey et al. (2003), these data appear to contrast with findings in the developmental literature indicating that infants attribute goals to animate but not inanimate actions (Meltzoff, 1995; Woodward, 1998; Hamlin et al., 2009). What underlies this discrepancy between adult and infant findings? One possibility is that the proposed link between goal-directed action and animacy (i.e. goal-directedness serving as a cue to animacy) may not be a pre-wired association, but may instead be learned from the robust statistical association between goal-directed behavior and animacy in the world (Biro et al., 2007). In addition, mounting evidence suggests that infants may in fact attribute intentions to inanimate agents under certain conditions. For example, 1-year-old infants attribute goals to computer-animated shapes that move efficiently to complete their goal (Gergely et al., 1995; Csibra et al., 2003; Wagner and Carey, 2005). Infants as young as 6 months of age attribute goals to a moving inanimate box, and even to biomechanically impossible actions, if the observed actions are physically efficient given the constraints of the environment (Csibra, 2008; Southgate et al., 2008). Further studies are needed to clarify the conditions under which infants ascribe goals to both inanimate and animate agents, and those under which they restrict psychological interpretations to animate agents.

CONCLUSIONS

The present study provides evidence that the pSTS is sensitive to the outcome of goal-directed actions, suggesting that the pSTS plays a role in representing the goals underlying actions rather than simply reorienting visual attention towards unexpected events, such as nonsensical actions. In addition, our findings suggest that the pSTS is involved in reasoning about the goal-directed actions of both animate and inanimate entities. Future studies should examine pSTS activation in response to a wider range of animate and inanimate motion, such as biomechanically impossible inanimate motion, to determine whether the pSTS truly plays a role in processing both animate and inanimate forms of motion.

Conflict of Interest

None declared.

Acknowledgments

This work was supported by National Institutes of Health grant NS41328 to G.M. and by Natural Sciences and Engineering Research Council of Canada and National Science Foundation Graduate Research Fellowships to S.S. The authors thank Will Walker for help with data collection and Peter Lewis, Marilyn Ackerman and Kiley Hamlin for their help in creating the stimuli.

REFERENCES

- Aleong R, Paus T. Neural correlates of human body perception. Journal of Cognitive Neuroscience. 2010;22:482–95. doi: 10.1162/jocn.2009.21211.

- Allison T, Puce A, McCarthy G. Social perception from visual cues: role of the STS region. Trends in Cognitive Sciences. 2000;4:267–78. doi: 10.1016/s1364-6613(00)01501-1.

- Beauchamp MS, Lee KE, Haxby JV, Martin A. fMRI responses to video and point-light displays of moving humans and manipulable objects. Journal of Cognitive Neuroscience. 2003;15:991–1001. doi: 10.1162/089892903770007380.

- Beckmann CF, Jenkinson M, Smith SM. General multilevel linear modeling for group analysis in fMRI. NeuroImage. 2003;20:1052–63. doi: 10.1016/S1053-8119(03)00435-X.

- Biro S, Csibra G, Gergely G. The role of behavioral cues in understanding goal-directed actions in infancy. Progress in Brain Research. 2007;164:303–23. doi: 10.1016/S0079-6123(07)64017-5.

- Bonda E, Petrides M, Ostry D, Evans A. Specific involvement of human parietal systems and the amygdala in the perception of biological motion. The Journal of Neuroscience. 1996;16:3737–44. doi: 10.1523/JNEUROSCI.16-11-03737.1996.

- Brass M, Schmitt RM, Spengler S, Gergely G. Investigating action understanding: inferential processes versus action simulation. Current Biology. 2007;17:2117–21. doi: 10.1016/j.cub.2007.11.057.

- Chan A, Peelen M, Downing P. The effect of viewpoint on body representation in the extrastriate body area. Neuroreport. 2004;15:2407–10. doi: 10.1097/00001756-200410250-00021.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews Neuroscience. 2002;3:201–15. doi: 10.1038/nrn755.

- Csibra G. Teleological and referential understanding of action in infancy. Philosophical Transactions of the Royal Society of London. Series B. 2007;358:447–58. doi: 10.1098/rstb.2002.1235.

- Csibra G. Goal attribution to inanimate agents by 6.5-month-old infants. Cognition. 2008;107:705–17. doi: 10.1016/j.cognition.2007.08.001.

- Csibra G, Biro S, Koos O, Gergely G. One-year-old infants use teleological representations of actions productively. Cognitive Science. 2003;27:111–33.

- Csibra G, Gergely G, Biro S, Koos O, Brockbank M. Goal attribution without agency cues: the perception of ‘pure reason’ in infancy. Cognition. 1999;72:237–67. doi: 10.1016/s0010-0277(99)00039-6.

- David N, Cohen MX, Newen A, et al. The extrastriate cortex distinguishes between the consequences of one's own and others’ behavior. NeuroImage. 2007;36:1004–14. doi: 10.1016/j.neuroimage.2007.03.030.

- Decety J, Lamm C. The role of the right temporoparietal junction in social interaction: how low-level computational processes contribute to meta-cognition. The Neuroscientist. 2007;13(6):580–93. doi: 10.1177/1073858407304654.

- Downing PE, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001;293:2405–7. doi: 10.1126/science.1063414.

- Downing PE, Peelen MV, Wiggett AJ, Tew BD. The role of the extrastriate body area in action perception. Social Neuroscience. 2006;1(1):52–62. doi: 10.1080/17470910600668854.

- Downing PE, Wiggett AJ, Peelen MV. Functional magnetic resonance imaging investigations of overlapping lateral occipitotemporal activations using multi-voxel pattern analysis. Journal of Neuroscience. 2007;27:226–33. doi: 10.1523/JNEUROSCI.3619-06.2007.

- Galletti C, Fattori P, Kutz DF, Battaglini PP. Arm movement-related neurons in the visual area V6A of the macaque superior parietal lobule. European Journal of Neuroscience. 1997;9:410–13. doi: 10.1111/j.1460-9568.1997.tb01410.x.

- Gergely G, Csibra G. Teleological reasoning in infancy: the naïve theory of rational action. Trends in Cognitive Sciences. 2003;7(7):287–92. doi: 10.1016/s1364-6613(03)00128-1.

- Gergely G, Nadasdy Z, Csibra G, Biro S. Taking an intentional stance at 12 months of age. Cognition. 1995;56:165–93. doi: 10.1016/0010-0277(95)00661-h.

- Grossman ED, Blake R. Brain areas active during visual perception of biological motion. Neuron. 2002;35:1167–75. doi: 10.1016/s0896-6273(02)00897-8.

- Grossman E, Donnelly M, Price R, et al. Brain areas involved in perception of biological motion. Journal of Cognitive Neuroscience. 2000;12(5):711–20. doi: 10.1162/089892900562417.

- Guajardo JJ, Woodward AL. Is agency skin deep? Surface attributes influence infants’ sensitivity to goal-directed action. Infancy. 2004;6(3):361–84.

- Hamlin JK, Newman G, Wynn K. Eight-month-old infants infer unfulfilled goals, despite ambiguous physical evidence. Infancy. 2009;14(5):579–90. doi: 10.1080/15250000903144215.

- Johnson PB, Ferraina S, Bianchi L, Caminiti R. Cortical networks for visual reaching: physiological and anatomical organization of frontal and parietal lobe arm regions. Cerebral Cortex. 1996;6:102–19. doi: 10.1093/cercor/6.2.102.

- Johnson PB, Ferraina S, Caminiti R. Cortical networks for visual reaching. Experimental Brain Research. 1993;97:361–5. doi: 10.1007/BF00228707.

- Kertzman C, Schwarz U, Zeffiro TA, Hallett M. The role of posterior parietal cortex in visually guided reaching movements in humans. Experimental Brain Research. 1997;114:170–83. doi: 10.1007/pl00005617.

- Klin A, Jones W. Attributing social and physical meaning to ambiguous visual displays in individuals with higher-functioning autism spectrum disorders. Brain and Cognition. 2006;61(1):40–53. doi: 10.1016/j.bandc.2005.12.016.

- Kontaris I, Wiggett AJ, Downing PE. Dissociation of extrastriate body and biological-motion selective areas by manipulation of visual-motor congruency. Neuropsychologia. 2009;47(14):3118–24. doi: 10.1016/j.neuropsychologia.2009.07.012.

- Lamm C, Decety J. Is the extrastriate body area (EBA) sensitive to the perception of pain in others? Cerebral Cortex. 2008;18:2369–73. doi: 10.1093/cercor/bhn006.

- Lloyd-Fox S, Blasi A, Volein A, Everdell N, Elwell CE, Johnson MH. Social perception in infancy: a near infrared spectroscopy study. Child Development. 2009;80:986–99. doi: 10.1111/j.1467-8624.2009.01312.x.

- Mar RA, Kelley WM, Heatherton TF, Macrae CN. Detecting agency from the biological motion of veridical vs animated agents. Social Cognitive and Affective Neuroscience. 2007;2:199–205. doi: 10.1093/scan/nsm011.

- Meltzoff AN. Understanding the intentions of others: re-enactment of intended acts by 18-month-old children. Developmental Psychology. 1995;31(5):838–50. doi: 10.1037/0012-1649.31.5.838.

- Mitchell JP. Activity in the right temporo-parietal junction is not selective for theory-of-mind. Cerebral Cortex. 2008;18(2):262–71. doi: 10.1093/cercor/bhm051.

- Moser E, Derntl B, Robinson S, Fink B. Amygdala activation at 3T in response to human and avatar facial expressions of emotions. Journal of Neuroscience Methods. 2007;161(1):126–33. doi: 10.1016/j.jneumeth.2006.10.016.

- Myers A, Sowden PT. Your hand or mine? The extrastriate body area. NeuroImage. 2008;42(4):1669–77. doi: 10.1016/j.neuroimage.2008.05.045.

- Peelen MV, Atkinson AP, Andersson F, Vuilleumier P. Emotional modulation of body-selective visual areas. Social Cognitive and Affective Neuroscience. 2007;2:274–83. doi: 10.1093/scan/nsm023.

- Peelen MV, Downing P. Is the extrastriate body area involved in motor actions? Nature Neuroscience. 2005;8(2):125. doi: 10.1038/nn0205-125a.

- Peelen MV, Wiggett AJ, Downing PE. Patterns of fMRI activity dissociate overlapping functional brain areas that respond to biological motion. Neuron. 2006;49(6):815–22. doi: 10.1016/j.neuron.2006.02.004.

- Pelphrey KA, Lopez J, Morris JP. Developmental continuity and change in response to social and nonsocial categories in human extrastriate visual cortex. Frontiers in Human Neuroscience. 2009;3:25. doi: 10.3389/neuro.09.025.2009.

- Pelphrey KA, Morris JP, McCarthy G. Grasping the intentions of others: the perceived intentionality of an action influences activity in the superior temporal sulcus during social perception. Journal of Cognitive Neuroscience. 2004a;16(10):1706–16. doi: 10.1162/0898929042947900.

- Pelphrey KA, Morris JP, McCarthy G. Neural basis of eye gaze processing deficits in autism. Brain. 2005a;128(5):1038–48. doi: 10.1093/brain/awh404.

- Pelphrey KA, Morris JP, Michelich CR, Allison T, McCarthy G. Functional anatomy of biological motion perception in posterior temporal cortex: an fMRI study of eye, mouth, and hand movements. Cerebral Cortex. 2005b;15:1866–76. doi: 10.1093/cercor/bhi064.

- Pelphrey KA, Mitchell TV, McKeown MJ, Goldstein J, Allison T, McCarthy G. Brain activity evoked by the perception of human walking: controlling for meaningful coherent motion. Journal of Neuroscience. 2003;23:6819–25. doi: 10.1523/JNEUROSCI.23-17-06819.2003.

- Pelphrey KA, Viola RJ, McCarthy G. When strangers pass: processing of mutual and averted gaze in the superior temporal sulcus. Psychological Science. 2004b;15:598–603. doi: 10.1111/j.0956-7976.2004.00726.x.

- Perani D, Fazio F, Borghese NA, et al. Different brain correlates for watching real and virtual hand actions. NeuroImage. 2001;14(3):749–58. doi: 10.1006/nimg.2001.0872.

- Premack D. The infant's theory of self-propelled objects. Cognition. 1990;36:1–16. doi: 10.1016/0010-0277(90)90051-k.

- Puce A, Allison T, Bentin S, Gore JC, McCarthy G. Temporal cortex activation in humans viewing eye and mouth movements. The Journal of Neuroscience. 1998;18(6):2188–99. doi: 10.1523/JNEUROSCI.18-06-02188.1998.

- Saxe R, Jamal N, Powell L. My body or yours? The effect of visual perspective on cortical body representation. Cerebral Cortex. 2006;16:178–82. doi: 10.1093/cercor/bhi095.

- Saxe R, Xiao K, Kovacs G, Perrett D, Kanwisher N. A region of right posterior superior temporal sulcus responds to observed intentional actions. Neuropsychologia. 2004;42(11):1435–46. doi: 10.1016/j.neuropsychologia.2004.04.015.

- Saygin AP, Wilson SM, Hagler DJ, Bates E, Sereno MI. Point-light biological motion perception activates human premotor cortex. The Journal of Neuroscience. 2004;24(27):6181–8. doi: 10.1523/JNEUROSCI.0504-04.2004.

- Sommerville JA, Crane CC. Ten-month-old infants use prior information to identify an actor's goal. Developmental Science. 2009;12(2):314–25. doi: 10.1111/j.1467-7687.2008.00787.x.

- Southgate V, Johnson MH, Csibra G. Infants attribute goals even to biomechanically impossible actions. Cognition. 2008;107:1059–69. doi: 10.1016/j.cognition.2007.10.002.

- Taylor JC, Wiggett AJ, Downing PE. Functional MRI analysis of body and body part representations in the extrastriate and fusiform body areas. Journal of Neurophysiology. 2007;98:1626–33. doi: 10.1152/jn.00012.2007.

- Taylor JC, Wiggett AJ, Downing PE. fMRI-adaptation studies of viewpoint tuning in the extrastriate and fusiform body areas. Journal of Neurophysiology. 2010;103:1467–77. doi: 10.1152/jn.00637.2009.

- Vander Wyk BC, Hudac CM, Carter EJ, Sobel DM, Pelphrey KA. Action understanding in the superior temporal sulcus region. Psychological Science. 2009;20(6):771–7. doi: 10.1111/j.1467-9280.2009.02359.x.

- Wagner L, Carey S. 12-month-old infants represent probable endings of motion events. Infancy. 2005;7(1):73–83. doi: 10.1207/s15327078in0701_6.

- Woodward AL. Infants selectively encode the goal object of an actor's reach. Cognition. 1998;69:1–34. doi: 10.1016/s0010-0277(98)00058-4.

- Worsley KJ, Marrett S, Neelin P, Vandal AC, Friston KJ, Evans AC. A unified statistical approach for determining significant signals in images of cerebral activation. Human Brain Mapping. 1996;4:58–73. doi: 10.1002/(SICI)1097-0193(1996)4:1<58::AID-HBM4>3.0.CO;2-O.