Abstract

In a neuroimaging study focusing on young bilinguals, we explored the brains of bilingual and monolingual babies across two age groups (younger 4–6 months, older 10–12 months), using fNIRS in a new event-related design, as babies processed linguistic phonetic (Native English, Non-Native Hindi) and nonlinguistic Tone stimuli. We found that phonetic processing in bilingual and monolingual babies is accomplished with the same language-specific brain areas classically observed in adults, including the left superior temporal gyrus (associated with phonetic processing) and the left inferior frontal cortex (associated with the search and retrieval of information about meanings, and syntactic and phonological patterning), with intriguing developmental timing differences: left superior temporal gyrus activation was observed early and remained stably active over time, while left inferior frontal cortex showed a greater increase in neural activation in older babies, notably at the precise age when babies enter the universal first-word milestone, thus revealing a first-time focal brain correlate that may mediate a universal behavioral milestone in early human language acquisition. A difference was observed in the older bilingual babies’ resilient neural and behavioral sensitivity to Non-Native phonetic contrasts at a time when monolingual babies can no longer make such discriminations. We advance the “Perceptual Wedge Hypothesis” as one possible explanation for how exposure to more than one language may alter neural and language processing in ways that we suggest are advantageous to language users. The brains of bilinguals and multilinguals may provide the most powerful window into the full neural “extent and variability” that our human species’ language processing brain areas could potentially achieve.

Keywords: fNIRS, bilingualism, infant phonetic processing, Perceptual Wedge Hypothesis, brain development, language acquisition, Broca's Area, STG, LIFC

Among the most fascinating and hotly debated questions in early brain and language development is how young babies discover the finite set of phonetic units of their native language from the infinitely varying stream of sounds and sights around them. After nearly a half century of research, it is now understood that this process is monumentally important to discovering the “building blocks” of human language (the restricted set of meaningless phonetic and phonemic units in alternating, rhythmic-temporal bundles at the heart of natural language phonology). In turn, this foundation is vital to a cascade of other processes underlying early language acquisition, including the capacity to perform tacit “statistical” analyses over the phonetic units and their underlying phonemic categories en route to uncovering the linguistic rules by which words, clauses, and sentences are formed and arranged in their native language (theoretical articulation, Petitto, 2005; statistical analyses, e.g., Saffran, Aslin & Newport, 1996; Marcus, Vijayan, Bandi Rao, & Vishton, 1999; Marcus, 2000). Yet, in addition to all else, the baby’s sensitivity to contrasting phonetic units in the linguistic stream permits it to discover an important form, “the word,” in the infinitely varying stream of environmental input around it, a form upon which the complex array of human meanings and concepts is built. While young monolingual babies’ capacity to discover the finite set of phonetic units in their native language is said to be daunting, the young bilingual baby’s capacity to do this may be doubly daunting. Yet research on this topic is exceedingly scarce. Here, we articulate a brain imaging study using functional Near Infrared Spectroscopy (fNIRS), in a new event-related design, to uncover the neural networks and tissue that underlie the early phonetic processing capacity in young bilingual babies as compared to monolingual babies.

Adding to the wonder of early human phonetic processing, young babies also begin life capable of discriminating a universal set of phonetic contrasts. Decades of research have shown that babies under 6 months demonstrate a capacity to discriminate all of the world languages’ phonetic contrasts to which they are exposed, both Native and Non-Native (foreign language) phonetic contrasts they never heard before. By 10–12 months, however, babies lose this “universal” capacity and perform in similar ways to adults! In a twist on our assumptions about early human development as being a process of getting better over time, babies get worse as they grow up in this select way. Though losing their sensitivity to phonetic contrasts across world languages, babies are now more highly sensitive to the phonetic contrasts found in their native language—as if their initial open capacity at birth had, over development, attenuated down to the specific language contrasts present in their environment (c.f., Eimas et al., 1971; Werker, Gilbert, Humphrey & Tees, 1981; Werker & Tees, 1984; Werker & Lalonde, 1988; Polka & Werker, 1994; Stager & Werker, 1997; Werker et al., 1998; Werker & Stager, 2000; Baker, Michnick-Golinkoff, & Petitto, 2006; Kuhl, 2007; Werker & Gervain, in press).

The biological basis of the baby’s initial phonetic capacities and their change over time has been one of the most passionately pursued issues in the history of early child language research. Drawing from behavioral research, existing theoretical accounts to explain the young monolingual baby’s nascent “open” phonetic capacities follow one of two basic accounts.

One account, here called the “auditory-general” hypothesis, suggests that monolingual babies learn the phonetic units of their language using general auditory (sound processing) mechanisms, which, over the first year of life, become more and more related to language. Here, in very early life, the brain is thought to begin with general (non-dedicated) auditory neural tissue and systems that eventually become sensitive (entrained) to the patterns in the input, language or otherwise, based on the presence and strength (frequency of exposure) of the patterns in the environment. According to this view, the frequency of language exposure, or the amount of “time on task,” is key for typical language development to proceed, as is the salience of specific phonetic contrasts (Maye, Weiss, & Aslin, 2008). This view implies that babies need some amount of time on task, or frequency of exposure with a native language (albeit the amount is unspecified), in order to construct mental representations of the relevant underlying abstract classes of sounds, or phonemes, from the surface phonetic units that they hear (Fennell, Byers-Heinlein, & Werker, 2007; Maye et al., 2008; Sundara, Polka, & Molnar, 2008; Pereira, 2009).

A second account, here called the “language-specific” hypothesis, suggests that monolingual babies are born with specific language-dedicated mechanisms in the human brain (more below) that give rise to the capacity to discover the linguistic phonetic and phonemic units in the language(s) used in their environment. Here, in very early life, the brain is thought to begin with specific dedicated neural tissue and systems for language processing that are tuned to unique rhythmic-temporal patterns at the heart of all human language structure (Petitto, 2005, 2007, 2009). Rather than frequency (or “time on task”) of exposure with a native language being key, this view implies that babies need systematicity (regularity) of exposure to a language to attune to its phonetic and phonemic regularities (here, the quality of the input, hence its systematicity, is weighted more heavily than frequency of language exposure; e.g., Jusczyk, 1997; Baker, Golinkoff & Petitto, 2006; Petitto, 2005, 2007, 2009).

Behavioral Studies & Phonetic Processing

One reason for the remarkable perseverance of this lively debate is that the question has been a priori largely unanswerable with behavioral data alone, and behavioral data using only the sound modality to boot! Previous research has used only speech and sound to test, essentially, whether speech (language) or sound (auditory) processes are most key in early phonetic processing. In our Infant Habituation Laboratory, we showed that hearing speech-exposed 4 month-old babies with no exposure to signed languages discriminated American Sign Language (ASL) phonetic handshapes by phonetic category membership (Baker, Sootsman, Golinkoff, & Petitto, 2003; Norton, Baker, & Petitto, 2003; Baker, Idsardi, Golinkoff, & Petitto, 2005; Baker, Golinkoff & Petitto, 2006). The hearing babies treated the phonetic handshapes like true linguistic-phonetic units in the same way that young hearing babies exposed to English can nonetheless discriminate phonetic units in Hindi. Crucially, these hearing babies did not do so at 14 months, just as with speech perception results. Although this study provided important behavioral support for the language-specific hypothesis, neuroimaging data of the brains of young babies would be especially revealing because we may observe directly which brain tissue and neural systems mediate linguistic phonetic auditory processing as compared to nonlinguistic auditory processing—with comparative study of bilingual versus monolingual babies being especially revealing.

When monolingual babies learn their one language, both the language-specific and the auditory-general hypotheses may equally describe early phonetic capacities. However, when bilingual babies learn their two languages, the auditory-general hypothesis suggests that phonetic discrimination in either (or both) of the baby’s two languages will suffer as a consequence of decreased (or unequal) language exposure (decreased “time on task” in one or the other language). By contrast, the language-specific hypothesis suggests that phonetic discrimination in bilingual babies will follow a similar developmental time-course to monolingual babies as long as the young bilingual baby receives regular/systematic input in its two native languages. For example, while one caretaker (who is home all day with the baby) may speak a particular language, and the other caretaker (who is away except weekends) may speak another language, comparable achievement of typical language milestones can nonetheless be expected across each of the baby’s two languages, because input is systematic (irrespective of the frequency inequalities; e.g., see Petitto et al. 2001, for a review of the relevant experimental findings).

Behavioral studies of phonetic development in bilingual babies are exceedingly sparse and controversial. Some of the few behavioral studies conducted show that babies from bilingual backgrounds perform differently than monolingual babies. When tested on select phonetic contrasts, bilingual babies can perform the same as monolingual babies, but this is not so for all phonetic contrasts. Here, it has been observed that older bilingual babies around 12–14 months may still show the open capacity to discriminate Non-Native phonetic contrasts (foreign language contrasts, such as Hindi) at a time when monolingual babies’ perception of phonetic contrasts is fixed to those found only in their native language (such as English). Indeed, this difference between the bilingual baby’s still “open” phonetic discrimination capacity and the monolingual baby’s already “closed,” or stabilized, phonetic discrimination capacity (limited to native language phonetic contrasts) has led some researchers to claim that the bilingual baby’s difference is “deviant” and/or language delayed (Bosch & Sebastián-Gallés, 2001, 2003; Werker & Fennell, 2004; Burns, Yoshida, Hill, & Werker, 2007; Sundara et al., 2008). By contrast, bilingual babies are not delayed relative to monolingual babies in the achievement of all classic language-onset milestones, such as canonical babbling, first words, first two-word combinations, and first fifty words (Pearson, Fernández, & Oller, 1993; Oller, Eilers, Urbano, & Cobo-Lewis, 1997; Petitto, 1997, 2009; Petitto, Holowka, Sergio, & Ostry, 2001; Petitto, Katerelos et al., 2001; Petitto, Holowka et al., 2001; Holowka & Petitto, 2002; Petitto, Holowka, Sergio, Levy, & Ostry, 2004). With such mixed findings, behavioral studies have not satisfactorily illuminated the types of processes that underlie early bilingual phonetic processing so vital to all of human language acquisition.

Neuroimaging Studies & Phonetic Processing

Regarding neuroimaging of adults, fMRI studies have shown that there is a preferential response in the left Superior Temporal Gyrus, STG (Brodmann Area “BA” 21/22) during the perception of phonetic distinctions in one’s native language (Zatorre, Meyer, Gjedde, & Evans, 1996; Celsis et al., 1999; Burton, Small, & Blumstein, 2000; Petitto, Zatorre et al., 2000; Burton, 2001; Zatorre & Belin, 2001; Zevin & McCandliss, 2005; Burton & Small, 2006; Joanisse, Zevin, & McCandliss, 2007; Hutchison, Blumstein, & Myers, 2008; note that, though referring to the similar phenomenon of distinguishing between two phonetic units, the field uses “phonetic perception” with adults and “phonetic discrimination” with babies. This is to avoid prejudging a baby’s capacity to discriminate between two phonetic units as having reached their perceptual awareness). In addition, the left Inferior Frontal Cortex (LIFC), a triangular region (BA 45/47; also referred to in the literature as the left Inferior Frontal Gyrus), has been demonstrated to be involved in the search and retrieval of information about the semantic meanings of words (e.g., Petitto, Zatorre, et al., 2000), and its more posterior region overlapping Broca’s Area (BA 44/45) is involved in the segmentation and patterned (syntactic) sequences underlying all language, be it spoken or signed (spoken: e.g., Burton, Small, & Blumstein, 2000; Golestani & Zatorre, 2004; Blumstein, Myers, & Rissman, 2005; Burton & Small, 2006; Meister, Wilson, Deblieck, Wu, & Iacoboni, 2007; Iacoboni, 2008; Strand, Forssberg, Klingberg, & Norrelgen, 2008; signed: e.g., Petitto, Zatorre et al., 2000). Indeed, studies of adult phonological perception have consistently shown activation in this posterior region of the LIFC (BA 44/45) during phonological tasks, including phoneme discrimination, phoneme segmentation, and phonological awareness (e.g., Zatorre, Meyer, Gjedde, & Evans, 1996; Burton, Small & Blumstein, 2000; Burton, 2001; Burton & Small, 2006).

Taken together, while many parts of the brain are involved in language processing, a “bare bones” sketch would minimally entail the following three areas: The STG participates in the segmentation of the linguistic stream into elementary phonetic-syllabic units (and their underlying phonemic categories from which they are derived), on which the left IFC participates in the search and retrieval of stored meanings (is the segment a real word, a morphological part of a word?; if so, what is its meaning?). Additionally, Broca’s area participates in the motor planning, sequencing, and patterning essential for language production, as well as phonological and syntactic (phonotactic) patterning—with all happening rapidly and/or in parallel.

Regarding neuroimaging of young monolingual babies, studies of language processing using differing brain measurement technologies show a general sensitivity to speech (Dehaene-Lambertz, Dehaene, & Hertz-Pannier, 2002; Peña et al., 2003) and phonetic contrasts (Minagawa-Kawai, Mori, & Sato, 2005; Minagawa-Kawai, Mori, Naoi, & Kojima, 2007) in the left temporal region of a baby’s brain, with most studies using Evoked Response Potential (ERP; see reviews by Friederici, 2005; Kuhl & Rivera-Gaxiola, 2008). ERP measures the electrical neural response that is time-locked to the specific stimulus (e.g., presentation of a phonetic unit or syllable). Although the ERP method provides excellent temporal resolution of the neural event, it provides relatively poor information about the anatomical localization of the underlying neural activity (Kuhl, 2007). This is not the case with fNIRS, which provides good anatomical localization and excellent temporal resolution. Importantly, fNIRS is also “child-friendly” and quiet. As such, fNIRS has revolutionized the ability to neuroimage human language and higher cognition (see methods below for a more detailed description; see also Quaresima, Bisconti, & Ferrari, in press, for a review).

Most exciting, a few fNIRS neuroimaging studies of young monolingual babies have identified specific within-hemisphere neuroanatomical activation sites associated with specific types of language stimuli. In particular, the left STG responds to phonetic change, as does LIFC/Broca’s area (Petitto, 2005, 2007, 2009; Dubins, White, Berens, Kovelman, Shalinsky & Petitto, 2009). One group found Broca’s area responsivity to phonetic contrasts in neonates 5 days old (Imada et al., 2006). In addition, at around one year of age, LIFC/Broca’s area shows a significant increase in responsiveness to language-like phonetic contrasts (Dubins, Berens, Kovelman, Shalinsky, Williams, & Petitto, 2009).

Regarding neuroimaging of young bilingual babies, as of yet, we have identified no published studies on both the neural and the behavioral correlates of phonetic development in this population (see Petitto, 2009). Interestingly, studies of both the neural and behavioral correlates of adult bilingual language processing have only become most vibrant over the past decade (see Ansaldo, Marcotte, Fonseca & Scherer, 2008 for thorough review of neuroimaging and the bilingual brain; see also Kovelman, Baker and Petitto 2008b). The paucity of research on bilingual babies (be it behavioral or neuroimaging), in conjunction with previous findings from our lab (see especially Petitto, 2009, for a review; Holowka, Sergio, Levy, & Ostry, 2004; Petitto, 2007; Petitto, Holowka, Sergio & Ostry, 2001) fueled our excitement to discover answers to the following questions: Will bilingual babies show similar or dissimilar recruitment of classic language areas as compared to adults and monolingual babies (especially, STG and LIFC/Broca’s area)? What is the nature of the change in neural recruitment and processing over time? Can we discern any brain-based changes that are correlated with human language developmental milestones?

The present study is among the first in the discipline to investigate phonetic processing in bilingual babies using modern brain imaging technology, here fNIRS. Bilingual and monolingual babies (across two age groups: younger babies ~4 months and older babies ~12 months) underwent fNIRS brain imaging while processing linguistic phonetic units (Native/English, Non-Native/Hindi) and nonlinguistic stimuli (Tones). Each of the two prevailing accounts emanating from decades of behavioral work implies testable hypotheses with clear neuroanatomical predictions, which, for the first time, can be directly explored with neuroimaging in the bilingual and/or monolingual baby’s brain over time.

Hypotheses

(i) If the “auditory-general” hypothesis mediates early phonetic processing, then all babies should process all stimuli as general auditory stimuli. Here, we should see no difference between babies’ early processing of linguistic stimuli (Native/English, Non-Native/Hindi) versus nonlinguistic stimuli (Tones) in the brain’s classic language tissue associated with phonetic processing (e.g., Superior Temporal Gyrus, STG, and left Inferior Frontal Cortex, LIFC or left IFC; more below).

Also following from the auditory-general view, bilingual babies may show greater frontal activity relative to monolingual babies, with comparatively minimal participation of classic language brain areas, which would ostensibly reflect the greater cognitive nature of the task (the harder/double work load) facing a bilingual baby when it learns two languages.

(ii) If the “language-specific” hypothesis mediates early phonetic processing, then we should see clear neural differentiation between linguistic versus nonlinguistic Tone stimuli in the STG. Given the hypothesized central role of phonetic processing in human language acquisition, we may observe the brain tissue classically associated with phonology and language processing in adults to be activated in babies when processing phonetic stimuli, and early (from the youngest babies).

Also following from this language-specific view, if the bilingual baby’s brain has been rendered “deviant” and/or “slower to close” due to dual language exposure, then we should see clear evidence of this ostensible neural “disruption” in its classic language brain areas. Specifically, we should not see the typical developments observed in classic language brain areas as found in monolingual babies. Said another way, we should see an atypicality or difference in both neural recruitment and developmental timing when specific classic language brain areas are engaged in the brains of bilingual babies as compared to monolingual babies.

We test the above hypotheses by examining the question of bilingual versus monolingual babies’ phonetic processing, with the goal of providing converging evidence to advance a decades-old debate. Specifically, we use a phonetic processing task while babies (bilingual and monolingual) undergo fNIRS brain imaging as a new lens in our pursuit of the biological and input factors that together give rise to the phonetic processing capacity at the heart of early language acquisition in our species.

Method

Participants

Sixty-three (63) babies between ages 2 and 16 months participated in this study, with two (2) being removed for fussiness, yielding sixty-one (61) babies for the study. Most of the babies were born full term (with none being born more than three weeks earlier than their due date), none were part of a multiple birth, and all were healthy babies, with all exhibiting typical (normal) developmental milestones as per their age. All babies were exposed either to English only (monolinguals), or to English and another language (bilinguals), which was unrelated to Hindi (more below).

Bilingual Babies

Bilingual babies were classified into two groups: (1) Younger babies (n=15, median age = 4 months 22 days), and (2) Older babies (n = 19, median age = 12 months 13 days). Following standard practice in experiments in child language whereupon a young monolingual baby is grouped as, for example, a “French,” “Spanish,” or “Chinese” baby based on the input language of its parents, babies in our study were grouped as either bilingual or monolingual based on the input language(s) of their parents, which underwent rigorous assessment and validation. First, parents filled in an on-line screening questionnaire (which ascertained basic facts about the baby’s name, age, siblings, health, languages used in the home, “bilingual” or “monolingual” home, contact information, willingness to be contacted for an experimental study, etc.). If parents phoned in, this same set of questions was posed. Based on a rough cut of bilingual or monolingual input, baby’s age, health, etc., parents were invited into the lab. Second, parents filled in a highly detailed standardized, previously validated and published questionnaire, which contained cross-referenced questions (internal validity questions) called the “Bilingual Language Background and Use Questionnaire” (“BLBUQ;” see Petitto et al., 2001; Holowka, Brosseau-Lapré, & Petitto, 2002; Kovelman, Baker, & Petitto, 2008a, 2008b for more details on this extensive bilingual language questionnaire). 
This questionnaire asked (a) detailed questions about parents’ language use and attitudes (language background, educational history, employment facts, social contexts across which each parent uses his or her languages, personal language preference containing standardized questions to assess language dominance and language preference, personal attitudes about language/s, language use with the baby participant’s other siblings, parents’ linguistic expectations for their baby, parents’ attitudes towards bilingualism, parents’ self-assessment about “balanced” bilingual input), and (b) detailed questions about the nature of language input and use with the child (languages used with the child, questions about child rearing, questions about who cares for the child and number of hours, caretaker’s language/s, child’s exposure patterns to television/radio, child’s language productions, child’s communicative gestures, child’s physical and cognitive milestones, etc.). Third, an experimenter collected an on-site assessment of the parents’ language use as parents interacted with their baby (and other siblings, where applicable). After answers to the on-line screening, full questionnaire, and experimenter on-site assessments were completed and compared, a child’s final group inclusion was determined. Collectively, from these assessments, we selected babies in bilingual homes (as balanced as humanly possible), and we sought for all bilingual and monolingual families to be of comparable socio-cultural and economic status.
To review, parents who deemed their households as being “bilingual,” and who described themselves as being “bilingual,” and who reported their babies to be “bilingual,” that is, receiving bilingual language exposure (as assessed with the screening, BLBUQ, and experimenter assessment tools) involving comparable consistent and regular exposure to English and another language (balanced bilingual), which was unrelated to Hindi, from multiple sources (e.g., Parents, Grandparents, Friends, Community), and from very early life, were considered to be “bilingual families,” and their babies were considered to be receiving bilingual language exposure.

Of our 34 babies with bilingual language exposure, 27 were first exposed to English between birth and two months, four were first exposed to English between two and six months, and three were first exposed between six and 10 months. All of these 34 bilingual babies were first exposed to another language that was not related to Hindi, such as French, Spanish, or Chinese, between birth and two months.

Monolingual babies

Monolingual babies were classified into two groups: (1) Younger babies (n = 17, median age = 4 months 18 days), and (2) Older babies (n = 10, median age = 12 months 13 days). Monolingual language exposure was assessed from screening interviews, experimenter interviews, and, crucially, from parental responses on the standardized and widely published “Monolingual Language Background and Use Questionnaire” (MLBUQ; e.g., Petitto et al., 2001; Petitto & Kovelman, 2003). Only babies whose parents reported that English was the only language to which their baby was exposed were considered to be monolingual. All 27 babies in the monolingual group had systematic exposure only to English from birth.

Stimuli

Participants were presented with stimuli from three distinct phonetic categories: (1) linguistic Native English phonetic units, (2) linguistic Non-Native (“foreign” language) Hindi phonetic units, and (3) nonlinguistic pure Tones. Our linguistic Native stimuli consisted of the English phonetic units (in consonant-vowel/CV syllabic organization), [ba] and [da], recorded by a male native English speaker. Our linguistic Non-Native stimuli consisted of the Hindi phonetic units (in CV syllabic organization), [t̪a] (dental t) and [ʈa] (retroflex t), recorded by a native male Hindi speaker (from Werker & Tees, 1984; see also Fennell et al., 2007). Our nonlinguistic condition consisted of a 250 Hz pure Tone. All stimuli were equated for amplitude and sampling rate (22 kHz), with the English and Hindi conditions also equated for prosody and pitch.

Procedure

Babies were seated on a parent’s lap facing a 22-inch monitor at a distance of approximately 120 cm. Speakers (Bang & Olufsen, Beolab 4 PC) were placed 30 cm on each side of the monitor and a Sony MiniDV camcorder was positioned midline beneath the monitor. To ensure babies oriented towards the monitor, a visually compelling silent aquarium video played throughout the session (Plasma Window, 2006), and experimenters used silent toys to further ensure that the babies remained attentive, alert, and on task. During the session, parents listened to prerecorded music through Sony professional series headphones (Sony MDR-7506) so as not to inadvertently influence their babies.

Stimulus Presentation

One of the technical innovations of the present neuroimaging study is that we mastered how to use an Event design with fNIRS (as opposed to a Block design). For each run, one of the two phonemes in each of the English and Hindi conditions was selected to be the “standard” phoneme, while the other phoneme became the target or “deviant” phoneme. Each phoneme served as standard and target an equal number of times. Sixty percent (60%) of the phoneme trials in each run were standard phoneme trials and 10% of the phoneme trials were the target. The remaining 30% of the trials were “catch” trials in which no phoneme was presented. This ensured that babies habituated to the standard stimulus, thereby increasing the novelty of the target stimulus. In the Tone condition, 65% of the stimulus trials were Tone trials, while the remaining 35% were catch trials.

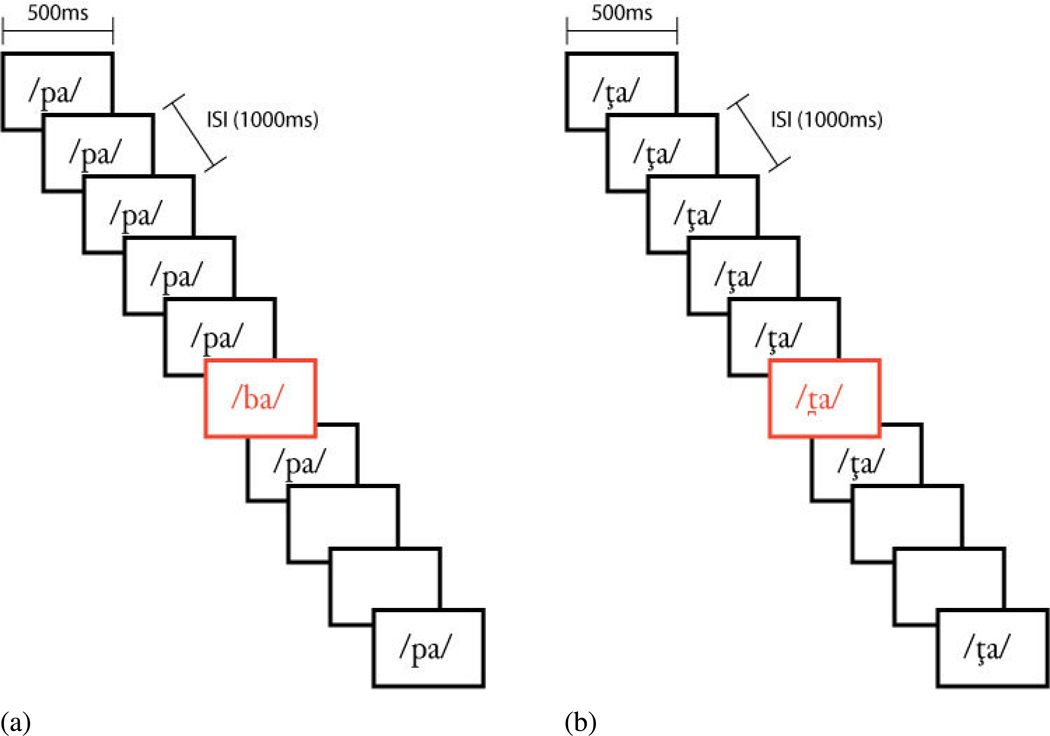

Ten run orders of 40 events (Standard, Target, Catch) were created for each linguistic condition (English and Hindi), with each of the run orders conforming to the above proportions of standard, target, and catch trials. One run order of 40 trials was created for the Tone condition conforming to the above proportions of stimulus and catch trials. Thus, there were a total of 10 English runs, 10 Hindi runs, and one Tone run. The events in each run were randomized with the following constraints: (1) a deviant event only was presented after a minimum of three standard events, and (2) no more than two catch events could occur sequentially. Each run lasted 60 s—each of the 40 stimuli were presented for 500 ms with an inter-stimulus interval (ISI) of 1000 ms. Each run was preceded by a variable lead-in time to avoid neural synchronization with stimuli. A 30 second break was given between runs (see Figure 1).
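The run-order constraints described above can be illustrated with a minimal sketch. This is not the authors' stimulus-presentation code: the function names (`is_valid_order`, `make_run_order`, `run_duration_s`) are hypothetical, simple rejection sampling is assumed as the randomization method, and constraint (1) is read as "at least three standard events since the previous deviant (or run start), with catch trials not resetting the count." Other readings of that constraint are possible.

```python
import random

def is_valid_order(events, min_standards=3, max_catch_run=2):
    """Check the two ordering constraints on a list of 'S', 'T', 'C' events."""
    standards_since_target = 0
    catch_run = 0
    for ev in events:
        if ev == "C":
            catch_run += 1
            if catch_run > max_catch_run:
                return False  # more than two catch events in a row
        else:
            catch_run = 0
            if ev == "S":
                standards_since_target += 1
            else:  # target ("deviant") event
                if standards_since_target < min_standards:
                    return False  # too few standards before this target
                standards_since_target = 0
    return True

def make_run_order(n_events=40, p_standard=0.60, p_target=0.10,
                   seed=None, max_tries=100_000):
    """Shuffle until an order satisfies the constraints (rejection sampling)."""
    rng = random.Random(seed)
    n_std = round(n_events * p_standard)   # 24 standard trials
    n_tgt = round(n_events * p_target)     # 4 target trials
    n_catch = n_events - n_std - n_tgt     # 12 catch trials
    events = ["S"] * n_std + ["T"] * n_tgt + ["C"] * n_catch
    for _ in range(max_tries):
        rng.shuffle(events)
        if is_valid_order(events):
            return list(events)
    raise RuntimeError("no valid run order found within max_tries")

def run_duration_s(n_events=40, stim_ms=500, isi_ms=1000):
    """Run length in seconds: each event occupies stimulus time plus ISI."""
    return n_events * (stim_ms + isi_ms) / 1000.0

if __name__ == "__main__":
    order = make_run_order(seed=1)
    print("".join(order))
    print(run_duration_s(), "seconds per run")
```

With 40 events per run, the stated proportions give 24 standard, 4 target, and 12 catch trials, and 40 events of 1500 ms each account for the 60 s run length.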

Figure 1.

Sample run orders for (a) Native linguistic and (b) Non-Native linguistic stimuli. Stimuli were presented for 500 ms with an inter-stimulus interval (ISI) of 1000 ms. [t̪a] = dental t and [ʈa] = retroflex t.

fNIRS Brain Imaging: Advantages over fMRI

fNIRS has several key advantages over fMRI. fNIRS has a remarkable sampling rate of neuronal activity at 10 times per second, as compared to fMRI’s sampling rate of roughly once every 2 seconds. Thus, fNIRS is regarded as a closer measure of neuronal activity than fMRI. Unlike fMRI, which yields a combined blood-oxygen-level-dependent (BOLD) measure (a ratio between oxygenated and deoxygenated hemoglobin), fNIRS yields separate measures of deoxygenated and oxygenated hemoglobin in “real time” during recording. fNIRS has excellent spatial resolution and it has better temporal resolution than fMRI (~ < 5 s hemodynamic response, HR). fNIRS’ depth of recording in the human cortex is less than fMRI’s, measuring about 3 to 4 cm deep, but this is well-suited for studying the brain’s higher cortical functions, such as language. Perhaps fNIRS’ greatest advantage over fMRI for cognitive neuroscience research with humans is that it is very small (the size of a desktop computer), portable, and virtually silent. This latter feature makes it outstanding for testing natural language processing, as the ambient loud auditory pings and bangs typical of the fMRI scanner are nonexistent. Another important advantage over fMRI is that fNIRS is especially participant/child friendly (adults and children sit normally in a comfortable chair, and babies can be studied while seated on their mother’s lap), and, crucially, it tolerates moderate movement. This extraordinary latter feature makes it possible to study the full complex of human language processing, from perceiving to, for the first time, producing language (be it spoken or signed), and, thus, fNIRS has revolutionized the possible studies of language that can now be conducted, as well as the ages of the populations (from babies, and across the life span). For example, in babies, for the first time, it is possible to follow specific tracts of brain tissue and neural systems known to participate in natural language processing over time.
In summary, it is the fNIRS’ capacity to provide information on changes in blood oxygen level densities/BOLD (including total, oxygenated, and deoxygenated hemodynamic volumes), its rapid sampling rate, relative silence, higher motion tolerance than other systems, and participant friendly set-up, which have contributed to the rapidly growing use of fNIRS as one of today’s leading brain imaging technologies.

Apparatus and Procedure

To record the hemodynamic response we used a Hitachi ETG-4000 with 24 channels, acquiring data at 10 Hz (see Figure 2a). The lasers were factory set to 690 and 830 nm. The 10 lasers and 8 detectors were segregated into two 3 × 3 arrays corresponding to 18 probes (9 probes per array; see Figure 2b). Once the participant was comfortably seated, one array was placed on each side of the participant’s head. Positioning of the array was accomplished using the 10–20 system (Jasper, 1958) to maximally overlay regions classically involved in language, verbal, and working memory areas in the left hemisphere as well as their homologues in the right hemisphere (for additional details, and prior fMRI-fNIRS co-registration procedures to establish neuroanatomical precision of probe placements, see Kovelman, Shalinsky, Berens & Petitto, 2008; Kovelman, Shalinsky, White, Schmitt, Berens, Paymer, & Petitto, 2008).

Figure 2.

Example of fNIRS placement. (a) Key locations in Jasper (1958) 10–20 system. The detector in the lowest row of optodes was placed over T3/T4; (b) Infant with terry-cloth headband. Probe arrays were placed over left-hemisphere language areas and their right-hemisphere homologues using Jasper’s 10–20 system according to the criteria shown. The red circles represent the laser emitters. The blue circles represent the fNIRS detectors. The x-s represent the channels.

Prior to recording, every channel was tested for optimal connectivity (signal/noise ratio) using Hitachi fNIRS inbuilt software. Digital photographs were also taken of the positioning of the probe arrays on the baby’s head prior to and after the recording session to ensure that probes remained in their identical and anatomically correct pre-testing placement. The Hitachi system collects MPEG video recordings of participants simultaneously with brain recording, synchronized with the testing session. Among other things, this feature makes it possible to identify, during offline analysis, any larger movement artifacts that may have affected a given channel.

After the recording session, data were exported and analyzed using Matlab (The Mathworks Inc.). Conversion of the raw data to hemoglobin values was accomplished in two steps. Under the assumption that scattering is constant over the path length, we first calculated the attenuation for each wavelength by comparing the optical density of light intensity during the task to the calculated baseline of the signal. We then used the attenuation values for each wavelength and sampled time points to solve the modified Beer–Lambert equation to convert the wavelength data to a meaningful oxygenated and deoxygenated hemoglobin response (HbO and HbR, respectively). The HbO values were used in all subsequent analyses (for detailed methods see especially, Kovelman, Shalinsky, Berens & Petitto, 2008; Kovelman, White, Schmitt, Berens & Petitto, 2009; Shalinsky, Kovelman, Berens & Petitto, 2009).
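The two-step conversion described above can be sketched in a few lines. This is a minimal illustration and not the authors' Matlab pipeline: the function names, the extinction coefficients, the source-detector distance, and the differential pathlength factor are all placeholder assumptions chosen only so that the example runs. It also uses a single pathlength for both wavelengths, which is a simplification.

```python
import math

# Placeholder extinction coefficients (per mM per cm) as (HbO, HbR) pairs.
# Illustrative values only; they are NOT taken from the paper.
EXTINCTION = {
    690: (0.35, 2.10),
    830: (1.00, 0.78),
}


def attenuation(intensity, baseline_intensity):
    """Step 1: change in optical density relative to baseline, -log10(I / I0)."""
    return -math.log10(intensity / baseline_intensity)


def mbll(delta_od_690, delta_od_830, distance_cm=3.0, dpf=6.0):
    """Step 2: solve the 2x2 modified Beer-Lambert system.

    delta_od_w = (e_HbO_w * dHbO + e_HbR_w * dHbR) * distance_cm * dpf
    Returns (dHbO, dHbR) in mM. distance_cm and dpf are assumed values.
    """
    path = distance_cm * dpf
    a, b = EXTINCTION[690]
    c, d = EXTINCTION[830]
    det = a * d - b * c
    y1 = delta_od_690 / path
    y2 = delta_od_830 / path
    d_hbo = (d * y1 - b * y2) / det
    d_hbr = (a * y2 - c * y1) / det
    return d_hbo, d_hbr
```

Calling `mbll(attenuation(i690, i690_base), attenuation(i830, i830_base))` for each sampled time point would give one HbO and one HbR value per sample; only the HbO series would then be carried forward, as in the analyses reported here.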

Sessions were ended if babies cried for more than 2 sequential runs or demonstrated irrecoverable irritation. The average brain recording session lasted 16 minutes or 16 runs. A two-sample t-test confirmed that monolingual and bilingual babies completed a similar number of runs (t(59) = 1.39, p > .05).
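The reported degrees of freedom, t(59), are what a pooled-variance two-sample t-test yields for 61 babies split into two groups (n1 + n2 − 2). The sketch below shows that computation under the assumption that the pooled variant was used; the function name and any run counts passed to it are hypothetical, since per-group data are not given here.

```python
import math
import statistics


def pooled_t_test(group_a, group_b):
    """Return (t, df) for a two-sample t-test with pooled variance."""
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    # Pooled variance weights each group's sample variance by its own df.
    pooled_var = (
        (n_a - 1) * statistics.variance(group_a)
        + (n_b - 1) * statistics.variance(group_b)
    ) / df
    standard_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (statistics.fmean(group_a) - statistics.fmean(group_b)) / standard_error
    return t, df
```

With one list of completed-run counts per group, the returned `df` would be 59 whenever the two lists together contain 61 babies.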

Motion artifact analyses for the fNIRS brain recordings were carefully and consistently conducted for each baby’s data (all babies, all data) through the identification of movement “spikes” in their brain recordings (based on a spike’s width and timing). Spikes are defined as any change greater than or equal to 2 standard deviations from the baseline recording to the peak of a neural recording occurring over an interval of 35 consecutive recordings (hemodynamic samples) or less (i.e., 3.5 seconds; thus, if a spike occurred due to motion, it tended to take an exceedingly small amount of time from base to peak back to base). Once identified in our data, spikes were removed so as not to inflate unnaturally our event-related brain recordings of hemodynamic change in the babies’ brains during the experimental run. Removal involved first modeling the spikes using custom-specified Gaussian (bell curve) functions that fit the general contours of the spikes. As a rule, if the number of spikes was less than 3% of the total number of brain recording samples for a child, then no data for that baby were removed. Our inclusion criterion was stringent: If a baby had a single run constituting a total of 1200 samples (120 seconds), those data were considered usable if the total number of spikes was under 36 (.03 × 1200 = 36). In the end, the average number of channels considered too “messy” for inclusion across all babies (all data) was remarkably low and, thus, exceedingly little recorded data were removed from consideration. To be clear, less than 5% of all brain recordings for all of the 61 babies combined were removed. This is a remarkably low amount as compared with the approximately 22%, 28%, and even higher data removal and/or dropout through attrition routinely reported in other infant studies (Houston, Horn, Qi, Ting & Gao, 2007; Slaughter & Suddendorf, 2007; Werker & Gervain, in press, respectively).
This is due both to an inherent advantage that the fNIRS system has regarding its tolerance for movement and to the fact that our experimental paradigm ensured that the babies were and stayed “on task.”
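The spike definition and the 3% inclusion rule can be illustrated with a short sketch. It reflects one possible reading of the criteria (an excursion of at least 2 baseline standard deviations that lasts 35 samples or fewer) and omits the Gaussian modeling step; the function names and defaults are assumptions and do not come from the authors' code.

```python
import statistics


def count_spikes(signal, baseline, max_width=35, n_sd=2.0):
    """Count excursions >= n_sd baseline SDs that last <= max_width samples."""
    mean = statistics.fmean(baseline)
    sd = statistics.pstdev(baseline)
    if sd == 0:
        raise ValueError("baseline has zero variance; threshold is undefined")
    threshold = n_sd * sd
    spikes = 0
    i = 0
    while i < len(signal):
        if abs(signal[i] - mean) >= threshold:
            start = i
            # Walk to the end of this supra-threshold excursion.
            while i < len(signal) and abs(signal[i] - mean) >= threshold:
                i += 1
            # Only brief excursions are treated as motion spikes.
            if i - start <= max_width:
                spikes += 1
        else:
            i += 1
    return spikes


def run_is_usable(n_spikes, n_samples, max_percent=3):
    """Inclusion rule: spike count must be under max_percent of the samples."""
    # Integer arithmetic avoids floating-point edge cases at the boundary.
    return n_spikes * 100 < max_percent * n_samples
```

For a 1200-sample run, `run_is_usable(35, 1200)` is true and `run_is_usable(36, 1200)` is false, matching the threshold of 36 stated above.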

Identifying Regions of Interest (ROI) for Further Analyses

In the 3 × 3 recording array, channels were the area between adjacent lasers and detectors, as is hardwired into the ETG-4000 system. Each channel had two components, attenuation values from the 690 nm and 830 nm lasers. As above, attenuation values were converted to deoxy- and oxy-Hb values using the Modified Beer–Lambert equation (Shalinsky, Kovelman, Berens, and Petitto, 2009). Once converted from laser attenuation, channels referred to the deoxy- and oxy-Hb changes in the regions between the laser and detectors. We performed a PCA to identify clusters of channels with robust activity in the left hemisphere. From these PCA results, we matched these channels to their left hemisphere brain regions and then identified their right hemisphere homologues. In turn, this helped us to identify our ROIs. Our ROIs included the brain’s classic language processing areas, especially channels maximally overlaying the LIFC (BA 45/47; Broca’s area 44/45) and the STG (BA 21/22). As an extra “safety measure” in our ROI identification process, our final ROIs were selected relative to a control site for which neural activity to linguistic stimuli would not be predicted. Following this, the same channels were grouped (selected) for further analysis for every participant, as each channel was overlaying the same brain area for every participant, as established by 10–20 probe placement.

Infant Behavior Coding

Two trained coders, blind to the experimental hypotheses, observed and coded a random twenty percent of the baby videos to ensure that babies were staying “on task” during experimental testing runs (not sleeping, alert, and neither distracted nor distressed). The videos began and ended concurrently with the measurements from each experimental testing run recorded with fNIRS. In particular, coders coded for infant alert orientation towards the visually compelling fish screen (e.g., head turns, head nods, looking). Coders identified that all babies were awake, alert, and “on task” during entire experimental testing runs. Percent agreement was 81%. This resulted in a Fleiss Kappa indicating substantial agreement among raters (k=1; Landis & Koch, 1977). The fact that all babies were, and stayed, “on task” was also corroborated by our exceedingly small number of motion artifacts observed in the present data.
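For readers less familiar with agreement statistics, the sketch below shows how percent agreement and a chance-corrected kappa relate. It uses Cohen's kappa, the two-rater special case, rather than the Fleiss formulation named above. The function names and the "on"/"off" labels are hypothetical, and the descriptive bands follow Landis and Koch (1977).

```python
from collections import Counter


def percent_agreement(rater_1, rater_2):
    """Proportion of items on which the two raters gave the same code."""
    return sum(a == b for a, b in zip(rater_1, rater_2)) / len(rater_1)


def cohen_kappa(rater_1, rater_2):
    """Chance-corrected agreement for two raters over the same items."""
    n = len(rater_1)
    observed = percent_agreement(rater_1, rater_2)
    counts_1, counts_2 = Counter(rater_1), Counter(rater_2)
    labels = set(counts_1) | set(counts_2)
    # Expected agreement if the raters coded independently at their own base rates.
    expected = sum(counts_1[k] * counts_2[k] for k in labels) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when both raters use a single label")
    return (observed - expected) / (1 - expected)


def landis_koch_label(kappa):
    """Descriptive band from Landis & Koch (1977)."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"), (0.60, "moderate"),
                         (0.80, "substantial"), (1.00, "almost perfect")]:
        if kappa <= upper:
            return label
    raise ValueError("kappa cannot exceed 1")
```

Because kappa discounts the agreement expected by chance, it is generally lower than raw percent agreement whenever the raters disagree on at least some items.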

Results

Whole-Brain All Channel Analyses

We first began with whole brain analyses and asked whether there were differences or similarities in linguistic versus nonlinguistic processing in the bilingual and the monolingual babies, as well as whether performance differed depending on the babies’ ages (younger vs older babies). To do this, we performed a 3 × 2 × 2 × 2 Language (Native, Non-Native, Tone) × Group (Bilingual, Monolingual) × Age (Younger, Older babies) × Hemisphere (Right, Left) Mixed Model ANOVA on the “standard” phonetic stimuli using the average of the 12 channels from each hemisphere (24 channels in total).

Language versus Tone. All babies showed greater activation to language stimuli relative to nonlinguistic Tones. The ANOVA revealed a main effect of Language (Native, Non-Native, and Tone; F(1, 51) = 16.12, p < .001). Post-hoc analyses using the Tukey HSD method revealed no significant difference between the Native and Non-Native language conditions (Z = 0.93, p > .05), while the Tone condition differed significantly from both the Native and Non-Native language conditions (Native > Tone, Z = −3.08, p < .01 and Non-Native > Tone, Z = −4.00, p < .001). Hemisphere. This whole-brain, all channel comparison did not reveal any significant difference in activation between the left and right hemispheres across participants (but see STG and IFC sections below). Group & Age. The ANOVA showed no main effect of Group (Bilingual vs. Monolingual; F(1, 51) = 0.00, p > .05), no main effect of Age (Young babies vs. Older babies; F(1, 51) = 0.02, p > .05), and no main effect of Hemisphere (Left vs. Right; F(1, 51) = 0.00, p > .05).

Region of Interest Analyses

Mean peak activation values (and SD) for babies by Group (bilingual, monolingual), Age (younger vs older), and Language type (Native, Non-Native, Tone) in the left STG and IFC can be found in Table 1. To understand how ROIs were selected, see Methods, section “Identifying Regions of Interest (ROI) for Further Analyses.”

Table 1.

Mean peak activation values (and SD) for babies by Group (bilingual, monolingual), Age (younger vs older), and Language type (Native, Non-Native, Tone) in the left STG and IFC.

| Group | Age | Language | Left STG, mean (SD) | Left IFC, mean (SD) |
|---|---|---|---|---|
| Bilingual | Younger | Native | 0.0205 (0.0161) | 0.0190 (0.0266) |
| Bilingual | Younger | Non-Native | 0.0431 (0.0420) | 0.0130 (0.0174) |
| Bilingual | Younger | Tone | 0.0104 (0.0092) | 0.0085 (0.0091) |
| Bilingual | Older | Native | 0.0234 (0.0136) | 0.0150 (0.0130) |
| Bilingual | Older | Non-Native | 0.0327 (0.0213) | 0.0194 (0.0254) |
| Bilingual | Older | Tone | 0.0105 (0.0056) | 0.0074 (0.0060) |
| Monolingual | Younger | Native | 0.0193 (0.0134) | 0.0163 (0.0134) |
| Monolingual | Younger | Non-Native | 0.0272 (0.0244) | 0.0195 (0.0155) |
| Monolingual | Younger | Tone | 0.0123 (0.0098) | 0.0096 (0.0081) |
| Monolingual | Older | Native | 0.0234 (0.0122) | 0.0170 (0.0152) |
| Monolingual | Older | Non-Native | 0.0184 (0.0133) | 0.0173 (0.0130) |
| Monolingual | Older | Tone | 0.0113 (0.0081) | 0.0123 (0.0090) |

Superior Temporal Gyrus

Next, we asked whether there were similarities and differences in STG activations bilaterally for linguistic versus nonlinguistic processing in bilingual and monolingual babies, as well as whether performance differed depending on the babies’ ages (younger vs. older babies). To do this, we performed a 3 × 2 × 2 × 2 (Language × Group × Age × Hemisphere) Mixed Model ANOVA on the “standard” phonetic stimuli on the average oxy-Hb peak values from the STG channels bilaterally.

STG: Language vs Tone. All babies processed all language stimuli differently from Tones in the STG (a classic language area associated with the processing of the linguistic-phonological level of language organization in adults). All babies showed greater activation to linguistic as opposed to nonlinguistic stimuli. The ANOVA revealed a significant main effect of Language (Native, Non-Native, and Tone; F(1, 51) = 20.20, p < .001). Post-hoc analyses using the Tukey HSD method revealed no significant difference between the Native and Non-Native language conditions (Z = 1.31, p > .05), while the Tone condition differed significantly from the Non-Native language condition (Non-Native > Tone, Z = −3.15, p < .01) and approached significance for the Native language condition (Native > Tone, Z = −1.84, p < .06). Hemisphere. In addition, all babies showed differences in activation between the right and left hemispheres (Right > Left, F(1, 51) = 3.77, p = .05), with these differences being most apparent in the monolingual babies (Right > Left, F(1, 51) = 6.55, p < .05).

Left STG: Group and Age Effects. We then asked whether there were similarities and differences in activation for linguistic information in the bilingual and monolingual babies. We also asked whether there were differences between the younger and older babies regarding their recruitment of the left STG. To do this, we computed a 2 × 2 × 2 (Group × Age × Language) Mixed Model ANOVA on the “standard” (> deviant) phonetic stimuli on the average oxy-Hb peak values for the Native and Non-Native language conditions in the left hemisphere STG channels.

The bilingual and monolingual babies, as well as the younger and older babies, all showed similar activations in the left STG, suggesting that left STG activity comes in early and remains stable across infancy. The ANOVA revealed no significant main effect of Group (F(1, 51) = 0.56, p > .05), no significant main effect of Age (F(1, 51) = 0.04, p > .05), and no significant main effect of Language (F(1, 51) = 0.03, p > .05). In addition, there were no significant Group × Age (F(1, 51) = 0.27, p > .05), Group × Language (F(1, 51) = 2.87, p > .05), Age × Language (F(1, 51) = 2.34, p > .05), and Group × Age × Language (F(1, 51) = 0.00, p > .05) interactions.

Inferior Frontal Cortex

As with the STG, we first asked whether there were similarities and differences in IFC activations bilaterally for linguistic versus nonlinguistic processing in bilingual and monolingual babies, as well as whether performance differed depending on the babies’ ages (younger vs. older babies). To do this, we performed a 3 × 2 × 2 × 2 (Language × Group × Age × Hemisphere) Mixed Model ANOVA on the “standard” phonetic stimuli on the average oxy-Hb peak values of the IFC channels from both hemispheres.

IFC: Language vs Tone. All babies processed the Non-Native language stimuli differently from Tones in the IFC. The ANOVA revealed a significant main effect of Language (Native, Non-Native, and Tone; F(1, 51) = 16.24, p < .001). Post-hoc analyses using the Tukey HSD method revealed no significant difference between the Native and Non-Native language conditions (Z = 0.76, p > .05), or between the Native language and Tone conditions (Z = −1.33, p > .05), while the Tone condition differed significantly from the Non-Native language condition (Non-Native > Tone, Z = −2.09, p < .05). Hemisphere. In addition, the IFC in the right and left hemispheres responded differently depending on whether the babies were younger or older. The left IFC, a classic language processing area associated with the search and retrieval of information about meanings (lexical, morphological, as well as syntactic/phonological, motor sequences/planning; see details above), showed similar levels of activation for both age groups. Importantly, and by contrast, the right IFC showed a significant decrease in the amount of activation between the younger and older babies, thus providing a remarkable first-time convergence of evidence between brain and linguistic behavior. To be clear, the decrease in the amount of right IFC activation in the older babies indicates a developmental change/shift in lateral dominance over time in early life (from ~4 to ~12 months), specifically from the right to the left hemisphere classically associated with language processing, at precisely the age when all babies begin robust production of their first words (~12 months). The ANOVA revealed an Age × Hemisphere interaction that approached significance (F(1, 51) = 2.98, p < .08; see Figure 3).

Figure 3.

IFC activations for Young and Old babies by hemisphere. The left IFC shows similar levels of activation for both age groups. The right IFC shows a decrease in activation in the older babies’ brains (from right IFC to left IFC) that corresponds in time to the universal appearance of babies’ major first-word language milestone, and suggests that this milestone may be mediated by the left IFC (as is observed in adults).

Left IFC: Group and Age Effects. We then asked whether there were similarities and differences in activation for linguistic information for the bilingual and monolingual babies, as well as whether performance differed depending on the babies’ ages (younger vs. older babies) in the LIFC (as above, a classic language area). To do this, we computed a 2 × 2 × 2 (Language × Group × Age) Mixed Model ANOVA on the “standard” (> deviant) phonetic stimuli on the average oxy-Hb peak values for the Native and Non-Native language conditions in the left hemisphere IFC channels.

All babies showed differences in activation in the left IFC between Native and Non-Native languages. However, the bilingual babies had more similar activity levels for the Native and Non-Native phonetic contrasts than the monolingual babies, who showed greater left IFC activations to their native language, thereby providing another first-time, remarkable convergence of evidence between brain and linguistic behavior, in that behavioral data has shown that older bilingual babies’ phonetic discrimination capacities remain “open” at the time when older monolingual babies’ phonetic discrimination capacities “close” to only their native language phonetic contrasts (more below in Discussion; see Figure 4). As revealed in the ANOVA, there was a significant main effect of Language (Native vs. Non-Native; F(1, 38) = 4.48, p < .05) and a Group × Language interaction (F(1, 38) = 4.48, p < .05). In addition, there was a trend towards differences in left IFC activation between the bilingual and monolingual babies in the younger and older age groups. Young bilingual and monolingual babies showed similar activation levels in the left IFC. However, older bilingual babies showed greater left IFC activations than their monolingual counterparts. The ANOVA revealed a Group × Age interaction that approached significance (F(1, 38) = 3.32, p < .08).

Figure 4.

Average difference between standard and target peak activations for bilingual and monolingual babies by age in the left IFC and left STG for the Native and Non-Native language conditions. Note that the bilingual and monolingual babies show different patterns of activation in the left IFC. The bilingual babies had more similar activity levels for the Native and Non-Native phonetic contrasts than the monolingual babies, who showed greater left IFC activations to their native language. This pattern corresponds to behavioral data showing that older bilingual babies’ phonetic discrimination capacities remain “open” at the time when older monolingual babies’ phonetic discrimination capacities “close” to only their native language phonetic contrasts.

Discussion

This study began with our commitment to advance understanding of a decades-old question at the heart of early human brain and language development. What processes mediate a young baby’s capacity to discover the finite set of phonetic units of their native language from the infinitely varying stream of information around them? But we asked it here with a unique theoretical twist. We conducted analyses of the brains of bilingual babies, as compared to monolingual babies, while processing (real) linguistic Native phonetic units, linguistic Non-Native (“foreign” language) phonetic units to which the babies had not been exposed, and nonlinguistic Tones. Our bilingual babies provided a new test of the discipline’s competing hypotheses that previous behavioral studies, alone, could not adjudicate. We asked whether phonetic discrimination in the bilingual baby suffers as a consequence of decreased (or unequal) frequency of language exposure (decreased “time on task” in one or the other language)—as predicted from the auditory-general hypothesis? Or, would phonetic discrimination in bilingual babies follow a similar developmental time-course to monolingual babies as long as the young bilingual baby receives regular/systematic input in its two native languages—as predicted from the language-specific hypothesis? To further test these two behavioral hypotheses, we asked whether specific classic language processing tissue in the babies’ brains, such as the STG, showed differential processing of linguistic versus nonlinguistic information (as, for example, according to the auditory-general hypothesis, both should be treated equally as general auditory information and, thus, it should not)? Do baby brains show multiple sites of neural activation with functional specification for language, and are these the same as the classic language processing sites observed in adults? 
We also asked specifically whether bilingual and monolingual babies recruited the same or different neural tissue during phonetic processing over the first year of life. Finally, does neural recruitment for linguistic (phonetic) processing change over time in a similar or dissimilar manner across the young bilingual and monolingual babies?

Our study of the neural correlates of phonetic processing in bilingual and monolingual babies revealed that the brains of all babies showed multiple sites of neural activation with functional specification for language from an early age, and that these sites were the same classic language processing tissue observed in adults. There were important similarities across the groups, an intriguing difference in the older bilingual babies as compared to older monolingual babies on one dimension (below)—which we interpret as affording an advantage to bilingual babies—and three findings having especially exciting implications for the biological foundations of early language acquisition in our species.

Superior Temporal Gyrus

The first finding with compelling implications for the biological foundations of early language acquisition involves tissue in the human brain that may facilitate the newborn baby’s discovery of the phonological level of natural language organization, specifically, the left STG. The STG is widely regarded as being one important component, among the total and varied complex of human brain areas, which makes up “classic language processing brain areas” in adults, and is now universally understood to play a role in the processing of phonetic (phonological) information in adults across diverse languages including natural signed languages (Petitto, Zatorre, et al., 2000).

Here we observed that all babies (bilingual and monolingual), across all ages (“younger” babies around 4 months and “older” babies around 12 months) demonstrated robust neural activation in the left hemisphere’s STG in response to Native and Non-Native phonetic units. There were no significant activation differences between the younger and older babies, be they bilingual or monolingual. The functional dedication of this left STG brain area does not appear to “develop” in the usual sense. At least across these age groups, one typical developmental sequence from diffuse to specialized neural dedication for function was not observed. Instead, this particular STG brain tissue, important for processing phonetic (phonological) information in adults, was also observed to be activated in the presence of the appropriate linguistic phonetic (phonological) information in all babies, and from the earliest ages. The early age of the STG’s participation in phonetic processing, the fact that this does not appear to change significantly over time, and the resiliency with which this STG tissue is associated with this particular language/phonological function irrespective of the baby receiving two or one input languages, together suggest that the STG may be biologically endowed brain tissue with peaked sensitivity to rhythmic-temporal, alternating and maximally contrasting, units of around the size and temporal duration of phonetic-syllabic units found in mono- and/or bi-syllabic words in human language.

Elsewhere, Petitto has hypothesized that the left STG is uniquely tuned to be responsive to rhythmic-temporal units, in alternating and maximal contrast of approximately 1–1.5 Hz (Petitto & Marentette, 1991; Petitto, Zatorre, et al., 2000; Petitto, Holowka, Sergio, & Ostry, 2001; Petitto, Holowka, Sergio, Levy, & Ostry, 2004; Petitto, 2005, 2007, 2009). Having such brain tissue would biologically predispose the baby to find salient units in the constantly varying linguistic stream with this particular rhythmic-temporal structure. Petitto has suggested that phonetic-syllabic units, as they are strung together in natural human language, have this rhythmic-temporal alternating and maximally contrasting structure, and, thus, have peaked salience for the baby. The form, itself, initially has salience for the baby irrespective of its meaning, and permits segmentation of the constantly varying linguistic stream in the baby’s environment. The initial sensitivity to this form, and its presence in the input, initiates a synchronicity: a kind of synchronized oscillation between the baby’s STG structure sensitivity and the presence of the structure in the input linguistic stream. Like a lock and key, the “fit” initiates a synchronization and segmentation of the stream, and then a cascade of other processes like the grouping (that is, the clustering or categorization) of “like” phonetic-syllabic units, which, in turn, permits the baby’s tacit statistical analyses over the units, from which it can derive the rules and regularities of the structure of its native languages.

On this view, the initial peaked sensitivity to “form” (rhythmic-temporal bundles in alternating maximal contrast of ~1–1.5 Hz) permits the baby to “find” units in, or parse, the complex input stream, en route to learning meanings. This initial peaked sensitivity to “form” may provide insight into how babies can “find,” for example, the word baby in the linguistic stream, That’s the baby’s bottle! so as to begin to solve the problem of reference and learn word meanings (cf. Petitto, 2005).

The observations here about the left STG suggest two new hypotheses for future exploration. First, they suggest that the STG is one of many important components in nature’s complex “toolkit” to aid the child in acquiring human language in advance of having full or complete knowledge of language (its meanings, sentential rules, or full or complete social and communicative significance; cf. Petitto, 2005, 2007). Second, it is further hypothesized that the left STG may also be the brain area that makes possible the young bilingual’s achievement of similar linguistic milestones across their two languages, and similar linguistic milestones to monolinguals. Moreover, the young baby’s hypothesized sensitivity to form (rhythmic-temporal bundles in alternating maximal contrast of ~1–1.5 Hz) may provide insight into the question, why aren’t young bilingual babies confused? How do they differentiate their two languages? When a young baby is being exposed to, for example, French and English, why don’t they treat the two different input languages as if they are getting only one fused linguistic system? The left STG sensitivity hypothesized here may “give” the baby the basic “cut” between L1 and L2 lexicon (and beyond), blind to meaning, merely on the basis of raw form (rhythmic-temporal) differences between the languages. This may indeed be the brain area mediating the behavioral observation that young bilingual babies (from around ages 11 months and beyond) produce their first word (and subsequent language) in, for example, French, and their first word in English, appropriately to the French speaker and appropriately to the English speaker right from the start (even when the speakers are unfamiliar experimenters, and not known family members, e.g., Petitto et al., 2001; Holowka, Brosseau-Lapré & Petitto, 2002; Petitto & Holowka, 2002).

Left Inferior Frontal Cortex

A second finding with implications for the biological foundations of early language acquisition concerns similarities observed in bilingual and monolingual babies’ brain tissue involving the LIFC, which includes Broca’s area. The region is judged to constitute important parts among the total and varied complex of human brain areas that make up “classic” language processing tissue in adults. Recall that in adults, the anterior portion of the LIFC is involved in the search and retrieval of meanings, while the posterior LIFC mediates motor planning, sequencing, and patterning essential to language production, language syntax, and aspects of phonological processing (Zatorre et al., 1996; Burton, 2001; Burton & Small, 2006).

With the above in mind, our observations about the babies’ left IFC activation provide fresh insight into the relationship between brain and behavior in early language acquisition. Across all bilingual and monolingual babies, we observed an increase in neural activation in the left IFC in the older babies as compared with the younger babies. Importantly, and by contrast, the right IFC showed a significant decrease in the amount of activation in the older babies as compared with the younger babies, thus providing converging evidence linking brain and linguistic behavior. That Broca’s area shows more robust activation in the older babies (~12 months) captures our attention because this is when we first see babies’ behavioral explosion into the first-word language milestone, thereby providing corroborating support for the role that the left IFC plays in word meanings and the production of words. To the best of our knowledge, this is the first time a focal brain correlate (with change in activation in specific brain tissue) has been observed that may be mediating the appearance of a universal behavioral milestone in early human language acquisition.

A third finding with implications for the biological foundations of early language acquisition concerns differences between our bilingual and monolingual babies and provides insight into the relations among brain, behavior, and experience in early language acquisition. Our older bilingual babies’ LIFC showed robust activation to both the Native and the Non-Native phonetic contrasts. Our older monolingual babies’ LIFC, however, showed robust activation to the Native phonetic contrasts only, and not to the Non-Native phonetic contrasts. Thus, while the bilingual and monolingual babies showed similar LIFC activation when they were younger (though less robust than observed in the older babies), the brains of the older bilingual and monolingual babies are different in this way. Of note, our brain data are corroborated by the behavioral data reported by the few research teams who have, to date, conducted behavioral studies of bilingual babies (see e.g., Burns, Yoshida, Hill, & Werker, 2007). Yet this specific behavioral difference has been described as being “deviant,” a “language delay,” in early bilingual language acquisition (Bosch & Sebastián-Gallés, 2001, 2003; Kuhl et al., 2006; Kuhl, 2007; Kuhl & Rivera-Gaxiola, 2008; Sebastián-Gallés & Bosch, 2009). We are puzzled by this dramatic conclusion.

We first consider the reasoning that may underlie the “deviant” conclusion in the literature. The heart of the issue is the observation that older bilingual babies appear to remain sensitive to Non-Native phonetic contrasts longer than older monolingual babies (though no research has yet been done to determine how long this window of sensitivity remains “open”). Given that monolingual babies begin with an “open” and universal capacity to discriminate all phonetic units (Time 1, under ~10 months), and given that this later becomes “closed” (Time 2, ~10–14 months, with sensitivity to phonetic contrasts now being restricted only to the one native language to which they were exposed), bilingual babies are said to be “deviant” and “delayed.” This is because, by contrast, they appear to go from being “open” (Time 1) to being “open” (Time 2). This general conclusion is further supported as follows: Because young monolinguals’ movement from “open” to “closed” indicates a positive stabilization of their phonological repertoire just around the time when they need it most (the first-word milestone), and because this stabilization has been correlated with other positive indices of healthy language growth (phonological segmentation, vocabulary development, sentence complexity at 24 months, and mean length of utterance at 30 months; see Kuhl & Rivera-Gaxiola, 2008), any difference from this monolingual baseline is deemed “atypical development,” language delayed, and/or language deviant. Indeed, being monolingual is the implicit “constant,” the “normal” base against which all is compared.

Is “difference” really “deviant?” We think not. First, on logical grounds alone, in order for the above direction of comparison to be valid (i.e., monolingualism as “constant,” bilingualism as deviant), we would have to find evidence that being monolingual is biologically (neurally and evolutionarily) privileged in the human brain/species over being bilingual. Such evidence has not been forthcoming; instead, their comparability has been repeatedly demonstrated (see Kovelman, Baker & Petitto, 2008a, b).

Second, while overall similarity would be predicted from the comparison of two similar biological systems, identity is not. Similarity is not identity. We know this well from other comparative language studies involving, for example, chimpanzees (e.g., Cantalupo & Hopkins, 2010; Seidenberg and Petitto, 1987). Most germane, we know this from comparative brain studies of signed and spoken languages. While signed and spoken languages engage highly similar classic-language brain systems and neural tissue irrespective of modality, some differences between the input modalities do yield predicted modality-related differences in neural activation (e.g., Petitto, Zatorre et al., 2000; Emmorey, Grabowski, McCullough, Ponto, Hichwa, & Damasio, 2005; Capek, Grossi, Newman, McBurney, Corina, Roeder & Neville, 2009). The differences are appropriate, expected, and “good” because they should be there if the human brain were fully functioning and intact.

Third, and following from above, while it is held that the monolingual baby’s loss of its universal phonetic discrimination capacities is a good thing, it could equally be argued that this is a bad thing. An equally supportable position is that the bilingual baby’s sustained (more open, longer) phonetic discrimination capacities are good. They can afford the young bilingual child increased phonological and language awareness, meta-language and pragmatic awareness, as well as cognitive benefits (and, more upstream, stronger reading, language, and cognitive advantages), thereby rendering the monolingual child disadvantaged by comparison. This is precisely what has been found (for reading, phonological, and language advantages, see Kovelman, Baker & Petitto, 2008a; for cognitive advantages, see Ben-Zeev, 1977; Baker, Kovelman, Bialystok, & Petitto, 2003; Bialystok & Craik, 2010). Indeed, while monolingual babies’ perceptual systems have attenuated down to only one language, bilingual babies’ more open perceptual/neural systems appear to bolster computational flexibility/agility across a range of linguistic and cognitive capacities. Ironically, this points to benefits that the monolingual baby could have had if only it had the good fortune of bilingual (or multiple) language exposure. Taken together, comparative analyses of early bilingual and monolingual language processing present new insights into early neural plasticity, as well as the tangible impact that the environment, and epigenetic processes, can have on changes to human brain tissue and the brain’s perceptual systems. They further suggest the following new testable hypothesis.

Perceptual Wedge Hypothesis

Bilingual and monolingual babies showed activation in the same classic language areas as adults when the babies were processing linguistic phonetic stimuli. The very early occurrence of this brain specialization for language teaches us that aspects of human language processing are under biological control (e.g., early segmental/phonological processing; see also Petitto and Marentette, 1991; Petitto, Zatorre, et al., 2000; Petitto, 2005, 2007, 2009). At the same time, we witnessed that environmental experience matters: the number of input languages in the baby’s environment did change the human brain’s perceptual attenuation and neural processes. One language input resulted in sensitivity to one language. Two input languages altered the nature of this perceptual attenuation and these neural processes, making them more expansive. We therefore hypothesize that the number of input languages to which a young baby is exposed can serve as a kind of “perceptual wedge.” Like a physical wedge that holds open a pair of powerfully closing doors, exposure to more than one language holds open the closing “doors” of the human baby’s typical developmental perceptual attenuation processes, keeping language sensitivity open for longer (witness the older bilingual babies’ more “open” sensitivity to Non-Native phonetic contrasts as compared to monolingual babies). We cast the beacon on the “perceptual” side of the wedge in name only, so as to capture the concept that babies once had a perceptual/discrimination capacity that then becomes greatly attenuated because they were not exposed to the relevant stimuli in the environment. However, the processes we hope to encompass with this label extend to central neural language processing and cognitive computational resources.

To be clear, it is hypothesized that the “wedge” is the increased neural and computational demands of multiple language exposure and processing, which, in turn, strengthen language analyses (phonological, morphological, syntactic, etc.), leaving linguistic processing open and agile in general, that is, across two or more language systems. Why “more language systems?” Previous research teaches us that one input language (monolingualism) renders perceptual attenuation down to one language. But it is hypothesized here that two input languages (bilingualism) do not perceptually attenuate down to two languages. Our experimental stimuli were not restricted to the babies’ other native language, but were drawn from a third language, Hindi. This suggests that bilingual language exposure primes the brain’s language processing neural systems for multiple language analyses (perhaps, two languages, three languages, or more, but certainly not limited to two).

That the human brain is changed by differences in environmental language experience is clear (i.e., one versus two languages). Crucially, that the brain changes are positive, and advantageous, is also clear: the child is rendered primed to learn multiple languages, which research has found to provide linguistic, cognitive, and reading benefits throughout childhood and beyond (Bialystok, & Petitto, 2003; Kovelman, Baker & Petitto, 2008a; Bialystok & Craik, 2010). It is the psychological processes that are as yet unclear. Is this a classic “spread of activation” in the processing of language per se? Or is it a classic bolstering of “attentional” resources? Because we witnessed the robust involvement of, and change to, classic neural language tissue recruitment in very young babies, the answer may one day prove to be that the increase in the processing capacity of the linguistic system is the psychological impetus, and not merely (or not solely) improved or “ramped up” higher cognitive attentional processes.

How long this perceptual wedge can hold back the closing doors of developmental perceptual attenuation, how many languages can be primed or learned before the linguistic and cognitive advantages no longer accrue, and the specific relationship between young bilingual babies’ phonological repertoire and their later language development in each of their two languages (regarding phonological segmentation, vocabulary development, sentence complexity at 24 months, etc.) all demand additional research.

Conclusions

Phonetic processing in young bilingual and monolingual babies is accomplished with the same language-specific brain tissue classically observed in adults. Overall, all young babies followed the same overarching language developmental course. An exception was noted in the older bilingual babies’ resilient neural and behavioral sensitivity to phonetic contrasts found in other (foreign) languages at a time when monolingual babies can no longer make such discriminations, a finding that we suggest affords a fundamental advantage to the developing bilingual’s healthy language processing. We found no evidence of neural “disruption” (no developmental brain atypicality) when a baby is exposed to two languages as opposed to one. Indeed, we found no evidence that being “monolingual” is the “normal” state of affairs for the human brain and that being exposed to two languages, in effect, presents a kind of neural trauma to the developing bilingual child, which, as we have suggested (cf. Petitto et al., 2001), would be the logical underlying hypothesis following from the claim that dual language exposure causes language delay (e.g., Bosch & Sebastián-Gallés, 2001, 2003). Contrary to language delay, in the present work we suggest the existence of language advantages in young babies with early bilingual exposure, which has important educational implications. On this point, it could be said that our early-exposed bilingual babies represent only one positive subtype in the spectrum of types of bilingual exposure possible, some of which may not have such a bright outcome (e.g., much later bilingual or second language exposure). We suggest a different construal. The present findings lay bare some of the optimal conditions of bilingual language learning to help a young child achieve healthy and “normal” dual language mastery and growth. To be sure, early, simultaneous bilingual language exposure is optimal to achieve this end, and, contrary to some fears in Education, early dual language exposure will not linguistically harm the developing bilingual child.

Several testable hypotheses were advanced, involving the hypothesized role of the STG as nature’s propulsion helping all babies to discover the rhythmically-alternating, maximally-contrasting, phonetic-syllabic chunks at the core of natural language phonology. We further hypothesized that this very brain area may provide the young bilingual baby the means to differentiate tacitly between (and among) the linguistic systems that it is encountering well in advance of knowing meaning, and that, as a consequence of this brain area, the young bilingual is afforded the neural means by which it can proceed to differentiate, and thereby disambiguate, two (or more) languages without language confusion or delay from the outset. Finally, we proposed the “Perceptual Wedge Hypothesis,” whereby exposure to greater than one language necessarily alters neural and language processing in ways that we suggest are advantageous to the language user (Petitto, 2005, 2007). This hypothesis was corroborated by the observation that, while the bilingual and monolingual babies used the same classic language neural tissue as observed in adults, the “extent and variability” of this neural tissue was changed depending on the number of environmental input languages (see also Kovelman, Baker, and Petitto, 2008b). To be sure, the interplay between biological and epigenetic processes in bilingual language acquisition teaches us that comparisons to the monolingual brain as the exclusive yardstick of a “typically” developing brain may, in the end, provide only a partial picture of our brain’s capacity for language processing, and it suggests a fascinating hypothesis: The brains of bilinguals and multilinguals may provide the most powerful window into the full neural “extent and variability” that our human species’ language processing tissue could potentially achieve.

Acknowledgements

The research was made possible through funds to L. A. Petitto (P. I.) in her NIH R21 grant (USA: NIH R21HD05055802). Additional funding was made possible through L. A. Petitto’s (P. I.) NIH R01 grant (USA: NIHR01HD04582203). Petitto is exceedingly grateful to NIH, and she extends special thanks to Dr. Peggy McCardle (NIH). Petitto also thanks the following for their wonderful infrastructure support and for their invaluable assistance in securing Petitto’s Hitachi ETG-4000 functional Near Infrared Spectroscopy system: Canadian Foundation for Innovation, and the Ontario Research Fund. Petitto and team thank the Hitachi Corporation for their kind assistance. We thank Janet Werker for sharing her pioneering Hindi stimuli with us. We thank Marissa Malkowski and Lynne Williams for comments on our earliest draft of this manuscript. We extend our heartfelt thanks to the parents and babies who gave us their time in order to carry out this research. We sincerely thank the three anonymous reviewers for their insightful and helpful comments, which greatly helped us improve the manuscript. Corresponding/senior author: petitto@utsc.utoronto.ca. For additional information see http://www.utsc.utoronto.ca/~petitto/


References

- Ansaldo AI, Marcotte K, Fonseca RP, Scherer LC. Neuroimaging of the bilingual brain: evidence and research methodology. PSICO. 2008;39:131–138.