Abstract

Cellular processes are “noisy”. In each cell, concentrations of molecules are subject to random fluctuations due to the small numbers of these molecules and to environmental perturbations. While noise varies with time, it is often measured at steady state, for example by flow cytometry. When interrogating aspects of a cellular network by such steady-state measurements of network components, a key need is to develop efficient methods to simulate and compute these distributions. We describe innovations in stochastic modeling coupled with approaches to this computational challenge: first, an approach to modeling intrinsic noise via solution of the chemical master equation, and second, a convolution technique to account for contributions of extrinsic noise. We show how these techniques can be combined in a streamlined procedure for evaluation of different sources of variability in a biochemical network. Evaluation and illustrations are given in analysis of two well-characterized synthetic gene circuits, as well as a signaling network underlying the mammalian cell cycle entry.

Author Summary

Variability from one cell to another is a pronounced and universal trend in living organisms; much of this variability is related to varying concentrations of proteins and other chemical species across the cells. Understanding this variability is necessary if we are to fully understand cellular functions, particularly the ways in which cells differ from each other and in which cells with the same origin behave in different ways (e.g. in human development and cancer). When using a chemical model for some aspect of cellular function, one needs to consider two sources of variability: intrinsic variability, which results from the reactions proceeding as in the model but naturally varying because of the finite number of molecules in the cells and their random behavior; and extrinsic variability, which results from other kinds of variation not accounted for in the specific, deterministic model. We present new methods to model and compute both kinds of variability, to facilitate the study of cellular variability as a whole. Our methods provide advantages in speed, accuracy, and scope of mechanisms modeled, and we apply them to experimental data, demonstrating the nature of intrinsic and extrinsic noise in those systems.

Introduction

Cellular processes are “noisy”. In each cell, concentrations of molecules (e.g., mRNAs, proteins) are subject to random fluctuations (noise) due to the small numbers of these molecules and to environmental perturbations [1], [2]. Cellular noise impacts on information transmission involved in cell signaling dynamics [3]–[5], while cells may take advantage of such variability in adapting to changing environments or for cell-fate decisions [6]–[15]. Improved understanding of how noise influences and is modulated by cellular processes will greatly benefit from efficient, streamlined computational tools to quantify noise, and to use noise to probe properties of the underlying regulatory networks [16]–[19]. To date, stochastic modeling of gene expression has typically relied on forward simulations of time courses, for example via Gillespie algorithms [20], [21] or numerical solution of stochastic differential equations (SDEs) [4], [22], [23].

Flow cytometry and fluorescence microscopy currently allow for access to increasingly rich data on approximately steady-state distributions of gene expression. These distributions arise biologically when a set of reactions proceeds much faster than environmental changes, and observing such data provides a step towards understanding some aspects of the underlying cellular network. To assess how such data can be informative, we need to compute or simulate aspects of the steady-state distribution. Forward simulation can be time-consuming, and new approaches are needed. Approaches such as umbrella sampling [24] and coupling-from-the-past [25] have been introduced, but the sampling biases of the former and substantial computational expenses of the latter leave areas for improvement.

Mechanistic modeling of noise is complicated by its diverse sources, which have been classified as intrinsic or extrinsic [26], [27]. Intrinsic noise results from the stochasticity of chemical kinetics when the numbers of interacting molecules are sufficiently small; it can be described by the chemical master equation (CME). In essence, intrinsic noise represents deviation of known reactions with known rates from their results as predicted by classical chemical kinetics [28]. In contrast, extrinsic noise results from other reactions and from fluctuations in rate constants, and it is often the dominant source of variability in a system [26], [29]. Extrinsic noise may result from any process not represented in the network model itself.

A direct route to model intrinsic noise is to calculate steady-state solutions to the CME, often by using an approximation. An analytical solution based on a continuous master equation describing protein production in bursts has been formulated by Friedman and colleagues [30], while Fourier and colleagues [31] present analytical solutions for several other networks. Walczak and colleagues [32] investigate another solution approach based on using an eigenbasis from a simpler system to solve the massive linear equation resulting from setting the CME to steady-state. The approximation here lies in the difference between each system's eigenbases, and its suitability for a specific system needs to be determined on an ad hoc basis. More general methods have been investigated as well. The Hartree approximation [33] assumes probabilistic independence of molecule numbers for each species; this approximation greatly reduces the dimensionality of the system, but tends to break down seriously in multimodal systems, unless the joint distribution has a mode at each combination of the one-dimensional distributions' modes (this is frequently not the case). Cao and colleagues [34], [35] investigate accurate though computationally costly numerical solution methods for the CME, such as efficient exhaustive enumeration of microstates. Munksy and Khammash [36], focusing on the application of methods for solving the master equation, investigate the necessary data for obtaining reaction parameters in a system dominated by this type of noise.

A related approach is to calculate an “energy landscape” for a network. Ao [37] assumes an SDE model and derives a potential that yields the probability distribution as its Boltzmann distribution. Wang and colleagues [38] also use an SDE model and then construct a potential landscape based on a Hodge decomposition of the flux vector in the system. Both approaches are useful for a wide range of SDEs, including the chemical Langevin equation. However, they are thus subject to the inaccuracies of that equation—most importantly, the inaccuracy at low molecule numbers—and may also lack computational tractability for complex systems. Qian and Beard [39], in constructing potential landscapes for non-equilibrium systems based on chemical potentials, provide an approximation for the probability distribution that follows the Hartree approximation.

In contrast to intrinsic noise, extrinsic noise lacks a unique modeling framework and is often determined by empirical inference of distributions from data. One approach that accounts for some of these effects is to perturb the rate constants while modeling intrinsic fluctuations using a Gillespie algorithm-type simulation strategy [40]. This approach may also produce extrinsic fluctuations that could be produced by other sources, such as other reactions and measurement noise. However, direct steady-state calculations can instead pool together results from many extrinsically perturbed distributions, thus preventing the need to perform calculations for many parameter sets and many time points. Analytical inclusion of extrinsic noise is also possible, and indeed the use of exponentially distributed burst sizes in modeling protein production in [30] amounts to this. In addition, extrinsic noise can be accounted for by addition of random noise to molecule numbers in each time step of a timecourse simulation based on a stochastic differential equation [4], [23]. Recent single-molecule fluorescent measurements have allowed experimental determination of molecule number distributions in Escherichia coli, thus measuring both intrinsic and extrinsic noise [41].

Despite these progresses, a major challenge lies in the lack of well-defined computational framework for thorough, systematic evaluation of these methods with experimental data. As a step to address these issues, we have developed an integrated framework for modeling steady-state distributions in the context of both intrinsic and extrinsic noise sources. As an illustration, we have applied these methods to the analysis of two well-characterized bistable switches and evaluated the methods against experimental data. Furthermore, we also demonstrated the applicability of these methods to a more complex signaling network, the Myc/Rb/E2F network, which underlies the control of mammalian cell cycle entry.

Results

Overview of the Computational Framework

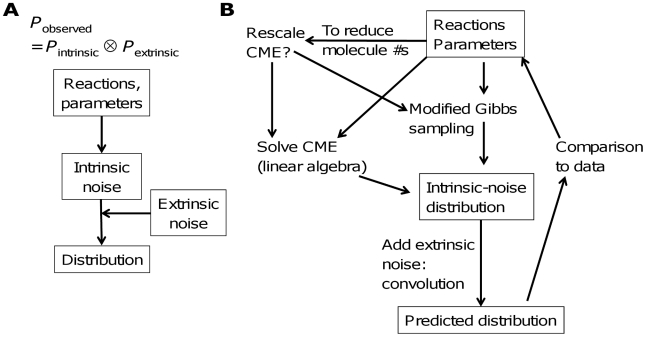

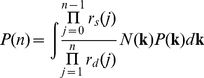

In general, the observed distribution of molecular counts (P observed) can be treated as the combination of an intrinsic component (P intrinsic) and an extrinsic component (P extrinsic) (Figure 1A). The intrinsic component is uniquely determined by the reaction mechanisms and the corresponding rate constants. Our approach (Figure 1B) takes a list of species, reactions with known rate information, and known extrinsic noise parameters, and at the first step calculates the steady-state distribution based on the chemical master equation. This first step accounts for intrinsic noise implicitly and can be done analytically for systems with a sufficiently small number of states. When the CME is too complicated to solve analytically, it can be solved numerically to generate the steady-state distributions, up to the size and dimension limits imposed by computational capabilities. The CME is of the form MP = 0, where M is a matrix and P is the steady-state probability vector.

Figure 1. A framework for combining intrinsic and extrinsic noise.

(A) Distribution prediction starts with predicting distributions based on intrinsic noise only and then adds in extrinsic noise. (B) A schematic for analysis of molecule number distributions in biochemical networks. Predicted distributions based on a model can be compared to experimental data, and information about parameters can be inferred.

With many reacting species, as the matrix size may imply prohibitive computation cost, we can rescale the CME or sample approximately from the solution. For scaling, the dimension of the space of distributions is reduced by approximating the CME in terms of directional derivatives and then re-sampling. The scaled CME is then solved by linear algebra. Even with scaling, however, the matrix computations needed to solve the CME become prohibitive when more than a few species are present or when the distribution is complex. We address these limitations by developing a modified Gibbs sampling (MGS) method to generate the steady-state solution to the CME. Gibbs sampling provides a set of samples from a distribution by sampling one dimension of the distribution (in this case, the molecule number for a given species) at a time, using the conditional distribution for that species given the current molecule numbers of all the other species. In our MGS method, detailed balance is assumed for different sets of reactions at each iteration, generating approximate conditional distributions from which exact sampling is possible. The MGS method scales much more favorably than the direct CME solution with increased numbers of species. Its scaling property is similar to that of ordinary Gibbs sampling. Importantly, it overcomes some caveats associated with alternative approximations, especially in multimodal systems. In particular, it avoids the restrictions on the distribution space caused by the Hartree approximation. Also, it overcomes the difficulties in sampling multiple local minima that occur with the standard Gibbs sampler.

The second step of our approach is to model extrinsic noise by convolution. Typically, representing extrinsic noise as perturbations to rate parameters [40] can present significant difficulties in their application to experimental data. Sampling from a parameter sample space would lead to high computational cost because of the need to redo calculations for many different parameter sets. Methods based on adding noise at each time step similarly bring the cost of calculation at many unnecessary points in time. To this end, we have developed a convolution approach to represent extrinsic noise by averaging many effects, which allows more direct application to experimental data. It is well suited to combining analysis of the modes by a deterministic model, allowing rapid and accurate estimation of reaction parameters, with estimation of further noise parameters based on the observed distribution.

Derivations

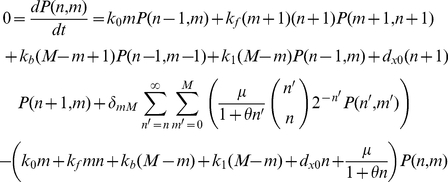

Chemical master equation (CME)

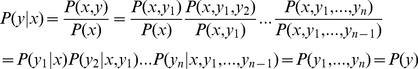

The CME describes temporal evolution of the probability of a given state, as represented by a set of molecule numbers for all species in the system:

| (1) |

where P(x) denotes the probability of the system being in state x as a function of time, t; vj denotes the change in x resulting from reaction j, and aj(x) denotes the probability of reaction j per unit time given that the system is in state x (i.e. the rate in molecular units).

At steady state, the time derivative is 0 for all P(x). This results in a linear system of equations for the probabilities of states: the reaction rates define a matrix, and the null vector of this matrix, normalized so its elements sum to 1, is the vector of probabilities of states.

Synthesis and degradation of a single molecule

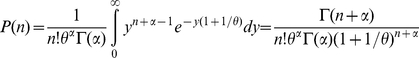

Consider a simple system consisting of n molecules, with synthesis rate as(n) and degradation rate ad(n). The corresponding CME and its steady-state solution are:

|

(2) |

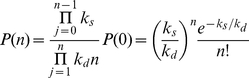

P(0) is chosen such that the sum of all probabilities is 1. For the constitutive expression of a single protein, as(n) = ks, ad(n) = kdn, where ks is the synthesis rate and kd is the decay rate constant. Here P(n) follows a Poisson distribution with mean ks/kd:

|

(3) |

This distribution describes the variability resulting from intrinsic noise. To account for extrinsic noise, one approach is to draw the parameters from their own probability distributions (assuming that the stochasticity from other cellular processes is manifested in fluctuations in the rate constants), which need to be empirically determined:

|

(4) |

where k is the vector of parameters, P(k) is its probability density function, N(k) is a normalization constant, rs is the synthesis rate constant, rd is the degradation rate constant, and the integral is over all values of k with nonzero probability.

In the simple example above, the distribution is determined by the parameter y = ks/kd, so drawing y from a gamma distribution with parameters α and θ,

|

(5) |

Here, we chose the gamma distribution because positive real values of k are needed and the gamma distribution draws values from this set and allows the integral to be performed analytically. Choosing separate distributions for ks and kd is not necessary here because only the corresponding distribution of y would affect the final distribution of n.

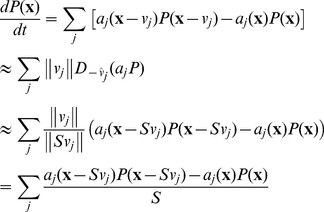

Scaling of the CME

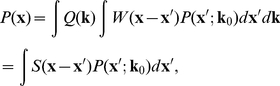

Excessive matrix size can present a major challenge for solving the CME, both as a result of high dimensionality and of high individual molecule numbers (though not high enough for classical reaction rate equations to be used). The size of this equation may be reduced by approximating it in terms of derivatives and then resampling it,

|

(6) |

where S (≥1) is a scale factor (S = 1 denotes no scaling) and D is a directional derivative, allowing general use of this formalism regardless of what reactions are in the system; the sums in the first and third lines are finite-difference approximations of the directional derivative in the second line.

Several scales may be used for the same problem: the unscaled equation can be used for small molecule numbers while less dense sampling may be used for larger ones where the linear approximation applies better. If computer memory is a greater barrier than computation time in solving the steady-state CME, it may be appropriate to choose S as small as possible without running out of memory, since the maximum molecule numbers that need to be included in an analysis are essentially independent of scaling. A discrete, rescaled CME avoids the numerical error that would likely result from a continuous approximation. Also, it reduces exactly to the unscaled master equation when S = 1. This is useful for direct comparison of systems of different sizes. It is also useful for systems where the molecule numbers for some but not all species are large enough to require scaling.

The synthesis/degradation system is a useful test case of the scaling method. Combining Eqs 2 and 6, the scaled master equation for this system is

| (7) |

thus completely determining P(n) up to normalization. Provided sufficiently small variation between each P(n) and P(n−S), the normalization

|

(8) |

may be applied.

Eq. 7 gives P(n)>P(n−S) if n<ks/kd and P(n)<P(n−S) if n>ks/kd; thus the distribution peaks at n = ks/kd, as is the case without scaling. Furthermore, for S = 1, it reduces to the ordinary CME, as is the case in general for Eq. 6.

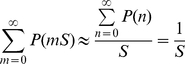

Modeling intrinsic noise via Gibbs sampling

High dimensionality can make accurate numerical solution of CME intractable, even with scaling. One way to effectively reduce the dimensionality is to use Gibbs sampling [42], [43]. Each step in Gibbs sampling requires constructing only a 1-D distribution. To sample, one cycles through the d different species, sampling a value for each molecule number xi in turn from the conditional distribution P(xi|x1,…,xi−1,xi+1,…xd) of the molecule number being sampled given the other current molecule numbers. This yields a sample from the entire distribution, (x1,…,xd) at the conclusion of each cycle. Gibbs sampling for the steady-state solution of the CME can be performed by assuming detailed balance for synthesis and degradation of a given species; that is:

| (9) |

where n is the molecule number of the species; as and ad are its synthesis and degradation rates.

This equation can be modified to account for reactions with different stoichiometries by simply replacing n and n+1 with the states interconverted by the other reactions (e.g. one can replace n+1 with n+2 if molecules are synthesized and degraded two at a time).

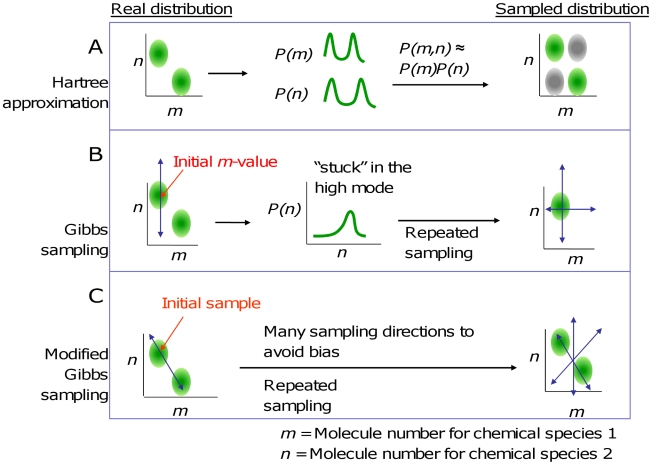

The sampling algorithm starts with an arbitrary initial value for all but one of the molecule numbers, calculates the distribution of the remaining molecule number by assuming detailed balance (Eq. 9) with the other numbers fixed. This sample is then fixed when other species are being sampled. Each complete sampling cycle yields a new sampled state. This strategy is similar to the mean-field method often used with the Hartree approximation (Figure 2A). However, because it samples from a distribution for one species that is correct for the current sample's (rather than the mean's) molecule numbers of the other species, it avoids the key pitfalls in the Hartree approximation when applied to multimodal distributions (Figure 2B).

Figure 2. A modified Gibbs sampling method in comparison to previous methods.

(A) The Hartree approximation can distort the joint distribution for multimodal distributions by generating false peaks. (B) Gibbs sampling method: sampling of each molecule number is based on current values of the other molecule numbers rather than on mean values, to avoid this distortion. This method can result in samples being “stuck” in one peak of the probability distribution. The blue arrows in (B) and (C) indicate sampling directions, which are used sequentially. (C) A modified Gibbs sampling method based on coordinate changes can avoid the sampling bias.

The basic Gibbs sampling has its own caveat when applied to multimodal distributions: Once a sample is drawn from one peak of such a distribution, it is unlikely to cross over to other peaks, unless the peaks overlap in at least one dimension or if there is sufficient probability density outside the peaks for the samples to migrate between peaks. If the total probabilities of the two peaks are known, one can solve this problem by sampling separately from each peak, at the expense of additional burn-in (samples that must be discarded because they are biased by the initial values).

To overcome this caveat, our modified Gibbs sampling (MGS) method changes coordinates between sampling steps (Figure 2C). An accurate Gibbs sampling scheme needs to accurately draw a sample x′ given that the previous sample, x, is from the correct distribution; in other words it must have P(x′) = P(x). This is achieved when P(x′|x) = P(x|x′), because this condition leads to P(x′; x)/P(x) = P(x′; x)/P(x′). Changing coordinates between steps by random rotation of the coordinate system satisfies this condition. In 2-D, for example, new axes can be created with slope m (where tan−1(m) is uniformly distributed between −90° and 90°) and −1/m (note that tan−1(−1/m) and tan(m) have the same distribution); the axes pass through the previously sampled point. Since the purpose of constructing the axes is to sample from points that lie along them, integer lattice points in one coordinate system must match integer lattice points in the original coordinate system, so that each state can be represented by integer coordinates in the new system. This can be achieved by rounding. In our example, we can let x and y be the new coordinates and u and v the old ones, and then let the x-axis pass through

| (10) |

for each value of u and the y-axis through

| (11) |

for each value of v, where the coordinates are given in the u−v system, brackets denote rounding to the nearest integer, and (u0,v0) is the previously sampled point. This technique is applied to the toggle switch below.

The basic Gibbs sampling algorithm may also fail in monomodal systems when the peak contains a fraction significantly less than 1 of the probability density and the remaining density is widely distributed over a much larger space than the peak occupies. For example, if the peak is at the origin in a high-dimensional state space, the sampler will remain at the origin for a very large amount of time once it is there, and likewise will take an extremely large amount of time to find the origin once it is removed from it in several dimensions (because it will take many steps for all the dimensions to reach 0 or sufficiently close to 0 randomly). Switching to a hyperspherical coordinate system will remove this problem: the sampler may move from the origin to any other state in one step.

Higher accuracy in high-dimensional systems can be achieved by sampling from a 2-D rather than a 1-D distribution. Gibbs sampling can use scaling to curtail excessive molecule numbers too. Overall, MGS provides an efficient way to sample from the probability distribution associated with a steady-state CME even when many species are involved. While the detailed balance approximation introduces some error, this error is mitigated relative to that found in the Hartree approximation. The change of coordinates mitigates the error further because detailed balance for different reactions is chosen for each sample.

Since each iteration of MGS is identical to an iteration of regular Gibbs sampling in the coordinate system that applies at the moment, the computational time of the algorithm is essentially the same as for regular Gibbs sampling. The cost per iteration is slightly larger because of the coordinate-selection step, but this is very fast compared to the actual sampling operation. Furthermore, convergence to the equilibrium distribution occurs on the same timescale that regular Gibbs sampling would exhibit if the latter could accurately sample the distribution. That is, the algorithms should converge similarly as long as the standard Gibbs sampling performs well.

Despite this strong system-dependence, rigorous methods have been developed to evaluate convergence: see for example the convergence criteria of Zellner and Min [44] as well as the convergence analysis of Frigessi and colleagues [45] and the burn-in analysis of Jones and Hobert [46]. In a d-dimensional system, each sample requires d one-dimensional sampling procedures; the time required for each sampling will tend to be O(d 1/2) because, even measuring in the worst dimension, the width of the region of state space needing to be sampled scales roughly as d 1/2. We note that these estimates are somewhat ambiguous because the computational cost of the algorithm varies from system to system. Since each system has a given dimensionality, a generic comparison of computational cost for different dimensionalities must be rather approximate. In particular, the convergence time may depend more on the geometry of the distribution than on the number of species or other generic information.

Furthermore, the principle behind the standard Gibbs algorithm that allows for accurate sampling from the distribution still applies. Consider a sample x = (x 1, x 2, …) from the desired distribution. One desires to provide another sample y = (y 1, y 2,…), also from the desired distribution. This is achieved because

|

(12) |

Sampling from the molecule number distribution rather than calculating it explicitly is advantageous when a model has many dimensions but only a few are needed in the output distribution. Since the observed convergence rate of the distribution will depend primarily on the dimensionality of the observed joint distribution rather than on the overall dimensionality of the model, this results in roughly O(d 1.5 m) computational cost for MGS, where the model has d dimensions and the output has m. This is much better than the approximately O(d 3) cost for solving the CME by linear algebra, e.g. by using singular value decomposition, especially if m≪d. Note that for practical applications, even with large numbers of species, one will often need the distribution of a single species or the joint distribution of a few, because of the impracticality of experimentally monitoring all the species in a complex system.

Convolution representation of extrinsic noise

Mechanistic methods of representing extrinsic noise, such as modeling perturbations in each parameter, present significant difficulties in their application to experimental data. Sampling from a parameter sample space leads to high computational cost because of the need to redo calculations for many different parameter sets; methods based on adding noise at each time step, similarly, bring the cost of calculation at many unnecessary points in time. Also, the variation in many parameters will result in an excessive number of degrees of freedom in the extrinsic noise, and still there are likely to be sources of noise unaccounted for because they are not easily represented as perturbations in reaction propensities.

To address this limitation, we present a convolution representation of extrinsic noise based on averaging together many effects and modeling the distribution of extrinsic noise with a set of parameters that can be characterized experimentally. In particular, we represent the total distribution as a weighted integral of shifted intrinsic-noise-only distributions, i.e. the convolution of the intrinsic-noise-only distribution with a distribution of shifts. The rationale for this approach is that most perturbations will induce some shift in the distribution. For example, a reaction not accounted for by the model, or an increase in one of the rate parameters, may affect the concentration of some species. They may also change the shape of the distributions for these species, but this is a less important effect and furthermore will result in a distribution that is a linear combination of shifted versions of the original, intrinsic-noise-only distribution (with the main shifted distribution dominating this combination). The distribution of shifts must have the same dimensionality as the output distribution—note that this is often much less than the dimensionality of the model, thus greatly reducing the number of parameters needed relative to the parameter-variation representation, since there are almost always more reactions (and thus reaction rate parameters) than species in a biochemical network model.

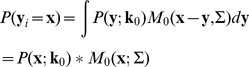

The following derivation shows how convolution can result from parameter-variation descriptions of extrinsic noise. Let P(x;k) denote the probability of a state vector given a parameter set k. Based on parameter perturbations as the source of extrinsic noise, the total probability P(x) of a given state vector is

| (13) |

where the integral is over all possible parameter sets and Q(k) denotes the probability distribution of them, centered around a value k0 (i.e. if trying to measure a single set of parameters for the system, one would be trying to measure k0). We can let

| (14) |

where W is a distribution of shifts due to a perturbation in rate parameters (which may depend on k). W will tend to be a narrow distribution, similar to δ(x′−y) for some state y: changing k0 to k will produce primarily a shift in state. Thus

|

(15) |

where S(x−x′) is a distribution of shifts, which is convolved with P(x;k) to yield P(x). S may be well approximated by a normal distribution, as it is a sum of many weakly correlated terms (corresponding to the different perturbations). In particular, values of x′ near the original state vector x are likely to have more parameter sets with shift distributions W centered near x′; also Q(k) is likely to be higher for k nearer to k0, which in general will correspond to x′ being nearer to x. Note that the strategy used in the above derivation can be applied to perturbations beyond those in the model parameters.

When measuring parameters, it is advantageous to define the extrinsic noise such that it does not induce a systematic shift in the molecule numbers (this merely represents a choice of what base parameter set k0 to use for a parameter distribution Q(k)); this would correspond to S(x−x′) having zero mean. Also, it might be useful, in a concrete representation of the extrinsic noise distribution, to add a background term to help make up for the approximations in the representation; let this be B(x). Thus, we may represent P(x) as

| (16) |

where * denotes convolution, M0 is a zero-mean multivariate normal distribution with the given covariance matrix, and a is a small constant.

M0 may have an altered normalization in order to still be a valid probability distribution in the necessary discrete state space (i.e. M0 must still sum to 1 over all states, even though the values are still proportional to what the multivariate normal distribution would ordinarily give). The covariance matrix quantifies the “spreading” of probability density in state space due to extrinsic noise. The background noise term represents the distribution of extrinsic noise far from the values predicted by intrinsic noise. Its associated parameters could be estimated from those sections of a flow cytometry data set only, reducing the number of parameters needed to fit the actual peaks. Fitting of the peaks is facilitated by the fact that their location limits the possible values of the rate parameters, allowing the spread to be used to estimate the extrinsic noise standard deviations.

While convolution can be considered as another form of explicit account for parametric perturbations (with a priori specified distributions), its formulation is more general and allows representation of noise sources not well described by perturbations in rate parameters. Many kinds of perturbations can be represented as shifts in the distribution and accounted for by convolution. Because perturbations may induce different shifts in molecule numbers depending on the specific region of state space. For example, a reaction may have a minor effect on molecule numbers when the original molecule numbers are small and a more significant effect when they are large. Therefore, it may be necessary to have the covariance matrix in Eq. 16 depend on x, or more generally to have the form of S in Eq.15 depend on x. For example, different values of the extrinsic noise standard deviation σ can be used for each of the two peaks in a bimodal distribution. In other words, it is preferable to consider Eq. 16 as an empirical equation that has solid theoretic foundation but can be readily used to describe experimental data under diverse conditions.

Either direct summation or (to save time) a discrete Fourier transform is appropriate for performing this convolution if the probability is known in functional form (i.e. if it was obtained by solving the CME exactly or approximately). If only a sample of n points x1,…xi,…,xn from the intrinsic-noise-only probability distribution is known, i.e.

| (17) |

then we can draw n new samples y1,…yi,…,yn using

| (18) |

and these obey the convoluted distribution:

|

(19) |

Once the sample based on the convolution has been drawn in this fashion, if a background noise term in the distribution is desired, then a sample of na/(1−a) points corresponding to the background term can be drawn to complete the sample based on extrinsic noise. As in any formulation of extrinsic noise, the sources, form, and magnitude of the noise must be determined based on empirical considerations for a given system.

Our convolution approach provides an intuitive relationship between the distribution of extrinsic noise and the final distribution. In principle, it is highly versatile and can account for all sources of extrinsic noise in an empirical manner. As such, determining an appropriate extrinsic–noise distribution will depend on experimental measurements of the overall distribution. Due to the exceedingly complex nature of extrinsic noise in many systems, however, a Gaussian distribution may be the best starting point. On one hand, such a simple distribution avoids overfitting experimental data. On the other, the Gaussian distribution can be thought of modeling extrinsic noise from many weakly correlated sources added together. A significant deviation from model prediction and experimental data would suggest that a large, concerted phenomenon is influencing the extrinsic noise; in that case, it would be appropriate to expand the network model to include this phenomenon explicitly. The convolution approach, as a method for calculating the steady-state distribution, is also useful in that it can model noise from processes at a variety of different timescales—whether these are similar timescales to those of the main network model or not.

Case Studies

Combining MGS, CME rescaling, and convolution model for extrinsic noise defines an integrated framework for efficient computation of the steady-state distribution of gene expression for a given set of parameters. To illustrate their use, we consider the application of the overall framework to several examples; aspects of these have been mentioned in the previous section.

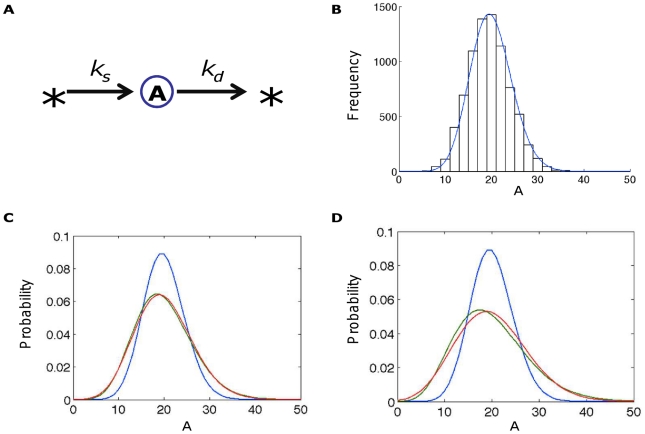

Constitutive expression of a protein (Figure 3A)

Figure 3. Calculating steady-state distributions for a simple birth-death process.

Calculating the steady-state distribution of a (A) simple birth-death process; A is expressed in terms of molecule number for all distributions. (B) Simulated distribution using the Gillespie algorithm (histogram) as compared with the analytical solution (blue line) from the CME, which is a Poisson distribution. (C) Final distributions from this systems with extrinsic noise were generated by taking ks/kd∼Γ(20,1) (green in C) or ks/kd∼Γ(10,2) (green in D); best-fit distributions based on convolution with a Gaussian (red; standard deviations 4.4 for C and 6.1 for D) and the intrinsic-noise-only distribution (blue) are shown as well. (The shift to lower molecule numbers arising from extrinsic noise in the parameter-distribution representation is equivalent to changing the base parameter set in the convolution representation; the “base” parameter set is less well defined in the parameter-distribution representation).

Here, the intrinsic noise is described exactly by a Poisson distribution (see Eq. 3). As expected, this matches the distribution given by running the Gillespie algorithm for a sufficient length of time (Figure 3B). Integrating over the prior distribution of the parameters allows the analytical addition of extrinsic noise (see Eq. 4). Addition of extrinsic noise results in spreading and, ultimately, loss of the characteristic shape of the distribution (Figure 3C,D). Also, adding extrinsic noise by perturbing parameters or by convolution with a Gaussian produces similar results, as evident from the best-fit convolved distributions to the parameter-perturbed distributions in Figure 3C,D. However, the parameter-perturbation method shifts the peak of the distribution while the convolution method does not. In this sense, the two methods define the “intrinsic-noise-only” state somewhat differently, as the parameter perturbation method considers it to be at the mean value of the parameters while the convolution method (at least when using a Gaussian) maintains the mean of the molecule number distribution, giving a more intuitive description of extrinsic noise. A better match between the techniques could be obtained by matching the distributions of extrinsic noise, as opposed to using a gamma distribution for parameters and a Gaussian for convolution because of the suitability of each of these distributions for their respective methods.

A genetic toggle switch

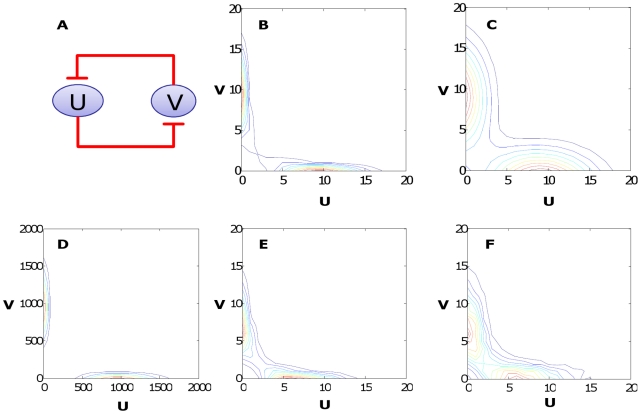

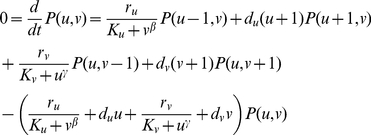

The toggle switch [47], [48] consists of two proteins that repress each other's expression (Figure 4A). Its reaction kinetics can be described by a highly simplified model consisting of two differential equations (Eqs. 20 and 21, Methods). These equations can then be used to construct the CME model (Eq 22) to describe the corresponding stochastic dynamics (accounting for intrinsic noise only).

Figure 4. Calculating steady-state distributions for a toggle switch.

(A) Circuit diagram. (B) The probability distribution, based only on intrinsic noise, for parameters Ku = Kv = 1, ru = rv = 10, β = γ = 2, du = dv = 1. This was calculated by solving the CME directly. U and V are expressed in molecule numbers for each distribution. (C) The same distribution except with extrinsic noise added (σ1 = σ2 = 2); peak spreading is evident. (D) The intrinsic-noise-only distribution except with ru = rv = 1000; calculated using scaling; peak focusing is evident. (E) A sample (10000 points) from this distribution (intrinsic noise only), using modified Gibbs sampling using the same parameters as in (B). (F) Another 10,000-point sample, generated the same way except with extrinsic noise (σ1 = σ2 = 1).

The system is bistable given appropriate parameter values, leading to a bimodal distribution by directly solving the CME (Figure 4B). Addition of extrinsic noise by convolution widens the peaks (Figure 4C), while increasing molecule numbers (and therefore, necessarily relying on scaling of the CME to solve it) narrows them (Figure 4D).

With this system, we also compared MGS with the exact solution of the CME. The MGS overall matches direct CME solution very well (compare Figure 4E and 4B); Table 1 shows a quantitative comparison. Using parameter set 1, the probability distribution obtained by directly solving the CME and two approximations based on 10,000 points each from MGS were compared using the sums of squared deviations, which were 0.0095 and 0.011 respectively. For comparison, the two approximations had a sum of squared deviations of 0.0004, and the maximum possible sum of squared deviations is 2. Overall, MGS effectively approximates the true solution of the CME with greatly reduced computational cost.

Table 1. Comparison of MGS and direct CME solution for the toggle switch.

| Parameter set | Species | Mean (direct) | Mean (MGS) | St. dev. (direct) | St. dev. (MGS) | ON% (direct) | ON% (MGS) |

| 1 | U | 6.4314 | 4.0084 | 5.0680 | 4.0241 | 69% | 55% |

| 1 | V | 2.6319 | 3.0232 | 4.1081 | 3.5994 | ||

| 2 | U | 3.3446 | 3.7456 | 2.5215 | 2.6332 | 70% | 77% |

| 2 | V | 1.3271 | 1.0201 | 1.8958 | 1.5593 |

Parameter set 1 is: Ku = Kv = 1, ru = 10, rv = 9, β = γ = 2, du = dv = 1; parameter set 2 differs in that ru = rv = 5 and β = 1.

Addition of extrinsic noise is very quick using the convolution method, since the noise is simply added to the final samples, and the results are similar to those obtained by solving the CME directly (Figure 4F). Note that the effectiveness of Gibbs sampling is in spite of the violation of detailed balance for this system. The appropriateness of the detailed balance approximation may be evaluated by comparing the actual ratios of probabilities for different states to the probabilities assumed by the detailed-balance approximation (Eq. 9). For the parameter set used in Figure 4E, the average relative error of the detailed-balance approximation, weighted by probability, is 0.58 for protein V and 0.25 for protein U.

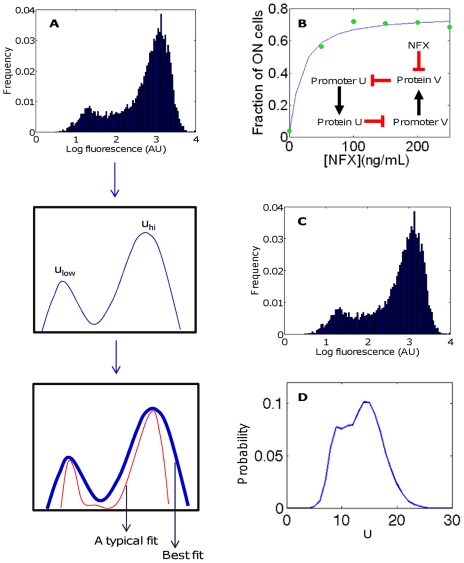

To illustrate the use of our modeling framework to the analysis of experimental data (Figure 5A), we experimentally measured the switching dynamics using the toggle switch implemented by Kobayashi et al. [48], in response to varying concentrations of the antibiotic norfloxacin (NFX). NFX causes an SOS response, which induces GFP by increasing degradation of its repressor (an additional term is added to the degradation rate constant for the repressor; see Methods for modeling details). The method described above for predicting distributions based on known parameters can be applied to this data, generating reasonable parameter sets for the perturbation. In particular, the model accurately predicted the variation of ON fractions of the population with the concentration of antibiotic (Figure 5B). Parameter sets were obtained by nonlinear fitting. Random searches of parameter space, as well as previous work with similar circuits, were used in the production of initial estimates. In general, the fitting process began with estimation of reaction parameters based on the modes of distributions observed and on previous studies, followed by refinement of these parameters and estimation of others based on nonlinear fitting of the distributions. Notably, the parameter set chosen yields a distribution dominated by unexpectedly low molecule numbers, but an adequate fit was not obtained with higher molecule numbers. The obtained parameter set was Ku = 2.22, Kv = 13.69, ru = 1306.4, rv = 35.06, β = 5.36, γ = 1, du = 79.84, dv0 = 0.42, dv1 = 1.61, kA = 36.70, σ1 = 1, and σ2 = 1 (see Eqs. 20–23 for parameter definitions; σ1 and σ2 are standard deviations of shift distributions for proteins U and V respectively). The shapes of the predicted distributions deviated somewhat from the data but were improved by using different levels of extrinsic noise for the two peaks (Figure 5 C,D). The plots show the distributions for 250 ng/mL NFX; the predicted distribution in Figure 5D uses σ = 10−5 for the ON peak and σ = 1.4 for the OFF peak, where σ is the standard deviation of the Gaussian distribution describing extrinsic noise (see Eq. 16). The peak is also shifted to account for background fluorescence or synthesis: peaks in the experimental distribution are shifted away from zero.

Figure 5. Toggle switch: comparison with experiments.

(A) A typical workflow for analyzing experimental data based on the framework for noise calculations presented in this study. Modes of the distribution(s) are determined and used with the deterministic model to estimate some reaction parameters (i.e. intrinsic noise parameters); prior knowledge may also be included in these estimates. Then, using the calculation methods (e.g. convolution for extrinsic noise) presented here, one can obtain a best fit for the full parameter set, which describes both intrinsic and extrinsic noise. (B) Theoretical (blue) and experimental (green) fraction of ON cells as a function of [NFX]. Inset shows the perturbation to the circuit. (C) Experimental distribution of GFP fluorescence in cells with 250 ng/mL NFX. (D) Predicted distribution of U molecule numbers (proportional to fluorescence) with 250 ng/mL NFX. Note that the distribution in (C) is used in (A) to illustrate the general computational procedure.

Results of the fitting indicate that a parameter set of this size is sufficient to describe some properties of the distribution (e.g. dependence of the fraction of ON cells on the circuit induction level). However, good fits of entire distributions, which have many more degrees of freedom, are not possible based on the mechanistic models described and on a simple form for extrinsic noise. The discrepancy likely results from the simplicity of the underlying model that generates the intrinsic variability, or the simplicity of the form of assumed extrinsic noise. In theory, any distribution can be fit to the intrinsic noise model if no constraints are imposed on the extrinsic noise distribution, but such a fit would yield no mechanistic insight without alternative methods to interpret the resulting extrinsic noise term. In principle, however, the computational framework proposed here can be used as an effective approach for comparing different mechanistic models for a given set of data. This aspect will require further in-depth analysis.

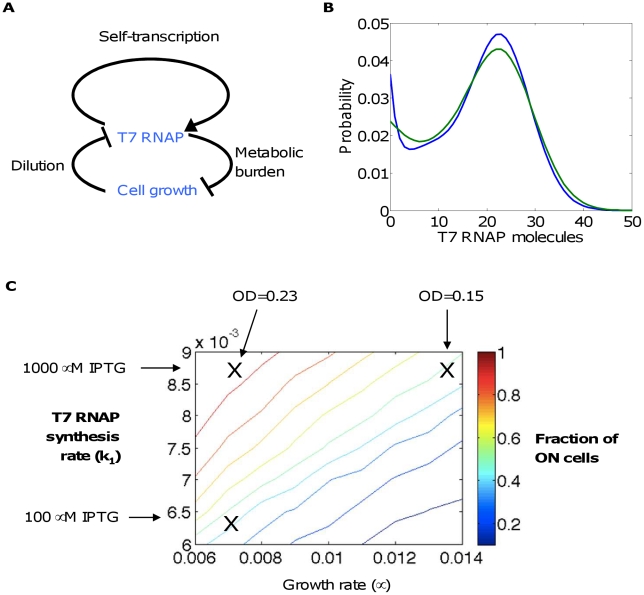

A growth-modulating positive feedback circuit

This circuit consists of a T7 RNA polymerase (T7 RNAP) activating its own transcription [49]. It provides a useful example for analyzing a unique mode of regulation of circuit dynamics: in addition to the positive feedback, expression of T7 RNAP slows growth, and growth causes dilution of the T7 RNAP (Figure 6A). As with the toggle switch, the CME model (Eq 27) predicts a bimodal distribution would result for appropriate parameters (Figure 6B,C). This distribution is based on a constant growth rate (i.e. log-phase growth): the bimodality requires constant growth, in which the growth rate and protein synthesis rate are inversely related. In the absence of growth (e.g. during stationary phase), the system would in theory approach a single, non-trivial steady state that would result in a monomodal distribution. In an appropriate time window like exponential growth phase, however, the bimodal distribution can be considered as approximately at steady state (if needed, it can be perpetuated by periodic dilution of the culture). In other words, this reaction can only reach a true steady state if the amount of medium is increasing proportionally to the bacterial growth, allowing perpetual log-phase growth, but a “pseudo-steady-state” corresponding to the limit of slow change in growth rate compared to chemical reactions is possible and interesting. This might, for example, be relevant when there is a large supply of nutrients. These states are the same on a cellular level and are modeled here.

Figure 6. T7 RNAP circuit.

(A) T7 RNAP enhances its own transcription. In addition, T7 RNAP expression slows down cell growth, which dilutes T7 RNAP. (B) Probability distribution with M = 10, k 0 = 0.001, kf = 0.01, kb = 0.1, k 1 = 0.01, dx0 = 0.003, μ = 0.01, and θ = 1; shown with (green) and without (blue) extrinsic noise (σ = 3). (C) Comparison of experiment and modeling on the perturbations to growth and T7 RNAP synthesis rates. Contours denote computed fractions of ON cells, by varying parameters k 1 and μ. X's denote experimental data points with experimental parameters (IPTG concentration and OD) as labeled; the k 1 and μ values for each data point were determined by fitting the fractions of ON cells.

According to this premise, we previously used a Gillespie algorithm to predict how the approximately steady-state distribution would be modulated by inoculum size of the bacteria, which would essentially affect the effective growth rate of the population: the larger the inoculum, the smaller the effective growth rate. The previous modeling predicted that the fraction of ON cells at the final data point would increase with the inoculum size (as controlled by the initial cell density, measured by optical density (OD)) or the circuit induction level (controlled by the concentration of IPTG). This prediction was indeed consistent with experimental measurements.

In this study, we re-evaluated these data using methods presented here. We treated the distributions of the final data point as being at a pseudo-steady state. We varied parameters to match the perturbations in ON (high-T7 RNAP) and OFF (low-T7 RNAP) populations seen in experiments (Figure 6C). As for the toggle switch, initial guesses were obtained with the aid of random searching of parameter space, and nonlinear fitting was used to obtain the final parameter set. The base case of bacterial culture with an initial OD of 0.23 and 1000 µM IPTG exhibited 94% ON cells; this matched a parameter set of k0 = 0.0011, kf = 0.01, kb = 0.11, k 1 = 0.0087, dx 0 = 0.003, μ = 0.007, and θ = 1 (see Eq. 24, 27). An experiment with the same IPTG level but an initial OD of 0.15, which would result in overall faster growth, exhibited 48% ON cells. We further performed a model fit to determine the decrease in growth rate corresponding to this shift to the OFF population, and the new growth rate was found to be μ = 0.013 (all other parameters were kept the same). Another experiment used OD = 0.23 but provided only 100 µM IPTG, resulting in 49% ON cells. This was modeled as a proportional decrease in k 0 and k 1, which, by fitting, was a 26% decrease in each of these parameters, giving k 0 = 0.0008, k 1 = 0.0065, and other parameters as in the base case. For all numerical analysis, we assumed that the number of promoters to be ten.

Again, here we find that aspects of the experimental data can be readily fitted to the simple model. However, an apparent caveat is that the distributions that we fitted were not genuinely at steady state. Furthermore, similar issues as in the case for the toggle switch also apply, which include the simplicity of the mechanistic model and that in the specific form of the extrinsic noise distribution.

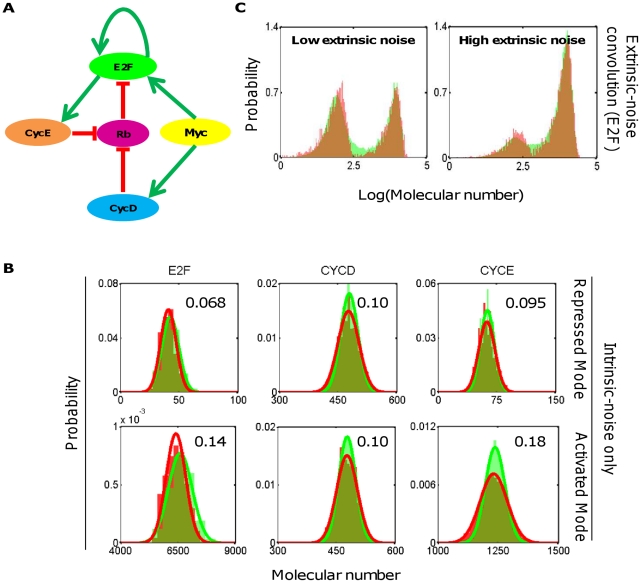

Myc/Rb/E2F network

To illustrate general applicability of our computational framework, we applied it the Myc/Rb/E2F network, which we have analyzed extensively in recent studies (Figure 7A) [50]. This network plays a critical role in regulating cell cycle progression and cell-fate decisions [50]. Here we focus on analyzing the bistable E2F response to serum stimulation [50]. To evaluate the methods described here, we use a well-established stochastic model that we recently developed [51]. The stochastic model consists of a set of stochastic differential equations in the general form of Eq. 28 [51], [52], where extrinsic noise can be introduced as an additive term. When this term is set to 0, the model will generate fluctuations due to intrinsic noise only. These stochastic dynamics can also be fully described by the corresponding CME model (Eq. 29).

Figure 7. Myc/Rb/E2F network.

(A) A network diagram of Myc/Rb/E2F at the G1-S cell cycle checkpoint. (B) Comparison of the MGS predicted probability distribution (red) and the SDEs predicted distribution (green) of selected molecular species of the repressed and the activated state of the network. The solid lines represent Gaussian distributions fitted over all random samples corresponding to each method. (C) Convolution of extrinsic noise and combination of the repressed and the activated modes based on empirical data derived from SDE simulations, with the variance of the shift distribution separately optimized. Shown in red are MGS predicted distributions and green are SDEs predicted distributions of E2F with either low or high level of extrinsic noise as defined in the SDE framework. The SDE model and the associated parameters are described in detail in Lee et al [51].

For this model, we note that ad hoc strategies can be employed to speed up the efficiency of Gibbs sampling. In particular, for the base model parameters, multiple rounds of numerical simulations using the SDE model, with either low or high initial E2F conditions and without the extrinsic noise term, predict a clear bimodal distribution in E2F, with the low mode (obtained with low initial E2F) corresponding to E2F in repressed state and the high mode (obtained with high initial E2F) corresponding to E2F being activated. In fact, the two stable states are so separated that stochastic transition between them by intrinsic noise alone is extremely rare (never observed during SDE simulations once the network settles in either state, data not shown). Therefore, we separately sample the repressed and the activated mode when applying the MGS. The locations of the different modes were estimated using the deterministic model a priori, with the same rate parameters.

As shown in Figure 7B, the intrinsic-noise-only distributions of selected species of the Myc/Rb/E2F network (E2F, CYCD, and CYCE) generated by MGS (red) closely resemble those predicted by the SDE model (green). The sums of squared deviations between SDEs-predicted distributions and MGS-predicted distributions are shown besides each plot.

Next, we incorporated an additive extrinsic noise into the SDEs (as described in [51]) to simulate empirical stochastic system output, equivalent to experimental data one would expect. Again, we note that significant extrinsic noise is required for the transition between the repressed and the activated mode. As such, we performed the extrinsic noise convolution with the intrinsic noise only distribution separately for the repressed and the activated mode, each with its own best-fit variance of the shift distribution (here assumed to be Gaussian). The total probability of each mode is calculated from the ratio of durations the system resides within the distribution around the corresponding mode based on the empirical distributions (SDE simulation), assuming steady-state and ergodicity of the stochastic system with extrinsic noise. These total probabilities are then used to combine the two modes. The resemblance between the E2F distribution generated by the SDE model (green) and that by the extrinsic noise-convolved MGS (red) is evident in Figure 7C for two different levels of extrinsic noise (as defined for the SDEs in [51]).

Discussion

Two key challenges in stochastic modeling of cellular networks are computational efficiency in describing intrinsic noise and adequate description of extrinsic noise. This study provides a modular approach that makes such computations more tractable. To compute intrinsic variability, a range of approaches for predicting intrinsic noise, ranging from modified Gibbs sampling to scaled CME solution to direct CME solution in order of increasing accuracy and decreasing efficiency, is presented. Our methods provide an efficient alternative to previous time-stepping and analytical methods for modeling noise in cellular networks. These techniques can implement a model quite accurately for certain systems. However, the time-stepping method can require great computational cost, especially in its most accurate form (the Gillespie algorithm), and does not necessarily provide an accurate representation of extrinsic noise. The direct analytical approach is desirable because it accounts exactly for intrinsic noise, but it is only feasible for the simplest biological networks. In principle, our approximate methods are generally applicable to cellular networks with arbitrary complexity.

Likewise, representation of extrinsic noise by convolution provides significant advantages both in its intuitive relationship to the final distribution and in its computational tractability (e.g. small number of parameters). Because extrinsic noise is a heterogeneous phenomenon with multiple sources, it is likely to have some components best modeled as variation in parameters and others best modeled in other ways. Applicability of each method can be evaluated by its ability to produce similar distributions to other methods, and more generally to account for different sources of noise. The convolution method, with its ability to mimic results from parameter perturbation methods, is useful in this regard. Also, we expect the convolution method to be highly flexible. Though it is developed in the context of analyzing steady-state distributions, it may also be applied to incorporate contributions of extrinsic noise into time-course simulations of stochastic network dynamics, for example by the Gillespie algorithm.

The convolution method is also not constrained to the Gaussian form of the extrinsic noise distribution used here: if appropriate in a system, different extrinsic noise distributions with different numbers of parameters could be used. The Gaussian was used here because it was likely the best distribution with a manageably low number of parameters in these cases, but other distributions, such as mixture models, could provide more realistic final distributions at the expense of larger parameter sets. In theory, this pattern could be continued to the point of using an unconstrained function as the extrinsic noise distribution to exactly fit the observed final distribution; while, as noted above, this would compromise the mechanistic insight from the analysis, it may be useful in characterizing the system in other ways, especially if the reaction parameters are known from other measurements.

Importantly, our study has defined a general, streamlined framework where one can derive unknown parameters from a distribution using fitting algorithms. For instance, our framework for extrinsic noise aids in obtaining initial estimates of reaction parameters based on the modes of the distribution, since these correspond well to the best-fit parameters. We have illustrated the basic concept of this approach through the analysis of two simple synthetic gene circuits as well as the feasibility of its application to a more complex cell cycle entry model. Due to the wide variety of perturbations that extrinsic noise can induce in all parameters and variables, however, we caution that apparent agreement with experiment could be seen for different models. To overcome this challenge, prior knowledge and alternative measurements are helpful for constraining the model, in terms of both reaction mechanisms and corresponding parameters. It is likely that this general framework is applicable for any biological network where a sufficiently mechanistic reaction mechanism is available. However, specific interpretations and applications of the fitted parameters, including those for the extrinsic noise distribution, will be context dependent. Ad hoc constraints and prior knowledge of the Markov chain describing the network dynamics, such as irreducibility, may sometimes be required, which usually demands no more mechanistic insights of the system than what's already required to carry out the actual sampling scheme.

Methods

Modeling a Toggle Switch

The following model is adapted from that of Gardner and colleagues [47]. Let the two proteins be U and V with molecule numbers u and v respectively. Based on Hill kinetics for synthesis and linear kinetics for degradation, the system can be described as:

| (20) |

| (21) |

A point in state space for this system is denoted (u, v). With appropriate parameters, the system can be bistable. In such a case, the deterministic steady states are at (umin,vmax) and (umax,vmin), where umin<umax and vmin<vmax. The number of states in this system that could potentially have nonnegligible probability is small enough that the CME at steady state can be solved analytically using linear algebra, provided the molecule numbers are small enough:

|

(22) |

Scaling allows solutions for larger amounts of protein.

The circuit can be induced by adding the antibiotic NFX.

Adding antibiotic to induce protein U's high state involves initiating an SOS response, which degrades protein V [48]; thus it brings about the perturbation

| (23) |

where dv0 is the basal degradation rate of V, dv0+dv1 is its maximal degradation rate, A is the antibiotic concentration, and kA is the half-maximal constant for the enhanced degradation.

For experimental flow cytometry data, ON and OFF fractions of the data were determined by fitting the points to a mixture model consisting of two Gaussians using Mixmod 2.1.1. The theoretical data were partitioned based on which protein had a higher molecule number.

Modeling a Growth-Modulating Positive-Feedback Circuit

Let n denote the number of T7 RNAP molecules in a given cell and let the cell have M promoters producing it, with m of them in an inactive state (O0) and M-m in an active state (O1). Tan et al. [49] modeled this system using the Gillespie algorithm with six reactions. Five are normal chemical reactions: synthesis of a T7 RNAP molecule from O0, with propensity k0 m, or from O1, with k1m; conversion of an O0 and a T7 RNAP molecule to an O1, with propensity kfmn, or the reverse, with kb(M−m); and degradation of T7 RNAP, with propensity dx0n. The sixth is cell division, which distributes the T7 RNAP molecules according to a binomial distribution and resets all the promoters to O0, and has propensity

| (24) |

Where μ0 and θ are constants, C is the carrying capacity of the system, and N is the number of cells in it. When tracking molecule numbers in a single cell for purposes of determining steady state, one thus assumes, according to the binomial distribution, that cell division moves the molecule number from n′ to n with probability

| (25) |

For n′≥n.

It is useful to apply a somewhat different definition of steady state for this system than in more typical reaction systems. If all the reactions are required to reach steady state, then the system must be at carrying capacity, and thus cell division can be eliminated from the analysis; this results in a monostable circuit. However, provided that the intracellular reactions are fast on the timescale of the growth curve, temporary quasi-steady states at other points along the growth curve, for example at log phase, can exhibit significant additional properties, including bistability; thus steady-state analysis at these times can replicate the features observed by the Gillespie algorithm. To do this, let

| (26) |

be the effective growth rate, leading to the steady-state master equation

|

(27) |

The network can be induced by IPTG, effectively increasing k0 and k1. Different steady states can be investigated by examining the system at different OD levels; in each case N/C is estimated as the ratio of the current OD to the carrying-capacity OD.

ON/OFF fractions for this network were found by identifying the two most prominent peaks in the histogram of the protein being monitored and then defining the bin in between those peaks with the lowest value as the border between ON and OFF. When peaks were found to blur together (a problem in the theoretical distributions), the point on a shoulder with the lowest derivative (approximated as a finite difference between points) was designated as the border between the main peak and the shoulder “peak.”

Characterization of the Toggle Switch Circuit

E. coli, JM2.300 was transformed with two plasmids, pTSMa and pCIRa [48]. The cells were cultured overnight at 37°C with 2 mM IPTG to ensure OFF state. The cells were then washed twice with fresh media, diluted 1000 fold, and cultured at 37°C. After three hours, cells were treated with various concentrations of NFX and further cultured at 37°C for 5 hours. Samples were then collected and subjected to flow cytometry analysis. LB medium was used throughout the experiment.

Characterization of the T7 RNAP* Circuit

The construction and characterization of the T7 RNAP* positive feedback circuit were described by Tan et al. [49]. MC4100z1 cells (from Michael Elowitz) were used throughout the study.

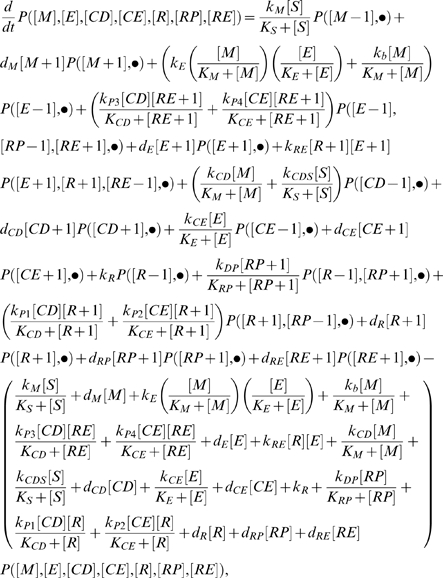

Modeling Myc/Rb/E2F Network

As an example to evaluate the feasibility of extending the proposed computational framework to a more complex model, we adopt a previously developed stochastic model for this network [51]. It consists of a set of stochastic differential equations, which has the general form of

| (28) |

where Xi(t) represents the number of molecules of a molecular species i (i = 1, …, N) at time t, and X(t) = (X1(t), .., XN(t)) is the state of the entire system at time t. X(t) evolves over time at the rate of aj[X(t)] (j = 1, …, M), and the corresponding changes in the number of individual molecules are described in vji, Γj(t) and ωi(t) are temporally uncorrelated, statistically independent Gaussian noises. Γj(t) is the standard normal distribution with mean 0 and variance 1. ωi(t) tunes the level of empirical additive extrinsic noise [51].

When ωi(t) is set to 0, the SDE simulation gives an approximation to the exact solution of the discrete stochastic chemical reaction system [52], against which the MGS distributions are compared. The inclusion of either low or high levels of extrinsic noise is realized by setting ωi(t) to 15 or 50, respectively. Based on the reactions involved in this system [51], we can write down the following CME:

|

(29) |

where • represents the state of interest for the molecular species not specified, as in ([M], [E], [CD], [CE], [R], [RP], [RE]), which represent molecular number. Refer to Lee et al [51] for detailed description of the reaction mechanism and the corresponding rate constants.

Acknowledgments

We thank Jin Wang for helpful comments, and James Collins and Hideki Kobayashi for the toggle switch constructs.

Footnotes

The authors have declared that no competing interests exist.

This work was partially supported by a National Science Foundation Career Award (BES 0953202, L.Y.), the National Institutes of Health (1P50GM081883, R01CA118486), a DuPont Young Professorship (L.Y.), a David and Lucile Packard Fellowship (L.Y.), a Medtronic Fellowship (C.T.), and a Lane Fellowship (C.T.), and a National Defense Science and Engineering Graduate Fellowship (M.A.H). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1.Kaern M, Elston TC, Blake WJ, Collins JJ. Stochasticity in gene expression: from theories to phenotypes. Nat Rev Genet. 2005;6:451–464. doi: 10.1038/nrg1615. [DOI] [PubMed] [Google Scholar]

- 2.Rao CV, Wolf DM, Arkin AP. Control, exploitation and tolerance of intracellular noise. Nature. 2002;420:231–237. doi: 10.1038/nature01258. [DOI] [PubMed] [Google Scholar]

- 3.Tan RZ, van Oudenaarden A. Transcript counting in single cells reveals dynamics of rDNA transcription. Mol Syst Biol. 2010;6:358. doi: 10.1038/msb.2010.14. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Pedraza JM, van Oudenaarden A. Noise propagation in gene networks. Science. 2005;307:1965–1969. doi: 10.1126/science.1109090. [DOI] [PubMed] [Google Scholar]

- 5.Hooshangi S, Thiberge S, Weiss R. Ultrasensitivity and noise propagation in a synthetic transcriptional cascade. Proc Natl Acad Sci U S A. 2005;102:3581–3586. doi: 10.1073/pnas.0408507102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Acar M, Mettetal JT, van Oudenaarden A. Stochastic switching as a survival strategy in fluctuating environments. Nat Genet. 2008;40:471–475. doi: 10.1038/ng.110. [DOI] [PubMed] [Google Scholar]

- 7.Cağatay T, Turcotte M, Elowitz M, Garcia-Ojalvo J, Süel G. Architecture-dependent noise discriminates functionally analogous differentiation circuits. Cell. 2009;139:512–522. doi: 10.1016/j.cell.2009.07.046. [DOI] [PubMed] [Google Scholar]

- 8.Kalmar T, Lim C, Hayward P, Muñoz-Descalzo S, Nichols J, et al. Regulated fluctuations in nanog expression mediate cell fate decisions in embryonic stem cells. PLoS Biol. 2009;7:e1000149. doi: 10.1371/journal.pbio.1000149. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Fraser D, Kærn M. A chance at survival: gene expression noise and phenotypic diversification strategies. Mol Microbiol. 2009;71:1333–1340. doi: 10.1111/j.1365-2958.2009.06605.x. [DOI] [PubMed] [Google Scholar]

- 10.Blake WJ, Balázsi G, Kohanski MA, Isaacs FJ, Murphy KF, et al. Phenotypic consequences of promoter-mediated transcriptional noise. Mol Cell. 2006;24:853–865. doi: 10.1016/j.molcel.2006.11.003. [DOI] [PubMed] [Google Scholar]

- 11.Schultz D, Ben-Jacob E, Onuchic JN, Wolynes PG. Molecular level stochastic model for competence cycles in Bacillus subtilis. Proc Natl Acad Sci U S A. 2007;104:17582–17587. doi: 10.1073/pnas.0707965104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Losick R, Desplan C. Stochasticity and cell fate. Science. 2008;320:65–68. doi: 10.1126/science.1147888. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Schultz D, Wolynes PG, Ben-Jacob E, Onuchic JN. Deciding fate in adverse times: sporulation and competence in Bacillus subtilis. Proc Natl Acad Sci U S A. 2009;106:21027–21034. doi: 10.1073/pnas.0912185106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Kittisopikul M, Süel GM. Biological role of noise encoded in a genetic network motif. Proc Natl Acad Sci U S A. 2010;107:13300–13305. doi: 10.1073/pnas.1003975107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Ben-Jacob E, Schultz D. Bacteria determine fate by playing dice with controlled odds. Proc Natl Acad Sci U S A. 2010;107:13197–13198. doi: 10.1073/pnas.1008254107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Cox CD, McCollum JM, Allen MS, Dar RD, Simpson ML. Using noise to probe and characterize gene circuits. Proc Natl Acad Sci U S A. 2008;105:10809–10814. doi: 10.1073/pnas.0804829105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Dunlop MJ, Cox RS, Levine JH, Murray RM, Elowitz MB. Regulatory activity revealed by dynamic correlations in gene expression noise. Nat Genet. 2008;40:1493–1498. doi: 10.1038/ng.281. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Warmflash A, Dinner AR. Signatures of combinatorial regulation in intrinsic biological noise. Proc Natl Acad Sci U S A. 2008;106:17262–17267. doi: 10.1073/pnas.0809314105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Munsky B, Trinh B, Khammash M. Listening to the noise: random fluctuations reveal gene network parameters. Mol Syst Biol. 2009;5:318. doi: 10.1038/msb.2009.75. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Gillespie DT. Exact stochastic simulation of coupled chemical reactions. J Phys Chem. 1977;81:2340–2361. [Google Scholar]

- 21.Arkin A, Ross J, McAdams HH. Stochastic kinetic analysis of developmental pathway bifurcation in phage lambda-infected Escherichia coli cells. Genetics. 1998;149:1633–1648. doi: 10.1093/genetics/149.4.1633. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Garcia-Ojalvo J, Elowitz MB, Strogatz SH. Modeling a synthetic multicellular clock: repressilators coupled by quorum sensing. Proc Natl Acad Sci U S A. 2004;101:10955–10960. doi: 10.1073/pnas.0307095101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Tanouchi Y, Tu D, Kim J, You L. Noise reduction by diffusional dissipation in a minimal quorum sensing motif. PLoS Comput Biol. 2008;4:e1000167. doi: 10.1371/journal.pcbi.1000167. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Warmflash A, Bhimalapuram P, Dinner AR. Umbrella sampling for nonequilibrium processes. J Chem Phys. 2007;127:154112. doi: 10.1063/1.2784118. [DOI] [PubMed] [Google Scholar]

- 25.Hemberg M, Barahona M. Perfect sampling of the master equation for gene regulatory networks. Biophys J. 2007;93:401–410. doi: 10.1529/biophysj.106.099390. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Elowitz MB, Levine AJ, Siggia ED, Swain PS. Stochastic gene expression in a single cell. Science. 2002;297:1183–1186. doi: 10.1126/science.1070919. [DOI] [PubMed] [Google Scholar]

- 27.Swain PS, Elowitz MB, Siggia ED. Intrinsic and extrinsic contributions to stochasticity in gene expression. Proc Natl Acad Sci U S A. 2002;99:12795–12800. doi: 10.1073/pnas.162041399. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Samoilov MS, Arkin AP. Deviant effects in molecular reaction pathways. Nature Biotech. 2006;24:1235–1240. doi: 10.1038/nbt1253. [DOI] [PubMed] [Google Scholar]

- 29.Raser JM, O'Shea EK. Control of stochasticity in eukaryotic gene expression. Science. 2004;304:1811–1814. doi: 10.1126/science.1098641. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Friedman N, Cai L, Xie XS. Linking stochastic dynamics to population distribution: an analytical framework of gene expression. Phys Rev Lett. 2006;97:168302. doi: 10.1103/PhysRevLett.97.168302. [DOI] [PubMed] [Google Scholar]

- 31.Fournier T, Gabriel J-P, Pasquier J, Mazza C, Galbete J, et al. Stochastic models and numerical algorithms for a class of regulatory gene networks. Bull Math Biol. 2009;71:1394–1431. doi: 10.1007/s11538-009-9407-9. [DOI] [PubMed] [Google Scholar]

- 32.Walczak AM, Mugler A, Wiggins CH. A stochastic spectral analysis of transcriptional regulatory cascades. Proc Natl Acad Sci U S A. 2009;106:6529–6534. doi: 10.1073/pnas.0811999106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Kim KY, Wang J. Potential energy landscape and robustness of a gene regulatory network: toggle switch. PLoS Comput Biol. 2007;3:e60. doi: 10.1371/journal.pcbi.0030060. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Cao Y, Liang J. Optimal enumeration of state space of finitely buffered stochastic molecular networks and exact computation of steady state landscape probability. BMC Syst Biol. 2008;2:30. doi: 10.1186/1752-0509-2-30. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Cao Y, Lu H-M, Liang J. Probability landscape of heritable and robust epigenetic state of lysogeny in phage lambda. Proc Natl Acad Sci U S A. 2010;107:18445–18450. doi: 10.1073/pnas.1001455107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Munsky B, Khammash M. Identification from stochastic cell-to-cell variation: a genetic switch case. IET Syst Biol. 2010;4:356–66. doi: 10.1049/iet-syb.2010.0013. [DOI] [PubMed] [Google Scholar]

- 37.Ao P. Potential in stochastic differential equations: novel construction. J Phys A: Math Gen. 2004;37:L25–L30. [Google Scholar]

- 38.Wang J, Xu L, Wang E. Potential landscape and flux framework of nonequilibrium networks: Robustness, dissipation, and coherence of biochemical oscillations. Proc Natl Acad Sci U S A. 2008;105:12271–12276. doi: 10.1073/pnas.0800579105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Qian H, Beard DA. Thermodynamics of stoichiometric biochemical networks in living systems far from equilibrium. Biophys Chem. 2005;114:213–220. doi: 10.1016/j.bpc.2004.12.001. [DOI] [PubMed] [Google Scholar]

- 40.Shahrezaei V, Ollivier JF, Swain PS. Colored extrinsic fluctuations and stochastic gene expression. Mol Syst Biol. 2008;4:196. doi: 10.1038/msb.2008.31. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Taniguchi Y, Choi PJ, Li G-W, Chen H, Babu M, et al. Quantifying E. coli proteome and transcriptome with single-molecule sensitivity in single cells. Science. 2010;329:533–538. doi: 10.1126/science.1188308. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Geman S, Geman D. Stochastic relaxation, Gibbs distributions, and the Bayesian restoration of images. IEEE Trans Pattern Anal and Mach Intell. 1984;PAMI-6:721–741. doi: 10.1109/tpami.1984.4767596. [DOI] [PubMed] [Google Scholar]

- 43.Casella G, George EI. Explaining the Gibbs sampler. Am Stat. 1992;46:167–174. [Google Scholar]

- 44.Zellner A, Min C-K. Gibbs sampler convergence criteria. J Am Stat Assoc. 1995;90:921–927. [Google Scholar]

- 45.Frigessi A, di Stefano P, Hwang C-R, Sheu S-J. Convergence rates of the Gibbs sampler, the Metropolis algorithm and other single–site updating dynamics. J R Stat Soc Series B Stat Methodol. 1993;55:205–219. [Google Scholar]

- 46.Jones GL, Hobert JP. Sufficient burn-in for Gibbs samplers for a hierarchical random effects model. Ann Stat. 2004;32:784–817. [Google Scholar]

- 47.Gardner TS, Cantor CR, Collins JJ. Construction of a genetic toggle switch in Escherichia coli. Nature. 2000;403:339–342. doi: 10.1038/35002131. [DOI] [PubMed] [Google Scholar]

- 48.Kobayashi H, Kaern M, Araki M, Chung K, Gardner TS, et al. Programmable cells: interfacing natural and engineered gene networks. Proc Natl Acad Sci U S A. 2004;101:8414–8419. doi: 10.1073/pnas.0402940101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Tan C, Marguet P, You L. Emergent bistability by a growth-modulating positive feedback circuit. Nat Chem Biol. 2009;5:842–848. doi: 10.1038/nchembio.218. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Yao G, Lee TJ, Mori S, Nevins JR, You L. A bistable Rb-E2F switch underlies the restriction point. Nat Cell Biol. 2008;10:476–482. doi: 10.1038/ncb1711. [DOI] [PubMed] [Google Scholar]

- 51.Lee TJ, Yao G, Bennett DC, Nevins JR, You L. Stochastic E2F activation and reconciliation of phenomenological cell-cycle models. PLoS Biol. 2010;8:e1000488. doi: 10.1371/journal.pbio.1000488. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Gillespie DT. Stochastic simulation of chemical kinetics. Annu Rev Phys Chem. 2007;58:35–55. doi: 10.1146/annurev.physchem.58.032806.104637. [DOI] [PubMed] [Google Scholar]