Abstract

Objectives:

The purposes of this study were to determine the number of articles requested by library users that could be retrieved from the library's collection using the library catalog and link resolver (in other words, the availability rate) and to identify the nature and frequency of problems encountered in this process, so that those problems could be addressed and access to full-text articles improved.

Methods:

A sample of 414 requested articles was identified via link resolver log files. Library staff attempted to retrieve these articles using the library catalog and link resolver and documented access problems.

Results:

Staff were able to retrieve electronic full text for 310 articles using the catalog. An additional 21 articles were available in print, for an overall availability rate of nearly 80%. Only 68% (280) of articles could be retrieved electronically via the link resolver. The biggest barriers to access in both instances were lack of holdings and incomplete coverage. The most common problem encountered when retrieving articles via the link resolver was incomplete or inaccurate metadata.

Conclusion:

An availability study is a useful tool for measuring the quality of electronic access provided by a library and identifying and quantifying barriers to access.

Highlights.

Lack of holdings, including access to recent articles restricted by embargoes, was the most common barrier to locating full text, accounting for over 90% of all identified problems.

Availability rates for electronic articles varied by year of publication and by the database in which the OpenURL request originated.

Link resolver error rates varied widely based on the source of the request and frequently resulted from incomplete or inaccurate metadata.

Implications.

An availability study is an inexpensive, practical tool for assessing the quality of electronic access to journal articles.

The results of an availability study can help libraries identify barriers to access and thereby allocate limited resources to areas that will provide the most benefit to users.

Link resolvers might be more accurate if the quality of metadata in OpenURLs were improved and the behavior of full-text targets were standardized.

A user who attempts to access an electronic article expects the process to be seamless: click a link or two, and the article appears. Unfortunately, this process is not always so simple. Many factors can prevent users from retrieving an article, including:

Collection and acquisition problems: The library may not subscribe to the desired journal, or the article and/or journal may be unavailable for some other reason.

Cataloging and holdings problems: The journal may be cataloged or indexed incorrectly, or the library's holdings data may be wrong.

Technical problems: Problems may occur with the journal provider's site or the library's proxy server.

While many libraries use link resolvers to make it easier for users to retrieve articles, these can introduce additional points of failure. The resolver might not be configured correctly, the knowledgebase (database of library journal holdings) might include incorrect information, or article metadata from the source database might be incomplete or incorrect.

At the Oregon Health & Science University (OHSU) Library, users occasionally complained about access problems. These complaints provided anecdotal information about barriers to access, but library staff needed more solid data on which to act: How often were users able to retrieve a desired article? What problems did they encounter in the process, and how often did these problems occur? An availability study was conducted to answer those questions.

First described by Kantor [1], an availability study is a method for evaluating how well a library satisfies user requests and identifying barriers to satisfying those requests. An availability study consists of the following steps:

- Gather actual user requests (or simulate them).

- Try to fill those requests using the same tools and methods a user would use.

- Record what happens.

- Analyze the results.

LITERATURE REVIEW

Many availability studies of print materials have been published, but only a few have included electronic articles. Most of the print studies are discussed in two comprehensive review articles: Mansbridge [2] reviewed availability studies published up to 1984, and Nisonger [3] reviewed those published since then. Nisonger also gives a clear explanation of availability studies: what they are, what can be learned from them, and how to conduct them.

Only a few availability studies of electronic journals have been documented. In 2009, Nisonger reported the results of an availability study of “500 serial citations, randomly selected from scholarly journals in 50 different subject areas or disciplines” and found an overall availability rate of 65.4%, which ranged from 45% to 81% across disciplines [4]. Price studied articles cited in recent faculty publications and found that 81% of these articles were available electronically and an additional 8% were available in print [5].

In an unpublished study, Squires, Moore, and Keesee tested the availability of 400 citations at the University of North Carolina at Chapel Hill (UNC-CH) Health Sciences Library, assembled from course reserve reading lists, articles written or cited by UNC-CH affiliates, and “articles cited within the clinical queries published as the Family Practice Information Network (FPIN).” They reported an overall electronic availability rate of 78% [6].

No studies appear to have been published that evaluate the link resolver as part of an availability study. A 2010 study by Trainor and Price is somewhat similar to an availability study in that it analyzes causes of failure, along with accuracy and error rates, for link resolver requests for a variety of material types in general academic collections. Trainor and Price found that accuracy and success rates varied across the libraries studied and the type of material (book, article, dissertation, etc.) [7].

Several other studies evaluated the general performance of link resolvers, and one recent study analyzed rates and causes of resolver failures. In 2006, Jayaraman and Harker compared the performance of 2 different link resolvers at the University of Texas Southwestern Medical Center Library. They found a success rate of over 89% for one and just 58% for the other [8], suggesting that performance can vary considerably from one link resolver product to another. Wakimoto, Walker, and Dabbour studied user expectations and experiences with the SFX link resolver and found that “about 20 percent of full-text options were erroneous, either because they incorrectly showed availability (false positives) or incorrectly did not show availability (false negatives).” The vast majority of false negatives were the result of incorrectly reported holding information from database vendors or simply the result of vendors not loading specific articles. Most of the false positives were the result of incorrectly generated OpenURLs from source databases, thus sending incorrect information to SFX [9].

METHODS

The OHSU Library adapted the traditional availability study methodology to evaluate access to electronic articles via an online catalog and link resolver. The OHSU Library uses the WebBridge link resolver from Innovative Interfaces to provide access to journal literature from nearly all of the databases to which it subscribes. In addition, all journals—print and electronic—are included in the library catalog, also from Innovative Interfaces. Journal holdings data are maintained by library staff, who upload holdings data from full-text vendors (for purchased titles) or from EBSCO A–Z (for open access titles) via Innovative Interfaces' Electronic Resource Management module. The resulting knowledgebase of holdings data is used by both the catalog and WebBridge to indicate availability.

To conduct the study, library staff analyzed log files generated by WebBridge, as these log files were the best available representation of actual user demand for electronic articles. Each time a user clicks on the link resolver button in a database or other source, WebBridge records the date, time, and OpenURL for the request in a temporary log file. When the log file reaches a maximum size of 1 megabyte (MB), the oldest half of the file is discarded. This file is not normally accessible to the library, but Innovative Interfaces staff agreed to send the contents of the file every Tuesday and Thursday from November 3–30, 2009, and again from March 4–18, 2010. The log files were cleaned up in Microsoft Excel 2007 to remove extraneous entries (e.g., for web page elements such as cascading stylesheets or images), leaving only entries that represented user requests. Every third entry was tested, generating a random sample of 416 entries, exceeding the sample size of 400 that Kantor recommended [10]. Only entries representing journal articles were tested; entries for electronic books or other materials were skipped. Obvious duplicates (the same OpenURL accessed multiple times within a few minutes) were also skipped. Finally, as results were analyzed, errors in coding were discovered for 4 articles. When they were rechecked in August 2010, correct coding could be determined for 2 of the 4. The remaining 2 were deleted from the sample, leaving a final sample size of 414.
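The cleanup and sampling steps above can be sketched in Python. This is a minimal illustration and not the procedure the library actually ran, since the cleanup was done in Excel. It makes several assumptions. The log is in a hypothetical format with one `YYYY-MM-DD HH:MM:SS URL` entry per line. A URL ending in a stylesheet, script, or image suffix is treated as a page element. An OpenURL whose `genre` (OpenURL 0.1) or `rft.genre` (OpenURL 1.0) is `article` is treated as an article request. Finally, a hypothetical 5-minute window stands in for "within a few minutes" when flagging duplicates.

```python
from datetime import datetime, timedelta
from urllib.parse import urlparse, parse_qs

# Hypothetical suffixes for page elements (stylesheets, scripts, images) that are not user requests.
ASSET_SUFFIXES = (".css", ".js", ".gif", ".jpg", ".png", ".ico")


def parse_line(line):
    """Split a hypothetical 'YYYY-MM-DD HH:MM:SS URL' log line into (timestamp, url)."""
    day, clock, url = line.strip().split(" ", 2)
    return datetime.strptime(f"{day} {clock}", "%Y-%m-%d %H:%M:%S"), url


def is_user_request(url):
    """False for web page elements such as stylesheets or images."""
    return not urlparse(url).path.lower().endswith(ASSET_SUFFIXES)


def is_article(url):
    """True if the OpenURL's genre (0.1 'genre' or 1.0 'rft.genre') is 'article'."""
    query = parse_qs(urlparse(url).query)
    genre = (query.get("genre") or query.get("rft.genre") or [""])[0]
    return genre.lower() == "article"


def sample_entries(lines, step=3, dup_window=timedelta(minutes=5)):
    """Clean the log, then test every `step`-th entry, skipping duplicates and non-articles."""
    entries = [parse_line(line) for line in lines if line.strip()]
    entries = [(ts, url) for ts, url in entries if is_user_request(url)]

    # Flag an entry as a duplicate if the same OpenURL was seen within dup_window before it.
    last_seen, is_dup = {}, []
    for ts, url in entries:
        previous = last_seen.get(url)
        is_dup.append(previous is not None and ts - previous <= dup_window)
        last_seen[url] = ts

    sample = []
    for i in range(0, len(entries), step):
        ts, url = entries[i]
        if is_dup[i] or not is_article(url):
            continue  # skip obvious duplicates and non-article requests
        sample.append((ts, url))
    return sample
```

Taking every third entry of the cleaned log gives a systematic sample. Skipping duplicates and non-article entries after selection, as this sketch does, is one reading of the order of operations described above.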

Testing retrieval via the link resolver

For each selected entry, library staff attempted to retrieve the article in question using both the link resolver and library catalog. Most testing was done from workstations in the library, but some was done from workstations located outside of the campus network, with staff logging in via the library's proxy server, EZproxy.

An article was coded as available if the tester could retrieve it via any full-text link offered by the resolver. If no full-text links were offered or none of the offered links worked, then the article was coded as unavailable.

Testers coded problems with the link resolver in two situations: (1) the resolver offered a link to full text that did not work correctly, or (2) the resolver did not offer a link to full text even though full text was available and a link therefore should have been offered.

To test via the link resolver, staff copied the OpenURL from the log file and pasted it into a web browser, thereby displaying the link resolver's menu of retrieval options. If one or more full-text, article-level links (links that go directly to the article rather than to the journal web page) were offered, staff clicked the first one. If the full text of the article displayed, staff recorded the result and tested retrieval in the catalog as described in the next section. If retrieval was unsuccessful, staff repeated the procedure with any other available article-level links until the article was successfully retrieved or no more article-level links were available. Staff then proceeded to journal-level links (links that go to the journal web page rather than to the specific article), if available, following a similar procedure until the article was successfully retrieved or all journal-level links had been tested. If the article was not successfully retrieved or no full-text links were available, the article was considered unavailable via the link resolver. All test results were recorded in an Excel spreadsheet for analysis. If any links did not work, staff analyzed why each link failed and recorded the reason in the spreadsheet. In some cases, multiple problems were associated with a single article.
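The resolver-testing order described above can be written as a small routine. It tries article-level links first and then journal-level links, stops at the first success, and logs each failure. In this sketch, `Link`, `ResolverResult`, and `walk_resolver_menu` are hypothetical names. The `try_link` callback stands in for a tester clicking a link and judging whether full text appeared.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Link:
    target: str  # hypothetical full-text provider name
    level: str   # "article" or "journal"


@dataclass
class ResolverResult:
    available: bool = False
    retrieved_via: Optional[str] = None
    problems: List[Tuple[str, Optional[str]]] = field(default_factory=list)  # (target, failure reason)


def walk_resolver_menu(links: List[Link],
                       try_link: Callable[[Link], Tuple[bool, Optional[str]]]) -> ResolverResult:
    """Try article-level links, then journal-level links, stopping at the first success."""
    result = ResolverResult()
    ordered = ([link for link in links if link.level == "article"] +
               [link for link in links if link.level == "journal"])
    for link in ordered:
        succeeded, reason = try_link(link)
        if succeeded:
            result.available = True
            result.retrieved_via = link.target
            return result
        result.problems.append((link.target, reason))
    # No full-text links were offered, or none of them worked: unavailable via the resolver.
    return result
```

Every failure is kept in `problems`, not just the last one. This mirrors the study's observation that a single article could be associated with more than one problem.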

Testing retrieval via the catalog

Once testing in the link resolver was completed, staff tested retrieval via the catalog. They began by searching for the journal title in a subset of the catalog that contains only journals. If that search was unsuccessful, staff searched the journal collection using title keywords. If that failed, they searched the journal's International Standard Serial Number (ISSN). If all of those searches failed, the process was repeated, searching the entire collection rather than limiting the search to journals. If those searches also failed, staff assumed that the library had no holdings for the title. Results were recorded in the spreadsheet, along with the nature and cause of any problems.
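The catalog search fallback can be sketched the same way. Several parts are assumptions made for illustration: the in-memory catalog of dictionaries, the exact-title matching, the all-words keyword matching, and the function name `find_catalog_record`. A real catalog's search behavior will differ.

```python
def find_catalog_record(catalog, title, issn=None):
    """Search journals first, then the entire collection, by exact title, title keywords, and ISSN.

    Returns (record, step) for the first hit, or (None, None) when every search fails,
    in which case the tester assumed the library had no holdings for the title.
    """
    def exact_title(records):
        return [r for r in records if r["title"].lower() == title.lower()]

    def title_keywords(records):
        words = set(title.lower().split())
        return [r for r in records if words <= set(r["title"].lower().split())]

    def by_issn(records):
        return [r for r in records if issn and r.get("issn") == issn]

    journals = [r for r in catalog if r.get("is_journal")]
    for scope_name, scope in (("journals", journals), ("entire collection", catalog)):
        for step_name, search in (("title", exact_title), ("keywords", title_keywords), ("issn", by_issn)):
            hits = search(scope)
            if hits:
                return hits[0], f"{scope_name}: {step_name}"
    return None, None
```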

If a record was found, the tester reviewed the holdings statements to determine whether the library's subscription to the title should include the requested article. If the catalog indicated that electronic access was available, the tester clicked the first appropriate link and navigated to the article on the full-text site. If the article could be retrieved in portable document format (PDF) or hypertext markup language (HTML), it was considered available electronically. If the article could not be retrieved, the tester recorded the reason for the failure. This process was repeated with any additional full-text links until the article was retrieved successfully or no more links were available to test.

If the article could not be retrieved successfully via any electronic links, the tester checked the catalog record for print holdings. If the catalog indicated that print holdings were available, the article was considered to be available in print. Testers did not attempt to retrieve the article from the journal stacks. If the article could not be retrieved electronically and the catalog did not indicate that print holdings were available, the article was considered to be unavailable. If the library had some electronic holdings for the title but not the specific article requested, testers noted how the requested article related to existing electronic holdings: older, newer, part of a gap in holdings, or missing. Problems not related to the range of electronic holdings were also noted.
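A small classifier can approximate the way testers described an unretrieved article relative to existing electronic holdings. This sketch assumes that holdings are simple year ranges, whereas real holdings statements use volumes, issues, and dates. The function name is hypothetical, and the function is meant to be called only for articles that could not be retrieved.

```python
def classify_against_holdings(pub_year, holdings):
    """Describe an unretrieved article relative to electronic holdings.

    holdings: list of (start_year, end_year) ranges; end_year None means "to present".
    """
    if not holdings:
        return "no holdings"
    for start, end in holdings:
        if start <= pub_year and (end is None or pub_year <= end):
            return "missing"  # inside stated coverage, yet not retrievable
    if pub_year < min(start for start, _ in holdings):
        return "older"
    ends = [end for _, end in holdings]
    if None not in ends and pub_year > max(ends):
        return "newer"  # later than the most recent holdings (an embargo is one possible cause)
    return "gap"  # falls between two covered ranges
```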

If full text was found via the catalog but not via the link resolver, testers researched the problem to determine why the resolver did not provide access to the full text and documented the reasons for the failures.

In addition to recording test results, staff recorded the following general information about the article, taken from the OpenURL metadata: source database (i.e., the database the patron was using when accessing the link resolver), journal title, and year of publication. That information enabled additional analysis and could also be used to support collection development decisions.

RESULTS

Availability rates

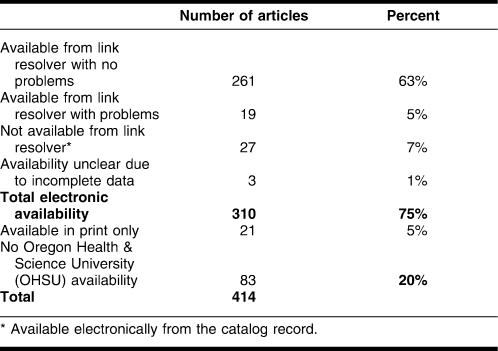

An article was considered to be available electronically if it could be retrieved using full-text links found in the library catalog. An article was considered to be available in print if the holdings statements in the library catalog indicated that the library owned the issue containing the article. Table 1 summarizes availability via both the catalog and link resolver. Of the 414 citations tested, 310 were available electronically (74.88%), and an additional 21 were available only in print (5.07%), for an overall availability rate of 79.95%. Only 280 (67.63%) were available via the link resolver, and only 261 (63.04%) were available via the link resolver with no problems. Testers were unable to retrieve 27 articles via the link resolver because of 1 or more problems, though those articles were available electronically via the catalog.
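The percentages in this paragraph follow directly from the counts and the sample size of 414. The short calculation below reproduces them, rounded to two decimal places. It also shows that 83 articles were unavailable in any form.

```python
SAMPLE_SIZE = 414

catalog_electronic = 310    # retrieved electronically via catalog links
print_only = 21             # not available electronically, but print holdings cover the issue
resolver_any = 280          # retrieved via the link resolver
resolver_no_problems = 261  # retrieved via the link resolver with no problems


def pct(count, total=SAMPLE_SIZE):
    """Percentage of the sample, rounded to two decimal places."""
    return round(100 * count / total, 2)


overall = catalog_electronic + print_only
unavailable = SAMPLE_SIZE - overall

for label, count in [
    ("Available electronically", catalog_electronic),
    ("Available only in print", print_only),
    ("Overall availability", overall),
    ("Available via link resolver", resolver_any),
    ("Via link resolver, no problems", resolver_no_problems),
    ("Unavailable", unavailable),
]:
    print(f"{label}: {count} ({pct(count)}%)")
```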

Table 1.

Summary of article availability

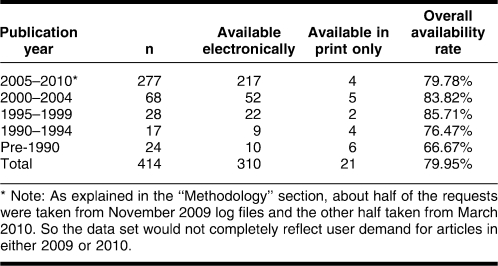

Results were analyzed by publication date in order to identify patterns that could inform collection development decisions. As shown in Table 2, articles published in the most recent 5 years had a slightly lower availability rate than those published in the next two 5-year periods, and articles published prior to 1990 had a significantly lower availability rate. Though not shown in the table, 143 articles (34.38%) were published in 2009 or early 2010, reflecting the importance of currency in biomedical literature.

Table 2.

Availability by publication date range

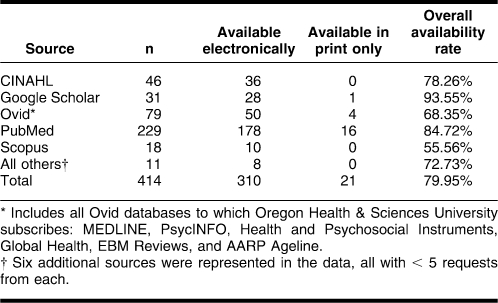

Results were further analyzed by the source database in which the request originated. As shown in Table 3, the availability rate varied considerably by source, most likely because of differences in each source's scope of coverage. Scopus has broad coverage across the sciences and social sciences, and OHSU's Ovid platform includes PsycINFO and other social sciences databases. Because OHSU is a standalone biomedical campus, its holdings focus on the biomedical sciences (hence the relatively high rate for PubMed, the most heavily used source) and are therefore less comprehensive in other areas. The biggest surprise, however, was Google Scholar, which also covers many fields outside biomedicine yet had the highest availability rate of any source in the study. Further research into how people search Google Scholar would be needed to explain why the availability rate from that source is so high.

Table 3.

Availability by source database

Problems encountered

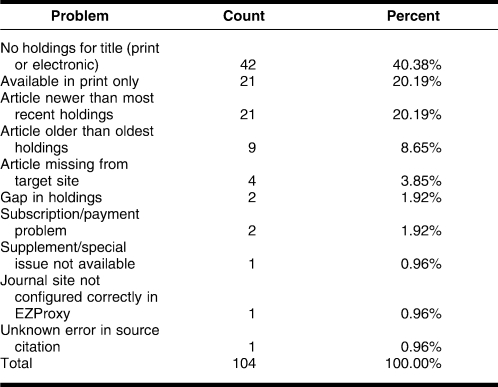

Table 4 shows the reasons why requested articles were not available electronically when searches were conducted in the catalog. Not surprisingly, lack of holdings was the biggest barrier to access. The library had some holdings for the journal in 36 of the 83 requests for unavailable articles (43.37% of unavailable items).

Table 4.

Reasons why electronic articles could not be located via the catalog

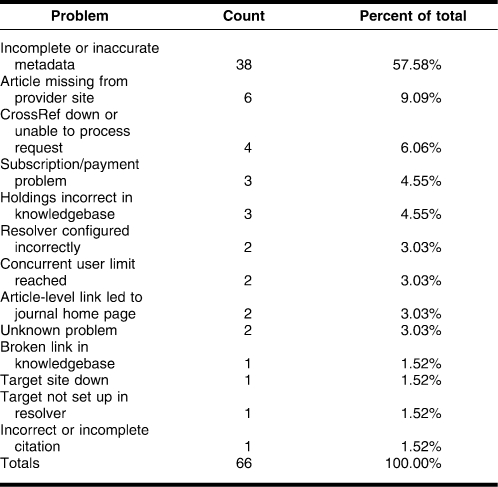

The link resolver introduced additional points of failure. In some cases, a single article generated more than one problem because the resolver offered more than one full-text link for that article. Table 5 shows the reasons why requested articles were not available electronically via the link resolver. More than half of the 66 problems were related to incomplete or inaccurate metadata in the OpenURL generated by the source. Most commonly, the target required a piece of metadata (e.g., issue number) that was not included in the OpenURL. Missing articles were the next most common problem.

Table 5.

Reasons why subscribed articles could not be accessed via the link resolver

In four cases, the article could not be retrieved via the CrossRef service. The OHSU Library's link resolver routes requests through CrossRef for several major full-text providers. Doing so simplifies linking syntax and sometimes is the only way to link directly to the full text of an article. For these four articles, however, retrieval problems were determined to be related to CrossRef (i.e., the service was down or unable to match the incoming OpenURL metadata with a single digital object identifier [DOI] in its database).*

It is also worth noting the problems that were expected but did not occur. No link resolver problems were caused by incorrect configuration of sources, proxy server issues, or supplements that were not available electronically. Cataloging problems were quite rare; cataloging issues interfered with finding the correct catalog record in only 3 of the 416 items tested. Interestingly, problems with the proxy server and with supplements did occur—rarely—when accessing full text from the catalog. The reasons for these anomalies were not documented, but it is possible that full text was accessed from a different source when using the link resolver than when using the catalog. Many of the OHSU Library's journals are available electronically from more than one source, and sources sometimes appear in a different order in the link resolver window than they do in the catalog.

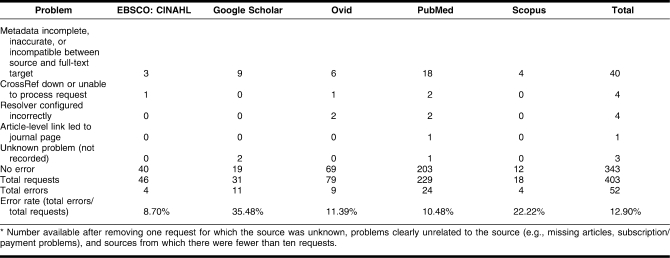

Because the source of a link resolver request was the primary source of metadata for processing the request (the other source being the resolver's knowledgebase) and the majority of link resolver errors were caused by incomplete or incorrect metadata, it was important to analyze the number and nature of link resolver errors by source. As shown in Table 6, the error rate varied considerably across sources. Requests generated from Google Scholar and Scopus had the highest error rates, and nearly all of the errors were caused by metadata problems. These results suggest that the quality of metadata from the source is a key factor in the success of link resolver requests.
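A per-source error table like Table 6 can be built from per-request records. This sketch uses made-up example data, not the study's data. Each record is assumed to pair a source name with the list of problem causes found for that request. The error rate is assumed to be the share of requests with at least one problem. Metadata problems are labeled with the hypothetical cause string `"metadata"`.

```python
from collections import Counter, defaultdict


def summarize_by_source(records):
    """records: (source, causes) pairs, where causes lists the problems found for one request
    (empty if it resolved cleanly). Returns per-source request count, error rate, and the
    share of problems whose cause is "metadata"."""
    requests, failed = Counter(), Counter()
    causes = defaultdict(Counter)
    for source, problems in records:
        requests[source] += 1
        if problems:
            failed[source] += 1
            causes[source].update(problems)

    summary = {}
    for source, n in requests.items():
        total_problems = sum(causes[source].values())
        summary[source] = {
            "requests": n,
            "error_rate": round(failed[source] / n, 3),
            "metadata_share": round(causes[source]["metadata"] / total_problems, 3) if total_problems else 0.0,
        }
    return summary


# Made-up example records, for illustration only.
example = [
    ("PubMed", []), ("PubMed", []), ("PubMed", ["missing article"]),
    ("Google Scholar", ["metadata"]), ("Google Scholar", ["metadata", "metadata"]), ("Google Scholar", []),
]
for source, row in summarize_by_source(example).items():
    print(source, row)
```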

Table 6.

Link resolver problems by source, n = 403*

DISCUSSION

Availability

OHSU's overall availability rate of just under 80% is comparable to the rate that Squires, Moore, and Keesee reported in the only availability study of electronic articles in the biomedical sciences [6]. Not surprisingly, the biggest barrier OHSU users face when trying to retrieve articles is the lack of electronic holdings, which accounted for over 90% of failures. Libraries certainly cannot buy everything, and as interdisciplinary work in the health sciences increases, one can expect more requests for articles outside the core biomedical disciplines. This finding does, however, emphasize how strongly the collection, compared with other issues, determines users' success in accessing full-text electronic articles. When developing strategies to respond to users' complaints, librarians need to realize that although users may blame the system for their access problems, the actual culprit may not be the system itself but, as in this case, gaps in the collection. In such cases, spending resources to improve systems may be of little benefit to users; those same resources might be better spent on improving the collection.

These results also suggest that embargoes may be a significant barrier to access in the biomedical sciences. Publishers sometimes embargo current issues, especially when making their content available through third-party full-text aggregators. During the embargo period, which can range from a few weeks to 2 years following publication, articles are not available in the aggregated database. In this study, just over 20% of access problems occurred when the requested article was newer than the most recent electronic holdings. The presence of an embargo was not documented; however, since the OHSU Library purchases considerable content from aggregators, embargoes are a likely culprit. Further study would be required to determine the extent to which embargoes prevent users from accessing the articles they need.
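Whether a requested article falls inside an embargo, often called a "moving wall," comes down to date arithmetic. This sketch assumes the embargo is expressed in whole calendar months, and its function names are hypothetical. Embargoes of a few weeks would need a day-based comparison instead.

```python
from datetime import date


def months_elapsed(earlier: date, later: date) -> int:
    """Whole calendar months from `earlier` to `later`; a partial final month is not counted."""
    months = (later.year - earlier.year) * 12 + (later.month - earlier.month)
    if later.day < earlier.day:
        months -= 1
    return months


def under_embargo(pub_date: date, embargo_months: int, check_date: date) -> bool:
    """True if an article published on pub_date is still behind a moving wall on check_date."""
    return months_elapsed(pub_date, check_date) < embargo_months


# A hypothetical 12-month embargo, checked on an illustrative date of 4 March 2010.
print(under_embargo(date(2009, 6, 15), 12, date(2010, 3, 4)))  # True: 8 whole months have elapsed
print(under_embargo(date(2008, 1, 1), 12, date(2010, 3, 4)))   # False: 26 whole months have elapsed
```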

Another 20% of electronic access problems were for articles available only in print. While these articles were counted as available when calculating the overall availability rate, literature on user expectations suggests that users do not consider print an acceptable substitute for electronic access. Squires, Moore, and Keesee reported that their users favored electronic access and often eschewed articles that were not available electronically [6]. Similarly, in a review article on use of electronic journals, Rowlands cited several articles indicating that use of print journals has declined rapidly, whether or not those journals were available electronically [11]. More recently, De Groote and Barrett found that print usage varied by discipline and by quality of the print collection but that overall usage of print has been declining [12]. Thus, although this study treats articles available only in print as available, some evidence suggests that users do not.

Link resolver issues

In many ways, link resolvers simplify access to full text. They allow libraries to create and maintain a single knowledgebase of holdings, which is then available via the resolver in all of the library's databases. They also allow libraries to offer a menu of additional options (e.g., print holdings, interlibrary loan request form), which are especially important when full text is not available electronically. Perhaps most importantly, link resolvers make access to full text much more convenient for users, typically connecting the user with the full text of an article in just a few clicks. Such convenient access is possible because link resolvers connect citations to full text by sending metadata across disparate systems, a process that introduces several potential points of failure. A source database sends OpenURL metadata to a link resolver. The resolver then uses its configuration rules and knowledgebase of library holdings to present a menu to the user and to construct links to full-text targets or, in some cases, to intermediate services such as CrossRef. These links contain metadata that lead the user to the full text of an article on the target site, provided nothing has gone wrong along the way. Unfortunately, as the results of this study indicate, things do go wrong with some frequency: there were problems locating available content for about 15% of the articles.
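The chain described above can be sketched in a few lines: the source sends an OpenURL, the resolver checks its knowledgebase, and it builds a target link. Everything here is illustrative. The knowledgebase contents, provider names, coverage years, and URL templates are hypothetical, and real resolvers such as WebBridge apply far richer configuration rules. The sketch accepts both OpenURL 1.0 keys (`rft.issn`) and the older 0.1 keys (`issn`). It also shows one failure point: any metadata the source did not send becomes an empty segment in the target link.

```python
from urllib.parse import urlparse, parse_qs

# Hypothetical knowledgebase: ISSN -> [(provider, first_year, last_year or None, link template)].
KNOWLEDGEBASE = {
    "0028-4793": [
        ("ProviderX", 1993, None, "https://providerx.example.com/{issn}/{volume}/{issue}/{spage}"),
        ("AggregatorY", 1990, 2008, "https://aggregatory.example.com/search?issn={issn}&vol={volume}"),
    ],
}
FIELDS = ("issn", "date", "volume", "issue", "spage")


def openurl_fields(openurl):
    """Pull citation metadata from an OpenURL, accepting 1.0 ('rft.issn') or 0.1 ('issn') keys."""
    query = parse_qs(urlparse(openurl).query)
    return {key: (query.get("rft." + key) or query.get(key) or [""])[0] for key in FIELDS}


def build_menu(openurl):
    """Return (provider, target_url) pairs whose knowledgebase coverage includes the article's year."""
    meta = openurl_fields(openurl)
    year = int(meta["date"][:4]) if meta["date"][:4].isdigit() else None
    menu = []
    for provider, first, last, template in KNOWLEDGEBASE.get(meta["issn"], []):
        if year is None or year < first or (last is not None and year > last):
            continue  # the knowledgebase says this provider does not cover the article
        # Metadata the source did not send is filled in as "", which may break the link at the target.
        menu.append((provider, template.format(**meta)))
    return menu
```

Suppose an OpenURL carries only the ISSN, the date, and the volume. It still produces a ProviderX link, but that link has empty issue and page segments, which is the kind of link that fails at the target.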

The OpenURL standard defines the structure of an OpenURL but does not specify the behavior of the link resolver or the full-text target, nor does it specify which pieces of metadata must be included in an OpenURL [13]. As a result, the quality and completeness of metadata can vary from one source database to another. Similarly, linking syntax varies considerably among full-text targets, with some requiring certain pieces of metadata (e.g., issue number) that not all sources send. Given this situation, it is not surprising that more than half of the link resolver problems in this study were caused by incomplete, inaccurate, or incompatible metadata.
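Because the standard leaves required metadata up to each target, a library could audit incoming OpenURLs against what particular targets need. In this sketch the target names and their required fields are hypothetical. `present_fields` relies on `urllib.parse.parse_qs`, which drops keys with empty values by default, so an empty `rft.issue=` counts as missing.

```python
from urllib.parse import urlparse, parse_qs

# Hypothetical linking requirements: metadata each full-text target needs to reach an article.
TARGET_REQUIREMENTS = {
    "ProviderX": {"issn", "volume", "issue", "spage"},
    "ProviderZ": {"issn", "volume", "spage"},
}


def present_fields(openurl):
    """Non-empty metadata keys in an OpenURL, with any 1.0 'rft.' prefix stripped."""
    query = parse_qs(urlparse(openurl).query)  # empty values are dropped by default
    return {key[len("rft."):] if key.startswith("rft.") else key for key in query}


def missing_for_target(openurl, target):
    """Metadata the target requires but the OpenURL lacks, sorted for stable output."""
    return sorted(TARGET_REQUIREMENTS[target] - present_fields(openurl))


url = "http://resolver.example.edu/openurl?rft.issn=0028-4793&rft.volume=361&rft.spage=1837&rft.issue="
print(missing_for_target(url, "ProviderX"))  # ['issue']
print(missing_for_target(url, "ProviderZ"))  # []
```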

This study indicates that error rates can vary widely based on the source of the resolver request. Further study is required to identify error patterns associated with each source. It would also be useful to document the full-text target of each request to see if error patterns are associated with particular targets or source-target combinations. If patterns were identified, that information could be shared with database and full-text vendors to encourage improvements.

As problems with link resolvers have become widely recognized, two National Information Standards Organization (NISO) initiatives are underway to address quality issues. The Knowledge Bases and Related Tools (KBART) initiative is focused on “standards for the quality and timeliness of data provided by publishers to knowledgebases” [14], while Improving OpenURLs Through Analytics (IOTA) is working to improve the quality and quantity of OpenURL metadata. More information about IOTA is available from its official blog [15].

Limitations of this study

While this study generated useful information, it has some limitations in both scope and methodology. Testers did not use a search engine to locate full text outside of library retrieval tools, so some articles available in institutional or open access repositories such as PubMed Central were likely missed. Work by Trainor and Price suggests that this omission may be significant: 15% of items in their study were available from free sources but not through the library's link resolver [7]. In addition, the study did not include user behavior. All searching and retrieval was done by librarians with extensive experience using the retrieval tools, so the success rates were likely higher than what users would achieve. This might particularly be the case for the catalog, as searchers had to locate the article after navigating to the journal title page. Testers did not verify print holdings by searching the stacks, so actual print availability might be lower than reported here.

In addition, the results may not be generalizable, as they are likely to have been heavily influenced by factors specific to the OHSU Library: the scope of the collection, methods for maintaining holdings data, the type and configuration of the link resolver, and local cataloging and serials management practices. Additional studies in different environments are needed to create a useful benchmark for availability of electronic articles. The methodology described here, however, may help other libraries identify the barriers their users face when trying to retrieve articles and allocate resources accordingly.

CONCLUSION

An availability study is a useful tool for measuring the quality of electronic access provided by a library and identifying and quantifying barriers to access. As Bachmann-Derthick and Spurlock explain, an availability study provides an objective measure of performance with quantitative data to support conclusions; is cheap and easy to conduct; identifies the areas or steps in a process that cause the most problems, allowing libraries to direct resources where they will do the most good; and is a method with a proven track record [16].

In this time of diminishing budgets, stakeholders and funders are demanding accountability, and libraries are expected to assess outcomes and make data-driven decisions. The results of an availability study can be used locally to allocate staff time and budget dollars where they will do the most good. The results can also be used beyond the local library to identify systemic problems, such as the quality of OpenURL metadata, and inform efforts to address them. The results of this particular study could be used to prioritize spending on new titles, backfiles, or purchases from a source that does not embargo current articles. The data could also be analyzed further to identify specific titles and date ranges that were unavailable most often, so that they could be prioritized for purchase. The library could also share the results relating to metadata problems with the associated vendors and lobby for improvements.

Acknowledgments

Special thanks to Carla Pealer, Oregon Health & Science University, who helped test citations and analyze data, and to Bob McQuillan of Innovative Interfaces, who provided the WebBridge log files that were the basis for this study.

Footnotes

Current affiliation and address: jcrum@coh.org, Director, Library Services, City of Hope, 1500 East Duarte Road, Duarte, CA 91010.

This finding should not be considered criticism of the CrossRef service. Rather, it highlights the fact that an intermediate service such as CrossRef, while providing many benefits to library users, also introduces an additional potential point of failure when retrieving full text via a link resolver.

REFERENCES

- 1. Kantor P.B. Availability analysis. J Am Soc Inf Sci. 1976 Sep;27(5):311–9. doi: 10.1002/asi.4630270507.

- 2. Mansbridge J. Availability studies in libraries. Libr Inf Sci Res. 1986 Oct/Dec;8(4):299–314.

- 3. Nisonger T.E. A review and analysis of library availability studies. Libr Resour Tech Serv. 2007 Jan;51(1):30–49.

- 4. Nisonger T.E. A simulated electronic availability study of serial articles through a university library web page. Coll Res Libr. 2009 Sep;70(5):422–45.

- 5. Price J.S. How many journals do we have? an alternative approach to journal collection evaluation through local cited article analysis. Serials. 2007 Jul;20(2):134–41. doi: 10.1629/20134.

- 6. Squires S.J, Moore M.E, Keesee S.H. Electronic journal availability study [Internet]. Chapel Hill, NC: Health Sciences Library, University of North Carolina at Chapel Hill [cited 15 Oct 2010]. <http://www.eblip4.unc.edu/papers/Squires.pdf>.

- 7. Trainor C, Price J.S. Digging into the data. Libr Tech Reports. 2010;46(7):15–26.

- 8. Jayaraman S, Harker K. Evaluating the quality of a link resolver. J Elec Res Med Libr. 2009;6(2):152–62. doi: 10.1080/15424060902932250.

- 9. Wakimoto J.C, Walker D.S, Dabbour K.S. The myths and realities of SFX in academic libraries. J Acad Libr. 2006 Mar;32(2):127–36. doi: 10.1016/j.acalib.2005.12.008.

- 10. Kantor P.B. Objective performance measures for academic and research libraries. Washington, DC: Association of Research Libraries; 1984.

- 11. Rowlands I. Electronic journals and user behavior: a review of recent research. Libr Inf Sci Res. 2007 Sep;29(3):369–96. doi: 10.1016/j.lisr.2007.03.005.

- 12. De Groote S.L, Barrett F.A. Impact of online journals on citation patterns of dentistry, nursing, and pharmacy faculty. J Med Libr Assoc. 2010 Oct;98(4):305–8. doi: 10.3163/1536-5050.98.4.008.

- 13. National Information Standards Organization. The OpenURL framework for context-sensitive services [Internet]. Bethesda, MD: The Organization; 2005 [cited 1 Apr 2011]. <http://www.niso.org/standards/z39-88-2004/>.

- 14. McCracken P, Arthur M.A. KBART: best practices in knowledgebase data transfer. Ser Libr. 2009 Jan;56(1–4):230–5. doi: 10.1080/03615260802687054.

- 15. Chandler A. OpenURL quality: official blog of the NISO Improving OpenURLs Through Analytics (IOTA) Working Group [Internet]. 2010 [cited 19 Nov 2010]. <http://openurlquality.blogspot.com>.

- 16. Bachmann-Derthick J, Spurlock S. Journal availability at the University of New Mexico. Adv Ser Manage. Greenwich, CT: JAI Press; 1989. pp. 173–212.