Abstract

Objective

The evidence base for information technology (IT) has been criticized, especially with the current emphasis on translational science. The purpose of this paper is to present an analysis of the role of IT in the implementation of a geriatric education and quality improvement (QI) intervention.

Design

A mixed-method three-group comparative design was used. The PRECEDE/PROCEED implementation model was used to qualitatively identify key factors in the implementation process. These results were further explored in a quantitative analysis.

Method

Thirty-three primary care clinics at three institutions (Intermountain Healthcare, VA Salt Lake City Health Care System, and University of Utah) participated. The program consisted of an onsite didactic session, QI planning, and 6 months of intensive implementation support.

Results

Completion rate was 82% with an average improvement rate of 21%. Important predisposing factors for success included an established electronic record and a culture of quality. The reinforcing and enabling factors included free continuing medical education credits, feedback, IT access, and flexible support. The relationship between IT and QI emerged as a central factor. Quantitative analysis found significant differences between institutions for pre–post changes even after the number and category of implementation strategies had been controlled for.

Conclusions

The analysis illustrates the complex dependence between IT interventions, institutional characteristics, and implementation practices. Access to IT tools and data by individual clinicians may be a key factor for the success of QI projects. Institutions vary widely in the degree of access to IT tools and support. This article suggests that more attention be paid to the QI and IT department relationship.

Keywords: Electronic health records, medical informatics, adoption, evaluation studies, education, medical, continuing, cognitive study, uncertain reasoning and decision theory, process modeling and hypothesis generation, knowledge acquisition and knowledge management, information storage and retrieval (text and images), machine learning, implementation

Introduction

Implementing change in practice patterns for the care of older adults is a translational challenge. Recent reviews on the quality of outpatient care for older adults have noted significant deficits, including low rates of general preventive care,1 improper prescribing,2 and fragmentation of care.3 Improving geriatric care in primary care settings is essential given that the majority of older adults receive their care from primary care physicians and that, by 2030, the number of individuals over 65 will have more than doubled.4

Systematic reviews of education programs have found significant variation in outcomes, with some studies finding positive results5 and others failing to find an effect.6 7 Successful programs appear to be multi-faceted, use information technology (IT) resources, provide feedback, are individually tailored, and offer external rewards.8–12 Programs using educational outreach are particularly successful in modifying clinicians' behavior,13–17 especially when tailored to providers' stage of change and/or the clinician's individual characteristics.18 This inconsistency in outcomes suggests a knowledge gap regarding what constitutes successful program components and implementation processes. In particular, the role of IT in the implementation of an educational program is often not fully examined, as most educational intervention studies focus on content. Examining the role of IT in the overall implementation process is a key contribution to the literature in both geriatric education and medical informatics.19 In other words, evidence-based knowledge regarding the science of implementation is an essential component of the translation process.20–28 Most importantly, such an analysis contributes to the growing debate on the mechanism of success for informatics interventions.23 29–32

The purpose of this study is to conduct a formative evaluation of the implementation process for a community-based education program entitled ‘Advancing Geriatric Education through Quality Improvement’ (AGE QI). We used both qualitative and quantitative methods starting first with a qualitative exploration of constructs from the PRECEDE/PROCEED implementation model followed by a quantitative exploration of the hypotheses that emerged from the initial qualitative analysis.33 The issues of IT, electronic records, and QI emerged from the qualitative work and were the focus of the quantitative hypotheses. The outcomes of the educational interventions were compliance rates associated with the chosen QI projects. Additional measures were created to stand as proxies for the intensity of intervention and were treated as moderators.

The PRECEDE/PROCEED model

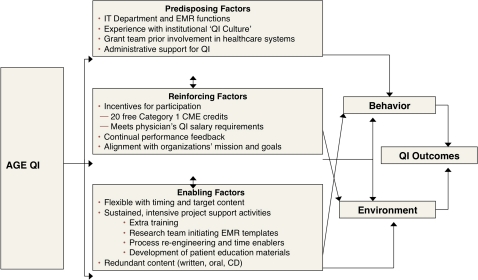

The PRECEDE (predisposing, reinforcing, and enabling constructs in educational diagnosis and evaluation) model was developed to support the design of effective healthcare interventions, as well as to provide a model to evaluate program outcomes.33 It is a motivational model that includes all levels of change recommended by Shortell34 and has been used extensively across a variety of domains, including healthcare and education.35–37 The model as adapted for our study is presented in figure 1.

Figure 1.

Advancing Geriatric Education through Quality Improvement (AGE QI) PRECEDE/PROCEED model, adapted from Green and Kreuter.33 CD, compact disks; CME, continuing medical education; EMR, electronic medical record; IT, information technology; QI, quality improvement.

According to the model, change occurs as a result of the interaction between three categories of variables: ‘predisposing factors’, which lay the foundation for success (eg, electronic medical records or strong leadership); ‘reinforcing factors’, which follow and strengthen behavior (eg, incentives and feedback); and ‘enabling factors’, which activate and support the change process (eg, support, training, computerized reminders and templates or exciting content).

Methods

The University of Utah Institutional Review Board and the research oversight committees of all three institutions approved the AGE QI educational intervention. The methods section is divided into qualitative and quantitative components.

Description of the intervention

The AGE QI educational program includes five components: (1) a 35-page previsit syllabus and CD with geriatric didactic information; (2) three outreach visits, including an initial 1–2 h lecture on geriatric assessment essentials, a second onsite visit 1 month later focusing on QI project planning, and a final review; (3) customized design and implementation of computerized tools for alerting, documentation, tracking, and decision support; (4) intensive ongoing weekly support, including phone calls and e-mails, creation of clinic-specific educational materials, work-process re-engineering, and monthly performance feedback; and (5) 20 free continuing medical education (CME) credits. Table 1 has the details.

Table 1.

Topics and implementation activities by clinic and institution

| Clinic | # of providers* | System | QI topic | # of visits | # of calls/emails | Patient education material† | Staff training | Custom EMR |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | 3 | VA | Cognition | 4 | 17 | No | Yes | Yes |
| 2 | 3 | VA | Falls | 3 | 22 | No | No | Yes |
| 3 | 5 | VA | Driving | 3 | 23 | Yes | No | Yes |
| 4 | 2 | VA | – | 5 | 31 | No | No | Yes |
| 5 | 1 | IHC | Adv. direct | 3 | 20 | No | Yes | No |
| 6 | 6 | IHC | Adv. direct | 3 | 15 | No | Yes | No |
| 7 | 3 | IHC | Falls | 2 | 14 | No | Yes | No |
| 8 | 4 | IHC | Falls | 3 | 20 | No | Yes | Yes |
| 9 | 4 | IHC | Depression | 3 | 8 | No | Yes | Yes |
| 10 | 3 | IHC | Pneumovax | 3 | 11 | No | Yes | No |
| 11 | 8 | IHC | – | 3 | 8 | No | Yes | No |
| 12 | 4 | IHC | Adv. direct | 7 | 16 | No | No | No |
| 13 | 2 | IHC | – | 2 | 14 | No | Yes | No |
| 14 | 2 | IHC | Pneumovax | 3 | 20 | No | Yes | No |
| 15 | 5 | IHC | Pneumovax | 3 | 8 | No | No | No |
| 16 | 2 | IHC | – | 1 | 2 | No | No | No |
| 17 | 3 | IHC | Pneumovax | 1 | 0 | No | No | No |
| 18 | 1 | IHC | – | 1 | 3 | No | No | No |
| 19 | 7 | IHC | Falls | 1 | 3 | No | No | No |
| 20 | 9 | IHC | Falls | 1 | 1 | No | No | No |
| 21 | 2 | IHC | Falls | 1 | 2 | No | No | No |
| 22 | 2 | IHC | – | – | – | – | – | – |
| 23 | 4 | IHC | Adv. direct | 2 | 3 | No | No | No |
| 24 | 2 | UHC | Falls | 9 | 15 | Yes | Yes | Yes |
| 25 | 5 | UHC | Falls | 8 | 22 | Yes | Yes | Yes |
| 26 | 5 | UHC | Falls | 7 | 22 | Yes | Yes | Yes |
| 27 | 3 | UHC | Falls | 8 | 15 | Yes | Yes | Yes |
| 28 | 4 | UHC | Falls | 7 | 12 | Yes | Yes | Yes |
| 29 | 11 | UHC | Falls | 7 | 12 | Yes | Yes | Yes |
| 30 | 2 | UHC | Falls | 7 | 12 | Yes | Yes | Yes |
| 31 | 5 | UHC | Falls | 8 | 11 | Yes | Yes | Yes |
| 32 | 10 | UHC | Falls | 4 | 10 | Yes | Yes | Yes |
| 33 | 2 | UHC | Falls | 8 | 11 | Yes | Yes | Yes |

*Refers to the number of ordering providers in the clinic.

†Refers to whether the research team created patient education materials for the clinic.

Adv. direct, advanced directives; EMR, electronic medical record; IHC, Intermountain Healthcare Medical Group clinics; QI, quality improvement; UHC, University of Utah Health Care clinics; VA, VA Salt Lake City Health Care System community-based outpatient clinics.

Data sources and analysis

The study used three distinct data sources. Comprehensive data were extracted from a detailed logbook or ‘diary’ kept by the geriatric nurse educator. The logbook included specific dates and content, mode of interaction, as well as daily thoughts and impressions of the implementation team. We used reports of substantive interaction with clinics, including phone calls, extra visits and emails. The second source of data was the formative interviews conducted by the evaluation team, which were tape recorded and analyzed thematically based on PRECEDE/PROCEED constructs. The third source of data came from compliance reports collected either from the information system in each setting or from data collected by staff during the process of care.

Settings

Thirty-three clinics across three institutional settings were approached to participate in the educational intervention: (1) 10 University of Utah Health Care (UHC) Community Clinics; (2) 19 Intermountain Healthcare Medical Group clinics (IHC); and (3) four VA Salt Lake City Health Care System community-based outpatient clinics (VA). See table 1 for details. All sites used an electronic health record (EHR).

EHR usage

The VA has had mandated provider order entry with electronic text entry for over 12 years using CPRS. The software comes with customizable fields and an alerting program that can use any orderable item to design a clinical reminder. All orders are entered electronically. UHC uses Epic, which includes all orders and electronic notes. Usage is generally mandated. IHC was transitioning from HELP2 to a GE product. Usage was not mandated until 2010. About 70% of drugs were ordered electronically and about 90–99% of notes were entered electronically during the study period. Laboratory results, procedures, and consultations are not included in the ordering system (Len Bowes, MD, personal communication). Usage of specific tools varied across clinics.

Description of participants

Of the 33 clinics that agreed to participate in session I, six chose not to complete the full geriatric QI project. Reasons included: (1) lack of provider participation; (2) lack of interest in a geriatric QI project; and (3) a small geriatric patient population at the clinic.

Qualitative methods

The analysis was conducted iteratively using the PRECEDE/PROCEED theory to guide inspection and discussion, rather than a grounded theory approach because the overarching purpose was to build on existing implementation theoretical constructs. The analysis was conducted by the research team and consisted of iterative discussions over a 6-month time period. Data were integrated from the formative interviews, weekly meeting reports, and logbooks. Mapping the data on to the constructs of the PRECEDE/PROCEED framework was performed by discussion and through consensus. Member checking was carried out with some of the clinic participants.

Quantitative methods

The quantitative analysis explored a key finding from the qualitative work that the relationship between the IT and QI departments appeared to differ between institutions and may have contributed to outcomes through the degree of support provided by the research team. Outcomes included pre–post changes, final compliance levels, duration of program implementation, and the CME/provider ratio. Analysis of covariance was used to tease out the effects of supportive activities on differences found in institutions.

Results

The results are divided into two sections. The first is the qualitative analysis of the implementation process using the PRECEDE/PROCEED model. The second reports on a quantitative analysis addressing the emergent hypothesis regarding the relationship between organizational structure, implementation processes, and outcomes.

Qualitative results of the PRECEDE/PROCEED model

The following analysis presents process evaluation results organized around the concepts identified in the PRECEDE/PROCEED model.

Predisposing or antecedent factors

Three categories of predisposing factors were identified: (1) IT department function; (2) institutional experience with QI; (3) characteristics of the implementation team.

IT department structure and function

The existence of an effective EHR in each setting was a key factor. All three institutions have some form of EHR. However, the degree of adoption varied substantially across institutions (table 2).

Table 2.

Description of clinical settings

| Name | No of clinics | Description | EHR use | Location |
| --- | --- | --- | --- | --- |
| UHC | 10 | Public, academic, tertiary care center, EHR not fully used by clinics | Uses Epic in outpatient clinics for last 8 years. Use is mandated and includes all orders and notes | All 10 of the UHCs in Salt Lake City |
| VA | 4 | Public, patient population restricted, full electronic medical record used by all providers | Uses VA CPRS system for both inpatient and outpatient for last 12 years. Use is mandated and includes all orders and notes | Affiliated VA community-based outpatient clinics are located from southwestern Utah to southeastern Idaho |
| IHC | 19 | Large, not-for-profit, integrated healthcare system. EHR not mandated and used by staff only in most clinics | HELP2 is used overall, but only mandated in 2010. Only orders for meds and notes electronic. Use of various tools in the EHR varies | 19 of 46 IHCs located across Utah |

EHR, electronic health record; IHC, Intermountain Healthcare Medical Group clinics; UHC, University of Utah Health Care clinics; VA, VA Salt Lake City Health Care System community-based outpatient clinics.

The ease with which IT resources could be accessed also emerged as central to the process. Data input screens had to be customized for most QI projects, and data retrieval mechanisms needed to be set up in order to provide monthly feedback to clinics. As a result, identifying the mechanism for ‘activating’ IT department support took up significant time for the implementation team. The VA required a direct request to meet the clinical application coordinator by email or phone, which could be initiated by any clinician. Data retrieval was directly under the control of clinic administrators through the set up of ‘clinical reminder’ reports, which did not require ongoing intervention by the data analyst.

IHC has also had significant experience integrating IT and QI. However, creating and changing clinical reminders, alerts, and templates required high levels of approval and was not perceived to be achievable within the timeframe of 6–9 months. As a result, few changes were made to assist the QI projects directly. However, the system was sufficiently robust to support many (but not all) of the identified QI activities to some degree. Data extraction was difficult, requiring multiple interactions at the institutional level.

The university system required relatively high-level approval from several different administrative committees. The institution decided to support a single QI activity (fall screening and prevention) for all 10 clinics. The time to obtain approvals and build new fields for falls in Epic was about 1.5 years. Screens and clinical reminders were built accordingly. Data retrieval requests at the institutional level required 3–6 months. However, mechanisms were in place for local clinics to retrieve some compliance data at the clinic level.

QI experience

All three institutions had substantial experience with QI activities, although there was considerable variation between institutions. IHC has a history of an exceptionally strong program and has embedded QI principles into everyday care for the last 12 years.38 They also give incentives to clinicians to conduct QI activities. The VA has implemented systematic performance measurement reviews covering most clinical care areas. They have invested in significant QI training for clinicians, but clinicians are not, in general, given incentives to conduct QI activities. UHC has a well-established QI office whose main focus is meeting institutional requirements, and they do not mandate QI training, nor provide individual incentives for QI activities.

Implementation team

The third predisposing factor was the composition of the study team and their relationship with the three institutions. At least one member of the research team worked at each of the institutions. These personal connections allowed ‘insider’ knowledge of how the system worked, where resources were located, and what individuals would be good change agents.

Reinforcing factors (incentives and disincentives)

Reinforcing factors serve to improve motivation, sustain interest, and focus attention, both individually and as a group. We identified three reinforcing factors: (1) CME credit; (2) anonymous feedback; (3) alignment with institutional goals.

CME program

Free CME credits were given for completion of the full program with a possible total of 20 h per provider. This study was one of the first in the nation to utilize AMA PRA Category 1 credits for performance improvement in the outpatient setting. It was so well organized that it recently won the 2011 National Award for Outstanding CME Outcomes Assessment by the Alliance for Continuing Medical Education (CME). Overall, 1085 credits have been awarded to 102 providers.

Feedback

The provision of performance feedback is a key evidence-based component of education and clinical guideline implementation. The implementation team attempted to provide performance feedback monthly. This feedback reported compliance with the chosen QI measure (eg, number screened/number eligible per month) and was presented in the form of a control chart.
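As a rough illustration of how monthly feedback of this kind could be computed, the following Python sketch builds a proportion (p) control chart from monthly screened/eligible counts. The function name `p_chart`, the conventional 3-sigma limits, and the sample counts are assumptions made for illustration; the source does not specify which type of control chart was used, and no clinic-level monthly data are reported.

```python
from math import sqrt

def p_chart(screened, eligible):
    """Return the center line and per-month (proportion, lower, upper) tuples.

    screened[i] / eligible[i] is the compliance proportion for month i.
    Limits use the conventional 3-sigma p-chart formula (an assumption here).
    """
    if len(screened) != len(eligible):
        raise ValueError("screened and eligible must have the same length")
    if any(n <= 0 for n in eligible):
        raise ValueError("every month needs at least one eligible patient")
    p_bar = sum(screened) / sum(eligible)  # overall compliance (center line)
    rows = []
    for s, n in zip(screened, eligible):
        sigma = sqrt(p_bar * (1 - p_bar) / n)
        lower = max(0.0, p_bar - 3 * sigma)
        upper = min(1.0, p_bar + 3 * sigma)
        rows.append((s / n, lower, upper))
    return p_bar, rows

# Hypothetical counts for one clinic over six months (not study data).
screened = [12, 18, 25, 30, 33, 36]
eligible = [40, 42, 38, 41, 39, 40]
center, months = p_chart(screened, eligible)
for i, (p, low, high) in enumerate(months, start=1):
    flag = "outside limits" if p < low or p > high else ""
    print(f"month {i}: {p:.2f} (limits {low:.2f} to {high:.2f}) {flag}")
```

Because the limits depend on the number of eligible patients each month, small clinics get wider limits, which is one reason a control chart may be preferable to a raw percentage when panels are small.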

Alignment with ongoing institutional goals

Alignment with larger institutional goals appeared to be particularly important, especially for prioritizing IT resources and time. Projects outside the institution's priorities suffered significant delays and required more effort. For example, the VA clinic that chose driving assessments for older patients required substantial new dialog language in the reminders, which was used only in that clinic. In contrast, because UHC chose fall prevention for all of their clinics in order to meet the Joint Commission's National Patient Safety Goal No 9 (reducing the risk of harm resulting from falls), the IT department and the QI department were able to work synergistically (the institutional solution improved access to IT resources). At IHC, the marketing of the program as a method to fulfill QI project mandates was useful for creating interest and was also in alignment with the overarching quality goals of IHC.

Enabling or barrier factors

Enabling factors energize and stabilize the intervention. We identified four key enabling factors: (1) flexibility; (2) EHR supportive activities; (3) implementation of supportive activities; and (4) redundant content.

Flexibility

We identified ‘flexibility’ as a key enabling factor, which included the willingness to adjust the timing, content, and resources of the program as needed ‘at the clinic level’. Session presentations were given anytime between 07:00 and 19:00. This flexibility, however, put extra demands on the IT department for variation in content, timing, and level of support.

EHR supportive activities

Across all three institutions, the research staff assisted at all levels of EHR usage and design, but the type of support varied significantly at different institutions. At the VA, data entry variables and note templates were created by the IT liaisons, taking a few weeks to create and not requiring higher-level approval. UHC required over a year of meetings to acquire the necessary institutional approvals and several months to develop and test new input templates. No attempts were made to change the input screens at IHC, but much more effort was put into training staff at clinics to use the EHR more intensively.

Implementation of supportive activities

The research staff participated in work-process re-engineering, one-on-one training, and general consultation for all clinics. Most of the clinics required extra training of staff and weekly phone calls until the QI project was more fully implemented. The need for idiosyncratic, clinic-specific, and provider-specific tools and support became increasingly obvious as we completed implementation at all 33 clinics.

Redundant content

Methods of content delivery included lectures incorporating an audience response system, written materials (PowerPoint slides and pocket cards), posters, handouts, computerized alerts, and ‘academic detailing.’ Although there was significant emphasis on using the computerized technology, computer tools were not sufficient to serve as reminders because substantial patient interaction occurred without a computer. In other words, computerized interventions had to be supplemented by non-computerized support components in order to deliver an effective program.

Summary

The qualitative analysis above identified key IT factors important for success that varied across the three organizations. The implementation team responded to these variations by adapting their strategies as needed (see the section on flexibility). Because of the variation in strategies across institutions, it is difficult to tease out the independent influence of organizational structure and implementation activities. The following analysis is an attempt to explore these questions quantitatively.

Quantitative analysis of clinic implementation metrics and QI outcomes

Analysis was performed at the clinic level only and has four components: (1) descriptive results; (2) differences in outcomes across institutions (pre–post changes, final compliance levels, duration of program, and the CME/provider ratio); (3) differences between institutions in the intensity of supportive activities; (4) differences between institutions in outcomes, after supportive activities had been controlled for in order to tease out the independent effects of organizations. Table 3 presents the data aggregated by institution.

Table 3.

Mean differences in study variables across institutions

| Study variable | VA (4) | IHC (19) | UHC (10) | Significance |
| --- | --- | --- | --- | --- |
| Pre/post change | 66% | 27% | 15% | F2,24=4.33; p=0.025 |
| Duration of implementation (months) | 11 | 11 | 20 | F2,24=30.04; p=0.00 |
| CME hours per provider | 4.08 | 14.88 | 13.03 | F2,29=2.39; p=0.11 |
| No of visits | 3.33 | 2.47 | 7.30 | F2,25=35.45; p=0.00 |
| No of contacts | 20.67 | 9.67 | 14.20 | F2,25=4.58; p=0.02 |
| Patient education materials | 25% | 5% | 100% | χ2=28.68; p=0.00 |

Significance was assumed at p=0.05.

CME, continuing medical education; IHC, Intermountain Healthcare Medical Group clinics; UHC, University of Utah Health Care clinics; VA, VA Salt Lake City Health Care System community-based outpatient clinics.

Descriptive

Overall, 82% of the clinics completed the 6-month QI project (100% UHC, 74% IHC, and 75% VA) for a total of 128 providers and 252 staff. Of those programs that completed the project, 75% improved outcomes to some degree. The average percentage improvement (post–pre differences) ranged from −0.09% to 100%, with a mean of 21%. The average number of CMEs per provider (a variable indicating provider involvement) was 12.59, and the average length of time to implement (preparation time plus 6 months QI implementation) was 14 months. A total of 131 visits were made for an average of four/clinic, and a range of one to nine. In addition, over 134 additional contacts were made (by phone or email) with an average of 4.06 per clinic. Specific clinic level implementation activities and outcomes are presented in table 3.
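The completion and visit figures above can be checked against table 1 with a few lines of arithmetic. The Python sketch below assumes (this is an inference, not something the source states) that the six clinics with no QI topic listed in table 1 are the six that did not complete the project. The visit counts are copied from table 1.

```python
# Clinics per institution (table 1). As an assumption, clinics with no QI
# topic listed ("–") are counted as not completing the project.
clinics = {"VA": 4, "IHC": 19, "UHC": 10}
not_completed = {"VA": 1, "IHC": 5, "UHC": 0}

def completion_rate(total, dropped):
    """Percentage of clinics that completed the QI project."""
    return 100 * (total - dropped) / total

by_institution = {
    name: round(completion_rate(n, not_completed[name]))
    for name, n in clinics.items()
}
overall = round(
    completion_rate(sum(clinics.values()), sum(not_completed.values()))
)

# Visits per clinic from table 1 (clinic 22 has no recorded visits).
visits = {
    "VA": [4, 3, 3, 5],
    "IHC": [3, 3, 2, 3, 3, 3, 3, 7, 2, 3, 3, 1, 1, 1, 1, 1, 1, 2],
    "UHC": [9, 8, 7, 8, 7, 7, 7, 8, 4, 8],
}
total_visits = sum(sum(v) for v in visits.values())

print(by_institution)  # {'VA': 75, 'IHC': 74, 'UHC': 100}
print(overall)         # 82
print(total_visits)    # 131
```

Under that assumption, the per-institution rates, the overall 82% completion rate, and the total of 131 visits all agree with the figures reported in the text.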

Differences in outcomes across institutions

Pre–post changes

Pre–post changes in compliance on QI outcomes differed significantly across institutions (F2,24=4.33; p=0.025). The VA had the largest increase of 66%, with IHC and UHC at 27% and 15%, respectively. Tests for heterogeneity of variance were non-significant. Table 3 shows the means.
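The F statistics reported for comparisons across institutions have the form of a one-way analysis of variance. As a minimal sketch of how such a statistic is formed, the Python function below computes F from between-group and within-group sums of squares. The function name and the clinic-level values are hypothetical; the study's clinic-level outcome data are not reported, so this does not reproduce the published result.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of numeric groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical pre-post changes (percentage points) per clinic, by institution.
changes = {
    "VA": [60, 70, 68],
    "IHC": [20, 30, 25, 33],
    "UHC": [10, 15, 20],
}
f_stat, df_b, df_w = one_way_anova(list(changes.values()))
print(f"F({df_b},{df_w}) = {f_stat:.2f}")
```

The p value would then be read from the F distribution with those degrees of freedom, for example with a statistics package.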

Duration of implementation

Duration of implementation also differed significantly across institutions (F2,24=30.04; p=0.000), with the VA and IHC taking 11 months on average and UHC taking 20 months. Tests for heterogeneity of variance were non-significant. Although we only have estimates of the cause of the delay, it appeared that obtaining approval for EHR customization (templates, reminders, and fields) was the largest single reason for the delay, followed by the need for extra training to use the computer. In both cases, the VA had the shortest time, with no real need for institutional approvals and no required training. IHC did not carry out any clinic level customization (so that their time was shorter), but did require some extra training. UHC required a year and a half of meetings to obtain approvals for changes in Epic and to conduct the necessary training.

CME hours per provider

Finally, we found no significant differences in the number of hours of CME per provider earned across institutions. This outcome variable was the least sensitive to IT issues, as the providers had the most control (they could complete as much of the required work as they wanted).

Differences in supportive activities

We also found significant differences in the amount of supportive activities required between institutions. UHC received nearly twice as many extra visits on average as well as extra patient education materials (VA and IHC did more of their own). However, the VA received significantly more contacts than UHC or IHC, mostly because the research team could more directly design the clinical reminders and note templates.

Differences in outcomes controlling for supportive activities

To examine the effect of institutional differences on outcomes independent of supportive activities, we conducted an analysis of covariance, which tests for the impact of a variable after controlling for a covariate. We also controlled for the number of providers (a surrogate for the size of the clinic) and the overall number of contacts (as a surrogate for the intensity of support) on the two outcome measures (post–pre compliance and implementation time).

Significant differences between institutions remained for post–pre changes after the number of visits and the number of providers had been controlled for (F3,23=4.02; p=0.05). An even stronger effect was noted for implementation time with the difference between systems being highly significant (F3,23=26.44; p=0.00). In both analyses, the numbers of contacts and providers were non-significant.
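To make the logic of the covariance adjustment concrete, the following Python sketch implements the classical single-covariate analysis of covariance: the outcome sums of squares are adjusted for a pooled within-group regression on the covariate, and the group effect is tested on what remains. This is a simplification under stated assumptions. The analysis reported above used more than one covariate, and the function names and data here are hypothetical.

```python
def _sums(xs, ys):
    """Return (SSx, SSy, SPxy) about the means of xs and ys."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    ssx = sum((x - mx) ** 2 for x in xs)
    ssy = sum((y - my) ** 2 for y in ys)
    spxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return ssx, ssy, spxy

def ancova_one_covariate(groups):
    """groups: one list of (covariate, outcome) pairs per group.

    Returns (F, df_between, df_within) for the group effect adjusted for
    the covariate, assuming a common within-group slope.
    """
    k = len(groups)
    all_x = [x for g in groups for x, _ in g]
    all_y = [y for g in groups for _, y in g]
    ssx_t, ssy_t, sp_t = _sums(all_x, all_y)
    ssx_w = ssy_w = sp_w = 0.0
    for g in groups:
        ssx, ssy, sp = _sums([x for x, _ in g], [y for _, y in g])
        ssx_w += ssx
        ssy_w += ssy
        sp_w += sp
    adj_total = ssy_t - sp_t ** 2 / ssx_t    # outcome SS after overall regression
    adj_within = ssy_w - sp_w ** 2 / ssx_w   # outcome SS after pooled within slope
    adj_between = adj_total - adj_within
    df_between = k - 1
    df_within = len(all_x) - k - 1           # one extra df lost to the covariate
    f_stat = (adj_between / df_between) / (adj_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical (number of contacts, pre-post change) pairs per clinic.
data = [
    [(17, 60), (22, 70), (23, 68)],          # VA
    [(20, 25), (15, 30), (14, 20), (8, 33)], # IHC
    [(15, 10), (22, 15), (12, 20)],          # UHC
]
f_adj, df_b_adj, df_w_adj = ancova_one_covariate(data)
print(f"adjusted F({df_b_adj},{df_w_adj}) = {f_adj:.2f}")
```

If the institutional differences were explained entirely by the covariate, the adjusted F would shrink toward zero; a large adjusted F is what "differences remained after controlling for support" means in this framework.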

Duration of implementation

The significant differences between institutions regarding the average duration of completion remained after the number of visits had been controlled for (F3,23=11.56; p=0.000), but, as in the above analyses, number of visits became non-significant in the overall model. Similarly, the comparison between institutions for the duration of implementation after number of contacts had been controlled for was also significant (F3,23=28.28; p=0.000), with the number of contacts becoming non-significant.

Summary

In summary, the exploratory quantitative analysis presented above indicates that institutional characteristics had a strong relationship with outcomes. Because we found that these differences persisted after controlling for supportive activities, we have tentative support for our general hypothesis of the importance of variation in institutional QI–IT relationships while disentangling the research implementation process.

Discussion

This theory-based process analysis identified predisposing, reinforcing and enabling factors that are important to the success of a multi-component educational intervention. The IT role was integral in all three categories. Two general IT themes emerged from the qualitative analysis and were explored in the quantitative section. First, the use of IT in clinical change processes is both idiosyncratic and ubiquitous, across all organizational levels. Each clinic required a unique combination of support, re-engineering, education, and customization activities. Second, the qualitative results highlighted how each organization has a unique pattern of integrating IT with QI and clinical work processes, and this relationship may be a critical implementation factor to success.

The first theme regarding the idiosyncratic requirements of each clinic and clinician suggests that it might not be possible to ever ‘standardize’ or even directly predict the implementation process. Providing individualized IT tools at both the clinic and clinician level may be a critical component of success for any clinical intervention and should be part of assessment for readiness. The importance of systematically ‘diagnosing’ a clinical context has been promulgated by a number of health service researchers in the form of readiness assessments.39–41 This perspective is also congruent with recent work highlighting the impact of micro-systems42 and the complexity of workflow modeling.43 Future evolutions of EHRs and IT should move to make tools available to support the clinician's information management goals and educational learning needs.44

The finding that each organization has a unique pattern of integrating IT with QI and clinical work processes suggests that this relationship may provide a causal mechanism for implementation success that is often not considered.34 45–51 Easy access to IT departmental resources can significantly affect how well quality and educational programs are developed and implemented, a finding noted by others.52 We identified the concept of IT department ‘activation’, which refers to both the ease of access and the degree of customization available at the clinic level. Mechanisms to access IT resources and the IT/QI department relationship have not been explicitly explored and could be an area of future work. One way to identify mechanisms is to hypothesize them directly. In their model for Realistic Evaluations, Pawson and Tilley suggest that it is important to identify context-mechanism-outcome configurations.53 From this perspective, the IT/QI relationship may support implementation success through several causal processes. First, it may lead to greater congruence or alignment across the institution, from leadership to staff. Second, it may bring the deep history of change processes into the hands of IT support staff. Or, third, it may simply bring resources that are hard to come by in strapped institutions (eg, spread the pain).

These findings do not diminish the importance of understanding patterns of adoption at the individual level. Models such as the Technology Acceptance Model54 55 were developed from older value-expectancy motivational models, such as the Theory of Planned Behavior56 and the Theory of Reasoned Action,57 in which intentions are predicted from attitudes, social norms, values, and outcome expectancies. The Technology Acceptance Model specifically focuses on the usefulness and usability of the technology. Our intervention was not particularly new and, in fact, the implementation team attempted to minimize any significant change in the clinics' electronic environment. Hence we would expect the variables measured in the Technology Acceptance Model to be less useful in predicting behavior for this intervention.

Scientific importance

Several authors have called for greater clarification of the phenomenon of information technology as a causal variable and for a more theoretical approach to design and implementation.58–61 For example, authors from the RAND Corporation recently published a meta-analysis of the costs and benefits of health information technology,61 noting: ‘In summary, we identified no study or collection of studies, outside of those from a handful of HIT leaders, that would allow a reader to make a determination about the generalizable knowledge of the system's reported benefit … This limitation in generalizable knowledge is not simply a matter of study design and internal validity.’ (p 4). This work is a call to increase the theoretical grounding of informatics. A strength of the present study is its integration of quantitative and qualitative findings. By extending the qualitative findings into exploratory quantitative analysis, the groundwork is laid for better, more theoretically based hypothesis testing in this field.

The idiosyncratic requirements of each clinic meant that the supportive activities were applied differently in each setting, making it impossible to separate the intensity of support from the level of need. This dilemma is at the core of the tension between program evaluation and the need for scientific generalizability. It is also the point made in a recent systematic review of the impact of context on the success of QI activities.62 Although the results may not be entirely surprising, this study illustrates the great need to develop theoretical hypotheses about the mechanisms of action before engaging in a study and to follow up with those measures as part of a systematic analysis. Most current theories of implementation are too broad to lead to testable questions. Identifying these questions more specifically as they arise from formative analyses and planning can enhance generalizability and the building of a science of informatics. These questions can include a focus on the conditions that might need to be triggered to create the needed mechanisms, the possible interaction of different factors that might be causally related to outcomes, and an examination across all levels from individual providers to the organization.

The results of this study also provide practical advice to future designers of clinical interventions regarding the combination of institutional and implementation approaches that should be addressed.63 Recent reviews have noted the importance of an integrated educational model, and this study provides a model of the implementation process that integrates multiple levels of change.64 65

Finally, the new emphasis on comparative effectiveness research requires a deeper understanding of the underlying causal mechanisms of IT interventions.66 This understanding requires a stronger emphasis on theoretical perspectives if a generalizable science is to be developed.67

Limitations

This study has several limitations. Only three organizations participated and not all clinics within each institution were approached. Those who participated might differ on some unmeasured dimension from those who did not. The quantitative analysis is exploratory only and should not be overinterpreted, as random assignment was not performed, sample size varied across groups, and in one case the sample was quite small. Because we did not quantify the institutional variables identified in the qualitative portion of our study (IT access and the IT/QI relationship), we cannot test for their impact directly. As a result, our conclusions are only suggestive. The investigator team served as the implementation facilitators and their role is inextricably intertwined with the outcomes. This paper was an attempt to measure those relationships directly. Finally, the three institutions are all in the same part of the country, resulting in unknown cultural and population bias.

Conclusions

This study highlights the complex role of IT in the design and implementation of educational and QI projects. Access to IT and the degree of integration between the institutional departments of IT and quality emerged as particularly salient in this study and should be investigated more systematically in future studies. Enhancing tools to bring control of IT interventions to the clinic and the individual clinician may be a necessary component of future success.

Footnotes

Funding: Funded by a grant from the Donald W Reynolds Foundation to MS. Supported by the University of Utah Center for Clinical and Translational Science NIH grant #1U54RR023426-01A1.

Competing interests: None.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1.Wenger N, Solomon D, Roth C, et al. The quality of medical care provided to vulnerable community-dwelling older patients. Ann Intern Med 2003;140:897–901

- 2.Hanlon JT, Schmader KE, Ruby CM, et al. Suboptimal prescribing in older inpatients and outpatients. J Am Geriatr Soc 2001;49:200–9

- 3.Wenger NS, Roth CP, Shekelle PG, et al. A practice-based intervention to improve primary care for falls, urinary incontinence, and dementia. J Am Geriatr Soc 2009;51:547–55

- 4.Mold JW, Fryer GE, Phillips RL, Jr, et al. Family physicians are the main source of primary health care for the Medicare population. Am Fam Physician 2002;66:2032.

- 5.Reeve JF, Peterson GM, Rumble RH, et al. Programme to improve the use of drugs in older people and involve general practitioners in community education. J Clin Pharm Ther 1999;24:289–97

- 6.Goldberg HI, Wagner EH, Fihn SD, et al. A randomized controlled trial of CQI teams and academic detailing: can they alter compliance with guidelines? Jt Comm J Qual Improv 1998;24:130–42

- 7.Witt K, Knudsen E, Ditlevesen S, et al. Academic detailing has no effect on prescribing of asthma medication in Danish general practice: a 3-year randomized control trial with 12-monthly follow-ups. Fam Pract 2004;21:248–53

- 8.Davis D, O'Brian MA, Freemantle N, et al. Impact of formal continuing medical education: do conferences, workshops, rounds and other traditional continuing education activities change physician behavior or health care outcomes? JAMA 1999;282:867–74

- 9.Jamtvedt G, Young JM, Kristofferson DT, et al. Audit and feedback: effects on professional practice and health care outcomes. Cochrane Database Syst Rev 2006;(2):CD000259.

- 10.Oxman AD, Thomson MA, Davis DA, et al. No magic bullets: a systematic review of 102 trials of interventions to improve professional practice. CMAJ 1995;153:1423–31

- 11.Smith F, Singleton S, Hilton S. General practitioners' continuing education: a review of policies, strategies and effectiveness and their implications for the future. Br J Gen Pract 1998;48:1689–95

- 12.Thomson O'Brien MA, Freemantle N, Oxman AD, et al. Continuing education meetings and workshops: effects on professional practice and health care outcomes. Cochrane Database Syst Rev 2001;(2):CD003030.

- 13.Grimshaw JM, Thomas RE, MacLennan G, et al. Effectiveness and efficiency of guideline dissemination and implementation strategies. Health Technol Assess 2004;8:iii–iv, 1–72.

- 14.Grol R. Successes and failures in the implementation of evidence-based guidelines for clinical practice. Med Care 2001;39(8 Suppl 2):II46–54

- 15.Hermens RP, Hak E, Hulscher ME, et al. Adherence to guidelines on cervical cancer screening in general practice: programme elements of successful implementation. Br J Gen Pract 2001;51:897–903

- 16.Thomson O'Brien MA, Oxman AD, Davis DA, et al. Educational outreach visits: effects on professional practice and health care outcomes. Cochrane Database Syst Rev 2000;(2):CD000409

- 17.Weingarten SR, Henning JM, Badamgarav E, et al. Interventions used in disease management programmes for patients with chronic illness-which ones work? Meta-analysis of published reports. BMJ 2002;325:925.

- 18.Shirazi M, Zeinaloo AA, Parikh S, et al. Effects on readiness to change of an educational intervention on depressive disorders for general physicians in primary care based on a modified Prochaska model - a randomized controlled study. Fam Pract 2008;25:98–104

- 19.Zerhouni E. Medicine. The NIH Roadmap. Science 2003;302:63–72

- 20.Cretin S, Farley DO, Dolter KJ, et al. Evaluating an integrated approach to clinical quality improvement: clinical guidelines, quality measures and supportive system design. Med Care 2001;39(8 Suppl 2):II70–84

- 21.Glasgow R, Green LA, Ammerman A. A focus on external validity. Eval Health Prof 2007;30:115–7

- 22.Green LA, Glasgow RE. Evaluating the relevance, generalization and applicability of research: issues in external validity and translation methodology. Eval Health Prof 2006;29:126–53

- 23.Hagedorn H, Hogan M, Smith JL, et al. Lessons learned about implementing research evidence into clinical practice. Experiences from VA QUERI. J Gen Intern Med 2006;21(Suppl 2):S21–4

- 24.Hulscher ME, Wensing M, van Der Weijden T, et al. Interventions to implement prevention in primary care. Cochrane Database Syst Rev 2001;(1):CD000362.

- 25.Stetler CB, Legro MW, Rycroft-Malone J, et al. Role of “external facilitation” in implementation of research findings: a qualitative evaluation of facilitation experiences in the Veterans Health Administration. Implement Sci 2006;1:23.

- 26.Stetler CB, Legro MW, Wallace CM, et al. The role of formative evaluation in implementation research and the QUERI experience. J Gen Intern Med 2006;21(Suppl 2):S1–8

- 27.Sung NS, Crowley WF, Jr, Genel M, et al. Central challenges facing the national clinical research enterprise. JAMA 2003;289:1278–87

- 28.Glasgow RE, Klesges LM, Dzewaltowski DA, et al. The future of health behavior change research: what is needed to improve translation of research into health promotion practice? Ann Behav Med 2004;27:3–12

- 29.Bakken S, Ruland CM. Translating clinical informatics interventions into routine clinical care: how can the RE-AIM framework help? J Am Med Inform Assoc 2009;16:889–97

- 30.Chaudhry B, Wang J, Wu S, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med 2006;144:742–52

- 31.Shcherbatykh I, Holbrook A, Thabane L, et al. Methodologic issues in health informatics trials: the complexities of complex interventions. J Am Med Inform Assoc 2008;15:575–80

- 32.Weir CR, Staggers N, Phansalkar S. The state of the evidence for computerized provider order entry: a systematic review and analysis of the quality of the literature. Int J Med Inform 2009;78:365–74

- 33.Green LW, Kreuter MW. Health Program Planning: an Educational and Ecological Approach. 4th edn New York: McGraw-Hill, 2005

- 34.Shortell SM. Increasing value: a research agenda for addressing the managerial and organizational challenges facing health care delivery in the United States. Med Care Res Rev 2004;61(3 Suppl):12S–30S

- 35.Battista RN, Williams JI, MacFarlane LA. Determinants of preventative practices in fee-for-service primary care. Am J Prev Med 1990;6:6–11

- 36.Daniel M, Green LW, Marion SA, et al. Effectiveness of community-directed diabetes prevention and control in a rural Aboriginal population in British Columbia, Canada. Soc Sci Med 1999;48:815–32

- 37.Greenhalgh T, Robert G, Macfarlane F, et al. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q 2004;82:581–629

- 38.James B, Lazar J. Sustaining and extending clinical improvements: a health system's use of clinical programs to build quality infrastructure. In: Nelson EC, Batalden PB, Lazar JS, eds. Practice-Based Learning and Improvement: A Clinical Improvement Action Guide. 2nd edn Florida: Joint Commission on Accreditation, 2007:95–108

- 39.Armenakis A, Harris S, Mossholder K. Creating readiness for organizational change. Human Relations 1993;46:681–703

- 40.Holt D, Armenakis A, Harris S, et al. Toward a Comprehensive Definition of Readiness for Change: A Review of Research and Instrumentation. Amsterdam, Netherlands: JAI Press, 2006

- 41.Weiner BJ, Amick H, Lee SY. Conceptualization and measurement of organizational readiness for change: a review of the literature in health services research and other fields. Med Care Res Rev 2008;65:379–436

- 42.Nelson E, Batalden P, Godfrey ME. Quality by Design: a Clinical Microsystems Approach. Jossey-Bass, ed. San Francisco, California: Jossey-Bass, 2007

- 43.Malhotra S, Jordan D, Shortliffe E, et al. Workflow modeling in critical care: piecing together your own puzzle. J Biomed Inform 2007;40:81–92

- 44.Weir CR, Nebeker JJ, Hicken BL, et al. A cognitive task analysis of information management strategies in a computerized provider order entry environment. J Am Med Inform Assoc 2007;14:65–75

- 45.Goldzweig CL, Parkerton PH, Washington DL, et al. Primary care practice and facility quality orientation: influence on breast and cervical cancer screening rates. Am J Manag Care 2004;10:265–72

- 46.Hogg W, Rowan M, Russell G, et al. Framework for primary care organizations: the importance of a structural domain. Int J Qual Health Care 2007;20:308–13

- 47.Lorenzi NM, Novak LL, Weiss JB, et al. Crossing the implementation chasm: a proposal for bold action. J Am Med Inform Assoc 2008;15:290–6

- 48.Mintzberg H. The Structuring of Organizations. Englewood Cliffs, New Jersey: Prentice-Hall, 1979

- 49.Li R, Simon J, Bodenheimer T, et al. Organizational factors affecting the adoption of diabetes care management processes in physician organizations. Diabetes Care 2004;27:2312–16

- 50.Thompson L, Sarbaugh-McCall, Norris D. The social impacts of computing: control in organizations. Soc Sci Comput Rev 1989;7:407–17

- 51.Yano EM. Influence of health care organizational factors on implementation research: QUERI Series. Implement Sci 2008;3:29.

- 52.Weir CR, Hicken BL, Rappaport HS, et al. Crossing the quality chasm: the role of information technology departments. Am J Med Qual 2006;21:382–93

- 53.Pawson R, Tilley N. Realistic Evaluations. London: Sage, 1997

- 54.Davis F. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly 1989;13:319–39

- 55.Venkatesh V, Morris M, Davis G, et al. User acceptance of information technology: toward a unified view. MIS Quarterly 2003;27:425–78

- 56.Ajzen I. The theory of planned behavior. Organ Behav Hum Decis Process 1991;50:179–211

- 57.Fishbein M, Ajzen I. Belief, Attitude, Intention and Behavior: an Introduction to Theory and Research. Reading, MA: Addison-Wesley, 1975

- 58.Garg AX, Adhikari NK, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA 2005;293:1223–38

- 59.Kaushal R, Shojania KG, Bates DW. Effects of computerized physician order entry and clinical decision support systems on medication safety: a systematic review. Arch Intern Med 2003;163:1409–16

- 60.Koppel R. Defending computerized physician order entry from its supporters. Am J Manag Care 2006;12:369–70

- 61.Shekelle PG, Morton SC, Keeler EB. Costs and Benefits of Health Information Technology. Evidence Report/Technology Assessment No. 132. (Prepared by the Southern California Evidence-based Practice Center under Contract No. 290-02-0003.) AHRQ Publication No.06-E006. Rockville, MD: Agency for Healthcare Research and Quality; April 2006

- 62.Kaplan HC, Brady PW, Dritz MC, et al. The influence of context on quality improvement success in health care: A systematic review of the literature. Milbank Q 2010;88:500–59

- 63.Blumenthal D, Tavenner M. The “Meaningful Use” regulation for electronic health records. NEJM 2010. http://healthcarereform.nejm.org/?p=3732 (accessed 15 Jul 2010).

- 64.Albanese M, Mejicano G, Xakellis G. Physician practice change II: implications of the integrated systems model (ISM) for the future of continuing medical education. Acad Med 2009;84:1056–65

- 65.Albanese M, Mejicano G, Xakellis G, et al. Physician practice change I: a critical review and description of an Integrated Systems Model. Acad Med 2009;84:1043–55

- 66.Institute of Medicine. Initial National Priorities for Comparative Effectiveness Research. Washington, DC: National Academies, 2009

- 67.Holden R, Karsh BT. A theoretical model of health information technology usage behavior with implications for patient safety. Behav Inform Technol 2009;28:21–38