Abstract

Objective

To assess whether a low level of decision support within a hospital computerized provider order entry system has an observable influence on the medication ordering process on ward-rounds and to assess prescribers' views of the decision support features.

Methods

14 specialty teams (46 doctors) were shadowed by the investigator while on their ward-rounds and 16 prescribers from these teams were interviewed.

Results

Senior doctors were highly influential in prescribing decisions during ward-rounds but rarely used the computerized provider order entry system. Junior doctors entered the majority of medication orders into the system, nearly always ignored computerized alerts and never raised their occurrence with other doctors on ward-rounds. Interviews with doctors revealed that some decision support features were valued but most were not perceived to be useful.

Discussion and conclusion

The computerized alerts failed to target the doctors who were making the prescribing decisions on ward-rounds. Senior doctors were the decision makers, yet the junior doctors who used the system received the alerts. As a result, the alert information was generally ignored and not incorporated into the decision-making processes on ward-rounds. The greatest value of decision support in this setting may be in non-ward-round situations where senior doctors are less influential. Identifying how prescribing systems are used during different clinical activities can guide the design of decision support that effectively supports users in different situations. If confirmed, the findings reported here present a specific focus and user group for designers of medication decision support.

Keywords: Decision support, computerized alerts, prescribing, CPOE, qualitative research, observation, interviews, human error

Background and objective

Clinical decision support can facilitate decision-making if it provides the right information at the right time to the right person.1 A computerized decision support system (CDSS) integrated into a computerized provider order entry (CPOE) system has the potential to decrease prescribing errors because it can reduce a prescriber's reliance on memory and increase access to relevant information.2 Several studies have reported a decline in overall medication error rates following the introduction of CPOE with differing levels of CDSS, but the evidence is often inconsistent and far from conclusive.3 4

Evaluations of CPOE and CDSS typically comprise outcome-based evaluations, where the effects of an electronic system on a pre-defined outcome variable, such as medication errors or adherence to guidelines, are assessed. This research evidence is important in determining whether or not such systems should be pursued, but it is now recognized that evaluations in medical informatics that focus on process (ie, how systems are actually used in practice) can add value above and beyond traditional audit approaches.5–8

Observing how people work and interact with technology is an extremely important method for assessing technology usability.9 Observing how users interact with systems in the field allows researchers to determine whether systems are being used in expected (ie, as they were designed to be used) or unexpected ways, and to uncover issues not known or recognized by system users. For example, ethnographic observation has been employed, often in combination with user interviews, to identify unintended consequences of CPOE implementation, issues related to CPOE human–computer interaction, barriers to using computerized alerts, and emotional responses to CPOE introduction.8 10–12

There is now little doubt that ordering medications with computerized decision support can significantly reduce medication error rates in some circumstances.13 14 Research has shown that the most frequent contributing factor associated with prescribing errors is medication knowledge deficiency.15–17 One might therefore expect decision support to be more useful for prescribers who are unfamiliar with the medications they are prescribing, or the patient/condition for which they are prescribing. Surprisingly little research has investigated the impact of medical expertise on use and views of CDSS. One study examined differences between junior (registrar) and senior (consultant) doctors by reviewing data imported from a CPOE system.18 The researchers found that senior doctors, who used the system the least, generated the greatest number of warning messages per prescription and were also more likely to disregard the alerts when they appeared, compared to junior doctors. Consistent with these results, another study found that novice physicians (interns) were less likely than more experienced doctors to override alerts.19

Ward-rounds are one of the most valuable times in a clinician's day for sharing information and engaging in collaborative decision-making.20 Treatment planning, like refining diagnoses or communicating with patients, has been identified as a core ward-round behavior.21 While Sackett et al's seminal work demonstrated that paper-based decision support, in the form of an ‘evidence cart,’ is used on ward-rounds if readily available,22 little is known about how computerized decision support integrated into a CPOE system is used during ward-rounds. It has been highlighted that technology designed for a single user, like computerized decision support, poses challenges to situations, like ward-rounds, where work is performed in a group.23 In this study, we aimed to assess whether a low level of decision support within a hospital CPOE system has an observable influence on the medication ordering process on ward-rounds and to investigate prescribers' views of the system's decision support features in general.

Methods

Details of CDSS

This study was conducted at a teaching hospital with approximately 300 beds in Sydney, Australia. At the time of the study, June–November 2010, all wards were using the CPOE system, MedChart (http://www.isofthealth.com/), except for the emergency department (ED) and the intensive care unit (ICU). Wards (typically 34 beds) varied in the number of computers available, but on average each had eight wireless laptops fixed to lightweight trolleys and eight desktop computers located at clinical workstations. MedChart could be accessed from any computer on the hospital network.

MedChart is an electronic medication management system that links prescribing, pharmacy review, and drug administration. The system is integrated with the hospital's clinical information system, which includes online ordering, and results for laboratory and imaging tests, paging, rostering, and clinical documentation.

Implementation of MedChart (v3.2.112) commenced at the hospital as a pilot in 2005. The pilot was conducted for 1 year in one ward. Roll out to other wards commenced in 2006 and was a gradual process with all wards (except the ED and ICU) using MedChart v3.5.160.40 by mid-2010.

Electronic prescribing in MedChart can be completed in three ways: (1) long-hand prescribing, where the doctor enters all order parameters (eg, dose, administration time, etc) after he/she selects a medication name; (2) ‘quicklists,’ or pre-written orders, where the order parameters are pre-populated; and (3) ‘protocols,’ which are collections of pre-written orders (eg, the blood and marrow transplant hematology protocol includes 26 medication orders).

The CPOE system includes a ‘Reference Viewer’ look-up tool that allows prescribers to access reference information (eg, therapeutic guidelines) by clicking on the ‘Reference Viewer’ tab at the top of the prescribing system screen.

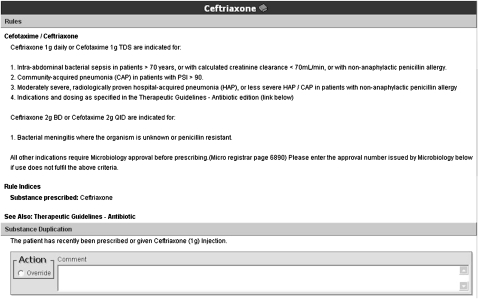

At the time of the study, the CDSS included the following alerts: allergy, pregnancy, therapeutic duplication, and approximately 100 hospital developed rule-based messages (eg, drug and therapeutics committee decisions, antibiotic stewardship guidelines). Drug–drug interaction alerts were not yet operational. The alerts appear to the prescriber immediately following the selection of a medication name. Alerts vary in length but all use Courier 10 font and include a bold heading specifying the alert type (eg, ‘Substance Duplication’). An example alert is given in figure 1.

Figure 1. Example alert. Two alerts (1. Rules and 2. Substance Duplication) were triggered in response to Ceftriaxone being selected.

Approximately half of the alerts were for information only (ie, prescribers were not required to take action), while others required the prescriber to respond. In most cases these alerts could be overridden by ticking a box, but approximately 10% of the alerts required prescribers to enter an override reason into a text box before proceeding with the prescription.
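The three response requirements described above can be sketched as a small data model. This is only an illustrative sketch, not MedChart's implementation: the names `ResponseLevel`, `Alert`, and `can_proceed` are hypothetical, and it assumes that an alert requiring a reason needs only non-empty text to proceed.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class ResponseLevel(Enum):
    """Hypothetical labels for the three kinds of alert described in the text."""
    INFO_ONLY = "info_only"              # information only, no action required
    TICK_OVERRIDE = "tick_override"      # overridden by ticking a box
    REASON_OVERRIDE = "reason_override"  # override reason must be typed in


@dataclass
class Alert:
    alert_type: str        # e.g. "Substance Duplication"
    level: ResponseLevel


def can_proceed(alert: Alert, ticked: bool = False,
                reason: Optional[str] = None) -> bool:
    """Return True if the prescriber's response is enough to continue the order."""
    if alert.level is ResponseLevel.INFO_ONLY:
        return True
    if alert.level is ResponseLevel.TICK_OVERRIDE:
        return ticked
    # REASON_OVERRIDE: assumed to need a non-empty free-text reason
    return bool(reason and reason.strip())
```

Under this model, an information-only alert never blocks the order, which is consistent with the observation that such alerts can be passed over without any interaction.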

MedChart, like all CPOE systems, also includes implicit decision support. This comprised product lists when prescribers initially typed in a medication for prescription and also drop down lists of alternatives when prescribers entered details of an order (eg, once a day, twice a day, once a week, etc).

Participants

Fourteen specialty teams (with an average of three members) were recruited to participate in the observational study via direct approach, phone, or email. To recruit, the researcher circled the wards at various times of the day and approached teams that were commencing a ward-round. This continued until a broad range of specialties agreed to participate. The teams included cardiology, clinical pharmacology, lung transplantation, colorectal surgery, gastroenterology (×2), gerontology (×2), hematology, infectious diseases, nephrology, neurology, and palliative care (×2). In total, 46 doctors were observed during rounds.

Sixteen prescribers from these teams also participated in interviews. After an observation session, the observer (MB) asked team members (usually those seen using MedChart on ward-rounds) to take part in an interview. Of these doctors, three were residents, 10 were registrars (at least 3 years in hospital practice), and three were consultants. Recruitment of prescribers for interviews continued until saturation of content was achieved (ie, no new content was being elicited).

Procedure

Observations

Specialty teams were shadowed by one of the investigators (MB) while on their ward-rounds. Teams typically included one senior doctor (consultant), one (or more) registrar, one (or more) resident, and occasionally interns (first year after graduation) and medical students. Some ward-rounds (5/37) were observed to take place without a senior doctor present. The investigator followed each team as they discussed patient cases and interacted with patients. On occasions where the computer (fixed to a lightweight trolley) was not taken to the patient's bedside, the investigator remained in the hallway with the computer and only accompanied the team to the bedside if invited to do so by a participating doctor. Each team was observed on two or three ward-rounds (except for one team that was observed only once because they reported never using a computer on ward-rounds), resulting in 58.5 h of observation in total. Over this time period, 182 interactions with MedChart were observed.

Observations were recorded via handwritten notes using a coding system to identify interactions and actions. The investigator noted all interactions with the MedChart system. All instances of medication orders being reviewed, entered, edited, or ceased were noted and information was subsequently classified into the following categories: the method used to prescribe (long hand, quicklist, or protocol); whether an alert was triggered during the initiation of an order or the edit of an order; the type of alert triggered; whether the alert was read; whether the prescriber ticked the override box and wrote a comment; whether the supporting documents accompanying the alerts were read; whether a prescription was changed following an alert; and whether the Reference Viewer tool was used. These data were entered into Excel spreadsheets for analysis.
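The classification categories listed above map naturally onto one record per observed medication order. The sketch below is an assumption about how such a coding sheet could be represented, not the spreadsheet layout actually used in the study; the field names, `ObservedOrder`, and `summarize` are hypothetical, and the demo rows are invented purely to show the shape of the data.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ObservedOrder:
    action: str                       # "initiated" or "edited"
    method: Optional[str] = None      # "long hand", "quicklist", or "protocol"
    alert_types: List[str] = field(default_factory=list)
    alert_read: bool = False
    override_ticked: bool = False
    override_comment: bool = False
    supporting_docs_read: bool = False
    prescription_changed: bool = False
    reference_viewer_used: bool = False


def summarize(orders: List[ObservedOrder]) -> dict:
    """Tally the counts that the categories above make possible."""
    with_alert = [o for o in orders if o.alert_types]
    return {
        "orders": len(orders),
        "orders_with_alert": len(with_alert),
        "alerts_by_type": Counter(t for o in orders for t in o.alert_types),
        "alerted_orders_read": sum(o.alert_read for o in with_alert),
        "changed_after_alert": sum(o.prescription_changed for o in with_alert),
    }


# Invented example rows (not study data)
demo = [
    ObservedOrder("initiated", "long hand", ["Therapeutic duplication"], override_ticked=True),
    ObservedOrder("initiated", "long hand"),
    ObservedOrder("edited", None, ["Local rule", "Pregnancy"], alert_read=True),
]
summary = summarize(demo)
```

Keeping one record per order, with a list of alert types, makes it possible to distinguish alerts presented individually from those presented in combination.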

Interviews

Prescribers participated in a 20-min semi-structured interview. During the interview doctors were asked to comment on the decision support (pre-written orders, Reference Viewer, and computerized alerts) as to whether it was useful or bothersome, what features could be improved, and what additional decision support was needed (interview questions are given in the supplementary online appendix).

Analysis

Interviews were audio recorded and transcribed. Three team members independently reviewed the interview transcripts to identify major themes in terms of prescribers' attitudes toward and reported usage of each decision support feature (ie, pre-written orders, Reference Viewer, computerized alerts, product lists, and drop down lists) and their views toward implementing additional decision support. Observational and interview data were also considered in the context of the participant's level of medical expertise (ie, junior vs senior doctors).

Results

Observable impact of decision support on ward-round prescribing

Senior doctors (consultants) were clearly the decision-makers on ward-rounds but rarely used the CPOE system. Only one senior doctor was observed logging onto MedChart and prescribing. Most frequently, a junior doctor (intern, resident, or registrar) adopted the role of ‘electronic prescriber’ and entered medication orders into the system. Consultants told the junior prescribers what medications to order, and were occasionally seen calling out medication orders from a patient's bedside to the junior doctors who stood at computers in the hallway. Decisions about what to prescribe were sometimes not made at the same time as orders were entered into the MedChart system. This was because the order-entry process took too long. After making a treatment decision, teams were often seen to move on to the next patient case while the junior doctor remained behind to enter the medication order into the system and then catch up with the round.

Pre-written orders and the Reference Viewer look-up tool

Of the 96 medication orders entered into MedChart during ward-rounds, 89% were entered using the long-hand method; prescribers rarely prescribed using the ‘quicklist’ or ‘protocol’ methods. Prescribers also seldom used the Reference Viewer (n=6).

Alerts

During prescribing, 48% (n=69) of medication orders were observed to trigger one or more alerts. Table 1 shows the types of alerts received. Many of the therapeutic duplication warnings were observed to be the result of prescribers not using the system correctly (ie, initiating two related orders separately instead of simultaneously). A common example was a regimen that required two different doses of the same drug (eg, frusemide 40 mg in the morning and 20 mg at noon), which prescribers entered as two separate orders.

Table 1. Number of alerts observed for 96 orders initiated and 54 orders edited (n=150 medication orders)

| Alert type | Number of alerts (presented individually) | Number of alerts (presented in combination with another alert) | Total |
| --- | --- | --- | --- |
| Allergy | 0 | 0 | 0 |
| Therapeutic duplication | 28 | 14 | 42 |
| Pregnancy | 1 | 7 | 8 |
| Dose range | 1 | 3 | 4 |
| Local rule | 21 | 9 | 30 |
| Total | | | 84 |
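As a quick arithmetic check, the row totals and the overall total in Table 1 can be recomputed from the two count columns. The snippet below simply re-enters the published counts; the variable names are illustrative.

```python
# (presented individually, presented in combination) per alert type, from Table 1
table1 = {
    "Allergy": (0, 0),
    "Therapeutic duplication": (28, 14),
    "Pregnancy": (1, 7),
    "Dose range": (1, 3),
    "Local rule": (21, 9),
}

# Row totals: individual + combined for each alert type
row_totals = {name: single + combo for name, (single, combo) in table1.items()}

# Column totals and overall total
individual = sum(single for single, _ in table1.values())
combined = sum(combo for _, combo in table1.values())
grand_total = individual + combined

print(row_totals)
print(individual, combined, grand_total)  # 51 33 84
```

The recomputed overall total agrees with the 84 alerts reported in the table.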

Only 17% (n=12) of alerts were ‘read’ by prescribers. The investigator counted an alert as read if the prescriber paused and shifted his/her gaze toward the alert text. No prescriber was seen reading the entire content of an alert. If a response to an alert was required, prescribers ticked the override tick box and rarely provided a reason for overriding the alert. No prescriptions were changed following the presentation of an alert and no junior doctor was observed mentioning the alert to the team or questioning a senior doctor's decision to prescribe a medication following the triggering of an alert.

Views of decision support

Senior doctors explained that they rarely used the system and junior doctors reported that they completed the majority of prescribing. Because senior doctors had limited experience with MedChart, assessing their views of the decision support was extremely difficult.

Pre-written orders and the Reference Viewer look-up tool

Most prescribers reported a preference for long-hand prescribing over the short-cut methods. They explained that this was because they were not familiar with the other methods or believed that their medical area was too varied to use pre-written orders or order-sets. For example, a registrar (#8) said, ‘I haven't been taught how to use them. I just press the prescribe button.’ Many doctors admitted to never trying the other prescribing methods. Most prescribers perceived the Reference Viewer to be very useful. When asked specifically about use of the tool on ward-rounds, most doctors reported that they would not usually use the tool on ward-rounds because of insufficient time and because the consultants were the ones deciding what medication to prescribe. For example, a resident (#2) said, ‘On a round I wouldn't use Reference Viewer because usually the person who's actually doing the prescribing is the boss who is saying I want this, so you do it.’ Junior doctors reported using the Reference Viewer tool more frequently than senior doctors.

Product lists and drop down lists

Many junior prescribers mentioned the product lists and drop down lists as some of their favorite features of MedChart. These lists were perceived as useful as they ‘prompted’ doctors when they struggled to remember the dose of a drug, or how to spell a drug name. For example, a registrar (#2) said, ‘It means that you can't get the scale of dosing wrong really because it usually comes up with a suggested scale, so there's no capacity for adding or taking a zero for instance.’

Alerts

No prescriber interviewed believed that the alerts were helping him/her decide what drug to prescribe. A registrar (#2) said, ‘It's certainly helpful in, like I say, avoiding errors and mistakes but I don't think it really helps in deciding say what antibiotic or what [anti]hypertensive or whatever because that's a clinical decision.’ Although doctors found the alerts to be irritating, many also mentioned that it was better to be safe than sorry. For example, a resident (#2) said, ‘I think some of the warnings that you then have to override are frustrating but at the same time I do at least half glance at them, so if there's something that I wasn't aware of then I'd probably look at it further.’

Some doctors explained that the alerts appeared after they had already made their prescribing decision and often provided them with information that they already knew. A registrar (#8) said, ‘The decision to prescribe something is based on your clinical knowledge… by the time you type it in and prescribe it, you've already made that decision.’ Most prescribers believed that they received too many alerts and that most were redundant. A senior doctor (#2) said, ‘It's a bit like the internet, there's just so much there and it's very useful but you kind of need to filter it a bit and you need to have available what's very useful and what's important and the rest of the stuff you kind of need to exclude almost because it's just getting in the way and distracting you if anything.’ Some doctors recognized that they had become desensitized to the alerts and mentioned that receiving too many alerts was leading to quick dismissal of messages. For example, a registrar (#3) said, ‘It pops up so often which can be a very bad thing because you're dismissing it so often that you develop this sort of mechanism, so it can be bad in a sense that sometimes you might miss some important things.’

Some alerts were perceived by prescribers as more useful than others. Therapeutic duplication warnings were seen as the least useful because they appeared when prescribing medications that had been ordered previously as Stat or PRN medications. Allergy alerts were perceived as most useful. Some doctors, both senior and junior, thought that customizing alerts would be helpful, so that for example, no pregnancy warnings appeared in palliative care.

Although senior doctors reported that alerts were extremely bothersome, they appreciated that they may be useful for more junior doctors. A senior registrar (#2) said, ‘On the whole, it's better to be overcautious than under cautious and it all depends on your experience in prescribing. If you have less experience then the prompts might be more useful.’

One consultant suggested that alerts should be more individualized, with many being turned off for senior doctors.

Most doctors either admitted to not reading the warnings, or explained that they skimmed the start of the alert to determine what alert had fired. A registrar (#3) said, ‘I have to admit on a ward round I probably would be reading 10% of all alerts that come up because there's so many and sometimes there's the same ones over and over and so there's just no time when you're in a rushed ward round to actually read the alerts.’

Every doctor reported that the alerts contained too much text and should be shortened. A registrar (#3) said, ‘… it just comes out so big that if you spent the time to read it, it's so much easier to click that button.’ When asked if more distinctive alerts would aid recognition (eg, color coding for different types of alert), all participants agreed. Some prescribers also mentioned that it would be useful if the level of risk was made clear, for example, by color coding alerts according to severity.

Discussion

The low level of decision support within this hospital CPOE system did not have an observable influence on the medication ordering process on ward-rounds. Observing doctors revealed that users of the CPOE system on ward-rounds are rarely those who make the prescribing decisions. Consultants were rarely observed using the system (only one was seen prescribing) and reported that they seldom ordered medications using the system. Even when the computer was integrated into the patient encounter and medication orders were entered in real time as decisions were being made, it was the junior doctor using the computer who encountered the computerized alerts. No junior doctor was observed questioning a senior doctor's decision following the triggering of an alert and no prescription was changed following the triggering of an alert, reinforcing previous findings of the central decision-making role of senior clinicians during ward-rounds.24 25

During interviews, it was often difficult to determine whether senior doctors felt more negatively about the decision support components compared to junior doctors because so few used the CPOE system and were exposed to its features.

This work highlights the value of conducting process evaluations of this kind. It was only through observing and talking to prescribers that we were able to determine that the doctors receiving alerts on ward-rounds are often not those deciding what medication to prescribe, its dose, frequency, etc. Nor were alerts a source of education in terms of triggering discussions between senior and junior doctors. This result suggests that the greatest value of decision support in this setting may be in non-ward-round situations. Junior doctors are often required to prescribe medications without a senior doctor being present, including after-hours and on weekends. In this capacity, well-designed decision support may be useful as a learning and safety tool for junior doctors. Identifying how prescribing systems are used during different clinical activities can guide the design of decision support that effectively supports users in different situations. To date, there has been little discussion of this in the literature. One suggestion arising from this study is that all alerts (perhaps excluding allergy alerts) should be switched off during ward-rounds, as they appear to provide no benefit and contribute to a learned behavior of ignoring alerts.

The decision support features currently available within the MedChart system were not all perceived to be useful by prescribers. Prescribers liked the Reference Viewer, but did not like or use the Quicklists and Protocols. The main reason for under-utilization of these system short-cuts was a lack of awareness of their usefulness, indicating a need for further training. This is a key lesson for other sites, namely that the availability of quick prescribing methods does not guarantee their use. Training has consistently been identified as an essential component of CPOE implementation,26 but it is difficult to know how much training to administer, when and where, and also to determine if training is effective in achieving proficient prescribing. A field evaluation, like the one completed here, is a useful tool for determining whether the skills and knowledge acquired during training translate into safe and effective task performance.

The computerized alerts were not perceived to be useful in aiding prescribing decisions by either junior or senior doctors. Prescribers infrequently read alerts because they received too many and many were repeated and predictable (ie, prescribers familiar with the system knew when an alert would be triggered). Doctors reported that many alerts were irrelevant and the alert content was often too long. These problems have previously been identified as factors that limit the use and effectiveness of computerized alerts27–29 and highlight how complex a process designing effective decision support can be.

While both junior and senior doctors recognized the potential for well-designed alerts to prevent some medication errors, most explained that the alerts were not helping them to decide what drug to prescribe, its dose, etc. Senior doctors were particularly adamant that their decisions are based on their clinical knowledge, not on information contained within alerts. Junior doctors admitted to ignoring alerts on ward-rounds because of insufficient time and because the decision to prescribe a medication had been made by a senior doctor. Contributing to this failure of alerts to influence prescribing on ward-rounds was the finding that some medical teams decided what medication to prescribe (and associated parameters) well before the order was entered into the system. When a junior prescriber was confronted with an alert advising that a change to an order was recommended, the decision, often made by the senior doctor, had already been made.

Limitations

This study was conducted at one site and so results may not be generalizable to other settings. Participants were observed during ward-rounds only, and prescribers use the system to prescribe throughout the day and night. Based on the results presented here, we hypothesize that the likelihood of alerts being read by junior doctors is greater in the absence of senior doctors, and that the Reference Viewer tool is used more frequently by junior doctors in non-ward-round situations. Further studies will test these hypotheses.

Conclusion

It has been suggested that clinical decision support can facilitate clinical decision-making if it provides the right information at the right time to the right person.1 Observations and interviews allowed us to determine that decision support is not having an observable influence on the medication ordering process that takes place on ward-rounds. Senior doctors were the decision makers, yet the junior doctors who used the system received the alerts. Identifying how prescribing systems are used during different clinical activities is important for designing decision support that effectively supports users in making appropriate prescribing decisions. The findings from this study suggest that the greatest value of decision support may be in non-ward-round situations where senior doctors are not available. If confirmed, this presents a specific focus and user group for designers of medication decision support.

Acknowledgments

The authors would like to thank Dr Darren Roberts for his help with participant recruitment.

Footnotes

Funding: This research was supported by NH&MRC Program Grant 568612. This funding source had no involvement in the design, data collection, data analysis, interpretation of data, writing of the report, or decision to submit this paper for publication.

Competing interests: None.

Ethics approval: Ethics approval was obtained from the human research ethics committee of the hospital and the University of New South Wales.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Wright A, Phansalkar S, Bloomrosen M, et al. Best practices in clinical decision support. Appl Clin Inform 2010;1:331–45

- 2. Patterson ES, Cook RI, Render ML. Improving patient safety by identifying side effects from introducing bar coding in medication administration. J Am Med Inform Assoc 2002;9:540–53

- 3. Reckmann MH, Westbrook JI, Koh Y, et al. Does computerized provider order entry reduce prescribing errors for hospital inpatients? A systematic review. J Am Med Inform Assoc 2009;16:613–23

- 4. Schedlbauer A, Prasad V, Mulvaney C, et al. What evidence supports the use of computerized alerts and prompts to improve clinicians' prescribing behavior? J Am Med Inform Assoc 2009;16:531–8

- 5. Kushniruk AW, Patel VL. Cognitive evaluation of decision making processes and assessment of information technology in medicine. Int J Med Inform 1998;51:83–90

- 6. Kaplan B. Evaluating informatics applications--some alternative approaches: theory, social interactionism, and call for methodological pluralism. Int J Med Inform 2001;64:39–56

- 7. Holden RJ. Cognitive performance-altering effects of electronic medical records: An application of the human factors paradigm for patient safety. Cogn Technol Work 2011;13:11–29

- 8. Russ AL, Zillich AJ, McManus MS, et al. A human factors investigation of medication alerts: barriers to prescriber decision-making and clinical workflow. AMIA Annu Symp Proc 2009;2009:548–52

- 9. Nielsen J. Usability Engineering. San Diego: Academic Press, 1993

- 10. Ash JS, Sittig DF, Dykstra RH, et al. Categorizing the unintended sociotechnical consequences of computerized provider order entry. Int J Med Inform 2007;76(Suppl 1):S21–7

- 11. Campbell EM, Guappone KP, Sittig DF, et al. Computerized provider order entry adoption: implications for clinical workflow. J Gen Intern Med 2008;24:21–6

- 12. Guappone KP, Ash JS, Sittig DF. Field evaluation of commercial Computerized Provider Order Entry systems in community hospitals. AMIA Annu Symp Proc 2008:263–7

- 13. Coiera E, Westbrook J, Wyatt J. The safety and quality of decision support systems. Yearb Med Inform 2006:20–5

- 14. Garg AX, Adhikari NK, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA 2005;293:1223–38

- 15. Bobb A, Gleason K, Husch M, et al. The epidemiology of prescribing errors: the potential impact of computerized prescriber order entry. Arch Intern Med 2004;164:785–92

- 16. Lesar TS, Briceland LL, Delcoure K, et al. Medication prescribing errors in a teaching hospital. JAMA 1990;263:2329–34

- 17. Lesar TS, Briceland L, Stein DS. Factors related to errors in medication prescribing. JAMA 1997;277:312–17

- 18. Anton C, Nightingale PG, Adu D, et al. Improving prescribing using a rule based prescribing system. Qual Saf Health Care 2004;13:186–90

- 19. Weingart SN, Toth M, Sands DZ, et al. Physicians' decisions to override computerized drug alerts in primary care. Arch Intern Med 2003;163:2625–31

- 20. Busby A, Gilchrist B. The role of the nurse in the medical ward round. J Adv Nurs 1992;17:339–46

- 21. O'Hare JA. Anatomy of the ward round. Eur J Intern Med 2008;19:309–13

- 22. Sackett DL, Straus SE. Finding and applying evidence during clinical rounds: the “evidence cart”. JAMA 1998;280:1336–8

- 23. Morrison C, Jones M, Blackwell A, et al. Electronic patient record use during ward rounds: a qualitative study of interaction between medical staff. Crit Care 2008;12:148–52

- 24. Walton JM, Steinert Y. Patterns of interaction during rounds: implications for work-based learning. Med Educ 2010;44:550–8

- 25. Pearson SA, Rolfe I, Smith T, et al. Intern prescribing decisions: few and far between. Educ Health (Abingdon) 2002;15:315–25

- 26. Ash JS, Fournier L, Stavri PZ, et al. Principles for a successful computerized physician order entry implementation. AMIA Annu Symp Proc 2003:36–40

- 27. Glassman PA, Simon B, Belperio P, et al. Improving recognition of drug interactions: benefits and barriers to using automated drug alerts. Med Care 2002;40:1161–71

- 28. Ahearn MD, Kerr SJ. General practitioners' perceptions of the pharmaceutical decision-support tools in their prescribing software. Med J Aust 2003;179:34–7

- 29. van der Sijs H, Aarts J, Vulto A, et al. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc 2006;13:138–47