Abstract

Objective

Expert authorities recommend clinical decision support systems to reduce prescribing error rates, yet large numbers of insignificant on-screen alerts presented in modal dialog boxes persistently interrupt clinicians, limiting the effectiveness of these systems. This study compared the impact of modal and non-modal electronic (e-) prescribing alerts on prescribing error rates, to help inform the design of clinical decision support systems.

Design

A randomized study of 24 junior doctors each performing 30 simulated prescribing tasks in random order with a prototype e-prescribing system. Using a within-participant design, doctors were randomized to be shown one of three types of e-prescribing alert (modal, non-modal, no alert) during each prescribing task.

Measurements

The main outcome measure was prescribing error rate. Structured interviews were performed to elicit participants' preferences for the prescribing alerts and their views on clinical decision support systems.

Results

Participants exposed to modal alerts were 11.6 times less likely to make a prescribing error than those not shown an alert (OR 11.56, 95% CI 6.00 to 22.26). Those shown a non-modal alert were 3.2 times less likely to make a prescribing error (OR 3.18, 95% CI 1.91 to 5.30) than those not shown an alert. The error rate with non-modal alerts was 3.6 times higher than with modal alerts (95% CI 1.88 to 7.04).

Conclusions

Both kinds of e-prescribing alerts significantly reduced prescribing error rates, but modal alerts were over three times more effective than non-modal alerts. This study provides new evidence about the relative effects of modal and non-modal alerts on prescribing outcomes.

Keywords: Electronic prescribing, medication alert systems, clinical decision support systems, medication errors, medical order entry systems, decision support systems, medication alerts, human factors, warnings

Introduction

Medication errors are the third most prevalent source of reported patient safety incidents in England,1 and prescribing errors are the most important cause of medication errors.2 The EQUIP study3 showed that, in UK hospitals, errors are made in about 9% of prescriptions, and, in primary care, errors have been identified in up to 11% of prescriptions.4 The Department of Health has recommended the wider use of electronic (e-) prescribing to reduce the risk of medication errors.5 Experience in the USA has shown that e-prescribing has the potential to reduce the rate of serious medication errors by up to 81%.6 In the UK, the use of computers in prescribing is well established in primary care, whereas e-prescribing in hospitals is limited to a small number of sites.

While e-prescribing can prevent many errors through legibility and ensuring completeness of prescriptions, more sophisticated systems linked to an electronic health record have the potential to reduce errors further and improve clinical practice. E-prescribing systems with clinical decision support (CDS) can check automatically for allergies, dose errors, and drug–drug and drug–disease interactions and provide immediate warning and guidance, allowing the prescriber to make appropriate changes before a prescription is finalized.6 7 There is evidence that using CDS in conjunction with e-prescribing reduces medication errors8 and improves practitioner performance.9 10 A Cochrane review of the evidence for computerized advice on drug dosage to improve practice11 found significant benefits, including reduced risk of toxic dose (rate ratio 0.45) and reduced length of hospital stay (standardized mean difference −0.35 days).

Despite the benefits they may bring to clinical practice, there is wide variability in the adoption of recommendations generated by CDS systems, with up to 96% of alerts being overridden or ignored.12 A systematic review has identified a range of factors that adversely affect the utilization of CDS, including unsuitable content of alerts, excessive frequency of alerts, and alerts causing unwarranted disruption to the prescriber's workflow.13 Disruption in this context is caused by the alert appearing within a ‘modal’ dialog box, which prevents user interaction with the system outside of the dialog box. In the human–computer interaction literature, the term ‘modality’ in its most general sense refers to the devices and means through which a human and computer may interact. In the current work, modal instead refers to the specific user interface design concept of a dialog box that requires the user to interact with it before they can interact with other user interface controls.14 In this way, the control of the user interface is temporarily taken over by the computer, causing an interruption of user control as they attempt to carry out a prescribing task. A ‘non-modal’, or modeless, dialog box does not prevent users from interacting with the rest of the system.

The problem of an intolerably large number of inaccurate or insignificant modal alerts is a common criticism of CDS systems.15–17 Alerts displayed in circumstances that could result in significant harm are usually presented to users with the same degree of workflow disruption as messages with lesser clinical consequences, causing the phenomenon referred to as ‘alert fatigue’.18 Users become fatigued by excessive numbers of inappropriate alerts, and consequently may bypass warnings that could prevent adverse events or improve practice.17 19 The abundance of false positive alerts has been attributed to the limited quality of the knowledge bases underlying CDS systems, poorly coded patient data, a failure to discriminate the incidence of drug interactions in patient populations, and the severity of adverse events when they occur.19–23

Although the relevance and utility of CDS alerts may be increased by improvements in data quality and decision support logic, little is known about which user interface design features of alerts are associated with an impact on prescribing errors, and there are few empiric studies assessing the effectiveness of different approaches.24 A recent systematic review of human factors research in e-prescribing alerts has highlighted the problem of the lack of acceptance of alerts in clinical systems and emphasized the need for further evidence supporting the design of alerts.25 Tailoring the degree of prescribing workflow disruption according to the severity of the alert, so that the most appropriate warning mechanism can be used, may improve the effectiveness of CDS systems.13 19 22 24 25 However, although minimizing the workflow disruption caused by low-severity alerts may reduce alert fatigue, there are no common standards that stratify alert conditions by severity,19 25 26 and the use of non-modal alerts may lead to patient harm if they do not significantly change prescribing behavior.27 No studies have directly compared the effect of modal alerts and non-modal alerts. Current evidence in the literature is therefore inadequate to support the role of non-modal alerts in e-prescribing.

Aim of the study

The aim of our study was to compare the effect of modal and non-modal CDS alerts on e-prescribing errors. We presented clinical scenarios with in-built potential prescribing errors to junior doctors and evaluated how these different types of alert influenced their prescribing performance. We believe that our results yield valuable evidence for system developers, human factors researchers, and policy makers.

Methods

Study design

We implemented a scenario-based randomized study to assess the effect of three types of CDS alert on e-prescribing errors: (1) modal; (2) non-modal; (3) no alert (control). Each participant was observed performing 30 simulated clinical scenarios using a prototype e-prescribing system with a CDS feature. Every scenario involved a specific prescribing task associated with an error. For each scenario, participants were assigned to one of the three types of alert, to be displayed should the participant make the predefined prescribing error. Participants seeing an alert would therefore be warned of their error and be able to correct it. The presence or absence of the prescribing error at the end of each scenario was recorded by the observer without it being reported to the participant.

We used a within-participant design to reduce the effect of individual differences in performance. We partitioned the set of 30 scenarios into a ‘calibration set’ of nine scenarios and a ‘randomized set’ of 21 scenarios (table 1). Each participant was randomized to one of three groups, which determined the type of alert (modal, non-modal, no alert) presented for each of the randomized set scenarios (figure 1). The type of alert allocated to each of the calibration set scenarios was the same for all participants. Overall, the participants were exposed to equal numbers of the three alert types. The order in which scenarios were carried out was as follows (table 2): the nine scenarios of the calibration set were performed in identical order by all participants, comprising the first five and final four scenarios for each participant. The 21 randomized set scenarios were completed in random order as the 6th to 26th scenarios. The calibration set therefore acted as ‘book ends’ in the sequence of scenarios performed.

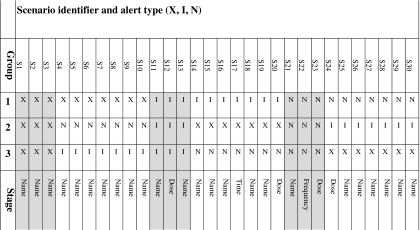

Table 1.

Assignment of alert type to scenario for the three randomization groups and the stage during the prescribing workflow when the alert was presented


S1 to S30 = scenario identifiers.

X = no alert (control); I = modal alert; N = non-modal alert; Grey background = calibration set scenarios; White background = randomized set scenarios; Name = Alert appeared when the drug name was specified; Dose = Alert appeared when the dose was specified; Frequency = Alert appeared when the frequency was specified; Time = Alert appeared when the administration time was specified.

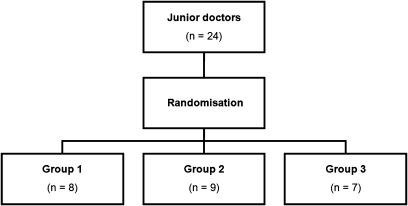

Figure 1.

Randomization of participants to alert type assignment group.
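One way to obtain the balance described above, where every group meets each alert type equally often across the randomized set, is to rotate the alert types over blocks of scenarios. The following is only a minimal sketch under that assumption: the scenario labels `R1` to `R21`, the block structure, and the function name are hypothetical, and the actual allocation used in the study is the one given in table 1.

```python
# Hypothetical rotation scheme; the study's real allocation is in table 1.
ALERT_TYPES = ("modal", "non-modal", "none")


def build_assignment(scenario_ids, n_groups=3):
    """Map each group to a {scenario: alert type} table using a cyclic rotation."""
    n_types = len(ALERT_TYPES)
    if len(scenario_ids) % n_types != 0:
        raise ValueError("scenario count must be divisible by the number of alert types")
    block_size = len(scenario_ids) // n_types
    blocks = [
        scenario_ids[i * block_size:(i + 1) * block_size] for i in range(n_types)
    ]
    assignment = {}
    for group in range(n_groups):
        per_scenario = {}
        for block_index, block in enumerate(blocks):
            # Shift the alert type by the group index so each block cycles
            # through all three alert types across the three groups.
            alert = ALERT_TYPES[(block_index + group) % n_types]
            for scenario in block:
                per_scenario[scenario] = alert
        assignment[group] = per_scenario
    return assignment


randomized_ids = [f"R{i}" for i in range(1, 22)]  # placeholder labels, 21 scenarios
table = build_assignment(randomized_ids)
```

With 21 scenarios this gives each group seven scenarios per alert type, and each scenario is seen under all three alert types across the groups.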

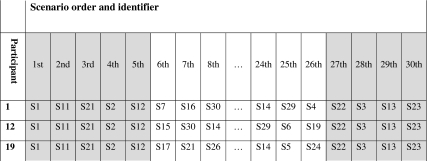

Table 2.

Ordering of scenarios for three participants (illustrative)


S1 to S30 = scenario identifiers.

Grey background = calibration set scenarios (constant order); White background = randomized set scenarios (randomized order).
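The ‘book end’ ordering can be sketched in a few lines. This is an illustration rather than the experimental software itself: the labels `C1` to `C9` and `R1` to `R21` are placeholders, and the seed is arbitrary.

```python
import random


def scenario_order(calibration, randomized, rng, n_leading=5):
    """Return one participant's sequence: fixed calibration scenarios at both
    ends, with the randomized set shuffled in between."""
    middle = list(randomized)
    rng.shuffle(middle)  # random order for the randomized set only
    return list(calibration[:n_leading]) + middle + list(calibration[n_leading:])


calibration = [f"C{i}" for i in range(1, 10)]   # placeholder labels, fixed order
randomized = [f"R{i}" for i in range(1, 22)]    # placeholder labels
order = scenario_order(calibration, randomized, random.Random(2011))
```

Positions 1 to 5 and 27 to 30 are then identical for every participant, while positions 6 to 26 differ between participants.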

Selection and recruitment of participants

The study participants were doctors within their first two years after graduation, all working in the same London teaching hospital. Rather than inviting volunteers, we recruited participants by contacting them via their pagers, working directly from the hospital's roster of 68 junior doctors. Participants did not receive financial compensation for their involvement in the study.

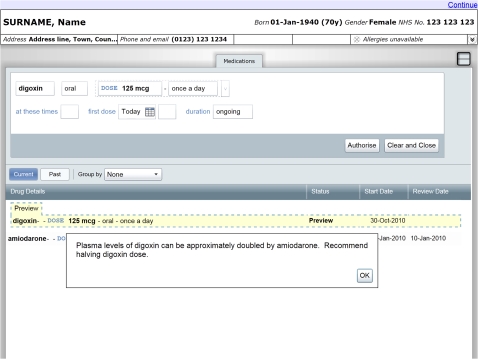

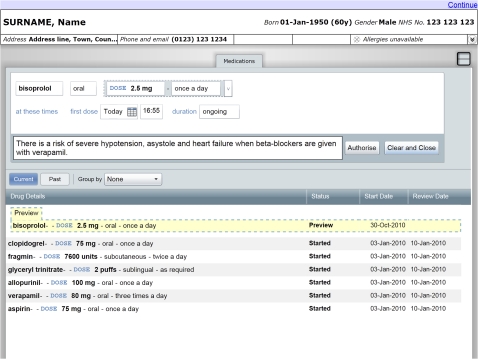

E-prescribing system and alert presentation

We used a prototype e-prescribing system developed by the Department of Health Informatics Directorate (DHID) in partnership with Microsoft (figures 2 and 3). The system features an electronic drug chart displaying the medications a patient is prescribed, together with widgets to search for and prescribe new medications. To create a prescription, the participant used a sequence of user interface widgets to specify the name, route, dose, and frequency of the medication. The administration times and time of the first dose could also be changed from the initial value automatically set according to the frequency. We extended the prototype to display decision support alerts at any of these stages in the prescribing workflow. The participant could abort the process at any point by pressing the ‘Clear and Close’ button. The participant finalized a prescription by pressing the ‘Authorise’ button, which added a corresponding entry to the electronic drug chart.

Figure 2.

Prototype e-prescribing system showing modal alert.

Figure 3.

Prototype e-prescribing system showing non-modal alert.

Alerts were displayed in either modal (figure 2) or non-modal forms (figure 3). Modal alerts included an ‘OK’ button in the corner of the alert window. The user interface prevented participants from authorizing a prescription until this button was pressed, after which the alert was hidden and the user could continue to use the prescribing widgets. Non-modal alerts did not include a button, did not interrupt prescribing workflow, and remained on screen until the prescription in progress was either authorized or canceled. Where possible, we minimized the remaining differences in user interface design between the two alert types while reflecting the design of alerts seen in commercially available systems. These design decisions were informed by expert opinion from the Common User Interface program of DHID. We developed additional software to present the necessary sequence of scenarios and alerts to each participant according to the study design.
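The behavioral difference between the two alert types can be summarized as a small state model. The sketch below is a simplification for illustration only; the class and method names are hypothetical and do not come from the prototype.

```python
from dataclasses import dataclass, field


@dataclass
class PrescriptionForm:
    """Toy model of a prescription in progress (names are illustrative)."""
    modal_alert_open: bool = False
    non_modal_alerts: list = field(default_factory=list)
    authorised: bool = False

    def show_alert(self, text, modal):
        if modal:
            self.modal_alert_open = True        # blocks authorisation until dismissed
        else:
            self.non_modal_alerts.append(text)  # stays visible, never blocks

    def press_ok(self):
        self.modal_alert_open = False           # modal alert is hidden

    def authorise(self):
        if self.modal_alert_open:
            return False                        # 'OK' must be pressed first
        self.authorised = True
        self.non_modal_alerts.clear()           # non-modal alerts leave the screen
        return True

    def clear_and_close(self):
        self.modal_alert_open = False
        self.non_modal_alerts.clear()           # cancelling also removes alerts
```

In this model a non-modal alert never changes what the prescriber is able to do, whereas a modal alert must be acknowledged before the prescription can be authorised.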

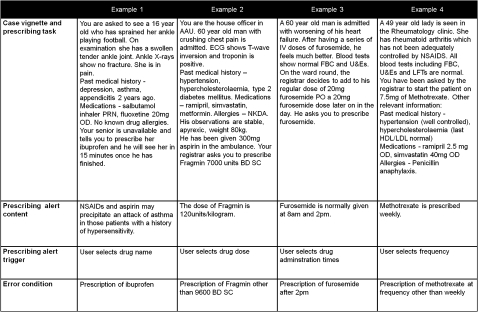

Drafting and piloting of study materials

We developed 30 clinical scenarios containing common prescribing errors seen in hospital practice. To select the errors, we used a report for DHID that identified the drugs most commonly associated with preventable harm.28 On the basis of this report, the following types of errors were included in the study: dose and frequency errors (four scenarios), drug–drug interactions (eight), drug–disease interactions and drug reactions (15), and pregnancy errors (three). The dataset for each scenario comprised the following items (figure 4):

A case vignette containing clinical information and relevant investigations.

A prescribing task, where the senior doctor (registrar) in the scenario instructs the participant (playing themselves) to prescribe one or more drugs.

The prescribing error definition. Carrying out the prescribing task will lead to the prescribing error.

The text of the e-prescribing alert displayed to warn participants of the error (displayed according to the study design).

The stage in the prescribing workflow (selection of drug name, dose, frequency or administration times, see table 1) which triggers the display of the alert (according to the study design).

Figure 4.

Examples of scenarios.

Each prescribing task involved an instruction from a registrar. In 17 scenarios, the registrar described both the drug and dose to prescribe. In five scenarios, only a drug name was specified. In eight scenarios, the participant was instructed to write up one or more items from the patient's normal medication which, in the context of the scenario (eg, via a drug–drug interaction), constituted an error. The pharmacological information in each scenario was verified using the British National Formulary.29

The scenarios were piloted on paper using a standard drug chart with four junior doctors who were not included in the study. The average error rate was 42% (30–60%), suggesting the scenarios were challenging enough for the intervention of prescribing alerts to make a significant difference. Pilot participants rated each scenario for difficulty (easy, moderate, difficult) corresponding to their judgment of the probability of making the prescribing error. Scenarios were evenly distributed between the three randomization groups according to the average difficulty rating. We also piloted the written information to be given to study participants before the experimental exercise to ensure it did not provide any indication of the study's purpose or outcome measures. Four other doctors were observed using early versions of the experimental software, and their comments were taken into account in the final system. Ethics permission for the study was deemed not to be required by the local hospital ethics committee.

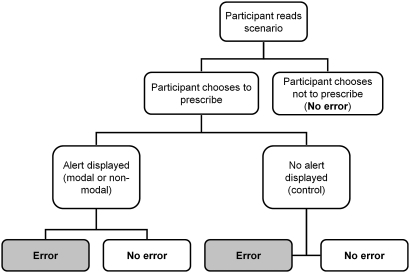

Study execution

The experimental scenarios took place in a senior doctor's office using a Windows XP personal computer with 17-inch screen, keyboard, and mouse. Participants were shown written information about the exercise, which instructed them to carry out the scenarios as they would do if they were using an e-prescribing system in their normal practice (online appendix A). Participants were blinded to the purpose of the study and the outcome measure. Participants performed an introductory ‘sandbox’ scenario to familiarize themselves with the system. This scenario involved prescribing simple analgesia and did not feature alerts. Participants were then observed completing the 30 experimental scenarios (figure 5). For each scenario, the clinical case and associated prescribing task were presented on screen. This information was also made available on paper by the observer, who sat next to the participant. After reading the scenario, the participant either proceeded to the e-prescribing system to enter a prescription or informed the observer that they did not wish to prescribe. Participants could report their thoughts on the case to the observer but were not given further clinical information or advice. Participants who elected to enter a prescription were shown a prescribing alert in accordance with the scenario and study design. Participants were free to continue, amend, or cancel a prescription in response to an alert, and to prescribe any number of drugs. Participants informed the observer when they had finished the task, and proceeded to the next scenario without receiving feedback. There was no time limit imposed on participants.

Figure 5.

Sequence of possible events and outcomes for each scenario.

Data collection methods and error definition

For each scenario, the observer recorded the following data:

whether or not the participant had proceeded to prescribe after reading the case and prescribing task;

whether or not an alert appeared during the prescribing process (the observer was aware of which alert should appear in advance);

whether or not a prescribing error had occurred at the end of the scenario (the main outcome).

A prescribing error was recorded as present when the error condition defined for that scenario was met. A prescribing error was absent if no prescriptions were made, if no prescription met the error criteria, or if the participant informed the observer that they would seek further information or advice before prescribing. The order of the scenarios performed and the type of alert allocated to each scenario were recorded automatically by the experimental software.

After completion of all the scenarios, a semi-structured interview was performed to elicit participants' preferences regarding prescribing alerts and to explore their experience of the e-prescribing system (online appendix B). Participants were not informed of their performance in the study. Data on participant age, gender, grade, specialty, and previous use of e-prescribing were also collected.

Data analysis methods

Data were analyzed with IBM SPSS Statistics V.17 and R V.2.9.0. The Kruskal–Wallis test was used to compare the characteristics of the participants between randomization groups. Mixed effects logistic regression was used to compare the error rates between the three alert types while incorporating other variables of interest. Because repeated observations from the same participant are likely to be positively correlated, the analysis must account for this correlation using methods appropriate to longitudinal data. Standard logistic regression assumes independent observations and therefore cannot accurately characterize this dependence. The random effects approach allows the correlation between the repeated measurements to be incorporated into the parameter estimates.30
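A random-intercept logistic model of this kind can be written as follows. The notation is ours and is an assumption about the exact specification, which is not spelled out in the text: $Y_{ij}$ is the binary outcome for participant $i$ on scenario $j$, $M_{ij}$ and $N_{ij}$ indicate that a modal or non-modal alert was assigned (with no alert as the reference), and $u_i$ is the participant-level random effect.

```latex
\operatorname{logit}\,\Pr(Y_{ij} = 1 \mid u_i)
  = \beta_0 + \beta_1 M_{ij} + \beta_2 N_{ij} + u_i,
\qquad u_i \sim \mathcal{N}(0, \sigma_u^2)
```

Under this form, $\exp(\beta_1)$ and $\exp(\beta_2)$ are the ORs for modal and non-modal alerts relative to no alert, and the shared $u_i$ induces the positive correlation between repeated observations on the same participant.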

Results

Participant characteristics

Twenty-four (12 male, 12 female) junior doctors completed the experiment and post-test interview. The average age of participants was 27 years (24–43). Twenty-two of the participants (92%) were in their first year after graduation. None of the participants had previously used e-prescribing or a CDS system in their working practice. The average time to complete the 30 scenarios was 41 min (29–60). Kruskal–Wallis tests showed no difference in the distribution of the age (p=0.590) or gender (p=0.958) between the three randomization groups. Three participants had their sessions temporarily halted by the need to respond to hospital pagers, and one participant's session was interrupted by an unexpected operating system reboot. In these four cases, the experiment was paused for several minutes until the participants were able to continue.

Effect of alert type on prescribing error

Table 3 shows the overall range of the number of errors made by the 24 participants on the scenarios of the study. Table 4 shows the main outcomes by alert type for the randomized set of 21 scenarios (n=504 tasks).

Table 3.

Summary of overall error rates on the scenarios of the calibration set, the randomized set, and the set of all scenarios

| Scenario set | N (scenarios in set) | Minimum number of participants making an error on a scenario (%) | Maximum number of participants making an error on a scenario (%) | Mean number of participants making an error per scenario (%) | SD |
|---|---|---|---|---|---|
| Calibration set | 9 | 2 (8.3%) | 19 (79.2%) | 7.44 (31.0%) | 6.65 |
| Randomized set | 21 | 1 (4.2%) | 14 (58.3%) | 7.14 (29.8%) | 3.77 |
| All scenarios | 30 | 1 (4.2%) | 19 (79.2%) | 7.23 (30.1%) | 4.70 |

The range, mean, and SD of the number of participants who made an error on the scenarios of each of the three sets are shown. For example, on the most difficult scenario in the randomized set, 14 of the 24 participants (58.3%) made an error. In table 4, percentages (error rates) were calculated by dividing the total number of errors made on scenarios assigned to each alert type by 168, that is, the total number of times scenarios assigned to that alert type were performed.

Table 4.

Outcomes by alert type for the randomized set scenarios

Outcomes for randomized set scenarios (n=504 tasks)

| Alert type | Number of errors (rate) |
|---|---|
| Modal | 17 (10.1%) |
| Non-modal | 46 (27.4%) |
| No alert (control) | 87 (51.8%) |
| Totals | 150 (29.8%) |
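The error rates in table 4 follow directly from the counts, and the same counts give crude (unadjusted) odds ratios. The sketch below is a worked illustration, not the study's analysis: the crude ratios treat all tasks as independent, so they are expected to differ from the mixed effects estimates, which account for repeated measurements within participants. Function and variable names are ours.

```python
# Error counts per alert type from table 4 (randomized set).
errors = {"modal": 17, "non-modal": 46, "none": 87}
TASKS_PER_ALERT_TYPE = 168  # 504 tasks split equally over three alert types


def error_rate(n_errors, n_tasks=TASKS_PER_ALERT_TYPE):
    """Proportion of tasks that ended with the predefined prescribing error."""
    return n_errors / n_tasks


def crude_odds_ratio(alert, reference="none"):
    """Crude odds of avoiding an error with `alert`, relative to `reference`.
    Ignores within-participant correlation, unlike the mixed effects model."""
    def odds_no_error(kind):
        return (TASKS_PER_ALERT_TYPE - errors[kind]) / errors[kind]
    return odds_no_error(alert) / odds_no_error(reference)


for kind, n in errors.items():
    print(f"{kind}: error rate {error_rate(n):.1%}")
print(f"crude OR, modal vs no alert: {crude_odds_ratio('modal'):.2f}")
print(f"crude OR, non-modal vs no alert: {crude_odds_ratio('non-modal'):.2f}")
```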

It is possible that the external interruptions to the experimental sessions of four participants (see Participant characteristics) had important effects on the outcomes recorded in those cases. We therefore present a repeat of this analysis that excludes the data of the participants whose sessions were interrupted (online appendix C). The same result is still obtained.

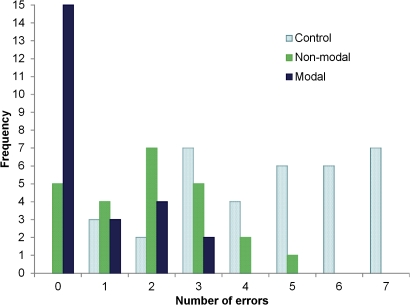

We plotted the distribution of error frequency in the randomized set of scenarios for each of the three alert types (figure 6). The distribution was skewed, with a reduced error frequency for non-modal and modal alerts compared with controls. In the scenarios lacking a prescribing alert, three of the 24 participants (12.5%) made zero or one errors. This increased to nine of 24 participants (37.5%) for scenarios shown with non-modal alerts, and further to 18 of 24 participants (75%) for scenarios shown with modal alerts.

Figure 6.

Distribution of error frequency according to alert type (randomized set).

We used mixed effects logistic regression analysis to model the relationship between error rate and alert type for the randomized set of scenarios, including participant age and gender as independent variables. The alert type variable was significant (p<0.0001; table 5). The OR, here the ratio of the predicted odds of avoiding an error with an alert type versus control, was 11.561 (95% CI 6.00 to 22.26) for modal alerts and 3.182 (95% CI 1.91 to 5.30) for non-modal alerts. Participants presented with modal alerts were approximately 12 times less likely to make an error than controls (not shown an alert), and those shown a non-modal alert were approximately three times less likely to make a prescribing error than controls. Participants shown modal alerts were 3.6 times less likely to make an error than those shown non-modal alerts (95% CI 1.88 to 7.04). Participant age (p=0.294) and gender (p=0.734) were not found to be significantly associated with error rates in the logistic regression analysis (table 5).

Table 5.

Mixed effects logistic regression analysis

Model involving alert type only: analysis of maximum likelihood estimates

| Parameter | DF | Estimate | SE | z-Value | Pr>z |
|---|---|---|---|---|---|
| Intercept | 1 | −0.079 | 0.216 | −0.37 | 0.717 |
| Modal alert | 1 | 2.448 | 0.317 | 7.73 | <0.0001 |
| Non-modal alert | 1 | 1.158 | 0.247 | 4.69 | 0.0006 |

Model involving alert type only: OR estimates

| Parameter | Point estimate | 95% Wald CI (lower) | 95% Wald CI (upper) |
|---|---|---|---|
| Modal alert | 11.561 | 6.003 | 22.262 |
| Non-modal alert | 3.182 | 1.910 | 5.303 |

Model involving alert type, gender and age: analysis of maximum likelihood estimates

| Parameter | DF | Estimate | SE | z-Value | Pr>z |
|---|---|---|---|---|---|
| Modal alert | 1 | 2.259 | 0.329 | 6.875 | <0.0001 |
| Non-modal alert | 1 | 1.164 | 0.266 | 4.381 | <0.0001 |
| Gender | 1 | 0.139 | 0.409 | 0.339 | 0.734 |
| Age | 1 | −0.041 | 0.039 | −1.050 | 0.294 |
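The OR point estimates are the exponentials of the coefficients in the alert-type-only model, and the modal versus non-modal comparison follows from the difference of the two coefficients. The sketch below reproduces that arithmetic. The predicted error probabilities additionally assume that the modeled outcome is ‘no error’ and that the participant's random effect is zero; this coding is our reading of the positive coefficient signs, not something stated in the table.

```python
import math

# Coefficients from the alert-type-only model in table 5.
INTERCEPT = -0.079
ESTIMATES = {"modal": 2.448, "non-modal": 1.158}


def odds_ratio(beta):
    """Convert a logistic regression coefficient to an odds ratio."""
    return math.exp(beta)


def inv_logit(x):
    return 1.0 / (1.0 + math.exp(-x))


def predicted_error_probability(alert=None):
    """Assumes the outcome is coded as 'no error' and a random effect of zero."""
    linear = INTERCEPT + (ESTIMATES[alert] if alert else 0.0)
    return 1.0 - inv_logit(linear)


or_modal = odds_ratio(ESTIMATES["modal"])
or_non_modal = odds_ratio(ESTIMATES["non-modal"])
# Modal versus non-modal: exponentiate the difference of the coefficients.
or_modal_vs_non_modal = odds_ratio(ESTIMATES["modal"] - ESTIMATES["non-modal"])

print(f"modal vs control: {or_modal:.2f}")
print(f"non-modal vs control: {or_non_modal:.2f}")
print(f"modal vs non-modal: {or_modal_vs_non_modal:.2f}")
```

Under these assumptions the predicted error probability without an alert is close to the observed control error rate in table 4, which is consistent with this reading of the coefficient signs.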

To exclude confounding by randomization group, we compared the performance of participants on the calibration set of nine scenarios by including randomization group as an independent variable in the logistic regression analysis. There was no significant difference in error rate between the three randomization groups (p=0.823). We found no significant evidence of overall learning or fatigue effects in the logistic regression analysis, nor when the analysis was carried out separately for modal alerts (p=0.730), non-modal alerts (p=0.301), and controls (p=0.233).

Interview findings

We carried out semi-structured interviews with each of the 24 study participants. We elicited participants' preferences regarding the type of alerts presented during the experiment (box 1). Ten participants preferred the modal form of alert, five preferred the non-modal type, and three participants felt their preference would depend on the scenario. Five participants did not recall seeing the non-modal alerts during the experiment. One doctor, who had the lowest error rate of all participants, was never shown a modal alert because no prescription was attempted in any of the scenarios assigned to a modal alert. We asked the 18 participants who were able to comment which alert type was more noticeable. Sixteen participants felt that the modal alert was the more noticeable, either because of the interruption to workflow caused by the alert or the central position of the alert on screen. The two participants who felt that non-modal alerts were more noticeable attributed this to the proximity of the alert window to the prescribing user interface widgets. Participants described positive and negative feelings toward modal alerts. They acknowledged that the attention-grabbing aspect of workflow interruption might be of benefit in a busy work environment, but that this feature might become irritating over time, especially if used for minor warnings. Several participants had assumed the two types of alert in the experiment to represent different levels of warning severity, attributing less significance to alerts presented non-modally.

Box 1. Views on the type of alert.

“When you are in a rush, the one that pops up is better—it forces you to click on OK.”

“[I prefer] modal—you're likely to miss otherwise. But I recognize the problems, irritating in daily use.”

“Modal tend to be annoying. But if it's something you don't want to miss…”

“There are pros and cons. You could miss more subtly presented alerts. It's annoying to have to say OK to minor warnings.”

“The modal one in the middle makes it seem more serious. When underneath [non-modal] it is like a note.”

There were mixed views on whether the degree of interruption caused by prescribing alerts should be tailored to the severity of alert (box 2). Half the participants believed that this should be a feature of CDS systems, and many offered that modal alerts should be reserved for only the most important circumstances. Several participants suggested tailoring other aspects of alerts such as the textual content and color scheme—for example, by using red borders for alerts of especially high importance. Others preferred to have all alerts displayed in the same way.

Box 2. Views on tailoring alert type to severity of scenario.

“If you're blatantly prescribing something completely contraindicated then show a modal alert whereas if it's a relative contraindication or cautionary, non-modal.”

“If quite serious or dire consequence—[make the alert] in your face, for example, Tazocin [piperacillin and tazobactam] and penicillin allergies. If less so, like asking you to look at or review something - [make the alert] less intrusive.”

“Penicillin allergies could be presented in a different way, for example, a red box.”

“No, regardless of seriousness, it's easier to have one system.”

“If something is a complete contraindication then can emphasize it. Say in words what the change is, rather than change the form of alert.”

“I wouldn't want non-modal at all, maybe if in different colors. If a massive contraindication then bigger, colored. Less so if less major.”

All participants could describe circumstances when they would ignore the recommendation of a prescribing alert (box 3). Most referred to making an overriding clinical judgment, incorporating information unavailable to the CDS system, such as detailed information about the patient or drug, prior experience of similar situations, and senior advice.

Box 3. Views on when to override an alert.

“If you are already aware of it. When you are prepared to take the risk. When the side effect is small so that you might want to override it.”

“By its nature an alert is generalized. There may be a specific clinical situation when you judge the risk versus benefit is acceptable over the risk stated in the alert.”

“When it's a caution, a minor issue. When you know the clinical situation, or seniors know.”

“When a senior says.”

“If it is clearly stated in the BNF [British National Formulary] then override - the alert doesn't necessarily know best.”

A range of views were elicited regarding the advantages and disadvantages of prescribing alerts (box 4). A strong theme emerged of the potential benefits for patient safety. Participants acknowledged that deficits in knowledge, prescribing experience, and concentration could be mitigated by alerts, and the immediacy of information could represent a time saving compared with searching a drug formulary or seeking advice from a pharmacist. Several participants recognized the potential educational value of alerts. Among those who offered negative views, three themes emerged. Five participants mentioned the potential time penalty of handling modal alerts. Participants referred indirectly to the potential for alert fatigue, describing how frequent triggering of insignificant or previously seen alerts may lead clinicians to be generally dismissive of alerts. Several participants expressed concerns that inaccurate or insufficient information available to the CDS system would reduce the specificity of alerts. Interviewees also warned of over-reliance on an automated alerting system and the possible detriment to clinicians' prescribing knowledge. Many of these people highlighted the risks to patient safety of automation bias, if doctors incorrectly take the absence of alerts to mean a prescription is safe.

Box 4. Advantages and disadvantages of alerts.

Advantages

“It might save lives. Some of these errors can be fatal.”

“Potential to reduce drug interactions and medication errors. Increased awareness.”

“Avoiding errors. Learning points, through alerts. Save pharmacist time. Save seniors time.”

“Useful on nights when you're not thinking clearly.”

“Safety netting is a definitive positive. As long as they are not over-obtrusive or slow you down then they're not bad at all.”

“Probably makes you safer. Makes you think. If a reg [senior doctor] tells you to prescribe something, it is easy to just go and do it without thinking about it - this forces you to think about contraindications, interactions, doses”

“Improve your knowledge - if you keep seeing an alert because you keep making the same mistakes.”

“Time saving. Safety”

“Not having to root around for a BNF.”

Disadvantages

“It slows you down, especially if you already know it or you see it all the time.”

“You might get frustrated the more senior you get, ‘yes I know that!’”

“Familiarity breeds contempt. You ignore them after a while. If alerts are present—people come to rely on them, or may come to ignore them later.”

“If an alert pops up—you assume it is the only relevant thing to consider. You take it for granted. You won't look it up. You'll get irritated.”

“Over-dependence—people will stop using the BNF. People will prescribe according to the computer's advice. It is dependent on the quality of the data input and the history.”

“How would it work? The problem with all medicine prescriptions is the clinical context. That is what is missing with the computer system, for example, the case of a penicillin allergy but the doctor finding out it is actually nothing.”

“It seems to have a low threshold for giving alerts, for example, a potassium of 3.3. It doesn't know the overall trends of a patient and what's normal for them. For some users this would be annoying.”

Discussion

We found that junior doctors presented with a modal e-prescribing alert were more than 11 times less likely to make a prescribing error than doctors not shown an alert, and that modal alerts were at least three times as effective as non-modal alerts at reducing errors. To our knowledge, this is the first study to evaluate experimentally, through a randomized design, the differential impact of prescribing alert modalities.

We used a within-participant design to minimize inter-subject differences and learning and fatigue effects. We believe our direct experimental comparison gives a more meaningful assessment of the effects of the e-prescribing workflow disruption caused by modal alerts than previous studies of this subject. Shah et al19 assigned only critical to high severity alerts to be modal within the prescribing workflow, with the remainder being non-modal. This approach led to improved compliance with modal alerts compared with studies that did not stratify alerts, yet the study did not measure prescribing errors or control for alert content or clinical scenario. Our study was designed to eliminate the effects of alert content and scenario difficulty. In a randomized controlled trial of non-modal alerts in promoting requests for baseline laboratory tests, Lo et al18 found that the alerts were not effective in increasing the rate of requests, but the control group was shown no alerts and there was no comparison with modal alerts. It is of note that these authors employ the terminology of ‘interruptive’ and ‘non-interruptive’ alerts, where a non-interruptive (non-modal) alert ‘…provided a warning in a reserved information box on the screen but did not require user intervention to proceed.’ We have avoided these terms to differentiate the specific notion of interruption under investigation in the present study from the more widely accepted understanding of interruption found in the psychological and human–computer interaction literature (for examples, see Trafton et al31 and Li et al32). Many of these studies investigate the effect of interruption of a primary task by a secondary task that is ‘unrelated’, in that it deals with different information from the primary task. The interruption of the modal alerts in our experiment is quite different: the alerts are ‘closely related’ to the primary task (prescribing), in that they contain information that is directly relevant. It is perhaps of little surprise, then, that for the short-term exposure of our experiment, the interruption of the modal alerts reduced prescribing errors.

Seidling et al retrospectively analyzed the acceptance of 50 788 CDS drug–drug interaction alerts using univariate analyses and multinomial logistic regression.33 Ten variables were assessed as potential modulators of alert acceptance, including ratings of alert visibility and the quality of textual information, as well as clinical factors such as hospital setting and patient age. The study also assessed the impact of whether an alert required user acknowledgment—that is, whether the user was forced to interact with the system. All variables reported showed significant impact on alert acceptance in univariate analyses. The factor found to have the largest effect on alert acceptance was whether an acknowledgment was required. Because these ‘interruptive’ alerts were so strongly predictive of alert acceptance, non-interruptive alerts, which were accepted in only 1.4% of cases, were excluded from the multivariate analyses in order to assess the impact of other variables. This study provides supportive evidence for the weaker impact of alerts that do not interrupt prescribing workflow.

We restricted our study to doctors with less than two years of clinical prescribing experience. Junior doctors carry the greatest responsibility for prescribing errors, partly because most prescribing is undertaken by them, but also because they have a self-recognized lack of prescribing knowledge.3 34–36 It is therefore reasonable to study the population with the greatest burden of prescribing and scope for error reduction. It is possible that other groups, such as senior hospital doctors or general practitioners, would respond differently to the interventions tested. These groups may also have had different baseline error rates.3

Despite their lack of experience in e-prescribing, the participants we interviewed raised many of the issues highlighted in previous qualitative work on alerts, including problems of inappropriate warnings, over-interruption and possible over-reliance on alerting systems. Modal alerts were generally preferred and deemed the more noticeable, but there were mixed views about the optimal means of presenting alerts. Participants were generally positive about the potential of prescribing alerts to mitigate the effects of gaps in their knowledge.

Limitations of the present work

Our use of simulated clinical scenarios confers both benefits and limitations on the study. Bespoke scenarios allowed us to test the impact of interventions for rare adverse drug events that may not have been tested adequately in previous studies. Many of the scenarios involved prescribing in acute clinical situations where many errors are known to occur.3 28 37 However, each of our scenarios involved a prescribing task delivered as an instruction from a senior doctor, so participants did not need to choose a prescription for themselves. A weakness of the scenarios is that they did not reflect the autonomy of junior doctors in clinical practice, and tested participants' obedience more than their prescribing initiative. That said, our participants were junior doctors working in a close-knit team on general wards who do often defer to the advice of their more senior colleagues. A further weakness is that the main outcome for a scenario was based on a single binary error definition. This is a simplification of real prescribing practice in which there is a spectrum of risk and harm, multiple errors may be made at the same time, and correcting one error may generate others. Furthermore, participants not prescribing anything would score 0% errors in our study, whereas in reality this level of inaction would not be a successful therapeutic strategy. The decision to categorize no prescription (irrespective of exposure to the alert) as a non-error is unlike a clinical drug trial, where not taking a drug (exposure to the intervention) might lead to both positive and negative effects with respect to the outcome measured. We believe that in our case the study design partly mitigates this because the three alert types (modal, non-modal, control) are treated in the same way and all scenarios are given equal exposure to the three alert types.

The study has a number of other limitations. First, although our randomized design minimizes the effects of scenario difficulty on the findings of the study, only a limited validation of scenarios was performed. We asked four doctors to rate each scenario according to the likelihood of making an error. We did not validate the probability of the scenario occurring in practice, the seriousness of the prescribing error, or the definition of error. Validation of this kind using a Delphi process would facilitate better analysis and improve the generalizability of our results. Several authors have highlighted the limitations of bespoke datasets and the lack of a widely available repository for research and commercial applications.19 22 25 Second, our e-prescribing software was not based on a commercial system, but its design was informed by expert opinion. It is possible that our results reflect certain features particular to our user interface design and may not generalize to other prescribing systems. It is also possible that the difference in error rate seen between the two alert types was a result of differences in their position on screen rather than the degree of workflow interruption they caused. Third, study participants were watched by the observer sitting next to them, and they may have modified their prescribing behavior in response, a Hawthorne effect.38 It is difficult to see how such an effect would have significantly influenced the findings of our study. It may be that participants were more reticent in their prescribing, which would have uniformly reduced the error rate. Participants feeling the need to improve their performance in the task may have been more receptive to all alerts, resulting in a reduction in the difference in error rate between the two alert types. Fourth, participants were observed by a single investigator, and therefore it was not possible to validate the outcome measures recorded other than by corroborating each outcome with additional written notes made by the observer during the scenarios. Fifth, none of the participants had previously used e-prescribing, and they spent on average 41 min using our system. We found no evidence of the fatiguing effects of alerts over the short-term exposure of our study, but it is likely that, with prolonged use, the onset of alert fatigue would have a significant effect on outcomes. The effect of long-term exposure to modal versus non-modal alerts is an important subject for future research.

Future work

Future studies should evaluate the impact of other aspects of the presentation of e-prescribing alerts. There is substantial evidence from areas other than e-prescribing research that attention to both design and language of warnings can have a large effect on the effectiveness of communication.25 39 We are now investigating whether graphical warning symbols included in alerts can reduce the difference in outcomes found in this study. We believe our experimental approach is a robust method for testing many features of prescribing alerts and we are currently developing an internet-based prescribing prototype for application in larger studies. This new system records user activity, such that outcome measures can be validated by ‘replaying’ the actions taken during a scenario.
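As an illustration only, the idea of validating an outcome by 'replaying' recorded user activity can be sketched as follows. This is a minimal sketch under stated assumptions and not the design of the prototype: the `Event` record, the action names (`prescription_signed`, `prescription_cancelled`) and the `replay_outcome` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """One logged user action (hypothetical record layout)."""
    timestamp: float  # seconds since the scenario started
    action: str       # hypothetical names, e.g. "prescription_signed"
    detail: str = ""


def replay_outcome(events: list[Event]) -> str:
    """Replay events in time order and return the final prescribing state."""
    state = "no_prescription"
    for event in sorted(events, key=lambda e: e.timestamp):
        if event.action == "prescription_signed":
            state = "prescribed"
        elif event.action == "prescription_cancelled":
            state = "no_prescription"
        # Other actions (e.g. an alert being shown) do not change the state.
    return state
```

Under these assumptions, the state returned by the replay could be compared with the outcome recorded by the observer for the same scenario, and any disagreement flagged for review.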

Conclusion

Prescribing alerts dramatically reduced error rates, and doctors made significantly fewer errors when an alert interrupted their prescribing workflow. We believe our findings support a role for both modal and non-modal alerts in e-prescribing systems. The smaller error reduction seen with non-modal alerts does not preclude their use within a severity-stratified alerting system, nor does it signal the end for non-modal alerts in general. The distinguishing value of a non-modal alert lies in its minimally intrusive nature. It is possible that a tiered warning system, whereby all alerts are first presented in non-modal form and then become modal if both ignored by the user and sufficiently important, could optimize the utility of both types of alert while reducing alert fatigue. System designers and human factors researchers must implement CDS systems that intelligently optimize the presentation of e-prescribing alerts in order to maximize their effectiveness.
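The tiered warning rule suggested above can be sketched in a few lines. This is an illustrative sketch only and was not implemented or evaluated in the study: the `Alert` fields, the numeric severity scale and the `ESCALATION_SEVERITY` cut-off are assumptions introduced for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Presentation(Enum):
    NON_MODAL = "non-modal"
    MODAL = "modal"


@dataclass
class Alert:
    message: str
    severity: int          # hypothetical scale: higher means more important
    ignored: bool = False  # True once the user has proceeded without acting


# Hypothetical cut-off for "sufficiently important"; the study defines none.
ESCALATION_SEVERITY = 3


def next_presentation(alert: Alert) -> Presentation:
    """Decide how the alert is shown the next time it is presented.

    Every alert starts in non-modal form and becomes modal only if it has
    been ignored AND its severity reaches the cut-off.
    """
    if alert.ignored and alert.severity >= ESCALATION_SEVERITY:
        return Presentation.MODAL
    return Presentation.NON_MODAL
```

Under this rule a low-severity alert never interrupts the prescribing workflow, whereas an ignored high-severity alert is re-presented in modal form. Where the cut-off should lie is an open empirical question.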

Acknowledgments

We are grateful to Dr Pete Johnson who contributed to the development of the software used in the study. We also thank the junior doctors at the Royal London Hospital who participated.

Footnotes

Competing interests: None.

Ethics approval: Ethics permission was not required for this study according to the local hospital ethics committee. The research is classified as service development. All data were recorded in anonymous form and kept confidential.

Contributors: GS: jointly designed the study with PS and JW, co-developed the study software, jointly wrote the article, carried out the data collection and qualitative analysis, and is guarantor for the study. PS: developed the scenario materials, jointly designed the study with GS and JW, and jointly wrote the article. JW: jointly designed the study with GS and PS and carried out critical revision of the article. BM: contributed statistical expertise and carried out the quantitative data analysis. FC: participated in the study design and coordinated recruitment of study participants.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. National Patient Safety Agency. Patient Safety Incident Reports in the NHS National Reporting and Learning System Quarterly Data Summary - England. Issue 14. 2009. www.nrls.npsa.nhs.uk/resources/collections/quarterly-data-summaries/?entryid45=62049 (accessed Oct 2010).

- 2. Barber N, Rawlins M, Franklin BD. Reducing prescribing error: competence, control, and culture. Qual Saf Health Care 2003;12:29–32

- 3. Ashcroft D, Heathfield H, Lewis P. An in Depth Investigation into Causes of Prescribing Errors by Foundation Trainees in Relation to their Medical Education - EQUIP Study. www.gmc-uk.org/FINAL_Report_prevalence_and_causes_of_prescribing_errors.pdf_28935150.pdf (accessed Oct 2010).

- 4. Sanders J, Esmail A. The frequency and nature of medical error in primary care: understanding the diversity across studies. Fam Pract 2003;20:231–6

- 5. Smith J. Building a Safer National Health System for Patients: Improving Medication Safety. Department of Health, 2004. www.dh.gov.uk/en/Publicationsandstatistics/Publications/PublicationsPolicyAndGuidance/DH_4071443 (accessed Oct 2010).

- 6. Bates DW, Teich J, Lee J, et al. The impact of computerized physician order entry on medication error prevention. J Am Med Inform Assoc 1999;6:313–21

- 7. Teich JM, Osheroff JA, Pifer EA, et al; CDS Expert Review Panel. Clinical decision support in electronic prescribing: recommendations and an action plan: report of the joint clinical decision support workgroup. J Am Med Inform Assoc 2005;12:365–76

- 8. Ammenwerth E, Schnell-Inderst P, Machan C, et al. The effect of electronic prescribing on medication errors and adverse drug events: a systematic review. J Am Med Inform Assoc 2008;15:585–600

- 9. Garg AX, Adhikari NK, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA 2005;293:1223–38

- 10. Shojania KG, Jennings A, Mayhew A, et al. The effects of on-screen, point of care computer reminders on processes and outcomes of care. Cochrane Database Syst Rev 2009;(3):CD001096.

- 11. Durieux P, Trinquart L, Colombet I, et al. Computerized advice on drug dosage to improve prescribing practice. Cochrane Database Syst Rev 2008;(3):CD002894.

- 12. van der Sijs H, Aarts J, Vulto A, et al. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc 2006;13:138–47

- 13. Moxey A, Robertson J, Newby D, et al. Computerized clinical decision support for prescribing: provision does not guarantee uptake. J Am Med Inform Assoc 2010;17:25–33

- 14. Olsen D. Building Interactive Systems: Principles for Human-Computer Interaction. Boston: Course Technology Press, 2009

- 15. Rousseau N, McColl E, Newton J, et al. Practice based, longitudinal, qualitative interview study of computerised evidence based guidelines in primary care. BMJ 2003;326:314.

- 16. Wilson T, Sheikh A. Enhancing public safety in primary care. BMJ 2002;324:584–7

- 17. Ahearn MD, Kerr SJ. General practitioners' perceptions of the pharmaceutical decision-support tools in their prescribing software. Med J Aust 2003;179:34–7

- 18. Lo HG, Matheny ME, Seger DL, et al. Impact of non-interruptive medication laboratory monitoring alerts in ambulatory care. J Am Med Inform Assoc 2009;16:66–71

- 19. Shah NR, Seger AC, Seger DL, et al. Improving acceptance of computerized prescribing alerts in ambulatory care. J Am Med Inform Assoc 2006;13:5–11

- 20. Reichley RM, Seaton TL, Resetar E, et al. Implementing a commercial rule base as a medication order safety net. J Am Med Inform Assoc 2005;12:383–9

- 21. Weingart SN, Toth M, Sands DZ, et al. Physicians' decisions to override computerized drug alerts in primary care. Arch Int Med 2003;163:2625–31

- 22. Kuperman GJ, Bobb A, Payne TH, et al. Medication-related clinical decision support in computerized provider order entry systems: a review. J Am Med Inform Assoc 2007;14:29–40

- 23. Miller RA, Gardner RM, Johnson KB, et al. Clinical decision support and electronic prescribing systems: a time for responsible thought and action. J Am Med Inform Assoc 2005;12:403–9

- 24. Schedlbauer A, Prasad V, Mulvaney C, et al. What evidence supports the use of computerized alerts and prompts to improve clinicians' prescribing behavior? J Am Med Inform Assoc 2009;16:531–8

- 25. Phansalkar S, Edworthy J, Hellier E, et al. A review of human factors principles for the design and implementation of medication safety alerts in clinical information systems. J Am Med Inform Assoc 2010;17:493–501

- 26. Jayawardena S, Eisdorfer J, Indulkar S, et al. Prescription errors and the impact of computerized prescription order entry system in a community-based hospital. Am J Ther 2007;14:336–40

- 27. Tamblyn R, Huang A, Taylor L, et al. A randomised trial of the effectiveness of on-demand versus computer-triggered drug decision support in primary care. J Am Med Inform Assoc 2008;15:430–8

- 28. Avery AJ. Report for NHS Connecting for Health on the Production of a Draft Design Specification for NHS IT Systems Aimed at Minimising risk of Harm to Patients from Medications. 2006. www.connectingforhealth.nhs.uk/systemsandservices/eprescribing/news/averyreport.pdf (accessed Oct 2010).

- 29. Joint Formulary Committee. British National Formulary. 60th edn. London: British Medical Association and Royal Pharmaceutical Society of Great Britain, 2010

- 30. Hedeker D, Gibbons R. Longitudinal Data Analysis. Hoboken, New Jersey: John Wiley & Sons Inc, 2006

- 31. Trafton JG, Altmann EM, Brock DP, et al. Preparing to resume an interrupted task: Effects of prospective goal encoding and retrospective rehearsal. Int J Human-Computer Studies 2003;58:583–603

- 32. Li SY, Blandford A, Cairns P, et al. The effect of interruptions on postcompletion and other procedural errors: an account based on the activation-based goal memory model. J Exp Psychol Appl 2008;14:318–28

- 33. Seidling HM, Phansalkar S, Seger DL, et al. Factors influencing alert acceptance: a novel approach for predicting the success of clinical decision support. J Am Med Inform Assoc 2011;18:479–84

- 34. Dean B, Schachter M, Vincent C, et al. Causes of prescribing errors in hospital inpatient: a prospective study. Lancet 2002;359:1373–8

- 35. Maxwell S, Walley T. Teaching safe and effective prescribing in UK medical schools, a core curriculum for tomorrow's doctors. Br J Clin Pharmacol 2003;55:496–503

- 36. Tobaigy M, McLay J, Ross S. Foundation year 1 doctors and clinical pharmacology and therapeutics teaching. A retrospective view in light of experience. Br J Clin Pharmacol 2002;64:363–72

- 37. Dean B, Schachter M, Vincent C, et al. Prescribing errors in hospital inpatients: their incidence and clinical significance. Qual Saf Health Care 2002;11:340–4

- 38. McCarney R, Warner J, Iliffe S, et al. The Hawthorne Effect: a randomised, controlled trial. BMC Med Res Methodol 2007;7:30.

- 39. Hellier E, Edworthy J, Derbyshire N, et al. Considering the impact of medicine label design characteristics on patient safety. Ergonomics 2006;49:617–30