Abstract

Objective

To compare the use of structured reporting software and the standard electronic medical record (EMR) in the management of patients with bladder cancer. The use of a human factors laboratory to study the management of disease using simulated clinical scenarios was also assessed.

Design

eCancerCareBladder and the EMR were used to retrieve data and produce clinical reports. Twelve participants (four attending staff, four fellows, and four residents) used either eCancerCareBladder or the EMR in two clinical scenarios simulating cystoscopy surveillance visits for bladder cancer follow-up.

Measurements

Time to retrieve and quality of review of the patient history; time to produce and completeness of a cystoscopy report. Finally, participants provided a global assessment of their computer literacy, familiarity with the two systems, and system preference.

Results

eCancerCareBladder was faster for data retrieval (scenario 1: 146 s vs 245 s, p=0.019; scenario 2: 306 s vs 415 s, NS) but non-significantly slower for generating a clinical report. Report quality was higher with the eCancerCareBladder system (scenario 1: p=0.11; scenario 2: p<0.001). User satisfaction was higher with the eCancerCareBladder system, and 11/12 participants preferred to use it.

Limitations

The small sample size affected the power of our study to detect differences.

Conclusions

Use of a specific data management tool does not appear to significantly reduce overall user time, but the results suggest improvements in the quality of documentation and care, as well as a user preference for the tool. The use of simulated scenarios in a laboratory setting also appears to be a valid method for comparing the usability of clinical software.

Keywords: Bladder cancer, informatics, electronic medical records, point of care, documentation, synoptic reporting, cancer, machine learning, predictive modeling, statistical learning, privacy technology, decision modeling, education, public health informatics

Introduction

Bladder cancer is a common malignancy with an estimated global annual incidence of 360 000 cases.1 The majority (up to 75%) of bladder cancers are low-grade, non-invasive tumors with a low risk of metastasis and death but a high risk of recurrence.2 The result is a high prevalence of the disease, and these patients require long-term follow-up, treatment, and supportive care. The burden of bladder cancer on the healthcare system is significant.3 Treatment of bladder cancer includes complex procedures (transurethral resections, intravesical therapy, radical surgery, chemotherapy, radiation) and follow-up (cystoscopy, urine cytology, imaging studies) over a prolonged period of time. In addition to bladder cancer, patients in this older population typically have other significant comorbidities. As a result, a large volume of clinical data is generated.

At the time of a clinical visit or consultation, the physician is challenged to reconstruct the clinical history efficiently and accurately and to integrate new visit information into the existing clinical record. As the time from initial presentation increases, reconstructing the clinical history becomes increasingly difficult and time consuming. During the protracted follow-up of patients with bladder cancer, pertinent clinical data accumulate in multiple areas: surgical procedures and other complex interventions (eg, intravesical instillation of chemotherapy or immunotherapy agents), pathology (cytological and histological reports), imaging studies, and comorbidities and medications in this older population. If these data are not optimally managed, some information may be overlooked. Electronic medical records (EMRs) outperform paper notes in many respects (eg, by avoiding illegible handwriting and non-standard abbreviations), but basic EMRs otherwise offer limited assistance with data management.4 Furthermore, classic dictations and other narrative data entries into EMRs are often incomplete.5 6

Technology that could decrease the time required to re-acquaint a clinician with a particular patient's history, assist in data summarization, and ensure greater accuracy of the information would be useful for patient care. Synoptic or structured reporting using dedicated software has been reported in pathology.7–9 In surgery, structured operative notes have been reported for cardiovascular surgery, thyroidectomy, and rectal10 and breast cancer10–13 surgery. In most of these studies, structured reporting resulted in more complete reports. However, most of these studies evaluated relatively simple software designed to produce a report or note without further data management. If developed further, structured reporting could offer additional benefits, including increased timeliness, improved administrative data, easier data retrieval for research, and improved education and patient safety.14–16

We are not aware of any informatics tools designed specifically for bladder cancer. As there are limited data from comprehensive studies comparing EMRs and synoptic reporting, we compared our bladder cancer synoptic tool head-to-head with the EMR in a trial conducted in a controlled human factors laboratory.

Background

We have developed and adopted an electronic, online, point-of-care, structured clinical documentation tool, eCancerCareBladder or eBladder (originally named BLis, for Bladder information system), to synthesize complex medical histories effectively and save time for busy clinicians. All healthcare personnel involved in the care of patients with bladder cancer, especially urologists and uro-oncologists, are target users of the software. The software was designed as a patient-centric, browser-based application to record, organize, manage, and analyze the wealth of information on patients with bladder tumors. Built on an Oracle database, eCancerCareBladder currently holds the relevant history of over 1200 patients with bladder cancer. During initial development, clinicians attended design meetings and iteratively developed record prototypes before the format was finalized. A list of essential components, or quality indicators, to include in the cystoscopy report, together with the timeline properties, was developed by consensus with the clinicians.

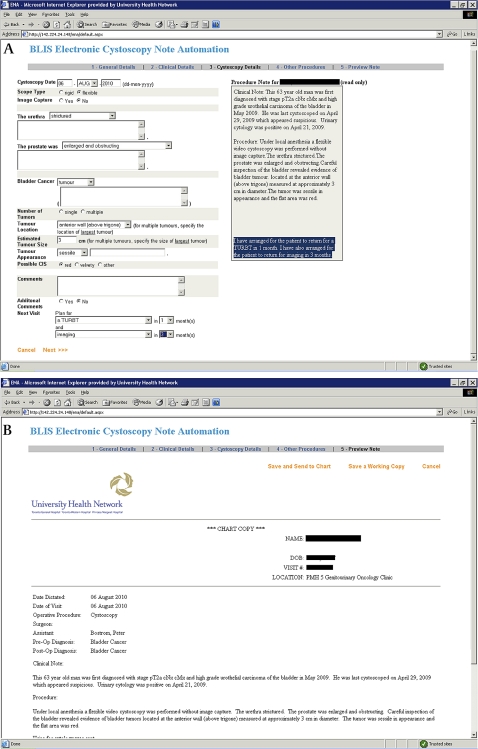

When a new patient is seen, his or her demographics (name, medical record number, contact information, and health card number) and medical history are entered into the eCancerCareBladder system. The available bladder cancer history and prospectively collected upcoming events (cystoscopy, pathology reports, surgical procedures, radiation, chemotherapy) are entered using predetermined data entry fields and free comment fields (figure 1). At each clinical visit, the responsible physician enters the cystoscopy data, a summary of findings, and recommendations for further treatment and follow-up, and the software automatically generates a letter to the referring physician containing the cystoscopy findings, the medical and bladder cancer history, and the treatment recommendations (figure 1). The letter is also exported to EPR (the EMR software used at Toronto General Hospital), where it appears as a procedure note visible to all EPR users, not only urologists. Data from other physician-based sources (such as referral letters, pathology reports, and radiology reports) are entered into the system by assistants. We recently upgraded the system so that preselected categories of data are automatically uploaded from EPR, linked by medical record number. Information entered into the system is immediately converted into a visual timeline display. The timeline, named DePICT (disease-specific electronic patient illustrated clinical timeline), is generated by an application that uses PHP (hypertext preprocessor) scripts to query the database for event data, and the result is displayed in an HTML-enabled web browser (figure 1). The timeline consists of color-coded icons that give a rapid visual impression of clinically significant results. For the clinician, this provides a chronological illustration of the patient's history without the need to review extensive data. Hovering over individual icons reveals further details not shown on the graphic timeline, such as tumor stage and grade for each pathology icon. Double-clicking any icon opens a separate window containing all of the information entered into the database for that event. This approach fits established work patterns, allowing the physician to skim the information quickly in a linear fashion and to infer past details, ideally with a reduced risk of error.3 4
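The timeline behavior described above (chronological ordering, a color-coded icon per event, mouse-over text showing stage and grade for pathology events, and the full record kept for the double-click view) can be sketched in a few lines. The published system uses PHP scripts against an Oracle database; the Python below is only a minimal sketch under stated assumptions: the `Event` record, the event-type names, and the color mapping are hypothetical, because the paper does not publish its schema or color scheme.

```python
from dataclasses import dataclass, field
from datetime import date

# Hypothetical color scheme: the paper states that icons are color-coded
# but does not publish the mapping, so these values are placeholders.
ICON_COLORS = {
    "cystoscopy": "blue",
    "pathology": "red",
    "surgery": "purple",
    "intravesical therapy": "green",
    "imaging": "orange",
}


@dataclass
class Event:
    """One clinical event (hypothetical schema, not the eCancerCareBladder tables)."""
    event_date: date
    event_type: str
    details: dict = field(default_factory=dict)  # full record, shown on double-click


def hover_text(event: Event) -> str:
    """Short mouse-over label; pathology events also show stage and grade."""
    label = f"{event.event_date.isoformat()} {event.event_type}"
    if event.event_type == "pathology":
        stage = event.details.get("stage", "stage unknown")
        grade = event.details.get("grade", "grade unknown")
        label += f": {stage}, {grade}"
    return label


def build_timeline(events: list[Event]) -> list[dict]:
    """Order events chronologically and attach an icon color and hover text to each."""
    return [
        {
            "date": e.event_date,
            "color": ICON_COLORS.get(e.event_type, "grey"),  # unknown types fall back to grey
            "hover": hover_text(e),
            "details": e.details,
        }
        for e in sorted(events, key=lambda e: e.event_date)
    ]


if __name__ == "__main__":
    # Toy events for illustration only, not study data.
    history = [
        Event(date(2010, 3, 1), "cystoscopy"),
        Event(date(2009, 6, 15), "pathology", {"stage": "Ta", "grade": "low grade"}),
    ]
    for icon in build_timeline(history):
        print(icon["color"], icon["hover"])
```

Running the file prints the earlier pathology event first, with its stage and grade, followed by the cystoscopy. Keeping the full `details` record alongside each icon mirrors the double-click view without a second database query.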

Figure 1.

Screen shots of data entry field (upper panel), clinical data summarization as a ‘timeline’ image (lower panel) and a clinical note/letter (next page) in eCancerCareBladder.
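The automatic letter to the referring physician is, in essence, a template filled from the structured fields entered at the visit. A minimal Python sketch follows; the field names, section headings, and template wording are hypothetical, since the actual eCancerCareBladder letter layout is shown only as a screen shot in figure 1. In this sketch an empty field is flagged explicitly rather than silently omitted, which is one way structured entry can make gaps in a note visible.

```python
from dataclasses import dataclass, field

# Hypothetical letter layout; the real template is not published.
LETTER_TEMPLATE = """Dear Dr {referring_physician},

Re: {patient_name}, cystoscopy on {visit_date}

Bladder cancer history:
{bladder_cancer_history}

Medical history:
{medical_history}

Cystoscopy findings: {findings}

Recommendation: {recommendation}
"""


@dataclass
class CystoscopyVisit:
    """Structured fields entered at a follow-up visit (hypothetical names)."""
    patient_name: str
    referring_physician: str
    visit_date: str
    findings: str
    recommendation: str
    bladder_cancer_history: list[str] = field(default_factory=list)
    medical_history: list[str] = field(default_factory=list)


def as_lines(items: list[str]) -> str:
    """One item per line; an empty section is flagged rather than dropped."""
    return "\n".join(f"- {item}" for item in items) if items else "- None recorded"


def generate_letter(visit: CystoscopyVisit) -> str:
    """Fill the letter template from the structured visit record."""
    return LETTER_TEMPLATE.format(
        referring_physician=visit.referring_physician,
        patient_name=visit.patient_name,
        visit_date=visit.visit_date,
        bladder_cancer_history=as_lines(visit.bladder_cancer_history),
        medical_history=as_lines(visit.medical_history),
        findings=visit.findings or "Not recorded",
        recommendation=visit.recommendation or "Not recorded",
    )
```

The same generated text could then be exported as the procedure note, so the letter and the note never diverge.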

The Healthcare Human Factors group in Toronto, the largest such group involved with the evaluation and design of health informatics systems, collaborated in the evaluation of the eCancerCareBladder system. Human factors methods are derived from cognitive psychology, ergonomics, and industrial engineering; they aim to ensure that systems match user expectations and capabilities and are intuitive, efficient, and safe. Although human factors engineering has long been applied in safety-critical industries such as aviation and power generation, it is increasingly recognized as an important precursor to the adoption of health information technologies. Human factors research has previously been applied successfully both to the development of information systems and to the study of human error in data entry into such systems.17–19 To assess the fit with clinical workflow, appropriateness, and ease of use of the eCancerCareBladder system, we used the usability laboratories of the Center for Global eHealth Innovation. These facilities allow evaluations to be observed remotely through one-way glass, so that participants remain immersed in the clinical simulation. Using video, voice, and data capture technologies, observers can collect data on system performance and user satisfaction before a system is implemented. This allows competing systems to be compared and refinements to be made before actual clinical use, so that the system is optimized when it is implemented.

Methods

Study design and setting

This was a randomized study comparing the completeness and speed of chart review using eCancerCareBladder versus the standard EMR in the treatment and follow-up of patients with non-muscle-invasive bladder cancer. Comparative test sessions were held in the laboratory facility of the Healthcare Human Factors team. The participants used a standard hospital computer workstation in the laboratory to access EPR and eCancerCareBladder. In addition to EPR and eCancerCareBladder, two other software programs were used during testing. Adobe Connect was used to share the screen of the workstation with the test facilitator and observers, and Morae enabled the study facilitator to record the screen and participant comments during testing. These two applications were not essential to the study; however, they facilitated note taking and the verification of data after the testing sessions. Finally, Microsoft Excel was used to capture task completion times using the available time-stamping macros.

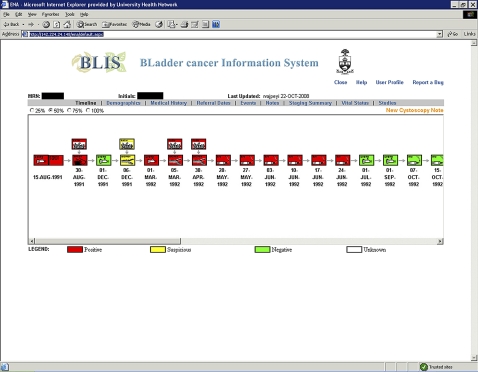

The Healthcare Human Factors team conducted the study. The participants were presented with two consecutive clinical bladder cancer scenarios simulating events in the cystoscopy suite during a routine bladder cancer follow-up visit. Both scenarios involved typical patients with a protracted history of non-muscle-invasive bladder cancer. The clinical details and note quality parameters of both cases are presented in online appendix 1. The study flow is presented in figure 2. After informed consent had been obtained and instructions given, one-factor (tool) randomization was carried out: participants were randomized to use either eCancerCareBladder or EPR in scenario 1 and the other system in scenario 2. The outline and details of the tasks in each scenario are presented in table 1 and online appendix 1, respectively. Briefly, in each scenario, the participants were first given the opportunity to familiarize themselves with the patient's medical record, followed by a simulated cystoscopy video prepared from an actual patient recording made at a bladder cancer follow-up visit. After the video, participants were asked to produce a clinical report, either using eCancerCareBladder or by dictation (if using EPR). For the purpose of this study, a digital voice recorder (RCA model No RP5030A) was used to record the dictation, which was later transcribed. In the second scenario, after creating the report, the participant was asked five clinically significant questions about the bladder cancer history to test the speed and accuracy of data retrieval. The questions were as follows. (1) When did this patient have his last transurethral resection of bladder tumor (TURBT) that was positive for bladder cancer? (2) When did this patient have his last TURBT and what was the pathology? (3) During what time period did this patient have BCG instillations? (4) Has this patient had upper tract imaging during the last year? (5) Is this patient an active smoker, an ex-smoker, or never smoked?
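The crossover allocation described above, in which each participant uses one system in scenario 1 and the other in scenario 2, can be sketched as follows. This is a minimal sketch, not the study's actual procedure: the paper does not say how the random sequence was generated or whether the two system orders were balanced, so the balanced allocation and the participant identifiers here are assumptions.

```python
import random
from typing import Optional

SYSTEMS = ("eCancerCareBladder", "EPR")


def assign_crossover(participant_ids: list[str], seed: Optional[int] = None) -> dict[str, dict[str, str]]:
    """Randomly assign each participant a system order for the two scenarios.

    Assumption: orders are balanced, so half of the participants (rounded
    down) start with eCancerCareBladder and the rest start with EPR.
    """
    rng = random.Random(seed)  # a fixed seed makes the allocation reproducible
    n = len(participant_ids)
    orders = [SYSTEMS] * (n // 2) + [SYSTEMS[::-1]] * (n - n // 2)
    rng.shuffle(orders)
    return {
        pid: {"scenario 1": first, "scenario 2": second}
        for pid, (first, second) in zip(participant_ids, orders)
    }


# Hypothetical identifiers: four residents (R), four fellows (F), four staff (S).
participants = [f"{role}{i}" for role in ("R", "F", "S") for i in range(1, 5)]
allocation = assign_crossover(participants, seed=2011)
```

Because every participant uses both systems, each person serves as his or her own control; randomizing the order guards against a learning effect favoring whichever system is used second.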

Figure 2.

Study flow chart.

Table 1.

Study tasks in the two study scenarios

| Scenario | Task No | Description |
|---|---|---|
| 1 | 1 | Review the medical chart of the patient in scenario 1 |
|  | 2 | View the cystoscopy video for the patient in scenario 1 |
|  | 3 | Produce a clinical note for the patient in scenario 1 |
| 2 | 4 | Review the medical chart of the patient in scenario 2 |
|  | 5 | View the cystoscopy video for the patient in scenario 2 |
|  | 6 | Produce a clinical note for the patient in scenario 2 |
|  | 7 | Answer 5 questions related to the history of the patient in scenario 2 |

Finally, the participants answered a post-study questionnaire (online appendix 2). Briefly, the questionnaire included questions about participants' experience in bladder cancer management, general skills using computers, skills using eCancerCareBladder, ease of data retrieval using eCancerCareBladder or EPR, ease of generation of a cystoscopy note using eCancerCareBladder or EPR with dictation, user satisfaction when using eCancerCareBladder or EPR, and which software the participant preferred.

Participants

There were 12 participants, trainees and staff urologists with different levels of training and expertise: four residents in training, four uro-oncology fellows (fully trained urologists in subspecialty training), and four staff uro-oncologists. All were familiar with both EPR and eCancerCareBladder. We did not perform a formal sample size calculation because of limited resources.

Data recording and statistical analyses

The following data were recorded in the test laboratory during the study: (1) the screen of the workstation throughout each test session; (2) start and stop times for each task; (3) participant responses to questions; (4) subjective observations of participant performance as well as all comments made by the participants during testing; (5) participant responses to the post-test survey questions. Data analysis was performed using SPSS V.7.0.
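Task completion times were captured with time-stamping macros in Microsoft Excel. The same computation, pairing each task's start and stop stamps and taking the difference in seconds, is sketched below in Python. The row layout of the log is an assumption, since the macros themselves are not described, and the example stamps are invented. Unmatched stamps raise an error rather than being silently dropped, so a missed stamp during a session is caught before analysis.

```python
from datetime import datetime


def task_durations(log):
    """Pair start/stop stamps and return {(participant, task): seconds}.

    Each row is (participant, task, event, timestamp) with event "start" or
    "stop"; this row layout is an assumption for illustration.
    """
    open_tasks = {}
    durations = {}
    for participant, task, event, stamp in log:
        key = (participant, task)
        if event == "start":
            if key in open_tasks:
                raise ValueError(f"task {key} started twice without a stop")
            open_tasks[key] = stamp
        elif event == "stop":
            if key not in open_tasks:
                raise ValueError(f"stop without start for {key}")
            durations[key] = (stamp - open_tasks.pop(key)).total_seconds()
        else:
            raise ValueError(f"unknown event {event!r}")
    if open_tasks:
        raise ValueError(f"tasks never stopped: {sorted(open_tasks)}")
    return durations


# Invented stamps for illustration only, not study data.
log = [
    ("P01", 1, "start", datetime(2011, 5, 2, 9, 0, 0)),
    ("P01", 1, "stop", datetime(2011, 5, 2, 9, 2, 30)),
]
print(task_durations(log))  # {('P01', 1): 150.0}
```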

Results

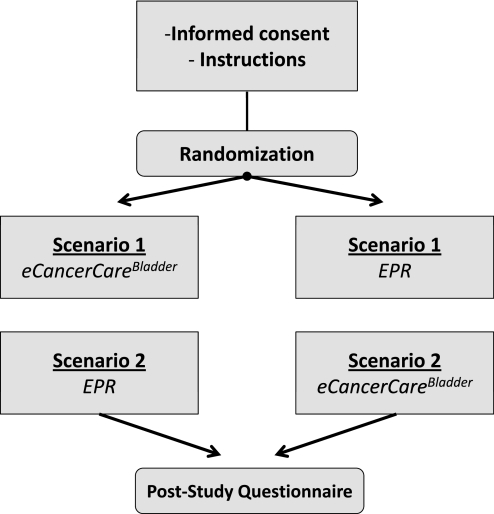

Task completion time

The tasks that the 12 participants were asked to complete are presented in table 1. The time needed to review the chart and produce a clinical report for both clinical scenarios is presented in figure 3 (in seconds). When chart review times were compared, eCancerCareBladder was faster in both scenarios, but the difference was significant only in scenario 1 (p=0.019). The average time needed to produce a clinical note was slightly longer with eCancerCareBladder, but the differences were not significant (p=0.46 for scenario 1 and p=0.88 for scenario 2). Notably, the time required to transcribe the dictation when EPR was used was not included in the participants' task times.

Figure 3.

Average times to complete chart review and clinical note.

Quality of reports

The quality of the reports was quantified using predetermined quality parameters, with the completeness of each note scored from 0 to 24. The average report quality is presented in table 2. Clinical reports produced using eCancerCareBladder scored higher in both scenarios; the difference was significant in scenario 2 (p<0.001) but not in scenario 1 (p=0.11).

Table 2.

Average clinical note quality

|  | eCancerCareBladder | EPR | p Value |
|---|---|---|---|
| Scenario 1 | 17±5.1 | 16±4.3 | 0.11 |
| Scenario 2 | 20±0.5 | 14±2.3 | <0.001 |

Clinical note quality reported as mean±SD points (out of 24 points).

EPR, electronic patient record.
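The 0–24 completeness score can be expressed as a count of the predetermined quality parameters that a note documents, summarized per system as mean±SD in the layout of table 2. In the minimal sketch below, the parameter names are placeholders, because the actual 24 parameters are listed only in online appendix 1. Treating a parameter as documented when its field is non-empty is also an assumption, since the study's scoring may have judged each item's content. The sample standard deviation (`statistics.stdev`) is used; the paper does not state which form of SD was reported.

```python
from statistics import mean, stdev

# Placeholder names standing in for the 24 quality parameters in online appendix 1.
QUALITY_PARAMETERS = [f"parameter_{i:02d}" for i in range(1, 25)]


def is_documented(value) -> bool:
    """Assumption: a parameter counts if its field is present and non-blank."""
    return value is not None and str(value).strip() != ""


def completeness_score(note: dict) -> int:
    """Number of quality parameters (0-24) documented in the note."""
    return sum(is_documented(note.get(p)) for p in QUALITY_PARAMETERS)


def summarize(scores: list[int]) -> str:
    """Format scores as mean±SD (sample SD), matching the layout of table 2."""
    return f"{mean(scores):.0f}±{stdev(scores):.1f}"
```

Fields outside the checklist are ignored, so free-text additions neither raise nor lower the score; this matches the idea of scoring against a fixed, predetermined list.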

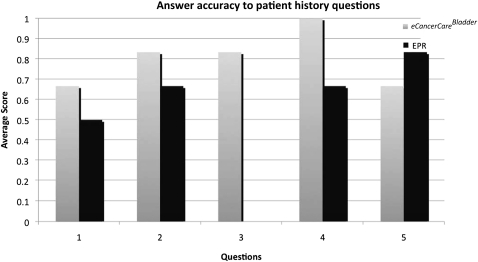

Accuracy and time required to review medical history

In the second scenario, participants were asked five clinically relevant questions about the patient's bladder cancer history. The results are presented in figure 4. The use of eCancerCareBladder was significantly more accurate and faster than EPR in all but one question (number 5, 6/12 participants answering correctly using eCancerCareBladder compared with 8/12 correct answers when EPR was used). In question 3, only participants using eCancerCareBladder were able to give the correct answer (10/12 participants answering correctly using eCancerCareBladder compared with 0/12 participants using EPR, p<0.001). When all of the answers to all five questions were combined, eCancerCareBladder was clearly superior (average 4.0 and 2.5 points for eCancerCareBladder and EPR use, respectively, p=0.004). Furthermore, the time needed to retrieve the answers was significantly shorter with eCancerCareBladder (101 vs 316 s for EPR users, p<0.001).
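The combined score reported above is the number of the five history questions a participant answered correctly (0–5), averaged within each system; figure 4 shows the per-question counts. Both tallies are sketched below in Python. The input layout (one list of five true/false grades per participant) and the example grades are hypothetical and are not the study data.

```python
from statistics import mean


def per_question_correct(graded: list[list[bool]]) -> list[int]:
    """Number of participants answering each question correctly (as in figure 4)."""
    return [sum(answers) for answers in zip(*graded)]


def mean_total_score(graded: list[list[bool]]) -> float:
    """Average number of correct answers (0-5) per participant."""
    return mean(sum(answers) for answers in graded)


# Hypothetical grades for three participants using one system.
example = [
    [True, True, True, True, False],
    [True, False, True, True, True],
    [True, True, False, True, False],
]
print(per_question_correct(example))  # [3, 2, 2, 3, 1]
print(mean_total_score(example))
```

Each system's participants would be graded separately and the two means compared, as in the 4.0 versus 2.5 points reported above.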

Figure 4.

Number of correct answers to questions about bladder cancer history.

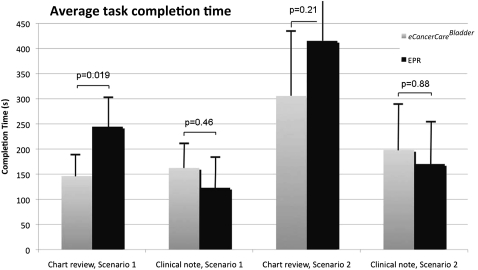

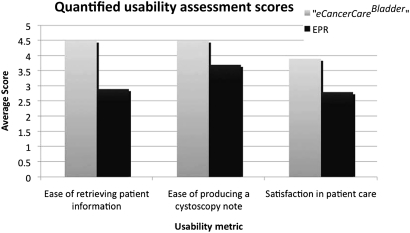

Post-study questionnaire

After completing the reports for the two clinical scenarios, the participants were asked to answer a post-study questionnaire (online appendix 2).

The participants represented all levels of clinical experience in managing bladder cancer (1–21 years). Most considered themselves skilled computer users (full ability, 50%; medium ability, 33%; some ability, 17%; no ability, 0%). The distribution of self-assessed eCancerCareBladder skill was identical to that for general computer skill. Most participants had used eCancerCareBladder recently (within the past month), but two of the 12 had not used it within the past month, and two had not used it within the past 3 months.

Quantified usability assessment scores are presented in figure 5, where the categorical user satisfaction answers were converted to a 5-point Likert scale (1=least easy or least satisfactory; 5=easiest or most satisfactory). The participants considered eCancerCareBladder easier to use for retrieving clinical data and for reporting. Furthermore, eCancerCareBladder was preferred in terms of general satisfaction. Finally, when asked ‘Given a choice, which electronic health system would you prefer?’, 11/12 participants chose eCancerCareBladder.
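The conversion of categorical questionnaire answers to a 1–5 Likert score, and the tally of the final preference question, can be sketched as below. The answer wording in `LIKERT_SCALE` is hypothetical (the actual questionnaire is in online appendix 2), and the example responses are invented for illustration only.

```python
from collections import Counter
from statistics import mean

# Hypothetical answer wording mapped to a 5-point scale (1=least easy, 5=easiest).
LIKERT_SCALE = {
    "very difficult": 1,
    "difficult": 2,
    "neither easy nor difficult": 3,
    "easy": 4,
    "very easy": 5,
}


def likert_mean(answers: list[str]) -> float:
    """Convert categorical answers to scores and return their mean.

    An unrecognized answer raises KeyError, so it is not silently dropped.
    """
    return mean(LIKERT_SCALE[a.strip().lower()] for a in answers)


def preference_tally(choices: list[str]) -> Counter:
    """Count how many participants chose each system."""
    return Counter(choices)


# Invented responses, for illustration only.
print(likert_mean(["Easy", "very easy", "difficult"]))  # mean of 4, 5 and 2
print(preference_tally(["eCancerCareBladder", "EPR", "eCancerCareBladder"]).most_common(1))
# [('eCancerCareBladder', 2)]
```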

Figure 5.

User satisfaction assessment scores from post-study questionnaire.

Discussion

There have been few reports of the usability of structured, synoptic clinical reports. The aim of the present study was to compare the efficacy, efficiency, and user friendliness of standard electronic patient documentation and a structured, synoptic, bladder cancer-specific clinical documentation tool (eCancerCareBladder) in a randomized study in a controlled laboratory setting. The results suggest that eCancerCareBladder allows faster and more accurate reconstruction of the medical history than review of standard EMRs. Producing the structured report, which involves some text typing in predetermined entry fields and completing drop-down menus, did not take significantly longer than producing a standard dictated narrative report, but it resulted in better report quality in terms of recorded data, significantly so in scenario 2. Finally, the vast majority of clinicians participating in the study preferred eCancerCareBladder. The improved quality of clinical notes probably results from the predetermined data entry fields, which reduce the amount of data missing from clinical notes; the DePICT feature is especially valuable when medical history data are reviewed. Events that span a longer period of time (eg, serial bladder instillations in our scenarios) are captured particularly efficiently by DePICT, compared with opening multiple clinical notes in traditional EMRs. Our results agree with previous findings of user preference for graphical entry and drop-down menus over text entry; embedded objects (data entry lists) and templates are also appreciated.20 21 These features may result in more consistent documentation, fewer documentation errors, and increased compliance.21 This should translate into improved patient safety.22

eCancerCareBladder is an example of bottom-up software design with successful adoption, an approach characterized by participation of end users in all stages of development.23–29 Clinical input has led to several efficient and user-friendly applications, such as the timeline function, lists, drop-down menus, and use of shortcuts and direct data entry. Our results suggest that eCancerCareBladder is superior to EMRs in ease of software use, clinical user satisfaction, and general software preference.

Successful clinical adoption of applications can benefit from formal usability testing of software during development as well as after implementation. Success of an information system has several dimensions: system quality, information quality, use, user satisfaction, individual impact, and organizational impact.30 The last four capture the effectiveness or influence of an informatics system. In evaluating the effectiveness of our new bladder cancer information system, we focused on the individual impact on physicians, timeliness, quality of the data reported, and user satisfaction. Our own previous unpublished research has indicated good user uptake and utilization of the system. We chose to conduct a controlled, comparative, randomized study in a human factors laboratory. The clinical scenarios were simulated using real-life EMR and eCancerCareBladder cases, and cystoscopy findings were presented as video recordings of real-life cases. This approach is novel and allows investigation of various details of the end product (in this case, a clinical report). Furthermore, the time spent on each step of the process can be measured precisely. An alternative design would be a retrospective comparison of notes produced with the two methods, but this could introduce several biases; for example, the type of case could influence which software is chosen (eg, a simple case dictated, a complicated case documented with the synoptic tool). Another design would be a prospective evaluation in which, for a period of time, clinical notes are produced with both systems. This would minimize bias, but the study would impose a significant workload and potential delays in clinic workflow, and it would be difficult to capture the timeliness of the systems.

The limitations of this study include the artificial study environment, in which participants were not rushed or interrupted as they often are in busy clinical practice. Further, the sample size was small, limiting the power to detect differences. On the other hand, the controlled laboratory environment allowed more precise analysis, and the cases used to study the two software programs were identical. We did not conduct a cost analysis, which is an important factor when implementing software. Previous cost–benefit analyses have suggested that implementing electronic notes reduces transcription costs and duplicate data entry and improves decision support through graphical displays and searchable databases that present a linear record.31 Another often-neglected aspect of clinical software is the ability to query its database for research purposes. The eCancerCareBladder database has been used for bladder cancer research since its development in 2005, and to date more than 10 peer-reviewed reports have been based on analysis of the database. Although the study was conducted in a dedicated uro-oncology practice in a tertiary care referral center, we expect the results to be generalizable, as the clinical scenarios presented are typical of cases seen daily by general urologists in community settings as well. Also, although some participants were experienced uro-oncologists, participants represented all levels of expertise.

Conclusion

Human factors laboratories offer a setting for controlled comparison of clinical documentation tools. When our structured, bladder cancer-specific clinical documentation tool (eCancerCareBladder) and traditional EMR were compared, use of eCancerCareBladder resulted in clinical notes that were of higher quality than those produced with EMR. There were no differences in time needed to produce the notes, but data retrieval from previous notes was significantly faster with eCancerCareBladder. Finally, from a user-friendliness perspective, eCancerCareBladder was the preferred method of clinical data documentation. Further larger and more detailed studies of eCancerCareBladder usability are needed to confirm these findings.

Footnotes

Ethics approval: Princess Margaret Hospital Ethics Committee.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

1. Ferlay J, Bray F, Pisani P, et al. GLOBOCAN 2002: Cancer Incidence, Mortality and Prevalence Worldwide. IARC CancerBase No. 5, version 1.0. International Agency for Research on Cancer; http://www-dep.iarc.fr

2. Pasin E, Josephson D, Mitra A, et al. Superficial bladder cancer: an update on etiology, molecular development, classification, and natural history. Rev Urol 2008;10:31–43

3. Stenzl A, Hennenlotter J, Schilling D. Can we still afford bladder cancer? Curr Opin Urol 2008;18:488–92

4. Nygren E, Wyatt J, Wright P. Helping clinicians to find data and avoid delays. Lancet 1998;352:1462–6

5. Novitsky Y, Sing R, Kercher K, et al. Prospective, blinded evaluation of accuracy of operative reports dictated by surgical residents. Am Surg 2005;71:627–31; discussion 31–2

6. Weir C, Hurdle J, Felgar M, et al. Direct text entry in electronic progress notes. An evaluation of input errors. Methods Inf Med 2003;42:61–7

7. Gill A, Johns A, Eckstein R, et al. Synoptic reporting improves histopathological assessment of pancreatic resection specimens. Pathology 2009;41:161–7

8. Haydu L, Holt P, Karim R, et al. Quality of histopathological reporting on melanoma and influence of use of a synoptic template. Histopathology 2010;56:768–74

9. Kang H, Devine L, Piccoli A, et al. Usefulness of a synoptic data tool for reporting of head and neck neoplasms based on the College of American Pathologists cancer checklists. Am J Clin Pathol 2009;132:521–30

10. Edhemovic I, Temple W, de Gara C, et al. The computer synoptic operative report: a leap forward in the science of surgery. Ann Surg Oncol 2004;11:941–7

11. Brown M, Quiñonez L, Schaff H. A pilot study of electronic cardiovascular operative notes: qualitative assessment and challenges in implementation. J Am Coll Surg 2010;210:178–84

12. Temple W, Francis W, Tamano E, et al. Synoptic surgical reporting for breast cancer surgery: an innovation in knowledge translation. Am J Surg 2010;199:770–5

13. Chambers A, Pasieka J, Temple W. Improvement in the accuracy of reporting key prognostic and anatomic findings during thyroidectomy by using a novel Web-based synoptic operative reporting system. Surgery 2009;146:1090–8

14. Shekelle P, Morton S, Keeler E. Costs and benefits of health information technology. Evid Rep Technol Assess (Full Rep) 2006;(132):1–71

15. Prokosch H, Ganslandt T. Perspectives for medical informatics. Reusing the electronic medical record for clinical research. Methods Inf Med 2009;48:38–44

16. Mack L, Bathe O, Hebert M, et al. Opening the black box of cancer surgery quality: WebSMR and the Alberta experience. J Surg Oncol 2009;99:525–30

17. Saleem JJ, Russ AL, Sanderson P, et al. Current challenges and opportunities for better integration of human factors research with development of clinical information systems. Yearb Med Inform 2009:48–58

18. Chan W. Increasing the success of physician order entry through human factors engineering. J Healthc Inf Manag 2002;16:71–9

19. Rogers ML, Patterson E, Chapman R, et al. Usability testing and the relation of clinical information systems to patient safety. In: Henriksen K. Advances in Patient Safety: From Research to Implementation, Volume 2: Concepts and Methodology. Rockville (MD): Agency for Healthcare Research and Quality (US), 2005

20. Rodriguez N, Murillo V, Borges J, et al. A usability study of physicians interaction with a paper-based patient record system and a graphical-based electronic patient record system. Proc AMIA Symp 2002:667–71

21. Atkinson J, Zeller G, Shah C. Electronic patient records for dental school clinics: more than paperless systems. J Dent Educ 2002;66:634–42

22. Weir C, Nebeker J. Critical issues in an electronic documentation system. AMIA Annu Symp Proc 2007:786–90

23. Lapointe L, Rivard S. Getting physicians to accept new information technology: insights from case studies. CMAJ 2006;174:1573–8

24. Berg M. Implementing information systems in health care organizations: myths and challenges. Int J Med Inform 2001;64:143–56

25. Heathfield HA, Wyatt J. Philosophies for the design and development of clinical decision-support systems. Methods Inf Med 1993;32:1–8

26. Aarts J, Doorewaard H, Berg M. Understanding implementation: the case of a computerized physician order entry system in a large Dutch university medical center. J Am Med Inform Assoc 2004;11:207–16

27. Rosenbloom S, Miller R, Johnson K, et al. Interface terminologies: facilitating direct entry of clinical data into electronic health record systems. J Am Med Inform Assoc 2006;13:277–88

28. Lapointe L, Rivard S. Clinical information systems: understanding and preventing their premature demise. Healthc Q 2005;8:92–100

29. Kanter E. Mastering change. In: Chawla S, Renesch J, eds. Learning Organizations: Developing Cultures for Tomorrow's Workplace. Portland, Oregon, 1994:71–83

30. DeLone WH, McLean ER. Information system success: the quest for the dependent variable. Inform Syst Res 1992;3:60–95

31. Apkon M, Singhaviranon P. Impact of an electronic information system on physician workflow and data collection in the intensive care unit. Intensive Care Med 2001;27:122–30