Abstract

Parameters in time series and other dynamic models often show complex range restrictions and their distributions may deviate substantially from multivariate normal or other standard parametric distributions. We use the truncated Dirichlet process (DP) as a non-parametric prior for such dynamic parameters in a novel nonlinear Bayesian dynamic factor analysis model. This is equivalent to specifying the prior distribution to be a mixture distribution composed of an unknown number of discrete point masses (or clusters). The stick-breaking prior and the blocked Gibbs sampler are used to enable efficient simulation of posterior samples. Using a series of empirical and simulation examples, we illustrate the flexibility of the proposed approach in approximating distributions of very diverse shapes.

1. Introduction

The last two decades have witnessed the emergence of a new class of structural equation models (SEMs), termed latent variable models (LVMs). These models relax the traditional linearity and Gaussian assumptions of the structural equation modelling framework (Jöreskog, 1974) and offer new possibilities for fitting more complex models. Such advances include recent developments in fitting dynamic LVMs, defined here as longitudinal models for describing change processes that extend over substantially longer time spans (e.g., T > 35) than those implicated in conventional panel models (typically, T < 10).

Some of the seminal work on dynamic LVMs has been spearheaded in part by progress in fitting dynamic factor analysis (DFA) models (Browne & Nesselroade, 2005; McArdle, 1982; Molenaar, 1985; Nesselroade, McArdle, Aggen, & Meyers, 2002; Zhang & Nesselroade, 2007). A DFA model can be viewed as an extension of Cattell, Cattell, and Rhymer’s (1947) P-technique model¹ in which a change model of choice is combined with the standard factor analytic model to account for lagged relationships among factors and manifest variables. The different variants of DFA models proposed in the past two decades have, however, focused exclusively on linear changes (e.g., McArdle, 1982; Molenaar, 1985; Zhang & Browne, 2009; Zhang & Nesselroade, 2007). This is due largely to the many methodological challenges involved in extending DFA models to a nonlinear framework. First, the difficulties associated with fitting cross-sectional LVMs with nonlinear relationships among factors (as discussed, e.g., by Kenny & Judd, 1984) have remained one of the most widely investigated research issues in the SEM literature for decades (e.g., Klein & Moosbrugger, 2000; Schumacker, 2002). Generalizing these methods to nonlinear dynamic LVMs requires further methodological adaptations that add to these complications. Second, because different measurement occasions for a single variable are typically treated as different manifest variables in standard SEM practice, the input data covariance matrix for fitting DFA models in SEM software is not positive definite in cases where the number of measurement occasions, T, exceeds the number of participants, N. Even when T < N, numerical problems may still arise due to the need to invert a high-dimensional model-implied covariance matrix at each iteration (Hamaker, Dolan, & Molenaar, 2003).
Alternative approaches have been proposed to circumvent these issues, including using a block-Toeplitz matrix to replace the data covariance or correlation matrix (Browne & Nesselroade, 2005; Hershberger, Corneal, & Molenaar, 1994; Molenaar, 1985), and using raw data maximum likelihood with special parameter constraints to ensure the positive definiteness of the model-implied covariance matrix (Hamaker et al., 2003). Still, adapting these alternative approaches for use with nonlinear dynamic LVMs is not a straightforward endeavour.
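To make the positive-definiteness problem concrete, the Python sketch below simulates hypothetical data for a single manifest variable, treats each of the T occasions as a separate variable (as in standard SEM practice), and inspects the resulting T × T sample covariance matrix. With N persons, that matrix has rank at most N − 1, so it is singular whenever T > N. The data, sizes, and function name are illustrative assumptions, not the study's data.

```python
import numpy as np


def occasion_covariance(n_persons: int, n_occasions: int, seed: int = 0) -> np.ndarray:
    """T x T sample covariance when each occasion is treated as a separate variable.

    The data are simulated (hypothetical): one manifest variable,
    rows = persons, columns = measurement occasions.
    """
    rng = np.random.default_rng(seed)
    data = rng.normal(size=(n_persons, n_occasions))
    return np.cov(data, rowvar=False)  # columns (occasions) are the variables


N, T = 20, 52  # more occasions than participants
cov = occasion_covariance(N, T)
rank = np.linalg.matrix_rank(cov)
smallest_eigenvalue = np.linalg.eigvalsh(cov).min()
# The rank is at most N - 1 = 19, far below T = 52, and the smallest
# eigenvalue is zero up to round-off, so the matrix cannot be inverted.
print(rank, smallest_eigenvalue)
```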

Despite the challenges involved, nonlinear DFA models, as we will illustrate using an empirical example, provide a valuable tool for evaluating substantive questions that are otherwise difficult to test within the linear framework (e.g., Fredrickson & Losada, 2005; Gottman, Murray, Swanson, Tyson, & Swanson, 2002). Our aims in the present paper are twofold. First, we present a Bayesian approach to estimating nonlinear dynamic LVMs and propose a novel nonlinear DFA model as a special case. Second, we allow parameters that are of substantive interest in the proposed dynamic model to vary over persons and, more importantly, to conform to non-parametric distributional forms through the use of a Dirichlet process (DP) prior. We allow the dynamic parameters, but not the other modelling parameters (e.g., measurement parameters such as factor loadings), to vary over persons because, in most applications of DFA models, individual differences in the dynamic parameters (i.e., parameters that dictate the nature of the change processes) are the focus of substantive interest (e.g., Chow, Nesselroade, Shifren, & McArdle, 2004; Ferrer & Nesselroade, 2003). Relaxing the parametric assumptions imposed on these parameters is important from both a substantive and a practical standpoint. For example, in fitting DFA models where vector autoregressive (VAR) models or related variations are used to describe the dynamic relationships among factors, the dynamic parameters (specifically, the auto- and cross-regression parameters) often show complex range restrictions. That is, moving beyond the stationary ranges may, in certain cases, yield systems that show increasing variance over time² (Hamilton, 1994; Wei, 1990), an unlikely scenario in many empirical applications. As a result, the distributions of the dynamic parameters may be non-normal, and even asymmetric.

Prior selection is an important issue in Bayesian data analysis. In many cases, conjugate priors are used for practical reasons to yield posterior distributions of known analytic forms (see Lee, 2007). The multivariate normal distribution, for instance, is one such candidate. Despite its appeal, using multivariate normal distributions as conjugate priors may lead to incorrect posterior inferences in cases where the desired posterior distributions deviate substantially from normality. More recently, there have been a few psychometric applications that utilize non-parametric priors in fitting Bayesian models. Such applications are restricted, however, to cases involving cross-sectional SEMs and item response models (e.g., Duncan & MacEachern, 2008; Lee, Lu, & Song, 2007; Navarro, Griffiths, Steyvers, & Lee, 2006), as well as linear dynamic LVMs (Ansari & Iyengar, 2006). Our proposed approach thus extends previous work that utilizes the DP as a non-parametric prior in Bayesian models to dynamic LVMs with nonlinear change processes at the factor level. Due to the categorical nature of the data used in our empirical example, our approach also generalizes previous approaches of fitting linear DFA models to categorical data (Zhang & Browne, 2009; Zhang & Nesselroade, 2007) to cases involving nonlinear DFA models.

The rest of the present paper is organized as follows. We first summarize features of the semiparametric Bayesian dynamic modelling framework adopted in the paper. We then introduce a motivating empirical example and a novel nonlinear DFA model formulated to test a specific theory of emotions. This is followed by an outline of the broader modelling framework within which our illustrative model can be conceived as a special case. We then present the Markov chain Monte Carlo procedures for fitting the broader model, and results from empirical model fitting and a simulation study. We close with some concluding comments.

2. The Dirichlet process as a non-parametric prior

Non-parametric and semiparametric Bayesian models provide a flexible platform for evaluating the tenability of parametric assumptions in dynamic LVMs. Here, we use the term ‘semiparametric models’ to refer to models with known modelling functions, but unknown distributions for some of the modelling components. In particular, we allow the distributions of the dynamic parameters in our model to conform to non-parametric forms.

When one or more of the distributions in a model of interest are of an unknown form, one common approach is to approximate such distributions non-parametrically by using a finite mixture of parametric distributions, such as a mixture of normal distributions (Lindsay, 1995; McLachlan & Peel, 2000; Sorensen & Alspach, 1971; Titterington, Smith, & Makov, 1985). In some instances, it is of substantive importance to interpret the mixture components as different clusters or classes of individuals with similar characteristics. Unfortunately, when the distributions of interest violate the normality assumption (e.g., in cases involving skewed and heavy-tailed distributions), spurious classes are often detected even when there are no systematic between-class differences (Bauer & Curran, 2003). Non-parametric Bayesian models relax this critical dependence on parametric assumptions and, in this way, help ‘robustify’ parametric models (Antoniak, 1974; Ferguson, 1973). They also serve as a platform for assessing the appropriateness of the parametric assumptions in a model of interest (Dunson, 2008; Karabatsos & Walker, 2009).

Suppose that in an application, an r × 1 vector of modelling components of interest, bi, for person i, conforms to an unknown distribution such that bi ~ 𝒫, where 𝒫 is of an unknown form. In practice, bi may correspond to a vector of modelling parameters, a vector of latent trait components in an item response model, or a vector of non-Gaussian residuals. One possible non-parametric approach in the Bayesian framework is to specify a DP prior for 𝒫. That is, we let bi ~ 𝒫 with 𝒫 ~ DP(αP0), where P0 is a base distribution that serves as a starting point for constructing the non-parametric distribution. For instance, the (multivariate) normal distribution is a common choice for P0. The positive quantity α represents the weight a researcher assigns a priori to the base distribution, and it reflects the researcher’s certainty that P0 is the distribution of bi.

The practical consequences of specifying a DP prior can be better understood in terms of Sethuraman’s (1994) stick-breaking representation. The stick-breaking representation provides an alternative way of conceiving 𝒫 ~ DP(αP0) as

$$\mathcal{P}(\cdot) = \sum_{g=1}^{\infty} \pi_g \, \delta_{Z_g}(\cdot), \tag{1}$$

where δZg(·) denotes a unit point mass at Zg, Zg is the gth r × 1 vector of possible values of bi, drawn independently from P0, and πg is a random probability weight between 0 and 1. Equation (1) thus posits that 𝒫 consists of a series of point masses, or ‘sticks’, of various lengths concentrated at different values of Zg. For empirical estimation purposes, a truncated DP may be used to approximate equation (1) as (Ishwaran & James, 2001; Ishwaran & Zarepour, 2000; Lee et al., 2007)

$$\mathcal{P}_G(\cdot) = \sum_{g=1}^{G} \pi_g \, \delta_{Z_g}(\cdot), \tag{2}$$

where πg is obtained as

$$\pi_1 = \nu_1, \qquad \pi_g = \nu_g \prod_{j=1}^{g-1} (1 - \nu_j), \quad g = 2, \ldots, G, \tag{3}$$

with

$$\nu_g \overset{\text{iid}}{\sim} \text{Beta}(1, \alpha), \quad g = 1, \ldots, G - 1, \tag{4}$$

and νG = 1 so that π1 + π2 + ⋯ + πG = 1.

To aid interpretation, consider the simple case where G = 2. In this case, ν2 = 1 and ν1 is a random weight between 0 and 1 drawn from a beta distribution; π1, the proportion or length of the first stick, is equal to ν1 and, by equation (3), π2 = ν2(1 − ν1) = 1 − ν1. That is, ν1 is the proportion of a unit probability stick that is broken off and assigned to Z1, and 1 − ν1 is the remainder of the stick that is assigned to Z2. In practice, the value of G is either set to a large, predetermined value (e.g., G ≥ 150) or chosen empirically. For instance, Ishwaran and Zarepour (2000) suggested that the adequacy of the truncation level, G, can be assessed by evaluating moments of the tail probability, πG = (1 − ν1)(1 − ν2)⋯(1 − νG−1), whose values depend only on G and the hyperparameters that govern the prior distribution of α. A relatively small tail probability is desired, as this indicates that including additional sticks beyond G does not lead to substantial differences in the approximation. Our simulation results suggested that a value of G = 300 is more than adequate for the model considered in the present context.
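The stick-breaking construction and the tail probability used to judge the truncation level can be sketched in a few lines of Python (NumPy). This is a minimal sketch, assuming equation (3) for the weights and Beta(1, α) draws for νg, the standard stick-breaking form of the DP; the function names are hypothetical. The expected tail probability shown is conditional on a fixed α, E(πG | α) = (α/(1 + α))^(G−1), which follows because the factors 1 − νg are independent with mean α/(1 + α); averaging it over a gamma hyperprior for α is not shown.

```python
import numpy as np


def stick_breaking_weights(nu: np.ndarray) -> np.ndarray:
    """Map stick proportions nu_1..nu_G (with nu_G = 1) to weights pi_1..pi_G, equation (3)."""
    nu = np.asarray(nu, dtype=float)
    # Length of stick left before each break: 1, (1 - nu_1), (1 - nu_1)(1 - nu_2), ...
    remaining = np.concatenate(([1.0], np.cumprod(1.0 - nu[:-1])))
    return nu * remaining


def draw_truncated_weights(alpha: float, G: int, rng: np.random.Generator) -> np.ndarray:
    """Draw pi from the truncated stick-breaking prior: nu_g ~ Beta(1, alpha), nu_G = 1."""
    nu = rng.beta(1.0, alpha, size=G)
    nu[-1] = 1.0  # truncation: the last stick absorbs all remaining mass
    return stick_breaking_weights(nu)


def expected_tail_probability(alpha: float, G: int) -> float:
    """E(pi_G | alpha) = (alpha / (1 + alpha)) ** (G - 1), since E(1 - nu_g) = alpha / (1 + alpha)."""
    return (alpha / (1.0 + alpha)) ** (G - 1)


# G = 2 example from the text: pi_1 = nu_1 and pi_2 = 1 - nu_1.
print(stick_breaking_weights(np.array([0.3, 1.0])))  # [0.3 0.7]

rng = np.random.default_rng(1)
pi = draw_truncated_weights(alpha=2.0, G=300, rng=rng)
print(pi.sum())                                       # 1.0 up to round-off
print(expected_tail_probability(alpha=2.0, G=300))    # tiny: truncation at G = 300 is harmless here
```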

A variety of different distributional forms can be approximated by the discrete probability measure 𝒫 through different ways of allocating the weights, πg. The assignment of the weights is, in turn, governed by the random parameter α. Typically, a gamma hyperprior is specified for α, and the associated hyperparameters for this gamma prior reflect a researcher’s best guess as to how πg should be distributed and, consequently, how closely the point masses in equation (2) should resemble the base distribution, P0. Such prior information is then combined with information from the empirical data to shape the resultant posterior distribution of interest.

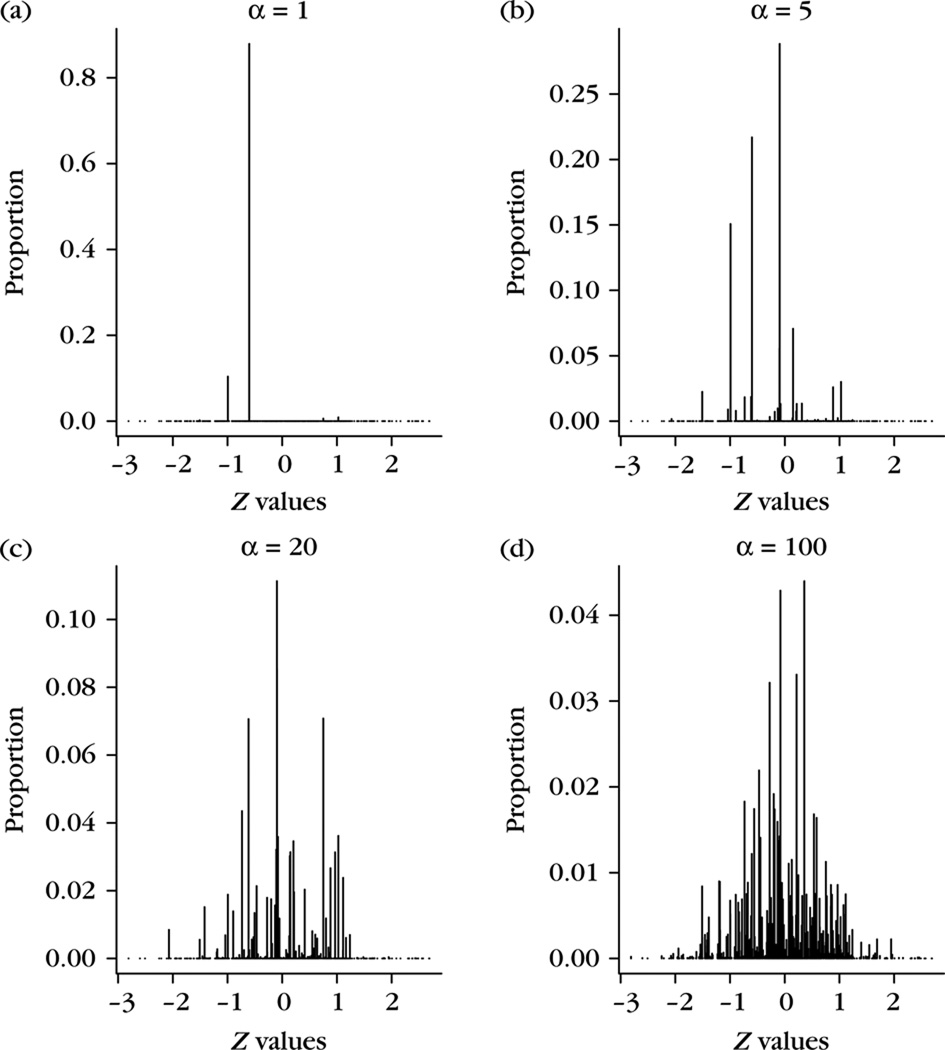

To provide a more concrete illustration of the functional role of α, we generated realizations from the DP with different values of α, G = 300, r = 1 and a univariate N(0,1) base distribution. The associated realizations from 𝒫G(·) are plotted in Figure 1. The plots portray the discrete-valued nature of 𝒫G(·) that results from the use of the DP prior. In addition, as α increases, the draws of νg shift from a roughly uniform distribution to right-skewed distributions concentrated on increasingly small values. Consequently, instead of being dominated by a few large weights, π contains an increasing number of non-negligible values of πg. As a result, progressively more sticks are ‘broken off’ to form more clusters, and more unique values of Zg are observed with non-zero probabilities.

Figure 1.

Plots of realizations from the DP with different values of α and a N(0,1) base distribution.

One important fact to note is that as α gets very large, realizations from 𝒫G(.) show increased resemblance to the standard normal base distribution (see Figure 1d). In fact, in the extreme case where each individual in the sample is assigned to his/her own cluster (not shown here), each individual has one unique set of values for bi and, consequently, bi would be distributed as P0 (Dunson, 2008). In our simulations, we show how the DP can be used as a non-parametric prior for representing a variety of different distributional forms for a set of individual-specific dynamic parameters of interest.
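The kind of experiment summarized in Figure 1 can be approximated with the following sketch, which draws realizations of 𝒫G with a N(0, 1) base distribution for several values of α and reports how many atoms are needed to hold 99% of the probability mass, along with the weighted mean and variance of each realization. The seed, the α grid, the 99% cut-off and the function names are arbitrary illustrative choices, and the printed values are random draws rather than results from the paper.

```python
import numpy as np


def draw_dp_realization(alpha: float, G: int, rng: np.random.Generator):
    """One realization of the truncated DP with a N(0, 1) base distribution (r = 1)."""
    nu = rng.beta(1.0, alpha, size=G)
    nu[-1] = 1.0                                                   # truncation at G sticks
    pi = nu * np.concatenate(([1.0], np.cumprod(1.0 - nu[:-1])))   # equation (3)
    atoms = rng.normal(0.0, 1.0, size=G)                           # Z_g drawn from P0 = N(0, 1)
    return atoms, pi


def atoms_for_mass(pi: np.ndarray, mass: float = 0.99) -> int:
    """Smallest number of atoms whose largest weights jointly reach `mass`."""
    cumulative = np.cumsum(np.sort(pi)[::-1])
    return int(min(np.searchsorted(cumulative, mass) + 1, pi.size))


rng = np.random.default_rng(2011)
for alpha in (0.5, 1.0, 10.0, 100.0):
    atoms, pi = draw_dp_realization(alpha, G=300, rng=rng)
    mean = float(np.sum(pi * atoms))
    var = float(np.sum(pi * atoms**2) - mean**2)
    # Larger alpha typically spreads the mass over more atoms, and the weighted
    # mean and variance of the realization tend to move closer to 0 and 1.
    print(f"alpha={alpha:6.1f}  atoms for 99% mass={atoms_for_mass(pi):3d}  "
          f"mean={mean:+.2f}  var={var:.2f}")
```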

On the technical front, sampling from posterior distributions involving a DP prior can be computationally intensive. One standard approach to enable efficient sampling of bi within a Markov chain Monte Carlo (MCMC) framework is to represent bi in terms of a latent variable, Li, which records each bi’s cluster membership and conveys its values such that bi = ZLi. In other words, the classification variable Li is essentially a set of ‘pointers’ for indicating the values of Zg associated with person i so that bi is known when Li is known. Li is conditionally independent of Zg, and it is distributed as

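$$L_i \mid \pi \overset{\text{iid}}{\sim} \sum_{g=1}^{G} \pi_g \, \delta_g(\cdot), \quad i = 1, \ldots, n. \tag{5}$$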
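Sampling the pointers Li is where the blocked Gibbs sampler gains its efficiency: given π and Z, each Li is drawn from a categorical distribution whose probabilities are proportional to πg times the likelihood of person i's data evaluated at bi = Zg (Ishwaran & James, 2001). The sketch below assumes that these person-by-atom log-likelihoods have already been computed; the numbers used here are hypothetical placeholders, whereas in the proposed model they would depend on the latent trajectories and the dynamic model. Labels are 0-based in the code, whereas the text indexes clusters from 1 to G, and the function names are hypothetical.

```python
import numpy as np


def allocation_probabilities(log_pi: np.ndarray, loglik: np.ndarray) -> np.ndarray:
    """Pr(L_i = g | pi, Z, data) proportional to pi_g * p(data_i | b_i = Z_g).

    log_pi: shape (G,), log stick-breaking weights.
    loglik: shape (n, G), log-likelihood of person i's data if b_i = Z_g
            (a hypothetical input in this sketch).
    """
    log_post = loglik + log_pi[None, :]
    log_post -= log_post.max(axis=1, keepdims=True)  # guard against underflow
    probs = np.exp(log_post)
    return probs / probs.sum(axis=1, keepdims=True)


def sample_allocations(probs: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Draw one 0-based label per person by inverting each row's categorical CDF."""
    u = rng.random(probs.shape[0])[:, None]
    labels = (np.cumsum(probs, axis=1) < u).sum(axis=1)
    return np.minimum(labels, probs.shape[1] - 1)  # protect against round-off at the top


# Hypothetical atoms Z (G = 3 rows, r = 4 columns) and weights pi.
Z = np.array([[0.5, 0.5, -0.1, -0.1],
              [0.3, 0.6, 0.0, -0.2],
              [0.8, 0.4, -0.3, 0.1]])
pi = np.array([0.5, 0.3, 0.2])
loglik = np.array([[-1.0, -4.0, -9.0],   # person 1 fits atom 1 best
                   [-7.0, -0.5, -3.0]])  # person 2 fits atom 2 best
probs = allocation_probabilities(np.log(pi), loglik)
L = sample_allocations(probs, np.random.default_rng(3))
b = Z[L]  # b_i = Z_{L_i}: each person's parameters are read off through its pointer
print(probs.round(3), L, b, sep="\n")
```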

3. Motivating empirical example

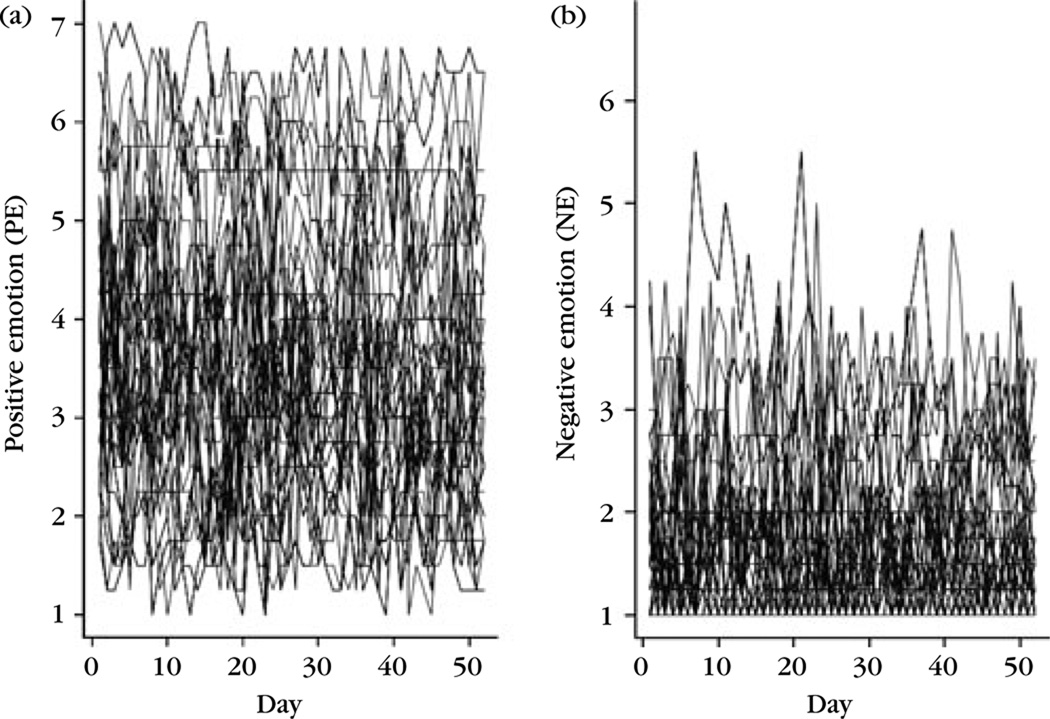

Data used in our illustrative example have been previously published elsewhere (see Chow, Ram, Boker, Fujita, & Clore, 2005; Diener, Fujita, & Smith, 1995; Ram et al., 2005). The sample consisted of 179 college students (98 male and 81 female; average age = 20.24 years, SD = 1.41) who were asked to provide self-report affect ratings daily for 52 days. After excluding participants with excessive missingness and data anomalies, a total of 174 participants were retained in the final analysis. Four ordinal positive emotion (PE) items and four ordinal negative emotion (NE) items measured on a scale from 1 (‘none’) to 7 (‘always’) were used for model fitting purposes. PE items include joy, contentment, love and affection, whereas NE items include unhappiness, anger, depression and anxiety. Individuals’ composite PE and NE scores, derived by summing these items, are plotted in Figure 2.

Figure 2.

(a) PE and (b) NE ratings of the participants over 52 days. To avoid cluttering the figure, we have only plotted the trajectories of 50 randomly sampled individuals.

One linear DFA model that has been used in the past to describe day-to-day changes in PE and NE processes is a process factor analysis model that combines a factor analytic model with a VAR model for describing the relationships among factors (e.g., Chow et al., 2004; Ferrer & Nesselroade, 2003). The dynamics among the latent factors are represented as

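$$
\begin{aligned}
PE_{it} &= b_{11} PE_{i,t-1} + b_{12} NE_{i,t-1} + \zeta_{1it}, \\
NE_{it} &= b_{21} PE_{i,t-1} + b_{22} NE_{i,t-1} + \zeta_{2it}.
\end{aligned}
\tag{6}
$$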

In this model, PEit and NEit are continuous latent variables representing individual i’s underlying PE and NE at time t; ζ1it and ζ2it are process noise terms assumed to follow a multivariate normal distribution; b11 and b22 are the first-order (or lag-one) autoregressive (AR(1)) parameters; and b12 and b21 are the lag-one cross-regression parameters. Note that equation (6) only includes auto- and cross-regressive relationships up to the first order because previous inspection of the partial auto- and cross-correlation plots of the PE and NE sum scores from the current data set suggested that the shorter-term dynamics in the data are captured primarily by the lag-one components.³ Higher lag orders and other linear or nonlinear variations of equation (6) can, however, be readily incorporated as needed in other applications using the broader modelling framework and the associated estimation procedures proposed in the present paper.
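The range restrictions mentioned in the Introduction can be checked directly for a VAR(1) such as equation (6): the process is stable (covariance-stationary) when all eigenvalues of its transition matrix lie strictly inside the unit circle. The sketch below assumes that the coefficients are arranged as [[b11, b12], [b21, b22]] acting on (PEi,t−1, NEi,t−1)′, and all parameter values and function names are hypothetical. The third example shows why the restrictions are complex: every coefficient is smaller than 1 in absolute value, yet the system is explosive.

```python
import numpy as np


def transition_matrix(b11: float, b22: float, b12: float, b21: float) -> np.ndarray:
    """Arrange the coefficients of equation (6) as [[b11, b12], [b21, b22]]."""
    return np.array([[b11, b12], [b21, b22]])


def spectral_radius(B: np.ndarray) -> float:
    """Largest absolute eigenvalue of the VAR(1) transition matrix."""
    return float(np.max(np.abs(np.linalg.eigvals(B))))


def is_stationary(B: np.ndarray) -> bool:
    """A VAR(1) with transition matrix B is stable (covariance-stationary)
    exactly when all eigenvalues of B lie strictly inside the unit circle."""
    return spectral_radius(B) < 1.0


stable = transition_matrix(0.5, 0.5, -0.1, -0.1)    # eigenvalues 0.4 and 0.6
explosive = transition_matrix(1.05, 0.5, 0.0, 0.0)  # eigenvalue 1.05: variance grows over time
joint = transition_matrix(0.6, 0.6, 0.5, 0.5)       # every |b| < 1, yet eigenvalues are 1.1 and 0.1
print(is_stationary(stable), is_stationary(explosive), is_stationary(joint))  # True False False
```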

Whereas the model shown in equation (6) is a common linear choice for capturing the kinds of relatively rapid fluctuations seen in affect data, we constructed a nonlinear extension of the VAR(1) model in equation (6) to test a theoretically driven model of emotions. Specifically, in their study of affect, Zautra, Reich, Davis, Nicolson, and Potter (2000) proposed a dynamic affect model which postulates that the relative separation between PE and NE changes dynamically as a function of stress. That is, elevated stress is associated with ‘shrinkage’ of the affective space and the coalescence of positive and negative emotions into a unipolar dimension. Thus, the dynamic affect model suggests that the linkage between PE and NE strengthens with stress and weakens at lower levels of stress. Because we did not have a time-varying indicator of stress, we expressed the dynamic affect model mathematically as

| (7) |

where b11,i and b22,i are individual i’s baseline AR(1) parameters, which apply when the lagged factor values, PEi,t−1 and NEi,t−1, are extremely low. If an individual’s PE (or NE) was high at time t − 1, the high PE (NE) affects the subsequent dynamics of the individual’s NE (PE) by altering the AR(1) parameter associated with the latter. The parameters b12,i and b21,i are the person-specific deviations in the AR(1) parameters for PEit and NEit, respectively, when the lagged factors were at extremely high values at time t − 1. For instance, under high stress, an individual is likely to experience a high level of NE. The high NE, in turn, changes the dynamics of PE at the next time point by altering the value of PE’s AR(1) parameter by a magnitude of b12,i. Whether a reciprocal effect from PE to NE also exists at very high values of PE is reflected in the magnitude of b21,i.

In sum, the nonlinear VAR(1) model in equation (7) extends the VAR(1) component of the linear DFA model (see equation (6)) in three ways. First, the AR(1) parameter of each factor is now a time-varying function of the other factor at the previous time point, so that the dynamic model becomes nonlinear. Second, we allow four of the dynamic parameters, namely, bi = (b11,i, b22,i, b12,i, b21,i)′, to vary over persons. Third, we use a DP prior for bi to allow these parameters to conform to non-parametric distributional forms.

4. Dynamic latent variable modelling framework

The nonlinear VAR(1) model in equation (7) can be formulated as a special case of a nonlinear state-space model. The state-space representation provides a flexible framework for representing different dynamic processes and subsumes many dynamic and time series models as special cases. Because latent variables are still the focus of our formulation, we refer to our modelling framework as the dynamic latent variable modelling framework. The resultant modelling framework comprises a dynamic model and a measurement model, which will be described next.

4.1. Dynamic model

The general dynamic model on which the proposed estimation procedures are based is expressed as

$$\eta_{it} = f_t(\eta_{i,t-1}, b_i, \theta_\eta) + \zeta_{it}, \tag{8}$$

where i is the person index and t is the time index; ηit is a w × 1 vector of latent variables of interest; ζit is a vector of process noise components; and ft(·) is a vector of time-varying, differentiable linear or nonlinear functions describing the latent variables at time t in terms of three components: (1) their previous history at time t − 1, (2) bi, an r × 1 vector of person-specific parameters, and (3) a vector of parameters that is held invariant over time and persons, denoted as θη. In our motivating example, ηit = (PEit, NEit)′, bi = (b11,i, b22,i, b12,i, b21,i)′ and θη = (Ψζ), where Ψζ is the covariance matrix of ζit. We specify a DP prior for bi, and set the base distribution, P0, to be an r-variate normal distribution with mean vector μZ and covariance matrix ΨZ.
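Equation (8) treats ft as a function of the previous state and the person-specific parameters. A minimal Python sketch of that interface, using the linear VAR(1) of equation (6) as the special case, simulates latent trajectories for several persons whose bi are read off hypothetical DP atoms through pointers Li. Gaussian process noise with covariance Ψζ, an initial state drawn from N(0, Ψζ), the omission of θη from the function signature, and all names and values are simplifying assumptions of the sketch.

```python
from typing import Callable

import numpy as np

# Hypothetical signature for f_t in equation (8): (eta_prev, b_i, t) -> mean of eta_it.
DynamicFn = Callable[[np.ndarray, np.ndarray, int], np.ndarray]


def var1_dynamics(eta_prev: np.ndarray, b: np.ndarray, t: int) -> np.ndarray:
    """Linear special case (equation (6)) with b = (b11, b22, b12, b21)."""
    b11, b22, b12, b21 = b
    return np.array([[b11, b12], [b21, b22]]) @ eta_prev


def simulate_dynamic_model(f: DynamicFn, b: np.ndarray, psi_zeta: np.ndarray,
                           T: int, rng: np.random.Generator) -> np.ndarray:
    """Simulate eta_it = f_t(eta_{i,t-1}, b_i) + zeta_it with zeta_it ~ N(0, psi_zeta).

    b has shape (n, r); the result has shape (n, T, w). The initial state is
    drawn from N(0, psi_zeta), an arbitrary choice for this sketch.
    """
    n, w = b.shape[0], psi_zeta.shape[0]
    zeta = rng.multivariate_normal(np.zeros(w), psi_zeta, size=(n, T))
    eta = np.empty((n, T, w))
    eta[:, 0] = zeta[:, 0]
    for i in range(n):
        for t in range(1, T):
            eta[i, t] = f(eta[i, t - 1], b[i], t) + zeta[i, t]
    return eta


rng = np.random.default_rng(0)
Z = np.array([[0.5, 0.5, -0.1, -0.1], [0.3, 0.6, 0.0, -0.2]])  # hypothetical atoms
L = rng.choice(2, size=5, p=[0.6, 0.4])                          # hypothetical pointers
psi_zeta = np.array([[1.0, -0.3], [-0.3, 1.0]])                  # hypothetical noise covariance
eta = simulate_dynamic_model(var1_dynamics, Z[L], psi_zeta, T=52, rng=rng)
print(eta.shape)  # (5, 52, 2)
```

Swapping in a nonlinear function with the same signature is all that is needed to simulate from another special case of equation (8).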

Beyond our illustrative model, a variety of other dynamic functions can be readily specified as special cases of equation (8). Some examples include unobserved components models with cyclic, seasonal, and irregular components (Durbin & Koopman, 2001; Harvey, 2001), non-parametric spline models (De Jong & Mazzi, 2001), and exact discrete time models (Harvey, 2001). VAR models of higher lags and other related vector autoregressive moving average (VARMA) extensions can also be formulated as special cases of equation (8) by expanding the size of ηit to include higher-order lag components.
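The state-expansion device for higher-order lags can be illustrated with the usual companion-matrix construction: a VAR(2) in ηt is rewritten as a VAR(1) in the stacked state (ηt, ηt−1). The sketch below uses hypothetical coefficient matrices and a hypothetical function name, and checks that one step of the companion form reproduces the VAR(2) recursion.

```python
import numpy as np


def companion_matrix(coefs: list[np.ndarray]) -> np.ndarray:
    """Stack VAR(p) coefficient matrices A_1, ..., A_p (each w x w) into the
    (w*p) x (w*p) companion matrix, so that the VAR(p) becomes a VAR(1) in the
    expanded state (eta_t, eta_{t-1}, ..., eta_{t-p+1})."""
    p = len(coefs)
    w = coefs[0].shape[0]
    C = np.zeros((w * p, w * p))
    C[:w, :] = np.hstack(coefs)       # first block row: A_1 ... A_p
    C[w:, :-w] = np.eye(w * (p - 1))  # identity blocks carry the lags forward
    return C


A1 = np.array([[0.5, 0.1], [0.0, 0.4]])  # hypothetical lag-1 coefficients
A2 = np.array([[0.2, 0.0], [0.0, 0.1]])  # hypothetical lag-2 coefficients
C = companion_matrix([A1, A2])

eta_t, eta_tm1 = np.array([1.0, -1.0]), np.array([0.5, 0.2])
state_next = C @ np.concatenate([eta_t, eta_tm1])
# Top block equals A1 @ eta_t + A2 @ eta_tm1; bottom block is the shifted eta_t.
print(state_next)
# The VAR(2) is stable when all eigenvalues of C lie inside the unit circle.
print(np.max(np.abs(np.linalg.eigvals(C))) < 1.0)
```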

4.2. Measurement model

Motivated by our empirical data of interest, we now consider a measurement model for ordinal data and elaborate briefly, where appropriate, on how the proposed framework can be extended to include mixed responses. In the conventional state-space framework, the measurement model is used to specify the relationships among a set of latent and manifest variables. In cases involving ordinal manifest data, the same measurement model is defined in terms of a vector of underlying, continuous latent variables as

$$y^*_{it} = \mu + \Lambda \eta_{it} + \varepsilon_{it}, \tag{9}$$

where y*it is a p × 1 vector of unobserved continuous response variables underlying yit, the p × 1 vector of manifest ordinal data, and εit is a vector of uniquenesses. The vector of time-invariant parameters in the measurement model, denoted by θε, includes elements in the vector of intercepts, μ, the p × w matrix of factor loadings, Λ, and Ψε, the p × p covariance matrix of εit.

The unobserved continuous latent vector, y*it, is linked to the manifest ordinal data, yit, by

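$$y_{it,k} = h \quad \text{if} \quad \tau_{k,h-1} \le y^*_{it,k} < \tau_{k,h}, \qquad h = 1, \ldots, M, \tag{10}$$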

where τk,h is a set of threshold values held invariant across persons and time, with

$$-\infty = \tau_{k,0} < \tau_{k,1} < \cdots < \tau_{k,M-1} < \tau_{k,M} = \infty. \tag{11}$$

That is, for the kth ordinal variable, yit,k, with M categories, there are M − 1 threshold parameters. The underlying variable approach summarized in equations (9)–(11) has been shown to be equivalent to the generalized latent trait approach often adopted in the item response theory framework (Bartholomew & Knott, 1999; Jöreskog & Moustaki, 2001).⁴ Since only ordinal information is used to identify y*it, additional constraints need to be imposed to identify the model. For instance, the lowest and highest threshold values of each item can be fixed rather than freely estimated (Lee & Zhu, 2000). This is the identification constraint adopted in the present study.
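The threshold link in equations (10) and (11) amounts to locating y*it,k among the ordered cut-points. The sketch below uses NumPy's searchsorted to do this for one item; as an illustration, it takes the thresholds of the first PE item, combining the fixed lowest and highest values from the note to Table 1 with the posterior means of the free thresholds reported there. The function name is hypothetical.

```python
import numpy as np


def to_ordinal(y_star: np.ndarray, thresholds: np.ndarray) -> np.ndarray:
    """Equation (10): y = h if tau_{h-1} <= y* < tau_h, for h = 1, ..., M.

    `thresholds` holds the M - 1 finite cut-points tau_1 < ... < tau_{M-1} of one
    item; tau_0 = -inf and tau_M = +inf are implicit.
    """
    # side="right" returns the number of thresholds <= y*, which equals h - 1.
    return np.searchsorted(thresholds, y_star, side="right") + 1


# Item 1: fixed tau_{1,1} and tau_{1,6} plus posterior means of tau_{1,2..5} (Table 1).
tau_item1 = np.array([-0.71, -0.21, 0.39, 0.80, 1.39, 2.16])
print(to_ordinal(np.array([-1.0, 0.0, 0.39, 3.0]), tau_item1))  # [1 3 4 7]
```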

5. Bayesian estimation procedures

Throughout, we define θ = (θε, θη), π = (π1, …, πG), L = (L1, …, Ln), H = (η1, …, ηn) with ηi = (ηi1, …, ηiT)′ as the array of latent variables, τ = (τ1,2, …, τ1,M−2, …, τp,M−2) as the vector of freely estimated threshold parameters, and b = (b1, …, bn) as the array of all person-specific parameters. In addition, we denote Y = (Y1, Y2, …, Yn) ≜ {Yobs, Ymis}, where Yobs is a data array containing all observed ordinal responses from all persons and time points and Ymis is a data array containing all missing observations, with Yi = (yi1, yi2, …, yiT)′ ≜ {yi,obs, yi,mis} being the data matrix that includes person i’s observed and missing manifest ordinal data up to time T. The corresponding array of underlying continuous data is denoted by Y*. Assuming that the data are missing at random with an ignorable missingness mechanism (Little & Rubin, 1987), estimation of all parameters and latent variables is based only on the observed data set, Yobs.

The general model summarized in equations (7)–(11) can be rewritten as

where SB(.) denotes the stick-breaking process expressed in equation (3).

We specified the prior distributions for our modelling components as

| (12) |

where c is a constant used to specify a diffuse prior for the threshold parameters and denotes the kth row of Λ (for k = 1, …, p). The components are all hyperparameters whose values are assumed to be known. Thus, with the exception of the threshold parameters, which were assigned a non-informative prior, standard conjugate priors were specified for all other parametric components in the model. Such priors were thought to provide a reasonable representation of the characteristics of these components, and the associated hyperparameters can be determined in a relatively straightforward manner based on previous applications. Details of our hyperparameter choices are discussed later as we present the empirical results.

The Gibbs sampler will be used to simulate a sequence of random observations from the joint posterior distribution p(π, Z, L, μZ, ΨZ, α, H, θ, τ, Y*, Ymis|Yobs), where Z = (Z1, …, ZG) is a G × r matrix whose gth row holds Zg, the gth candidate value of bi. At the first iteration, samples of Z are drawn from the base distribution, P0. Then, starting from a set of initial values, the Gibbs sampler involves sampling sequentially, for each subsequent iteration q + 1 (until the last iteration, Q), as follows:

(a) Generate .

(b) Generate .

(c) Generate α(q+1) from p(α|π(q)).

(d) Generate (π(q+1), Z(q+1)) from .

(e) Generate L(q+1) from p(L|π(q+1), Z(q+1), τ(q), Y*(q), θ(q), H(q), Yobs).

(f) Generate H(q+1) from p(H|τ(q), Y*(q), θ(q), Yobs, b(q+1)).

(g) Generate θ(q+1) from p(θ|τ(q), Y*(q), H(q+1), Yobs).

(h) Generate .

(i) Generate .

In sum, the Gibbs sampler involves sampling from a series of conditional distributions, with each of the modelling components updated in turn. The conditional distributions needed to implement steps (a)–(i) are summarized in the Appendix. When the associated conditional distributions are of known analytic forms (steps (a)–(c), (e), (g) and (h)), sampling from them is straightforward. In the remaining cases (steps (d), (f) and (i)), Metropolis–Hastings (MH) algorithms are used within the Gibbs sampler to sample from the corresponding conditional distributions. Steps (a)–(e), in particular, are all part of a blocked Gibbs sampling procedure for deriving posterior samples of bi. Furthermore, no additional step is included to sample the missing ordinal data, Ymis. Because of the assumption of missingness at random, all that is needed are samples of the missing latent continuous data array, , to yield posterior samples of , obtained respectively from steps (i) and (h) and subsequently utilized in other sampling steps.
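For readers implementing steps (c) and (d), the weight-related parts of the blocked Gibbs sampler have closed forms under the truncated stick-breaking prior. The sketch below uses the standard conditionals from Ishwaran and James (2001), νg | L, α ~ Beta(1 + ng, α + Σl>g nl) for g < G, where ng is the number of persons allocated to atom g, and α | π ~ Gamma(a1 + G − 1, a2 − Σg<G log(1 − νg)). It assumes that the gamma hyperprior for α uses a shape-rate (a1, a2) parameterization. The exact conditionals used in this paper are given in its Appendix, which is not reproduced here; the update of the atoms Z, which requires MH steps in the nonlinear model, is omitted, and the function names and inputs are hypothetical.

```python
import numpy as np


def update_stick_weights(labels: np.ndarray, alpha: float, G: int, rng: np.random.Generator):
    """Blocked-Gibbs draw of (nu, pi) given 0-based allocations L.

    nu_g | L, alpha ~ Beta(1 + n_g, alpha + sum_{l > g} n_l) for g < G, and nu_G = 1.
    """
    counts = np.bincount(labels, minlength=G)      # n_g
    later = counts[::-1].cumsum()[::-1] - counts   # sum_{l > g} n_l
    nu = np.append(rng.beta(1.0 + counts[:-1], alpha + later[:-1]), 1.0)
    pi = nu * np.concatenate(([1.0], np.cumprod(1.0 - nu[:-1])))  # equation (3)
    return nu, pi


def update_alpha(nu: np.ndarray, a1: float, a2: float, rng: np.random.Generator) -> float:
    """alpha | nu ~ Gamma(shape = a1 + G - 1, rate = a2 - sum_{g<G} log(1 - nu_g)),
    assuming a Gamma(a1, a2) prior in the shape-rate parameterization."""
    G = nu.size
    rate = a2 - np.sum(np.log1p(-nu[:-1]))
    return float(rng.gamma(shape=a1 + G - 1, scale=1.0 / rate))  # NumPy uses scale = 1 / rate


rng = np.random.default_rng(42)
labels = np.array([0, 0, 1, 3, 3, 3])  # hypothetical allocations of n = 6 persons
nu, pi = update_stick_weights(labels, alpha=1.0, G=10, rng=rng)
alpha_new = update_alpha(nu, a1=2.0, a2=1.0, rng=rng)
print(pi.round(3), alpha_new)
```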

6. Empirical results

The nonlinear DFA model (see equations (7) and (9)–(11)) was fitted to the empirical data. Four ordinal items were used to identify PEit, individual i’s latent positive emotion, and four ordinal items were used to identify NEit, individual i’s latent negative emotion. We ran three independent Markov chains with different starting values, which yielded similar results. We report here the results aggregated across the three chains.

For identification purposes, we set τk,1 to Φ−1 (nk,1/n) and τk,6 to , where Φ−1(.) denotes the inverse standard normal cumulative distribution function and nk,h represents the number of responses endorsing category h on item k across all persons and time points. The hyperparameter values of the prior distributions (see equation (12)) were specified as follows. A diffuse prior was specified for all of the threshold parameters, so c can be set to any arbitrary constant value without affecting the resultant posterior distributions of the threshold parameters. We set μ0 to an 8 × 1 vector of ones, Σ0 to I8, w0 to 10, and to

Further, letting k and j denote the row and column, respectively, of the factor loading matrix, Λ, Λ0kj = 0.8 for each of the freed elements in the factor loading matrix (j = 1 for k = 2, 3, and 4; j = 2 for k = 6, 7, and 8) and H0Λkj = 1 for each of the freed elements in the factor loading matrix. For the conjugate priors of the measurement error variances, we set α0εk to 8 and β0εk to 10 to yield variance values that were relatively large and diffuse.

To ensure that the approximations obtained from posterior samples of the nonparametric components were not unduly influenced by the choice of our hyperparameters, we allowed some of the hyperparameters that governed the base distribution to vary randomly across the three independent Markov chains. Specifically, based on previous results from fitting VAR(1) models to sum scores from the present data set with parametric assumptions, we set μZ0j to 0.5 for j = 1 and 2 (i.e., corresponding to b11,i and b22,i) and to −0.1 for j = 3 and 4 (i.e., corresponding to b12,i and b21,i). Elements in ΨμZ were sampled randomly from a Unif(1, 10) distribution for b11,i and b22,i and a Unif(1, 12) distribution for b12,i and b21,i for each independent Markov chain. Furthermore, we set c1 to 10, and allowed c2 to be sampled randomly from a Unif(3, 7) distribution for elements in that corresponded to b11,i and b22,i, and from a Unif(0.5, 4) distribution for those that corresponded to b12,i and b21,i. Note that the different hyperparameter choices for elements in bi were necessary because we expected b12,i and b21,i to conform to much narrower ranges than the baseline autoregression parameters in stable systems (namely, systems that do not show increasing variance over time). With respect to hyperparameters for the prior distribution of α, we set a1 to 250 and a2 to 1 to yield large values of α (and consequently, more unique bi values) to capture some of the more subtle individual differences in these dynamic parameters.
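The fixed lowest threshold, τk,1 = Φ−1(nk,1/n), can be computed from pooled category counts as in the sketch below, which uses the standard library's NormalDist. Treating n as the total number of responses to item k across persons and time points is an assumption of the sketch, the counts and function name are hypothetical, and the formula for the fixed highest threshold is not reproduced here.

```python
from statistics import NormalDist


def lowest_threshold(category_counts: list[int]) -> float:
    """tau_{k,1} = Phi^{-1}(n_{k,1} / n) for one item.

    category_counts[h - 1] is n_{k,h}, the number of responses in category h
    pooled over persons and time points; n is taken here to be their total
    (an assumption about the normalizing count).
    """
    n = sum(category_counts)
    return NormalDist().inv_cdf(category_counts[0] / n)


# Hypothetical counts for a 7-category item (not the study data).
counts = [240, 150, 180, 160, 120, 90, 60]
print(round(lowest_threshold(counts), 3))
```

Note that inv_cdf raises an error if the lowest category is empty, so an item with no responses in category 1 would need separate handling.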

We computed the estimated potential scale reduction (EPSR; Gelman, 1996) values based on the three independent Markov chains, each initialized with different starting values for the person-invariant parameters and different hyperparameter values as described above. The EPSR values for all person-invariant parameters fell below 1.2, and the corresponding parameter estimates from the different chains stabilized, within 200 iterations. To give the chains sufficient time to recover the shapes of the distributions of the person-specific parameters, we nonetheless used 18,000 burn-in iterations.
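The EPSR compares between-chain and within-chain variability. The sketch below implements the basic Gelman–Rubin form, R̂ = sqrt(V̂/W) with V̂ = ((n − 1)/n)W + B/n, for a single scalar parameter; it omits the degrees-of-freedom correction that some versions include, so it may differ slightly from the values reported in the paper. The function name is hypothetical and the chains in the usage example are simulated.

```python
import numpy as np


def epsr(chains) -> float:
    """Basic Gelman-Rubin potential scale reduction for one scalar parameter.

    chains: array of shape (m, n) holding n post-burn-in draws from each of m chains.
    """
    chains = np.asarray(chains, dtype=float)
    m, n = chains.shape
    B = n * chains.mean(axis=1).var(ddof=1)    # between-chain variance
    W = chains.var(axis=1, ddof=1).mean()      # mean within-chain variance
    var_hat = (n - 1) / n * W + B / n          # pooled estimate of the posterior variance
    return float(np.sqrt(var_hat / W))


rng = np.random.default_rng(5)
mixed = rng.normal(size=(3, 4000))                 # three chains sampling the same target
stuck = mixed + np.array([[0.0], [0.0], [3.0]])    # one chain stuck in a different region
print(round(epsr(mixed), 3), round(epsr(stuck), 3))  # close to 1 versus well above 1.2
```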

The posterior predictive probability (Gelman, Meng, & Stern, 1996; Lee & Zhu, 2000; Meng, 1994) of the fitted model computed using posterior samples of the continuous data array, Y*, averaged .67, indicating that the model provided a reasonable fit to the data. Estimates of all person- and time-invariant parameters obtained using 4,000 additional iterations after burn-in are summarized in Table 1. These estimates were averages taken over the three independent chains.
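A posterior predictive probability of this kind is the proportion of posterior draws for which a discrepancy computed on replicated data is at least as large as the same discrepancy computed on the (latent) data. The sketch below uses a toy normal model and a chi-square-type discrepancy as hypothetical stand-ins; the discrepancy function used for the DFA model is not specified in this section, and the function names are hypothetical.

```python
import numpy as np


def chi_square_discrepancy(y, mean, var) -> float:
    """Hypothetical discrepancy: sum of squared standardized residuals."""
    return float(np.sum((np.asarray(y) - mean) ** 2 / var))


def posterior_predictive_pvalue(d_observed, d_replicated) -> float:
    """Share of posterior draws q with D(Y_rep^(q), theta^(q)) >= D(Y^(q), theta^(q))."""
    return float(np.mean(np.asarray(d_replicated) >= np.asarray(d_observed)))


# Toy normal model standing in for the fitted DFA model (all values hypothetical).
rng = np.random.default_rng(7)
y = rng.normal(size=200)
d_obs, d_rep = [], []
for _ in range(1000):
    mu_q = rng.normal(y.mean(), 1.0 / np.sqrt(y.size))  # posterior draw of the mean
    y_rep = rng.normal(mu_q, 1.0, size=y.size)         # replicated data under that draw
    d_obs.append(chi_square_discrepancy(y, mu_q, 1.0))
    d_rep.append(chi_square_discrepancy(y_rep, mu_q, 1.0))
# Values far from 0 and 1 indicate no gross misfit of the toy model.
print(posterior_predictive_pvalue(d_obs, d_rep))
```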

Table 1.

Bayesian estimates of time- and person-invariant parameters from fitting the nonlinear DFA model to empirical data using the DP prior

| Parameters | Mean | SD | 5th percentile | 95th percentile |
|---|---|---|---|---|
| [λ21, λ31, λ41] | [1.08, 1.03, 0.86] | [0.02, 0.02, 0.02] | [1.05, 1.00, 0.84] | [1.11, 1.06, 0.89] |
| [λ62, λ72, λ82] | [1.03, 0.77, 0.69] | [0.04, 0.03, 0.02] | [0.97, 0.72, 0.65] | [1.09, 0.83, 0.73] |
| ψε1 | 0.41 | 0.01 | 0.39 | 0.43 |
| ψε2 | 0.35 | 0.01 | 0.34 | 0.37 |
| ψε3 | 0.40 | 0.01 | 0.38 | 0.41 |
| ψε4 | 0.55 | 0.01 | 0.53 | 0.57 |
| ψε5 | 0.43 | 0.02 | 0.39 | 0.47 |
| ψε6 | 0.29 | 0.01 | 0.26 | 0.31 |
| ψε7 | 0.54 | 0.03 | 0.48 | 0.60 |
| ψε8 | 0.63 | 0.02 | 0.60 | 0.66 |
| μ1 | 0.05 | 0.03 | 0.01 | 0.09 |
| μ2 | 0.03 | 0.03 | −0.02 | 0.08 |
| μ3 | 0.03 | 0.03 | −0.01 | 0.08 |
| μ4 | 0.01 | 0.03 | −0.03 | 0.05 |
| μ5 | 0.12 | 0.03 | 0.08 | 0.17 |
| μ6 | 0.13 | 0.03 | 0.09 | 0.19 |
| μ7 | 0.10 | 0.02 | 0.06 | 0.14 |
| μ8 | 0.10 | 0.02 | 0.05 | 0.13 |
| [τ12, τ13, τ14, τ15] | [−0.21, 0.39, 0.80, 1.39] | [0.01, 0.02, 0.02, 0.02] | [−0.23, 0.36, 0.77, 1.35] | [−0.19, 0.42, 0.83, 1.42] |
| [τ22, τ23, τ24, τ25] | [−1.16, −0.47, 0.12, 0.75] | [0.02, 0.02, 0.02, 0.02] | [−1.19, −0.49, 0.09, 0.72] | [−1.13, −0.44, 0.15, 0.78] |
| [τ32, τ33, τ34, τ35] | [−0.88, −0.31, 0.21, 0.88] | [0.01, 0.01, 0.02, 0.02] | [−0.90, −0.34, 0.18, 0.85] | [−0.86, −0.29, 0.23, 0.91] |
| [τ42, τ43, τ44, τ45] | [−0.60, 0.20, 0.66, 1.22] | [0.01, 0.02, 0.02, 0.02] | [−0.62, 0.17, 0.62, 1.18] | [−0.58, 0.22, 0.69, 1.25] |
| [τ52, τ53, τ54, τ55] | [0.91, 1.73, 2.24, 2.71] | [0.03, 0.05, 0.06, 0.06] | [0.86, 1.65, 2.15, 2.61] | [0.95, 1.81, 2.33, 2.80] |
| [τ62, τ63, τ64, τ65] | [0.68, 1.48, 1.95, 2.45] | [0.02, 0.04, 0.05, 0.05] | [0.64, 1.42, 1.87, 2.35] | [0.72, 1.55, 2.04, 2.54] |
| [τ72, τ73, τ74, τ75] | [0.80, 1.65, 2.14, 2.66] | [0.03, 0.06, 0.07, 0.08] | [0.74, 1.55, 2.01, 2.52] | [0.85, 1.74, 2.25, 2.79] |
| [τ82, τ83, τ84, τ85] | [0.20, 0.98, 1.48, 1.95] | [0.02, 0.02, 0.03, 0.03] | [0.18, 0.94, 1.43, 1.90] | [0.23, 1.02, 1.53, 2.00] |
| ϕ11 | 0.11 | 0.00 | 0.10 | 0.11 |
| ϕ12 | −0.10 | 0.01 | −0.11 | −0.09 |
| ϕ22 | 0.23 | 0.01 | 0.21 | 0.25 |

Note. The values of the lowest thresholds of the ordinal items, τ11, τ21, τ31, τ41, τ51, τ61, τ71, τ81, were set to −0.71, −1.88, −1.44, −1.34, −0.33, −0.26, −0.13, and −0.67, respectively. The values of the highest thresholds of the ordinal items, τ16, τ26, τ36, τ46, τ56, τ66, τ76, τ86, were set to 2.16, 1.71, 1.72, 1.91, 3.09, 2.95, 3.12, and 2.44, respectively.

Consistent with previously published results (Ram et al., 2005), all the PE and NE indicators showed relatively little differentiation among the middle categories (i.e., distances between thresholds were small). High levels of NE were relatively rare, so the threshold values of the NE items clustered around relatively high magnitudes. The baseline levels (as indicated by values of μ) were estimated to be close to zero, although the 90% credible intervals for the intercepts of the NE items (μ5 to μ8) excluded zero. At the factor level, PE and NE were found to be weakly negatively correlated, as would be expected from previous findings concerning the structure of emotions.

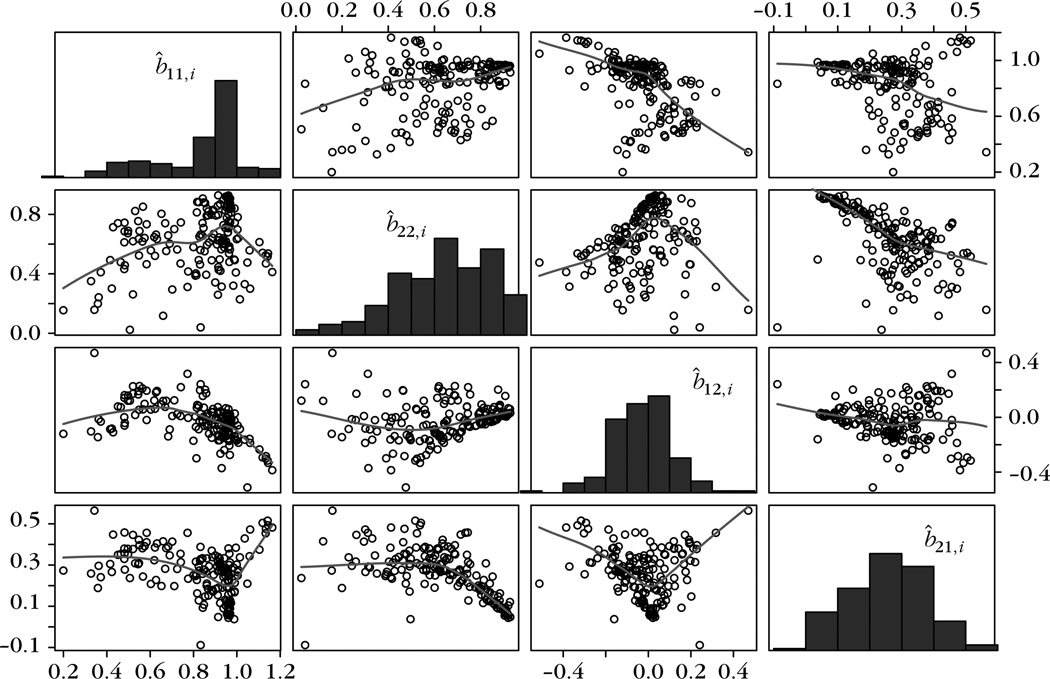

We obtained estimates of each person’s parameters in bi, denoted below as b̂i, by averaging posterior samples from the distribution p(ZLi |Li, μZ, ΨZ, τ, Y*, θ, H, Yobs) as

$$\hat{\mathbf{b}}_i = \frac{1}{Q}\sum_{q=1}^{Q}\mathbf{Z}^{(q)}_{L_i^{(q)}}, \qquad (13)$$

where q = 1 denotes the first iteration after burn-in and Q is the total number of post-burn-in iterations. Distributions of these b̂i estimates across participants (for i = 1, …, n) were the focus of our interest.⁵ These estimates showed very similar distributional forms across the three Markov chains, even with different hyperparameter choices. Figure 3 shows matrix scatterplots of the b̂i estimates averaged across the three chains, together with the corresponding histograms. Based on the plots, the distributions of the person-specific autoregressive parameters, b̂11,i and b̂22,i, were both highly skewed. Most individuals' estimated autoregressive parameters lay in the moderate to high range (from around 0.5 to close to 1.0), with a small number of individuals showing near-zero autoregressive estimates. That is, the latter subgroup of individuals tended to show very little stability or continuity in their PE and NE from day to day.
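Equation (13) and the per-parameter summaries reported in the Figure 3 caption can be sketched as follows. This sketch rests on three assumptions of ours:

- the array layout;
- averaging the three chains' per-person estimates;
- reading the caption's "90% CI" as the 5th and 95th percentiles of b̂i across persons.

The draws are simulated stand-ins, not output from the fitted model.

```python
import numpy as np


def person_estimates(draws):
    """Equation (13): average the post-burn-in draws of Z_{L_i} for each person.

    draws has shape (Q, n, r): Q post-burn-in iterations, n persons,
    r person-specific dynamic parameters (r = 4 for b11, b22, b12, b21).
    """
    return np.asarray(draws, dtype=float).mean(axis=0)  # shape (n, r)


def summarize_across_persons(b_hat):
    """Mean, SD, and 5th/95th percentiles of the b_hat estimates across persons."""
    return {
        "M": b_hat.mean(axis=0),
        "SD": b_hat.std(axis=0, ddof=1),
        "interval_90": np.percentile(b_hat, [5, 95], axis=0),  # shape (2, r)
    }


rng = np.random.default_rng(13)
n, r, Q = 170, 4, 500
b_true = rng.normal(0.6, 0.2, size=(n, r))  # hypothetical person-specific values
# Three hypothetical chains whose draws scatter around each person's value
chains = [b_true + rng.normal(0.0, 0.05, size=(Q, n, r)) for _ in range(3)]
b_hat = np.mean([person_estimates(c) for c in chains], axis=0)  # average over chains
summary = summarize_across_persons(b_hat)
```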

Figure 3.

Matrix scatterplots of the b̂i estimates using the DP prior, as averaged across three Markov chains. A loess line is superimposed on each scatterplot. The diagonal plots are histograms of these estimates. For b11,i, M = 0.83, SD = 0.20, 90% CI [0.44, 1.05]; for b22,i, M = 0.64, SD = 0.21, 90% CI [0.26, 0.91]; for b12,i, M = −0.03, SD = 0.14, 90% CI [−0.28, 0.20]; and for b21,i, M = 0.26, SD = 0.12, 90% CI [0.06, 0.46].

The 90% credible interval⁶ associated with b̂12,i included zero, but that for b̂21,i lay just above zero. Thus, on average, the present sample showed unidirectional coupling from PE to NE when PE was at high values, but no coupling in the reverse direction when NE was high. This finding provided partial support for the dynamic affect model postulated by Zautra et al. (2000). That is, we found an increased linkage between PE and NE at high values of PE, but the association was driven more by the lagged influence of PE on NE.

Allowing the distributions of the person-specific parameters to deviate from normality also helped reveal novel interrelationships among the auto- and cross-regression parameters. First, although most individuals’ b̂12,i estimates clustered around zero, individuals who showed strong continuity in PE (with b11,i estimates close to or above 1.0) tended to also show negative lagged influence from NE to PE. That is, high values of NE served to dampen the fluctuations in PE and bring it back towards its baseline.

A slightly different scenario was observed in the individuals' NE regulation. Almost all individuals showed a positive cross-regression weight from PE at time t − 1 to NE at time t. The positive cross-regression weights suggested that if an individual experienced extremely high PE yesterday, the high PE tended to delay the individual's NE from returning to its baseline. This may, for instance, cause an individual's NE to keep wandering around more extreme (e.g., extremely low) values for a longer period of time. Compared with individuals with high stability in NE, individuals who showed lower stability in NE (i.e., low b̂22,i estimates) showed a greater tendency in this regard (i.e., higher positive b̂21,i estimates).

To evaluate whether the non-parametric DP prior yielded any practical differences in the estimation results, we replicated the analysis using a multivariate normal distribution as a parametric prior for the distribution of bi. Specifically, we specified the parametric prior as

$$\mathbf{b}_i \sim N_r(\boldsymbol{\mu}_{Z\mathrm{Norm}}, \boldsymbol{\Psi}_{Z\mathrm{Norm}}),$$

with μZNorm ~ Nr([0.8, 0.6, −0.03, 0.26]′, ΨZNorm0), based on the semiparametric empirical results. To allow for variability in the hyperparameter values across the MCMC chains, ΨZNorm0 was specified to be a diagonal matrix. For each of the three independent Markov chains, its first two elements were sampled from a Unif (0.3, 1) distribution and its last two elements from a Unif (0.2, 0.8) distribution. Furthermore, the prior for the kth diagonal element of ΨZNorm was governed by two hyperparameters, d1 and d2. For each of the independent Markov chains, d1 was set to 2 and d2 was sampled randomly from a Unif (0.1, 0.5) distribution for k = 1 and 2 and from a Unif (0.05, 0.3) distribution for k = 3 and 4. As in the non-parametric case, these values were selected based on a prior expectation of the ranges of the parameters in bi in stable systems. The resultant conditional distribution p(b|μZNorm, ΨZNorm, τ, Y*, θ, H, Yobs) was also non-standard. We therefore sampled from it with an MH step similar to the one used for p(Z|L, μZ, ΨZ, τ, Y*, θ, H, Yobs) (see equation (A7) in the Appendix).
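The MH steps referred to here are given in the Appendix and are not reproduced in this section. As a generic illustration only, the sketch below implements a random-walk Metropolis–Hastings update with a symmetric normal proposal for an arbitrary log-density. The proposal scale `step` is the quantity one would tune against the acceptance rate. The standard normal target is a placeholder for the non-standard conditional of bi.

```python
import numpy as np


def rw_metropolis(log_target, x0, step, n_iter, rng):
    """Random-walk Metropolis-Hastings with a symmetric normal proposal.

    Returns the (n_iter, d) array of draws and the acceptance rate.
    """
    x = np.atleast_1d(np.asarray(x0, dtype=float))
    log_p = log_target(x)
    draws = np.empty((n_iter, x.size))
    n_accepted = 0
    for t in range(n_iter):
        proposal = x + step * rng.standard_normal(x.size)
        log_p_new = log_target(proposal)
        # Symmetric proposal: accept with probability min(1, p(proposal) / p(x)).
        if np.log(rng.uniform()) < log_p_new - log_p:
            x, log_p = proposal, log_p_new
            n_accepted += 1
        draws[t] = x
    return draws, n_accepted / n_iter


def log_std_normal(x):
    # Placeholder target (up to a constant); replace with the log conditional density.
    return -0.5 * float(np.sum(x ** 2))


rng = np.random.default_rng(7)
draws, acc_rate = rw_metropolis(log_std_normal, x0=0.0, step=1.0, n_iter=20000, rng=rng)
```

A larger `step` lowers the acceptance rate but moves the chain farther per accepted proposal. Hyperparameter and proposal choices are therefore often adjusted while monitoring acceptance rates.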

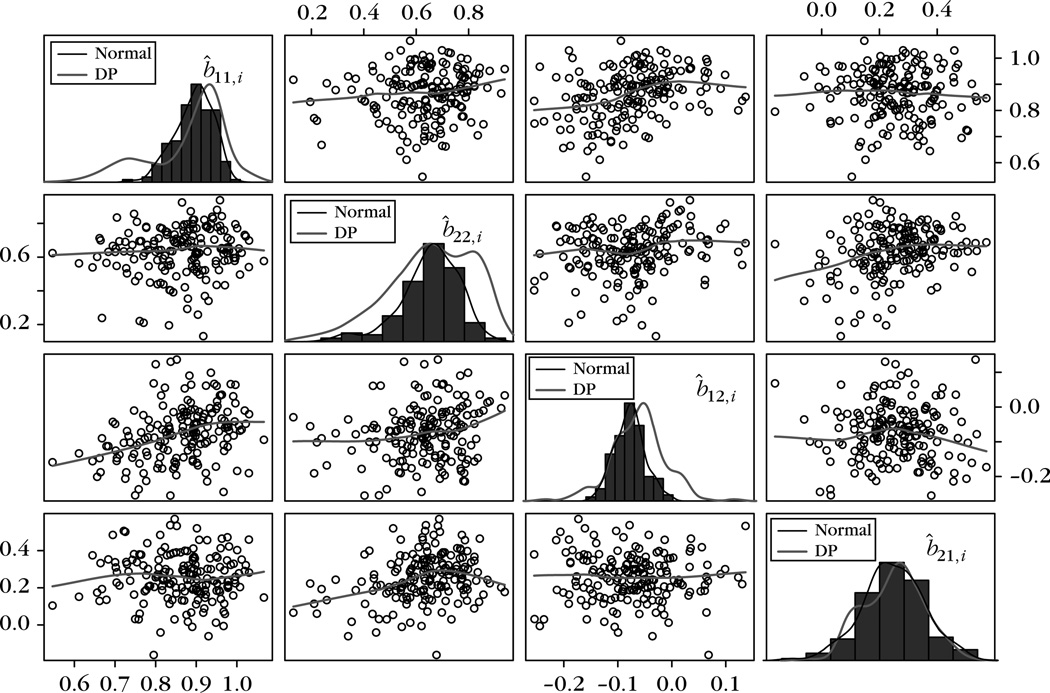

The b̂i estimates obtained from using the multivariate normal prior are plotted in Figure 4, with density plots of the corresponding non-parametric posterior samples overlaid on the histograms in the diagonal panels. It can be seen that, compared with the non-parametric posterior samples, the parametric posterior samples of b̂i did not capture the full ranges of the b̂i estimates – in particular, the heavy-tailed nature of b̂11,i and the platykurtic but asymmetric nature of b̂22,i. Evaluation of the summary statistics of the posterior samples further revealed that whereas the means of the posterior samples were generally close under both prior specifications, the standard deviations of the estimates were about twice as large in the non-parametric case as in the parametric case. This has some parallels to asymptotic results within the linear frequentist framework, where violation of distributional assumptions has been shown to affect the standard error estimates, but not so much the corresponding point estimators (Ljung & Caines, 1979). Imposing the normality assumption also led to misleading conclusions concerning the interrelationships among the b̂i estimates. For instance, whereas b̂12,i was found to show a negative relationship with b̂11,i and a quadratic relationship with b̂22,i with the use of the DP prior (see Figure 3, third panels in rows 1 and 2), no such relationships were observed when the multivariate normal prior was used (see Figure 4, third panels in rows 1 and 2).

Figure 4.

Matrix scatterplots of the person-specific parameter estimates with a multivariate normal prior, as averaged across three Markov chains. A loess line is superimposed on each scatterplot. The diagonal plots are histograms of these estimates. For b11,i, M = 0.86, SD = 0.10, 90% CI [0.69, 1.00]; for b22,i, M = 0.63, SD = 0.14, 90% CI [0.36, 0.82]; for b12,i, M = −0.07, SD = 0.08, 90% CI [−0.19, 0.06]; and for b21,i, M = 0.26, SD = 0.13, 90% CI [0.03, 0.47].

Only minor differences were observed in the person- and time-invariant parameter estimates when the multivariate normal prior was used (see Table 2). All the estimates and their associated statistics were largely similar, with the exception of the parameters in μ, the intercepts of the continuous data array. Most of the elements in μ were greatly reduced in magnitude, and most of their 90% credible intervals included zero. Such differences likely reflected discrepancies in the predicted trends of the individuals. These were presumably due to the greater restriction of range in the b̂i estimates in the parametric case than in the non-parametric case.

Table 2.

Bayesian estimates of time- and person-invariant parameters from fitting the nonlinear DFA model to empirical data using the multivariate normal prior

| Parameters | Mean | SD | 5th percentile | 95th percentile |
|---|---|---|---|---|
| [λ21, λ31, λ41] | [1.08, 1.02, 0.86] | [0.02, 0.02, 0.02] | [1.05, 1.00, 0.83] | [1.11, 1.06, 0.89] |
| [λ62, λ72, λ82] | [1.03, 0.77, 0.69] | [0.03, 0.03, 0.02] | [0.99, 0.73, 0.66] | [1.08, 0.81, 0.72] |
| ψε1 | 0.41 | 0.01 | 0.39 | 0.43 |
| ψε2 | 0.35 | 0.01 | 0.33 | 0.36 |
| ψε3 | 0.40 | 0.01 | 0.38 | 0.42 |
| ψε4 | 0.55 | 0.01 | 0.53 | 0.57 |
| ψε5 | 0.43 | 0.02 | 0.40 | 0.46 |
| ψε6 | 0.29 | 0.01 | 0.27 | 0.31 |
| ψε7 | 0.54 | 0.03 | 0.49 | 0.59 |
| ψε8 | 0.63 | 0.02 | 0.60 | 0.66 |
| μ1 | 0.04 | 0.02 | 0.01 | 0.07 |
| μ2 | 0.01 | 0.02 | −0.03 | 0.05 |
| μ3 | 0.02 | 0.02 | −0.01 | 0.05 |
| μ4 | 0.00 | 0.02 | −0.03 | 0.04 |
| μ5 | 0.00 | 0.02 | −0.03 | 0.03 |
| μ6 | 0.01 | 0.02 | −0.02 | 0.04 |
| μ7 | 0.00 | 0.02 | −0.03 | 0.03 |
| μ8 | 0.00 | 0.02 | −0.02 | 0.03 |
| [τ12, τ13, τ14, τ15] | [−0.21, 0.39, 0.80, 1.39] | [0.01, 0.02, 0.02, 0.02] | [−0.23, 0.36, 0.77, 1.35] | [−0.19, 0.41, 0.83, 1.42] |
| [τ22, τ23, τ24, τ25] | [−1.16, −0.47, 0.12, 0.75] | [0.02, 0.02, 0.02, 0.02] | [−1.19, −0.50, 0.09, 0.72] | [−1.13, −0.44, 0.15, 0.78] |
| [τ32, τ33, τ34, τ35] | [−0.88, −0.31, 0.21, 0.88] | [0.01, 0.02, 0.02, 0.02] | [−0.90, −0.34, 0.18, 0.85] | [−0.86, −0.29, 0.23, 0.91] |
| [τ42, τ43, τ44, τ45] | [−0.60, 0.19, 0.65, 1.21] | [0.01, 0.02, 0.02, 0.02] | [−0.63, 0.17, 0.62, 1.18] | [−0.58, 0.22, 0.69, 1.25] |
| [τ52, τ53, τ54, τ55] | [0.91, 1.74, 2.24, 2.72] | [0.02, 0.04, 0.04, 0.05] | [0.88, 1.68, 2.17, 2.63] | [0.95, 1.80, 2.32, 2.79] |
| [τ62, τ63, τ64, τ65] | [0.69, 1.50, 1.98, 2.48] | [0.02, 0.03, 0.04, 0.05] | [0.65, 1.45, 1.91, 2.39] | [0.72, 1.56, 2.05, 2.55] |
| [τ72, τ73, τ74, τ75] | [0.79, 1.64, 2.13, 2.66] | [0.03, 0.05, 0.06, 0.06] | [0.75, 1.57, 2.04, 2.56] | [0.84, 1.73, 2.23, 2.77] |
| [τ82, τ83, τ84, τ85] | [0.20, 0.98, 1.48, 1.95] | [0.02, 0.02, 0.03, 0.03] | [0.18, 0.94, 1.43, 1.90] | [0.23, 1.02, 1.53, 2.00] |
| ϕ11 | 0.11 | 0.00 | 0.10 | 0.12 |
| ϕ12 | −0.10 | 0.00 | −0.11 | −0.09 |
| ϕ22 | 0.23 | 0.01 | 0.22 | 0.25 |

Note. The values of the lowest thresholds of the ordinal items, τ11, τ21, τ31, τ41, τ51, τ61, τ71, τ81, were set to −0.71, −1.88, −1.44, −1.34, −0.33, −0.26, −0.13, and −0.67, respectively. The values of the highest thresholds of the ordinal items, τ16, τ26, τ36, τ46, τ56, τ66, τ76, τ86, were set to 2.16, 1.71, 1.72, 1.91, 3.09, 2.95, 3.12, and 2.44, respectively.

To summarize, by using the DP as a non-parametric prior for parameters in a nonlinear DFA model, we found that the distributions of the two autoregressive parameters, b11,i and b22,i, did deviate substantially from normality. Using other parametric (e.g., normal) distributions to approximate these skewed distributions did not reveal the full range of individual differences in these parameters and led to misleading conclusions concerning some of the more complex, and often nonlinear, interrelationships among the b̂i estimates.

7. Simulation study

To better understand the performance of the proposed procedures under known population conditions, we generated data using the nonlinear DFA model with different distributions for bi but with approximately the same complete sample size and number of time points as our empirical example, with n = 170 and T = 50. The true parameters in our simulations were chosen to mirror (though not completely identical to) the estimates obtained from our empirical example. A total of 100 Monte Carlo replications was conducted for each of the simulation conditions described below.

Parameters in the measurement model (see equations (9)–(11)) were identical in all our simulation models and they were set to the values of

| (14) |

For identification purposes, each factor’s loading on the first indicator was set to the true value of 1.0 in model fitting. In all conditions, the true process noise covariance matrix was set to

We tested the effectiveness of using the DP prior to approximate three sets of distributional conditions.

Condition 1. Here, we defined the distributions of bi as

| (15) |

where b11,i, b22,i, b12,i, and b21,i are as defined in equation (7). This condition was designed to generate positively skewed distributions for the baseline autoregressive parameters, b11,i and b22,i.

Condition 2. Here, we specified some of the distributions to be bimodal:

| (16) |

Condition 3. Here, we specified the distributions of bi to be

| (17) |

This condition was used to illustrate that even when the normality assumption holds, the DP prior is still general enough to capture characteristics of the multivariate normal distribution as a special case.

The same starting values were used in all conditions. All freed elements in Λ were set to 0.5. Initial Ψε was set to I8 and μ was set to (0.5, 0.5, …, 0.5). Initial values for τk were set to (−3.0, −1.5, −0.5, 0.5, 1.0, 2.0) for k = 1, …, 4 and (−1.0, 0.0, 0.5, 0.8, 1.3, 2.0) for k = 5, …, 8. Starting values for the process noise covariance matrix were set to

In addition, all values in the latent variable vector, ηit, were set to 0 for all persons and time points, and initial values of bi were sampled randomly from Nr(μZ0, Ir).

The same prior distributions and hyperparameters used in the empirical application were adopted in the simulation study, with some minor adaptations. These adaptations were based on characteristics of the distributions of bi in each simulation condition (see equations (15)–(17)) and on the acceptance rates for the MH step that draws posterior samples from p(Z|L, μZ, ΨZ, τ, Y*, θ, H, Yobs).

- In the first condition, we specified ΨμZ to be 1.0 and 15.0 and c2 to be 14.0 and 0.4, respectively, for the first two and last two elements of bi.
- In the second condition, we changed c2 to 4.0 and 0.4, respectively, for the first two and last two elements of bi, while keeping the other hyperparameters the same as in condition 1.
- In condition 3, we changed c2 to 1.0 and 0.3 and ΨμZ to 1.0 and 20.0, respectively, for the first two and last two elements of bi.

In the first two conditions, the tails of the distributions of the bi parameters extended relatively far from the modes of the distributions compared with condition 3. The hyperparameters were therefore modified to yield better approximation in the tail areas. In the empirical application, these hyperparameters were drawn randomly from ranges broad enough to encompass all the hyperparameter values noted here.

We organized our simulation results into three sections, covering factor score (i.e., state) estimation, estimation of all time- and person-invariant parameters, and estimation of all person-specific parameters. A total of 20,000 burn-in iterations was used in each Monte Carlo replication. We used a relatively high number of burn-in iterations because recovering the person-specific distributions of b12,i and b21,i was more difficult. Their impact on the system's dynamics was evident only at high values of the latent variables. It was thus harder to recover the shapes of these parameters' distributions given the moderate sample sizes of n = 170 and T = 50.

7.1. Factor score or state estimation

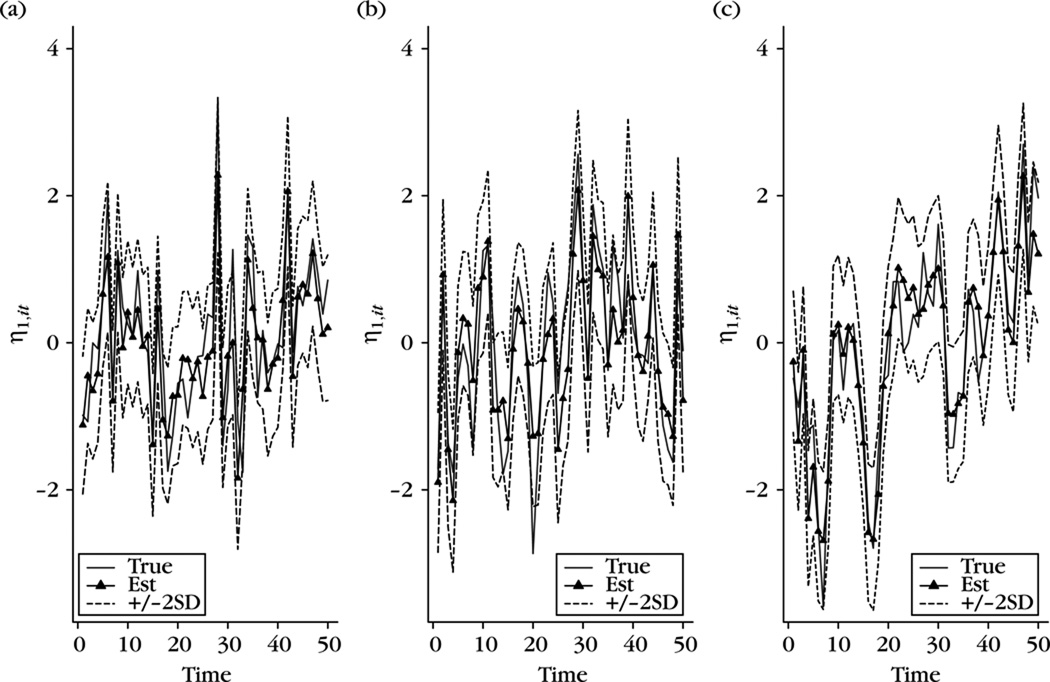

For illustration purposes, the estimated and true values of factor 1 from one randomly selected case during one particular Monte Carlo run are plotted in Figure 5. The estimates plotted in Figure 5 were the means of the posterior samples drawn from the posterior density of the selected individual, p(Hi|τ, Y*, θ, Yobs), for each time point after the burn-in iterations.

Figure 5.

True factor scores, estimated factor scores, and intervals constructed from twice the standard deviation of the posterior samples of the first latent variable for one randomly selected hypothetical subject in (a) condition 1, (b) condition 2, and (c) condition 3.

It can be seen that the proposed algorithm was able to recover the true factor scores accurately across all the conditions. Although factor score estimation is not an issue of primary interest in our simulation or empirical examples, it is an important component in studies where the primary interest is to obtain longitudinal factor score estimates or estimates of time-varying parameters that are represented as latent variables (see Young, Pedregal, & Tych, 1999).

7.2. Time- and person-invariant parameters

All time- and person-invariant parameter estimates are summarized in Tables 3–5 for conditions 1, 2, and 3, respectively. The tables report the following statistics:

- **Bias.** For parameter l, $\text{Bias}_l = \frac{1}{R}\sum_{r=1}^{R}(\bar{\theta}_{l,r} - \theta_l)$, where θl is the true value for parameter l, θ̄l,r is the average of the Gibbs samples of parameter l during the rth Monte Carlo run after burn-in, and R = 100 is the number of Monte Carlo runs.
- **Root mean squared error.** $\text{RMSE}_l = \sqrt{\frac{1}{R}\sum_{r=1}^{R}(\bar{\theta}_{l,r} - \theta_l)^2}$.
- **SD.** The empirical standard deviation of each parameter across the 100 Monte Carlo runs.
- **Est SD.** The standard deviation of the Gibbs samples of each parameter, averaged across Monte Carlo runs.
- **Coverage.** The 90% coverage rate, that is, the percentage of Monte Carlo runs for which the 90% credible interval for parameter l contained the corresponding true value, θl.
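The five columns of Tables 3 and 5 can be computed per parameter as in the sketch below. It assumes that each replication supplies the posterior mean, the posterior SD, and the 5th and 95th percentiles of the Gibbs samples. It also assumes that the empirical SD uses the R − 1 divisor. The toy numbers are hypothetical.

```python
import numpy as np


def monte_carlo_summary(theta_true, post_means, post_sds, lower, upper):
    """Per-parameter summaries over R Monte Carlo replications.

    post_means[r], post_sds[r]: mean and SD of the Gibbs samples in replication r.
    lower[r], upper[r]: 5th and 95th percentiles of those samples.
    """
    post_means = np.asarray(post_means, dtype=float)
    errors = post_means - theta_true
    covered = (np.asarray(lower) <= theta_true) & (theta_true <= np.asarray(upper))
    return {
        "Est SD": float(np.mean(post_sds)),
        "SD": float(post_means.std(ddof=1)),
        "Bias": float(errors.mean()),
        "RMSE": float(np.sqrt(np.mean(errors ** 2))),
        "Coverage (%)": 100.0 * float(covered.mean()),
    }


# Four hypothetical replications for a parameter whose true value is 1.0
stats = monte_carlo_summary(
    theta_true=1.0,
    post_means=[0.9, 1.1, 1.0, 1.2],
    post_sds=[0.1, 0.1, 0.1, 0.1],
    lower=[0.8, 0.95, 0.85, 1.05],
    upper=[1.0, 1.25, 1.15, 1.35],
)
```

Here the bias is 0.05, the RMSE is √0.015 ≈ 0.122, and three of the four intervals contain the true value (75% coverage). Comparing Est SD with SD indicates whether the posterior standard deviations are calibrated against the actual sampling variability.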

Table 3.

Bayesian estimates of the person-invariant parameters with beta-distributed baseline autoregressive parameters (condition 1) in the simulation study

| Parameters | Est SD | SD | Bias | RMSE | Coverage (%) |
|---|---|---|---|---|---|
| [λ21, λ31, λ41] | [0.02, 0.02, 0.02] | [0.02, 0.02, 0.02] | [0.000, −0.006, −0.005] | [0.02, 0.02, 0.02] | [83, 85, 82] |
| [λ62, λ72, λ82] | [0.02, 0.02, 0.02] | [0.02, 0.02, 0.02] | [−0.002, −0.001, 0.000] | [0.02, 0.02, 0.02] | [87, 89, 92] |
| ψε1 | 0.02 | 0.02 | 0.003 | 0.02 | 87 |
| ψε2 | 0.02 | 0.02 | 0.005 | 0.02 | 90 |
| ψε3 | 0.02 | 0.02 | −0.005 | 0.02 | 89 |
| ψε4 | 0.02 | 0.02 | 0.004 | 0.02 | 90 |
| ψε5 | 0.02 | 0.02 | 0.003 | 0.03 | 91 |
| ψε6 | 0.02 | 0.02 | 0.002 | 0.02 | 85 |
| ψε7 | 0.02 | 0.02 | −0.001 | 0.02 | 86 |
| ψε8 | 0.02 | 0.02 | 0.005 | 0.02 | 87 |
| μ1 | 0.03 | 0.03 | 0.000 | 0.03 | 80 |
| μ2 | 0.03 | 0.03 | −0.002 | 0.03 | 88 |
| μ3 | 0.03 | 0.03 | 0.006 | 0.03 | 85 |
| μ4 | 0.03 | 0.03 | 0.004 | 0.03 | 89 |
| μ5 | 0.02 | 0.02 | 0.006 | 0.02 | 90 |
| μ6 | 0.02 | 0.02 | 0.002 | 0.02 | 93 |
| μ7 | 0.02 | 0.02 | 0.002 | 0.02 | 90 |
| μ8 | 0.02 | 0.02 | 0.004 | 0.02 | 89 |
| [τ12, τ13, τ14, τ15] | [0.03, 0.03, 0.02, 0.02] | [0.04, 0.04, 0.03, 0.03] | [−0.003, −0.003, −0.003, −0.001] | [0.04, 0.04, 0.03, 0.03] | [84, 78, 79, 76] |
| [τ22, τ23, τ24, τ25] | [0.04, 0.03, 0.03, 0.02] | [0.05, 0.04, 0.03, 0.03] | [−0.006, −0.007, −0.004, −0.003] | [0.05, 0.04, 0.03, 0.03] | [83, 83, 83, 81] |
| [τ32, τ33, τ34, τ35] | [0.04, 0.03, 0.03, 0.02] | [0.04, 0.03, 0.03, 0.02] | [0.009, 0.009, 0.005, 0.003] | [0.04, 0.03, 0.03, 0.02] | [89, 85, 87, 91] |
| [τ42, τ43, τ44, τ45] | [0.04, 0.03, 0.03, 0.03] | [0.04, 0.04, 0.03, 0.03] | [0.009, 0.004, 0.005, 0.005] | [0.04, 0.04, 0.03, 0.03] | [90, 82, 80, 84] |
| [τ52, τ53, τ54, τ55] | [0.01, 0.02, 0.02, 0.02] | [0.01, 0.02, 0.02, 0.02] | [0.001, 0.001, 0.003, 0.003] | [0.01, 0.02, 0.02, 0.02] | [88, 89, 82, 85] |
| [τ62, τ63, τ64, τ65] | [0.01, 0.02, 0.02, 0.02] | [0.01, 0.02, 0.02, 0.02] | [0.000, 0.000, −0.001, −0.001] | [0.01, 0.02, 0.02, 0.02] | [86, 85, 82, 85] |
| [τ72, τ73, τ74, τ75] | [0.01, 0.02, 0.02, 0.02] | [0.01, 0.02, 0.02, 0.02] | [−0.001, −0.002, −0.001, −0.002] | [0.01, 0.02, 0.02, 0.02] | [89, 84, 88, 86] |
| [τ82, τ83, τ84, τ85] | [0.01, 0.02, 0.02, 0.02] | [0.01, 0.02, 0.02, 0.02] | [−0.001, 0.001, 0.002, 0.002] | [0.01, 0.02, 0.02, 0.02] | [87, 89, 84, 85] |
| ϕ11 | 0.03 | 0.04 | 0.007 | 0.04 | 79 |
| ϕ12 | 0.02 | 0.02 | 0.002 | 0.02 | 89 |
| ϕ22 | 0.03 | 0.04 | 0.001 | 0.04 | 88 |

Table 5.

Bayesian estimates of the person-invariant parameters with normally distributed baseline autoregressive parameters (condition 3) in the simulation study

| Parameters | Est SD | SD | Bias | RMSE | Coverage (%) |
|---|---|---|---|---|---|
| [λ21, λ31, λ41] | [0.02, 0.02, 0.02] | [0.02, 0.02, 0.02] | [−0.001, 0.001, −0.002] | [0.02, 0.02, 0.02] | [86, 90, 80] |
| [λ62, λ72, λ82] | [0.02, 0.02, 0.02] | [0.01, 0.02, 0.01] | [−0.002, 0.000, −0.001] | [0.01, 0.02, 0.01] | [91, 87, 89] |
| ψε1 | 0.02 | 0.02 | −0.003 | 0.02 | 84 |
| ψε2 | 0.02 | 0.02 | 0.000 | 0.02 | 87 |
| ψε3 | 0.02 | 0.02 | 0.002 | 0.02 | 90 |
| ψε4 | 0.02 | 0.02 | 0.000 | 0.02 | 82 |
| ψε5 | 0.02 | 0.02 | 0.009 | 0.03 | 87 |
| ψε6 | 0.02 | 0.02 | 0.002 | 0.02 | 85 |
| ψε7 | 0.02 | 0.02 | 0.001 | 0.02 | 85 |
| ψε8 | 0.02 | 0.02 | 0.001 | 0.02 | 86 |
| μ1 | 0.03 | 0.04 | 0.004 | 0.04 | 84 |
| μ2 | 0.03 | 0.03 | 0.002 | 0.03 | 79 |
| μ3 | 0.03 | 0.03 | 0.001 | 0.03 | 84 |
| μ4 | 0.03 | 0.03 | −0.001 | 0.03 | 84 |
| μ5 | 0.03 | 0.03 | 0.000 | 0.02 | 76 |
| μ6 | 0.02 | 0.02 | −0.001 | 0.02 | 83 |
| μ7 | 0.02 | 0.03 | −0.001 | 0.03 | 82 |
| μ8 | 0.02 | 0.02 | −0.001 | 0.02 | 81 |
| [τ12, τ13, τ14, τ15] | [0.03, 0.03, 0.02, 0.02] | [0.03, 0.03, 0.02, 0.02] | [0.001, 0.002, 0.003, 0.003] | [0.03, 0.03, 0.02, 0.02] | [82, 84, 87, 85] |
| [τ22, τ23, τ24, τ25] | [0.04, 0.03, 0.03, 0.02] | [0.04, 0.04, 0.03, 0.03] | [−0.003, −0.001, 0.001, 0.001] | [0.04, 0.04, 0.03, 0.03] | [84, 79, 83, 86] |
| [τ32, τ33, τ34, τ35] | [0.04, 0.03, 0.03, 0.02] | [0.04, 0.03, 0.03, 0.02] | [−0.003, −0.001, −0.001, −0.003] | [0.04, 0.03, 0.03, 0.02] | [83, 86, 86, 86] |
| [τ42, τ43, τ44, τ45] | [0.04, 0.03, 0.03, 0.02] | [0.04, 0.03, 0.03, 0.03] | [−0.002, −0.001, −0.001, −0.002] | [0.04, 0.03, 0.03, 0.03] | [92, 85, 89, 89] |
| [τ52, τ53, τ54, τ55] | [0.01, 0.02, 0.02, 0.02] | [0.01, 0.02, 0.02, 0.02] | [0.000, 0.001, 0.002, 0.001] | [0.01, 0.02, 0.02, 0.02] | [89, 90, 84, 89] |
| [τ62, τ63, τ64, τ65] | [0.01, 0.02, 0.02, 0.02] | [0.01, 0.02, 0.02, 0.02] | [−0.001, 0.000, −0.001, 0.000] | [0.01, 0.01, 0.02, 0.02] | [94, 92, 85, 89] |
| [τ72, τ73, τ74, τ75] | [0.01, 0.02, 0.02, 0.02] | [0.01, 0.02, 0.02, 0.02] | [0.000, 0.001, 0.000, −0.001] | [0.01, 0.02, 0.02, 0.02] | [89, 92, 94, 89] |
| [τ82, τ83, τ84, τ85] | [0.01, 0.02, 0.02, 0.02] | [0.01, 0.02, 0.02, 0.02] | [−0.001, 0.000, −0.001, −0.002] | [0.01, 0.02, 0.02, 0.02] | [93, 92, 87, 98] |
| ϕ11 | 0.03 | 0.03 | 0.003 | 0.03 | 91 |
| ϕ12 | 0.02 | 0.02 | 0.001 | 0.02 | 93 |
| ϕ22 | 0.03 | 0.03 | 0.006 | 0.03 | 92 |

All the parameters were recovered accurately across all three conditions (see biases and RMSEs in Tables 3–5). The average standard deviations (Est SD) of the Gibbs samples were also close to the empirical standard deviations of the parameters. The coverage rates computed using the 5th and 95th percentiles of the Gibbs samples were on average close to, but slightly below, the 90% nominal rate. Average coverage rates were 85.82%, 88.26%, and 86.86%, respectively, for the three conditions.
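The unweighted mean of all 57 coverage entries in Table 3 reproduces the 85.82% reported for condition 1, treating each element of a bracketed vector as a separate entry. That the averages are unweighted over parameters is our inference from this match, not a detail stated by the authors.

```python
# Coverage (%) entries of Table 3 (condition 1), in table order
coverage_condition1 = [
    83, 85, 82, 87, 89, 92,            # lambda_21 ... lambda_82
    87, 90, 89, 90, 91, 85, 86, 87,    # psi_eps1 ... psi_eps8
    80, 88, 85, 89, 90, 93, 90, 89,    # mu_1 ... mu_8
    84, 78, 79, 76, 83, 83, 83, 81,    # tau_12..tau_15, tau_22..tau_25
    89, 85, 87, 91, 90, 82, 80, 84,    # tau_32..tau_35, tau_42..tau_45
    88, 89, 82, 85, 86, 85, 82, 85,    # tau_52..tau_55, tau_62..tau_65
    89, 84, 88, 86, 87, 89, 84, 85,    # tau_72..tau_75, tau_82..tau_85
    79, 89, 88,                        # phi_11, phi_12, phi_22
]
average_coverage = sum(coverage_condition1) / len(coverage_condition1)
print(f"{len(coverage_condition1)} entries, average coverage = {average_coverage:.2f}%")
# 57 entries, average coverage = 85.82%
```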

Condition 1, which was characterized by positively skewed autoregressive parameter distributions, showed biases and RMSEs comparable to those of the other conditions. However, slightly greater discrepancies arose in the tail percentiles, yielding slightly lower coverage rates for this condition than for the other two. As will be discussed in Section 7.3, this is directly attributable to biases in estimating the tail areas of the person-specific parameter distributions in condition 1. These biases in the person-specific parameters also affected the coverage rates of other person-invariant parameters.

The relatively low average coverage rate in the normal condition was driven largely by the low coverage rates of the parameters in μ, which were notably lower in condition 3 than in conditions 1 and 2. This may be related to the fact that a small number of the bi parameters actually lay near the boundary of, or within, the non-stationary region. By ‘non-stationary region’, we mean ranges of parameters that would propel a system to show trends or, specifically, continual deviations from its baseline levels as defined by μ (as in Figure 5c, for example). Such a system consequently also shows increasing variance over time. Larger biases are typically observed in such cases irrespective of other features of the simulations. For this condition, we used normal distributions with relatively restricted ranges to confine most cases to the stationary region. Even so, some of the parameters in bi still crossed or lay near the boundary of the non-stationary region. These cases led to more extreme μ estimates, which directly lowered the coverage rates for μ in condition 3.
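One rough way to screen simulated bi vectors for the non-stationary region is to treat them as the transition matrix of a linear VAR(1) process and check whether every eigenvalue lies inside the unit circle. This sketch is only an approximation and rests on assumptions of ours:

- It ignores the nonlinear way in which b12,i and b21,i enter the model in equation (7).
- It places b12,i (the effect of NE on PE) in row 1, column 2 of the transition matrix.
- The first parameter set is roughly the condition 3 means in Table 6. The second is a hypothetical high-stability case.

```python
import numpy as np


def transition_matrix(b11, b22, b12, b21):
    # Assumed layout: rows = current factors (PE, NE), columns = lagged factors.
    return np.array([[b11, b12], [b21, b22]])


def spectral_radius(B):
    """Largest eigenvalue modulus of the transition matrix."""
    return float(np.max(np.abs(np.linalg.eigvals(B))))


def is_stationary(B):
    # A linear VAR(1) is stationary when all eigenvalues lie inside the unit circle.
    return spectral_radius(B) < 1.0


typical = transition_matrix(b11=0.60, b22=0.59, b12=-0.15, b21=-0.15)
extreme = transition_matrix(b11=0.90, b22=0.85, b12=-0.20, b21=-0.20)
print(spectral_radius(typical), spectral_radius(extreme))
```

Under this linear approximation, the first matrix has a spectral radius of about 0.75. The second exceeds 1, even though each autoregressive parameter is individually below 1. This illustrates how cross-regressions can push a person's system into the non-stationary region.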

7.3. Estimation of person-specific parameters, bi

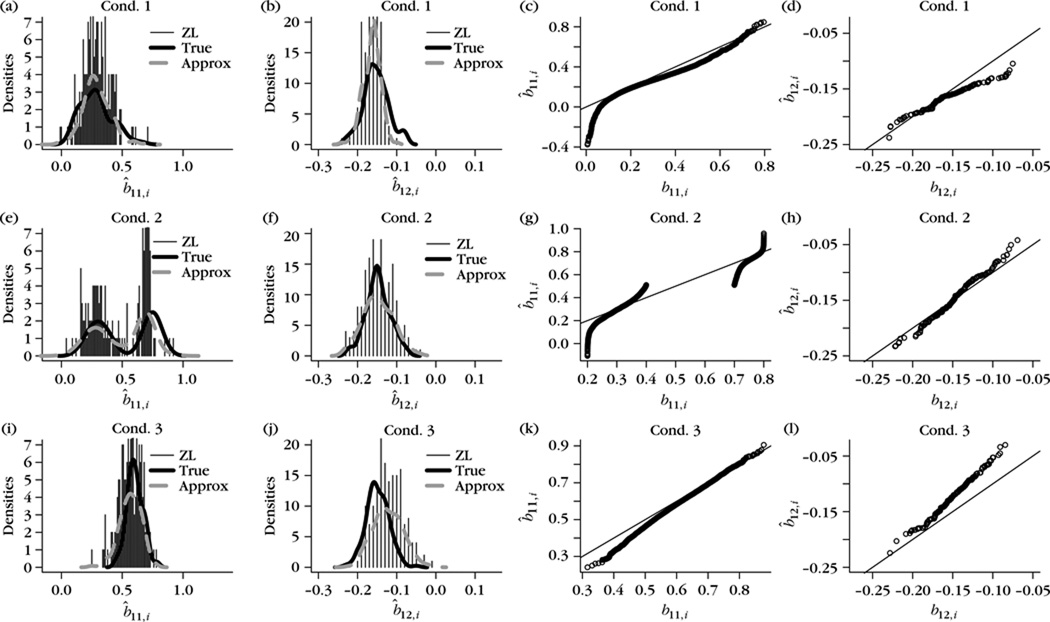

Our main focus of interest was to compare the distribution of true bi (for i = 1, …, n) to the distribution of b̂i obtained from Gibbs sampling. The means and standard deviations of b̂i (derived using equation (13)) computed across persons are summarized in Table 6. Further details are summarized in Figure 6. Included in the figure are plots of b̂11,i and b̂12,i during one particular Monte Carlo run in comparison to the densities of the true person-specific parameters generated using equations (15)–(17) (see panels (a) and (b), respectively), and quantile–quantile plots of the true b11,i and b12,i values against the b̂11,i and b̂12,i estimates pooled across all Monte Carlo runs.
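The quantile–quantile comparisons in Figure 6 pair matching quantiles of the true and estimated values. The minimal sketch below uses `np.quantile` with its default linear interpolation and hypothetical inputs. A Beta(2, 5) sample stands in for the true values. The estimates are shrunk 20% toward their mean to mimic an approximation that is too light in the tails.

```python
import numpy as np


def qq_points(true_values, estimates, n_points=99):
    """Matched quantiles for a Q-Q plot: x = true quantiles, y = estimated quantiles."""
    probs = np.linspace(0.01, 0.99, n_points)
    return np.quantile(true_values, probs), np.quantile(estimates, probs)


rng = np.random.default_rng(6)
b_true = rng.beta(2.0, 5.0, size=170 * 100)    # hypothetical pooled true values
center = b_true.mean()
b_est = center + 0.8 * (b_true - center)        # hypothetical estimates shrunk toward the mean
q_true, q_est = qq_points(b_true, b_est)
# Points above y = x in the lower tail and below it in the upper tail signal
# estimates that are too concentrated relative to the true distribution.
```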

Table 6.

Means and standard deviations of the bi estimates

| Parameters | Condition 1 Mean | Condition 1 Est Mean | Condition 1 SD | Condition 1 Est SD | Condition 2 Mean | Condition 2 Est Mean | Condition 2 SD | Condition 2 Est SD | Condition 3 Mean | Condition 3 Est Mean | Condition 3 SD | Condition 3 Est SD |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| b11,i | 0.27 | 0.24 | 0.13 | 0.12 | 0.53 | 0.49 | 0.23 | 0.23 | 0.60 | 0.60 | 0.07 | 0.07 |
| b22,i | 0.27 | 0.24 | 0.13 | 0.11 | 0.53 | 0.49 | 0.23 | 0.22 | 0.59 | 0.59 | 0.07 | 0.08 |
| b12,i | −0.15 | −0.11 | 0.03 | 0.03 | −0.15 | −0.13 | 0.03 | 0.04 | −0.15 | −0.19 | 0.03 | 0.04 |
| b21,i | −0.15 | −0.11 | 0.03 | 0.03 | −0.15 | −0.12 | 0.03 | 0.04 | −0.15 | −0.17 | 0.03 | 0.04 |

Note. Mean, true empirical mean of the distribution; Est mean, mean of the posterior samples; SD, true empirical standard deviation of the distribution; Est SD, standard deviation of the posterior samples.

Figure 6.

(a), (e), (i) True density of b11,i, the approximation density constituted by b̂i for conditions 1, 2, and 3, respectively. (b), (f), (j) The corresponding plots associated with b12,i. (c), (g), (k) Quantile–quantile plots comparing the true and estimated b11,i pooled across all Monte Carlo runs; the straight line provides the reference for y = x. (d), (h), (l) Quantile–quantile plot comparing the true and estimated b12,i; the straight line provides the reference for y = x.

Generally, the means and standard deviations of the true bi distributions were recovered closely across all conditions (see Table 6). Several additional observations can be made based on Figure 6. First, the conditional distributions derived from using the DP prior were flexible enough to recover the general shapes of the different distributions of bi used in all three conditions. Second, the shapes of the distributions were recovered more accurately in condition 3 than in the other two conditions. This was presumably because the associated true densities (i.e., multivariate normal) were of the same form as the base distribution.

Third, in condition 1, discrepancies in the estimation of b11,i arose primarily in the lower tail region. That is, the lower tail extended too far into the negative region, but the densities in the immediately adjacent regions did not rise rapidly enough to capture some of the more subtle changes in the lower quantiles of the positively skewed true distribution. Fourth, in condition 2, the approximation density generally resembled the bimodal density of the true distribution of b11,i, but slight discrepancies were observed near the tail areas of the two modes. In particular, the upper tails of the two modes were assigned too much weight whereas the lower tails were too sparsely represented.

Finally, greater biases were observed in the estimates of b̂12,i and b̂21,i than in those of b̂11,i and b̂22,i across all conditions (see Table 6 and Figures 6d, 6h, and 6l). This is not surprising, since the nonlinear model posited that the impacts of b12,i and b21,i would only be fully manifested at extremely high values of the latent variables. Such instances were relatively rare in the simulated data, with only 50 time points and n = 170. The distributions constituted by b̂12,i and b̂21,i generally provided reasonable approximations to the shapes of the distributions of b12,i and b21,i. Nevertheless, the means of the distributions were slightly offset (i.e., biased).

In sum, the proposed estimation procedures were able to recover all the components in the nonlinear DFA model accurately under diverse distributional assumptions for the parameters in bi. The sample size considered in the present simulation (n = 170 and T = 50) yielded reasonable estimates, although larger sample sizes might be needed to improve the accuracy of the b̂12,i and b̂21,i estimates. In addition, although all the analyses used one particular nonlinear DFA model, the proposed procedures are general enough to be used with any dynamic model with differentiable linear and nonlinear functions and normally distributed process noises (i.e., in the form of equation (8)). To illustrate the performance of the proposed estimation procedures within a linear modelling framework, we conducted a supplementary simulation study using a variation of the linear DFA model in equation (6). In particular, we allowed the parameters b11, b12, b21, and b22 in equation (6) to vary over persons and used the DP to approximate their distributions (generated in the same way as in conditions 1, 2, and 3 of the present simulation). Results based on the linear model were comparable to those obtained from the nonlinear model. Further details are not reported here due to space constraints, but they are available as supplementary materials on the first author's website at http://www.unc.edu/~symiin/Sy-Miin%27s%20website/pub.htm

8. Discussion

In the present paper, we used the DP as a non-parametric prior distribution for selected parameters in a nonlinear DFA model. Using the DP as a prior is equivalent to specifying the prior distribution as a mixture distribution composed of an unknown number of discrete point masses. This approach thus provides the flexibility of a non-parametric mixture approach without the need to define the precise number of (or the range of possible numbers of) mixture components required to approximate an unknown distribution. In addition, we also incorporated several MH procedures within a Gibbs-sampling framework to handle some of the non-standard conditional distributions implicated in the proposed nonlinear DFA model.

A series of empirical and simulation examples was used to illustrate the flexibility of the proposed approach in approximating distributions of various shapes (e.g., normal, bimodal, and skewed). Our empirical example revealed that the baseline autoregressive parameters in our proposed DFA model did in fact show substantial deviations from normality. A multivariate normal prior failed to reveal the full ranges of the associated parameters and their complex interrelationships. Researchers thus risk overlooking the true nature of the emotional processes being modelled if they impose parametric assumptions without evaluating their tenability.

Our empirical example provided several new insights into Zautra and colleagues’ dynamic affect model. Using one particular nonlinear model, we found that the linkage between PE and NE did indeed intensify on the more emotional days. For most individuals, a unidirectional relation emerged in the direction from PE to NE when their PE was at extremely high values. The lack of coupling in the direction from NE to PE might be attributable to the participants’ generally low NE levels: because few participants reached comparably extreme NE values, very few manifested a similar change in the direction from NE to PE. By allowing the dynamic parameters in the model to assume non-parametric forms, we were able to evaluate such individual differences more thoroughly.

A few comments are in order concerning the selection of hyperparameters for the base weight, α. In all our examples, we used hyperparameter choices (i.e., α1 and α2 in equation (12)) that yielded relatively high values of α, so as to capture clusters or ‘sticks’ that were relatively far away from the means or modes of the distributions. Higher values of α are typically needed to approximate higher-dimensional distributions. In general, given the moderate sample size in the present study, recovering higher-order moments of the distributions of interest can be difficult. We were, however, able to recover the first and second moments reasonably accurately.
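To make the link between α and the number of clusters more concrete, the sketch below computes the standard prior expectation of the number of distinct clusters among n draws from an untruncated DP with concentration α, E[Kn] = Σ_{i=1}^{n} α/(α + i − 1). This is a general property of the DP rather than a result of the present paper; under truncation at G components the count is additionally capped at G, and the α values used here are illustrative only.

```python
def expected_num_clusters(alpha: float, n: int) -> float:
    """Prior expected number of distinct clusters among n draws from DP(alpha, G0).

    Standard (untruncated) DP result: E[K_n] = sum_{i=1}^{n} alpha / (alpha + i - 1).
    """
    if alpha <= 0 or n < 1:
        raise ValueError("alpha must be positive and n must be at least 1")
    return sum(alpha / (alpha + i - 1) for i in range(1, n + 1))


if __name__ == "__main__":
    # n = 170 mirrors the number of individuals in the simulation study.
    for alpha in (0.5, 1.0, 5.0, 20.0):
        print(f"alpha = {alpha:5.1f}: E[K_170] = {expected_num_clusters(alpha, 170):6.2f}")
```

Larger α spreads prior mass over more sticks, which is one way to see why relatively high α values help capture clusters far from the mode.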

The present paper is one of the first applications of semiparametric nonlinear dynamic LVMs to studying change in psychology using the DP prior. Many other extensions are, of course, possible. For instance, we did not pursue the issue of model comparison in the present paper. When different prior densities are assumed across two models, some of the common model fit indices within the Bayesian framework cannot be used directly without modification. In particular, computing the Bayes factor via path sampling (Gelman & Meng, 1998) is not straightforward in this case because of the complexity involved in linking the discrepant prior densities from the different models. Other test statistics, such as those developed by Zhu and Zhang (2004) for assessing finite mixture regression models, could potentially be extended to a dynamic LVM framework to provide a more formal assessment of the need to use infinite-order mixture distributions. Another relatively recent model assessment index, termed the L measure (Chen, Dey, & Ibrahim, 2004; Ibrahim, Chen, & Sinha, 2001), has also been advocated as an alternative goodness-of-fit index that works well in situations where proper prior distributions cannot be explicitly derived.

With regard to state (or latent variable score) estimation, we used only first-order linearization to derive the proposal distribution in the MH step. The potential utility of higher-order linearization schemes or other proposal functions (e.g., Geweke & Tanizaki, 2001) in the state density sampling step could be evaluated in future studies. Hybrid algorithms that combine more computationally efficient particle filtering techniques with MCMC algorithms (Doucet, de Freitas, & Gordon, 2001) could also be developed to increase computational speed. In addition, the person-specific parameters could be reformulated within a mixed effects framework to include both fixed and random effects components. Further investigation of the tenability of the missing-at-random assumption for the present data set is also warranted.

Using the DP as a non-parametric prior is not without its limitations. Perhaps the biggest limitation lies in the discrete nature of the DP, which dictates that different individuals assigned to the same ‘cluster’ (where the number of clusters is less than n) have exactly the same parameter values. One way to circumvent this issue is to use the mixture DP (Caron, Davy, Doucet, Duflos, & Vanheeghe, 2008; Escobar & West, 1995) instead. In this case, the prior distribution essentially consists of a mixture of different DP priors, so that different individuals may be assigned similar but not identical values on the parameters or constructs of interest. A second limitation is that the accuracy of the DP approximation is still constrained by the choice of the base distribution. When the true distribution of interest deviates too much from the base distribution, the accuracy of the approximation deteriorates accordingly.
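The ties implied by the discreteness of the DP can be seen in a small simulation. The Python sketch below draws a scalar person-specific parameter for n = 170 individuals from a DP truncated at G = 50 components via stick-breaking. The N(0, 1) base distribution, α = 1, and G = 50 are illustrative assumptions (the model itself uses an r-variate normal base). Individuals sharing a cluster label receive exactly the same value, so the number of distinct values cannot exceed G.

```python
import random


def truncated_stick_breaking(alpha, G, rng):
    """Weights pi_1, ..., pi_G of a DP truncated at G components."""
    weights, remaining = [], 1.0
    for _ in range(G - 1):
        v = rng.betavariate(1.0, alpha)      # V_g ~ Beta(1, alpha)
        weights.append(remaining * v)
        remaining *= 1.0 - v
    weights.append(remaining)                # V_G = 1, so the weights sum to one
    return weights


def draw_person_parameters(n, alpha, G, rng):
    """Draw a scalar parameter b_i for n persons from a truncated DP with N(0, 1) base."""
    atoms = [rng.gauss(0.0, 1.0) for _ in range(G)]       # Z_1, ..., Z_G from the base
    weights = truncated_stick_breaking(alpha, G, rng)
    labels = rng.choices(range(G), weights=weights, k=n)   # L_i: cluster membership
    return [atoms[l] for l in labels], labels              # b_i = Z_{L_i}


rng = random.Random(2010)
b, labels = draw_person_parameters(n=170, alpha=1.0, G=50, rng=rng)
print("distinct parameter values:", len(set(b)), "among", len(b), "individuals")
```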

The nonlinear DFA model proposed in the present paper is but one example of the many dynamic models that can be used to describe change processes. A wide array of modelling examples along these lines can be found in the literature on dynamic linear models (West & Harrison, 1997), dynamic generalized linear models (Fahrmeir & Tutz, 1994), regime-switching state-space models (Kim & Nelson, 1999), differential equation models (Molenaar & Newell, 2003; Singer, 2007), and other nonlinear and non-Gaussian dynamic models (Chow, Ferrer, & Hsieh, 2009; Durbin & Koopman, 2001). Although formulating dynamic models within a Bayesian framework opens up countless new possibilities for evaluating more complex models, many of the issues inherent to the Bayesian framework also have to be handled with caution. The sensitivity of modelling results to prior choices and to misspecification of other parametric or non-parametric assumptions remains an important issue that deserves more attention from researchers. Parallel to the increase in model complexity are, of course, new challenges for deriving appropriate model fit indices and convergence diagnostics.

To conclude, the DP prior can be used as a flexible non-parametric prior for distributions whose functional forms are unknown. In time series modelling, it is not unusual to encounter parameter distributions that show complex restrictions in range. Very often, the associated distributions are not only non-normal, but also asymmetric. Taking a non-parametric or semiparametric approach allows the assumption of normality to be treated as a testable hypothesis, as opposed to a ‘gold standard’ by which modellers have to abide. We hope to have illustrated the need to relax some of these parametric assumptions in fitting dynamic LVMs.

Table 4.

Bayesian estimates of the person-invariant parameters with bimodal baseline autoregressive parameter distributions (condition 2) in the simulation study

| Parameters | Est SD | SD | Bias | RMSE | Coverage (%) |
|---|---|---|---|---|---|
| [λ21, λ31, λ41] | [0.02, 0.02, 0.02] | [0.01, 0.02, 0.02] | [0.002, 0.001, 0.002] | [0.01, 0.02, 0.02] | [90, 84, 89] |
| [λ62, λ72, λ82] | [0.02, 0.02, 0.02] | [0.02, 0.01, 0.01] | [0.000, 0.000, 0.001] | [0.02, 0.01, 0.01] | [87, 91, 93] |
| ψε1 | 0.02 | 0.03 | −0.001 | 0.02 | 83 |
| ψε2 | 0.02 | 0.02 | 0.003 | 0.02 | 88 |
| ψε3 | 0.02 | 0.02 | −0.000 | 0.02 | 90 |
| ψε4 | 0.02 | 0.02 | 0.004 | 0.02 | 88 |
| ψε5 | 0.02 | 0.02 | −0.000 | 0.03 | 87 |
| ψε6 | 0.02 | 0.02 | 0.000 | 0.02 | 88 |
| ψε7 | 0.02 | 0.02 | −0.001 | 0.02 | 90 |
| ψε8 | 0.02 | 0.02 | 0.001 | 0.02 | 87 |
| μ1 | 0.03 | 0.03 | 0.008 | 0.03 | 85 |
| μ2 | 0.03 | 0.03 | −0.001 | 0.03 | 86 |
| μ3 | 0.03 | 0.03 | 0.005 | 0.03 | 89 |
| μ4 | 0.03 | 0.03 | 0.002 | 0.03 | 83 |
| μ5 | 0.02 | 0.02 | 0.005 | 0.02 | 91 |
| μ6 | 0.02 | 0.02 | 0.005 | 0.02 | 92 |
| μ7 | 0.02 | 0.02 | 0.003 | 0.02 | 91 |
| μ8 | 0.02 | 0.02 | 0.002 | 0.02 | 92 |
| [τ12, τ13, τ14, τ15] | [0.03, 0.03, 0.02, 0.02] | [0.04, 0.03, 0.03, 0.03] | [0.007, 0.004, 0.006, 0.004] | [0.04, 0.03, 0.03, 0.03] | [87, 89, 80, 85] |
| [τ22, τ23, τ24, τ25] | [0.04, 0.03, 0.03, 0.02] | [0.04, 0.03, 0.03, 0.03] | [−0.006, −0.004, −0.007, −0.004] | [0.04, 0.03, 0.03, 0.03] | [85, 89, 84, 83] |
| [τ32, τ33, τ34, τ35] | [0.04, 0.03, 0.02, 0.02] | [0.04, 0.03, 0.03, 0.03] | [0.006, 0.002, −0.002, −0.001] | [0.04, 0.03, 0.03, 0.03] | [88, 82, 85, 84] |
| [τ42, τ43, τ44, τ45] | [0.04, 0.03, 0.02, 0.03] | [0.04, 0.03, 0.02, 0.02] | [−0.003, −0.000, −0.003, −0.001] | [0.04, 0.03, 0.02, 0.02] | [84, 87, 88, 93] |
| [τ52, τ53, τ54, τ55] | [0.01, 0.02, 0.02, 0.02] | [0.01, 0.01, 0.02, 0.01] | [−0.001, 0.001, −0.001, −0.001] | [0.01, 0.01, 0.02, 0.02] | [89, 91, 90, 92] |
| [τ62, τ63, τ64, τ65] | [0.01, 0.02, 0.02, 0.02] | [0.01, 0.02, 0.02, 0.02] | [0.003, 0.002, −0.001, 0.001] | [0.01, 0.02, 0.02, 0.02] | [91, 87, 89, 89] |
| [τ72, τ73, τ74, τ75] | [0.01, 0.02, 0.02, 0.02] | [0.01, 0.01, 0.02, 0.02] | [−0.003, −0.003, −0.004, −0.002] | [0.01, 0.01, 0.02, 0.02] | [87, 93, 92, 92] |
| [τ82, τ83, τ84, τ85] | [0.01, 0.02, 0.02, 0.02] | [0.01, 0.02, 0.02, 0.02] | [−0.002, −0.003, −0.002, −0.002] | [0.01, 0.02, 0.02, 0.02] | [92, 88, 88, 88] |
| ϕ11 | 0.03 | 0.03 | −0.001 | 0.04 | 89 |
| ϕ12 | 0.02 | 0.01 | 0.006 | 0.02 | 93 |
| ϕ22 | 0.03 | 0.03 | −0.002 | 0.03 | 94 |

Acknowledgements

We would like to thank Frank Fujita for allowing us to use his data for the empirical illustration in this paper. The C++ scripts used for simulations in the present article are available upon request from the first author. Funding for this study was provided by grants from NSF (BCS-0826844), NIH (UL1-RR025747-01, MH086633, P01CA142538-01 and AG033387), and NSFC (10961026).

Appendix

Conditional distributions used in the Gibbs sampling procedures

To estimate the proposed nonlinear LVM, we implement a Gibbs sampler that iteratively carries out a sequence of sampling steps [steps (a)–(i)]. The conditional distributions from which the Gibbs samples are obtained are summarized below.

Steps (a)–(e): Conditional distributions related to the non-parametric components

The main idea behind efficient sampling of the non-parametric components is to recast the definition of bi in terms of the latent variable Li, i = 1, …, n, which records the cluster membership of bi such that bi = ZLi. The base distribution in the present context was defined to be an r-variate normal distribution with mean vector μZ and covariance matrix ΨZ. Conjugate prior distributions were specified for μZ, ΨZ, and α as in equation (12). To explore the posterior distribution of the non-parametric components, we sample (π, Z, L, μZ, ΨZ, α) using the blocked Gibbs sampler, which encourages mixing of the Markov chain. That is, Gibbs sampling of the non-parametric components is divided into five subsidiary steps (or blocks), involving sampling from the conditional distributions p(π, Z|L, μZ, ΨZ, α, τ, Y*, θ, H, Yobs), p(L|π, Z, τ, Y*, θ, H, Yobs), p(μZ|Z, ΨZ), p(ΨZ|Z, μZ), and p(α|π). These five conditional distributions are summarized below.

Block 1. Posterior samples of [μZ|Z, ΨZ] can be obtained by sampling from

| (A1) |

where .
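Because the priors of equation (12) are not reproduced in this appendix, the following is only a sketch of a standard conjugate update for Block 1. It assumes, hypothetically, independent normal priors μZj ~ N(μ0j, σ²0j) and a diagonal ΨZ (consistent with Block 2 sampling the diagonal elements of ΨZ). Under these assumptions each element of μZ has a normal full conditional that pools all G atoms in Z.

```python
import random


def mu_z_posterior(Z, psi_diag, mu0, sigma0_sq):
    """Full-conditional mean and variance of each element of mu_Z.

    Z              : list of G atoms, each a list of r floats (occupied or not)
    psi_diag       : diagonal elements of Psi_Z (r floats)
    mu0, sigma0_sq : hypothetical prior means/variances, mu_Zj ~ N(mu0[j], sigma0_sq[j])
    """
    G = len(Z)
    means, variances = [], []
    for j in range(len(psi_diag)):
        precision = 1.0 / sigma0_sq[j] + G / psi_diag[j]  # prior precision + G atoms' precision
        total = sum(Z[g][j] for g in range(G))
        variances.append(1.0 / precision)
        means.append((mu0[j] / sigma0_sq[j] + total / psi_diag[j]) / precision)
    return means, variances


def sample_mu_z(Z, psi_diag, mu0, sigma0_sq, rng):
    """Draw mu_Z element by element from its normal full conditional."""
    means, variances = mu_z_posterior(Z, psi_diag, mu0, sigma0_sq)
    return [rng.gauss(m, v ** 0.5) for m, v in zip(means, variances)]


rng = random.Random(0)
Z_example = [[0.2, -0.1], [0.9, 0.4], [0.5, 0.0]]  # three illustrative atoms, r = 2
print(sample_mu_z(Z_example, [1.0, 1.0], [0.0, 0.0], [10.0, 10.0], rng))
```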

Block 2. For j = 1, …, r, each of the diagonal elements of ΨZ given Z and μZ is distributed as

| (A2) |

where Zgj is the jth element of the values in Z associated with point mass (or cluster) g and μZj is the jth element of μZ.
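Similarly, a sketch of Block 2 is shown below under the hypothetical assumption that each diagonal element ψZj has an inverse-gamma IG(a0, b0) prior (shape a0, rate b0); the actual hyperparameters are those of equation (12). The standard conjugate update is then IG(a0 + G/2, b0 + ½ Σg (Zgj − μZj)²).

```python
import random


def psi_z_posterior_params(Z, mu_z, a0, b0):
    """Inverse-gamma shape and rate for each diagonal element psi_Zj.

    Assumes a hypothetical IG(a0, b0) prior (shape a0, rate b0) on every psi_Zj.
    """
    G = len(Z)
    shapes, rates = [], []
    for j in range(len(mu_z)):
        ss = sum((Z[g][j] - mu_z[j]) ** 2 for g in range(G))  # squared deviations of the atoms
        shapes.append(a0 + G / 2.0)
        rates.append(b0 + ss / 2.0)
    return shapes, rates


def sample_psi_z(Z, mu_z, a0, b0, rng):
    shapes, rates = psi_z_posterior_params(Z, mu_z, a0, b0)
    # If X ~ Gamma(shape, scale = 1 / rate), then 1 / X ~ IG(shape, rate).
    return [1.0 / rng.gammavariate(a, 1.0 / b) for a, b in zip(shapes, rates)]


rng = random.Random(0)
Z_example = [[0.2, -0.1], [0.9, 0.4], [0.5, 0.0]]
print(sample_psi_z(Z_example, [0.5, 0.1], 2.0, 1.0, rng))
```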

Block 3. Following the derivations detailed elsewhere (Ishwaran & James, 2001; Ishwaran & Zarepour, 2000; Lee et al., 2007), the conditional distribution (α|π) can be shown to be

| (A3) |

where V*g (g = 1, …, G − 1) is a random weight drawn from a beta distribution within Block 4.
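As a hedged illustration of Block 3: assuming α has a Gamma(α1, α2) prior in the shape–rate parameterization, the conditional given by Ishwaran and James (2001) takes the form α|π ~ Gamma(α1 + G − 1, α2 − Σ_{g=1}^{G−1} log(1 − V*g)), where V*g denotes the Block 4 stick-breaking weights. Whether equation (12) uses a rate or a scale for α2 is an assumption here. Python's `random.gammavariate` expects a scale, hence the reciprocal of the rate.

```python
import math
import random


def alpha_posterior_params(v_star, a1, a2):
    """Shape and rate of the Gamma full conditional for alpha.

    v_star : stick-breaking weights V*_1, ..., V*_{G-1} from Block 4, each in (0, 1)
    a1, a2 : hypothetical Gamma prior shape and rate for alpha
    """
    G = len(v_star) + 1
    shape = a1 + G - 1
    rate = a2 - sum(math.log1p(-v) for v in v_star)  # log1p(-v) = log(1 - v) < 0, so rate grows
    return shape, rate


def sample_alpha(v_star, a1, a2, rng):
    shape, rate = alpha_posterior_params(v_star, a1, a2)
    return rng.gammavariate(shape, 1.0 / rate)  # gammavariate takes a scale, not a rate


rng = random.Random(0)
print(sample_alpha([0.3, 0.6, 0.2, 0.8], 2.0, 1.0, rng))
```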

Block 4. Because π and Z are conditionally independent given the remaining quantities (L, μZ, ΨZ, α, τ, Y*, θ, H, Yobs), the distribution p(π, Z|L, μZ, ΨZ, α, τ, Y*, θ, H, Yobs) is proportional to p(π|L, α)p(Z|L, μZ, ΨZ, τ, Y*, θ, H, Yobs). Thus, the conditional distribution can be decomposed into two independent components to be derived separately.

Conditional distribution p(π|L, α)

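It can be shown that the conditional distribution (π|L, α) conforms to a generalized Dirichlet distribution,

$$\pi \mid \mathbf{L}, \alpha \sim \mathcal{GD}\left(a_1^*, \ldots, a_{G-1}^*,\; b_1^*, \ldots, b_{G-1}^*\right), \qquad \text{(A4)}$$

where $a_g^* = 1 + d_g$ and $b_g^* = \alpha + \sum_{k=g+1}^{G} d_k$ for g = 1, …, G − 1, and dg is the number of Lis (and thus individuals) whose value equals g. Sampling from the conditional distribution (π|L, α) can be accomplished as follows. First, $V_g^* \sim \text{Beta}(a_g^*, b_g^*)$ is drawn independently for g = 1, …, G − 1, with $V_G^* = 1$. Subsequently, πg is obtained for g = 1, …, G as

$$\pi_1 = V_1^*, \qquad \pi_g = V_g^* \prod_{k=1}^{g-1}\left(1 - V_k^*\right), \quad g = 2, \ldots, G. \qquad \text{(A5)}$$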
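The two-step draw for (π|L, α) can be sketched as follows, assuming the standard Ishwaran and James (2001) form in which V*g ~ Beta(1 + dg, α + Σ_{k=g+1}^{G} dk) and V*G = 1. The function computes the counts dg from the cluster labels, draws each V*g, and builds πg by stick-breaking. Labels are zero-based here (0, …, G − 1), which is a coding convention rather than the paper's notation. The V*g values are also returned because the α update in Block 3 uses them.

```python
import random


def sample_pi_given_labels(labels, G, alpha, rng):
    """Blocked Gibbs draw of pi given zero-based cluster labels L_i in {0, ..., G-1}.

    Returns (pi, v_star), where v_star holds V*_1, ..., V*_{G-1} for the alpha update.
    """
    d = [0] * G
    for l in labels:
        d[l] += 1                      # d_g: number of individuals in cluster g
    tail = sum(d)                      # reduced to sum_{k > g} d_k inside the loop
    pi, v_star, remaining = [], [], 1.0
    for g in range(G - 1):
        tail -= d[g]
        v = rng.betavariate(1.0 + d[g], alpha + tail)
        v_star.append(v)
        pi.append(remaining * v)       # pi_g = V*_g * prod_{k<g} (1 - V*_k)
        remaining *= 1.0 - v
    pi.append(remaining)               # V*_G = 1: last component takes the leftover mass
    return pi, v_star


rng = random.Random(1)
pi, v_star = sample_pi_given_labels([0, 0, 1, 2, 2, 2], G=5, alpha=1.0, rng=rng)
print([round(p, 3) for p in pi])
```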

Conditional distribution p(Z|L, μZ, ΨZ, τ, Y*, θ, H, Yobs)

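Let L*1, …, L*d denote the d unique Li values (i.e., the d distinct occupied ‘clusters’), let ZL = (ZL*1, …, ZL*d), and let Z[L] be the components of Z = (Z1, …, ZG) other than ZL. Then

$$p(\mathbf{Z} \mid \mathbf{L}, \mu_Z, \Psi_Z, \tau, \mathbf{Y}^*, \theta, \mathbf{H}, \mathbf{Y}_{obs}) = p(\mathbf{Z}_{[L]} \mid \mu_Z, \Psi_Z)\; p(\mathbf{Z}_L \mid \mathbf{L}, \mu_Z, \Psi_Z, \tau, \mathbf{Y}^*, \theta, \mathbf{H}, \mathbf{Y}_{obs}),$$

where p(Z[L]|μZ, ΨZ) is simply the r-variate normal distribution, Nr(μZ, ΨZ), and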

It can be shown that the conditional distribution of ZL is non-standard, so it cannot be sampled from directly within the Gibbs sampler; an MH step is used instead. Specifically,

in which is given by
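Because the conditional for ZL is non-standard, each occupied atom can be updated with a Metropolis–Hastings step. The sketch below shows a generic random-walk Metropolis update. The symmetric Gaussian proposal, the step size, and the `log_target` callable (standing in for the unnormalized log density of equation (A6)) are all hypothetical, as the specific proposal used for ZL is not reproduced here.

```python
import math
import random


def rw_metropolis_step(z, log_target, step_sd, rng):
    """One random-walk Metropolis update of a vector z (list of floats).

    log_target: hypothetical callable returning the unnormalized log conditional density.
    Returns (new_z, accepted).
    """
    proposal = [zj + rng.gauss(0.0, step_sd) for zj in z]
    log_ratio = log_target(proposal) - log_target(z)
    # The Gaussian proposal is symmetric, so the Hastings correction cancels.
    if log_ratio >= 0 or rng.random() < math.exp(log_ratio):
        return proposal, True
    return z, False


if __name__ == "__main__":
    rng = random.Random(42)
    log_std_normal = lambda v: -0.5 * sum(x * x for x in v)  # illustrative target only
    z, n_accepted = [0.0, 0.0], 0
    for _ in range(5000):
        z, accepted = rw_metropolis_step(z, log_std_normal, 1.0, rng)
        n_accepted += accepted
    print("acceptance rate:", n_accepted / 5000)
```

In practice the step size would be tuned so that the acceptance rate stays moderate.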

| (A6) |