Abstract

Although human musical performances represent one of the most valuable achievements of mankind, the best musicians perform imperfectly. Musical rhythms are not entirely accurate and thus inevitably deviate from the ideal beat pattern. Nevertheless, computer-generated perfect beat patterns are frequently devalued by listeners due to a perceived lack of human touch. Professional audio editing software therefore offers a humanizing feature which artificially generates rhythmic fluctuations. However, the built-in humanizing units are essentially random number generators producing only simple uncorrelated fluctuations. Here, for the first time, we establish long-range fluctuations as an inevitable natural companion of both simple and complex human rhythmic performances. Moreover, we demonstrate that listeners strongly prefer long-range correlated fluctuations in musical rhythms. Thus, the favorable fluctuation type for humanizing interbeat intervals coincides with the one generically inherent in human musical performances.

Introduction

The preference for a composition is expected to be influenced by many aspects such as cultural background and taste. Nevertheless, universal statistical properties of music have been unveiled. On very long time scales, comparable to the length of compositions, early numerical studies indicated “flicker noise” in musical pitch and loudness fluctuations [1], [2], characterized by a power spectral density of $1/f$ type, where $f$ denotes the frequency. Conversely, these findings led physicists to create so-called stochastic musical compositions. Most listeners judged these compositions to be more pleasing than those obtained using uncorrelated noise or short-term correlated noise [1].

Rhythms play a major role in many physiological systems. Prominent examples include wrist motion [3] and coordination in physiological systems [4]–[8]. An enormous number of examples of $1/f$-noise in many scientific disciplines, such as condensed matter [9], [10], econophysics [11], and neurophysics [12]–[14], has made general concepts explaining the omnipresence of $1/f$-noise conceivable [15]–[19]. However, to mention only a few, studies of heartbeat intervals [20]–[23], gait intervals [7], [24], and human sensorimotor coordination [4]–[6], [8], [25] revealed long-range fluctuations significantly deviating from $1/f^\beta$ noise with $\beta = 1$. Negative deviations from $\beta = 1$ (i.e. $\beta < 1$) involve fluctuations with weaker persistence than flicker noise, which is superpersistent. Thus the scaling exponent $\beta$ is important for characterizing the universality class of a long-range correlated (physiological) system.

Human musical performances are an ancient and yet evolving example of coordination in physiological systems. The neuronal mechanisms of timing in the millisecond range are still largely unknown and the subject of ongoing research [18], [26]–[31]. However, the nature of temporal fluctuations in complex human musical rhythms has not yet been scrutinized. In this article, we study the correlation properties of temporal fluctuations in music on the timescale of rhythms and their influence on the perception of musical performances. We found long-range correlations for both simple and complex rhythmic tasks, and for both laypersons and professional musicians, well outside the $1/f$ regime. Moreover, our study unveils a significant preference of listeners for long-range correlated fluctuations in music.

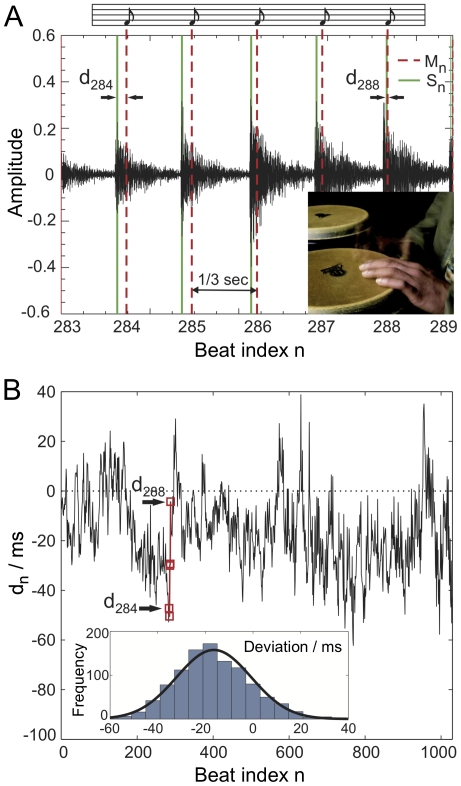

We analyzed the deviations from the exact beats for various combinations of hand, foot, and vocal performances by both amateur and professional musicians. The data include complex drum sequences from different musical bands, obtained from a recording studio. While intentional deviations from an ideal rhythmic pattern play an important role in the interpretation of musical pieces, we focus here on ‘natural’ (i.e. unintended, intrinsic) deviations from a given rhythmic pattern of given complexity. To measure the deviations of human drumming from a rhythmic reference pattern, we took a metronome as a reference and determined the temporal displacement of each recorded beat from the corresponding metronome click. A simple example of a recording is shown in Fig. 1: here, a test person followed the clicks of a metronome (presented over headphones) by beating with one hand on a drum. Fig. 1A shows an excerpt of the audio signal and Fig. 1B the time series of deviations $\{d_n\}$.

Figure 1. Demonstration of the presence of temporal deviations and LRC in a simple drum recording.

A professional drummer (inset) was recorded tapping with one hand on a drum while trying to synchronize with a metronome at […] beats per minute. (A) An excerpt of the recorded audio signal, shown over the beat index $n$ at a sampling rate of […] kHz. The beats detected at times $t_n$ (green lines, see Methods) are compared with the metronome beats (red dashed lines). (B) The deviations $d_n$ fluctuate around a mean of […] ms, i.e. on average the subject slightly anticipates the ensuing metronome clicks. Inset: the probability density function of the time series is well approximated by a Gaussian distribution (standard deviation […] ms). Our main focus, however, is on more complex rhythmic tasks (see Table 1). A detrended fluctuation analysis of $\{d_n\}$ is shown in Fig. 2C (middle curve).

The recordings of beat sequences for several rhythmic tasks performed by humans are compiled in Table 1. In all recordings the subjects were given metronome clicks over headphones, a typical procedure in professional drum recordings. We used the grid of metronome clicks to compute the time series of deviations of beats and off-beats of complex drum sequences (tasks […]). Each drummer played simultaneously with feet (bass drum and hi-hat) and hands. Furthermore, we analyzed the recordings of vocal performances of four musicians (tasks […]), consisting of short rhythmic sounds of the voice following a metronome at […] beats per minute (BPM). Short phonemes (such as [‘dee’]) were chosen to obtain well-separated peaks in the envelope of the audio signal. For comparison with less complex sensorimotor coordination tasks [4], [6], [8], [25], [28], [32], we also included recordings of test subjects tapping with a hand on a drumhead (tasks […]). We recorded tapping at two different tempi for each test subject, […] BPM and […] BPM, omitting so-called ‘glitches’, i.e. deviations larger than […] ms. These glitches occurred in less than […]% of cases and do not substantially affect the long-term behavior we seek to quantify.
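The glitch-removal step can be sketched as follows, assuming the deviations are stored in milliseconds in a NumPy array. The function name `remove_glitches` and the 100 ms threshold used in the example are hypothetical; the threshold actually used in the study is not recoverable from this text.

```python
import numpy as np

def remove_glitches(deviations_ms, threshold_ms):
    """Hypothetical helper: drop 'glitches', i.e. deviations with |d_n| > threshold_ms.

    Returns the cleaned series and the fraction of samples that were removed.
    """
    d = np.asarray(deviations_ms, dtype=float)
    keep = np.abs(d) <= threshold_ms          # True for regular beats
    return d[keep], 1.0 - keep.mean()         # fraction removed = 1 - fraction kept

# Example with an illustrative (assumed) threshold of 100 ms
d = np.array([-12.0, 5.0, -8.0, 250.0, 3.0])
clean, frac = remove_glitches(d, threshold_ms=100.0)
# clean -> [-12., 5., -8., 3.]; frac is 0.2 (up to floating-point rounding)
```

Omitting entries shortens the series by the removed fraction; as stated above, this is acceptable only because glitches are rare.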

Table 1. Set of complex rhythmic tasks exhibiting LRC.

| Rhythmic set | Task no. | Task description | Tempo (BPM) | Exponent (DFA) | Exponent (PSD) |
|---|---|---|---|---|---|
| […] | 1 | complex pattern | 190 | […] | […] |
| | 2 | complex pattern | 132 | […] | […] |
| | 3 | periodic pattern | 124 | […] | […] |
| | 4 | periodic pattern | 124 | […] | […] |
| | 5 | periodic pattern | 124 | […] | […] |
| | 6 | tapping with stick | 124 | […] | […] |
| | 7 | tapping with stick | 124 | […] | […] |
| […] | […] | voice | 124 | […] | […] |
| | […] | voice | 124 | […] | […] |
| | […] | voice | 124 | […] | […] |
| | […] | voice | 124 | […] | […] |
| […] | […] | tapping with hand | 180, 124 | see Fig. 2B | see Fig. 2B |

The exponents in the column “Exponent (DFA)” were obtained using DFA, and those in the column “Exponent (PSD)” were computed via the PSD. In set […], the complexity of the rhythmic drum patterns decreases from task no. […] to […]. In tasks no. […] we analyzed real drum recordings taken from popular music songs; the two drummers are different persons. Tasks no. […] were performed by a third (different) drummer, where no. […] consisted of a short rhythmic pattern (including beats and off-beats) repeated continuously by the drummer. We analyzed the fluctuations of both beats and off-beats (no. […]) of the pattern and, in addition, considered the fluctuations of the beats (task no. […]) and off-beats (no. […]) separately. See the main text for a description of sets […] and […].

We focused on the extraction of possible long-range correlations (LRC) from the recorded signals. A signal is called long-range correlated if its power spectral density (PSD) asymptotically scales as a power law, $S(f) \propto 1/f^{\beta}$, for small frequencies $f$ and $0 < \beta < 2$. The power-law exponent $\beta$ measures the strength of the persistence. The signal is uncorrelated for $\beta = 0$ (white noise), while for $\beta = 2$ the time series typically originates from integrated white noise processes, such as Brownian motion. For $0 < \beta < 1$, the Wiener-Khinchin theorem links the PSD to the autocorrelation function $C(s)$ of the time series $\{d_n\}$, which also decays as a power law, $C(s) \propto s^{-(1-\beta)}$. For the superpersistent case $\beta = 1$, the correlation function does not decrease within the scaling regime, whereas $\beta > 1$ indicates nonstationarity of the time series.
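The definition above can be illustrated with a short sketch, assuming NumPy. `powerlaw_noise` synthesizes Gaussian noise with $S(f) \propto 1/f^\beta$ by Fourier filtering of white noise, and `psd_exponent` estimates $\beta$ from a least-squares fit of the log periodogram against log frequency at low frequencies. Both function names and the cutoff `fmax` are illustrative assumptions; this plain periodogram fit is a simpler stand-in for, not a reproduction of, the zero-padding PSD method [34] used in the study.

```python
import numpy as np

def powerlaw_noise(n, beta, rng):
    """Gaussian noise with PSD S(f) ~ 1/f**beta, via Fourier filtering of white noise."""
    spectrum = np.fft.rfft(rng.standard_normal(n))
    freqs = np.fft.rfftfreq(n)                 # frequencies in cycles per sample
    scale = np.zeros_like(freqs)               # f = 0 gets weight 0 (zero mean)
    scale[1:] = freqs[1:] ** (-beta / 2.0)     # amplitude ~ f^(-beta/2) => power ~ f^(-beta)
    x = np.fft.irfft(spectrum * scale, n)
    return (x - x.mean()) / x.std()            # zero mean, unit variance

def psd_exponent(x, fmax=0.1):
    """Estimate beta as minus the slope of log periodogram vs log f for 0 < f <= fmax."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(len(x))
    mask = (freqs > 0) & (freqs <= fmax)       # restrict the fit to small frequencies
    slope, _ = np.polyfit(np.log(freqs[mask]), np.log(power[mask]), 1)
    return -slope

rng = np.random.default_rng(0)
x = powerlaw_noise(2**14, 1.0, rng)            # synthetic flicker noise
beta_hat = psd_exponent(x)                     # close to 1 for this synthetic series
```

Restricting the fit to small $f$ mirrors the requirement that the power law need only hold asymptotically at low frequencies.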

We applied various methods that measure the strength of LRC in short time series, namely detrended fluctuation analysis (DFA) [21], [33], the zero-padding PSD method [34], and maximum likelihood estimation (MLE) (see Methods for details). DFA involves calculating the fluctuation function $F(n)$, which measures the average fluctuation of time series segments of length $n$ around a local trend. For fractal scaling one finds $F(n) \propto n^{\alpha}$, where $\alpha$ is the so-called Hurst exponent, a frequently used measure to quantify LRC [33]. The DFA exponent $\alpha$ is related to the power spectral exponent $\beta$ via $\beta = 2\alpha - 1$ [35].
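A minimal DFA sketch, assuming NumPy, non-overlapping windows, and linear (first-order) detrending; the exact DFA variant used in the study may differ. `dfa` returns the root-mean-square fluctuation $F(n)$ of the integrated, mean-free series around a local polynomial fit, and `dfa_exponent` fits $\alpha$ on log-log axes and converts it to $\beta = 2\alpha - 1$. Function names and the choice of scales are illustrative.

```python
import numpy as np

def dfa(x, scales, order=1):
    """F(n) for each window length n: RMS residual of the profile around a local polynomial trend."""
    x = np.asarray(x, dtype=float)
    profile = np.cumsum(x - x.mean())              # integrated, mean-free series
    F = []
    for n in scales:
        n_seg = len(profile) // n                  # non-overlapping windows; the tail is dropped
        segments = profile[: n_seg * n].reshape(n_seg, n)
        t = np.arange(n)
        residual_var = []
        for seg in segments:
            trend = np.polyval(np.polyfit(t, seg, order), t)   # local trend of given order
            residual_var.append(np.mean((seg - trend) ** 2))
        F.append(np.sqrt(np.mean(residual_var)))   # F(n)^2 = average residual variance
    return np.array(F)

def dfa_exponent(x, scales):
    """Slope alpha of log F(n) vs log n, and the implied PSD exponent beta = 2*alpha - 1."""
    F = dfa(x, scales)
    alpha, _ = np.polyfit(np.log(scales), np.log(F), 1)
    return alpha, 2 * alpha - 1

rng = np.random.default_rng(1)
scales = np.unique(np.logspace(np.log10(16), np.log10(512), 10).astype(int))
alpha, beta = dfa_exponent(rng.standard_normal(2**13), scales)   # white noise: alpha near 0.5
```

For white noise $\alpha \approx 0.5$ ($\beta \approx 0$), and for its cumulative sum (Brownian motion) $\alpha \approx 1.5$ ($\beta \approx 2$), matching the limiting cases described above.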

Results

We found LRC in musical rhythms (summarized in Table 1) for all tasks and for all subjects who were able to follow the rhythm for a sufficiently long time. Fig. 2C shows typical fluctuation functions $F(n)$ for several time series of deviations $\{d_n\}$. The power-law scaling indicates $1/f^\beta$-noise. The obtained power spectral densities display exponents $\beta$ in a broad range ([…]; Fig. 2A–B). This indicates that a single universal exponent is very unlikely to exist (cf. the confidence intervals for $\beta$). For sets […] and […], we recorded musicians and non-musicians with musical experience ranging from laypersons to professionals. Our findings suggest that it is not possible to assign a correlation exponent ($\alpha$ or $\beta$) to a particular musician, as a single person may perform differently, which is quantified by both power-law exponents $\alpha$ and $\beta$ (see, e.g., tasks no. […] and […] in Fig. 2B, which correspond to beating on a drum at different metronome tempi). A systematic study of a possible dependence of the correlation exponents on the nature of the task would be interesting in itself but is beyond our focus here.

Figure 2. Evidence for long-range correlations in time series of deviations for the rhythmic tasks of Table 1.

The tasks correspond to complex drum sequences ([…]) and rhythmic vocal sounds ([…]) (A) and are compared to hand tapping on the drumhead of a drum ([…]) (B). The time series analysis reveals Gaussian $1/f^\beta$-noise for the entire data set. The exponents $\beta$ obtained by different methods (maximum likelihood estimation including confidence intervals, detrended fluctuation analysis (DFA), and PSD) show overall good agreement. Task indices separated by vertical dashed lines in Fig. 2B are recordings from different test subjects, each recorded at two different metronome tempi: […] BPM (even index) and […] BPM (odd index). (C) DFA of three representative time series, corresponding to drumming a complex pattern in which hands and feet are simultaneously involved (top), and to tasks taken from […] (middle) and […] (bottom curve). The upper two curves are offset vertically for clarity. The curves clearly exhibit power-law scaling $F(n) \propto n^{\alpha}$ and demonstrate LRC in the time series. The total length of the time series is […].

Although a musician does not necessarily intend to optimize synchrony with the metronome, the lowest values of the standard deviation $\sigma$ of the time series were found for experienced musicians ([…] ms; average standard deviation […] ms). We observed a trend that small values of $\sigma$ coincided with small values of $\beta$, and therefore conjecture that, on average, the more closely a person synchronizes with the metronome clicks, the lower the exponent $\beta$. Hence, the degree of correlation in the interbeat time series would shrink with increasing external influence. In finger tapping experiments [8], [25], LRC were found even without the use of a metronome [4], i.e., for weak external influence. On the other hand, in the extreme case where the test subject is triggered completely externally (as in reaction time experiments), LRC were entirely absent [4].

In order to probe nonlinear properties in the time series, we applied the magnitude and sign decomposition method [36], [37]. The analysis showed strong evidence for an absence of nonlinear correlations in the data (see Methods and Fig. S2). Thus the applied methods of analysis designed to characterize linear LRC are appropriate for the time series of deviations that we have studied here.
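The decomposition step of this method can be sketched as follows, assuming NumPy: the increments of a series (for the deviations $d_n$, these are the interbeat intervals minus the click spacing) are split into a magnitude series and a sign series, each made mean-free. The function name is hypothetical, and only the decomposition is shown; in the method of [36], [37] these two series are subsequently analyzed with DFA, with correlations in the magnitude series signaling nonlinearity.

```python
import numpy as np

def magnitude_sign_decomposition(x):
    """Hypothetical helper: split the increments of x into mean-free magnitude and sign series."""
    inc = np.diff(np.asarray(x, dtype=float))   # increments x[i+1] - x[i]
    magnitude = np.abs(inc)                      # size of each step
    sign = np.sign(inc)                          # direction of each step (-1, 0, +1)
    return magnitude - magnitude.mean(), sign - sign.mean()

mag, sgn = magnitude_sign_decomposition([0.0, 1.0, 3.0, 2.0, 2.0])
# increments [1, 2, -1, 0] -> mag [0, 1, 0, -1], sgn [0.75, 0.75, -1.25, -0.25]
```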

Humanizing music

Do listeners prefer music with a more accurate rhythm? To make precise computer-generated music sound more natural, professional audio software applications are equipped with a so-called ‘humanizing’ feature, which is also frequently used in post-processing conventional recordings. A humanized sequence is obtained by the following steps (see Methods for details): first, a musical sequence with beat-like characteristics is decomposed into beats [38], which are then shifted individually according to a random time series of deviations $\{d_n\}$. The decomposed beats are finally merged again using advanced overlap algorithms.
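The timing part of humanizing can be sketched as follows, assuming NumPy. The hypothetical `humanize_onsets` places beats on an exact (fully quantized) grid with spacing `interval_s` and shifts each beat by a deviation series rescaled to zero mean and a target standard deviation `sd_ms`. The deviations may come from any generator (white or $1/f^\beta$ noise); cutting the audio into snippets and merging them with overlap algorithms is not modeled here.

```python
import numpy as np

def humanize_onsets(n_beats, interval_s, deviations, sd_ms):
    """Hypothetical helper: humanized beat times (in s) on an exact grid of spacing interval_s."""
    grid = np.arange(n_beats) * interval_s                   # exact, fully quantized positions
    d = np.asarray(deviations[:n_beats], dtype=float)
    d = (d - d.mean()) / d.std() * sd_ms / 1000.0            # zero mean, target SD, in seconds
    return grid + d                                          # shifted beat positions

rng = np.random.default_rng(2)
onsets_wn = humanize_onsets(1000, 0.25, rng.standard_normal(1000), sd_ms=10.0)  # white-noise version
```

Only the statistics of the deviation series distinguish a white-noise from a long-range correlated humanizing; the amplitude (`sd_ms`) is the same in both cases.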

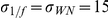

We found that the humanizing tools of widely used professional software applications generally apply white noise fluctuations to musical sequences. Based on the above results, we asked whether musical perception can be influenced by humanizing with different noise characteristics. To this end, a song was created and humanized both with $1/f^\beta$-noise with $\beta = […]$ (‘version $1/f$’) and with white noise (‘version WN’), where the time series of deviations have zero mean and standard deviation […] ms. Vocals remained unchanged, as did all other properties of the versions, such as pitch and loudness fluctuations, which have been shown to affect the auditory impression [1], [2]. Listeners were able to discriminate between the two versions (Fig. 3), and we observed a clear preference for the $1/f$-humanized over the white-noise-humanized version; the Supporting Information contains an audio example comparing the two versions.

Figure 3. Perception analysis showing that $1/f$ humanized music is preferred over white noise humanizing.

The versions $1/f$ and WN (white noise) were compared by […] listeners. Two samples were played in random order to test subjects, all singers from Göttingen choirs, who were asked one of two questions: (1) “Which sample sounds more precise?” (red bar) or (2) “Which sample do you prefer?” (blue bar). The answers to the first question provide clear evidence that listeners were able to perceive a difference between the two versions (t-test, […]). Furthermore, the $1/f$ humanized version was significantly preferred to the WN version (t-test, […]).

Rating of humanized music

Next, we describe our empirical analysis of listeners' preference for $1/f$ and WN (white noise) humanized music, cf. Fig. 3. Two segments of the song under investigation, each about […] seconds long, were used. The two versions, $1/f$ and WN, of a segment were played three times to participants. Thus they listened to a total of six pairs (three pairs for the first segment, followed by three pairs for the second segment). The order of the WN and the $1/f$ version was randomized across the six pairs, with each version being played first in three of the six pairs. For each pair of segments, participants were asked one of two questions: (1) “Which sample sounds more precise?” or (2) “Which sample do you prefer?” Half of the participants first made their preference judgments for all six pairs before they reheard the same six pairs and were asked which of the two versions sounded more precise. The other half of the participants received the same tasks in reversed order. No feedback was provided regarding which of the two versions was more precise.

In total, […] members of Göttingen choirs volunteered for the study; […] male and […] female (average age […] years, […]). Participants were asked to assess their musical expertise on a scale from […] (amateur) to […] (professional); on average, they rated their expertise as […] ([…]). For both tasks (preference and precision), the relative frequency of choosing the $1/f$ version was computed for each participant. The mean relative frequency with respect to preference for the $1/f$ version was […] ([…]). A $t$-test revealed that these choices deviated significantly from chance ([…], […]), indicating that participants clearly preferred the $1/f$ version over the white noise version. In addition, they considered the $1/f$ version to be more precise ([…], […]), choosing it with a relative frequency of […]% ([…]).
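The comparison with chance can be sketched as a one-sample $t$-test of the per-participant relative frequencies against 0.5, using only the Python standard library. The frequencies below are invented for illustration (with six pairs per participant, each value is a multiple of 1/6) and are not the study's data; the function name is hypothetical.

```python
import math
import statistics

def one_sample_t(values, mu0=0.5):
    """t statistic and degrees of freedom for H0: mean(values) == mu0."""
    n = len(values)
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)               # sample SD (n - 1 in the denominator)
    t = (mean - mu0) / (sd / math.sqrt(n))
    return t, n - 1

# Hypothetical relative frequencies of choosing the 1/f version (not the study's data)
freqs = [4/6, 5/6, 3/6, 6/6, 4/6, 5/6]
t, dof = one_sample_t(freqs)                    # t ≈ 3.50, dof = 5
```

The $p$-value then follows from the $t$ distribution with `dof` degrees of freedom, for example via `scipy.stats.ttest_1samp(freqs, 0.5)`.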

Discussion

This study provides strong evidence for LRC in a broad variety of rhythmic tasks involving hands and feet, but also vocal performances. Therefore, these fluctuations are unlikely to be evoked merely by a limb movement. Another observation instead points to mechanisms of rhythmic timing (‘internal clocks’) that involve memory processes: we found that LRC were entirely absent in individuals who frequently lose the rhythm and try to re-enter by following the metronome. The absence of LRC also plays an important role in other physiological systems, such as heartbeat fluctuations during deep sleep [22]. During sleep, LRC in heartbeat intervals, reminiscent of those in the wake phase, were found only in the REM phase, pointing to a different regulatory mechanism of the heartbeat during non-REM sleep.

Here, the loss of LRC may originate from a resetting of memory in the neurophysical mechanisms controlling rhythmic timing (e.g. neuronal ‘population clocks’; see [29], [30], [39] for an overview of neurophysical modeling of timing in the millisecond regime). In the other cases, the existence of strong LRC shows that these clocks have a long persistence even in the presence of a metronome. In cat auditory nerve fibers, LRC were found in neural activity over multiple time scales [12]. Human EEG data [13], as well as interspike-interval sequences of human single-neuron firing activity [14], also showed LRC with power-law scaling behavior. We hypothesize that such processes might be neuronal correlates of the LRC observed here in rhythmic tasks.

In conclusion, we analyzed the statistical nature of temporal fluctuations in complex human musical rhythms. We found LRC in interbeat intervals to be a generic feature; that is, a small rhythmic fluctuation at some point in time influences not only the fluctuations shortly thereafter, but even those tens of seconds later. Listeners significantly preferred music with long-range correlated temporal deviations to uncorrelated humanized music. These results may therefore not only impact applications such as audio editing and post-production, e.g. in the form of a novel humanizing technique [40], but also provide new insights for the neurophysical modeling of timing. We established that the favorable fluctuation type for humanizing interbeat intervals coincides with the one generically inherent in human musical performances. Further work is needed to reveal the reasons for this coincidence.

Methods

Beat detection

Let the recording be given by the audio signal amplitude $A(t)$. We define the occurrence of a sound (or beat) at time $t_n$ in the audio signal by

$$t_n = \underset{t \in \mathcal{I}_n}{\arg\max}\; |A(t)|, \tag{1}$$

where $\mathcal{I}_n$ is the time interval of interest. Given the metronome clicks $\tau_n = nT$, where $T$ is the time interval between the clicks and $n = 1, \dots, N$, and given the sounds at times $t_n$, the deviation $d_n$ is obtained by the difference

$$d_n = t_n - \tau_n. \tag{2}$$

The time series of interbeat intervals $\Delta t_n$ is given by $\Delta t_n = t_{n+1} - t_n = T + d_{n+1} - d_n$. The beat detection according to Eq. 1 is particularly suitable for simple drum recordings due to the compact shape of a drum sound $A(t)$ (see Fig. 1): the envelope of $A(t)$ rises to a maximum value (“attack phase”) and then decays quickly (“decay phase”) [38]. Thus, if the drum sounds are well separated, a unique extremum $t_n$ can be found. Contemporary audio editing software typically detects the onset of a beat instead [38], which is particularly useful when beats overlap. We used onset detection to find the temporal occurrences of sounds for humanizing musical sequences, as explained in the section “Preparation of humanized music”.
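The beat detection of Eq. 1 and the deviations of Eq. 2 can be sketched as follows, assuming NumPy, a sampled signal `signal` at rate `fs`, and known click times $\tau_n$. Taking the interval of interest $\mathcal{I}_n$ as a symmetric window of half-width `half_window` around each click is an assumption of this sketch, as are the function and parameter names.

```python
import numpy as np

def detect_beats(signal, fs, click_times, half_window):
    """Hypothetical helper: beat time t_n = argmax |A(t)| in a window around each click tau_n.

    Returns beat times t_n and deviations d_n = t_n - tau_n, both in seconds.
    """
    a = np.abs(np.asarray(signal, dtype=float))
    beats = []
    for tau in click_times:
        lo = max(int(round((tau - half_window) * fs)), 0)        # window start (samples)
        hi = min(int(round((tau + half_window) * fs)), len(a))   # window end (samples)
        beats.append((lo + np.argmax(a[lo:hi])) / fs)             # sample of maximal |A|
    beats = np.array(beats)
    return beats, beats - np.asarray(click_times)                 # Eq. 2: d_n = t_n - tau_n

# Synthetic check: five spikes placed slightly off a 0.5 s click grid
fs = 1000
clicks = np.arange(1, 6) * 0.5
offsets = np.array([-0.010, 0.020, 0.0, -0.005, 0.015])
signal = np.zeros(3000)
signal[np.round((clicks + offsets) * fs).astype(int)] = [1.0, -0.8, 0.9, -1.0, 0.7]
beats, d = detect_beats(signal, fs, clicks, half_window=0.1)      # d recovers offsets
```

Negative $d_n$ corresponds to a beat played before the click, i.e. anticipation, consistent with the negative mean reported in Fig. 1B.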

We generalized the definition in Eq. 2 to consider deviations of a sequence from a complex rhythmic pattern (instead of from a metronome), as shown in Fig. S1.

Audio example of humanized music

We created audio examples to investigate experimentally whether listeners prefer LRC in music. Two segments of the pop song “Everyday, everynight”, each about […] seconds long, were used. The song was created and humanized for our study in collaboration with Cubeaudio recording studio (Göttingen, Germany). An audio example consists of two different versions of the same segment of the song, played one after the other; an example is provided in the Supporting Information (Audio S1). First sample A, then sample B is played, separated by a […] s pause. The two samples differ only in their rhythmic structure; all other properties, such as pitch and timbre, are identical.

The first part (sample A) of the audio example (Audio S1) in the Supporting Information was humanized by introducing LRC (using Gaussian 1/f noise), while for sample B the conventional humanizing technique using Gaussian white noise was applied. Whereas the samples used in the experiments on music perception also contained vocals, the vocal tracks were excluded from this audio example for clarity.

Preparation of humanized music

Here we provide details on how the music examples were prepared. For our study, a song was recorded and humanized in collaboration with Cubeaudio recording studio using the professional audio editing software ‘Pro Tools’ (Version HD 7.4). The song, of  min length, has a steady beat in eighth notes at  BPM, giving a total of  eighth notes in the whole song. First, the individual instruments were recorded separately, a standard procedure in professional music studios. This yields a number of audio tracks; some instruments, such as the drum kit, are represented by several tracks. Then the beats that are supposed to be located at the eighth notes (but are displaced from their ideal positions) were detected for each individual track using the onset detection [38] implemented in Pro Tools. The tracks were cut at the detected beat onsets, resulting in  audio snippets for each track. Next, the snippets were shifted onto their exact positions, a step commonly called ‘100% quantization’ in audio engineering. This procedure yields an exact version of the song without any temporal deviations. At this stage humanizing comes into play. To humanize the song, we shifted the individual beats according to a time series of deviations  , where  . For example, if  ms, then beat snippet no.  is shifted  ms ahead of its exact position (for all tracks). We generated two time series  : one is Gaussian white noise, used for “version WN”, while the other consists of Gaussian  noise, used to generate “version  ”. The shifted beat snippets were then merged in Pro Tools. The whole procedure was carried out for each audio track (hence, for each instrument). Finally, the set of all audio tracks was written to a single audio file.
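The humanizing step can be sketched in a few lines. This is a minimal sketch under stated assumptions, not the Pro Tools workflow: Gaussian noise with power spectral density proportional to $1/f^{\beta}$ is generated by spectral synthesis (random Gaussian Fourier coefficients scaled by $f^{-\beta/2}$), so that $\beta = 0$ gives white noise and $\beta = 1$ gives 1/f noise. The function names, the standard deviation `sigma_ms`, the 250 ms grid and the sign convention (a negative deviation moves a beat earlier) are hypothetical choices of ours.

```python
import numpy as np

def gaussian_power_law_noise(n, beta, rng):
    """Zero-mean, unit-variance Gaussian series with PSD ~ 1/f**beta (beta=0: white noise)."""
    freqs = np.fft.rfftfreq(n)                  # 0 ... 0.5 cycles per beat
    amp = np.zeros_like(freqs)
    amp[1:] = freqs[1:] ** (-beta / 2.0)        # |X(f)| ~ f^(-beta/2)  =>  S(f) ~ f^(-beta)
    coeffs = amp * (rng.standard_normal(freqs.size) + 1j * rng.standard_normal(freqs.size))
    x = np.fft.irfft(coeffs, n=n)               # DC bin is zero, so the mean is ~0
    x -= x.mean()
    return x / x.std()

def humanize(ideal_times_ms, sigma_ms, beta, seed=None):
    """Shift each quantized beat by one sample of a deviation series (hypothetical helper)."""
    ideal = np.asarray(ideal_times_ms, dtype=float)
    rng = np.random.default_rng(seed)
    d = sigma_ms * gaussian_power_law_noise(ideal.size, beta, rng)
    return ideal + d, d                         # negative d moves a beat earlier

# Hypothetical grid: 4096 eighth notes, 250 ms apart (120 BPM in quarter notes).
grid = np.arange(4096) * 250.0
times_wn, d_wn = humanize(grid, sigma_ms=10.0, beta=0.0, seed=1)    # "version WN"
times_lrc, d_lrc = humanize(grid, sigma_ms=10.0, beta=1.0, seed=1)  # long-range correlated
```

Both versions are normalized to the same standard deviation, so they differ only in how the deviations are correlated in time, which mirrors the comparison made in the listening experiments.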

Probing correlations

A method tailored to probe correlation properties of short time series is detrended fluctuation analysis (DFA), which operates in the time domain [21], [33]. The integrated time series is divided into boxes of equal length $s$. DFA involves detrending the data in each box using a polynomial of degree $q$ (we used $q = 2$ throughout this study). Thereafter the variance $F^2(s)$ of the fluctuations around the trend is calculated. A linear relationship in a double logarithmic plot indicates the presence of power-law (fractal) scaling, $F(s) \propto s^{\alpha}$. We considered scales $s$ in the range  , where $N$ is the length of the time series. Once it is statistically established by means of PSD and DFA that the spectral density $S(f)$ is well approximated by a power law, $S(f) \propto 1/f^{\beta}$, we use Whittle maximum likelihood estimation (MLE) to estimate the exponent $\beta$ and to determine confidence intervals. The MLE is applied to  in the same frequency range as for the zero-padding PSD method [34], namely  , where $f_{\mathrm{Nyq}}$ is the Nyquist frequency.
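A minimal NumPy sketch of the two estimators described above, offered as an illustration rather than the code used in this study. The DFA function follows the listed steps (profile, non-overlapping boxes of length $s$, polynomial detrending of degree $q$, root-mean-square fluctuation $F(s)$, log-log slope $\alpha$). For the Whittle step we swap in the local (semiparametric) Whittle estimator for $S(f) \propto f^{-\beta}$ on a chosen frequency band, with the scale constant profiled out and an approximate 95% half-width of $1.96/\sqrt{m}$ from its asymptotic theory ($m$ is the number of frequencies used). The paper's exact Whittle variant and its scale and frequency ranges are not reproduced here, so `scales`, `fmin` and `fmax` are placeholders.

```python
import numpy as np

def dfa(x, scales, order=2):
    """Root-mean-square fluctuation F(s) from DFA of the given order (order=2: DFA-2)."""
    x = np.asarray(x, dtype=float)
    profile = np.cumsum(x - x.mean())                   # integrated time series
    F = []
    for s in scales:
        t = np.arange(s)
        boxes = profile[: (profile.size // s) * s].reshape(-1, s)  # non-overlapping boxes
        # Fit and subtract a polynomial trend in each box, then average the residual variance.
        var = [np.mean((b - np.polyval(np.polyfit(t, b, order), t)) ** 2) for b in boxes]
        F.append(np.sqrt(np.mean(var)))
    return np.array(F)

def dfa_alpha(x, scales, order=2):
    """Slope of log F(s) versus log s, i.e. the DFA exponent alpha."""
    return np.polyfit(np.log(scales), np.log(dfa(x, scales, order)), 1)[0]

def local_whittle_beta(x, fmin, fmax):
    """Local Whittle estimate of beta in S(f) ~ f**(-beta) on fmin <= f <= fmax (cycles/sample)."""
    x = np.asarray(x, dtype=float)
    n = x.size
    f = np.fft.rfftfreq(n)                              # Nyquist frequency = 0.5
    I = np.abs(np.fft.rfft(x - x.mean())) ** 2 / n      # periodogram
    band = (f > 0) & (f >= fmin) & (f <= fmax)
    f, I = f[band], I[band]
    log_f_mean = np.mean(np.log(f))
    betas = np.linspace(-1.0, 3.0, 4001)                # grid search instead of an optimizer
    # Whittle contrast with the amplitude C of S(f) = C f^(-beta) concentrated out.
    objective = [np.log(np.mean(I * f ** b)) - b * log_f_mean for b in betas]
    beta_hat = betas[int(np.argmin(objective))]
    return beta_hat, 1.96 / np.sqrt(f.size)             # estimate, approx. 95% half-width

rng = np.random.default_rng(0)
white = rng.standard_normal(8192)
scales = np.unique(np.logspace(np.log10(16), np.log10(512), 12).astype(int))
alpha_white = dfa_alpha(white, scales)                  # expected near 0.5
beta_white, half_width = local_whittle_beta(white, 1 / 8192, 0.5)  # expected near 0
```

For a stationary power-law process the two exponents are linked by $\beta = 2\alpha - 1$, which is one way to check that the time-domain (DFA) and frequency-domain (PSD, Whittle) results agree.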

Moreover, we analyzed the data set with the magnitude and sign decomposition method, which reveals possible nonlinear correlations in short time series [36], [37]. First, the increments of the original time series are calculated. Second, the increments are decomposed into their sign and absolute value (referred to as magnitude), and the average of each resulting series is subtracted. Third, both series are integrated. Finally, DFA is applied to the integrated sign and magnitude series, and the scaling of the fluctuation function is measured. Correlations in the magnitude series reflect nonlinear correlations in the original error time series.
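The four steps can be sketched as follows. This is a minimal sketch, not the authors' code: a compact DFA-2 helper is included so that the block is self-contained, and, following the convention of Ashkenazy et al. [36], one is subtracted from the measured slope to compensate for the extra integration in step three (our assumption about how the exponents are reported). Under that convention an uncorrelated magnitude or sign series gives an exponent near 0.5. The function names and the random-walk test input are hypothetical.

```python
import numpy as np

def _dfa_slope(z, scales, order=2):
    """Log-log slope of the DFA fluctuation function F(s) of z."""
    profile = np.cumsum(z - z.mean())
    F = []
    for s in scales:
        t = np.arange(s)
        boxes = profile[: (profile.size // s) * s].reshape(-1, s)
        var = [np.mean((b - np.polyval(np.polyfit(t, b, order), t)) ** 2) for b in boxes]
        F.append(np.sqrt(np.mean(var)))
    return np.polyfit(np.log(scales), np.log(F), 1)[0]

def magnitude_sign_exponents(x, scales, order=2):
    """DFA exponents of the magnitude and sign series of the increments of x."""
    inc = np.diff(np.asarray(x, dtype=float))                 # step 1: increments
    parts = {"magnitude": np.abs(inc), "sign": np.sign(inc)}  # step 2: decomposition
    exponents = {}
    for name, series in parts.items():
        integrated = np.cumsum(series - series.mean())         # steps 2-3: remove mean, integrate
        # Step 4: DFA on the integrated series; subtract 1 for the extra integration (as in [36]).
        exponents[name] = _dfa_slope(integrated, scales, order) - 1.0
    return exponents

# Hypothetical input: a random walk, whose increments are i.i.d. Gaussian,
# so both the magnitude and the sign series are uncorrelated.
rng = np.random.default_rng(2)
walk = np.cumsum(rng.standard_normal(8193))
scales = np.unique(np.logspace(np.log10(16), np.log10(512), 12).astype(int))
result = magnitude_sign_exponents(walk, scales)
```

The integration before DFA is what makes anticorrelated sign series (exponents below 0.5) measurable reliably, which is the reason given in [36] for this extra step.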

Fig. S2 shows four typical fluctuation functions (DFA) for the corresponding magnitude interbeat time series. The slopes of the curves are close to  (bold black line). More precisely,  (  out of  ) of the data set's magnitude exponents lie in the interval  ; three values are slightly above  (each data set has fewer than  data points). Hence, the magnitude and sign decomposition method indicates a lack of nonlinear correlations in the original interbeat time series. Fig. S2 also displays the fluctuation functions of the sign decompositions. For small scales the curves have slopes below  , whereas for large scales they have slopes around  . This behavior is representative of the whole data set. As expected, the sign decomposition thus shows the small-scale anticorrelations typically found in gait intervals [7], [24], together with rather uncorrelated behavior on larger scales.

Ethics statement

The study was reviewed and approved by the Ethics Committee of the Psychology Department of the University of Göttingen. The Committee did not require that informed consent was given for the surveys: return of the anonymous questionnaire was accepted as implied consent.

Supporting Information

Figure S1. Generalization of Eq. 2 to deviations of a complex rhythm from a complex pattern (instead of from a metronome), illustrated with a simple example. Beats at times  (red vertical lines) and an ideal beat pattern with beats at times  (black vertical lines) are compared. The resulting deviations read  . For illustration, time is given in units of the length of a quarter note  , leading to the rhythmic pattern shown in the upper right corner.

(TIFF)

Figure S2. Probing nonlinear correlations in interbeat time series (the number in the legend denotes the task index; cf. Table I of the article). Shown are the root-mean-square fluctuation functions $F(s)$ from second-order DFA for (A) the integrated magnitude series and (B) the corresponding integrated sign series. The grey line has slope  , indicating anticorrelations. The black lines have slope  , indicating no correlations.

(TIFF)

Audio S1. Audio example: pop song “Everyday, everynight”. First the 1/f-humanized version, then the white-noise-humanized version is played.

(MP3)

Acknowledgments

We thank Cubeaudio recording studio (Göttingen, Germany) for providing drumming data and creating the humanized musical pieces. We also thank C. Paulus for helping with the experimental setups. We wish to thank the Göttingen choirs ‘University Choir’ and ‘Unicante’ and the musicians E. Hüneke and A. Attiogbe-Redlich and all other professional and amateur musicians who participated. For valuable comments we thank F. Wolf, M. Timme, and H.W. Gutch.

Footnotes

Competing Interests: The authors have declared that no competing interests exist.

Funding: The authors acknowledge financial support by the BMBF, Germany, through the Bernstein Center for Computational Neuroscience, grant no. 01GQ0430 (AW and TG). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Voss R, Clarke J. ‘1/f noise’ in music and speech. Nature. 1975;258:317–318.

- 2. Jennings HD, Ivanov PC, Martins AM, Silva PC, Viswanathan GM. Variance fluctuations in nonstationary time series: a comparative study of music genres. Physica A. 2004;336:585–594.

- 3. Ivanov PC, Hu K, Hilton MF, Shea SA, Stanley HE. Endogenous circadian rhythm in human motor activity uncoupled from circadian influences on cardiac dynamics. Proc Natl Acad Sci USA. 2007;104:20702–20707. doi: 10.1073/pnas.0709957104.

- 4. Gilden DL, Thornton T, Mallon MW. 1/f noise in human cognition. Science. 1995;267:1837–1839. doi: 10.1126/science.7892611.

- 5. Cabrera JL, Milton JG. On-off intermittency in a human balancing task. Phys Rev Lett. 2002;89:158702. doi: 10.1103/PhysRevLett.89.158702.

- 6. Collins JJ, De Luca CJ. Random walking during quiet standing. Phys Rev Lett. 1994;73:764–767. doi: 10.1103/PhysRevLett.73.764.

- 7. Hausdorff JM, Peng CK, Ladin Z, Wei JY, Goldberger AL. Is walking a random walk? Evidence for long-range correlations in stride interval of human gait. J Appl Physiol. 1995;78:349–358. doi: 10.1152/jappl.1995.78.1.349.

- 8. Chen Y, Ding M, Kelso JAS. Long memory processes (1/f^α type) in human coordination. Phys Rev Lett. 1997;79:4501–4504.

- 9. Geisel T, Nierwetberg J, Zacherl A. Accelerated diffusion in Josephson junctions and related chaotic systems. Phys Rev Lett. 1985;54:616–619. doi: 10.1103/PhysRevLett.54.616.

- 10. Kogan S. Electronic noise and fluctuations in solids. Cambridge University Press; 2008.

- 11. Mantegna R, Stanley HE. An introduction to econophysics. Cambridge University Press; 2000.

- 12. Lowen SB, Teich MC. The periodogram and Allan variance reveal fractal exponents greater than unity in auditory-nerve spike trains. J Acoust Soc Am. 1996;99:3585–3591. doi: 10.1121/1.414979.

- 13. Linkenkaer-Hansen K, Nikouline VV, Palva JM, Ilmoniemi RJ. Long-range temporal correlations and scaling behavior in human brain oscillations. J Neurosci. 2001;21:1370–1377. doi: 10.1523/JNEUROSCI.21-04-01370.2001.

- 14. Bhattacharya J, Edwards J, Mamelak AN, Schuman EM. Long-range temporal correlations in the spontaneous spiking of neurons in the hippocampal-amygdala complex of humans. Neuroscience. 2005;131:547–555. doi: 10.1016/j.neuroscience.2004.11.013.

- 15. Procaccia I, Schuster H. Functional renormalization-group theory of universal 1/f noise in dynamical systems. Phys Rev A. 1983;28:1210–1212.

- 16. Geisel T, Zacherl A, Radons G. Generic 1/f noise in chaotic Hamiltonian dynamics. Phys Rev Lett. 1987;59:2503–2506. doi: 10.1103/PhysRevLett.59.2503.

- 17. Shlesinger MF. Fractal time and 1/f noise in complex systems. Ann NY Acad Sci. 1987;504:214–228. doi: 10.1111/j.1749-6632.1987.tb48734.x.

- 18. Ivanov PC, Amaral LAN, Goldberger AL, Stanley HE. Stochastic feedback and the regulation of biological rhythms. Europhys Lett. 1998;43:363. doi: 10.1209/epl/i1998-00366-3.

- 19. Jensen HJ. Self-organized criticality. Cambridge University Press; 1998.

- 20. Kobayashi M, Musha T. 1/f fluctuation of heartbeat period. IEEE Trans Biomed Eng. 1982;29:456–457. doi: 10.1109/TBME.1982.324972.

- 21. Peng CK, Havlin S, Stanley HE, Goldberger AL. Quantification of scaling exponents and crossover phenomena in nonstationary heartbeat time series. Chaos. 1995;5:82–87. doi: 10.1063/1.166141.

- 22. Bunde A, Havlin S, Kantelhardt J, Penzel T, Peter J, et al. Correlated and uncorrelated regions in heart-rate fluctuations during sleep. Phys Rev Lett. 2000;85:3736–3739. doi: 10.1103/PhysRevLett.85.3736.

- 23. Schmitt DT, Ivanov PC. Fractal scale-invariant and nonlinear properties of cardiac dynamics remain stable with advanced age: a new mechanistic picture of cardiac control in healthy elderly. Am J Physiol Regul Integr Comp Physiol. 2007;293:R1923. doi: 10.1152/ajpregu.00372.2007.

- 24. Ashkenazy Y, Hausdorff JM, Ivanov PC, Stanley HE. A stochastic model of human gait dynamics. Physica A. 2002;316:662–670.

- 25. Roberts S, Eykholt R, Thaut M. Analysis of correlations and search for evidence of deterministic chaos in rhythmic motor control. Phys Rev E. 2000;62:2597–2607. doi: 10.1103/PhysRevE.62.2597.

- 26. Tass P, Rosenblum MG, Weule J, Kurths J, Pikovsky A, et al. Detection of n:m phase locking from noisy data: application to magnetoencephalography. Phys Rev Lett. 1998;81:3291–3294.

- 27. Golubitsky M, Stewart I, Buono PL, Collins JJ. Symmetry in locomotor central pattern generators and animal gaits. Nature. 1999;401:693–695. doi: 10.1038/44416.

- 28. Néda Z, Ravasz E, Brechet Y, Vicsek T, Barabási AL. The sound of many hands clapping. Nature. 2000;403:849–850. doi: 10.1038/35002660.

- 29. Buonomano DV, Karmarkar UR. How do we tell time? The Neuroscientist. 2002;8:42–51. doi: 10.1177/107385840200800109.

- 30. Buhusi CV, Meck WH. What makes us tick? Functional and neural mechanisms of interval timing. Nature Rev Neurosci. 2005;6:755–765. doi: 10.1038/nrn1764.

- 31. Memmesheimer RM, Timme M. Synchrony and precise timing in complex neural networks. In: Handbook on biological networks. Singapore: World Scientific; 2009.

- 32. Buchanan JJ, Kelso JAS, DeGuzman GC, Ding M. The spontaneous recruitment and suppression of degrees of freedom in rhythmic hand movements. Human Movement Science. 1997;16:1–32.

- 33. Kantelhardt J, Koscielny-Bunde E, Rego H, Havlin S, Bunde A. Detecting long-range correlations with detrended fluctuation analysis. Physica A. 2001;295:441–454.

- 34. Smith JO. Mathematics of the discrete Fourier transform. BookSurge LLC; 2007.

- 35. Beran J. Statistics for long-memory processes. Chapman & Hall/CRC; 1994.

- 36. Ashkenazy Y, Ivanov P, Havlin S, Peng C, Goldberger AL, et al. Magnitude and sign correlations in heartbeat fluctuations. Phys Rev Lett. 2001;86:1900–1903. doi: 10.1103/PhysRevLett.86.1900.

- 37. Ivanov PC, Ma Q, Bartsch RP, Hausdorff JM, Amaral LAN, et al. Levels of complexity in scale-invariant neural signals. Phys Rev E. 2009;79:041920. doi: 10.1103/PhysRevE.79.041920.

- 38. Bello JP, Daudet L, Abdallah S, Duxbury C, Davies M, et al. A tutorial on onset detection in music signals. IEEE Trans Speech Audio Process. 2005;13:1035–1047.

- 39. Rammsayer T, Altenmüller E. Temporal information processing in musicians and nonmusicians. Music Perception. 2006;24:37–48.

- 40. Hennig H, Fleischmann R, Theis FJ, Geisel T. Method and device for humanizing musical sequences. US Patent No. 7,777,123; 2010.
