Abstract

Certain features of objects or events can be represented by more than a single sensory system, such as the roughness of a surface (sight, sound, and touch), the location of a speaker (audition and sight), and the rhythm or duration of an event (all three major sensory systems). These properties can therefore be said to be sensory-independent, or amodal. A key question is whether common multisensory cortical regions process these amodal features, or whether each sensory system contains its own specialized region(s) for processing them. We tackled this issue by investigating simple duration-detection mechanisms across audition and touch; these systems were chosen because fine duration discriminations are possible in both. The mismatch negativity (MMN) component of the human event-related potential provides a sensitive metric of duration processing and has been elicited independently in both auditory and somatosensory investigations. Employing high-density electroencephalographic recordings in conjunction with intracranial subdural recordings, we asked whether fine duration discriminations, as indexed by the MMN, were generated in the same cortical regions regardless of the sensory modality being probed. Scalp recordings revealed statistically distinct MMN topographies across the senses, implying differing underlying cortical generator configurations. Intracranial recordings confirmed these noninvasive findings, showing generators of the auditory MMN along the superior temporal gyrus with no evidence of a somatosensory MMN in this region, whereas a robust somatosensory MMN was recorded from the postcentral gyrus in the absence of an auditory MMN. The current data clearly argue against a common-circuitry account of amodal duration processing.

Introduction

The separate sensory epithelia are specialized for very different energy sources: light has no impact on the hair cells along the basilar membrane, and it is nonsensical, outside of poetry, to speak of tickling the photoreceptors of the retina. There are, however, properties of objects that can be explored and represented by more than a single sensory system. We can readily perceive the frequency of a vibration through both hearing and touch, the speed of a moving bus through vision and audition, and the duration of an event through all three of these primary senses. In this way, these latter properties can be said to be amodal, or sensory-independent, qualia of a given object or event. This notion of amodal properties raises an obvious question about the neural processes responsible for their analysis. If these features are sensory-independent, are they analyzed by a network of multisensory regions, such that all sensory systems feed into and rely upon amodal multisensory systems for the analysis of common properties? This is an attractive thesis in that it offers a potentially economical solution. The alternative, whereby each sensory system has its own dedicated circuitry for analyzing such properties, seems to require unnecessary duplication of function. However, it is also possible that duration is an elemental feature that is best extracted at the level of sensory registration. A complication with this simple dichotomy, of course, is that not all senses are equally suited to analyzing a given amodal feature. Audition is extremely sensitive to temporal properties, with far higher temporal resolution than vision. Vision, in turn, localizes objects in space far more precisely than audition, and touch is better suited to texture discrimination than vision.
Thus, if a given sensory system has considerably superior tuning for a given property, one could imagine a scenario whereby more local cortical real estate would be sequestered for a largely unisensory analysis of that property. In this scenario, the sensory system with lower sensitivity might be expected to rely on its own unisensory circuitry, perhaps with ancillary input from the regions responsible for analysis within the superior sensory system.

The current study was designed to assess this notion of common circuitry, with an eye to adjudicating between the possibilities outlined above. We chose to begin our investigations with a study of duration processing across the auditory and somatosensory systems for two main reasons. First, our previous work on multisensory integration has shown intimate links between these two sensory systems, which share common neural circuitry during very early sensory processing (Foxe et al., 2000, 2002, 2005; Schroeder and Foxe, 2002, 2005; Kayser et al., 2005; Murray et al., 2005). Second, duration is an amodal property to which both sensory systems are reasonably sensitive, so neither system is so much more sensitive than the other as to render the comparison blatantly imbalanced. Our dependent measure was the mismatch negativity (MMN), an event-related potential (ERP) component of the auditory and somatosensory systems (Näätänen, 1992; Kekoni et al., 1997) that has been shown to index automatic change detection for duration (Näätänen, 1992; Akatsuka et al., 2005). Here, using both high-density electrical mapping of scalp-recorded potentials and intracranial electrocorticographic recordings, we asked whether the MMN to auditory and somatosensory duration change is generated in the same cortical regions or whether each sensory system contains its own specialized duration-processing mechanism.

Materials and Methods

Participants.

Eight participants (five male) aged 22 to 33 years (mean 26.3) completed the experiment for a modest fee of $12 per hour. All participants were right-handed and reported normal hearing and no known neurological deficits. All participants gave written informed consent, and all procedures were approved by the ethical review board of The City College of New York. Ethical guidelines were in accordance with the Declaration of Helsinki.

Apparatus.

Tones were presented to the right ear via headphones (Sennheiser HD600). Somatosensory stimulation was presented via a low-cost linear amplifier (Piezo Systems) to the index finger of the right hand. All experiments were performed in a darkened, acoustically and electrically shielded room.

Mismatch task.

The MMN component is a robust metric for studying preattentive processing of stimulus properties in both the auditory and somatosensory systems. In the electroencephalogram (EEG), the mismatch evoked potential is elicited automatically by deviations in an otherwise repetitive stream of stimuli (Näätänen, 1992; Näätänen et al., 2007). The auditory mismatch (aMMN) has been studied extensively for several auditory stimulus properties, such as duration (Näätänen, 1992; De Sanctis et al., 2009), frequency (Sams et al., 1985), and complex stimuli (Saint-Amour et al., 2007). The amplitude of the aMMN reflects the participant's ability to discriminate between standard and deviant stimuli (Amenedo and Escera, 2000; De Sanctis et al., 2009). The somatosensory mismatch (sMMN), by comparison, has not been nearly as well characterized. It has, however, similarly been elicited for many different stimulus properties, such as duration (Akatsuka et al., 2005; Spackman et al., 2007), frequency (Kekoni et al., 1997), and location (Shinozaki et al., 1998). As with the aMMN, the sMMN correlates with the ability to discriminate between standard and deviant stimuli (Spackman et al., 2007). The MMN is elicited by the infrequent occurrence of a deviant stimulus within the context of a frequently occurring standard stimulus, and it is usually measured by subtracting the response to the standard from the response to the deviant. For the auditory MMN, this yields a relative negativity ∼100 ms after the onset of deviance (which, for a duration deviant, is delayed with respect to stimulus onset) that is focused over frontocentral scalp (Näätänen, 1992). For the somatosensory MMN, the timing and scalp distribution have been shown to be quite similar, but the initial negative deflection is followed by a relative positivity (Spackman et al., 2007).
Here, participants viewed a silent movie with subtitles during stimulus delivery and were told to ignore the auditory and somatosensory stimuli. In the aMMN condition, the standard stimuli (p = 0.75) were 200 Hz tones of 50 ms duration, and the deviant stimuli (p = 0.25) were 200 Hz tones of 100 ms duration. In the sMMN condition, the standard stimuli were 200 Hz vibrotactile stimuli of 50 ms duration, and the deviant stimuli were 200 Hz vibrotactile stimuli of 100 ms duration. All stimuli were sinusoids multiplied by a trapezoidal envelope, giving a 5 ms rise at onset and a 5 ms fall at offset. A separate behavioral testing session in seven additional subjects, who did not take part in the main MMN experiment, indicated no difference in the ability to detect the auditory and somatosensory duration deviants (t(6) = −0.667, p = 0.52), with a mean detection rate of 93.5% in both conditions.
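As an illustration of the stimulus construction described above, the sketch below builds a 200 Hz sinusoid of a given duration and multiplies it, sample by sample, by a trapezoidal envelope with 5 ms linear ramps. The 44.1 kHz sample rate and the function names are assumptions for illustration only; the text does not specify the playback rate or the software used to generate the stimuli.

```python
import math

SAMPLE_RATE_HZ = 44100  # assumed output rate; not stated in the text
CARRIER_HZ = 200.0      # 200 Hz carrier for both tones and vibrotactile stimuli
RAMP_MS = 5.0           # 5 ms linear rise and 5 ms linear fall


def trapezoid_envelope(n_samples, ramp_samples):
    """Linear rise from 0, flat plateau at 1, linear fall back to 0."""
    env = []
    for i in range(n_samples):
        rise = i / ramp_samples                      # 0 at the first sample
        fall = (n_samples - 1 - i) / ramp_samples    # 0 at the last sample
        env.append(min(1.0, rise, fall))
    return env


def windowed_sinusoid(duration_ms, freq_hz=CARRIER_HZ, ramp_ms=RAMP_MS,
                      fs=SAMPLE_RATE_HZ):
    """Sinusoid multiplied pointwise by a trapezoidal envelope."""
    n = int(round(duration_ms * fs / 1000.0))
    ramp = int(round(ramp_ms * fs / 1000.0))
    env = trapezoid_envelope(n, ramp)
    # Amplitude windowing is a pointwise product, not a convolution.
    return [e * math.sin(2.0 * math.pi * freq_hz * i / fs)
            for i, e in enumerate(env)]


standard = windowed_sinusoid(50.0)   # 50 ms standard
deviant = windowed_sinusoid(100.0)   # 100 ms deviant
```

Because the envelope is applied multiplicatively, the deviant differs from the standard only in the length of its plateau; both share identical onsets and ramps.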

There were two blocked conditions, aMMN and sMMN, and the order of the blocks was counterbalanced across participants. Each block comprised 1600 stimuli, 400 of which were deviants. Standard and deviant stimuli were delivered in pseudorandom order such that deviants were never presented consecutively. The interstimulus interval was fixed at 1000 ms.
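One simple way to satisfy the constraint that deviants never occur consecutively is to place each deviant in a distinct slot immediately following a standard. The sketch below does this for one block of 1600 stimuli with 400 deviants. The function name, the use of Python's `random` module, and the choice to always begin the block with a standard are illustrative assumptions, not a description of the presentation software actually used.

```python
import random


def mmn_sequence(n_total=1600, n_deviants=400, seed=None):
    """Return a list of 'S'/'D' labels in which no two deviants are adjacent.

    Each deviant occupies a distinct slot right after a standard, so every
    deviant is preceded by at least one standard. As a side effect the block
    always starts with a standard (an assumption, not stated in the text).
    """
    n_standards = n_total - n_deviants
    if n_deviants > n_standards:
        raise ValueError("too many deviants to avoid consecutive presentations")
    rng = random.Random(seed)
    # Slot k (0-based) is the position immediately after standard k.
    slots = set(rng.sample(range(n_standards), n_deviants))
    seq = []
    for k in range(n_standards):
        seq.append("S")
        if k in slots:
            seq.append("D")
    return seq
```

With 1200 standards and 400 deviants this reproduces the 0.75/0.25 standard/deviant ratio while guaranteeing that every deviant is preceded by a standard.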

EEG recording and analysis.

Brain activity was recorded using a 168-channel EEG system (BioSemi). The data were recorded at 512 Hz, bandpass filtered from 2 to 45 Hz (24 dB/octave), and analyzed offline in Matlab (MathWorks). Epochs of 600 ms, including a 100 ms prestimulus baseline, were extracted from the continuous data. We applied an automatic artifact rejection criterion of ±75 μV across all electrodes in the array. Trials with more than six artifact channels were rejected; in the remaining trials, any bad channels were interpolated using the nearest neighbor spline (Perrin et al., 1987, 1989). The data were then re-referenced to the average of all channels (Michel et al., 2004; Nunez and Srinivasan, 2006). On average, ∼300 deviant and ∼900 standard trials were accepted per condition. As is conventional, the mismatch waveforms were obtained by subtracting the standard evoked potential from the deviant evoked potential.
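The rejection, re-referencing, and subtraction steps can be sketched as follows for epochs stored as channel × sample lists of microvolt values. This is a simplified illustration, not the Matlab pipeline used here: the spline interpolation of bad channels is omitted, so in this sketch any remaining bad channels enter the average reference uninterpolated. All function names are hypothetical.

```python
REJECT_UV = 75.0       # ±75 µV artifact criterion
MAX_BAD_CHANNELS = 6   # trials with more than six bad channels are rejected


def bad_channels(epoch, limit=REJECT_UV):
    """Indices of channels (rows) exceeding ±limit at any sample."""
    return [c for c, ch in enumerate(epoch) if max(abs(v) for v in ch) > limit]


def keep_epoch(epoch):
    """True if the epoch has at most MAX_BAD_CHANNELS artifact channels."""
    return len(bad_channels(epoch)) <= MAX_BAD_CHANNELS


def average_reference(epoch):
    """Subtract the across-channel mean at every sample."""
    n_ch = len(epoch)
    means = [sum(col) / n_ch for col in zip(*epoch)]
    return [[v - m for v, m in zip(ch, means)] for ch in epoch]


def mean_epoch(epochs):
    """Average a list of channel x sample epochs, channel by channel."""
    n = len(epochs)
    return [[sum(vals) / n for vals in zip(*chans)] for chans in zip(*epochs)]


def mismatch_wave(deviant_epochs, standard_epochs):
    """Deviant minus standard evoked potential (interpolation omitted)."""
    dev = mean_epoch([average_reference(e) for e in deviant_epochs if keep_epoch(e)])
    std = mean_epoch([average_reference(e) for e in standard_epochs if keep_epoch(e)])
    return [[d - s for d, s in zip(dc, sc)] for dc, sc in zip(dev, std)]
```

Note the direction of the subtraction: deviant minus standard, so a larger negativity to the deviant appears as a negative-going mismatch response.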

Mismatch responses.

To test for the presence of the MMN, mean amplitudes were measured in 40 ms windows centered on the group-mean peak latency of (1) the largest negative component between 100 and 200 ms for both the aMMN and the sMMN, and (2) the subsequent largest negative component of the aMMN and largest positive component of the sMMN between 200 and 300 ms. On the basis of the literature, the electrode cluster surrounding the midline frontal site (Fz) was identified as the region of interest for both the somatosensory and auditory MMN. Because we recorded with a high-density montage, "Fz" hereafter denotes the average of the five nearest electrode sites, which gives a better signal-to-noise ratio and a more representative waveform of the frontocentral region than any single electrode site.
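The peak-centered window measurement can be sketched as below, assuming 512 Hz sampling and epochs beginning 100 ms before stimulus onset, as in the recording parameters above. The peak latency is located on the group-mean waveform, and the same 40 ms window is then applied to each participant's waveform. The function names are hypothetical.

```python
FS_HZ = 512.0        # sampling rate of the scalp recordings
PRESTIM_MS = 100.0   # prestimulus baseline included in each epoch


def sample_times(n_samples, fs=FS_HZ, prestim_ms=PRESTIM_MS):
    """Latency (ms, relative to stimulus onset) of each sample in an epoch."""
    return [-prestim_ms + 1000.0 * k / fs for k in range(n_samples)]


def roi_average(channels):
    """Average several channels (e.g., the cluster around Fz) sample by sample."""
    return [sum(vals) / len(vals) for vals in zip(*channels)]


def peak_latency(wave, times, lo_ms, hi_ms, polarity=-1):
    """Latency of the most negative (polarity=-1) or most positive (+1) sample in [lo, hi]."""
    idx = [k for k, t in enumerate(times) if lo_ms <= t <= hi_ms]
    best = max(idx, key=lambda k: polarity * wave[k])
    return times[best]


def window_mean(wave, times, center_ms, width_ms=40.0):
    """Mean amplitude within a width_ms window centered on center_ms."""
    half = width_ms / 2.0
    vals = [v for v, t in zip(wave, times) if center_ms - half <= t <= center_ms + half]
    return sum(vals) / len(vals)
```

For example, the early window would be centered on `peak_latency(group_mean_wave, t, 100, 200, polarity=-1)`, and the later sMMN window on the positive peak between 200 and 300 ms (`polarity=+1`); `window_mean` is then evaluated on each participant's cluster average.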

Topographical analysis.

In line with our primary question, the key analysis determined whether common circuitry underlies both the aMMN and the sMMN. To this end, we used the topographical ANOVA (TANOVA), as implemented in the Cartool software, to test statistically for topographical differences between the sMMN and aMMN using global dissimilarity and nonparametric randomization testing (Lehmann and Skrandies, 1980). Global dissimilarity indexes configuration differences between two scalp distributions independently of their strength, because each map is normalized by its global field power. For each subject and time point, global dissimilarity yields a single value that varies between 0 (identical topographies) and 2 (inverted topographies). To create an empirical probability distribution against which the global dissimilarity could be tested for statistical significance, a Monte Carlo MANOVA was applied, as described previously (Manly, 1991). To control for type I errors, a period was considered statistically significant only if an alpha criterion of 0.05 or less was met for at least 11 consecutive sample points (∼21 ms) (Guthrie and Buchwald, 1991; Foxe and Simpson, 2002).
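The quantities involved can be sketched in plain Python. The sketch below computes global field power and global dissimilarity for two maps, a paired randomization test at a single time point (condition labels are swapped within randomly chosen subjects), and the 11-consecutive-sample criterion. It is an illustrative approximation of the TANOVA logic, not the Cartool implementation; the permutation count, the (count + 1)/(n + 1) p-value estimator, and all function names are assumptions.

```python
import math
import random


def gfp(v):
    """Global field power: spatial standard deviation across electrodes."""
    m = sum(v) / len(v)
    return math.sqrt(sum((x - m) ** 2 for x in v) / len(v))


def dissimilarity(u, v):
    """Global dissimilarity of two GFP-normalized maps (0 = identical, 2 = inverted)."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    gu, gv = gfp(u), gfp(v)
    return math.sqrt(sum(((a - mu) / gu - (b - mv) / gv) ** 2
                         for a, b in zip(u, v)) / len(u))


def grand_mean(maps):
    """Electrode-wise mean across subjects."""
    return [sum(vals) / len(maps) for vals in zip(*maps)]


def tanova_p(maps_a, maps_b, n_perm=1000, seed=0):
    """Paired randomization test of grand-mean dissimilarity at one time point.

    maps_a[s] and maps_b[s] are subject s's maps in the two conditions.
    """
    rng = random.Random(seed)
    observed = dissimilarity(grand_mean(maps_a), grand_mean(maps_b))
    count = 0
    for _ in range(n_perm):
        pa, pb = [], []
        for a, b in zip(maps_a, maps_b):
            if rng.random() < 0.5:   # swap condition labels for this subject
                a, b = b, a
            pa.append(a)
            pb.append(b)
        if dissimilarity(grand_mean(pa), grand_mean(pb)) >= observed:
            count += 1
    return (count + 1) / (n_perm + 1)


def significant_runs(p_values, alpha=0.05, min_len=11):
    """(start, end) sample indices of runs with p <= alpha lasting >= min_len samples."""
    runs, start = [], None
    for k, p in enumerate(list(p_values) + [1.0]):   # sentinel closes a final run
        if p <= alpha and start is None:
            start = k
        elif p > alpha and start is not None:
            if k - start >= min_len:
                runs.append((start, k - 1))
            start = None
    return runs
```

At 512 Hz, `min_len=11` corresponds to the ∼21 ms duration criterion. Because both maps are divided by their own GFP, a difference in overall amplitude alone leaves the dissimilarity at zero; only a change in the shape of the topography raises it.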

Dipole source analysis.

To estimate the location of the intracranial generators of the scalp-recorded grand mean MMNs, we performed source modeling using brain electric source analysis (BESA 5.1.8; MEGIS Software) (Scherg and Von Cramon, 1985). Results of this procedure are reported in the supplemental materials (available at www.jneurosci.org).

Intracranial data.

An additional dataset was collected from a patient (female, age 48 years) who was undergoing invasive intracranial mapping for intractable epilepsy following an earlier right anterior temporal lobe resection. Intracranial EEG recordings were obtained using a multiarray grid of 48 linear contacts (6 rows × 8 columns, 10 mm intercontact spacing), which was placed over the auditory cortex and surrounding regions of the right hemisphere, and from a single linear strip of six contacts (1 row × 6 columns, 10 mm intercontact spacing), which was placed over somatosensory cortex of the right hemisphere.

The precise anatomical location of each electrode contact was determined by coregistering the postoperative CT scan with the preoperative and postoperative anatomical magnetic resonance imaging (MRI) scans and then normalizing into Montreal Neurological Institute space (using SPM8, developed by the Wellcome Department of Imaging Neuroscience). The preoperative MRI was used for its accurate anatomical information, the postoperative CT scan provided undistorted placement of the electrode contacts, and the postoperative MRI, which contains both electrode and anatomical information, allowed the quality of the entire coregistration process to be assessed. In supplemental Figure 3 (available at www.jneurosci.org as supplemental material), the grid is depicted on a template brain to illustrate activity and relative electrode locations.

Subdural electrodes are highly sensitive to local field potentials generated within an area of ∼4.0 mm² and much less sensitive to distant activity (Allison et al., 1999; Lachaux et al., 2005; Molholm et al., 2006; Fiebelkorn et al., 2010), which allows for improved localization of underlying current sources relative to scalp-recorded EEG. Because the electrodes were located over the right hemisphere, tones were presented to the left ear and somatosensory stimulation to the left middle finger. Brain activity was recorded using a BrainAmp system (Brain Products). Recordings were digitized online at 1000 Hz and bandpass filtered offline from 2 to 45 Hz (24 dB/octave). Epochs of 600 ms, including a 100 ms prestimulus baseline, were extracted from the continuous data, and an artifact rejection criterion of ±350 μV was applied offline. Statistical analyses of the intracranial data were identical to those for the scalp data, except that they were based on the variance of the current source density across single trials rather than the variance of the evoked potential across participants.

Current source density.

Current source density (CSD) profiles were calculated using either a five-point formula (Equation 1, below), if the electrode was on the grid, or a three-point formula (Equation 2, below), if the electrode was on the strip, to estimate the second spatial derivative of the voltage. CSD profiles provide an index of the location, direction, and density of transmembrane current flow, the first-order neuronal response to synaptic input (Nicholson and Freeman, 1975):
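For reference, the standard finite-difference forms of these two estimates are shown below, assuming uniform intercontact spacing h (10 mm for both the grid and the strip); on the single-row strip only the column index j varies. The conductivity factor σ and the sign convention relating the second derivative to CSD follow the common form of Nicholson and Freeman (1975) and are stated here as assumptions; the exact scaling used for the figures may differ.

```latex
\begin{aligned}
\nabla^{2}V\big|_{i,j} &\approx \frac{V_{i-1,j} + V_{i+1,j} + V_{i,j-1} + V_{i,j+1} - 4V_{i,j}}{h^{2}} && (1)\\
\frac{\partial^{2}V}{\partial x^{2}}\bigg|_{j} &\approx \frac{V_{j-1} + V_{j+1} - 2V_{j}}{h^{2}} && (2)\\
\mathrm{CSD} &= -\sigma\,\nabla^{2}V
\end{aligned}
```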

where V_{i,j} denotes the recorded field potential at the i-th row and j-th column of the electrode grid. CSD profiles were not calculated for electrode contacts on the border of the grid or at the ends of the strip because they lack the necessary neighbors, and no such electrodes were used in our analysis.
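Applied independently at each time sample, the grid and strip estimates can be sketched as follows. Border contacts, which lack a full set of neighbors, are returned as `None` and excluded, as described above. The spacing parameter `h` and the omission of the −σ conductivity factor are assumptions of this sketch; with `h` in millimeters and potentials in μV, the output is in μV/mm².

```python
def csd_grid(v, h=1.0):
    """Five-point second spatial derivative for interior contacts of a grid.

    v is a rows x cols list of potentials at one time sample; border
    contacts have no full set of neighbors and are returned as None.
    """
    rows, cols = len(v), len(v[0])
    out = [[None] * cols for _ in range(rows)]
    for i in range(1, rows - 1):
        for j in range(1, cols - 1):
            out[i][j] = (v[i - 1][j] + v[i + 1][j] + v[i][j - 1] + v[i][j + 1]
                         - 4.0 * v[i][j]) / h ** 2
    return out


def csd_strip(v, h=1.0):
    """Three-point second spatial derivative for interior contacts of a strip."""
    out = [None] * len(v)
    for j in range(1, len(v) - 1):
        out[j] = (v[j - 1] + v[j + 1] - 2.0 * v[j]) / h ** 2
    return out
```

On a 6 × 8 grid this leaves a 4 × 6 interior, and on a six-contact strip the four inner contacts; multiplying the result by −σ would give CSD in the sign convention of the equations above.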

Results

Scalp recordings

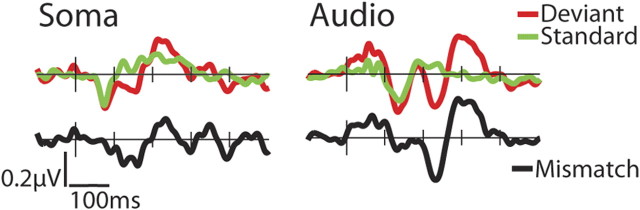

Inspection of the grand mean ERP difference waveforms (deviant minus standard) showed clear mismatch responses over Fz for both the auditory and somatosensory conditions (Fig. 1). For both sensory systems, the mismatch response had two separable phases. The auditory mismatch had a pair of successive negative peaks, centered at ∼155 and ∼235 ms. The somatosensory mismatch had an early negative component at ∼147 ms, followed by a positive component that peaked at ∼235 ms.

Figure 1.

Grand mean ERPs for the somatosensory (Soma) and auditory (Audio) conditions at the frontocentral site, Fz, for the deviant (100 ms; red line), the standard (50 ms; green line), and the subtraction waveform (mismatch; black line). The times indicate the midpoint latencies of the 40 ms windows over which the mean amplitudes of the MMNs were measured.

To verify statistically the presence of the components in the two phases, each participant's standard and deviant waveforms were averaged over ∼40 ms centered at each group-mean component peak and submitted to a paired t test. The auditory standard and deviant differed significantly for both components (121–161 ms: t(7) = −3.161, p < 0.05; 217–256 ms: t(7) = −4.0515, p < 0.005), as did the somatosensory standard and deviant (128–168 ms: t(7) = −2.522, p < 0.05; 214–254 ms: t(7) = 8.945, p < 0.005).
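The paired comparison for each window can be sketched as below, where each list holds one window-mean amplitude per participant (eight here, hence df = 7). This is an illustrative implementation of the standard paired t statistic, not the authors' code; `scipy.stats.ttest_rel` would return the same statistic together with a p value.

```python
import math


def paired_t(a, b):
    """Paired-samples t statistic and degrees of freedom for matched lists a, b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two matched lists with at least two entries")
    d = [x - y for x, y in zip(a, b)]                        # per-participant differences
    n = len(d)
    mean = sum(d) / n
    sd = math.sqrt(sum((x - mean) ** 2 for x in d) / (n - 1))  # sample SD of differences
    return mean / (sd / math.sqrt(n)), n - 1
```

Called as `paired_t(deviant_means, standard_means)`, a negative t indicates that the deviant response is more negative than the standard within the window, as for the mismatch negativities above.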

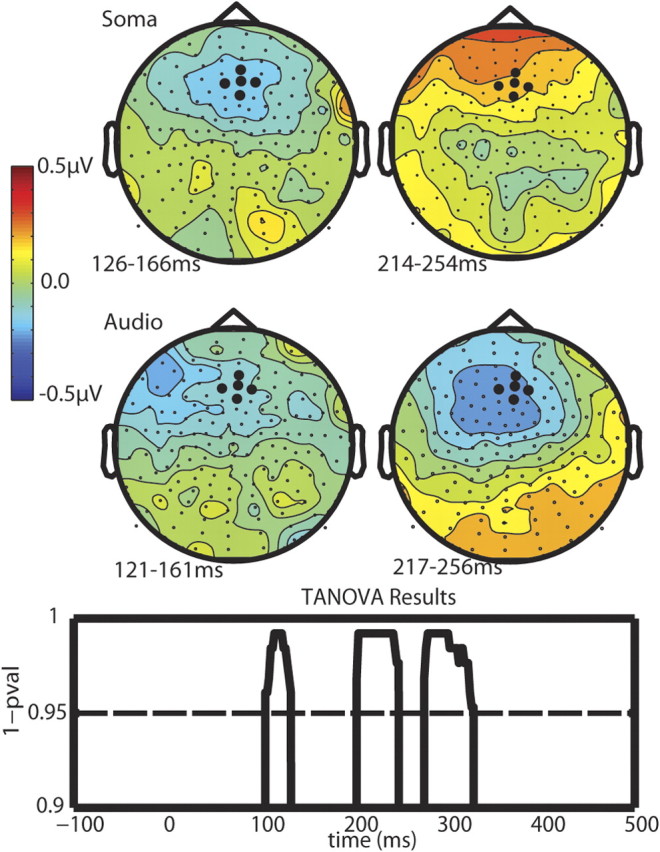

The scalp topographic maps representing the potential distributions of the grand mean somatosensory and auditory mismatches for both phases are shown in Figure 2. The first auditory mismatch component has a frontal, left-lateralized negative distribution, whereas the first somatosensory component has a frontocentral negative distribution. The second components of the somatosensory and auditory mismatches have very different topographic distributions: the auditory map has a slightly left-lateralized frontocentral negative distribution, whereas the somatosensory map has a frontal positive distribution.

Figure 2.

Topographical plots of the grand average mismatch waves for each modality (row) and each phase (column). The MMN is plotted at peak latency for each modality at the two different phases. The TANOVA tested for topographical differences at each time point between the sMMN and the aMMN.

To verify the differences between the topographies of the somatosensory and auditory mismatches, a TANOVA was conducted (Fig. 2). There were three clear periods of statistical difference between the topographies of the auditory and somatosensory mismatch responses. The first two aligned with the latencies of the two components identified over the frontal scalp (Fz) for both sensory modalities, at ∼105–135 ms and ∼200–250 ms. The third period, at ∼275–325 ms, coincided with a third, positive peak of the aMMN centered at ∼300 ms. Because an amodal processor need not be engaged at precisely the same latency by auditory and somatosensory stimuli, we also compared the topography of the late aMMN with that of the early sMMN. This comparison, too, revealed statistically significant differences between the auditory and somatosensory MMN topographies (not shown).

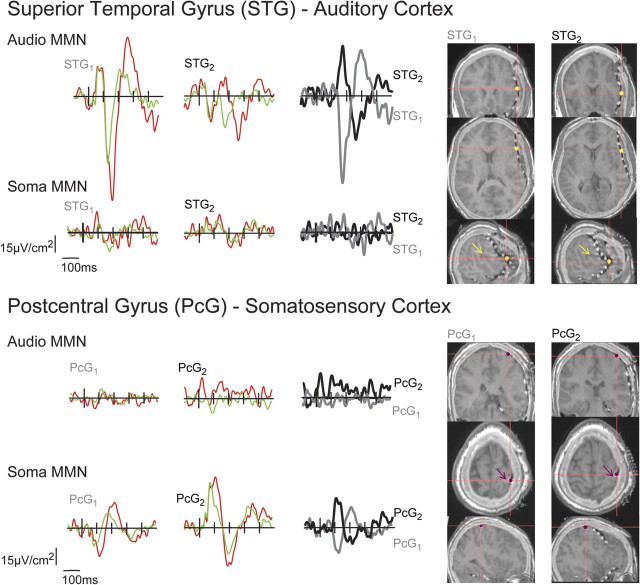

Intracranial recordings

All contacts were examined for the presence of an MMN (see supplemental Fig. S3, available at www.jneurosci.org as supplemental material, for a more extensive representation of the responses across 22 of the contact sites). The sMMN was clearly focused along a small part of the strip over the postcentral gyrus (PcG) and was not seen in any of the grid contacts (see supplemental material, available at www.jneurosci.org). PcG1 (Fig. 3 and supplemental Fig. S3) had the largest negative and positive sMMNs and was used in our analyses; these responses appeared to invert in polarity at PcG2 (Fig. 3 and supplemental Fig. S3), which was also used in our analyses. No aMMN was seen in any of the strip contacts. The aMMN was somewhat more extensive, owing to the greater coverage of relevant sites, and was seen in contacts over the superior temporal gyrus (STG). The early negativity was of greatest amplitude at STG1 and the later negativity at STG2 (Fig. 3 and supplemental Fig. S3); these were the two grid contacts used in our analyses. Figure 3 shows current source density waveforms from the four selected subdural electrodes for both the somatosensory and auditory mismatch. Two adjacent electrodes (STG1 and STG2) were positioned along the Sylvian fissure over the inferior frontal gyrus and the STG, and two adjacent electrodes (PcG1 and PcG2) were positioned over the postcentral gyrus.

Figure 3.

Mean CSD, averaged across trials, at the intracranial electrode sites for the deviant (red line), the standard (green line), and the subtraction waveform, MMN (black and gray lines). The yellow and magenta dots represent the electrode locations on the coregistered MRI-CT images. The yellow and magenta arrows indicate the Sylvian fissure and the postcentral gyrus, respectively.

Inspection of the mean auditory CSD difference waveforms, averaged over trials, at electrodes STG1 and STG2 showed a clear aMMN response. The mean somatosensory CSD difference waveforms at the same electrodes showed no evidence of an sMMN response. The reverse was the case for PcG1 and PcG2, which showed clear sMMN responses but no aMMN responses. In the auditory condition, the mismatch waveform at STG1 inverted in polarity at STG2, indicating a local generator: the aMMN waveforms at STG1 and STG2 showed polarity-inverted components centered at ∼176 ms, followed by mirrored components at ∼295 ms. For somatosensory stimulation, there were no discernible MMN components and no obvious inversions at STG1 and STG2. The sMMN at PcG1, however, showed a clear polarity inversion at PcG2, indicating a local generator of the sMMN, with inverted components centered at ∼110 and ∼226 ms. The aMMN at PcG1 and PcG2 had no discernible peaks and exhibited no obvious polarity inversions.

To verify statistically the presence of the MMN responses, each individual trial was averaged over a 40 ms interval centered on the peak of the average MMN waveform. The resulting standard and deviant trial values were submitted to an unpaired t test. The auditory standard and deviant differed significantly for both components at STG1 (156–196 ms: t(827) = −8.753, p < 0.0001; 276–316 ms: t(827) = 5.96, p < 0.0001) and STG2 (156–196 ms: t(827) = 6.25, p < 0.0001; 242–282 ms: t(827) = 6.70, p < 0.0001), whereas the somatosensory standard and deviant did not differ significantly at STG1 (156–196 ms: p = 0.18; 276–316 ms: p = 0.256) or STG2 (156–196 ms: p = 0.7839; 242–282 ms: p = 0.9). The somatosensory standard and deviant differed significantly for both components at PcG1 (101–141 ms: t(864) = −6.67, p < 0.0001; 207–247 ms: t(864) = 6.18, p < 0.0001) and PcG2 (95–135 ms: t(864) = −4.27, p < 0.0001; 206–246 ms: t(864) = −3.03, p < 0.0005). The auditory standard and deviant did not differ significantly for either component at PcG1 (101–141 ms: p = 0.87; 207–247 ms: p = 0.588), and only the first component differed significantly at PcG2 (95–135 ms: t(827) = 2.59, p < 0.005; 206–246 ms: p = 0.22). Although this latter finding appears to suggest cross-sensory MMN activity at PcG2, inspection of the waveforms suggests otherwise: there is no evidence of an auditory evoked potential to the auditory standard at this site, and the deviant waveform shows no obvious evoked component either. Further, follow-up running t tests indicated that the differences between the standard and deviant responses began before the onset of physical deviance, making it clear that this apparent effect could not be related to the duration difference.
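For the single-patient data, the comparison is between two independent sets of trials. The sketch below computes Student's pooled-variance t (df = n_deviant + n_standard − 2) and a simple running t test that reports the first latency at which |t| stays at or above a threshold for a given number of consecutive samples. The threshold `t_crit` and run length `min_len` are hypothetical parameters, since the text does not specify the criterion used for the running tests; `scipy.stats.ttest_ind` with its default `equal_var=True` computes the same t statistic.

```python
import math


def pooled_t(a, b):
    """Student's unpaired t (pooled variance) and df = len(a) + len(b) - 2.

    Raises ZeroDivisionError if both groups have zero variance.
    """
    na, nb = len(a), len(b)
    ma, mb = sum(a) / na, sum(b) / nb
    ss = sum((x - ma) ** 2 for x in a) + sum((x - mb) ** 2 for x in b)
    df = na + nb - 2
    sp2 = ss / df                                   # pooled variance
    return (ma - mb) / math.sqrt(sp2 * (1.0 / na + 1.0 / nb)), df


def running_onset(dev_trials, std_trials, times, t_crit, min_len):
    """First latency at which |t| >= t_crit holds for min_len consecutive samples.

    dev_trials and std_trials are lists of single-trial waveforms sampled at
    the latencies in times. Returns None if no such run exists.
    """
    run = 0
    for k in range(len(times)):
        t, _ = pooled_t([trial[k] for trial in dev_trials],
                        [trial[k] for trial in std_trials])
        run = run + 1 if abs(t) >= t_crit else 0
        if run == min_len:
            return times[k - min_len + 1]           # latency where the run began
    return None
```

An onset returned before 50 ms, when the 50 ms standard ends and the deviant first becomes physically distinguishable from it, would indicate a difference that cannot reflect the duration deviance.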

Discussion

In launching this investigation, we considered that there was a strong case for suspecting that the auditory and somatosensory systems might share processing resources for amodal features, since research on auditory–somatosensory integration had already identified considerable common circuitry. The auditory and somatosensory systems have been shown to have strong neurophysiological links (Foxe et al., 2000, 2002, 2005; Schroeder et al., 2001, 2004; Lütkenhöner et al., 2002; Schroeder and Foxe, 2002, 2005; Fu et al., 2003; Kayser et al., 2005; Murray et al., 2005), making them ideal candidates in which to investigate the processing of amodal stimulus properties. For example, multicontact linear array electrode recordings in macaques showed that both somatosensory and auditory inputs feed directly into layer IV, the afferent input layer, of the caudomedial belt area with identical timing (Schroeder and Foxe, 2002), and this multisensory region is just a single synapse from primary auditory cortex. Using functional MRI (fMRI), Foxe et al. (2002) found that tactile stimulation elicited activation in a very similar region of human auditory cortex, a finding since replicated and extended using fMRI in macaques (Kayser et al., 2005). A key question, then, is what the functional role of these hierarchically early multisensory regions might be. We reasoned that one possibility might be basic feature detection for properties common to both sensory systems, in this case simple duration processing. The MMN was therefore used to test whether the generators of duration-change detection for somatosensory and auditory cues rely on common multisensory circuitry. Previous work had pointed to gross similarities between the sMMN and the aMMN (Spackman et al., 2010), but a common-circuitry account had not been tested directly.
Here, using high-density mapping, we found that both the morphology and the topographical distributions of the duration MMN were highly distinct across sensory systems. The sMMN topography at 235 ms showed a clear frontal positive distribution, whereas the aMMN topography at the same latency showed a distinctly different frontocentral negative distribution. Further supporting separate underlying neural generators, source modeling indicated that the dominant generators resided in or close to auditory and somatosensory cortical regions for the aMMN and sMMN, respectively (supplemental data, available at www.jneurosci.org as supplemental material). Together with the intracranial recordings, these results make it clear that the somatosensory and auditory MMNs to duration deviants are generated in separate cortical regions, and we find no evidence for shared amodal processing regions for duration detection.

General overview of aMMN and sMMN

The aMMN responses and topographies presented in this paper are similar to other duration aMMNs reported in the literature (Näätänen, 1992; Amenedo and Escera, 2000; De Sanctis et al., 2009). The aMMN response over the frontal midline scalp had two negative peaks followed by a positive peak. The second, larger negative peak at ∼235 ms is most commonly defined as the duration aMMN and here had a topography entirely typical of an auditory duration MMN (Doeller et al., 2003; De Sanctis et al., 2009). The intracranial data localized the generator of the aMMN to the STG and along the Sylvian fissure, consistent with findings in other studies (Dittmann-Balçar et al., 2001; Schall et al., 2003; Edwards et al., 2005; Molholm et al., 2005; Rinne et al., 2005).

The sMMN response over the frontal midline scalp is also representative of previous findings (Kekoni et al., 1997; Shinozaki et al., 1998; Spackman et al., 2007, 2010). The sMMN waveform had two phases: an earlier negative peak at ∼145 ms, followed by a positive peak at ∼235 ms. Because, to our knowledge, this is the first study to use a high-density montage to assess the sMMN, a detailed assessment of its topographical distribution, and a comparison with that of the aMMN, was possible. The early phase of the sMMN had a negative frontocentral distribution that evolved into a later positive frontal distribution. In the intracranial recordings, the sMMN was localized over the postcentral gyrus, with timing very similar to that seen in the scalp recordings, consistent with other intracranial recordings of the sMMN (Spackman et al., 2010).

Comparison of the auditory and somatosensory mismatch

To the best of our knowledge, only one paper has previously examined the sMMN and aMMN using a within-subjects design (Restuccia et al., 2007). These authors recorded scalp EEG from patients with unilateral cerebellar damage during a location mismatch paradigm, in which the standard and deviant were vibrotactile stimulation of the first and fifth fingers, respectively. Stimulation of the hand ipsilateral to the damaged cerebellum elicited an abnormal sMMN, whereas stimulation of the contralateral hand elicited a normal sMMN. In a control condition, two of the patients were also tested using an auditory MMN paradigm in which the deviant feature was a frequency change; their aMMNs were unaffected by the cerebellar lesion, regardless of side of presentation. These data thus point to some dissociation of the MMN generators across the two modalities. However, because Restuccia et al. (2007) were not specifically investigating the processing of amodal stimulus features, they did not equate the tasks across modalities, using a different feature dimension as the deviant in each (location vs frequency). An amodal system would be expected to operate on a single feature, so it is difficult to draw direct conclusions regarding this issue from their data. Here, we made a direct comparison of the aMMN and sMMN for the common feature of duration.

Although our results clearly demonstrate that sensory-specific cortical areas code for duration, the possibility remains that these regions interact under multisensory stimulation conditions, or indeed that additional amodal regions are activated by multisensory inputs. Some support for this thesis comes from a recent transcranial magnetic stimulation (TMS) study (Bolognini et al., 2010), in which a single TMS pulse was delivered over auditory processing regions along the STG at varying intervals (60, 120, and 180 ms) after the presentation of tactile stimuli on which participants performed temporal discriminations. TMS over STG at 180 ms impaired temporal discrimination, suggesting that auditory regions on the STG were involved in cross-sensory tactile temporal processing. These results make a compelling case for interactions between unisensory auditory and tactile duration-processing regions. We suggest that our finding of anatomically distinct automatic duration processing for auditory and tactile information indicates that duration is processed and represented in sensory-specific areas under conditions of unisensory stimulation, but that these areas may well interact when concurrently engaged by multisensory inputs. Under this account, one could posit that the TMS manipulation of Bolognini and colleagues (2010) resulted in coactivation of auditory and tactile regions, and that it was this coactivation that led to interference in tactile duration discriminations. Certainly, there is known interconnectivity between the auditory and somatosensory systems (Smiley and Falchier, 2009), and there are numerous examples of the bidirectional influence of these sensory systems on perception and performance (Jousmäki and Hari, 1998; Guest et al., 2002; Guest and Spence, 2003; Lappe et al., 2008; Foxe, 2009; Sperdin et al., 2009; Yau et al., 2009).
The highly similar timing (∼235 ms) of the primary aMMN and sMMN peak responses seen here also lends further credence to the possibility of auditory and tactile duration information interacting under multisensory stimulation conditions, but this remains to be directly tested.
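The peak-latency comparison above rests on the conventional definition of the MMN as a deviant-minus-standard difference wave. Purely as an illustration, the following minimal sketch shows how such a difference wave and its peak latency could be computed. It assumes single-channel averaged ERPs (in µV) sampled on a shared time axis in milliseconds; the function names and the 100 to 300 ms search window are hypothetical choices, not the analysis pipeline used in this study.

```python
import numpy as np


def mmn_difference_wave(deviant_erp, standard_erp):
    """Return the deviant-minus-standard difference wave (hypothetical helper)."""
    deviant = np.asarray(deviant_erp, dtype=float)
    standard = np.asarray(standard_erp, dtype=float)
    # Both averages must be sampled on the same time axis.
    if deviant.shape != standard.shape:
        raise ValueError("deviant and standard ERPs must have the same shape")
    return deviant - standard


def mmn_peak_latency(diff_wave, times_ms, window_ms=(100.0, 300.0)):
    """Return (latency_ms, amplitude) of the most negative point of a
    single-channel difference wave within an assumed search window."""
    diff = np.asarray(diff_wave, dtype=float)
    times = np.asarray(times_ms, dtype=float)
    # Restrict the search to samples inside the (hypothetical) window.
    mask = (times >= window_ms[0]) & (times <= window_ms[1])
    if not mask.any():
        raise ValueError("search window contains no samples")
    # The MMN is a negativity, so its peak is the minimum within the window.
    idx = np.flatnonzero(mask)[np.argmin(diff[mask])]
    return float(times[idx]), float(diff[idx])
```

Applying `mmn_peak_latency` separately to auditory and somatosensory difference waves yields the two latencies to compare. Deciding whether two latencies genuinely differ would additionally require a statistical test (for example, across subjects), which this sketch does not attempt.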

Despite a clear dissociation of the areas involved in auditory and somatosensory duration processing as indexed by the MMN, these data do not rule out the possibility that amodal duration representations come online during active performance of a duration task, or that amodal processes feed into sensory-specific areas. For example, Moberget et al. (2008) found that patients with cerebellar degeneration showed a selective deficit in the auditory duration MMN (relative to frequency and location MMNs), and it is possible that the cerebellum's role in processing temporal information is amodal. It is also possible that other metrics of unisensory duration processing would reveal an amodal representation. However, the MMN provides a solid metric of duration processing for which we know of no alternative, and the locus of MMN generation is commonly considered to reflect where the deviant feature is processed (Molholm et al., 2005). The auditory MMN is also influenced by multisensory inputs (Saint-Amour et al., 2007), which makes it an excellent candidate for measuring a potentially amodal process.

Conclusion

We tested whether processing of the amodal property of duration relies on common circuitry across audition and touch, given the large and growing body of anatomical and physiological work pointing to hierarchically early multisensory regions that process inputs from both of these sensory systems. Data from both scalp and subdural recordings instead point to clearly separate generators for simple duration detection in these two sensory systems, strongly implicating a sensory-specific account.

Footnotes

This work was supported by a grant from the U.S. National Institute of Mental Health to J.J.F. and S.M. (MH85322). M.R.M. received additional support from a postdoctoral fellowship award from the Swiss National Science Foundation (PBELP3-123067). The Cartool software (http://brainmapping.unige.ch/Cartool.htm) was programmed by Denis Brunet of the Functional Brain Mapping Laboratory, Geneva, Switzerland, and is supported by the Center for Biomedical Imaging of Geneva and Lausanne. We thank our colleague Dr. Pierfilippo De Sanctis for helpful discussions.

References

- Akatsuka K, Wasaka T, Nakata H, Inui K, Hoshiyama M, Kakigi R. Mismatch responses related to temporal discrimination of somatosensory stimulation. Clin Neurophysiol. 2005;116:1930–1937. doi: 10.1016/j.clinph.2005.04.021.

- Allison T, Puce A, Spencer DD, McCarthy G. Electrophysiological studies of human face perception. I. Potentials generated in occipitotemporal cortex by face and non-face stimuli. Cereb Cortex. 1999;9:415–430. doi: 10.1093/cercor/9.5.415.

- Amenedo E, Escera C. The accuracy of sound duration representation in the human brain determines the accuracy of behavioural perception. Eur J Neurosci. 2000;12:2570–2574. doi: 10.1046/j.1460-9568.2000.00114.x.

- Bolognini N, Papagno C, Moroni D, Maravita A. Tactile temporal processing in the auditory cortex. J Cogn Neurosci. 2010;22:1201–1211. doi: 10.1162/jocn.2009.21267.

- De Sanctis P, Molholm S, Shpaner M, Ritter W, Foxe JJ. Right hemispheric contributions to fine auditory temporal discriminations: high-density electrical mapping of the duration mismatch negativity (MMN). Front Integr Neurosci. 2009;3:5. doi: 10.3389/neuro.07.005.2009.

- Dittmann-Balçar A, Jüptner M, Jentzen W, Schall U. Dorsolateral prefrontal cortex activation during automatic auditory duration-mismatch processing in humans: a positron emission tomography study. Neurosci Lett. 2001;308:119–122. doi: 10.1016/s0304-3940(01)01995-4.

- Doeller CF, Opitz B, Mecklinger A, Krick C, Reith W, Schröger E. Prefrontal cortex involvement in preattentive auditory deviance detection: neuroimaging and electrophysiological evidence. Neuroimage. 2003;20:1270–1282. doi: 10.1016/S1053-8119(03)00389-6.

- Edwards E, Soltani M, Deouell LY, Berger MS, Knight RT. High gamma activity in response to deviant auditory stimuli recorded directly from human cortex. J Neurophysiol. 2005;94:4269–4280. doi: 10.1152/jn.00324.2005.

- Fiebelkorn IC, Foxe JJ, Schwartz TH, Molholm S. Staying within the lines: the formation of visuospatial boundaries influences multisensory feature integration. Eur J Neurosci. 2010;31:1737–1743. doi: 10.1111/j.1460-9568.2010.07196.x.

- Foxe JJ. Multisensory integration: frequency tuning of audio-tactile integration. Curr Biol. 2009;19:R373–R375. doi: 10.1016/j.cub.2009.03.029.

- Foxe JJ, Schroeder CE. The case for feedforward multisensory convergence during early cortical processing. Neuroreport. 2005;16:419–423. doi: 10.1097/00001756-200504040-00001.

- Foxe JJ, Simpson GV. Flow of activation from V1 to frontal cortex in humans. A framework for defining “early” visual processing. Exp Brain Res. 2002;142:139–150. doi: 10.1007/s00221-001-0906-7.

- Foxe JJ, Morocz IA, Murray MM, Higgins BA, Javitt DC, Schroeder CE. Multisensory auditory-somatosensory interactions in early cortical processing revealed by high-density electrical mapping. Brain Res Cogn Brain Res. 2000;10:77–83. doi: 10.1016/s0926-6410(00)00024-0.

- Foxe JJ, Wylie GR, Martinez A, Schroeder CE, Javitt DC, Guilfoyle D, Ritter W, Murray MM. Auditory-somatosensory multisensory processing in auditory association cortex: an fMRI study. J Neurophysiol. 2002;88:540–543. doi: 10.1152/jn.2002.88.1.540.

- Fu KM, Johnston TA, Shah AS, Arnold L, Smiley J, Hackett TA, Garraghty PE, Schroeder CE. Auditory cortical neurons respond to somatosensory stimulation. J Neurosci. 2003;23:7510–7515. doi: 10.1523/JNEUROSCI.23-20-07510.2003.

- Guest S, Spence C. Tactile dominance in speeded discrimination of textures. Exp Brain Res. 2003;150:201–207. doi: 10.1007/s00221-003-1404-x.

- Guest S, Catmur C, Lloyd D, Spence C. Audiotactile interactions in roughness perception. Exp Brain Res. 2002;146:161–171. doi: 10.1007/s00221-002-1164-z.

- Guthrie D, Buchwald JS. Significance testing of difference potentials. Psychophysiology. 1991;28:240–244. doi: 10.1111/j.1469-8986.1991.tb00417.x.

- Jousmäki V, Hari R. Parchment-skin illusion: sound-biased touch. Curr Biol. 1998;8:R190. doi: 10.1016/s0960-9822(98)70120-4.

- Kayser C, Petkov CI, Augath M, Logothetis NK. Integration of touch and sound in auditory cortex. Neuron. 2005;48:373–384. doi: 10.1016/j.neuron.2005.09.018.

- Kekoni J, Hämäläinen H, Saarinen M, Gröhn J, Reinikainen K, Lehtokoski A, Näätänen R. Rate effect and mismatch responses in the somatosensory system: ERP-recordings in humans. Biol Psychol. 1997;46:125–142. doi: 10.1016/s0301-0511(97)05249-6.

- Lachaux JP, George N, Tallon-Baudry C, Martinerie J, Hugueville L, Minotti L, Kahane P, Renault B. The many faces of the gamma band response to complex visual stimuli. Neuroimage. 2005;25:491–501. doi: 10.1016/j.neuroimage.2004.11.052.

- Lappe C, Herholz SC, Trainor LJ, Pantev C. Cortical plasticity induced by short-term unimodal and multimodal musical training. J Neurosci. 2008;28:9632–9639. doi: 10.1523/JNEUROSCI.2254-08.2008.

- Lehmann D, Skrandies W. Reference-free identification of components of checkerboard-evoked multichannel potential fields. Electroencephalogr Clin Neurophysiol. 1980;48:609–621. doi: 10.1016/0013-4694(80)90419-8.

- Lütkenhöner B, Lammertmann C, Simões C, Hari R. Magnetoencephalographic correlates of audiotactile interaction. Neuroimage. 2002;15:509–522. doi: 10.1006/nimg.2001.0991.

- Manly BF. Randomization and Monte Carlo methods in biology. 1st Edition. London: Chapman and Hall; 1991.

- Michel CM, Murray MM, Lantz G, Gonzalez S, Spinelli L, Grave de Peralta R. EEG source imaging. Clin Neurophysiol. 2004;115:2195–2222. doi: 10.1016/j.clinph.2004.06.001.

- Moberget T, Karns CM, Deouell LY, Lindgren M, Knight RT, Ivry RB. Detecting violations of sensory expectancies following cerebellar degeneration: a mismatch negativity study. Neuropsychologia. 2008;46:2569–2579. doi: 10.1016/j.neuropsychologia.2008.03.016.

- Molholm S, Martinez A, Ritter W, Javitt DC, Foxe JJ. The neural circuitry of preattentive auditory change-detection: an fMRI study of pitch and duration mismatch negativity generators. Cereb Cortex. 2005;15:545–551. doi: 10.1093/cercor/bhh155.

- Molholm S, Sehatpour P, Mehta AD, Shpaner M, Gomez-Ramirez M, Ortigue S, Dyke JP, Schwartz TH, Foxe JJ. Audio-visual multisensory integration in superior parietal lobule revealed by human intracranial recordings. J Neurophysiol. 2006;96:721–729. doi: 10.1152/jn.00285.2006.

- Murray MM, Molholm S, Michel CM, Heslenfeld DJ, Ritter W, Javitt DC, Schroeder CE, Foxe JJ. Grabbing your ear: rapid auditory-somatosensory multisensory interactions in low-level sensory cortices are not constrained by stimulus alignment. Cereb Cortex. 2005;15:963–974. doi: 10.1093/cercor/bhh197.

- Näätänen R. Attention and brain function. Hillsdale, New Jersey: Erlbaum; 1992.

- Näätänen R, Paavilainen P, Rinne T, Alho K. The mismatch negativity (MMN) in basic research of central auditory processing: a review. Clin Neurophysiol. 2007;118:2544–2590. doi: 10.1016/j.clinph.2007.04.026.

- Nicholson C, Freeman JA. Theory of current source-density analysis and determination of conductivity tensor for anuran cerebellum. J Neurophysiol. 1975;38:356–368. doi: 10.1152/jn.1975.38.2.356.

- Nunez PL, Srinivasan R. Electric fields of the brain: the neurophysics of EEG. 2nd Edition. Oxford: Oxford UP; 2006.

- Perrin F, Pernier J, Bertrand O, Giard MH, Echallier JF. Mapping of scalp potentials by surface spline interpolation. Electroencephalogr Clin Neurophysiol. 1987;66:75–81. doi: 10.1016/0013-4694(87)90141-6.

- Perrin F, Pernier J, Bertrand O, Echallier JF. Spherical splines for scalp potential and current-density mapping. Electroencephalogr Clin Neurophysiol. 1989;72:184–187. doi: 10.1016/0013-4694(89)90180-6.

- Restuccia D, Della Marca G, Valeriani M, Leggio MG, Molinari M. Cerebellar damage impairs detection of somatosensory input changes: a somatosensory mismatch-negativity study. Brain. 2007;130:276–287. doi: 10.1093/brain/awl236.

- Rinne T, Degerman A, Alho K. Superior temporal and inferior frontal cortices are activated by infrequent sound duration decrements: an fMRI study. Neuroimage. 2005;26:66–72. doi: 10.1016/j.neuroimage.2005.01.017.

- Saint-Amour D, De Sanctis P, Molholm S, Ritter W, Foxe JJ. Seeing voices: high-density electrical mapping and source-analysis of the multisensory mismatch negativity evoked during the McGurk illusion. Neuropsychologia. 2007;45:587–597. doi: 10.1016/j.neuropsychologia.2006.03.036.

- Sams M, Paavilainen P, Alho K, Näätänen R. Auditory frequency discrimination and event-related potentials. Electroencephalogr Clin Neurophysiol. 1985;62:437–448. doi: 10.1016/0168-5597(85)90054-1.

- Schall U, Johnston P, Todd J, Ward PB, Michie PT. Functional neuroanatomy of auditory mismatch processing: an event-related fMRI study of duration-deviant oddballs. Neuroimage. 2003;20:729–736. doi: 10.1016/S1053-8119(03)00398-7.

- Scherg M, Von Cramon D. Two bilateral sources of the late AEP as identified by a spatio-temporal dipole model. Electroencephalogr Clin Neurophysiol. 1985;62:32–44. doi: 10.1016/0168-5597(85)90033-4.

- Schroeder CE, Foxe JJ. The timing and laminar profile of converging inputs to multisensory areas of the macaque neocortex. Brain Res Cogn Brain Res. 2002;14:187–198. doi: 10.1016/s0926-6410(02)00073-3.

- Schroeder CE, Foxe J. Multisensory contributions to low-level, ‘unisensory’ processing. Curr Opin Neurobiol. 2005;15:454–458. doi: 10.1016/j.conb.2005.06.008.

- Schroeder CE, Lindsley RW, Specht C, Marcovici A, Smiley JF, Javitt DC. Somatosensory input to auditory association cortex in the macaque monkey. J Neurophysiol. 2001;85:1322–1327. doi: 10.1152/jn.2001.85.3.1322.

- Schroeder CE, Molholm S, Lakatos P, Ritter W, Foxe JJ. Human-simian correspondence in the early cortical processing of multisensory cues. Cogn Process. 2004;5:140–151.

- Shinozaki N, Yabe H, Sutoh T, Hiruma T, Kaneko S. Somatosensory automatic responses to deviant stimuli. Brain Res Cogn Brain Res. 1998;7:165–171. doi: 10.1016/s0926-6410(98)00020-2.

- Smiley JF, Falchier A. Multisensory connections of monkey auditory cerebral cortex. Hear Res. 2009;258:37–46. doi: 10.1016/j.heares.2009.06.019.

- Spackman LA, Boyd SG, Towell A. Effects of stimulus frequency and duration on somatosensory discrimination responses. Exp Brain Res. 2007;177:21–30. doi: 10.1007/s00221-006-0650-0.

- Spackman LA, Towell A, Boyd SG. Somatosensory discrimination: an intracranial event-related potential study of children with refractory epilepsy. Brain Res. 2010;1310:68–76. doi: 10.1016/j.brainres.2009.10.072.

- Sperdin HF, Cappe C, Foxe JJ, Murray MM. Early, low-level auditory-somatosensory multisensory interactions impact reaction time speed. Front Integr Neurosci. 2009;3:2. doi: 10.3389/neuro.07.002.2009.

- Yau JM, Olenczak JB, Dammann JF, Bensmaia SJ. Temporal frequency channels are linked across audition and touch. Curr Biol. 2009;19:561–566. doi: 10.1016/j.cub.2009.02.013.