Despite effective screening and treatment, amblyopia is still a very common cause of vision loss in children and adults. Primary care physicians miss many opportunities to screen vision using quantitative techniques like acuity, especially at preschool age. This article describes a web-based intervention developed to improve screening for amblyopia and strabismus in the medical home, and shows significantly improved knowledge among physician participants that was sustained 1 to 3 years later.

Abstract

Purpose.

To evaluate the efficacy of a physician-targeted website to improve knowledge and self-reported behavior relevant to strabismus and amblyopia (“vision”) in primary care settings.

Methods.

Eligible providers (filing Medicaid claims for at least eight well-child checks at ages 3 or 4 years, 1 year before study enrollment), randomly assigned to control (chlamydia and blood pressure) or vision groups, accessed four web-based educational modules, programmed to present interactive case vignettes with embedded questions and feedback. Each correct response, assigned a value of +1 to a maximum of +7, was used to calculate a summary score per provider. Responses from intervention providers (IPs) at baseline and two follow-up points were compared to responses to vision questions, taken at the end of the study, from control providers (CPs).

Results.

Most IPs (57/65) responded at baseline and after the short delay (within 1 hour after baseline for 38 IPs). A subgroup (27 IPs and 42 CPs) completed all vision questions after a long delay averaging 1.8 years. Scores from IPs improved after the short delay (median score, 3 vs. 6; P = 0.0065). Compared to CPs, scores from IPs were similar at baseline (P = 0.6473) and higher after the short-term (P < 0.0001) and long-term (P < 0.05) delay.

Conclusions.

Significant improvements after the short delay demonstrate the efficacy of the website and the potential for accessible, standardized vision education. Although improvements subsided over time, the IPs' scores did not return to baseline levels and were significantly better than those of CPs tested 1 to 3 years later. (ClinicalTrials.gov number, NCT01109459.)

Large gaps in the continuum of preschool vision care have been demonstrated at the screening, diagnosis, and treatment steps. Although vision screening is an accepted part of preventive care,1 many children are missed. The National Ambulatory Medical Care Survey (NAMCS) recruited a nationally representative sample averaging 2,500 physicians and 24,000 patient visits per year, and reported that 11% to 17% of children were screened with a test of acuity over a 10-year period.2 These data are in close agreement with studies using Medicaid billing data3,4 although other studies report lower5 or higher6 rates. “Quantitative” vision screening is usually performed by medical staff using a test of visual acuity or, less commonly, using photo- or autorefraction.7,8 Low rates of screening may contribute to low rates of diagnoses of amblyopia or strabismus by eye specialists: Claims data show that 1.4% of children aged 3 or 4 years were diagnosed with either condition in states participating in a randomized trial to improve preschool vision screening (PVS) in office settings.9 In contrast, population-based data showed approximately 4% to 5% of children with one or both conditions depending on race/ethnicity and geographic location.10,11 Despite reports that universal PVS in Sweden using a test of acuity at age 4 years has reduced the prevalence of amblyopia from 2% to 0.2%,12 and despite recent reports of effective screening tests13–15 and treatment16 for amblyopia, in the United States less than 13% of amblyopic children aged 30 to 72 months in the population-based studies had received appropriate treatment.10,11 Universal PVS is a necessary first step toward reducing preventable vision loss caused by amblyopia among children living in the United States.

Studies indicate that various other types of pediatric preventive care fall short of recommendations,17,18 and gaps in knowledge about the condition or recommended action are often implicated in attempts to understand and improve practice behaviors.19–24 Most theories about behavior change include knowledge about the targeted action or condition as a factor that influences behavior.23–27 This traditional knowledge–attitude–behavior approach to behavior change intervention is complementary to a range of behavioral theories from social cognitive theory25 to information–motivation–behavioral skills theory26 (see review and synthesis by Michie et al.27).

Our previous research shows that many primary care providers (PCPs) lack knowledge about amblyopia and PVS recommendations,7 prompting our efforts to design an intervention to improve both, as a preliminary step toward improving actual practice behavior. Although knowledge improvement alone is often insufficient to effect behavioral change,27–30 our research indicates that high levels of knowledge enhanced the effects of good attitudes on self-reported PVS behaviors.7 Therefore, as with most behavior change interventions, efforts to improve PVS behavior should include a knowledge component. We chose the Internet as the best delivery mode to implement facets of adult-based learning relevant to physicians (see the Methods section) and to allow low-cost, widespread dissemination of standardized information to individuals separated by time and distance. Web-based instruction has various potential advantages compared with traditional lecture-based continuing medical education (CME), including convenient access, standardization, use of multiple strategies to convey difficult or dynamic concepts, interactivity, tailoring of content based on responses, feedback allowing comparison to peers, and ease of updating to reflect new research, policies, or methods.31

In this article, we describe results of our web-based intervention, designed to increase knowledge about “vision” (strabismus, amblyopia, and self-reported PVS behavior). Our study is especially pertinent considering a recent report from the Office of the Inspector General which reviewed medical records of Medicaid-eligible children, noted low rates of vision screening, and recommended that states begin to “develop education and incentives for providers to encourage complete medical screenings.”32

Methods

Overview of the Study

We planned to enroll approximately 57 providers into each of two arms of a randomized trial designed to evaluate the usefulness of an Internet intervention to (1) improve knowledge about strabismus, amblyopia, and PVS, hereafter called “vision”; (2) increase rates of quantitative PVS by PCPs; and (3) increase rates of diagnosis of strabismus or amblyopia by eye specialists. This article reports the analysis of only a subset of the web-based responses from participating PCPs (knowledge about vision and self-reported screening behavior that were assessed at each relevant time period). This project was approved by the University of Alabama at Birmingham Institutional Review Board for Human Use, the Alabama Medicaid Agency, the Illinois Department of Health and Family Services, and the South Carolina Department of Health and Human Services, and was compliant with HIPAA and the Declaration of Helsinki.

Eligible Pool of Providers and Enrollment

Data from Medicaid agencies in Alabama, Illinois, and South Carolina for claims filed for well-child visits (WCVs)33 were used to determine eligibility. Providers who filed claims for at least eight WCVs for children aged 3 or 4 years during 1 year were eligible for further consideration. Other eligibility criteria were (1) adequate contact information including fax numbers, (2) filing claims under the individual provider's name, and (3) having Internet access. To make sure that provider enrollment and behavior were not influenced by the goals of the study, we recruited providers to enroll in a study to “improve care for children.” Incentives for participation were the same for intervention and control groups, and included a textbook (chosen from a list of three texts about pediatric care) for participants who completed the first two modules, and category 1 CME credit (up to 4 hours if all modules were completed). Eligible participants were faxed a letter of invitation, and those who returned the fax and confirmed that they had Internet access were subsequently emailed an invitation with instructions to access the study website. Providers were sent to the intervention or control websites according to a cluster-randomized schedule that was executed on log-in. The cluster was defined as the provider (or “group practice”) along with the children seen for WCVs by that provider or practice; clustering was important for outcome measures relevant to patient outcomes.34 Providers were considered solo practitioners if they had a unique street address and fax number in our database. Providers with a shared street address or fax were merged into a single “group practice” or provider cluster.9 Providers who completed the log-in screen were randomized and considered enrolled. Responses for eight IPs and eight CPs who failed to complete any questions are not included in the analyses.
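The clustering rule described above (providers sharing a street address or a fax number are merged into one “group practice”) can be sketched in a few lines of Python. This is an illustrative sketch only, not the study's software: the function names `build_clusters` and `randomize`, the record fields `id`, `address`, and `fax`, and the simple seeded coin-flip assignment are all assumptions made for the example. The actual cluster-randomized schedule is not described in enough detail here to reproduce.

```python
import random
from collections import defaultdict


def build_clusters(providers):
    """Group providers that share a street address or a fax number.

    `providers` is a list of dicts with hypothetical keys
    'id', 'address', and 'fax'. Uses a small union-find structure so
    that chains (A shares an address with B, B shares a fax with C)
    end up in one cluster.
    """
    parent = {p["id"]: p["id"] for p in providers}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[max(ra, rb)] = min(ra, rb)

    first_seen = {}  # (field, value) -> first provider id with that value
    for p in providers:
        for key in (("address", p["address"]), ("fax", p["fax"])):
            if key in first_seen:
                union(p["id"], first_seen[key])
            else:
                first_seen[key] = p["id"]

    clusters = defaultdict(list)
    for p in providers:
        clusters[find(p["id"])].append(p["id"])
    return sorted(sorted(members) for members in clusters.values())


def randomize(clusters, seed=0):
    """Assign each whole cluster to one arm (assumed simple randomization)."""
    rng = random.Random(seed)
    return {tuple(c): rng.choice(["intervention", "control"]) for c in clusters}
```

Assigning the arm per cluster rather than per provider keeps every provider in a shared practice, and the children they see, in the same arm, which is the property the text describes as important for patient-level outcome measures.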

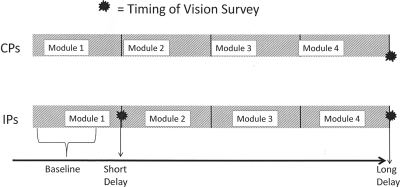

All participants were offered four sequential web-based modules consisting of physician-targeted, interactive case vignettes, along with tool kits designed to enhance the assessment of preschool “vision” in IPs or blood pressure in CPs. IPs responded to questions at three time periods: baseline (before the presentation of guidelines or evidence-based practices regarding vision assessment), after completing module 1 (short-term delay), and after completing module 4 (long-term delay). CPs responded to the same “vision” questions only once, after finishing all control modules (see Fig. 1). Questions were in identical format for the CPs' only vision evaluation and for IPs at short- and long-term delays. The short-term delay was within 1 hour after initial log-on for more than half (n = 38) the IPs. For 18 IPs, the short-term delay was 1 hour to 17 days, whereas one IP took 390 days. The long-term delay averaged 1.8 years (range, 0.81–2.69 years) after IPs initially logged in. Access to the first two modules was closed after completion. Although “Help for My Office” materials (brochures, recording forms, and acuity testing protocols) could still be accessed, none of those materials contained answers to test questions. A consequence of our decision to withhold knowledge of the topic was a 1- to 3-year lag between the initial assessment of vision by IPs versus CPs. This strategy was used to prevent providers from developing hypotheses about the study and modifying their behavior accordingly.

Figure 1.

CPs' vision responses were obtained after the study only (long-term delay point). CPs' responses at the end of the study were compared with those of IPs at baseline (during module 1), after the short-term delay (after module 1 was completed), and at the long-term delay (after finishing all intervention modules).

Design of the Website

We used principles of practice-based35 and other learning and cognitive and behavior change theories relevant to our target audience of physician users,31,36,37 to design an interactive website for intervention and control users. We chose the Internet as the best mode to deliver multiple learning strategies, such as the videos and animations that we developed to show the acuity-testing protocol conducted with typical preschool children and to show eye movements elicited during cover testing of preschool children with strabismus. In addition to presenting information from authoritative sources including clinical guidelines and relevant research, we used the website to (1) evaluate physicians' knowledge, attitudes, practice environment, and self-reported behavior by asking case-relevant questions; (2) track behaviors, such as ordering a visual acuity test (intervention providers only), and (3) present feedback comparing the individual's response with aggregate responses of the group to increase motivation and facilitate improvement.31

Intervention modules addressed (1) amblyopia and strabismus, (2) preschool visual acuity screening, (3) cover test and corneal light reflex tests for strabismus, and (4) autorefraction and stereopsis testing. Control modules 1 and 2 addressed blood pressure screening, and modules 3 and 4 addressed chlamydia screening. Intervention module 1 collected the baseline and short-term postintervention responses that we present here, whereas module 2 presented the test of acuity we designed for the project to improve rates of quantitative vision screening (a separate outcome measure for the study). We released modules 3 and 4 later, to give a complete picture of PVS in the medical home, to give additional reminders to screen vision, and to allow us to evaluate whether improvements in knowledge and self-reported behavior would be sustained over the long term; however, we cannot assess a sustained effect of the information presented in these later modules.

Development of Knowledge Assessment

We developed a set of questions to assess aspects of knowledge, attitudes, and practice environment, judged by expert consensus to be most relevant to our overarching goal to improve PVS in the primary care setting, and thus to ultimately improve detection of strabismus and amblyopia in that setting. Whenever possible, answers were taken from authoritative sources that targeted PCPs, including the AAP (American Academy of Pediatrics) policy statement for eye examination in children.38 No formal validation of the test items was attempted. Since the website was originally designed, the evidence base for some items, including the prevalence of amblyopia and risk factors, has improved.10,11,39 For this article, we are presenting the answers that were judged correct at the time. Table 1 summarizes relevant aspects of prominent learning theories that underpin the content and presentation of the educational materials and questions we developed.31,35–37

Table 1.

Educational Strategies to Improve Vision Screening in Preschool Children

| Educational Strategy | Educational Rationale | Educational Theory35–37 |
|---|---|---|
| Content selection | Establish relevance to work setting | Andragogy (Knowles) |
| Use of cases | Bridge content to work performance | Practice-based learning (Moore) |
| Interactivity | Rehearse new behaviors | System Approach Model (Dick/Carey) |
| Clinical guidelines38 | Provide instruction on how to complete a behavior from a credible source | Social Cognitive Theory (Bandura) |
| Vicarious Learning | Social Modeling (Videotapes and animations*) | Social Cognitive Theory (McAlister)37 |
| Multiple modalities | Varied and multiple representations of content | Cognitive Flexibility Theory (Spiro) |
| Audit and feedback | Provide practice data to facilitate reflection on practice | Theory of Reflective Practice (Schon) |

*Videotapes of preschoolers completing visual acuity testing and of strabismic children undergoing cover testing, the latter clarified by labeled animations.

Provider-Specific Summary Scores

A summary score was calculated per provider based on their responses to seven questions, with potential scores ranging from 0 to 7 correct. One point was given for each correct answer, and the sum of the points was calculated at each time period for IPs and after module 4 for CPs.
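As a minimal sketch of this scoring rule, the Python below sums one point per correct answer across the seven questions and then takes a group median. The function name `summary_score` and the example response patterns are hypothetical and purely illustrative. They are not study data, and the sketch makes no claim about which statistical test was used to compare groups.

```python
from statistics import median

N_ITEMS = 7  # seven knowledge questions per provider


def summary_score(correct_flags):
    """Return the number of correct answers (0 to 7) for one provider.

    `correct_flags` holds one boolean per question: True if the
    provider's answer matched the answer judged correct.
    """
    if len(correct_flags) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(correct_flags)}")
    return sum(1 for flag in correct_flags if flag)


# Illustrative (made-up) response patterns for three providers.
example_group = [
    [True, False, True, False, False, True, False],  # 3 correct
    [True, True, True, True, False, True, True],     # 6 correct
    [False, False, True, False, False, False, False] # 1 correct
]
scores = [summary_score(flags) for flags in example_group]
print(scores, "median:", median(scores))
```

Under this scheme each item carries equal weight, so the summary score reflects breadth of correct knowledge rather than performance on any single question.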

Results

Sixty-five providers were enrolled into the intervention arm and 71 into the control arm. For IPs, responses were available from 61 (93.8%) providers at baseline, 57 (87.7%) after the short delay, and 27 (41.5%) after the long delay. For CPs, responses to vision questions were available from 42 providers (59.2%) after completing all control modules (Fig. 1).
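The response percentages quoted above follow directly from the enrollment counts. The short Python check below reproduces them; the helper name `response_rate` is an assumption introduced for illustration, and the counts are those reported in the text.

```python
def response_rate(responders, enrolled):
    """Percentage of enrolled providers who responded, to one decimal place."""
    if enrolled <= 0:
        raise ValueError("enrolled must be positive")
    return round(100 * responders / enrolled, 1)


ENROLLED = {"IP": 65, "CP": 71}  # providers enrolled per arm
RESPONDERS = [
    ("IP", "baseline", 61),
    ("IP", "short delay", 57),
    ("IP", "long delay", 27),
    ("CP", "end of study", 42),
]

for arm, point, n in RESPONDERS:
    rate = response_rate(n, ENROLLED[arm])
    print(f"{arm} {point}: {n}/{ENROLLED[arm]} = {rate}%")
# IP baseline: 61/65 = 93.8%
# IP short delay: 57/65 = 87.7%
# IP long delay: 27/65 = 41.5%
# CP end of study: 42/71 = 59.2%
```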

Table 2 presents demographic and practice characteristics for those who did and those who did not participate in the study (NP), despite being eligible. The latter group includes eight IPs and eight CPs who logged on but did not complete module 1. Participating providers were more likely to be female, to reside in the state in which the project originated (Alabama), to have graduated slightly earlier, and to have filed more claims for WCVs; they were less likely to be family physicians. Other characteristics were not different between participating and nonparticipating providers (age, U.S.-trained, residency-trained in Alabama, employment setting, and baseline PVS rate for children aged 3 to 5 years).

Table 2.

Factors Associated with Participation and Randomization

| Characteristic | NP | CP (n = 42) | IP (n = 57) | CP + IP | P, CP vs. IP | P, CP + IP vs. NP |
|---|---|---|---|---|---|---|
| Sex, female | 359 (39.7) | 24 (57.1) | 28 (52.8) | 52 (54.7) | 0.69 | <0.01 |
| Age, y | | | | | 0.69 | 0.13 |
| 30–40 | 251 (28.5) | 12 (28.6) | 11 (19.3) | 23 (23.2) | | |
| 41–50 | 314 (35.6) | 14 (33.3) | 21 (36.8) | 35 (35.4) | | |
| 51–60 | 225 (25.5) | 11 (26.2) | 15 (26.3) | 26 (26.3) | | |
| 61+ | 91 (10.3) | 5 (11.9) | 10 (17.5) | 15 (15.2) | | |
| Ethnicity | | | | | 0.14 | NA |
| African-American | NA | 9 (21.4) | 3 (5.26) | | | |
| Asian | NA | 8 (19.1) | 17 (29.8) | | | |
| Caucasian | NA | 21 (50.0) | 31 (54.4) | | | |
| Hispanic | NA | 3 (7.1) | 2 (1.75) | | | |
| Other | NA | 1 (2.4) | 4 (5.26) | | | |
| Provider type | | | | | 0.36 | 0.05 |
| Family practice | 310 (28.5) | 8 (19.1) | 9 (15.8) | 17 (17.2) | | |
| Pediatric | 708 (65.1) | 30 (71.4) | 46 (80.7) | 76 (76.8) | | |
| Type of employment | | | | | 0.44 | 0.13 |
| Direct patient care | 811 (94.3) | 34 (91.9) | 47 (97.8) | 81 (95.3) | | |
| Employment | | | | | | NA |
| Private practice | NA | 27 (65.9) | 41 (78.9) | | | |
| Public Health | NA | 5 (12.2) | 1 (1.9) | | | |
| Hospital | NA | 4 (9.8) | 7 (13.5) | | | |
| Other | NA | 5 (12.2) | 3 (5.8) | | | |
| State | | | | | 0.96 | <0.01 |
| Alabama | 367 (33.7) | 23 (54.8) | 30 (52.6) | 53 (53.5) | | |
| Illinois* | 461 (42.4) | 13 (30.1) | 19 (33.3) | 32 (32.3) | | |
| South Carolina | 260 (23.9) | 8 (14.3) | 8 (14.0) | 16 (16.2) | | |
| Year of graduation from medical school | 1986 | 1984 | 1982 | 1983 | 0.30 | <0.01 |
| % Medicaid | NA | 53.6% | 44.7% | | 0.07 | NA |
| WCC claims (mean) | 72 | 112 | 85 | 96 | 0.06 | <0.01 |
| VS rate | 0.14 | 0.18 | 0.17 | 0.18 | 0.84 | 0.16 |

Data are n (%).

*Illinois enrollment was stopped early, when recruitment goals were met.

The 57 IPs who completed baseline and short-term questions and 42 CPs who completed vision questions were also compared with respect to demographic and practice characteristics, and no differences were observed. We looked at additional factors available only for participating providers and found no significant differences between CPs and IPs for ethnicity or employment setting, as well as time spent per week in the primary care setting, academic appointment, training at the University of Alabama at Birmingham (the institution of origin of the project), part-time versus full-time employment, and previous experience with online courses (data not shown). However, CPs had marginally more WCV claims (P = 0.06) and a marginally higher percentage of children with Medicaid in their practices (P = 0.07).

Finally, IPs who completed all versus some of the modules did not differ on any of the factors in Table 2; baseline scores on knowledge, attitudes, or practice environment5; additional factors from the AMA master file; or questions about the quality and effectiveness of the web-based learning modules. However, IPs who completed everything reported closer adherence to AAP recommendations for PVS (P = 0.04).5

IPs' responses for six of seven items significantly improved over baseline after the short delay (Table 3). Performance on the other item was high at baseline (87.7%) and did not improve further. Items showing the highest percentage change included learning that risk factors for amblyopia had to be present before neural maturation was complete, that the percentage of children with risk factors for amblyopia was greater than 4%, and that 30% or more of children with amblyopia do not have outward signs (strabismus, cataracts, or ptosis).

Table 3.

Responses Per Item Averaged Across Providers

| Item | Intervention: before | Intervention: after (short term) (n = 57) | P | Intervention: before | Intervention: after (long term) (n = 27) | P | Control (n = 42) | P† | P‡ |
|---|---|---|---|---|---|---|---|---|---|
| 1. Percent whose office routinely tests visual acuity during a well-child exam for a 4-year-old child | 87.7 | 87.7 | 0.99 | 92.6 | 88.9 | 0.56 | 73.8 | 0.11 | 0.22 |
| 2. Earliest age visual acuity screening is routinely attempted, y | | | <0.0001 | | | 0.19 | | 0.18 | 0.20 |
| 2 | 8.8 | 12.3 | | 14.8 | 18.5 | | 16.7 | | |
| 3 | 31.6 | 77.2 | <0.0001 | 33.3 | 63.0 | 0.01 | 42.9 | 0.29 | 0.14 |
| 4 | 40.3 | 8.8 | | 40.7 | 11.1 | | 33.3 | | |
| >4 | 19.3 | 1.8 | | 11.1 | 7.4 | | 7.1 | | |
| 3. Interpretation of 20/50 acuity in each eye tested separately at age 4 years | | | <0.01 | | | 0.80 | | 0.84 | 0.85 |
| Normal | 8.8 | 5.6 | | 7.4 | 11.1 | | 7.1 | | |
| Abnormal | 68.4 | 90.7 | <0.01 | 70.4 | 70.4 | 1.0000 | 73.8 | 0.66 | 0.79 |
| Inconclusive | 22.8 | 3.7 | | 22.2 | 18.5 | | 19.1 | | |
| 4. Percent indicating each relevant aspect of the definition of amblyopia | | | | | | | | | |
| Underdevelopment of neural pathway | 57.9 | 79.0 | <0.01 | 63.0 | 81.5 | 0.10 | 73.8 | 0.14 | 0.57 |
| Poor vision not due to ocular pathology | 36.8 | 71.9 | <0.0001 | 40.7 | 59.3 | 0.10 | 57.1 | 0.07 | 1.00 |
| Risk factors must be present before neural maturation is complete | 28.1 | 84.2 | <0.0001 | 37.0 | 48.2 | 0.37 | 33.3 | 0.66 | 0.31 |
| Significant refractive error must be corrected before final diagnosis | 61.4 | 84.2 | <0.01 | 55.6 | 63.0 | 0.53 | 47.6 | 0.22 | 0.23 |
| All of the above | 7.0 | 54.4 | <0.0001 | 7.4 | 11.1 | 0.65 | 14.3 | 0.32 | 1.00 |
| 5. Estimated percent of 4-year-olds with a risk factor for amblyopia | | | <0.0001 | | | 0.17 | | <0.001 | 0.42 |
| 1–4% | 66.7 | 3.5 | | 59.3 | 33.3 | | 33.3 | | |
| 5–9% | 26.3 | 31.6 | | 33.3 | 44.4 | | 35.7 | | |
| 10–20% | 5.3 | 61.4 | <0.0001 | 7.4 | 18.5 | 0.05 | 31.0 | <0.01 | 0.58 |
| >20% | 1.8 | 3.5 | | 0.0 | 3.7 | | 0.0 | | |
| 6. Percent indicating each risk factor for amblyopia | | | | | | | | | |
| Strabismus | 86.0 | 98.2 | <0.05 | 92.6 | 96.3 | 0.56 | 90.5 | 0.55 | 0.64 |
| Cataracts | 80.7 | 91.2 | <0.05 | 92.6 | 88.9 | 0.65 | 66.7 | 0.16 | 0.05 |
| Ptosis | 73.7 | 91.2 | <0.01 | 81.5 | 85.2 | 0.65 | 76.2 | 0.82 | 0.54 |
| High refractive error | 96.5 | 94.5 | 0.65 | 100 | 96.3 | 0.32 | 46.6 | <0.0001 | <0.0001 |
| All of the above | 64.9 | 89.5 | <0.001 | 74.1 | 77.8 | 0.71 | 35.7 | <0.01 | 0.001 |
| 7. Estimated percent of healthy 4-year-olds with amblyopia who do not have strabismus, ptosis, or cataracts (correct answer, ≥30%) | | | <0.0001 | | | 0.36 | | 0.15 | 0.74 |
| 0–9% | 29.8 | 5.3 | | 25.9 | 29.6 | | 33.3 | | |
| 10–19% | 12.3 | 5.3 | | 14.8 | 33.3 | | 28.6 | | |
| 20–29% | 10.5 | 3.5 | | 11.1 | 3.7 | | 11.9 | | |
| 30–50% | 15.8 | 79.0 | <0.0001 | 11.1 | 18.5 | 0.21 | 11.9 | <0.05 | 0.59 |
| >50% | 31.6 | 7.0 | | 37.0 | 14.8 | | 14.3 | | |

†Control scores after long delay compared to intervention scores at baseline.

‡Control scores after long delay compared to intervention scores after long delay.

After the long delay, IPs retained their knowledge regarding the age at which routine quantitative screening should start and that risk factors are more prevalent than the condition itself. However, other knowledge improvements observed at the short-term assessment appeared to have dissipated.

Table 3 shows that CPs' responses to individual vision questions are not consistently better or worse than those of IPs at baseline. For example, significantly fewer CPs knew that high percentages of children with amblyopia present without observable signs (P = 0.04) or that high refractive error is a risk factor for amblyopia; consequently, fewer CPs knew all the risk factors for amblyopia (P < 0.0001). On the other hand, more CPs knew that the percentage of children with risk factors is greater than 1% to 4%.

Table 4 shows that summed scores from IPs at baseline and from CPs were not significantly different. For the IPs, scores were significantly higher than baseline after the short-term delay but returned to near baseline levels after the long-term delay. However, despite the decrease in IP scores after the long-term delay, the scores were significantly higher than the CP scores, reflecting the fact that the IP scores did not completely return to baseline levels.

Table 4.

Mean and Median Scores from Intervention and Control Providers

| Statistic | Intervention: before | Intervention: after (short term) (n = 57) | P | Intervention: before | Intervention: after (long term) (n = 27) | P | Control (n = 42) | P* | P† | P‡ |
|---|---|---|---|---|---|---|---|---|---|---|
| Mean | 3.1 | 5.5 | <0.0001 | 3.3 | 3.7 | 0.14 | 3.0 | 0.49 | <0.0001 | 0.03 |
| Median | 3.0 | 6.0 | <0.0001 | 3.0 | 4.0 | 0.48 | 3.0 | 0.50 | <0.0001 | 0.04 |

*Control vs. intervention at baseline.

†Control vs. intervention after short-term delay.

‡Control vs. intervention after long-term delay.

Discussion

This study was an evaluation of a web-based intervention designed to increase primary care providers' knowledge about amblyopia and PVS. The baseline knowledge scores showed that many PCPs lacked knowledge about key issues related to amblyopia and visual acuity testing. We focused on screening recommendations in the 2003 AAP policy statement for eye examination38 (reaffirmed without changes in 2007), as well as research relevant to detection of strabismus and amblyopia in preschool children. We did not include other conditions that might also be detected with universal vision screening of preschoolers. Thus, our vision modules did not provide a comprehensive treatment of pediatric eye disease or assessment.

An important innovation is our use of the Internet to provide a multifaceted quality-improvement strategy. For educational content, we programmed a sequence of screens, with tailored text depending on responses, employing all the features of Internet-based learning that have been linked to better outcomes: interactivity, practice cases, repetition, and feedback.40 Reviews or meta-analyses of randomized controlled trials (RCTs) comparing computer-based to traditional educational methods are hampered by the heterogeneity of the studies but generally conclude that multicomponent computer-based learning is better than or similar to traditional methods.41 For example, a recent meta-analysis concluded that Internet-based learning (covering a broad range of study designs and topics) had large and significant effects on knowledge, skills, and patient outcomes compared with no intervention, and similar outcomes compared with non-Internet education.42 We found Internet delivery to be uniquely suited to our RCT, because the website allowed us to present a standardized educational process across the research sites, which ultimately spanned three states.

Based on our finding that short-term improvements in outcome measures were statistically significant, we conclude that the website was an efficacious method for improving knowledge about “vision.” We opted against formal evaluation of the items we presented, reasoning that review and critique by focus groups of content experts and potential users was adequate and more feasible than protocols involving a large number of physicians and repeated responses.41,43 A key component of our study was the design of an evaluation plan to test the effectiveness and usefulness of the educational materials we developed. Our systematic development of matched behavioral objectives, performance criteria, content, and criterion-based assessment items, together with our findings of short-term improvement in knowledge, indicates that the messages we conveyed were clear and focused on the measures we sought to improve.

We strove to develop a fast-paced, interactive tool that would engage providers in their real-world, practice settings. Most providers completed the modules during normal working hours in about half an hour (median time to complete module 1 before opening CME test was 31 minutes). We found that most physicians who continued past the first few screens completed the first two modules, both of which were immediately available. For the majority of enrolled providers, the web-based cases were sufficient to engage attention and improve knowledge.

Enrolling providers into randomized clinical trials is notoriously difficult, even when traditional interventions are used.43–45 We do not know whether uptake of web-based vision education would be better or worse than that of traditional methods. Since many of the current quality-improvement projects required to maintain board certification for pediatricians are web-based (http://www.aap.org/mocinfo/MOCPart4.html), and since the number of physicians participating in online CME certification increased almost 10-fold between 2002 and 2008,46 increased uptake and completion of modules like ours is likely.

Previous attempts to improve vision screening in the medical home at preschool age, using more traditional approaches, have reported similar challenges regarding recruitment, retention and patient outcomes,47,48 with greater success possibly linked to higher baseline screening rates, provider choice from a menu of topics, and/or repeated, face-to-face visits.6 However, no previous study has followed a randomized design or sought to assess and improve provider knowledge about vision.

Studies of physician behavior show that the impact of knowledge on behavior varies according to attributes of the condition,22 the recommended action,22,49,50 characteristics of the provider51 and other factors.52–54 In a previous publication, we showed that for PVS, knowledge and attitudes are both important, but their combined impact is greater than the sum of each factor alone.7 Thus, attempts to improve knowledge are an important first step in any attempt to improve PVS behaviors. Our overall study design includes additional outcome measures related to provider behavior (claims filed for quantitative vision screening) and patient outcome (diagnosis of strabismus or amblyopia by eye specialists).9 The web-based data will allow us to investigate the effect of baseline and postintervention knowledge, attitudes, and practice environment on these other measures in future publications.

Maintaining Participation

Modules 3 (strabismus) and 4 (autorefraction and stereopsis) were released after a variable delay lasting months (last enrolled providers from Illinois) to years (first enrolled providers from Alabama). We revealed the topic of the maintenance modules after providers had finished the first two modules, but did not report whether the provider was in the intervention or control group. Despite repeated reminders, only 41.5% of the IPs completed all vision modules. Perhaps many IPs felt that they had acquired adequate knowledge about vision to provide good primary care to their preschool patients and that further knowledge on the same topic was not relevant to their practice. In contrast, 59.2% of control providers continued, perhaps because they learned that subsequent modules addressed a new condition (chlamydia testing in adolescents). This higher participation rate allowed us to get a good response rate for the vision questions we asked at the end of control module 4. Also, it affirmed our decision to present relevant information and to obtain important feedback early in the process, which was informed by others' reported difficulty maintaining participation in web-based educational interventions. Other reports of attrition by physicians enrolled in similar studies with multiple modules released over time are limited, but generally report similar problems with dropout.45,55

Sustaining Improvement

After the long-term delay, IP scores were significantly better than CP scores, even though access to the first two modules was blocked after completion and the maintenance modules did not repeat any information relevant to the outcome. Studies with much higher improvement rates generally employ frequent, face-to-face contact with the research team, but suffer from lack of standardization. A mixed intervention, using face-to-face interactions for motivation and technical support and the website to deliver educational content, may be more effective.

Limitations

Our programming allowed us to track usage at the level of the computer, but we cannot be certain that only the enrolled person participated and furnished responses. Postintervention outcome measures were higher in all but two IPs; one of these differed by 5 points (five correct initially versus zero correct after the intervention), and it is possible that later responses were obtained from a different individual. Our randomized design restricted our recruitment activities to higher volume providers who were willing to enroll in a research study designed to improve care for children. We know that recruitment was challenging under these conditions, but we cannot predict how PCPs would have responded had more specific information been provided about the focus of the website, or if access had not depended on participating in the research. We were able to identify only one factor (reporting closer adherence to AAP recommendations for PVS) linked to completion by IPs; however, there may be unknown dependent or independent factors that might be more useful in selecting providers who are likely to become thoroughly engaged in web-based education. To fairly assess long-term retention of knowledge, we closed access to the first two modules on completion, thereby limiting the consolidation of new knowledge usually possible with review. Although we were not able to engage all IPs to complete the final modules by repeating email and other written communications, we did not add interventions such as in-office training that might have proven more successful.

Conclusions

The efficacy of the website, or its capacity to improve knowledge about vision and PVS, has been demonstrated in a group of PCPs who showed significant improvements in knowledge over the short term, and in a subgroup of providers who showed sustained, albeit small, improvement over the long term. The website could be an important first step in future interventions to improve PVS, but different marketing approaches and/or additional types of interventions are necessary to engage more providers.

Acknowledgments

The authors thank Linda Casebeer, PhD, formerly of the University of Alabama at Birmingham Department of CME and currently President of CE Outcomes, LLC, for her help in preparing the Table of Educational Theories.

Footnotes

Supported by Award Number R01EY015893 from the National Eye Institute. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Eye Institute or the National Institutes of Health.

Disclosure: W.L. Marsh-Tootle, None; G. McGwin, None; C.L. Kohler, None; R.E. Kristofco, None; R.V. Datla, None; T.C. Wall, None.

References

- 1. Committee on Practice and Ambulatory Medicine and Bright Futures Steering Committee. Recommendations for Preventive Pediatric Health Care. Pediatrics. 2007;120:1376; reaffirmed without changes in 2011. Available at http://aappolicy.aappublications.org/cgi/content/full/pediatrics;120/6/1376 Accessed on May 25, 2011

- 2. Hambidge SJ, Emsermann CB, Federico S, Steiner JF. Disparities in pediatric preventive care in the United States, 1993–2002. Arch Pediatr Adolesc Med. 2007;161:30–36

- 3. Stinnet A, Perkins J, Olson K. Children's Health Under Medicaid: A National Review of Early and Periodic Screening, Diagnosis and Treatment 1997–1998 Update. Washington, DC: National Health Law Program; 2001

- 4. Marsh-Tootle WL, Wall TC, Tootle JS, Person SD, Kristofco RE. Quantitative pediatric vision screening in primary care settings in Alabama. Optom Vis Sci. 2008;85(9):849–856

- 5. Stange KC, Flocke SA, Goodwin MA, Kelly RB, Zyzanski SJ. Direct observation of rates of preventive service delivery in community family practice. Prev Med. 2000;31:167–176

- 6. Shaw JS, Wasserman RC, Barry S, et al. Statewide quality improvement outreach improves preventive services for young children. Pediatrics. 2006;118(4):e1039–1047

- 7. Marsh-Tootle WL, Funkhouser E, Frazier MG, Crenshaw MK, Wall TC. Knowledge, attitudes, environment: vision screening in pediatric settings. Optom Vis Sci. 2010;87(2):104–111

- 8. Kemper AR, Clark SJ. Preschool vision screening in pediatric practices. Clin Pediatr. 2006;45:263–266

- 9. Wall TC, Marsh-Tootle WL, Crenshaw MK, et al. Design of a randomized clinical trial to improve rates of amblyopia detection in preschool aged children in primary care settings. Contemp Clin Trials. 2011;32(2):204–214

- 10. The Multi-ethnic Pediatric Eye Disease Study. Prevalence of amblyopia and strabismus in African American and Hispanic children ages 6 to 72 months. Ophthalmology. 2008;115(7):1229–1236

- 11. Friedman DS, Repka MX, Katz J, et al. Prevalence of amblyopia and strabismus in white and African American children aged 6 through 71 months: the Baltimore Pediatric Eye Disease Study. Ophthalmology. 2009;116(11):2128–2134, e1–2

- 12. Kvarnstrom G, Jakobsson P, Lennerstrand G. Visual screening of Swedish children: an ophthalmological evaluation. Acta Ophthalmol Scand. 2001;79(3):240–244

- 13. Vision in Preschoolers (VIP) Study Group. Preschool vision screening tests administered by nurse screeners compared with lay screeners in the Vision in Preschoolers Study. Invest Ophthalmol Vis Sci. 2005;46(8):2639–2648

- 14. Vision in Preschoolers (VIP) Study Group. Does assessing eye alignment along with refractive error or visual acuity increase sensitivity for detection of strabismus in preschool vision screening? Invest Ophthalmol Vis Sci. 2007;48:3115–3125

- 15. Schmidt P, Maguire M, Dobson V, et al. Comparison of preschool vision screening tests as administered by licensed eye care professionals in the Vision in Preschoolers Study. Ophthalmology. 2004;111(4):637–650

- 16. Li T, Shotton K. Conventional occlusion versus pharmacologic penalization for amblyopia. Cochrane Database Syst Rev. 2009;(4):CD006460

- 17. Mangione-Smith R, DeCristofaro AH, Setodji CM, et al. The quality of ambulatory care delivered to children in the United States. N Engl J Med. 2007;357(15):1515–1523

- 18. Chung PJ, Lee TC, Morrison JL, Schuster MA. Preventive care for children in the United States: quality and barriers. Annu Rev Public Health. 2006;27:491–515

- 19. Grol R, Grimshaw J. From best evidence to best practice: effective implementation of change in patients' care. Lancet. 2003;362(11):2407–2412

- 20. Hulscher ME, Wensing M, van Der Weijden T, Grol R. Interventions to implement prevention in primary care. Cochrane Database Syst Rev. 2001;(1):CD000362

- 21. Satterlee WG, Eggers RG, Grimes DA. Effective medical education: insights from the Cochrane Library. Obstet Gynecol Surv. 2008;63(5):329–333

- 22. Forsetlund L, Bjørndal A, Rashidian A, et al. Continuing education meetings and workshops: effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2009;(2):CD003030

- 23. Marinopoulos SS, Dorman T, Ratanawongsa N, et al. Effectiveness of Continuing Medical Education. Evidence Report/Technology Assessment No. 149. (Prepared by the Johns Hopkins Evidence-based Practice Center, under Contract No. 290-02-0018.) AHRQ Publication No. 07-E006. Rockville, MD: Agency for Healthcare Research and Quality; January 2007

- 24. Davis D, Galbraith R. American College of Chest Physicians Health and Science Policy Committee. Continuing medical education effect on practice performance: effectiveness of continuing medical education: American College of Chest Physicians Evidence-Based Educational Guidelines. Chest. 2009;135(suppl 3):42S–48S

- 25. Fishbein M, Bandura A, Triandis HC, et al. Factors influencing behavior and behavior change. Final Report, Theorists Workshop. Bethesda, MD: National Institute of Mental Health; 1992

- 26. Fisher JD, Fisher WA, Shuper PA. The information-motivation-behavioral skills model of HIV preventive behavior. In: DiClemente RJ, Crosby RA, Kegler MC, eds. Emerging Theories in Health Promotion Research. 2nd ed. San Francisco: Jossey Bass; 2009

- 27. Michie S, Johnston M, Abraham C, Lawton R, Parker D, Walker A, and the “Psychological Theory” Group. Making psychological theory useful for implementing evidence based practice: a consensus approach. Qual Saf Health Care. 2005;14(1):26–33

- 28. Straus SE, Tetroe J, Graham I. Defining knowledge translation. CMAJ. 2009;181(3–4):165–168

- 29. Francke AL, Smit MC, de Veer AJ, Mistiaen P. Factors influencing the implementation of clinical guidelines for health care professionals: a systematic meta-review. BMC Med Inform Decis Mak. 2008;12:38

- 30. Ellis P, Robinson P, Ciliska D, et al. Diffusion and Dissemination of Evidence-based Cancer Control Interventions. Evidence Report/Technology Assessment No. 79. Prepared by McMaster University under Contract No. 290-97-0017. AHRQ Publication No. 03-E033. Rockville, MD: Agency for Healthcare Research and Quality; May 2003. http://www.ncbi.nlm.nih.gov/bookshelf/br.fcgi?book=erta79 Accessed August 9, 2011

- 31. Casebeer LL, Strasser SM, Spettell CM, et al. Designing tailored Web-based instruction to improve practicing physicians' preventive practices. J Med Internet Res. 2003;5(3):e20

- 32. Levinson DR. Most Medicaid Children in Nine States Are Not Receiving All Required Preventive Screening Services. Washington, DC: U.S. Department of Health & Human Services, Office of Inspector General, Publication OEI-05-08-00520; May 2010:2,4

- 33. U.S. Department of Health and Human Services Centers for Medicare and Medicaid Services. Medicaid Early and Periodic Screening and Diagnostic Treatment Benefit Overview. December 14, 2005. Available at: http://www.cms.hhs.gov/MedicaidEarlyPeriodicScrn/ Accessed August 9, 2011

- 34. Campbell MJ, Donner A, Klar N. Developments in cluster randomized trials and statistics in medicine. Stat Med. 2007;26(1):2–19

- 35. Moore DE, Jr, Pennington FC. Practice based learning and improvement. J Contin Educ Health Prof. 2003;23(suppl 1):S73–S80

- 36. Kearsley G. (12/20/2010) Exploration in learning and instruction: the theory into practice database. Theory into Practice Database (TIP). Available at http://tip.psychology.org/index.html Accessed on December 20, 2010

- 37. McAlister AL, Perry CL, Parcel GS. How individuals, environments, and health behaviors interact: social cognitive theory. Health Behavior and Health Education: Theory, Research, and Practice. 4th ed. San Francisco, CA: John Wiley & Sons, Inc.; 2008:169–188

- 38. American Academy of Pediatrics, Committee on Practice and Ambulatory Medicine and Section on Ophthalmology. Eye examination in infants, children, and young adults by pediatricians. Pediatrics. 2003;111(4):902–907

- 39. Giordano L, Friedman DS, Repka MX, et al. Prevalence of refractive error among preschool children in an urban population: the Baltimore Pediatric Eye Disease Study. Ophthalmology. 2009;116(4):739–746, e1–4

- 40. Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Instructional design variations in internet-based learning for health professions education: a systematic review and meta-analysis (review). Acad Med. 2010;85(5):909–922

- 41. Lam-Antoniades M, Ratnapalan S, Tait G. Electronic continuing education in the health professions: an update on evidence from RCTs. J Contin Educ Health Prof. 2009;29(1):44–51

- 42. Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Internet-based learning in the health professions: a meta-analysis. JAMA. 2008;300(10):1181–1196

- 43. Sanddal ND, Sanddal TL, Pullum JD, et al. A randomized, prospective multisite comparison of pediatric prehospital training methods. Pediatr Emerg Care. 2004;20(2):94–100

- 44. Hoddinott P, Britten J, Harrild K, Godden DJ. Recruitment issues when primary care population clusters are used in randomised controlled clinical trials: climbing mountains or pushing boulders uphill? Contemp Clin Trials. 2007;28(3):232–241

- 45. Eysenbach G. The law of attrition. J Med Internet Res. 2005;7(1):e11

- 46. Casebeer L, Brown J, Roepke N, et al. Evidence-based choices of physicians: a comparative analysis of physicians participating in Internet CME and non-participants. BMC Med Educ. 2010;10:42

- 47. Clausen MM, Armitage MD, Arnold RW. Overcoming barriers to pediatric visual acuity screening through education plus provision of materials. J AAPOS. 2009;13(2):151–154

- 48. Hered RW, Rothstein M. Preschool vision screening frequency after an office-based training session for primary care staff. Pediatrics. 2003;112:e17–e21

- 49. Frijling BD, Lobo CM, Hulscher ME, et al. Multifaceted support to improve clinical decision making in diabetes care: a randomized controlled trial in general practice. Diabet Med. 2002;19(10):836–842

- 50. Grimshaw JM, Shirran L, Thomas R, et al. Changing provider behavior: an overview of systematic reviews of interventions. Med Care. 2001;39(suppl 2):112–145

- 51. Green LA, Wyszewianski L, Lowery JC, Kowalski CP, Krein SL. An observational study of the effectiveness of practice guideline implementation strategies examined according to physicians' cognitive styles. Implement Sci. 2007;2:41

- 52. Kinzie MB. Instructional design strategies for health behavior change. Patient Educ Couns. 2005;56(1):3–15

- 53. Ceccato N, Ferris LE, Manuel D, Grimshaw JM. Adopting health behavior change theory throughout the clinical practice guideline process. J Contin Educ Health Prof. 2007;27(4):201–207

- 54. Francis JJ, Stockton C, Eccles MP, et al. Evidence-based selection of theories for designing behaviour change interventions: using methods based on theoretical construct domains to understand clinicians' blood transfusion behavior. Br J Health Psychol. 2009;14:625–646

- 55. Wangberg SC, Bergmo TS, Johnsen JA. Adherence in Internet-based interventions. Patient Prefer Adherence. 2008;2:57–65