Abstract

The organization of computations in networks of spiking neurons in the brain is still largely unknown, in particular in view of the inherently stochastic features of their firing activity and the experimentally observed trial-to-trial variability of neural systems in the brain. In principle there exists a powerful computational framework for stochastic computations, probabilistic inference by sampling, which can explain a large number of macroscopic experimental data in neuroscience and cognitive science. But it has turned out to be surprisingly difficult to create a link between these abstract models for stochastic computations and more detailed models of the dynamics of networks of spiking neurons. Here we create such a link and show that under some conditions the stochastic firing activity of networks of spiking neurons can be interpreted as probabilistic inference via Markov chain Monte Carlo (MCMC) sampling. Since common methods for MCMC sampling in distributed systems, such as Gibbs sampling, are inconsistent with the dynamics of spiking neurons, we introduce a different approach based on non-reversible Markov chains that is able to reflect inherent temporal processes of spiking neuronal activity through a suitable choice of random variables. We propose a neural network model and show by a rigorous theoretical analysis that its neural activity implements MCMC sampling of a given distribution, both for the case of discrete and continuous time. This provides a step towards closing the gap between abstract functional models of cortical computation and more detailed models of networks of spiking neurons.

Author Summary

It is well known that neurons communicate with short electric pulses, called action potentials or spikes. But how can spiking networks implement complex computations? Attempts to relate spiking network activity to results of deterministic computation steps, like the output bits of a processor in a digital computer, conflict with findings from cognitive science and neuroscience, the latter indicating that the neural spike output in identical experiments changes from trial to trial, i.e., neurons are “unreliable”. Therefore, it has recently been proposed that neural activity should rather be regarded as samples from an underlying probability distribution over many variables which, e.g., represent a model of the external world incorporating prior knowledge, memories as well as sensory input. This hypothesis assumes that networks of stochastically spiking neurons are able to emulate powerful algorithms for reasoning in the face of uncertainty, i.e., to carry out probabilistic inference. In this work we propose a detailed neural network model that indeed fulfills these computational requirements and we relate the spiking dynamics of the network to concrete probabilistic computations. Our model suggests that neural systems are suitable to carry out probabilistic inference by using stochastic, rather than deterministic, computing elements.

Introduction

Attempts to understand the organization of computations in the brain from the perspective of traditional, mostly deterministic, models of computation, such as attractor neural networks or Turing machines, have run into problems: Experimental data suggests that neurons, synapses, and neural systems are inherently stochastic [1], especially in vivo, and therefore seem less suitable for implementing deterministic computations. This holds for ion channels of neurons [2], synaptic release [3], neural response to stimuli (trial-to-trial variability) [4], [5], and perception [6]. In fact, several experimental studies arrive at the conclusion that external stimuli only modulate the highly stochastic spontaneous firing activity of cortical networks of neurons [7], [8]. Furthermore, traditional models for neural computation have been challenged by the fact that typical sensory data from the environment is often noisy and ambiguous, hence requiring neural systems to take uncertainty about external inputs into account. Therefore many researchers have suggested that information processing in the brain carries out probabilistic, rather than logical, inference for making decisions and choosing actions [9]–[22]. Probabilistic inference emerged in the 1960s [23] as a principled mathematical framework for reasoning in the face of uncertainty with regard to observations, knowledge, and causal relationships, which is characteristic of real-world inference tasks. This framework has become tremendously successful in real-world applications of artificial intelligence and machine learning. A typical computation that needs to be carried out for probabilistic inference on a high-dimensional joint distribution $p(z_1, \ldots, z_l, z_{l+1}, \ldots, z_K)$ is the evaluation of the conditional distribution $p(z_1, \ldots, z_l \mid z_{l+1}, \ldots, z_K)$ (or marginals thereof) over some variables of interest, say $z_1, \ldots, z_l$, given variables $z_{l+1}, \ldots, z_K$. In the following, we will call the set of variables $z_{l+1}, \ldots, z_K$, which we condition on, the observed variables and denote it by $o$.

Numerous studies in different areas of neuroscience and cognitive science have suggested that probabilistic inference could explain a variety of computational processes taking place in neural systems (see [10], [11]). In models of perception the observed variables $o$ are interpreted as the sensory input to the central nervous system (or its early representation by the firing response of neurons, e.g., in the LGN in the case of vision), and the variables $z_1, \ldots, z_l$ model the interpretation of the sensory input, e.g., the texture and position of objects in the case of vision, which might be encoded in the response of neurons in various higher cortical areas [15]. Furthermore, in models for motor control the observed variables $o$ often consist not only of sensory and proprioceptive inputs to the brain, but also of specific goals and constraints for a planned movement [24]–[26], whereas inference is carried out over the variables $z_1, \ldots, z_l$ representing a motor plan or motor commands to muscles. Recent publications show that human reasoning and learning can also be cast into the form of probabilistic inference problems [27]–[29]. In these models learning of concepts, ranging from concrete to more abstract ones, is interpreted as inference in lower and successively higher levels of hierarchical probabilistic models, giving a consistent description of inductive learning within and across domains of knowledge.

In spite of this active research on the functional level of neural processing, it turned out to be surprisingly hard to relate the computational machinery required for probabilistic inference to experimental data on neurons, synapses, and neural systems. There are mainly two different approaches for implementing the computational machinery for probabilistic inference in “neural hardware”. The first class of approaches builds on deterministic methods for evaluating exactly or approximately the desired conditional and/or marginal distributions, whereas the second class relies on sampling from the probability distributions in question. Multiple models in the class of deterministic approaches implement algorithms from machine learning called message passing or belief propagation [30]–[33]. By clever reordering of sum and product operators occurring in the evaluation of the desired probabilities, the total number of computation steps is drastically reduced. The results of subcomputations are propagated as "messages" or "beliefs" that are sent to other parts of the computational network. Other deterministic approaches for representing distributions and performing inference are probabilistic population code (PPC) models [34]. Although deterministic approaches provide a theoretically sound hypothesis about how complex computations can possibly be embedded in neural networks and explain aspects of experimental data, it seems difficult (though not impossible) to reconcile them with other aspects of experimental evidence, such as stochasticity of spiking neurons, spontaneous firing, trial-to-trial variability, and perceptual multistability.

Therefore other researchers (e.g., [16]–[18], [35]) have proposed to model computations in neural systems as probabilistic inference based on a different class of algorithms, which requires stochastic, rather than deterministic, computational units. This approach, commonly referred to as sampling, focuses on drawing samples, i.e., concrete values for the random variables that are distributed according to the desired probability distribution. Sampling can naturally capture the effect of apparent stochasticity in neural responses and seems to be furthermore consistent with multiple experimental effects reported in cognitive science literature [17], [18]. On the conceptual side, it has proved to be difficult to implement learning in message passing and PPC network models. In contrast, following the lines of [36], the sampling approach might be well suited to incorporate learning.

Previous network models that implement sampling in neural networks are mostly based on a special sampling algorithm called Gibbs (or general Metropolis-Hastings) sampling [9], [17], [18], [37]. The dynamics that arise from this approach, the so-called Glauber dynamics, are however only superficially similar to spiking neural dynamics observed in experiments, rendering these models rather abstract. Building on and extending previous models, we propose here a family of network models that can be shown to sample exactly from an arbitrary member of a well-defined class of probability distributions via their inherent network dynamics. These dynamics incorporate refractory effects and finite durations of postsynaptic potentials (PSPs), and are therefore more biologically realistic than existing approaches. Formally speaking, our model implements Markov chain Monte Carlo (MCMC) sampling in a spiking neural network. In contrast to prior approaches, however, our model incorporates irreversible dynamics (i.e., no detailed balance), allowing for finite time PSPs and refractory mechanisms. Furthermore, we also present a continuous time version of our network model. The resulting stochastic dynamical system can be shown to sample from the correct distribution. In general, continuous time models arguably provide a higher degree of biological realism than discrete time models.

The paper is structured in the following way. First we provide a brief introduction to MCMC sampling. We then define the neural network model whose neural activity samples from a given class of probability distributions. The model will be first presented in discrete time together with some illustrative simulations. An extension of the model to networks of more detailed spiking neuron models which feature a relative refractory mechanism is presented. Furthermore, it is shown how the neural network model can also be formulated in continuous time. Finally, as a concrete simulation example we present a simple network model for perceptual multistability.

Results

Recapitulation of MCMC sampling

In machine learning, sampling is often considered the “gold standard” of inference methods, since, assuming that we can sample from the distribution in question, and assuming enough computational resources, any inference task can be carried out with arbitrary precision (in contrast to some deterministic approximate inference methods such as variational inference). However, sampling from an arbitrary distribution can be a difficult problem in itself, as, e.g., many distributions can only be evaluated up to a global normalization constant (the partition function). In order to circumvent these problems, elaborate MCMC sampling techniques have been developed in machine learning and statistics [38]. MCMC algorithms are based on the following idea: instead of producing an ad-hoc sample, a process that is heuristically comparable to a global search over the whole state space of the random variables, MCMC methods produce a new sample via a “local search” around a point in the state space that is already (approximately) a sample from the distribution.

More formally, a Markov chain $M$ (in discrete time) is defined by a set $S$ of states (we consider for discrete time only the case where $S$ has a finite size, denoted by $|S|$) together with a transition operator $T$. The operator $T$ is a conditional probability distribution $T(s \mid s')$ over the next state $s$ given a preceding state $s'$. The Markov chain $M$ is started in some initial state $s(0)$, and moves through a trajectory of states $s(t)$ via iterated application of the stochastic transition operator $T$. More precisely, if $s(t-1)$ is the state at time $t-1$, then the next state $s(t)$ is drawn from the conditional probability distribution $T(s \mid s(t-1))$. An important theorem from probability theory (see, e.g., p. 232 in [39]) states that if $M$ is irreducible (i.e., any state in $S$ can be reached from any other state in $S$ in finitely many steps with probability $> 0$) and aperiodic (i.e., its state transitions cannot be trapped in deterministic cycles), then the probability $p(s(t) = s \mid s(0))$ converges for $t \to \infty$ to a probability $p(s)$ that does not depend on the initial state $s(0)$. This state distribution $p$ is called the invariant distribution of $M$. The irreducibility of $M$ implies that it is the only distribution over the states $S$ that is invariant under its transition operator $T$, i.e.

$$p(s) = \sum_{s' \in S} T(s \mid s')\, p(s') \tag{1}$$

Thus, in order to carry out probabilistic inference for a given distribution $p$, it suffices to construct an irreducible and aperiodic Markov chain $M$ that leaves $p$ invariant, i.e., satisfies equation (1). Then one can answer numerous probabilistic inference questions regarding $p$ without any numerical computations of probabilities. Rather, one plugs in the observed values for some of the random variables (RVs) and simply collects samples from the conditional distribution over the other RVs of interest when the Markov chain approaches its invariant distribution.
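The convergence statement can be illustrated numerically. The following is a minimal sketch on a hypothetical three-state chain (the matrix entries are arbitrary illustrative values, not taken from this paper): it applies the transition operator repeatedly to two different initial distributions and checks that both end up at the same distribution, which is then left unchanged by one further application of the operator, as required by equation (1).

```python
# Hypothetical 3-state chain (illustrative values only).
# T[s][s_prev] plays the role of T(s | s_prev); every column sums to 1.
# All entries are positive, so the chain is irreducible and aperiodic.
T = [
    [0.5, 0.2, 0.3],
    [0.3, 0.5, 0.1],
    [0.2, 0.3, 0.6],
]

def step(T, p):
    """Apply the operator once: p_new(s) = sum over s' of T(s | s') p(s')."""
    n = len(p)
    return [sum(T[s][sp] * p[sp] for sp in range(n)) for s in range(n)]

def run_chain(T, p0, n_steps=200):
    """Propagate an initial state distribution through n_steps transitions."""
    p = list(p0)
    for _ in range(n_steps):
        p = step(T, p)
    return p

# Two different deterministic initial states.
p_a = run_chain(T, [1.0, 0.0, 0.0])
p_b = run_chain(T, [0.0, 0.0, 1.0])

# Largest difference between the two limits, and the residual of equation (1).
start_gap = max(abs(x - y) for x, y in zip(p_a, p_b))
invariance_gap = max(abs(x - y) for x, y in zip(step(T, p_a), p_a))
print(p_a, start_gap, invariance_gap)
```

Both gaps should be at the level of floating-point rounding, reflecting that the limit does not depend on the initial state.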

A convenient and popular method for the construction of an operator $T$ for a given distribution $p$ is looking for operators $T$ that satisfy the following detailed balance condition,

$$T(s \mid s')\, p(s') = T(s' \mid s)\, p(s) \tag{2}$$

for all $s, s' \in S$. A Markov chain that satisfies (2) is said to be reversible. In particular, the Gibbs and Metropolis-Hastings algorithms employ reversible Markov chains. A very useful property of (2) is that it implies the invariance property (1), and this is in fact the standard method for proving (1). However, as our approach makes use of irreversible Markov chains as explained below, we will have to prove (1) directly.
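The step from (2) to (1) is short. Writing $T(s \mid s')$ for the transition operator and $p$ for the target distribution, one sums the detailed balance condition over $s'$ and uses the fact that $T(\cdot \mid s)$ is a normalized conditional distribution:

```latex
\sum_{s' \in S} T(s \mid s')\, p(s')
  \;=\; \sum_{s' \in S} T(s' \mid s)\, p(s)
  \;=\; p(s) \sum_{s' \in S} T(s' \mid s)
  \;=\; p(s).
```

The converse does not hold: a chain can leave $p$ invariant without satisfying detailed balance, which is the situation exploited by irreversible chains.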

Neural sampling

Let $p(z_1, \ldots, z_K)$ be some arbitrary joint distribution over $K$ binary variables $z_1, \ldots, z_K$ that only takes on values $> 0$. We will show that under a certain computability assumption on $p$ a network $\mathcal{N}$ consisting of $K$ spiking neurons $\nu_1, \ldots, \nu_K$ can sample from $p$ using its inherent stochastic dynamics. More precisely, we show that the stochastic firing activity of $\mathcal{N}$ can be viewed as a non-reversible Markov chain that samples from the given probability distribution $p$. If a subset $o$ of the variables are observed, modelled as the corresponding neurons being “clamped” to the observed values, the remaining network samples from the conditional distribution of the remaining variables given the observables. Hence, this approach offers a quite natural implementation of probabilistic inference. It is similar to sampling approaches which have already been applied extensively, e.g., in Boltzmann machines, however our model is more biologically realistic as it incorporates aspects of the inherent temporal dynamics and spike-based communication of a network of spiking neurons. We call this approach neural sampling in the remainder of the paper.

In order to enable a network $\mathcal{N}$ of spiking neurons to sample from a distribution $p(z_1, \ldots, z_K)$ of binary variables $z_k$, one needs to specify how an assignment $(z_1, \ldots, z_K) \in \{0,1\}^K$ of values to these binary variables can be represented by the spiking activity of the network $\mathcal{N}$ and vice versa. A spike, or action potential, of a biological neuron $\nu_k$ has a short duration of roughly $1\,\mathrm{ms}$. But the effect of such a spike, both on the neuron $\nu_k$ itself (in the form of refractory processes) and on the membrane potential of other neurons (in the form of postsynaptic potentials) lasts substantially longer, on the order of $5\,\mathrm{ms}$ to $100\,\mathrm{ms}$. In order to capture this temporally extended effect of each spike, we fix some parameter $\tau$ that models the average duration of these temporally extended processes caused by a spike. We say that a binary vector $(z_1, \ldots, z_K)$ is represented by the firing activity of the network $\mathcal{N}$ at time $t$ for $k = 1, \ldots, K$ iff:

$$z_k(t) = 1 \;\Leftrightarrow\; \nu_k \text{ has fired within the time interval } (t - \tau, t] \tag{3}$$

In other words, any spike of neuron $\nu_k$ sets the value of the associated binary variable $z_k$ to 1 for a duration of length $\tau$.

An obvious consequence of this definition is that the binary vector $(z_1, \ldots, z_K)$ that is defined by the activity of $\mathcal{N}$ at time $t$ does not fully capture the internal state of this stochastic system. Rather, one needs to take into account additional non-binary variables $(\zeta_1, \ldots, \zeta_K)$, where the value of $\zeta_k$ at time $t$ specifies when within the time interval $(t - \tau, t]$ the neuron $\nu_k$ has fired (if it has fired within this time interval, thereby causing $z_k = 1$ at time $t$). The neural sampling process has the Markov property only with regard to these more informative auxiliary variables $\zeta_1, \ldots, \zeta_K$. Therefore our analysis of neural sampling will focus on the temporal evolution of these auxiliary variables. We adopt the convention that each spike of neuron $\nu_k$ sets the value of $\zeta_k$ to its maximal value $\tau$, from which it linearly decays back to $0$ during the subsequent time interval of length $\tau$.
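The mapping from spike times to the binary variable and its auxiliary variable can be written down directly. The sketch below is illustrative only: the function names `z` and `zeta`, the spike train, and the value of `tau` are hypothetical choices, and time is treated as a plain floating-point number. It encodes the convention that a spike sets the auxiliary variable to its maximal value, followed by a linear decay, and that the binary variable is 1 exactly while a spike lies within the preceding window of length `tau`.

```python
def zeta(spike_times, t, tau):
    """Auxiliary variable at time t: tau at the most recent spike,
    then linear decay to 0 over an interval of length tau."""
    past = [ts for ts in spike_times if ts <= t]
    if not past:
        return 0.0
    return max(0.0, tau - (t - max(past)))

def z(spike_times, t, tau):
    """Binary variable at time t: 1 iff the neuron fired within (t - tau, t]."""
    return 1 if any(t - tau < ts <= t for ts in spike_times) else 0

# Hypothetical example: one spike at t = 10 with tau = 20 (arbitrary units).
spikes = [10.0]
tau = 20.0
for t in (9.0, 10.0, 20.0, 29.0, 30.0):
    print(t, z(spikes, t, tau), zeta(spikes, t, tau))
```

In this sketch the binary variable is 1 exactly when the auxiliary variable is positive, so the auxiliary variable carries strictly more information: it also records how long ago the spike occurred.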

For the construction of the sampling network $\mathcal{N}$, we assume that the membrane potential $u_k(t)$ of neuron $\nu_k$ at time $t$ equals the log-odds of the corresponding variable $z_k$ to be active, and refer to this property as neural computability condition:

$$u_k(t) = \log \frac{p(z_k = 1 \mid z_{\backslash k})}{p(z_k = 0 \mid z_{\backslash k})} \tag{4}$$

where we write $z_k$ for $z_k(t)$ and $z_{\backslash k}$ for the current values $z_i(t)$ of all other variables $z_i$ with $i \neq k$. Under the assumption we make in equation (4), i.e., that the neural membrane potential reflects the log-odds of the corresponding variable $z_k$, it is required that each single neuron in the network can actually compute the right-hand side of equation (4), i.e., that it fulfills the neural computability condition.

A concrete class of probability distributions that we will use as an example in the remainder are Boltzmann distributions:

$$p(z) = \frac{1}{Z} \exp\Big( \sum_{i,j} \tfrac{1}{2} W_{ij} z_i z_j + \sum_i b_i z_i \Big) \tag{5}$$

with arbitrary real valued parameters $b_i, W_{ij}$ which satisfy $W_{ij} = W_{ji}$ and $W_{ii} = 0$ (the constant $Z$ ensures the normalization of $p(z)$). For the Boltzmann distribution, condition (4) is satisfied by neurons $\nu_k$ with the standard membrane potential

$$u_k(t) = b_k + \sum_{i=1}^{K} W_{ki} z_i(t) \tag{6}$$

where $b_k$ is the bias of neuron $\nu_k$ (which regulates its excitability), $W_{ki}$ is the strength of the synaptic connection from neuron $\nu_i$ to $\nu_k$, and $W_{ki} z_i(t)$ approximates the time course of the postsynaptic potential in neuron $\nu_k$ caused by a firing of neuron $\nu_i$ with a constant signal of duration $\tau$ (i.e., a square pulse). As we will describe below, spikes of neuron $\nu_k$ are evoked stochastically depending on the current membrane potential $u_k$ and the auxiliary variable $\zeta_k$.
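That a linear membrane potential reproduces the log-odds of a Boltzmann distribution can be checked by brute force on a small example. The sketch below uses hypothetical parameters (three variables, a symmetric weight matrix with zero diagonal, arbitrary biases) and compares, for every state and every neuron, the log-odds computed from the unnormalized log-probability with the bias plus the weighted sum of the other variables. The normalization constant cancels in the ratio, so it is never computed.

```python
import itertools

# Hypothetical parameters: symmetric weights with zero diagonal, arbitrary biases.
b = [0.5, -1.0, 0.2]
W = [
    [0.0, 1.2, -0.7],
    [1.2, 0.0, 0.4],
    [-0.7, 0.4, 0.0],
]
K = len(b)

def unnorm_log_p(z):
    """log p(z) + log Z for a Boltzmann distribution with parameters W, b."""
    pair = 0.5 * sum(W[i][j] * z[i] * z[j] for i in range(K) for j in range(K))
    return pair + sum(b[i] * z[i] for i in range(K))

def log_odds(k, z):
    """log p(z_k = 1 | rest) - log p(z_k = 0 | rest); Z cancels in the ratio."""
    z_on = list(z)
    z_on[k] = 1
    z_off = list(z)
    z_off[k] = 0
    return unnorm_log_p(z_on) - unnorm_log_p(z_off)

def membrane_potential(k, z):
    """Bias plus weighted sum of the current binary states (W[k][k] = 0)."""
    return b[k] + sum(W[k][i] * z[i] for i in range(K))

# Largest discrepancy over all 2^K states and all K neurons.
max_gap = max(
    abs(log_odds(k, z) - membrane_potential(k, z))
    for z in itertools.product([0, 1], repeat=K)
    for k in range(K)
)
print(max_gap)
```

The agreement relies on the symmetry of the weights: the factor 1/2 in the pairwise term is compensated by each pair appearing twice in the double sum.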

The neural computability condition (4) links classes of probability distributions to neuron and synapse models in a network of spiking neurons. As shown above, Boltzmann distributions satisfy the condition if one considers point neuron models which compute a linear weighted sum of the presynaptic inputs. The class of distributions can be extended to include more complex distributions using a method proposed in [40] which is based on the following idea. Neuron $\nu_k$ representing the variable $z_k$ is not directly influenced by the activities $z_{\backslash k}$ of the presynaptic neurons, but via intermediate nonlinear preprocessing elements. This preprocessing might be implemented by dendrites or other (inter-) neurons and is assumed to compute nonlinear combinations of the presynaptic activities $z_{\backslash k}$ (similar to a kernel). This allows the membrane potential $u_k$, and therefore the log-odds ratio on the right-hand side of (4), to represent a more complex function of the activities $z_{\backslash k}$, giving rise to more complex joint distributions $p(z)$. The concrete implementation of non-trivial directed and undirected graphical models with the help of preprocessing elements in the neural sampling framework is subject of current research. For the examples given in this study, we focus on the standard form of the membrane potential (6) of point neurons. As shown below, these spiking network models can emulate any Boltzmann machine (BM) [36].

A substantial number of preceding studies have demonstrated that BMs are very powerful, and that the application of suitable learning algorithms for setting the weights $W_{ij}$ makes it possible to learn and represent complex sensory processing tasks by such distributions [37], [41]. In applications in statistics and machine learning using such Boltzmann distributions, sampling is typically implemented by Gibbs sampling or more general reversible MCMC methods. However, some neural processes, such as an absolute refractory period or a postsynaptic potential (PSP) of fixed duration, are difficult to model using a reversible Markov chain and are more conveniently modelled using an irreversible one. As we wish to keep the computational power of BMs and at the same time to augment the sampling procedure with aspects of neural dynamics (such as PSPs with fixed durations, refractory mechanisms) to increase biological realism, we focus in the following on irreversible MCMC methods (keeping in mind that this might not be the only possible way to achieve these goals).

Neural sampling in discrete time

Here we describe neural dynamics in discrete time with an absolute refractory period $\tau$. We interpret one step of the Markov chain as a time step $dt$ in biological real time. The dynamics of the variable $\zeta_k$, that describes the time course of the effect of a spike of neuron $\nu_k$, are defined in the following way. $\zeta_k$ is set to the value $\tau$ when neuron $\nu_k$ fires, and decays by $1$ at each subsequent discrete time step. The parameter $\tau$ is chosen to be some integer, so that $\zeta_k$ decays back to $0$ in exactly $\tau$ time steps. The neuron can only spike (with a probability that is a function of its current membrane potential $u_k$) if its variable $\zeta_k \leq 1$. If however, $\zeta_k > 1$, the neuron is considered refractory and it cannot spike, but its $\zeta_k$ is reduced by 1 per time step. To show that these simple dynamics do indeed sample from the given distribution $p(z)$, we proceed in the following way. We define a joint distribution $p(\zeta, z)$ which has the desired marginal distribution $p(z)$. Further we formalize the dynamics informally described above as a transition operator $T$ operating on the state vector $(\zeta, z)$. Finally, in the Methods section, we show that $p(\zeta, z)$ is the unique invariant distribution of this operator $T$, i.e., that the dynamics described by $T$ produce samples $z$ from the desired distribution $p(z)$. We refer to sampling through networks with this stochastic spiking mechanism as neural sampling with absolute refractory period due to the persistent refractory process.

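Given the distribution $p(z)$ that we want to sample from, we define the following joint distribution $p(\zeta, z)$ over the neural variables:

$$p(\zeta, z) := p(z) \prod_{k=1}^{K} p(\zeta_k \mid z_k), \qquad p(\zeta_k \mid z_k) := \begin{cases} \tau^{-1} & \text{for } z_k = 1 \wedge \zeta_k > 0 \\ 1 & \text{for } z_k = 0 \wedge \zeta_k = 0 \\ 0 & \text{otherwise.} \end{cases} \tag{7}$$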

This definition of $p(\zeta_k \mid z_k)$ simply expresses that if $z_k = 1$, then the auxiliary variable $\zeta_k$ can assume any value in $\{1, 2, \ldots, \tau\}$ with equal probability. On the other hand, $\zeta_k$ necessarily assumes the value $0$ if $z_k = 0$ (i.e., when the neuron is in its resting state).

The state transition operator  can be defined in a transparent manner as a composition of

can be defined in a transparent manner as a composition of  transition operators,

transition operators,  , where

, where  only updates the variables

only updates the variables  and

and  of neuron

of neuron  , i.e., the neurons are updated sequentially in the same order (this severe restriction will become obsolete in the case of continuous time discussed below). We define the composition as

, i.e., the neurons are updated sequentially in the same order (this severe restriction will become obsolete in the case of continuous time discussed below). We define the composition as  , i.e.,

, i.e.,  is applied prior to

is applied prior to  . The new values of

. The new values of  and

and  only depend on the previous value

only depend on the previous value  and on the current membrane potential

and on the current membrane potential  . The interesting dynamics take place in the variable

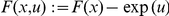

. The interesting dynamics take place in the variable  . They are illustrated in Figure 1 where the arrows represent transition probabilities greater than 0.

. They are illustrated in Figure 1 where the arrows represent transition probabilities greater than 0.

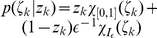

Figure 1. Neuron model with absolute refractory mechanism.

The figure shows a schematic of the transition operator $T^k$ for the internal state variable $\zeta_k$ of a spiking neuron $\nu_k$ with an absolute refractory period. The neuron can fire in the resting state $\zeta_k = 0$ and in the last refractory state $\zeta_k = 1$.

If the neuron $\nu_k$ is not refractory, i.e., $\zeta_k \le 1$, it can spike (i.e., a transition from $\zeta_k \le 1$ to $\zeta_k = \tau$) with probability

$$T^k(\zeta_k = \tau \mid \zeta_k', z_{\setminus k}) = \sigma(u_k - \log \tau) \quad \text{for } \zeta_k' \le 1, \tag{8}$$

where $\sigma(x) = (1 + e^{-x})^{-1}$ is the standard sigmoidal activation function and $\log$ denotes the natural logarithm. The term $u_k$ is the current membrane potential, which depends on the current values of the variables $z_i$ for $i \ne k$. The term $\log \tau$ in (8) reflects the granularity of a chosen discrete time scale. If it is very fine (say one step equals one microsecond), then $\tau$ is large, and the firing probability at each specific discrete time step is therefore reduced. If the neuron in a state with $\zeta_k \le 1$ does not spike, $\zeta_k$ relaxes into the resting state $\zeta_k = 0$ corresponding to a non-refractory neuron.

If the neuron is in a refractory state, i.e., $\zeta_k > 1$, its new variable $\zeta_k$ assumes deterministically the next lower value $\zeta_k - 1$, reflecting the inherent temporal process:

$$T^k(\zeta_k = \zeta_k' - 1 \mid \zeta_k', z_{\setminus k}) = 1 \quad \text{for } \zeta_k' > 1. \tag{9}$$

After the transition of the auxiliary variable $\zeta_k$, the binary variable $z_k$ is deterministically set to a consistent state, i.e., $z_k = 1$ if $\zeta_k \ge 1$ and $z_k = 0$ if $\zeta_k = 0$.
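The update rule of equations (8) and (9) can be sketched in a few lines of Python. This is a minimal illustration and not the authors' simulation code. It assumes a Boltzmann target whose membrane potential is $u_k = b_k + \sum_i W_{ki} z_i$ (symmetric `W` with zero diagonal), and the helper names `neural_sampling` and `boltzmann` are hypothetical. The sketch compares the empirical state frequencies of a three-neuron network with the exact distribution obtained by enumeration.

```python
import math
import random
from itertools import product


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def neural_sampling(W, b, tau, n_steps, seed=0):
    """Run the discrete-time sampler; return empirical frequencies of z."""
    rng = random.Random(seed)
    K = len(b)
    zeta = [0] * K  # auxiliary refractory variables, all neurons start at rest
    z = [0] * K     # binary network state
    counts = {}
    for _ in range(n_steps):
        # One time step: neurons are updated one after another in a fixed order.
        for k in range(K):
            if zeta[k] > 1:
                zeta[k] -= 1  # refractory: deterministic countdown, eq. (9)
            else:
                # Assumed Boltzmann membrane potential (not a general rule).
                u = b[k] + sum(W[k][i] * z[i] for i in range(K) if i != k)
                if rng.random() < sigmoid(u - math.log(tau)):
                    zeta[k] = tau  # spike, eq. (8)
                else:
                    zeta[k] = 0    # relax to the resting state
            z[k] = 1 if zeta[k] >= 1 else 0
        state = tuple(z)
        counts[state] = counts.get(state, 0) + 1
    return {s: c / n_steps for s, c in counts.items()}


def boltzmann(W, b):
    """Exact Boltzmann probabilities by enumerating all 2^K states."""
    K = len(b)
    weights = {}
    for s in product((0, 1), repeat=K):
        energy = sum(b[i] * s[i] for i in range(K))
        energy += sum(W[i][j] * s[i] * s[j]
                      for i in range(K) for j in range(i + 1, K))
        weights[s] = math.exp(energy)
    total = sum(weights.values())
    return {s: w / total for s, w in weights.items()}


# Illustrative parameters, chosen arbitrarily for this sketch.
W = [[0.0, 1.0, -1.5], [1.0, 0.0, 0.5], [-1.5, 0.5, 0.0]]
b = [-0.5, 0.2, 0.3]
estimate = neural_sampling(W, b, tau=5, n_steps=200_000, seed=1)
exact = boltzmann(W, b)
worst = max(abs(estimate.get(s, 0.0) - p) for s, p in exact.items())
print(f"largest absolute deviation from the exact distribution: {worst:.4f}")
```

Successive states are strongly correlated, because an active neuron stays active for $\tau$ steps, so the number of effectively independent samples is much smaller than `n_steps`.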

It can be shown that each of these stochastic state transition operators $T^k$ leaves the given distribution $p(\zeta, z)$ invariant, i.e., satisfies equation (1). This implies that any composition or mixture of these operators $T^k$ also leaves $p(\zeta, z)$ invariant, see, e.g., [38]. In particular, the composition $T = T^1 \circ \ldots \circ T^K$ of these operators $T^k$ leaves $p(\zeta, z)$ invariant, which has a quite natural interpretation as firing dynamics of the spiking neural network $\mathcal{N}$: at each discrete time step the variables $\zeta_k, z_k$ are updated for all neurons $\nu_k$, where the update of $\zeta_k, z_k$ takes preceding updates for $\zeta_i, z_i$ with $i > k$ into account. Alternatively, one could also choose at each discrete time step a different order for updates according to [38]. The assumption of a well-regulated updating policy will be overcome in the continuous-time limit, i.e., in the case where the neural dynamics are described as a Markov jump process. In the Methods section we prove the following central theorem:

Theorem 1

$p(\zeta, z)$ is the unique invariant distribution of operator $T$, i.e., $T$ is aperiodic and irreducible and satisfies

$$p(\zeta, z) = \sum_{\zeta', z'} T(\zeta, z \mid \zeta', z') \, p(\zeta', z'). \tag{10}$$

The proof of this Theorem is provided by Lemmata 1-3 in the Methods section. The statement that $T$ (which is composed of the operators $T^k$) is irreducible and aperiodic ensures that $p(\zeta, z)$ is the unique invariant distribution of the Markov chain defined by $T$, i.e., that irrespective of the initial network state the successive application of $T$ explores the whole state space in a non-periodic manner.
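For a very small network the invariance property (10) can be checked exactly rather than by simulation. The following sketch enumerates all states of a two-neuron network, applies the local operators one after the other to the joint distribution (7), and measures how far the result is from the starting distribution. It assumes a Boltzmann target with $u_k = b_k + \sum_i W_{ki} z_i$ and represents a state by the vector $\zeta$ alone, with $z_k = 1$ exactly when $\zeta_k \ge 1$. The function names are hypothetical and the parameters are arbitrary.

```python
import math
from itertools import product


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def active(zeta):
    """Binary state z implied by the auxiliary variables zeta."""
    return [1 if v >= 1 else 0 for v in zeta]


def joint_distribution(W, b, tau):
    """p(zeta, z) of eq. (7), restricted to consistent (zeta, z) pairs."""
    K = len(b)
    weights = {}
    for zeta in product(range(tau + 1), repeat=K):
        z = active(zeta)
        energy = sum(b[i] * z[i] for i in range(K))
        energy += sum(W[i][j] * z[i] * z[j]
                      for i in range(K) for j in range(i + 1, K))
        # Each active neuron contributes a factor 1/tau for its zeta value.
        weights[zeta] = math.exp(energy) * tau ** (-sum(z))
    total = sum(weights.values())
    return {s: w / total for s, w in weights.items()}


def local_operator(k, W, b, tau):
    """T^k as a map: previous state -> list of (next state, probability)."""
    K = len(b)
    table = {}
    for prev in product(range(tau + 1), repeat=K):
        if prev[k] > 1:
            nxt = list(prev)
            nxt[k] -= 1
            table[prev] = [(tuple(nxt), 1.0)]
        else:
            z = active(prev)
            u = b[k] + sum(W[k][i] * z[i] for i in range(K) if i != k)
            p_fire = sigmoid(u - math.log(tau))
            fire, rest = list(prev), list(prev)
            fire[k], rest[k] = tau, 0
            table[prev] = [(tuple(fire), p_fire), (tuple(rest), 1.0 - p_fire)]
    return table


def apply_operator(table, dist):
    """Push a distribution over states through one transition operator."""
    out = {s: 0.0 for s in dist}
    for prev, mass in dist.items():
        for nxt, prob in table[prev]:
            out[nxt] += mass * prob
    return out


W = [[0.0, 0.8], [0.8, 0.0]]
b = [-0.3, 0.4]
tau = 3
p = joint_distribution(W, b, tau)
# T = T^1 o T^2: the operator of the second neuron is applied first.
after = apply_operator(local_operator(0, W, b, tau),
                       apply_operator(local_operator(1, W, b, tau), p))
deviation = max(abs(after[s] - p[s]) for s in p)
print(f"largest change of p(zeta, z) under T: {deviation:.2e}")
```

This only checks invariance. Uniqueness additionally requires irreducibility and aperiodicity, which the sketch does not test.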

This theorem guarantees that after a sufficient “burn-in” time (more precisely in the limit of an infinite “burn-in” time), the dynamics of the network, which are given by the transition operator $T$, produce samples from the distribution $p(\zeta, z)$. As by construction $\sum_{\zeta} p(\zeta, z) = p(z)$, the Markov chain provides samples from the given distribution $p(z)$. Furthermore, the network $\mathcal{N}$ can carry out probabilistic inference for this distribution. For example, $\mathcal{N}$ can be used to sample from the posterior distribution $p(z_1, \ldots, z_l \mid z_{l+1}, \ldots, z_K)$ over $z_1, \ldots, z_l$ given $z_{l+1}, \ldots, z_K$. One just needs to clamp those neurons $\nu_{l+1}, \ldots, \nu_K$ to the corresponding observed values. This could be implemented by injecting a strong positive (negative) current into the units with $z_k = 1$ ($z_k = 0$). Then, as soon as the stochastic dynamics of $\mathcal{N}$ has converged to its invariant distribution, the averaged firing rate of neuron $\nu_1$ is proportional to the following desired marginal probability

$$p(z_1 = 1 \mid z_{l+1}, \ldots, z_K) = \sum_{z_2, \ldots, z_l} p(z_1 = 1, z_2, \ldots, z_l \mid z_{l+1}, \ldots, z_K).$$

In a biological neural system this result of probabilistic inference could for example be read out by an integrator neuron that counts spikes from this neuron $\nu_1$ within a behaviorally relevant time window of a few hundred milliseconds, similarly to the experimentally reported integrator neurons in area LIP of monkey cortex [20], [21]. Another readout neuron that receives spike input from $\nu_2$ could at the same time estimate $p(z_2 = 1 \mid z_{l+1}, \ldots, z_K)$ for another RV $z_2$. But valuable information for probabilistic inference is not only provided by firing rates or spike counts, but also by spike correlations of the neurons $\nu_1, \ldots, \nu_l$ in $\mathcal{N}$. For example, the probability $p(z_1 = 1, z_2 = 1 \mid z_{l+1}, \ldots, z_K)$ can be estimated by a readout neuron that responds to superpositions of EPSPs caused by near-coincident firing of neurons $\nu_1$ and $\nu_2$ within a time interval of length $\tau$. Thus, a large number of different probabilistic inferences can be carried out efficiently in parallel by readout neurons that receive spike input from different subsets of neurons in the network $\mathcal{N}$.
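Inference by clamping and readout by spike counting can be illustrated with a short simulation. In this sketch the clamped neurons are simply held at their observed values, which idealizes the strong current injection mentioned in the text, and the target is assumed to be a Boltzmann distribution with $u_k = b_k + \sum_i W_{ki} z_i$. Since every spike is followed by exactly $\tau$ active time steps, the conditional marginal is estimated as spike count times $\tau$ divided by the number of steps. The names `spike_count_readout` and `exact_conditional`, as well as all parameter values, are hypothetical.

```python
import math
import random
from itertools import product


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def spike_count_readout(W, b, tau, clamped, readout, n_steps, seed=0):
    """Estimate p(z_readout = 1 | clamped values) from the spike count."""
    rng = random.Random(seed)
    K = len(b)
    z = [clamped.get(k, 0) for k in range(K)]
    zeta = [0] * K  # only used for the free (unclamped) neurons
    spikes = 0
    for _ in range(n_steps):
        for k in range(K):
            if k in clamped:
                continue  # observed neurons keep their clamped value
            if zeta[k] > 1:
                zeta[k] -= 1
            else:
                u = b[k] + sum(W[k][i] * z[i] for i in range(K) if i != k)
                fired = rng.random() < sigmoid(u - math.log(tau))
                zeta[k] = tau if fired else 0
                if fired and k == readout:
                    spikes += 1
            z[k] = 1 if zeta[k] >= 1 else 0
    # Firing rate per step times tau equals the fraction of active steps.
    return spikes * tau / n_steps


def exact_conditional(W, b, clamped, readout):
    """Exact p(z_readout = 1 | clamped values) by enumeration."""
    K = len(b)
    numerator = denominator = 0.0
    for s in product((0, 1), repeat=K):
        if any(s[k] != v for k, v in clamped.items()):
            continue
        energy = sum(b[i] * s[i] for i in range(K))
        energy += sum(W[i][j] * s[i] * s[j]
                      for i in range(K) for j in range(i + 1, K))
        weight = math.exp(energy)
        denominator += weight
        if s[readout] == 1:
            numerator += weight
    return numerator / denominator


W = [[0.0, 1.0, -1.5], [1.0, 0.0, 0.5], [-1.5, 0.5, 0.0]]
b = [-0.5, 0.2, 0.3]
observed = {2: 1}  # the third neuron is observed to be active
estimate = spike_count_readout(W, b, tau=5, clamped=observed,
                               readout=0, n_steps=200_000, seed=2)
exact = exact_conditional(W, b, clamped=observed, readout=0)
print(f"spike-count estimate: {estimate:.3f}, exact value: {exact:.3f}")
```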

Variation of the discrete time model with a relative refractory mechanism

For the previously described simple neuron model, the refractory process was assumed to last for $\tau$ time steps, exactly as long as the postsynaptic potentials caused by each spike. In this section we relax this assumption by introducing a more complex and biologically more realistic neuron model, where the duration of the refractory process is decoupled from the duration $\tau$ of a postsynaptic potential. Thus, this model can for example also fire bursts of spikes with an interspike interval shorter than $\tau$. The introduction of this more complex neuron model comes at the price that one can no longer prove that a network of such neurons samples from the desired distribution $p(z)$. Nevertheless, if the sigmoidal activation function $\sigma$ is replaced by a different activation function $f$, one can still prove that the sampling is “locally correct”, as specified in equation (12) below. Furthermore, our computer simulations suggest that also globally the error introduced by the more complex neuron model is not functionally significant, i.e., that statistical dependencies between the RVs $z_k$ are still faithfully captured.

The neuron model with a relative refractory period is defined in the following way. Consider some arbitrary refractory function $g(l)$ with $g(\tau) = 0$, $g(1) = 1$, and $g(l) \ge 0$ for $l = 2, \ldots, \tau - 1$. The idea is that $g(\zeta_k)$ models the readiness of the neuron to fire in its state $\zeta_k$. This readiness has value $0$ when the neuron has fired at the preceding time step (i.e., $\zeta_k = \tau$), and assumes the resting state $1$ when $\zeta_k$ has dropped to $1$. In between, the readiness may take on any non-negative value according to the function $g$. The function $g$ does not need to be monotonic, allowing for example that it increases to high values in between, yielding a preferred interspike interval of an oscillatory neuron. The firing probability of neuron $\nu_k$ in state $\zeta_k$ is given by $g(\zeta_k) \cdot f(u_k)$, where $f(u_k)$ is an appropriate function of the membrane potential as described below. Thus this function $g$ is closely related to the function $\eta$ (called afterpotential) in the spike response model [5] as well as to the self-excitation kernel in Generalized Linear Models [42]. In general, different neurons in the network may have different refractory profiles, which can be modeled by a different refractory function for each neuron $\nu_k$. However, for the sake of notational simplicity we assume a single refractory function in the following.

In the presence of this refractory function  one needs to replace the sigmoidal activation function

one needs to replace the sigmoidal activation function  by a suitable function

by a suitable function  that satisfies the condition

that satisfies the condition

| (11) |

for all real numbers  . This equation can be derived (see Methods section Lemma 0) if one requires each neuron

. This equation can be derived (see Methods section Lemma 0) if one requires each neuron  to represent the correct distribution

to represent the correct distribution  over

over  conditioned the variables

conditioned the variables  . One can show that, for any

. One can show that, for any  as above, there always exists a continuous, monotonic function

as above, there always exists a continuous, monotonic function  which satisfies this equation (see Lemma 0 in Methods). Unfortunately (11) cannot be solved analytically for

which satisfies this equation (see Lemma 0 in Methods). Unfortunately (11) cannot be solved analytically for  in general. Hence, for simulations we approximate the function

in general. Hence, for simulations we approximate the function  for a given

for a given  by numerically solving (11) on a grid and interpolating between the grid points with a constant function. Examples for several functions

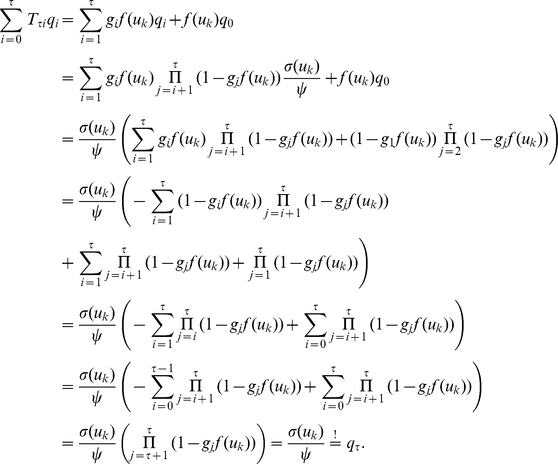

by numerically solving (11) on a grid and interpolating between the grid points with a constant function. Examples for several functions  and the associated

and the associated  are shown in Figure 2B and Figure 2C respectively. Furthermore, spike trains emitted by single neurons with these refractory functions

are shown in Figure 2B and Figure 2C respectively. Furthermore, spike trains emitted by single neurons with these refractory functions  and the corresponding functions

and the corresponding functions  are shown in Figure 2D for the case of piecewise constant membrane potentials. This figure indicates, that functions

are shown in Figure 2D for the case of piecewise constant membrane potentials. This figure indicates, that functions  that define a shorter refractory effect lead to higher firing rates and more irregular firing. It is worth noticing that the standard activation function

that define a shorter refractory effect lead to higher firing rates and more irregular firing. It is worth noticing that the standard activation function  is the solution of equation (11) for the absolute refractory function, i.e., for

is the solution of equation (11) for the absolute refractory function, i.e., for  and

and  for

for  .

.
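Condition (11) can be solved numerically for a single value of $u$ by bisection, because its right-hand side grows monotonically with $f$. The sketch below is one possible way to do this and is not the grid-based procedure used for the simulations in the paper. It assumes that the right-hand side of (11) is $f \sum_{\eta=1}^{\tau} \prod_{\zeta=\eta+1}^{\tau} (1 - g(\zeta) f) / \prod_{\zeta=1}^{\tau} (1 - g(\zeta) f)$ and that $g(1) = 1$, which is our reading of the model. The names `rhs_eq11` and `solve_f` are hypothetical, and `g` is a list indexed by $\zeta = 0, \ldots, \tau$ whose entry 0 is not used here.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def rhs_eq11(f, g, tau):
    """Right-hand side of condition (11) for a trial firing level f."""
    factor = [1.0 - g[zeta] * f for zeta in range(tau + 1)]  # factor[0] unused
    denominator = math.prod(factor[1:])
    if denominator <= 0.0:
        return math.inf  # f is at or beyond the admissible range
    numerator = sum(math.prod(factor[eta + 1:]) for eta in range(1, tau + 1))
    return f * numerator / denominator


def solve_f(u, g, tau, n_iter=200):
    """Find f(u) with rhs_eq11(f) = exp(u) by bisection."""
    lo, hi = 0.0, 1.0 / max(g[1:])  # keeps every g(zeta) * f below 1
    target = math.exp(u)
    for _ in range(n_iter):
        mid = 0.5 * (lo + hi)
        if rhs_eq11(mid, g, tau) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


tau = 5
g_absolute = [1.0, 1.0, 0.0, 0.0, 0.0, 0.0]   # absolute refractory period
g_ramp = [1.0, 1.0, 0.75, 0.5, 0.25, 0.0]     # illustrative relative profile
for u in (-2.0, 0.0, 2.0):
    f_abs = solve_f(u, g_absolute, tau)
    f_rel = solve_f(u, g_ramp, tau)
    print(f"u={u:+.1f}  absolute: {f_abs:.4f}  "
          f"sigmoid(u - log tau): {sigmoid(u - math.log(tau)):.4f}  "
          f"ramp: {f_rel:.4f}")
```

For the absolute refractory profile the solver should reproduce $\sigma(u - \log \tau)$, which gives a simple sanity check of the implementation.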

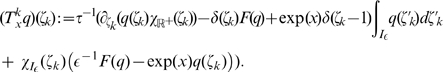

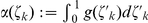

Figure 2. Neuron model with relative refractory mechanism.

The figure shows the transition operator $T^k$, refractory functions $g$ and activation functions $f$ for the neuron model with relative refractory mechanism. (A) Transition probabilities of the internal variable $\zeta_k$ given by $T^k$. (B) Three examples of possible refractory functions $g$. They assume value $0$ when the neuron cannot spike, and return to value $1$ (full readiness to fire again) with different time courses. The value of $g$ at intermediate time points regulates the current probability of firing of neuron $\nu_k$ (see A). The x-axis is equivalent to the number of time steps since last spike (running from 0 to $\tau$ from left to right). (C) Associated activation functions $f$ according to (11). (D) Spike trains produced by the resulting three different neuron models with (hypothetical) membrane potentials that jump at time […] from a constant low value to a constant high value. Black horizontal bars indicate spikes, and the active states $z_k = 1$ are indicated by gray shaded areas of duration $\tau$ after each spike. It can be seen from this example that different refractory mechanisms give rise to different spiking dynamics.

The transition operator $T^k$ is defined for this model in a very similar way as before. However, for $\zeta_k > 1$, when the variable $\zeta_k$ was deterministically reduced by $1$ in the simpler model (yielding $\zeta_k - 1$), this reduction occurs now only with probability $1 - g(\zeta_k) f(u_k)$. With probability $g(\zeta_k) f(u_k)$ the operator $T^k$ sets $\zeta_k = \tau$, modeling the firing of another spike of neuron $\nu_k$ at this time point. The neural computability condition (4) remains unchanged, e.g., $u_k = b_k + \sum_{i} W_{ki} z_i$ for a Boltzmann distribution. A schema of the stochastic dynamics of this local state transition operator $T^k$ is shown in Figure 2A.

This transition operator $T^k$ has the following properties. In Lemma 0 in Methods it is proven that the unique invariant distribution of $T^k$, denoted as $q_k^*(\zeta_k, z_k \mid z_{\setminus k})$, gives rise to the correct marginal distribution over $z_k$, i.e.,

$$\sum_{\zeta_k} q_k^*(\zeta_k, z_k \mid z_{\setminus k}) = p(z_k \mid z_{\setminus k}).$$

This means that a neuron whose dynamics is described by $T^k$ samples from the correct distribution $p(z_k \mid z_{\setminus k})$ if it receives a static input from the other neurons in the network, i.e., as long as its membrane potential $u_k$ is constant. Hence the “local” computation performed by such a neuron can be considered as correct. If, however, several neurons in the network change their states in a short interval of time, the joint distribution over $z$ is in general not the desired one, i.e., $q^*(z) \ne p(z)$, where $q^*(\zeta, z)$ denotes the invariant distribution of $T$. In the Methods section, we present simulation results that indicate that the error of the approximation to the desired Boltzmann distributions introduced by neural sampling with relative refractory mechanism is rather minute. It is shown that the neural sampling approximation error is orders of magnitude below the one introduced by a fully factorized distribution (which amounts to assuming correct marginal distributions $p(z_k)$ and independent neurons).
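The local correctness of a single neuron with constant membrane potential can be checked numerically. The sketch below builds the Markov chain of the auxiliary variable $\zeta_k$ for a fixed firing level $f$, iterates it to its stationary distribution, and compares the resulting odds of the active state with the right-hand side of condition (11). Whenever the two agree, choosing $f(u)$ as a solution of (11) yields odds $\exp(u)$, i.e., an active probability of $\sigma(u)$. The sketch assumes that the neuron fires from state $\zeta_k$ with probability $g(\zeta_k) f$, that $g(0) = g(1) = 1$ and $g(\tau) = 0$, and that (11) has the form implemented in `rhs_eq11`. All names and parameter values are hypothetical.

```python
import math


def rhs_eq11(f, g, tau):
    """Right-hand side of condition (11) for a firing level f."""
    factor = [1.0 - g[zeta] * f for zeta in range(tau + 1)]  # factor[0] unused
    numerator = sum(math.prod(factor[eta + 1:]) for eta in range(1, tau + 1))
    return f * numerator / math.prod(factor[1:])


def stationary_odds(f, g, tau, n_iter=5000):
    """Odds P(z=1) / P(z=0) of one neuron with constant input, by iteration."""
    q = [1.0 / (tau + 1)] * (tau + 1)  # distribution over zeta = 0..tau
    for _ in range(n_iter):
        new = [0.0] * (tau + 1)
        for zeta, mass in enumerate(q):
            p_fire = g[zeta] * f
            new[tau] += mass * p_fire                     # spike: reset to tau
            new[max(zeta - 1, 0)] += mass * (1.0 - p_fire)  # otherwise count down
        q = new
    return sum(q[1:]) / q[0]  # z = 1 exactly when zeta >= 1


tau = 5
g = [1.0, 1.0, 0.6, 0.3, 0.1, 0.0]  # illustrative relative refractory profile
f = 0.2
print(f"stationary odds: {stationary_odds(f, g, tau):.6f}")
print(f"rhs of (11):     {rhs_eq11(f, g, tau):.6f}")
```

This check only concerns one neuron with static input. It says nothing about the joint distribution of a network in which several neurons change state within a short interval.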

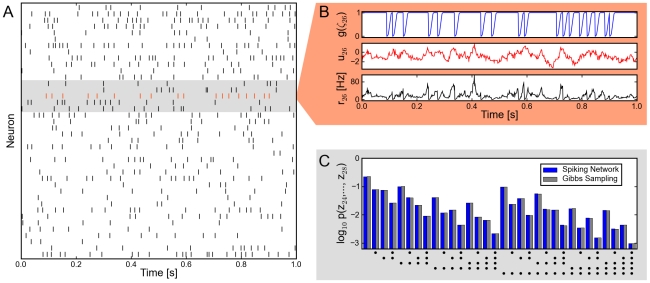

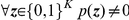

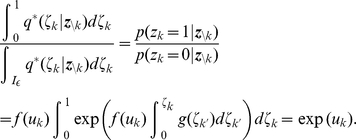

To illustrate the sampling process with the relative refractory mechanism, we examine a network of $K$ neurons. We aim to sample from a Boltzmann distribution (5) with parameters $W_{ij}$, $b_i$ being randomly drawn from normal distributions. For the neuron model, we use the relative refractory mechanism shown in the mid row of Figure 2B. A detailed description of the simulation and the parameters used is given in the Methods section. A spike pattern of the resulting sampling network is shown in Figure 3A. The network features a sparse, irregular spike response with average firing rate of […]. For one neuron $\nu_{26}$, indicated with orange spikes, the internal dynamics are shown in Figure 3B. After each action potential the neuron’s refractory function $g(\zeta_{26})$ drops to zero and reduces the probability of spiking again in a short time interval. The influence of the remaining network $z_{\setminus 26}$ is transmitted to neuron $\nu_{26}$ via PSPs of duration $\tau$ and sums up to the fluctuating membrane potential $u_{26}$. As reflected in the highly variable membrane potential, even this small network exhibits rich interactions. To represent the correct distribution $p(z_{26} \mid z_{\setminus 26})$ over $z_{26}$ conditioned on $z_{\setminus 26}$, the neuron $\nu_{26}$ continuously adapts its instantaneous firing rate. To quantify the precision with which the spiking network draws samples from the target distribution (5), Figure 3C shows the joint distribution of 5 neurons. For comparison we accompany the distribution of sampled network states with the result obtained from the standard Gibbs sampling algorithm (considered as the ground truth). Since the number of possible states $z$ grows exponentially in the number of neurons, we restrict ourselves for visualization purposes to the joint distribution of the gray shaded units and marginalize over the remaining network. The probabilities are estimated from […] samples, i.e., from […] successive states $(\zeta, z)$ of the Markov chain. Stochastic deviations of the estimated probabilities due to the finite number of samples are quite small (typical errors […]) and are comparable to systematic deviations due to the only locally correct computation of neurons with relative refractory mechanism. In the Methods section, we present further simulation results showing that the proposed networks consisting of neurons with relative refractory mechanism approximate the desired target distributions faithfully over a large range of distribution parameters.

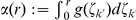

Figure 3. Sampling from a Boltzmann distribution by spiking neurons with relative refractory mechanism.

(A) Spike raster of the network. (B) Traces of internal state variables of a neuron (# 26, indicated by orange spikes in A). The rich interaction of the network gives rise to rapidly changing membrane potentials and instantaneous firing rates. (C) Joint distribution of 5 neurons (gray shaded area in A) obtained by the spiking neural network and Gibbs sampling from the same distribution. Active states $z_k = 1$ are indicated by a black dot, using one row for each neuron $\nu_k$; the columns list all $2^5 = 32$ possible states of these 5 neurons. The tight match between both distributions suggests that the spiking network represents the target probability distribution $p(z)$ with high accuracy.

In order to illustrate that the proposed sampling networks feature biologically quite realistic spiking dynamics, we present in the Methods section several neural firing statistics (e.g., the inter-spike interval histogram) of the network model. In general, the statistics computed from the model match experimentally observed statistics well. The proposed network models are based on the assumption of rectangular-shaped, renewal PSPs. More precisely, we define renewal (or non-additive) PSPs in the following way. Renewal PSPs evoked by a single synapse do not add up but are merely prolonged in their duration (according to equation (6)); renewal PSPs elicited at different synapses nevertheless add up in the normal way. In Methods we investigate the impact of replacing the theoretically ideal rectangular-shaped, renewal PSPs with biologically more realistic alpha-shaped, additive PSPs. Simulation results suggest that the network model with alpha-shaped PSPs does not capture the target distribution as accurately as with the theoretically ideal PSP shapes. Statistical dependencies between the RVs $z_k$ are, however, still approximated reasonably well.

Neural sampling in continuous time

The neural sampling model proposed above was formulated in discrete time of step size $dt$, inspired by the discrete time nature of MCMC techniques in statistics and machine learning as well as to make simulations possible on digital computers. However, models in continuous time (e.g., ordinary differential equations) are arguably more natural and “realistic” descriptions of temporally varying biological processes. This gives rise to the question whether one can find a sensible limit of the discrete time model in the limit $dt \to 0$, yielding a sampling network model in continuous time. Another motivation for considering continuous time models for neural sampling is the fact that many mathematical models for recurrent networks are formulated in continuous time [5], and a comparison to these existing models would be facilitated. Here we propose a stochastically spiking neural network model in continuous time, whose states still represent correct samples from the desired probability distribution $p(z)$ at any time $t$. These types of models are usually referred to as Markov jump processes. It can be shown that discretizing this continuous time model yields the discrete time model defined earlier, which thus can be regarded as a version suitable for simulations on a digital computer.

We define the continuous time model in the following way. Let $t_k^l$, for $l = 1, 2, \ldots$, denote the firing times of neuron $k$. The refractory process of this neuron, in analogy to Figure 1 and equations (8)-(9) for the case of discrete time, is described by the following differential equation for the auxiliary variable $\zeta_k$, which may now assume any nonnegative real number  :

| (12) |

Here $\delta(t - t_k^l)$ denotes Dirac’s Delta centered at the spike time $t_k^l$. This differential equation describes the following simple dynamics. The auxiliary variable $\zeta_k$ decays linearly with time constant  when the neuron is refractory, i.e., $\zeta_k > 0$. Once $\zeta_k$ arrives at its resting state $\zeta_k = 0$ it remains there, corresponding to the neuron being ready to spike again (more precisely, in order to avoid point measures we set it to a random value in  , see Methods). In the resting state, the neuron has the probability density $\frac{1}{\tau} \exp(u_k(t))$ to fire at every time $t$. If it fires at $t_k^l$, this results in setting  , which is formalized in equation (12) by the sum of Dirac Delta’s  . Here the current membrane potential $u_k(t)$ at time $t$ is defined as in the discrete time case, e.g., by $u_k(t) = b_k + \sum_i W_{ki} z_i(t)$ for the case of a Boltzmann distribution (5). The binary variable $z_k(t)$ is defined to be 1 if $\zeta_k(t) > 0$ and 0 if the neuron is in the resting state $\zeta_k(t) = 0$. Biologically, the term $W_{ki} z_i(t)$ can again be interpreted as the value at time $t$ of a rectangular-shaped PSP (with a duration of $\tau$) that neuron $i$ evokes in neuron $k$. As the spikes are discrete events in continuous time, the probability of two or more neurons spiking at the same time is zero. This allows for updating all neurons in parallel using a differential equation.
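The dynamics described above can be explored numerically. The following Python sketch is a minimal event-driven simulation under stated assumptions, not the simulation code used for the results in this article. It tracks the remaining refractory time of each neuron instead of the auxiliary variable itself, assumes that a resting neuron fires with rate $\exp(u_k)/\tau$ where $u_k = b_k + \sum_i W_{ki} z_i$, and uses a hypothetical three-neuron Boltzmann distribution with arbitrarily chosen parameters. The function names `boltzmann` and `simulate` and all numerical values are illustrative.

```python
import itertools
import math
import random


def boltzmann(W, b):
    """Exact target p(z), proportional to exp(sum_{i<j} W_ij z_i z_j + sum_i b_i z_i)."""
    K = len(b)
    weights = {}
    for z in itertools.product((0, 1), repeat=K):
        energy = sum(b[i] * z[i] for i in range(K))
        energy += sum(W[i][j] * z[i] * z[j]
                      for i in range(K) for j in range(i + 1, K))
        weights[z] = math.exp(energy)
    total = sum(weights.values())
    return {z: w / total for z, w in weights.items()}


def simulate(W, b, tau=0.02, t_end=400.0, seed=0):
    """Event-driven simulation; returns the fraction of time spent in each state z."""
    rng = random.Random(seed)
    K = len(b)
    remaining = [0.0] * K  # remaining refractory time per neuron; 0.0 means resting
    occupancy = {}
    t = 0.0
    while t < t_end:
        z = tuple(1 if r > 0.0 else 0 for r in remaining)
        # Assumption: resting neurons fire with rate exp(u_k) / tau,
        # refractory neurons cannot fire.
        rates = [
            0.0 if z[k]
            else math.exp(b[k] + sum(W[k][i] * z[i] for i in range(K))) / tau
            for k in range(K)
        ]
        total = sum(rates)
        # Rates are constant between events, so the waiting time is exponential.
        wait_spike = rng.expovariate(total) if total > 0.0 else math.inf
        wait_recover = min((r for r in remaining if r > 0.0), default=math.inf)
        step = min(wait_spike, wait_recover, t_end - t)
        occupancy[z] = occupancy.get(z, 0.0) + step
        t += step
        remaining = [max(r - step, 0.0) for r in remaining]
        if step == wait_spike:
            k = rng.choices(range(K), weights=rates)[0]
            remaining[k] = tau  # spike: z_k = 1 for the next tau seconds
    return {z: duration / t_end for z, duration in occupancy.items()}


if __name__ == "__main__":
    # Hypothetical parameters: symmetric weights with zero diagonal.
    W = [[0.0, 0.8, -0.6], [0.8, 0.0, 0.4], [-0.6, 0.4, 0.0]]
    b = [-0.5, 0.0, -1.0]
    target = boltzmann(W, b)
    estimate = simulate(W, b)
    for z in sorted(target):
        print(z, round(target[z], 3), round(estimate.get(z, 0.0), 3))
```

With symmetric weights and a zero diagonal, the fraction of time spent in each network state should approach the corresponding Boltzmann probability as `t_end` grows; the remaining deviation is sampling noise.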

In analogy to the discrete time case, the neural network in continuous time can be shown to sample from the desired distribution $p(z)$, i.e., $p(z)$ is an invariant distribution of the network dynamics defined above. However, to establish this fact, one has to rely on a different mathematical framework. The probability distribution  of the auxiliary variables $\zeta$ as a function of time $t$, which describes the evolution of the network, obeys a partial differential equation, the so-called Differential-Chapman-Kolmogorov equation (see [43]):

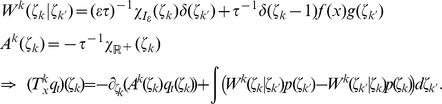

| (13) |

where the operator  , which captures the dynamics of the network, is implicitly defined by the differential equations (12) and the spiking probabilities. This operator  is the continuous time equivalent to the transition operator $T$ in the discrete time case. The operator  consists here of two components. The drift term captures the deterministic decay process of $\zeta_k$, stemming from the term  in equation (12). The jump term describes the non-continuous aspects of the path $\zeta_k(t)$ associated with “jumping” from  to  at the time $t_k^l$ when the neuron fires.

In the Methods section we prove that the resulting time invariant distribution, i.e., the distribution that solves  , now denoted  as it is not a function of time, gives rise to the desired marginal distribution $p(z)$ over $z$:

| (14) |

where  and  if  and  otherwise. $\delta_{xy}$ denotes Kronecker’s Delta with $\delta_{xy} = 1$ if $x = y$ and $\delta_{xy} = 0$ otherwise. Thus, the function  simply reflects the definition that $z_k = 1$ if $\zeta_k > 0$ and 0 otherwise. For an explicit definition of  , a proof of the above statement, and some additional comments see the Methods section.

The neural samplers in discrete and continuous time are closely related. The model in discrete time provides an increasingly more precise description of the inherent spike dynamics when the duration of the discrete time step is reduced, causing an increase of $\tau$ (such that the product of $\tau$ and the step duration is constant) and therefore a reduced firing probability of each neuron at any discrete time step (see the term  in equation (8)). In the limit of the step duration approaching 0, the probability that two or more neurons will fire at the same time approaches 0, and the discrete time sampler becomes equal to the continuous time system defined above, which updates all units in parallel.
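The vanishing probability of coincident spikes can be illustrated with a small calculation. The Python sketch below makes a strong simplifying assumption that is not part of the model: each of `K` neurons fires independently with a fixed rate, so that its firing probability in a step of duration `dt` is `rate * dt`. The function name `coincidence_prob` and the example values are hypothetical.

```python
def coincidence_prob(K, rate, dt):
    """Probability that two or more of K independent neurons fire in one time step.

    Simplifying assumption: every neuron fires with probability rate * dt per step.
    """
    p = rate * dt
    if not 0.0 <= p <= 1.0:
        raise ValueError("rate * dt must lie in [0, 1]")
    none_fire = (1.0 - p) ** K
    exactly_one = K * p * (1.0 - p) ** (K - 1)
    return 1.0 - none_fire - exactly_one


if __name__ == "__main__":
    for dt in (1e-2, 1e-3, 1e-4):
        per_step = coincidence_prob(K=10, rate=20.0, dt=dt)
        # per_step / dt is the expected number of coincidence steps per unit time.
        print(dt, per_step, per_step / dt)
```

Under this assumption the per-step coincidence probability scales with the square of the step duration, so even the expected number of coincidences per unit time shrinks as the step is refined.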

It is also possible to formulate a continuous time version of the neural sampler based on neuron models with relative refractory mechanisms. In the Methods section the resulting continuous time neuron model with a relative refractory mechanism is defined. Theoretical results similar to the discrete time case can be derived for this sampler (see Lemmata 9 and 10 in Methods): It is shown that each neuron “locally” performs the correct computation under the assumption of static input from the remaining neurons. However, one can no longer prove in general that the global network samples from the target distribution $p(z)$.

Demonstration of probabilistic inference with recurrent networks of spiking neurons in an application to perceptual multistability

In the following we present a network model for perceptual multistability based on the neural sampling framework introduced above. This simulation study is aimed at showing that the proposed network can indeed sample from a desired distribution and also perform inference, i.e., sample from the correct corresponding posterior distribution. It is not meant to be a highly realistic or exhaustive model of perceptual multistability nor of biologically plausible learning mechanisms. Such models would naturally require considerably more modelling work.

Perceptual multistability evoked by ambiguous sensory input, such as a 2D drawing (e.g., Necker cube) that allows for different consistent 3D interpretations, has become a frequently studied perceptual phenomenon. The most important finding is that the perceptual system of humans and nonhuman primates does not produce a superposition of different possible percepts of an ambiguous stimulus, but rather switches between different self-consistent global percepts in a spontaneous manner. Binocular rivalry, where different images are presented to the left and right eye, has become a standard experimental paradigm for studying this effect [44]–[47]. A typical pair of stimuli are the two images shown in Figure 4A. Here the percepts of humans and nonhuman primates switch (seemingly stochastically) between the two presented orientations. The authors of [16]–[18] propose that several aspects of experimental data on perceptual multistability can be explained if one assumes that percepts correspond to samples from the conditional distribution over interpretations (e.g., different 3D shapes) given the visual input (e.g., the 2D drawing). Furthermore, the experimentally observed fact that percepts tend to be stable on the time scale of seconds suggests that perception can be interpreted as probabilistic inference that is carried out by MCMC sampling, which produces successively correlated samples. In [18] it is shown that this MCMC interpretation is also able to qualitatively reproduce the experimentally observed distribution of dominance durations, i.e., the distribution of time intervals between perceptual switches. However, lacking an adequate model for sampling by a recurrent network of spiking neurons, these studies could describe this approach only on a rather abstract level, and pointed out the open problem of relating this algorithmic approach to neural processes.
We have demonstrated in a computer simulation that the previously described model for neural sampling could in principle fill this gap, providing a modelling framework that is on the one hand consistent with the dynamics of networks of spiking neurons, and which can on the other hand also be clearly understood from the perspective of probabilistic inference through MCMC sampling.
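Dominance durations, i.e., the time intervals between perceptual switches, can be read off a sequence of percept labels. The Python sketch below is only an illustration of this bookkeeping and not the analysis code of this study. It assumes that the network state has already been decoded into one percept label per time step; the function name `dominance_durations`, the labels, and the step duration `dt` are hypothetical.

```python
from itertools import groupby


def dominance_durations(percepts, dt=1.0):
    """Return (label, duration) for every maximal run of identical percept labels.

    percepts: sequence with one decoded percept label per time step (assumed input).
    dt: duration of one time step, in arbitrary time units.
    """
    return [(label, sum(1 for _ in run) * dt) for label, run in groupby(percepts)]


if __name__ == "__main__":
    # Hypothetical label sequence that switches between two percepts "A" and "B".
    sequence = list("AAABBBBBAAB")
    for label, duration in dominance_durations(sequence, dt=0.5):
        print(label, duration)
```

The first and the last run are cut off by the observation window, so one may prefer to discard them before fitting a distribution to the durations.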

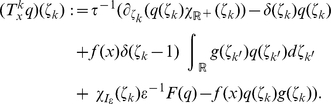

Figure 4. Modeling perceptual multistability as probabilistic inference with neural sampling.

(A) Typical visual stimuli for the left and right eye in binocular rivalry experiments. (B) Tuning curve of a neuron with preferred orientation  . (C) Distribution of dominance durations in the trained network under ambiguous input. The red curve shows the Gamma distribution with maximum likelihood on the data. (D) 2-dimensional projection (via population vector) of the distribution