Abstract

Attention and language are among the most intensively researched abilities in the cognitive neurosciences, but the relation between these abilities has largely been neglected. There is increasing evidence, however, that linguistic processes, such as those underlying the planning of words, cannot proceed without paying some form of attention. Here, we review evidence that word planning requires some but not full attention. The evidence comes from chronometric studies of word planning in picture naming and word reading under divided attention conditions. It is generally assumed that the central attention demands of a process are indexed by the extent to which the process delays the performance of a concurrent unrelated task. The studies measured the speed and accuracy of linguistic and non-linguistic responding as well as eye gaze durations reflecting the allocation of attention. First, empirical evidence indicates that in several task situations, processes up to and including phonological encoding in word planning delay, or are delayed by, the performance of concurrent unrelated non-linguistic tasks. These findings suggest that word planning requires central attention. Second, empirical evidence indicates that conflicts in word planning may be resolved while concurrently performing an unrelated non-linguistic task, making a task decision, or making a go/no-go decision. These findings suggest that word planning does not require full central attention. We outline a computationally implemented theory of attention and word planning, and describe at various points the outcomes of computer simulations that demonstrate the utility of the theory in accounting for the key findings. Finally, we indicate how attention deficits may contribute to impaired language performance, such as in individuals with specific language impairment.

Keywords: attention, dual-task performance, naming, reading, response times, specific language impairment

Introduction

In his classic monograph Die Sprache, Wundt (1900) – the founder of modern scientific psychology and psycholinguistics – criticized the now classic model of normal and impaired word production and comprehension of Wernicke (1874) by arguing that processing words is an attention demanding rather than an automatic process. According to Wundt (1900), a central attention system located in the frontal lobes of the human brain actively controls a lexical network centered around perisylvian brain areas, described by the Wernicke model. More than a century later, attention and language are among the most intensively researched abilities in the cognitive neurosciences, but the relation between these abilities has largely been neglected. Modern computational models of normal and impaired picture naming and word reading build in many respects on Wernicke’s model (e.g., Dell et al., 1997; Coltheart et al., 2001), but they do not address Wundt’s concern of how word processing is controlled by attention. Word processing in these models makes no demands on non-linguistic processing mechanisms or resources and does not depend on top-down attentional control.

There is increasing evidence, however, that most language processes underlying picture naming and word reading cannot proceed without paying some form of attention. It is generally assumed that the central attention demands of a process are indexed by the extent to which the process delays the performance of a concurrent unrelated task (e.g., Johnston et al., 1995). Circumstantial evidence that language performance requires central attention is provided by the effort associated with talking or reading in a foreign language or talking while driving a car in heavy traffic. Experiments on dual-task performance provide evidence that the alleged prototype of an automatic language process, the generation of a phonological code (e.g., Ferreira and Pashler, 2002), in fact requires central attention in both word reading (Reynolds and Besner, 2006) and picture naming (Roelofs, 2008a). The evidence calls for a reexamination of the century-old dogma that most processes in naming and reading are automatic (i.e., require no attentional capacity), which is the aim of the present article.

As Wundt (1900) argued, understanding the relation between attention and language is of great theoretical and practical importance. To the extent that central attention determines language performance, psycholinguistic models that only address language processes are incomplete. Moreover, evidence suggests that inefficient allocation and deficits of attention contribute to language impairments in aphasia and dyslexia (e.g., Murray, 1999; Shaywitz and Shaywitz, 2008). Also, there is evidence that attention deficits play a role in the impaired language performance of individuals with specific language impairment (SLI; e.g., Im-Bolter et al., 2006; Spaulding et al., 2008; Finneran et al., 2009). A better understanding of the relation between attention and language may help improve therapeutic interventions.

Attention comprises several different abilities. A prominent theory proposed by Posner and colleagues (e.g., Posner and Raichle, 1994; Posner and Rothbart, 2007) distinguishes three fundamental aspects, referred to as alerting, orienting, and executive control. Alerting concerns the achievement and maintenance of an alert state. This maintenance is often referred to as sustained attention or vigilance. Orienting concerns the direction of processing toward a location in space by overtly shifting gaze or covertly shifting the locus of processing while keeping the eyes fixed. Executive control concerns the regulative processes that ensure that thoughts and actions are in accordance with goals. This ability is engaged in the selection among competitors, controlled memory retrieval, the coordination of processes, and the allocation of central attentional capacity (e.g., Baddeley, 1996). Executive control also regulates overt and covert orienting. The performance of the central executive depends on the state of vigilance (e.g., Kahneman, 1973). In the present article, we concentrate on the executive control aspect of attention, and briefly address the orienting of attention (i.e., gaze shifting) and aspects of sustained attention.

The remainder of the article is organized as follows. We start by outlining a computationally implemented theory of attention and word planning, which serves as the theoretical framework for the present article. The theory acknowledges many aspects of the work of Wernicke, but also addresses Wundt’s critique by including assumptions on how word planning is controlled. Next, we review empirical results indicating that in several task situations, processes up to and including phonological encoding in word planning delay, or are delayed by, the performance of concurrent unrelated non-linguistic tasks. These findings suggest that word planning requires central attention. Then, we review empirical results indicating that conflicts in word planning may be resolved while concurrently performing an unrelated non-linguistic task, making a task decision, or making a go/no-go decision. These findings suggest that word planning does not require full central attention, contrary to claims in the literature that processes in word planning cannot occur in parallel with processes in non-linguistic tasks if both require central attention (e.g., Ferreira and Pashler, 2002; Dell’Acqua et al., 2007; Ayora et al., 2011). At various points, we describe the outcomes of computer simulations that demonstrate the utility of our theory in accounting for the key empirical findings on word production under divided attention conditions. We end by indicating how attention deficits may contribute to impaired language performance, such as in individuals with SLI.

Outline of a Theory of Attention in Word Planning

Functional aspects

In the present article, attention to word planning is addressed using the theoretical framework of the WEAVER++ model (Roelofs, 1992, 1997, 2003, 2004, 2006, 2007, 2008a,b,c; Levelt et al., 1999; Piai et al., 2011). This model makes a distinction between declarative (i.e., associative memory) and procedural (i.e., rule system) aspects of word planning (cf. Ullman, 2004). Information about words is stored in a large associative network. WEAVER++’s lexical network is accessed by spreading activation while condition–action rules determine what is done with the activated lexical information depending on the goal (e.g., to name a picture or read aloud a word). When a goal is placed in working memory, processing in the system is focused on those rules that include the goal among their conditions. The rules mediate attentional influences by selectively enhancing the activation of target nodes in the network in order to achieve speeded and accurate picture naming and word reading.

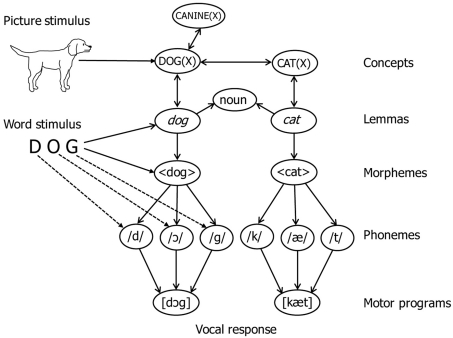

A fragment of the lexical network of WEAVER++ is illustrated in Figure 1. According to the model, the naming of pictures involves the activation of nodes for lexical concepts, lemmas, morphemes, phonemes, and syllable motor programs in associative memory. The nodes are selected by condition–action rules. For example, naming a pictured dog involves the activation and selection of the representation of the concept DOG(X), the lemma of dog specifying that the word is a noun (for languages such as Dutch, lemmas also specify grammatical gender), the morpheme 〈dog〉, the phonemes /d/, /ɔ/, and /g/, and the motor program [dɔg]. Not shown is that lemmas also allow for the specification of morphosyntactic parameters, such as number (singular, plural) for nouns and number, person (first, second, third), and tense (past, present) for verbs, so that condition–action rules can retrieve appropriate inflectional morphemes (e.g., plural or past tense endings). Activation spreads from level to level, whereby each node sends a proportion of its activation to connected nodes. Consequently, network activation induced by perceived pictures decreases with network distance. The activation flow from concepts to phonological forms is limited unless attentional enhancements are involved to boost the activation of target concept nodes.

Figure 1.

Illustration of the lexical network of WEAVER++. Perceived pictures (e.g., of a dog) directly activate concept nodes and perceived words (e.g., DOG) directly activate lemma, morpheme, and phoneme nodes, after which other nodes become activated through spreading activation. The dashed lines indicate grapheme-to-phoneme correspondences.
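The claim that activation decreases with network distance, and that an attentional boost to the target concept raises activation further down the path, can be illustrated with a minimal sketch. The linear update rule, the single five-node path, and all parameter values (`rate`, `decay`, `picture_input`, `boost`) are illustrative assumptions made here; they are not the published WEAVER++ equations or parameter settings.

```python
# Minimal sketch of spreading activation along one path of a lexical network.
# The update rule and all parameter values are illustrative assumptions,
# not the published WEAVER++ equations or parameters.

LEVELS = ["concept", "lemma", "morpheme", "phoneme", "motor_program"]


def spread(steps, rate=0.1, decay=0.2, picture_input=1.0, boost=0.0):
    """Return the activation of each level after `steps` time steps.

    rate          -- proportion of activation a node sends to the next level
    decay         -- proportion of a node's own activation lost per step
    picture_input -- activation the perceived picture feeds into the concept
    boost         -- extra (attentional) input to the target concept node
    """
    act = [0.0] * len(LEVELS)
    for _ in range(steps):
        new = [a * (1.0 - decay) for a in act]  # existing activation decays
        new[0] += picture_input + boost         # picture (plus boost) drives the concept
        for i in range(1, len(LEVELS)):
            new[i] += rate * act[i - 1]         # each node passes on a proportion
        act = new
    return dict(zip(LEVELS, act))


if __name__ == "__main__":
    for label, boost in (("no enhancement", 0.0), ("with enhancement", 1.0)):
        profile = spread(steps=20, boost=boost)
        print(label, {name: round(value, 3) for name, value in profile.items()})
```

Under these assumptions, activation falls off from the concept node to the motor program node, and adding the boost raises the activation of every node on the path, including the most distant one.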

The model assumes that perceived pictures have direct access to concepts [e.g., DOG(X)] and only indirect access to word forms (e.g., 〈dog〉 and /d/, /ɔ/, /g/), whereas perceived words have direct access to word forms and only indirect access to concepts. Consequently, naming pictures requires concept selection, whereas words can be read aloud without concept selection. The latter is achieved by mapping input word forms (e.g., the visual word DOG) directly onto output word forms (e.g., 〈dog〉 and /d/, /ɔ/, /g/), without engaging concepts and lemmas. With such direct form-to-form mapping, activation has to travel a much shorter network distance from input to output than with a mapping via concepts and lemmas. In word reading through the form-to-form route, the activation of target morphemes is enhanced by the attention system. Given the shorter network distance for word reading than picture naming, the attentional enhancements may be less for reading than naming, and successful reading relies much less on the enhancement than does naming.

As already explained, the activation enhancements in WEAVER++ are regulated by a system of condition–action rules. When a goal is placed in working memory, word planning is controlled by those rules that include the goal among their conditions. The activation enhancements are required until appropriate motor programs have been activated sufficiently, that is, above an availability threshold. The central executive determines how strongly and for how long the enhancement occurs. The required duration of the enhancement is assessed by monitoring the progress on word planning (i.e., the updating in working memory of subgoals to retrieve lemmas, morphemes, and so forth).
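The idea that enhancement is needed until the motor program exceeds an availability threshold, and that the shorter form-to-form route depends less on enhancement, can be sketched as follows. This is a toy illustration under stated assumptions: a path of five nodes stands in for picture naming and a path of three nodes for word reading, and the threshold, `rate`, `decay`, and `boost` values are hypothetical rather than taken from WEAVER++.

```python
# Toy sketch: how long an attentional boost must be sustained before the
# last node of a path (the motor program) reaches an availability threshold.
# Path lengths, threshold, and all parameter values are hypothetical.


def steps_to_threshold(path_length, boost, threshold=1.0,
                       rate=0.1, decay=0.2, max_steps=1000):
    """Return the first step at which the final node reaches `threshold`.

    Returns None if the threshold is not reached within `max_steps`.
    """
    act = [0.0] * path_length
    for step in range(1, max_steps + 1):
        new = [a * (1.0 - decay) for a in act]  # existing activation decays
        new[0] += 1.0 + boost                   # stimulus input plus enhancement
        for i in range(1, path_length):
            new[i] += rate * act[i - 1]         # proportion passed to next node
        act = new
        if act[-1] >= threshold:
            return step
    return None


if __name__ == "__main__":
    NAMING_PATH = 5   # concept, lemma, morpheme, phoneme, motor program
    READING_PATH = 3  # morpheme, phoneme, motor program (form-to-form route)
    print("naming, no boost:  ", steps_to_threshold(NAMING_PATH, boost=0.0))
    print("naming, with boost:", steps_to_threshold(NAMING_PATH, boost=3.0))
    print("reading, no boost: ", steps_to_threshold(READING_PATH, boost=0.0))
```

With these particular values, the long path never reaches the threshold without a boost, whereas the short path does. The numbers are chosen only to make the qualitative contrast between naming and reading visible.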

In planning words while simultaneously performing another task, the central executive coordinates the processes involved in such a way as to maintain acceptable levels of speed and accuracy, to minimize resource consumption and crosstalk between tasks, and to satisfy instructions about task priorities (cf. Meyer and Kieras, 1997a). Resources include the buffering of input, throughput, or output representations (e.g., motor programs) and central attentional capacity. The model assumes that attentional capacity is limited (i.e., there is a limit to the top-down activation enhancements), but the limit depends on the effort exerted at any time. The degree of effort depends on the demand of the concurrent processes, which is evaluated during task performance (cf. Kahneman, 1973).

Neural aspects

To assess the neural basis of the word planning process, Indefrey and Levelt (2004) conducted a meta-analysis of 82 neuroimaging studies on word production. The meta-analysis included picture naming (e.g., say “dog” to a picture of a dog), word generation (producing a use for a noun, e.g., say “walk” to the word DOG), word reading (e.g., say “dog” to the word DOG), and pseudoword reading (e.g., say “doz” to DOZ). Pseudowords are letter strings that include only combinations of letters that are permissible in the spelling of a language and that are pronounceable for speakers of the language. According to the meta-analysis, percepts and concepts in picture naming are activated in occipital and inferiotemporal regions of the brain. The middle part of the left middle temporal gyrus seems to be involved in lemma retrieval. Next, activation spreads to Wernicke’s area, where morphemes of the word seem to be retrieved. Activation is then transmitted to Broca’s area for morphological assembly as well as phoneme processing and syllabification (i.e., phonological encoding; see also Sahin et al., 2009, and Ullman, 2004, among others). Next, motor programs are accessed. The sensorimotor areas control articulation. The form-to-form mapping in word reading may be accomplished by activating occipital and inferiotemporal regions (i.e., the occipito-temporal sulcus) for orthographic processing, inferioparietal cortex and the areas of Wernicke and Broca for aspects of form encoding, and motor areas for articulation (cf. Shaywitz and Shaywitz, 2008; Dehaene, 2009).

Neuroimaging studies have shown that especially the anterior cingulate cortex (ACC) and lateral prefrontal cortex (LPFC) are implicated in the executive control aspect of attention to word planning. For example, the ACC and LPFC are more active in word generation (say “walk” to the word DOG) when the attention demand is high than in word reading (say “dog” to DOG) when the demand is much lower (Petersen et al., 1988; Thompson-Schill et al., 1997). The increased activity in the frontal areas disappears when word selection becomes easy after repeated generation of the same word (Petersen et al., 1998). Moreover, activity in the frontal areas is higher in picture naming when there are several good names for a picture, making selection difficult, than when there is only a single appropriate name (Kan and Thompson-Schill, 2004). Also, the frontal areas are more active when retrieval fails and words are on the tip of the tongue than when words are readily available (Maril et al., 2001). Frontal areas are also more active in naming pictures with semantically related words superimposed (e.g., naming a pictured dog combined with the word CAT) than without word distractors (e.g., a pictured dog combined with XXX), as observed by de Zubicaray et al. (2001). Thus, the neuroimaging evidence suggests that medial and lateral prefrontal areas exert control over word planning. Along with the increased frontal activity, there is an elevation of activity in temporal areas for word planning (e.g., de Zubicaray et al., 2001).

Although both the ACC and LPFC are involved in executive control aspects of attention to word planning, these areas seem to play different roles. WEAVER++’s assumption that abstract condition–action rules mediate goal-oriented retrieval and selection processes in prefrontal cortex is supported by evidence from single cell recordings and hemodynamic neuroimaging studies (e.g., Sakai, 2008, for a review). Much evidence suggests that the dorsolateral prefrontal cortex is involved in maintaining goals in working memory (for a review, see Kane and Engle, 2002). Moreover, evidence suggests that the ventrolateral prefrontal cortex plays a role in selection among competing response alternatives (Thompson-Schill et al., 1997), the control of memory retrieval, or both (Badre et al., 2005). Researchers have found no agreement on whether the ACC performs conflict monitoring (e.g., Botvinick et al., 2001) or exerts regulatory influences over word planning processes, as has been assumed for WEAVER++ (Roelofs and Hagoort, 2002; Roelofs, 2003; Roelofs et al., 2006).

Evidence that Word Planning Requires Central Attention

Central attention demands of picture naming

The assumption that word planning requires attentional activation enhancements is not only supported by neuroimaging evidence, but also by evidence from chronometric studies. In a study by Roelofs et al. (2007), participants were shown pictures of objects (e.g., a dog) while hearing a tone or a spoken word presented 600 ms after picture onset. When a spoken word was presented (e.g., desk or bell), participants indicated whether it contained a pre-specified phoneme (e.g., /d/) by pressing a button. When the tone was presented, they indicated whether the picture name contained the phoneme (Experiment 1) or they named the picture (Experiments 2 and 3). Phoneme monitoring latencies for the spoken words were shorter when the picture name contained the pre-specified phoneme (e.g., dog – desk) compared to when it did not (e.g., dog – bell). However, no priming of phoneme monitoring was obtained when the pictures required no response but were only passively viewed (Experiment 4). Thus, passive picture viewing does not lead to significant phonological activation. These results suggest that attentional enhancements are a precondition for obtaining phonological activation from perceived pictures of objects.

In the passive-viewing condition of Roelofs et al. (2007), speakers may have paid some attention to the picture, but apparently not long enough to induce phonological activation. To assess how long attention needs to be sustained to a picture, eye movements and response times to the picture may be measured. Past research showed that while individuals can shift the focus of attention without an eye movement (covert orienting), they cannot move their eyes to one spatial location while paying full attention to another location (i.e., shifts of eye position require shifts of attention). Thus, a gaze shift (overt orienting) indexes a shift of attention (Wright and Ward, 2008). In a review of the literature on gazes and language performance, Griffin (2004) stated that “the production processes that appear to be resource demanding, based on dual-task performance, pupil dilation, and other measures of mental effort, are the same ones that are reflected in the duration of name-related gazes” (p. 222).

Research on spoken word planning has shown that speakers tend to gaze at words and pictures until the completion of phonological encoding (e.g., Meyer et al., 1998; Griffin, 2001; Korvorst et al., 2006). For example, when speakers are asked to name two spatially separated pictures (e.g., one on the left side of a computer screen and the other on the right side), they look longer at first-to-be-named pictures with disyllabic names (e.g., baby) than with monosyllabic names (e.g., dog) even when the picture recognition times are the same (Meyer et al., 2003). The effect of the phonological length suggests that the shift of gaze from one picture to the other is initiated only after the phonological form of the name for the picture has been encoded and the corresponding articulatory program is available. The executive control system appears to instruct the orienting system to shift gaze depending on the completion of phonological encoding. By making gaze shifts dependent on phonological encoding, resource consumption may be diminished. Articulating a word such as “dog” can easily take half a second or more. If gaze shifts are initiated as soon as the first picture is identified, the planning of the name for the second picture may be completed well before articulation of the name for the first picture has been finished. Consequently, the motor program of the second vocal response needs to be buffered for a relatively long time. By starting perception of the second picture only after the planning of the first picture name is completed sufficiently, the use of buffering resources can be limited. Another reason why gaze shifts are made dependent on the completion of phonological encoding is to reduce or prevent interference from the other picture name, which promotes the speed and accuracy of naming performance.

Malpass and Meyer (2010) provided evidence that the name of the second picture may interfere with planning the name of the first picture. The ease of naming the second picture was manipulated. Easy and difficult second pictures were matched for difficulty of picture recognition, but they differed in average naming latencies and error rates. Participants gazed longer at the first picture when the name of the second picture was easy than when it was more difficult to retrieve. This suggests that planning the name of the first picture suffers more interference from the easy than the difficult second pictures. However, when the processing of the first picture was made more difficult by presenting it upside down, no effect of second picture difficulty on the gaze duration for the first picture was found. These results suggest that participants can retrieve the names of foveated and parafoveal pictures in parallel, but only when the processing of the foveated picture does not demand too much attention.

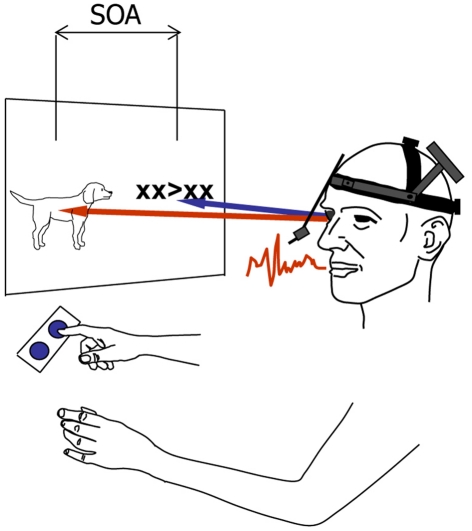

The avoidance of response buffering and the prevention of interference from the second response are not the only reasons for a phonology-dependent gaze shift. Gaze shifts still depend on phonological encoding when the second naming response is replaced by a manual response to a left- or right-pointing arrow, so that there can be no interference from a second naming response (Roelofs, 2008a). Using the so-called psychological refractory period (PRP) procedure (cf. Pashler, 1998), speakers were presented with pictures displayed on the left side of a computer screen and left- or right-pointing arrows displayed on the right side of the screen, as illustrated in Figure 2. The arrows 〈 and 〉 were flanked by two Xs on each side to prevent them from being identified through parafoveal vision, as was possible for the second pictures in the study of Malpass and Meyer (2010). The picture and the arrow were presented simultaneously on the screen (SOA = 0 ms) or the arrow was presented 300 or 1000 ms after picture onset. The participants’ tasks were to name the picture (Task 1) and to indicate the direction in which the arrow was pointing by pressing a left or right button (Task 2). Eye movements were recorded to determine the onset of the shift of gaze between the picture and the arrow. Phonological encoding was manipulated by having the speakers name the pictures in blocks of trials where the picture names shared the onset phoneme (e.g., dog, doll, desk), the homogeneous condition, or in blocks of trials where the picture names did not share the onset phoneme (e.g., dog, bell, pin), the heterogeneous condition. Earlier research has shown that picture naming response times (RTs) are shorter in the homogeneous than in the heterogeneous condition.

Figure 2.

Schematic illustration of the experimental set-up used in the eye tracking study of Roelofs (2008a). On each trial, participants named a picture and shifted their gaze to a left- or right-pointing arrow to manually indicate its direction by pressing a left or right button. Picture and arrow were presented at stimulus onset asynchronies (SOAs) of 0, 300, or 1000 ms. The latencies of vocal responding, gaze shifting, and manual responding were recorded.

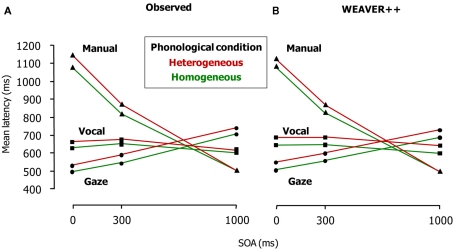

Figure 3A shows the patterns of results. Phonological overlap in a block of trials reduced picture naming and gaze shifting latencies at all SOAs. Gaze shifts were dependent on phonological encoding even when they were postponed at the non-zero SOAs. Manual responses to the arrows were delayed and reflected the phonological effect at the short SOAs (i.e., 0 and 300 ms) but not at the long one (i.e., SOA = 1000 ms). These results suggest that gaze shifts still depend on phonological encoding when speakers name a picture and manually respond to an arrow. This finding suggests that the avoidance of response buffering and the prevention of interference from the second response are not the only reasons for a phonology-dependent gaze shift. Instead, some aspect of spoken word planning itself appears to be the critical factor. If attentional enhancements are required until the word has been planned far enough, this would explain why attention, indexed by eye gazes, is sustained to word planning until the phonological form is planned. This should hold regardless of the need for response buffering and the prevention of interference, as the eye tracking results indicate. Figure 3B shows the results of computer simulations of the experiment using WEAVER++, which we explain below.

Figure 3.

Latencies of vocal responding (Task 1), gaze shifting, and manual responding (Task 2) in the dual-task study of Roelofs (2008a; Experiment 1). (A) Shows the real latency data of the eye tracking experiment and (B) shows the results of the computer simulations. SOA = stimulus onset asynchrony.

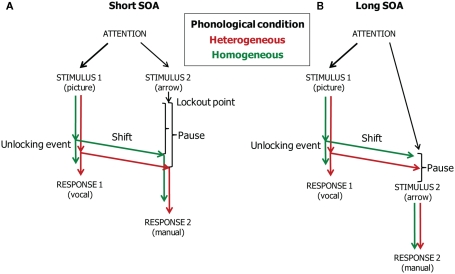

To account for these results and related ones, the model assumes that participants decide which processes may run in parallel in Task 1 and Task 2 (i.e., how attention is divided). To this end, they set a point at which Task 2 processing is strategically suspended, called the “Task 2 lockout point” by Meyer and Kieras (1997b). Moreover, they set a criterion for when the shift of attention between Task 1 and Task 2 should occur. Reaching the shift criterion is called the occurrence of the “Task 1 unlocking event,” which unlocks Task 2. The lockout point and shift criterion serve to maintain acceptable levels of speed and accuracy, to minimize resource consumption (including attentional capacity) and crosstalk between tasks, and to satisfy instructions about task priorities (i.e., the common instruction is that the Task 1 response should precede the Task 2 response). Presumably, the positions of the lockout point and shift criterion are determined on the basis of the initial trials of an experiment, when participants become familiar with the experimental situation, and the lockout point and criterion stay more or less constant throughout the experiment. At the beginning of each trial, the attention system enables both tasks, engages on Task 1 and temporarily suspends Task 2, instructs the ocular motor system to direct gaze toward the Task 1 stimulus, and maintains engagement on Task 1 and monitors performance until the task process reaches the task-shift criterion. Moreover, in homogeneous sets, the phonological encoder is instructed to prepare the phoneme that is shared by the responses in a set. Also during the planning of the target word, a saccade to the arrow is prepared. When the shift criterion is reached during the course of Task 1, attention disengages from Task 1 and shifts to Task 2, which is then resumed, directly followed by a signal to the saccadic control system to execute the prepared saccade to the Task 2 stimulus.

Figure 4 illustrates the timing of vocal responding, attention and gaze shifting, and manual responding in the model when the SOAs are short (i.e., 0 and 300 ms, Panel A) and long (i.e., 1000 ms, Panel B). The unlocking event corresponds to the completion of phonological encoding. At both short and long SOAs, the word planning reaches the unlocking event earlier in the homogeneous than the heterogeneous condition. This phonological facilitation effect is reflected in the naming and gaze shift latencies. Moreover, at short SOAs, the facilitation is reflected in the manual response latencies if there is a pause after the Task 2 lockout point. At the short SOAs, the pause is simply the waiting period until the eyes fixate the arrow so that it can be processed. Because gaze shifted earlier in the homogeneous than the heterogeneous condition, processing of the arrow (Task 2 stimulus) also started earlier in the homogeneous than the heterogeneous condition. Consequently, the phonological facilitation effect is reflected in the manual response RTs. However, at the long SOA, the phonological effect is reflected in the naming and gaze shift latencies, but not in the manual response latencies. This is because the phonological effect is absorbed when waiting for the arrow presentation. That is, at the long SOA, gaze has already shifted to the position on the screen where the arrow will later appear. If the arrow appears after the gaze has shifted in the heterogeneous condition, the processing of the arrow will start at the same moment in time for the homogeneous and heterogeneous conditions. Consequently, the phonological facilitation of vocal response planning will no longer be reflected in the manual response RTs. Figure 3B shows the WEAVER++ simulation results. A comparison with Figure 3A shows that the fit between model and data is good. The computer simulations demonstrate the utility of our theoretical account.

Figure 4.

Theoretical account of the time course of vocal responding (Task 1), attention and gaze shifting, and manual responding (Task 2) in the dual-task study of Roelofs (2008a). The stimulus onset asynchrony (SOA) is short (A) or long (B).
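The timing account illustrated in Figure 4 can be expressed as a small worked sketch. It assumes, following the text, that the unlocking event is the completion of phonological encoding, that the gaze shift follows it, and that arrow processing can start only once the eyes are on the arrow and the arrow is on the screen. All stage durations are hypothetical values chosen for illustration, and the sketch omits details such as the postponement of gaze shifts at non-zero SOAs; it is not the WEAVER++ simulation itself.

```python
# Worked sketch of the Figure 4 timing logic. Stage durations are hypothetical.
from dataclasses import dataclass
from typing import NamedTuple


@dataclass(frozen=True)
class Params:
    pre_phonological_ms: float = 400.0   # picture perception up to morpheme retrieval
    phonological_ms: float = 200.0       # phonological encoding, heterogeneous block
    homogeneous_gain_ms: float = 40.0    # facilitation in homogeneous blocks
    post_phonological_ms: float = 150.0  # motor programming up to speech onset
    saccade_ms: float = 50.0             # unlocking event to eyes landing on arrow
    arrow_ms: float = 400.0              # arrow processing and manual response


class Latencies(NamedTuple):
    naming_rt: float   # from picture onset
    gaze_shift: float  # from picture onset
    manual_rt: float   # from arrow onset


def simulate(soa_ms, homogeneous, p=Params()):
    """Return vocal, gaze shift, and manual latencies for one condition."""
    gain = p.homogeneous_gain_ms if homogeneous else 0.0
    unlock = p.pre_phonological_ms + p.phonological_ms - gain  # Task 1 unlocking event
    naming_rt = unlock + p.post_phonological_ms
    gaze_shift = unlock + p.saccade_ms
    # Arrow processing waits for both the gaze and the arrow itself.
    arrow_start = max(gaze_shift, soa_ms)
    manual_rt = arrow_start + p.arrow_ms - soa_ms
    return Latencies(naming_rt, gaze_shift, manual_rt)


if __name__ == "__main__":
    for soa in (0, 300, 1000):
        het, hom = simulate(soa, False), simulate(soa, True)
        print(f"SOA {soa:4d} ms: naming effect {het.naming_rt - hom.naming_rt:.0f} ms, "
              f"gaze effect {het.gaze_shift - hom.gaze_shift:.0f} ms, "
              f"manual effect {het.manual_rt - hom.manual_rt:.0f} ms")
```

In this sketch the phonological effect appears in the naming and gaze shift latencies at every SOA. It carries over to the manual latencies only when the arrow is already present at the time the gaze arrives. At the long SOA, the `max` term absorbs the effect because both conditions wait for the arrow.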

Further evidence that attention is sustained to word planning until the completion of phonological encoding comes from experiments by Cook and Meyer (2008) using the PRP procedure. Participants had to perform picture naming (Task 1) and manual tone discrimination (Task 2) tasks. In the critical conditions, the pictures were combined with phonologically related or unrelated distractors. Experiment 1 used distractor pictures, whereas the other experiments used distractor words, which were either clearly visible (Experiment 2) or masked (Experiment 3). Relative to the unrelated distractors, the phonologically related distractor pictures reduced the naming (Task 1) and manual (Task 2) RTs. Similarly, Roelofs (2008b) observed that the phonological effect of picture distractors on picture naming is present in the gaze durations. Cook and Meyer (2008) also obtained the phonological effect for the distractor words in picture naming, but only when the words were masked, not when they were clearly visible. The clearly visible distractor words yielded phonological facilitation in the naming RTs, but not in the manual RTs. For the manual RTs, the phonological effect tended to be one of interference (i.e., longer RTs on the related than unrelated trials) rather than facilitation. The presence of the phonological effect in the manual (Task 2) RTs for the picture and masked word distractors suggests that participants maintained attention to word planning in picture naming until the completion of phonological encoding. To explain the absence of a phonological facilitation effect in the manual RTs (or the presence of phonological interference) for the clearly visible word distractors, Cook and Meyer (2008) proposed that the phonological facilitation effect in picture naming was offset by longer self-monitoring durations in the phonologically related than unrelated condition.

Evidence from the study of Roelofs (2008a) supports the assumption of WEAVER++ that the allocation of attention in dual-task performance is not fixed but strategically determined (cf. Meyer and Kieras, 1997a). When speakers name pictures in homogeneous and heterogeneous trial blocks (Task 1) and manually respond to arrows or tones (Task 2), phonological encoding for word production delays the manual responses to the arrows (Roelofs, 2008a; Experiments 1–3) but not to the tones (Experiment 4). This suggests that speakers in the experiments of Roelofs (2008a) shifted attention earlier to the tones (i.e., before phonological encoding) than to the arrows (i.e., after phonological encoding).

Roelofs (2008a; Experiment 4) obtained no phonological effect in the tone task. In contrast, Cook and Meyer (2008) observed a phonological effect on the response to the tones when Task 1 had picture distractors (Experiment 1) or masked word distractors (Experiment 3), but not when it had visible word distractors (Experiment 2). These differences in results suggest that participants may set the shift criterion (i.e., when to shift attention to Task 2) differently depending on the exact circumstances. The shift criterion and lockout point are free parameters of the WEAVER++ model, but the parameter values are constrained. Evidence suggests that when Task 1 requires word planning, the shift criterion may differ in whether or not phonological encoding is completed before attention is shifted. If attention is shifted before phonological encoding, some attentional capacity will still have to be allocated to phonological encoding for it to proceed. When Task 2 requires word planning, the lockout point may differ in whether or not lemma retrieval is completed before the planning process is suspended.

Evidence that attention may shift before phonological encoding was not only obtained by Roelofs (2008a; Experiment 4) and Cook and Meyer (2008; Experiment 2), but also by Ferreira and Pashler (2002). They had participants name the picture of picture–word combinations (Task 1) and indicate the pitch of a tone through button presses (Task 2). The SOAs between picture–word stimulus and tone were 50, 150, and 900 ms. The written distractor words were semantically related (e.g., pictured dog, distractor CAT), phonologically related (e.g., distractor DOLL), or unrelated to the picture names (e.g., distractor PIN). Compared to the unrelated distractor words, the semantically related words increased picture naming RTs and the phonologically related words reduced the RTs. Earlier research has suggested that the semantic interference arises in lemma retrieval, whereas the phonological facilitation arises in phonological encoding (cf. Levelt et al., 1999). Ferreira and Pashler (2002) observed that the semantic interference, but not the phonological facilitation, was propagated into the manual RTs. That is, the manual RTs were longer in the semantically related than unrelated condition, but equal in the phonologically related and unrelated conditions. These results suggest that attention was shifted from picture naming to tone discrimination before the onset of phonological encoding, in line with the results of the tone task obtained by Roelofs (2008a; Experiment 4).
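The logic of this inference can be made explicit with a small timing sketch. The Python below is an illustration under stated assumptions, not the WEAVER++ implementation: the stage names follow the text, but the functions `dual_task_rts` and `manual_effect`, the stage durations, and the sizes of the distractor effects are hypothetical values chosen for readability. It shows that a Task 1 effect reaches the manual RTs only if it arises before attention is shifted, and only at SOAs short enough that Task 2 has to wait.

```python
# Illustrative sketch: all durations (ms) and effect sizes are hypothetical.
TASK1_STAGES = [
    "perception",
    "lemma_retrieval",
    "phonological_encoding",
    "articulation_planning",
]

BASELINE = {
    "perception": 150,
    "lemma_retrieval": 150,
    "phonological_encoding": 150,
    "articulation_planning": 150,
}

# Distractor conditions expressed as changes to single Task 1 stages.
CONDITIONS = {
    "unrelated": {},
    "semantic": {"lemma_retrieval": +40},            # interference
    "phonological": {"phonological_encoding": -30},  # facilitation
}


def dual_task_rts(condition, shift_after, soa, task2_perception=100, task2_rest=300):
    """Return (naming RT, manual RT), each measured from its own stimulus onset."""
    durations = dict(BASELINE)
    for stage, change in CONDITIONS[condition].items():
        durations[stage] += change
    naming_rt = sum(durations[stage] for stage in TASK1_STAGES)
    # Attention shifts to Task 2 once the criterion stage of Task 1 is finished.
    last = TASK1_STAGES.index(shift_after)
    shift_time = sum(durations[stage] for stage in TASK1_STAGES[: last + 1])
    # Task 2 response selection needs its own perception AND the attention shift.
    start = max(soa + task2_perception, shift_time)
    manual_rt = start + task2_rest - soa
    return naming_rt, manual_rt


def manual_effect(condition, shift_after, soa):
    """Manual RT difference between a related condition and the unrelated baseline."""
    related = dual_task_rts(condition, shift_after, soa)[1]
    unrelated = dual_task_rts("unrelated", shift_after, soa)[1]
    return related - unrelated


for soa in (50, 900):
    for criterion in ("lemma_retrieval", "phonological_encoding"):
        print(soa, criterion,
              manual_effect("semantic", criterion, soa),
              manual_effect("phonological", criterion, soa))
```

With these assumed values, a shift after lemma retrieval yields a semantic effect but no phonological effect in the manual RTs at the 50-ms SOA, which is the qualitative pattern reported by Ferreira and Pashler (2002). A shift after phonological encoding lets both effects propagate, and at the 900-ms SOA the sketch shows no propagation under either criterion.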

Ferreira and Pashler (2002) observed that the semantic interference effect of word distractors in picture naming was carried forward to the manual RTs, suggesting that resolving the conflict underlying the interference requires attention. In line with this, Roelofs (2007) observed that participants gaze longer at picture–word stimuli in the semantically related than unrelated condition. Similarly, gaze durations depend on the amount of conflict in the color–word Stroop task (Roelofs, 2011). In a commonly used version of the Stroop task, participants name the color attribute of colored congruent or incongruent color–words (e.g., the words GREEN or RED in green ink, respectively; say “green”) or neutral series of Xs. Naming RT is longer in the incongruent than in the neutral condition and often shorter in the congruent than in the neutral condition (for reviews, see MacLeod, 1991; Roelofs, 2003). In line with the RTs, participants gaze longer at incongruent than neutral stimuli and longer at neutral than congruent stimuli (Roelofs, 2011), which suggests that there are differences in attention demand among the Stroop conditions. Greater attentional effort is often reflected in a higher skin-conductance response, which is observed for the incongruent compared with the congruent Stroop condition (Naccache et al., 2005).

Central attention demands of reading

It is often assumed that Stroop effects provide evidence for the automaticity of reading (e.g., MacLeod, 1991). The presence of interference and facilitation in this task is taken as evidence that participants automatically read the word, despite the instruction to ignore the word. However, given that the color and word are spatially integrated and part of one perceptual object (i.e., a colored word), it is also possible that Stroop effects reflect the difficulty of not allocating attention to the word in this task (cf. Kahneman, 1973; Pashler, 1998). On this view, word reading occurs in the Stroop task not because it happens automatically, but rather because the word inadvertently receives some of the attention that was meant for the color.

Accumulating evidence supports the attentional view of word reading in the Stroop task (e.g., Besner et al., 1997; Besner and Stolz, 1999). For example, when the color attribute of the color–word Stroop stimuli is removed (i.e., changed into neutral white color on a dark computer screen) 120 or 160 ms after stimulus presentation onset (e.g., RED in green ink is changed into RED in neutral white ink), the magnitude of Stroop interference is reduced compared with the standard continuous presentation of the color until trial offset (La Heij et al., 2001). As argued by La Heij et al. (2001), the duration effect on Stroop interference is paradoxical: Whereas the only stimulus attribute present on the screen for most of the trial is an incongruent word, Stroop interference is less. The finding can be explained, however, if one assumes that removing the color attribute hampers the grouping of the color and word attributes into one perceptual object (i.e., a colored word) to which attention is allocated (cf. La Heij et al., 2001; Lamers and Roelofs, 2007). Because the written color–word receives less attention in the removed than in the continuous condition, the magnitude of the Stroop interference will also be less, as empirically observed. The utility of this account was demonstrated by computer simulations of the exposure duration effect using WEAVER++ (Roelofs and Lamers, 2007). Color removal not only reduces Stroop interference, but also Stroop facilitation. Moreover, color removal reduces gaze durations, suggesting reduced attention demand (Roelofs, 2011).

Whereas the findings on Stroop task performance suggest that word reading is affected by visual (input) attention, Reynolds and Besner (2006) provided evidence on the central attention demands of reading. Earlier, we indicated that form-to-form mapping in reading involves orthographic processing and word-form encoding, including morphological, phonological, and phonetic encoding. Reynolds and Besner (2006) obtained evidence that word-form encoding in reading aloud requires central attentional capacity. They used the PRP procedure with participants performing manual tone discrimination (Task 1) and reading aloud (Task 2) tasks. Experiment 1 manipulated the duration of the form perception stage of word reading through long-lag repetition priming, which refers to shorter RTs for repeated than for novel words over lags greater than 100 intervening trials. According to Reynolds and Besner (2006), this type of priming affects orthographic–lexical processing, because it occurs for words but not for pseudowords and it is not affected by changes in case. Participants read aloud novel and repeated words presented 50 or 750 ms after tone onset. Reading RTs were shorter for the repeated than for the novel words, and this effect was present in the reading RTs at the long 750-ms SOA but not at the short 50-ms SOA. These results suggest that orthographic–lexical processing of the words (Task 2) occurred in parallel with tone processing (Task 1), before the lockout point of the word reading process, and the effect of repetition priming was absorbed by the pause, as we explain below.

Assume that participants strategically lock out the word reading process just before the onset of word-form encoding, so that processes in the tone task (Task 1) and processes up to (but not including) word-form encoding in reading (Task 2) are allowed to run in parallel. As a result of the repetition priming, word processing will reach the lockout point earlier for the repeated than the novel words. However, at the short 50-ms SOA, word reading will reach the lockout point before the tone processing has reached the unlocking event. Consequently, processing in the reading task has to wait for the unlocking event to occur and the difference in processing time for the repeated and novel words will be absorbed by the pause. In contrast, at the long 750-ms SOA, word reading will not have to wait for the tone processing, and the repetition priming effect will be observed in the reading RTs. Thus, overlap of orthographic–lexical processing and tone processing at the short SOA, but not at the long one, explains why the effects of repetition priming and SOA are underadditive.

In Experiments 2–4 of Reynolds and Besner (2006), pseudoword length and grapheme–phoneme complexity were manipulated. In dual-route models of reading, such as the one proposed by Coltheart et al. (2001), letter processing occurs in parallel across a letter string, but sublexical grapheme-to-phoneme translation occurs serially, from left to right across the string. Therefore, the RT for reading pseudowords aloud increases with the number of letters, as empirically observed in earlier research. Moreover, grapheme-to-phoneme translation is more complex and takes longer when at least one phoneme corresponds to a multiletter grapheme (e.g., TH in STETH) than when each phoneme corresponds to a single letter (e.g., STEK). Reynolds and Besner (2006) observed that the effects of pseudoword length and grapheme–phoneme complexity were additive with SOA, suggesting that participants did not divide central attention between tone discrimination and phonological encoding in reading aloud. Instead, phonological encoding was locked out, so that it did not occur in parallel with the tone discrimination task. Consequently, the effects of length and grapheme–phoneme complexity were additive with SOA.

In Experiments 5–7, Reynolds and Besner (2006) examined whether participants divide attention between tone discrimination and lexical aspects of word-form encoding by manipulating orthographic neighborhood density, which refers to the number of words created by changing each letter of a word, one at a time. Reynolds and Besner (2006) reviewed evidence suggesting that the RT of reading aloud words and pseudowords decreases as neighborhood density increases. This effect of neighborhood density was argued to arise in word-form encoding. In the experiments of Reynolds and Besner (2006), the effect of neighborhood density was additive with SOA, suggesting that participants did not divide central attention between tone discrimination and lexical aspects of word-form encoding in reading aloud.

To conclude, the results of Reynolds and Besner (2006) suggest that lexical and phonological stages of word-form encoding in reading aloud require central attention, whereas the orthographic–lexical processing of letter strings does not.
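The contrast between the underadditive repetition priming effect and the additive effects of length, complexity, and neighborhood density follows from where a manipulation acts relative to the lockout point. The Python sketch below illustrates this under simplifying assumptions: the functions `reading_rt` and `effect`, the stage durations, the unlocking time, and the effect sizes are all hypothetical and are not taken from Reynolds and Besner (2006) or from WEAVER++.

```python
# Illustrative sketch: all durations (ms) are hypothetical.

def reading_rt(soa, pre_lockout, post_lockout, unlock_time=500):
    """Task 2 (reading) RT measured from word onset.

    pre_lockout:  stages allowed to overlap with the tone task.
    post_lockout: stages that wait for the unlocking event
                  (here: word-form encoding onward).
    unlock_time:  time after tone onset at which the tone task
                  releases the reading task.
    """
    reached_lockout = soa + pre_lockout
    resume = max(reached_lockout, unlock_time)  # any waiting time is the pause
    return resume + post_lockout - soa


def effect(soa, pre_change=0, post_change=0, pre=200, post=300):
    """RT difference produced by changing a pre- or post-lockout stage."""
    changed = reading_rt(soa, pre + pre_change, post + post_change)
    return changed - reading_rt(soa, pre, post)


for soa in (50, 750):
    # A 30-ms speed-up before the lockout point versus a 40-ms cost after it.
    print(soa, effect(soa, pre_change=-30), effect(soa, post_change=+40))
```

In this sketch, the pre-lockout speed-up is absorbed by the pause at the 50-ms SOA and visible at the 750-ms SOA (underadditivity), whereas the post-lockout cost has the same size at both SOAs (additivity).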

In all their experiments, Reynolds and Besner (2006) observed that the tone discrimination RTs (Task 1) were shorter at the 50-ms than the 750-ms SOA. If central attention is not divided between tasks, as the results of Reynolds and Besner (2006) suggest, then Task 1 RTs should be the same for long and short SOAs, because Task 1 receives full capacity in both cases. Contrary to this prediction, Task 1 RTs were shorter at the short than the long SOA. However, Task 1 RTs should only be constant across SOAs if attentional capacity is fixed, which does not need to hold (Tombu and Jolicoeur, 2003). Evidence suggests that the available capacity increases when participants put more effort into the tasks, which depends on the demands of concurrent activities (Kahneman, 1973). The demands are presumably higher at short than long SOAs. Exerting greater effort may decrease RTs at short SOAs, as was the case in the experiments of Reynolds and Besner (2006).

Whereas word reading requires central attention, it requires less attention than picture naming, according to the WEAVER++ model. This is because the pathway through the lexical network is shorter for reading than for picture naming, as illustrated in Figure 1. In line with the model, evidence from eye tracking suggests that shifts of gaze occur closer to articulation onset in naming pictures than in reading their names (Roelofs, 2007). This eye tracking study measured the mean latencies for the vocal responses and gaze shifts in picture naming and word reading in a semantic condition (e.g., a pictured dog combined with the word CAT), an unrelated condition (e.g., a pictured dog combined with the word PIN), and a control condition (e.g., a pictured dog combined with XXX for picture naming or the word DOG in an empty picture frame for word reading). A distractor effect was obtained in picture naming but not in word reading, suggesting differences in attention demands between the two tasks. In all three distractor conditions, the gaze shifts occurred about 66 ms before articulation onset in picture naming, whereas they already occurred about 156 ms before articulation onset in word reading (Roelofs, 2007). Given the shorter network distance for word reading than picture naming (see Figure 1), attentional enhancements may be less for reading than naming. If enhancements are required until the word has been planned sufficiently, this explains why attention, as indexed by eye gazes, is sustained longer to word planning in picture naming than in word reading, regardless of whether or not distractors are present.

However, such a difference in gaze shift latencies was not observed when participants switched between naming the picture and reading aloud the word of picture–word combinations (Roelofs, 2008b). Pictures and words were presented in red and green. The task was picture naming or word reading depending on whether the picture or word was presented in green color, which varied randomly from trial to trial. In this task situation, gaze shifted around 100 ms before articulation onset in both picture naming and word reading. Apparently, there is a greater need to sustain attention to word reading when the distractor pictures have to be named on other trials and therefore are more likely to interfere with word reading.

Evidence that Word Planning Does not Require Full Central Attention

WEAVER++ assumes that all word planning processes up to and including phonological encoding require some attentional capacity. However, the planning processes do not require full attentional capacity, meaning that central attention may be shared between word planning and other attention demanding concurrent processes. In contrast, other researchers (i.e., Ferreira and Pashler, 2002; Dell’Acqua et al., 2007; Ayora et al., 2011) proposed a central bottleneck model in which a process requires undivided attention or no attention, with no middle ground. For example, Ferreira and Pashler (2002) argued that lemma and morpheme selection in word planning preclude any other concurrent process that also requires central attention, such as response selection in a non-linguistic task.

Recent empirical results indicate that conflicts in word planning may be resolved while concurrently performing an unrelated non-linguistic task, making a task decision, or making a go/no-go decision. These findings suggest that word planning does not require full central attention. A type of conflict that has been extensively studied is the increased response competition underlying the semantic interference effect, described above: RTs are longer for picture naming when the distractor word is semantically related to the picture name (e.g., picture of a dog combined with the word CAT) than when it is unrelated (e.g., the word PIN). Whereas in single-task performance, distractor words in picture naming yield semantic interference, this effect may be absent when simultaneously performing picture naming and a concurrent task or process.

Central attention sharing in dual-task performance

Dell’Acqua et al. (2007) observed that the semantic interference effect in picture naming may diminish or disappear at short SOAs in the PRP procedure. Participants performed a manual tone discrimination task (Task 1) and a picture–word interference task (Task 2). The tones preceded the picture–word stimuli by SOAs of 100, 350, or 1000 ms. The semantic interference effect was much smaller at the 350-ms SOA than at the 1000-ms SOA, and the interference was absent at the 100-ms SOA. These results suggest that the semantic interference in picture naming was resolved while simultaneously performing the tone discrimination task.

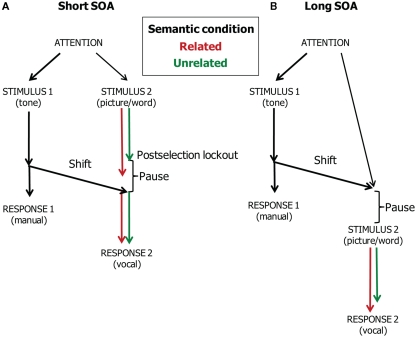

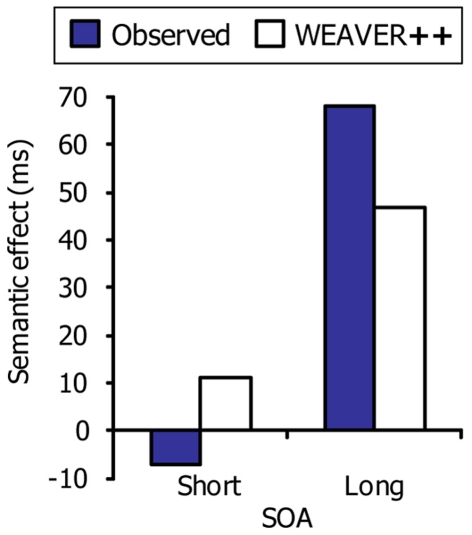

This evidence suggests that central attention may be divided between tone discrimination, on the one hand, and resolving the conflict underlying the semantic interference effect in picture naming, on the other hand. Figure 5 illustrates our account of the data of Dell’Acqua et al. (2007), which are shown in Figure 6 together with the WEAVER++ simulation results obtained by Piai et al. (2011). At the short SOA, picture naming has to pause after resolving the conflict in lemma selection. Consequently, the semantic interference in picture naming will be absorbed by the pause. In contrast, at the long SOA, attention will have shifted away from the tone task before the picture–word stimulus is presented. As a result, the conflict in lemma selection cannot be resolved while performing the tone task and semantic interference will be reflected in the naming RTs.

Figure 5.

Theoretical account of the time course of manual responding (Task 1) and vocal responding (Task 2) in the dual-task study of Dell’Acqua et al. (2007). Pictures are named in semantically related and unrelated conditions. The stimulus onset asynchrony (SOA) is short (A) or long (B).

Figure 6.

The semantic interference effect in picture naming as a function of stimulus onset asynchrony (SOA) in the psychological refractory period procedure. Shown are the real data (Dell’Acqua et al., 2007) and WEAVER++ simulation results. The short SOA was 100 ms and the long SOA was 1000 ms.

A hallmark of attentional capacity sharing is that Task 1 RT increases as SOA decreases in dual-task performance. If some proportion of the attentional capacity is allocated to Task 1 and the remainder to Task 2 when both tasks require central attention, this will increase Task 1 response latencies at short SOAs compared to long ones (when 100% of the capacity may be allocated to Task 1). We assumed that participants in the experiment of Dell’Acqua et al. (2007) shared attentional capacity between the tone discrimination task (Task 1) and the picture naming task (Task 2). However, in that study, Task 1 RTs did not increase at short SOAs, which seems to challenge the assumption that capacity was shared.

However, the Task 1 RTs should only be increased at short SOAs if attentional capacity is fixed and the capacity allocated to Task 1 and Task 2 sums to full capacity (cf. Tombu and Jolicoeur, 2003), which does not need to hold. As we indicated earlier, evidence suggests that the available capacity increases when participants put more effort into tasks (Kahneman, 1973). Exerting greater effort may compensate for the slowing of tasks caused by dividing attentional capacity at short SOAs. If the participants of Dell’Acqua et al. (2007) increased capacity by exerting greater effort at short SOAs, the Task 1 RTs do not need to become longer, as empirically observed. According to Kahneman (1973), the amount of attentional capacity available at any time depends on the demands of current activities, which are presumably lower at long than at short SOAs. To conclude, given the potentially confounding effect of effort across SOAs in the study of Dell’Acqua et al. (2007), the absence of an increase of Task 1 RTs at short SOAs does not exclude that attentional capacity was shared.
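The argument can be illustrated with a simple capacity-sharing calculation. The Python sketch below is only an illustration: the function `task1_rt`, the assumption that sharing lasts for the whole central stage of Task 1, and all numerical values (durations in ms, an 80% share, the effort multipliers) are hypothetical and are not estimates from Dell’Acqua et al. (2007) or parameters of WEAVER++.

```python
# Illustrative sketch: all values are hypothetical.

def task1_rt(central_work, share, total_capacity=1.0, pre=150, post=150):
    """Task 1 RT when its central stage receives `share` of `total_capacity`.

    central_work is the duration (ms) of the central stage at a capacity of 1.0.
    Simplification: capacity is shared for the whole central stage.
    """
    rate = share * total_capacity
    return pre + central_work / rate + post


# Long SOA: no overlap, so Task 1 receives all of a baseline capacity of 1.0.
long_soa = task1_rt(300, share=1.0)
# Short SOA, fixed capacity: Task 1 receives 80% and is slowed.
short_fixed = task1_rt(300, share=0.8)
# Short SOA, extra effort raises total capacity and offsets the sharing cost.
short_effort = task1_rt(300, share=0.8, total_capacity=1.25)
# Short SOA, still more effort makes Task 1 faster than at the long SOA.
short_more_effort = task1_rt(300, share=0.8, total_capacity=1.5)

print(long_soa, short_fixed, short_effort, short_more_effort)
```

Under fixed capacity, the sketch yields a longer Task 1 RT at the short SOA. When total capacity rises by a factor of 1.25, the Task 1 RT matches the long-SOA value, and with a larger increase it drops below it, the direction of the SOA effect on Task 1 RTs that Reynolds and Besner (2006) reported.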

Central attention sharing in making task-choice and go/no-go decisions

In line with our account of the findings of Dell’Acqua et al. (2007) illustrated in Figure 5, it was found that the semantic interference effect in picture naming may also disappear when simultaneously making a task choice (Piai et al., 2011). In the task-choice procedure (Besner and Care, 2003), participants receive a cue on every trial indicating which task to perform. This cue can be given either before the target or simultaneously with it. In this procedure, only the response to the target stimulus is required, so no response selection takes place for the cue stimulus. The logic of the task-choice paradigm is similar to the dual-task interference logic (Besner and Care, 2003). Under our account, one or more stages of processing for the target stimulus are postponed until the decision concerning what task to perform has been made. If processes involved in the task to be performed (e.g., picture naming) are run in parallel with the task-choice process, effects related to these processes, such as semantic interference, may (partly) be absorbed.

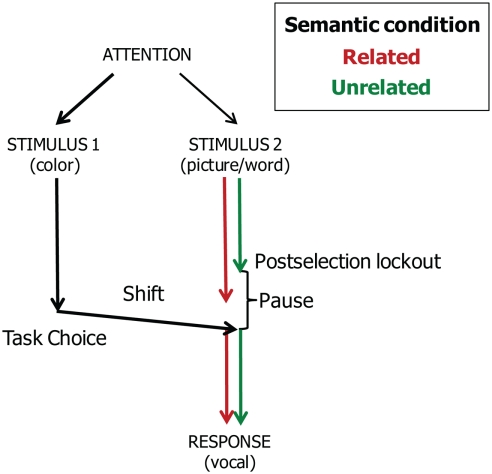

In the picture–word interference study of Piai et al. (2011), participants had to decide between naming the picture or reading the word aloud depending on the presentation color of the word. Whereas semantic interference was obtained in a standard picture–word interference experiment, the semantic interference effect disappeared when task choices had to be made. Assuming that semantic interference arises at the level of response selection, these findings suggest that participants locked out picture naming processes after response selection and that the semantic interference effect was absorbed by the pause created by the task-choice process. Figure 7 depicts the account.

Figure 7.

Theoretical account of the timing of task-choice processes (Task 1) and vocal responding (Task 2) in the task-choice study of Piai et al. (2011). Pictures are named in semantically related and unrelated conditions.

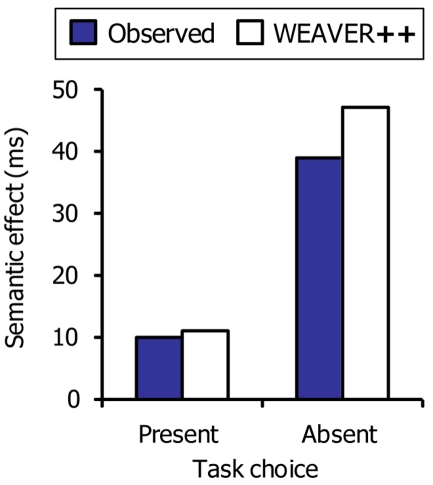

Figure 8 shows the empirical data of Piai et al. (2011) together with the WEAVER++ simulation results. Without task decision, a full-blown semantic interference effect occurs in the model, as typically observed with picture naming in picture–word interference experiments. However, when a task choice has to be made, the pause created by the task-choice process may absorb the semantic interference effect in the model, as empirically observed.

Figure 8.

The semantic interference effect in picture naming as a function of the presence or absence of a task choice. Shown are the real data (Piai et al., 2011) and WEAVER++ simulation results.

Importantly, under the assumption of a postselection lockout point for the picture naming task, semantic interference will only be absorbed if the choice processes take longer than the duration of processes up to and including lemma selection for picture naming in the semantically related condition, as illustrated in Figure 7. In contrast, if choice processes take less time than the processes up to and including lemma selection, semantic interference should be obtained. This corresponds to what Janssen et al. (2008) observed using the task-choice procedure and to what Mädebach et al. (2011) observed when the choice processes consisted of a go/no-go decision based on the color of the word. In the WEAVER++ model, decreasing the duration of the choice process a little (e.g., by 25 ms) yields a semantic interference effect (e.g., of some 30 ms), as observed in these studies.
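The dependence of absorption on the duration of the choice process can be sketched as follows. This Python fragment is an illustration only: the functions `naming_rt` and `semantic_interference` and all durations (in ms) are hypothetical, and they are not the parameter values or outputs of the WEAVER++ simulations.

```python
# Illustrative sketch: durations (ms) are hypothetical, not WEAVER++ values.

def naming_rt(choice_duration, through_lemma_selection, post_selection=300):
    """Naming RT with a lockout point directly after lemma selection.

    Planning resumes only when both the choice process and the stages
    up to and including lemma selection have finished.
    """
    resume = max(choice_duration, through_lemma_selection)
    return resume + post_selection


def semantic_interference(choice_duration, unrelated=300, related=340):
    """RT difference between the semantically related and unrelated conditions."""
    return (naming_rt(choice_duration, related)
            - naming_rt(choice_duration, unrelated))


for choice in (250, 320, 350):
    print(choice, semantic_interference(choice))
```

With these assumed durations, a choice process that outlasts lemma selection in the related condition absorbs the effect completely, a somewhat shorter one absorbs it in part, and a choice process that ends before lemma selection in the unrelated condition leaves the full effect in the naming RTs.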

Evidence that attention shifts occur earlier in go/no-go than dual-task situations was obtained in an eye tracking study of Lamers and Roelofs (2011). Participants vocally responded to congruent and incongruent flanker stimuli presented on the left side of a computer screen and shifted gaze to left- or right-pointing arrows presented on the right side of the screen. The arrows required a manual response (dual task) or determined whether the naming response to the flanker stimuli had to be given or not (go/no-go). The results showed that the naming RTs and gaze shift latencies were longer on incongruent than congruent trials in both dual-task and go/no-go performance. In dual-task performance, the flanker effect was also present in the manual RTs for the arrow stimulus, reflecting a propagation of the distractor effect from the naming to the manual responses. These results suggest that gaze shifts occur after response selection in both dual-task and go/no-go performance with vocal responding. However, the gaze shift latencies were on average 185 ms shorter in the go/no-go condition than in the dual-task condition. Thus, although gazes shifted after response selection in both the go/no-go and the dual-task conditions (as suggested by the presence of the flanker effects in the gaze shift latencies), attention seemed to shift earlier in the go/no-go than the dual-task condition.

Attention in Impaired Language Performance

Whereas attentional capacity may increase with effort, there is an upper limit (Kahneman, 1973). Moreover, the increase may often be insufficient to fully meet the demands of a task, especially when the task is difficult. A task may be difficult, for example, when it is complex or when the task is simple but the individual performing the task has a deficit in one or more of the component abilities that are required. For example, evidence suggests that individuals with developmental dyslexia have difficulty in performing grapheme-to-phoneme translations in reading, presumably because they fail to develop strong grapheme–phoneme connections. Evidence suggests that dyslexic individuals try to compensate for the weaker connections by allocating more attention to the grapheme–phoneme translation process. Brain areas associated with word-form perception, such as the left occipito-temporal sulcus, are less activated in dyslexic than normal readers. In contrast, brain areas associated with attentional control, such as regions in prefrontal and parietal cortex, are more highly activated in dyslexic than normal readers in reading performance (see Shaywitz and Shaywitz, 2008, for a review). This suggests that dyslexic readers try to overcome or diminish their reading problem by investing more attention. However, given that problems remain (e.g., reading RTs are longer for dyslexic than normal readers), the increased attention appears insufficient to counteract the slowing caused by weak grapheme–phoneme connections. Similarly, increased attention and effort are typically insufficient to compensate for the detrimental consequences of brain damage in acquired dyslexia and aphasia (e.g., Murray, 1999). Attention problems may worsen performance in dyslexia and aphasia (e.g., Murray, 1999; Shaywitz and Shaywitz, 2008).

Evidence suggests that attention deficits also contribute to the impaired language performance of individuals with SLI. This is a disorder of language acquisition and use in children who otherwise appear to be normally developing. The disorder may persist into adulthood. The features of the impaired language performance in SLI are quite variable, but common characteristics are a delay in starting to talk in childhood, deviant production of speech sounds, a restricted vocabulary, slow and inaccurate picture naming, and use of simplified grammatical structures, including omission of articles and plural and past tense endings (see Leonard, 1998, for a review). In general, individuals with SLI seem to have a problem in dealing with (relatively) complex language structures, in both speech production and comprehension. A prominent account of SLI holds that these difficulties with complexity in language reflect a reduced capacity of systems underlying language processes, resulting from a limitation in general processing capacity (Leonard, 1998). It is becoming increasingly clear that (subclinical) attention deficits also contribute to SLI.

Individuals with SLI appear to have reduced working memory capacity, as assessed by pseudoword repetition and listening span tasks (see Ellis Weismer et al., 2005, for a review). Moreover, evidence suggests that children with SLI have deficits in sustained attention (e.g., Spaulding et al., 2008; Finneran et al., 2009). The reduced working memory and sustained attention capacities may have a common ground. In an influential functional analysis of executive control by Miyake et al. (2000), three types of executive abilities are distinguished: monitoring and updating of working memory representations, inhibiting of dominant responses, and shifting of tasks or mental sets. Evidence suggests that working memory performance is specifically related to the updating ability (Miyake et al., 2000), whereas sustained attention performance is related to the updating and inhibiting abilities (Unsworth et al., 2010). Im-Bolter et al. (2006) provided evidence that the updating and inhibiting abilities are deficient in SLI.

Working memory and sustained attention play an important role in WEAVER++. In this model, the lexical network is accessed by spreading activation while the condition–action rules determine what is done with the activated lexical information depending on the task goal in working memory. The task goal is achieved by successively updating subgoals in the course of the word planning process. In conceptually driven word planning, an initial subgoal is to select a lemma for a selected concept. The next subgoal is to select one or more morphemes for the selected lemma. Next, the subgoal is to select phonemes for the selected morphemes. Then, the subgoal is to syllabify the selected phonemes and to assign word accent. A final subgoal is to select syllable motor programs for the syllabified phonemes. For the planning process to be successful, attention needs to be sustained until the phonological form has been planned and syllable motor programs may be accessed. As discussed by Leonard (1998) for a WEAVER++ type of model, difficulties in word planning may arise when there are capacity restrictions in the language processes involved. For example, a capacity restriction in activating or selecting morphemes for the selected lemma may result in an omission of inflectional morphemes, such as past tense endings. This type of problem will be reinforced by capacity restrictions in working memory and sustained attention (i.e., the updating ability). For example, problems in successively maintaining subgoals will impede the planning process, especially when a subgoal concerns a complex mapping between levels (such as the mapping between lemmas and morphemes; e.g., Janssen et al., 2002, 2004).

A role of attention in dyslexia, aphasia, and SLI has practical implications. To the extent that attention deficits contribute to the impaired language performance, therapeutic interventions that only deal with the underlying language processes are not providing the afflicted individuals with what they need. Rather, interventions should aim at improving the attention abilities as well (e.g., Murray, 1999; Shaywitz and Shaywitz, 2008; Finneran et al., 2009).

Conclusion

Evidence suggests that word planning requires some but not full central attention. Empirical results indicate that processes up to and including phonological encoding in word planning delay, or are delayed by, the performance of concurrent unrelated non-linguistic tasks. These findings suggest that word planning requires some attentional capacity. Moreover, empirical results indicate that conflicts in word planning may be resolved while concurrently performing an unrelated non-linguistic task, making a task decision, or making a go/no-go decision. These findings suggest that word planning does not require full attentional capacity.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Preparation of this article was supported by a grant (Open Competition MaGW 400-09-138) from the Netherlands Organisation for Scientific Research.

References

- Ayora P., Peressotti F., Alario F.-X., Mulatti C., Pluchino P., Job R., Dell’Acqua R. (2011). What phonological facilitation tells about semantic interference: a dual-task study. Front. Psychol. 2:57. doi: 10.3389/fpsyg.2011.00057

- Baddeley A. (1996). Exploring the central executive. Q. J. Exp. Psychol. 49A, 5–28. doi: 10.1080/027249896392784

- Badre D., Poldrack R. A., Paré-Blagoev E., Insler R. Z., Wagner A. D. (2005). Dissociable controlled retrieval and generalized selection mechanisms in ventrolateral prefrontal cortex. Neuron 47, 907–918. doi: 10.1016/j.neuron.2005.07.023

- Besner D., Care S. (2003). A paradigm for exploring what the mind does while deciding what it should do. Can. J. Exp. Psychol. 57, 311–320. doi: 10.1037/h0087434

- Besner D., Stolz J. A. (1999). What kind of attention modulates the Stroop effect? Psychon. Bull. Rev. 6, 99–104. doi: 10.3758/BF03210834

- Besner D., Stolz J. A., Boutilier C. (1997). The Stroop effect and the myth of automaticity. Psychon. Bull. Rev. 4, 221–225. doi: 10.3758/BF03209396

- Botvinick M. M., Braver T. S., Barch D. M., Carter C. S., Cohen J. D. (2001). Conflict monitoring and cognitive control. Psychol. Rev. 108, 624–652. doi: 10.1037/0033-295X.108.3.624

- Coltheart M., Rastle K., Perry C., Langdon R., Ziegler J. (2001). DRC: a dual route cascaded model of visual word recognition and reading aloud. Psychol. Rev. 108, 204–256. doi: 10.1037/0033-295X.108.1.204

- Cook A. E., Meyer A. S. (2008). Capacity demands of phoneme selection in word production: new evidence from dual-task experiments. J. Exp. Psychol. Learn. Mem. Cogn. 34, 886–899. doi: 10.1037/0278-7393.34.4.886

- de Zubicaray G. I., Wilson S. J., McMahon K. K., Muthiah S. (2001). The semantic interference effect in the picture-word paradigm: an event-related fMRI study employing overt responses. Hum. Brain Mapp. 14, 218–227. doi: 10.1002/hbm.1054

- Dehaene S. (2009). Reading in the Brain. New York: Viking.

- Dell G. S., Schwartz M. F., Martin N., Saffran E. M., Gagnon D. A. (1997). Lexical access in aphasic and nonaphasic speakers. Psychol. Rev. 104, 801–838. doi: 10.1037/0033-295X.104.4.801

- Dell’Acqua R., Job R., Peressotti F., Pascali A. (2007). The picture-word interference effect is not a Stroop effect. Psychon. Bull. Rev. 14, 717–722. doi: 10.3758/BF03196827

- Ellis Weismer S., Plante E., Jones M., Tomblin J. B. (2005). A functional magnetic resonance imaging investigation of verbal working memory in adolescents with specific language impairment. J. Speech Lang. Hear. Res. 48, 405–425. doi: 10.1044/1092-4388(2005/028)

- Ferreira V., Pashler H. (2002). Central bottleneck influences on the processing stages of word production. J. Exp. Psychol. Learn. Mem. Cogn. 28, 1187–1199. doi: 10.1037/0278-7393.28.6.1187

- Finneran D. A., Francis A. L., Leonard L. B. (2009). Sustained attention in children with specific language impairment (SLI). J. Speech Lang. Hear. Res. 52, 915–929. doi: 10.1044/1092-4388(2009/07-0053)

- Griffin Z. M. (2001). Gaze durations during speech reflect word selection and phonological encoding. Cognition 82, B1–B14. doi: 10.1016/S0010-0277(01)00139-1

- Griffin Z. M. (2004). “Why look? Reasons for eye movements related to language production,” in The Interface of Language, Vision, and Action: Eye Movements and the Visual World, eds Henderson J. M., Ferreira F. (Hove: Psychology Press), 213–247.

- Im-Bolter N., Johnson J., Pascual-Leone J. (2006). Processing limitations in children with specific language impairment: the role of executive function. Child Dev. 77, 1822–1841. doi: 10.1111/j.1467-8624.2006.00976.x

- Indefrey P., Levelt W. J. M. (2004). The spatial and temporal signatures of word production components. Cognition 92, 101–144. doi: 10.1016/j.cognition.2002.06.001

- Janssen D. P., Roelofs A., Levelt W. J. M. (2002). Inflectional frames in language production. Lang. Cogn. Process. 17, 209–236. doi: 10.1080/01690960143000182

- Janssen D. P., Roelofs A., Levelt W. J. M. (2004). Stem complexity and inflectional encoding in language production. J. Psycholinguist. Res. 33, 365–381. doi: 10.1023/B:JOPR.0000039546.60121.a8

- Janssen N., Schirm W., Mahon B. Z., Caramazza A. (2008). Semantic interference in a delayed naming task: evidence for the response exclusion hypothesis. J. Exp. Psychol. Learn. Mem. Cogn. 34, 249–256. doi: 10.1037/0278-7393.34.1.249

- Johnston J. C., McCann R. S., Remington R. W. (1995). Chronometric evidence for two types of attention. Psychol. Sci. 6, 365–369. doi: 10.1111/j.1467-9280.1995.tb00527.x

- Kahneman D. (1973). Attention and Effort. Englewood Cliffs, NJ: Prentice-Hall.

- Kan I. P., Thompson-Schill S. L. (2004). Effect of name agreement on prefrontal activity during overt and covert picture naming. Cogn. Affect. Behav. Neurosci. 4, 43–57. doi: 10.3758/CABN.4.1.43

- Kane M. J., Engle R. W. (2002). The role of prefrontal cortex in working-memory capacity, executive attention, and general fluid intelligence: an individual-differences perspective. Psychon. Bull. Rev. 9, 637–671. doi: 10.3758/BF03196323

- Korvorst M., Roelofs A., Levelt W. J. M. (2006). Incrementality in naming and reading complex numerals: evidence from eye tracking. Q. J. Exp. Psychol. 59, 296–311. doi: 10.1080/17470210500151691

- La Heij W., van der Heijden A. H. C., Plooij P. (2001). A paradoxical exposure-duration effect in the Stroop task: temporal segregation between stimulus attributes facilitates selection. J. Exp. Psychol. Hum. Percept. Perform. 27, 622–632. doi: 10.1037/0096-1523.27.3.622

- Lamers M., Roelofs A. (2007). Role of Gestalt grouping in selective attention: evidence from the Stroop task. Percept. Psychophys. 69, 1305–1314. doi: 10.3758/BF03192947

- Lamers M., Roelofs A. (2011). Attention and gaze shifting in dual-task and go/no-go performance with vocal responding. Acta Psychol. (Amst.) 137, 261–268. doi: 10.1016/j.actpsy.2010.12.005

- Leonard L. B. (1998). Children With Specific Language Impairment. Cambridge, MA: MIT Press.

- Levelt W. J. M., Roelofs A., Meyer A. S. (1999). A theory of lexical access in speech production. Behav. Brain Sci. 22, 1–38. doi: 10.1017/S0140525X99451775

- MacLeod C. M. (1991). Half a century of research on the Stroop effect: an integrative review. Psychol. Bull. 109, 163–203. doi: 10.1037/0033-2909.109.2.163

- Mädebach A., Oppermann F., Hantsch A., Curda C., Jescheniak J. D. (2011). Is there semantic interference in delayed naming? J. Exp. Psychol. Learn. Mem. Cogn. 37, 522–538. doi: 10.1037/a0021970

- Malpass D., Meyer A. S. (2010). The time course of name retrieval during multiple-object naming: evidence from extrafoveal-on-foveal effects. J. Exp. Psychol. Learn. Mem. Cogn. 36, 523–537. doi: 10.1037/a0018522

- Maril A., Wagner A. D., Schacter D. L. (2001). On the tip of the tongue: an event-related fMRI study of semantic retrieval failure and cognitive conflict. Neuron 31, 653–660. doi: 10.1016/S0896-6273(01)00396-8

- Meyer A. S., Roelofs A., Levelt W. J. M. (2003). Word length effects in object naming: the role of a response criterion. J. Mem. Lang. 48, 131–147. doi: 10.1016/S0749-596X(02)00509-0

- Meyer A. S., Sleiderink A. M., Levelt W. J. M. (1998). Viewing and naming objects. Cognition 66, B25–B33. doi: 10.1016/S0010-0277(98)00009-2

- Meyer D. E., Kieras D. E. (1997a). A computational theory of executive cognitive processes and multiple-task performance: part 1. Basic mechanisms. Psychol. Rev. 104, 3–65. doi: 10.1037/0033-295X.104.1.3

- Meyer D. E., Kieras D. E. (1997b). A computational theory of executive cognitive processes and multiple-task performance: part 2. Accounts of psychological refractory-period phenomena. Psychol. Rev. 104, 749–791. doi: 10.1037/0033-295X.104.4.749

- Miyake A., Friedman N. P., Emerson M. J., Witzki A. H., Howerter A., Wager T. D. (2000). The unity and diversity of executive functions and their contributions to complex “frontal lobe” tasks: a latent variable analysis. Cogn. Psychol. 41, 49–100. doi: 10.1006/cogp.1999.0734

- Murray L. L. (1999). Attention and aphasia: theory, research, and clinical implications. Aphasiology 13, 91–111. doi: 10.1080/026870399402226

- Naccache L., Dehaene S., Cohen L., Habert M.-O., Guichart-Gomez E., Galanaud D., Willer J.-C. (2005). Effortless control: executive attention and conscious feeling of mental effort are dissociable. Neuropsychologia 43, 1318–1328. doi: 10.1016/j.neuropsychologia.2004.11.024

- Pashler H. (1998). The Psychology of Attention. Cambridge, MA: MIT Press.

- Petersen S. E., Fox P. T., Posner M. I., Mintun M., Raichle M. E. (1988). Positron emission tomographic studies of the cortical anatomy of single-word processing. Nature 331, 585–589. doi: 10.1038/331585a0

- Petersen S. E., van Mier H., Fiez J. A., Raichle M. E. (1998). The effects of practice on the functional anatomy of task performance. Proc. Natl. Acad. Sci. U.S.A. 95, 853–860. doi: 10.1073/pnas.95.3.853

- Piai V., Roelofs A., Schriefers H. (2011). Semantic interference in immediate and delayed naming and reading: attention and task decisions. J. Mem. Lang. 64, 404–423. doi: 10.1016/j.jml.2011.01.004

- Posner M. I., Raichle M. E. (1994). Images of Mind. New York: W. H. Freeman.

- Posner M. I., Rothbart M. K. (2007). Educating the Human Brain. Washington, DC: APA Books.

- Reynolds M., Besner D. (2006). Reading aloud is not automatic: processing capacity is required to generate a phonological code from print. J. Exp. Psychol. Hum. Percept. Perform. 32, 1303–1323. doi: 10.1037/0096-1523.32.6.1303

- Roelofs A. (1992). A spreading-activation theory of lemma retrieval in speaking. Cognition 42, 107–142. doi: 10.1016/0010-0277(92)90041-F

- Roelofs A. (1997). The WEAVER model of word-form encoding in speech production. Cognition 64, 249–284. doi: 10.1016/S0010-0277(97)00027-9

- Roelofs A. (2003). Goal-referenced selection of verbal action: modeling attentional control in the Stroop task. Psychol. Rev. 110, 88–125. doi: 10.1037/0033-295X.110.1.88

- Roelofs A. (2004). Error biases in spoken word planning and monitoring by aphasic and nonaphasic speakers: comment on Rapp and Goldrick (2000). Psychol. Rev. 111, 561–572. doi: 10.1037/0033-295X.111.2.561

- Roelofs A. (2006). Context effects of pictures and words in naming objects, reading words, and generating simple phrases. Q. J. Exp. Psychol. 59, 1764–1784. doi: 10.1080/17470210500416052

- Roelofs A. (2007). Attention and gaze control in picture naming, word reading, and word categorizing. J. Mem. Lang. 57, 232–251. doi: 10.1016/j.jml.2006.10.001

- Roelofs A. (2008a). Attention, gaze shifting, and dual-task interference from phonological encoding in spoken word planning. J. Exp. Psychol. Hum. Percept. Perform. 34, 1580–1598. doi: 10.1037/a0012476

- Roelofs A. (2008b). Tracing attention and the activation flow in spoken word planning using eye movements. J. Exp. Psychol. Learn. Mem. Cogn. 34, 353–368. doi: 10.1037/0278-7393.34.2.353

- Roelofs A. (2008c). Dynamics of the attentional control of word retrieval: analyses of response time distributions. J. Exp. Psychol. Gen. 137, 303–323. doi: 10.1037/0096-3445.137.2.303

- Roelofs A. (2011). Attention, exposure duration, and gaze shifting in naming performance. J. Exp. Psychol. Hum. Percept. Perform. 37, 860–873. doi: 10.1037/a0021929

- Roelofs A., Hagoort P. (2002). Control of language use: cognitive modeling of the hemodynamics of Stroop task performance. Brain Res. Cogn. Brain Res. 15, 85–97. doi: 10.1016/S0926-6410(02)00218-5

- Roelofs A., Lamers M. (2007). “Modelling the control of visual attention in Stroop-like tasks,” in Automaticity and Control in Language Processing, eds Meyer A. S., Wheeldon L. R., Krott A. (Hove: Psychology Press), 123–142.

- Roelofs A., Özdemir R., Levelt W. J. M. (2007). Influences of spoken word planning on speech recognition. J. Exp. Psychol. Learn. Mem. Cogn. 33, 900–913. doi: 10.1037/0278-7393.33.5.900

- Roelofs A., van Turennout M., Coles M. G. H. (2006). Anterior cingulate cortex activity can be independent of response conflict in Stroop-like tasks. Proc. Natl. Acad. Sci. U.S.A. 103, 13884–13889. doi: 10.1073/pnas.0606265103