Abstract

People are able to rapidly infer complex personality traits and mental states even from the most minimal person information. Research has shown that when observers view a natural scene containing people, they spend a disproportionate amount of their time looking at the social features (e.g., faces, bodies). Does this preference for social features merely reflect the biological salience of these features or are observers spontaneously attempting to make sense of complex social dynamics? Using functional neuroimaging, we investigated neural responses to social and nonsocial visual scenes in a large sample of participants (n = 48) who varied on an individual difference measure assessing empathy and mentalizing (i.e., empathizing). Compared with other scene categories, viewing natural social scenes activated regions associated with social cognition (e.g., dorsomedial prefrontal cortex and temporal poles). Moreover, activity in these regions during social scene viewing was strongly correlated with individual differences in empathizing. These findings offer neural evidence that observers spontaneously engage in social cognition when viewing complex social material but that the degree to which people do so is mediated by individual differences in trait empathizing.

Keywords: empathy, fMRI, individual differences, mentalizing, theory of mind

Introduction

People are remarkably adept at extracting social information from visual scenes. A rapid glance around the room at a dinner party is often all it takes to judge the overall mood and decide whether or not it is time to open up another bottle of wine. Research conducted in the last 15 years has shown that the visual system is highly specialized for the perception of social material, such as bodies, faces, and facial expressions. From these basic social features, people infer important information about the thoughts and intentions of others. However, it seems unlikely that the perception of these elementary social cues alone is sufficient for understanding the subtleties of complex social interactions. Making sense of social interactions requires going beyond the observable data and inferring intentions, beliefs, and desires—in short, attributing mental states (i.e., mentalizing; see Frith et al. 1991).

Recently, researchers have turned to the question of whether social information is preferentially processed when people view natural visual scenes. Studies of visual attention have demonstrated that observers automatically orient toward social information, such as faces and bodies, when viewing complex scenes and that this bias is detectable as early as the first saccade (Fletcher-Watson et al. 2008). Moreover, when viewing natural social scenes, observers spend a disproportionate amount of time looking at eyes and faces, almost to the exclusion of other content (Birmingham et al. 2008a, 2008b). This suggests that when viewing complex scenes involving people, observers spontaneously attempt to make sense of what is happening between characters in the scene by using features such as gaze direction and facial expression. That such inferences might occur spontaneously was hinted at over 60 years ago in the work of Heider and Simmel (1944). In their study, participants were asked to describe the motion of geometric shapes based on simple animations involving 2 triangles and a circle. One of these animations made it appear as though the triangles and the circle were interacting with each other. When participants described what was happening in this condition, they spontaneously developed complex narratives that often described 2 triangles caught in a rivalry over the affections of a small but not unattractive circle. Just as interesting is the description made by one participant, who went to great lengths to describe the animation in purely geometric terms. This subject's attempt ultimately proved futile as, seemingly despite herself, she began referring to one of the triangles in animate terms by describing how “he” is searching around for an opening to escape from inside a rectangle (Heider and Simmel 1944). This classic study demonstrates how difficult it can be to avoid attributing thoughts and intentions to anything that appears to have them, even 2D triangles and circles.

Over 15 years of neuroimaging studies on social cognition and mental state attribution have shown that inferring mental states is associated with activity in the dorsal medial prefrontal cortex (DMPFC), the anterior temporal poles, the temporoparietal junction (TPJ), and the precuneus. The involvement of these regions in social cognition has been replicated using a multitude of tasks, stimulus types, and imaging modalities (e.g., Fletcher et al. 1995; Castelli et al. 2002; Saxe and Kanwisher 2003; Jackson et al. 2006; Gobbini et al. 2007; Spreng et al. 2009). In particular, Mitchell et al. (2002, 2004, 2005) have shown across a series of studies that the DMPFC is involved in forming impressions of other people and in attending to social information about them.

Despite a wealth of research on the neural systems involved in social cognition, there have been surprisingly few studies examining how these regions are recruited when viewing natural social scenes. This is important because people tend not to encounter objects like faces in isolation but rather in social contexts that guide their interpretation of the target's mental and emotional states (e.g., Kim et al. 2004; Aviezer et al. 2008). Prior work using short video clips of 1 or 2 people has shown that regions involved in mental state attribution exhibit greater activity when participants passively view social interactions than when they view single-person clips (Iacoboni et al. 2004). In a similar vein, these same regions are engaged when participants passively view social animations modeled on those of Heider and Simmel (Gobbini et al. 2007; Wheatley et al. 2007). Although the majority of research on the neural basis of social cognition has examined how people infer intentions in tasks where participants are explicitly instructed to make mental state inferences, these and other studies suggest that regions involved in social cognition may be obligatorily recruited by complex social material (e.g., Iacoboni et al. 2004; Gobbini et al. 2007; Wheatley et al. 2007) or by tasks that strongly invite mental state reasoning (e.g., Spiers and Maguire 2006; Young and Saxe 2009). Less well understood is whether people spontaneously recruit these same brain regions when viewing social scenes while performing tasks that do not require social cognition and, if so, whether there are individual differences that mediate the degree to which people spontaneously activate these areas.

It seems likely that some individuals may process social information more readily than others. For instance, high-functioning individuals with autism exhibit deficits in both basic social perception and mental state attribution. Indeed, individuals with autism are less likely to look at faces not only when presented in isolation (Dalton et al. 2005) but also when part of a social scene (Klin et al. 2002). Moreover, neuroimaging evidence suggests that, compared with controls, those with autism underrecruit the DMPFC when viewing social animations that are similar to those used by Heider and Simmel (Castelli et al. 2002). Research by Baron-Cohen and colleagues has examined individual differences in traits that are related to autism. The empathizing quotient is a self-report measure of an individual's propensity for engaging in both emotional empathy (i.e., feeling the pain of others) and mentalizing (i.e., inferring the thoughts and intentions of others) and has been shown to reliably discriminate between healthy participants and high-functioning individuals with autism (Baron-Cohen et al. 2003; Baron-Cohen and Wheelwright 2004). Similarly, behavioral research using the Autism Quotient, a measure related to the empathizing quotient and sharing many of the same questions, found that individual differences in autistic traits in the normal population are negatively correlated with the ability to infer dynamic changes in emotional states in others (Bartz et al. 2010).

In the present study, we sought to investigate two understudied aspects of the neural basis of social cognition. First, we examined whether regions involved in making explicit mental state attributions are spontaneously recruited when people view socially complex scenes. Second, we examined whether brain activity in these areas is related to individual differences in trait empathizing. To do so, we recruited 48 male participants who were prescreened with the empathizing quotient (Baron-Cohen and Wheelwright 2004) and selected to span the full range of scale scores. The empathizing quotient was chosen over other commonly used measures of empathy (e.g., the Interpersonal Reactivity Index) because of its demonstrated ability to discriminate between individuals with high-functioning autism and healthy controls (Lombardo et al. 2007). Moreover, the empathizing quotient is one of the few measures designed to assess not only emotional empathy but also individual differences in mentalizing. Because a primary aim of this study was to investigate individual differences, we recruited a comparatively large sample of participants. This was motivated by recent reports suggesting that typical neuroimaging sample sizes are substantially underpowered for detecting even strong effects in correlational designs (e.g., Yarkoni 2009).

During functional neuroimaging, participants completed a simple categorization task in which they classified 4 types of visual scenes (animal, vegetable, mineral, and human social scenes) as belonging to the animal, vegetable, or mineral category. This categorization task served 2 purposes. First, it ensured that participants were alert and attending to the stimuli. Second, it provided a plausible cover story (i.e., examining how people play the 20 questions game, also sometimes called the “animal, vegetable, or mineral?” game), which made it unlikely that participants would infer the social nature of the task. We predicted that viewing social scenes would recruit regions involved in mental state attribution (e.g., DMPFC, temporal poles) and that individual differences in empathizing would correlate with activity in these regions when viewing social scenes.

Materials and Methods

Subjects

Forty-eight healthy right-handed male participants (mean age = 20 years; range: 18–28 years) with normal or corrected-to-normal visual acuity and no history of neurological problems participated in the present study. Participants were selected from a larger pool of male participants (n = 161) prescreened with the 60-item version of the empathizing quotient (Baron-Cohen and Wheelwright 2004) and were chosen to span the full range of empathizing scores (range: 10–74; mean: 36; SD: 14). Owing to gender differences in empathizing (Baron-Cohen and Wheelwright 2004), we restricted our sample to men. Participants remained unaware of these selection criteria until debriefing. Emotional empathy and cognitive empathy subscale scores of the empathizing quotient were calculated using the method outlined by Lawrence et al. (2004). All participants gave informed consent in accordance with the guidelines set by the Committee for the Protection of Human Subjects at Dartmouth College.

Stimuli

Stimuli consisted of 240 images of natural scenes, 60 from each of 4 categories: human social scenes, scenes of nonhuman animals, scenes of vegetables or plant life, and scenes of minerals (see representative stimuli in Fig. 1). These broad categories were chosen to correspond with those used in the popular “animal, vegetable, or mineral?” game, in which a person is given 20 questions to deduce what someone else is thinking. Human social scenes depicted social interactions or people displaying communicative expressions (e.g., smiling, talking). All images were 480 × 360 pixels at 72 dpi.

Figure 1.

Sample images from the categorization task. Participants were informed that the purpose of the study was to examine brain areas involved in basic categorization. The task consisted of 240 images of scenes (60 nonhuman animal, 60 vegetable, 60 mineral, and 60 human scenes) that could be categorized as either animal, vegetable, or mineral. Critically, scenes of people were categorized as animal, thus minimizing the likelihood that participants would infer the social nature of the task.

Stimulus presentation and response recording were controlled by Neurobehavioral Systems Presentation software (www.neurobs.com). Participants responded via key-press on a pair of Lumina LU-400 functional magnetic resonance imaging (fMRI) response pads. An Epson ELP-7000 LCD projector was used to project stimuli onto a screen at the end of the magnet bore which participants viewed via an angled mirror mounted on the head coil.

Task and Experimental Design

At the scanning session, each participant was told that they would complete a simple visual categorization task in which they would sort images into animal, vegetable, or mineral categories, similar to the 20 questions game. No mention was made of social scenes, empathy, or social perception, in order to keep participants naïve to the experimental manipulation. Before the functional runs, participants completed a short practice session of 11 images while in the scanner. During both the practice and the main task, all participants spontaneously categorized humans as belonging to the animal category. Behavioral data from one participant were lost due to a computer malfunction.

The experimental paradigm used a rapid event-related design with trials consisting of a single image displayed for 2000 ms. The order of trial types and the duration of the interstimulus interval (between 500 ms and 8000 ms) were pseudorandomized. During the interstimulus interval, null event trials consisting of a white fixation cross against a black background were shown. In the present study, the task to null event ratio was 0.6 and the average duration of the null events was 4000 ms. These values have previously been shown to maximize detection of the hemodynamic response at stimulus durations of 2000 ms (Birn et al. 2002).
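The trial structure described above can be illustrated with a short Python sketch that builds one run's schedule. The split of the 240 images into 4 runs of 60 trials (15 per category), the specific pool of ISI values, and the helper name `make_run` are assumptions for illustration only; the pseudorandomization constraints actually used in the study are not reported here.

```python
import random

# Assumed layout (not specified in the text): 240 images over 4 runs,
# giving 60 trials per run and 15 per scene category.
CATEGORIES = ["human", "animal", "vegetable", "mineral"]
TRIALS_PER_CATEGORY = 15
STIM_MS = 2000  # each image is shown for 2000 ms

# Hypothetical ISI pool: every value lies in the reported 500-8000 ms range
# and the pool mean is exactly 4000 ms, the reported average null duration.
ISI_POOL_MS = [500, 2000, 3500, 4500, 6000, 7500]


def make_run(seed):
    """Return (onset_ms, category, isi_ms) tuples for one pseudorandomized run."""
    rng = random.Random(seed)
    trials = [cat for cat in CATEGORIES for _ in range(TRIALS_PER_CATEGORY)]
    rng.shuffle(trials)  # pseudorandom order of trial types

    # Repeat the pool until it covers every trial, then shuffle it, so the
    # run-level mean ISI stays exactly 4000 ms.
    isis = ISI_POOL_MS * (len(trials) // len(ISI_POOL_MS))
    rng.shuffle(isis)

    schedule, onset = [], 0
    for category, isi in zip(trials, isis):
        schedule.append((onset, category, isi))
        onset += STIM_MS + isi  # image, then fixation-cross null event
    return schedule


run = make_run(seed=1)
print(len(run), sum(isi for _, _, isi in run) / len(run))  # → 60 4000.0
```

Under these assumptions a run lasts 60 × (2000 + 4000) ms = 360 s, which fits within one 150-volume run at a TR of 2.5 s (375 s).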

Image Acquisition

Magnetic resonance imaging was conducted with a Philips Achieva 3.0-T scanner using an 8-channel phased array coil. Structural images were acquired using a T1-weighted magnetization-prepared rapid gradient echo protocol (160 sagittal slices; repetition time [TR]: 9.9 ms; echo time [TE]: 4.6 ms; flip angle: 8°; 1 × 1 × 1 mm voxels). Functional images were acquired using a T2*-weighted echo-planar sequence (TR: 2500 ms; TE: 35 ms; flip angle: 90°; field of view: 24 cm). For each participant, 4 runs of 150 whole-brain volumes (30 axial slices per whole-brain volume, 4.5 mm thickness, 0.5 mm gap, 3 × 3 mm in-plane resolution) were collected.
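The acquisition parameters imply a few derived quantities that are easy to check. The sketch below assumes that the 0.5 mm gap falls only between adjacent slices and that slices are spread evenly across each TR; neither detail is stated in the text.

```python
# Parameters taken from the acquisition description above.
N_SLICES = 30
SLICE_MM, GAP_MM = 4.5, 0.5
TR_S, VOLUMES, RUNS = 2.5, 150, 4

# Superior-inferior coverage, assuming gaps only between adjacent slices.
coverage_mm = N_SLICES * SLICE_MM + (N_SLICES - 1) * GAP_MM
# Effective through-plane sampling distance (slice center to slice center).
slice_spacing_mm = SLICE_MM + GAP_MM
# Duration of one functional run and of all functional runs together.
run_s = VOLUMES * TR_S
total_min = RUNS * run_s / 60
# Time between successive slice acquisitions, assuming even spacing over the TR.
slice_interval_ms = TR_S * 1000 / N_SLICES

print(coverage_mm, slice_spacing_mm, run_s, total_min, round(slice_interval_ms, 1))
# → 149.5 5.0 375.0 25.0 83.3
```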

Image Preprocessing and Analysis

fMRI data were analyzed using the general linear model (GLM) for event-related designs in SPM8 (Wellcome Department of Cognitive Neurology). For each functional run, data were preprocessed to remove sources of noise and artifact. Images were corrected for differences in acquisition time between slices and realigned within and across runs via a rigid-body transformation to correct for head movement. Images were then unwarped to reduce residual movement-related image distortions not corrected by realignment. Functional data were normalized into a standard stereotaxic space (3 mm isotropic voxels) based on the SPM8 echo-planar imaging template, which conforms to the ICBM 152 brain template space (Montreal Neurological Institute) and approximates the Talairach and Tournoux atlas space. Finally, normalized images were spatially smoothed with a Gaussian kernel (8-mm full-width at half-maximum) to increase the signal-to-noise ratio and to reduce the impact of anatomical variability not corrected for by stereotaxic normalization.
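The 8-mm FWHM figure is usually converted to a Gaussian standard deviation before filtering. The NumPy sketch below shows that conversion and a separable 3-D Gaussian filter on a 3-mm grid; it is a generic illustration, not SPM8's own smoothing routine, and the function names are hypothetical.

```python
import math

import numpy as np


def fwhm_to_sigma(fwhm_mm):
    """Convert full width at half maximum to a Gaussian standard deviation."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))  # FWHM ≈ 2.3548 * sigma


def gaussian_kernel_1d(sigma_vox, truncate=3.0):
    """Normalized 1-D Gaussian kernel, truncated at `truncate` standard deviations."""
    radius = int(math.ceil(truncate * sigma_vox))
    x = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-0.5 * (x / sigma_vox) ** 2)
    return kernel / kernel.sum()


def smooth_volume(volume, fwhm_mm=8.0, voxel_mm=3.0):
    """Separable Gaussian smoothing of a 3-D volume.

    A 3-D Gaussian factorizes into three 1-D Gaussians, so we filter along
    each axis in turn. Each axis must be at least as long as the kernel.
    """
    sigma_vox = fwhm_to_sigma(fwhm_mm) / voxel_mm
    kernel = gaussian_kernel_1d(sigma_vox)
    out = np.asarray(volume, dtype=float)
    for axis in range(out.ndim):
        out = np.apply_along_axis(
            lambda v: np.convolve(v, kernel, mode="same"), axis, out
        )
    return out


# A single bright voxel spreads into a blob; total signal is preserved
# as long as the kernel does not reach the volume edge.
vol = np.zeros((21, 21, 21))
vol[10, 10, 10] = 1.0
smoothed = smooth_volume(vol)
print(round(fwhm_to_sigma(8.0), 3), round(float(smoothed.sum()), 6))
```

With 3-mm voxels, an 8-mm FWHM corresponds to a standard deviation of roughly 3.4 mm, or about 1.13 voxels.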

For each participant, a GLM was constructed to investigate category-specific brain activity. Task regressors for each scene category were convolved with a canonical hemodynamic response function (HRF), and the model also included covariates of no interest (a session mean, a linear trend to account for low-frequency drift, and 6 movement parameters derived from realignment corrections). The model was used to compute parameter estimates (β) and contrast images (containing weighted parameter estimates) for each visual scene category at each voxel. To account for differences in reaction times (RTs) across categories, each event was given a duration equal to the RT for categorizing that scene. For events with no response, the RT was replaced with the within-condition mean RT. This method of modeling RT differences by varying the duration of each event has been shown in both simulated and empirical data to be superior to parametric regression methods in which RT modulates the height of the HRF (Grinband et al. 2008).
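To make the RT-duration approach concrete, the sketch below builds one condition's regressor: each trial is a boxcar whose width equals its RT, missing RTs are replaced with the condition mean, and the result is convolved with a double-gamma HRF and sampled once per TR. The HRF parameters (response gamma with shape 6, undershoot gamma with shape 16, undershoot ratio 1/6) follow commonly cited SPM defaults but are assumptions here, as are the function names and the 0.1-s modeling resolution.

```python
import math

import numpy as np


def canonical_hrf(dt, length_s=32.0):
    """Double-gamma HRF sampled every `dt` seconds (SPM-like defaults assumed)."""
    t = np.arange(0.0, length_s, dt)
    peak = t**5 * np.exp(-t) / math.gamma(6)          # gamma pdf, shape 6, scale 1 s
    undershoot = t**15 * np.exp(-t) / math.gamma(16)  # gamma pdf, shape 16, scale 1 s
    hrf = peak - undershoot / 6.0
    return hrf / hrf.sum()


def impute_missing_rts(rts_s):
    """Replace missing RTs (NaN) with the within-condition mean RT."""
    rts = np.asarray(rts_s, dtype=float)
    return np.where(np.isnan(rts), np.nanmean(rts), rts)


def rt_duration_regressor(onsets_s, rts_s, n_scans, tr=2.5, dt=0.1):
    """Boxcar of width RT per trial, convolved with the HRF, one value per scan."""
    rts = impute_missing_rts(rts_s)
    n_fine = int(round(n_scans * tr / dt))
    boxcar = np.zeros(n_fine)
    for onset, rt in zip(onsets_s, rts):
        start = int(round(onset / dt))
        stop = max(start + 1, int(round((onset + rt) / dt)))
        boxcar[start:stop] = 1.0
    predicted = np.convolve(boxcar, canonical_hrf(dt))[:n_fine]
    scan_idx = np.round(np.arange(n_scans) * tr / dt).astype(int)
    return predicted[scan_idx]


# Longer RTs yield larger predicted responses, which is what lets the model
# absorb RT differences between categories.
fast = rt_duration_regressor([10.0], [0.8], n_scans=150)
slow = rt_duration_regressor([10.0], [1.0], n_scans=150)
print(fast.max() < slow.max())
```

Because the predicted amplitude grows with event duration, slower categories receive larger predicted responses, so RT differences are absorbed by the model rather than by the category parameter estimates. This is sometimes called a variable-epoch model.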

Contrast images for each subject, comparing task effects for each condition with baseline (null fixation events), were then submitted to a second-level repeated measures analysis of variance (ANOVA) with subject effects explicitly modeled to account for between-subject differences in mean response. This generated a statistical parametric map of F values for the main effect of condition. Monte Carlo simulations using AFNI's AlphaSim were used to calculate the minimum cluster size, at an uncorrected voxelwise threshold of P < 0.001, required for a whole-brain corrected threshold of P < 0.05. Simulations (10 000 iterations) were performed on the volume of our study-wide whole-brain mask (96 061 voxels, or 2 593 657 mm³) using smoothness estimated from the GLM residuals, yielding a minimum cluster size of 59 contiguous voxels. For visualization, statistical parametric maps are overlaid onto inflated cortical renderings of the Colin atlas using Caret 5.6 (Van Essen et al. 2001).
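The logic of the AlphaSim correction can be sketched as a Monte Carlo loop: simulate smooth Gaussian noise, threshold it at the voxelwise P, record the largest surviving cluster, and take the smallest cluster size that is reached in no more than 5% of simulations. The sketch below is a simplified stand-in, not AFNI's implementation; the small box-shaped volume, the smoothness in voxels, the one-sided threshold, and face-adjacent (6-neighbor) connectivity are all assumptions, and it will not reproduce the 59-voxel value reported above.

```python
import math
from collections import deque
from statistics import NormalDist

import numpy as np

NEIGHBORS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def largest_cluster(mask):
    """Size of the largest face-connected cluster in a boolean 3-D mask."""
    seen = np.zeros(mask.shape, dtype=bool)
    best = 0
    for start in zip(*np.nonzero(mask)):
        if seen[start]:
            continue
        seen[start] = True
        queue, size = deque([start]), 0
        while queue:  # breadth-first flood fill
            x, y, z = queue.popleft()
            size += 1
            for dx, dy, dz in NEIGHBORS:
                nb = (x + dx, y + dy, z + dz)
                if all(0 <= nb[i] < mask.shape[i] for i in range(3)) and mask[nb] and not seen[nb]:
                    seen[nb] = True
                    queue.append(nb)
        best = max(best, size)
    return best


def min_cluster_size(shape=(24, 24, 24), fwhm_vox=2.0, p_voxel=0.001,
                     alpha=0.05, n_iter=200, seed=0):
    """Smallest cluster size k with P(max null cluster >= k) <= alpha."""
    rng = np.random.default_rng(seed)
    z_thr = NormalDist().inv_cdf(1.0 - p_voxel)  # one-sided voxelwise threshold
    sigma = fwhm_vox / (2.0 * math.sqrt(2.0 * math.log(2.0)))
    x = np.arange(-math.ceil(3 * sigma), math.ceil(3 * sigma) + 1)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    kernel /= kernel.sum()

    maxima = []
    for _ in range(n_iter):
        noise = rng.standard_normal(shape)
        for axis in range(3):  # separable Gaussian smoothing
            noise = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="same"), axis, noise)
        noise = (noise - noise.mean()) / noise.std()  # restore unit variance
        maxima.append(largest_cluster(noise > z_thr))

    maxima = np.array(maxima)
    # Always returns: at k = max + 1 the exceedance rate is 0.
    for k in range(1, int(maxima.max()) + 2):
        if np.mean(maxima >= k) <= alpha:
            return k
```

In practice the noise smoothness would come from the GLM residuals and the simulation would run inside the study's brain mask, as described above; both strongly influence the resulting threshold.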

Eight-millimeter spherical regions of interest (ROIs) were centered on the peak voxel of task-sensitive clusters from the repeated measures ANOVA. For clusters spanning multiple regions, additional ROIs were centered on within-cluster local maxima. In this way, ROIs are selected because they demonstrate a main effect of some category but are unbiased with respect to tests of simple effects (e.g., human vs. nonhuman scenes). For each ROI, parameter estimates were extracted from each participant for each category. A difference score was created between parameter estimates for human scenes minus those for all 3 nonhuman categories (animal, vegetable, and mineral). Difference scores were then submitted to an offline one-sample t-test to identify ROIs that were preferentially activated by human versus nonhuman scenes. These same ROIs were then examined for their correlation with trait empathizing. Because ROIs are defined as task-sensitive regions demonstrating a main effect of scene type, the correlation with trait empathizing is independent of the initial ROI selection criteria.
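A minimal NumPy sketch of the ROI steps follows: build an 8-mm sphere on the 3-mm grid, average each subject's parameter estimates inside it, form the human-minus-nonhuman difference, and test it against zero. Working in voxel indices rather than MNI millimeters, taking the difference against the mean of the three nonhuman categories, and the helper names are assumptions made for illustration.

```python
import numpy as np


def sphere_offsets(radius_mm=8.0, voxel_mm=3.0):
    """Integer voxel offsets whose centers lie within radius_mm of the sphere center."""
    r = int(radius_mm // voxel_mm)
    g = np.arange(-r, r + 1)
    dx, dy, dz = np.meshgrid(g, g, g, indexing="ij")
    keep = (dx**2 + dy**2 + dz**2) * voxel_mm**2 <= radius_mm**2
    return np.stack([dx[keep], dy[keep], dz[keep]], axis=1)


def roi_mean(beta_volume, center_ijk, offsets):
    """Mean parameter estimate over sphere voxels that fall inside the volume."""
    idx = offsets + np.asarray(center_ijk)
    inside = np.all((idx >= 0) & (idx < np.asarray(beta_volume.shape)), axis=1)
    idx = idx[inside]
    return float(beta_volume[idx[:, 0], idx[:, 1], idx[:, 2]].mean())


def human_minus_nonhuman(roi_betas):
    """roi_betas: (n_subjects, 4) ordered human, animal, vegetable, mineral."""
    b = np.asarray(roi_betas, dtype=float)
    return b[:, 0] - b[:, 1:].mean(axis=1)


def one_sample_t(x):
    """t statistic for H0: mean(x) == 0, with n - 1 degrees of freedom."""
    x = np.asarray(x, dtype=float)
    return float(x.mean() / (x.std(ddof=1) / np.sqrt(x.size)))


print(len(sphere_offsets()))  # → 81 voxels in an 8-mm sphere on a 3-mm grid
```

Averaging the three nonhuman categories before subtracting gives the same one-sample t as a [3, −1, −1, −1] contrast, because the two difference scores differ only by a constant factor.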

In addition, we performed a second random effects analysis in which each subject's parameter estimates for the contrast of human versus nonhuman scenes were entered into a regression model with trait empathizing scores as a covariate. Statistical maps were generated for areas showing a significant correlation between trait empathizing scores and activity during the categorization of human versus nonhuman scenes (P < 0.05 corrected, using the same parameters as above). This exploratory analysis differs from the previous ROI-based correlation in that it is not restricted to the task-sensitive ROIs revealed by the repeated measures ANOVA but instead shows the regions where the correlation is strongest.
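The whole-brain regression amounts to fitting, at every voxel, a model with an intercept and the empathizing score, then testing the score's slope. The sketch below assumes that the per-subject contrast images have already been flattened into a subjects × voxels array and uses synthetic data; the function name is hypothetical, and the cluster-extent correction described above would still be applied to the resulting t map.

```python
import numpy as np


def slope_t_map(contrasts, scores):
    """t statistic for the covariate slope at every voxel.

    contrasts: (n_subjects, n_voxels) human-minus-nonhuman estimates.
    scores:    (n_subjects,) trait empathizing scores.
    """
    Y = np.asarray(contrasts, dtype=float)
    x = np.asarray(scores, dtype=float)
    n = x.size
    X = np.column_stack([np.ones(n), x - x.mean()])  # intercept + centered covariate
    beta, *_ = np.linalg.lstsq(X, Y, rcond=None)
    resid = Y - X @ beta
    dof = n - X.shape[1]  # n - 2
    sigma2 = (resid**2).sum(axis=0) / dof
    c = np.array([0.0, 1.0])  # contrast selecting the slope
    var_factor = c @ np.linalg.inv(X.T @ X) @ c
    return (c @ beta) / np.sqrt(sigma2 * var_factor)


rng = np.random.default_rng(0)
scores = rng.uniform(10, 74, size=48)  # synthetic scores spanning the reported range
data = 0.01 * scores[:, None] + rng.standard_normal((48, 5))  # synthetic, not study data
print(slope_t_map(data, scores).round(2))
```

In simple regression this slope t equals r·√(n − 2)/√(1 − r²), so the voxelwise map and the ROI correlations rest on the same underlying statistic.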

Results

Behavioral Results

Overall, participants categorized the scenes with a high degree of accuracy (>95% correct). A repeated measures ANOVA demonstrated a main effect of category (F3,138 = 14.71, P < 0.001), such that participants were more accurate at categorizing human social scenes (M = 98.4%) than scenes of animals (t46 = 4.25, P < 0.001; M = 96.5%), vegetables (t46 = 3.54, P = 0.001; M = 96.8%), and minerals (t46 = 5.01, P < 0.001; M = 94.8%).

RTs during categorization followed a pattern similar to that of accuracy (F3,138 = 36.47, P < 0.001), with participants categorizing animate scenes more rapidly (RTs: human < animal < vegetable and mineral). Participants had shorter RTs for categorizing human social scenes (M = 835 ms) than for animal (t46 = 6.84, P < 0.001; M = 903 ms), vegetable (t46 = 8.82, P < 0.001; M = 930 ms), and mineral scenes (t46 = 7.72, P < 0.001; M = 930 ms). RTs were likewise shorter for animal scenes than for vegetable (t46 = 3.0, P = 0.005) and mineral scenes (t46 = 2.76, P = 0.008). There was no difference in RTs between vegetable and mineral scenes (t46 = −0.4, P = 0.97).
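A one-way repeated measures ANOVA separates condition variance from between-subject variance before forming F. The NumPy sketch below uses a hypothetical function name and applies no sphericity correction, since none is reported above. It also shows why 47 participants with usable behavioral data and 4 categories give the reported degrees of freedom (3, 138).

```python
import numpy as np


def rm_anova_oneway(data):
    """One-way repeated measures ANOVA.

    data: (n_subjects, k_conditions) array, e.g. accuracy per scene category.
    Returns (F, df_effect, df_error).
    """
    y = np.asarray(data, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_cond = n * ((y.mean(axis=0) - grand) ** 2).sum()
    ss_subj = k * ((y.mean(axis=1) - grand) ** 2).sum()  # removed from the error term
    ss_error = ((y - grand) ** 2).sum() - ss_cond - ss_subj
    df_effect, df_error = k - 1, (k - 1) * (n - 1)
    return (ss_cond / df_effect) / (ss_error / df_error), df_effect, df_error


# Toy example with 3 subjects x 3 conditions (not study data).
F, df1, df2 = rm_anova_oneway([[1, 2, 3], [2, 3, 5], [3, 4, 4]])
print(round(F, 3), df1, df2)  # → 9.0 2 4
```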

Trait empathizing scores were unrelated to accuracy and response latency during categorization of all 4 scene types (all correlations of empathizing with accuracy and with RT, P > 0.3). Thus, any differences in brain activity related to trait empathizing are unlikely to be due to differences in perceived task difficulty, engagement, or time on task.

fMRI Results

Regions Demonstrating Greater Activity to Social Scenes

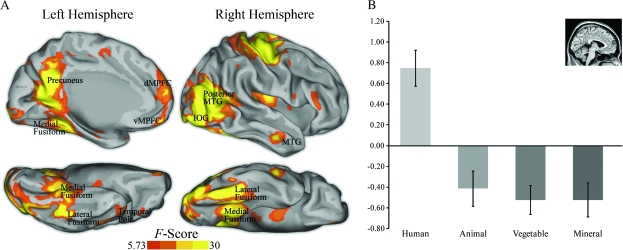

A voxelwise repeated measures ANOVA revealed several brain areas demonstrating a main effect of category, including regions involved in visual scene perception (e.g., parahippocampal place area, retrosplenial cortex), the perception of human form (e.g., fusiform, posterior middle temporal gyrus/extrastriate body area [MTG/EBA], amygdala, superior temporal sulcus [STS]), and social cognition (e.g., DMPFC, precuneus, temporal poles) (Fig. 2A). ROI analysis comparing parameter estimates for human versus nonhuman scenes revealed that the majority of these regions were preferentially active for human scenes (Table 1). In particular, the DMPFC demonstrated increased activity to human versus nonhuman scenes (Fig. 2B). This overall pattern of results was corroborated by a logical “and” conjunction analysis of every pairwise comparison of human scenes versus other categories (Supplementary text and Fig. S1) and by a direct contrast of human and nonhuman animal scenes (Fig. 3). This last comparison is of special interest because participants made the same behavioral response to both human scenes and nonhuman animal scenes (i.e., “animal”).

Figure 2.

(A) Brain regions showing a main effect of category from a whole-brain voxelwise repeated measures ANOVA (P < 0.05, corrected). Statistical maps of left medial hemisphere, right lateral hemisphere, and ventral surfaces of both hemispheres are overlaid onto inflated cortical renderings. (B) ROI analysis of parameter estimates in the DMPFC showed that this area was preferentially active to human social versus nonsocial scenes (t47 = 6.76, P < 0.001). Coordinates (x, y, z) are in Montreal Neurological Institute stereotaxic space. vMPFC = ventral medial prefrontal cortex.

Table 1.

Brain regions demonstrating a main effect of category and their pattern of response

| Brain region | Side | BA | F value | x | y | z | Human vs. nonhuman: t value (or pattern of response) |
|---|---|---|---|---|---|---|---|
| Regions from the main effect of category that also show a preference for human versus nonhuman scenes | | | | | | | |
| DMPFC | L | 10 | 22.1 | −3 | 63 | 18 | 6.8** |
| Temporal pole | L | 38 | 17.3 | −42 | 15 | −39 | 7.2** |
| Temporal pole | R | 38 | 10.3 | 42 | 18 | −39 | 5.1** |
| Precuneus | R | 31 | 53.9 | 3 | −60 | 24 | 11.0** |
| Lateral fusiform | L | 37 | 57.7 | −42 | −45 | −24 | 9.5** |
| Lateral fusiform | R | 37 | 91.8 | 42 | −57 | −24 | 13.1** |
| MTG (EBA) | L | 39 | 77.5 | −51 | −69 | 12 | 13.2** |
| MTG (EBA) | R | 39 | 99.2 | 51 | −63 | 6 | 12.5** |
| Lateral inferior occipital gyrus | L | 19 | 74.0 | −54 | −84 | 0 | 9.8** |
| Lateral inferior occipital gyrus | R | 19 | 91.9 | 48 | −81 | −3 | 11.5** |
| MTG/STS | L | 21 | 27.8 | −57 | −6 | −21 | 8.2** |
| MTG/STS | R | 20 | 29.6 | 57 | −9 | −27 | 8.4** |
| Ventral medial prefrontal cortex | — | 11 | 22.8 | 0 | 57 | −21 | 7.7** |
| Amygdala/hippocampus | R | — | 20.6 | 21 | −6 | −21 | 6.3** |
| Inferior frontal gyrus | R | 44 | 10.7 | 45 | 18 | 21 | 4.9** |
| Inferior frontal gyrus | R | 45 | 16.3 | 54 | 33 | 6 | 4.6** |
| Regions from the main effect of category that did not show a preference for human versus nonhuman scenes and their pattern of response | | | | | | | |
| Retrosplenial cortex | R | 30 | 59.2 | 18 | −57 | 12 | HM > AV |
| Retrosplenial cortex | L | 30 | 51.1 | −18 | −60 | 9 | HM > AV |
| Medial fusiform extending into PHG/PPA | L | 37 | 50.8 | −27 | −45 | −12 | M > HAV |
| Medial fusiform extending into PHG/PPA | R | 37 | 49.2 | 27 | −45 | −12 | M > HAV |
| Supramarginal gyrus/IPL | R | 40 | 9.1 | 48 | −39 | 42 | AVM > H |

Note: Regions showing a main effect of category from a voxelwise repeated measures ANOVA (P < 0.05, corrected) are listed along with the best estimate of their location. Coordinates are in Montreal Neurological Institute stereotaxic space. t Values from the comparison of human versus nonhuman scenes are listed for 8-mm spherical ROIs centered on the peak voxel for each region. Motor regions tracking response hand are listed in Supplementary Table S1. BA = approximate Brodmann's area; PHG = parahippocampal gyrus; PPA = parahippocampal place area; IPL = inferior parietal lobule; H = human scenes; A = animal scenes; V = vegetable scenes; M = mineral scenes.

**P < 0.001.

Figure 3.

Results of a whole-brain analysis comparing human social scenes with nonhuman animal scenes (P < 0.05, corrected). Statistical maps of left medial hemisphere and right lateral hemisphere are overlaid onto inflated cortical renderings. This contrast replicates the ROI analysis outlined in the results but demonstrates that the findings obtain even when comparing 2 “animate” categories for which participants made the same behavioral response (i.e., categorized as animal).

Finally, because some of these regions are also known to respond to depictions of animals, we calculated difference scores for nonhuman animal versus nonanimal categories. Although in all cases these regions responded more to human scenes than to animal scenes, we nevertheless found a graded response in the lateral fusiform, MTG/EBA area, inferior occipital gyrus (IOG), right amygdala, and precuneus, following a pattern of human scenes > animal scenes > vegetable and mineral scenes (Supplementary Table S2; summarized in Table 3).

Table 3.

Summary of results for ROIs across all analyses

| Brain region | Side | BA | Pattern of response | Empathizing (r) | Cognitive empathy (r) | Emotional empathy (r) |
|---|---|---|---|---|---|---|
| DMPFC | — | 10 | H > AVM | 0.47*** | 0.39** | 0.49*** |
| Temporal pole | L | 38 | H > AVM | 0.45*** | 0.39** | 0.41** |
| Temporal pole | R | 38 | H > AVM | 0.39** | 0.21 | 0.47*** |
| Precuneus | — | 31 | H > A > VM | 0.06 | −0.04 | 0.08 |
| Lateral fusiform | L | 37 | H > A > VM | 0.16 | 0.13 | 0.14 |
| Lateral fusiform | R | 37 | H > A > VM | 0.30* | 0.30* | 0.18 |
| MTG (EBA) | L | 39 | H > A > VM | 0.10 | 0.05 | 0.21 |
| MTG (EBA) | R | 39 | H > A > VM | 0.34* | 0.29* | 0.33* |
| Lateral inferior occipital gyrus | L | 19 | H > A > VM | 0.12 | 0.11 | 0.11 |
| Lateral inferior occipital gyrus | R | 19 | H > A > VM | 0.16 | 0.13 | 0.13 |
| MTG/STS | L | 21 | H > AVM | 0.07 | −0.02 | 0.16 |
| MTG/STS | R | 20 | H > AVM | 0.04 | 0.07 | 0.13 |
| Ventral medial prefrontal cortex | — | 11 | H > AVM | 0.07 | −0.06 | 0.22 |
| Amygdala/hippocampus | R | — | H > A > VM | 0.09 | 0.01 | 0.12 |
| Inferior frontal gyrus | R | 44 | H > A > VM | 0.10 | 0.05 | 0.28 |
| Inferior frontal gyrus | R | 45 | H > A > VM | 0.27 | 0.16 | 0.31* |

Note: ROIs are derived from a statistical map of the main effect of category from a voxelwise repeated measures ANOVA (P < 0.05, corrected) and are listed along with the best estimate of their location. Center coordinates of spherical ROIs can be found in Table 1. BA = approximate Brodmann's area; H = human; A = animal; V = vegetable; M = mineral.

*P < 0.05, **P < 0.01, ***P < 0.001.

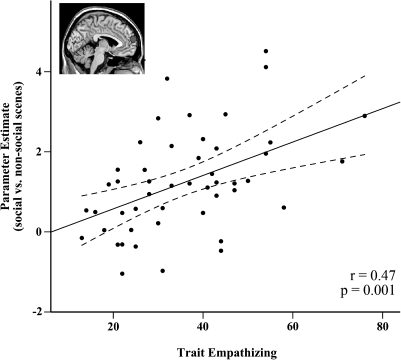

Regions Demonstrating a Correlation with Trait Empathizing

We examined the relationship between trait empathizing and activity to human versus nonhuman scenes in the DMPFC and in other regions associated with social cognition that also demonstrated a preference for human versus nonhuman scenes (i.e., temporal poles, precuneus). Trait empathizing was positively correlated with activity in the DMPFC (r = 0.47, P = 0.001; Fig. 4) and in the temporal poles bilaterally (left: r = 0.45, P = 0.001; right: r = 0.38, P = 0.007). Trait empathizing was also correlated with activity in 2 regions involved in the perception of human form: the right lateral fusiform (r = 0.3, P = 0.04) and the right posterior MTG/EBA area (r = 0.34, P = 0.018). No other regions showed a significant relationship with empathizing. In these regions, the relationship with trait empathizing was specific to human social scenes and did not generalize to nonhuman animal versus nonanimal scenes (all r < 0.09, P > 0.56, except the right posterior MTG/EBA area: r = 0.21, P = 0.16). Nor was trait empathizing correlated with the animal versus nonanimal response in ROIs that showed greater activity to nonhuman animal than to vegetable and mineral scenes (e.g., lateral fusiform, IOG, MTG/EBA; see Supplementary Table S2).
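The reported P values for these correlations follow from converting r to a t statistic with n − 2 = 46 degrees of freedom. The standard-library sketch below integrates the t density numerically with Simpson's rule rather than calling a statistics package; the function names are hypothetical, and small differences from the reported values reflect rounding of r.

```python
import math


def t_from_r(r, n):
    """t statistic for a Pearson correlation r computed from n pairs."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


def t_pdf(x, df):
    """Density of Student's t distribution."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c) * (1.0 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df, steps=2000):
    """Two-tailed P = 1 - 2 * P(0 < T < |t|), integrated with Simpson's rule."""
    b = abs(t)
    h = b / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 1.0 - 2.0 * total * h / 3.0


for r in (0.47, 0.34, 0.30):  # DMPFC, right MTG/EBA, right lateral fusiform
    t = t_from_r(r, 48)
    print(r, round(t, 2), round(two_tailed_p(t, 46), 4))
```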

Figure 4.

Correlation between trait empathizing and the blood oxygen level–dependent response difference to human versus nonhuman scenes in the DMPFC ROI (−3, 63, 18). Inset shows location of ROI. Coordinates (x, y, z) are in Montreal Neurological Institute stereotaxic space.

For areas that demonstrated a relationship with trait empathizing, we also correlated the emotional empathy and cognitive empathy subscales of the empathizing quotient with activity to human versus nonhuman scenes. The DMPFC and the temporal poles bilaterally showed a relationship with emotional empathy, whereas only the DMPFC and the left temporal pole were correlated with cognitive empathy. A summary of these and all other findings can be found in Table 3.

These results indicate that the relationship between trait empathizing and activity in regions involved in social cognition is specific to human social scenes. A more stringent test, however, is to examine the relationship between trait empathizing and activity when viewing human social versus nonhuman animal scenes, because both scene types depict animals (human or nonhuman) and elicit the same behavioral response. Replicating the main analysis, trait empathizing again correlated with activity in the DMPFC (r = 0.39, P = 0.007) and the left temporal pole (r = 0.29, P = 0.046), with a trend-level correlation in the right temporal pole (r = 0.27, P = 0.063). The relationship with trait empathizing in the right lateral fusiform was also replicated (r = 0.30, P = 0.036), but the correlation in the right MTG/EBA area failed to reach significance (r = 0.24, P = 0.095).

Finally, a whole-brain regression analysis between trait empathizing and activity during social versus nonsocial scenes (P < 0.05, corrected) replicated the ROI-based analysis above in the DMPFC and the right lateral fusiform (Table 2, Supplementary Fig. S2). Moreover, these effects were specific to a positive relation with empathizing: the opposite regression (i.e., testing for areas showing increased activity with lower empathizing scores) did not reveal any region with an increased response to social versus nonsocial scenes.

Table 2.

Brain regions demonstrating a correlation between social versus nonsocial scenes and trait empathizing (whole-brain regression)

| Brain region | Side | BA | t Value | Peak x | Peak y | Peak z |
|---|---|---|---|---|---|---|
| DMPFC | L | 10 | 4.61 | −12 | 60 | 15 |
| DMPFC | L | 8 | 4.36 | −6 | 42 | 42 |
| Lateral fusiform | R | 19 | 4.50 | 33 | −63 | −15 |

Note: Regions whose response to social versus nonsocial scenes correlated with trait empathizing in a voxelwise regression (P < 0.05, corrected) are listed along with the best estimate of their location. Coordinates are in Montreal Neurological Institute stereotaxic space. BA = approximate Brodmann's area.

Discussion

Over a decade of research has shown that engaging in mentalizing or person perception recruits a system of regions consisting of the DMPFC, temporal poles, precuneus, and the temporoparietal junction. Although person perception outside the laboratory usually occurs spontaneously, the extant research has primarily used tasks that explicitly ask subjects to engage in social cognition. In this study, we examined individual differences in the spontaneous recruitment of regions involved in social cognition while participants viewed natural social scenes. Compared with nonsocial scenes, categorizing social scenes recruited both regions involved in social cognition (DMPFC, temporal poles, precuneus) and areas involved in the visual perception of social features such as faces and bodies (e.g., lateral fusiform and posterior MTG). ROI analysis demonstrated that activity in the DMPFC, temporal poles, and lateral fusiform was strongly correlated with individual differences in empathizing. This relationship was specific to social scenes and did not generalize to nonhuman animal versus nonanimal scenes. Moreover, the correlation held for the comparison of human social scenes with nonhuman animal scenes. This last contrast is especially noteworthy because participants made identical behavioral responses to social and nonhuman animal scenes but exhibited a markedly different pattern of brain activity when doing so.

We also found that a subset of inferotemporal regions demonstrated a graded response to animate versus inanimate categories. The posterior MTG/STS and the right lateral fusiform both responded most strongly to human scenes, followed by nonhuman animal scenes and then by inanimate categories (i.e., vegetable and mineral scenes). The sensitivity of these areas to animal scenes is unsurprising given the number of features shared between humans and animals. Moreover, similar findings have been reported for animal images in other studies of category perception (Chao et al. 1999; Wiggett et al. 2009). Of particular interest is that the relationship between trait empathizing and activity in these regions did not generalize to nonhuman animal scenes, suggesting that this relationship is specific to processing human social cues.

The DMPFC and Spontaneous Social Cognition

The involvement of a “mentalizing system” in tasks that explicitly require mental state inferences or forming impressions of people has been well characterized (Fletcher et al. 1995; Gallagher et al. 2000; Saxe and Kanwisher 2003; Mitchell et al. 2005; Jackson et al. 2006; Gobbini et al. 2007; Spreng et al. 2009). A smaller number of studies have also examined the response of these brain regions in tasks that strongly invite spontaneous social cognition but do not have any explicit task demands. For instance, freely viewing Heider and Simmel-like social animations has been shown to activate the mentalizing system (Castelli et al. 2002; Gobbini et al. 2007; Wheatley et al. 2007). More germane to the current findings is research showing that the mentalizing system is involved in spontaneously representing person information. For example, compared with familiar famous faces, viewing faces of people about whom observers have a wealth of person knowledge (e.g., family members) activates the DMPFC, precuneus, and posterior STS (Gobbini et al. 2004; Leibenluft et al. 2004). Moreover, this finding also obtains when participants view relatively unfamiliar faces with which they had recently been trained to associate person knowledge (Todorov et al. 2007). These findings have been interpreted as indicating that participants spontaneously retrieve person knowledge (i.e., personality traits, preferences) when viewing faces of people about whom something is known (e.g., Todorov et al. 2007), and they dovetail nicely with prior work demonstrating that these same areas are involved in representing person knowledge more broadly, such as when categorizing personality traits versus object-related adjectives (Mitchell et al. 2002).

We propose that, as in the above research on the spontaneous retrieval of person knowledge, when observers are viewing social scenes, they recruit these same brain areas in order to spontaneously extract person knowledge. Compared with images of novel faces, social scenes contain a wealth of person information. For instance, a scene containing a young couple engaged in conversation is rich in terms of the social inferences that can be made about the characters in the scene (e.g., their personalities, tastes in clothing, the state of their relationship, etc.). That observers might spontaneously form impressions of the characters in the scene and extract person information is in accord with a long history of research in social psychology on the phenomenon of spontaneous trait inferences. This research has shown that reading short descriptions of a person's behavior leads to spontaneous inferences about their personality (Winter and Uleman 1984; Todorov and Uleman 2002), a process that appears to rely on the DMPFC (Mitchell et al. 2006; Ma et al. 2011).

Recent research on visual attention has shown that participants' first saccades are almost invariably directed toward the social features of a scene (e.g., faces; Fletcher-Watson et al. 2008). Similarly, when viewing natural social scenes, participants pay special attention to the eyes and faces of the characters in the scene, almost to the exclusion of all other features (Birmingham et al. 2008a, 2008b). Although speculative, such a pattern suggests that participants are actively trying to make sense of the social interactions they observe, and it is consistent with our finding of increased activity in regions supporting social cognition when viewing social scenes.

Finally, it is important to point out that our findings do not indicate that spontaneous engagement of these regions is identical to what would occur in situations in which participants are explicitly required to consider the mental states of others (although see Ma et al. 2011). Abundant research has consistently demonstrated that explicitly attending to social information, inferring mental states, or forming impressions of others leads to increased recruitment of regions involved in social cognition over and above simply viewing social material (e.g., Mitchell et al. 2006; Spunt et al. 2011).

Empathizing and Social Cognition

Empathy is a complex construct that involves both a sharing of emotion (e.g., feeling someone's pain) and a cognitive understanding of another's thoughts and intentions (i.e., cognitive empathy) (Singer 2006). In this study, we were interested primarily in individual differences that might mediate activity in brain regions involved in mentalizing and social cognition. A large body of research suggests that individuals with autism display deficits in mental state attribution (Baron-Cohen et al. 1997; Abell et al. 2000; Bowler and Thommen 2000). Similarly, functional neuroimaging research has shown that such individuals also underrecruit brain regions involved in mentalizing when viewing social material (e.g., Castelli et al. 2002). These deficits can also be detected in the normal population, as shown by recent research demonstrating that the ability to accurately infer others' emotions is related to individual differences in autism spectrum traits (Bartz et al. 2010). Although there are multiple ways to assess cognitive and emotional empathy, the empathizing quotient was specifically designed to detect empathy and mentalizing deficits associated with autism (Baron-Cohen et al. 2003; Baron-Cohen and Wheelwright 2004) and as such is more sensitive to these deficits than other similar measures of empathy (e.g., Lombardo et al. 2007).

In this study, we examined the relationship between brain activity and empathizing in a task designed to evoke person perception and mental state attributions. This contrasts with previous research on individual differences in empathy-related constructs, which has primarily examined empathic responses to another person's physical pain (Singer et al. 2004, 2006; Lamm et al. 2007) or to the perception of basic social signals (i.e., facial displays of emotion; see Chakrabarti et al. 2006; Hooker et al. 2008; Montgomery et al. 2009). Here, we observed that both cognitive and emotional aspects of empathizing were correlated with activity in the mentalizing system. Although the mentalizing system is generally involved in nonemotional social cognition, it is nevertheless plausible that individuals high in emotional empathy have an increased propensity to think about and consider the mental states of others. However, caution is required in interpreting differences between the subcomponents of empathizing, since cognitive and emotional empathy are highly correlated with each other and with the full-scale score.
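One standard way to ask whether one subscale relates to brain activity over and above the other is a first-order partial correlation, r_xy·z = (r_xy − r_xz·r_yz) / sqrt((1 − r_xz²)(1 − r_yz²)). This analysis is not reported in this section; the sketch only illustrates why strongly intercorrelated subscales are hard to separate. The function name is hypothetical, and every input value below is invented for illustration rather than taken from the study.

```python
import math


def partial_correlation(r_xy: float, r_xz: float, r_yz: float) -> float:
    """First-order partial correlation of x and y, controlling for z."""
    denom = math.sqrt((1.0 - r_xz ** 2) * (1.0 - r_yz ** 2))
    return (r_xy - r_xz * r_yz) / denom


# Hypothetical values: x = ROI activity, y = emotional-empathy subscale,
# z = cognitive-empathy subscale (none of these numbers come from the study)
r_activity_emotional = 0.35
r_activity_cognitive = 0.35
r_emotional_cognitive = 0.80
print(round(partial_correlation(r_activity_emotional,
                                r_activity_cognitive,
                                r_emotional_cognitive), 2))  # 0.12
```

With these made-up inputs, a zero-order correlation of 0.35 shrinks to about 0.12 once the other subscale is partialed out, which is the sense in which highly correlated subscales leave little unique variance to interpret.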

Although our participants were recruited from a normal population, we nevertheless note similarities between our findings and prior observations of reduced spontaneous mentalizing in individuals with autism. For instance, although individuals with high-functioning autism often pass traditional false-belief tests, they show reduced spontaneous mentalizing when asked to describe the Heider and Simmel social animations mentioned earlier (e.g., Abell et al. 2000; Bowler and Thommen 2000). Similarly, a recent eye-tracking study found that these individuals fail to spontaneously anticipate actions in a visual false-belief task despite intact behavioral performance (Senju et al. 2009). Finally, neuroimaging work by Castelli et al. (2002) showed that, compared with control subjects, individuals with autism display reduced activity in the DMPFC, temporal poles, and STS when viewing Heider and Simmel-like social animations. Further evidence for a critical role of the DMPFC in social cognition comes from a recent case study of two patients with acquired autistic personality traits following extensive damage to the MPFC (Umeda et al. 2010). Of particular relevance to the present study is that this change in personality was primarily characterized by a reduction in empathizing rather than an increase in classic autism spectrum traits such as stereotyped behavior and attention to detail.

Findings such as these have led to the suggestion that individuals with autism can engage in some aspects of mentalizing when explicitly asked to do so but may fail to spontaneously mentalize when there are no explicit task demands (e.g., Slaughter and Repacholi 2003; Senju et al. 2009). Here, we show a similar finding within the healthy population, suggesting that lower empathizing scores are associated with a reduced likelihood of spontaneously engaging in social cognition when viewing complex social material. Although we can only speculate at this time, this reduced tendency to consider the mental states of others may have real-world consequences, such as difficulties in accurately inferring others' emotions (e.g., Zaki et al. 2009; Bartz et al. 2010), a failure to notice social cues (e.g., Montgomery et al. 2009), or a reduced tendency to engage in prosocial behavior (e.g., Davis et al. 1999).

Animacy Bias and Limitations

Although the animal, vegetable, mineral task was designed to be exceedingly easy, we nevertheless observed performance differences across categories, though all participants were near ceiling (i.e., accuracy was 95% or greater). The fact that participants were faster and more accurate to categorize animate versus inanimate categories is in line with research demonstrating an overwhelming preference for attending to the social features of a scene (Birmingham et al. 2008a, 2008b; Fletcher-Watson et al. 2008). That this advantage is also present for nonhuman animal scenes is unsurprising given prior research demonstrating a strong bias in people toward detecting subtle changes in animate (i.e., people and animals) versus inanimate features of a scene (New et al. 2007). The small differences in accuracy and RTs we observed across categories are unlikely to contribute to the present findings, as behavioral performance was unrelated to individual differences in empathizing. Finally, we note the difficulties inherent in perfectly matching stimuli when there is a known bias toward one of the categories. Perfectly equating animate and inanimate stimuli may require using scenes in which the animate features are in some way obscured or otherwise rendered more difficult to detect, which would itself introduce unwanted confounds across categories.

A limitation of the current study is the absence of gaze fixation data. For instance, it is plausible that empathizing is related to individual differences in gaze patterns when viewing social scenes. A number of studies have shown that high-functioning individuals with autism tend to avoid fixating on the face when it is presented in isolation (Dalton et al. 2005) or as part of a social scene (Klin et al. 2002). However, more recent research has failed to find any differences in the pattern of fixation between individuals with autism and control participants when viewing Heider and Simmel social animations, even though the participants with autism showed mentalizing deficits when asked to describe the animations (Zwickel et al. 2010). In our study, we found that trait empathizing was unrelated to behavioral performance (e.g., accuracy and RT). Although this does not exclude the possibility that lower empathizing is related to less time spent looking at the social features of a scene, it does suggest that these participants were nevertheless attending sufficiently to these features to complete the categorization task.

Conclusions

Over the last 15 years, a multitude of studies have examined the neural basis of social cognition. In general, the majority of these studies require subjects to explicitly attend to the social information provided (e.g., form impressions or infer beliefs). Early on, however, it was suggested that brain regions involved in social cognition might be obligatorily recruited by any complex social stimulus (e.g., Gallagher and Frith 2003). In the current study, we examined how brain areas subserving mentalizing and person perception are spontaneously recruited when viewing natural social scenes and whether the magnitude of activity in these regions is modulated by individual differences in a measure of empathy and mentalizing. Our findings suggest that, when viewing natural social scenes, people are not simply passive viewers but, to varying degrees, are actively trying to make sense of what is happening between characters in the scene by recruiting regions involved in social cognition.

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/

Funding

National Science Foundation (SBE-0354400); National Institutes of Health (MH059282).


Acknowledgments

We would like to thank Rebecca Boswell for comments on a previous draft of this manuscript. Conflict of Interest: None declared.

References

- Abell F, Happé F, Frith U. Do triangles play tricks? Attribution of mental states to animated shapes in normal and abnormal development. Cognit Dev. 2000;15:1–16.

- Aviezer H, Hassin RR, Ryan J, Grady C, Susskind J, Anderson A, Moscovitch M, Bentin S. Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychol Sci. 2008;19:724–732. doi: 10.1111/j.1467-9280.2008.02148.x.

- Baron-Cohen S, Jolliffe T, Mortimore C, Robertson M. Another advanced test of theory of mind: evidence from very high functioning adults with autism or Asperger syndrome. J Child Psychol Psychiatry. 1997;38:813–822. doi: 10.1111/j.1469-7610.1997.tb01599.x.

- Baron-Cohen S, Richler J, Bisarya D, Gurunathan N, Wheelwright S. The systemizing quotient: an investigation of adults with Asperger syndrome or high-functioning autism, and normal sex differences. Philos Trans R Soc Lond B Biol Sci. 2003;358:361–374. doi: 10.1098/rstb.2002.1206.

- Baron-Cohen S, Wheelwright S. The empathy quotient: an investigation of adults with Asperger syndrome or high functioning autism, and normal sex differences. J Autism Dev Disord. 2004;34:163–175. doi: 10.1023/b:jadd.0000022607.19833.00.

- Bartz J, Zaki J, Bolger N, Hollander E, Ludwig N, Kolevzon A, Ochsner K. Oxytocin selectively improves empathic accuracy. Psychol Sci. 2010;21:1426–1428. doi: 10.1177/0956797610383439.

- Birmingham E, Bischof WF, Kingstone A. Gaze selection in complex social scenes. Vis Cogn. 2008a;16:341–355.

- Birmingham E, Bischof WF, Kingstone A. Social attention and real-world scenes: the roles of action, competition and social content. Q J Exp Psychol. 2008b;61:986–998. doi: 10.1080/17470210701410375.

- Birn RM, Cox RW, Bandettini PA. Detection versus estimation in event-related fMRI: choosing the optimal stimulus timing. Neuroimage. 2002;15:252–264. doi: 10.1006/nimg.2001.0964.

- Bowler DM, Thommen E. Attribution of mechanical and social causality to animated displays by children with autism. Autism. 2000;4:147–171.

- Castelli F, Frith C, Happé F, Frith U. Autism, Asperger syndrome and brain mechanisms for the attribution of mental states to animated shapes. Brain. 2002;125:1839–1849. doi: 10.1093/brain/awf189.

- Chakrabarti B, Bullmore E, Baron-Cohen S. Empathizing with basic emotions: common and discrete neural substrates. Soc Neurosci. 2006;1:364–384. doi: 10.1080/17470910601041317.

- Chao LL, Martin A, Haxby JV. Are face-responsive regions selective only for faces? Neuroreport. 1999;10:2945–2950. doi: 10.1097/00001756-199909290-00013.

- Dalton KM, Nacewicz BM, Johnstone T, Schaefer HS, Gernsbacher MA, Goldsmith HH, Alexander AL, Davidson RJ. Gaze fixation and the neural circuitry of face processing in autism. Nat Neurosci. 2005;8:519–526. doi: 10.1038/nn1421.

- Davis MH, Mitchell KV, Hall JA, Snapp T, Meyer M. Empathy, expectations, and situational preferences: personality influences on the decision to participate in volunteer helping behaviors. J Pers. 1999;67:469–503. doi: 10.1111/1467-6494.00062.

- Fletcher-Watson S, Findlay JM, Leekam SR, Benson V. Rapid detection of person information in a naturalistic scene. Perception. 2008;37:571–583. doi: 10.1068/p5705.

- Fletcher PC, Happé F, Frith U, Baker SC, Dolan RJ, Frackowiak RS, Frith CD. Other minds in the brain: a functional imaging study of “theory of mind” in story comprehension. Cognition. 1995;57:109–128. doi: 10.1016/0010-0277(95)00692-r.

- Frith U, Morton J, Leslie AM. The cognitive basis of a biological disorder—autism. Trends Neurosci. 1991;14:433–438. doi: 10.1016/0166-2236(91)90041-r.

- Gallagher HL, Frith CD. Functional imaging of ‘theory of mind’. Trends Cogn Sci. 2003;7:77–83. doi: 10.1016/s1364-6613(02)00025-6.

- Gallagher HL, Happé F, Brunswick N, Fletcher PC, Frith U, Frith CD. Reading the mind in cartoons and stories: an fMRI study of ‘theory of mind’ in verbal and nonverbal tasks. Neuropsychologia. 2000;38:11–21. doi: 10.1016/s0028-3932(99)00053-6.

- Gobbini MI, Koralek AC, Bryan RE, Montgomery KJ, Haxby JV. Two takes on the social brain: a comparison of theory of mind tasks. J Cogn Neurosci. 2007;19:1803–1814. doi: 10.1162/jocn.2007.19.11.1803.

- Gobbini MI, Leibenluft E, Santiago N, Haxby JV. Social and emotional attachment in the neural representation of faces. Neuroimage. 2004;22:1628–1635. doi: 10.1016/j.neuroimage.2004.03.049.

- Grinband J, Wager TD, Lindquist M, Ferrera VP, Hirsch J. Detection of time-varying signals in event-related fMRI designs. Neuroimage. 2008;43:509–520. doi: 10.1016/j.neuroimage.2008.07.065.

- Heider F, Simmel M. An experimental study of apparent behavior. Am J Psychol. 1944;57:243–259.

- Hooker CI, Verosky SC, Germine LT, Knight RT, D'Esposito M. Mentalizing about emotion and its relationship to empathy. Soc Cogn Affect Neurosci. 2008;3:204–217. doi: 10.1093/scan/nsn019.

- Iacoboni M, Lieberman MD, Knowlton BJ, Molnar-Szakacs I, Moritz M, Throop CJ, Page A. Watching social interactions produces dorsomedial prefrontal and medial parietal BOLD fMRI signal increases compared to a resting baseline. Neuroimage. 2004;21:1167–1173. doi: 10.1016/j.neuroimage.2003.11.013.

- Jackson PL, Brunet E, Meltzoff AN, Decety J. Empathy examined through the neural mechanisms involved in imagining how I feel versus how you feel pain. Neuropsychologia. 2006;44:752–761. doi: 10.1016/j.neuropsychologia.2005.07.015.

- Kim H, Somerville LH, Johnstone T, Polis S, Alexander AL, Shin LM, Whalen PJ. Contextual modulation of amygdala responsivity to surprised faces. J Cogn Neurosci. 2004;16:1730–1745. doi: 10.1162/0898929042947865.

- Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Arch Gen Psychiatry. 2002;59:809–816. doi: 10.1001/archpsyc.59.9.809.

- Lamm C, Batson CD, Decety J. The neural substrate of human empathy: effects of perspective-taking and cognitive appraisal. J Cogn Neurosci. 2007;19:42–58. doi: 10.1162/jocn.2007.19.1.42.

- Lawrence EJ, Shaw P, Baker D, Baron-Cohen S, David AS. Measuring empathy: reliability and validity of the Empathy Quotient. Psychol Med. 2004;34:911–920. doi: 10.1017/s0033291703001624.

- Leibenluft E, Gobbini MI, Harrison T, Haxby JV. Mothers' neural activation in response to pictures of their children and other children. Biol Psychiatry. 2004;56:225–232. doi: 10.1016/j.biopsych.2004.05.017.

- Lombardo MV, Barnes JL, Wheelwright SJ, Baron-Cohen S. Self-referential cognition and empathy in autism. PLoS One. 2007;2:e883. doi: 10.1371/journal.pone.0000883.

- Ma N, Vandekerckhove M, Van Overwalle F, Seurinck R, Fias W. Spontaneous and intentional trait inferences recruit a common mentalizing network to a different degree: spontaneous inferences activate only its core areas. Soc Neurosci. 2011;6:123–138. doi: 10.1080/17470919.2010.485884.

- Mitchell JP, Cloutier J, Banaji MR, Macrae CN. Medial prefrontal dissociations during processing of trait diagnostic and nondiagnostic person information. Soc Cogn Affect Neurosci. 2006;1:49–55. doi: 10.1093/scan/nsl007.

- Mitchell JP, Heatherton TF, Macrae CN. Distinct neural systems subserve person and object knowledge. Proc Natl Acad Sci U S A. 2002;99:15238–15243. doi: 10.1073/pnas.232395699.

- Mitchell JP, Macrae CN, Banaji MR. Encoding-specific effects of social cognition on the neural correlates of subsequent memory. J Neurosci. 2004;24:4912–4917. doi: 10.1523/JNEUROSCI.0481-04.2004.

- Mitchell JP, Macrae CN, Banaji MR. Forming impressions of people versus inanimate objects: social-cognitive processing in the medial prefrontal cortex. Neuroimage. 2005;26:251–257. doi: 10.1016/j.neuroimage.2005.01.031.

- Montgomery KJ, Seeherman KR, Haxby JV. The well-tempered social brain. Psychol Sci. 2009;20:1211–1213. doi: 10.1111/j.1467-9280.2009.02428.x.

- New J, Cosmides L, Tooby J. Category-specific attention for animals reflects ancestral priorities, not expertise. Proc Natl Acad Sci U S A. 2007;104:16598–16603. doi: 10.1073/pnas.0703913104.

- Saxe R, Kanwisher N. People thinking about thinking people. The role of the temporo-parietal junction in “theory of mind”. Neuroimage. 2003;19:1835–1842. doi: 10.1016/s1053-8119(03)00230-1.

- Senju A, Southgate V, White S, Frith U. Mindblind eyes: an absence of spontaneous theory of mind in Asperger syndrome. Science. 2009;325:883–885. doi: 10.1126/science.1176170.

- Singer T. The neuronal basis and ontogeny of empathy and mind reading: review of literature and implications for future research. Neurosci Biobehav Rev. 2006;30:855–863. doi: 10.1016/j.neubiorev.2006.06.011.

- Singer T, Seymour B, O'Doherty J, Kaube H, Dolan RJ, Frith CD. Empathy for pain involves the affective but not sensory components of pain. Science. 2004;303:1157–1162. doi: 10.1126/science.1093535.

- Singer T, Seymour B, O'Doherty JP, Stephan KE, Dolan RJ, Frith CD. Empathic neural responses are modulated by the perceived fairness of others. Nature. 2006;439:466–469. doi: 10.1038/nature04271.

- Slaughter V, Repacholi B. Individual differences in theory of mind: what are we investigating? In: Repacholi B, Slaughter V, editors. Individual differences in theory of mind: implications for typical and atypical development. Hove (UK): Psychology Press; 2003. p. 1–13.

- Spiers HJ, Maguire EA. Spontaneous mentalizing during an interactive real world task: an fMRI study. Neuropsychologia. 2006;44:1674–1682.

- Spreng RN, Mar RA, Kim AS. The common neural basis of autobiographical memory, prospection, navigation, theory of mind, and the default mode: a quantitative meta-analysis. J Cogn Neurosci. 2009;21:489–510. doi: 10.1162/jocn.2008.21029.

- Spunt RP, Satpute AB, Lieberman MD. Identifying the what, why, and how of an observed action: an fMRI study of mentalizing and mechanizing during action observation. J Cogn Neurosci. 2011;23:63–74. doi: 10.1162/jocn.2010.21446.

- Todorov A, Gobbini MI, Evans KK, Haxby JV. Spontaneous retrieval of affective person knowledge in face perception. Neuropsychologia. 2007;45:163–173. doi: 10.1016/j.neuropsychologia.2006.04.018.

- Todorov A, Uleman JS. Spontaneous trait inferences are bound to actors' faces: evidence from a false recognition paradigm. J Pers Soc Psychol. 2002;83:1051–1065.

- Umeda S, Mimura M, Kato M. Acquired personality traits of autism following damage to the medial prefrontal cortex. Soc Neurosci. 2010;5:19–29. doi: 10.1080/17470910902990584.

- Van Essen DC, Dickson J, Harwell J, Hanlon D, Anderson CH, Drury HA. An integrated software system for surface-based analyses of cerebral cortex. J Am Med Inform Assoc. 2001;8:443–459. doi: 10.1136/jamia.2001.0080443.

- Wheatley T, Milleville SC, Martin A. Understanding animate agents: distinct roles for the social network and mirror system. Psychol Sci. 2007;18:469–474. doi: 10.1111/j.1467-9280.2007.01923.x.

- Wiggett AJ, Pritchard IC, Downing PE. Animate and inanimate objects in human visual cortex: evidence for task-independent category effects. Neuropsychologia. 2009;47:3111–3117. doi: 10.1016/j.neuropsychologia.2009.07.008.

- Winter L, Uleman JS. When are social judgments made? Evidence for the spontaneousness of trait inferences. J Pers Soc Psychol. 1984;47:237–252. doi: 10.1037//0022-3514.47.2.237.

- Yarkoni T. Big correlations in little studies: inflated fMRI correlations reflect low statistical power commentary on. Perspect Psychol Sci. 2009;4:294–298. doi: 10.1111/j.1745-6924.2009.01127.x.

- Young L, Saxe R. An fMRI investigation of spontaneous mental state inference for moral judgement. J Cogn Neurosci. 2009;21:1396–1405. doi: 10.1162/jocn.2009.21137.

- Zaki J, Weber J, Bolger N, Ochsner K. The neural bases of empathic accuracy. Proc Natl Acad Sci U S A. 2009;106:11382–11387. doi: 10.1073/pnas.0902666106.

- Zwickel J, White SJ, Coniston D, Senju A, Frith U. Exploring the building blocks of social cognition: spontaneous agency perception and visual perspective taking in autism. Soc Cogn Affect Neurosci. 2010. doi: 10.1093/scan/nsq088.
