Abstract

Background

Little performance measurement has been undertaken in the area of oncology, particularly for surgery, which is a pivotal event in the continuum of cancer care. This work was conducted to develop indicators of quality for colorectal cancer surgery, using a 3-step modified Delphi approach.

Methods

A multidisciplinary panel, comprising surgical and methodological co-chairs, 9 surgeons, a medical oncologist, a radiation oncologist, a nurse and a pathologist, reviewed potential indicators extracted from the medical literature through 2 consecutive rounds of rating followed by consensus discussion. The panel then prioritized the indicators selected in the previous 2 rounds.

Results

Of 45 possible indicators that emerged from 30 selected articles, 15 were prioritized by the panel as benchmarks for assessing the quality of surgical care. The 15 indicators represent 3 levels of measurement (provincial/regional, hospital, individual provider) across several phases of care (diagnosis, surgery, adjuvant therapy, pathology and follow-up), as well as broad measures of access and outcome. The indicators selected by the panel were more often supported by evidence than those that were discarded.

Conclusions

This project represents a unique initiative, and the results may be applicable to colorectal cancer surgery in any jurisdiction.

Assessing the quality of health care has become increasingly important to providers, regulators and purchasers of care in response to growing demand for services, rising costs, constrained resources and evidence of variation in clinical practice.1 Quality of care is defined as the degree to which health services for individuals and populations increase the likelihood of desired health outcomes and are consistent with current professional knowledge.2 This definition suggests that quality is a multidimensional concept best reflected by a broad range of performance measures.

Performance is often measured by establishing indicators, or standards, then evaluating whether the organization of services, patterns of care and outcomes are consistent with those criteria. Indicators can be generic measures relevant to all diseases, or disease-specific measures that describe the quality of care related to a specific diagnosis.3 Data on performance can be used to make comparisons over time between institutions that offer care, set priorities for the organization of medical care, support accountability and accreditation, and inform quality improvement.

The provincial cancer agency in Ontario has launched a performance measurement program that will examine quality of care for all types of cancer across the continuum of services, with a particular focus on surgical oncology. Most patients who develop cancer will undergo surgery for diagnosis, staging, treatment or palliation; therefore, the quality of surgical care can directly affect patient outcome and can have an indirect effect on outcome by influencing the subsequent care pathway.4

Little performance measurement has been conducted in the area of oncology, and few initiatives have developed indicators to measure the quality of cancer care.5 In 1997, a group associated with the RAND Corporation used a modified Delphi approach to produce evidence- and consensus-based indicators for 6 types of cancer: lung, breast, prostate, cervical, colorectal and skin.6,7 The National Health Service (NHS) in the United Kingdom published a set of system-level indicators in 1999 that were selected according to key functions defined in the National Service Framework along with public consultation.8 The NHS indicators represent services for colorectal, lung and breast cancer.

Both of these oncology performance measurement initiatives produced indicators that span the continuum of care and include some measures relevant to cancer surgery. However, no indicators have been rigorously established to specifically address the quality of cancer surgery, nor are there indicators focusing on colorectal cancer (CRC) surgery that could be used for hospital quality-improvement programs and accountability purposes.

This paper describes the systematic development of quality indicators for CRC surgery as the first step in a provincial performance measurement program in Ontario. It outlines the result of that effort, including participation, prioritized indicators and supporting evidence, and highlights key considerations in the use of indicators through a discussion of next steps.

Methods

A multidisciplinary stakeholder committee comprising organizational and external members was convened to establish principles for the selection of surgically focused cancer care indicators. External members included representatives from a health services research group, a federal agency in charge of compiling hospital discharge data, government, a hospital association, a professional group of general surgeons and a large academic cancer hospital with experience in measuring performance, as well as surgical oncologists with expertise in various disease sites.

The principles agreed to were (1) to select indicators of use to the widest possible group of surgeons, spanning the continuum of services from early diagnosis to long-term outcomes, and applicable to all levels of care from individual providers to the province-wide system; (2) to select indicators with a clear link to evidence or achieving strong consensus; (3) to make use of already-developed indicators; (4) to limit the release of data until a mechanism for provider response was in place; (5) to protect patient and provider confidentiality; and (6) to emphasize quality improvement.

Panel selection

Quality indicators for CRC were developed using a 3-step modified Delphi process (Fig. 1) involving an expert panel. The Delphi approach is differentiated from other consensus methods by the use of questionnaires to elicit anonymous responses over a number of rounds with controlled feedback; the modified Delphi process involves an in-person meeting of participants.9

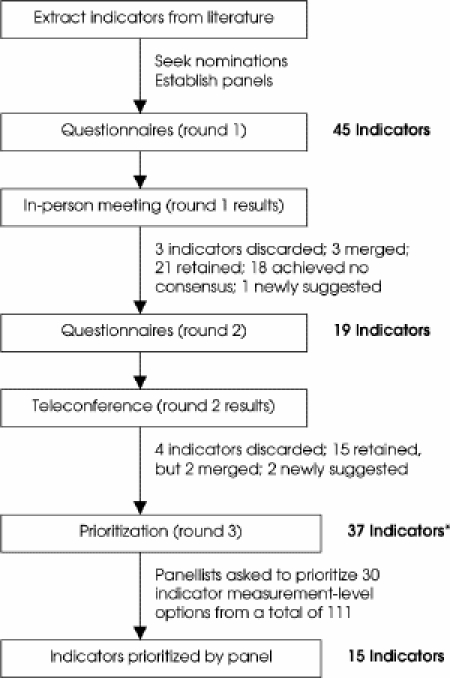

FIG. 1. Process used to select and prioritize quality indicators for colorectal cancer surgery. *Indicators suggested and retained from rounds 1 and 2.

Hospital chief executive officers and regional vice-presidents of cancer services from community and tertiary care hospitals across the province were asked to nominate practising clinicians who provided care to patients with CRC and had demonstrated leadership in quality improvement through research, administrative responsibilities or committee membership to serve as panel members. The intent was to achieve a 15-member panel composed primarily of surgeons, because the pivotal focus of this exercise was CRC surgery, but also including health professionals who could offer multidisciplinary perspectives on practice, specifically a nurse, pathologist, medical oncologist and radiation oncologist. Attempts were made to include representatives from across the province if nominations permitted.

Nominated clinicians were contacted to describe the intended process and expected time commitment and to confirm their interest in being involved. Although none declined the opportunity to participate, nominations were lacking for the positions of radiation oncologist, pathologist and 1 surgeon. An email request was distributed to the regional vice-presidents of cancer services, who provided further recommendations to fill these positions.

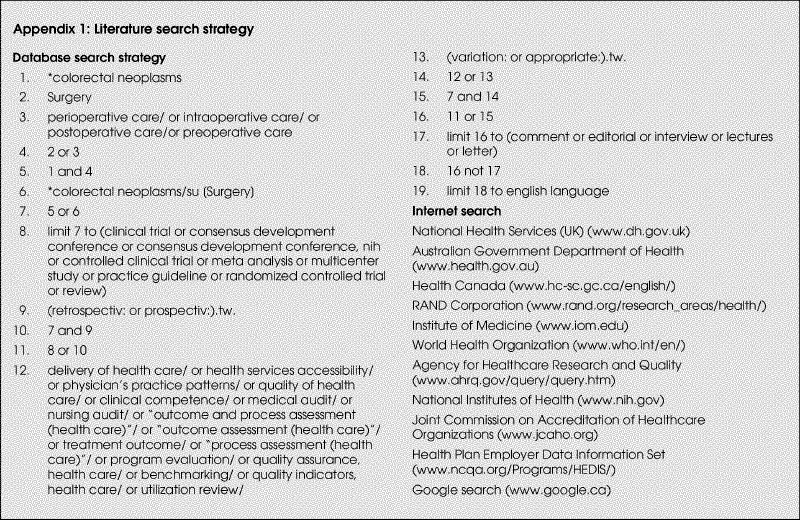

Literature search

A comprehensive literature search was conducted to identify possible quality indicators of CRC surgery. MEDLINE, EMBASE, CINAHL, the Cochrane Library and HealthSTAR were searched using indexing terms and keywords (Appendix 1), and the Internet was searched for government and research reports. Articles were included in this review if they were published in English from 1990 to November 2002, and if they described indicators developed by other agencies or synthesized research evidence describing best practice (guidelines, consensus statements, evaluation studies, systematic reviews or meta-analyses). Studies excluded from this review were individual trials and publications in the form of abstracts, letters or editorials.

Data on type of article, citation, phase of care (overall access or outcomes, diagnosis, surgery, adjuvant therapy, pathology, follow-up) and proposed indicator were extracted and tabulated. The surgical and methodological co-chairs reviewed the extracted data to compile a list of nonduplicate indicators for CRC surgery that were organized by phase of care.

Round 1

The refined list of nonduplicate indicators was formatted as a questionnaire and distributed by regular mail, along with a stamped, addressed return envelope. Respondents were asked to rate each indicator on a 7-point scale (1 = disagree and 7 = agree) according to association with quality (overall, surgeon-specific, team level) and patient outcomes, provide written comments and suggest additional indicators not included in the questionnaire that warranted consideration by the panel. An email reminder was sent at 2 weeks from initial distribution, and nonresponders were also contacted by telephone to promote the return of all questionnaires.

Questionnaire responses were entered into Excel, frequencies were calculated and a summary report prepared. The report was organized according to indicators that achieved strong consensus for acceptance (7 or more panel members agreed that the indicator was associated with quality of cancer surgery and patient outcomes by selecting 5, 6 or 7 on the Likert scale), strong consensus for exclusion (7 or more panel members disagreed with the idea that the indicator was associated with quality of cancer surgery and patient outcomes by selecting 1, 2, 3 or 4 on the Likert scale), unclear consensus (7 or more panel members agreed the indicator was associated with patient outcomes by selecting 5, 6 or 7 on the Likert scale but 7 or more panel members disagreed with the idea that the indicator was associated with quality of cancer surgery by selecting 1, 2, 3 or 4 on the Likert scale) and the newly suggested indicators. This report was distributed to panel members along with a table listing the source of evidence from which the possible indicators were extracted. Acceptance, rejection or the need for further consideration of each indicator was reviewed and confirmed through discussion at an in-person panel meeting.
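The classification rule described above can be sketched in a few lines of Python. This is an illustrative reading of the rule, not the spreadsheet procedure the panel actually used: the function names are hypothetical, agreement and disagreement are counted separately for each dimension (quality of surgery and patient outcomes), and any indicator that fits none of the three stated categories is labelled "unclassified", which is an assumption.

```python
from typing import List

AGREE = {5, 6, 7}        # ratings counted as agreement on the 7-point scale
DISAGREE = {1, 2, 3, 4}  # ratings counted as disagreement
THRESHOLD = 7            # minimum number of panel members for consensus


def count_in(ratings: List[int], values: set) -> int:
    """Count how many ratings fall in the given set of scale values."""
    return sum(1 for r in ratings if r in values)


def classify(quality: List[int], outcomes: List[int]) -> str:
    """Classify one indicator from its quality and outcome ratings.

    Assumption: counts are taken per dimension, not per respondent.
    """
    q_agree = count_in(quality, AGREE)
    q_disagree = count_in(quality, DISAGREE)
    o_agree = count_in(outcomes, AGREE)
    o_disagree = count_in(outcomes, DISAGREE)

    if q_agree >= THRESHOLD and o_agree >= THRESHOLD:
        return "accept"
    if q_disagree >= THRESHOLD and o_disagree >= THRESHOLD:
        return "exclude"
    if o_agree >= THRESHOLD and q_disagree >= THRESHOLD:
        return "unclear"
    # Not covered by the three categories in the text (assumption).
    return "unclassified"


# Hypothetical ratings from 12 respondents, for illustration only.
print(classify([6] * 8 + [2] * 4, [5] * 7 + [3] * 5))
```

Whatever the automated label, the text indicates that the final decision on each indicator was confirmed through discussion at the in-person meeting.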

Round 2

Indicators requiring further consideration were formatted as a questionnaire similar in format to the round 1 questionnaire. This round 2 questionnaire included the frequency distribution of round 1 responses, the recipient's own round 1 response and a list of previously submitted comments. Panel members were asked to rate these indicators and recommend additional indicators for consideration. The questionnaire was distributed to panel members by regular mail, followed by an email reminder at 2 weeks and then telephone calls to nonresponders. Responses were summarized and distributed by electronic mail. A teleconference was then held during which panel members discussed the round 2 indicators and confirmed acceptance or rejection.

Round 3

All indicators selected from rounds 1 and 2 were included in a third and final questionnaire. Panel members were asked to prioritize the indicators by choosing those they perceived as most important for improving the quality of cancer surgery and the most meaningful level of measurement for each selection (regional/provincial, hospital/team, individual provider). Each choice represented a single vote, to a maximum of 30 choices (about one-quarter of the final list of indicator measurement-level options). The round 3 questionnaire was distributed by regular mail and followed by an email reminder at 2 weeks, plus telephone calls to nonresponders. Indicators were considered to be a high priority if 7 or more panel members selected the indicator and measurement level, and lower priority if selected by fewer than 7 panel members.
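The round 3 tally can be illustrated with a short sketch. The ballot format and function names below are hypothetical, and the treatment of a repeated choice on one ballot (counted once) is an assumption; only the limit of 30 choices, the 3 measurement levels and the threshold of 7 votes come from the text.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

Choice = Tuple[str, str]  # (indicator, measurement level)
LEVELS = ("regional/provincial", "hospital/team", "individual provider")
MAX_CHOICES = 30          # maximum choices per panellist
PRIORITY_THRESHOLD = 7    # votes needed for high priority


def tally_votes(ballots: Iterable[List[Choice]]) -> Counter:
    """Count one vote per panellist for each indicator-level pair chosen."""
    votes: Counter = Counter()
    for ballot in ballots:
        unique = set(ballot)  # assumption: a repeated choice counts once
        if len(unique) > MAX_CHOICES:
            raise ValueError("ballot exceeds the maximum number of choices")
        for indicator, level in unique:
            if level not in LEVELS:
                raise ValueError(f"unknown measurement level: {level}")
            votes[(indicator, level)] += 1
    return votes


def prioritize(votes: Counter) -> Dict[Choice, str]:
    """Label each chosen pair as high or lower priority."""
    return {
        choice: "high" if n >= PRIORITY_THRESHOLD else "lower"
        for choice, n in votes.items()
    }


# Hypothetical ballots, for illustration only.
ballots = [[("indicator A", "hospital/team")]] * 7
ballots += [[("indicator B", "individual provider")]] * 3
print(prioritize(tally_votes(ballots)))
```

Because votes are counted per indicator-level pair, the same indicator could in principle reach the threshold at one level of measurement and not at another.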

The type of evidence supporting the indicators selected and discarded by the panel was summarized. This involved identifying the number of case or cohort studies, reviews or guidelines in which each indicator was mentioned and calculating the mean number of articles supporting selected and discarded indicators. Indicators considered by the panel were also compared with indicators produced by the RAND Corporation and the NHS.6,7,8
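The evidence summary described above amounts to a proportion and a mean per group of indicators. The sketch below shows one way to compute them; the function name is hypothetical, the input counts are toy values rather than study data, and taking the mean over all indicators in a group (including those with no supporting article) is an assumption.

```python
from typing import Dict, List


def summarize_support(article_counts: List[int]) -> Dict[str, float]:
    """Summarize evidence support for a group of indicators.

    article_counts holds, for each indicator, the number of articles
    (studies, reviews or guidelines) in which it was mentioned.
    """
    n = len(article_counts)
    if n == 0:
        raise ValueError("at least one indicator is required")
    supported = sum(1 for c in article_counts if c >= 1)
    return {
        "n_indicators": n,
        "n_supported": supported,
        "pct_supported": round(100 * supported / n),
        # Assumption: mean taken over all indicators in the group.
        "mean_articles": round(sum(article_counts) / n, 1),
    }


# Toy counts for illustration only; these are not the study data.
selected = [3, 5, 0, 4]
discarded = [0, 2, 1, 0, 2]
print(summarize_support(selected))
print(summarize_support(discarded))
```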

Results

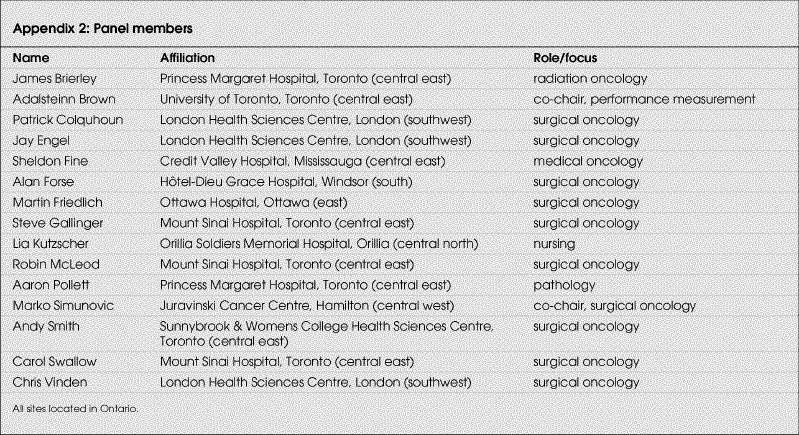

Once guiding principles were established, the indicator selection process began in December 2002 and concluded in November 2003. The expert panel included a surgical and a methodological co-chair, plus 9 surgeons, 1 medical oncologist, 1 radiation oncologist, 1 nurse and 1 pathologist, for a total of 15 members (Appendix 2). Excluding the methodological co-chair, about 43% (6/14) of panel members were from the Greater Toronto Area (central east). This included 40% (4/10) of the surgeons, plus the radiation oncologist and pathologist. Remaining panel members represented various regions of the province, including east, south, southwest, central west and central north.

Participation of panel members throughout the process was high. Excluding the methodological and clinical co-chairs, 12 (92%) panellists completed the round 1 survey; 11 (85%) participated in the round 1 discussion; 13 (100%) completed the round 2 survey; and 9 (69%) participated in the round 2 discussion. Excluding the methodological co-chair, 93% (13/14) of panellists completed the round 3 prioritization exercise.
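As a quick check, the participation percentages can be recomputed from the reported counts. The denominators assumed here are 13 panellists when both co-chairs are excluded and 14 when only the methodological co-chair is excluded, which is consistent with the 15-member panel; the variable and function names are illustrative.

```python
from typing import Dict, Tuple

# (participants, eligible panellists) as reported in the text.
PARTICIPATION: Dict[str, Tuple[int, int]] = {
    "round 1 survey": (12, 13),
    "round 1 discussion": (11, 13),
    "round 2 survey": (13, 13),
    "round 2 discussion": (9, 13),
    "round 3 prioritization": (13, 14),
}


def participation_rates(counts: Dict[str, Tuple[int, int]]) -> Dict[str, int]:
    """Return the participation rate per step, rounded to a whole percent."""
    return {
        step: round(100 * done / eligible)
        for step, (done, eligible) in counts.items()
    }


rates = participation_rates(PARTICIPATION)
for step, pct in rates.items():
    print(f"{step}: {pct}%")
```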

The literature search produced 148 citations for articles and reports related to the quality of CRC surgery, of which 34 were selected for thorough review. Initially, 45 indicators were extracted from 30 of these articles for consideration by the panel. The number of indicators considered in each round of rating is summarized in Figure 1.

In round 3, panellists were presented with 37 indicators that had been retained from rounds 1 and 2. They were asked to make up to 30 choices (about one-quarter of the 111 indicator measurement-level options, i.e., 37 indicators at each of 3 levels) by selecting both the indicator and the desired level of measurement (surgeon, hospital, region/province). A total of 15 indicators6,7,8,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28 were selected by the panel members and constitute the final list of indicators (Table 1). The remaining indicators were clearly considered by the panel to be important, having been retained through 2 rounds of rating and consensus, but were rated in the final exercise as lower priority for reasons that were not investigated.6,7,8,11,13,14,15,16,17,18,19,21,23,26,29,30,31,32

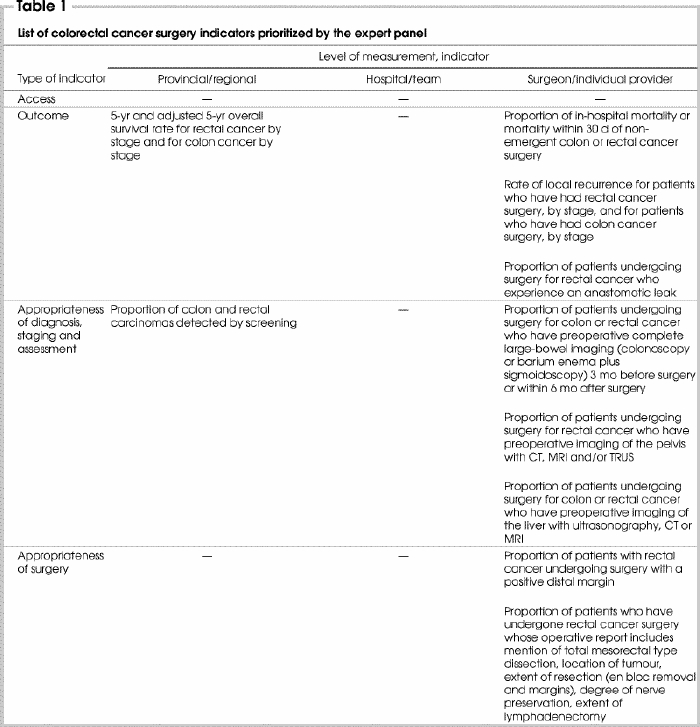

Table 1

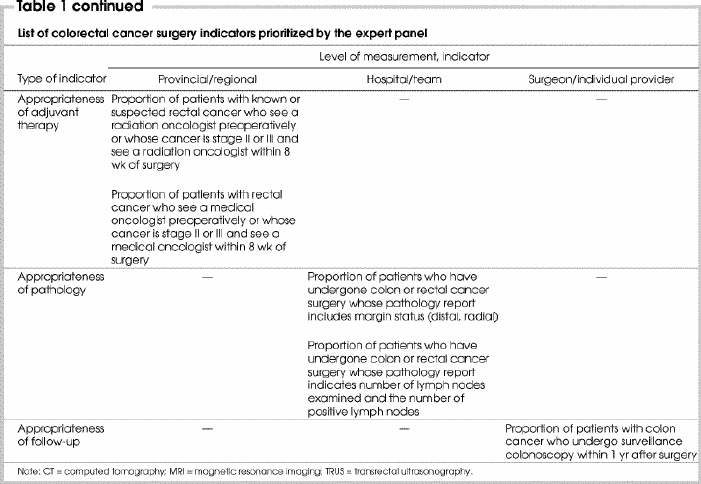

Table 1 continued

The selected indicators represent 3 levels of measurement and several phases of care, thus satisfying principle 1 (see Methods). Four indicators were selected for measurement at the provincial and/or regional level, 2 at the hospital level and 9 at the individual surgeon level. The numbers of indicators addressing the appropriateness of diagnosis, surgery, adjuvant therapy, pathology and follow-up were 4, 2, 2, 2 and 1, respectively. Four indicators represented broad measures of access and outcomes.

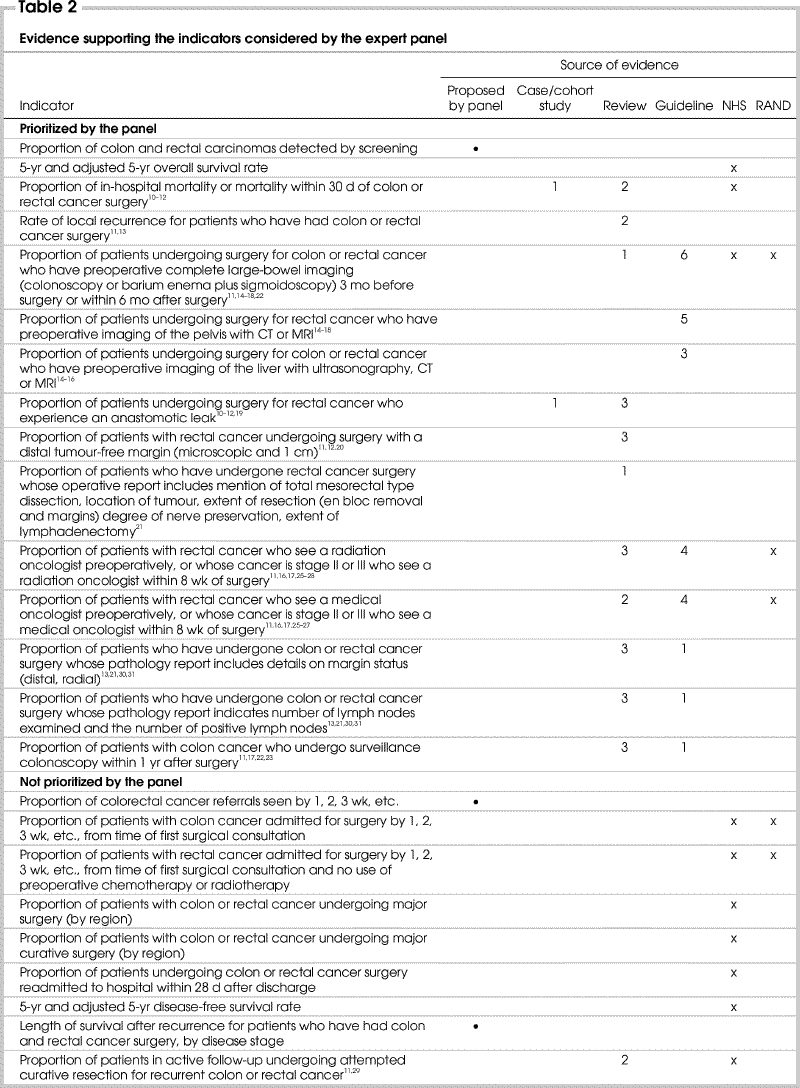

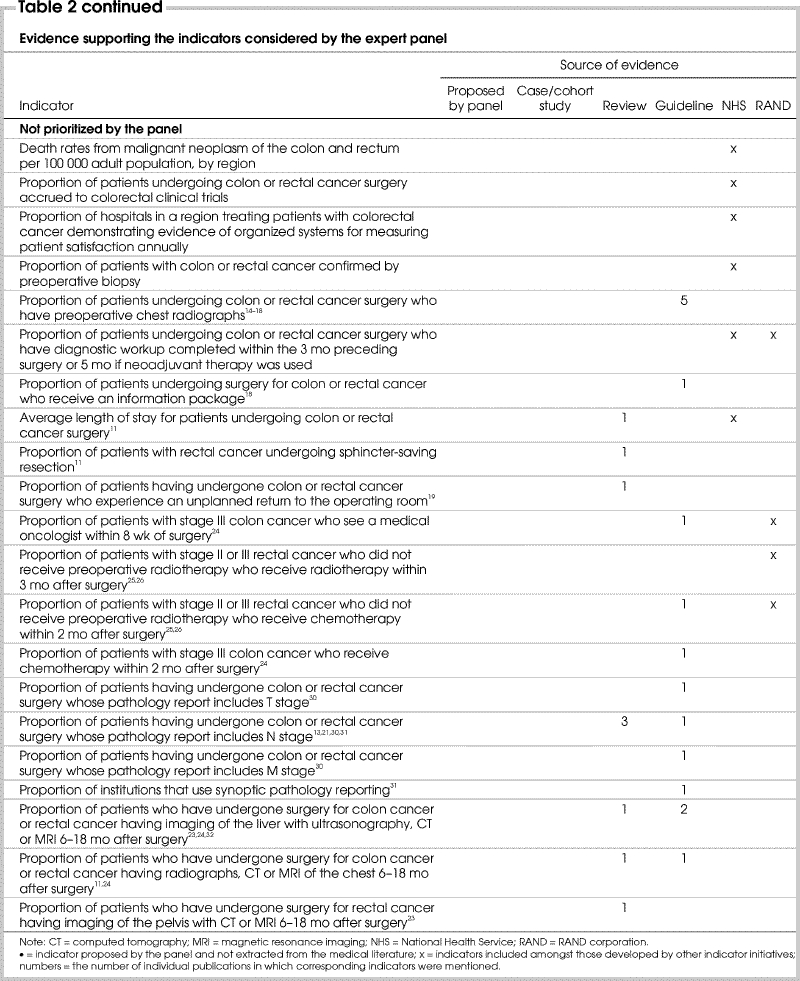

Principle 2 guiding this project was the selection of indicators with a clear link to evidence or achieving strong consensus. It appears that the prioritized indicators were supported by more evidence than those that were not prioritized (Table 2). Of the 15 indicators prioritized by the panel after round 3, 1 had been proposed by the panel, and 14 had been identified in the medical literature. Of these 14 indicators, 13 (93%) were supported by at least 1 case or cohort study, review or guideline and an average of 4.0 articles per indicator. In comparison, 30 indicators considered by the panel were discarded. Of these, 2 had been proposed by the panel, and 28 had been identified in the medical literature. Sixteen (57%) of the 28 indicators were supported by at least 1 review or guideline and an average of 1.7 articles per indicator.

Table 2

Table 2 continued

A review of the content and quality of the literature supporting the choice of indicators was not undertaken, because there appeared to be no difference in the type of article that supported indicators that were selected compared with those that were discarded in the final prioritization step. Twelve articles gave rise to both selected and discarded indicators, of which 6 were guidelines14,15,16,17,18,26 and 6 were reviews.10,11,13,19,21,23 Seven articles gave rise only to prioritized indicators: 5 reviews12,20,22,27,28 and 2 guidelines.24,25 Four articles gave rise only to discarded indicators: 2 reviews29,31 and 2 guidelines.30,32

Three indicators not supported by the reviewed evidence were suggested by the panel members. Two of these were not ultimately prioritized by the panel. The indicator “length of survival after recurrence, by stage” was proposed to describe the potential outcome of the process indicator “proportion of patients undergoing attempted curative resection for recurrent colon or rectal cancer.” The second indicator suggested, but not prioritized, involved waiting time from referral to consultation. The reviewed evidence mentioned waiting time from diagnosis to treatment. Consideration of time from referral to consultation is a reflection of provincial efforts to measure and monitor waiting time between several key events, including referral, consultation, diagnosis, treatment and receipt of pathology report. This broader view of wait times might enable identification of all factors that contribute to delays.

The proposed indicator that achieved strong consensus for prioritization was “proportion of colon and rectal carcinomas detected by screening.” Because the focus of this effort was on preoperative, perioperative and postoperative care, the screening literature had not been reviewed. However, panel members felt very strongly about including this particular indicator to highlight one of the major goals of the provincial cancer agency and, indeed, any cancer control organization, in promoting the role of screening for the early detection of cancer.

Guiding principle 3 advocated the use of already-developed indicators. The indicators prioritized and discarded by our panel were compared with those developed by the RAND Corporation and the NHS.6,7,8 This is summarized in Table 2. Concepts embodied in 5 of 15 indicators selected by the panel matched those included in general sets of quality indicators for cancer care developed by other initiatives. These included mortality within 30 days of surgery; large-bowel imaging for patients undergoing surgery for colon or rectal cancer; and referral to either a medical oncologist or radiation oncologist preoperatively or after surgery for those with stage II or III rectal cancer.

Concepts described by 16 of the 30 indicators not selected or prioritized by our panel were listed in indicator sets published by other initiatives. These included waiting time from consultation to surgery for patients with CRC with or without preoperative use of either chemotherapy or radiotherapy; proportion of patients with CRC undergoing major or curative surgery; proportion of patients with colon or rectal cancer confirmed by preoperative biopsy; readmission to hospital within 28 days following discharge after surgery; 5-year disease-free survival rate; patients accrued to colorectal clinical trials; postoperative referral of patients with stage III colon cancer to a medical oncologist; average length of stay of patients undergoing colon or rectal cancer surgery; and proportion of patients with stage II or III rectal cancer who do not receive preoperative radiation therapy but undergo either radiotherapy or chemotherapy after surgery.

Conclusions

Summary

A systematic evidence-based and consensus-based approach was used to prioritize indicators of CRC management focusing on surgery as the pivotal event in the continuum of care. A 15-member clinician panel used a 3-step modified Delphi process to select 15 indicators of quality CRC care at the system, hospital and individual surgeon level and through all phases of care from screening to follow-up and outcomes. The panel nomination process enabled input from both clinicians and researchers to ensure face validity and content validity of the selected indicators. This effort was undertaken as part of a larger provincial performance measurement program in Ontario, but the results may be applicable to CRC surgery performance measurement in any jurisdiction.

Focusing on cancer surgery distinguishes this initiative from the few that have produced mainly system-level indicators of oncology care. Although indicators prioritized by our panel were supported by more evidence than those that were not selected, several indicators developed by other initiatives were not chosen by our panel. There are 2 possible explanations for this. First, the indicators developed by the other groups were meant to reflect the continuum of care and are most relevant to the system level of care. Our indicators were intended to focus on cancer surgery, while also reflecting both preoperative and postoperative care. For example, our panel did not prioritize “accrual to clinical trials,” as did the other initiatives. This is an important aspect of cancer care, but it is perhaps relevant at a system or regional level rather than at the level of the individual hospital, surgical team or surgeon and may be evaluated by the provincial cancer agency. Second, it seems that our panel may have prioritized indicators that were more often supported by evidence from the medical literature, perhaps relying to a lesser degree on group consensus and on indicators that had been selected by the other initiatives.

Limitations

Although the literature search was comprehensive, drawing on both subject headings and keywords applied to several health care databases and the Internet, it may not have identified all relevant literature and, therefore, all possible indicators for subsequent rating and discussion. However, this limitation may have been mitigated by the fact that the members of the panel included experts in cancer care who were likely to be very familiar with the literature and had the opportunity to suggest additional indicators throughout the selection process.

Most descriptions of quality indicators advocate that they be evidence-based.33,34,35 Stronger evidence may mean that indicators are more credible and have greater potential for reducing morbidity and mortality and improving quality of care. The literature search strategy used to identify possible indicators focused on synthesized evidence such as reviews and guidelines and indicators developed in other jurisdictions, and excluded single trials, assuming that these would be described or considered within the synthesized literature. Without a more comprehensive review of the evidence used to develop the reviews, guidelines and indicators from which the CRC surgery quality indicators were extracted, it is unclear which indicators may be supported by randomized controlled trials, which are generally considered the most reliable evidence. Interestingly, it does appear that the indicators prioritized by the panel were more often supported by multiple sources of synthesized evidence.

This work took place in consecutive stages over the course of nearly a year. The protracted scheduling may have limited the ability of the panel members to carry forward learning and consensus from one step to the next. This could be evaluated only by running a duplicate process in which all phases took place during 1- or 2-day working meetings, comparing the indicators selected by the 2 processes, and then gathering feedback from participants on perceived continuity. Alternatively, the consecutive nature of the process may have provided panel members with the opportunity to reflect on the consensus discussion before re-rating and prioritizing the indicators; this period of reflection would not be available in a 1-day meeting format. We are exploring the implications of a compressed process to produce indicators for other types of cancer surgery.

The validity of the consensus approach has been questioned.36,37 A common critique is that particularly vocal members can influence panel decisions. In our modified Delphi approach, rating was anonymous and discussion was moderated by both a methodological and a clinical co-chair, so that selected indicators did not reflect the perspectives of any single participant.

Panel composition is also thought to influence the outcome of consensus processes. Research studies have demonstrated that single-discipline panels select different indicators than do multidisciplinary panels considering the same choices.38,39,40,41 To maximize the applicability of our indicators, we compiled a panel that was multidisciplinary in nature, including generalists and specialists and representatives from both large and small hospitals and different regions of the province. To encourage the contribution of multiple viewpoints and minimize the inclusion of individuals with particular agendas, panellists were nominated by hospital executives from across the province. Furthermore, the modified Delphi process solicits anonymous feedback by questionnaire and provides the opportunity for discussion after each round of indicator rating to ensure that all opinions are voiced.36,37

About 43% of panel members were affiliated with the Greater Toronto Area, which is not surprising because it is home to 45% of Ontario's population and, in consequence, a significant proportion of cancer services are provided in this region.42 Given that the remaining members represented various regions of the province, any imbalance of perspective may have been resolved through consensus discussion.

Next steps

Ongoing work presents numerous opportunities for research and evaluation, as much remains to be learned about the validation of cancer-specific indicators and their implementation. The indicators selected by our panel represent an ideal set of criteria by which to measure the quality of CRC care. The feasibility of measuring the indicators must next be assessed. Administrative data may be available with which to evaluate some of the indicators in the short term. Those indicators for which data are not readily available may be examined in the context of a research or quality assurance study, involving data collection through medical record abstraction, surveys or interviews.

For those indicators where data are readily available, analysis will involve techniques such as risk adjustment and data modelling to reduce possible sources of error and ensure that the indicators reflect systematic rather than random variations in care.43,44,45,46,47 Consultations with provider organizations will further validate the accuracy of the data and the applicability of the indicators (principle 4).

Consideration must be given to how the data will be used, and this has implications for the way in which they are communicated. The more typical approach to performance measurement has involved the use of report cards to promote public accountability; yet, recent reviews summarizing 3 decades of research on performance measurement have revealed that the public release of performance data has had minimal impact on improvements in health care.48,49,50

An alternative strategy, quality improvement, focuses on enhancing performance and reducing interprovider variability through confidential sharing of performance data (principles 5 and 6) and collaborative interventions.51 For example, the US Veterans Affairs National Surgical Quality Improvement Program (NSQIP) delivers performance data to providers and managers in various ways including a comprehensive chief-of-surgery annual report comparing the outcomes of each hospital with those of the other participating anonymized hospitals; periodic assessment of performance at high and low outlier institutions; provision of self-assessment tools; structured site visits for the assessment of data quality and specific performance; and the sharing of best practice reported by hospitals that have implemented procedures to sustain or improve their outcomes.52

Even with a variety of interventions such as these in place to assist provider organizations in improving performance, emerging evidence suggests that the effectiveness of data feedback is influenced by the existence of an institutional “quality culture.”53,54 Therefore, incentives that reward quality must also be in place as motivation for managers and providers to implement cultural changes that embrace quality improvement.55,56

Appendix 1.

Appendix 2.

Competing interests: None declared.

Correspondence to: Dr. Adalsteinn D. Brown, Health Policy, Management and Evaluation, Faculty of Medicine, University of Toronto, McMurrich Building, 2nd Fl., 12 Queen's Park Cres. W, Toronto ON M5S 1A8; fax 416 978-1466; adalsteinn.brown@utoronto.ca

Accepted for publication Nov. 1, 2004

References

- 1.Campbell SM, Roland MO, Buetow SA. Defining quality of care. Soc Sci Med 2000;51:1611-25. [DOI] [PubMed]

- 2.Lohr KN, editor. Medicare: a strategy for quality assurance. Vol. 1. Washington: National Academy Press; 1990.

- 3.Mainz J. Defining and classifying clinical indicators for quality improvement. Int J Qual Health Care 2003;15:523-30. [DOI] [PubMed]

- 4.Edge SB, Cookfair DL, Watroba N. The role of the surgeon in quality cancer care. Curr Probl Surg 2003;40:511-90. [DOI] [PubMed]

- 5.Porter GA, Skibber JM. Outcomes research in surgical oncology. Ann Surg Oncol 2000;7:367-75. [DOI] [PubMed]

- 6.Malin JL, Asch SM, Kerr EA, McGlynn EA. Evaluating the quality of cancer care. Cancer 2000;88:701-7. [DOI] [PubMed]

- 7.Asch SM, Kerr EA, Hamilton EG, Reifel JL, McGlynn EA, editors. Quality of care for oncologic conditions and HIV: a review of the literature and quality indicators. Santa Monica (CA): RAND Corporation; 2000. Available: www.rand.org/publications/MR/MR1281 (accessed 2005 Oct 12).

- 8.National Department of Health, National Health Service Executive. NHS performance assessment framework. London (UK): NHS; 2000.

- 9.Fink A, Kosecoff J, Chassin M, Brook RH. Consensus methods: characteristics and guidelines for use. Am J Public Health 1984;74:979-83. [DOI] [PMC free article] [PubMed]

- 10.Thompson GA, Cocks J, Collopy BT, Cade RJ. Clinical indicators in colorectal surgery. J Qual Clin Pract 1996;16:31-5. [PubMed]

- 11.Hohenberger P. Colorectal cancer – What is standard surgery? Eur J Cancer 2001;37(Suppl 7):S173-87. [DOI] [PubMed]

- 12.Landheer MLEA, Therasse P, van de Velde CJH. The importance of quality assurance in surgical oncology in the treatment of colorectal cancer. Surg Oncol Clin N Am 2001;10:885-914. [PubMed]

- 13.Higginson IJ, Hearn J. Improving outcomes in colorectal cancer: research evidence. London (UK): National Health Service; 1997.

- 14.European Society for Medical Oncology. ESMO minimum clinical recommendations for diagnosis, treatment and follow-up of advanced colorectal cancer. Ann Oncol 2001;12:1055. [DOI] [PubMed]

- 15.Fearon KCH, Carter DC, Nixon SJ, Duncan W, McArdle CS, Gollock J, et al. Report on consensus conference on colorectal cancer: Royal College of Surgeons of Edinburgh 1993. Health Bull (Edinb) 1996;54:22-31. [PubMed]

- 16.Society of Surgical Oncology. Colorectal cancer surgical practice guidelines. Oncology 1997;11:1051-7. [PubMed]

- 17.Benson AB, Choti MA, Cohen AM, Doroshow JH, Fuchs C, Kiel K, et al. NCCN practice guidelines for colorectal cancer. Oncology 2000;14:203-12. [PubMed]

- 18.An international, multidisciplinary approach to the management of advanced colorectal cancer. The International Working Group in Colorectal Cancer. Eur J Surg Oncol 1997;23(Suppl A):1-66. [PubMed]

- 19.Isbister WH. Unplanned return to the operating room. Aust N Z J Surg 1998;68:143-6. [DOI] [PubMed]

- 20.Nogueras JJ, Jagelman DG. Principles of surgical resection. Influence of surgical technical reporting on treatment outcome. Surg Clin North Am 1993;73:103-16. [DOI] [PubMed]

- 21.Hermanek P, Hermanek PJ. Role of the surgeon as a variable in the treatment of rectal cancer. Semin Surg Oncol 2000;19:329-35. [DOI] [PubMed]

- 22.Kronborg O. Optimal follow-up in colorectal cancer patients: What tests and how often? Semin Surg Oncol 1994;10:217-24. [DOI] [PubMed]

- 23.Taylor I. Quality of follow-up of the cancer patient affecting outcome. Surg Oncol Clin N Am 2000;9:21-5. [PubMed]

- 24.Figueredo A, Fine S, Maroun J, Walker-Dilks C, Wong S. Adjuvant therapy for stage III colon cancer following complete resection. Cancer Prev Control 1997;1:304-19. [PubMed]

- 25.Figueredo A, Germond C, Taylor B, Maroun J, Agboola O, Wong R, et al. Postoperative adjuvant radiotherapy and/or chemotherapy for resected stage II or III rectal cancer. Curr Oncol 2000;7:37-51.

- 26.Figueredo A, Zuraw L, Wong RK, Agboola O, Rumble RB, Tandan V. The use of preoperative radiotherapy in the management of patients with clinically resectable rectal cancer. BMC Medicine 2003;1:1. Available: www.biomedcentral.com/1741-7015/1/1 (accessed 2005 Oct 12). [DOI] [PMC free article] [PubMed]

- 27.Guillem JG, Paty PB, Cohen AM. Surgical treatments of colorectal cancer. CA Cancer J Clin 1997;47:113-28. [DOI] [PubMed]

- 28.Wheeler JMD, Warren BF, Jones AC, Mortensen NJ. Preoperative radiotherapy for rectal cancer: implications for surgeons, pathologists and radiologists. Br J Surg 1999;86:1108-20. [DOI] [PubMed]

- 29.Rosen M, Chan L, Beart RW, Vukasin P, Anthone G. Follow-up of colorectal cancer. Dis Colon Rectum 1998;41:1116-26. [DOI] [PubMed]

- 30.Hammond MEH, Fitzgibbons PL, Compton CC, Grignon DJ, Page DL, Fielding LP, et al. College of American Pathologists Conference XXXV: solid tumor prognostic factors. Arch Pathol Lab Med 2000;124:958-65. [DOI] [PubMed]

- 31.Micev M, Cosic-Micev M, Todorovic V. Postoperative pathological examination of colorectal cancer. Acta Chir Iugosl 2000;47(4 Suppl 1):67-76. [PubMed]

- 32.Scholefield JH, Steele RJ. Guidelines for follow-up after resection of colorectal cancer. Gut 2002;51(Suppl 5):V3-5. [DOI] [PMC free article] [PubMed]

- 33.Hearnshaw HM, Harker RM, Cheater FM, Baker RH, Grimshaw GM. Expert consensus on the desirable characteristics of review criteria for improvement of health care quality. Qual Health Care 2001;10:173-8. [DOI] [PMC free article] [PubMed]

- 34.Pringle M, Wilson T, Grol R. Measuring “goodness” in individuals and healthcare systems. BMJ 2002;325:704-7. [DOI] [PMC free article] [PubMed]

- 35.Geraedts M, Selbmann HK, Ollenschlaeger G. Critical appraisal of clinical performance measures in Germany. Int J Qual Health Care 2003;15:79-85. [DOI] [PubMed]

- 36.Jones J, Hunter D. Consensus methods for medical and health services research. BMJ 1995;311:376-80. [DOI] [PMC free article] [PubMed]

- 37.Campbell SM, Braspenning J, Hutchinson A, Marshall M. Research methods used in developing and applying quality indicators in primary care. Qual Saf Health Care 2002;11:358-64. [DOI] [PMC free article] [PubMed]

- 38.Leape L, Park R, Kahn J, Brook R. Group judgements of appropriateness: the effect of panel composition. Qual Assur Health Care 1992;4:151-9. [PubMed]

- 39.Coulter I, Adams A, Shekelle P. Impact of varying panel membership on ratings of appropriateness in consensus panels: a comparison of a multi- and single disciplinary panel. Health Serv Res 1995;30:577-87. [PMC free article] [PubMed]

- 40.Ayanian J, Landrum M, Normand S, Guadagnoli E, McNeil B. Rating the appropriateness of coronary angiography – Do practicing physicians agree with an expert panel and each other? N Engl J Med 1998;338:1896-904. [DOI] [PubMed]

- 41.Campbell S, Hann M, Quayle MR, Shekelle P. The effect of panel membership and feedback on ratings in a two-round Delphi survey. Med Care 1999;37:964-8. [DOI] [PubMed]

- 42.Cancer Care Ontario. GTA 2014 Cancer Report. A roadmap to improving cancer services and access to patient care. Toronto: Cancer Care Ontario; 2004.

- 43.Maleyeff J, Kaminsky FC, Jubinville A, Fenn CA. A guide to using performance measurement systems for continuous improvement. J Healthc Qual 2001;23:33-7. [DOI] [PubMed]

- 44.Gibberd R, Pathmeswaran A, Burtenshaw K. Using clinical indicators to identify areas for quality improvement. J Qual Clin Pract 2000;20:136-44. [DOI] [PubMed]

- 45.Shahian DM, Normand SL, Torchiana DF, Lewis SM, Pastore JO, Kuntz RE, et al. Cardiac surgery report cards: comprehensive review and statistical critique. Ann Thorac Surg 2001;72:2155-68. [DOI] [PubMed]

- 46.Singh R, Smeeton N, O'Brien TS. Identifying under-performing surgeons. BJU Int 2003;91:780-4. [DOI] [PubMed]

- 47.Scott I, Youlden D, Coory M. Are diagnosis specific outcome indicators based on administrative data useful in assessing quality of hospital care? Qual Saf Health Care 2004;13:32-9. [DOI] [PMC free article] [PubMed]

- 48.Brook RH, McGlynn EA, Shekelle PG. Defining and measuring quality of care: a perspective from US researchers. Int J Qual Health Care 2000;12:281-95. [DOI] [PubMed]

- 49.Marshall MN, Shekelle PG, Leatherman S, Brook RH. The public release of performance data. JAMA 2000;283:1866-74. [DOI] [PubMed]

- 50.Mukamel DB, Mushlin AI. The impact of quality report cards on choice of physicians, hospitals and HMOs. Jt Comm J Qual Improv 2001;27:20-7. [DOI] [PubMed]

- 51.Solberg LI, Mosser G, McDonald S. The three faces of performance measurement: improvement, accountability, and research. Jt Comm J Qual Improv 1997;23:135-47. [DOI] [PubMed]

- 52.Khuri SF, Daley J, Henderson WG. The comparative assessment and improvement of quality of surgical care in the Department of Veterans Affairs. Arch Surg 2002;137:20-7. [DOI] [PubMed]

- 53.Bradley EH, Holmboe ES, Mattera JA, Roumanis SA, Radford MJ, Krumholz HM. Data feedback efforts in quality improvement: lessons learned from US hospitals. Qual Saf Health Care 2004;13:26-31. [DOI] [PMC free article] [PubMed]

- 54.Ginsburg LS. Factors that influence line managers' perceptions of hospital performance data. Health Serv Res 2003;38:261-86. [DOI] [PMC free article] [PubMed]

- 55.Berwick DM, James B, Coye MJ. Connections between quality measurement and improvement. Med Care 2003;41(1 Suppl):I30-8. [DOI] [PubMed]

- 56.Galvin RS, McGlynn EA. Using performance measurement to drive improvement. A road map for change. Med Care 2003;41(1 Suppl):I48-60. [DOI] [PubMed]