Abstract

This paper reports the outcomes of a study on an integrated situational alignment framework for picture archiving and communication systems (PACS), labeled PISA. Following the design research cycle, complementary validation methods and pilot cases were used to assess the proposed framework and its operationalized survey. In this paper, the authors outline (a) the process of the framework’s development, (b) the validation process with its underlying iterative steps, (c) the outcomes of pilot cases, and (d) improvement opportunities to refine and further validate the PISA framework. Results of this study support empirical application of the framework to hospital enterprises in order to gain insights into their PACS maturity and alignment. We argue that the framework can be applied as a valuable tool for assessment, monitoring, and benchmarking purposes and for strategic PACS planning.

Keywords: PACS, Alignment, Maturity, Performance, Framework, Assessment, Hospital enterprise

Introduction

As investments in healthcare are generally large and mission critical in case of medical imaging technology, the need for structured implementation, measurement approaches, and holistic evaluation methods is expanding [1–3]. The fact that hospitals around the world are re-evaluating their picture archiving and communication system (PACS) implementations reflects this demand [4]. PACS are workflow-integrated imaging systems that are designed to streamline operations throughout the entire patient-care delivery process and have become an integrated component of today’s healthcare delivery system [5]. However, hospitals have often failed to achieve necessary productivity levels and operational efficiencies (e.g., reduction of costs, productivity increase, and optimization of patient episode throughput time) using PACS, even though pursuing a full filmless environment using PACS is a high-cost venture [6].

Currently, hospitals tend to re-evaluate implemented radiology systems to overcome technical and practical limitations of operational file systems and deployed PACS database design [7, 8]. More efficient, extensive, cost-effective, and vendor-independent infrastructure PACS solutions are available to hospitals. This re-evaluation process is also driven by the current volumes of imaging data produced by advanced modalities like CTs and MRs, which have a major impact on the common information system and information technology (IS/IT) architecture. This poses serious challenges in terms of storing growing amounts of data, cross-enterprise document sharing, protecting patient information [9], and general alignment with evolving technologies and disruptive innovations such as the application of serial advanced technology attachment, data grid architecture development, cloud computing, scalable distributed server environments, and service-oriented architectures [10]. As a result, hospitals are planning for major updates and even large-scale replacement trajectories to other PACS vendors to overcome technical and practical limitations and to improve overall system performance [4, 7].

Due to the above developments and the growth of the radiology practice, the need for strategic and prospective direction is becoming more urgent [11]. In practice, strategic planning approaches toward imaging technology, PACS, and PACS (re)deployment are lacking, both in hospital board rooms and in scientific literature. This makes it difficult to harmonize or “align” PACS goals, objectives, and improvement activities with the hospital’s strategic agenda. Achieving optimal alignment of PACS and pursuing its intended goals and objectives within the hospital enterprise appears to be an intricate and poorly examined process that lacks scientific grounding.

Digital radiology (management) systems are difficult to evaluate due to their evolving nature, the high number of involved departments and professionals, and hard-to-define patient outcomes [12]. Earlier, Van de Wetering et al. [3] argued that theories on business–IT alignment, organizational fit, and adoption of IS/IT can help to understand why certain key elements in clinical practice have not been realized. A method that supports the process of optimally aligning PACS and defines the synergetic effects within hospital operations would be very valuable. However, such a method has not yet been developed, empirically applied, and validated.

In developing such a method, the current paper combines an existing model on PACS maturity (i.e., continuous evolvability process from immature stages of growth/maturity toward another level) [13], with the concept of business–IT alignment (i.e., investments made in organizational domains related to PACS should be balanced out in the organization in order to obtain synergizing benefits) [14, 15]. From this, the first objective is to develop a framework and associated survey to empirically assess a hospital’s current PACS maturity and alignment regarding different strategic directions. The second goal is to systematically examine the applicability of this framework and instrument and attentively explore its implications in terms of maturity and alignment on PACS performance at two different pilot hospitals in The Netherlands. Based on the responses from these pilot hospitals, improvements will be made to the PACS integrated situational alignment (PISA) framework and related survey.

The remainder of this paper is outlined as follows: We will first review a synthesized model to measure levels of PACS maturity and subsequently address principles of business/IT alignment and review the concepts of PACS performance. Subsequently, a first version of the PISA framework is proposed. The “Methods and Material” section discusses the applied validation methods, after which results are presented. Several framework improvements are presented, after which the PISA framework is completed. Finally, the paper concludes with a brief discussion on the implications of this study, identifies inherent limitations, sets out a research agenda, and presents the main conclusions.

Defining Framework Constructs

PACS Maturity Concepts

Theories on IS/IT maturity and adoption are well established in business and IS/IT literature, going back to the early 1970s. The concept of the IS/IT maturity stage hypothesis was introduced by Nolan [16] in 1973. Later on, this model was extended, frequently discussed, and adapted [17]. In general, IS/IT maturity models provide insight into the structure of elements that represent process effectiveness of IS/IT in organizations. They also allow organizations to define roadmaps on how to get from one level of maturity and evolve to the next [17]. Recently, a specific PACS maturity model (PMM) [13] was developed that describes PACS maturity and evolvability in the hospital enterprise. Based on a literature review of 34 scientific papers on PACS development and a subsequent meta-analysis, the PMM was built upon three general streams in PACS maturity and evolution: (1) radiological and hospital-wide process improvements, (2) integration optimization and innovation, and (3) enterprise PACS and the electronic patient record. From this, the model defines five cumulative levels of PACS maturity that hospital enterprises can achieve:

Level 1: PACS infrastructure

Level 2: PACS process

Level 3: clinical process capability

Level 4: integrated managed innovation

Level 5: optimized enterprise PACS chain

With progress toward the top maturity level (level 5), operational (workflow) efficiency, IS/IT integration, and quality of care using PACS technology increase. Although the PMM can be interpreted as a straightforward (i.e., sequential) accumulation of PACS investments, it does not explicitly define mechanisms by which hospitals can actually move from one maturity level to another. For developing a PACS alignment framework, however, the PMM serves as a foundation for its underlying body of knowledge and rigorous theoretical approach, including the concept of business–IT alignment.

Concepts on Business/IT Alignment

The concept of “strategic” alignment (also called “fit” [18]) has been a top concern for executives and IT practitioners for decades and refers to applying IS/IT in an appropriate and timely way, in harmony (i.e., complementarity between activities) with business strategies, goals, and needs [19]. It is a central element of strategic planning, the process by which organizations develop and deploy a competitive, long-term strategy in which internal resources are integrated into external opportunities [20].

The classical strategic alignment model of Henderson and Venkatraman [14] is undoubtedly the most cited concept in the field and has been extended by others [21]. Their model argues that a dynamic process is needed to ensure continuous alignment between business and IS/IT domains, to achieve “strategic fit” as well as “functional integration.” The model does, however, have its limitations. For instance, relations in the model are neither operationalized nor clearly defined [15]. Subsequently, Turban et al. [22] developed, and extended, the model for business–IT alignment, containing new mutual relations among business dimensions that are assumed to contribute to the successful implementation and adoption of IS/IT. Another alignment model, better known as the MIT90s framework and a descendant of Leavitt’s diamond, was developed based on the idea of internal “fit” as a dynamic equilibrium of five key organizational dimensions and external fit as strategy formulation based on environmental trends and changes in the market place using IT as an enabler. Many studies since then have used concepts that are incorporated in the MIT90s framework and its model. Thus, it is not surprising that recent alignment models have strong similarities. A shortcoming of most alignment models, however, is that they do not explicate how the dimensions of the model interact with and depend on each other. This shortcoming was addressed by Scheper [15], among others. Scheper starts by defining five organizational dimensions:

I. Strategy and policy (S&P): organization of strategy and policy procedures

II. Organization and processes (O&P): addresses processes as a basic principle for organizational development

III. Monitoring and control (M&C): financial and non-financial management control

IV. Information technology (IT): concerns IT management and development processes

V. People and culture (P&C): reflects value and significance of employees for an organization

Different from most other alignment models, Scheper subsequently developed levels of maturity in measuring the development stage for each of the five dimensions, creating a multidimensional maturity matrix (cf. Sledgianowski et al. [23] for an equivalent approach). Next, he claims that alignment can be directly measured by comparing the maturity levels of all five dimensions at the same time. His alignment principle is based on the idea that organizations can mature each single dimension, but only equalization among all dimensions (i.e., alignment) will significantly improve organizations’ performances.

Multi-factorial PACS Performance

Evaluation methods have proven valuable in the past in order to assess consequences of changing the traditional film-based practice to digital (radiological) workflow [24]. Since then, several scholars argue that enterprise–PACS benefits should be evaluated from different perspectives [2]. As there are many interrelated steps between PACS usage and eventual patient outcome, the imaging workflow chain and substeps that affect the quality of imaging services and clinical outcomes are imperative to the evaluation of PACS [25].

Based on the wider adoption of technology acceptance models and levels of clinical efficacy [26, 27], we define PACS performance as the multi-factorial impacts and benefits produced by the application of PACS. This is expressed in terms of hospital efficiency and clinical effectiveness with respect to PACS workflow and the patients’ clinical journey. For the purpose of this paper, we adopted the outcomes of a meta-analytic approach that has synthesized original PACS sources on PACS performance and balanced evaluation models [3, 28]. Based on a total of 37 papers published between 2000 and 2009 that were included for review purposes in this approach (after applying several inclusion criteria having retrieved 980 key publications and positioning papers), four performance constructs are defined. These constructs were subsequently translated into measurements that are representative elements for the maturity performance measurement of PACS.

Defined by their multi-factorial nature, these constructs include measures that are available for the assessment of PACS in hospitals and can be applied to assess impacts of PACS from the perspective of (a) technology and IS/IT, (b) organizational efficiency, (c) service outcomes, and (d) clinical impact. The “Appendix” also includes all adopted PACS measures. These measures are adopted into our framework since they are valuable for empirical research and likewise relevant for radiology practice.

PISA Framework

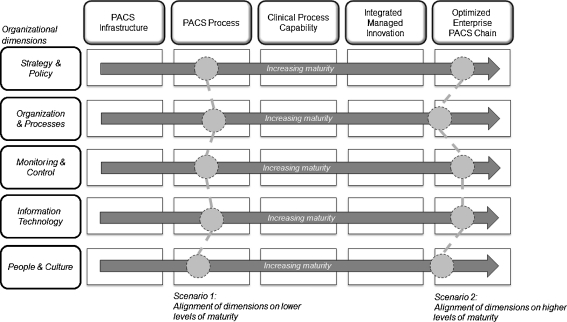

Building on the previous sections, our PISA framework combines two pillars, (1) PACS maturity (i.e., classifying PACS systems according to their stage of development and evolutionary plateau of process improvement) and (2) alignment (i.e., investments made in organizational dimensions related to PACS should be balanced out in the organization in order to obtain synergizing benefits), and claims that both affect PACS performance of the hospital enterprise. Figure 1 provides a schematic sketch of our framework and depicts PACS maturity on the horizontal axis and organizational dimensions on the vertical axis. Of the two pillars, alignment is often the least defined one (if at all) and is often suggested as important without guidelines for practice. In our framework, we explicitly define alignment as the degree of leveling between the five organizational dimensions described in the “Concepts on Business/IT Alignment” section [15], i.e., alignment is measured as the degree to which the five dimensions differ with regard to their maturity levels as previously defined by the PMM (see the “PACS Maturity Concepts” section). Hence, alignment can be expressed within Fig. 1 as a line connecting all five (horizontal) organizational dimensions (the figure also displays two “possible” scenarios of alignment among dimensions at a low and a high level of maturity for demonstrative purposes). Next, the framework claims that the degree of alignment and synergetic mechanisms between the several organizational dimensions (i.e., independent variable) is directly and positively related to PACS performance (i.e., dependent variable).

Fig. 1.

PISA framework

Methods and Material

Framework Development Process

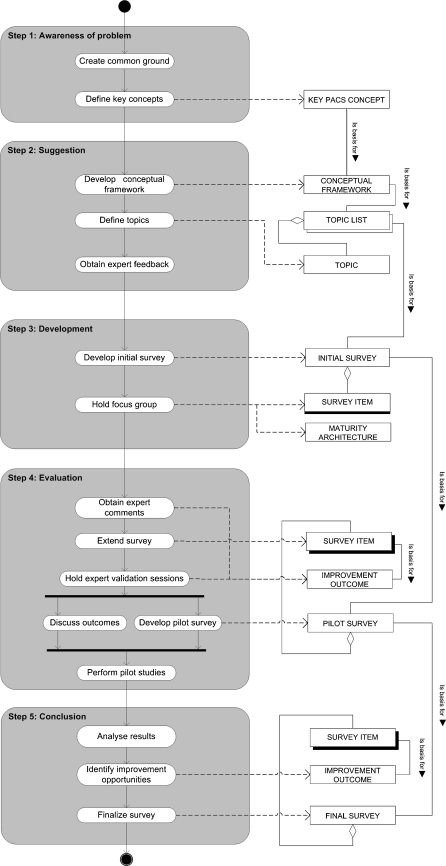

A project team applied an incremental development process that follows the design science methodology. In this approach, knowledge is produced by constructing and evaluating artifacts, which are subsequently used as input for a better awareness of the problem [29]. To ensure quality and validity of the developed measurement instrument, we applied complementary validation methods. Guidelines were used when building the artifacts [29], securing their face and content validity, performing extensive pre-tests, and executing pilot cases at two different hospitals. Figure 2 provides an overview of the five interrelated process steps that were conducted, using a process delivery diagram [30]. During the first step, the project team reviewed literature on PACS maturity and alignment and created common ground concerning the research topic. Based on this review and the project team’s own field experience, key concepts were developed. In step 2, these key concepts were critically reviewed by two recognized PACS experts (a professor of radiology and a head of a radiology department). These experts provided the project team with feedback, input, and advice on key concepts in diagnostic imaging and general radiology practices. Taking these suggestions into account, an initial survey was created (step 3), which was subsequently discussed with industry consultants and a PACS R&D manager during a focus group meeting. One result of this focus group was a technical architecture for each of the PACS maturity stages of the framework, which was helpful in redefining technical aspects in the survey (this architecture is available upon request). The initial survey contained 28 statements: four statements for each organizational dimension (i.e., independent variable) and eight for PACS performance (i.e., dependent variable).
At the start of step 4, two PACS experts once again reviewed each item of this initial construct and commented on the applied scales and on the significance and importance of each item for PACS maturity and alignment. Also, both experts evaluated the PACS maturity and alignment level of their own hospital. Outcomes suggested some extensions of the survey. All input was transformed into individual validation sessions (“Delphi method”) with three radiologists, a neurologist, a technologist, and a medical informatics researcher. These sessions were used to evaluate the structure of the constructed framework and comment on the specified PACS topics and features of the operationalized survey. Using the “talk-aloud protocol,” experts articulated their thoughts and considerations as they filled in the survey. These six experts (representing four hospitals and four different economic-geographical areas in The Netherlands) were recruited using personal and professional networks. Outcomes were used to improve our survey statements on validity (i.e., do the incorporated survey items measure what they are supposed to measure), reliability (i.e., is each question posed correctly and can radiologists, technologists, and PACS administrators address them), and empirical application (e.g., size of survey and tooling). Results were discussed within the project team. The survey was extended to a total of 45 statements, covering most intersections of the five horizontal axes (i.e., organizational dimensions) and the five vertical axes (i.e., PACS maturity levels). Per organizational dimension, the items were formulated according to a cumulative order, i.e., that of “increasing complexity” along the maturity scale.

Fig. 2.

Framework development process

The results of the two pilots are described in “Results” section of this paper (the piloted survey items are presented in “Appendix”). Step 5 is the conclusion, i.e., the final questionnaire as a result of the complete validation of the framework.

Pilot Sites

In the spring of 2010, two Dutch hospitals with different characteristics were selected (see Table 1) to participate as pilot cases. The two hospitals were known within the field as actively involved in optimizing their PACS deployment. As such, the pilot could focus on applying the framework for the first time to two “advanced hospitals.” This provided good opportunities to improve the contents of the survey and, where necessary, the clarity of the statements.

Table 1.

Background characteristics of pilot sites

| Hospital | Number of beds | Yearly exams | Capacity of radiologists (FTE) | PACS vendor | Experience with PACS (years) |
|---|---|---|---|---|---|
| Hospital A | 360 | 78,146 | 4.5 | Carestream | 6 |
| Hospital B | 900 | 200,000 | 12.5 | Agfa | 5.5 |

Per hospital, two radiologists (including the head of department), the head of radiological technologists, and a PACS administrator completed an online survey within a secured web environment. These informants appear to be the most familiar with the subject of PACS maturity and performance, making intra-institutional validity likely. Including multiple stakeholders from the radiology department also reduces common source variance associated with sampling from the same source [19, 31], excluding face validity issues. The respondents completed the survey separately, in order to avoid systematic bias and any peer pressure to give particular answers. It took the eight respondents approximately 20–25 min to complete the survey. Comparing the individual results within each hospital allowed us to measure levels of agreement between the respondents.

The applied seven-point Likert scale for each statement consisted of the classic values “strongly disagree,” “disagree,” “somewhat disagree,” “neutral,” “somewhat agree,” “agree,” and “strongly agree.” This scale is typically used for subjective performance evaluation. It is applicable for balanced assessments rather than objective measurements. In general, outcomes of assessments in complex areas such as medical processes are often qualitative and hence rely on subjective perceptions of clinicians rather than on quantitative statistics [32]. All statements were phrased in the present tense, but respondents were asked to provide answers for both the current and the future/preferred situation of their hospital. Next to the items measuring PACS maturity as defined by our PISA framework, the survey also contained some general questions (e.g., name, function, years of experience using PACS, etc.). Finally, PACS performance was measured using 13 performance statements on how well PACS contributes to efficiency and effectiveness (see the “Multi-factorial PACS Performance” section).

Results

Data and Measurement

Analysis of the data from the eight completed pilot questionnaires was performed in two steps. First, the results were analyzed by comparing the descriptive statistics for both hospitals. The mean, standard deviation (SD), and median (M) of all maturity items were computed for the two hospitals. Two assumptions guided this comparison. First, we assume that there is low variation in scores per maturity level and organizational dimension, indicating that there is a level of agreement between the four respondents of each hospital. Second, we assume for both hospitals that there is a decreasing mean score for each next maturity level per organizational dimension, confirming the cumulative order (“difficulty”) of the maturity items as defined by the PISA framework. Table 2 sets out the results for each question (Q), where Q1 and Q2 are the two statements per maturity level (ML).

Table 2.

Descriptive statistics

| Item | Hospital A Mean | Hospital A SD | Hospital B Mean | Hospital B SD | All pilot respondents Mean | All pilot respondents SD | All pilot respondents M |
|---|---|---|---|---|---|---|---|
| Organizational dimension 1: strategy and policy | | | | | | | |
| Maturity level 3 Q1 | 4.50 | 1.29 | 3.25 | 1.26 | 3.88 | 1.36 | 3.50 |
| Maturity level 3 Q2 | 3.75 | 1.50 | 4.25 | 0.96 | 4.00 | 1.20 | 3.50 |
| Maturity level 4 Q1 | 4.25 | 0.96 | 3.00 | 1.83 | 3.63 | 1.51 | 4.00 |
| Maturity level 4 Q2 | 6.75 | 0.50 | 6.00 | 1.41 | 6.38 | 1.06 | 7.00 |
| Maturity level 5 Q1 | 3.50 | 1.00 | 4.50 | 2.52 | 4.00 | 1.85 | 4.00 |
| Maturity level 5 Q2 | 3.75 | 1.89 | 3.00 | 0.82 | 3.38 | 1.41 | 3.50 |
| Organizational dimension 2: organization and processes | | | | | | | |
| Maturity level 3 Q1 | 4.50 | 2.38 | 3.50 | 1.73 | 4.00 | 2.00 | 4.50 |
| Maturity level 3 Q2 | 2.00 | 0.82 | 1.25 | 0.50 | 1.63 | 0.74 | 1.50 |
| Maturity level 4 Q1 | 1.50 | 0.58 | 3.75 | 0.50 | 2.63 | 1.30 | 2.50 |
| Maturity level 4 Q2 | 5.25 | 1.50 | 5.50 | 0.58 | 5.38 | 1.06 | 5.50 |
| Maturity level 5 Q1 | 1.25 | 0.50 | 1.00 | 0.00 | 1.13 | 0.35 | 1.00 |
| Maturity level 5 Q2 | 4.75 | 1.71 | 4.50 | 1.73 | 4.63 | 1.60 | 5.00 |
| Organizational dimension 3: monitoring and control | | | | | | | |
| Maturity level 3 Q1 | 4.00 | 0.82 | 3.75 | 1.71 | 3.88 | 1.25 | 4.00 |
| Maturity level 3 Q2 | 3.50 | 1.00 | 3.25 | 0.96 | 3.38 | 0.92 | 4.00 |
| Maturity level 4 Q1 | 4.67 | 1.15 | 2.25 | 1.26 | 3.29 | 1.70 | 4.00 |
| Maturity level 4 Q2 | 2.75 | 1.50 | 3.75 | 1.26 | 3.25 | 1.39 | 4.00 |
| Maturity level 5 Q1 | 4.75 | 0.96 | 3.25 | 2.22 | 4.00 | 1.77 | 4.00 |
| Maturity level 5 Q2 | 3.75 | 1.26 | 4.00 | 1.83 | 3.88 | 1.46 | 4.00 |
| Organizational dimension 4: information technology | | | | | | | |
| Maturity level 3 Q1 | 5.25 | 0.50 | 5.75 | 1.26 | 5.50 | 0.93 | 5.50 |
| Maturity level 3 Q2 | 5.50 | 1.29 | 4.75 | 2.63 | 5.13 | 1.96 | 5.50 |
| Maturity level 4 Q1 | 4.75 | 0.96 | 5.50 | 1.00 | 5.13 | 0.99 | 5.50 |
| Maturity level 4 Q2 | 5.50 | 1.00 | 2.75 | 2.06 | 4.13 | 2.10 | 5.00 |
| Maturity level 5 Q1 | 5.00 | 1.00 | 5.00 | 0.82 | 5.00 | 0.82 | 5.00 |
| Maturity level 5 Q2 | 3.50 | 1.29 | 3.50 | 1.91 | 3.50 | 1.51 | 3.50 |
| Organizational dimension 5: people and culture | | | | | | | |
| Maturity level 3 Q1 | 3.00 | 1.41 | 4.25 | 2.22 | 3.63 | 1.85 | 4.00 |
| Maturity level 3 Q2 | 4.50 | 1.00 | 2.50 | 1.29 | 3.50 | 1.51 | 3.50 |
| Maturity level 4 Q1 | 3.25 | 1.71 | 5.00 | 2.00 | 4.13 | 1.96 | 4.50 |
| Maturity level 4 Q2 | 3.25 | 1.26 | 4.75 | 1.89 | 4.00 | 1.69 | 4.00 |
| Maturity level 5 Q1 | 4.25 | 0.96 | 5.00 | 0.82 | 4.63 | 0.92 | 5.00 |
| Maturity level 5 Q2 | 3.00 | 1.83 | 5.00 | 1.41 | 4.00 | 1.85 | 4.50 |

Based on the descriptives in Table 2, several remarks can be made. Regarding the mean scores per hospital, most statements comply with the assumed cumulative order, i.e., mean scores decrease with higher maturity levels for each organizational dimension. There are a few exceptions, however; these items are in “bold.” For instance, item Q2 of maturity level 4 related to “strategy and policy” has an unexpectedly high mean score (6.38), deviating from the premise that the mean score at lower maturity levels would be higher because these items are less “difficult” for hospitals to agree with.

Likewise, item Q2 of maturity level 3 related to “organization and processes” has a lower mean score than assumed, i.e., items at higher maturity levels of this dimension show higher instead of lower mean scores. These deviations are in fact violations of our accumulation assumption but also provide essential improvement opportunities.
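The check for the assumed cumulative order can be illustrated with a minimal sketch. It uses the pooled item means ("All pilot respondents") from Table 2 for the two dimensions discussed above. Averaging Q1 and Q2 per maturity level and comparing consecutive levels is an assumption made here for illustration; the exact criterion used to mark deviating items is not specified in the text. The names `POOLED_MEANS` and `order_violations` are hypothetical.

```python
from statistics import mean

# Pooled item means ("All pilot respondents") copied from Table 2.
# Keys are maturity levels; values are the means of statements Q1 and Q2.
POOLED_MEANS = {
    "strategy and policy": {3: (3.88, 4.00), 4: (3.63, 6.38), 5: (4.00, 3.38)},
    "organization and processes": {3: (4.00, 1.63), 4: (2.63, 5.38), 5: (1.13, 4.63)},
}


def order_violations(levels):
    """Return (lower, higher) level pairs where the higher level scores higher.

    Assumption: the cumulative order is checked on the average of Q1 and Q2
    per maturity level, comparing consecutive levels only.
    """
    ordered = [(ml, mean(items)) for ml, items in sorted(levels.items())]
    return [
        (low, high)
        for (low, low_mean), (high, high_mean) in zip(ordered, ordered[1:])
        if high_mean > low_mean
    ]


for dimension, levels in POOLED_MEANS.items():
    print(dimension, order_violations(levels))
```

Under these assumptions, both dimensions show a deviation between maturity levels 3 and 4, which matches the two items singled out in the text.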

In the same vein, we analyzed the scores on the PACS performance items for both hospitals. PACS performance was measured through 13 questions that address the perceived benefits of PACS application in the respondents’ hospital. Table 3 shows the results.

Table 3.

PACS performance descriptives

| Item | Hospital A Mean | Hospital A SD | Hospital A M | Hospital B Mean | Hospital B SD | Hospital B M | All pilot respondents Mean | All pilot respondents SD | All pilot respondents M |
|---|---|---|---|---|---|---|---|---|---|
| Clinical contribution | | | | | | | | | |
| C1 | 5.50 | 1.29 | 5.50 | 4.50 | 0.00 | 4.50 | 5.17 | 1.17 | 5.00 |
| C2 | 6.25 | 0.50 | 6.00 | 7.00 | 0.00 | 7.00 | 6.63 | 0.52 | 7.00 |
| C3 | 4.33 | 2.08 | 5.00 | 5.25 | 1.71 | 5.50 | 4.86 | 1.77 | 5.00 |
| C4 | 5.33 | 0.58 | 5.00 | 6.50 | 0.00 | 6.50 | 5.80 | 0.84 | 6.00 |
| Organizational efficiency | | | | | | | | | |
| O1 | 4.75 | 0.96 | 4.50 | 5.00 | 0.00 | 5.00 | 4.86 | 0.69 | 5.00 |
| O2 | 3.00 | 1.41 | 3.00 | 6.33 | 0.58 | 6.00 | 5.00 | 2.00 | 6.00 |
| Service construct | | | | | | | | | |
| S1 | 4.50 | 1.00 | 5.00 | 6.00 | 0.00 | 6.00 | 5.25 | 1.04 | 5.50 |
| S2 | 5.25 | 0.50 | 5.00 | 2.25 | 0.96 | 2.50 | 3.75 | 1.75 | 4.00 |
| S3 | 5.25 | 0.50 | 5.00 | 5.75 | 0.50 | 6.00 | 5.50 | 0.53 | 5.50 |
| S4 | 6.00 | 0.00 | 6.00 | 5.75 | 0.50 | 6.00 | 5.86 | 0.38 | 6.00 |
| Technical information system | | | | | | | | | |
| T1 | 4.50 | 1.91 | 4.00 | 5.25 | 2.87 | 6.50 | 4.88 | 2.30 | 5.50 |
| T2 | 5.50 | 1.29 | 5.50 | 6.50 | 0.58 | 6.50 | 6.00 | 1.07 | 6.00 |
| T3 | 5.50 | 1.29 | 5.50 | 5.00 | 0.82 | 5.00 | 5.25 | 1.04 | 5.00 |

Productivity was calculated as the total number of exams (also specified per modality) divided by the total capacity (i.e., FTE radiologists).
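As a brief illustration of this ratio, the sketch below applies it to the yearly exams and radiologist capacity listed in Table 1. Per-modality figures are not reported in this section, so only the overall ratio is shown; the names `PILOT_SITES` and `productivity` are hypothetical.

```python
# Yearly exams and radiologist capacity (FTE) as listed in Table 1.
PILOT_SITES = {
    "Hospital A": {"yearly_exams": 78_146, "radiologist_fte": 4.5},
    "Hospital B": {"yearly_exams": 200_000, "radiologist_fte": 12.5},
}


def productivity(yearly_exams, radiologist_fte):
    """Exams per radiologist FTE per year (total exams / total capacity)."""
    return yearly_exams / radiologist_fte


for name, site in PILOT_SITES.items():
    print(f"{name}: {productivity(**site):,.0f} exams per FTE radiologist")
```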

Based on the comments of the respondents and the descriptive statistics, some remarks can be made. During the pilot, it appeared that for hospital B questions C1 and C4 could only be answered by radiologists, making them apparently radiologist specific. Also, statement C2 has a very high mean and median score accompanied by a low SD, suggesting that the scale of this item needed modification.

Besides evaluation by analysis of the hospital descriptive statistics, all eight respondents commented on the items and provided suggestions for their elaboration and modification. In general, the respondents of the pilot concluded that the survey was useful, easy to interpret, and a valuable tool for further PACS development in their hospital.

Instrument Refinement

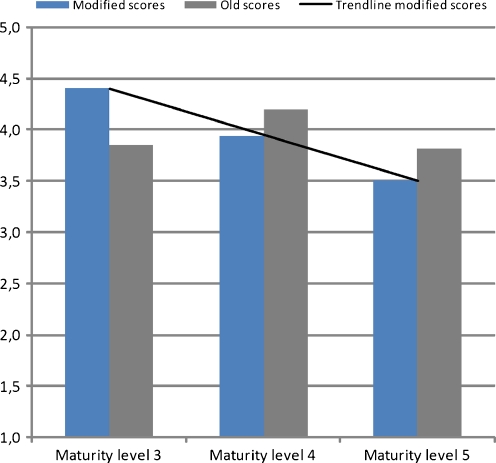

By critically considering the results of the pilot statistics, including the comments and recommendations of the respondents, we modified several survey statements. Table 4 lists the modifications proposed after the pilot studies. From the mean “modified” and “old” scores of statements for each separate maturity level (see Fig. 3), it can be seen that the modified survey statements were successfully ordered by PACS maturity, i.e., in hierarchical order.

Table 4.

Survey modifications

| Number | Dimension | Type of modification | Impact on model |
|---|---|---|---|
| 1 | Strategy and policy | ML3Q1 was interchanged with ML4Q2 based on perceived complexity. In its new position ML4Q2 was reworded following recommendations of the respondents | Better fit of model and ordered complexity |
| 2 | Organization and processes | ML5Q2 was interchanged with ML3Q2 based on perceived complexity of the statement | Better fit of model and ordered complexity |
| 3 | Organization and processes | Statement ML4Q2 was reworded to fit the complexity of maturity level 4 based on comments of one of the respondents | Statement ML4Q2 better fits maturity level 4 and follows increased complexity |
| 4 | Information technology | Statement ML5Q1 was reworded to fit the complexity of maturity level 5 | Statement ML5Q1 better fits maturity level 5 and follows increased complexity |
| 5 | People and culture | Statement ML5Q1 was reworded to fit the complexity of maturity level 5 | Statement ML5Q1 better fits maturity level 5 and follows increased complexity |
| 6 | PACS performance | Answer categories of performance metric C2 (see “Appendix”) have been rescaled | Results in better distribution of scores |

Fig. 3.

Mean scores per PACS maturity level

Measuring PACS Maturity and Alignment

With the maturity items and measurements evaluated, the next step is to examine how PACS “alignment” can be measured. As defined earlier in our PISA framework, alignment is indicated by the differences between the maturity scores on the five organizational dimensions. Hence, overall maturity scores for each of the five organizational dimensions were calculated. To accommodate the survey design, in which we defined successive maturity items, an algorithm was constructed that captures this principle of accumulating maturity.

This algorithm includes the following assumptions:

Mean scores (ranging from 1 to 7) were used to calculate scores for each organizational dimension $i$ for maturity levels 3–5: $\mu_{i3}$, $\mu_{i4}$, and $\mu_{i5}$.

PACS maturity levels ($\lambda$) were assigned points: $\lambda_3 = 200$, $\lambda_4 = 300$, and $\lambda_5 = 400$. Thus, each $\lambda$ has a total range of 100 points.

A mean score of 4 on the applied Likert scale was used as a threshold ($T$). A mean per statement of $\geq 4$ confirms the $\lambda$ to which the statement is assigned. A mean of $< 4$ indicates that the preceding $\lambda$ is more applicable.

Because we defined $T$ at 4 on our Likert scale, the remaining score space for both scores $\geq 4$ (i.e., $7 - T$) and $< 4$ (i.e., $T - 1$) is applied in the calculation of maturity, represented by $\gamma$ (so $\gamma = 3$ in both cases).

Using the above assumptions, the following three-step algorithm was applied to each organizational dimension:

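I.

$$
\pi_{i3} =
\begin{cases}
(\mu_{i3} - T) \times \dfrac{100}{\gamma} + \lambda_3, & \text{if } \mu_{i3} \ge 4 \\[1ex]
\dfrac{\mu_{i3} - 1}{\gamma} \times \lambda_3, & \text{otherwise}
\end{cases}
$$

II.

$$
\pi_{i4} =
\begin{cases}
(\mu_{i4} - T) \times \dfrac{100}{\gamma} + \lambda_4, & \text{if } \mu_{i4} \ge 4 \\[1ex]
\pi_{i3}, & \text{if } \mu_{i4} < 4 \text{ and } \dfrac{\mu_{i4} - 1}{\gamma} \times \lambda_4 \le \pi_{i3} \\[1ex]
\dfrac{\mu_{i4} - 1}{\gamma} \times \lambda_4, & \text{otherwise}
\end{cases}
$$

III.

$$
\pi_{i5} =
\begin{cases}
(\mu_{i5} - T) \times \dfrac{100}{\gamma} + \lambda_5, & \text{if } \mu_{i5} \ge 4 \\[1ex]
\dfrac{\pi_{i3} + \pi_{i4}}{2}, & \text{if } \mu_{i5} < 4 \text{ and } \dfrac{\mu_{i5} - 1}{\gamma} \times \lambda_5 \le \pi_{i4} \\[1ex]
\dfrac{\mu_{i5} - 1}{\gamma} \times \lambda_5, & \text{otherwise}
\end{cases}
$$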
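The three steps above can be sketched in Python as follows. This is a minimal illustration rather than the implementation used in the study: the function and constant names are hypothetical, and the value 3 for γ is an assumption derived from the stated score space (7 − Τ = Τ − 1 = 3).

```python
# Sketch of the three-step maturity algorithm for one organizational dimension.
# All names are illustrative assumptions; only the formulas come from the text.

T = 4.0                                   # Likert threshold
GAMMA = 3.0                               # assumed: 7 - T == T - 1 == 3
LAMBDA = {3: 200.0, 4: 300.0, 5: 400.0}   # points assigned to maturity levels


def confirmed(mu, level):
    """Score when the mean meets the threshold (mu >= T)."""
    return (mu - T) * (100.0 / GAMMA) + LAMBDA[level]


def scaled(mu, level):
    """Score when the mean falls below the threshold (mu < T)."""
    return (mu - 1.0) / GAMMA * LAMBDA[level]


def maturity_scores(mu3, mu4, mu5):
    """Return (pi3, pi4, pi5) for the mean scores at maturity levels 3, 4, 5."""
    # Step I: level 3
    pi3 = confirmed(mu3, 3) if mu3 >= T else scaled(mu3, 3)

    # Step II: level 4 never drops below the level-3 score
    if mu4 >= T:
        pi4 = confirmed(mu4, 4)
    else:
        candidate = scaled(mu4, 4)
        pi4 = pi3 if candidate <= pi3 else candidate

    # Step III: level 5 falls back to the mean of the two lower scores
    if mu5 >= T:
        pi5 = confirmed(mu5, 5)
    else:
        candidate = scaled(mu5, 5)
        pi5 = (pi3 + pi4) / 2.0 if candidate <= pi4 else candidate

    return pi3, pi4, pi5


if __name__ == "__main__":
    # Hypothetical mean scores for one dimension
    print(maturity_scores(5.5, 3.9, 2.0))
```

With means exactly at the threshold (4, 4, 4), the function returns the base points of each level (200, 300, 400), which matches the point assignment described in the assumptions. The subsequent normalization and weighting are not sketched here because the text does not specify the weights.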

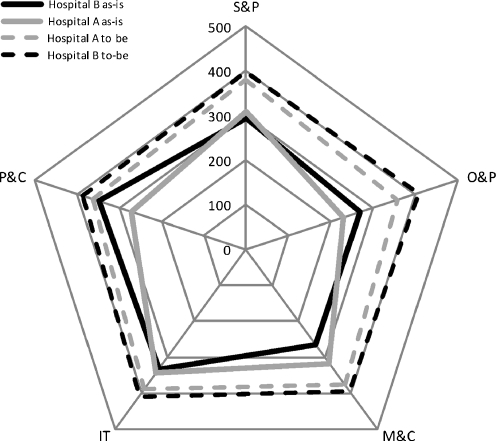

The PACS maturity scores ($\pi_{i3}$, $\pi_{i4}$, and $\pi_{i5}$) calculated following this algorithm were then normalized and summed, weighting their contribution to the overall PACS maturity (see Fig. 4).

Fig. 4. PACS maturity and alignment scores

Now that we have created monotonically cumulating maturity scales for each organizational dimension, we next define the overall alignment measure (α). Different methods are possible to calculate the differences between the maturity scores of the five organizational dimensions. In this study, we applied the difference between the maximum and minimum maturity scores of the five dimensions as the measure [33]. The “smaller” this difference, the “better” the alignment between the dimensions (see Table 5). An alternative for the alignment measurement is to calculate the standard deviation of the five dimensional maturity scores, or to select the minimum score (as the “weakest link”). In practice, these alternative measurements for alignment strongly correlate with our initial “min–max” measurement.

Table 5. PACS maturity and alignment scores

| Hospital | S&P | O&P | M&C | IT | P&C | α | β |
|---|---|---|---|---|---|---|---|
| A (as-is) | 308 | 229 | 317 | 344 | 273 | 115 | 5.1 |
| B (as-is) | 294 | 270 | 266 | 333 | 349 | 83 | 5.5 |
| A (to-be) | 381 | 358 | 376 | 389 | 363 | 31 | – |
| B (to-be) | 399 | 406 | 393 | 409 | 386 | 23 | – |

Table 5 shows the final and aggregated PACS maturity and alignment scores for both hospitals, including the mean score for PACS performance (β). Comparing these means allows us to explore the claim that high PACS alignment coincides with high PACS performance. Obviously, this can only be done tentatively, as we only have data on two hospitals.
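The min–max alignment measure, and the two alternatives mentioned earlier, can be checked against the dimension scores in Table 5 with a short Python sketch. The function names are hypothetical, and the use of the population standard deviation is an assumption, since the text does not state which variant was applied.

```python
from statistics import pstdev

# Dimension scores copied from Table 5
TABLE_5 = {
    "A (as-is)": {"S&P": 308, "O&P": 229, "M&C": 317, "IT": 344, "P&C": 273},
    "B (as-is)": {"S&P": 294, "O&P": 270, "M&C": 266, "IT": 333, "P&C": 349},
    "A (to-be)": {"S&P": 381, "O&P": 358, "M&C": 376, "IT": 389, "P&C": 363},
    "B (to-be)": {"S&P": 399, "O&P": 406, "M&C": 393, "IT": 409, "P&C": 386},
}


def alpha_min_max(scores):
    """Alignment as the difference between the highest and lowest dimension score."""
    return max(scores.values()) - min(scores.values())


def alpha_std(scores):
    """Alternative: spread of the dimension scores (population standard deviation, assumed)."""
    return pstdev(list(scores.values()))


def weakest_link(scores):
    """Alternative: the dimension with the lowest maturity score."""
    return min(scores, key=scores.get)


for hospital, scores in TABLE_5.items():
    print(hospital, alpha_min_max(scores), round(alpha_std(scores), 1), weakest_link(scores))
```

For the four rows, `alpha_min_max` reproduces the α column of Table 5 (115, 83, 31, and 23).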

From Table 5, it can be concluded that hospital B has a lower α-score than hospital A, which implies that its PACS alignment (i.e., degree of alignment and synergetic mechanisms between the several organizational dimensions) is better. Hospital B also has a higher β score than A, implying better PACS performance. While hospital A has lower maturity scores on O&P and P&C in particular, hospital B has deficiencies in the M&C dimension. Hence, for hospital A, the gap between IT and O&P is to be closed to reach optimal alignment (represented by the solid gray line in Fig. 4). Likewise, optimal alignment for hospital B is inhibited by the distance between the scores on the P&C and M&C dimensions. Items related to O&P have moderately low maturity scores, whereas the IT dimension demonstrates relatively higher maturity in both hospitals. This seems to reflect a (current) strong technology focus that might hinder a balanced perspective that also takes operational/organizational efficiencies into account.

In doing our pilot, we also collected qualitative information to evaluate our PISA framework and underlying concepts/expectations. From interviews, it became clear that both hospitals currently have a predominant “local” (departmental) focus on PACS, rather than aiming at aligning PACS operations hospital-wide and beyond hospital boundaries. The following excerpt from a respondent from hospital B clarifies this view:

Our hospital has a clear local orientation towards operations with respect to PACS. Strategy and policy on a regional, cross-enterprise level is currently missing….

Also, the outcomes of this pilot suggest that both hospitals have improvement opportunities on several organizational dimensions. Our PISA framework supports hospitals to define specified investments that will improve PACS maturity and alignment.

Application in Practice

Now that we have described the basic concepts of PACS maturity and alignment, the next step is to glean some common and practical guidelines that will support hospital decision makers in deciding how to move in a certain direction (i.e., toward a mature PACS), given the hospital’s specific resources, competencies, and current system infrastructure. Hospital strategic planning processes are formed on the basis of internal, external, market-driven, and non-market-driven components [34] (i.e., they are situational). Improvement activities to realize a PACS maturity transition are likewise conditional on the given situation, such as the PACS’s current state of maturity and the specified strategic alignment direction. Thus, PACS maturity and alignment improvement programs depend on what is required to realize the transition toward higher levels of PACS maturity. As such, we believe that desired maturity levels cannot be achieved without conscientiously governing and addressing all process-focused elements and deployment activities at each of the intermediary maturity levels. This implies that careful consideration is required when moving toward a certain maturity level.

In order to define specified improvement activities (with accompanying investments) that can be executed along the five organizational dimensions and that best meet a hospital’s current and future needs, we suggest the following steps:

As a first step, assess the maturity, alignment, and performance state of the currently deployed PACS (“as-is”) and determine the desired “to-be” situation, using the PACS maturity model and survey and involving multiple stakeholders (e.g., radiologists, technologists, and PACS administrators).

A second step concerns performing a fit-gap analysis to determine whether the current PACS maturity level is a precursor for the to-be situation or whether the desired maturity level “leaps” over intermediary stages. Hence, at this stage, decision makers need to decide whether the improvement roadmap follows an incremental improvement process (stages follow one another by definition), radical changes (i.e., introducing radical changes to processes and not following the logic of monotonic sequential development), or a hybrid combination of the two. In terms of strategic direction, this decision determines whether the hospital structure and PACS processes persist on a previously chosen path by retaining current strategies and structures.

In the third and final step, we suggest setting out all improvement activities and making the deliberate investments that are required to achieve the desired level of PACS maturity and alignment. For this purpose, a set of measures can be defined and organized into projects that take into account the risks involved, investment costs, critical success factors, and benefits. In the course of the execution of all (hospital-wide) activities, the level of alignment between the five organizational dimensions should be monitored to manage similarities, overlap, and synergy between the improvement projects in order to realize strategic objectives and optimal deployment of PACS.
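As an illustration of the fit-gap analysis in the second step, the following Python sketch compares the as-is and to-be scores of Table 5 per dimension. It rests on two assumptions that are not part of the study: a score is mapped to a maturity level by its 100-point band (200–299 for level 3, 300–399 for level 4, 400 and above for level 5), and a transition is labeled a “leap” when the to-be level lies more than one level above the as-is level. All function names are hypothetical.

```python
# Dimension scores copied from Table 5
AS_IS = {
    "A": {"S&P": 308, "O&P": 229, "M&C": 317, "IT": 344, "P&C": 273},
    "B": {"S&P": 294, "O&P": 270, "M&C": 266, "IT": 333, "P&C": 349},
}
TO_BE = {
    "A": {"S&P": 381, "O&P": 358, "M&C": 376, "IT": 389, "P&C": 363},
    "B": {"S&P": 399, "O&P": 406, "M&C": 393, "IT": 409, "P&C": 386},
}


def level_band(score):
    """Map a score to a maturity level by its 100-point band (illustrative assumption)."""
    if score < 200:
        return 2  # below the level-3 band; treated here as level 2 or lower
    return min(int(score // 100) + 1, 5)


def fit_gap(as_is, to_be):
    """Return (dimension, gap, level step, path) tuples, largest gap first."""
    rows = []
    for dim in as_is:
        gap = to_be[dim] - as_is[dim]
        step = level_band(to_be[dim]) - level_band(as_is[dim])
        # Assumed rule: skipping an intermediary level counts as a "leap"
        path = "leap" if step > 1 else "incremental"
        rows.append((dim, gap, step, path))
    return sorted(rows, key=lambda row: row[1], reverse=True)


for hospital in AS_IS:
    print(hospital, fit_gap(AS_IS[hospital], TO_BE[hospital]))
```

Under these assumptions, the largest gap for both hospitals lies in the O&P dimension, and only hospital B’s O&P target would skip an intermediary level. Whether such a transition should be treated as radical remains a judgment for the decision makers involved.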

In practice, hospitals define their own improvement roadmaps as incremental, radical, or hybrid strategies. Based on the above steps and accompanying considerations, each optimization roadmap defines improvement projects that can be executed according to the five organizational dimensions. Both the alignment and fit approaches on which the model is built imply that multi-disciplinary teams should be formed to deliver (on tactical and operational levels) the agreed objectives [2]. Subsequently, actions and results should be monitored using project management methods. Basically, evolving toward a higher level of PACS maturity includes critically reflecting on the chosen path (i.e., direction) while continuously maintaining alignment between the business and IS/IT dimensions and improving overall PACS performance.

Discussion

Motivated by what appears to be an intricate process on which scientific sources seem scarce, this paper proposes an integrated situational framework that allows hospitals to empirically assess PACS maturity and alignment. By explicitly addressing a hierarchical order (“increasing complexity”) of survey items along the maturity scale, communality, and interrelationship of stages of maturity, this paper avoided common pitfalls in business–IT alignment survey instruments and case research. Our study provided initial support for the basic claim that alignment and performance in the PACS domain coincide, thus offering decision makers practical mechanisms for setting goals, critically reflecting on current systems, and strategically planning toward higher levels of PACS maturity and alignment.

Despite its appeal, our framework has several limitations that call for caution in interpreting the findings. First, this study was based on only two hospitals. This inhibits generalizability of key results. However, by restricting the scope of the study, we were able to get an in-depth view of both hospitals’ operations. Second, this study did not provide any “best practices” that other hospitals can benefit from, nor does it provide extensive handles for comprehensive strategic planning to obtain optimum PACS performance. Since the two studied hospitals differ in their respective PACS maturity and alignment scores, this could imply that the PISA framework may elucidate best practices. Finally, there is a need to develop a more robust measure of maturity and alignment. The applied calculation may have inherent biases since it was partly based on a rule-based algorithm.

The above limitations suggest avenues for further research. First, it is our ambition to validate the PISA framework in a representative group of Dutch hospitals. This allows the project team to examine extreme (high or low) scores and their respective impact on PACS performance by applying conventional analytical techniques, path analysis, and structural equation modeling to verify construct validity, factor reliability, and overall goodness-of-fit of the model. Second, the underlying mechanisms through which PACS performance is achieved are by no means fully understood. Therefore, additional research is required to identify interaction effects (co-alignment [18]) of (latent) variables connecting maturity and alignment to PACS performance.

Conclusions

We argue that better PACS performance can be achieved by explicitly aligning maturity scores on each of the five organizational dimensions simultaneously, and hence from an integrated management perspective. The PISA framework demonstrates promising results, and the outcomes of this study support empirical application of the framework to hospital enterprises in order to gain insights into their PACS maturity and alignment. In practice, the PISA framework appears to be a useful checklist to systematically identify improvement areas for hospitals in the PACS domain, and it is designed for further empirical research and application in clinical practice.

Acknowledgments

The authors wish to thank the respondents from both hospitals for their kind cooperation, which made this study possible. Their comments and suggestions also improved our framework and survey considerably.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Appendix: PISA Survey Statements (see footnote 2)

This appendix contains all statements and measures of our piloted survey. It includes the five organizational dimensions, with their maturity levels (ML), and all PACS performance measures. For each organizational dimension statement, a seven-point Likert scale was applied (see “Pilot Sites” section). For PACS performance, the scale is included in the table. For each ML, two questions (Q1, Q2) were included. Based on our rigorous approach, we believe that two questions are optimal from both a scientific and practical perspective. Also, our experts were convinced this would cover a sufficient amount of detail.

| Maturity level (ML) | Statement |
|---|---|
| Organizational dimension 1: strategy and policy | |
| ML 3 | (Q1) Short- and long-term (investment) plans concerning PACS are aligned between radiology and other departments/wards |
| | (Q2) Within the hospital, emphasis is on direct display of images from the archive instead of required storage capacity |
| ML 4 | (Q1) Integration of PACS with the electronic patient record is an important strategic objective of the hospital |
| | (Q2) The basic principle with the usage of PACS is primary interpretation by radiologists using uncompressed (highest resolution) images from all modalities |
| ML 5 | (Q1) The external environment is consciously inquired for new developments and products to optimize PACS functionality |
| | (Q2) Strategic and operational (multi-year) plans contain impact and opportunities for chain partners with respect to PACS |
| Organizational dimension 2: organization and processes | |
| ML 3 | (Q1) The hospital actively improves its service level using quality standards and measures for digital PACS workflow |
| | (Q2) All departments of the hospital enterprise can request and plan radiology exams using an electronic order-entry system (that is integrated with PACS/RIS) |
| ML 4 | (Q1) All diagnostic images from other departments (including cardiology, nuclear medicine, endoscopy, gynecology, pathology) are stored into one central PACS archive |
| | (Q2) At each dedicated workspace, radiologists have all required patient information (e.g., lab results, reports, previous studies, etc.) and integrated 2D/3D reconstruction tools |
| ML 5 | (Q1) The hospital exchanges PACS data in real time with chain partners using standard exchange protocols (cross-enterprise document sharing/XDS-i) if necessary |
| | (Q2) Every image (including old images for comparison) is instantly available on any workstation in the hospital for every user at any time |
| Organizational dimension 3: monitoring and control | |
| ML 3 | (Q1) Prognoses concerning the number of radiology exams and required PACS storage capacity are performed on a recurrent basis |
| | (Q2) The hospital measures and monitors both financial and non-financial PACS data (e.g., number of exams, quality, patient satisfaction, productivity, etc.) |
| ML 4 | (Q1) Service level agreements with PACS vendors (for instance concerning maintenance, functionality, costs, and storage capacity) are periodically evaluated |
| | (Q2) PACS generates comprehensive management information that is always on time |
| ML 5 | (Q1) The hospital confronts PACS vendors if service level agreements are not (or partially) achieved |
| | (Q2) The hospital has an accurate overview of the contribution of PACS to overall cost prices per radiology exam (for all modalities) |
| Organizational dimension 4: information technology | |
| ML 3 | (Q1) PACS is compatible with current international standards and classifications (Health Level 7 and Digital Imaging and Communication in Medicine) |
| | (Q2) PACS exchanges information with the radiology information systems and hospital information system without any complications |
| ML 4 | (Q1) The hospital adopts standard “off-the-shelf” (vendor-independent) hardware (for archiving solutions) and software for PACS |
| | (Q2) The impact on PACS storage capacity and requirements is prognosed when modalities are upgraded and/or when new acquisition devices are acquired |
| ML 5 | (Q1) The hospital applies reagent (security) protocols throughout the hospital enterprise in preserving privacy of patient data, PACS data security, and back-up (including preventing a “single point of failure”) |
| | (Q2) PACS is an integral part of the hospital’s electronic patient record |
| Organizational dimension 5: people and culture | |
| ML 3 | (Q1) The hospital actively involves users of PACS with the development of customizable user interfaces |
| | (Q2) PACS process and procedure knowledge are extensively applied within the hospital by clinicians and technologists |
| ML 4 | (Q1) End-users of PACS affect the decision making process in selecting a specific PACS vendor |
| | (Q2) End-users affect digital PACS workflow and functionality improvements |
| ML 5 | (Q1) Radiologists are aware of the fact that PACS has the potential to influence the competitive position of the hospital and service delivery toward chain partners |
| | (Q2) Innovative solutions (e.g., integration of new tools and applications) with PACS are discussed during clinicoradiological meetings |

| PACS performances (and ID) | Applied answer scale |
|---|---|
| Clinical impact | |
| Interpretation time (C1): time to process a series of CT exams (defined as the time interval between availability of full data set on screen and finalization) | Likert 1–7 (<5, 5–8, 8–11, 11–14, 14–17, 17–20, >20 min) |
| Diagnostic accuracy (C2): sufficiency rate for which current radiology workspaces (including viewing monitors) are sufficient for image interpretation | Likert 1–7 (0–20%, 20–40%, 40–60%, 60–70%, 70–80%, 80–90%, 90–100%) |
| Communication efficacy (C3): PACS contribution toward communication of critical findings and interdepartmental collaboration | Likert 1–7 (no contribution at all–profound contribution) |
| Patient management contribution (C4): contribution of PACS toward decision making in diagnostic process or treatment (plan) of patient | Likert 1–7 (no contribution at all–profound contribution) |
| Organizational efficiency | |
| Report turnaround time (O1): sum of time after execution, reporting, and availability of the finalized report of CT exams | Likert 1–7 (<2, 2–4, 4–6, 6–8, 8–10, 10–12, >12 h) |
| Budget ratio (O2): percentage (over)expenditures of allocated PACS budgets | Likert 1–7 (no over expenditure, 0–10%, 10–20%, 20–30%, 30–40%, >40%) |
| Service outcomes | |
| Patient waiting time (S1): elapsed time between a patient’s arrival at radiology (on appointment) and the subsequent beginning of the radiology exam | Likert 1–7 (<5, 5–10, 10–15, 15–20, 20–25, 25–30, >30 min) |
| Referring physician satisfaction (S2): satisfaction of referring clinicians on availability of imaging data and associated reports | Likert 1–7 (totally not satisfied–totally satisfied) |
| Patient satisfaction (S3): satisfaction of patients on service delivery | Likert 1–7 (totally not satisfied–totally satisfied) |
| User satisfaction (S4): user satisfaction on the current user interface and functionality of PACS | Likert 1–7 (totally not satisfied–totally satisfied) |
| Technical and IS/IT perspective | |
| Average time to display (T1): average time to display of old CT studies (with approximately 400 images) from PACS (with full data loaded on screen) | Likert 1–7 (<10 s, 10–20 s, 20–30 s, 30–60 s, 60 s–5 min, >5 min, sometimes no retrieval) |
| Average time to display (T2): average time to display of newly acquired CT studies (with approximately 400 images) from PACS (with full data set on screen) | Likert 1–7 (<5 s, 5–10 s, 10–20 s, 20–30 s, 30–60 s, 60 s–5 min, >5 min) |
| Display time (T3): each dedicated workstation is capable of displaying uncompressed CT studies (averaging 1,500–2,000 images) without any delay | Likert 1–7 (totally agree–totally not agree) |

Footnotes

1. The project team consists of two professors and an associate professor of organization and information and business–IT alignment and a PACS/medical informatics researcher.

2. Statements for maturity levels 1 and 2 for each organizational domain are omitted for practical reasons and because all Dutch hospitals have a PACS implemented (initial maturity level). Level 2 can be deduced from the scores assigned to level 3 statements.

References

- 1. Buccoliero L, Calciolari S, Marsilio M, Mattavelli E. Picture, archiving and communication system in the Italian NHS: a primer on diffusion and evaluation analysis. J Digit Imaging. 2009;22(1):34–47. doi: 10.1007/s10278-007-9101-0.

- 2. Duyck P, Pynoo B, Devolder P, Voet T, Adang L, Ovaere D, et al. Monitoring the PACS implementation process in a large university hospital – discrepancies between radiologists and physicians. J Digit Imaging. 2010;23(1):73–80. doi: 10.1007/s10278-008-9163-7.

- 3. Van de Wetering R, Batenburg R, Versendaal J, Lederman R, Firth L. A balanced evaluation perspective: picture archiving and communication system impacts on hospital workflow. J Digit Imaging. 2006;19(Suppl 1):10–17. doi: 10.1007/s10278-006-0628-2.

- 4. Andriole KP, Khorasani R. Implementing a replacement PACS: issues to consider. J Am Coll Radiol. 2007;4(6):416–418. doi: 10.1016/j.jacr.2007.03.009.

- 5. Huang HK. PACS is only in the beginning of being used as a clinical research tool. The 24th international EuroPACS conference; June 15th to 17th, 2006; Trondheim, Norway. pp 1–10.

- 6. Cheung N-T, Lam A, Chan W, Kong J. Integrating images into the electronic patient record of the hospital authority of Hong Kong. Comput Med Imaging Graph. 2005;29:137–142. doi: 10.1016/j.compmedimag.2004.09.007.

- 7. Morin RL, Cecil RA. PACS archiving: a multivariate problem and solution. J Am Coll Radiol. 2006;3(1):69–73. doi: 10.1016/j.jacr.2005.09.013.

- 8. Quiles J, Souto TM, Pereira M, Tahoces PG, Vidal JJ. Technical considerations for multimodality clinical workstations in a Hospital PACS project. Int Congr Ser. 2005;1281:1010–1015. doi: 10.1016/j.ics.2005.03.207.

- 9. Langer S. Challenges for data storage in medical imaging research. J Digit Imaging. 2010:1–5. doi: 10.1007/s10278-010-9311-8.

- 10. Nagy P. The future of PACS. Med Phys. 2007;34(7):2676–2682. doi: 10.1118/1.2743097.

- 11. Chan S. The importance of strategy for the evolving field of radiology. Radiology. 2002;224(3):639–648. doi: 10.1148/radiol.2243011390.

- 12. Kundel HL, Seshadri SB, Langlotz CP, Lanken PN, Horii SC, Nodine CF, et al. Prospective study of a PACS: information flow and clinical action in a medical intensive care unit. Radiology. 1996;199(1):143–149. doi: 10.1148/radiology.199.1.8633138.

- 13. Van de Wetering R, Batenburg RS. A PACS maturity model: a systematic meta-analytic review on maturation and evolvability of PACS in the hospital enterprise. Int J Med Inform. 2009;78(2):127–140. doi: 10.1016/j.ijmedinf.2008.06.010.

- 14. Henderson JC, Venkatraman N. Strategic alignment: leveraging information technology for transforming organisations. IBM Syst J. 1993;32(1):4–16. doi: 10.1147/sj.382.0472.

- 15. Scheper WJ. Business IT alignment: solution for the productivity paradox (in Dutch). The Netherlands: Deloitte & Touche; 2002.

- 16. Nolan RL. Managing the computer resource: a stage hypothesis. Communications of the Association for Computing Machinery. 1973;16(7):399–405. doi: 10.1145/362280.362284.

- 17. Galliers RD, Sutherland AR. Information systems management and strategy formulation: the ‘stages of growth’ model revisited. J Inf Syst. 1991;1(2):89–114. doi: 10.1111/j.1365-2575.1991.tb00030.x.

- 18. Venkatraman N. The concept of fit in strategy research: towards verbal and statistical correspondence. Acad Manage Rev. 1989;14(3):423–444.

- 19. Luftman J, Kempaiah R. An update on business–IT alignment: “A Line” has been drawn. MIS Quarterly Executive. 2007;6(3):165–177.

- 20. King WR, Cleland DI. A new method for strategic systems planning. Bus Horiz. 1975;18(4):55–64. doi: 10.1016/0007-6813(75)90027-0.

- 21. Chan YE, Reich BH. IT alignment: an annotated bibliography. J Inf Technol. 2008;22:316–396. doi: 10.1057/palgrave.jit.2000111.

- 22. Turban, McLean, Wetherbe. Information technology for management: making connections for strategic advantage. Chichester: Wiley; 1999.

- 23. Sledgianowski D, Luftman JR, Reilly RR. Development and validation of an instrument to measure maturity of IT business strategic alignment mechanisms. Information Resources Management Journal. 2006;19(3):18–33. doi: 10.4018/irmj.2006070102.

- 24. Andriole K, Luth DM, Gould RG. Workflow assessment of digital versus computed radiography and screen-film in the outpatient environment. J Digit Imaging. 2002;15(Suppl 1):124–126. doi: 10.1007/s10278-002-5079-9.

- 25. Shannon RH. Computers and diagnostic radiology: the state of the art of meeting medical care objectives. J Med Syst. 1977;1(1):37–49. doi: 10.1007/BF02222875.

- 26. Fineberg HV, Bauman R, Sosman M. Computerized cranial tomography. Effect on diagnostic and therapeutic plans. JAMA. 1977;238(3):224–227. doi: 10.1001/jama.238.3.224.

- 27. Langlotz CP, Seshadri S. Technology assessment methods for radiology systems. Radiol Clin North Am. 1996;34(3):667–679.

- 28. Van de Wetering R, Batenburg R. Defining and formalizing: a synthesized review on the multi-factorial nature of PACS performance. International Journal of Computer Assisted Radiology and Surgery. 2010;5(Suppl 1):170. doi: 10.1007/s11548-010-0414-y.

- 29. Hevner AR, March ST, Park J, Ram S. Design science in information systems research. MIS Quarterly. 2004;28(1):75–105.

- 30. Van de Weerd I, Brinkkemper S. Meta-modeling for situational analysis and design methods. Hershey: Idea Group; 2008.

- 31. Kearns GS, Lederer AL. The effect of strategic alignment on the use of IS-based resources for competitive advantage. J Strateg Inf Syst. 2003;9:265–293. doi: 10.1016/S0963-8687(00)00049-4.

- 32. Crowe B, Sim L. An assessment of the effect of the introduction of a PACS and RIS on clinical decision making and patient management at Princess Alexandra Hospital Brisbane, Australia. Int Congr Ser. 2005;1281:964–967. doi: 10.1016/j.ics.2005.03.347.

- 33. Batenburg RS, Versendaal JM. Business alignment in the CRM domain: predicting CRM performance. In: Leino T, Saarinen T, Klein S, editors. Proceedings of the 12th European conference on information systems. Turku: Turku School of Economics and Business Administration; 2004.

- 34. Liedtka JM. Formulating hospital strategy: moving beyond a market mentality. Health Care Management Review. 1992;17:21–26. doi: 10.1097/00004010-199201710-00004.