Abstract

The purpose of this investigation was to examine the relationships between young cochlear-implant users' abilities to produce the speech features of nasality, voicing, duration, frication, and place of articulation and their abilities to utilize the features in three different perceptual conditions: audition-only, vision-only, and audition-plus-vision. Subjects were 23 prelingually deafened children who had at least 2 years of experience (34 months on average) with a Cochlear Corporation Nucleus cochlear implant. They completed both the production and perception versions of the Children's Audio–visual Feature Test, which comprises ten consonant-vowel syllables. An information transmission analysis performed on the confusion matrices revealed that children produced the place of articulation fairly accurately and voicing, duration, and frication less accurately. Acoustic analysis indicated that voiced sounds were not distinguished from unvoiced sounds on the basis of voice onset time or syllabic duration. Subjects who were more likely to produce the place feature correctly were likely to have worn their cochlear implants for a greater length of time. Pearson correlations revealed that subjects who were most likely to hear the place of articulation, nasality, and voicing features in an audition-only condition were also most likely to speak these features correctly. Comparisons of test results collected longitudinally also revealed improvements in production of the features, probably as a result of cochlear-implant experience and/or maturation.

INTRODUCTION

After 2 years of experience with a cochlear implant, most prelingually deafened children recognize at least some speech cues while wearing their devices (Fryauf-Bertschy et al., 1992). Potentially, their abilities to hear may have begun to influence their abilities to speak. An intriguing question to ask about experienced users is how their speech recognition and production skills correspond, for both clinical reasons (e.g., answers might provide guidance to speech-language pathologists who wish to integrate auditory training goals with speech therapy goals) and theoretical reasons (e.g., answers might illuminate general principles of motor development). Speech recognition for these children probably entails attending to both auditory and visual cues.

A. Speech recognition with auditory and visual cues

Young hearing-aid or cochlear-implant users who speak relatively well typically have good speech recognition skills also (Boothroyd, 1969; Gold, 1978; Markides, 1970; Osberger et al., 1993; Smith, 1975; Stark and Levitt, 1974; Tye-Murray et al., 1995). However, some children who have good speech recognition skills do not necessarily acquire good speech production skills.

Children who have profound hearing impairments also attend to visible speech information to acquire the sounds and words of their speech community (e.g., Smith, 1975). That is, children are more likely to produce “visible” phonemes and words correctly than “nonvisible” phonemes and words. For example, /bim/ is more likely to be spoken accurately than /kin/, because /b,m/ entail visible lip movement whereas /k,n/ do not. It is possible that once children who have profound hearing loss receive a cochlear implant, they may become less reliant on visual information for acquiring speech and more reliant on auditory information.

B. Information coded by the Nucleus cochlear implant

Few investigators have performed a fine-grain analysis of how children's abilities to recognize speech information in the electrical signal influence their acquisition of speech sounds. In this section, we will consider the type of information conveyed by the Cochlear Corporation Nucleus cochlear implant, the only device currently approved by the Federal Food and Drug Administration for implantation in children. We will then consider how availability of this information might influence speech production.

The F0F1F2 version of the Nucleus cochlear implant speech processor is designed to present first formant (F1) information to the five most apical of the 22 electrodes of its electrode array, and second formant (F2) information to the remaining electrodes. Information about fundamental frequency (F0) is conveyed by pulse rate during voiced segments of the signal, while electrodes are stimulated at random intervals during unvoiced segments. Information about signal intensity is conveyed by pulse amplitude. In 1990, the multipeak (MPEAK) processing strategy became available. In addition to presenting information presented by the F0F1F2 strategy, it also presents information about the third and fourth formants during voiced segments of the signal, and more high-frequency information during unvoiced segments. Loudness is coded by both pulse amplitude and duration (Wilson, 1993).

One means that has been used to assess how well adults receive information via a cochlear implant is to perform a feature analysis of consonant confusion errors, entailing a statistical procedure called information transmission analysis (Miller and Nicely, 1955). A feature analysis reveals which features of articulation are detected and utilized, and may include analyses of the nasality, voicing, duration, frication, and place features. A feature analysis allows one to infer, at least in a preliminary way, which parameters of the speech signal children utilize for recognizing spoken language (Tye-Murray and Tyler, 1989), even though the relationship between the features and acoustic cues is one-to-many. For instance, in order to respond to the nasality feature, children may respond to a nasal formant, which typically falls below 300 Hz, to alterations occurring in the first formant transitions to and from the abutting vowels, and to intensity changes. How well children detect and utilize the voicing and duration features may be indicative of how well they can respond to some timing cues and/or to fundamental frequency. Analysis of their responses to the frication feature of articulation may indicate how well they detect and utilize high-frequency turbulence. Finally, the place feature may indicate children's sensitivity to spectral changes, especially changes occurring in the second formant region (Kent and Read, 1992; Pickett, 1980).

Adults who use the F0F1F2 processing strategy have been found to perceive the voicing and nasality features relatively well, and the place feature relatively poorly (Tye-Murray and Tyler, 1989; Tye-Murray et al., 1992). As such, we might expect children with cochlear-implant experience to hear the nasality and voicing features relatively well also. A particular child's ability to produce these features may be strongly related to his or her ability to perceive them auditorily because the visual signal conveys little information pertaining to either nasality or voicing (i.e., children do not obtain cues about how these features are produced by watching talkers in their language community).

C. Purpose

This investigation examined the relationship between speech production and recognition skills. Subjects were prelingually deafened children who had received at least 2 years of experience with a cochlear implant. Using a consonant–vowel syllable test, we considered whether and how subjects' abilities to produce the speech features of voicing, nasality, duration, frication, and place of articulation corresponded with their vision-only, audition-only, and/or audition-plus-vision feature recognition performance.

I. METHODS

A. Subjects

Twenty-three prelingually deafened children who use a Nucleus cochlear implant participated in this investigation. Age at cochlear implant connection ranged from 2 years 7 months to 14 years 2 months and averaged 7 years 3 months (s.d.=3 years 6 months). Subjects had to have acquired at least 24 months of experience with a cochlear implant to participate. On average, subjects had 34 months of experience (s.d.=13 months). All subjects were assigned an identification number for the purpose of this investigation. Biographical characteristics and audiological data are presented in Table I, which provides a list of subjects' etiology, age at onset of deafness, age at cochlear-implant connection, months of cochlear-implant experience, as well as cochlear-implant stimulus mode and coding strategy. In addition, word and phoneme percent correct scores are provided based on transcriptions of a story retell task (Tye-Murray et al., 1995). Scores ranged from 1% to 78% words correct and 14% to 92% phonemes correct, suggesting that some subjects had poor speech production skills and some had exceptionally good speech intelligibility. All subjects lived at home and attended a public school at the time of testing, with the exception of subject 15 who lived at home and attended the Iowa State School for the Deaf. Subjects were reported to use simultaneous communication (i.e., spoken and manually coded English) at home and school.

TABLE I.

Biographical and audiological data for each subject.

| Subject | Etiology | Age at onset of deafness (months) | Age at connection (years:months) | Months of experience | Processing strategy | Stimulus mode | Intelligibility: % words correct | Intelligibility: % phonemes correct |
|---|---|---|---|---|---|---|---|---|
| S1 | unknown | congenital | 3:11 | 72 | F0F1F2 | BP | 78 | 92 |
| S2 | unknown | congenital | 4:10 | 36 | MPEAK | CG | 43 | 66 |
| S3 | other | congenital | 4:04 | 24 | MPEAK | CG | 28 | 56 |
| S4 | unknown | 9 | 4:06 | 24 | MPEAK | CG | 9 | 40 |
| S5 | meningitis | 9 | 4:00 | 24 | MPEAK | CG | 14 | 42 |
| S6 | unknown | congenital | 4:10 | 36 | MPEAK | BP | 1 | 57 |
| S7 | meningitis | 12 | 4:03 | 48 | MPEAK | CG | 64 | 71 |
| S8 | unknown | congenital | 5:07 | 36 | MPEAK | CG | 25 | 60 |
| S9 | Usher's syndrome | congenital | 6:00 | 48 | MPEAK | BP+1 | 5 | 26 |
| S10 | unknown | congenital | 5:05 | 48 | MPEAK | BP+1 | 11 | 50 |
| S11 | unknown | congenital | 5:00 | 48 | MPEAK | BP+2 | 14 | 45 |
| S12 | unknown | congenital | 5:09 | 24 | MPEAK | CG | 58 | 82 |
| S13 | unknown | congenital | 5:09 | 24 | MPEAK | BP+1 | 20 | 76 |
| S14 | meningitis | 30 | 6:00 | 24 | MPEAK | CG | 12 | 59 |
| S15 | meningitis | 11 | 5:02 | 24 | MPEAK | BP+2 | 1 | 30 |
| S16 | unknown | congenital | 7:05 | 48 | F0F1F2 | CG | 50 | 81 |
| S17 | Mondini deformity | congenital | 14:02 | 24 | MPEAK | CG | 1 | 29 |
| S18 | Mondini deformity | congenital | 15:04 | 48 | F0F1F2 | BP | 9 | 39 |
| S19 | hereditary | congenital | 13:02 | 24 | MPEAK | BP+2 | 68 | 68 |
| S20 | hereditary | congenital | 11:01 | 24 | MPEAK | BP+2 | 2 | 14 |
| S21 | meningitis | 26 | 9:05 | 24 | MPEAK | BP+1 | 1 | 16 |
| S22 | meningitis | 15 | 10:10 | 24 | MPEAK | CG | 7 | 40 |
| S23 | unknown | 8 | 9:08 | 24 | MPEAK | BP+1 | 29 | 74 |
| Mean | | | 7.2 years | 33.9 | | | | |
| s.d. | | | 3.6 years | 13.4 | | | | |

Stimulus modes: BP=bipolar; CG=common ground; BP+1=bipolar+1; BP+2=bipolar+2.

B. Assessment tools

Production and perception skills were assessed with the Children's Audio–visual Feature Test (Tyler et al., 1991). The test comprises items that most 4- and 5-year-old children can recognize: seven alphabet letters and three common words. The stimuli are P, T, C, B, D, V, Z, key, knee, and me.

When testing perception skills, an audiologist repeated one word and the subject touched an item on the response form that had an illustration of each test stimulus. For a given test condition, each item was repeated six times in random order. Although it would have been desirable to obtain a greater number of stimulus repetitions in order to increase reliability, this was not feasible due to the children's limited attention spans and other time constraints. When possible, the test was administered in three conditions: audition-only, where the clinician concealed her lower face; vision-only, where the subject removed the cochlear implant coil, thus removing any auditory input, and watched the clinician say the stimuli; and audition-plus-vision, where the clinician spoke face-to-face to the subject who wore the cochlear implant. Due to time constraints or fatigue, S5 did not complete any audiological testing and S6 and S14 did not complete vision-only testing. The responses were compiled into consonant confusion matrices. An information transmission analysis was then performed for the purpose of determining how well subjects detected and utilized the features of nasality, voicing, duration, frication, and place. The place of articulation feature contained three categories; all other features were binary. Consonants that are periodic in nature (/b,d,v,z,n,m/) were grouped together for the voicing feature, while consonants that are spoken with prolonged turbulence (/s,v,z/) were grouped together for the frication feature. The nasality feature distinguished consonants spoken with a nasal resonance (/n,m/). The place feature distinguished consonants by where they are produced in the mouth: front (/p,b,m,v/), middle (/t,s,d,z,n/), or back (/k/). Finally, the duration feature distinguished between sounds associated with a lengthy noise segment (/s,z/) and the remaining stimuli. This classification system was adapted from Miller and Nicely (1955).
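The feature classification and the information transmission analysis described above can be illustrated with a short sketch. It assumes the usual Miller and Nicely (1955) formulation, in which the information transmitted for a feature is the mutual information between stimulus and response categories divided by the stimulus entropy; the software actually used in this investigation is not described, so the function and variable names below (`FEATURES`, `collapse`, `relative_information_transmitted`) are illustrative assumptions only.

```python
import math
from collections import defaultdict

# Consonant order used for the rows (stimuli) and columns (responses).
CONSONANTS = ["p", "t", "k", "s", "b", "d", "v", "z", "n", "m"]

# Feature categories as listed in the text (place has three categories,
# all other features are binary).
FEATURES = {
    "voicing": {c: int(c in "bdvznm") for c in CONSONANTS},
    "frication": {c: int(c in "svz") for c in CONSONANTS},
    "nasality": {c: int(c in "nm") for c in CONSONANTS},
    "duration": {c: int(c in "sz") for c in CONSONANTS},
    "place": {
        c: "front" if c in "pbmv" else "back" if c == "k" else "middle"
        for c in CONSONANTS
    },
}


def collapse(matrix, feature):
    """Pool a 10x10 consonant confusion matrix into feature categories."""
    cats = FEATURES[feature]
    pooled = defaultdict(float)
    for i, stim in enumerate(CONSONANTS):
        for j, resp in enumerate(CONSONANTS):
            pooled[(cats[stim], cats[resp])] += matrix[i][j]
    return pooled


def relative_information_transmitted(matrix, feature):
    """Percent information transmitted: 100 * T(stimulus; response) / H(stimulus)."""
    pooled = collapse(matrix, feature)
    n = sum(pooled.values())
    row = defaultdict(float)  # stimulus-category totals
    col = defaultdict(float)  # response-category totals
    for (s, r), count in pooled.items():
        row[s] += count
        col[r] += count
    # Mutual information in bits; empty cells contribute nothing.
    t = sum(
        (c / n) * math.log2(c * n / (row[s] * col[r]))
        for (s, r), c in pooled.items()
        if c > 0
    )
    # Entropy of the stimulus categories in bits.
    h = -sum((c / n) * math.log2(c / n) for c in row.values() if c > 0)
    return 100.0 * t / h if h > 0 else 0.0


# Two limiting cases: perfect identification and purely random responding.
perfect = [[6 if i == j else 0 for j in range(10)] for i in range(10)]
uniform = [[1] * 10 for _ in range(10)]
```

With these definitions, a matrix with all responses on the diagonal yields 100% for every feature, and a matrix with equal counts in every cell yields 0%, which brackets the scores reported in Table IV.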

Production testing with the Children's Audio-visual Feature Test was conducted by a speech-language pathologist, and proceeded as follows: Before testing began, a subject was shown a picture of each stimulus item and asked to name it. If a subject was unable to name a picture, an imitative model was provided, and the subject was asked to name the picture again. The most common error response during this screening procedure was "I" for a picture depicting a person pointing to herself. The correct response is "me." The naming task for a particular picture could be repeated up to two times as necessary. If a subject could not name an item on the third attempt, the test was not administered.

During testing, subjects produced each stimulus three times in random order. Responses were recorded. For analysis, the responses were played to four listeners who are familiar with the speech of talkers who are severely or profoundly hearing impaired. Listeners were provided with an answer sheet containing a listing of all ten syllables for each response. After each of the subject's productions was played, listeners circled the syllable they heard from among the closed set. Following Eguchi and Hirsh (1969), a composite confusion matrix containing the four listeners' responses was constructed for each subject. Thus each test item had a total of 12 responses (4 listeners ×3 repetitions by the child). An information transmission analysis was performed on the individual matrices.
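As a minimal sketch of how a composite matrix of this kind could be assembled, the following pools (stimulus, response) judgments from all listeners and repetitions into one table of counts. The function name `composite_matrix` and the example judgments are hypothetical and are not data from this investigation.

```python
CONSONANTS = ["p", "t", "k", "s", "b", "d", "v", "z", "n", "m"]


def composite_matrix(judgments):
    """Count (stimulus, response) pairs pooled over listeners and repetitions."""
    index = {c: i for i, c in enumerate(CONSONANTS)}
    matrix = [[0] * len(CONSONANTS) for _ in CONSONANTS]
    for stim, resp in judgments:
        matrix[index[stim]][index[resp]] += 1
    return matrix


# Hypothetical example: 3 repetitions of /p/ judged by 4 listeners (12 responses),
# with one listener hearing /b/ on every repetition.
judgments = [("p", "p")] * 9 + [("p", "b")] * 3
matrix = composite_matrix(judgments)
```

Each stimulus row of a complete matrix built this way sums to 12, the number of listener judgments per test item.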

II. RESULTS

A. Consonant production

Performance for the production version of the Children's Audio–visual Feature Test varied widely, with scores ranging from 12% to 78% total correct (subjects 13 and 1, respectively). On average, subjects scored 37% consonants correct (chance performance=10%).

A consonant confusion matrix for the entire group appears in Table II. This matrix was constructed with the responses of the normal-hearing judges to the subjects' consonant productions. With the exception of /v/ (28% phonemes correct), consonants associated with visible facial movement, /p,b,m/, were produced with the highest accuracy (47% phonemes correct, 66%, and 42%, respectively). One interpretation of this finding is that subjects have learned to produce these sounds, perhaps in part, by watching others in their language community speak them. The fricatives, /z,s/, were mispronounced most often (14% phonemes correct and 24%, respectively). In the majority of errors, the target consonant and the actual production shared all features but one. For instance, subjects often substituted one sound with its voiced or unvoiced cognate. The unvoiced /p/ was most often substituted with the voiced /b/ (70% of the error responses for /p/ were /b/) and vice versa (48% of the error responses for /b/ were /p/); /d/ was most often substituted with /t/ (33% of the error responses for /d/ were /t/), and /z/ was most often substituted with /s/ (30% of the error responses for /z/ were /s/).

TABLE II.

Consonant confusion matrix for the entire group of subjects, for the production version of the Children's Audio–visual Feature Test.

| Stimulus (rows) / Response (columns) | /p/ | /t/ | /k/ | /s/ | /b/ | /d/ | /v/ | /z/ | /n/ | /m/ | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|
| /p/ | 130 | 3 | 4 | 4 | 102 | 8 | 8 | 3 | 5 | 9 | 276 |
| /t/ | 11 | 122 | 21 | 35 | 11 | 40 | 6 | 13 | 10 | 7 | 276 |
| /k/ | 11 | 48 | 92 | 20 | 12 | 25 | 7 | 13 | 27 | 21 | 276 |
| /s/ | 11 | 38 | 24 | 66 | 15 | 55 | 8 | 33 | 13 | 13 | 276 |
| /b/ | 46 | 4 | 4 | 1 | 181 | 10 | 8 | 0 | 6 | 16 | 276 |
| /d/ | 10 | 58 | 19 | 48 | 9 | 102 | 2 | 20 | 3 | 5 | 276 |
| /v/ | 45 | 23 | 6 | 14 | 54 | 26 | 78 | 10 | 7 | 13 | 276 |
| /z/ | 6 | 50 | 11 | 70 | 10 | 54 | 13 | 40 | 12 | 10 | 276 |
| /n/ | 6 | 16 | 20 | 0 | 29 | 52 | 9 | 6 | 93 | 45 | 276 |
| /m/ | 14 | 2 | 2 | 1 | 89 | 9 | 16 | 1 | 26 | 116 | 276 |
| Total | 290 | 364 | 203 | 259 | 512 | 381 | 155 | 139 | 202 | 255 | |
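The percentages quoted in the text can be recomputed directly from the counts in Table II. The sketch below copies the table into a dictionary and derives percent correct per consonant, the share of a given substitution among the errors for a consonant, and the overall percent correct; the helper names are illustrative and not part of the original analysis.

```python
CONSONANTS = ["p", "t", "k", "s", "b", "d", "v", "z", "n", "m"]

# Counts from Table II: rows are stimuli, columns are listener responses.
TABLE_II = {
    "p": [130, 3, 4, 4, 102, 8, 8, 3, 5, 9],
    "t": [11, 122, 21, 35, 11, 40, 6, 13, 10, 7],
    "k": [11, 48, 92, 20, 12, 25, 7, 13, 27, 21],
    "s": [11, 38, 24, 66, 15, 55, 8, 33, 13, 13],
    "b": [46, 4, 4, 1, 181, 10, 8, 0, 6, 16],
    "d": [10, 58, 19, 48, 9, 102, 2, 20, 3, 5],
    "v": [45, 23, 6, 14, 54, 26, 78, 10, 7, 13],
    "z": [6, 50, 11, 70, 10, 54, 13, 40, 12, 10],
    "n": [6, 16, 20, 0, 29, 52, 9, 6, 93, 45],
    "m": [14, 2, 2, 1, 89, 9, 16, 1, 26, 116],
}


def percent_correct(stim):
    """Percentage of responses to a stimulus that matched the stimulus."""
    row = TABLE_II[stim]
    return 100.0 * row[CONSONANTS.index(stim)] / sum(row)


def error_share(stim, resp):
    """Percentage of the error responses to `stim` that were `resp`."""
    row = TABLE_II[stim]
    errors = sum(row) - row[CONSONANTS.index(stim)]
    return 100.0 * row[CONSONANTS.index(resp)] / errors


def overall_percent_correct():
    """Diagonal total divided by the grand total, as a percentage."""
    correct = sum(TABLE_II[c][i] for i, c in enumerate(CONSONANTS))
    total = sum(sum(row) for row in TABLE_II.values())
    return 100.0 * correct / total


print(round(percent_correct("p")))        # 47
print(round(error_share("p", "b")))       # 70
print(round(overall_percent_correct()))   # 37
```

The overall value of 37% agrees with the group mean reported at the start of this section.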

In order to examine further the confusions between voiced and unvoiced cognates, an acoustic analysis was performed on these speech samples (i.e., /p,b,t,d,s,z/). Two subjects were excluded from the analysis because their breathy voice quality made noise segments and formants not easily discernible on the visual displays. The speech samples were digitized (12-bit quantization rate, 10-kHz sampling rate, and bandpass filtering from 50 to 4800 Hz) using the Computerized Speech Lab by Kay Elemetrics. Acoustical measures were made on spectrographic displays of the samples, and included voice onset time (VOT) and syllabic duration. VOT was measured from the onset of the aperiodic noise segment (occurring in the midrange frequencies) to the onset of the fundamental frequency. Syllabic duration was measured from the onset of the aperiodic noise segment to the end of the syllable's first formant.

The mean VOT and syllable durations are presented in Table III. Paired comparison t tests were performed to compare the measures made for each member of a cognate pair. Only one comparison was significant: The VOT for syllables beginning with /p/ was significantly longer than the VOT for syllables beginning with /b/ (t=3.53, p<0.001). These acoustic similarities between members of a cognate pair might account for at least some of the perceptual confusions that were made by the listeners.

TABLE III.

Mean durations (and standard deviations) in ms for VOT and syllable duration.

| Cognate | VOT | Syllable duration |

|---|---|---|

| /p/ | 53 (77) | 456 (238) |

| /b/ | 15 (62) | 472 (209) |

| /t/ | 75 (71) | 491 (205) |

| /d/ | 57 (71) | 511 (223) |

| /s/ | 33 (53) | 543 (237) |

| /z/ | 19 (27) | 585 (227) |
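The paired comparison t tests applied to the cognate pairs can be sketched as follows. Individual subjects' measurements are not reported, so the VOT values below are hypothetical placeholders chosen only to show the computation; the function name `paired_t` is likewise an assumption.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Return the paired-comparison t statistic and its degrees of freedom."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    # Mean difference divided by its standard error (sample s.d. / sqrt(n)).
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Hypothetical per-subject VOT values in ms (NOT data from this study).
vot_p = [60, 45, 70, 38]
vot_b = [20, 25, 15, 30]
t_stat, df = paired_t(vot_p, vot_b)
```

The resulting statistic is referred to a t distribution with n − 1 degrees of freedom, where n is the number of subjects contributing a pair of measurements.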

Since the most common error response for /s/ was /d/ (26% of the error responses for /s/ were /d/) and /s/ was a frequent error response for /d/ (28% of the error responses for /d/ were /s/), the two acoustic measures for these sounds were also compared. We included a third measure, aperiodic noise segment duration (measured from the onset of aperiodic noise at the onset of the syllable to the offset of noise), in the comparisons because these sounds often differ on this parameter. No significant differences were found for the VOT or syllabic duration measures, but the syllables with initial /s/ had significantly longer noise segment durations than syllables with initial /d/ (t=4.84, p = 0.001).

Individual consonant confusion matrices were constructed with the data from each subject, and an information transmission analysis was performed for each. Table IV presents the average percent of transmitted information in the utterances. Overall, the highest scores occurred for the place feature, 37% information transmitted on average, and then nasality (30%). The lowest scores were obtained for duration, frication, and voicing, on average 16%–18% information transmitted.

TABLE IV.

Means and standard deviations for the results of the information transmission analysis, performed on the production and perception versions of the Children's Audio–visual Feature Test, administered in three conditions (audition-only, vision-only, and audition-plus-vision).

| Condition | Place (% information transmitted) | Frication (% information transmitted) | Voicing (% information transmitted) | Nasality (% information transmitted) | Duration (% information transmitted) |
|---|---|---|---|---|---|
| Audition-only, perception | 7 (7) | 9 (13) | 20 (30) | 30 (33) | 10 (16) |
| Audition-plus-vision, perception | 82 (19) | 35 (22) | 26 (30) | 52 (37) | 29 (25) |
| Vision-only, perception | 80 (17) | 23 (10) | 6 (5) | 19 (11) | 14 (10) |
| Production | 37 (19) | 15 (19) | 16 (22) | 30 (32) | 17 (28) |

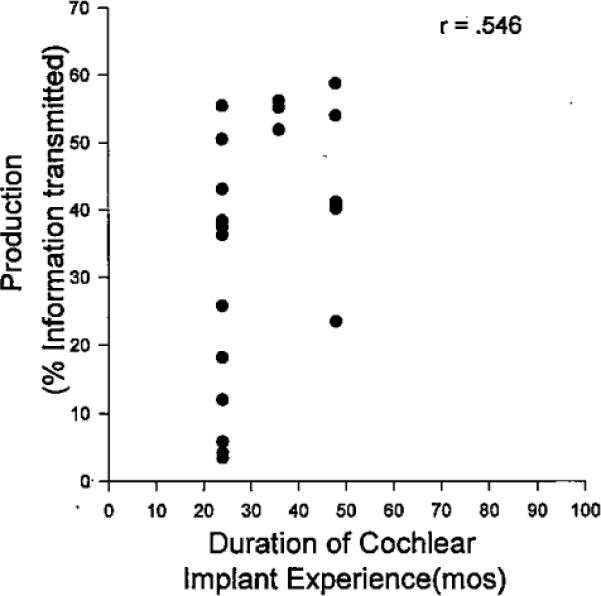

We considered whether duration of experience with a cochlear implant, age at implantation, or age at the time of testing might relate to subjects' speaking performance. Scores from the feature analysis were correlated with each of these three variables. Pearson correlations revealed only one significant relationship: Children who were more likely to produce the place of articulation feature correctly were also more likely to have worn their cochlear implants for a longer period of time (r=0.546, p=0.007). This relationship is shown in Fig. 1.

FIG. 1.

Individual percent information transmitted subject scores for the place of articulation feature, plotted as a function of duration of cochlear-implant experience.
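The correlation reported for Fig. 1 can be related to its significance level with a brief sketch. It computes a Pearson coefficient from paired scores and converts a coefficient to a t statistic with n − 2 degrees of freedom; the sample size of 23 used in the example is an assumption (that all subjects contributed to the correlation), and the function names are illustrative.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic for testing r against zero, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1.0 - r * r))


# Reported r for place production versus months of experience; n = 23 is assumed.
t_place = r_to_t(0.546, 23)
print(round(t_place, 2))  # 2.99
```

A t of about 2.99 with 21 degrees of freedom corresponds to a two-tailed probability near 0.007, which is consistent with the value reported above under the stated assumption about n.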

B. Consonant perception

On average, subjects scored 25% consonants correct (s.d.=16) when the Children's Audio–visual Feature Test was administered in an audition-only condition, 44% consonants correct (s.d.=7) when it was administered in a vision-only condition, and 59% consonants correct (s.d.=21) in an audition-plus-vision condition. Thus performance exceeded chance (10%) in all conditions.

For each subject and each perception condition, consonant confusion matrices were constructed and information transmission analyses were performed. Table IV presents the averaged group results. The highest information transmitted scores were obtained in the audition-plus-vision condition whereas the lowest scores were obtained in the audition-only condition. In the audition-only condition, children scored highest for the nasality feature (30% information transmitted on average) and lowest for the place and frication features (7%–9% on average). Conversely, they scored highest for the place feature (80%) when the stimuli were presented in a vision-only condition, and lowest for the voicing feature (6%). In the audition-plus-vision condition, average scores ranged from 26% information transmitted for the voicing feature to 82% for the place feature.

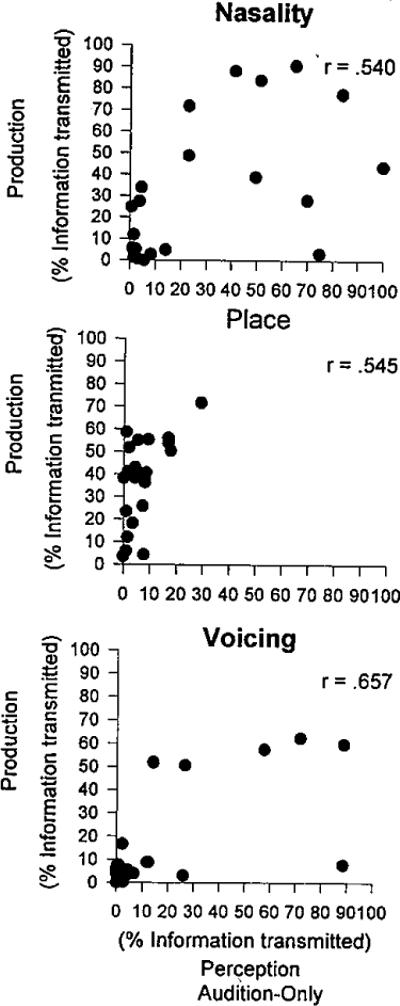

C. Relationship between speech production and perception skills

In order to examine how production and perceptual skills correspond, Pearson correlation coefficients were computed between the feature information transmitted scores of the production version and each of the three perception versions of the Children's Audio–visual Feature Test. Due to the large number of correlations performed in this set of analyses, a significance value of p=0.01 was adopted. Relationships between the production and audition-only perception conditions were significant for the following features: place of articulation (r=0.545, p=0.01), nasality (r=0.540, p=0.01), and voicing (r=0.657, p=0.001). These relationships are displayed in Fig. 2. When production performance was related to perception performance in the audition-plus-vision condition, both nasality (r=0.645, p=0.001) and voicing (r=0.676, p=0.001) features yielded significant relationships. No significant relationships emerged when production was correlated with perception performance in the vision-only condition.

FIG. 2.

Relationship between production and audition-only perception of the following features: nasality, voicing, and place.

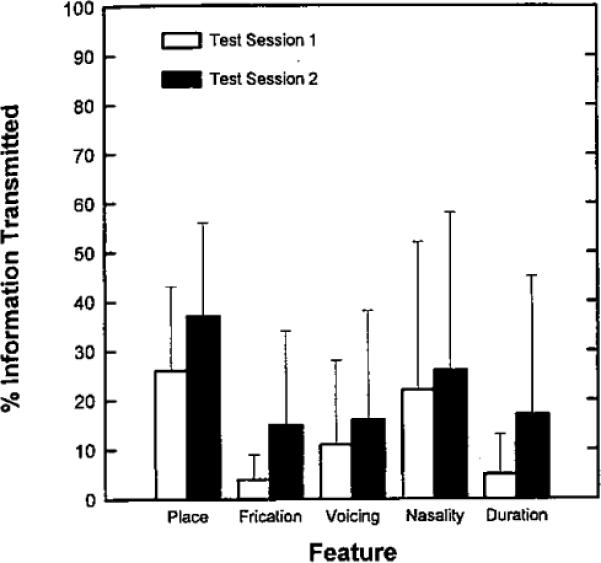

These results suggest that experience with a cochlear implant may have led to enhanced production of some features of articulation. To explore this issue in another way, 16 of the 23 subjects were examined in a longitudinal analysis. Their results from two different test dates were compared to determine whether performance improved with increased cochlear-implant experience and/or maturation. The 16 subjects were selected because they had completed the production version of the Children's Audio–visual Feature Test prior to receiving a cochlear implant or shortly thereafter (10 months), or 2 years before their most recent test date. On average, these subjects had 10.5 months (s.d.=14.2) of cochlear-implant experience on the first test date, and 28.5 months (s.d.=13.8) experience on the second test date. Individual consonant confusion matrices were constructed with the data from each subject and each test date, and an information transmission analysis was performed for each. Figure 3 presents the average percent information transmitted. Means were higher for the second test date than for the first test date for all features. Paired comparison t tests showed that these improvements were significant for two features, frication (t=3.04, p=0.001) and nasality (t=2.35, p=0.03). The comparisons for the duration and place features approached significance (t=2.10, p=0.052 and t=2.02, p=0.06, respectively).

FIG. 3.

Mean percent information transmitted for the features of articulation, computed for the production version of the Children's Audio–visual Feature Test, which was administered to 16 subjects on two separate occasions (bars represent standard deviations).

III. DISCUSSION

Prelingually deafened children who have used cochlear implants for at least 2 years spoke the consonant–vowel syllables comprising the Children's Audio–visual Feature Test with above-chance accuracy, scoring 37% consonants correct on average. In many ways, the subjects' error patterns resemble those of profoundly deafened children who use hearing aids, as reported by Smith (1975). Both Smith's subjects and the present subjects produced the visible consonants /b,m,p/ with relatively few feature errors and the fricatives /s,z/ with many errors. Previous and present subjects also produced many errors of voicing, and rarely produced errors of place of articulation. Acoustic analyses performed in this investigation suggest that subjects, on average, did not distinguish between voiced and unvoiced sounds on the basis of VOT or syllabic duration. It is somewhat surprising that subjects did not produce the voicing feature more accurately since the Nucleus cochlear implant is specifically designed to code it and adult cochlear-implant users tend to perceive this feature relatively well auditorily (Tye-Murray and Tyler, 1989). Moreover, Lane et al. (1994) have presented data from adult cochlear-implant users that suggest audition plays a critical role in helping them to re-establish and maintain the distinction between voiced and unvoiced cognate pairs. Perhaps more experience with a cochlear implant is required before children begin to change established articulatory patterns. The fact that subjects did distinguish, on average, between /p/ and /b/ on the basis of VOT may indicate that they may gradually be changing their speaking behaviors. It is possible that cognate pairs may change with continued cochlear-implant experience.

Although the present subjects' error patterns resemble those of hearing-aid users, three results suggest that information received from cochlear implants might influence young users' speaking behaviors after an average of 34 months of experience. First, subjects who were more likely to utilize nasality, voicing, and place information in an audition-only condition were also significantly more likely to produce these features accurately. Second, subjects who correctly produced the place feature were more likely to have worn their cochlear implants for a relatively long length of time, a finding that suggests experience with hearing relates to speaking performance. Finally, a comparison between data collected on two separate occasions showed significant increases in the accuracy with which subjects produced the frication and nasality features of articulation. Although maturation might also have contributed to these improvements noted over time, the results suggest that access to the acoustic signal via electrical stimulation might enhance children's speech production skills. The fact that production of many features improved as a result of cochlear-implant experience probably reflects their nonindependence; for instance, alteration of a single articulatory event (such as an opening gesture) may lead to changes in several features (such as voicing and frication).

Most children who are unable to hear do not develop intelligible speech (Smith, 1975; Hudgins and Numbers, 1942) for many reasons. Tye-Murray (1992; Tye-Murray et al., 1995) considered five ways that auditory information contributes to the acquisition and maintenance of speech. First, by attending to the speech outputs of talkers in their language community, children develop specific principles of articulatory organization. Specifically, by listening to others, they learn how to modify their breathing behaviors for speech production, they learn to flex and extend their tongue bodies abundantly, and they learn how to alternate between open and closed postures of articulation so that speech sounds rhythmical. Second, by listening to other talkers, children learn how to accomplish specific speech events. For example, they learn to vary the velocity of their opening articulatory gestures to distinguish between /b/ and /w/. Third, children deduce the system of phonological performance used by their language community. By listening, they learn which phonemes are used and the rules governing how they occur (e.g., /dn/ does not initiate a syllable). Fourth, children learn to relate their own auditory outputs (auditory feedback) to their articulatory gestures, and they learn how the consequences of their articulatory gestures compare to sounds that are produced by other talkers. Finally, children may learn to monitor their auditory feedback for the purpose of fine-tuning ongoing articulatory behavior and for detecting errors.

The numerous consonantal errors spoken by these prelingually deafened subjects underscore the difficulties involved in learning to talk when minimal auditory information is available. However, the significant correlations for the nasality, voicing, and place features between the production version of the Children's Audio–visual Feature Test and the perception version administered in an audition-only condition suggest, in a preliminary way, that the degraded signal provided through a cochlear implant can fulfill some of the roles of an intact auditory signal. For instance, by attending to spectral changes in the region of the speech signal below 300 Hz, children who receive cochlear implants may learn to distinguish between nasal and non-nasal sounds in their systems of phonological performance (although they may also attend to other parameters of the acoustic signal for making these distinctions as well). We can speculate that attention to intensity changes in this same frequency region may lead them to learn the organizational principle of alternating open and closed postures of articulation rhythmically since open postures are associated with high amplitude in the low frequencies and closed postures are associated with low amplitude. Children who have profound hearing impairments typically speak with poor speech rhythm. In future research, we can determine whether implanted children who demonstrate relatively good production and perception of the nasality feature also demonstrate improved speech rhythmicity.

ACKNOWLEDGMENTS

This work was supported by the National Institutes of Health Grants Nos. DC00242 and DC00976-01, Grant No. RR59 from the General Clinical Research Centers Program, Division of Research Resources, NIH, and a grant from the Lions Clubs of Iowa. The audiological data reported in this investigation were collected in an experimental protocol supervised by Dr. Richard S. Tyler. We thank Karen Iler Kirk for her assistance and suggestions in the early stages of this project. We also thank Melissa Figland for her help with data analysis, and George Woodworth for statistical consultation.

References

- Boothroyd A. Distribution of hearing levels in the student population of Clarke School for the Deaf. Clarke School for the Deaf; Northampton, MA: 1969. SARP Rep. No. 3.

- Eguchi S, Hirsh I. Development of speech sounds in children. Acta Otolaryngol. 1969;257(S):5–43.

- Fryauf-Bertschy H, Tyler RS, Kelsay D, Gantz B. Performance over time of congenitally deaf and postlingually deafened children using a multi-channel cochlear implant. J. Speech Hear. Res. 1992;35:913–920. doi: 10.1044/jshr.3504.913.

- Gold T. Speech and hearing: A comparison between hard of hearing and deaf children. Doctoral dissertation. CUNY Graduate Center; New York: 1978.

- Hudgins CV, Numbers GD. An investigation of the intelligibility of the speech of the deaf. Genet. Psychol. Monogr. 1942;25:289–392.

- Kent RD, Read C. The Acoustic Analysis of Speech. Singular; San Diego, CA: 1992.

- Lane H, Wozniak J, Perkell J. Changes in voice-onset time in speakers with cochlear implants. J. Acoust. Soc. Am. 1994;96:56–64. doi: 10.1121/1.410442.

- Markides A. The speech of deaf and partially hearing children with special reference to factors affecting intelligibility. Br. J. Disord. Commun. 1970;5:126–140. doi: 10.3109/13682827009011511.

- Miller GA, Nicely PE. An analysis of perceptual confusions among some English consonants. J. Acoust. Soc. Am. 1955;27:338–352.

- Osberger MJ, Maso M, Sam LK. Speech intelligibility of children with cochlear implants, tactile aids, or hearing aids. J. Speech Hear. Res. 1993;36:186–203. doi: 10.1044/jshr.3601.186.

- Pickett JM. The Sounds of Speech Communication. University Park; Baltimore, MD: 1980.

- Smith C. Residual hearing and speech production in deaf children. J. Speech Hear. Res. 1975;18:795–811. doi: 10.1044/jshr.1804.795.

- Stark R, Levitt H. Prosodic feature reception and production in deaf children. J. Acoust. Soc. Am. Suppl. 1. 1974;55:S23.

- Tye-Murray N. Articulatory organizational strategies and the roles of audition. Volta Rev. 1992;94:243–260.

- Tye-Murray N, Spencer L, Woodworth G. Acquisition of speech by children who have prolonged cochlear implant experience. J. Speech Hear. Res. 1995;38:327–337. doi: 10.1044/jshr.3802.327.

- Tye-Murray N, Tyler RS. Auditory consonant and word recognition skills of cochlear implant users. Ear Hear. 1989;10:292–298. doi: 10.1097/00003446-198910000-00004.

- Tye-Murray N, Tyler RS, Woodworth G, Gantz BJ. Performance over time with a Nucleus or Ineraid cochlear implant. Ear Hear. 1992;13(3):200–209. doi: 10.1097/00003446-199206000-00010.

- Tyler RS, Fryauf-Bertschy H, Kelsay D. Audiovisual Feature Test for Young Children. The University of Iowa; Iowa City: 1991.

- Wilson BS. Signal processing. In: Tyler RS, editor. Cochlear Implants: Audiological Foundations. Singular; San Diego, CA: 1993. pp. 35–85.