Abstract

Conflicts of interest between the community and its members are at the core of human social dilemmas. If observed selfishness has future costs, individuals may hide selfish acts but display altruistic ones, and peers aim at identifying the most selfish persons to avoid them as future social partners. An interaction involving hiding and seeking information may be inevitable. We staged an experimental social-dilemma game in which actors could pay to conceal information about their contribution, giving, and punishing decisions from an observer who selects her future social partners from the actors. The observer could pay to conceal her observation of the actors. We found sophisticated dynamic strategies on either side. Actors hide their severe punishment and low contributions but display high contributions. Observers select high contributors as social partners; remarkably, punishment behavior seems irrelevant for qualifying as a social partner. That actors nonetheless pay to conceal their severe punishment adds a further puzzle to the role of punishment in human social behavior. Competition between hiding and seeking information about social behavior may be even more relevant and elaborate in the real world but usually is hidden from our eyes.

Keywords: cooperation, economic experiment, signaling

What is the benefit of watching someone? Observing a person's behavior in a social dilemma may provide information about her qualities as a social partner for potential collaboration in the future: Does she contribute to a public good? Does she punish free riders? Does she reward contributors? Do I want to collaborate with her? Direct observation is more reliable than trusting gossip (1). Being watched, however, is not neutral: An individual's behavior may change in the presence of an observer (the “audience effect”), and the observed may be tempted to behave as expected to manage her reputation (2). Watchful eyes may induce altruistic behavior in the observed (refs. 3–7 but also see ref. 8). Even a mechanistic origin of recognizing watchful eyes in the brain has been described as cortical orienting circuits that mediate nuanced and context-dependent social attention (9, 10). However, watching also may induce an “arms race” of signals between observers and the observed. The observer should take into account that the behavior of the observed may change in response to observation and therefore should conceal her watching; the observed should be very alert to faint signals of being watched but should avoid any sign of having recognized that watching is occurring (11). The interaction between observing and being observed has implications for the large body of recent research on human altruism (12–22). Especially when a conflict of interest exists between observers and observed, they may use a rich toolbox of sophisticated strategies both to manipulate signals and to uncover manipulations. This kind of strategic interaction may be ubiquitous but clandestine, so that it generally has escaped attention. 
With human volunteers we staged an experimental social-dilemma game containing a number of important ingredients of real social interactions [e.g., choice of partners (23)]: An observer selects her partners for a follow-up game, and the observed players have a potential conflict between qualifying for that follow-up game and competing successfully in the present game. We offered both parties opportunities for deciding to observe/not observe or allow/not allow observation, respectively; if the observer chose to observe, she could opt for open or concealed observation. We offered these choices with and without providing information about the adopted strategy.

In the game, groups of five participants play two blocks of 15 rounds each. For the first block, one of the five participants is chosen randomly as the observer, and the other four participants are the players in a social-dilemma game. The observer in the first block becomes one of the players in the second block. At the end of the first block, the first-block observer may determine the second block's coplayers or opt for a random decision. Actively choosing the second-block players incurs a small cost to the first-block observer. The player not chosen becomes the observer in the second block.
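The role change between the two blocks can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `second_block_roles` and its parameters are hypothetical, and active selection of three coplayers is expressed as naming the one first-block player who is left out.

```python
import random

def second_block_roles(participants, first_observer, excluded=None, rng=None):
    """Return (players, observer) for the second block (hypothetical helper).

    participants:   the five participant IDs of one group.
    first_observer: the first-block observer, who always plays in block 2.
    excluded:       the first-block player the observer leaves out by active
                    (costly) selection, or None for random determination.
    """
    rng = rng or random.Random()
    first_players = [p for p in participants if p != first_observer]
    if len(first_players) != 4:
        raise ValueError("expected five participants including the observer")
    if excluded is None:
        # Random determination: one of the four players is drawn by chance.
        excluded = rng.choice(first_players)
    elif excluded not in first_players:
        raise ValueError("only a first-block player can become the next observer")
    players = [first_observer] + [p for p in first_players if p != excluded]
    return players, excluded

# Active selection: observer 5 leaves out player 2, who becomes the observer.
players, observer = second_block_roles([1, 2, 3, 4, 5], first_observer=5, excluded=2)
```

The cost of active selection is deliberately left out of the sketch; only the assignment of roles is modeled.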

The experiment consists of three games, each played by eight independent groups. In the simple public goods (SPG) game, four players play a public goods game in each round. From his initial endowment of 10 points, each player can contribute 0, 5, or 10 points to the public good. The rest is kept for his private account. The round's total contribution is doubled and redistributed evenly among the four players. In the public goods game with punishment possibility (PG&PUN), the public goods provision is followed by a punishment stage in which each player may assign a punishment point to each of the other three players after being informed about individual contributions. A punishment point deducts one point from the punisher's account and three points from the account of the punished player. In the public goods game followed by an indirect reciprocity game (PG&IR), each round of the public goods game is followed by a “give” phase in which each player is both a potential donor to another player after being informed about the last contribution and former “give” decisions of his receiver and a potential receiver from yet another player. Points given are tripled for the receiver. In each phase (contribution, punishment, and give, respectively) the observer may choose to observe up to two players. Observing does not entail visual contact of observer and player; instead the observer is informed about the player's decision and may remember the decision by the player's identification number. The decisions of nonobserved players remain unknown to the observer. At a small cost, a player can hide his decision (i.e., “close his window”) from the observer, who, at a small cost, can conceal her decision to watch. When a player's “window is open,” the player is informed when he is observed. However, he cannot distinguish between not being observed and being the subject of concealed observation. 
The observer may find that the window of a player that she decided to observe is closed and therefore be unable to obtain the information she seeks (see Fig. 1 and SI Methods for details).
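The payoff and information rules described above can be made concrete with a minimal Python sketch. All function and constant names are hypothetical. The give phase and the costs of hiding and of concealed observation are omitted because their amounts are not stated here, and the sketch assumes that a player with a closed window receives no notice of an observation attempt.

```python
ENDOWMENT = 10
ALLOWED_CONTRIBUTIONS = (0, 5, 10)

def public_goods_payoffs(contributions):
    """Payoffs of the four players after the contribution stage."""
    if len(contributions) != 4:
        raise ValueError("the public goods game is played by four players")
    if any(c not in ALLOWED_CONTRIBUTIONS for c in contributions):
        raise ValueError("contributions must be 0, 5, or 10 points")
    # The total contribution is doubled and split evenly among the four players.
    share = 2 * sum(contributions) / 4
    return [ENDOWMENT - c + share for c in contributions]

def apply_punishment(payoffs, punishment):
    """punishment[i][j] is 1 if player i assigns a point to player j, else 0."""
    result = list(payoffs)
    for i, row in enumerate(punishment):
        for j, point in enumerate(row):
            if point not in (0, 1) or (i == j and point):
                raise ValueError("at most one point per other player")
            result[i] -= 1 * point   # cost to the punisher
            result[j] -= 3 * point   # deduction for the punished player
    return result

def observation_outcome(window_open, observed, concealed):
    """Return (observer_sees_decision, player_is_told_he_is_observed)."""
    observer_sees = observed and window_open
    # Assumption: only open observation through an open window is announced.
    player_told = observed and window_open and not concealed
    return observer_sees, player_told

payoffs = public_goods_payoffs([10, 10, 5, 0])
after = apply_punishment(payoffs, [[0, 0, 0, 1], [0] * 4, [0] * 4, [0] * 4])
```

In this example the free rider earns the most after the contribution stage (22.5 points versus 12.5 for a full contributor), and a single punishment point from the first player lowers the free rider's payoff by three points at a cost of one point to the punisher.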

Fig. 1.

Course of each round in PG&PUN. After round 15 the observer in the first block (rounds 1–15) becomes a player. She may select her coplayers for the second block (rounds 16–30), a choice that costs her 0.5 points, or she may opt for random determination of players, an option that involves no cost. The player from the first block who is not chosen as a player in the second block becomes the observer in the second block. Each round of the second block follows the same scheme as in block 1.

What are the expectations in this game? Suppose all actors maximize their monetary gains, and this strategy is common knowledge. In that case, the observer would opt for a random selection of her coplayers in the second block, because all players use their dominant strategy of free-riding in the second block, and there are no differences among the potential coplayers. Because active selection incurs a cost, a money-maximizing observer would never choose this option. Without an active selection for the second block, players do not have any incentives to display cooperativeness in the first block and thus also use their dominant strategy of free-riding in the first block. Because both closing the window and concealed observation incur costs but do not create any money-maximizing benefits when all players are using money-maximizing strategies, these options are not used. Analogously, costly punishment and costly giving are not used.
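The free-riding prediction follows directly from the payoff rule of the public goods stage. As a worked sketch, write $c_i \in \{0, 5, 10\}$ for player $i$'s contribution and $\pi_i$ for his payoff from the contribution stage; these symbols are introduced here for illustration only, and $c_i$ is treated as continuous to read off the slope.

```latex
\begin{align}
\pi_i &= 10 - c_i + \frac{2}{4}\sum_{j=1}^{4} c_j, \\
\frac{\partial \pi_i}{\partial c_i} &= -1 + \frac{1}{2} = -\frac{1}{2} < 0 .
\end{align}
```

Each point contributed returns only half a point to the contributor, so contributing nothing maximizes the individual payoff whatever the others do. Collectively, however, four full contributors earn 20 points each, compared with 10 points each under universal free-riding, which is what makes the game a social dilemma.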

Predictions change, however, if we relax the assumption that all participants are using money-maximizing strategies and assume, with a small probability, that some players are altruistic (24). Observers may try to find out who the altruistic players are, so as to include them in the second block. To obtain this information, it may be profitable to use the costly option of concealed observation. Because being a player in the second block may be more profitable than being the observer, players may try to avoid becoming the next observer. When observers actively select altruistic players, players have incentives to display cooperative acts and hide noncooperative acts in contributing as well as in punishing and giving. It may pay to use the costly option of closing the window to hide noncooperative acts. Thus, the game yields the potential for a conflict of interest between the observer and the players: The observer tries to identify the most cooperative players as coplayers for the second block, and selfish players may try to earn as many points as possible but minimize the risk of being removed from the player group for the next block by hiding selfish decisions from the observer. In turn, the observer may try to discover what players try to hide.

Results

Remarkably, only 12 of the 24 observers (two in SPG, six in PG&PUN, and four in PG&IR) opted for a random determination of the next observer. The remaining 12 observers incurred the cost of active selection. The likelihood of an observer's actively selecting her coplayers for the second block is influenced positively by the difference between the lowest and the second-lowest average contributor (z = 2.54, P = 0.011, probit regression in Table 1, Column A). If this difference is high, the most uncooperative player of the first block is distinctively more uncooperative than the remaining three players. The higher this difference, the more likely the observer is to incur the cost of actively selecting her coplayers. The observer's decision for an active selection does not seem to be influenced by the overall contribution level (z = −1.04, P = 0.300), the variance in contributions (z = −1.58, P = 0.115), or the spread between the contributions of the three most cooperative players (i.e., the difference between the highest and the second-lowest contributor of the first block; z = 0.62, P = 0.533) (all values are from the probit regression in Table 1, Column A).
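To make the contribution-based predictors concrete, the following Python sketch computes three of them from the average contributions of the four players in a group. The function name and the example values are hypothetical and are not data from the experiment; the variance predictor is omitted because its exact definition is not given here.

```python
def contribution_predictors(avg_contributions):
    """Group-level predictors from four players' average contributions."""
    if len(avg_contributions) != 4:
        raise ValueError("expected the averages of four players")
    ranked = sorted(avg_contributions)
    lowest, second_lowest, highest = ranked[0], ranked[1], ranked[3]
    return {
        "average_contribution": sum(ranked) / 4,
        # Large when one player is distinctly less cooperative than the rest.
        "second_lowest_minus_lowest": second_lowest - lowest,
        # Spread among the three most cooperative players.
        "highest_minus_second_lowest": highest - second_lowest,
    }

# Made-up example: three similar contributors and one clear free rider.
example = contribution_predictors([9.0, 8.0, 7.5, 2.0])
```

In the made-up example the gap between the second-lowest and the lowest contributor is 5.5 points, whereas the spread among the three most cooperative players is only 1.5 points, the kind of constellation in which active selection was more likely.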

Table 1.

Probit regression for the probability of the observer actively choosing the observer of the second block

| Variable | A: Coefficient | A: z | A: P | B: Coefficient | B: z | B: P |
|---|---|---|---|---|---|---|
| Average contribution of all players in block 1 over the 15 rounds of block 1 | −0.3439781 | −1.04 | 0.300 | −0.0930945 | −0.44 | 0.663 |
| Average variance in contribution of all players in block 1 over the 15 rounds of block 1 | −0.2926371 | −1.58 | 0.115 | | | |
| Difference between the highest and the second-lowest contributor in block 1 | −0.3100557 | 0.62 | 0.533 | | | |
| Difference between the second-lowest and the lowest contributor in block 1 | 1.007566 | 2.54 | 0.011 | 0.6602869 | 2.49 | 0.013 |
| Difference between the highest and the second-highest punisher in block 1 | | | | 1.021376 | 0.84 | 0.402 |
| Difference between the lowest and the second-lowest punisher in block 1 | | | | 10.50219 | 1.75 | 0.079 |
| Treatment dummy PG&PUN | | | | −2.696186 | −2.71 | 0.007 |
| Constant | 1.491072 | 0.46 | 0.648 | 0.0356208 | 0.02 | 0.984 |

Phase 1 only; averages over the values the observer actually has seen (either openly or concealed); 24 independent observations; robust SEs; clustered for independent observations.

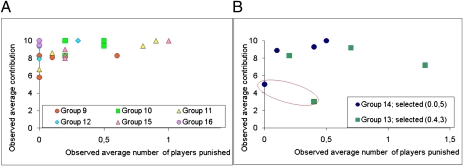

In PG&PUN observers also may consider the players’ punishment behavior in making the selection decision. Only 6.7% of the punishment acts were directed toward higher contributors (“antisocial punishment”). Thus, punishment was used almost always to “discipline” low contributors and free-riders. Remarkably, neither the difference between the highest and the second-highest punisher (z = 0.84, P = 0.402) nor the difference between the lowest and the second-lowest punisher (z = 1.75, P = 0.079) seems to be a criterion for opting for active selection (probit regression in Table 1, Column B), although observers could have used this criterion in seven of the eight groups (in one group no punishment was used). Again, active selection was influenced positively by the difference between the second-lowest and the lowest contributor (z = 2.49, P = 0.013). When this difference is low, observers seem neither to prefer nor to avoid having high punishers in their group (Fig. 2A); when this difference is high, however, they exclude the lowest contributor (Fig. 2B). We can double the sample size by including data from a companion study (SI Methods) and confirm the nonsignificance of the punishment behavior for observers’ selection decisions on the basis of 16 independent groups in PG&PUN. Here, also, “antisocial” punishment was negligible (7.0%). Neither the difference between the highest and the second-highest punisher (z = −0.360, P = 0.718) nor the difference between the lowest and the second-lowest punisher (z = −0.340, P = 0.731) seems to be decisive for active selection (probit regression in Table S1). Again, active selection was influenced positively by the difference between the second-lowest and the lowest contributor (z = 2.93, P = 0.003). Remarkably, active selection by the observer also was rare in the companion study, occurring in only one of eight groups. 
The reason seems to be the higher contribution levels under the punishment possibility (8.04 ± 0.79 points in the companion study). Contributions are significantly higher in PG&PUN (8.54 ± 0.41 points) than in SPG (5.74 ± 0.77 points) (n = 8, z = −2.417, P = 0.016, two-tailed Mann–Whitney U test). (Fig. S1 presents the data for the companion study that are presented in Fig. 2 for the present study.)

Fig. 2.

Determination of the next observer, average number of coplayers punished, and average contributions of the individual players observed by the observer in the first block. Each symbol represents an individual player and indicates the player's group. (A) Groups in which the observer opted for a random choice of next block's observer. (B) Groups in which the observer actively chose next block's observer. Circled players are those selected as observers in the next block.

The four low contributors who were determined as second-block observers in PG&IR also were the lowest givers. Thus, observers preferred to remove low contributors, who happened also to be low givers, as future coplayers from the group.

When observers want actively to exclude highly noncooperative players from the second block's play, players may want to hide noncooperative acts by closing their windows. Indeed, although players rarely used the costly option of hiding their contribution decision (6.11%), they did so when their contribution was low. The probability of players allowing their activity to be observed (open window) increased with a player's contribution (probit regression z = 6.52, P < 0.001) (Table S2, Column A), and contributions made with an open window were significantly higher than those made with a closed window (n = 24; z = −3.703; P < 0.001, two-tailed Mann–Whitney U test) (Fig. 3A). Players rarely (2.91%) used the costly option of hiding their punishment decision but did conceal especially high punishment acts. The probability of players allowing their acts to be observed decreased with the number of allocated punishment points (i.e., players punished) (probit regression, z = −3.87, P < 0.001) (Table S2, Column B). In most cases when players allowed their activity to be observed, no coplayers were punished (Fig. 3B). Players did not close their windows preferentially when punishing “antisocially”: The window was closed in only 10.5% of those cases. Players used the costly option of hiding their give/not give decision in 4.37% of the cases and concealed their decisions when not giving (Fig. 3C, probit regression, z = 2.21, P = 0.027) (Table S2, Column C). Thus, players hide their activity when making low contributions, when punishing strongly, and when not giving.

Fig. 3.

Players’ decisions with open and closed windows. (A) Percentage of players contributing 0, 5, or 10 units to the public good among players who had decided to open (Left) or to close (Right) their windows. Data are from SPG, PG&PUN, and PG&IR combined. (B) Percentage of coplayers punished by players who had decided to open (Left) or to close (Right) their windows. Data are from PG&PUN. (C) Percentage of giving by players who had decided to open (Left) or to close (Right) their windows. Data are from PG&IR. For statistics, see main text.

Players’ hiding of low contributions was successful. The contributions that observers saw were higher than those that they did not see (Wilcoxon two-tailed signed rank matched-pairs test, n = 24, z = −2.808, P = 0.005). Interestingly, the probability of observers’ concealed watching of a player's contributions increased with the player's average contribution that the observer had observed so far (probit regression z = 2.15, P = 0.032) (Table S3, Column A). The probability of observing a contribution decision openly decreased with the average of past contributions observed (probit regression z = −2.68, P = 0.007) (Table S4, Column A). Thus, observers seemed to aim at uncovering whether openly high contributors also made high contributions when not observed openly.

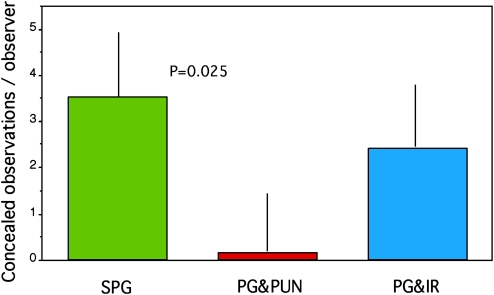

Concealed watching reveals the observers’ focus on coplayers’ behavior: Although observers opted for concealed watching of contributions and giving/not giving at similar rates, they rarely used concealed watching for punishment (Fig. 4), indicating their low interest in observing punishment.

Fig. 4.

Actions observers paid to see concealed. Average (+SEM) number of concealed observations of contributions to the public pool in SPG, of punishment behavior in PG&PUN, and of giving in PG&IR (two-tailed Mann–Whitney U test; n1 = 8; n2 = 8; z = −2.235, P = 0.0254).

Players were not really successful in duping observers. Although the contributions observers saw were higher than the contributions that players had made overall (Wilcoxon two-tailed signed rank matched-pairs test, n = 24, z = −2.138, P = 0.033), the contributions that observers saw correlated positively with all the contribution decisions of a player (Spearman correlation, n = 24, ρ = 0.951, P = 0.0001) (Fig. S2A). Similarly, punishment decisions that observers saw correlated positively with all punishment decisions (Spearman correlation, n = 8 observed players, ρ = 0.857, P = 0.007) (Fig. S2B), and give/not give decisions that observers saw correlated positively with all give/not give decisions (Spearman correlation, n = 8 observed players, ρ = 0.843, P = 0.009) (Fig. S2C). The observer aimed at selecting the lowest contributor (and giver) as the next observer. For this selection it is not necessary to observe total contributions quantitatively, which were significantly higher when observed than when unobserved. Therefore the players were able to hide a significant part of their lower contributions from the observer. However, because observed and unobserved contribution levels correlated positively, observers could pick the lowest contributor easily. If total contributions had mattered, observers would have been misled.
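The distinction between relative position and absolute level can be illustrated with a small Python sketch of the Spearman rank correlation. The helper names and the numbers are made up for illustration and are not data from the experiment; the sketch only shows how the lowest contributor can remain identifiable when every observed value is inflated.

```python
from math import sqrt

def average_ranks(values):
    """Ranks starting at 1; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks

def spearman_rho(x, y):
    """Pearson correlation of the ranks (undefined if either input is constant)."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / sqrt(var_x * var_y)

# Made-up average contributions of four players (not data from the study).
all_decisions = [2.0, 5.0, 6.0, 9.0]      # everything the players did
seen_by_observer = [4.0, 6.5, 7.0, 9.5]   # what the observer saw (inflated)

rho = spearman_rho(all_decisions, seen_by_observer)
lowest_overall = all_decisions.index(min(all_decisions))
lowest_seen = seen_by_observer.index(min(seen_by_observer))
```

Here every observed average overstates the true one, yet the ranking is unchanged, so an observer who only needs to single out the lowest contributor picks the same player either way.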

Whom did observers actually select as the next observer? The probability that a player would be actively selected as the next observer decreased with his openly observed contributions (z = −2.48, P = 0.013) and his openly observed giving (z = −2.67, P = 0.008). His openly observed punishment did not influence the observer's decision significantly (z = −0.59, P = 0.558). Similarly, neither acts observed by a concealed observer (z = 0.82, P = 0.413 for contributions and z = 1.06, P = 0.287 for giving) nor the number of times the observer found a closed window (z = −1.51, P = 0.132 for contributions) seemed to influence the observer's decision (all values are from probit regression in Table 2). Two observations seem to be noteworthy. First, observers seemed to focus on the openly observed contribution and giving behavior when selecting coplayers for the second block, but punishment activities did not seem to be decisive. This result nicely complements the previous analyses that contribution decisions, but not punishment, are decisive for an active selection by the observer. Second, behavior seen by a concealed observer did not seem to contribute significantly to openly observed behavior in an observer's selection decision. Players seemed to use the option of closing the window strategically to hide low contributions, but when the window was open, they acted as though they were being observed, either openly or by a concealed observer. Thus, observers seemed to gain sufficient information by relying on openly observed behavior.

Table 2.

Probit regression for the probability of the observer actively selecting a player as the next observer of the second block

| Variable | Coefficient | z | P |
|---|---|---|---|
| Openly observed average contribution of the player in block 1 over the 15 rounds of block 1 | −0.5151949 | −2.48 | 0.013 |
| Concealed observed average contribution of the player in block 1 over the 15 rounds of block 1 | 0.0886011 | 0.82 | 0.413 |
| Number of times the observer wanted to see the player's contribution, but the window was closed | −0.2088638 | −1.51 | 0.132 |
| Openly observed average punishment of the player in block 1 over the 15 rounds of block 1 | −0.4840024 | −0.59 | 0.558 |
| Openly observed average giving of the player in block 1 over the 15 rounds of block 1 | −1.837215 | −2.67 | 0.008 |
| Concealed observed average giving of the player in block 1 over the 15 rounds of block 1 | 0.4421519 | 1.06 | 0.287 |
| Treatment dummy PG&PUN | 0.56676 | 1.08 | 0.282 |
| Treatment dummy PG&IR | 3.996724 | 2.26 | 0.024 |
| Constant | 2.285591 | 2.41 | 0.016 |

Phase 1 only; averages over the values the observer actually has seen (either open or concealed); 12 independent observations (in which observer opted for active selection), robust SEs, panel regression, clustered for independent observations. The variables “Concealed observed average punishment of the player in block 1 over the 15 rounds of block 1,” “Number of times the observer wanted to see the player's punishment, but the window was closed,” and “Number of times the observer wanted to see the player's giving, but the window was closed” were omitted from the original model because of collinearity and therefore were removed from the regression.

Discussion

Human subjects avoided as social partners those players who provided little to a public resource. Accordingly, potential partners used the costly option of hiding low contributions from the observer but openly showed high contributions. They seemed to hide and reveal decisions strategically to maximize potential future payoffs. To uncover the players’ true behavior, observers used targeted observation strategies. Observers directed their watching to high contributors and even used the costly option of concealed watching to verify whether the players made the same decisions when feeling unobserved. Interestingly, although observers appeared to be on the weaker side in the interaction, ultimately they rarely were misled when identifying the lowest contributor. However, observers were accurate only with respect to a player's relative position as a contributor in the group. Observers were misled successfully by the absolute amount of contributions that they saw. Thus, players managed both to profit through low contributions to the public good and to show higher contributions to the observer.

Our results reveal an interaction between observers and observed in seeking to observe and hide social behavior, suggesting that such interactions also occur in reality whenever these subtle strategies are both possible and profitable. Players seem to use the option of hiding low contributions strategically, but when the window is open, they expect to be observed, either openly or by a concealed observer. Thus, concealed observations made when the player's window was open did not reveal different information. However, when real-world conditions allow a bigger toolbox to be used in signaling competitions, further dimensions of interesting complexity are to be expected. Therefore, in our study we might have found many fewer concealed interactions than would be expected under real-life conditions. Our study may have uncovered only the tip of the iceberg. Social interactions may change in a sophisticated way when the options of hiding information and concealed observation are possible and potentially profitable. Studies showing that subjects are more selfish when anonymous (25–28), especially when the decision makers believed that the others would evaluate noncooperation negatively (27, 29, 30), are suggestive of a potential arms race between observers and observed. Among real-world examples are political leaders who managed to display a blameless life in public but had a polygynous sex-life behind “closed windows” in a race with journalists and paparazzi. The existence of undercover journalists, secret services, and incognito restaurant reviewers suggests numerous additional examples. Senders may manipulate many kinds of direct or indirect information [e.g., using visual cues, lying, initiating gossip, websites, making donations to charity, showing off]. Peers respond by evaluating veracity at all these levels. 
Societies have tried to curtail everyday signaling deceptions (e.g., by placing ever-present watchful eyes on totem poles or by suggesting that a god “sees through everything”) (11). Even nonhumans seem to be involved in a competition of hiding and seeking to obtain information. Cleaner fish groom their clients when being watched but bite off pieces of skin when not observed, and clients may profit from watching while concealed (31).

Surprisingly, although the players invested effort and money to conceal their strong punishing behavior, observers completely ignored whether and how much their future coplayers punished. A reputation for being a good future coplayer was created by high contributions. Additional punishment seemed neither to increase nor diminish a high contributor's reputation. Two studies provide hints that punishers achieve a good reputation, potentially beneficial in a follow-up game (32, 33). Nonetheless, subjects in our experiment wanted to be anonymous when punishing severely. They seemed to fear losing their reputations when severe punishment was observed. However, moralistic punishment (i.e., punishment of free-riders by a third party) was lower when the punishment was externally determined anonymously than when it was made in front of an audience (34, 35). This result suggests that the nature of the punishment (either partially self-serving and directed to coplayers, as in a social-dilemma game, or purely altruistic and directed to a third party) makes a difference in whether anonymity or publicity in punishing is preferred. Given the widespread and sophisticated use of costly punishment in social dilemmas (15, 36–42), it is hard to understand (i) why human subjects obviously ignored this behavior when choosing their social partners, (ii) why subjects did not like to be watched when heavily punishing, and (iii) whether these results were influenced by cultural differences in punishment behavior (43).

Methods

One hundred twenty undergraduate students from the University of Erfurt voluntarily participated in 24 experimental sessions (eight sessions of each of the three treatments, SPG, PG&PUN, and PG&IR) with five subjects in each session. Special care was used to recruit students from many different disciplines to increase the likelihood that the subjects had never met before. Each participant was allowed to take part in only one session. Further details are given in SI Methods.

The four players and the observer playing in the same subject group are, because of their interaction, statistically dependent observations. The independent observations for the statistical analyses thus are the groups. The number of independent observations reported therefore is 24, eight in each of the three treatments. In nonparametric testing the Mann–Whitney U test was used for two independent samples, and the Wilcoxon signed rank matched-pairs test was used for dependent samples. All tests were two-tailed and were performed over the sessions as the independent observations. The parametric analyses use robust probit regressions. The regressions consider individual decisions of all players and deal with dependencies by clustering for the independent groups. There are two ways in which our statistics account for the multiple decisions of the players. The first way is to aggregate all decisions of the same type (e.g., all contributions or all punishment decisions) over the 15 rounds to obtain a single measure (Tables 1 and 2). The second way to deal with the multiple decisions of a player is to take the decisions in a nonaggregated form and control for the time trend in the decisions by including an independent variable “period” in the regression (Tables S2–S4).
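The aggregation step behind the nonparametric tests can be sketched in Python as follows. The function names and example values are hypothetical, and the sketch stops at the Mann–Whitney U statistic; converting U into the z and P values reported in the text is not shown.

```python
from collections import defaultdict
from statistics import mean

def group_means(records):
    """Collapse (group_id, value) decisions into one mean per group.

    Each group then enters a test as a single independent observation.
    """
    by_group = defaultdict(list)
    for group_id, value in records:
        by_group[group_id].append(value)
    return {group_id: mean(values) for group_id, values in by_group.items()}

def mann_whitney_u(sample_a, sample_b):
    """U statistic of sample_a: pairs (a, b) with a > b, ties counting 0.5."""
    return sum(
        1.0 if a > b else 0.5 if a == b else 0.0
        for a in sample_a
        for b in sample_b
    )

# Made-up decisions from two groups (not data from the study).
records = [("g1", 10), ("g1", 5), ("g2", 0), ("g2", 5)]
means = group_means(records)

# Made-up group means for two treatments, one value per independent group.
u_stat = mann_whitney_u([8.0, 9.0], [5.0, 6.0])
```

Aggregating first keeps the number of observations at the number of groups, so the repeated decisions of interacting players are not counted as if they were independent.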

Statistical analysis was performed with the software package Stata (Stata Statistical Software: Release 11, 2009; StataCorp LP).

Acknowledgments

We thank Özgür Gürerk for valuable research assistance, two anonymous reviewers for constructive criticism, and the Deutsche Forschungsgemeinschaft for financial support through Grant RO 3071.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

See Commentary on page 18195.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1108996108/-/DCSupplemental.

References

- 1.Sommerfeld RD, Krambeck HJ, Semmann D, Milinski M. Gossip as an alternative for direct observation in games of indirect reciprocity. Proc Natl Acad Sci USA. 2007;104:17435–17440. doi: 10.1073/pnas.0704598104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Tennie C, Frith U, Frith CD. Reputation management in the age of the world-wide web. Trends Cogn Sci. 2010;14:482–488. doi: 10.1016/j.tics.2010.07.003. [DOI] [PubMed] [Google Scholar]

- 3.Haley KJ, Fessler DMT. Nobody's watching? Subtle cues affect generosity in an anonymous economic game. Evol Hum Behav. 2005;26:245–256. [Google Scholar]

- 4.Bateson M, Nettle D, Roberts G. Cues of being watched enhance cooperation in a real-world setting. Biol Lett. 2006;2:412–414. doi: 10.1098/rsbl.2006.0509. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Burnham TC, Hare B. Engineering human cooperation – Does involuntary neural activation increase public contributions? Hum Nat. 2007;18:88–108. doi: 10.1007/s12110-007-9012-2. [DOI] [PubMed] [Google Scholar]

- 6.Rigdon M, Ishii K, Watabe M, Kitayama S. Minimal social cues in the dictator game. J Econ Psychol. 2009;30:358–367. [Google Scholar]

- 7.Mifune N, Hashimoto H, Yamagishi T. Altruism toward in-group members as a reputation mechanism. Evol Hum Behav. 2010;31:109–117. [Google Scholar]

- 8.Fehr E, Schneider F. Eyes are on us, but nobody cares: Are eye cues relevant for strong reciprocity? Proc R Soc Lond B Biol Sci. 2010;277:1315–1323. doi: 10.1098/rspb.2009.1900. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Emery NJ. The eyes have it: The neuroethology, function and evolution of social gaze. Neurosci Biobehav Rev. 2000;24:581–604. doi: 10.1016/s0149-7634(00)00025-7. [DOI] [PubMed] [Google Scholar]

- 10.Klein JT, Shepherd SV, Platt ML. Social attention and the brain. Curr Biol. 2009;19:R958–R962. doi: 10.1016/j.cub.2009.08.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Milinski M, Rockenbach B. Economics. Spying on others evolves. Science. 2007;317:464–465. doi: 10.1126/science.1143918. [DOI] [PubMed] [Google Scholar]

- 12.Ostrom E, Burger J, Field CB, Norgaard RB, Policansky D. Revisiting the commons: Local lessons, global challenges. Science. 1999;284:278–282. doi: 10.1126/science.284.5412.278.

- 13.Henrich J, et al. Foundations of Human Sociality: Economic Experiments and Ethnographic Evidence from Fifteen Small-Scale Societies. Oxford: Oxford Univ Press; 2004.

- 14.Gintis H, Bowles S, Boyd RT, Fehr E. Moral Sentiments and Material Interests: The Foundations of Cooperation in Economic Life. Boston: MIT Press; 2005.

- 15.Rockenbach B, Milinski M. The efficient interaction of indirect reciprocity and costly punishment. Nature. 2006;444:718–723. doi: 10.1038/nature05229.

- 16.Dawes CT, Fowler JH, Johnson T, McElreath R, Smirnov O. Egalitarian motives in humans. Nature. 2007;446:794–796. doi: 10.1038/nature05651.

- 17.Sigmund K. Punish or perish? Retaliation and collaboration among humans. Trends Ecol Evol. 2007;22:593–600. doi: 10.1016/j.tree.2007.06.012.

- 18.Fehr E, Bernhard H, Rockenbach B. Egalitarianism in young children. Nature. 2008;454:1079–1083. doi: 10.1038/nature07155.

- 19.Ule A, Schram A, Riedl A, Cason TN. Indirect punishment and generosity toward strangers. Science. 2009;326:1701–1704. doi: 10.1126/science.1178883.

- 20.Gächter S, Herrmann B. Reciprocity, culture and human cooperation: Previous insights and a new cross-cultural experiment. Philos Trans R Soc Lond B Biol Sci. 2009;364:791–806. doi: 10.1098/rstb.2008.0275.

- 21.Fischbacher U, Gächter S. Social preferences, beliefs, and the dynamics of free riding in public good experiments. Am Econ Rev. 2010;100:541–556.

- 22.Gächter S, Herrmann B, Thöni C. Culture and cooperation. Philos Trans R Soc Lond B Biol Sci. 2010;365:2651–2661. doi: 10.1098/rstb.2010.0135.

- 23.Sylwester K, Roberts G. Cooperators benefit through reputation-based partner choice in economic games. Biol Lett. 2010;6:659–662. doi: 10.1098/rsbl.2010.0209.

- 24.Kreps D, Milgrom P, Roberts J, Wilson R. Rational cooperation in the finitely repeated Prisoners' Dilemma. J Econ Theory. 1982;27:245–252.

- 25.Dawes RM, McTavish J, Shaklee H. Behavior, communication, and assumptions about other people's behavior in a commons situation. J Pers Soc Psychol. 1977;35:1–11.

- 26.Bohnet I, Frey BS. The sound of silence in prisoner's dilemma and dictator games. J Econ Behav Organ. 1999;38:43–57.

- 27.De Cremer D, Bakker M. Accountability and cooperation in social dilemmas: The influence of others’ reputational concerns. Curr Psychol. 2003;22:155–163.

- 28.Milinski M, Semmann D, Krambeck HJ, Marotzke J. Stabilizing the earth's climate is not a losing game: Supporting evidence from public goods experiments. Proc Natl Acad Sci USA. 2006;103:3994–3998. doi: 10.1073/pnas.0504902103.

- 29.Kerr NL. In: Resolving Social Dilemmas. Foddy M, et al., editors. Philadelphia: Psychology; 1999. pp. 103–118.

- 30.Semmann D, Krambeck HJ, Milinski M. Strategic investment in reputation. Behav Ecol Sociobiol. 2004;56:248–252.

- 31.Bshary R. Biting cleaner fish use altruism to deceive image-scoring client reef fish. Proc R Soc Lond B Biol Sci. 2002;269:2087–2093. doi: 10.1098/rspb.2002.2084.

- 32.Barclay P. Reputational benefits for altruistic punishment. Evol Hum Behav. 2006;27:325–344.

- 33.dos Santos M, Rankin DJ, Wedekind C. The evolution of punishment through reputation. Proc R Soc Lond B Biol Sci. 2011;278:371–377. doi: 10.1098/rspb.2010.1275.

- 34.Kurzban R, DeScioli P, O'Brien E. Audience effects on moralistic punishment. Evol Hum Behav. 2007;28:75–84.

- 35.Piazza J, Bering JM. The effects of perceived anonymity on altruistic punishment. Evol Psychol. 2008;6:487–501.

- 36.Yamagishi T. The provision of a sanctioning system as a public good. J Pers Soc Psychol. 1986;51:110–116.

- 37.Fehr E, Gächter S. Altruistic punishment in humans. Nature. 2002;415:137–140. doi: 10.1038/415137a.

- 38.Fehr E. Human behaviour: Don't lose your reputation. Nature. 2004;432:449–450. doi: 10.1038/432449a.

- 39.Gürerk Ö, Irlenbusch B, Rockenbach B. The competitive advantage of sanctioning institutions. Science. 2006;312:108–111. doi: 10.1126/science.1123633.

- 40.Dreber A, Rand DG, Fudenberg D, Nowak MA. Winners don't punish. Nature. 2008;452:348–351. doi: 10.1038/nature06723.

- 41.Egas M, Riedl A. The economics of altruistic punishment and the maintenance of cooperation. Proc R Soc Lond B Biol Sci. 2008;275:871–878. doi: 10.1098/rspb.2007.1558.

- 42.Gächter S, Renner E, Sefton M. The long-run benefits of punishment. Science. 2008;322:1510. doi: 10.1126/science.1164744.

- 43.Herrmann B, Thöni C, Gächter S. Antisocial punishment across societies. Science. 2008;319:1362–1367. doi: 10.1126/science.1153808.
