Abstract

Successful navigation of the social world requires the ability to recognize and track emotions as they unfold and change dynamically. Neuroimaging and neurological studies of emotion recognition have primarily focused on the ability to identify the emotion shown in static photographs of facial expressions, showing correlations with the amygdala as well as temporal and frontal brain regions. In this study, we examined the neural correlates of continuously tracking dynamically changing emotions. Fifty‐nine patients with diverse neurodegenerative diseases used a rating dial to track continuously how positive or how negative the character in a film clip felt. Tracking accuracy was determined by comparing participants' ratings with the ratings of 10 normal control participants. The relationship between tracking accuracy and regional brain tissue content was examined using voxel‐based morphometry. Low tracking accuracy was primarily associated with gray matter loss in the right lateral orbitofrontal cortex (OFC). Our finding that the right OFC is critical to the ability to track dynamically changing emotions is consistent with previous research showing right OFC involvement in both socioemotional understanding and modifying responding in changing situations. Hum Brain Mapp, 2012. © 2011 Wiley Periodicals, Inc.

Keywords: emotion, emotion perception, empathic accuracy, voxel‐based‐morphometry, orbitofrontal region, dementia

INTRODUCTION

Recognizing what others are feeling is critical in social interactions [Ekman,1992], providing us with information about our interaction partners' emotions and intentions. Given the importance of emotion recognition abilities, there is great interest in understanding the brain regions that support these skills. This question has been investigated using functional imaging and patient studies, both of which have generally implicated temporal and frontal brain regions. In most of these studies, emotion recognition involves decoding emotional information from static images of emotional facial expressions. In contrast, in our everyday lives, emotion recognition requires decoding multiple modes of emotional information, such as facial expressions, prosody, body language, and contextual cues, and updating our judgments as this information changes.

The Neural Correlates of Emotion Recognition

Studies investigating the neural correlates of emotion recognition have used methods that vary in terms of (a) the format of stimuli presented to participants, and (b) the type of judgments of the stimuli that participants are required to make. In terms of stimulus format, participants have viewed either static stimuli (a single snapshot of a target's emotions in a photograph) or dynamic stimuli (a stream of changing emotional behavior of a target in a film clip). In terms of stimulus judgments, participants have either been asked to make one judgment per stimulus (i.e., identify the single, most prominent emotion expressed by a target) or continuous judgments (i.e., continuously tracking a target's changing emotions). Most neuroimaging and patient studies have used static stimuli and have had participants make a single emotion judgment; only recently have studies appeared that used dynamic stimuli and had participants make continuous emotion judgments [Zaki et al.,2009].

Static Stimuli: Single Judgments

Functional magnetic resonance imaging studies demonstrate that the temporal lobes and amygdala are activated when recognizing static emotional facial expressions [Habel et al.,2007; Morris et al.,1996; Phan et al.,2002; Sprengelmeyer et al.,1998]. Consistent with these imaging studies, patient studies report deficits recognizing static emotional facial expressions following temporal lobe and amygdala damage [Adolphs et al.,1995,2002; Anderson and Phelps,2000; Rosen et al.,2006]. In addition to temporal lobe and amygdalar regions, other functional imaging studies show that recognizing emotions is associated with activation in various frontal lobe regions, including the inferior frontal gyri [George et al.,1993; Sprengelmeyer et al.,1998], the orbitofrontal cortex [OFC; Vuilleumier et al.,2001], and the ventral prefrontal cortex [Narumoto et al.,2000]. Patient studies corroborate these findings, implicating a large swath of frontal lobe regions in emotion recognition, including the OFC [Beer et al.,2003; Blair and Cipolotti,2000], the medial prefrontal cortex [Heberlein et al.,2008; Mah et al.,2004], the ventrolateral prefrontal cortex [Hornak et al.,1996; Marinkovic et al.,2000], and the frontal operculum [Adolphs et al.,2000].

Dynamic Stimuli: Single Judgments

Although most studies of emotion recognition have used static, visual emotional stimuli, a small number have used dynamic stimuli, which arguably better mimic the dynamic nature of real‐world emotion recognition. In several studies participants viewed computer‐generated clips in which a facial expression morphed from a neutral to an emotional expression. Viewing these morphs produced greater activation in superior temporal gyrus (STG), fusiform gyrus, amygdala, and periamygdaloid cortex compared with viewing photos of static emotion expressions [Kilts et al.,2003; LaBar et al.,2003]. In another variant, Trautmann et al. [2009] had participants view clips of actors whose faces went from a neutral expression to either a strong happy or a strong disgust expression. Viewing these clips resulted in greater activation in the ventromedial and orbitofrontal cortices and in temporal lobe regions such as the STG and fusiform gyrus compared with viewing static images. McDonald et al. [2003] introduced The Awareness of Social Inference Test (TASIT) in which participants view videotaped vignettes of everyday social interactions that incorporate audio and contextual cues and identify the primary emotion expressed by the target. Impairments on this task have been documented in people with nonspecific traumatic brain injuries [McDonald et al.,2003] and in people who have undergone anterior cingulotomies [Ridout et al.,2007].

Dynamic Stimuli: Continuous Judgments

The TASIT, described above, has the advantages of using dynamic rather than static stimuli and incorporating contextual information but only requires participants to make a single emotional judgment for each film clip. In the real world, emotions change quickly [Ekman,1992] and, thus, emotional judgments need to be updated frequently. Zaki et al. [2009] had participants continuously track the emotions of targets who were describing either very positive or very negative autobiographical events. In this study, tracking accuracy was associated with activation in the superior temporal sulcus, medial prefrontal cortex, premotor cortex, and inferior parietal lobule.

Summary

There are some gross similarities in patterns of neural involvement across studies using the various methodologies reviewed here. Most generally implicate temporal or frontal lobe regions; however, there are differences at the level of specific regions. In addition, there clearly is a need for additional studies involving dynamic stimuli and continuous judgments, arguably the closest laboratory analog of real‐world emotion recognition.

This Study

In this study, we explored the neural correlates of continuous tracking of dynamic emotional stimuli by having participants use a rating dial [see Levenson and Gottman,1983] while watching a film clip to track the changing valence of the target character's emotions. In this procedure, participants made continuous ratings of the valence (positive‐neutral‐negative) of the character's emotions. Valence is considered to be the most universal form of emotion judgment [Osgood,1962; Russell,2003], enabling quick assessments of environmental stimuli as good or bad, rewarding or threatening [Zajonc, 1980]. Valence was treated as a bipolar dimension, ranging from very negative at one end, to very positive at the other end, with neutral in the middle. Participants rated how positive or negative they believed the target felt at each moment by continuously adjusting the dial to match her emotion. In the film clip, the target's emotions fluctuate from very positive to very negative and finally back to very positive; within positive and negative moments, her emotions also showed small fluctuations. This is somewhat different from the film stimuli used by Zaki et al. [2009]. In their stimulus the target's emotions were either positive or negative, and did not change between positive and negative within a single clip.

Our participants included patients diagnosed with frontotemporal lobar degeneration (FTLD), Alzheimer's disease (AD), progressive supranuclear palsy (PSP), and corticobasal syndrome (CBS). Among FTLD patients, all three major subtypes (frontotemporal dementia, semantic dementia, progressive nonfluent aphasia) were represented. Emotion recognition deficits have been documented in FTLD patients [Diehl‐Schmid et al.,2007], AD patients [Drapeau et al.,2009], PSP patients [Ghosh et al.,2009], and CBS patients [O'Keeffe et al.,2007]. We chose a sample with a wide variety of diagnoses to increase variability in patterns of regional brain atrophy; this diverse group of diseases is known to affect nearly all cortical structures [Sollberger et al.,2009]. Areas of neural loss were quantified using voxel‐based morphometry (VBM; see below) and correlated with performance on our tracking task. The limited number of prior studies using dynamic stimuli and continuous emotion judgments made it difficult to cast highly specific a priori hypotheses. However, because the task requires participants to process and update judgments of a dynamic emotional stimulus, we expected the orbitofrontal cortex (OFC) to be involved by virtue of its role in updating appraisals of changing information [Rolls,2004].

METHODS

Participants

Patients

Neuroimaging and emotion tracking data were obtained from 59 patients with impairment from suspected neurodegenerative disease recruited through the Memory and Aging Center (MAC) at the University of California San Francisco. Eighteen patients met diagnostic criteria [Neary et al.,1998] for the frontotemporal dementia (FTD) variant of frontotemporal lobar degeneration, 13 patients met criteria for the semantic dementia (SD) variant, and three met criteria for the progressive nonfluent aphasia (PNFA) variant. Additionally, 15 patients met criteria for Alzheimer's disease (AD) based on NINDS‐ADRDA criteria [McKhann et al.,1984]. Five participants met criteria for progressive supranuclear palsy (PSP) based on Litvan criteria [Litvan et al.,1996] and five participants met criteria for corticobasal syndrome [CBS; Boxer et al., 2006]. Patients were diagnosed by a multidisciplinary team including neurologists, neuropsychologists, psychiatrists, and nurses using neurological testing, neuropsychological testing, and structural magnetic resonance imaging.

Controls

Emotion tracking data were also obtained from 10 age‐matched healthy control participants. These participants were recruited through advertisements in local newspapers and recruitment talks at local senior community centers. For inclusion, control participants had to have a normal neurologic exam, Clinical Dementia Rating Scale (CDR) = 0, Mini‐Mental State Examination (MMSE) greater than or equal to 28 (30 is the maximum possible score), and verbal and visuospatial delayed memory performance greater than or equal to the 25th percentile.

Apparatus

The rating dial consisted of a small metal box with a rotary knob and pointer that traversed a 180° path over a nine‐point scale anchored by the legends “extremely negative” (depicted by a schematic frowning face) at the extreme left, “neutral” (depicted by a schematic neutral face) in the middle, and “extremely positive” at the extreme right (depicted by a schematic smiling face). Participants were instructed to adjust the rating dial continuously to indicate how positive or negative they believed the target character in a film clip felt at each moment. The rating dial generated a voltage that reflected the dial position; a computer sampled the voltage every 3 milliseconds and computed the average dial position every second.
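The sampling scheme described above (one voltage reading every 3 milliseconds, reduced to one average per second) can be illustrated with a short Python sketch. This is not the acquisition software used in the study; the function name `per_second_means` is hypothetical, and the sketch assumes the samples have already been converted from voltage to dial-scale units, a step the text does not describe.

```python
from statistics import fmean

SAMPLE_INTERVAL_MS = 3  # from the text: the dial voltage was sampled every 3 ms


def per_second_means(samples, interval_ms=SAMPLE_INTERVAL_MS):
    """Average raw dial samples into one value per elapsed second.

    Assumption: sample i was taken at i * interval_ms milliseconds and is
    already expressed in dial-scale units.
    """
    buckets = {}
    for i, value in enumerate(samples):
        second = (i * interval_ms) // 1000  # index of the second this sample falls in
        buckets.setdefault(second, []).append(value)
    return [fmean(buckets[s]) for s in sorted(buckets)]
```

Because 1000 is not a multiple of 3, the seconds do not all contain the same number of samples; grouping by timestamp rather than by a fixed sample count avoids drift over an 80‐second clip.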

Procedure

Participants completed tasks with their caregivers at the MAC and at the Psychophysiology Laboratory at the University of California, Berkeley (UCB). A full experimental protocol, consisting of a series of tasks designed to assess emotional functioning [Levenson et al.,2008], was administered at UCB. Upon arrival, participants (or their caregivers) provided written consent. Participants were seated in a chair located 1.25 m from a 48‐cm color television monitor and had sensors attached for physiological monitoring. This study focuses on two tasks in which participants used the rating dial to track emotional stimuli. Structural MRI scanning and neuropsychological testing with the patients as well as behavioral inventories with family members occurred at the MAC; for each participant, the MAC assessment occurred within 6 months of the UCB assessment.

Emotion tracking task

For this task, participants watched an 80‐second film clip (taken from a commercial for Disneyland) and continuously rated how positive or negative the target character felt. In this clip, the camera was focused on a woman who was having a conversation over dinner with a man. Her emotions fluctuated between positive and negative throughout the clip. Participants were instructed to adjust the rating dial as often as needed so that it always reflected the woman's emotions.

Control tracking task

This task simply assessed participants' ability to use the rating dial to track stimuli on the screen and was used to control for any motor and cognitive impairments that may have affected performance. For this control task, participants watched an 80‐second clip and continuously rated the positivity or negativity of a schematic face. In this clip, the mouth of the schematic face fluctuated between varying intensities of smiles and frowns, and a neutral straight line. Participants were instructed to adjust the rating dial continuously to match the emotion of the face presented on the screen.

For both the emotion tracking task and the control tracking task, criterion ratings were established by averaging the ratings of the 10 normal control participants for each second of the clips. Correlations among the controls' ratings were high for both the emotion tracking task (r's between 0.87 and 0.95, all P's < 0.001) and the control tracking task (r's between 0.83 and 0.98, all P's < 0.001). For each task, a tracking inaccuracy score was calculated for each patient by computing the deviation from the criterion rating for each second and summing the absolute deviations. Thus, higher scores indicated greater inaccuracy on the task.
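The scoring rule described above can be written out as a minimal Python sketch. It is offered only as an illustration of the arithmetic, not as the authors' analysis code; the function names and the toy ratings are hypothetical.

```python
def criterion_ratings(control_ratings):
    """Mean control rating for each second of the clip.

    control_ratings: one list of per-second ratings per control participant,
    all of the same length.
    """
    n = len(control_ratings)
    return [sum(second) / n for second in zip(*control_ratings)]


def inaccuracy_score(patient_ratings, criterion):
    """Sum of absolute per-second deviations from the criterion rating."""
    if len(patient_ratings) != len(criterion):
        raise ValueError("ratings and criterion must cover the same seconds")
    return sum(abs(p - c) for p, c in zip(patient_ratings, criterion))


# Toy example with two controls and a three-second clip (hypothetical values).
criterion = criterion_ratings([[1, 5, 9], [3, 5, 7]])  # [2.0, 5.0, 8.0]
print(inaccuracy_score([2, 7, 5], criterion))          # 0 + 2 + 3 = 5.0
```

A score of zero would mean the patient's dial position matched the control average at every second; larger values indicate greater inaccuracy.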

Behavioral inventory and neuropsychological measures

Behavioral symptoms were evaluated with the Neuropsychiatric Inventory [NPI; Cummings,1997], a caregiver‐based rating system that assesses the presence and severity of 12 behavioral disorders: delusions, hallucinations, aggression/agitation, depression, anxiety, elation/euphoria, apathy, disinhibition, irritability/lability, aberrant motor behaviors, sleep disturbances, and eating disorders. A total NPI score was calculated by multiplying the frequency and severity scores for each behavioral variable and summing them. The NPI was administered to caregivers and scored by a specialist nurse who had been trained in its administration.
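As a small illustration of the total score described above (frequency multiplied by severity for each behavioral domain, then summed), the following Python sketch may be helpful. The function name, the data layout, and the example values are hypothetical and are not taken from the NPI scoring materials.

```python
def npi_total(domain_scores):
    """Sum of frequency x severity across behavioral domains.

    domain_scores: mapping of domain name -> (frequency, severity).
    Assumption: a domain that is absent is entered as (0, 0).
    """
    return sum(freq * sev for freq, sev in domain_scores.values())


# Hypothetical caregiver ratings for three of the 12 domains.
example = {"apathy": (3, 2), "anxiety": (1, 1), "delusions": (0, 0)}
print(npi_total(example))  # 3*2 + 1*1 + 0*0 = 7
```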

To assess working memory, the Digit Span of the Wechsler Adult Intelligence Scale [Version III, Wechsler, 1997] was administered. Scores were summed across the correctly completed trials on the forward and backward trials to create a single composite score, with higher scores indicating greater working memory capacity. To assess cognitive flexibility, the phonemic version of the Verbal Fluency test [Delis et al., 2001] was used. On separate one‐minute trials, participants were asked to generate words that began with the letters F, A, and S (excluding proper nouns and repetitions of the same word with different suffixes). Verbal fluency was calculated as the total number of correct words produced across the three trials with more words produced indicating better fluency. As a second measure of cognitive flexibility, the D‐KEFS Trail Making Test was used [Delis et al., 2001]. In this task, participants were instructed to alternate between connecting letters and connecting numbers printed in scrambled order on a card and to work sequentially (connect from “1” to “A” to “2” to “B”); performance was based on the speed with which participants connected all letters and numbers. To hold individual differences in number and letter processing speed constant, participants also completed two trials on which the task was to connect letters‐to‐letters and numbers‐to‐numbers sequentially. To control for individual differences on these latter trials, an overall task switching score was created by predicting time to completion on the letters‐to‐numbers trial from the letters‐to‐letters and numbers‐to‐numbers trials and saving the resulting residuals. These residual scores were used as a measure of task switching where shorter times and lower scores indicated better task‐switching.
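The residualized task‐switching score described above can be sketched as an ordinary least squares regression of the switching‐trial time on the two baseline‐trial times, keeping the residuals. The text does not state which software or regression settings were used, so the following pure‐Python version is an assumption‐laden sketch: the function names are hypothetical, an intercept is assumed, and no handling is included for collinear predictors.

```python
def solve(a, b):
    """Solve the linear system a·x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def switching_residuals(letters, numbers, switching):
    """Residuals from regressing switching time on the two baseline times.

    Assumption: model is switching ~ intercept + letters + numbers.
    """
    rows = [[1.0, l, n] for l, n in zip(letters, numbers)]
    k = 3
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, switching)) for i in range(k)]
    beta = solve(xtx, xty)  # normal equations: (X'X) beta = X'y
    return [y - sum(b * x for b, x in zip(beta, r)) for r, y in zip(rows, switching)]
```

A negative residual indicates that a participant completed the switching trial faster than predicted from his or her baseline speeds, which corresponds to better task switching under the scoring described above.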

MRI scanning and VBM analysis

All patients had a structural MRI scan on a 1.5‐T Magnetom VISION system (Siemens Inc., Iselin, N.J.) equipped with a standard quadrature head coil. A volumetric magnetization prepared rapid gradient echo MRI (MPRAGE, TR/TE/TI = 10/4/300 milliseconds) was used to obtain T1‐weighted images of the entire brain, 15‐degree flip angle, coronal orientation perpendicular to the double spin echo sequence, 1.0 × 1.0 mm² in‐plane resolution and 1.5 mm slab. All imaging was done within 6 months of the experimental session at Berkeley.

VBM preprocessing and analyses were performed using the SPM5 software package (Wellcome Department of Cognitive Neurology, London; http://www.fil.ion.ucl.ac.uk/spm) running on Matlab 7.1.0 (MathWorks, Natick, MA). SPM5 default parameters were used in all preprocessing steps with the exception that the light clean‐up procedure was used in the morphological filtering step. Default tissue probability priors (voxel size: 2 × 2 × 2 mm³) of the International Consortium for Brain Mapping (ICBM) were used. Spatially normalized, segmented, and modulated gray matter images were smoothed with a 12 mm isotropic Gaussian kernel.

Data Analysis

Relationships between tracking inaccuracy scores and neuropsychological performance were examined using individual correlations. Covariates‐only (multiple regression design) statistical analyses were used to determine the relationship between emotion tracking inaccuracy scores and gray matter volume. The significance of each effect was determined using the theory of Gaussian fields. Age, sex, MMSE (as a proxy for overall cognitive functioning) and total intracranial volume (TIV) were entered as nuisance covariates into all designs. The statistical threshold was set at P < 0.05 after whole‐brain SPM family‐wise error (FWE) correction. Resulting SPM t‐maps were superimposed on the MNI single subject brain using Automated Anatomical Labelling [Tzourio‐Mazoyer et al.,2002] and Brodmann's atlases included in the MRIcron software package (http://www.sph.sc.edu/comd/rorden/mricro.html). Locations of clusters were reported in the MNI reference space. We did not include normal control participants in any VBM analyses because we did not expect to find significant brain volume and emotion tracking variability within this group.

Because diagnostic group membership causally influences both test performance and regional brain atrophy, it can be considered a confounding covariate. If diagnostic group is not controlled for, it increases the likelihood of identifying brain regions in the VBM analysis that are associated with disease‐specific patterns of co‐atrophy rather than with tracking performance. Controlling for diagnostic group membership ensures that any relationship between brain areas and tracking task performance generalizes across more than one diagnostic group. We controlled for the effects of diagnostic group by parameterizing each group (with 0 = no and 1 = yes for each of the six diagnostic groups: FTD, SD, PNFA, AD, CBS, PSP). These six diagnostic group variables were included as nuisance covariates in addition to the four covariates described above (TIV, age, sex, and MMSE).
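The 0/1 parameterization of diagnostic group described above can be illustrated with a brief Python sketch. It shows only how one participant's nuisance covariates might be laid out; it does not reproduce the SPM5 design specification, and the function names, the numeric coding of sex, and the example values are hypothetical.

```python
GROUPS = ("FTD", "SD", "PNFA", "AD", "CBS", "PSP")  # the six groups named in the text


def group_indicators(diagnosis):
    """Return six 0/1 values, one per diagnostic group (1 = member)."""
    if diagnosis not in GROUPS:
        raise ValueError(f"unknown diagnosis: {diagnosis}")
    return [1 if diagnosis == g else 0 for g in GROUPS]


def nuisance_row(tiv, age, sex, mmse, diagnosis):
    """Ten nuisance covariates for one participant.

    Assumption: sex is already coded numerically (for example 0 or 1);
    the text does not specify the coding.
    """
    return [tiv, age, sex, mmse] + group_indicators(diagnosis)


print(group_indicators("AD"))  # [0, 0, 0, 1, 0, 0]
```

Each participant belongs to exactly one group, so the six indicator values in any row sum to 1.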

RESULTS

Table I shows demographics and statistics for performance on the tracking tasks across the diagnostic groups. An analysis of variance showed no significant differences between the diagnostic groups in terms of age [F(5,53) = 1.99, P = 0.10] or MMSE [F(5,53) = 1.58, P = 0.18]; a chi‐square analysis revealed no differences between the diagnostic groups in terms of sex, χ²(5, N = 59) = 7.77, ns. For the entire group of patients, the mean (SD) tracking inaccuracy score on the emotion tracking task was 126.35 (47.11); there were no significant differences in tracking performance between the diagnostic groups (FTD, SD, PNFA, AD, CBS, PSP), F(5, 53) < 1.

Table I.

Demographic features and tracking performance of the diagnostic groups

| | FTD (n = 18) | SD (n = 13) | PNFA (n = 3) | AD (n = 15) | CBS (n = 5) | PSP (n = 5) | All dx (n = 59) | Overall F(5,53) | P |
|---|---|---|---|---|---|---|---|---|---|
| Age M(SD) | 58.2 (6.7) | 63.9 (8.2) | 67.0 (9.8) | 59.8 (5.3) | 64.4 (4.9) | 62.2 (5.6) | 61.2 (7.0) | 1.99 | 0.10 |
| Sex (M/F) | 14/4 | 9/4 | 1/2 | 9/6 | 4/1 | 1/4 | 44/25 | χ² = 7.77 | 0.26 |
| MMSE M(SD) | 25.9 (5.1) | 23.4 (7.3) | 28.7 (1.5) | 24.0 (3.2) | 23.4 (5.5) | 27.8 (3.3) | 24.7 (5.2) | 1.58 | 0.18 |
| Emotion tracking task M(SD) | 129.0 (54.0) | 121.1 (35.4) | 105.3 (10.0) | 125.7 (43.1) | 120.2 (66.4) | 151.2 (61.5) | 126.4 (47.1) | 0.44 | 0.82 |
| Control tracking task M(SD) | 71.4 (54.3) | 68.8 (33.5) | 44.0 (14.1) | 79.9 (33.5) | 90.5 (25.4) | 55.8 (15.6) | 71.9 (39.4) | 0.82 | 0.54 |

FTD, frontotemporal dementia; SD, semantic dementia; PNFA, progressive non‐fluent aphasia; AD, Alzheimer's disease; CBS, corticobasal syndrome; PSP, progressive supranuclear palsy; MMSE, Mini Mental State Examination (range 0–30).

Neuroimaging Results

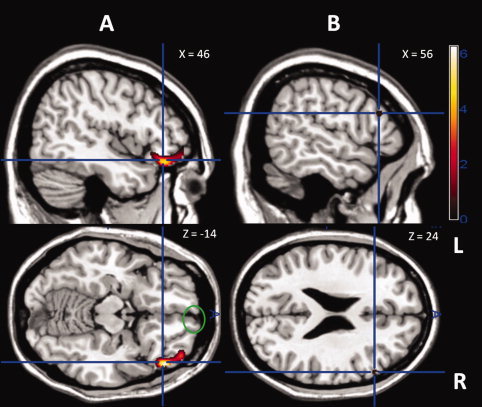

Lower performance on the emotion tracking task (i.e., higher inaccuracy scores) was significantly correlated with gray matter tissue loss in: (a) a large region of voxels in the right orbitofrontal cortex (Brodmann area [BA] 47), and (b) smaller clusters of voxels in the triangularis portion of the right inferior frontal gyrus (BA 45), the anterior portion of gyrus rectus (BA 11), and the right middle frontal gyrus (BA 46 and BA 9; see Table II and Fig. 1). Additionally, lower emotion tracking performance was associated with a small region in the left orbitofrontal cortex (BA 47). All significant correlations between brain regions and emotion tracking task performance were independent of TIV, age, sex, MMSE, and diagnosis, and were corrected for family‐wise error across the whole brain (at P < 0.05).

Table II.

Regions where tracking ability was correlated with gray matter volume, adjusting for TIV, age, sex, MMSE, and diagnosis in the emotion tracking task and control tracking task

| Anatomical region | BA | Cluster size (mm³) | x, y, z | t‐score | P |
|---|---|---|---|---|---|
| Emotion tracking task | | | | | |
| Right inferior orbital gyrus | 47 | 2,992 | 50, 34, −14 | 6.05 | <0.01 |
| Right middle orbital gyrus | 47 | 2,992 | 42, 52, −12 | 5.55 | <0.01 |
| Right gyrus rectus | 11 | 64 | 4, 64, −16 | 5.12 | <0.05 |
| Right inferior frontal gyrus, pars triangularis | 45 | 64 | 56, 26, 24 | 5.05 | <0.05 |
| Control tracking task | | | | | |
| Right middle temporal gyrus | 39 | 120 | 40, −60, 20 | 4.56 | <0.03 |

In the analysis of the emotion tracking task, performance on the control tracking task was included as an additional covariate. Correlations were corrected for FWE across the whole brain at a significance level of P < 0.05. Cluster size is reported in cubic millimeters (mm³).

TIV, total intracranial volume; MMSE, Mini Mental State Examination (range 0–30).

Figure 1.

Colored areas are regions where brain atrophy significantly correlated with impairment on the emotion tracking task. The analysis controlled for TIV, age, sex, MMSE, control task performance, and diagnostic group membership. Results are superimposed on sagittal and axial slices of a standard brain from a single normal subject and are corrected for family‐wise error across the whole brain at a significance level of P < 0.05; the T‐score range is shown on the left. A: The blue crosshairs indicate the regions of the right lateral orbitofrontal cortex with significant correlations (T = 5.62); the green circle indicates the region of the right gyrus rectus with significant correlations (T = 5.11). B: The blue crosshairs indicate the region of the right pars triangularis with significant correlations (T = 5.05).

We also examined associations between brain atrophy and tracking deviation scores during positive and negative moments separately. Again, TIV, age, sex, MMSE, and diagnosis were included as covariates. Lower tracking performance during negative moments was associated with tissue loss in the right lateral OFC (BA 47); lower tracking performance during the positive moments was associated with tissue loss in a small region in the right frontopolar cortex (BA 10). Both of these areas were similar to regions found in the main analysis but were much smaller in size.

To determine whether difficulties in using the rating dial influenced findings, we included inaccuracy scores on the control tracking task as an additional covariate, along with TIV, age, sex, MMSE, and diagnosis in a follow‐up VBM analysis. Even after including this additional covariate, lower performance on the emotion tracking task was still significantly correlated with gray matter tissue loss in: (a) a large region of voxels in the right orbitofrontal cortex (BA 47), and (b) smaller clusters of voxels in the triangularis portion of the right inferior frontal gyrus (BA 45) and in the anterior portion of gyrus rectus (BA 11). However, correlations with regions in the left orbitofrontal cortex (BA 47) and right middle frontal gyrus (BA 46 and BA 9) no longer reached significance. Given the possibility that motor difficulties in CBS and PSP patients could negatively impact tracking performance, we also examined associations between brain atrophy and tracking deviation scores excluding these two patient groups. Using TIV, age, sex, MMSE, diagnosis, and control tracking task score as covariates, tracking performance was again associated with a large region of voxels in the right orbitofrontal cortex.

Behavioral Symptoms and Neuropsychological Data: Relationships with Tracking Accuracy

Neither the emotion tracking task nor the control tracking task was significantly correlated with the NPI total score [r(59) = 0.18, ns and r(59) = 0.15, ns respectively]. Although not reaching significance, these relationships were in the expected direction, with greater behavioral symptoms associated with higher deviation scores on the tracking tasks.

In the subset of participants for whom neuropsychological data were available (41 of the 59 participants), performance on the emotion tracking task was not significantly associated with any of the neuropsychological measures. Correlations were as follows: Digit Span, r(41) = 0.04, ns; fluency, r(41) = −0.12, ns; and Trail Making, r(41) = 0.01, ns. Performance on the control tracking task was not significantly associated with Digit Span [r(41) = −0.18, ns] or with fluency [r(41) = −0.16, ns] but was significantly associated with Trail Making, r(41) = 0.42, P = 0.01.

DISCUSSION

Using a sample of patients with diverse neurological damage, we used VBM to identify brain regions where gray matter loss was correlated with an impaired ability to track continuously another person's dynamically changing emotions. Our results indicated that impairment in this ability was associated with atrophy in neural regions in the right frontal lobe, specifically the pars triangularis, the gyrus rectus, the middle frontal gyrus, and in particular, a large cluster of voxels in the lateral OFC. This finding was maintained even after controlling for total intracranial volume, age, sex, overall cognitive functioning on the MMSE, diagnosis, and performance on the control tracking task. Importantly, deficits in emotion tracking ability did not appear to reflect deficits in general cognitive ability; performance on the tracking task was unrelated to performance on several standard neuropsychological measures.

Neural Regions Associated with Continuous Emotion Tracking

Lateral OFC

We believe that our findings showing a strong association between the right lateral OFC and continuously tracking dynamically changing emotions can be traced to two established functions of that region: (a) deciphering and responding to socioemotional information, and (b) updating responding based on changing conditions. In terms of deciphering socioemotional information, functional imaging studies show that the OFC is activated in a wide range of socioemotional processes, including appraising emotional information [Bechara,2004], recalling emotional memories [Bechara,2004], decoding others' mental states [Hynes et al.,2006], and representing the affective value of stimuli in our environment [Kringelbach and Rolls,2004; O'Doherty et al.,2001]. Similarly, in patient studies, damage to the OFC has been associated with a number of socioemotional deficits, specifically poor responses to the emotional signals of others [Lough et al.,2006], difficulties determining relationships between people interacting in a complex social scene [Mah et al.,2004], poor self‐monitoring [Beer et al.,2006], and decreased perspective‐taking ability and compassion [Rankin et al.,2006]. In terms of updating responding, functional imaging studies show that the lateral OFC is activated when the need for behavioral change is signaled by unpleasant odors [Rolls,2004], losing money [O'Doherty et al.,2001], or emotional facial expressions [Kringelbach and Rolls,2003]. Similarly, in patient and non‐human primate studies, damage to the OFC has been associated with impairment in altering responses when contingencies change and previously rewarded responses become disadvantageous or punishing [Hornak et al.,2004; Kringelbach and Rolls,2004; Meunier et al.,1997; Rolls et al.,1994]. Taken together, these studies help explain our finding in patients that lateral OFC volume is related to performance on a dynamic rating task that involves both processing socioemotional information and updating responding.

Additional brain regions

In addition to the lateral OFC, we found that small regions in the pars triangularis in the right inferior frontal gyrus and the right gyrus rectus were also related to ability to track dynamically changing emotions. Previous research has shown that damage in the right pars triangularis negatively impacts patients' ability to stop a behavior quickly [Aron et al.,2007]. The task used in this study required participants to switch and adjust their ratings of another person's emotions; intrinsic to this task was the ability to stop an ongoing behavior (e.g., to stop rating a person's emotions as positive when they switch to negative). Additionally, the gyrus rectus area has been linked to short‐term iconic representations of visual stimuli, with damage to this area resulting in impairments in short‐term retention of visual information [Szatkowska et al.,2001]. The continuous tracking task used in the current study required participants to hold in mind the film character's previous emotional state in order to adjust the dial position to reflect the character's current emotional state.

Neural Regions Not Associated with Continuous Emotion Tracking: The Amygdala and Temporal Lobes

It might have been expected that the amygdala and temporal lobes, which are often implicated in studies of emotion recognition [Adolphs et al.,2002; Breiter et al.,1996; Morris et al.,1996; Rosen et al.,2006; Zaki et al.,2009] and are affected by the disease processes of patients included in this study [Rosen et al.,2002], would be implicated in our emotion tracking task. However, we found no evidence of these relationships. Evidence suggests that the amygdala is specialized for quickly detecting emotionally relevant stimuli in the environment [Keightley et al.,2003; Pessoa et al.,2002] and for decoding emotion from static facial expressions [Adolphs et al.,1999]. This region may be less important for recognizing emotions from complex, dynamic stimuli. Consistent with this interpretation, Adolphs and Tranel [2003] demonstrated that bilateral amygdala damage negatively impacted recognition of emotions from static facial expressions, but not from complex scenes that contain contextual cues in addition to emotional facial expressions (qualities found in our film stimulus).

Temporal lobe regions have also been associated with emotion recognition using static stimuli [Rosen et al.,2006], which are quite different from the dynamic stimulus used in this study. Associations with temporal regions (i.e., superior temporal sulcus; STS) have recently been reported by Zaki et al. [2009] using a dynamic stimulus that bears some similarity to ours. However, in the Zaki et al. study, the target's emotion was either negative or positive and did not change between these states, as was the case with our stimulus. Thus, it may be that the STS is involved in detecting subtle fluctuations in intensity within a particular type of emotion, but not in tracking the kinds of dramatic changes in emotional valence found in the current study's film stimulus.

Although performance on the emotion tracking task was not associated with any temporal lobe regions, performance on the control tracking task was associated with a region on the border of the middle temporal gyrus and angular gyrus. This region has been associated with awareness of movement [Farrer et al.,2008] and with motor‐based executive functioning [Nakata et al.,2008]. Combining these findings with the associations we found between the control task and the Trail Making Task, performance on the control task may reflect a general cognitive ability related to motor activities.

Limitations and Future Directions

Our research findings must be interpreted with several limitations in mind: (a) VBM studies assess brain‐behavior relationships across the entire brain, but they do not directly reveal information about neural circuits; (b) our sample included a diverse set of neurodegenerative diseases that collectively affect nearly all cortical structures [Sollberger et al.,2009]; accordingly, this approach lacks power to detect brain‐behavior relationships in regions where only a small number of participants show atrophy; (c) VBM analysis cannot detect nonstructural changes that may have occurred (e.g., metabolic deficits); and (d) our participants made judgments about emotional valence; the brain‐behavior relationships we found may not generalize to judgments of specific emotions (e.g., fear, anger).

Results from this study would be strengthened by examining multiple varieties of emotion tracking, by using different kinds of dynamic stimuli (e.g., including subtle variations around a particular emotion valence as well as shifts between valences), by including a more comprehensive neuropsychological assessment (e.g., including visuospatial abilities), and by comparing VBM results from patients with results derived from functional neuroimaging in normal participants.

CONCLUSION

Many previous studies have investigated the neural correlates of emotion recognition, typically using static stimuli. These studies have generally implicated a group of frontal and temporal lobe regions. In this study, we investigated the neural regions involved when participants continuously track dynamically changing emotions, a task that arguably mimics the way we decode emotions in the real world. Unlike the many previous studies using static stimuli, we found no indication that temporal lobe or amygdalar atrophy was associated with impaired performance. However, frontal lobe regions, in particular the right lateral OFC, were associated with our measure of dynamic emotion recognition. We believe this association is based on the special role the OFC plays in processing socioemotional information and updating responding. Dynamic emotion tracking ability is critical for success in the social world, enabling us to understand the emotions expressed by others in real time, follow them as they unfold and change, and adjust our behavior in ways that are appropriate. Thus, damage to the OFC may produce problems in processing emotional information that lead to a range of social errors, faux pas, and other impairments in social functioning.

REFERENCES

- Adolphs R, Tranel D (2003): Amygdala damage impairs emotion recognition from scenes only when they contain facial expressions. Neuropsychologia 41: 1281–1289.

- Adolphs R, Tranel D, Damasio H, Damasio AR (1995): Fear and the human amygdala. J Neurosci 15: 5879–5891.

- Adolphs R, Tranel D, Hamann S, Young AW, Calder AJ, Phelps EA, et al. (1999): Recognition of facial emotion in nine individuals with bilateral amygdala damage. Neuropsychologia 37: 1111–1117.

- Adolphs R, Damasio H, Tranel D, Cooper G, Damasio AR (2000): A role for somatosensory cortices in the visual recognition of emotion as revealed by three‐dimensional lesion mapping. J Neurosci 20: 2683–2690.

- Adolphs R, Baron‐Cohen S, Tranel D (2002): Impaired recognition of social emotions following amygdala damage. J Cogn Neurosci 14: 1264–1274.

- Anderson AK, Phelps EA (2000): Expression without recognition: contributions of the human amygdala to emotional communication. Psychol Sci 11: 106–111.

- Aron AR (2007): The neural basis of inhibition in cognitive control. Neuroscientist 13: 214–228.

- Bechara A (2004): Disturbances of emotion regulation after focal brain lesions. Int Rev Neurobiol 62: 159–193.

- Beer JS, Heerey EA, Keltner D, Scabini D, Knight RT (2003): The regulatory function of self‐conscious emotion: Insights from patients with orbitofrontal damage. J Pers Soc Psychol 85: 594–604.

- Beer JS, John OP, Scabini D, Knight RT (2006): Orbitofrontal cortex and social behavior: Integrating self‐monitoring and emotion‐cognition interactions. J Cogn Neurosci 18: 871–879.

- Blair RJ, Cipolotti L (2000): Impaired social response reversal. A case of ‘acquired sociopathy’. Brain 123: 1122–1141.

- Boxer AL, Geschwind MD, Belfor N, Gorno‐Tempini ML, Schauer GF, Miller BL, Weiner MW, Rosen HJ (2006): Patterns of brain atrophy that differentiate corticobasal degeneration syndrome from progressive supranuclear palsy. Arch Neurol 63: 81–86.

- Breiter HC, Etcoff NL, Whalen PJ, Kennedy WA, Rauch SL, Buckner RL, Strauss MM, Hyman SE, Rosen BR (1996): Response and habituation of the human amygdala during visual processing of facial expression. Neuron 17: 875–887.

- Cummings JL (1997): The neuropsychiatric inventory: Assessing psychopathology in dementia patients. Neurology 48: S10–S16.

- Delis DC, Kaplan E, Kramer JH (2001): The Delis‐Kaplan executive function scale. San Antonio; The Psychological Corporation.

- Diehl‐Schmid J, Pohl C, Ruprecht C, Wagenpfeil H, Foerstl H, Kurz A (2007): The Ekman 60 faces test as a diagnostic instrument in frontotemporal dementia. Arch Clin Neuropsychol 22: 459–464.

- Drapeau J, Gosselin N, Gagnon L, Peretz I, Lorrain D (2009): Emotion recognition from face, voice, and music in dementia of the Alzheimer type. Ann N Y Acad Sci 1169: 342–345.

- Ekman P (1992): An argument for basic emotions. Cogn Emotions 6: 169–200.

- Farrer C, Frey SH, Van Horn JD, Tunik E, Turk D, Inati S, Grafton ST (2008): The angular gyrus computes action awareness representations. Cereb Cortex 18: 254–261.

- George MS, Ketter TA, Gill DS, Haxby JV, Ungerleider LG, Herscovitch P, et al. (1993): Brain regions involved in recognizing facial emotion or identity: An oxygen‐15 PET study. J Neuropsychiatry Clin Neurosci 5: 384–394.

- Ghosh BC, Rowe JB, Calder AJ, Hodges JR, Bak TH (2009): Emotion recognition in progressive supranuclear palsy. J Neurol Neurosurg Psychiatry 80: 1143–1145.

- Habel U, Windischberger C, Derntl B, Robinson S, Kryspin‐Exner I, Gur RC, Moser E (2007): Amygdala activation and facial expressions: Explicit emotion discrimination versus implicit emotion processing. Neuropsychologia 45: 2369–2377.

- Heberlein AS, Padon AA, Gillihan SJ, Farah MJ, Fellows LK (2008): Ventromedial frontal lobe plays a critical role in facial emotion recognition. J Cogn Neurosci 20: 721–733.

- Hornak J, Rolls ET, Wade D (1996): Face and voice expression identification in patients with emotional and behavioural changes following ventral frontal lobe damage. Neuropsychologia 34: 247–261.

- Hornak J, O'Doherty J, Bramham J, Rolls ET, Morris RG, Bullock PR, et al. (2004): Reward‐related reversal learning after surgical excisions in orbito‐frontal or dorsolateral prefrontal cortex in humans. J Cogn Neurosci 16: 463–478.

- Hynes CA, Baird AA, Grafton ST (2006): Differential role of the orbital frontal lobe in emotional versus cognitive perspective‐taking. Neuropsychologia 44: 374–383.

- Keightley ML, Winocur G, Graham SJ, Mayberg HS, Hevenor SJ, Grady CL (2003): An fMRI study investigating cognitive modulation of brain regions associated with emotional processing of visual stimuli. Neuropsychologia 41: 585–596.

- Kilts CD, Egan G, Gideon DA, Ely TD, Hoffman JM (2003): Dissociable neural pathways are involved in the recognition of emotion in static and dynamic facial expressions. Neuroimage 18: 156–168.

- Kringelbach ML, Rolls ET (2003): Neural correlates of rapid reversal learning in a simple model of human social interaction. Neuroimage 20: 1371–1383.

- Kringelbach ML, Rolls ET (2004): The functional neuroanatomy of the human orbitofrontal cortex: Evidence from neuroimaging and neuropsychology. Prog Neurobiol 72: 341–372.

- LaBar KS, Crupain MJ, Voyvodic JT, McCarthy G (2003): Dynamic perception of facial affect and identity in the human brain. Cereb Cortex 13: 1023–1033.

- Levenson RW, Gottman JM (1983): Marital interaction: Physiological linkage and affective exchange. J Pers Soc Psychol 45: 587–597.

- Levenson RW, Ascher E, Goodkind M, McCarthy M, Sturm V, Werner K (2008): Laboratory testing of emotion and frontal cortex. In: Miller B, Goldenberg G, editors. Handbook of Clinical Neurology, 3rd Series. New York; Elsevier; pp. 489–498.

- Litvan I, Agid Y, Calne D, Campbell G, Dubois B, Duvoisin RC, Goetz CG, Golbe LI, Grafman J, Growdon JH, Hallett M, Jankovic J, Quinn NP, Tolosa E, Zee DS, et al. (1996): Clinical research criteria for the diagnosis of progressive supranuclear palsy (Steele‐Richardson‐Olszewski syndrome): Report of the NINDS‐SPSP international workshop. Neurology 47: 1–9.

- Lough S, Kipps CM, Treise C, Watson P, Blair JR, Hodges JR (2006): Social reasoning, emotion and empathy in frontotemporal dementia. Neuropsychologia 44: 950–958.

- Mah L, Arnold MC, Grafman J (2004): Impairment of social perception associated with lesions of the prefrontal cortex. Am J Psychiatry 161: 1247–1255.

- Marinkovic K, Trebon P, Chauvel P, Halgren E (2000): Localised face processing by the human prefrontal cortex: Face‐selective intracerebral potentials and post‐lesion deficits. Cogn Neuropsychol 17: 187–199.

- McDonald S, Flanagan S, Rollins J, Kinch J (2003): TASIT: A new clinical tool for assessing social perception after traumatic brain injury. J Head Trauma Rehabil 18: 219–238.

- McKhann G, Drachman D, Folstein M, Katzman R, Price D, Stadlan EM (1984): Clinical diagnosis of Alzheimer's disease: Report of the NINCDS‐ADRDA Work Group under the auspices of Department of Health and Human Services Task Force on Alzheimer's Disease. Neurology 34: 939–944.

- Meunier M, Bachevalier J, Mishkin M (1997): Effects of orbital frontal and anterior cingulate lesions on object and spatial memory in rhesus monkeys. Neuropsychologia 35: 999–1015.

- Morris JS, Frith CD, Perrett DI, Rowland D, Young AW, Calder AJ, Dolan RJ (1996): A differential neural response in the human amygdala to fearful and happy facial expressions. Nature 383: 812–815.

- Nakata H, Sakamoto K, Ferreti A, Perrucci MG, Del Gratta C, Kakigi R, Romani GL (2008): Executive functions with different motor outputs in somatosensory Go/Nogo tasks: An event‐related functional MRI study. Brain Res Bull 77: 197–205.

- Narumoto J, Yamada H, Iidaka T, Sadato N, Fukui K, Itoh H, Yonekura Y (2000): Brain regions involved in verbal or non‐verbal aspects of facial emotion recognition. Neuroreport 11: 2571–2576.

- Neary D, Snowden JS, Gustafson L, Passant U, Stuss D, Black S, Freedman M, Kertesz A, Robert PH, Albert M, Boone K, Miller BL, Cummings J, Benson DF (1998): Frontotemporal lobar degeneration: A consensus on clinical diagnostic criteria. Neurology 51: 1546–1554.

- O'Doherty J, Kringelbach ML, Rolls ET, Hornak J, Andrews C (2001): Abstract reward and punishment representations in the human orbitofrontal cortex. Nat Neurosci 4: 95–102.

- O'Keeffe FM, Murray B, Coen RF, Dockree PM, Bellgrove MA, Garavan H, Lynch T, Robertson IH (2007): Loss of insight in frontotemporal dementia, corticobasal degeneration and progressive supranuclear palsy. Brain 130: 753–764.

- Osgood CE (1962): Studies on the generality of affective meaning systems. Am Psychol 17: 10–28.

- Pessoa L, Kastner S, Ungerleider LG (2002): Attentional control of the processing of neutral and emotional stimuli. Brain Res Cogn Brain Res 15: 31–45.

- Phan KL, Wager T, Taylor SF, Liberzon I (2002): Functional neuroanatomy of emotion: A meta‐analysis of emotion activation studies in PET and fMRI. Neuroimage 16: 331–348.

- Rankin KP, Gorno‐Tempini ML, Allison SC, Stanley CM, Glenn S, Weiner MW, Miller BL (2006): Structural anatomy of empathy in neurodegenerative disease. Brain 129: 2945–2956.

- Ridout N, O'Carroll RE, Dritschel B, Christmas D, Eljamel M, Matthews K (2007): Emotion recognition from dynamic emotional displays following anterior cingulotomy and anterior capsulotomy for chronic depression. Neuropsychologia 45: 1735–1743.

- Rolls ET (2004): The functions of the orbitofrontal cortex. Brain Cogn 55: 11–29.

- Rolls ET, Hornak J, Wade D, McGrath J (1994): Emotion‐related learning in patients with social and emotional changes associated with frontal lobe damage. J Neurol Neurosurg Psychiatry 57: 1518–1524.

- Rosen HJ, Gorno‐Tempini ML, Goldman WP, Perry RJ, Schuff N, Weiner M, Feiwell R, Kramer JH, Miller BL (2002): Patterns of brain atrophy in frontotemporal dementia and semantic dementia. Neurology 58: 198–208.

- Rosen HJ, Wilson MR, Schauer GF, Allison S, Gorno‐Tempini ML, Pace‐Savitsky C, Kramer JH, Levenson RW, Weiner M, Miller BL (2006): Neuroanatomical correlates of impaired recognition of emotion in dementia. Neuropsychologia 44: 365–373.

- Russell JA (2003): Core affect and the psychological construction of emotion. Psychol Rev 110: 145–172.

- Sollberger M, Stanley CM, Wilson SM, Gyurak A, Beckman V, Growdon M, Jang J, Weiner MW, Miller BL, Rankin KP (2009): Neural basis of interpersonal traits in neurodegenerative diseases. Neuropsychologia 47: 2812–2827.

- Sprengelmeyer R, Rausch M, Eysel UT, Przuntek H (1998): Neural structures associated with recognition of facial expressions of basic emotions. Proc Biol Sci 265: 1927–1931.

- Szatkowska I, Grabowska A, Szymanska O (2001): Evidence for the involvement of the ventro‐medial prefrontal cortex in a short‐term storage of visual images. Neuroreport 12: 1187–1190.

- Trautmann SA, Fehr T, Herrmann M (2009): Emotions in motion: Dynamic compared to static facial expressions of disgust and happiness reveal more widespread emotion‐specific activations. Brain Res 1284: 100–115.

- Tzourio‐Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, Delcroix N, Mazoyer B, Joliot M (2002): Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single‐subject brain. Neuroimage 15: 273–289.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ (2001): Effects of attention and emotion on face processing in the human brain: An event‐related fMRI study. Neuron 30: 829–841.

- Zajonc RB (1980): Thinking and feeling: Preferences need no inferences. Am Psychol 35: 151–175.

- Zaki J, Weber J, Bolger N, Ochsner K (2009): The neural bases of empathic accuracy. Proc Natl Acad Sci USA 106: 11382–11387.