Abstract

Background: Social interaction depends on a multitude of signals carrying information about the emotional state of others, but the relative importance of facial and bodily signals is still poorly understood. Past research has focused on the perception of facial expressions, while the perception of whole-body signals has only been studied recently. To better understand the relative contribution of affective signals from the face alone or from the whole body, we performed two experiments using binocular rivalry. This method is well suited to contrasting two classes of stimuli, testing processing sensitivity to either stimulus, and addressing the question of how emotion modulates this sensitivity. Method: In the first experiment we directly contrasted fearful, angry, and neutral bodies and faces. We always presented bodies to one eye and faces to the other simultaneously for 60 s and asked participants to report what they perceived. In the second experiment we focused specifically on the role of fearful expressions of faces and bodies. Results: Taken together, the two experiments show that there is no clear bias toward either the face or the body when the expressions of the body and face are neutral or angry. However, the perceptual dominance in favor of either the face or the body is a function of the stimulus class expressing fear.

Keywords: binocular rivalry, emotion, face, body, expression, consciousness

Introduction

Social interaction relies on a multitude of signals carrying information about the emotional state of others. Facial and bodily expressions are among the most salient of these social signals. Past research has focused on the perception of facial expressions, while the perception of whole-body signals has only been studied recently. Many studies now provide direct and indirect evidence for visual discrimination of facial expressions in the absence of visual awareness of the stimulus (e.g., Esteves et al., 1994; de Gelder et al., 1999; Dimberg et al., 2000; Jolij and Lamme, 2005; Tamietto et al., 2009). For bodily expressions this has been shown in healthy participants (Stienen and de Gelder, 2011) and in hemianopic patients (Tamietto et al., 2009). Unattended bodily expressions can influence the judgment of the emotion of facial expressions (Meeren et al., 2005; Van den Stock et al., 2007), and the emotion of a crowd is determined by the relative proportion of individuals expressing the emotion (McHugh et al., 2011) and influences the recognition of individual bodily expressions (Kret and de Gelder, 2010). However, the relative importance of facial and bodily signals and its relation to visual awareness is still poorly understood.

In this study we directly investigate the contribution of both signals in a binocular rivalry (BR) experiment. BR forces perceptual alternation when two incompatible stimuli are presented to the fovea of each eye separately. This perceptual alternation can be biased by factors such as differences in contrast, brightness, movement, and density of contours (Blake and Logothetis, 2002). In addition, visual attention is necessary for rivalry to occur (Zhang et al., 2011). Given certain parameters, the two stimuli compete with each other for perceptual dominance rather than creating a percept that is a fusion of both. This method is well suited to contrasting two classes of stimuli, testing processing sensitivity to either stimulus, and addressing the question of how emotion modulates this sensitivity.

Previous BR studies have shown that the meaning of the stimulus influences the rivalry pattern as well (e.g., Yu and Blake, 1992). Subsequent studies have used BR to investigate dominance between faces expressing different emotions (Alpers and Gerdes, 2007; Yoon et al., 2009) and found that emotional faces dominate over neutral faces. In an fMRI study, Tong et al. (1998) showed that the fusiform face area (FFA), a category-specific brain area for processing faces (Haxby et al., 1994), is activated during rivalry with the same strength as when faces are presented in a non-rivalrous condition.

fMRI studies using BR in which emotional faces were contrasted showed that suppressed images of fearful faces still activated the amygdala (Pasley et al., 2004; Williams et al., 2004). When visual signals are prevented from being processed by the cortical mechanisms via the striate cortex, the colliculo-thalamo-amygdala pathway could still process the stimulus (de Gelder et al., 1999; Van den Stock et al., 2011). This is in line with functional magnetic resonance imaging studies suggesting differential amygdala responses to fearful faces as compared to neutral faces when the participants were not aware of the stimuli (Morris et al., 1998b; Whalen et al., 1998). However, to date no BR experiments have been conducted using bodily expressions or comparing body and face stimuli.

We performed two behavioral experiments addressing relative processing sensitivity to facial and bodily expressions and investigated how specific emotions modulate this sensitivity. First, we performed an experiment involving the rivaling of bodies and faces with fearful, angry, and neutral expressions. We always presented bodies in one eye and faces in the other and asked participants to report what they perceived while stimuli were presented simultaneously for 60 s. In line with BR studies using facial expressions (Pasley et al., 2004; Williams et al., 2004; Alpers and Gerdes, 2007; Yoon et al., 2009) we expected that emotional bodily expressions would dominate over neutral expressions. The first experiment showed a special role of fearful expressions and therefore we isolated this condition in a second, more sensitive, experiment. In this second experiment we used the rivalry pattern resulting from the contrasting of neutral facial and bodily expressions as baseline performance and created two conditions in which fearful bodily expressions were contrasted with neutral facial expressions and fearful facial expressions with neutral bodily expressions. We expected that the perceptual dominance of the stimulus would be a function of the stimulus expressing fear.

Experiment 1

In this first experiment we contrasted bodily and facial expressions directly in a BR design in which the emotion of the faces and bodies was fearful, angry, or neutral.

Materials and methods

Participants

Twenty-two undergraduate students of Tilburg University participated in exchange for course credits or a monetary reward (19 women, 3 men, M age = 19.8 years, SD = 1.2). All participants had normal or corrected-to-normal vision and gave informed consent according to the Declaration of Helsinki. The protocol was approved by the local Ethics Committee Faculteit Sociale Wetenschappen of Tilburg University.

Stimuli and procedure

Photos of two male actors expressing fear and anger, and of the same actors performing a neutral action (hair combing), were selected from a well-validated photoset as body stimuli (for details see Stienen and de Gelder, 2011). All body pictures had the face covered with an opaque oval patch to prevent the facial expression from influencing the rivalry process. The color of the patch was the average gray value of the neutral and emotional faces within the same actor. The face stimuli of two actors expressing fear and anger, and of the same actors showing a neutral expression, were taken from the MacArthur set (http://www.macbrain.org/resources.htm). A total of six pictures of bodily expressions and six pictures of facial expressions were selected for use in the present study.

All stimuli were fitted into an area with a white background of 3.00 × 4.83° enclosed by a black frame with a border thickness of 0.29°. The function of the black frame was to promote stable fusion. A white fixation dot was pasted on each of the stimuli. Because we used a method comparable to the mirror stereoscope, the faces and bodies were placed 11.89° left and right of the center. Pairing the face and body stimuli resulted in 18 unique displays (3 bodily expressions × 3 facial expressions × 2 identities).

One experimental run consisted of 36 trials because the displays were counterbalanced to control for eye dominance. The trials were randomly presented. The stimuli were presented on a 19″ PC screen with the refresh rate set to 60 Hz. We used Presentation 11.0 to run the experiment.
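The trial counts above can be reproduced with a short sketch. It is only an illustration of the arithmetic (18 displays, doubled by the eye counterbalancing, gives 36 trials per run) and not the Presentation script used in the study. The identity and eye-layout labels are hypothetical, and pairing body and face by a shared identity index is an assumption.

```python
from itertools import product
import random

EXPRESSIONS = ("fearful", "angry", "neutral")
# Hypothetical labels: the text only states that there were two identities.
IDENTITIES = ("identity_1", "identity_2")
# Hypothetical labels for the two eye assignments used for counterbalancing.
EYE_LAYOUTS = ("body_left_face_right", "face_left_body_right")


def build_run(seed=None):
    """Return the unique displays and one randomized, counterbalanced run."""
    # 3 bodily expressions x 3 facial expressions x 2 identities = 18 displays
    displays = list(product(EXPRESSIONS, EXPRESSIONS, IDENTITIES))
    # Each display is shown once per eye layout: 18 x 2 = 36 trials
    trials = [display + (layout,) for display in displays for layout in EYE_LAYOUTS]
    random.Random(seed).shuffle(trials)
    return displays, trials


displays, trials = build_run(seed=0)
print(len(displays), len(trials))  # prints "18 36"
```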

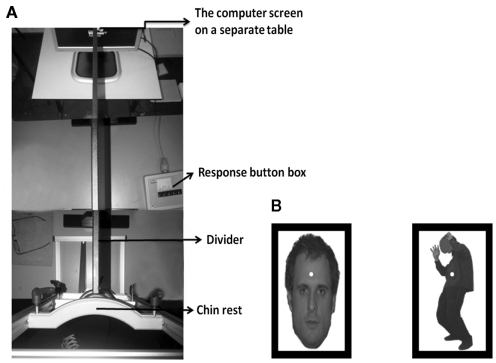

The heads of the participants were stabilized using a chin and head rest. The fMRI-compatible BR method we used is described in detail by Schurger (2009) but was adapted here for use outside the scanner. A black 70 cm wooden divider was placed between the screen and the midpoint between the eyes to keep the visual signals to each eye separated. The total distance between the screen and the eyes was 77 cm. Participants wore glasses in which two wedge-shaped prism lenses of six DVA were fitted using gum. The prisms adjusted the angle at which light from the screen entered each eye, ensuring that the laterally presented stimuli fell close to the participants’ fovea. Besides being low-cost and usable both inside and outside the MRI scanner, this method produces no crosstalk between the eyes (Schurger, 2009), unlike, for example, red–green filter glasses. See Figure 1 for a picture of the experimental setup.

Figure 1.

(A) Experimental setup. (B) Example of a stimulus display. We always presented bodies in one eye and faces in the other.

Before each trial two empty frames were shown with a black fixation dot in the middle. The participants were instructed to push and hold a button labeled “M” (Dutch for mixture = mengsel) on a response box with the middle finger to initiate a trial, but only if they saw one dot and one frame. This ensured that the participants fused the two black frames throughout the experiment. Subsequently, a facial expression and a bodily expression were presented for 60 s. For an example display see Figure 1. Whenever they saw a face or a body in isolation they were instructed to release the “M” button and push and hold the button corresponding to their percept with either their index or ring finger: the “G” button (Dutch for face = gezicht) if they saw a face or the “L” button (Dutch for body = lichaam) if they saw a body. The assignment of the “G” and “L” buttons to fingers was counterbalanced across participants, who always used their right hand. When seeing both stimuli they were told to push and hold the button labeled “M” again. The program registered the times at which each button was pressed and released. The participants were naïve regarding the presentation techniques, and during the experiment no reference to the emotions was made.
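As an illustration of how registered press and release times translate into viewing times per percept, the following minimal sketch sums the hold durations per button. The event format (button label, press time, release time, in seconds) is an assumption made for this example; the actual log format of the presentation software is not described in the text.

```python
from collections import defaultdict


def cumulative_viewing_time(events):
    """Sum hold durations per button.

    `events` is an assumed log format: (button, press_time, release_time),
    with times in seconds from trial onset.
    """
    totals = defaultdict(float)
    for button, pressed, released in events:
        if released < pressed:
            raise ValueError("release time precedes press time")
        totals[button] += released - pressed
    return dict(totals)


# Made-up example trial: mixture, then face, then mixture, then body.
example = [("M", 0.0, 5.0), ("G", 5.0, 12.0), ("M", 12.0, 14.0), ("L", 14.0, 20.0)]
print(cumulative_viewing_time(example))
```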

Prior to the experimental sessions the participants performed one practice session consisting of two trials. This session used male identities from the same stimulus sets that differed from the ones used in the main experiment. When the participants reported full understanding of the procedures the main experiment started. Two runs were presented, adding up to a total of 72 trials. After every 10 trials there was a short break. Finally, a short validation was performed in a separate session after a 5 min break. All stimuli were presented twice for 2 s, adding up to a total of 24 trials (2 identities × 3 expressions × 2 face/body × 2 runs). Participants were instructed to categorize the bodily and facial expressions as fearful, angry, or neutral using three buttons labeled “A” for fearful (Dutch = angst), “B” for angry (Dutch = boos), and “N” for neutral (Dutch = neutraal).

Results and discussion

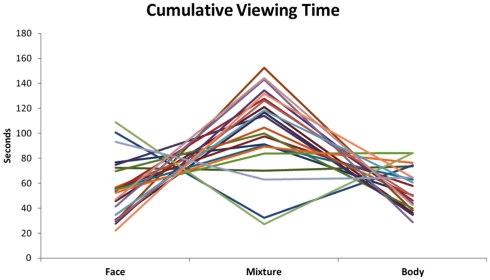

Cumulative viewing times for faces, bodies, and mixed percepts were calculated per participant irrespective of experimental condition. Two participants whose cumulative viewing time for mixed percepts was more than two SD below the group average (group mean = 104 s, SD = 34 s) were identified as outliers and excluded from analysis. See Figure 2 for the individual data.
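With the reported group mean of 104 s and SD of 34 s, the two-SD cutoff corresponds to 104 − 2 × 34 = 36 s. A minimal sketch of such an exclusion rule is given below. Whether the sample or the population SD was used is not stated in the text, so the use of the sample SD here is an assumption, and the data in the example are made up.

```python
from statistics import mean, stdev


def low_outliers(values, n_sd=2.0):
    """Return indices of values more than `n_sd` SDs below the group mean.

    Assumption: the sample SD (n - 1 denominator) is used.
    """
    cutoff = mean(values) - n_sd * stdev(values)
    return [i for i, v in enumerate(values) if v < cutoff]


# Made-up cumulative mixed-percept times (s) for eleven participants.
mixed_times = [100.0] * 10 + [10.0]
print(low_outliers(mixed_times))  # prints "[10]"
```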

Figure 2.

Cumulative viewing time per face, body, and mixture. The two subjects with the lowest cumulative viewing time of mixtures were removed from analysis.

Wilcoxon Signed Ranks Tests revealed that the cumulative viewing times of faces (M = 51 s, SD = 24 s) and bodies (M = 52 s, SD = 17 s) did not differ (Z = −0.075, p = 0.940), while the cumulative viewing time was longer for mixed percepts (M = 111 s, SD = 34 s) than for bodies and faces (respectively Z = −3.696, p < 0.001 and Z = −3.696, p < 0.001).

Following Levelt (1965), predominance ratios were calculated. The total time participants indicated seeing the face was subtracted from the total time participants indicated seeing the body. This value was divided by the total amount of time the body and the face were seen. A predominance ratio of zero means that the body and the face were perceived for equal amounts of time. A positive value means that the conscious percept of the body predominated over the face, while a negative value means that the conscious percept of the face predominated over the body.
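The predominance ratio described above amounts to (body time − face time) / (body time + face time). A minimal sketch of this computation follows; the function name and the example values are chosen here for illustration only.

```python
def predominance_ratio(body_time, face_time):
    """(body - face) / (body + face): > 0 body predominates, < 0 face predominates."""
    total = body_time + face_time
    if total == 0:
        raise ValueError("no exclusive body or face percept was reported")
    return (body_time - face_time) / total


# Made-up viewing times in seconds.
print(predominance_ratio(30.0, 10.0))  # prints "0.5"  (body predominates)
print(predominance_ratio(20.0, 20.0))  # prints "0.0"  (no predominance)
```

Time spent reporting a mixed percept does not enter the ratio, which therefore ranges from −1 (only the face was seen) to +1 (only the body was seen).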

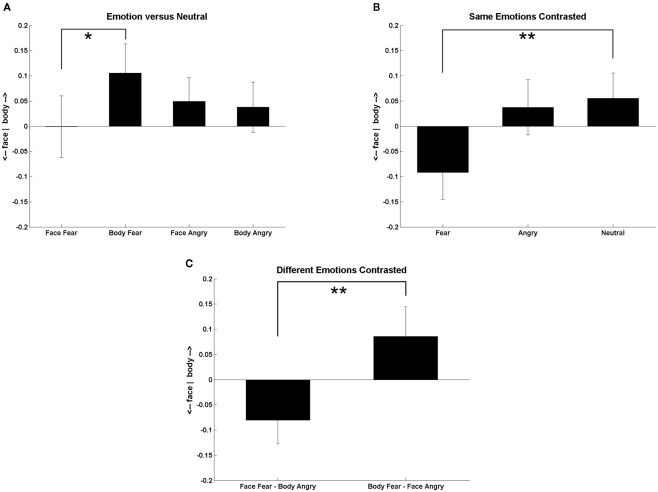

A 3 (bodily expressions) × 3 (facial expressions) repeated-measures GLM revealed a significant interaction between the bodily expressions and the facial expressions on the predominance ratios [F(4,76) = 3.877, p = 0.006] as well as a main effect of facial expressions [F(2,38) = 24.718, p < 0.001]. Figure 3 shows the predominance ratios when the bodily or the facial expression was emotional and the other was neutral (Figure 3A), when the facial and bodily expressions were the same (Figure 3B), and when the facial and bodily expressions both differed (Figure 3C). A difference was deemed significant when the p-value was lower than 0.005 (Bonferroni correction: α level divided by 10 comparisons).

Figure 3.

A positive value means that the body predominated over the face and a negative value that the face predominated over the body. (A) Predominance ratios when the bodily or facial expression was emotional and the other was neutral. (B) Predominance ratios when the facial and bodily expressions were the same. (C) Predominance ratios when the facial and bodily expressions both differed. Error bars represent SEM. One asterisk = p < 0.01, double asterisks = p < 0.005.

Figure 3A shows that when the body expressed fear and the face was neutral the participants reported seeing the body more often than when the face was fearful and the body was neutral [t(19) = 2.903, p = 0.009], but this effect did not survive the Bonferroni correction. The predominance ratios were equal when the bodily or facial expression was angry. Figure 3B shows that when both stimulus classes expressed fear the face dominated over the body compared to when they were both neutral [t(19) = 3.471, p = 0.003]. Figure 3C shows the case in which the expressions were both emotional but different (fearful and angry). A fearful body rivaling with an angry face triggered a stronger conscious percept of the body, whereas a fearful face rivaling with an angry body led the conscious percept of the face to predominate [t(19) = 4.586, p < 0.001]. None of the conditions differed from zero.
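The Bonferroni decision rule applied to these comparisons can be sketched as follows. The uncorrected α of 0.05 is an assumption inferred from the stated threshold of 0.005 for 10 comparisons, and the value 0.001 is used only as an upper bound for the comparison reported as p < 0.001.

```python
def bonferroni_threshold(alpha, n_comparisons):
    """Per-comparison significance threshold under Bonferroni correction."""
    return alpha / n_comparisons


def survives_correction(p_value, alpha=0.05, n_comparisons=10):
    """True if the p-value is below the corrected threshold (alpha assumed 0.05)."""
    return p_value < bonferroni_threshold(alpha, n_comparisons)


# p-values reported for the comparisons in Figures 3A, 3B, and 3C.
for label, p in [("3A", 0.009), ("3B", 0.003), ("3C", 0.001)]:
    print(label, survives_correction(p))
```

Under these assumptions only the comparison in Figure 3A falls short of the corrected threshold, matching the text.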

To test the main effect of facial expressions pairwise Bonferroni corrected comparisons were performed between the predominance ratios irrespective of bodily expressions. When the facial expression was fearful the face dominated over the body more than when the facial expression was angry or neutral (p < 0.001).

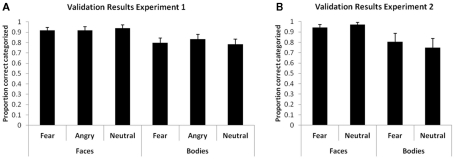

A 2 (face/body) × 3 (fear/angry/neutral) repeated-measures GLM on the correct categorizations in the validation task revealed a main effect of stimulus class [F(1,17) = 14.806, p = 0.001]. Facial expressions were categorized better in general, regardless of expression. Because the results in the main experiment are specific to fearful expressions, a general advantage in the recognition of faces cannot by itself explain this specific effect. See Figure 4A for the validation results.

Figure 4.

Proportion correct categorizations in the validation session of experiment 1 (A) and experiment 2 (B). Error bars represent SEM.

In line with previous reports on the special role of fearful expressions (Öhman, 2002, 2005; Stienen and de Gelder, 2011) the main finding of this first experiment is that the stimulus class carrying the fearful expression suppresses the percept of the competing stimulus more than angry and neutral expressions do. In addition, participants seemed to be equally sensitive in perceiving the face and the body when the emotional expression was neutral or angry.

Past research has focused on, for example, the perception of facial or bodily expressions in isolation, but has never compared these two important social signals together in one display. Although Meeren et al. (2005) and Van den Stock et al. (2007) showed the influence of unattended bodily expressions on task-relevant facial expressions, this study revealed how the two stimuli compete for visual awareness when they are both task relevant, as is the case in natural situations.

There was no indication in this experiment that neutral or angry expressions modulated the rivalry pattern, but there were clues indicating that fearful expressions modulated the resulting dominant percept. However, none of the conditions deviated significantly from zero, the value that indicates an equal ratio between reporting the face and reporting the body. To create a more sensitive design we repeated the first experiment, but this time with only three conditions: one baseline condition in which neutral facial and bodily expressions were contrasted and two experimental conditions in which either the face or the body was expressing fear. By reducing the number of conditions we could increase the number of trials per condition.

Experiment 2

In this experiment a baseline was created by contrasting a neutral facial expression with a neutral bodily expression. The resulting perceptual alternation was compared with the alternation obtained when either the bodily or the facial expression was fearful while the other was neutral. Although these conditions were present in the first experiment as well, we wanted to test them in isolation. Based on our first experiment, we hypothesized that either the body or the face would dominate depending on which was expressing fear.

Materials and methods

Participants

Nineteen new undergraduate students of Tilburg University who had not taken part in the first experiment participated in exchange for course credits or a monetary reward (15 women, 4 men, M age = 19.9 years, SD = 1.6). All participants had normal or corrected-to-normal vision and gave informed consent according to the Declaration of Helsinki. The protocol was approved by the local Ethics Committee Faculteit Sociale Wetenschappen of Tilburg University.

Stimuli and procedure

The stimuli were the same as in the first experiment, but this time only the bodily and facial neutral and fearful expressions were used. There were three conditions: a neutral body and face (baseline), a fearful body and a neutral face (fearful body), and a neutral body and a fearful face (fearful face). In total there were 12 different displays (2 body/face × 3 baseline/fearful body/fearful face × 2 identities). One complete run consisted of 24 trials because the displays were counterbalanced to control for eye dominance. A total of two runs were presented adding up to a total of 48 trials. The rest of the procedure remained the same as in Experiment 1.

Results and discussion

Wilcoxon Signed Ranks Tests revealed that the cumulative viewing time of faces (M = 11 s, SD = 6 s) was longer than that of bodies (M = 7 s, SD = 3 s), Z = −3.622, p < 0.001. The cumulative viewing time was longer for mixed percepts (M = 23 s, SD = 8 s) than for bodies and faces (respectively Z = −3.702, p < 0.001 and Z = −2.696, p = 0.007).

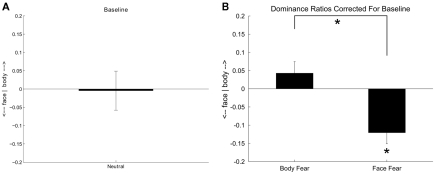

Predominance ratios for all three conditions (baseline, fearful body, and fearful face) were calculated in the same manner as in the first experiment. The predominance ratio of the baseline condition was then subtracted from the predominance ratios of the fearful body and fearful face conditions.
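The baseline subtraction can be sketched as follows for a single participant. The condition keys and the numerical values are hypothetical and serve only to illustrate the computation.

```python
def baseline_corrected(ratios, baseline_key="baseline"):
    """Subtract the baseline predominance ratio from every other condition."""
    baseline = ratios[baseline_key]
    return {
        condition: value - baseline
        for condition, value in ratios.items()
        if condition != baseline_key
    }


# Made-up predominance ratios for one participant.
participant = {"baseline": 0.25, "fearful_body": 0.5, "fearful_face": -0.25}
print(baseline_corrected(participant))
# prints "{'fearful_body': 0.25, 'fearful_face': -0.5}"
```

After this correction a value of zero means that the fearful expression did not shift the rivalry pattern relative to the neutral face versus neutral body baseline.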

Figure 5A shows the baseline condition where neutral bodies were contrasted with neutral faces. A one sample t-test showed that the predominance ratio was not significantly different from zero which means that participants equally perceived the body or the face when the expressions were neutral [t(18) = 0.085, p = 0.933]. Figure 5B shows the modulation of the fearful expression when either the neutral body or the neutral face was substituted by respectively a fearful body or a fearful face. As indicated by a paired t-test a fearful body triggered a more dominant body percept and a fearful face triggered a more dominant face percept [t(18) = −4.60, p < 0.001]. When comparing directly to the baseline only fearful faces triggered a more dominant face percept [t(18) = 3.975, p = 0.001].

Figure 5.

A positive value indicates that the body predominated over the face and a negative value that the face predominated over the body. (A) Predominance ratio when a neutral bodily expression is contrasted with a neutral facial expression. (B) Predominance ratios when a fearful body is contrasted with a neutral face and when a fearful face is contrasted with a neutral body. Error bars represent SEM. Asterisk = p < 0.01.

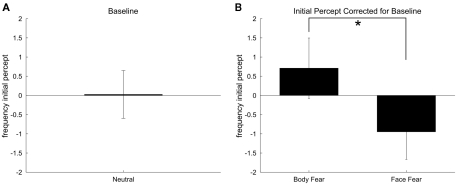

A different way of analyzing the results is to consider the participants’ initial percept per condition (Berry, 1969; Long and Olszweski, 1999; Yoon et al., 2009). The frequency of reporting a face or a body as the initial percept when a trial started was indexed. Subsequently the data were treated in the same way as the predominance ratios.

As Figure 6 shows, these results follow approximately the same pattern. When both the bodily and facial expressions were neutral, bodies and faces were reported equally often as the initial percept [t(18) = −0.042, p = 0.967]. Figure 6B shows that a fearful body more often triggered a body as the initial percept and a fearful face more often triggered a face as the initial percept [t(18) = −4.60, p < 0.001]. Neither a fearful body nor a fearful face triggered more initial percepts of its own stimulus class when directly compared to baseline performance.

Figure 6.

A positive value means that the body is reported as the initial percept more often than the face, a negative value that the face is reported as the initial percept more often than the body. (A) Initial percept ratio when a neutral bodily expression is contrasted with a neutral facial expression. (B) Initial percept ratios when a fearful body is contrasted with a neutral face and when a fearful face is contrasted with a neutral body. Error bars represent SEM. Asterisk = p < 0.05.

See Figure 4B for the validation results. A 2 (face/body) × 2 (fear/neutral) repeated-measures GLM revealed a main effect of stimulus class on the validation scores [F(1,17) = 11.311, p = 0.004]. Facial expressions were again categorized better in general, regardless of emotional expression.

This second experiment shows that indeed the stimulus class expressing fear leads to perceptual dominance of the stimulus class carrying this information, although the effect seems stronger for the fearful faces.

General Discussion

Taken together, our experiments show that there is no clear bias toward either the face or the body when both have either a neutral or an angry expression. When both the face and the body were expressing fear, participants perceived the face more than when both categories were neutral. As the results of the more sensitive second experiment in particular showed, the perceptual dominance in favor of either the face or the body is a function of the stimulus class expressing fear, with a stronger effect for fearful faces. In the second experiment the faces were perceived longer than bodies. Finally, the validation results of both experiments show that facial expressions were recognized better.

When no emotion is expressed, the reported conscious percepts of the body and the face were equal, indicating that in this case we have equal processing sensitivity to either stimulus class. Only when the stimulus conveys signals of fear is the perceptual alternation influenced, through suppression of non-fearful expressions. This is in line with Öhman (2002, 2005), who suggested that fear stimuli automatically activate fear responses and capture attention, as shown in visual search tasks where participants had to detect spiders, snakes, or schematic faces among neutral distracters (Öhman et al., 2001a,b), and with real faces when the emotion was not task relevant, as in our study (Hodsoll et al., 2011), although this is not always found in other studies (e.g., Calvo and Nummenmaa, 2008). It is known that both voluntary (endogenous) and involuntary (exogenous) attention can modulate the rivalry pattern (Blake and Logothetis, 2002; Tong et al., 2006). However, the relative dominance of the body percept when the body is fearful and the face is neutral, in contrast to when the face is fearful and the body is neutral, is also consistent with a recent study by Pichon et al. (2011) showing that threatening bodily actions evoked constant activity in a network underlying preparation of automatic reflexive defensive behavior (periaqueductal gray, hypothalamus, and premotor cortex) that was independent of the level of attention and was not influenced by the task the subjects were fully engaged in. The fact that bodies expressing fear dominate the visual percept is in line with our recent finding that the detection of fearful bodies is independent of visual awareness (Stienen and de Gelder, 2011).

The dominant perception of the faces and bodies expressing fear was mostly relative, but there was one case, in the second experiment, in which the conscious percept of the fearful face dominated in absolute terms. Although the recognition of faces was better regardless of expression in both experiments, this alone cannot explain the specific effect of fearful faces on the rivalry pattern. The fearful face deviated from zero in the second experiment and not in the first, probably for two reasons. First, there were fewer conditions and more trials, increasing the signal-to-noise ratio. Second, fearful expressions are likely to pop out more among neutral expressions when angry expressions are not present within the same experiment, although, as already mentioned, this pop-out effect for fearful stimuli is not always found in visual search tasks using real faces.

Furthermore, it is possible that the relative proximity to the viewer of the faces in contrast with bodies could explain why the face was more dominantly perceived than baseline and bodies were not. As suggested earlier (de Gelder, 2006, 2009; Van den Stock et al., 2007) the preferential processing of affective signals from the body and/or face may depend on a number of factors and one may be the distance at which the observer finds himself from the stimulus.

The special status of fear stimuli is still a matter of debate, specifically in relation to the role of the amygdala (Pessoa, 2005; Duncan and Barrett, 2007). Theoretical models have been advanced arguing that partly separate and specialized pathways may sustain conscious and non-conscious emotional perception (LeDoux, 1996; Morris et al., 1998a,b; Panksepp, 2004; Tamietto et al., 2009; Tamietto and de Gelder, 2010). Our results are in line with Pasley et al. (2004) and Williams et al. (2004) showing amygdala activity for suppressed emotional faces. This hints at the possibility that the suppressed fearful faces are being processed through the colliculo-thalamo-amygdala pathway.

The underlying process may play an important role in everyday vision by providing us with information about important affective signals in our surroundings. Further research using neurological measures will give us insight into whether the relevant pathways are indeed mediating the detection of fearful signals independently of visual awareness. In addition, future studies using a different stimulus set, or broadening the set to include other emotions, would be of great value for validating the present findings and investigating whether they generalize to other emotions.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Beatrice de Gelder was funded by NWO and by Tango. The project Tango acknowledges the financial support of the Future and Emerging Technologies (FET) programme within the Seventh Framework Programme for Research of the European Commission, under FET-Open grant number: 249858. Bernard M. C. Stienen was funded by EU project COBOL (FDP6-NEST-043403).

References

- Alpers G. W., Gerdes A. B. (2007). Here is looking at you: emotional faces predominate in binocular rivalry. Emotion 7, 495–506 10.1037/1528-3542.7.3.495 [DOI] [PubMed] [Google Scholar]

- Berry J. W. (1969). Ecology and socialization as factors in figural assimilation and the resolution of binocular rivalry. Int. J. Psychol. 54, 331–334 [Google Scholar]

- Blake R., Logothetis N. K. (2002). Visual competition. Nat. Rev. Neurosci. 3, 13–21 10.1038/nrn701 [DOI] [PubMed] [Google Scholar]

- Calvo M. G., Nummenmaa L. (2008). Detection of emotional faces: salient physical features guide effective visual search. J. Exp. Psychol. Gen. 137, 471–494 10.1037/a0012771 [DOI] [PubMed] [Google Scholar]

- de Gelder B. (2006). Towards the neurobiology of emotional body language. Nat. Rev. Neurosci. 7, 242–249 10.1038/nrg1852 [DOI] [PubMed] [Google Scholar]

- de Gelder B. (2009). Why bodies? Twelve reasons for including bodily expressions in affective neuroscience. Philos. Trans. R. Soc. Lond. B Biol. Sci. 364, 3475–3484 10.1098/rstb.2009.0190 [DOI] [PMC free article] [PubMed] [Google Scholar]

- de Gelder B., Vroomen J., Pourtois G., Weiskrantz L. (1999). Non-conscious recognition of affect in the absence of striate cortex. Neuroreport 10, 3759–3763 10.1097/00001756-199912160-00007 [DOI] [PubMed] [Google Scholar]

- Dimberg U., Thunberg M., Elmehed K. (2000). Unconscious facial reactions to emotional facial expressions. Psychol. Sci. 11, 86–89 10.1111/1467-9280.00221 [DOI] [PubMed] [Google Scholar]

- Duncan S., Barrett L. F. (2007). The role of the amygdala in visual awareness. Trends Cogn. Sci. (Regul. Ed.) 11, 190–192 10.1016/j.tics.2007.01.007 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Esteves F., Dimberg U., Öhman A. (1994). Automatically elicited fear: conditioned skin conductance responses to masked facial expressions. Cogn. Emot. 9, 99–108 [Google Scholar]

- Haxby J. V., Horwitz B., Ungerleider L. G., Maisog J. M., Pietrini P., Grady C. L. (1994). The functional organization of human extrastriate cortex: a PET-rCBF study of selective attention to faces and locations. J. Neurosci. 14, 6336–6353 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hodsoll S., Viding E., Lavie N. (2011). Attentional capture by irrelevant emotional distractor faces. Emotion 11, 346–353 10.1037/a0022771 [DOI] [PubMed] [Google Scholar]

- Jolij J., Lamme V. A. (2005). Repression of unconscious information by conscious processing: evidence from affective blindsight induced by transcranial magnetic stimulation. Proc. Natl. Acad. Sci. U.S.A. 102, 10747–10751 10.1073/pnas.0500834102 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kret M., de Gelder B. (2010). Social context influences recognition of bodily expressions. Exp. Brain Res. 203, 169–180 10.1007/s00221-010-2220-8 [DOI] [PMC free article] [PubMed] [Google Scholar]

- LeDoux J. E. (1996). The Emotional Brain: The Mysterious Underpinnings of Emotional Life. New York, NY: Simon and Schuster

- Levelt W. J. (1965). Binocular brightness averaging and contour information. Br. J. Psychol. 56, 1–13 10.1111/j.2044-8295.1965.tb00939.x

- Long G. M., Olszweski A. D. (1999). To reverse or not to reverse: when is an ambiguous figure not ambiguous? Am. J. Psychol. 112, 41–71 10.2307/1423624

- McHugh J. E., McDonnell R., O’Sullivan C., Newell F. N. (2011). Perceiving emotion in crowds: the role of dynamic body postures on the perception of emotion in crowded scenes. Exp. Brain Res. 204, 361–372 10.1007/s00221-009-2037-5

- Meeren H. K., Van Heijnsbergen C. C., de Gelder B. (2005). Rapid perceptual integration of facial expression and emotional body language. Proc. Natl. Acad. Sci. U.S.A. 102, 16518–16523 10.1073/pnas.0507650102

- Morris J. S., Friston K. J., Buchel C., Frith C. D., Young A. W., Calder A. J., Dolan R. J. (1998a). A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain 121(Pt 1), 47–57 10.1093/brain/121.1.47

- Morris J. S., Öhman A., Dolan R. J. (1998b). Conscious and unconscious emotional learning in the human amygdala. Nature 393, 467–470 10.1038/30976

- Öhman A. (2002). Automaticity and the amygdala: nonconscious responses to emotional faces. Curr. Dir. Psychol. Sci. 11, 62–66 10.1111/1467-8721.00169

- Öhman A. (2005). The role of the amygdala in human fear: automatic detection of threat. Psychoneuroendocrinology 30, 953–958 10.1016/j.psyneuen.2005.03.019

- Öhman A., Flykt A., Esteves F. (2001a). Emotion drives attention: detecting the snake in the grass. J. Exp. Psychol. Gen. 130, 466–478 10.1037/0096-3445.130.3.466

- Öhman A., Lundqvist D., Esteves F. (2001b). The face in the crowd revisited: a threat advantage with schematic stimuli. J. Pers. Soc. Psychol. 80, 381–396 10.1037/0022-3514.80.3.381

- Panksepp J. (2004). Textbook of Biological Psychiatry. Hoboken, NJ: Wiley-Liss

- Pasley B. N., Mayes L. C., Schultz R. T. (2004). Subcortical discrimination of unperceived objects during binocular rivalry. Neuron 42, 163–172 10.1016/S0896-6273(04)00155-2

- Pessoa L. (2005). To what extent are emotional visual stimuli processed without attention and awareness? Curr. Opin. Neurobiol. 15, 188–196 10.1016/j.conb.2005.03.002

- Pichon S., de Gelder B., Grezes J. (2011). Threat prompts defensive brain responses independently of attentional control. Cereb. Cortex 10.1093/cercor/bhr060

- Schurger A. (2009). A very inexpensive MRI-compatible method for dichoptic visual stimulation. J. Neurosci. Methods 177, 199–202 10.1016/j.jneumeth.2008.09.028

- Stienen B. M. C., de Gelder B. (2011). Fear detection and visual awareness in perceiving bodily expressions. Emotion 11, 1182–1189 10.1037/a0024032

- Tamietto M., Castelli L., Vighetti S., Perozzo P., Geminiani G., Weiskrantz L., de Gelder B. (2009). Unseen facial and bodily expressions trigger fast emotional reactions. Proc. Natl. Acad. Sci. U.S.A. 106, 17661–17666 10.1073/pnas.0908994106

- Tamietto M., de Gelder B. (2010). Neural bases of the non-conscious perception of emotional signals. Nat. Rev. Neurosci. 11, 697–709 10.1038/nrg2844

- Tong F., Meng M., Blake R. (2006). Neural bases of binocular rivalry. Trends Cogn. Sci. (Regul. Ed.) 10, 502–511 10.1016/j.tics.2006.09.003

- Tong F., Nakayama K., Vaughan J. T., Kanwisher N. (1998). Binocular rivalry and visual awareness in human extrastriate cortex. Neuron 21, 753–759 10.1016/S0896-6273(00)80592-9

- Van den Stock J., Righart R., de Gelder B. (2007). Body expressions influence recognition of emotions in the face and voice. Emotion 7, 487–494 10.1037/1528-3542.7.3.487

- Van den Stock J., Tamietto M., Sorger B., Pichon S., de Gelder B. (2011). Cortico-subcortical visual, somatosensory and motor activations for perceiving dynamic whole-body emotional expressions with and without V1. Proc. Natl. Acad. Sci. U.S.A. 108, 16188–16193 10.1073/pnas.1107214108

- Whalen P. J., Rauch S. L., Etcoff N. L., McInerney S. C., Lee M. B., Jenike M. A. (1998). Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. J. Neurosci. 18, 411–418

- Williams M. A., Morris A. P., McGlone F., Abbott D. F., Mattingley J. B. (2004). Amygdala responses to fearful and happy facial expressions under conditions of binocular suppression. J. Neurosci. 24, 2898–2904 10.1523/JNEUROSCI.2716-04.2004

- Yoon K. L., Hong S. W., Joormann J., Kang P. (2009). Perception of facial expressions of emotion during binocular rivalry. Emotion 9, 172–182 10.1037/a0014714

- Yu K., Blake R. (1992). Do recognizable figures enjoy an advantage in binocular rivalry? J. Exp. Psychol. Hum. Percept. Perform. 18, 1158–1173 10.1037/0096-1523.18.4.1158

- Zhang P., Jamison K., Engel S., He B., He S. (2011). Binocular rivalry requires visual attention. Neuron 71, 362–369 10.1016/j.neuron.2011.05.035