Abstract

Hypotheses about the emergence of human cognitive abilities postulate strong evolutionary links between language and praxis, including the possibility that language was originally gestural. The present review considers functional and neuroanatomical links between language and praxis in brain-damaged patients with aphasia and/or apraxia. The neural systems supporting these functions are predominantly located in the left hemisphere. There are many parallels between action and language for recognition, imitation and gestural communication, suggesting that they rely partially on large, common networks that are differentially recruited depending on the nature of the task. However, this relationship is not unequivocal: the production and understanding of gestural communication depend on context in apraxic patients and remain to be clarified in aphasic patients. The phonological, semantic and syntactic levels of language seem to share some common cognitive resources with the praxic system. In conclusion, neuropsychological observations neither support nor refute the hypothesis that gestural communication may have constituted an evolutionary link between tool use and language. Rather, they suggest that the complexity of human behaviour is based on large interconnected networks and on the evolution of specific properties within strategic areas of the left cerebral hemisphere.

Keywords: action, language, brain damage, gesture, pantomime, tool use

1. Introduction

Language and complex actions or praxis, including tool use, are cognitive functions that, although present to some degree in many animal species, are uniquely developed in humans. In addition to being distinctive human traits, these two behaviours are mainly controlled by the left cerebral hemisphere in the vast majority of individuals, as demonstrated by neuropsychological observations. This lateralization is reminiscent of the very strong population-level bias for dextrality in the human species, whereby approximately 90 per cent of individuals favour their right hand for fine motor skills [1]. These converging cerebral asymmetries have led researchers to consider the left hemisphere as dominant for language as well as for motor functions [2], and have triggered interest in the potential evolutionary and functional links between manual preference, tool use and language.

The origin of the left hemisphere specialization for language and praxis, including tool use, and its relation to manual preference are still disputed. For example, some argue that dextrality might have emerged first [3], while others propose that it appeared under selective pressure for common handedness, which would have facilitated learning tool use through imitation [4]. Other authors suggest that human dextrality is merely a consequence of the ancient left lateralization of the cerebral control of vocalization, which is seen in many species from birds to mammals. According to this hypothesis, the progressive incorporation of vocalization into an originally gestural language would have led to a left hemispheric specialization for language and motor control [5].

Regardless of its relationship with manual preference, the study of the link between language and tool use is highly relevant to understanding the development of these two unique human abilities and the origins of our species. According to archaeological records, tool use emerged about 2.5 Ma, starting with simple behaviours such as modifying rocks for pounding [6] and then progressing towards the construction of increasingly refined and complex compound tools through cumulative evolution [7]. In parallel, language is thought to have emerged from the social interactions required by the development of human technology, in particular by learning tool-related behaviours through imitation [8]. An increasingly hierarchical organization of language would then have appeared thanks to a pre-existing left hemispheric specialization for hierarchically and sequentially ordered behaviours, initially developed for the manufacture and use of tools [4]. Developmental studies investigating language and object-combination behaviours in young children, as well as work carried out in non-human primates, including apes, suggest that language and tool use do indeed share some common functional and neural foundations, both phylogenetically and ontogenetically during the first years of development [9].

The cerebral basis of tool use in monkeys as well as in humans has been extensively investigated over the past two decades. Iriki et al. [10] first demonstrated that simple tool use, i.e. using a rake to retrieve food placed out of reach, is accompanied in macaques by plastic changes in the sensory responses of neurons in the parietal cortex. This seminal work, together with subsequent studies in monkeys (e.g. [11]), led Frey [12] to propose that simple tool-use behaviours, in which the tool merely constitutes a functional extension of the limb [13,14], rely on experience-dependent changes in areas within the dorsal stream of visual processing [15,16], which is known to be essentially involved in sensory-motor transformations for the control of actions [17]. Recent studies in humans [18] and in monkeys [19] support this hypothesis. In contrast with simple tool use, complex tool use, like most everyday familiar actions, is a uniquely human skill whereby the use of a tool ‘converts the movements of the hands into qualitatively different mechanical actions’ [20]. This ability depends not only on sensory-motor transformations for the control of action, but also on access to acquired semantic knowledge about the tool and its common uses [21]. Complex tool use thus draws upon collaboration between the aforementioned dorsal stream and the ventral visual pathway [12], which is thought to be responsible for object recognition and for the building and storage of semantic knowledge [16]. Accordingly, brain imaging studies of various complex tool-use tasks in healthy subjects show that these behaviours recruit a large distributed network within temporal, parietal and frontal areas, primarily lateralized to the left hemisphere [22,23]. Further evidence for this integration of semantics into the sensory-motor control of action comes from the fact that conceptual knowledge influences the way people spontaneously grasp familiar tools [24]. Importantly, this effect can be disrupted in patients with left- but not right-hemisphere damage [25]. Based on these findings, it has been proposed that the unique human abilities of designing and using complex tools originate from adaptations of sensory-motor networks and their integration with cognitive processes pertaining to semantic knowledge about tools, the agent's intentions and contextual information about the task, most of which are also supported by the left hemisphere [26].

The emergence of language, on the other hand, is often conceived of as depending critically on the receiver's ability to decode the sender's message or intentions, which relies on representations shared by the two [27]. The discovery of mirror neurons in the monkey [28] might have provided the link between action execution and recognition that is necessary for communication in general. Mirror neurons fire not only when the monkey executes specific grasping actions, but also when it perceives the same action being performed by another individual. These neurons have been observed in area F5 of the ventral premotor cortex of macaques as well as in the inferior parietal lobule, where some of them also show sensitivity to the goal of the action, independently of the motor details of its execution [29]. These two brain regions are reciprocally connected and are part of the dorsal visual stream subserving the sensorimotor transformations involved in the control of reaching and grasping actions. Interestingly, in the context of the emergence of language, the putative human homologue of area F5 is the caudal part of the inferior frontal gyrus, which corresponds, on the left side, to Broca's area, known for its involvement in many aspects of language, from phonology to syntax and from production to comprehension [30,31]. In addition, evidence for the existence of a mirror system in humans has been reported [32], providing a possible neural basis for action understanding [33]. This human analogue of the monkey mirror neuron system may also support a variety of complex socio-cognitive phenomena, including language [34], although this view is challenged by recent work [35,36]. Regarding the evolution of language, and following Liberman's proposal [27] mentioned above, the mirror neuron system would thus have allowed the sender's message and intentions to be mapped onto the receiver's own representations, laying the foundations for a primitive gestural form of language [5,37]. If this is the case, then Broca's area as we know it now would have developed ‘atop a mirror neuron system for grasping’ through increasingly complex stages of gesture recognition and imitation [38].

Independently of the potential involvement of a mirror neuron system, the relationship between praxis, gesture and language needs to be examined further in the light of recent neuropsychological data [39]. Lateralization to the left hemisphere seems to be the key phenomenon in the evolution of both language and complex action systems in humans. Indeed, clinical observations gathered for more than a century have demonstrated that a lesion of the left hemisphere may induce a disruption of language (aphasia) [40] and of complex action systems (apraxia) [41]. These disorders are very often associated in brain-damaged patients [42]. Classical neuropsychological analyses rely on the clinical dissociation of the elementary impairments constituting the aphasic and apraxic syndromes and on their comparison with post-mortem neuroanatomy in order to describe brain–behaviour relationships. This classical approach has led to the elaboration of cognitive models of language and action. Neuropsychology has since benefited greatly from progress in brain imaging, which allows precise investigation of the neural bases of higher brain functions and the mechanisms of their dysfunction.

Here, we will first outline the clinical picture and the early interpretations of aphasia and apraxia. In §3, we will present more contemporary theoretical accounts of these disorders, contrasting the early localizationist approaches with current views that these behaviours are supported by widespread, dynamic neural networks. Then, we will examine the link between praxis and language by reviewing the effects of brain lesions on several relevant behaviours, such as action recognition, repetition and imitation, and gestural communication. We will attempt to compare these alterations even though most clinical studies in the literature focus on either aphasia or apraxia and generally use different clinical approaches and theoretical backgrounds. Finally, we will examine the possibility that action and language share common cognitive resources.

2. Clinical description and original accounts of aphasia and apraxia

(a). Early interpretations of aphasia and the concept of brain localization

Language refers to a system of signs (indices, icons, symbols) used to encode and decode information, in which the pairing of a specific sign with an intended meaning is established through social conventions. Language has several aspects (phonological, semantic, syntactic, prosodic and pragmatic) that can be differentially impaired after brain lesions [43]. The phonological level refers to the sounds used in a language. Each language thus has a different phonology, as certain sounds are present in one language but not in another. Semantics refers to the meaning of language, and syntax to the principles and rules for constructing sentences. The phonological, semantic and syntactic aspects of language are to a large extent specific to humans. Prosody refers to the voice modulation that accompanies different emotional content or intentions, and is classically attributed to the right hemisphere [44,45]. Finally, the pragmatic aspect of language refers to the complex combinations of symbols used to transmit complex ideas and involves many other cognitive functions, supported by both hemispheres [46].

Aphasia corresponds to an impairment, following a brain lesion, of phonological, syntactic and/or semantic processing, either in isolation or in combination, and may concern language production, comprehension, or both. Because these three aspects of language are usually supported mainly by the left hemisphere in right-handers [47], aphasia follows left-hemisphere damage in the vast majority of patients [48]. Aphasia is an acquired disorder: the term excludes developmental language disorders in children.

(i). The concept of localization

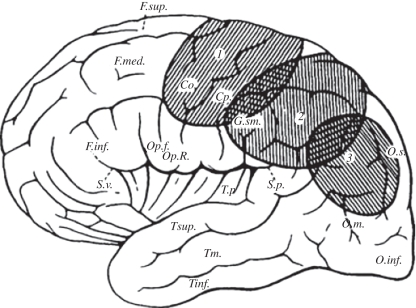

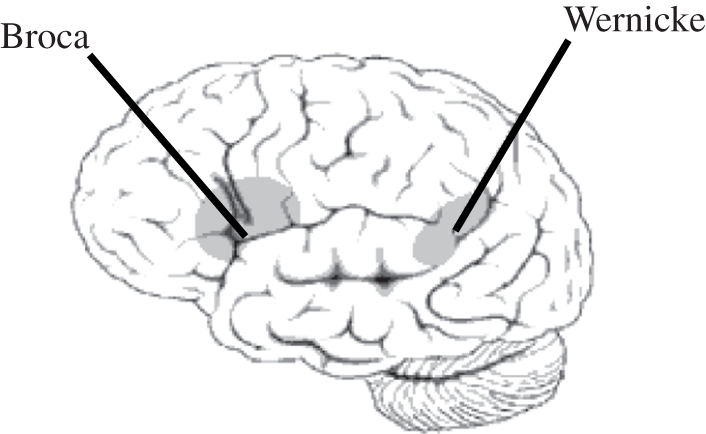

Historically, aphasia was at the centre of the debate between localized and holistic explanations of the psychological functions of the brain. Franz Joseph Gall was the first to propose separate brain localizations for different behaviours. Broca [40] presented the first clinical case in which focal brain damage was associated with altered language production (figure 1). Later, Carl Wernicke described a deficit of language comprehension after a lesion of the posterior section of the superior temporal gyrus. The localization of language functions was then challenged by the holistic theory, which posited a single language function performed by the left hemisphere (review in [49]). Geschwind [50,51] subsequently reconsidered localization and proposed that the impairments resulted from disconnection between brain areas (review in [52]).

Figure 1.

Localization of Broca's and Wernicke's areas.

(ii). Types of aphasia

Since the work of Broca & Wernicke in the nineteenth century, the definition and classification of the different types of aphasia [53] have been refined. Broca's aphasia (also referred to as non-fluent or agrammatic aphasia) is caused by damage to anterior regions of the brain, in particular to Broca's area, corresponding to the caudal part of the left inferior frontal gyrus (Brodmann areas 44 and 45). It is characterized by reduced, non-fluent, agrammatic spontaneous speech with relatively spared comprehension. Fluency impairments include reduced phrase length, altered melody and articulation, reduced word flow or agrammatic sentences. Some over-learned social phrases may paradoxically be preserved and fluent. Comprehension is usually preserved as long as simple, semantically non-reversible sentences are used; however, patients' performance may drop drastically when they are tested with syntactically complex sentences [54–57]. The severity of Broca's aphasia varies greatly. When the vascular damage includes the anterior insula, the linguistic deficit is accompanied by a motor deficit (the so-called apraxia of speech) characterized by disrupted articulation and prosody [58–61]. Wernicke's aphasia (also called fluent aphasia), on the other hand, is caused by damage to the posterior part of the superior temporal gyrus (Brodmann area 22). It is characterized by fluent, paragrammatic but relatively meaningless spontaneous speech produced with appropriate melody and intonation. Spoken language may be limited to jargon with many neologisms, paraphasias or non-words. The comprehension of words, sentences and conversations is relatively poor, and patients are typically not aware of their errors. In the case of large lesions, a combination of Broca's and Wernicke's aphasia may be observed; this severe impairment of language is referred to as global aphasia.

Aphasia can also occur without damage to Broca's or Wernicke's areas. Transcortical aphasias, for instance, are due to lesions surrounding these areas. The resulting language syndromes are similar to Broca's or Wernicke's aphasia, except that word repetition is preserved. By contrast, conduction aphasia is characterized by a predominant impairment of word repetition, frequent phonemic paraphasias with unsuccessful attempts at self-correction (‘conduite d'approche’) and naming difficulties, with relatively well-preserved auditory comprehension and fluent, grammatically correct spontaneous speech. This particular pattern of deficit, which leaves linguistic comprehension and production largely unimpaired, has led Ardila [62] to suggest that conduction aphasia might not be a linguistic deficit per se. This conception is not new. Indeed, Luria [63] proposed interpreting conduction aphasia as a segmental ideomotor apraxia. Along the same lines, Benson et al. [64] reported ideomotor apraxia as a secondary characteristic of conduction aphasia. We will come back to the specific case of conduction aphasia and its relation to apraxia shortly. Although conduction aphasia has traditionally been viewed as a disconnection between Broca's and Wernicke's areas owing to damage to the arcuate fasciculus [52], recent brain imaging studies have underlined the role of the supramarginal gyrus and neighbouring cortical territories in word repetition [65,66]. This region of the brain is also regarded as central in the cerebral organization of praxis.

(b). Apraxia and the localization of higher motor functions

Apraxia is a disorder of learned, purposive skilled movement that is not explained by deficits of the elemental motor or sensory systems, or by general cognitive impairment [67]. The symptoms are bilateral although they are caused by unilateral, predominantly left-sided, brain lesions. As recognized since Liepmann's original description [41], the main symptoms of apraxia are most obvious during the performance of meaningful gestures. The literature on apraxia distinguishes two types of meaningful gestures: ‘transitive’ gestures, which involve a tool or an object (including tool-use pantomimes), and ‘intransitive’ gestures, which are mainly symbolic and communicative (e.g. waving goodbye). Impairments may vary according to the mode of elicitation of the action (i.e. executed either on command or on imitation), and may also affect action recognition. Specific impairments can be selectively observed in the case of focal lesions, but they are more frequently combined. The skilfulness of the patient's movements depends on the conditions and context of their elicitation [68].

(i). Types of apraxia according to Liepmann

Liepmann proposed that performing a gesture relies on the collaborative interaction of central processes. From a visuokinaesthetic image of an intended motor act, a ‘formula’ of movement is derived within the left posterior cortical areas. During gesture performance, this representation is ‘transcoded’ to activate the appropriate muscle groups, supported by ‘kinaesthetic memories’ of learned movements stored in the sensorimotor cortex. This step requires intact connections between the posterior cortical areas and the sensorimotor cortex [41].

Liepmann distinguished three types of apraxia (figure 2). Ideational apraxia corresponds either to a disturbance in the ‘movement formula’ or to a lack of access to this representation. It is characterized by inadequate use of objects or by the wrong arrangement of the various steps of sequential actions. Ideomotor apraxia corresponds to a disconnection between the formula and the ‘kinaesthetic memories’ owing to a lesion within the left posterior parietal lobe. The ‘innervatory patterns’ are preserved, but their activation by the formula is impossible or impaired. It is characterized by movements that are appropriate in kind, whether performed in response to a command or self-generated, but degraded in their execution. Melokinetic apraxia corresponds to a generalized clumsiness owing to a lesion of the kinaesthetic ‘memories’ stored in the sensorimotor cortex.

Figure 2.

Topography of left hemisphere lesions leading to the three types of apraxia according to Liepmann [41]. 1: Melokinetic apraxia; 2: ideokinetic or ideomotor apraxia; 3: ideational apraxia.

(ii). Other neuropsychological models of apraxia

Geschwind [50,51] reconsidered the question of localization and confirmed that, in right-handed persons, the left hemisphere is dominant for complex gestures. However, he focused more on the importance of white matter lesions than on stored representations of gestures. For him, the inability to pantomime the use of an object upon verbal command was instead the consequence of the disconnection of frontal premotor areas from Wernicke's area owing to a lesion of the left arcuate fasciculus. Geschwind [50] proposed the same interpretation and anatomical correlates to account for conduction aphasia, a syndrome that bears many functional and anatomical resemblances to ideomotor apraxia.

More recently and in line with Liepmann's original proposal, Roy & Square [69] proposed a two-system action model: a conceptual system, including semantic knowledge of tools, objects and actions, and a production system representing the sensorimotor knowledge of action as well as perceptuo-motor processes that allow its organization and implementation. The conceptual system defines the action plan according to the knowledge of objects and tools, the context-independent knowledge of action and the knowledge of the arrangement of simple actions in a sequence. The production system includes motor programmes independent of the effectors, which permit the action to be carried out according to the context and needs. Praxic disturbances can thus be interpreted in terms of impairment of the conceptual system (ideational apraxia) and/or the production system of action (ideomotor apraxia). In ideomotor apraxia, knowledge pertaining to objects and tools is preserved and patients can therefore describe and identify actions associated with tools and appreciate their adequacy, while being unable to perform them adequately.

Heilman & Rothi (review in [70,71]) proposed a cognitive model inspired by models of the language system, in order to account for all the dissociations observed in patients depending on which modality is used to elicit gestures (verbal command, presentation of objects, imitation, etc.). This model consists of several modules, which process specific information and are centred on an action semantic system. Sensory information accesses the system via an action input lexicon that contains information about the physical attributes of perceived actions (mainly visual representations). The action semantic system then integrates information transferred from the action input lexicon and is at least partially independent of other forms of semantic knowledge. The action output lexicon, in turn, contains information pertaining to the physical attributes of an action to be performed (mainly kinaesthetic representations). Apart from this indirect lexical route, a direct, non-lexical route, based mainly on visual processing of perceived gestures, controls the imitation of meaningless or unfamiliar gestures, and the two routes may be selectively impaired. Based on the observation that visual recognition of action and movement can be impaired in some apraxic patients with posterior lesions, Heilman et al. [72] proposed that two forms of ideomotor apraxia exist: one caused by posterior lesions, which destroy the areas containing visuokinaesthetic engrams (and thus also impair gesture recognition), and the other caused by more anterior lesions, which may disconnect motor areas from the visuokinaesthetic engrams and therefore preserve gesture recognition.
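The way this modular, dual-route architecture predicts task-specific dissociations can be illustrated with a toy simulation. The Python sketch below is hypothetical and purely illustrative. The module labels and the task list are simplifications chosen for this example, and so is the assumption that a verbal command enters directly at the action semantic system. None of these is Heilman & Rothi's own notation or a published implementation, and other pathways discussed in the literature are omitted. A task is treated as preserved if at least one of its candidate pathways avoids every lesioned module.

```python
"""Toy sketch of a dual-route model of gesture processing, loosely after the
Heilman & Rothi architecture summarized in the text. Module names, tasks and
pathways are illustrative assumptions, not the authors' formalism."""

from typing import Dict, FrozenSet, List, Tuple

# Hypothetical module labels (assumed for illustration only).
INPUT_LEXICON = "action_input_lexicon"    # visual attributes of perceived actions
SEMANTICS = "action_semantic_system"      # meaning of actions
OUTPUT_LEXICON = "action_output_lexicon"  # kinaesthetic attributes of actions to perform
DIRECT_ROUTE = "direct_nonlexical_route"  # visuomotor conversion used for imitation

# Candidate pathways per task: a task is preserved if ANY pathway is fully intact.
TASK_PATHWAYS: Dict[str, List[Tuple[str, ...]]] = {
    # Assumption: verbal input is intact and enters at the action semantic system.
    "pantomime_on_command": [(SEMANTICS, OUTPUT_LEXICON)],
    "recognize_gesture": [(INPUT_LEXICON, SEMANTICS)],
    # Meaningful gestures can be imitated via the lexical route or the direct route.
    "imitate_meaningful": [
        (INPUT_LEXICON, SEMANTICS, OUTPUT_LEXICON),
        (DIRECT_ROUTE,),
    ],
    # Meaningless gestures have no stored entry, so only the direct route applies.
    "imitate_meaningless": [(DIRECT_ROUTE,)],
}


def predicted_performance(lesioned: FrozenSet[str]) -> Dict[str, bool]:
    """Return, for each task, whether some pathway avoids every lesioned module."""
    return {
        task: any(not (set(path) & lesioned) for path in pathways)
        for task, pathways in TASK_PATHWAYS.items()
    }


if __name__ == "__main__":
    # Hypothetical lesion restricted to the direct, non-lexical route.
    print(predicted_performance(frozenset({DIRECT_ROUTE})))
    # {'pantomime_on_command': True, 'recognize_gesture': True, 'imitate_meaningful': True, 'imitate_meaningless': False}
```

Under these assumptions, lesioning only the direct route spares pantomime on command, recognition and imitation of meaningful gestures, but abolishes imitation of meaningless gestures. Lesioning the action input lexicon instead impairs recognition while sparing pantomime on command and, through the direct route, imitation. This mirrors the kind of route-specific dissociation the model was built to capture.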

De Renzi & Lucchelli [73] investigated ideational apraxia with specific tests: multiple-step tasks, and tasks requiring an understanding of tool and/or object properties (tool selection, alternative tool selection, gesture recognition). They found that scores on multiple- and single-tool-use tasks were correlated with each other but not with the results of a test assessing ideomotor apraxia. They concluded that ‘ideational apraxia is an autonomous syndrome, linked to left hemisphere damage and pertaining to the area of semantic memory disorders rather than to that of defective motor control’.

An important characteristic of apraxia is the well-known ‘automatic/voluntary’ dissociation, whereby patients fail to perform adequate gestures on command while their performance of similar self-initiated actions in daily life is preserved, showing that the full context of action is particularly important for the retrieval and execution of adequate gestures. This dissociation has been confirmed experimentally [74]. It is reminiscent of the relative sparing of over-learned social phrases described in non-fluent aphasic patients [53].

Early theories of the cerebral bases of praxis and language have thus focused on localizing different aspects of these faculties to specific areas, interpreting apraxic and aphasic disorders in terms of either damage to one of these areas or disconnection between them. However, these conceptions have failed to account for many disorders exhibited by brain-damaged patients, leading to the emergence of more complex and integrated conceptions of the brain bases of these complex cognitive faculties.

3. Contemporary theories for the cerebral organization of language and praxis

Beyond the neuropsychological approach, more recent work has attempted to explain the different manifestations of apraxia as dysfunctions of the sensorimotor systems for action and object manipulation identified by electrophysiological studies in monkeys [75]. In particular, Buxbaum [76] proposed a model of gesture production aimed at reconciling the classical neuropsychological interpretations of apraxic disorders with this more recent neurophysiological framework (review in [77]). In line with classical neuropsychological models [41,78], this model assumes the existence of gesture engrams, which are conceived of as memory-stored sensorimotor (i.e. non-verbal) representations of familiar gestures, involved in both gesture production and recognition [72]. These engrams are thought to be stored in the left inferior parietal lobule [79], at the interface between the ventral and dorsal streams of visual processing. The evocation of a familiar gesture, for example by verbal command or presentation of the associated tool, would thus activate the appropriate gesture engrams via the lexical semantic system, located primarily in ventral regions. Gesture execution would then be controlled by a dynamic system constituted by the parieto-frontal networks of the dorsal stream. In accordance with this view, patients with damage to the inferior parietal lobule may be able to grasp objects normally on the basis of their physical properties alone (i.e. affordances), while being impaired when asked to grasp the same objects in order to use them, which requires the integration of conceptual knowledge about tools and their function [80,81]. More recently, Frey [26] proposed a more dynamic conception of the role of the left inferior parietal cortex: rather than storing gesture engrams, this region would assemble praxis representations that fit all the constraints imposed by conceptual knowledge about tools and their function, the task context, the agent's intentions, etc.

Recent conceptions of the functional anatomy of language have also largely departed from the classical localizationist views presented in §2, now favouring the idea that language might be organized in networks rather than in specialized brain areas. Indeed, the classical view that the frontal lobe is responsible for speech production and temporal areas for language comprehension could not account for patients presenting, for example, a syndrome of Broca's aphasia without a lesion of Broca's area, or deficits of speech comprehension associated with a lesion in Broca's region [54,55,57]. Furthermore, recent studies in healthy individuals have confirmed the involvement of Broca's area in language comprehension at the phonological [31,82,83], lexical [31,84] and syntactic [85–87] levels. These observations, together with the development of neuroimaging techniques, have led researchers to consider that different, partially overlapping networks of superior temporal, posterior parietal and ventral prefrontal areas underpin the phonological, semantic and syntactic levels of language [47].

Current theoretical accounts of language and praxis thus favour the conception that these complex cognitive faculties are subserved by neural networks widely distributed in the left hemisphere. The recruitment of different neural systems would then depend on the exact nature, constraints and context of the task [26,88]. The multiple aspects of aphasia and apraxia would therefore result from a disruption of, or an imbalance in, the interactions between parts of these networks, rather than from localized damage to a brain area supporting a specific function. Regardless of this evolution of the theoretical framework for the functional neuroanatomy of language and praxis and their disorders, it is clear that both cognitive abilities rely on largely overlapping networks, with critical nodes located in the superior temporal, rostral inferior parietal and ventral premotor cortices.

We now examine the implications of language and praxic disorders for action recognition, imitation and gestural communication, which, as highlighted in §1, might have constituted critical abilities for the propagation of human technology and for language evolution.

4. Action recognition, imitation and gestural communication in aphasia and apraxia

(a). Action recognition

As mentioned above, Heilman et al. [72] showed that the recognition of transitive tool-use gestures was impaired in some patients with ideomotor apraxia, who were thus impaired in both the execution and the recognition of these gestures. These findings were later confirmed [89,90]. Neuroimaging experiments have also shown a similar interaction between action observation and production (and imagination) in healthy humans [91]. The observation of meaningful actions activates frontal and temporal regions of the left hemisphere, whereas the observation of meaningless actions mainly involves the right occipito-parietal pathway [92].

The link between observation and imitation of object-related actions in apraxic patients has recently been re-examined by Buxbaum et al. [93] and Pazzaglia et al. [94] using advanced lesion reconstruction techniques. Buxbaum et al. observed a close relationship between performance in pantomime recognition tasks and imitation of object-related actions. Further, in line with Heilman et al. [72], the neuroanatomical analysis showed that lesions located in the inferior parietal lobe and in the intraparietal sulcus were significantly associated with deficits in the recognition of transitive gestures. Pazzaglia et al. also observed a close correlation between action execution and recognition in a subgroup of apraxic patients. However, the impairment of recognition in their sample of patients was correlated with lesions of the left inferior frontal gyrus, not of the inferior parietal lobe. The authors of these studies have argued that the discrepancies between their main findings were probably due to different task structures. Pazzaglia et al. thus proposed that their recognition task required judging the ultimate goal of transitive gestures (or the symbolic meaning of intransitive gestures), while Buxbaum et al.'s experiment relied more on identifying kinematic cues. In addition, Buxbaum & Kalenine [77] suggested that responses in Pazzaglia et al.'s action-recognition task might have been based on structural rather than functional cues. While this question remains open, these two studies confirm the involvement of the left inferior parietal and ventral premotor cortices in action recognition, possibly at different levels, as well as a tight, although not absolute, functional relationship between action recognition and imitation.

It has long been known that aphasia can also induce deficits in the recognition of symbolic gestures and pantomimes in some patients [95]. The question of how aphasic patients comprehend non-verbal signals raises an important theoretical issue: is aphasia an impairment specific to the linguistic domain, or does it stem from a more general cognitive disorder affecting the use of symbols and signs (asymbolia)? The asymbolia hypothesis has been supported by the experimental work of Duffy & Duffy [96], who found strong correlations between scores on pantomime execution and recognition tasks and scores on language tasks. However, another study concluded that the pantomiming deficit observed in some aphasic patients might be due to associated apraxia rather than to asymbolia [97]. Aphasic patients are indeed impaired in pantomime comprehension compared with healthy subjects, but some dissociations have been reported (review in [98]). The predominantly frontal, left-hemisphere localization of lesions impairing action recognition was confirmed by Tranel et al. [99]. A recent controlled study by Saygin et al. [100] compared the recognition of actions described by either linguistic (written sentences) or non-linguistic (pictures) cues. Aphasic patients tended to show deficits in both domains, but they were more impaired with linguistic cues and were also more sensitive to semantic distractors. The authors therefore rejected the interpretation of aphasia as fully caused by asymbolia, while acknowledging the existence of strong but variable links between the linguistic and non-linguistic processes involved in action recognition. Furthermore, impaired action recognition in these patients was associated with lesions involving the left inferior frontal areas, in line with Tranel et al.'s conclusion. The involvement of the left inferior frontal gyrus in action recognition has also been shown in other tasks. For example, Fazio et al. [101] reported that patients with Broca's aphasia, though not apraxic, had a specific impairment in naming actions and tools compared with naming objects, supporting the idea that frontal regions might be crucial for action and tool recognition [102]. This specific deficit underlines the dual role of Broca's region, which is not only a language area involved in various aspects and levels of language but also part of the premotor cortex and, as such, is involved in action representation [103]. This observation should be considered in the current context of theories of embodied language comprehension. Indeed, the processing of action verbs describing leg, mouth or hand movements has been reported to activate motor and premotor areas in a somatotopic manner [104], and may interfere with or facilitate movement execution [105]. These findings suggest that cortical motor regions are involved in the representation of action words.

(b). Gesture imitation and speech repetition

Defective imitation of meaningful or meaningless gestures has often been considered a distinctive sign of apraxia [71,73] and has thus been studied quite extensively. The observation that apraxic patients may be impaired in imitating meaningless gestures while reproducing meaningful ones flawlessly [106] has prompted researchers to investigate the processes underlying the imitation of both types of gestures. It has thus been proposed that gesture imitation may be subserved by two distinct routes: an indirect, semantic route and a direct, non-semantic route [106,107]. The former is thought to support the imitation of meaningful gestures, while the latter would allow the imitation of meaningless gestures by matching the perceived action to the appropriate motor plans. The direct route, however, might subserve the imitation of meaningful gestures when the indirect route is damaged. In their seminal work on the topic, Goldenberg and co-workers [106,108] showed that apraxic patients impaired in the imitation of meaningless gestures also showed a deficit in matching the experimenter's posture on a manikin. According to Goldenberg, this demonstrated that transposing an observed posture into a motor scheme via the direct route requires the movement to be coded on the basis of general knowledge of the structure of the human body. A deficit in imitating meaningless gestures would thus result from a disturbance of this structural body knowledge, a conceptual body representation that is independent of the body reproducing the movement (i.e. the subject's, the examiner's or a manikin's). This representation is probably supported by the left inferior parietal lobule, which was selectively damaged in Goldenberg and Hagmann's patients [106].

Recently, Schwoebel et al. [109] sought to further investigate the involvement of different types of body representation in meaningful and meaningless gesture imitation. Scores on tasks evaluating semantic body knowledge and the body schema (i.e. a dynamic representation of the current relative position of body parts for guiding actions) strongly predicted left brain-damaged patients' performance on imitation and production of meaningful gestures. In contrast, imitation of meaningless gestures depended only on the body schema. These findings confirmed the preferential use of a semantic route for the imitation of meaningful gestures, and the existence of a direct route bypassing semantic knowledge for the imitation of meaningless gestures. Taken together, these observations suggest that imitation of meaningless gestures is more complex than a direct matching between bodies, and is likely to involve both dynamic and more abstract representations of the body.

Gesture imitation is rarely evaluated in aphasic patients. However, in the linguistic domain, speech repetition may be conceived of as the equivalent of manual gesture imitation. According to this idea, speech repetition would be an auditory rather than a visuomotor form of imitation. As mentioned earlier, the idea that language perception relies on audio-motor decoding is not new and was defended by Liberman & Mattingly [27] in their motor theory of speech perception. Recent experimental data seem to confirm the existence of a motor resonance of the phonemic percept [110,111]. Speech repetition is often impaired in aphasia, in particular in conduction aphasia. Of particular interest for comparing the processes involved in the control of gestures and language, conduction aphasia seems to be associated with lesions of the supramarginal gyrus and the neighbouring planum temporale [65,66], a region also thought to be critically involved in gesture imitation. In addition to impaired repetition, patients with conduction aphasia often exhibit a particular behaviour known as ‘conduite d'approche’, characterized by repeated attempts that come closer and closer to the correct utterance. The errors made by these patients are mostly phonemic paraphasias (sound-based speech errors) in which articulators are erroneously selected (e.g. ‘basecall’ for ‘baseball’, the /k/ being articulated posteriorly (velar) whereas /b/ is articulated anteriorly (bilabial)). This is similar to the difficulties seen in patients with ideomotor apraxia when trying to match the position of their hand relative to other body parts to that demonstrated by the experimenter [106]. This parallel between imitation and repetition fits very well with the case described by Ochipa et al. [107] of a patient with a lesion restricted to the inferior parietal lobule and the posterior superior temporal cortex, who exhibited conduction aphasia and apraxia with a particular deficit in imitating tool-use pantomimes. Because gesture recognition was preserved in this patient, just as speech comprehension usually is in conduction aphasia, the authors even proposed calling this deficit ‘conduction apraxia’. Recently, strong support for common functional and anatomical bases for repetition and imitation has come from an investigation of patients with primary progressive aphasia, who often show various degrees of impairment in different aspects of language and praxis. Nelissen et al. [112] showed that their patients' deficit in speech repetition correlated strongly with their impairment of gesture imitation and discrimination. Lesions in the left rostral inferior parietal lobe, extending to the posterior superior temporal cortex, were significantly associated with these combined impairments. Further, tractography analyses showed that the region most often involved in the lesion was the relay for indirect connections between the superior temporal cortex and the inferior frontal gyrus, offering convincing evidence for a shared neural substrate for gesture imitation and speech repetition and for a central role of the left inferior parietal cortex in these abilities.

(c). Pantomime and gestural communication

In apraxic patients, meaningful intransitive gestures have been much less studied than transitive gestures involving object or tool use. This may appear paradoxical given that intransitive gestures, as they are commonly tested, are in fact symbolic gestures (e.g. waving goodbye) strongly related to gestural expression and thus potentially linked to language. However, as argued by Goldenberg et al. [113], pantomimes of transitive gestures are also of interest for the present purpose, as they also constitute a link between tool use and communicative manual actions. Indeed, these gestures symbolize the tool and the associated action, and may be used to communicate or to demonstrate proper use of the tool. Pantomimes may thus have been essential in the development of human technology and of a gestural language.

In the context of examining the links between the cerebral control of gestures and language, it is interesting to note that the concepts of transitivity and intransitivity also apply to verb argument structure in language. Indeed, verbs can be differentiated according to the number of arguments they require. Intransitive verbs need only an agent, while transitive verbs need an agent and an object. Verbs can also be ditransitive, requiring an agent, an object and a recipient. Patients with Broca's aphasia, whose linguistic production is agrammatic, tend to produce simple rather than complex verb argument structures [114], thus favouring intransitive over transitive verbs, much as apraxic patients do with gestures (see below). Brain imaging investigations of the neural network underlying the processing of verb argument structure have highlighted not only the role of anterior language areas (i.e. the inferior frontal gyrus) but also the decisive role of the parietal cortex, especially the angular gyrus [115,116].

In line with classical reports, Mozaz et al. [117] showed that apraxic patients are less impaired when performing intransitive than transitive gestures. This was later confirmed by Buxbaum et al. [118], who also found a much weaker relationship between imitation and recognition for intransitive gestures. In agreement with this, Heath et al. [119] found that similar percentages of patients with right or left hemisphere damage were impaired in performing meaningful intransitive gestures, suggesting that these gestures are neither unequivocally linked to apraxia nor strongly lateralized. Impaired tool-use pantomime would thus be more specific to apraxia than impaired intransitive gestures. However, recent studies have challenged the classical view of distinct anatomo-functional bases for the production of transitive and intransitive gestures. Instead, these reports [117–119] suggest that both categories of gestures might rely on the same mechanisms, with transitive gestures simply being more difficult to perform than intransitive ones. Tool-use pantomime is a particularly demanding task, since it requires motor imagery and a cognitive analysis of the gesture before it can be produced in detail. In contrast, actual tool use may be guided by the structure of the object itself (affordances) as well as by sensory information during hand–object interaction. Accordingly, as pointed out by Carmo & Rumiati [120], no double dissociation has been found between the performance of transitive and intransitive gestures in left-brain-damaged patients: while some patients have been described with impaired transitive and preserved intransitive gestural performance, the reverse profile has, to our knowledge, never been reported (see Stamenova et al. [121] for cases in right-brain-damaged patients).
Carmo & Rumiati thus analysed the performance of healthy individuals on an imitation task involving transitive and intransitive gestures and found that they were better at imitating intransitive than transitive movements, consistent with apraxic patients' difficulties with transitive gestures. In keeping with this idea, Frey [26] and Kroliczak & Frey [122] observed that, in healthy individuals, transitive and intransitive gestures activate the same hand-independent network in the left hemisphere, suggesting that the same mechanisms might be at play in both conditions. Regarding the neural substrate of the ability to pantomime tool-use actions, recent findings have challenged the long-standing notion that pantomimes are primarily supported by the left inferior parietal cortex, thought to store praxic representations [41,72,93]. Using current lesion reconstruction and mapping techniques, a recent study in apraxic patients showed that the critical region for pantomiming tool-use actions is instead the posterior part of the left inferior frontal gyrus [113].

In sum, the ability to pantomime, which constitutes a link between manual tool use and communication, is very often disrupted in apraxia and seems to rely mainly on the integrity of the left inferior frontal gyrus. The impact of brain damage and apraxia on intransitive communicative gestures, however, requires further investigation. While neuroimaging studies in healthy individuals suggest that intransitive and transitive gestures share the same neural substrate, recent neuropsychological reports suggest that intransitive, symbolic gestures might be less tied to left hemisphere function than transitive gestures [119,121]. In particular, their relation to genuine gestural communication, to language in general and to tool-use gestures still needs to be explored.

Gestural communication, and the link between language and gestures, has been more widely studied in aphasic patients. These studies have considered several classes of communicative gestures, in contrast to the specific case of pantomime discussed above, which has been extensively examined in apraxic patients. McNeill [123] proposed a classification of these communicative gestures, organized along a continuum. He distinguishes co-speech gestures, spontaneously used during communication; ‘language-like gestures’ (grammatically integrated into the utterance); pantomimes (where speech is not necessary); emblems (which have a standard of well-formedness, like the ‘ok’ sign); and finally the sign languages used by the deaf. Along this continuum, idiosyncratic gestures are progressively replaced by socially regulated signs, the obligatory presence of speech declines (i.e. co-speech gestures accompany spoken language but are not sufficient to convey meaning by themselves, in contrast to sign languages), and the language properties embedded in gestures increase. Unlike co-speech gestures, sign languages have genuine linguistic properties, with distinctive semantics and syntactic rules, as spoken languages do.

Co-speech gestures are frequent in human communication and have diverse functional roles with large cultural variations [124], but cannot be considered linguistic gestures in themselves [123]. They are idiosyncratic and individual, and convey meaning in ways (iconic, metaphoric, deictic, beats, cohesive, etc.) that are radically different from language. First, co-speech gestures are global and synthetic (i.e. neither combinatorial nor hierarchical). Second, they have no standard of form. Third, they lack duality of patterning (in contrast to words, whose sounds and meanings are each separately structured and arbitrarily linked). However, co-speech gestures are intimately linked to language, since gestures and speech are synchronous and ‘semantically and pragmatically co-expressive’. According to McNeill's hypothesis [123], ‘speech and gesture are elements of a single integrated process of utterance formation in which there is a synthesis of opposite modes of thought. Utterances and thought realized in them are both imagery and language’. Regarding the impact of aphasia on co-speech gestures, while it is recognized that aphasic patients may use them spontaneously, there is still no agreement on the degree of their gestural impairment relative to their verbal impairment. For some authors, gestural and verbal expression are both impaired, owing to a common deficit in communication [125,126]. Other studies claim that gestural expression is less impaired than language, or even more developed than in healthy individuals, perhaps as a result of compensation [46,127]. The neural bases of expressive gestures in healthy individuals have attracted much attention in recent years [128]. However, little is known about the control of expressive gestures in aphasic patients. Several clinical trials have analysed the use of gestures for the rehabilitation of aphasic patients, but the results remain unclear [129–133].

At the opposite end of McNeill's continuum, other studies have investigated the impact of brain lesions on the ability to sign. Poizner et al. [134,135] studied deaf signers who became aphasic in sign language after brain damage. Importantly, their impairment was specific to the linguistic components of sign language and dissociated from the production or recognition of non-linguistic gestures and from the general ability to use symbols. Functional neuroimaging studies in neurologically intact deaf signers have demonstrated that the neural systems supporting signed language are lateralized to the left hemisphere and very similar to those supporting spoken languages, with the additional involvement of the left parietal lobe [136].

In sum, a direct comparison between the impact of apraxia and aphasia on gestural communication is difficult on the basis of the existing literature. Genuine co-speech gestures are usually examined only in relation to aphasia, without a clear analysis of the impact of potentially associated apraxia. In addition, the impact of apraxia on intransitive gestures, which, as classically assessed in the clinical examination, are mostly emblems, needs further investigation. Conflicting data in the literature on the impact of aphasia on communicative gestures may also be due to confusion between different categories of motor behaviour along McNeill's continuum, which bear very different relationships to speech and language. Moreover, little is known about the spontaneous use of different kinds of communicative gestures in aphasic and apraxic patients. Despite these limitations, some links between gestures and language have been demonstrated. In particular, spoken and signed languages are supported by largely overlapping networks [136] (although both can be dissociated from the production and recognition of non-linguistic gestures [134]). In addition, pantomime of tool use relies mainly on the brain region encompassing Broca's area [113]. Together, the findings reviewed in this section clearly show that, although the networks subserving the various aspects of language and praxis are not identical, they largely overlap, with key nodes in the left inferior frontal, inferior parietal and superior temporal cortices.

Further, several studies have suggested functional links between language and praxis, raising the possibility of shared processes between these two cognitive abilities. In §5, we will try to provide clues as to whether language and praxis may indeed share some common resources.

5. Common resources for praxis and language

The left cerebral hemisphere is considered to play a dominant role in many aspects of praxic and linguistic behaviour. Admittedly, some functions related to praxis (e.g. naturalistic multi-step actions [137]) or language (e.g. pragmatic communication [46]) seem to be supported by both hemispheres, or even to be lateralized to the right hemisphere (e.g. matching of finger postures [138] or prosodic processing [44]). However, most praxic and linguistic processes appear consistently lateralized to the left hemisphere. As reviewed earlier, this is true of phonological, semantic and syntactic processing in speech comprehension and production [47]. As for praxis, the following functions depend on the left hemisphere: pantomime [93], actual tool use [68], gesture imitation and recognition [93,107,112] and conceptual knowledge about actions and tools [73]. In sum, while cases of atypical cerebral dominance for praxis and language have been described in the neuropsychological literature [138,139], the fact remains that aphasia and apraxia are both caused by left hemisphere lesions in the vast majority of patients [48,71]. With respect to the evolutionary hypotheses outlined in the introduction, it is interesting that cerebral lateralization for praxis is more strongly linked to dominance for language than to manual preference [138,140,141]. This might be due to the necessary interactions between praxic representations and language-related processes, such as semantics and conceptual knowledge [26,76].

Beyond the observation that these symptoms usually arise after lesions of the same hemisphere, it is striking that apraxia and aphasia are very often associated in right-handed patients with left brain damage [71]. However, frequent co-occurrence and common hemispheric lateralization are not sufficient to conclude that aphasia and apraxia reflect the same impairment. For example, apraxic patients may exhibit deficits linked to non-linguistic processes, such as mechanical reasoning. Indeed, they often have difficulties in solving mechanical puzzles, which require inferring the function of a tool or an object solely from its structure [142], or in technical reasoning [143]. Thus, praxis appears to involve some left-lateralized cognitive ability that is important for actual tool use but independent of linguistic capacity.

The frequent association of aphasia and apraxia is often seen as the mere consequence of the fact that the cortical regions mediating language and praxis overlap and share a common arterial blood supply, so that both are likely to be damaged in the case of stroke. The fact that aphasia and apraxia do not systematically co-occur [144] has lent some support to this view. A clinical study specifically aimed at evaluating the frequency of co-occurrence of apraxia and aphasia in a large sample of left-brain-damaged right-handed patients indeed reported a double dissociation between these two disorders in a minority of cases: of 699 patients, 10 had apraxia without aphasia and 149 had aphasia without apraxia [42]. In neuropsychology, a double dissociation between two disorders is usually considered evidence for the functional independence of the two corresponding cognitive functions (e.g. [145]). However, as argued by Iacoboni & Wilson [146], cerebral organization is well known to show large inter-individual variability at many levels. It is thus possible that the minority of patients showing this double dissociation, especially the very small proportion with apraxia without aphasia, instead represent the two tails of the probability distribution of inter-individual variability in the anatomo-functional organization of the language and praxis systems. According to this view, a large majority of individuals would actually have shared neural networks for both abilities. Other interpretations of the frequent association of aphasia and apraxia have proposed that both disorders reflect the disturbance of common mechanisms, conceived, for example, as a global communicative or semantic competence [96], or as a left hemisphere specialization for the control of complex sequences [2,124,147].
While it seems unlikely, in light of the literature reviewed here, that apraxia and aphasia strictly reflect a common disorder, many findings coming from neuropsychology and other fields suggest that language and praxis networks may actually intersect and share some common processes.

In particular, the motor aspects of speech and praxis, especially their requirement for selecting and combining different effectors in sequence, have long been considered to be potentially underpinned by a common specialization of the left hemisphere for such behaviours [147]. Furthermore, as discussed previously, speech repetition and gesture imitation, in addition to being similar as imitative behaviours in the linguistic and gestural domains, seem to share common anatomo-functional bases. Indeed, the left inferior parietal cortex, and in particular the supramarginal gyrus, is critical for gesture imitation [79,107,112,148] as well as for repetition [65,66,112]. In addition, functional magnetic resonance imaging studies have delineated a network subserving the audio-motor transformations and phonological processing necessary for speech production and repetition [47,149]. This network links the anterior part of Broca's region (see also Kotz et al. [31] for the involvement of Broca's area in phonological perception) to the posterior part of the planum temporale and the supramarginal gyrus. A similar network has also been implicated in gesture imitation [113]. In line with these observations, Iacoboni & Wilson [146] have recently proposed a common underlying mechanism for repetition and imitation. In their model, the left inferior parietal cortex is thought to play the critical role of reinforcing associations between the appropriate forward and inverse models for the perception and production of language and gestures. Inverse modelling, which translates perceived speech or actions into motor plans, is implemented by connections (via inferior parietal areas) between the superior temporal cortex (which encompasses Wernicke's area), involved in the perception of speech and actions, and ventral premotor areas (including Broca's region), which support motor planning and programming for speech and gesture production.
Forward modelling, on the other hand, predicts the sensory consequences of intended motor acts, which is critical for online motor control. These forward models are thought to be implemented by projections, again via the inferior parietal cortex, from the ventral premotor cortex to superior temporal areas. In this framework, damage to the inferior parietal lobule would cause difficulties in updating the inverse model on the basis of the forward model, resulting in impaired repetition and imitation.

Furthermore, language and praxis seem to interact strongly at the semantic level. Recent studies have shown that the semantic system is much more distributed than originally thought. Binder et al. [150] carried out a meta-analysis of functional neuroimaging studies on semantic processing in healthy individuals. They concluded that the semantic system clearly depends on large networks distributed across the temporal, frontal and parietal cortices, predominantly, but not exclusively, in the left hemisphere. This network consists of heteromodal association areas, similar to the ‘default network’, but overlaps little with the distributed network activated by sensorimotor activity. Valuable insight into the cerebral bases of semantic processing has also been provided by neuropsychological investigations in brain-damaged patients. These studies have shown that some patients with very focal brain lesions may present with a selective impairment in naming objects of specific semantic categories (e.g. living things versus inanimate objects), thus allowing inferences about the precise semantic function supported by the damaged area. In particular, a double dissociation has been shown between the capacity to name verbs and the capacity to name nouns ([151], review in [152]). Interestingly, while naming nouns involves cortical areas closer to the regions activated by object recognition tasks, naming verbs is supported by areas closer to the frontal motor regions [153]. This suggests that the motor system may be involved in action representation, which could serve action recognition for the purpose of pantomiming, imitating or naming [34]. In this context, a recent study in healthy subjects showed that symbolic gestures and spoken language activated the same left-lateralized network, corresponding to Broca's and Wernicke's areas.
According to the authors, this suggests that this system ‘is not committed to language processing but may function as a modality-independent semiotic system that plays a broader role in human communication, linking meaning to symbols’ [154]. In line with this, MacSweeney et al. [136] also identified this network, with the addition of the left inferior parietal cortex, as the neural substrate for signed language in deaf individuals.

Finally, as suggested earlier, another potential functional relation between praxis and language lies in the hierarchical organization of these behaviours. Is syntax exclusive to the linguistic domain, or are complex actions and music endowed with hierarchical rules akin to linguistic syntax [155,156]? In the domain of language, Broca's aphasia is classically qualified as ‘agrammatic’ owing to impaired production of grammatical sentences and impaired processing of syntactic markers. Accordingly, a wealth of brain imaging studies have reported activation of the caudal part of Broca's area (Brodmann area 44) in tasks involving syntactic processing [85–87]. In accordance with these observations, Grodzinsky & Santi [157] proposed that abstract linguistic abilities are neurologically coded, and that Broca's area might play a specific role in syntactic processing. However, other authors have proposed that the syntactic processing impairment of Broca's aphasics might instead be due to a reduction in available resources [56], and that the role of Broca's area might rather be to bind together the semantic, syntactic and phonological levels of language [158]. In the praxic domain, complex actions can be conceived of as structured according to three levels of organization: hierarchical (goals and subgoals), temporal (action sequences) and spatial (embodiment of tools). This structure could be compared with the organization of language [34,38]. Interestingly, the hierarchical control of action appears to involve Broca's region and its right homologue [159,160]. This is consistent with the fact that planning deficits, characteristic of dysexecutive syndromes, are attributed to lesions of the frontal lobes [161] and that both complex sequential actions (e.g. preparing a cup of coffee) [137] and pragmatic communication in a social context [46] seem to depend on both hemispheres.
There is also evidence for a convergence between language and praxis at the syntactic level. For example, Fazio et al. [101] have recently provided support for a link, potentially mediated by Broca's area, between language syntax and the sequential organization of observed actions. These authors demonstrated that agrammatic patients with lesions involving Broca's area are also impaired in a non-linguistic test consisting of ordering action sequences. This functional relation between action recognition and language perception has recently been confirmed and further characterized by Sitnikova et al. [162], who examined event-related potentials in an action structural violation paradigm. In this study, healthy participants were presented with movies depicting familiar everyday tasks involving the use of tools. The authors found that the introduction of a tool irrelevant to the action context (e.g. an iron in the context of cutting bread) elicited a neurophysiological response usually linked to syntactic processing and violation detection. Interestingly, the stimuli used in this paradigm are highly reminiscent of the errors made by some patients with conceptual apraxia. These patients may indeed be unable to judge the appropriateness of a gesture demonstrated by an experimenter in association with a given tool or object [78], and may also make similar errors when asked to demonstrate the gestures themselves, either choosing the wrong tool in a given context or executing the wrong action in response to a visually presented object [73].

6. Conclusion

Several hypotheses on the emergence of human culture and cognitive capacities have proposed a close evolutionary link between praxis, including tool use, and language, two abilities that are uniquely developed in the human species. Some have further proposed that gestural communication, involving action recognition and imitation, might have constituted an intermediary stage between the development of tool manufacture and use and the emergence of spoken language. These hypotheses predict a strong relationship between the neural substrates and cognitive processes involved in language and praxis. Here we have addressed this question by reviewing the neuropsychological literature on impairments of language and praxis after brain lesions, termed aphasia and apraxia respectively. In particular, we examined their impact on action recognition, imitation and communicative gestures, as well as the possible anatomo-functional links between the neural systems supporting these two uniquely human cognitive abilities.

Research in brain-damaged patients as well as healthy individuals has shown that the functional anatomy of language and praxis is complex and organized in several networks, mainly lateralized to the left hemisphere. These praxic and linguistic networks partly overlap, with critical nodes located in the superior temporal, rostral inferior parietal and ventral premotor cortices. Contrary to what has often been argued, the frequent co-occurrence of aphasia and apraxia in left-brain-damaged patients may not merely be a consequence of the proximity of the cortical areas involved. Rather, it might reflect the fact that the praxis and language networks actually intersect and share some common functional processes. This view is compatible both with joint linguistic and praxic impairments, reflecting common deficient mechanisms, and with dissociations between other manifestations of aphasia and apraxia. A telling example of such a dissociation is provided by aphasic patients who previously used sign language and show a selective impairment of the linguistic aspects of their gestures [134–136].

Accordingly, several recent studies in left-brain-damaged patients have suggested strong links between speech repetition and gesture imitation, which may involve common neural substrates and mechanisms, with a critical role for the left inferior parietal cortex. Both aphasia and apraxia may induce impairments of action recognition, but some evidence suggests that the mechanisms involved are at least partially dissociable. In either case, investigations in aphasic and apraxic patients point to the involvement of the left inferior frontal gyrus, including Broca's area, as well as the left inferior parietal lobe. As for gestural communication, the available literature does not allow the consequences of aphasia and apraxia to be compared, since different categories of gestures have been studied in relation to each syndrome. This question therefore needs further, more specific investigation. The available literature leads to the conclusion that the asymbolia hypothesis is too general to explain the complex relations between gestural communication, aphasia and apraxia [100,163,164]. However, evidence from neuropsychological and neuroimaging studies converges to suggest that tool-use pantomimes, which may have been critical for the transmission and propagation of human tool manufacture and use, depend at least partly on the same neural network as actual tool use and symbolic, intransitive gestures. In particular, lesions involving the left inferior frontal gyrus, known to be strongly involved in speech production and comprehension, seem to be especially associated with impaired tool-use pantomimes. In sum, neuropsychological studies of linguistic and praxic disorders show that the two systems interact to varying degrees, depending on the context and on which aspects of these complex cognitive behaviours are considered. Mounting evidence suggests that the phonological, semantic and syntactic levels of language share some common cognitive resources with the praxic system.
This is consistent with the hypothesis of common phylogenetic and ontogenetic origins for language and praxis [9]. However, neuropsychological data do not allow confirmation or rejection of the hypothesis of an intermediary stage of gestural communication between the development of tool use and the emergence of language.

In addition to the development of long-range interconnected networks, evolution within some strategic areas of the left hemisphere might have conditioned the appearance and lateralization of complex human behaviour. Left lateralization might be attributed to an asymmetry in the columnar micro-architecture of the cortex, which would induce an asymmetry in some general processes and, in turn, a differential development of functions [165]. Along this line of reasoning, Goldenberg [67] proposes that the specific role of the left parietal lobe is based on the categorical apprehension of spatial relationships, consistent with the left hemisphere's preference for categorical (as opposed to coordinate) coding. Similarly, hemispheric lateralization for speech could result from an asymmetry in cortical temporal tuning, itself inducing an asymmetry of audio-motor processes [166]. According to this hypothesis, the left hemisphere might be specialized for the perception and production of sounds in the 28–40 Hz frequency range (i.e. perception of phonemes and tongue movements), whereas the right hemisphere might be specialized for the 3–6 Hz range (i.e. syllabic rate and jaw movements). The role of Broca's area (or, more generally, the left inferior frontal gyrus) is currently being revisited and hotly debated [157]. In addition to its contribution to the human mirror system [167], it could have a generic function in hierarchical processing and the nesting of chunks and sequences [160], in unifying the different aspects of language [158] or in binding meaning and symbol [154]. Such a generic function might be a common resource for action and language, grounded in the left hemisphere and acting as a node in a complex, bilaterally distributed network. These processes probably condition the richness and complexity of human activity.

Acknowledgements

This text is dedicated to the memory of Catherine Bergego. The research was supported by a grant from the European Communities: NEST project Hand-to-Mouth contract no. 029065. A.R.-B. is supported by INSERM. The authors thank Georg Goldenberg, Katharina Hogrefe, Wolfram Ziegler and Etienne Koechlin for useful comments and two anonymous reviewers for their suggestions. Françoise Marchand from the Federative Institute on Research on the Handicap (IFR25) and Johanna Robertson helped in the editing of the text.

Endnote

The default network is activated in resting states and deactivated during specific tasks ‘interrupting the stream of consciousness’.

References

- 1. Annett M. 2006. The distribution of handedness in chimpanzees: estimating right shift in Hopkins' sample. Laterality 11, 101–109

- 2. Kimura D., Archibald Y. 1974. Motor functions of the left hemisphere. Brain 97, 337–350 (doi:10.1093/brain/97.1.337)

- 3. Corbetta D. 2003. Right-handedness may have come first: evidence from studies in human infants and nonhuman primates. Behav. Brain Sci. 26, 217–218 (doi:10.1017/S0140525X03320060)

- 4. Bradshaw J. L., Nettleton N. C. 1982. Language lateralization to the dominant hemisphere: tool use, gesture and language in hominid evolution. Curr. Psychol. Rev. 2, 171–192 (doi:10.1007/BF02684498)

- 5. Corballis M. C. 2003. From mouth to hand: gesture, speech, and the evolution of right-handedness. Behav. Brain Sci. 26, 199–208 (doi:10.1017/S0140525X03000062)

- 6. Ambrose S. H. Paleolithic technology and human evolution. Science 291, 1748–1753 (doi:10.1126/science.1059487)

- 7. Tomasello M. 1999. The human adaptation for culture. Annu. Rev. Anthropol. 28, 509–529 (doi:10.1146/annurev.anthro.28.1.509)

- 8. Steele J., Uomini N. 2009. Can the archaeology of manual specialization tell us anything about language evolution? A survey of the state of play. Steps to a (neuro-) archaeology of mind (eds L. Malafouris & C. Renfrew). Camb. Archaeol. J. 19, 97–110

- 9. Greenfield P. M. 1991. Language, tools and brain: the ontogeny and phylogeny of hierarchically organized sequential behavior. Behav. Brain Sci. 14, 531–551 (doi:10.1017/S0140525X00071235)

- 10. Iriki A., Tanaka M., Iwamura Y. 1996. Coding of modified body schema during tool use by macaque postcentral neurones. Neuroreport 7, 2325–2330 (doi:10.1097/00001756-199610020-00010)

- 11. Obayashi S., Suhara T., Kawabe K., Okauchi T., Maeda J., Akine Y., Onoe H., Iriki A. 2001. Functional brain mapping of monkey tool use. Neuroimage 14, 853–861 (doi:10.1006/nimg.2001.0878)

- 12. Frey S. H. 2007. What puts the how in where? Tool use and the divided visual streams hypothesis. Cortex 43, 368–375 (doi:10.1016/S0010-9452(08)70462-3)

- 13. Arbib M. A., Bonaiuto J. B., Jacobs S., Frey S. H. 2009. Tool use and the distalization of the end-effector. Psychol. Res. 73, 441–462 (doi:10.1007/s00426-009-0242-2)

- 14. Cardinali L., Frassinetti F., Brozzoli C., Urquizar C., Roy A. C., Farne A. 2009. Tool-use induces morphological updating of the body schema. Curr. Biol. 19, R478–R479 (doi:10.1016/j.cub.2009.05.009)

- 15. Ungerleider L. G., Mishkin M. 1982. Two cortical visual systems. In Analysis of visual behaviour (eds Ingle D. L., Goodale M. A., Mansfield R. J. W.), pp. 549–586. Cambridge, MA: MIT Press

- 16. Goodale M. A., Milner A. D. 1992. Separate visual pathways for perception and action. Trends Neurosci. 15, 20–25 (doi:10.1016/0166-2236(92)90344-8)

- 17. Rizzolatti G., Luppino G. 2001. The cortical motor system. Neuron 31, 889–901 (doi:10.1016/S0896-6273(01)00423-8)

- 18. Jacobs S., Danielmeier C., Frey S. H. 2010. Human anterior intraparietal and ventral premotor cortices support representations of grasping with the hand or a novel tool. J. Cogn. Neurosci. 22, 2594–2608 (doi:10.1162/jocn.2009.21372)

- 19. Umilta M. A., Escola L., Intskirveli I., Grammont F., Rochat M., Caruana F., Jezzini A., Gallese V., Rizzolatti G. 2008. When pliers become fingers in the monkey motor system. Proc. Natl Acad. Sci. USA 105, 2209–2213 (doi:10.1073/pnas.0705985105)

- 20. Johnson-Frey S. H. 2003. What's so special about human tool use? Neuron 39, 201–204 (doi:10.1016/S0896-6273(03)00424-0)

- 21. Hodges J. R., Bozeat S., Lambon Ralph M. A., Patterson K., Spatt J. 2000. The role of conceptual knowledge in object use: evidence from semantic dementia. Brain 123, 1913–1925 (doi:10.1093/brain/123.9.1913)

- 22. Johnson-Frey S. H. 2004. The neural bases of complex tool use in humans. Trends Cogn. Sci. 8, 71–78 (doi:10.1016/j.tics.2003.12.002)

- 23. Lewis J. W. 2006. Cortical networks related to human use of tools. Neuroscientist 12, 211–231 (doi:10.1177/1073858406288327)

- 24. Creem S. H., Proffitt D. R. 2001. Grasping objects by their handles: a necessary interaction between cognition and action. J. Exp. Psychol. Hum. Percept. Perform. 27, 218–228 (doi:10.1037/0096-1523.27.1.218)

- 25. Randerath J., Li Y., Goldenberg G., Hermsdorfer J. 2009. Grasping tools: effects of task and apraxia. Neuropsychologia 47, 497–505 (doi:10.1016/j.neuropsychologia.2008.10.005)

- 26. Frey S. H. 2008. Tool use, communicative gesture and cerebral asymmetries in the modern human brain. Phil. Trans. R. Soc. B 363, 1951–1957 (doi:10.1098/rstb.2008.0008)

- 27. Liberman A. M., Mattingly I. G. 1985. The motor theory of speech perception revised. Cognition 21, 1–36 (doi:10.1016/0010-0277(85)90021-6)

- 28. Rizzolatti G., Craighero L. 2004. The mirror-neuron system. Annu. Rev. Neurosci. 27, 169–192 (doi:10.1146/annurev.neuro.27.070203.144230)

- 29. Fogassi L., Luppino G. 2005. Motor functions of the parietal lobe. Curr. Opin. Neurobiol. 15, 626–631 (doi:10.1016/j.conb.2005.10.015)

- 30. Fadiga L., Craighero L., D'Ausilio A. 2009. Broca's area in language, action, and music. Ann. N Y Acad. Sci. 1169, 448–458 (doi:10.1111/j.1749-6632.2009.04582.x)