Abstract

Image-based morphometry is an important area of pattern recognition research, with numerous applications in science and technology (including biology and medicine). Fisher Linear Discriminant Analysis (FLDA) techniques are often employed to elucidate and visualize important information that discriminates between two or more populations. We demonstrate that the direct application of FLDA can lead to undesirable errors in characterizing such information and that the reason for such errors is not necessarily the ill conditioning in the resulting generalized eigenvalue problem, as usually assumed. We show that the regularized eigenvalue decomposition often used is related to solving a modified FLDA criterion that includes a least-squares-type representation penalty, and derive the relationship explicitly. We demonstrate the concepts by applying this modified technique to several problems in image-based morphometry, and build discriminant representative models for different data sets.

Keywords: Image-based Morphometry, Fisher Discriminant Analysis, Discriminant Representative Model

1. Introduction

In biology and medicine, morphology refers to the study of the form, structure and configuration of an organism and its component parts. Clinicians, biologists, and other researchers have long used information about shape, form, and texture to make inferences about the state of a particular cell, organ, or organism (normal vs. abnormal) or to gain insights into important biological processes [1, 2, 3]. Earlier quantitative works often focused on numerical feature-based approaches (e.g., measuring size, form factor, etc.) that aim to quantify differences between forms in carefully constructed feature spaces [3, 4]. More recently, many researchers working on applications in medicine and biology have shifted to a more geometric approach, in which the entire morphological exemplar (as depicted in an image) is viewed as a point in a carefully constructed metric space [5, 6, 7], often facilitating visualization. When a linear embedding for the data can be assumed, standard geometric data processing techniques such as principal component analysis (PCA) can be used to extract and visualize major trends in the morphologies of organs and cells [8, 9, 10, 11, 7, 12, 13, 14, 15]. While summarizing the main trends in the data is important, so is the application of discrimination techniques for elucidating and visualizing trends that differentiate between two or more populations [16, 17, 18, 19, 20, 21].

In part due to its simplicity and effectiveness, as well as its connection to the Student's t-test, Fisher Linear Discriminant Analysis (FLDA) is often employed to summarize discriminating trends [21, 22, 23]. When employed in high-dimensional spaces, the technique is often adapted, and a regularized version of the associated generalized eigenvalue problem is used instead of the original one in order to avoid ill-conditioning [24, 25, 26, 27]. To the best of our knowledge, the geometric meaning of this adaptation is not fully understood [28]. Here we show that even in problems where ill-conditioning does not occur, the straightforward application of the FLDA technique can lead to erroneous interpretation of the results. We show that a modified FLDA criterion that includes a representation error penalty can be used in such cases to extract meaningful discriminating information. We show that the solution of the modified problem is related to the commonly used regularized eigenvalue problem, and derive the relationship explicitly. In contrast to the standard FLDA technique, combining a discrimination term with a data representation term allows for a decomposition whereby, in a two-class problem, several discriminating trends can be computed and ranked according to their discrimination power (together with a least squares-type representation penalty), and discriminant representative models can be built accordingly. We also describe a kernelization of the procedure, similar to the one described in [28]. Finally, we apply the modified FLDA technique to several example problems in image-based morphometry, and contrast it with the straightforward FLDA method, as well as with a method that applies PCA and FLDA serially [29, 24].

2. Methods

The method we describe can be applied whenever a linear embedding for the image data can be assumed and obtained. That is, given an image $I_i$ depicting one structure to be analyzed, a function $f$ can be used to map the image to a point in a linear space: $f(I_i) = x_i$, with $x_i \in \mathbb{R}^m$ and $m$ the dimension of the space. This point may or may not be unique, depending on the embedding method used. In addition, the linear embedding must be able to represent the morphological structure present in $I_i$ well. Though other linear embeddings could also be used [12, 14, 15], in this work we use the landmark-based approach described in [30, 31]. Briefly, each image $I_i$ is reduced to a set of landmarks stored in a vector $x_i \in \mathbb{R}^{2n}$ (we use two-dimensional images, and $n$ is the number of landmarks). Although an inverse function does not exist (one cannot recover the image $I_i$ from its set of landmarks $x_i$), the landmarks are chosen densely, so that visual interpretation of morphology is possible. Given two images $I_1$ and $I_2$ with landmarks $x_1$ and $x_2$, the landmarks are stored in corresponding order.

In some of the examples shown below we use contours to describe a given structure. In these examples, the correspondence between two sets of points describing two contours is not known a priori. We use a methodology similar to the one described in [9, 30], where the points on the contour are first converted to a polar coordinate system with respect to the center of the contour. The contour is then sampled at $n$ equidistant angles between 0 and $2\pi$ ($n$ landmarks). This procedure maps each image $I_i$ to a point $x_i$ in the standard vector space $\mathbb{R}^{2n}$. Finally, we note that in all examples shown below, the sets of landmarks were first aligned by setting their center of mass to zero, and each set of landmarks was rotated so that its principal axis aligned with the vertical axis.
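The contour-to-landmark mapping described above can be sketched as follows. This is a minimal illustration assuming NumPy, an ordered contour given as an array of (x, y) points, and a contour that is star-shaped about its center (each polar angle meets the contour once); the function name `contour_to_landmarks` and the output layout `[x_1..x_n, y_1..y_n]` are our own choices, not necessarily those of [9, 30].

```python
import numpy as np

def contour_to_landmarks(contour, n=90):
    """Map an ordered closed 2-D contour to a landmark vector in R^{2n}.

    The radius is sampled at n equidistant polar angles in [0, 2*pi) about the
    contour center; the landmarks are then centered and rotated so that their
    principal axis is vertical. Assumes a star-shaped contour.
    """
    pts = np.asarray(contour, dtype=float)
    pts = pts - pts.mean(axis=0)                      # center of the contour at the origin
    theta = np.arctan2(pts[:, 1], pts[:, 0])          # polar angle of each contour point
    r = np.hypot(pts[:, 0], pts[:, 1])                # polar radius of each contour point
    grid = np.linspace(0.0, 2 * np.pi, n, endpoint=False)
    # With `period`, np.interp wraps and sorts the angles before interpolating
    r_grid = np.interp(grid, theta, r, period=2 * np.pi)
    lm = np.column_stack([r_grid * np.cos(grid), r_grid * np.sin(grid)])
    lm -= lm.mean(axis=0)                             # center of mass of the landmarks at zero
    # Principal axis: eigenvector of the 2x2 scatter matrix with the largest eigenvalue
    _, evecs = np.linalg.eigh(lm.T @ lm)
    major = evecs[:, -1]
    angle = np.pi / 2 - np.arctan2(major[1], major[0])   # rotation making that axis vertical
    R = np.array([[np.cos(angle), -np.sin(angle)],
                  [np.sin(angle),  np.cos(angle)]])
    lm = lm @ R.T                                     # rotate every landmark by R
    return np.concatenate([lm[:, 0], lm[:, 1]])       # [x_1..x_n, y_1..y_n]

# Example: an off-center ellipse elongated along x
t = np.linspace(0, 2 * np.pi, 400, endpoint=False)
ellipse = np.column_stack([5 + 3 * np.cos(t), 2 + np.sin(t)])
x = contour_to_landmarks(ellipse, n=90)
```

After alignment the long axis of the ellipse points vertically, and the 90 landmarks yield a 180-dimensional vector, matching the dimensionality used in the experiments below.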

2.1. Fisher discriminant analysis

Given a set of data points $x_i$, $i = 1, \dots, N$, each belonging to one of several classes $c$, the problem proposed by Fisher [3, 32] is to find the direction $w$ that solves the following optimization problem:

$$ w^* = \arg\max_{w} \frac{w^T S_B w}{w^T S_W w} \tag{1} $$

where $S_B = \sum_c N_c (\mu_c - \bar{x})(\mu_c - \bar{x})^T$ represents the 'between-class scatter matrix', $S_W = \sum_c \sum_{i \in c} (x_i - \mu_c)(x_i - \mu_c)^T$ represents the 'within-class scatter matrix', $\bar{x} = \frac{1}{N}\sum_{i=1}^{N} x_i$ is the center of the entire data set, $N_c$ is the number of data points in class $c$, and $\mu_c$ is the center of class $c$. As is usually done, we subtract this mean from each data point before computing the scatter matrices $S_W$ and $S_B$. The solution of the FLDA problem can be computed by solving the generalized eigenvalue problem [32]

$$ S_B w = \lambda S_W w \tag{2} $$

and selecting the eigenvector associated with the largest eigenvalue.
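A minimal sketch of equations (1) and (2), assuming NumPy and SciPy: `scipy.linalg.eigh(a, b)` solves the symmetric-definite generalized eigenvalue problem $a v = \lambda b v$ and returns eigenvalues in ascending order, so the last eigenvector is the FLDA direction. The helper names and the Gaussian test data are hypothetical.

```python
import numpy as np
from scipy.linalg import eigh

def scatter_matrices(X, y):
    """Between-class (S_B) and within-class (S_W) scatter matrices of equation (1)."""
    X = np.asarray(X, dtype=float)
    X = X - X.mean(axis=0)                 # subtract the global mean x_bar
    m = X.shape[1]
    S_B, S_W = np.zeros((m, m)), np.zeros((m, m))
    for c in np.unique(y):
        Xc = X[y == c]
        mu_c = Xc.mean(axis=0)             # class center (x_bar is now the origin)
        S_B += len(Xc) * np.outer(mu_c, mu_c)
        D = Xc - mu_c
        S_W += D.T @ D
    return S_B, S_W

def flda_direction(X, y):
    """Leading solution of S_B w = lambda S_W w (equation (2)), rescaled to unit norm."""
    S_B, S_W = scatter_matrices(X, y)
    evals, evecs = eigh(S_B, S_W)          # ascending order; S_W must be positive definite
    w = evecs[:, -1]
    return w / np.linalg.norm(w), evals[-1]

# Hypothetical data: two Gaussian classes that differ along the first axis
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(size=(200, 3)),
               rng.normal(size=(200, 3)) + [3.0, 0.0, 0.0]])
y = np.repeat([0, 1], 200)
w, lam = flda_direction(X, y)
```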

We note that for a two-class problem, maximizing the Fisher criterion is related to finding the one-dimensional linear projection that maximizes the t-statistic of the two-sample t-test. Let the means and variances of the two classes be denoted by $(m_1, m_2)$ and $(s_1^2, s_2^2)$, respectively. When the variances are unequal, Welch's adaptation of the t-test [33] is usually used:

$$ t = \frac{m_1 - m_2}{\sqrt{\dfrac{s_1^2}{N_1} + \dfrac{s_2^2}{N_2}}} \tag{3} $$

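Recall the objective function of FLDA defined in equation (1). For two classes, $S_B = \frac{N_1 N_2}{N}(\mu_1 - \mu_2)(\mu_1 - \mu_2)^T$, so that

$$ J(w) = \frac{w^T S_B w}{w^T S_W w} = \frac{\frac{N_1 N_2}{N}\left(w^T(\mu_1 - \mu_2)\right)^2}{\sum_{i \in c_1}\left(w^T(x_i - \mu_1)\right)^2 + \sum_{i \in c_2}\left(w^T(x_i - \mu_2)\right)^2} $$

where $\mu_1, \mu_2$ represent the mean vectors of the two classes, $c_1, c_2$ represent the class labels, $N_1, N_2$ represent the number of samples in classes $c_1, c_2$ respectively, and $N = N_1 + N_2$. Let $m_k(w) = w^T \mu_k$ and $s_k^2(w) = \frac{1}{N_k}\sum_{i \in c_k}\left(w^T x_i - m_k(w)\right)^2$, $k = 1, 2$, be the sample means and variances of the data projected onto $w$. We can then rewrite the Fisher criterion as:

$$ J(w) = \frac{N_1 N_2}{N}\,\frac{\left(m_1(w) - m_2(w)\right)^2}{N_1 s_1^2(w) + N_2 s_2^2(w)} $$

When the two classes contain the same number of data points ($N_1 = N_2 = N/2$), this reduces to $J(w) = t^2(w)/N$, where $t(w)$ is the statistic of equation (3) computed on the projected data (with the variances normalized as above); that is, the Fisher criterion is a scaled $t^2(w)$. We believe that, in part due to its simplicity and its connection to the t-test (which is widely used in image-based morphometry [21, 22, 23]), FLDA-related techniques can play an important role in morphometry problems, especially in biology and medicine. As we show next, however, the FLDA technique must be modified before it can be used meaningfully in arbitrary morphometry problems.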
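The identity between the two-class Fisher criterion and the scaled Welch statistic can be checked numerically. The sketch below assumes NumPy and hypothetical Gaussian data; it evaluates $J(w)$ from the scatter matrices and $t(w)$ from the projected data, with variances normalized by $N_k$ as in the derivation above (NumPy's default `ddof=0`).

```python
import numpy as np

def fisher_criterion(w, X1, X2):
    """Two-class Fisher criterion w^T S_B w / w^T S_W w (equation (1))."""
    N1, N2 = len(X1), len(X2)
    d = X1.mean(axis=0) - X2.mean(axis=0)
    S_B = (N1 * N2 / (N1 + N2)) * np.outer(d, d)     # rank-one between-class scatter
    C1, C2 = X1 - X1.mean(axis=0), X2 - X2.mean(axis=0)
    S_W = C1.T @ C1 + C2.T @ C2                      # within-class scatter
    return (w @ S_B @ w) / (w @ S_W @ w)

def welch_t(w, X1, X2):
    """Welch statistic (equation (3)) of the projections, variances normalized by N_k."""
    p1, p2 = X1 @ w, X2 @ w
    return (p1.mean() - p2.mean()) / np.sqrt(p1.var() / len(p1) + p2.var() / len(p2))

# Hypothetical data: two classes of 50 points each in R^3
rng = np.random.default_rng(0)
X1 = rng.normal(size=(50, 3))
X2 = rng.normal(loc=0.5, size=(50, 3))
w = rng.normal(size=3)                               # an arbitrary projection direction
N = len(X1) + len(X2)
J, t = fisher_criterion(w, X1, X2), welch_t(w, X1, X2)
print(J, t**2 / N)                                   # the two numbers agree
```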

2.2. A simulated data example

Here we show that the straightforward application of the FLDA method may not lead to a direction that represents real differences present in the data. In this example, two classes of two-dimensional vertical lines (100 lines per class) were generated. The lengths of the lines in class 1 ranged from 0.42 to 0.62, while the lengths in class 2 ranged from 0.28 to 0.48. We aligned the center of each line to a fixed coordinate. Because the horizontal coordinates of each line do not change, for the purpose of visualizing the concepts we are about to describe, we characterize each simulated line by the vertical coordinates of its top-most and bottom-most points: $Y_1$ is the y coordinate of the top-most sample point on the line, and $Y_2$ is the y coordinate of the bottom-most sample point. Each line can then be uniquely mapped to a point in the two-dimensional space $\mathbb{R}^2$, and from the coordinates $x_i = (Y_{1i}, Y_{2i})^T$ any line can be reconstructed. The data (the set of all lines), however, occupy a one-dimensional linear subspace of $\mathbb{R}^2$, because only one parameter (length) varied in our simulation. In order to avoid ill-conditioning of the data covariance matrix, independent Gaussian noise was added to $Y_{1i}$ and $Y_{2i}$ (see Fig 1 (C)).

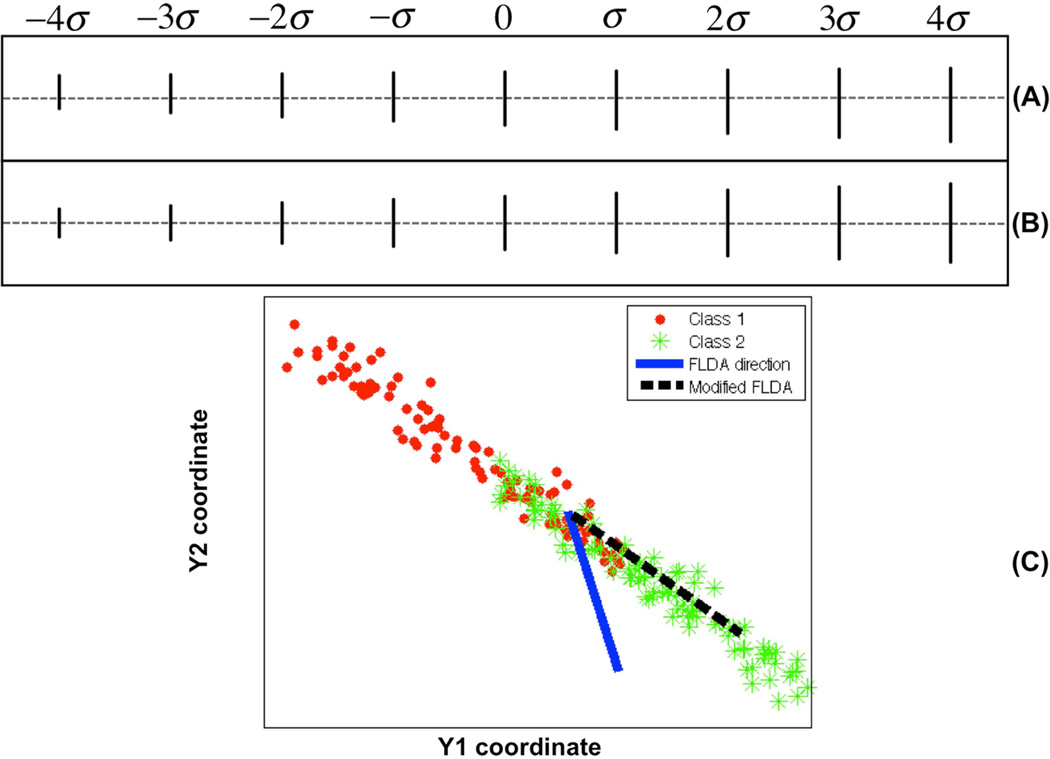

Figure 1.

Discriminant information computed for a simulated data set. A: Visualization of the most discriminant direction computed by the standard FLDA method. B: Visualization of the most discriminant direction computed by the penalized FLDA method. C: Scatter plot of the two sample-point coordinates (Y1, Y2) for the whole data set. See text for more details.

The solution $w^*$ of the FLDA problem discussed above can be visualized by plotting $x_\gamma = \bar{x} + \gamma w^*$ for a range of $\gamma$. Fig 1(A)(B) contains the lines corresponding to $x_\gamma$ for $-4\sigma \le \gamma \le 4\sigma$, where σ is the standard deviation (the square root of the largest eigenvalue from eq. (2)). Visual inspection of the results in Fig 1(A) quickly reveals the problem. The method indicates that line translation combined with a change in length is the geometric variation that best separates the two distributions according to the Fisher criterion. While such a direction may allow for high classification accuracy, by construction the data contained no variation in the position (translation) of the lines. Such results are therefore misleading: the translation effect is manufactured by the FLDA procedure and does not exist in the data. The problem is further illustrated in part C of the figure, where the two distributions are plotted. The short lines (red dots) have relatively larger $Y_1$ and smaller $Y_2$ coordinates than the long lines (green dots). The solid blue line corresponds to the solution computed by FLDA. While this direction may be good for classifying the two populations (long vs. short lines), it is not guaranteed to be well populated by data. If a visual understanding is to be obtained, the FLDA solution can thus provide misleading information (as shown in Fig 1 (A)).
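The effect can be reproduced with a small simulation in the spirit of Fig 1. This is a sketch under stated assumptions: NumPy, a y axis pointing up (the sign convention does not change the length and translation axes), and a noise level `NOISE` that the text does not report and is chosen here for illustration only. It compares the two-class FLDA direction $S_W^{-1}(\mu_1 - \mu_2)$, which is the leading solution of equation (2) when $S_B$ has rank one, with the leading PCA direction, reporting how much of each lies along the pure-length axis $(1, -1)/\sqrt{2}$ and the pure-translation axis $(1, 1)/\sqrt{2}$.

```python
import numpy as np

rng = np.random.default_rng(0)
N_PER_CLASS = 100
NOISE = 0.005   # hypothetical noise level; the text does not state the value used

def simulate_lines(lo, hi):
    """Vertical lines of random length centered at y = 0, summarized by (Y1, Y2)."""
    lengths = rng.uniform(lo, hi, N_PER_CLASS)
    X = np.column_stack([lengths / 2, -lengths / 2])    # top and bottom y-coordinates
    return X + rng.normal(scale=NOISE, size=X.shape)    # independent noise on Y1 and Y2

X1 = simulate_lines(0.42, 0.62)       # class 1: longer lines
X2 = simulate_lines(0.28, 0.48)       # class 2: shorter lines
X = np.vstack([X1, X2])
x_bar = X.mean(axis=0)
d = X1.mean(axis=0) - X2.mean(axis=0)

C1, C2 = X1 - X1.mean(axis=0), X2 - X2.mean(axis=0)
S_W = C1.T @ C1 + C2.T @ C2                       # within-class scatter
S_T = (X - x_bar).T @ (X - x_bar)                 # total scatter

w_flda = np.linalg.solve(S_W, d)                  # two-class FLDA: w ~ S_W^{-1}(mu_1 - mu_2)
w_flda /= np.linalg.norm(w_flda)
w_pca = np.linalg.eigh(S_T)[1][:, -1]             # leading principal direction

length_axis = np.array([1.0, -1.0]) / np.sqrt(2)       # pure change in length
translation_axis = np.array([1.0, 1.0]) / np.sqrt(2)   # pure translation of the line
for name, w in [("FLDA", w_flda), ("PCA", w_pca)]:
    print(name, "length:", abs(w @ length_axis), "translation:", abs(w @ translation_axis))

# Lines along the FLDA mode x_gamma = x_bar + gamma * w for -4 sigma <= gamma <= 4 sigma;
# here sigma is the std of the projected data (an assumption made for this sketch).
sigma = np.std((X - x_bar) @ w_flda)
mode_lines = [x_bar + g * w_flda for g in np.linspace(-4 * sigma, 4 * sigma, 9)]
```

Each element of `mode_lines` is a $(Y_1, Y_2)$ pair that can be drawn as a vertical segment; whenever the FLDA direction has a translation component, the drawn segments shift up or down even though no line in the data set does.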

2.3. A modified FLDA criterion

The FLDA criterion can be modified by adding a term that 'penalizes' directions $w$ that do not pass close to the data. To that end, we combine the standard FLDA criterion with a penalty term that measures, on average, how far the data are from a given direction $w$. Mathematically, an arbitrary line in the shape space $\mathbb{R}^m$ can be represented as $\lambda w + b$, with direction $w \in \mathbb{R}^m$ ($\|w\| = 1$), offset $b \in \mathbb{R}^m$, and $\lambda \in \mathbb{R}$. The squared distance from a data point $x_i$ in the shape space $\mathbb{R}^m$ to the line can be written as [32]:

$$ d^2(x_i) = \|x_i - b\|^2 - \left(w^T (x_i - b)\right)^2 $$

For a data set of $N$ points, the mean of the squared distances from each point to that line is:

$$ D(w, b) = \frac{1}{N}\sum_{i=1}^{N}\left[\|x_i - b\|^2 - \left(w^T (x_i - b)\right)^2\right] \tag{4} $$

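The term defined in equation (4) couples the offset $b$ and the direction $w$. However, the optimal offset can be chosen independently of $w$, and can be shown to be $b^* = \bar{x}$ (see Appendix A for details; any point $\bar{x} + t w$ on the same line is equally optimal). This is equivalent to centering the data set at its mean, in which case we could simply assume $b = 0$, and it indicates that the line must pass through the center of the data set. Equation (4) can then be rewritten as:

$$ D(w) = \frac{1}{N}\sum_{i=1}^{N}\|x_i - \bar{x}\|^2 - \frac{1}{N}\, w^T S_T w \tag{5} $$

where $S_T = \sum_{i=1}^{N} (x_i - \bar{x})(x_i - \bar{x})^T$ represents the 'total scatter matrix'. Because the first term of (5) does not depend on $w$, and because $w^T S_T w = \frac{w^T S_T w}{w^T w}$ for a unit vector $w$, the optimization problem defined in equation (5) is equivalent to:

$$ \min_{w} \frac{w^T w}{w^T S_T w} \tag{6} $$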
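Equations (4) and (5), together with the choice of offset, can be verified numerically. The following sketch assumes NumPy and hypothetical, deliberately uncentered data: it compares the directly computed mean squared distance with the closed form $(\operatorname{tr} S_T - w^T S_T w)/N$, and checks that sliding $b$ along $w$ leaves the value unchanged while moving $b$ off the line by $0.3$ (orthogonally to $w$) increases it by exactly $0.3^2 = 0.09$.

```python
import numpy as np

def mean_sq_distance(X, w, b):
    """Mean squared distance from the rows of X to the line {lambda * w + b} (equation (4))."""
    w = w / np.linalg.norm(w)                 # the distance formula assumes ||w|| = 1
    D = X - b
    return np.mean(np.sum(D**2, axis=1) - (D @ w) ** 2)

rng = np.random.default_rng(1)
X = rng.normal(size=(200, 4)) * [3.0, 1.0, 0.5, 0.1] + 2.0   # hypothetical, uncentered data
w = rng.normal(size=4)
w /= np.linalg.norm(w)
x_bar = X.mean(axis=0)
S_T = (X - x_bar).T @ (X - x_bar)             # total scatter matrix
N = len(X)

direct = mean_sq_distance(X, w, x_bar)
closed_form = (np.trace(S_T) - w @ S_T @ w) / N   # right-hand side of equation (5)

# Moving b along w leaves the line unchanged; moving it off the line increases D.
u = rng.normal(size=4)
u -= (u @ w) * w
u /= np.linalg.norm(u)                        # unit vector orthogonal to w
along = mean_sq_distance(X, w, x_bar + 2.5 * w)
off = mean_sq_distance(X, w, x_bar + 0.3 * u)
```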

Recall that our goal is to maximize the Fisher criterion defined in equation (1) while minimizing the mean of squared distances defined in equation (4) (or (6)), to guarantee that the discriminating direction found is well populated by the data. First, we note that equation (1) is equivalent to the following optimization problem [32]:

$$ w^* = \arg\max_{w} \frac{w^T S_T w}{w^T S_W w} \tag{7} $$

where $S_T$ is the 'total scatter matrix' defined in equation (5), and $S_T = S_B + S_W$, so that $\frac{w^T S_T w}{w^T S_W w} = 1 + \frac{w^T S_B w}{w^T S_W w}$. The criterion is then optimized by solving the generalized eigenvalue problem [32] $S_T w = \lambda S_W w$ and selecting the eigenvector associated with the largest eigenvalue.

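We note that maximizing the Fisher criterion defined in equation (7) is equivalent to minimizing its reciprocal, $\frac{w^T S_W w}{w^T S_T w}$ [3, 32]. Since our goal is to maximize the Fisher criterion and at the same time minimize the penalty term defined in equation (6) (or maximize its reciprocal), we combine both terms and define

$$ \frac{w^T S_W w}{w^T S_T w} + \alpha\,\frac{w^T w}{w^T S_T w} = \frac{w^T (S_W + \alpha I) w}{w^T S_T w} \tag{8} $$

$$ J_\alpha(w) = \frac{w^T S_T w}{w^T (S_W + \alpha I) w} \tag{9} $$

where α is a scalar weight, and take $J_\alpha(w)$ as the criterion to optimize (minimizing (8) is the same as maximizing its reciprocal (9)). Maximizing equation (9) is equivalent to

$$ \max_{w}\; w^T S_T w \quad \text{subject to} \quad w^T (S_W + \alpha I)\, w = 1 \tag{10} $$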

where $I$ is the identity matrix. The solution of the problem above is given by the well-known generalized eigenvalue decomposition $S_T w = \lambda (S_W + \alpha I) w$. This solution is similar to that of the traditional FLDA problem, but with the regularization provided by $\alpha I$. We note once again that although this regularized eigenvalue problem has been used in the past, to the best of our knowledge the geometric meaning of the regularization is not well understood. According to the derivation above, the regularization corresponds to minimizing a least squares-type projection error in combination with maximizing the Fisher criterion. Moreover, for a two-class problem the matrix $S_B$ has rank one, so the generalized eigenvalue problem of eq. (2) has only one nonzero eigenvalue [34]: only one discriminating direction is available. In contrast, optimizing the objective function (9) allows for a PCA-like decomposition, with as many directions as allowed by the rank of $S_T$ (assuming a large enough α). The directions are mutually orthogonal with respect to the inner product defined by $S_W + \alpha I$ (that is, $w_i^T (S_W + \alpha I) w_j = 0$ for $i \neq j$), which approaches ordinary orthogonality as α grows, and each direction maximizes the objective function (9) subject to this normalization and to being orthogonal to the previously computed directions.
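A minimal sketch of the penalized solver, assuming NumPy and SciPy. `scipy.linalg.eigh(S_T, B)` returns eigenvectors normalized so that $v^T B v = 1$ and mutually $B$-orthogonal, where $B = S_W + \alpha I$; the function below sorts them by decreasing eigenvalue and rescales each to unit Euclidean norm for display. The function name `penalized_flda` and the example data are hypothetical.

```python
import numpy as np
from scipy.linalg import eigh

def penalized_flda(X, y, alpha, k=None):
    """Solve S_T w = lambda (S_W + alpha I) w (equation (10)).

    Returns the k largest generalized eigenvalues (descending) and the
    corresponding directions as unit-norm columns (all of them if k is None).
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)
    S_T = Xc.T @ Xc                                   # total scatter matrix
    S_W = np.zeros_like(S_T)
    for c in np.unique(y):
        D = X[y == c] - X[y == c].mean(axis=0)
        S_W += D.T @ D                                # within-class scatter matrix
    B = S_W + alpha * np.eye(S_T.shape[0])            # penalized denominator of (9)
    evals, evecs = eigh(S_T, B)                       # ascending; B positive definite for alpha > 0
    order = np.argsort(evals)[::-1][:k]
    W = evecs[:, order]
    return evals[order], W / np.linalg.norm(W, axis=0)

# Hypothetical data: large shared spread on the first axes, class shift on the last one
rng = np.random.default_rng(3)
spread = np.array([2.0, 1.0, 0.5, 0.2])
X = np.vstack([rng.normal(size=(100, 4)) * spread,
               rng.normal(size=(100, 4)) * spread + [0.0, 0.0, 0.0, 0.4]])
y = np.repeat([0, 1], 100)
lams, W = penalized_flda(X, y, alpha=50.0)
```

The columns of `W` play the role of the ranked discriminating modes; each can be visualized as $\bar{x} + \gamma w$ in the same way as in Section 2.2.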

2.4. Relationship with FLDA and PCA

If we set the parameter α = 0, the objective function defined in equation (9) reduces to the criterion (7), so optimizing it is the same as optimizing the traditional FLDA criterion. On the other hand, when α → ∞, the generalized eigenvalue problem associated with equation (10) can be rewritten as $S_T w = \lambda' w$ (because $S_T w = \lambda (S_W + \alpha I) w = \lambda \alpha (S_W / \alpha + I) w$ and $S_W / \alpha \to 0$), which is the well-known PCA solution with the same eigenvectors and eigenvalues $\lambda' = \alpha \lambda$. By changing the penalty parameter α from 0 to ∞, the solution of the modified FLDA problem described in (9) ranges from the traditional FLDA solution to the PCA one.
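The two limits can be observed numerically. In this sketch (NumPy and SciPy assumed, hypothetical anisotropic two-class data), the leading penalized direction at α = 0 coincides with the FLDA direction $S_W^{-1}(\mu_1 - \mu_2)$, and for very large α it aligns with the leading eigenvector of $S_T$; the loop prints the absolute cosines for intermediate values.

```python
import numpy as np
from scipy.linalg import eigh

rng = np.random.default_rng(2)
spread = np.array([2.0, 1.0, 0.3])
X1 = rng.normal(size=(150, 3)) * spread
X2 = rng.normal(size=(150, 3)) * spread + np.array([0.0, 0.0, 0.5])
X = np.vstack([X1, X2])
Xc = X - X.mean(axis=0)
S_T = Xc.T @ Xc                                                   # total scatter
S_W = sum((Z - Z.mean(axis=0)).T @ (Z - Z.mean(axis=0)) for Z in (X1, X2))  # within-class scatter

def top_direction(alpha):
    """Leading solution of S_T w = lambda (S_W + alpha I) w, scaled to unit norm."""
    _, V = eigh(S_T, S_W + alpha * np.eye(3))
    w = V[:, -1]
    return w / np.linalg.norm(w)

w_flda = np.linalg.solve(S_W, X1.mean(axis=0) - X2.mean(axis=0))  # two-class FLDA
w_flda /= np.linalg.norm(w_flda)
w_pca = np.linalg.eigh(S_T)[1][:, -1]                              # leading PCA direction

for alpha in [0.0, 1e1, 1e3, 1e8]:
    w = top_direction(alpha)
    print(f"alpha={alpha:g}: |cos(w, FLDA)|={abs(w @ w_flda):.4f}, |cos(w, PCA)|={abs(w @ w_pca):.4f}")
```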

2.5. Parameter selection for α

The discriminant direction computed by equation (10) can be regarded as a function $w(\alpha)$ of the parameter α. For a given problem or application, one must select an appropriate value of α to ensure meaningful results. If α is too low, problems related to poor representation (as well as ill-conditioning of the associated eigenvalue problem) can occur; if it is too high, little or no discriminating information will be contained in the solution. We propose to select α as close to zero as possible while also being stable, in the sense that a small variation in α does not yield a large change in the computed direction $w(\alpha)$. To that end, in each problem demonstrated below we numerically compute the magnitude of the derivative $dw(\alpha)/d\alpha$ (normalized by $m$, the dimensionality of $w$) and compare it to a fixed threshold ($10^{-4}$ in this paper). Several values of α are scanned, starting from zero (or close to zero when the system is ill-conditioned), and the first value of α for which this normalized derivative falls below $10^{-4}$ is chosen for that data set.
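The α scan can be sketched as follows, assuming NumPy and SciPy. The exact normalization of the derivative is not spelled out in the text, so the forward difference $\|w(\alpha_{k+1}) - w(\alpha_k)\| / ((\alpha_{k+1} - \alpha_k)\, m)$ used here is an assumption; because eigenvectors are only defined up to sign, each new direction is sign-aligned with the previous one before differencing. The grid, the data, and the function names are hypothetical.

```python
import numpy as np
from scipy.linalg import eigh

def top_direction(S_T, S_W, alpha):
    """Leading solution of S_T w = lambda (S_W + alpha I) w, scaled to unit norm."""
    _, V = eigh(S_T, S_W + alpha * np.eye(S_T.shape[0]))
    w = V[:, -1]
    return w / np.linalg.norm(w)

def select_alpha(S_T, S_W, alphas, tol=1e-4):
    """First alpha in an increasing grid at which w(alpha) is stable.

    dw/dalpha is approximated by a forward difference and its norm is divided
    by m = dim(w); this normalization is an assumption.
    """
    m = S_T.shape[0]
    w_prev = top_direction(S_T, S_W, alphas[0])
    for a_prev, a in zip(alphas[:-1], alphas[1:]):
        w = top_direction(S_T, S_W, a)
        if w @ w_prev < 0:                    # eigenvectors are only defined up to sign
            w = -w
        rate = np.linalg.norm(w - w_prev) / ((a - a_prev) * m)
        if rate < tol:
            return a_prev
        w_prev = w
    return alphas[-1]                         # threshold never met within the scanned grid

# Hypothetical two-class data in R^5
rng = np.random.default_rng(4)
scales = np.array([3.0, 2.0, 1.0, 0.5, 0.2])
X1 = rng.normal(size=(100, 5)) * scales
X2 = rng.normal(size=(100, 5)) * scales + np.array([0.0, 0.0, 0.0, 0.0, 0.3])
X = np.vstack([X1, X2])
S_T = (X - X.mean(axis=0)).T @ (X - X.mean(axis=0))
S_W = sum((Z - Z.mean(axis=0)).T @ (Z - Z.mean(axis=0)) for Z in (X1, X2))
alphas = np.linspace(1.0, 2000.0, 400)        # scan starting close to zero
alpha_star = select_alpha(S_T, S_W, alphas)
```

Because the threshold is absolute, the selected α depends on the scale of the data; returning the last grid value when no step meets the threshold is a design choice of this sketch.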

2.6. "Kernelizing" the modified FLDA

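Morphometry problems, in particular in biology and medicine, often involve high-dimensional data (e.g., three-dimensional deformation fields [20, 21]). Computing the full covariance matrices involved in such problems is often infeasible. To address this problem, the technique proposed above can be "kernelized", in an approach similar to the one described in [28]. Let $\Phi: \mathbb{R}^n \to \mathbb{R}^m$ be a mapping to a higher-dimensional feature space. The modified FLDA criterion defined in equation (9) then becomes:

$$ J_\alpha(w) = \frac{w^T S_T^{\Phi} w}{w^T (S_W^{\Phi} + \alpha I)\, w} \tag{11} $$

where $S_T^{\Phi} = \sum_{i=1}^{N}(\Phi(x_i) - \bar{\Phi})(\Phi(x_i) - \bar{\Phi})^T$ and $S_W^{\Phi} = \sum_c \sum_{i \in c}(\Phi(x_i) - \bar{\Phi}_c)(\Phi(x_i) - \bar{\Phi}_c)^T$ are the total and within-class scatter matrices of the mapped data, with $\bar{\Phi}$ and $\bar{\Phi}_c$ the means of the mapped data over the whole set and over class $c$. If we assume $w = \sum_{i=1}^{N} a_i \Phi(x_i)$, equation (11) can be transformed to:

$$ J_\alpha(a) = \frac{a^T T a}{a^T (K_W + \alpha G)\, a} \tag{12} $$

where $G$, with $(G)_{i,j} := \Phi(x_i)^T \Phi(x_j)$, is an $N \times N$ matrix; $K_W = \sum_c K_c (I - 1_{N_c}) K_c^T$, where $K_c$ is an $N \times N_c$ matrix with $(K_c)_{i,j} := \Phi(x_i)^T \Phi(x_j)$ for $x_j \in c$; $T = G(I - 1_N)G^T$ is an $N \times N$ matrix; and $1_N$ (respectively $1_{N_c}$) is an $N \times N$ ($N_c \times N_c$) matrix with all entries equal to $1/N$ ($1/N_c$). Although the computational examples shown below are of low enough dimension not to require such an approach, we anticipate that the kernel version of our method will be useful in higher-dimensional morphometry problems.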
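A minimal sketch of the kernel form (12), assuming NumPy and SciPy. $\Phi$ is never formed explicitly; only the Gram matrix is needed. The Gaussian (RBF) kernel, its width `gamma`, and the small ridge `eps` that keeps the denominator positive definite are illustrative assumptions, not choices made in the text. Projections of the training points onto $w$ are obtained as $G a$, since $w^T \Phi(x_j) = \sum_i a_i\, \Phi(x_i)^T \Phi(x_j)$.

```python
import numpy as np
from scipy.linalg import eigh

def rbf_gram(X, gamma):
    """Gram matrix G_ij = exp(-gamma ||x_i - x_j||^2); the RBF kernel is an illustrative choice."""
    sq = np.sum(X**2, axis=1)
    D2 = np.maximum(sq[:, None] + sq[None, :] - 2 * X @ X.T, 0.0)
    return np.exp(-gamma * D2)

def kernel_matrices(G, y):
    """T = G (I - 1_N) G^T and K_W = sum_c K_c (I - 1_{N_c}) K_c^T from equation (12)."""
    N = G.shape[0]
    T = G @ (np.eye(N) - np.full((N, N), 1.0 / N)) @ G.T
    K_W = np.zeros((N, N))
    for c in np.unique(y):
        K_c = G[:, y == c]                      # N x N_c block: columns of class c
        N_c = K_c.shape[1]
        K_W += K_c @ (np.eye(N_c) - np.full((N_c, N_c), 1.0 / N_c)) @ K_c.T
    return T, K_W

def kernel_penalized_flda(G, T, K_W, alpha, eps=1e-6):
    """Coefficients a (columns, best first) maximizing a^T T a / a^T (K_W + alpha G) a.

    eps * I is a small ridge (an assumption) keeping the denominator positive definite.
    """
    B = K_W + alpha * G + eps * np.eye(G.shape[0])
    evals, A = eigh(T, B)
    return evals[::-1], A[:, ::-1]

# Hypothetical data: two 2-D Gaussian classes
rng = np.random.default_rng(5)
X = np.vstack([rng.normal(size=(30, 2)), rng.normal(size=(30, 2)) + [2.0, 0.0]])
y = np.repeat([0, 1], 30)
G = rbf_gram(X, gamma=0.5)
T, K_W = kernel_matrices(G, y)
evals, A = kernel_penalized_flda(G, T, K_W, alpha=1.0)
projections = G @ A[:, 0]     # w^T Phi(x_j) = sum_i a_i k(x_i, x_j) for the training points
```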

3. Results

3.1. Simulated experiments

We tested the modified FLDA method above on the simulated data set depicted in Figure 1, comparing the result of the FLDA method (Fig 1 (A)) with that of our modified FLDA method (α = 500, Fig 1 (B)). As mentioned above, the value of α was chosen automatically as the first one satisfying dw(α)/dα < 10⁻⁴; the same criterion was used for all the experiments described in this section. The method we propose does indeed recover the information that discriminates between the two populations (in this case, the length of each line). While this is not necessarily the most discriminating information in the FLDA sense, it is the most discriminating information that is well populated by the data, in the sense made explicit by equation (9). In this specific simulation the modified FLDA method yields the same result as the standard PCA method would; however, as the examples below show, that is not a general rule.

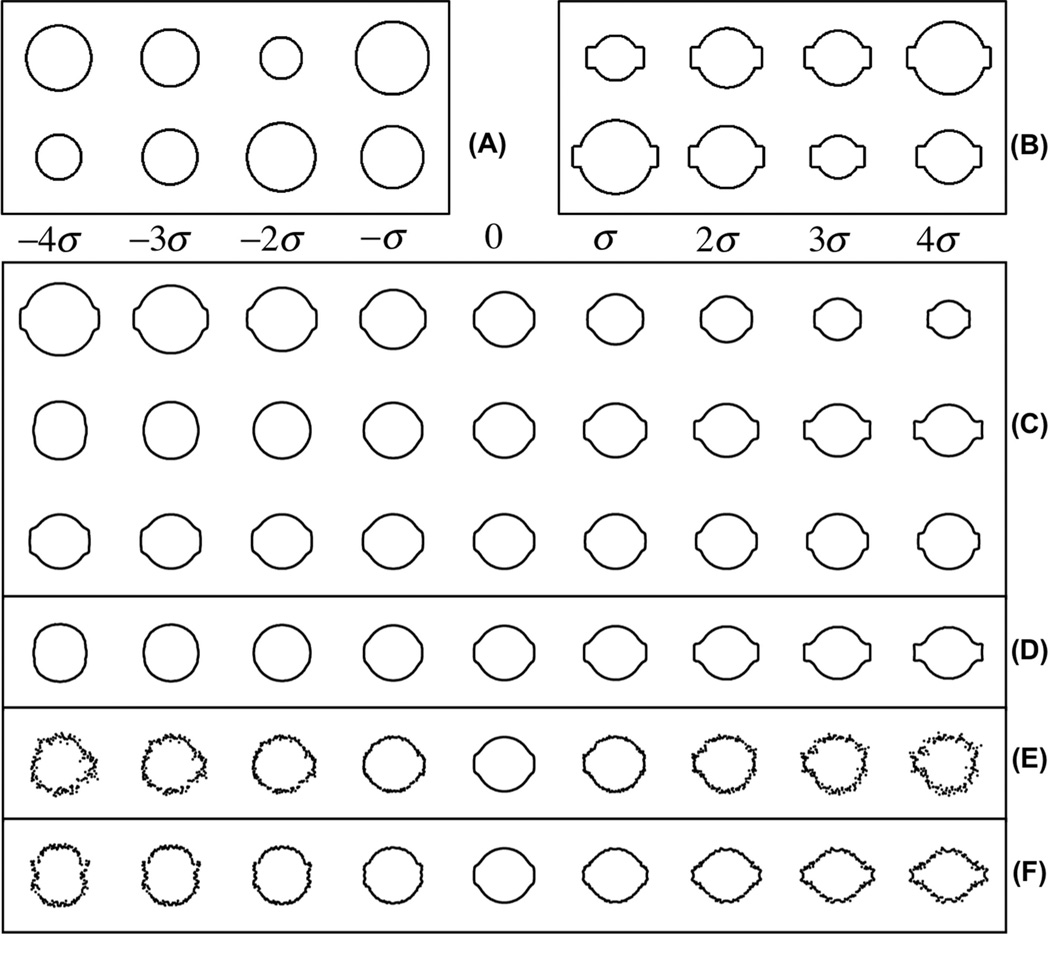

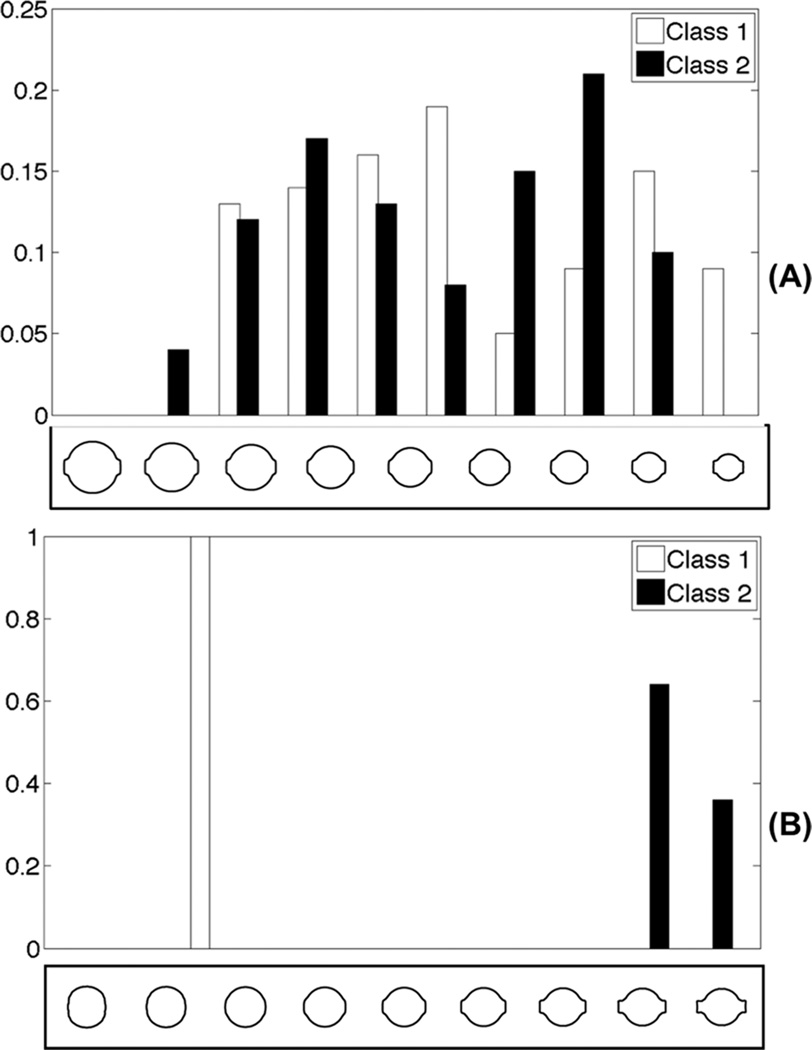

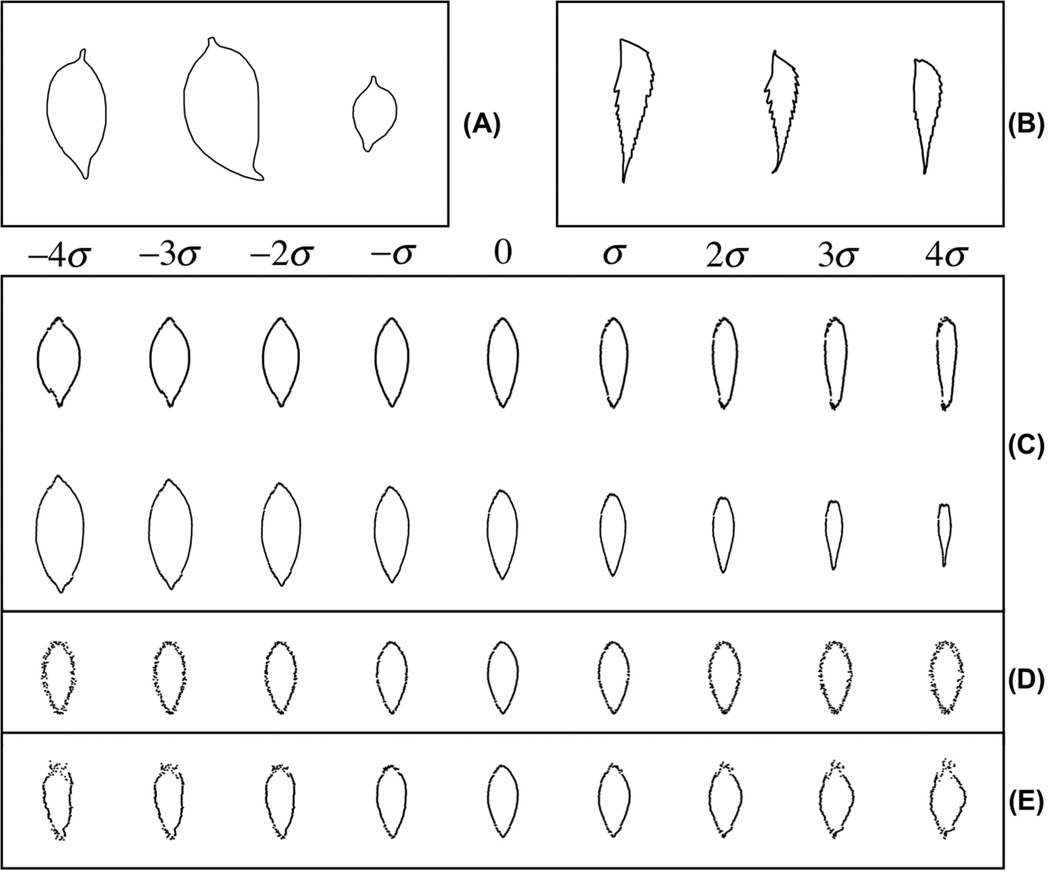

We also tested the modified FLDA method on another simulated data set, in which two classes of shapes were analyzed. One class was composed of circles (Fig 2(A)), and the other of circles with square protrusions emanating from opposite sides (Fig 2(B)). We used 100 samples for each class, and in each class the radii of the circles ranged uniformly from 0.2 to 0.8. The images were generated so that the discriminating information is expected to be the rectangular protrusion. We used the contour-based approach to extract 90 sample points along the contour of each image, and used both [X, Y] coordinates of the sample points, so that each image was mapped to a point in a 180-dimensional vector space. In Fig 2(C), the first three PCA modes (computed using both classes) are shown. The first mode of variation is related to circle size, and the second seems to show the difference in shape. In Fig 2(D), we show the discriminating mode computed by the modified FLDA method (α = 800). The method successfully recovers the discriminating information in the data set without being confused by misleading information such as size and the shape of the ovals. To verify that the projection recovered by the modified FLDA is indeed more discriminating than the size (radii) of the circles, we project the data onto the directions found by PCA (the first mode) and by the modified FLDA (Fig 3(A) and (B)). As can be seen from this figure, by construction, the differences in shape (rectangular protrusions) are more discriminating than the differences in size. We also applied the traditional FLDA (without regularization) to this simulated data; the results are shown in Fig 2(E). Although the generalized eigenvalue problem can be solved and some discriminant information can be detected, the result cannot be easily visualized: the contours start to break, and sample points along the contour move irregularly.

In addition, we compare with the methods described in [29, 24], where PCA and FLDA are applied sequentially. In the PCA step, we discard all eigenvectors whose corresponding eigenvalue is smaller than a threshold (set at 0.1% of the largest eigenvalue). The result, shown in Fig 2(F), indicates that although some interpretable discriminant information can be detected, the direction obtained suffers from artifacts similar to those of the traditional FLDA method. We also compared the classification accuracy obtained by the three methods (traditional FLDA, sequential PCA and FLDA, and our penalized FLDA method) using K-fold (K = 10) cross-validation [32]: the whole data set was split into 10 parts; in each fold, one part was left out for testing, the remaining parts were used to compute the discriminant directions with each of the three methods, and these directions were used to classify the test part. The procedure was repeated until each part had been left out once, and the final classification accuracy was computed. The classification accuracies obtained were 90% (traditional FLDA), 98% (PCA plus FLDA), and 100% (penalized FLDA).
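The cross-validation protocol can be sketched as follows, assuming NumPy and SciPy. The text does not state which classifier was applied to the projected data, so the nearest projected class mean rule used here is an assumption, as are the fixed α, the random fold assignment, and the data; the discriminant direction is recomputed from the training folds only.

```python
import numpy as np
from scipy.linalg import eigh

def penalized_direction(X, y, alpha):
    """Leading solution of S_T w = lambda (S_W + alpha I) w (equation (10))."""
    Xc = X - X.mean(axis=0)
    S_T = Xc.T @ Xc
    S_W = np.zeros_like(S_T)
    for c in np.unique(y):
        D = X[y == c] - X[y == c].mean(axis=0)
        S_W += D.T @ D
    _, V = eigh(S_T, S_W + alpha * np.eye(X.shape[1]))
    return V[:, -1]

def kfold_accuracy(X, y, alpha, K=10, seed=0):
    """K-fold cross-validated accuracy of a nearest projected class mean rule (an assumption)."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(X)), K)
    correct = 0
    for test_idx in folds:
        train = np.ones(len(X), dtype=bool)
        train[test_idx] = False                       # leave one part out
        Xtr, ytr = X[train], y[train]
        w = penalized_direction(Xtr, ytr, alpha)      # direction from training data only
        classes = np.unique(ytr)
        centers = np.array([(Xtr[ytr == c] @ w).mean() for c in classes])
        proj = X[test_idx] @ w
        pred = classes[np.argmin(np.abs(proj[:, None] - centers[None, :]), axis=1)]
        correct += np.sum(pred == y[test_idx])
    return correct / len(X)

# Hypothetical, well-separated two-class data
rng = np.random.default_rng(7)
X = np.vstack([rng.normal(size=(100, 3)), rng.normal(size=(100, 3)) + [5.0, 0.0, 0.0]])
y = np.repeat([0, 1], 100)
acc = kfold_accuracy(X, y, alpha=10.0)
```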

Figure 2.

Principal variations and discriminant information computed for a simulated data set. A: Sample images from the first class. B: Sample images from the second class. C: First 3 principal variations computed by Principal Component Analysis (PCA). D: Discriminant variation computed by the penalized FLDA method. E: Discriminant variation computed by directly applying the traditional FLDA to this simulated data. F: Discriminant variation computed by sequentially applying PCA and then FLDA to this simulated data.

Figure 3.

Histograms of data projected onto different directions. A: Histogram of the projections onto the direction computed by the PCA method. B: Histogram of the projections onto the direction computed by the modified FLDA method.

3.2. Real data experiments

We applied the modified FLDA method to a real biomedical image data set to quantify the difference in nuclear morphology between normal and cancerous cell nuclei. The raw data consisted of histopathology images from five cases of liver hepatoblastoma (HB), each also containing adjacent normal liver tissue (NL). The data were taken from the archives of the Children's Hospital of Pittsburgh and are described in more detail in [18, 35]; the data set is available online [36]. Briefly, the images were segmented by a semi-automatic method involving level set contour extraction, and the nuclei were normalized for translation, rotation, and coordinate inversion (flips) as described in our earlier work [11, 18, 35]. The data set we used consisted of 500 nuclear contours: 250 from NL and 250 from HB tissue. Sample images are shown in Fig 4(A)(B) for the HB and NL classes, respectively. The contour of each nucleus was mapped to a 180-dimensional vector $x_i$ as described earlier.

Figure 4.

Discriminant information computed for real liver nuclei data. A: Sample nuclear contours from the cancerous tissue (HB). B: Sample nuclear contours from normal tissue (NL). C: First three discriminating modes computed by the modified FLDA method. D: Discriminant variation computed by directly applying the traditional FLDA method on this data. E: Discriminant variation computed by sequentially applying PCA then FLDA on this data. See text for more details.

Fig 4(C) contains the first three discriminating modes computed by the modified FLDA method (with α = 600). For this specific cancer, a combination of size differences and protrusions is the most discriminating information. The elongation of nuclei is the second most discriminating morphological information (orthogonal to the first). The third direction contains a protrusion effect. The p-values of the t-test for the three directions are 0.0021, 0.071, and 0.27, respectively. As in the previous experiment, we also applied the traditional FLDA method to the contours in this data set (Fig 4(D)). Some sample points on the contours move perpendicularly to the contour with relatively large variation, while others remain unchanged; the direction computed by FLDA does not seem to capture visually interpretable information. As in Section 3.1, we also applied the method described in [29]; the result is shown in Fig 4(E). Finally, classification accuracies for the three methods were computed as described above: 76% (traditional FLDA), 79% (PCA plus FLDA), and 81% (penalized FLDA).
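The per-direction p-values can be computed by projecting the data onto each discriminating mode and running a two-sample t-test on the projections. This sketch assumes NumPy and SciPy and uses Welch's version (equation (3)) via `scipy.stats.ttest_ind(..., equal_var=False)`; the text does not state which variant was used, and the directions and data below are hypothetical.

```python
import numpy as np
from scipy.stats import ttest_ind

def direction_p_values(X, y, W):
    """Welch t-test p-value of the two-class projections onto each column of W."""
    classes = np.unique(y)
    if len(classes) != 2:
        raise ValueError("a two-class problem is expected")
    P = X @ W                                      # one column of projections per direction
    result = ttest_ind(P[y == classes[0]], P[y == classes[1]], axis=0, equal_var=False)
    return result.pvalue

# Hypothetical data: classes differ along the first axis only
rng = np.random.default_rng(8)
X = np.vstack([rng.normal(size=(100, 2)), rng.normal(size=(100, 2)) + [1.5, 0.0]])
y = np.repeat([0, 1], 100)
W = np.eye(2)                                      # hypothetical directions: the two axes
p = direction_p_values(X, y, W)
```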

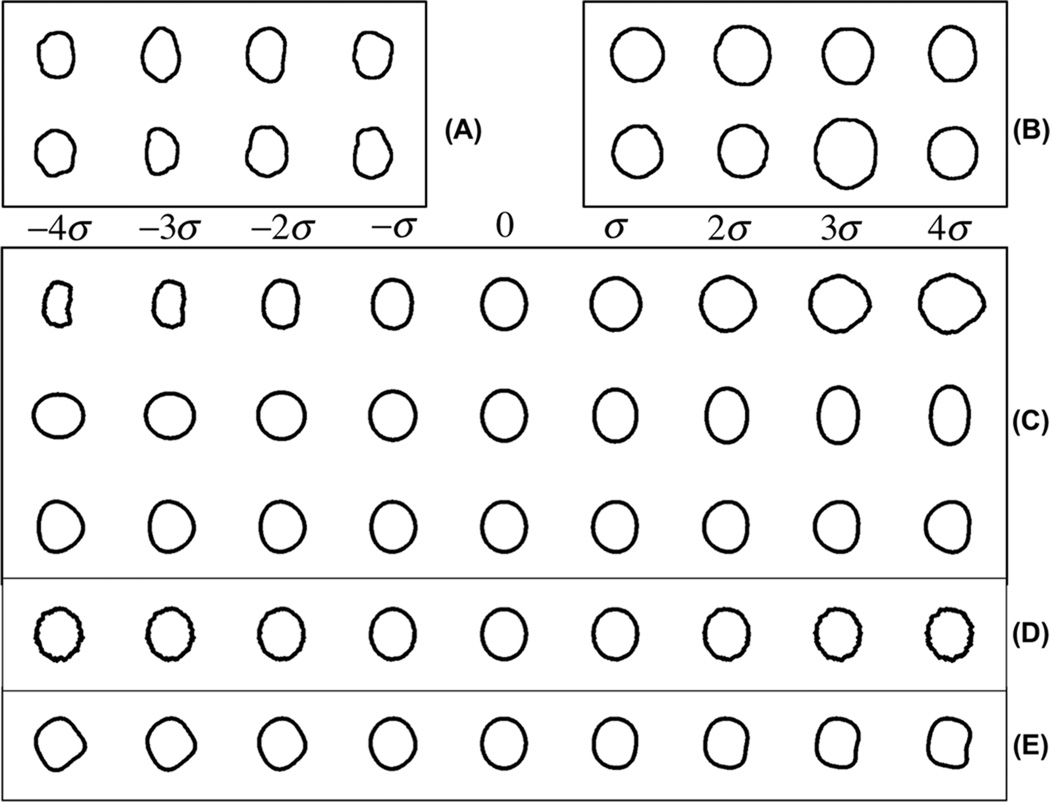

We also applied the penalized FLDA method to a leaf shape data set [37] to quantify the difference in morphology between two types of leaves. The raw data consisted of gray-level images of different classes of leaves of roughly the same size, with 10 images per class. Sample images for the two types of leaves are shown in Fig 5(A)(B), respectively. The leaf contours are provided with the data set, and we followed the same procedures as described earlier to preprocess them. In Fig 5(C), we plot the first two discriminating modes of variation computed by the modified FLDA (with α = 200). The first discriminating mode successfully detects elongation differences as the discriminant information for this data set. The second discriminating mode reflects size differences combined with shape differences. The p-values of the t-tests on these directions were 3.09 × 10⁻⁵ and 0.056, respectively. In Fig 5(D), we show the discriminating mode computed by the traditional FLDA method. In Fig 5(E), we show the discriminant variation computed by sequentially applying PCA and then FLDA (as before, eigenvectors with eigenvalues below 0.1% of the largest eigenvalue were discarded in the PCA step to avoid ill-conditioning). Because the data set contains only 10 images per class, we do not report classification accuracies in this case.

Figure 5.

Discriminant information computed for leaf dataset. A: Sample images for one class of leaves. B: Sample images for another class of leaves. C: First two discriminant variations computed by our modified FLDA method. D: Discriminant variation computed by directly applying the traditional FLDA method on this data. E: Discriminant variation computed by sequentially applying PCA then FLDA on this data. See text for more details.

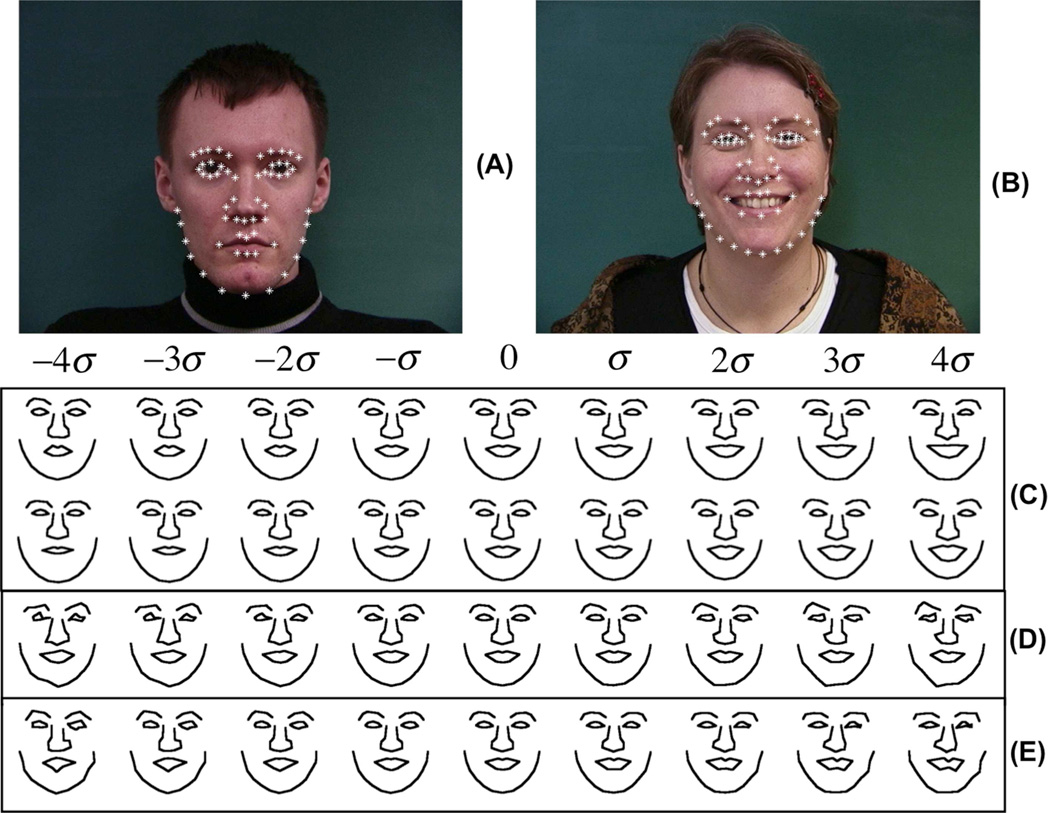

Finally, we also applied the penalized FLDA method to a facial image data set to quantify the difference between two groups. The data are described in [31] and available online [38]. The manually annotated landmarks (on the eyebrows, eyes, nose, mouth, and jaw) were used in our analysis. Generalised Procrustes Analysis (GPA) was used to eliminate differences in translation, orientation, and scale, so that each face $I_i$ was represented by a 116-dimensional vector $x_i$. The data set we chose contained two classes: faces with a neutral expression and smiling faces. As in the previous experiments, we compared the first two modes of variation computed by the penalized FLDA method (with α = 500) with the results of the traditional FLDA method and of sequentially applying PCA and then FLDA (again with a threshold of 0.1% of the largest eigenvalue). Figure 6 shows the corresponding results. The p-values for the two directions computed by the penalized FLDA procedure were 5.88 × 10⁻⁵ and 2.91 × 10⁻³. Classification accuracies were 92% (traditional FLDA), 88% (PCA plus FLDA), and 91% (penalized FLDA).
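The GPA normalization can be sketched as a basic iterative variant, assuming NumPy: each shape is centered, scaled to unit centroid size, and repeatedly rotated onto the current mean with the orthogonal Procrustes solution (reflections excluded), until the mean stops changing. The text does not specify the GPA implementation used; the function names, the 58-landmark example (58 × 2 = 116 coordinates), and the synthetic shapes are hypothetical.

```python
import numpy as np

def rotation_to(A, B):
    """Rotation R (det = +1) minimizing ||A R - B||_F for (n, 2) landmark arrays."""
    U, _, Vt = np.linalg.svd(A.T @ B)
    s = 1.0 if np.linalg.det(U @ Vt) >= 0 else -1.0    # exclude reflections
    return U @ np.diag([1.0, s]) @ Vt

def generalized_procrustes(shapes, n_iter=50, tol=1e-12):
    """Remove translation, scale and rotation from a list of (n, 2) landmark arrays."""
    X = [s - s.mean(axis=0) for s in shapes]            # remove translation
    X = [s / np.linalg.norm(s) for s in X]              # remove scale (unit centroid size)
    mean = X[0]
    for _ in range(n_iter):
        X = [s @ rotation_to(s, mean) for s in X]       # rotate each shape onto the mean
        new_mean = np.mean(X, axis=0)
        new_mean /= np.linalg.norm(new_mean)
        if np.linalg.norm(new_mean - mean) < tol:
            break
        mean = new_mean
    return np.array(X), mean

# Hypothetical example: one 58-landmark shape under random similarity transforms
rng = np.random.default_rng(9)
base = rng.normal(size=(58, 2))
shapes = []
for _ in range(5):
    th = rng.uniform(0, 2 * np.pi)
    R = np.array([[np.cos(th), -np.sin(th)], [np.sin(th), np.cos(th)]])
    shapes.append(rng.uniform(0.5, 2.0) * base @ R.T + rng.normal(size=2))
aligned, mean_shape = generalized_procrustes(shapes)
vectors = aligned.reshape(len(shapes), -1)              # one 116-dimensional vector per shape
```

Since every synthetic shape is a similarity transform of the same base shape, all aligned copies coincide; with real annotations, the residual differences are the shape variation analyzed by the discriminant methods.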

Figure 6.

Discriminant information computed for face data. A: Sample image with a neutral expression. B: Sample image of a smiling face. C: First two discriminant variations computed by the modified FLDA method; the modified FLDA method correctly detects the difference in facial expression. D: Discriminant variation computed by directly applying the traditional FLDA method to this data. E: Discriminant variation computed by sequentially applying PCA and then FLDA to this data.

4. Summary and discussion

Quantifying the information that differs between two groups of objects is an important problem in biology, medicine, and morphological analysis in general. We have shown that applying the standard FLDA criterion (other discrimination methods can suffer from similar shortcomings; see for example [17]) can lead to erroneous interpretations that are not necessarily related to ill-conditioning of the data covariance matrix. We showed that the regularized version of the associated generalized eigenvalue problem is related to minimizing a modified cost function that combines the standard FLDA term with a least squares-type criterion. The method yields a family of solutions that varies according to the weight (α) applied to the least squares-type penalty term. At one extreme (α = 0) the solution equals that of the traditional FLDA method, while at the other extreme (α → ∞) it approaches that of the standard PCA method. We also described a method for choosing an appropriate weight for the penalty term. We note again that while others have used the same regularized version of the associated generalized eigenvalue problem (see [28] for an example), to the best of our knowledge no geometric explanation for this regularization has been given. We also note that the method we propose tends to select values of α much larger than those often used.

We applied the method to several discrimination tasks using both real and simulated data, and compared the results with those generated by other methods. In most cases the traditional FLDA solution can be computed (ill-conditioning is not an issue), but its results are not always visually interpretable (e.g., they are far from being closed contours). Likewise, applying PCA and FLDA serially (as in the method described in [29]) can also yield uninterpretable results, since the FLDA step is ultimately applied independently of the PCA step. Moreover, as shown in Fig 1(C), even if PCA is used to discard the eigenvectors corresponding to small eigenvalues, the direction computed by the traditional FLDA is not guaranteed to be well populated by data. The results show that the penalized FLDA method we describe can help overcome these limitations.

Finally, we emphasize that although we have used contours and landmarks extracted from image data as our linear embeddings, it is possible to use the same method on other linear embeddings (for example [12]). For some such linear embeddings, however, distance measurements, projections over directions, etc., over large distances (large deformations) may not be appropriate. In such cases we believe the same modified FLDA method could be used locally, in an idea similar to that presented in [39].

Highlights

- We modify the Fisher Discriminant Analysis method to include an average distance penalty.
- We apply the modified FDA method to problems in image-based morphometry and compare its results to other methods.
- We build discriminant representative models to interpret differences in morphometry in two populations.

Acknowledgements

This work was partially supported by NIH grant 5R21GM088816. The authors thank Dr. Dejan Slepcev and Dr. Ann B. Lee of Carnegie Mellon University for discussions related to this topic.

Appendix A

We note that the term defined in equation (4) contains b, which multiplies the terms containing w. Since the term should be minimal for all possible choices of w, b can be chosen independently of w. Therefore, we can first focus on . It is equivalent to

| (A.1) |

Differentiating equation (A.1) with respect to b and setting the result to 0, we have that . The optimal b* satisfies .


References

- 1. Papanicolaou G. New cancer diagnosis. CA: A Cancer Journal for Clinicians. 1973;23:174.

- 2. Thomson J. On growth and form. Nature. 1917;100:21–22.

- 3. Fisher RA. The use of multiple measurements in taxonomic problems. Annals of Eugenics. 1936;7:179–188.

- 4. Prewitt J, Mendelsohn M. The analysis of cell images. Annals of the New York Academy of Sciences. 1965;128:1035–1053. doi: 10.1111/j.1749-6632.1965.tb11715.x.

- 5. Kendall DG. Shape manifolds, Procrustean metrics, and complex projective spaces. Bull Lond Math Soc. 1984;16:81–121.

- 6. Bookstein FL. The Measurement of Biological Shape and Shape Change. Springer; 1978.

- 7. Grenander U, Miller MI. Computational anatomy: an emerging discipline. Quart Appl Math. 1998;56:617–694.

- 8. Blum H, et al. A transformation for extracting new descriptors of shape. Models for the Perception of Speech and Visual Form. 1967;19:362–380.

- 9. Pincus Z, Theriot JA. Comparison of quantitative methods for cell-shape analysis. J Microsc. 2007;227:140–156. doi: 10.1111/j.1365-2818.2007.01799.x.

- 10. Zhao T, Murphy RF. Automated learning of generative models for subcellular location: building blocks for systems biology. Cytometry A. 2007;71A:978–990. doi: 10.1002/cyto.a.20487.

- 11. Rohde GK, Ribeiro AJS, Dahl KN, Murphy RF. Deformation-based nuclear morphometry: capturing nuclear shape variation in HeLa cells. Cytometry A. 2008;73:341–350. doi: 10.1002/cyto.a.20506.

- 12. Rueckert D, Frangi AF, Schnabel JA. Automatic construction of 3-D statistical deformation models of the brain using nonrigid registration. IEEE Trans Med Imaging. 2003;22:1014–1025. doi: 10.1109/TMI.2003.815865.

- 13. Fletcher PT, Lu CL, Pizer SM, Joshi S. Principal geodesic analysis for the study of nonlinear statistics of shape. IEEE Trans Med Imaging. 2004;23:995–1005. doi: 10.1109/TMI.2004.831793.

- 14. Makrogiannis S, Verma R, Davatzikos C. Anatomical equivalence class: a morphological analysis framework using a lossless shape descriptor. IEEE Trans Med Imaging. 2007;26:619–631. doi: 10.1109/TMI.2007.893285.

- 15. Vaillant M, Miller M, Younes L, Trouvé A. Statistics on diffeomorphisms via tangent space representations. NeuroImage. 2004;23:S161–S169. doi: 10.1016/j.neuroimage.2004.07.023.

- 16. Golland P, Grimson W, Shenton M, Kikinis R. Detection and analysis of statistical differences in anatomical shape. Medical Image Analysis. 2005;9:69–86. doi: 10.1016/j.media.2004.07.003.

- 17. Zhou L, Lieby P, Barnes N, Réglade-Meslin C, Walker J, Cherbuin N, Hartley R. Hippocampal shape analysis for Alzheimer's disease using an efficient hypothesis test and regularized discriminative deformation. Hippocampus. 2009;19:533–540. doi: 10.1002/hipo.20639.

- 18. Wang W, Ozolek JA, Slepcev D, Lee AB, Chen C, Rohde GK. An optimal transportation approach for nuclear structure-based pathology. IEEE Trans Med Imaging. 2010. doi: 10.1109/TMI.2010.2089693.

- 19. Wang W, Chen C, Peng T, Slepcev D, Ozolek JA, Rohde GK. A graph-based method for detecting characteristic phenotypes from biomedical images. Proc IEEE Int Symp Biomed Imaging. pp. 129–132.

- 20. Miller MI, Priebe CE, Qiu A, Fischl B, Kolasny A, Brown T, Park Y, Ratnanather JT, Busa E, Jovicich J, Yu P, Dickerson BC, Buckner RL. Collaborative computational anatomy: an MRI morphometry study of the human brain via diffeomorphic metric mapping. Hum Brain Mapp. 2009;30:2132–2141. doi: 10.1002/hbm.20655.

- 21. Wang L, Beg F, Ratnanather T, Ceritoglu C, Younes L, Morris J, Csernansky J, Miller M. Large deformation diffeomorphism and momentum based hippocampal shape discrimination in dementia of the Alzheimer type. IEEE Trans Med Imaging. 2007;26:462–470. doi: 10.1109/TMI.2005.853923.

- 22. Wang S-L, Wu M-T, Yang S-F, Chan H-M, Chai C-Y. Computerized nuclear morphometry in thyroid follicular neoplasms. Pathol Int. 2005;55:703–706. doi: 10.1111/j.1440-1827.2005.01895.x.

- 23. Wolfe P, Murphy J, McGinley J, Zhu Z, Jiang W, Gottschall EB, Thompson HJ. Using nuclear morphometry to discriminate the tumorigenic potential of cells: a comparison of statistical methods. Cancer Epidemiol Biomarkers Prev. 2004;13:976–988.

- 24. Yu H, Yang J. A direct LDA algorithm for high-dimensional data, with application to face recognition. Pattern Recognition. 2001;34:2067.

- 25. Bouveyron C, Girard S, Schmid C. High-dimensional discriminant analysis. Communications in Statistics: Theory and Methods. 2007;36:2607–2623.

- 26. Friedman J. Regularized discriminant analysis. Journal of the American Statistical Association. 1989;84:165–175.

- 27. Zhang Z, Dai G, Xu C, Jordan M. Regularized discriminant analysis, ridge regression and beyond. Journal of Machine Learning Research. 2010;11:2199–2228.

- 28. Mika S, Rätsch G, Weston J, Schölkopf B, Müller KR. Fisher discriminant analysis with kernels. Proceedings of the 1999 IEEE Signal Processing Society Workshop, Neural Networks for Signal Processing IX. pp. 41–48.

- 29. Belhumeur P, Hespanha J, Kriegman D. Eigenfaces vs. Fisherfaces: recognition using class specific linear projection. IEEE Trans Pattern Anal Mach Intell. 1997;19:711–720.

- 30. Cootes T, Taylor C, Cooper D, Graham J, et al. Active shape models: their training and application. Computer Vision and Image Understanding. 1995;61:38–59.

- 31. Stegmann M, Ersbøll B, Larsen R. FAME: a flexible appearance modeling environment. IEEE Trans Med Imaging. 2003;22:1319–1331. doi: 10.1109/tmi.2003.817780.

- 32. Bishop CM. Pattern Recognition and Machine Learning. Springer; 2006. (Information Science and Statistics)

- 33. Welch B. The generalization of "Student's" problem when several different population variances are involved. Biometrika. 1947;34:28. doi: 10.1093/biomet/34.1-2.28.

- 34. Fukunaga K. Introduction to Statistical Pattern Recognition. Academic Press; 1990.

- 35. Wang W, Ozolek JA, Rohde GK. Detection and classification of thyroid follicular lesions based on nuclear structure from histopathology images. Cytometry Part A. 2010;77:485–494. doi: 10.1002/cyto.a.20853.

- 36. Ozolek JA, Rohde GK, Wang W. 2010. http://tango.andrew.cmu.edu/~gustavor/segmented_nuclei.zip.

- 37. Waghmare V. Leaf shapes database. http://www.imageprocessingplace.com/downloads_V3/root_downloads/image_databases/

- 38. http://www2.imm.dtu.dk/~aam/

- 39. Zhang H, Berg A, Maire M, Malik J. SVM-KNN: discriminative nearest neighbor classification for visual category recognition. IEEE Computer Society Conference on Computer Vision and Pattern Recognition; 2006. pp. 2126–2136.