Abstract

Impaired perception of consonants by poor readers is reflected in poor subcortical encoding of speech timing and harmonics. We assessed auditory brainstem representation of higher harmonics within a consonant-vowel formant transition to identify relationships between speech fine structure and reading. Responses were analyzed in three ways: as a single stimulus polarity, by adding responses to inverted polarities (emphasizing low harmonics), and by subtracting responses to inverted polarities (emphasizing high harmonics). Poor readers had reduced representation of higher speech harmonics for subtracted polarities, with a trend in the same direction for a single polarity. No group differences were found for the fundamental frequency. These findings strengthen evidence of subcortical encoding deficits in poor readers for speech fine structure and delineate effective strategies for capturing these neural impairments in humans.

Keywords: Electrophysiology, reading, auditory brainstem, speech, evoked potentials

Introduction

Children with reading impairments often perform poorly on psychophysical auditory processing tasks relative to typically developing peers [1], and auditory processing skills in infancy or preschool can predict later language and reading ability [2–3]. Deficits in auditory processing likely contribute to the well-documented impairment in discriminating and using stop consonants by children with reading disorders [4–6], because stop consonants are characterized by transient elements and rapidly changing frequency sweeps (i.e., formant transitions) [7]. Poor perception and misuse of stop consonants by children with reading impairments may stem from a combination of impaired neural representation of stimulus features and an inability to use regularly occurring acoustic cues in the environment (i.e., the particular temporal and spectral features that convey linguistic information) to modulate sensory representation [8–10].

Consistent with behavioral deficits in auditory processing, many children with reading disorders exhibit impaired subcortical encoding of speech and speech-like signals relative to good readers [11–16]. These impairments are particularly evident for the acoustic cues important for distinguishing stop consonants, namely stimulus timing and higher harmonics (including those that fall in the range of speech formants). However, the neural representation of the fundamental frequency (an important pitch cue) is not disrupted in poor readers. Children with poor reading or language skills have significantly slower neural response timing, less robust encoding of formant-related stimulus harmonics, and less robust tracking of frequency contours than typically developing children, and responses to these acoustic cues predict reading ability across children with a wide range of skill [11–16]. Although the relationship between neural response timing and reading has been established with multiple speech and speech-like stimuli, further work is needed to understand the nature of poor readers' deficits in encoding stimulus harmonics. Speech harmonics are critical cues for perceiving stop consonants [7], and their neural representation is likely to be tightly related to reading ability.

Deficits in formant-related harmonic encoding have previously been found using an abbreviated speech stimulus (formant transition only), for which responses to inverted stimulus polarities (180° out of phase) were added [11–12]. Adding polarities accentuates the lower-frequency components of the response that contribute to the amplitude envelope, including the fundamental frequency (F0) [17–19]. In the current analysis, we also used a second processing method, subtracting the responses to inverted polarities, to extract the components of the response reflecting the stimulus fine structure (i.e., formant-related harmonics) [17,20]. Unlike previous work with an isolated formant transition, here we measured responses to a full consonant-vowel syllable, which was longer, contained a full vowel, and was consequently presented at a slower rate than the isolated formant-transition stimulus. Because of the slower presentation rate and the full vowel of the consonant-vowel syllable, we anticipated that subtracting polarities, by preserving stimulus fine structure in the response, would be more effective at revealing group differences. We additionally analyzed responses to a single stimulus polarity, in which both the amplitude envelope and the fine structure are represented. We compared poor readers to average readers in their encoding of the F0 and harmonics using the two processing techniques and the responses to a single polarity. We hypothesized that deficits in the neural representation of formant-related harmonics partly account for reading difficulties in children. We predicted that these deficits in processing speech fine structure would be more evident with analysis techniques that emphasize or maintain the fine-structure response elements (subtracting polarities and a single polarity) than with those that emphasize envelope-related elements (adding polarities).

Methods

Fifty-one children aged 8–13 years (mean age 10.7 years; 16 girls) participated. All had normal hearing (air- and bone-conduction thresholds < 20 dB HL at octave frequencies from 250 to 8000 Hz), click-evoked auditory brainstem responses within normal limits (100-μs clicks presented at 31.3 Hz), and full-scale IQ scores above 85 on the Wechsler Abbreviated Scale of Intelligence (WASI [21]). Reading ability was assessed using the Test of Silent Word Reading Fluency (TOSWRF [22]). Poor readers were defined as children with scores ≤ 90 (n = 25; 8 girls). All other participants had TOSWRF scores within the average range (95–105; n = 26; 8 girls). All procedures were approved by the Northwestern University Institutional Review Board, and children and a parent or guardian gave their informed assent and consent, respectively.

Stimuli and Recording Parameters

All stimuli, presentation parameters, and recording and processing techniques have been used previously [11,14]. The speech stimulus was a synthesized 170-ms [da] syllable with dynamic first, second, and third formants during the first 50 ms. It was presented monaurally to the right ear in inverted polarities (180° out of phase) at 80 dB SPL and a rate of 4.3 Hz via shielded insert earphones (ER-3, Etymotic Research) using NeuroScan Stim 2 (Compumedics). Responses were collected with Ag-AgCl electrodes in a vertical montage, digitized at 20,000 Hz by NeuroScan Acquire (Compumedics), and bandpass filtered from 70 to 2000 Hz to isolate the brainstem response.
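Taking the 4.3-Hz rate to mean onset-to-onset repetition (an assumption; the text does not say), the stimulus period and the silent interval after each 170-ms syllable work out as:

```latex
T = \frac{1}{4.3\ \mathrm{Hz}} \approx 232.6\ \mathrm{ms}, \qquad
T_{\mathrm{gap}} = T - 170\ \mathrm{ms} \approx 62.6\ \mathrm{ms}
```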

Add and Subtract Processing Techniques

Polarity-specific averages were collected and analyzed individually (Single Polarity), added (Add), or subtracted (Subtract). The Add technique minimizes phase-dependent activity, including artifact from the transducer and the cochlea, while retaining the phase-independent component of the neural response. It also emphasizes the response to lower-frequency harmonics while minimizing the response to the high-frequency stimulus fine structure [17–19]. The Subtract technique, by contrast, retains the response to the fine structure of the signal and minimizes the envelope response, compensating for the half-wave rectification that occurs in cochlear transduction [17,20]. Although the Subtract technique does not reduce cochlear or transducer artifact, our testing protocol minimizes artifact contamination by using tube-fed insert earphones, common-mode rejection referencing, and alternating polarities [23], and we have not observed artifact in this dataset or others. The Single Polarity response reflects both the amplitude envelope and the fine structure of the stimulus (see Figure 1 A–D).
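The logic of the Add and Subtract techniques can be illustrated with a toy model. The sketch below is not the authors' pipeline: it assumes the neural response is simply a half-wave-rectified copy of the stimulus, and it uses a hypothetical 1000-Hz tone amplitude-modulated at 100 Hz in place of the [da]. Under that assumption, adding the responses to the two polarities yields the full-wave-rectified signal, which carries the 100-Hz envelope, while subtracting them returns the stimulus itself, which carries the 1000-Hz fine structure.

```python
import numpy as np

FS = 20_000  # sampling rate in Hz, matching the 20-kHz digitization rate above
F0 = 100     # hypothetical envelope (F0-like) rate in Hz
FC = 1_000   # hypothetical carrier standing in for a formant-range harmonic, in Hz


def half_wave(x):
    """Toy transduction model: pass only the positive half of the waveform."""
    return np.maximum(x, 0.0)


def amplitude_at(x, freq, fs=FS):
    """Single-sided FFT amplitude of x at `freq` (freq must fall on an exact bin)."""
    k = int(round(freq * len(x) / fs))
    return 2.0 * np.abs(np.fft.rfft(x)[k]) / len(x)


# 100 ms at 20 kHz = 2000 samples, so FFT bins are spaced 10 Hz apart.
t = np.arange(2000) / FS
# Amplitude-modulated tone: energy at 900, 1000 and 1100 Hz, none at 100 Hz.
stimulus = (1.0 + np.cos(2 * np.pi * F0 * t)) * np.cos(2 * np.pi * FC * t)

single = half_wave(stimulus)     # toy "response" to one polarity
inverted = half_wave(-stimulus)  # toy "response" to the inverted polarity
added = single + inverted        # equals |stimulus|: envelope kept, carrier cancels
subtracted = single - inverted   # equals stimulus: carrier kept, envelope cancels

for name, resp in [("Single", single), ("Add", added), ("Subtract", subtracted)]:
    print(f"{name:<9}{F0} Hz: {amplitude_at(resp, F0):.3f}   "
          f"{FC} Hz: {amplitude_at(resp, FC):.3f}")
```

In this toy case the Add response shows a large 100-Hz component (about 0.63) and essentially none at 1000 Hz, the Subtract response shows the 1000-Hz carrier at full amplitude and essentially nothing at 100 Hz, and the Single response contains both at intermediate amplitudes. Whether polarity responses are summed or averaged only rescales these values.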

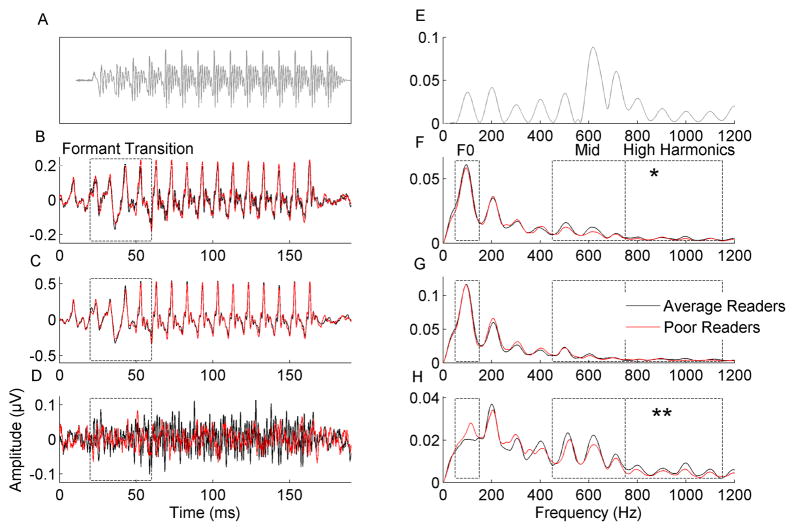

Figure 1. Poor readers have reduced representation of higher harmonics when stimulus fine structure is preserved in the response.

A. Time-domain representation of the stimulus (gray), shifted in time for visual purposes to reflect the neural conduction lag. B. Responses of average readers (black) and poor readers (red) to a Single Polarity of the stimulus. C. Responses of average and poor readers using the Add technique (adding responses to alternate polarities). D. Responses of average and poor readers using the Subtract technique (subtracting responses to alternate polarities). The entire response waveforms are plotted, and the spectral analysis window (20–60 ms) is marked with hashed lines. E. The stimulus spectrum (gray) shows greater energy in the higher harmonics than at the fundamental frequency (F0). F. Compared to average readers (black), poor readers (red) trend toward weaker encoding of the High Harmonics in response to a Single Polarity. G. Average and poor readers do not differ in their representation of harmonics using the Add technique. H. Poor readers have significantly weaker representation of the High Harmonics using the Subtract technique. No group differences were found for the F0 in any condition. F0 (50–150 Hz), Mid Harmonics (450–750 Hz), and High Harmonics (750–1150 Hz) analysis regions are marked by boxes. Because of the phase-locking limits of the auditory brainstem, spectral energy is plotted only up to 1200 Hz, despite the presence of higher speech formants in the stimulus. Note: Given the nature of the processing methods and the phase-locking properties of the auditory brainstem, response amplitudes to a Single Polarity and with the Add technique (B, C, F, G) are much larger than amplitudes with the Subtract technique (D, H). To visualize group differences regardless of overall amplitude, the y-axis limits in each panel were scaled to 110% of the largest amplitude present for the average readers in each condition.

* p < 0.05, ** p < 0.005

Data Analyses

Fast Fourier transforms were computed over the response to the stimulus formant transition (20–60 ms). Spectral amplitudes were averaged over 50–150 Hz (F0), 450–750 Hz (Mid Harmonics), and 750–1150 Hz (High Harmonics). Previous work using a 40-ms formant transition found group differences between good and poor readers for 410–755 Hz and 750–1150 Hz, but not for lower harmonic ranges, which are likely important for the perception of pitch [11,24].
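The band-averaging step can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the laboratory's analysis code: sample 0 of the response is taken to be stimulus onset, no taper or zero-padding is applied (the text specifies neither), amplitudes are single-sided, and band edges are treated as inclusive, so a bin at exactly 750 Hz counts toward both the Mid and High Harmonics bands. At the 20-kHz sampling rate, the 20–60-ms window holds 800 samples, giving 25-Hz frequency resolution.

```python
import numpy as np

# Analysis bands from the text, in Hz (inclusive edges are an assumption).
BANDS = {
    "F0": (50.0, 150.0),
    "Mid Harmonics": (450.0, 750.0),
    "High Harmonics": (750.0, 1150.0),
}


def band_amplitudes(response, fs=20_000, window_ms=(20.0, 60.0), bands=BANDS):
    """Mean single-sided FFT amplitude of `response` within each band.

    Assumes sample 0 of `response` is stimulus onset and uses a plain
    rectangular window with no zero-padding.
    """
    start = int(round(window_ms[0] * fs / 1000))
    stop = int(round(window_ms[1] * fs / 1000))
    segment = np.asarray(response, dtype=float)[start:stop]
    spectrum = 2.0 * np.abs(np.fft.rfft(segment)) / segment.size
    # Bin frequencies computed as exact multiples of fs / N (25 Hz here).
    freqs = np.arange(spectrum.size) * fs / segment.size
    return {
        name: float(spectrum[(freqs >= lo) & (freqs <= hi)].mean())
        for name, (lo, hi) in bands.items()
    }


# Usage: a unit-amplitude 100-Hz tone standing in for a recorded response.
t = np.arange(2000) / 20_000  # 100 ms of signal at 20 kHz
print(band_amplitudes(np.cos(2 * np.pi * 100 * t)))
```

For this test tone, only the 100-Hz bin within the 50–150-Hz band is nonzero, so the F0 value is the mean of five bins (0.2) and the other two bands are essentially zero.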

Poor readers were compared to average readers on the spectral magnitudes of their responses in the three frequency ranges (F0, Mid Harmonics, High Harmonics) for each of the three analysis techniques (Single Polarity, Add, Subtract) using independent-samples t-tests. To correct for multiple comparisons, alpha was set to 0.005.
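The 49 degrees of freedom reported in the Results follow from pooled-variance (Student's) t-tests with 26 average and 25 poor readers (26 + 25 − 2 = 49). A minimal NumPy sketch of the statistic is below; passing average readers as the first group is an assumption, suggested by the positive t values accompanying smaller poor-reader amplitudes. The chosen alpha of 0.005 is close to, and slightly stricter than, a Bonferroni correction over the 3 × 3 = 9 tests (0.05/9 ≈ 0.0056); the text does not name the correction rule, so that reading is only an interpretation.

```python
import numpy as np


def pooled_t(group_a, group_b):
    """Student's independent-samples t statistic with pooled variance.

    Returns (t, df) with df = n_a + n_b - 2; positive t means group_a has the larger mean.
    """
    a = np.asarray(group_a, dtype=float)
    b = np.asarray(group_b, dtype=float)
    df = a.size + b.size - 2
    pooled_var = ((a.size - 1) * a.var(ddof=1) + (b.size - 1) * b.var(ddof=1)) / df
    t = (a.mean() - b.mean()) / np.sqrt(pooled_var * (1.0 / a.size + 1.0 / b.size))
    return float(t), df


N_BANDS, N_TECHNIQUES = 3, 3        # F0/Mid/High x Single Polarity/Add/Subtract
n_tests = N_BANDS * N_TECHNIQUES    # 9 comparisons in total
bonferroni_alpha = 0.05 / n_tests   # about 0.0056; the study used 0.005

print(pooled_t([1, 2, 3], [4, 5, 6]), n_tests, round(bonferroni_alpha, 4))
```

Two-tailed p values would then come from the t distribution with 49 df (for example via `scipy.stats.t.sf`); `scipy.stats.ttest_ind` with its default `equal_var=True` performs the same pooled test in one call.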

Results

Poor readers had significantly smaller response amplitudes for the High Harmonics with the Subtract technique (t(49) = 3.07, p = 0.004), and a trend in the same direction was present for the Single Polarity response (t(49) = 2.56, p = 0.014). These group differences were not revealed by the Add technique (t(49) = 1.33, p = 0.19), which suggests that the higher-harmonic effects are magnified by the Subtract technique and Single Polarity responses, which more robustly represent stimulus fine structure (see Figure 1 F–H). No group differences were found in any condition for the F0 (Single Polarity: t(49) = 0.62, p = 0.54; Add: t(49) = 0.54, p = 0.60; Subtract: t(49) = −1.49, p = 0.14) or for the Mid Harmonics (Single Polarity: t(49) = 1.49, p = 0.14; Add: t(49) = 0.35, p = 0.73; Subtract: t(49) = 1.15, p = 0.26).
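The distinction between "significant" and "trending" effects can be made explicit by applying the two thresholds used in this paper (the corrected alpha of 0.005 and the uncorrected 0.05 marked in the Figure 1 legend) to the reported statistics. In the sketch below, the values are copied from the paragraph above; treating p < 0.05 but ≥ 0.005 as a "trend" is an interpretation of the authors' wording, not a rule stated in the text.

```python
ALPHA = 0.005  # corrected significance threshold from the Data Analyses section
TREND = 0.05   # uncorrected threshold, marked "*" in the Figure 1 legend

# (band, technique): (t with 49 df, two-tailed p), as reported in the Results
REPORTED = {
    ("High Harmonics", "Subtract"): (3.07, 0.004),
    ("High Harmonics", "Single Polarity"): (2.56, 0.014),
    ("High Harmonics", "Add"): (1.33, 0.19),
    ("F0", "Single Polarity"): (0.62, 0.54),
    ("F0", "Add"): (0.54, 0.60),
    ("F0", "Subtract"): (-1.49, 0.14),
    ("Mid Harmonics", "Single Polarity"): (1.49, 0.14),
    ("Mid Harmonics", "Add"): (0.35, 0.73),
    ("Mid Harmonics", "Subtract"): (1.15, 0.26),
}


def label(p, alpha=ALPHA, trend=TREND):
    """Classify a p value as significant, trend, or n.s. under the two thresholds."""
    if p < alpha:
        return "significant"
    if p < trend:
        return "trend"
    return "n.s."


for (band, technique), (t, p) in REPORTED.items():
    print(f"{band:<15}{technique:<16}t(49) = {t:5.2f}  p = {p:.3f}  {label(p)}")
```

Only High Harmonics with the Subtract technique passes the corrected threshold, and only High Harmonics with a Single Polarity falls in the trend range.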

Discussion

The current results reinforce and extend previous findings that brainstem encoding of formant-related harmonics is impaired in poor readers. Poor readers had weaker representation of the High Harmonics than average readers, significantly so with the Subtract technique and as a trend in responses to a Single Polarity. Group differences were not found for the neural representation of the F0 with any analysis technique, consistent with the view that neural encoding deficits in poor readers are specific to the speech fine structure important for distinguishing consonants.

We likely saw no group differences in the representation of speech fine structure with the Add technique because of an interaction between the stimulus characteristics and the processing techniques. Because the formant transition of the full consonant-vowel syllable is longer than that of the previously used formant-transition stimulus, its formant frequencies change more slowly. Although the duration and presentation rate of this syllable-length stimulus were rapid enough to be difficult for children with reading and language impairments to perceive [25], the slightly longer formant transition and slower presentation rate used here may not have taxed the auditory system to the same extent as the formant-transition stimulus, minimizing group differences in the response to the speech envelope. Because the Add technique highlights the amplitude envelope and low-harmonic components of the response and minimizes the contribution of the fine-structure elements [17,20], it is not surprising that group differences in harmonic encoding were not found with it. However, when the same responses were analyzed with the Subtract technique, group differences in the encoding of formant-related high harmonics did emerge. The Subtract technique emphasizes the components of the response related to the stimulus fine structure, which directly reflects the speech formants [17,19–20]. A trend toward group differences was also present for responses to a Single Polarity, which contain elements reflecting both the stimulus amplitude envelope and the fine structure. Thus, by minimizing the representation of the amplitude envelope and emphasizing the representation of the stimulus fine structure, the Subtract technique most effectively revealed group differences in High Harmonic representation in response to a full consonant-vowel syllable.

Conclusion

Overall, poor readers had weaker encoding of stimulus fine structure than average readers, with no group differences for the F0, replicating previous results [11–12]. The present results lend further support to the hypothesis that reading ability is linked to auditory processing. Recent analytical modeling has shown that brainstem measures representing timing and harmonic elements significantly predict variance in reading ability, even when phonological awareness is taken into account [16]. Impairments in the neural encoding of acoustic elements crucial for differentiating consonants, such as formant frequencies (reflected in speech fine structure), may contribute to the poor phonological development and weak consonant differentiation seen in children with reading impairments. These results highlight the contribution of auditory processing to learning and communication skills and show the effectiveness of different strategies for capturing these neural signatures in humans.

Acknowledgments

This work was supported by National Institutes of Health (R01DC01510) and the Hugh Knowles Center at Northwestern University.

We thank Jennifer Krizman, Alexandra Parbery-Clark, and Trent Nicol for their review of the manuscript, the members of the Auditory Neuroscience lab for their assistance with data collection, and the children and their families for participating.

Footnotes

The authors declare no conflicts of interest, financial or otherwise.

References

1. Sharma M, Purdy SC, Newall P, Wheldall K, Beaman R, Dillon H. Electrophysiological and behavioral evidence of auditory processing deficits in children with reading disorder. Clinical Neurophysiology. 2006;117:1130–1144. doi: 10.1016/j.clinph.2006.02.001.

2. Benasich AA, Tallal P. Infant discrimination of rapid auditory cues predicts later language development. Behavioural Brain Research. 2002;136:31–49. doi: 10.1016/s0166-4328(02)00098-0.

3. Boets B, Wouters J, Van Wieringen A, De Smedt B, Ghesquiere P. Modelling relations between sensory processing, speech perception, orthographic and phonological ability, and literacy achievement. Brain and Language. 2008;106:29–40. doi: 10.1016/j.bandl.2007.12.004.

4. Serniclaes W, Van Heghe S, Mousty P, Carre R, Sprenger-Charolles L. Allophonic mode of speech perception in dyslexia. Journal of Experimental Child Psychology. 2004;87:336–361. doi: 10.1016/j.jecp.2004.02.001.

5. Tallal P, Piercy M. Developmental aphasia: The perception of brief vowels and extended stop consonants. Neuropsychologia. 1975;13:69–74. doi: 10.1016/0028-3932(75)90049-4.

6. Bradlow AR, Kraus N, Nicol T, McGee T, Cunningham J, Zecker SG. Effects of lengthened formant transition duration on discrimination and neural representation of synthetic CV syllables by normal and learning-disabled children. Journal of the Acoustical Society of America. 1999;106:2086–2096. doi: 10.1121/1.427953.

7. Delattre PC, Liberman AM, Cooper FS. Acoustic loci and transitional cues for consonants. Journal of the Acoustical Society of America. 1955;27:769–773.

8. Ahissar M. Dyslexia and the anchoring deficit hypothesis. Trends in Cognitive Sciences. 2007;11:458–465. doi: 10.1016/j.tics.2007.08.015.

9. Chandrasekaran B, Hornickel J, Skoe E, Nicol T, Kraus N. Context-dependent encoding in the human auditory brainstem relates to hearing speech in noise: Implications for developmental dyslexia. Neuron. 2009;64:311–319. doi: 10.1016/j.neuron.2009.10.006.

10. Sussman E, Steinschneider M. Neurophysiological evidence for context-dependent encoding of sensory input in human auditory cortex. Brain Research. 2006;1075:165–174. doi: 10.1016/j.brainres.2005.12.074.

11. Banai K, Hornickel J, Skoe E, Nicol T, Zecker SG, Kraus N. Reading and subcortical auditory function. Cerebral Cortex. 2009;19:2699–2707. doi: 10.1093/cercor/bhp024.

12. Wible B, Nicol T, Kraus N. Atypical brainstem representation of onset and formant structure of speech sounds in children with language-based learning problems. Biological Psychology. 2004;67:299–317. doi: 10.1016/j.biopsycho.2004.02.002.

13. Basu M, Krishnan A, Weber-Fox C. Brainstem correlates of temporal auditory processing in children with specific language impairment. Developmental Science. 2010;13:77–91. doi: 10.1111/j.1467-7687.2009.00849.x.

14. Hornickel J, Skoe E, Nicol T, Zecker SG, Kraus N. Subcortical differentiation of stop consonants relates to reading and speech-in-noise perception. Proceedings of the National Academy of Sciences. 2009;106:13022–13027. doi: 10.1073/pnas.0901123106.

15. Billiet CR, Bellis TJ. The relationship between brainstem temporal processing and performance on tests of central auditory function in children with reading disorders. Journal of Speech, Language, and Hearing Research. 2010. doi: 10.1044/1092-4388(2010/09-0239).

16. Hornickel J, Chandrasekaran B, Zecker SG, Kraus N. Auditory brainstem measures predict reading and speech-in-noise perception in school-aged children. Behavioural Brain Research. 2011;216:597–605. doi: 10.1016/j.bbr.2010.08.051.

17. Aiken SJ, Picton TW. Envelope and spectral frequency-following responses to vowel sounds. Hearing Research. 2008;245:35–47. doi: 10.1016/j.heares.2008.08.004.

18. Sohmer H, Pratt H, Kinarti R. Sources of frequency following responses (FFR) in man. Electroencephalography and Clinical Neurophysiology. 1977;42:656–664. doi: 10.1016/0013-4694(77)90282-6.

19. Skoe E, Kraus N. Auditory brainstem response to complex sounds: A tutorial. Ear and Hearing. 2010;31:302–324. doi: 10.1097/AUD.0b013e3181cdb272.

20. Greenberg S, Marsh JT, Brown WS, Smith JC. Neural temporal coding of low pitch. I. Human frequency-following responses to complex tones. Hearing Research. 1987;25:91–114. doi: 10.1016/0378-5955(87)90083-9.

21. Woerner C, Overstreet K. Wechsler Abbreviated Scale of Intelligence (WASI). San Antonio, TX: The Psychological Corporation; 1999.

22. Mather N, Hammill DD, Allen EA, Roberts R. Test of Silent Word Reading Fluency (TOSWRF). Austin, TX: Pro-Ed; 2004.

23. Campbell T, Kerlin JR, Bishop CW, Miller LM. Methods to eliminate stimulus transduction artifact from insert earphones during electroencephalography. Ear and Hearing. In press. doi: 10.1097/AUD.0b013e3182280353.

24. Meddis R, O’Mard L. A unitary model of pitch perception. Journal of the Acoustical Society of America. 1997;102:1811–1820. doi: 10.1121/1.420088.

25. Tallal P, Piercy M. Developmental aphasia: Impaired rate of non-verbal processing as a function of sensory modality. Neuropsychologia. 1973;11:389–398. doi: 10.1016/0028-3932(73)90025-0.