Abstract

An electromyographic (EMG)-based human-machine interface (HMI) is a communication pathway between a human and a machine that operates by acquiring and processing EMG signals. This article explores the use of EMG-based HMIs in the steering of farm tractors. An EPOC, a low-cost human-computer interface (HCI) from the Emotiv Company, was employed. This device, by means of 14 saline sensors, measures and processes EMG and electroencephalographic (EEG) signals from the scalp of the driver. In our tests, the HMI took into account only the detection of four trained muscular events on the driver’s scalp: eyes looking to the right and jaw opened, eyes looking to the right and jaw closed, eyes looking to the left and jaw opened, and eyes looking to the left and jaw closed. The EMG-based HMI guidance was compared with manual guidance and with autonomous GPS guidance. A driver tested these three guidance systems along three different trajectories: a straight line, a step, and a circumference. The accuracy of the EMG-based HMI guidance was lower than the accuracy obtained by manual guidance, which was lower in turn than the accuracy obtained by the autonomous GPS guidance; the computed standard deviations of error to the desired trajectory in the straight line were 16 cm, 9 cm, and 4 cm, respectively. Since the standard deviations of the manual guidance and the EMG-based HMI guidance differed by only 7 cm, and this difference is not relevant in agricultural steering, it can be concluded that it is possible to steer a tractor by an EMG-based HMI with almost the same accuracy as with manual steering.

Keywords: agricultural vehicles, human-machine interface (HMI), human-computer interface (HCI), brain-computer interface (BCI), electroencephalography (EEG), control, global positioning system (GPS), tractor, guidance

1. Introduction

In recent years, research in agricultural vehicle guidance has focused on autonomous tractor guidance, which has mainly been performed using a satellite-based Global Positioning System (GPS) [1–5]. Machine vision [6–9] and multiple sensors [10–13] are positioning methods that have also been employed to achieve autonomous guidance. Research in the teleoperation of tractors [14], the use of multiple autonomous robots [15], augmented reality [16], and tractor architecture and communications [17] can also be found.

Scientific literature shows that the employment of human-computer interfaces (HCIs) and brain-computer interfaces (BCIs) has allowed some interesting advances in areas loosely related to tractor guidance. In the medical research area, HMIs and BCIs have been employed to allow people with disabilities to guide wheelchairs [18–20]. Vehicle guidance could benefit from the use of HMIs and BCIs, which allow the prediction of voluntary human movement more than one-half second before it occurs [21–23] and the detection of driver fatigue [24–26] and driver sleepiness [27–30].

This article explores the use of new interfaces in the agricultural field by employing an HMI to steer a tractor. To the best of our knowledge, no similar research has been reported in an agricultural scenario.

2. Electrical Signals on the Scalp Surface

The human nervous system is an organ system composed of the brain, the spinal cord, the retina, nerves and sensory neurons [31]. These elements produce electrical activity that can be measured in different ways and places. Measuring this electrical activity on the scalp with noninvasive electrodes yields electromyographic (EMG) signals related to muscle activation and electroencephalographic (EEG) signals related to brain activity.

The EMG signals associated with a muscle’s activation are usually measured near the muscle. However, the relatively high power of EMG signals makes them propagate far from the muscles. EMG signals from the jaw, tongue, eye, face, arm and leg muscles can be measured at specific points of the scalp surface. The specific EMG signals corresponding to eye movements are named electrooculographic (EOG) signals.

EEG signals related to brain activity can also be measured on the scalp surface. Due to the high power of EMG signals, the measurement of the EEG signals is often contaminated by EMG artifacts, which are EMG signals present in the EEG recordings. To obtain EEG signals without EMG artifacts: (i) the user must avoid moving muscles during the EEG signal acquisition; and (ii) signal processing algorithms can be applied to remove EMG artifacts from the acquired EEG signals [32–34].

3. Surface EMG and EEG Signals Applied for Control

The acquisition and processing of EMG signals from voluntarily activated muscles offers a communication path that, for either disabled or healthy people, can be used in many tasks and in different environments. For disabled people, some of these tasks are the control of a robotic prosthesis [35–37] or a wheelchair [38–40], as well as computer [41–43] or machine [44–46] interaction. Interfaces for games [47–49] and virtual reality [50–52] are environments where healthy people can communicate through EMG signals.

In contrast to the acquisition and processing of EMG signals from voluntarily activated muscles, the acquisition and processing of EEG signals is aimed at people with severe disabilities who have lost all voluntary muscle control, including eye movements and respiration. In this way, robotic prosthesis [53–55] or wheelchair control [56–58] are also tasks in which EEG-based interfaces can be useful. Moreover, healthy people can also employ EEG-based interfaces in environments such as, again, games [59–61] and virtual reality [62–64].

EMG computer interface [65], human-computer interface (HCI) [66], EMG-based human-computer interface [67], EMG-based human-robot interface [68], muscle-computer interface (MuCI) [69], man-machine interface (MMI) [70], and biocontroller interface [71] are different terms used in the scientific literature to name communication interfaces that can employ EMG signals, among others. In contrast, the widely accepted name for brain communication through exclusively EEG signals that are independent of peripheral nerves and muscles is brain-computer interface (BCI).

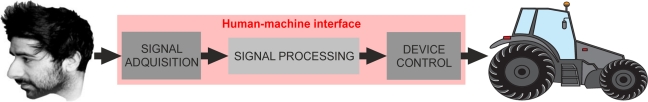

The block diagram of an EMG-based HMI or a BCI applied to control a machine usually comprises three blocks: a signal acquisition block where EMG or EEG signals are acquired from the user by means of electrodes, a signal processing block where the acquired signals are processed to obtain information about the user status, and a device control block that acts on the machine (Figure 1). The EMG or EEG signals can be acquired with electrodes placed on the body or scalp of the user, or with electrodes placed inside the body; these acquisitions are referred to as non-invasive and invasive, respectively. Statistical analysis [72], Bayesian approaches [73], neural networks [74], time-frequency procedures [75], and parametric modeling [76] are techniques commonly employed in the signal processing block to estimate the user status from the acquired EMG or EEG signals. This is the most complex part of EMG-based HCIs or BCIs, because it needs to jointly process the signals acquired from all electrodes. Furthermore, each electrode signal is in turn the sum of a large number of signals, at the same and at different frequencies, that reach the electrode from different parts of the user’s body or brain. On-off switch [77], proportional-integral-derivative [78], and fuzzy logic [79] control are the control types usually employed in the device control block.

Figure 1.

Block diagram of a human-machine interface applied to tractor steering.

4. Materials and Methods

4.1. The Emotiv EPOC Interface

The EPOC is a low-cost human-computer interface (HCI) that comprises: (i) a neuroheadset hardware device to acquire and preprocess the user’s EEG and EMG signals, and (ii) a software development kit (SDK) to process and interpret these signals. It can be purchased from the Emotiv Company website for less than one thousand US dollars [80].

The neuroheadset acquires brain neuro-signals with 14 saline sensors placed on the user scalp. It also integrates two internal gyroscopes to provide user head position information. The communication of this device with a PC occurs wirelessly by means of a USB receiver.

Emotiv provides software in two ways: (i) some suites, or developed applications, with a graphical interface to process brain signals, to train the system, and to test the neuroheadset; and (ii) an application programming interface (API) to allow users to develop C or C++ software to be used with the neuroheadset.

The Emotiv EPOC can capture and process brainwaves in the Delta (0.5–4 Hz), Theta (4–8 Hz), Alpha (8–14 Hz), and Beta (14–26 Hz) bands. With the information from signals in these bands, it can detect expressive actions, affective emotions, and cognitive actions.

The expressive actions correspond to face movements. Most movements have to be initially trained by the user, and as the user supplies more training data, the accuracy in the detection of these actions typically improves. The eye and eyelid-related expressions blink, wink, look left, and look right cannot be trained because information about these expressions relies on the Emotiv software.

The affective emotions detectable by the Emotiv EPOC are engagement, instantaneous excitement, and long-term excitement. None of these three has to be trained.

Finally, the Emotiv EPOC works with 13 different cognitive actions: the push, pull, left, right, up and down directional movements, the clockwise, counter-clockwise, left, right, forward and backward rotations and a special action that makes an object disappear in the user mind.

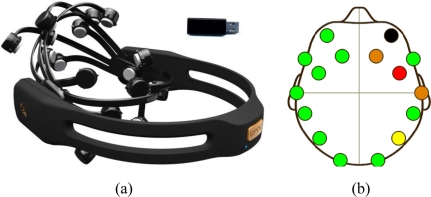

Figure 2(a) shows an Emotiv EPOC neuroheadset photograph, and Figure 2(b) shows with intuitive colors the contact quality of the neuroheadset on the user head. This picture was screen-captured from a software application provided by Emotiv.

Figure 2.

(a) The Emotiv EPOC neuroheadset and the wireless USB receiver. (b) A picture that shows with intuitive colors the contact quality of the neuroheadset on the user head.

4.2. Hardware of the Developed System

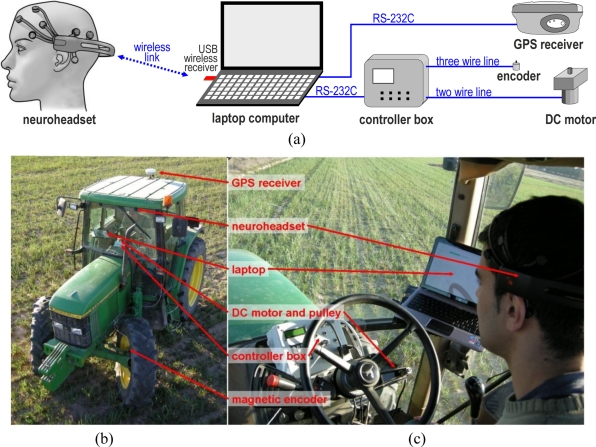

Figure 3(a) shows the hardware components of the system and the connections between them. All components were mounted on a 6400 John Deere tractor (Figure 3(b,c)). As mentioned, the HMI model was an EPOC, from the Emotiv Company [80].

Figure 3.

(a) Schematic of the connections between the hardware components of the developed system. (b) Tractor used in the tests. (c) Photo of the driver inside the tractor.

A DC RE-30 Maxon motor was installed to move the steering wheel by means of a reducer gear and a striated pulley. A controller box was specially designed to steer the tractor continuously according to the commanded orders sent by the laptop [81]. To achieve the desired angle, the box uses fuzzy logic control technology to power the DC motor by means of a PWM signal. This controller box measures the steering angle with a magnetic encoder.

An R4 Trimble receiver was used to measure the real trajectories of the results section and to perform the autonomous GPS guidance. The update of positions was configured to a rate of 5 Hz. This receiver employed real time kinematic (RTK) corrections to achieve an estimated precision of 2 cm. The corrections were provided by a virtual reference station (VRS) managed by the ITACyL, a Spanish regional agrarian institute.

A laptop computer ran our developed application, which was continuously: (i) obtaining information from the BCI about the driver brain activity, (ii) sending steering commands to the controller box about the desired steering angle, and (iii) saving the followed trajectory, obtained from the GPS.

4.3. Software of the Developed System

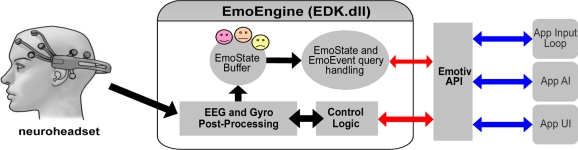

The Emotiv EPOC includes the Emotiv API, a C++ API that allows communication with the Emotiv headset, reception of preprocessed EEG/EMG and gyroscope data, management of user-specific or application-specific settings, post-processing, and translation of the detected results into an easy-to-use structure called EmoState. The EmoEngine is the logical abstraction of the Emotiv API that performs all the processing of the data from the Emotiv headset. The EmoEngine is provided in an edk.dll file, and its block diagram is shown in Figure 4.

Figure 4.

Diagram of the integration of the EmoEngine and the Emotiv API with an application.

Through the Emotiv API, the Emotiv EPOC reports to external applications the type of event that the device estimates has emanated from the user’s brain, together with the event power, which represents the certainty of the event estimation. A neutral event is reported when no actions are detected.

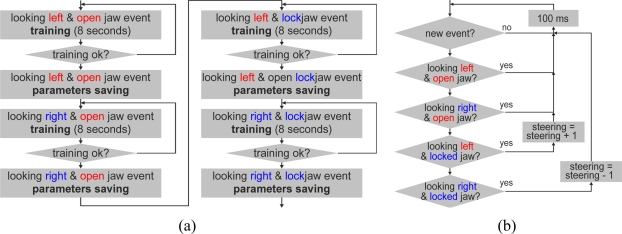

A C++ application was developed to receive the processed user brain information through the Emotiv API and to steer the tractor through the controller box. This application was configured to train some events according to the flow chart of Figure 5(a), and then, according to the flow chart of Figure 5(b), to operate with these events to steer the tractor. The chosen events in the developed application were four combinations of muscle movements:

- the user’s eyes looking to the left when the user’s jaw is open;

- the user’s eyes looking to the right when the user’s jaw is open;

- the user’s eyes looking to the left when the user’s jaw is closed;

- the user’s eyes looking to the right when the user’s jaw is closed.

Figure 5.

Simplified flow chart of the (a) system training of the four events that the BCI has to detect and (b) system test following a trajectory with the tractor.

The test driver was trained to use these events. In the training process, the EmoEngine analyzes the driver brainwaves to achieve a personalized signature of each particular event as well as one of a neutral background state. These signatures are stored in the EmoEngine memory. In the tractor steering process, the EmoEngine analyzes in real time the brainwaves acquired to detect signatures that match one of the previously stored signatures in the EmoEngine memory, and when this occurs, it communicates to the application that a specific event with a specific power emanated from the user brain.
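As a rough illustration of how detected events and their power could be turned into steering commands, the following minimal sketch maps each event to a change in the commanded steering angle. The mapping itself is an assumption made for illustration: the text does not state which events steer in which direction, so here only the jaw-open events change the angle and the jaw-closed events leave it unchanged. The names `Event`, `update_steering`, `STEP_DEG`, `MAX_ANGLE_DEG`, and `MIN_POWER`, the 0 to 1 power scale, and all numeric values are hypothetical, and no Emotiv API calls are used.

```python
from enum import Enum


class Event(Enum):
    """Events reported to the application (names are hypothetical)."""
    NEUTRAL = "neutral"
    LEFT_JAW_OPEN = "left_jaw_open"
    RIGHT_JAW_OPEN = "right_jaw_open"
    LEFT_JAW_CLOSED = "left_jaw_closed"
    RIGHT_JAW_CLOSED = "right_jaw_closed"


STEP_DEG = 2.0        # hypothetical angle change per accepted event
MAX_ANGLE_DEG = 30.0  # hypothetical steering limit
MIN_POWER = 0.5       # hypothetical certainty threshold, power assumed in [0, 1]


def update_steering(angle_deg: float, event: Event, power: float) -> float:
    """Return the new commanded steering angle after one reported event.

    Assumed mapping: jaw-open events steer left or right; jaw-closed and
    neutral events, and events below the power threshold, keep the angle.
    """
    if event is Event.NEUTRAL or power < MIN_POWER:
        return angle_deg
    if event is Event.LEFT_JAW_OPEN:
        angle_deg -= STEP_DEG
    elif event is Event.RIGHT_JAW_OPEN:
        angle_deg += STEP_DEG
    # Clamp to the assumed mechanical limits of the steering.
    return max(-MAX_ANGLE_DEG, min(MAX_ANGLE_DEG, angle_deg))
```

Under this assumed mapping, a driver who only glances sideways with the jaw closed would not change the commanded angle, which is one way the additional jaw information could be used.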

4.4. Methods

The steering using the Emotiv EPOC was compared with the two usual methods of tractor steering: manual steering and autonomous GPS steering. A healthy driver tested the tractor guidance manually and through the Emotiv EPOC interface. The driver completed the Emotiv EPOC training before testing the system with the tractor. The guidance speed used to test the system was approximately 1 m/s. At this speed, the 5 Hz GPS rate yielded positions along the tractor trajectories approximately 20 cm apart.

The trajectories along which this comparison was performed were: (i) a straight line longer than 50 m; (ii) a 10 m step; and (iii) a circumference of 15 m radius. These three trajectories were drawn over the plot with a mattock, taking into account GPS reference points, so that the driver could follow them in the manual guidance tests and in the tests performed through the Emotiv EPOC interface. The same three trajectories were programmed into the computer for the autonomous GPS guidance tests.

The control law of Equation (1) was employed in the automatic GPS guidance. In this equation δ is the steering angle, x is the distance of the tractor from the desired trajectory, θ is the difference between the tractor orientation and the reference trajectory orientation in the trajectory point nearest to the tractor, L is the distance between the tractor axles, and k1, k2 are the control gains [3,10,13]:

| (1) |
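For readers who want a concrete picture of a control law built from these symbols, the following minimal sketch assumes a generic linear feedback on path curvature under a kinematic bicycle model, in which the commanded curvature is proportional to the lateral offset x and the heading error θ and is converted to a steering angle through the wheelbase L. This form, the sign conventions, the `max_delta` limit, and the function name `steering_angle` are assumptions made for illustration and should not be read as the article's Equation (1).

```python
import math


def steering_angle(x: float, theta: float, L: float, k1: float, k2: float,
                   max_delta: float = math.radians(35.0)) -> float:
    """Assumed steering law: delta = atan(-L * (k1 * x + k2 * theta)).

    x      lateral distance to the desired trajectory in metres
           (assumed positive when the tractor is to the left of the path)
    theta  heading error in radians (assumed positive counter-clockwise)
    L      distance between the tractor axles in metres
    k1, k2 control gains
    Returns the steering angle in radians (assumed positive to the left),
    limited to +/- max_delta (a hypothetical mechanical limit).
    """
    curvature = -(k1 * x + k2 * theta)  # steer back toward the path
    delta = math.atan(L * curvature)    # kinematic bicycle model
    return max(-max_delta, min(max_delta, delta))
```

With these conventions, a tractor displaced to the left of the path (x > 0) with no heading error receives a negative angle, that is, a correction to the right.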

The four muscle events enumerated in the Software of the Developed System section were initially trained with the driver who tested the system. Later, these events were used to perform the guidance through HMI along the three different trajectories. When the driver failed to follow the desired trajectory by EMG-based HMI guidance because he was not completely attentive, another attempt was performed. The authors’ initial intention was to train and use only the first two events, but we noticed that the trained events were detected during real tests in the HMI system when the driver only looked to the right or to the left, independently of the jaw status. To provide the system more information about jaw status, it was necessary to train and use all four events instead of only two.

5. Results and Discussion

5.1. Experimental Results

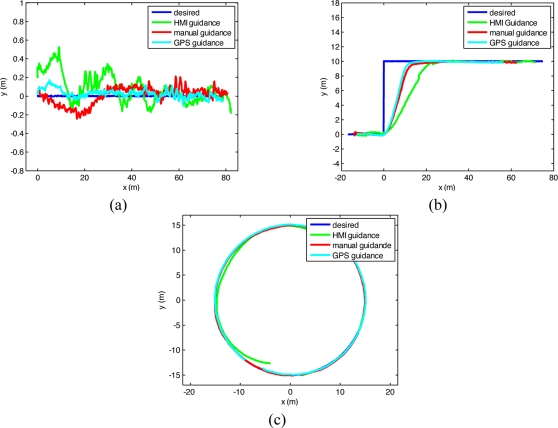

Real tests were performed in Pozal de Gallinas, Spain, in March 2011, along the trajectories and with the procedures presented in the Methods section. The autonomous guidance control law of Equation (1) was experimentally tuned, and k1 = 0.1 and k2 = 0.35 were obtained. Figure 6 shows the results obtained along the three trajectories. Table 1 presents the mean, standard deviation, and range of the distance from the trajectory performed by the tractor to the 50 m straight desired trajectory (Figure 6(a)). The step trajectory of Figure 6(b) was considered as a step input to the system for obtaining the step response. Table 2 presents the settling distance produced by the 10 m step response in the system. The settling distance is the horizontal distance that the tractor needed to advance after the 10 m step to remain within ±5% of the step size from the final desired trajectory, that is, within ±0.5 m of the final desired trajectory. Table 3 presents the mean, standard deviation, and range of the distance from the performed trajectory to the 15 m radius circular desired trajectory (Figure 6(c)).
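The settling distance defined above can be computed from logged positions along the following lines. This is a minimal sketch under stated assumptions: the function name `settling_distance`, its parameter names, and the input layout (distance advanced after the step paired with signed lateral error to the final desired trajectory, both in metres) are hypothetical, and "settled" is taken to mean that the error stays inside the band for the rest of the recording.

```python
from typing import Optional, Sequence


def settling_distance(along_m: Sequence[float],
                      lateral_error_m: Sequence[float],
                      band_m: float = 0.5) -> Optional[float]:
    """Distance advanced after the step until the error stays within the band.

    along_m          distance advanced after the step, in increasing order
    lateral_error_m  signed distance to the final desired trajectory
    band_m           half-width of the band (0.5 m is 5% of a 10 m step)
    Returns None if the last recorded sample is still outside the band.
    """
    if len(along_m) != len(lateral_error_m):
        raise ValueError("inputs must have the same length")
    last_outside = -1
    for i, error in enumerate(lateral_error_m):
        if abs(error) > band_m:
            last_outside = i  # remember the latest sample outside the band
    if last_outside == len(along_m) - 1:
        return None  # never settled within the recorded data
    return along_m[last_outside + 1]
```

Tracking the last sample outside the band, rather than the first sample inside it, means that an overshoot that leaves the band again delays the reported settling distance.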

Figure 6.

Real test guidance results through the HMI, with manual guidance, and with automatic GPS steering, taking as desired trajectories (a) a straight line, (b) a step and (c) a circumference.

Table 1.

Mean, standard deviation, and range of the distance from the performed trajectory to the desired trajectory in the 50 m straight line.

| | GPS guidance | Manual guidance | HMI guidance |
|---|---|---|---|
| Mean (cm) | 1.2 | 2.9 | 10.6 |
| Standard deviation (cm) | 4.2 | 8.7 | 15.8 |
| Range (cm) | 0–17.2 | 0–24.3 | 0–52.3 |

Table 2.

Settling distances for the 10 m step reference trajectory.

| | GPS guidance | Manual guidance | HMI guidance |
|---|---|---|---|
| Settling distance (m) | 13.3 | 14.3 | 23.1 |

Table 3.

Mean, standard deviation, and range of the distance from the performed trajectory to the desired trajectory in the 15 m radius circumference.

| | GPS guidance | Manual guidance | HMI guidance |
|---|---|---|---|
| Mean (cm) | 1.9 | 3.9 | 13.7 |
| Standard deviation (cm) | 6.6 | 11.2 | 26.6 |
| Range (cm) | 0–25.0 | 0–27.6 | 0–74.5 |

As can be seen from the trajectories of Figure 6 and from the data of Tables 1, 2 and 3, the guidance accuracy through the HMI was lower than that obtained when the driver employed his hands, which in turn was lower than that obtained by the autonomous GPS guidance.
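Statistics of the kind reported in Tables 1 and 3 can be obtained from logged GPS positions roughly as follows. This is a sketch under stated assumptions: positions are taken to be planar coordinates in metres, distances are unsigned, and the population standard deviation is used, none of which is specified in the text. The function names `line_errors`, `circle_errors`, and `summarize` are hypothetical.

```python
import math
import statistics
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def line_errors(points: Sequence[Point], a: Point, b: Point) -> List[float]:
    """Unsigned distance from each point to the line through a and b."""
    (ax, ay), (bx, by) = a, b
    length = math.hypot(bx - ax, by - ay)
    return [abs((bx - ax) * (py - ay) - (by - ay) * (px - ax)) / length
            for px, py in points]


def circle_errors(points: Sequence[Point], center: Point,
                  radius: float) -> List[float]:
    """Unsigned distance from each point to a circle of the given radius."""
    cx, cy = center
    return [abs(math.hypot(px - cx, py - cy) - radius) for px, py in points]


def summarize(errors: Sequence[float]) -> Dict[str, object]:
    """Mean, population standard deviation, and range of the errors."""
    return {
        "mean": statistics.fmean(errors),
        "std": statistics.pstdev(errors),
        "range": (min(errors), max(errors)),
    }
```

For example, `summarize(line_errors(track, start, end))` would summarize a straight-line test, where `track`, `start`, and `end` are hypothetical names for the logged positions and the two reference points that define the desired line.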

5.2. Discussion

The tests comparing the HMI guidance with the manual or autonomous GPS tractor guidance show that the EMG-based HMI guidance system: (i) offers lower accuracy, because the precision achieved with the HMI was lower than that obtained with manual steering, which was also below that obtained by the autonomous GPS tractor guidance; (ii) requires extra training time, because the guidance through the HMI required a lengthy training process; and (iii) requires higher user concentration, because the drivers employing the HMI needed to be very focused to follow the desired trajectories successfully. Therefore, the authors consider that at present, vehicle guidance through EMG-based HMIs might be of interest only for people with disabilities who cannot manage a steering wheel by hand. Nevertheless, the EMG-based HMI guidance offers reasonably good accuracy, with only 16 cm standard deviation of error, which is acceptable for most agricultural tasks, and is not very different from the 9 and 4 cm obtained by means of manual steering and automatic GPS steering, respectively.

The Emotiv Company declares that the EPOC device acquires and processes EEG signals [80], and therefore, is a BCI. Moreover, most scientific literature considers the Emotiv EPOC as a BCI [59,82–89]. A BCI is a direct communication pathway between the brain and a computer. This communication is based on the capture and processing of EEG signals of brain activity and is independent of nerve and muscle activity. In turn, HCI and HMI are communication methods that encompass a wide variety of mechanisms, including the acquisition and processing of EMG signals associated with muscle movements. In our research, guidance tests by means of the Emotiv EPOC interface were unsuccessful when the Emotiv EPOC training and testing did not involve muscle movements; that is, when the drivers only thought about the movements without performing them, steering was not possible. Therefore, the authors trained and employed events related to eye and jaw muscle movements, which were better detected by the EPOC. For this reason, the authors consider that the Emotiv EPOC is an HCI that processes mainly EMG signals of muscle movements, but not a real BCI that processes only EEG brainwaves. Moreover, since this HCI device was applied to a machine in the tests performed, the authors refer to the developed EPOC system as an EMG-based HMI.

Steering a vehicle by means of devices such as steering wheels or joysticks requires updating the steering wheel or joystick position approximately every second. This steering can be performed by EMG-based HMIs, as this article shows. Current BCI technology can only update the steering wheel or joystick position at rates lower than 0.5 Hz, because the mean time to transmit a command is greater than 2 s [90–95]. Therefore, vehicle guidance by means of BCI technology is usually achieved in the research literature by just choosing destinations from a list or selecting the branch at each intersection of the possible paths [56,57,96]. After the destination is selected by means of the BCI, a completely autonomous guidance system steers the vehicle without user intervention. In summary, an EMG-based HMI guidance system allows the steering to be updated continuously, but this updating is hard to perform through BCIs because the time to transmit a command by BCIs is greater than 2 s. One limitation of the EMG-based HMI tractor guidance is that the drivers need to be completely focused to follow the desired trajectory successfully.
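The rate bound quoted above follows directly from the command time. Combining it with the test speed of approximately 1 m/s from the Methods section gives a rough sense of what the bound means on the ground. The symbols T_cmd, f_BCI, v, and d are introduced here for illustration only, and the distance is a derived figure, not a measured result:

$$
f_{\text{BCI}} = \frac{1}{T_{\text{cmd}}} < \frac{1}{2\ \text{s}} = 0.5\ \text{Hz},
\qquad
d = v\,T_{\text{cmd}} > 1\ \text{m/s} \times 2\ \text{s} = 2\ \text{m}
$$

At that speed the tractor would advance more than 2 m between consecutive BCI commands, compared with roughly 1 m when the steering is updated about once per second.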

The EMG-based HMI presented may be useful in practice, compared to standard manual control, for people with physical disabilities. Compared with other interfaces for people with disabilities that are based on mechanical sensors measuring movements produced by healthy muscles in the user’s body, the proposed system could offer three advantages. First, easier installation and removal, because it is simpler to don and doff a helmet than to install mechanical sensors on body parts. Second, simpler calibration, because it could be simpler to train movements with the Emotiv EPOC software than to calibrate specific sensors attached to the driver’s body. Third, a lower price, because the Emotiv EPOC is a general-purpose device, which allows the Emotiv EPOC hardware to be purchased for less than $500, whereas the acquisition and installation of special-purpose sensors on the body of the user would probably surpass this cost.

Moreover, future lines of research on tractor steering through HMIs that integrate both EMG and EEG signals could provide additional advantages over conventional guidance. One possible advantage may be the capability of such a system to detect fatigue [24–26] or sleepiness [27–29] from the EEG signals, and to employ this information for evaluating the concentration of the driver and for suggesting necessary breaks. In this way, safer farm work might be achieved. Another line of research may be whether HMIs could detect, in advance of the muscle movement, some special situations where the tractor needs to be immediately stopped. Research literature indicates that voluntary human movements can be predicted more than one-half second before they occur [21–23]. This advantage may also contribute to safer farm work. Finally, future research will also have to show whether BCI communication could allow people with severe physical disabilities to steer tractors only by thinking.

6. Conclusions

In summary, it is possible to steer a tractor through an EMG-based HMI. In comparison with manual or automatic GPS guidance, the accuracy of the EMG-based HMI was lower. Nevertheless, since the standard deviation of error to the desired trajectory in the real tests differed by only a few centimeters between EMG-based HMI guidance and manual steering, and this difference is not relevant for most agricultural tasks, it can be concluded that it is possible to steer a tractor by an EMG-based HMI with almost the same accuracy as with manual steering.

Acknowledgments

This work was partially supported by the 2010 Regional Research Project Plan of the Junta de Castilla y León, (Spain), under project VA034A10-2. It was also partially supported by the 2009 ITACyL project entitled Realidad aumentada, BCI y correcciones RTK en red para el guiado GPS de tractores (ReAuBiGPS). J. Gomez-Gil worked on this article in Lexington, KY, USA, under a José Castillejo JC-2010-286 mobility grant from the Programa Nacional de Movilidad de Recursos Humanos de Investigación, Ministerio de Educación, Spain. The authors appreciate the contributions of the staff of the University of Kentucky Writing Center, Lexington, KY, USA, that improved the style of this article.

References

- 1. O’Connor M, Bell T, Elkaim G, Parkinson B. Automatic steering of farm vehicles using GPS. Proceedings of the 3rd International Conference on Precision Agriculture; Minneapolis, MN, USA. 23–26 June 1996.

- 2. Cho SI, Lee JH. Autonomous speedsprayer using differential global positioning system, genetic algorithm and fuzzy control. J. Agric. Eng. Res. 2000;76:111–119.

- 3. Stoll A, Dieter Kutzbach H. Guidance of a forage harvester with GPS. Precis. Agric. 2000;2:281–291.

- 4. Thuilot B, Cariou C, Martinet P, Berducat M. Automatic guidance of a farm tractor relying on a single CP-DGPS. Auton. Robot. 2002;13:53–71.

- 5. Gan-Mor S, Clark RL, Upchurch BL. Implement lateral position accuracy under RTK-GPS tractor guidance. Comput. Electron. Agric. 2007;59:31–38.

- 6. Gerrish JB, Fehr BW, Ee GRV, Welch DP. Self-steering tractor guided by computer-vision. Appl. Eng. Agric. 1997;13:559–563.

- 7. Cho SI, Ki NH. Autonomous speed sprayer guidance using machine vision and fuzzy logic. Trans. ASABE. 1999;42:1137–1143.

- 8. Benson ER, Reid JF, Zhang Q. Machine vision-based guidance system for an agricultural small-grain harvester. Trans. ASABE. 2003;46:1255–1264.

- 9. Kise M, Zhang Q, Rovira Más F. A stereovision-based crop row detection method for tractor-automated guidance. Biosyst. Eng. 2005;90:357–367.

- 10. Noguchi N, Ishii K, Terao H. Development of an agricultural mobile robot using a geomagnetic direction sensor and image sensors. J. Agric. Eng. Res. 1997;67:1–15.

- 11. Zhang Q, Reid JF, Noguchi N. Agricultural vehicle navigation using multiple guidance sensors. Proceedings of the International Conference of Field and Service Robotics; Pittsburgh, PA, USA. 29–31 August 1999; pp. 293–298.

- 12. Pilarski T, Happold M, Pangels H, Ollis M, Fitzpatrick K, Stentz A. The demeter system for automated harvesting. Auton. Robot. 2002;13:9–20.

- 13. Nagasaka Y, Umeda N, Kanetai Y, Taniwaki K, Sasaki Y. Autonomous guidance for rice transplanting using global positioning and gyroscopes. Comput. Electron. Agric. 2004;43:223–234.

- 14. Murakami N, Ito A, Will JD, Steffen M, Inoue K, Kita K, Miyaura S. Development of a teleoperation system for agricultural vehicles. Comput. Electron. Agric. 2008;63:81–88.

- 15. Slaughter DC, Giles DK, Downey D. Autonomous robotic weed control systems: A review. Comput. Electron. Agric. 2008;61:63–78.

- 16. Santana-Fernández J, Gómez-Gil J, del-Pozo-San-Cirilo L. Design and implementation of a GPS guidance system for agricultural tractors using augmented reality technology. Sensors. 2010;10:10435–10447. doi: 10.3390/s101110435.

- 17. Rovira-Más F. Sensor architecture and task classification for agricultural vehicles and environments. Sensors. 2010;10:11226–11247. doi: 10.3390/s101211226.

- 18. Barea R, Boquete L, Mazo M, López E. Wheelchair guidance strategies using EOG. J. Intell. Robot. Syst. 2002;34:279–299.

- 19. Wei L, Hu H, Yuan K. Use of forehead bio-signals for controlling an intelligent wheelchair. Proceedings of the IEEE International Conference on Robotics and Biomimetics (ROBIO’08); Bangkok, Thailand. 21–26 February 2008; pp. 108–113.

- 20. Neto AF, Celeste WC, Martins VR, Filho TFB, Filho MS. Human-Machine interface based on electro-biological signals for mobile vehicles. Proceedings of the IEEE International Symposium on Industrial Electronics (ISIE’06); Montreal, QC, Canada. 9–13 July 2006; pp. 2954–2959.

- 21. Bai O, Rathi V, Lin P, Huang D, Battapady H, Fei DY, Schneider L, Houdayer E, Chen X, Hallett M. Prediction of human voluntary movement before it occurs. Clin. Neurophysiol. 2011;122:364–372. doi: 10.1016/j.clinph.2010.07.010.

- 22. Funase A, Yagi T, Kuno Y, Uchikawa Y. Prediction of eye movements from EEG. Proceedings of the 6th International Conference on Neural Information Processing (ICONIP’99); Perth, Australia. 16–20 November 1999; pp. 1127–1131.

- 23. Morash V, Bai O, Furlani S, Lin P, Hallett M. Classifying EEG signals preceding right hand, left hand, tongue, and right foot movements and motor imageries. Clin. Neurophysiol. 2008;119:2570–2578. doi: 10.1016/j.clinph.2008.08.013.

- 24. Zhao C, Zheng C, Zhao M, Tu Y, Liu J. Multivariate autoregressive models and kernel learning algorithms for classifying driving mental fatigue based on electroencephalographic. Expert Syst. Appl. 2011;38:1859–1865.

- 25. Jap BT, Lal S, Fischer P, Bekiaris E. Using EEG spectral components to assess algorithms for detecting fatigue. Expert Syst. Appl. 2009;36:2352–2359.

- 26. Lin CT, Ko LW, Chung IF, Huang TY, Chen YC, Jung TP, Liang SF. Adaptive EEG-based alertness estimation system by using ICA-Based fuzzy neural networks. IEEE Trans. Circuits Syst. I-Regul. Pap. 2006;53:2469–2476.

- 27. Lin CT, Chen YC, Huang TY, Chiu TT, Ko LW, Liang SF, Hsieh HY, Hsu SH, Duann JR. Development of wireless brain computer interface with embedded multitask scheduling and its application on real-time driver’s drowsiness detection and warning. IEEE Trans. Biomed. Eng. 2008;55:1582–1591. doi: 10.1109/TBME.2008.918566.

- 28. De Rosario H, Solaz JS, Rodríguez N, Bergasa LM. Controlled inducement and measurement of drowsiness in a driving simulator. IET Intell. Transp. Syst. 2010;4:280–288.

- 29. Eoh HJ, Chung MK, Kim SH. Electroencephalographic study of drowsiness in simulated driving with sleep deprivation. Int. J. Ind. Ergon. 2005;35:307–320.

- 30. Lin CT, Ko LW, Chiou JC, Duann JR, Huang RS, Liang SF, Chiu TW, Jung TP. Noninvasive neural prostheses using mobile and wireless EEG. Proc. IEEE. 2008;96:1167–1183.

- 31.Kandel E, Schwartz JH, Jessell TM. Principles of Neural Science. 4th ed. McGraw-Hill; Palatino, CA, USA: 2000. [Google Scholar]

- 32.Croft RJ, Barry RJ. Removal of ocular artifact from the EEG: A review. Neurophysiol. Clin.-Clin. Neurophysiol. 2000;30:5–19. doi: 10.1016/S0987-7053(00)00055-1. [DOI] [PubMed] [Google Scholar]

- 33.Jervis B, Ifeachor E, Allen E. The removal of ocular artefacts from the electroencephalogram: A review. Med. Biol. Eng. Comput. 1988;26:2–12. doi: 10.1007/BF02441820. [DOI] [PubMed] [Google Scholar]

- 34.Fatourechi M, Bashashati A, Ward RK, Birch GE. EMG and EOG artifacts in brain computer interface systems: A survey. Clin. Neurophysiol. 2007;118:480–494. doi: 10.1016/j.clinph.2006.10.019. [DOI] [PubMed] [Google Scholar]

- 35.Shenoy P, Miller KJ, Crawford B, Rao RPN. Online electromyographic control of a robotic prosthesis. IEEE Trans. Biomed. Eng. 2008;55:1128–1135. doi: 10.1109/TBME.2007.909536. [DOI] [PubMed] [Google Scholar]

- 36.Foldes ST, Taylor DM. Discreet discrete commands for assistive and neuroprosthetic devices. IEEE Trans. Neural Syst. Rehabil. Eng. 2010;18:236–244. doi: 10.1109/TNSRE.2009.2033428. [DOI] [PubMed] [Google Scholar]

- 37.Soares A, Andrade A, Lamounier E, Carrijo R. The development of a virtual myoelectric prosthesis controlled by an EMG pattern recognition system based on neural networks. J. Intell. Inf. Syst. 2003;21:127–141. [Google Scholar]

- 38.Oonishi Y, Sehoon O, Hori Y. A new control method for power-assisted wheelchair based on the surface myoelectric signal. IEEE Trans. Ind. Electron. 2010;57:3191–3196. [Google Scholar]

- 39.Wei L, Hu H, Lu T, Yuan K. Evaluating the performance of a face movement based wheelchair control interface in an indoor environment. Proceedings of the IEEE International Conference on Robotics and Biomimetics (ROBIO’10); Tianjin, China. 14–18 December 2010; pp. 387–392. [Google Scholar]

- 40.Seki H, Takatsu T, Kamiya Y, Hikizu M, Maekawa M. A powered wheelchair controlled by EMG signals from neck muscles. In: Eiji A, Tatsuo A, Masaharu T, editors. Human Friendly Mechatronics. Elsevier Science; Amsterdam, The Netherlands: 2001. pp. 87–92. [Google Scholar]

- 41.Barreto AB, Scargle SD, Adjouadi M. A practical EMG-based human-computer interface for users with motor disabilities. J. Rehabil. Res. Dev. 2000;37:53–64. [PubMed] [Google Scholar]

- 42.Tecce JJ, Gips J, Olivieri CP, Pok LJ, Consiglio MR. Eye movement control of computer functions. Int. J. Psychophysiol. 1998;29:319–325. doi: 10.1016/s0167-8760(98)00020-8. [DOI] [PubMed] [Google Scholar]

- 43.Williams MR, Kirsch RF. Evaluation of head orientation and neck muscle EMG signals as command inputs to a human-computer interface for individuals with high tetraplegia. IEEE Trans. Neural Syst. Rehabil. Eng. 2008;16:485–496. doi: 10.1109/TNSRE.2008.2006216. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Chang GC, Kang WJ, Luh JJ, Cheng CK, Lai JS, Chen JJJ, Kuo TS. Real-time implementation of electromyogram pattern recognition as a control command of man-machine interface. Med. Eng. Phys. 1996;18:529–537. doi: 10.1016/1350-4533(96)00006-9. [DOI] [PubMed] [Google Scholar]

- 45.Artemiadis PK, Kyriakopoulos KJ. An EMG-based robot control scheme robust to time-varying EMG signal features. IEEE Trans. Inf. Technol. Biomed. 2010;14:582–588. doi: 10.1109/TITB.2010.2040832. [DOI] [PubMed] [Google Scholar]

- 46.Rovetta A, Cosmi F, Tosatti LM. Teleoperator response in a touch task with different display conditions. IEEE Trans. Syst. Man Cybern. 1995;25:878–881. [Google Scholar]

- 47.Wheeler KR, Jorgensen CC. Gestures as input: Neuroelectric joysticks and keyboards. IEEE Pervasive Comput. 2003;2:56–61. [Google Scholar]

- 48.Oppenheim H, Armiger RS, Vogelstein RJ. WiiEMG: A real-time environment for control of the Wii with surface electromyography. Proceedings of the IEEE International Symposium on Circuits and Systems (ISCAS’10); Paris, France. 30 May–2 June 2010; pp. 957–960. [Google Scholar]

- 49.Lyons GM, Sharma P, Baker M, O’Malley S, Shanahan A. A computer game-based EMG biofeedback system for muscle rehabilitation. Proceedings of the 25th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC’03); Cancun, Mexico. 17–21 September 2003; pp. 1625–1628. [Google Scholar]

- 50.Polak S, Barniv Y, Baram Y. Head motion anticipation for virtual-environment applications using kinematics and EMG energy. IEEE Trans. Syst. Man Cybern. A-Syst. Hum. 2006;36:569–576. [Google Scholar]

- 51.Wada T, Yoshii N, Tsukamoto K, Tanaka S. Development of virtual reality snowboard system for therapeutic exercise. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS’04); Sendai, Japan. 28 September–2 October 2004; pp. 2277–2282. [Google Scholar]

- 52.Takeuchi T, Wada T, Mukobaru M, Doi S. A training system for myoelectric prosthetic hand in virtual environment. Proceedings of the IEEE/ICME International Conference on Complex Medical Engineering (CME’07); Beijing, China. 22–27 May 2007; pp. 1351–1356. [Google Scholar]

- 53.Muller-Putz GR, Pfurtscheller G. Control of an electrical prosthesis with an SSVEP-based BCI. IEEE Trans. Biomed. Eng. 2008;55:361–364. doi: 10.1109/TBME.2007.897815. [DOI] [PubMed] [Google Scholar]

- 54.Horki P, Solis-Escalante T, Neuper C, Müller-Putz G. Combined motor imagery and SSVEP based BCI control of a 2 DoF artificial upper limb. Med. Biol. Eng. Comput. 2011;49:567–577. doi: 10.1007/s11517-011-0750-2. [DOI] [PubMed] [Google Scholar]

- 55.Patil P, Turner D. The development of brain-machine interface neuroprosthetic devices. Neurotherapeutics. 2008;5:137–146. doi: 10.1016/j.nurt.2007.11.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Galán F, Nuttin M, Lew E, Ferrez PW, Vanacker G, Philips J, Millán JDR. A brain-actuated wheelchair: Asynchronous and non-invasive brain-computer interfaces for continuous control of robots. Clin. Neurophysiol. 2008;119:2159–2169. doi: 10.1016/j.clinph.2008.06.001. [DOI] [PubMed] [Google Scholar]

- 57.Perrin X, Chavarriaga R, Colas F, Siegwart R, Millán JDR. Brain-coupled interaction for semi-autonomous navigation of an assistive robot. Robot. Auton. Syst. 2010;58:1246–1255. [Google Scholar]

- 58.Millan JR, Renkens F, Mourino J, Gerstner W. Noninvasive brain-actuated control of a mobile robot by human EEG. IEEE Trans. Biomed. Eng. 2004;51:1026–1033. doi: 10.1109/TBME.2004.827086. [DOI] [PubMed] [Google Scholar]

- 59.Nijholt A, Bos DPO, Reuderink B. Turning shortcomings into challenges: Brain-computer interfaces for games. Entertain. Comput. 2009;1:85–94. [Google Scholar]

- 60.Scherer R, Lee F, Schlogl A, Leeb R, Bischof H, Pfurtscheller G. Toward self-paced brain-computer communication: Navigation through virtual worlds. IEEE Trans. Biomed. Eng. 2008;55:675–682. doi: 10.1109/TBME.2007.903709. [DOI] [PubMed] [Google Scholar]

- 61.Finke A, Lenhardt A, Ritter H. The MindGame: A P300-based brain-computer interface game. Neural Netw. 2009;22:1329–1333. doi: 10.1016/j.neunet.2009.07.003. [DOI] [PubMed] [Google Scholar]

- 62.Chen WD, Zhang JH, Zhang JC, Li Y, Qi Y, Su Y, Wu B, Zhang SM, Dai JH, Zheng XX, Xu DR. A P300 based online brain-computer interface system for virtual hand control. J. Zhejiang Univ. Sci. C. 2010;11:587–597. [Google Scholar]

- 63.Bayliss JD. Use of the evoked potential P3 component for control in a virtual apartment. IEEE Trans. Neural Syst. Rehabil. Eng. 2003;11:113–116. doi: 10.1109/TNSRE.2003.814438. [DOI] [PubMed] [Google Scholar]

- 64.Liu B, Wang Z, Song G, Wu G. Cognitive processing of traffic signs in immersive virtual reality environment: An ERP study. Neurosci. Lett. 2010;485:43–48. doi: 10.1016/j.neulet.2010.08.059. [DOI] [PubMed] [Google Scholar]

- 65.Choi C, Micera S, Carpaneto J, Kim J. Development and quantitative performance evaluation of a noninvasive EMG computer interface. IEEE Trans. Biomed. Eng. 2009;56:188–191. doi: 10.1109/TBME.2008.2005950. [DOI] [PubMed] [Google Scholar]

- 66.Nojd N, Hannula M, Hyttinen J. Electrode position optimization for facial EMG measurements for human-computer interface. Proceedings of the 2nd International Conference on Pervasive Computing Technologies for Healthcare; Tampere, Finland. 29 January–1 February 2008; pp. 319–322. [DOI] [PubMed] [Google Scholar]

- 67.Moon I, Lee M, Mun M. A novel EMG-based human-computer interface for persons with disability. Proceedings of the IEEE International Conference on Mechatronics (ICM’04); Istanbul, Turkey. 3–5 June 2004; pp. 519–524. [Google Scholar]

- 68.Bu N, Okamoto M, Tsuji T. A hybrid motion classification approach for EMG-based human-robot interfaces using Bayesian and neural networks. IEEE Trans. Robot. 2009;25:502–511. [Google Scholar]

- 69.Xu Z, Xiang C, Lantz V, Ji-Hai Y, Kong-Qiao W. Exploration on the feasibility of building muscle-computer interfaces using neck and shoulder motions. Proceedings of the 31st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC’09); Minneapolis, MN, USA. 2–6 September 2009; pp. 7018–7021. [DOI] [PubMed] [Google Scholar]

- 70.de la Rosa R, Alonso A, Carrera A, Durán R, Fernández P. Man-machine interface system for neuromuscular training and evaluation based on EMG and MMG signals. Sensors. 2010;10:11100–11125. doi: 10.3390/s101211100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Eckhouse RH, Maulucci RA. A multimedia system for augmented sensory assessment and treatment of motor disabilities. Telemat. Inform. 1997;14:67–82. [Google Scholar]

- 72.Jain AK, Duin RPW, Jianchang M. Statistical pattern recognition: A review. IEEE Trans. Pattern Anal. Mach. Intell. 2000;22:4–37. [Google Scholar]

- 73.Zhu X, Wu J, Cheng Y, Wang Y. A unified framework to exploit information in BCI data for continuous prediction. Neurocomputing. 2008;71:1022–1031. [Google Scholar]

- 74.Felzer T, Freisleben B. Analyzing EEG signals using the probability estimating guarded neural classifier. IEEE Trans. Neural Syst. Rehabil. Eng. 2003;11:361–371. doi: 10.1109/TNSRE.2003.819785. [DOI] [PubMed] [Google Scholar]

- 75.Farquhar J. A linear feature space for simultaneous learning of spatio-spectral filters in BCI. Neural Netw. 2009;22:1278–1285. doi: 10.1016/j.neunet.2009.06.035. [DOI] [PubMed] [Google Scholar]

- 76.Burke DP, Kelly SP, de Chazal P, Reilly RB, Finucane C. A parametric feature extraction and classification strategy for brain-computer interfacing. IEEE Trans. Neural Syst. Rehabil. Eng. 2005;13:12–17. doi: 10.1109/TNSRE.2004.841881. [DOI] [PubMed] [Google Scholar]

- 77.Lipták BG. Process Control and Optimization. CRC Press; Boca Raton, FL, USA: 2006. [Google Scholar]

- 78.Ogata K. Modern Control Engineering. 4th ed. Prentice Hall; Englewood Cliffs, NJ, USA: 2001. [Google Scholar]

- 79.Reznik L. Fuzzy Controllers. Newnes; Melbourne, Australia: 1997. [Google Scholar]

- 80.Emotiv—Brain Computer Interface Technology. Available online: http://www.emotiv.com (accessed on 14 June 2011)

- 81.Carrera-González A, Alonso-García S, Gómez-Gil J. Design, development and implementation of a steering controller box for an automatic agricultural tractor guidance system, using fuzzy logic. In: Iskander M, Kapila V, Karim MA, editors. Technological Developments in Education and Automation. Springer; New York, NY, USA: 2010. pp. 153–158. [Google Scholar]

- 82.Ranky GN, Adamovich S. Analysis of a commercial EEG device for the control of a robot arm. Proceedings of the 36th Annual Northeast Bioengineering Conference (NEBEC’10); New York, NY, USA. 27–28 March 2010; pp. 1–2. [Google Scholar]

- 83.Rosas-Cholula G, Ramírez-Cortes JM, Alarcon-Aquino V, Martinez-Carballido J, Gomez-Gil P. On signal P-300 detection for BCI applications based on wavelet analysis and ICA preprocessing. Proceedings of the IEEE Electronics, Robotics and Automotive Mechanics Conference (CERMA’10); Cuernavaca, Mexico. 25–28 September 2010; pp. 360–365. [Google Scholar]

- 84.Esfahani ET, Sundararajan V. Classification of primitive shapes using brain-computer interfaces. Comput.-Aided Des. 2011, in press. [Google Scholar]

- 85.Paulson LD. A new Wi-Fi for peer-to-peer communications. Computer. 2008;41:19–21. [Google Scholar]

- 86.Zintel E. Tools and products. IEEE Comput. Graph. Appl. 2008;28:103–104. [Google Scholar]

- 87.Stamps K, Hamam Y. Towards inexpensive BCI control for wheelchair navigation in the enabled environment—A hardware survey. In: Yao Y, Sun R, Poggio T, Liu J, Zhong N, Huang J, editors. Brain Informatics. Springer; Heidelberg, Germany: 2010. pp. 336–345. [Google Scholar]

- 88.Jackson MM, Mappus R. Applications for brain-computer interfaces. In: Tan DS, Nijholt A, editors. Brain-Computer Interfaces. Springer; London, UK: 2010. pp. 89–103. [Google Scholar]

- 89.Allison BZ. Toward ubiquitous BCIs. In: Graimann B, Pfurtscheller G, Allison B, editors. Brain-Computer Interfaces. Springer; Heidelberg, Germany: 2010. pp. 357–387. [Google Scholar]

- 90.Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM. Brain-computer interfaces for communication and control. Clin. Neurophysiol. 2002;113:767–791. doi: 10.1016/s1388-2457(02)00057-3. [DOI] [PubMed] [Google Scholar]

- 91.Maggi L, Parini S, Piccini L, Panfili G, Andreoni G. A four command BCI system based on the SSVEP protocol. Proceedings of the 28th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBS’06); New York, NY, USA. 30 August–3 September 2006; pp. 1264–1267. [DOI] [PubMed] [Google Scholar]

- 92.Blankertz B, Dornhege G, Krauledat M, Muller KR, Kunzmann V, Losch F, Curio G. The Berlin brain-computer interface: EEG-based communication without subject training. IEEE Trans. Neural Syst. Rehabil. Eng. 2006;14:147–152. doi: 10.1109/TNSRE.2006.875557. [DOI] [PubMed] [Google Scholar]

- 93.Gao X, Xu D, Cheng M, Gao S. A BCI-based environmental controller for the motion-disabled. IEEE Trans. Neural Syst. Rehabil. Eng. 2003;11:137–140. doi: 10.1109/TNSRE.2003.814449. [DOI] [PubMed] [Google Scholar]

- 94.Wang Y, Wang YT, Jung TP. Visual stimulus design for high-rate SSVEP BCI. Electron. Lett. 2010;46:1057–1058. [Google Scholar]

- 95.Krausz G, Scherer R, Korisek G, Pfurtscheller G. Critical decision-speed and information transfer in the “Graz Brain–Computer Interface”. Appl. Psychophysiol. Biofeedback. 2003;28:233–240. doi: 10.1023/a:1024637331493. [DOI] [PubMed] [Google Scholar]

- 96.Rebsamen B, Cuntai G, Zhang H, Wang C, Teo C, Ang MH, Burdet E. A brain controlled wheelchair to navigate in familiar environments. IEEE Trans. Neural Syst. Rehabil. Eng. 2010;18:590–598. doi: 10.1109/TNSRE.2010.2049862. [DOI] [PubMed] [Google Scholar]