1. Introduction

1.1. Light and Electron Microscopy and Their Impact in Biology

To fully understand biological processes from the metabolism of a bacterium to the operation of a human brain, it is necessary to know the three-dimensional (3D) spatial arrangement and dynamics of the constituent molecules, how they assemble into complex molecular machines, and how they form functional organelles, cells, and tissues. The methods of X-ray crystallography and NMR spectroscopy can provide detailed information on molecular structure and dynamics. At the cellular level, optical microscopy reveals the spatial distribution and dynamics of molecules tagged with fluorophores. Electron microscopy (EM) overlaps with these approaches, covering a broad range from atomic to cellular structures. The development of cryogenic methods has enabled EM imaging to provide snapshots of biological molecules and cells trapped in a close to native, hydrated state.1,2

Because of the importance of macromolecular assemblies in the machinery of living cells and progress in the EM and image processing methods, EM has become a major tool for structural biology over the molecular to cellular size range. There have been tremendous advances in understanding the 3D spatial organization of macromolecules and their assemblies in cells and tissues, due to developments in both optical and electron microscopy. In light microscopy, super-resolution and single molecule methods have pushed the resolution of fluorescence images to ∼50 nm, using the power of molecular biology to fuse molecules of interest with fluorescent marker proteins.(3) X-ray cryo-tomography is developing as a method for 3D reconstruction of thicker (10 μm) hydrated samples, with resolution approaching 15 nm.(4) In EM, major developments in instrumentation and methods have advanced the study of single particles (isolated macromolecular complexes) in vitrified solution as well as in 3D reconstruction by tomography of irregular objects such as cells or subcellular structures.1,5−7 Cryo-sectioning can be used to prepare vitrified sections of cells and tissues that would otherwise be too thick to image by transmission EM (TEM).8,9

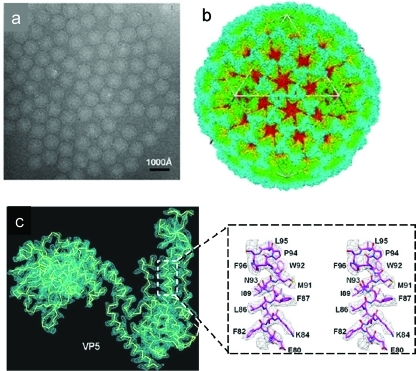

In parallel, software improvements have facilitated 3D structure determination from the low contrast, low signal-to-noise ratio (SNR) images of projected densities provided by TEM of biological molecules.10−14 Alignment and classification of images in both 2D and 3D are key methods for improving SNR and detection and sorting of heterogeneity in EM data sets.(14) The resolution of single-particle reconstructions is steadily improving and has gone beyond 4 Å for some icosahedral viruses and 5.5 Å for asymmetric complexes such as ribosomes, giving a clear view of protein secondary structure elements and, in the best cases, resolving the protein or nucleic acid fold.15,16

1.2. EM of Macromolecular Assemblies, Isolated and in Situ

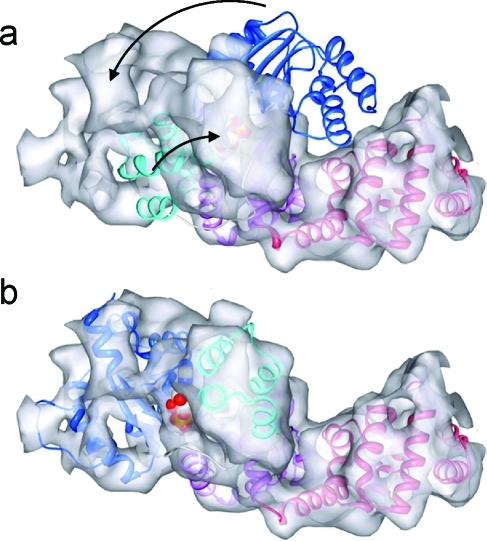

A variety of molecular assemblies of different shapes, sizes, and biochemical states can be studied by TEM, provided the sample thickness is well below 1000 nm. There is a range of sample types typified by two extreme cases: biochemically purified, isolated complexes (single particles or ordered assemblies such as 2D crystals) and unique, individual objects such as tissue sections, cells, or organelles. From preparations of isolated complexes with many identical single particles present on an EM grid, many views of the same molecule can be obtained, so that their 3D structure can be calculated. Near-atomic resolution maps were first obtained from samples in ordered arrays such as 2D crystals and helices.17,18 Membrane proteins can be induced to form 2D crystals in lipid bilayers, although examples of highly ordered crystals leading to high-resolution 3D structures are still rare. If membrane-bound complexes are large enough, they can also be prepared as single particles using detergents or in liposomes. In general, the single-particle approach is widely applicable and has caught up with the crystallographic one. This approach is applicable to homogeneous preparations of single particles with any symmetry and molecular masses in the range of 0.5–100 MDa (e.g., viruses, ribosomes) and can reveal fine details of the 3D structure.(15) The study of single particles by cryo-EM in the 0.1–0.5 MDa size range still needs great care to avoid producing false but self-consistent density maps. In addition, the single-particle approach can be used to correct for local disorder in ordered arrays, improving the yield of structural information. Regarding the quality of this structural information, the resolution of cryo-EM is steadily improving, and comparisons of cryo-EM results with X-ray crystallography or NMR of the same molecules indicate that cryo-EM often provides faithful snapshots of the native structure in solution. 
A detailed account of the basic principles of imaging and diffraction can be found in ref (19).

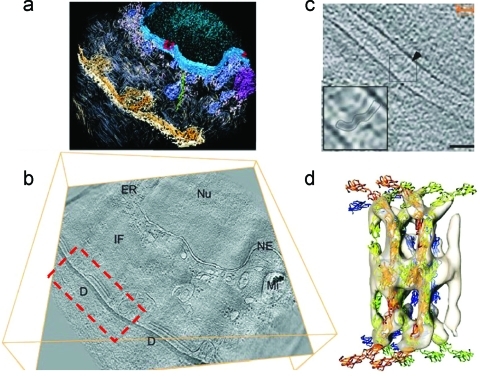

For cells, organelles, and tissue sections, electron tomography provides a wealth of 3D information, and methods for harvesting this information are in an active state of development. Automated tomographic data collection is well established on modern microscopes. A major factor limiting resolution in cryo-electron tomography is radiation damage of the specimen by the electron beam during acquisition of a tilt series. At the forefront of this field are efforts to optimize contrast at low electron dose, in order to locate and characterize macromolecular complexes within tomograms of cells and tissues. At present, complexes must be well over 1 MDa to be clearly identifiable in an EM tomographic reconstruction. Examples of important biological structures characterized by electron tomography include the nuclear pore complex(20) and the flagellar axoneme.(21) For thicker, cellular samples, X-ray microscopy (tomography) provides information in the 15–100 nm resolution range, bridging EM tomography and fluorescence methods.

The above developments have led to a flourishing field enabling multiscale imaging to link atomic structure to cellular function and dynamics. In this Review, we aim to cover the theoretical background and technical advances in instrumentation, software, and experimental methods underlying the major developments in 3D structure determination of macromolecular assemblies by EM and to review the current state of the art in the field.

2. EM Imaging

2.1. Sample Preparation

Electron imaging is a powerful technique for visualizing 3D structural details. However, because electrons interact strongly with matter, the electron path of the microscope must be kept under high vacuum to avoid unwanted scattering by gas molecules. Consequently, the EM specimen must be in the solid state for imaging, and special preparation techniques are necessary to either dehydrate or stabilize hydrated biological samples under vacuum.(22)

2.1.1. Negative Staining of Isolated Assemblies

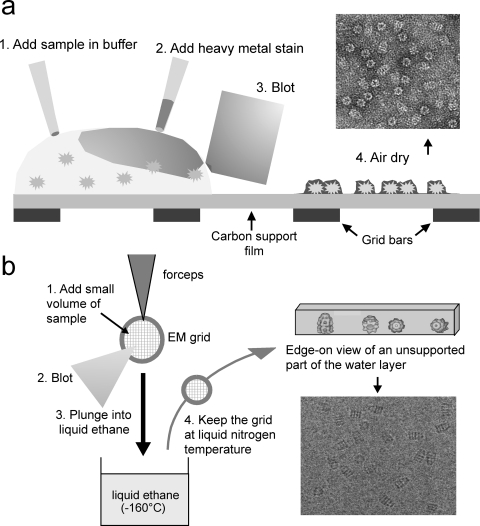

The simplest method for examining a solution or suspension of isolated particles such as viruses or other macromolecules is negative staining, in which a droplet of the suspension is spread on an EM support film and then embedded in a heavy metal salt solution, typically uranyl acetate, blotted to a thin film and allowed to dry23,24 (Figure 1a). Although uranyl acetate is the most widely used stain and gives the highest contrast, some structures are better preserved in other stains such as tungsten or molybdenum salts.25,26 The heavy metal stain is deposited as a dense coat outlining the surfaces of the biological assembly, giving information about the size, shape, and symmetry of the particle, as well as an overview of the homogeneity of the preparation. The method is called negative staining because the macromolecular shape is seen by exclusion rather than binding of stain. The method is quick and simple, although not foolproof. Some molecules are well preserved in negative stain, but fragile assemblies can collapse or disintegrate during staining and drying. In general, the 3D structure becomes flattened to a greater or lesser degree, and the stain may not cover the entire molecule, so that parts of the structure may be distorted or absent from the image data. Therefore, it is normally preferable to use cryo-methods for 3D structure determination. The exception is for small structures, below ∼100–200 kDa, for which the signal in cryo-EM may be too weak for accurate detection and orientation determination. For such structures, 3D reconstruction is done from negative stain images and can provide much useful information.

Figure 1.

Negative stain and cryo EM sample preparation. (a) Schematic view of sample deposition, staining, and drying, with an example negative stain image. (b) Schematic of plunge freezing and of a vitrified layer, and an example cryo EM image. Panel (b) is adapted with permission from ref (24). Copyright 2000 International Union of Crystallography.

2.1.2. Cryo EM of Isolated and Subcellular Assemblies

Macromolecules and cells are normally in aqueous solution, and hydration is necessary for their structural integrity. Cryo EM makes it possible to stabilize samples in the native, hydrated state, even under high vacuum. The main technical effort of cryo EM is to keep the specimen cold and free of surface contamination in an otherwise warm microscope while retaining mechanical and thermal stability. Rapid freezing is used to bring the sample to the solid state without dehydration or ice crystallization, and the sample is maintained at low temperature during transfer and observation in the EM. The method widely used for freezing aqueous solutions is to blot them to a thin layer and immediately plunge into liquid ethane or propane (−182 °C) cooled by liquid nitrogen for rapid heat transfer from the specimen1,27 (Figure 1b(24)). Cooling by plunging into liquid ethane is much faster than plunging directly into liquid nitrogen because liquid ethane is used near to its freezing point rather than at its boiling point, so it does not evaporate and produce an insulating gas layer. Rapid cooling traps the biological molecules in their native, hydrated state embedded in glass-like, solid water, vitrified ice, and prevents the formation of ice crystals, which would be very damaging to the specimen. There are two tremendous advantages of cryo EM: the sample, which is kept around −170 °C, near liquid nitrogen temperature (−196 °C), is trapped in a native-like, hydrated state in the high vacuum of the microscope column, and the low temperature greatly slows the effects of electron beam damage.

An important consideration in cryo EM sample preparation is the type of support film. Some samples adhere to the carbon support film, but continuous carbon films contribute additional background scattering and reduce the image contrast. Therefore, perforated films are often used, in which the sample is imaged in regions of ice suspended over holes in the support film. Home-made holey films provide a random distribution of holes. Nanofabricated grids (e.g., Quantifoil grids, Quantifoil Micro Tools GmbH; C-flat grids, Protochips, Inc.) with regularly arranged holes are used for automated and manual data collection. A significantly higher sample concentration is usually needed for good particle distribution in holes. The ice thickness is critical for achieving good contrast while preserving the integrity of the structure. It takes some experience to adjust the blotting so that the ice has the optimal thickness for each sample. The general rule is to have the ice as thin as possible without squashing the molecules of interest.

In addition to thermal stability, a major issue is sample conductance; ice unsupported by carbon film is an insulator, and charging effects caused by the electron beam can seriously degrade the image, especially at high tilt. This problem is lessened by including an adjacent carbon layer in the illuminated area. New support materials with higher conductivity than carbon are being investigated.28,29

The single-particle approach can be applied to preparations of isolated objects such as particles in aqueous solution or membrane complexes in detergent solution. EM is experimentally more difficult in detergent, which may give extra background and change the properties of the ice. Membrane complexes can also be imaged in lipid vesicles,30,31 in a variant of single-particle analysis in which the particle images are excised from larger assemblies.

There is a lower size limit for single-particle analysis, because the object must generate enough contrast to be detected and for its orientation to be determined. Single-particle cryo-EM becomes very difficult when the particle is less than a few hundred kDa in mass. The size limit is affected by the shape of the particle; an extended structure with distinct projections in different directions will be much easier to align than a compact spherical particle of similar mass. For small particles, negative stain EM is used. A hybrid approach to sample preparation, cryo-negative staining, has been developed.22,32,33 The sample is embedded in stain solution and then vitrified, after partial drying. This method allows smaller complexes to be studied by cryo EM, but has the disadvantage that the sample is in a high concentration of heavy metal salt, far from physiological conditions.

2.1.3. Stabilization of Dynamic Assemblies

As molecular biology moves toward studies of more complex systems, the focus of interest has moved toward more biochemically heterogeneous samples. Although there are computational methods for sorting particles with structural variations (section 9), the success of the experiment depends critically on the quality of the biochemical preparation. One approach for dealing with unstable, heterogeneous assemblies is to use protein cross-linkers such as glutaraldehyde to stabilize complexes during density gradient separation, a procedure termed GraFix.22,34 Promising results have been obtained with very difficult samples such as complexes in RNA editing,(35) but it should be noted that cross-linkers may also introduce artifacts in flexible assemblies.

2.1.4. EM Preparation of Cells and Tissues

2.1.4.1. High Pressure Freezing

Most cellular structures are too thick for TEM imaging, and samples are prepared as thin sections. Standard chemical fixation has provided the classical view of cell structure, in which the sample is cross-linked with fixatives and then dehydrated and embedded in plastic resin so that it can be readily sectioned for EM examination. Plastic-embedded sections are contrasted with heavy metal staining. Although this treatment can lead to extensive rearrangement and extraction of cell and tissue contents, the great majority of cell structure information at the EM level has been derived from such material.

High-pressure freezing has made it possible to avoid chemical fixation so that cell and tissue sections can be imaged in the vitreous state.36−38 To vitrify specimens thicker than a few micrometers, it is necessary to do the rapid freezing at high pressures, around 2000 bar, because the freezing rate in thicker samples at ambient pressure is not high enough to prevent ice crystal growth. Instruments for high-pressure freezing (HPF)(36) were first developed in the 1960s and are widely used in cell preparation, in combination with freeze-substitution (see section 2.1.4.2). The specimen is introduced into a pressure chamber at room temperature and rapidly pressurized, with cooling provided by liquid N2 flow through the metal sample holder. Samples such as yeast or bacterial cells in 100–200 μm thick pellets or pastes can readily be vitrified by HPF. Samples with higher water content, such as embryonic or brain tissue, are more difficult to vitrify in this manner, because water is a poor thermal conductor, and thinner tissue slices (≤100 μm) must be used. For the same reason, aqueous media surrounding the sample must be supplemented by antifreeze agents such as 1-hexadecene(39) or 20% dextran before vitrification by HPF.(40)

2.1.4.2. Freeze-Substitution

Freeze-substitution (FS) eliminates some of the artifacts of chemical fixation and dehydration and provides greatly improved structural preservation, while retaining the ease of working with plastic sections and room temperature microscopy.(41) The sample is initially vitrified as for cryo-sectioning, but then gradually warmed for substitution of the water with acetone, followed by staining and resin embedding at −90 to −50 °C (see, for example, ref (42)). Some resins can be polymerized by UV illumination at low temperature, so that all processing is completed at low temperature. With this treatment, cytoplasmic contents such as ribosomes are retained and rearrangement of cell structures is reduced(36) (Figure 2a). However, small ice crystals form during FS processing, and staining is not uniform, so that the results are not reliable on the molecular scale.

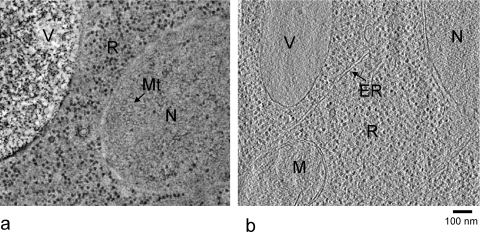

Figure 2.

Slices of single axis tomograms of yeast cell sections prepared by freeze-substitution (a) and cryo-sectioning of vitrified samples (b). The black dots are mainly ribosomes. R, ribosomes; N, nucleus; V, vacuole; MT, microtubules (ring shaped cross sections); M, mitochondrion; ER, endoplasmic reticulum. Panel (b) was produced by Dan Clare.

Importantly, antigenicity is often conserved in FS material, so that immunolabeling or other chemical labeling can be done on the sections.(36) This is a major advantage over vitreous sectioning, for which antibody labeling is impossible. FS is an important adjunct to cryo-sectioning for tomography of cell structures, because large volumes are far more readily imaged and structures of interest tracked in the 200–300 nm thick sections that can be examined with FS. In addition, the fluorescence of GFP is retained in the freeze-substituted sections, facilitating correlative fluorescence/EM.(43) A chemical reaction of the GFP chromophore with diaminobenzidine produces an electron dense product, allowing GFP tags to be precisely localized in EM sections.(44) Therefore, the combination of cryo-sectioning and freeze-substitution on the same sample can provide an overview of the 3D structure, chemical labeling, and detailed structural information on regions of interest.

2.1.4.3. Cryo-Sectioning of Frozen-Hydrated Specimens

Sectioning removes the restriction of cryo EM to examination of only the thinnest bacterial cells or cell extensions. After a long period of development, vitreous sectioning has started to become generally accessible.8,9 The vitrified block is sectioned at −140 to −160 °C with a diamond knife. Compression of the sections along the cutting direction and crevasses on the surface of thicker sections present mechanical artifacts. Nevertheless, HPF and cryo-sectioning currently provide the best view into the native structures of cells and tissues (Figure 2b). Because the native structures are preserved, macromolecular structures can be imaged in vitrified sections. Therefore, cryo-electron tomography (cryo-ET) is an important step toward the ultimate goal of understanding the atomic structure of the cell.(45)

Cryo-sections must be around 50–150 nm thick, to find the best compromise between formation of crevasses (thicker) and section compression (thinner). Because of the low contrast and the tiny fraction of cell volume sampled in such thin sections, it can be very hard to locate the object of interest, unless the structure is large and very abundant, or associated with large-scale landmarks, such as membranes or large organelles. For this reason, and also for biochemical identification, a very important recent development is correlative cryo-fluorescence and EM.(46) Cryo stages are being developed for fluorescence microscopes, and if the signal is strong, the fluorescence can be first mapped out on the cryo section or cell culture on an indexed (“finder”) grid, and then the same grid can be examined by cryo EM.

2.1.4.4. Focused Ion Beam Milling

An alternative to cryo-sectioning currently being explored is focused ion beam milling, in which material is removed from the surface of a frozen specimen by irradiation with a beam of gallium ions, until the sample is thin enough for TEM.47,48 Milling is done under visual control in a cryo-scanning EM, followed by cryo-transfer to the TEM for tomography. Preliminary work suggests that the thinned layer remains vitrified, without noticeable effects of the ion beam exposure. The method produces a smooth surface, importantly, without section compression or crevasses, thus avoiding the mechanical artifacts of cryo-sectioning.

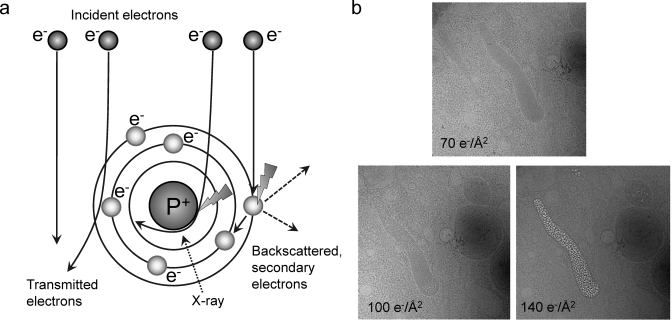

2.2. Interaction of Electrons with the Specimen

Imaging with electrons provides the advantage of high resolution, due to their short wavelength. However, the strong interaction of electrons from the primary electron beam with the sample causes radiation damage in the sample. The nature of the interaction of the electrons with the sample depends on the electron energy and sample composition.(49) Some electrons pass through the sample without any interactions, others are deflected by the electrostatic field of the nucleus, screened by the outer orbital electrons of specimen atoms, and some electrons may collide or nearly collide with the atomic nuclei, suffering high angle deflections or even backscattering. Of the interacting electrons, some are scattered without energy loss (elastic scattering), but others transfer energy to the specimen (inelastic scattering) (Figure 3a). Energy transfer from incident electrons can ionize atoms in the specimen, induce X-ray emission, chemical bond rearrangement, and free radicals, or induce secondary electron scattering, all of which change the specimen structure. Radiation damage of specimens is a significant limitation in high-resolution imaging of biological molecules. Prolonged exposure to an intense electron beam in an EM produces a level of damage comparable to that caused to living organisms exposed to an atomic explosion.(50) Typical values of electron exposure used for biological samples range from 1 to 20 e⁻/Å². Although biological specimens can tolerate an exposure of 100–500 e⁻/Å², depending on specimen temperature and chemical composition, the highest resolution features of the specimen are already affected at electron exposures of 10 e⁻/Å² or less.(51) Therefore, radiation damage dictates the experimental conditions and limits the resolution of biological structure determination, especially for cryo-tomography.
To reduce radiation damage during area selection, alignment, and focusing, special “low dose” systems are used to deflect the beam until the final step of image recording.52,53 An example of electron beam damage is shown in Figure 3b.(54) Lower electron doses can be used for two-dimensional (2D) crystals than for single particles, because the signal from all unit cells is averaged in each diffraction spot. Therefore, the diffraction spots are visible even when the unit cells are not visible in the image.55,56
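The averaging argument for 2D crystals can be illustrated numerically. The following is a minimal sketch, not taken from the cited work: it assumes independent Gaussian noise of equal strength in every copy (an idealization), and the function name and parameters are hypothetical. Under that assumption, averaging N equivalent copies of a weak signal reduces the residual noise by about a factor of √N, which is why a low dose per unit cell can still yield a measurable averaged signal.

```python
import random
import statistics

def noise_std_after_averaging(n_copies, n_pixels=2000, sigma=1.0, seed=0):
    """Average n_copies noisy measurements per pixel and return the
    standard deviation of the residual noise in the averaged image.

    The true signal is taken as zero, so only the noise is measured.
    Gaussian, independent noise is an assumption of this sketch.
    """
    rng = random.Random(seed)
    averaged = []
    for _ in range(n_pixels):
        samples = [rng.gauss(0.0, sigma) for _ in range(n_copies)]
        averaged.append(sum(samples) / n_copies)
    return statistics.pstdev(averaged)

if __name__ == "__main__":
    for n in (1, 4, 16, 64):
        measured = noise_std_after_averaging(n)
        expected = 1.0 / n ** 0.5
        print(f"N={n:3d}  residual noise {measured:.3f}  "
              f"1/sqrt(N) = {expected:.3f}")
```

The same reasoning applies to averaging aligned single-particle images, with the caveat that real images are not perfectly aligned and real noise is not perfectly independent.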

Figure 3.

Interaction of the electron beam with the sample. (a) Schematic of elastic and inelastic electron scattering. Collision of beam electrons with atomic electrons or nuclei leads to energy loss (inelastic scattering), while deflection by the electron cloud does not change the energy of the electron (elastic scattering). (b) Effect of electron beam damage on a cryo image of a cell. The electron dose is shown on the images. Increasing dose causes damage to cellular structures, but different cell regions and materials show the effects of damage at different levels of radiation. In this case, damage first appears on the Weibel Palade bodies. Reproduced with permission from ref (54). Copyright 2009 National Academy of Sciences.

2.3. Image Formation

The basic principle of electron optical lenses is the deflection of electrons, negatively charged particles of small mass, by an electromagnetic field. Similar to a conventional light microscope, the EM consists of an electron source, a series of lenses, and an image detecting system, which can be a viewing screen, a photographic film, or a digital camera. Electron microscopy has made it possible to obtain images at a resolution of ∼0.8 Å for radiation-insensitive materials science samples,(57) 1.9 Å for electron crystallography of well-ordered 2D protein crystals,(58) 3.3 Å for symmetrical biological single-particle macromolecular complexes,(15) and 5.5 Å for the ribosome.16,17

2.3.1. Electron Sources

The standard electron source is a tungsten filament heated to 2000–3000 °C. At this temperature, the electron energy is greater than the work function of tungsten. The thermally emitted electrons are accelerated by an electric field between the anode and filament. Another common electron source is a LaB6 crystal, which produces electrons from a smaller effective area of the crystal vertex whose emitting surface is at a lower temperature because of a lower work function. This beam has higher coherence and current density. At present, the most advanced electron source, used in high performance microscopes, is the field emission gun (FEG).(59) The FEG beam is still smaller in diameter, more coherent, and ∼500× brighter, with a very small spread of energies.(59) This is achieved by using single crystal tungsten sharpened to give a tip radius ∼10–25 nm, as compared to 5–10 μm for LaB6 crystals. The tip is coated with ZrO2, which lowers the work function for electrons. Thermally emitted electrons are extracted from the crystal tip by a strong potential gradient at the emitter surface (field emission), and then accelerated through voltages of 100–300 kV.
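To give a sense of scale for the accelerating voltages quoted above, the electron wavelength can be estimated from the relativistic de Broglie relation, λ = h / √(2·m·eV·(1 + eV/(2·m·c²))). The sketch below is an illustration added here, not part of the cited sources; the function name is hypothetical and the constants are standard SI values.

```python
import math

H = 6.62607015e-34       # Planck constant, J s
M_E = 9.1093837015e-31   # electron rest mass, kg
Q_E = 1.602176634e-19    # elementary charge, C
C = 299792458.0          # speed of light, m/s

def electron_wavelength_pm(voltage_v):
    """Relativistic de Broglie wavelength (in picometres) of an electron
    accelerated from rest through voltage_v volts."""
    energy = Q_E * voltage_v  # kinetic energy in joules
    momentum = math.sqrt(
        2.0 * M_E * energy * (1.0 + energy / (2.0 * M_E * C ** 2))
    )
    return H / momentum * 1e12

if __name__ == "__main__":
    for kv in (100, 200, 300):
        print(f"{kv} kV: {electron_wavelength_pm(kv * 1e3):.2f} pm")
    # Gives roughly 3.70, 2.51, and 1.97 pm, respectively.
```

These wavelengths are far smaller than interatomic distances, so in practice resolution is limited by lens aberrations, specimen damage, and noise rather than by the wavelength itself.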

2.3.2. The Electron Microscope Lens System

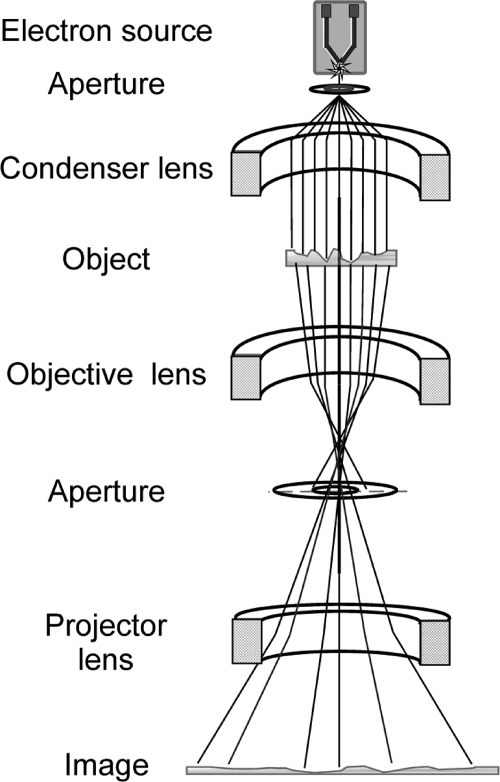

As in light microscopy, condenser lenses convert the diverging electron beam into a parallel one illuminating the specimen (Figure 4). The specimen in modern electron microscopes is located in the middle of the objective lens, fully immersed in the magnetic field. An objective aperture is placed in the back focal plane of this lens; the aperture prevents electrons scattered at high angles from reaching the image plane, thus improving the image contrast. The objective lens provides the primary magnification (20–50×) and is the most important optical element of the electron microscope. Its aberrations play a key role in imaging. The image is further magnified by intermediate and projector lenses before the electrons arrive at the detector. Alternatively, the electron diffraction pattern at the back focal plane of the objective can be recorded after being magnified.

Figure 4.

Simplified schematic representation of an electron microscope.

2.3.3. Electron Microscope Aberrations

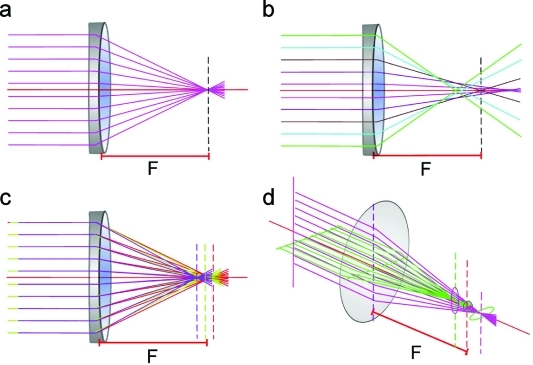

Electromagnetic lenses have the same types of defects as optical lenses: spherical and chromatic aberrations, curvature of the field, astigmatism, and coma,(60) of which the most significant are spherical, chromatic, and astigmatic aberrations (Figure 5). The quality of the beam source is essential for coherence of the electron beam needed for high-resolution imaging. Spherical aberration is an image distortion due to the dependence of the ray focus on the distance from the optical axis (Figure 5b). Rays passing through the periphery of the lens are refracted more strongly than paraxial rays. Chromatic aberration is caused by the lens focusing rays with longer wavelengths more strongly so that part of the image is formed in a plane closer to the object, resulting in “colored” halos around edges in the images (Figure 5c). Chromatic aberration in electron microscopes results from variations in electron energy caused by voltage variation in the electron source, electron energy spread in the primary beam, and energy loss in inelastic events in the sample, and blurs the fine details in images. Astigmatic aberration is produced by deviation from axial symmetry in the lens, so that the lens is slightly stronger in one direction than in the perpendicular direction. This results in two different image planes corresponding to these directions, so that the image of a point becomes an ellipse (Figure 5d). Astigmatism in electron microscopes is caused by an asymmetric magnetic field in the lenses and can be compensated by stigmator coils. The aberrations described here are the major ones that affect the images, although there are also other, higher order aberrations, which must be considered for high-resolution analysis.(61)

Figure 5.

Ray diagrams of lens aberrations: (a) perfect lens, (b) spherical, (c) chromatic, and (d) astigmatic aberration. F is the focal length of the lens.

2.4. Contrast Transfer

Normally, images represent intensity variations caused by regional variations in specimen transmission. These variations are recorded by a detector system; the image contrast Cont_im is defined as the ratio of the difference between the brightest (ρmax) and darkest (ρmin) points in the image to the average intensity ρ̅ of the whole image:

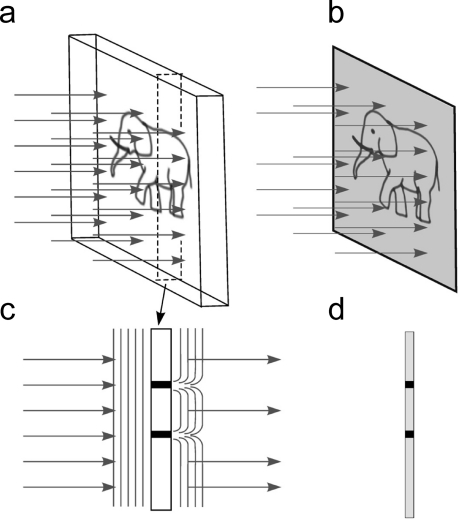
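The definition above, contrast = (ρmax − ρmin)/ρ̅, can be evaluated directly on pixel data. The snippet below is a minimal sketch with a made-up 3 × 3 image; the function name and the pixel values are illustrative assumptions only.

```python
def image_contrast(pixels):
    """Contrast as defined in the text: (brightest - darkest) / mean intensity.

    `pixels` is a list of rows of non-negative intensities.
    """
    values = [v for row in pixels for v in row]
    mean = sum(values) / len(values)
    return (max(values) - min(values)) / mean

if __name__ == "__main__":
    # Hypothetical image: uniform background with one darker pixel.
    image = [
        [100, 100, 100],
        [100,  60, 100],
        [100, 100, 100],
    ]
    print(f"contrast = {image_contrast(image):.3f}")  # about 0.419
```

Because this measure depends only on the extreme pixel values, it is sensitive to single noisy pixels; that is a property of the definition as stated, not of the sketch.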

The image contrast resulting from absorption of part of the incident beam is known as amplitude contrast (Figure 6). Because only a small fraction of the electrons is actually absorbed by the biological specimen in inelastic interactions, this contrast is weak; it can be increased by using the objective lens aperture to eliminate electrons scattered at high angles.(59)

Figure 6.

Amplitude contrast. (a) An amplitude object illuminated by a parallel beam. (b) The image resulting from interaction of the beam with the sample. (c) A cross section of the object outlined by the dashed lines in (a); some of the rays are absorbed in the sample. Arrows show the changes in the wavefront after interaction with the sample. (d) Intensity of the rays creating the image in the region of the cross section. Black dots in the image correspond to areas of beam absorption (c).

One of the difficulties of biological electron microscopy is that biological molecules produce very little amplitude contrast. They consist of light atoms (H, O, N, and C) and do not absorb electrons from the incident beam but rather deflect them, so that the total number of electrons in the exit wave immediately after the specimen remains the same. That means that the specimens do not produce any intensity modulation of the incident beam and the image features are not visible. Yet these specimens still change the exit wave because electrons interact with the material. Electrons undergo scattering at varying angles so they have different path lengths through the specimen, giving phase contrast (Figure 7). One can say that they experience a location-dependent phase shift. These phase variations encoded in the exit wave are made visible by being converted into amplitude variations that are directly detectable by a sensor. In practice, this is achieved by introducing a 90° phase shift between incident and scattered waves.

Figure 7.

Phase contrast. (a) A phase object illuminated by a parallel beam. (b) The resulting image shows only weak features. (c) A cross section of the object outlined by dashed lines in (a). Arrows show the changes in the wavefront (parallel lines) after interaction with the sample. The intensity is not changed, but the wavefront becomes curved. (d) Intensity of the rays creating the image in the region of the cross section. Note that the intensity differences are small.

2.4.1. Formation of Projection Images

In the case of elastic scattering, the scattering angle is proportional to the electron potential of the atom (the higher the atomic number, the higher its electron potential). The exit wave that has passed through the sample can be described as

$$\Psi_{\mathrm{sam}}(\vec{r}) = \Psi_0 \exp\left[i\sigma\varphi_{\mathrm{pr}}(\vec{r})\right] \qquad (2)$$

where Ψsam is the exit wave emerging from the specimen; Ψ0 is the incident wave; σ = meλ/(2πℏ²); me is electron mass; λ is electron wavelength; ℏ = h/2π; h is Planck’s constant; and $\varphi_{\mathrm{pr}}(\vec{r}) = \int_{-t/2}^{+t/2} \varphi(\vec{r}, z)\,dz$ is the specimen potential projected along the z direction, which coincides with the optical axis of the microscope; r⃗ is a vector in the image plane; and t is the thickness of the sample.

Because biological specimens in general are weak electron scatterers, the phase shift introduced by the sample is small; that is, the exponential term in eq 2 is close to unity, which makes it possible to describe the exit wave by the following approximation:

$$\Psi_{\mathrm{sam}}(\vec{r}) \approx \Psi_0\left[1 + i\sigma\varphi_{\mathrm{pr}}(\vec{r})\right] \tag{3}$$

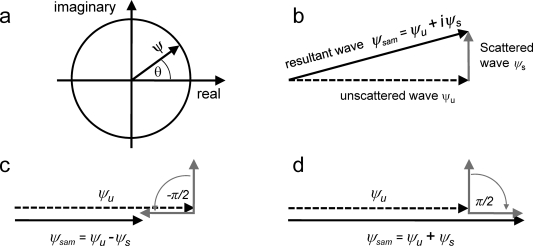

The transmitted wave Ψsam(r⃗) consists of two parts: the first term corresponds to the unscattered wave, and the second term, corresponding to the deflected (scattered) electrons, is linearly proportional to the specimen potential. The second term Ψs = Ψ0iσφpr(r⃗) has a phase shift of 90°, because it corresponds to the imaginary part of the expression indicated by the factor i (Figure 8a and b). In the following discussion, we assume that the amplitude of the incident wave Ψ0 = 1.

Figure 8.

Graphical representation of phase contrast. (a) Complex plane representation of a wave vector ψ with phase θ. (b) Vector representation of the scattered wave ψs, unscattered wave ψu, and resultant wave ψsam = ψu + iψs. Amplitudes (vector lengths) of ψsam and ψu are very similar, resulting in low image contrast. (c) −π/2 phase shift of the scattered wave leads to a noticeable decrease in the resultant wave amplitude relative to the unscattered wave (negative phase contrast). (d) π/2 phase shift of the scattered wave increases the amplitude of the resultant wave relative to the unscattered wave (positive phase contrast).

2.4.2. Contrast for Thin Samples

The image is formed by all electrons, both scattered and unscattered, giving very little contrast for thin, unstained biological specimens. This is known as phase contrast, which results from interference of the unscattered beam with the elastically scattered electrons (Figures 7 and 8). Thin transparent samples scatter electrons through small angles and are described as weak phase objects.(59) The intensity distribution observed in the image plane will be given by

$$I(\vec{r}) = \Psi_{\mathrm{sam}}(\vec{r})\,\Psi_{\mathrm{sam}}^{*}(\vec{r}) = 1 + \left(\sigma\varphi_{\mathrm{pr}}(\vec{r})\right)^{2} \tag{4}$$

where “*” denotes the complex conjugate. However, the magnitude of (σφpr(r⃗))² is ≪1, so the image will have practically no contrast. To increase the contrast, it is necessary to change the phase of the scattered wave Ψs by 90° (Figure 8c and d), which changes the exit wave as follows (the sign depends on the direction of the phase shift):

$$\Psi_{\mathrm{sam}}(\vec{r}) = 1 \pm \sigma\varphi_{\mathrm{pr}}(\vec{r}) \tag{5}$$

The intensity in the image plane then can be approximated by the following expression:

$$I(\vec{r}) \approx 1 \pm 2\sigma\varphi_{\mathrm{pr}}(\vec{r}) \tag{6}$$

Here, the intensity becomes proportional to the projection of the electron potential of the sample, and the magnitude of 2σφpr(r⃗) will be much greater than (σφpr(r⃗))². Therefore, the phase shift of the scattered beam transfers the invisible “phase” contrast into amplitude contrast that can be recorded. How in practice can the contrast be increased? One method is to use substances that increase the scattering, such as negative stains. Another possibility is to utilize imperfections of the microscope such as spherical aberration.
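The size of this effect can be checked numerically. The short sketch below assumes Ψ0 = 1 and an illustrative value σφpr = 0.05 (chosen only for the example, not taken from the text), and compares the image intensity with and without an extra 90° phase shift of the scattered wave. The function name `image_intensity` is hypothetical.

```python
import numpy as np

def image_intensity(sigma_phi, extra_phase=0.0):
    """Intensity |1 + i*sigma_phi*exp(i*extra_phase)|^2 for a weak phase object.

    sigma_phi is the small phase shift sigma*phi_pr (radians); extra_phase is
    an additional phase shift applied to the scattered wave only.
    """
    exit_wave = 1.0 + 1j * sigma_phi * np.exp(1j * extra_phase)
    return np.abs(exit_wave) ** 2

sigma_phi = 0.05  # illustrative value (assumption)

no_shift = image_intensity(sigma_phi)                          # no phase plate
quarter_wave = image_intensity(sigma_phi, extra_phase=np.pi / 2)

# Deviation from the background intensity of 1:
print("no shift:     ", no_shift - 1.0)       # second order in sigma_phi
print("90 deg shift: ", quarter_wave - 1.0)   # first order in sigma_phi
```

With these numbers the deviation from background grows from about 0.25% to about 10%, which is the linear term described above. Reversing the sign of `extra_phase` reverses the sign of the contrast.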

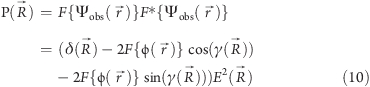

In practice, the image contrast obtained depends on the operating conditions of the microscope such as the level of focus and aberrations. Multiple scattering of electrons within thick specimens obscures the relation between object and image. There are several factors that affect the appearance and contrast of an EM image, including lens aberrations, limited incident beam coherence, quantum noise due to the discrete nature of electrons (shot noise), limited radiation stability of the sample, and instabilities in the microscope and its environment, for example, vibrations, stray electromagnetic fields, temperature changes, and imperfect mechanical stability of the EM column. Instabilities and limited coherence of the electron beam cause falloff of the signal transfer by the microscope for fine image details, leading to blurring of small features. In simple terms, a sharp dot will not be imaged as a dot but as a blur. The link between the original dot and its image is described by the point spread function (PSF) of the imaging device, in our case of the electron microscope. The PSF is a function that describes the imperfections of the imaging system in real space. However, a convenient way to describe the influence of these factors on the image is to use Fourier (diffraction) space:

$$F\{\Psi_{\mathrm{obs}}\} = F\{\Psi_{\mathrm{sam}}\}\,\mathrm{CTF}(\vec{R})\,E(\vec{R}) \tag{7}$$

where F{Ψobs} is the Fourier transform of the observed image; R⃗ is spatial frequency (Fourier space coordinate); F{Ψsam} is the Fourier transform of the specimen; CTF(R⃗) is the contrast transfer function of the microscope; and E(R⃗) is an envelope function. E(R⃗) describes the influence of various instabilities and specimen decay under the beam.(62) The envelope decay can be partly compensated by weighting, for example, with small angle scattering curves (see section 8.3). The optical distortions are usually approximated as a product of functions attributed to individual damping factors (e.g., lens current instability). The link to the PSF is given by the following equation:

$$\mathrm{PSF}(\vec{r}) = F^{-1}\{\mathrm{CTF}(\vec{R})\,E(\vec{R})\} \tag{8}$$

The amplitude and phase changes arising from objective lens aberration, or CTF(R⃗), are usually described by the function exp(iγ), where γ describes the phase shift arising from spherical aberration and image defocus:(59)

where γ is the phase shift caused by aberrations, R⃗ is spatial frequency, a vector in the focal plane of the objective lens, Cs is the coefficient of spherical aberration, λ is the wavelength of the electron beam, and Δ is the defocus, the distance of the image plane from the true focal plane.

It was found that the image contrast of biological objects could be improved by the combined effects of spherical aberration and image defocus, moving the image plane away from exact focus.(63) The basis for the contrast enhancement is that spherical aberration combined with defocus induces a phase shift between scattered and unscattered electrons. The greater phase shift between scattered and unscattered rays leads to stronger image contrast.
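To make the dependence on defocus and spherical aberration concrete, the following sketch evaluates sin γ as a function of spatial frequency. The sign convention is an assumption, since conventions differ between software packages: defocus is taken as positive for underfocus and γ = πλΔR² − (π/2)Csλ³R⁴, with all lengths in ångströms. The function names and the example values (300 kV, Cs = 2 mm, 1 μm defocus) are illustrative.

```python
import numpy as np

def electron_wavelength(voltage):
    """Relativistic electron wavelength in angstroms; voltage in volts."""
    return 12.2643 / np.sqrt(voltage + 0.97845e-6 * voltage ** 2)

def aberration_phase(freq, defocus, cs, wavelength):
    """Phase shift gamma(R) in radians.

    Assumed convention (not taken from the text): positive defocus means
    underfocus, and
        gamma = pi*wavelength*defocus*R^2 - (pi/2)*cs*wavelength^3*R^4.
    Lengths are in angstroms, freq is in 1/angstrom.
    """
    freq = np.asarray(freq, dtype=float)
    return (np.pi * wavelength * defocus * freq ** 2
            - 0.5 * np.pi * cs * wavelength ** 3 * freq ** 4)

def phase_ctf(freq, defocus, cs, wavelength):
    """Phase contrast transfer function sin(gamma)."""
    return np.sin(aberration_phase(freq, defocus, cs, wavelength))

wavelength = electron_wavelength(300e3)     # 300 kV
freq = np.linspace(0.0, 0.25, 6)            # spatial frequencies, 1/angstrom
print(wavelength)
print(phase_ctf(freq, defocus=10000.0, cs=2.0e7, wavelength=wavelength))
```

If spherical aberration is neglected (Cs = 0), the first zero of sin γ falls at R = 1/√(λΔ), so doubling the defocus moves the first zero closer to the origin by a factor of √2.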

The diffraction pattern of the image (image plane of the microscope) is given by

where δ(R⃗) is the Dirac delta function.

In the plane of the image, the amplitude contrast is described by 2 cos γ and the phase contrast by 2 sin γ (for a more detailed description of image formation, see refs (60) and (63)). For phase objects, the sine term of eq 10 has the major influence. It describes an additional phase shift due to spherical aberration and changes in the image contrast depending on defocus. The major effect of the CTF on images of weak phase objects arises from oscillations of the sin γ term that reverse the phases of alternate Thon rings in the Fourier transform of the image(64) (Figure 9). For thin cryo specimens, the amplitude contrast for 120 keV electrons has been estimated at 7%, whereas for negatively stained samples the amplitude contrast can rise to 25%.(53) For 300 keV cryo images, it drops to ∼4%.(65)

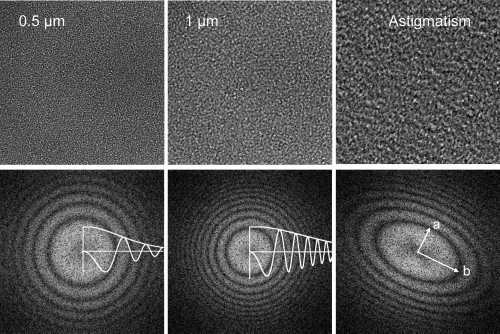

Figure 9.

Images of carbon film and their diffraction patterns, showing Thon rings and corresponding CTF curves. The left image was obtained at 0.5 μm defocus, and the middle image was at 1 μm defocus. The Thon rings of the second image are located closer to the origin and oscillate more rapidly. The rings alternate between positive and negative contrast, as seen in the plotted curves. An example of an astigmatic image and its diffraction pattern is shown in the right panel. The largest defocus is along axis a, and the smallest is along axis b.

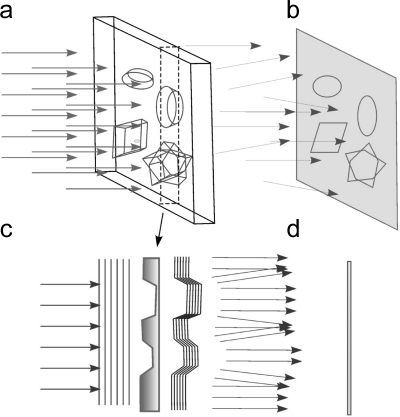

2.5. Phase Plates and Energy Filters

The very small phase shifts induced by biological samples in the scattered electrons result in poor image contrast. In phase contrast light microscopy, the imaging of phase objects is enabled by the use of a quarter-wave phase plate,(66) which produces visible contrast by shifting the phase of the scattered light relative to that of the transmitted beam by 90°, leading to constructive interference (Figure 10a,b(67)). An equivalent solution for electron microscopy was pioneered by Boersch.(68)

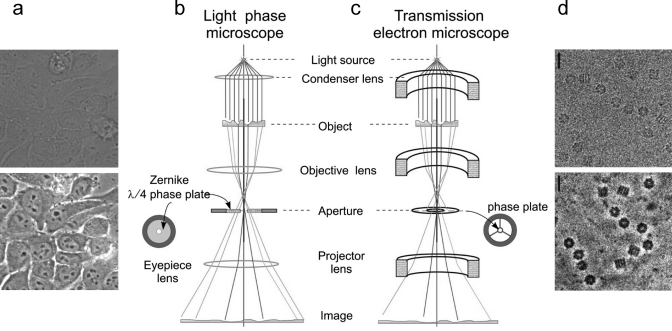

Figure 10.

Phase contrast in optical and electron microscopy. (a) Bright field (upper panel) and phase contrast (lower panel) images of a field of cells. The cells are approximately 50 μm in length. Panel (a) was provided by Maud Dumoux and Richard Hayward. (b) Schematic representation of the light microscope. (c) Schematic representation of the electron microscope for comparison, showing the major lenses. The positions of phase plates are indicated. (d) Images of GroEL taken without (upper panel) and with the phase plate (lower panel). Scale bars, 20 nm. Image (d) is reproduced with permission from ref (67). Copyright 2008 Royal Society Publishing.

An early attempt to create a device to change the phase of the central beam relative to the scattered rays was made by Unwin, who devised a simple electrostatic phase plate that was inserted into the optical system.(69) He used a thin poorly conducting cylinder (a spider’s thread) over a circular aperture inserted in the back focal plane of the objective lens (where the electron diffraction pattern is formed). This cylinder partly obstructed the central beam and became charged when illuminated by the electron beam, thus creating an electrostatic phase plate. The first experiments with negatively stained samples clearly demonstrated increased contrast.

The practical realization of this idea has only recently become feasible. Microfabrication has allowed the construction of the first miniature phase plates, which are positioned in the back focal plane of the objective lens (Figure 10c,d). The phase plate functions as an electrostatic lens and is placed in the path of the scattered electrons, shifting their phase by 90° so that contrast is improved when they recombine with the unscattered electrons in the image plane of the TEM.67,70−72 The resulting large increase in contrast over a wide resolution range, especially at low resolution, is particularly useful for electron cryo-tomography.(73)

An additional approach to improving image contrast is energy filtering. A fraction of the electrons reaching the objective lens are inelastically scattered, having lost energy by interaction with the sample atoms. The lower energy (corresponding to longer wavelength) of these electrons causes them to be focused in different planes from the elastically scattered electrons, in other words, chromatic aberration. Therefore, inelastic electrons degrade the recorded image with additional background and blurring, in addition to damaging the sample. Energy filters can be used to stop these electrons from contributing to the image after their interaction with the specimen. Filtering works on the basis that electrons of different wavelength can be deflected along different paths, and the filter can be either in the column (Ω filter) or post column (GIF). The use of energy filtering to improve the contrast of cryo EM images was introduced by Trinick and Berriman(74) and Schröder.(75) Energy filtering is most important for tomography, because of the long path length of the beam through the tilted sample. An in-column filter has recently been used to obtain very high-quality images of actin filaments,(76) and the authors attribute a significant part of the contrast improvement to the filtering.

3. Image Recording and Preprocessing

3.1. Electron Detectors

3.1.1. Photographic Film

Until recently, the conventional image detector was photographic emulsion. The light-sensitive components in films are microscopic silver halide crystals embedded in a gelatin matrix. Absorption of incident radiation by the crystals induces their transition into a metastable state, thus recording the image. The image remains hidden until photographic developer transforms silver halide into visible silver grains. The optical density is defined as OD = log10(1/T), where T is the transmission of the film. As a function of illumination dose (the number of photons or electrons per unit area), the OD for electrons initially increases linearly, then starts to saturate, and eventually reaches a plateau at high dose, whereas the response to light is S-shaped. At low energy (10–80 keV), optical density is directly proportional to electron energy, and the peak sensitivity is around 80–100 keV. Within the current working range of electron energy (100–300 keV), the speed of EM films is inversely related to the electron energy: higher-energy electrons interact less with the silver halide crystals, leading to lower OD for the same irradiation dose. Therefore, emulsions produced for electron microscopy are optimized for sensitivity to 100–300 keV electrons, with large grain size and high silver halide content. The film mainly used for TEM is Kodak SO-163, which provides good contrast at low dose.(77) The advantage of photographic film is the extremely fine “pixels” and the large image detection area. Photographic film is still the most effective electron detector, in terms of spatial resolution over a large area (number of effective pixels) and cost. The inconveniences of using films are that they introduce an additional load on the microscope vacuum system due to the presence of adsorbed water and they need chemical processing, drying, and digitization.

3.1.2. Digitization of Films

Films must be digitized for computer analysis. To convert the optical densities of the film into digital format, the film is scanned with a focused bright beam of light. The transmitted light is focused on a photo diode connected to a photo amplifier that produces an electrical signal. The intensity of the current is converted into a number related to the optical density of the film. The densitometer measures the average density within square elements (pixels) whose size is determined by the sampling resolution. Linear CCD detectors are used to measure a line of pixels in parallel.

Digitization does not provide a faultless transfer of optical density into digital format. The accuracy is determined by the quality of the optical system and the sensitivity of the photo detectors and amplifiers. Densitometer performance can be described in terms of the modulation transfer function (MTF), which is defined as the modulus of the densitometer’s transfer function.(78) The output image is considered as the convolution of the input image with the point spread function of the densitometer. The dependence of MTF on spatial frequency describes the quality of signal transfer. A strong falloff of the MTF at high frequencies indicates the loss of fine details in the digitized images. Densitometer characteristics and assessments have been described in several articles.79,80
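The relation between the point spread function and the MTF can be illustrated with a small numerical sketch. It assumes a hypothetical one-dimensional Gaussian PSF with a standard deviation of 2 pixels, which is an illustrative choice and not a measured densitometer response; `mtf_from_psf` is a name introduced here.

```python
import numpy as np

def mtf_from_psf(psf):
    """MTF of a 1D point spread function: modulus of its Fourier transform,
    scaled so that the value at zero spatial frequency is 1."""
    transfer = np.fft.rfft(psf)
    mtf = np.abs(transfer)
    return mtf / mtf[0]

n = 256
x = np.arange(n) - n // 2
psf = np.exp(-0.5 * (x / 2.0) ** 2)   # hypothetical Gaussian blur, sigma = 2 pixels

mtf = mtf_from_psf(psf)
freqs = np.fft.rfftfreq(n)            # spatial frequency in cycles per pixel

# Transfer at low frequency and at the Nyquist frequency (0.5 cycles/pixel).
print(freqs[10], mtf[10])
print(freqs[-1], mtf[-1])
```

A broader PSF gives a faster falloff of the MTF, which corresponds to the loss of fine detail described above.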

3.1.3. Digital Detectors

Digital imaging has practically replaced recording on films in photography and is widely used in EM due to developments in automated data collection and tomography methods. The most popular cameras are based on charge-coupled device (CCD) sensors that convert the analogue optical signal into digital format. The CCD was invented in 1969 by W. S. Boyle and G. E. Smith at Bell Telephone Laboratories (Nobel prize, 2009).(81) The physical principle of this device is analog transformation of photon energy (light) into a small electrical charge in a photo sensor and its conversion into an electronic signal. CCD chips consist of an array or a matrix of photosensitive elements (wells) that converts light into electric charge accumulated in the wells (Figure 11). “Charge-coupled” refers to the readout mechanism, in which charges are serially transferred between neighboring pixels to a readout register, amplified, and converted to a digital signal. The readout is done through one or several ports, which determines the speed of CCD image recording.

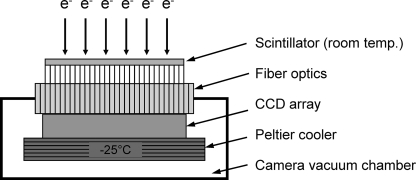

Figure 11.

Diagram of a CCD detector for EM. The incident electrons are converted to photons by the scintillator. Fiber optics transmit the image to the CCD (charge coupled device) sensor where the photons generate electrical charge (CCD electrons). The charge is accumulated in parallel registers. During readout, this charge is shifted line by line to the serial register from where it is transferred pixel by pixel to the output analog-to-digital converter. Image reproduced with permission from H. Tietz.

Because high-energy electrons irreversibly damage the photosensitive wells in CCDs, currently available devices employ mono- or polycrystalline scintillators to convert the electrons to photons, which are then relayed to the CCD chip (Figure 11). Although the graininess of the scintillator and electron-to-photon conversion add noise to the images, the use of a scintillator greatly extends the usable life of a CCD chip. This detection scheme works quite well for accelerating voltages up to 120 kV. At higher voltages, the camera sensitivity decreases, and, to compensate, thicker scintillator layers are needed to improve the electron detection efficiency. In addition, image quality is degraded because the higher-energy electrons are scattered in the scintillator, reducing image resolution.

The CCD is a very sensitive and effective electron detector with remarkably linear response and very large dynamic range (16 bit resolution). This allows recording of both low contrast images and electron diffraction patterns in which diffraction intensities can range over several orders of magnitude. The disadvantages of this type of camera are the high cost and limited sensor size. CCD chips of 5 × 5 cm² (4k × 4k pixels) are now widely used, and digital cameras with 8k × 8k sensors 12 cm in diameter are available. The typical size of current CCD pixels is 14–15 μm, which imposes additional restrictions on the minimal magnification used to record images, because the image sampling by the CCD should be finer than the target resolution by about a factor of 4 (see section 8). Examples of successful use of CCD imaging for high-resolution cryo-EM at 300 kV are shown by Chen and coauthors(82) and Clare and Orlova.(83)
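The restriction on magnification follows from simple arithmetic, sketched below. The function name and the example target resolution of 8 Å are illustrative assumptions; the factor of 4 is the sampling criterion quoted above.

```python
def min_magnification(pixel_size_um, target_resolution_angstrom, sampling_factor=4.0):
    """Smallest magnification at which one detector pixel corresponds to
    target_resolution / sampling_factor on the specimen."""
    pixel_size_angstrom = pixel_size_um * 1.0e4            # 1 um = 10^4 angstrom
    sampling_angstrom = target_resolution_angstrom / sampling_factor
    return pixel_size_angstrom / sampling_angstrom

# 15 um pixels and a target resolution of 8 angstrom require 2 angstrom/pixel.
print(min_magnification(15.0, 8.0))
```

For 15 μm pixels and an 8 Å target this gives a magnification of 75 000 at the detector; a smaller pixel size lowers the required magnification in proportion.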

The current generation of digital detectors for electron microscopy includes a direct detection device (DDD), which can be exposed directly to the high energy electron beam. Hybrid pixel detectors such as Medipix2 are direct electron detectors that count individual electrons, rather than producing a signal proportional to the accumulated charge.(84) Another type of new DDD is the monolithic active pixel sensor (MAPS), in which the signal is proportional to the energy deposited in the sensitive element.(85) The DDD uses a radiation-hardened monolithic active-pixel sensor, developed for charged particle tracking and imaging, with a smaller pixel size (5 μm).86,87 These detectors are being combined with the CMOS (complementary metal–oxide–semiconductor) design, in which the amplifiers are built into each pixel, enabling local conversion from charge to voltage and thus faster readout. Direct exposure to the incident electron beam significantly improves the signal-to-noise ratio in comparison to a CCD. This type of sensor has high radiation tolerance and allows for capture of electron images at 200 and 300 keV. A comparison of digital detectors demonstrated that DDD in combination with CMOS can provide good DQE, MTF, and improved signal-to-noise ratio at low dose.87,88

Design of digital cameras in EM continues to improve: the latest cameras are able to register electrons over a broad energy range and cover large areas with smaller pixels so that the detector area (16k × 16k pixels) becomes comparable to or bigger than EM films.84,87,89

3.2. Computer-Controlled Data Collection and Particle Picking

The goal of automated data collection is to replace the human operator in time-consuming, repetitive operations such as searching for suitable specimen areas and recording very large data sets, including low-dose operation, particle selection, and obtaining tilt data.(90) Most EMs now have computer-controlled operation for lens settings and stage movement, along with basic image analysis operations such as FTs. Essential steps to control are settings for illumination, stage position, magnification, tilt, and focus. The automation system must be able to recognize the same region at different magnification scales, so that objects selected in a lower magnification overview can be located for data collection, in particular after stage movements. The software must compensate for inaccuracies in mechanical stage positioning. This compensation is done by collecting overview images and finding the areas of interest by cross-correlation with previously recorded images, so that the selected area can be positioned with sufficient precision for high-magnification recording. For all of these operations, electronic image recording is essential, and the availability of high-resolution CCD cameras has enabled the development of automation. Several automation systems have been developed, both by academic users (e.g., Leginon;(90) JADAS(91)) and by EM suppliers (FEI, JEOL systems). Some of them are coupled to data processing pipelines that extend the automation through the stages of particle picking and image processing (e.g., Appion(92)). SerialEM is a widely used system that provides semiautomated procedures for manually selecting a series of targets for subsequent unsupervised collection of tomograms and can also be used for single-particle data.(93)
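The cross-correlation step used to compensate for stage positioning errors can be sketched as follows. This is a minimal illustration on synthetic data, not the procedure of any particular automation package: it assumes two images of equal size, a purely translational offset, and periodic (wrap-around) boundaries. The function name `find_shift` is hypothetical.

```python
import numpy as np

def find_shift(reference, image):
    """Integer (row, col) shift that maps `reference` onto `image`,
    located as the peak of their FFT-based cross-correlation."""
    cross_power = np.fft.fft2(image) * np.conj(np.fft.fft2(reference))
    correlation = np.fft.ifft2(cross_power).real
    peak = np.unravel_index(np.argmax(correlation), correlation.shape)
    # Peak positions beyond the midpoint correspond to negative shifts.
    return tuple(int(p) if p <= s // 2 else int(p) - s
                 for p, s in zip(peak, correlation.shape))

rng = np.random.default_rng(0)
overview = rng.normal(size=(64, 64))                     # synthetic overview image
moved = np.roll(overview, shift=(5, -3), axis=(0, 1))    # simulated stage error

print(find_shift(overview, moved))   # (5, -3)
```

The recovered shift can then be applied as a correction to the stage or image shift before the high-magnification exposure. Real images would additionally need filtering and handling of non-periodic edges.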

In single-particle EM, data processing begins with particle selection. Conventionally, the particles are identified by shape and characteristic features that are often difficult to recognize for a new complex. Even for known complexes, manual selection of ∼100 000 single-particle images is prohibitively time-consuming and tedious. Not surprisingly, the idea of automating particle selection has been a focus of research efforts. The first computational methods were based on template matching,94,95 and more sophisticated approaches were subsequently based on pattern recognition.(96) A comparative evaluation of different programs can be found in the review by Zhu and coauthors,(97) and other programs have been developed more recently.(98)

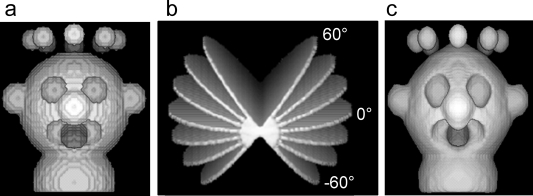

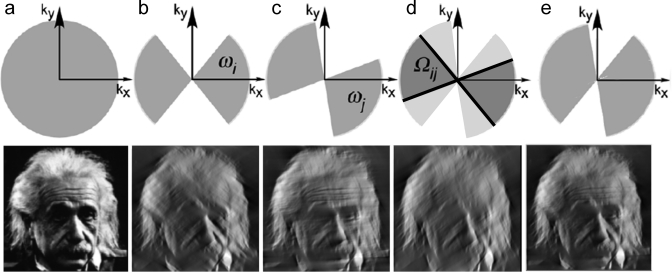

3.3. Tomographic Data Collection

The purpose of electron tomography is to obtain a 3D reconstruction of a unique object, such as a cell section, isolated subcellular structure, or macromolecular complex, that can take up a variety of different structures. A series of images of the same region is recorded over the largest possible range of tilt angles, up to ±70°. The limitation on tilt is ultimately due to the increased path length of the beam through the sample, although the specimen holder may also limit the tilt. Electron tomograms will therefore be missing information from a 40–60° wedge of space, resulting in some distortion to the 3D map (Figure 12(99)).

Figure 12.

Distortions caused by the missing wedge. (a) A model object. (b) Set of image planes at different angles from a tilt series. The limitation on maximum tilt angle results in a missing wedge of data. (c) Reconstruction of the object showing elongated features due to the missing wedge. Reproduced with permission from ref (99). Copyright 1998 Elsevier Inc.

High tilt, especially of thicker specimens, increases inelastic scattering and multiple elastic scattering, thereby reducing the fraction of coherent electrons in the scattered beam that are useful for image formation. The energy-loss electrons can be removed from the image by an energy filter, which is particularly important for improving contrast in tomography.
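Both geometric effects of tilting can be estimated with a few lines of arithmetic. The sketch below assumes a flat slab-shaped specimen and a single-axis tilt series symmetric about 0°; the function names are introduced here for illustration.

```python
import math

def missing_wedge_angle(max_tilt_deg):
    """Angular width (degrees) of the unsampled wedge for a single-axis
    tilt series running from -max_tilt_deg to +max_tilt_deg."""
    return 180.0 - 2.0 * max_tilt_deg

def relative_path_length(tilt_deg):
    """Beam path length through a flat slab tilted by tilt_deg,
    relative to the path length at zero tilt."""
    return 1.0 / math.cos(math.radians(tilt_deg))

for tilt in (60.0, 70.0):
    print(tilt, missing_wedge_angle(tilt), round(relative_path_length(tilt), 2))
```

A maximum tilt of 60° to 70° leaves a wedge of 60° to 40°, and at 70° the beam traverses almost three times the untilted thickness, which is why inelastic and multiple scattering become limiting at high tilt.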

For plastic sections, the initial exposure to the beam causes thinning of the sections, but subsequently the sample changes little during data collection. Therefore, data collection with fine angular steps is possible. Room temperature tomography also facilitates dual-axis data collection, in which a second tilt series is collected after 90° rotation of the specimen in the plane of the stage, so that the missing wedge is reduced to a missing pyramid. Therefore, data collection can be optimized for plastic sections, but dehydration and staining do not preserve molecular detail. However, as mentioned above, sections up to 300 nm thick can be used to give a 3D overview of larger structures.

On the other hand, in electron cryo-tomography, the molecular structure is preserved in the frozen-hydrated sample, but it is hard to get beyond 3–4 nm resolution. The resolution of cryo-tomography is severely limited by radiation damage, because at least 50–100 images must be collected of the same area for the tilt series. These conflicting requirements for low dose and many exposures mean that the images are recorded with extremely low electron dose and therefore very low SNR, and the accumulated damage changes the structure during the tilt series. The thicker the sample, the more views are needed to reach a given resolution. In addition, the limitation on maximum tilt angle leaves a missing wedge of data. These problems make processing of cryo-tomograms more difficult. The best resolution is obtained by averaging subregions of cryo-tomograms containing multiple occurrences of the same object, for example, viral spikes (see section 4.4). This method is called subtomogram averaging.

Automation is indispensable for electron tomography.(100) Well-developed tomography software is available from both academic and commercial sources (SerialEM;(93) UCSF Tomo;(101) Protomo;(102) TOM;(103) Xplore3D;(104) IMOD105,106). Leginon incorporates tomographic and other tilt data collection protocols such as conical tilt (section 6.1).(107)

3.4. Preprocessing of Single-Particle Images

Although theoretical and technical progress in electron microscopy has improved the imaging of weak phase objects, defocusing complicates the interpretation of the image because features in some size ranges will have reversed contrast. Imaging of biological objects requires a compromise between contrast enhancement and minimization of image distortions.

The intensity distribution in the EM image plane is related to the projected electron potential by

where sin γ is the phase contrast transfer function and γ is defined by eq 9. F⁻¹{sin γ} defines the shape of the image of a point in the object plane formed by the microscope optics, the point spread function (PSF) of the microscope. Therefore, the real image is distorted, because the ideal object image is convolved with the PSF, and is not directly related to the density distribution in the original object. To restore the image, so that it corresponds to the projected electron potential of the sample, the image must be corrected for the effect of contrast modulation by sin γ, the microscope phase contrast transfer function (CTF). The CTF, modified by an envelope decay, and the PSF of a microscope are related by Fourier transformation. For weak phase objects, deconvolution with the PSF of the microscope is necessary for complete restoration of image data. The procedure of eliminating the effects of the CTF is called CTF correction.

3.4.1. Determination of the CTF

For a given microscope setup, the voltage and spherical aberration are constant, but the defocus varies from image to image because of variations in lens settings, sample height, and thickness. There are two main approaches for determination and correction of the CTF. In the first one, the images are CTF-corrected before structural analysis. In the second approach, structural analysis is done separately on each micrograph, and determination and correction of the CTF are performed on the structures obtained. Each approach has its own advantages and disadvantages.

If CTF correction is done first, data can be combined from many different micrographs and subsequently processed together. The second method is applicable if each micrograph has a sufficient number of particles to calculate a 3D reconstruction. This method works well for particles at high concentration and has the advantage that CTF determination is more accurate because of the high SNR in the reconstruction, in which the images have been combined. However, with fewer particles and lower symmetry, it will not be possible to get a good reconstruction of the object from a single micrograph, so that the first approach is more practical.

Manual CTF determination(108) involves calculation of the rotationally averaged power spectrum (diffraction intensity) of a set of 2D images, which can only be done in the absence of astigmatism. The amplitude profile (square root of the intensities) is compared to a model CTF. The model defocus is varied to find the best match between the two profiles. The value corresponding to the match is then used as the defocus for that particular set of images. It is also possible to include additional processing steps such as band-pass filtering to remove background and provide smoothing for more accurate detection of the positions of the CTF minima.
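The first step of this procedure, the rotationally averaged power spectrum, can be sketched as below. The example runs on synthetic noise and only illustrates the averaging. Fitting the resulting amplitude profile to a model CTF is not shown, and the function name is hypothetical.

```python
import numpy as np

def rotational_average_power(image):
    """Rotationally averaged power spectrum of a 2D image.

    Element k of the result is the mean power of all Fourier pixels whose
    distance from the origin, rounded down, equals k.
    """
    spectrum = np.fft.fftshift(np.fft.fft2(image - image.mean()))
    power = np.abs(spectrum) ** 2
    rows, cols = power.shape
    y, x = np.indices(power.shape)
    radius = np.hypot(y - rows // 2, x - cols // 2).astype(int)
    sums = np.bincount(radius.ravel(), weights=power.ravel())
    counts = np.bincount(radius.ravel())
    return sums / counts

rng = np.random.default_rng(1)
micrograph = rng.normal(size=(128, 128))          # synthetic stand-in for an image
profile = rotational_average_power(micrograph)
amplitude_profile = np.sqrt(profile)              # compared with the model CTF
print(amplitude_profile[:5])
```

For a real micrograph, the amplitude profile shows the Thon ring oscillations, and the model defocus is varied until the positions of its minima match those of the profile.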

The rotational averaging used in the above method assumes good astigmatism correction. Software developed by Mindell and Grigorieff (CTFFIND3(109)) searches for the best match of experimental with theoretical CTF functions calculated at different defoci. This software includes the determination of astigmatism in the images, assuming that its effect on the CTF can be approximated by an ellipse (valid for small astigmatism), with averaging of the profiles over sectors of the ellipse. Scripts can be used to automate the search and correction.

In some studies, statistical analysis has been employed to sort power spectra of particle images (squared amplitudes of the image Fourier transform) into groups with similar CTF. Class averages of the spectra provide a higher SNR for CTF determination.(110) Other, more sophisticated approaches that take into account background and noise are described by Huang(111) and Fernández and coauthors.(112) A fully automated program, ACE, implemented in Matlab, incorporates a model for background noise and uses edge detection to define the elliptical shape of the Thon rings.(113)

3.4.2. CTF Correction

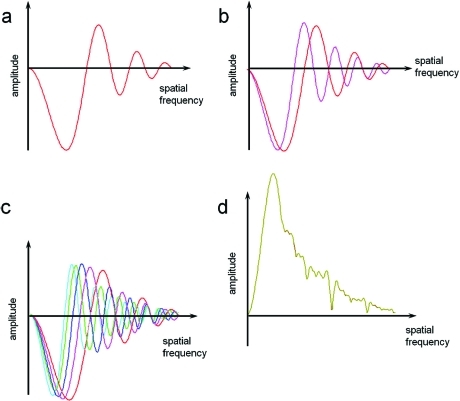

The representation of the object of interest is considered as faithful if the EM images corresponding to its projections are corrected for the effects of the microscope CTF. A full restoration of the specimen spectrum requires division of the F{Ψsam(r⃗)} (eq 7) by the CTF, sin γ. However, this operation is not possible because of the CTF zeroes, and the spectrum cannot be restored from images taken at a single defocus. To fully restore the information, it is necessary to use images taken at different defocus values, so that zeroes of each particular CTF will be filled by merging data from images with different defoci (Figure 13).

Figure 13.

CTF curves, for a single defocus (a), overlaid for two different defocus values (b). The red curve corresponds to a closer to focus image, and oscillates more slowly. Image (c) shows multiple defocus values. The cyan/green curves correspond to the images with the highest defocus, and the red curve is closest to focus. (d) The sum of amplitude absolute values of all curves in (c), showing the overall transfer of spatial frequency components in a data set with the defocus distribution shown. Images courtesy of Neil Ranson.
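The way in which a spread of defocus values fills the CTF zeroes can be reproduced numerically. The sketch below uses an assumed sign convention, γ = πλΔR² − (π/2)Csλ³R⁴ with lengths in ångströms, and illustrative values (λ for 300 kV, Cs = 2 mm, five defoci between 1 and 3 μm). None of these numbers are taken from Figure 13.

```python
import numpy as np

def phase_ctf(freq, defocus, wavelength=0.0197, cs=2.0e7):
    """sin(gamma) under the assumed convention; lengths in angstroms."""
    gamma = (np.pi * wavelength * defocus * freq ** 2
             - 0.5 * np.pi * cs * wavelength ** 3 * freq ** 4)
    return np.sin(gamma)

freq = np.linspace(0.01, 0.2, 400)                       # 1/angstrom
defoci = [10000.0, 15000.0, 20000.0, 25000.0, 30000.0]   # illustrative values

single = np.abs(phase_ctf(freq, defoci[0]))
combined = sum(np.abs(phase_ctf(freq, d)) for d in defoci)

# Weakest transfer over the frequency range for one image and for the set.
print(single.min(), combined.min())
```

Because the zeroes of the individual curves fall at different spatial frequencies, the summed absolute transfer stays well above zero where a single curve vanishes.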

3.4.2.1. Phase Correction

The simplest method of CTF correction is to flip the image phases in regions of the spectra where sin γ reverses its sign. In many cases, this produces reliable reconstructions because a large number of images with different defoci are merged together, leading to restoration of information lost in individual images in the vicinity of CTF zeroes. Practically all EM image analysis software packages have options for this type of CTF correction.
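Phase flipping amounts to multiplying the image Fourier transform by the sign of the CTF. The sketch below demonstrates this on a synthetic image using a simplified CTF model in which spherical aberration is neglected. The pixel size, wavelength, and defocus are illustrative assumptions, and `phase_flip` is a name introduced here.

```python
import numpy as np

def phase_flip(image, ctf):
    """Reverse the sign of Fourier components where the CTF is negative.

    `ctf` must have the same shape as `image` and follow the unshifted
    frequency layout of np.fft.fft2.
    """
    spectrum = np.fft.fft2(image)
    signs = np.where(ctf < 0, -1.0, 1.0)
    return np.fft.ifft2(spectrum * signs).real

n = 64
pixel = 2.0                                        # angstrom per pixel (assumed)
fy = np.fft.fftfreq(n, d=pixel)[:, None]
fx = np.fft.fftfreq(n, d=pixel)[None, :]
r2 = fx ** 2 + fy ** 2
ctf = np.sin(np.pi * 0.0197 * 10000.0 * r2)        # simplified model, Cs neglected

rng = np.random.default_rng(2)
obj = rng.normal(size=(n, n))                      # synthetic "object"
recorded = np.fft.ifft2(np.fft.fft2(obj) * ctf).real
restored = phase_flip(recorded, ctf)

# After flipping, the effective transfer function is |CTF|.
print(np.allclose(np.fft.fft2(restored), np.fft.fft2(obj) * np.abs(ctf)))
```

The amplitudes are still modulated by |CTF| after this step, so information near the zeroes is recovered only when images with different defoci are merged.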

3.4.2.2. Amplitude Correction and Wiener Filtration

A more advanced method of information restoration is correction of both amplitude distortions and phases of the image spectra. This correction usually takes into account not only CTF oscillations but also compensates for the amplitude decay at high spatial frequencies. In theory, the following operation should be sufficient:

$$
\mathcal{F}\{\mathrm{Im}_{\mathrm{cor}}\} = \frac{\mathcal{F}\{\mathrm{Im}\}}{\mathcal{F}\{\mathrm{PSF}\}} = \frac{\mathcal{F}\{\mathrm{Im}\}}{\mathrm{CTF}}
$$

where Im is the recorded image, PSF is the point spread function of the microscope, the Fourier transform of which is CTF, and Imcor is the corrected image. If there were no noise in the image spectra, reliable correction could be done everywhere except for points where the CTF is zero. In practice, small CTF values suppress signal transfer in these regions, and noise unaffected by the CTF dominates the spectra there. Thus, simply dividing the image spectra by the CTF would lead to preferential amplification of noise. To avoid this, a Wiener filter(114) is used to take account of the SNR and perform an optimal filtration to correctly restore the spectra:

$$
\mathcal{F}\{\mathrm{Im}_{\mathrm{cor}}\} = \frac{\mathcal{F}\{\mathrm{Im}\}\cdot\mathrm{CTF}}{\mathrm{CTF}^{2} + c}
$$

where c is a function of SNR: c = 1/(SNR).

Multiplication of the Fourier transform of the image by the CTF corrects the image phases, while division by CTF² + 1/(SNR) provides the amplitude correction. Addition of 1/(SNR) to the denominator is necessary to avoid division by values close to zero(115) (Figure 14). Amplitude correction has been implemented in EMAN,(116) SPIDER,(117) Xmipp,(118) and other software packages. To visualize high-resolution details, it is also important to correct the envelope decay of image amplitudes at high spatial frequencies (see section 8.3).
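A minimal sketch of this Wiener-type correction is given below. It assumes a known CTF array on the FFT grid and, for simplicity, a single scalar SNR; in practice the SNR generally varies with spatial frequency, and an array of the same shape as the CTF could be passed instead. The function name is illustrative only.

```python
import numpy as np

def wiener_ctf_correct(image, ctf, snr):
    """Restore phases and amplitudes with a Wiener-type filter.

    image : 2D real array.
    ctf   : 2D real array of CTF values on the grid of np.fft.fft2(image).
    snr   : scalar (or array broadcastable to ctf) signal-to-noise ratio;
            a constant value is an assumption made for this sketch.
    """
    spectrum = np.fft.fft2(image)
    # Multiplying by the CTF corrects the phases; dividing by
    # CTF^2 + 1/SNR corrects the amplitudes without dividing by
    # values close to zero near the CTF zeroes.
    corrected = spectrum * ctf / (ctf**2 + 1.0 / snr)
    return np.fft.ifft2(corrected).real
```

For very high SNR the filter approaches plain division by the CTF, whereas for low SNR it approaches multiplication by the CTF scaled by the SNR, which down-weights the regions where noise dominates.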

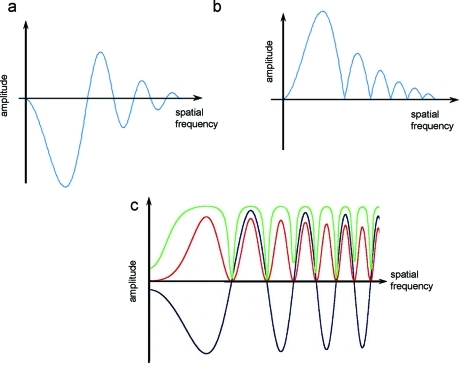

Figure 14.

(a) CTF curve from uncorrected data, (b) after phase correction, and (c) overlay of original (black), the square of the curve after rescaling (red), and amplitude correction (green). Panel (c) courtesy of Stephen Fuller.

3.4.3. Image Normalization

After CTF correction, the image of a weak phase object can be considered as a reasonable approximation of the 2D projection of the 3D object, except for the regions affected by CTF zeros, where the signal is low. This allows the process of image analysis to progress toward determination of the 3D density distribution for the object. Nonetheless, some important steps of preprocessing are necessary.

Even with the same EM settings during data acquisition, variations in specimen particle orientation, support film thickness, and film processing conditions lead to differences in image contrast. In addition, structural analysis requires the merging of image data collected during multiple EM sessions. Optimization of data processing therefore requires standardization of the images, known as normalization. It is conventional in EM image processing to set the mean density of all particle images to the same level, usually zero, and to scale the standard deviation of the densities to the same value for all images, which is important for the alignment procedure.

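Images are normalized using the formula:

$$
\rho^{\mathrm{new}}_{i,j} = \left(\rho_{i,j} - \bar{\rho}\right)\frac{\sigma_{\mathrm{new}}}{\sigma_{\mathrm{old}}}
$$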

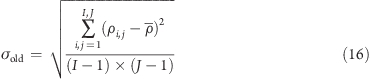

σold is the standard deviation of the original image, and σnew is the target standard deviation in the data.

The mean density ρ̅ of the images is defined as

$$
\bar{\rho} = \frac{1}{IJ}\sum_{i=1}^{I}\sum_{j=1}^{J}\rho_{i,j}
$$

where I and J are dimensions of the image array, and ρi,j is the density in the image pixel with coordinates i and j. σold is defined as

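$$
\sigma_{\mathrm{old}} = \sqrt{\frac{1}{IJ}\sum_{i=1}^{I}\sum_{j=1}^{J}\left(\rho_{i,j} - \bar{\rho}\right)^{2}}
$$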

The normalization sets all images to the same standard deviation and a mean density of zero.
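As a minimal sketch, the normalization can be written in a few lines of NumPy. The function name and the default target of 1.0 for the standard deviation are assumptions made for illustration.

```python
import numpy as np

def normalize_image(image, sigma_new=1.0):
    """Set the mean density to zero and the standard deviation to sigma_new."""
    image = np.asarray(image, dtype=float)
    mean = image.mean()        # mean density over all I x J pixels
    sigma_old = image.std()    # standard deviation of the original image
    if sigma_old == 0:
        raise ValueError("a constant image cannot be normalized")
    return (image - mean) * (sigma_new / sigma_old)
```

Applying the same `sigma_new` to every particle image puts data from different micrographs and sessions on a common scale before alignment.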

4. Image Alignment

The information we wish to extract from EM images, the signal, is the projected density of the structure of interest. The recorded images contain, in addition to the signal, fluctuations in intensity caused by noise from many different sources. Sources of noise include background variations in ice or stain, damage to the molecule from preparation procedures or radiation, and detector noise. The signal-to-noise ratio (SNR) is defined as

$$
\mathrm{SNR} = \frac{P_{\mathrm{signal}}}{P_{\mathrm{noise}}}
$$

where Psignal is the energy (the integral of the power spectrum after normalization) of the signal spectrum, and Pnoise is the energy of the noise.

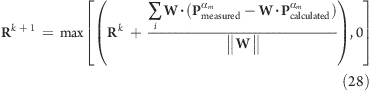

Many views of the particle are recorded in different orientations, but each individual image has a low SNR. The main task in extracting the 3D structural information is to determine the relative positions and orientations of these particle images so that they can be precisely superimposed. Alignment is done by finding shifts and rotations that bring each image into register with a reference image. Cross correlation is the main tool for measuring similarity of images, but it is not very reliable at low SNR. In practice, alignments are iterated so that successive averages contain finer details, which in turn improve the reference image for subsequent rounds of refinement.

4.1. The Cross-Correlation Function

The correlation function is widely used as a measure of consistency or dependency between two values or functions. In image analysis it is used for assessment of similarity between images. Cross correlation compares two different images.

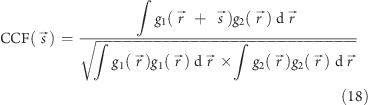

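$$
\mathrm{CCF}(\vec{s}) = \frac{\int g_1(\vec{r})\, g_2(\vec{r} + \vec{s})\, d\vec{r}}{\sqrt{\int g_1^{2}(\vec{r})\, d\vec{r} \int g_2^{2}(\vec{r})\, d\vec{r}}} \tag{18}
$$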

Equation 18 defines the normalized cross correlation function (CCF) between two functions, g1(r⃗) and g2(r⃗), where r⃗ is a vector in space, and s⃗ is the shift between images. In our case, images are the 2D functions being compared, and r⃗ and s⃗ are vectors in the image plane. The images are normalized to a mean value of zero, to avoid influence of the background level. Without normalization of the images, the CCF would be offset by a constant proportional to the product of the mean values of the images.

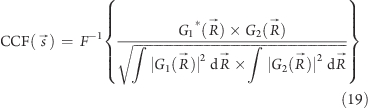

The normalized cross-correlation function is maximal when the two images are identical and perfectly aligned, and the displacement s⃗p of the correlation peak from the origin gives the displacement of image g1 with respect to image g2. It is quicker to calculate the CCF in Fourier space, because the FT of the correlation integral is the product of the complex conjugate of the first image FT with the second image FT.

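$$
\mathcal{F}\left\{\int g_1(\vec{r})\, g_2(\vec{r} + \vec{s})\, d\vec{r}\right\} = G_1^{*}(\vec{R})\, G_2(\vec{R})
$$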

where R⃗ is a vector in Fourier space, and G1 and G2 are FTs of g1 and g2.

The cross-correlation of an image with itself is the autocorrelation function (ACF). In crystallography, the ACF is known as the Patterson function, which is obtained by Fourier transformation of intensities in diffraction patterns and gives a map of interatomic distances (correlation peaks between pairs of atoms).
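The Fourier-space calculation of the CCF can be sketched as follows. The example assumes two images of equal size and uses the discrete FFT, so the correlation is circular (shifts wrap around the image edges). The function names are illustrative, and the sign convention of the returned shift is stated in the comments.

```python
import numpy as np

def cross_correlation(g1, g2):
    """Normalized circular CCF of two equal-sized 2D images via the FFT."""
    a = g1 - g1.mean()   # zero mean removes the constant background offset
    b = g2 - g2.mean()
    # Product of the complex conjugate of the first FT with the second FT.
    ccf = np.fft.ifft2(np.conj(np.fft.fft2(a)) * np.fft.fft2(b)).real
    # Normalize so that identical, perfectly aligned images give 1.
    # (Constant images have zero energy and are not handled here.)
    return ccf / np.sqrt((a**2).sum() * (b**2).sum())

def peak_shift(ccf):
    """Signed (dy, dx) position of the CCF maximum.

    With the definition above, g2 is g1 displaced by (dy, dx), so g2 must
    be shifted by (-dy, -dx) to bring it into register with g1.
    """
    peak = np.unravel_index(np.argmax(ccf), ccf.shape)
    # Indices beyond half the image size correspond to negative shifts.
    return tuple(int(p) if p <= n // 2 else int(p) - n
                 for p, n in zip(peak, ccf.shape))
```

Calling `cross_correlation(g, g)` gives the autocorrelation function, whose maximum lies at the origin regardless of where the particle sits in the frame.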

4.2. Alignment Principles and Strategies

Faced with a data set of images of an unknown structure, we do not have an a priori reference for alignment. A suitable reference can be generated from the data by approaches known as reference-free alignment. In one such approach, the first step in alignment of preprocessed images is to center the particles in their selected boxes. Particles can be centered either by shifting the center of mass of the image to the center of the image frame or by a few iterations of translational alignment to the rotationally averaged sum of all images.(119) In another version of reference-free alignment, a series of arbitrarily selected images are used in turn as references to align all of the other images.(120)

If the signal is weak, or the reference image does not match the data, noise can be correlated to the reference image during alignment. Therefore, to avoid bias, it is important to start a new analysis with reference-free alignment. The problem of reference bias is illustrated by tests in which pure noise data sets are aligned to a reference image.(121,122) Because the correlation is sensitive to the SNR in the image data, the accuracy of the correlation measure can be improved by weighting the correlation of Fourier components according to their SNR.(122)

Alignment of a single-particle data set is accomplished by a series of comparisons in which alignment parameters are determined on the basis of correlations of each raw image with one or a set of reference images. The major information for alignment comes from the stronger, low-frequency components of the images. Because of the low SNR in cryo-images, it is important to maximize the contribution of the signal to the correlation measurement by reducing noise. There are two ways to reduce noise in the images. In real space, a mask around the particle serves to exclude background regions outside the particle. In reciprocal space, a band-pass filter can be applied to exclude low-frequency components related to background variations over distances greater than the maximum extent of the particle and high-frequency components beyond the resolution of the analysis. In later iterations of alignment, it is useful to increase the contribution of higher-frequency components.
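The two noise-reduction steps can be sketched in NumPy as below. Both functions use hard edges for brevity, which is a simplification chosen for this example; the function names, the radius in pixels, and the frequency cut-offs in cycles per pixel are assumed parameters.

```python
import numpy as np

def circular_mask(shape, radius):
    """Real-space mask: 1 inside a circle centered on the frame, 0 outside."""
    ny, nx = shape
    y, x = np.ogrid[:ny, :nx]
    r2 = (y - ny // 2) ** 2 + (x - nx // 2) ** 2
    return (r2 <= radius ** 2).astype(float)

def band_pass(image, low_cut, high_cut):
    """Keep Fourier components with low_cut <= |frequency| <= high_cut.

    Frequencies are in cycles per pixel (Nyquist = 0.5).
    """
    fy = np.fft.fftfreq(image.shape[0])[:, None]
    fx = np.fft.fftfreq(image.shape[1])[None, :]
    freq = np.sqrt(fx**2 + fy**2)
    keep = (freq >= low_cut) & (freq <= high_cut)
    return np.fft.ifft2(np.fft.fft2(image) * keep).real
```

A typical use would be `band_pass(image, low, high) * circular_mask(image.shape, radius)`, with `high` raised in later iterations so that higher-frequency components contribute more to the correlation.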

In addition to their arbitrary positions and orientations in the plane of the image projection, the particles may have different out-of-plane orientations, which will give rise to different projections. To sort the images into groups with common orientations, statistical analysis and classification are essential tools (see next section) in “alignment by classification”.(12) Initial class averages selected from a first round of classification can serve as references to bring similar images to the same in-plane position and orientation and to separate different out-of-plane views. A few iterations of these alignment and classification steps provide good averages representing the characteristic views in the data set.

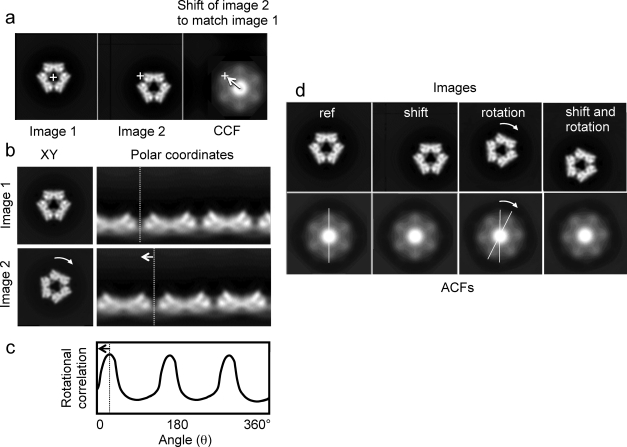

Various protocols have been developed for translational and rotational alignment of image data sets. Tests on model data suggest that, after initial centering, iterations of rotational followed by translational alignment to the reference images are effective(12,123,124) (Figure 15). The quality of the result also depends on the accuracy of the interpolation procedures, because the digital images must be rotated and shifted by non-integral pixel values during alignment.(125)

Figure 15.

Alignment using translational and rotational cross correlations and autocorrelation. (a) Two images to be aligned, with the second shifted off center. The right panel shows the cross-correlation function (CCF) of the second image with the first. The crosses indicate the image centers. The arrow indicates the shift that must be applied to image 2 to bring it into alignment with image 1, according to the CCF maximum. (b) Two images, related by a 25° rotation, shown in Cartesian (left) and polar coordinates (right). The curved arrow shows the rotation in the Cartesian coordinate view, and the dashed line shows the corresponding shift of a feature in the polar coordinate representation. (c) Plot of the rotational correlation between the two images in (b). The arrow shows the angular shift required to align image 2 to image 1. (d) Images and their corresponding autocorrelation functions (ACF). When the image is shifted (second panel), the ACF is unchanged (translationally invariant). The reference image (ref) has 3-fold symmetry, but its ACF is 6-fold because of the centrosymmetric property of ACFs. Rotation of the image causes the same rotation of the ACF, but has an ambiguity of 180°. A possible alignment strategy is to use the ACF first for rotational alignment, using the property of translational invariance. The CCF then can be used for translational alignment, checking both 0° and 180° rotational positions.
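The rotational correlation of panels (b) and (c) can be sketched by resampling both images into polar coordinates and correlating along the angular axis. This example assumes that both particles are already centered and relies on `scipy.ndimage.map_coordinates` for interpolation; the function names, the ring spacing of one pixel, and the 1° angular sampling are choices made for this sketch.

```python
import numpy as np
from scipy.ndimage import map_coordinates

def to_polar(image, n_angles=360):
    """Resample a centered image onto a (radius, angle) grid."""
    ny, nx = image.shape
    cy, cx = (ny - 1) / 2.0, (nx - 1) / 2.0
    radii = np.arange(1, int(min(cy, cx)) + 1)[:, None]
    angles = (2 * np.pi * np.arange(n_angles) / n_angles)[None, :]
    ys = cy + radii * np.sin(angles)   # row coordinate of each sample
    xs = cx + radii * np.cos(angles)   # column coordinate of each sample
    return map_coordinates(image, [ys, xs], order=3, mode="nearest")

def rotational_offset(image1, image2, n_angles=360):
    """Angle (degrees) by which image2 is rotated relative to image1.

    The angle is measured in the direction of increasing polar angle as
    defined in to_polar (from the column axis toward the row axis).
    """
    p1 = to_polar(image1, n_angles)
    p2 = to_polar(image2, n_angles)
    p1 = p1 - p1.mean(axis=1, keepdims=True)   # zero mean on every ring
    p2 = p2 - p2.mean(axis=1, keepdims=True)
    # 1D circular cross-correlation along the angle, summed over all rings.
    corr = np.fft.ifft(np.conj(np.fft.fft(p1, axis=1)) *
                       np.fft.fft(p2, axis=1), axis=1).real.sum(axis=0)
    return 360.0 * np.argmax(corr) / n_angles
```

A rotation in Cartesian coordinates becomes a shift along the angular axis, so the same FFT-based correlation used for translations applies. The interpolation order affects the accuracy of the result.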

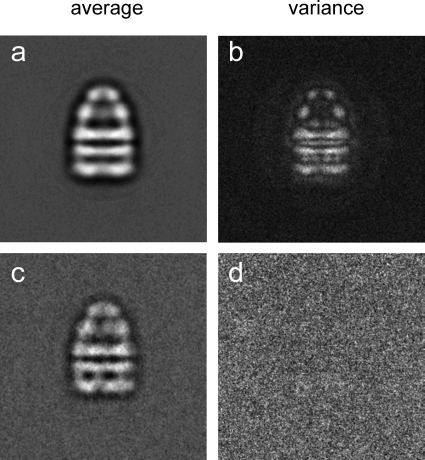

The progress of an alignment can be evaluated by examining the average and variance images (Figure 16). The average of an aligned set of similar images should improve in contrast and visible detail during refinement, and the variance should decrease. In addition, the cross-correlation (CC; maximum value of the normalized CCF) between references and raw images should increase during refinement.
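A minimal sketch of this check, assuming the aligned images are held in a NumPy array of shape (N, rows, columns); the function name is illustrative.

```python
import numpy as np

def average_and_variance(stack):
    """Pixel-wise average and variance of a stack of aligned images."""
    stack = np.asarray(stack, dtype=float)
    return stack.mean(axis=0), stack.var(axis=0)
```

Computing both images after each alignment round makes it possible to see whether the variance falls and where residual differences between the images are concentrated.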

Figure 16.

Average and variance for an image set of particles whose orientations are distributed by rotation around the symmetry axis (a,b) and for an image set of particles in a single orientation (c,d). The average in (a) contains images of particles in different orientations, resulting in significant variation of image features (b). Panel (d) is featureless, because all of the particles in the average (c) have the same orientation, so that the projections only differ in the noise background. Figure courtesy of Neil Ranson.

4.2.1. Maximum Likelihood Methods

In conventional alignment of an image data set to a set of references, each image is assigned the alignment parameters of the single reference image with which it has the highest CC. The maximum likelihood approach instead uses the whole set of CC values between each image and all of the references to define a probability distribution of orientation parameters for the image being aligned.(126,127) For clusters of references giving similar CC values, this approach is likely to provide more reliable alignment parameters than would be obtained by considering only the highest CC, but it is computationally very expensive.
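The difference between the two assignment schemes can be illustrated with a simplified sketch. It assumes white Gaussian noise with a known standard deviation `sigma` and references that are already in all candidate orientations; these assumptions, the function name, and the parameters are introduced here for illustration and do not reproduce any published implementation. When the references all have the same energy, the squared difference used below differs from −2 times the unnormalized cross-correlation only by a constant, so both measures rank the references identically.

```python
import numpy as np

def reference_probabilities(image, references, sigma):
    """Probability of each reference given one image (Gaussian noise model).

    image      : 2D array.
    references : array of shape (K, rows, columns).
    sigma      : assumed standard deviation of the noise per pixel.
    """
    diffs = np.asarray(references, dtype=float) - image
    log_p = -0.5 * (diffs**2).sum(axis=(1, 2)) / sigma**2
    log_p -= log_p.max()          # avoid underflow before exponentiating
    p = np.exp(log_p)
    return p / p.sum()
```

Conventional alignment corresponds to taking only `np.argmax` of these values, whereas the probability-weighted scheme lets every reference contribute in proportion to its weight, which matters most when several references score similarly.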

4.3. Template Matching in 2D and 3D

So far, we have considered alignment of 2D images to references, but there is also a need to detect known features (motifs) in noisy and distorted image data in both 2D and 3D. In 2D, motif detection is used for automated particle picking in raw micrographs. In 3D, the task is to search for occurrences of known molecular complexes in tomograms. These tasks represent 2D and 3D versions of a search for a known, or approximately known, structural motif in image data. In automated particle picking from micrographs, individual particles with low SNR are located by a cross-correlation search of the whole micrograph with one or more template images, references derived from the data, a model, or a related structure. In 3D, if a known structural motif is expected to be present in the reconstructed volume, the 3D map of that motif can be used to search for occurrences of related features in the tomogram.

The main problem is reliable identification of motifs in noisy data. In the case of template matching, a small region of the whole micrograph or 3D structure is searched by cross-correlation with the template. If the image or structure is normalized as a whole (global normalization), the cross-correlation between the template and each small, local region will be influenced by many features outside the region of interest. On the other hand, if the image or structure is normalized just in the local region at each step of the search, the resulting correlation values will give more reliable results reflecting the local match with the template. A locally normalized correlation approach was developed by Roseman and is widely used.(124,128,129)
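The idea of local normalization can be sketched directly in real space for the 2D case. This is a deliberately simple version, assuming a rectangular template with no mask and small images; it is not the Fourier-accelerated algorithm referred to above, and the function name and the `eps` threshold are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def local_normalized_correlation(image, template, eps=1e-12):
    """Correlation of a template with every window, normalized per window.

    Returns an array of shape (rows - t_rows + 1, cols - t_cols + 1);
    entry (i, j) refers to the window whose top-left corner is (i, j).
    """
    t = np.asarray(template, dtype=float)
    t = (t - t.mean()) / t.std()            # zero mean, unit std template
    windows = sliding_window_view(np.asarray(image, dtype=float), t.shape)
    local_std = windows.std(axis=(2, 3))    # std of each local region
    # The template has zero mean, so subtracting the local mean of the
    # window would not change this sum; it is therefore omitted.
    raw = np.einsum("ijkl,kl->ij", windows, t)
    with np.errstate(divide="ignore", invalid="ignore"):
        lcc = np.where(local_std > eps, raw / (t.size * local_std), 0.0)
    return lcc
```

Because each window is scaled by its own standard deviation, a perfect match scores 1 regardless of the local background level or contrast, which is the property that global normalization lacks.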

4.4. Alignment in Tomography

4.4.1. Alignment with and without Fiducial Markers

The accuracy of tomographic reconstruction depends on the alignment of successive tilt views. Alignment is done by tracking the displacements of marker particles (fiducial markers) across the image as a function of tilt angle. Dense particles such as colloidal gold beads or quantum dots (semiconductor particles that are both fluorescent and electron dense) are used for this purpose. For plastic sections, these markers are applied to the surfaces. With a good distribution of fiducial markers and a stable specimen, it is possible to obtain accurate alignment and even to correct for local distortions. Alignment can sometimes be improved by restricting it to subregions of interest, which move coherently through the tilt series.(130)