Abstract

Objective

To conduct a grounded needs assessment to elicit community-based physicians' current views on clinical decision support (CDS) and its desired capabilities that may assist future CDS design and development for community-based practices.

Materials and methods

To gain insight into community-based physicians' goals, environments, tasks, and desired support tools, we used a human–computer interaction model that was based in grounded theory. We conducted 30 recorded interviews with, and 25 observations of, primary care providers within 15 urban and rural community-based clinics across Oregon. Participants were members of three healthcare organizations with different commercial electronic health record systems. We used a grounded theory approach to analyze data and develop a user-centered definition of CDS and themes related to desired CDS functionalities.

Results

Physicians viewed CDS as a set of software tools that provide alerts, prompts, and reference tools, but not tools to support patient management, clinical operations, or workflow, which they would like. They want CDS to enhance physician–patient relationships, redirect work among staff, and provide time-saving tools. Participants were generally dissatisfied with current CDS capabilities and overall electronic health record usability.

Discussion

Physicians identified different aspects of decision-making in need of support: clinical decision-making such as medication administration and treatment, and cognitive decision-making that enhances relationships and interactions with patients and staff.

Conclusion

Physicians expressed a need for decision support that extended beyond their own current definitions. To meet this requirement, decision support tools must integrate functions that align time and resources in ways that assist providers in a broad range of decisions.

Keywords: Qualitative/ethnographic field study, biomedical informatics, developing/using clinical decision support (other than diagnostic) and guideline systems, knowledge acquisition and knowledge management, human-computer interaction and human-centered computing, social/organizational study, developing/using computerized provider order entry, improving the education and skills training of health professionals, system implementation and management issues

Introduction

Clinical decision support (CDS) systems ‘provide clinicians, staff, patients, and other individuals with knowledge and person-based information, intelligently filtered and presented at appropriate times, to enhance health and healthcare.’1 Beginning in 2011, federal financial incentives will encourage community-based physicians to install and ‘meaningfully use’ electronic health record (EHR) systems that include CDS.2 Community-based physicians, however, are a relatively new population in which to study biomedical informatics in general and CDS in particular. We sought to understand what CDS means to these physicians and how it can better meet their needs. Furthermore, we wanted to determine if community-based physicians faced decisions not addressed by CDS. Our goal was to provide a user-centered perspective that could help developers optimize CDS functions to meet the needs of physicians in community-based settings.

Background

The American Recovery and Reinvestment Act directs $27 billion to hospitals and eligible physicians to encourage adoption of certified EHRs that meet ‘meaningful use’ standards.1 Certified EHRs are required to provide specific CDS mechanisms such as automatically identifying and preventing unsafe drug–drug interactions, as well as optionally presenting mechanisms such as drug formulary tools.2 3 To date, relatively few community-based physicians use fully functioning EHRs with CDS.4

CDS evaluations have historically focused on inpatient settings within a small cohort of academic medical centers.5–7 Few published studies on CDS have been conducted in community settings,8–12 even though medical errors commonly occur in these environments.13 Those that have been conducted demonstrate inconsistencies in outcomes and processes.8 11 14–18 To our knowledge, the only randomized controlled study of CDS in community-based clinics found that an automated dyslipidemia alert prompted higher screening rates than as-needed alerts or no alerts at all.12 However, the authors did not discuss why a large proportion (35%) of physicians in the automated alerts group did not carry out the recommended screening, thereby leaving unanswered questions about how CDS can optimally influence user behavior.

To determine the effects alerts had on primary care providers, Krall conducted focus groups and reported that participants felt workflow was the most pressing concern.19 20 This finding is in line with the first three of the CDS ‘ten commandments,’ which state that ‘speed is everything, anticipate needs and deliver in real time, and fit (CDS) into the user's workflow.’21 Weingart et al surveyed community-based physicians and found that more than one-third reported changing a ‘potentially dangerous prescription’ within the previous 30 days due to e-prescribing alerts.9 Those physicians also reported ignoring a host of alerts, yet the ignored alerts may still have altered their approaches to care by prompting them to offer patients additional counseling, for example. That finding led the authors to note that rates of ‘ignored’ alerts alone may not have wholly reflected the impact alerts had on medical practice, thereby raising questions as to how CDS impacts user behavior.

Informatics researchers have begun considering that decision supports may extend beyond the alert-reminder paradigm. Stead et al identified a range of types from ‘simple rule-based alerts’ to ‘statistical and heuristic’ logics.22 The authors described ‘patient-centered cognitive support’ as an aspect of CDS that requires particular attention. Examples of cognitive support include holistic patient information developed from ‘fragmented’ patient data as well as tools that simplify data collection. These CDS functions are particularly salient within the context of the patient-centered medical home model that places a premium on clinical decision-making for patients who receive care over time from multiple providers. Bates and Bitton noted a significant knowledge gap in our understanding of CDS functions in these contexts.23 Armijo et al framed CDS as merely one group of information tools that are needed to support clinical decision-making, the others being ‘memory aids, computational aids, and collaboration aids.’24 Our own research described constituencies' competing and sometimes conflicting understandings of the meanings of CDS, which include ‘alerting, workflow, and cognitive’ supports.25 These studies demonstrate a ‘pressing need’26 to determine how CDS should support clinical decision-making, particularly in community-based practices.

We attempted to understand CDS needs from provider perspectives in community-based practices. Although informatics literature provides multiple needs analyses,22 27–42 we were unable to locate any that sought insight from community-based physicians within the US.43–46 Outside of informatics literature, Hoff conducted a thorough sociological study of primary care physicians and discussed EHRs in general but did not address CDS.47 He reported providers' general frustration with poor EHR usability and burdensome ‘cognitive work’ for users, such as coordinating care on patients' behalf.

Given that CDS is a critical component of EHR meaningful use and that much remains to be learned about effective CDS in community-based practices, we sought to understand how physician users view CDS. We believe their perspectives can inform the design of future CDS tools.48–53

Methods

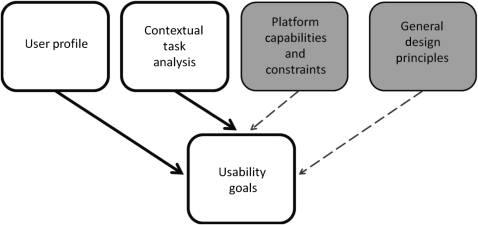

Our study design was based on aspects of a human–computer interaction framework54 in order to understand users and their tasks (see figure 1). We analyzed our data using the grounded theory method, an inductive approach in which transcribed interview and observation data are labeled (‘coded’) and then organized into themes as they ‘emerge.’27 55–58 We sought to answer two research questions: (1) How do community-based physicians conceptualize CDS? and (2) What do community-based physicians need from CDS? We received institutional review board approval for all study sites.

Figure 1.

Mayhew's human–computer interaction model focusing on ‘user profile’ and ‘contextual task analysis.’ Shaded areas were not examined in this study.

Site selection

We purposefully recruited community-based physicians from three healthcare organizations across Oregon that each supported a different EHR with CDS. Organization A oversaw the installation and maintenance of NextGen (NextGen Healthcare; Horsham, Pennsylvania, USA) on behalf of its independent practice members. Organization B used eClinicalWorks (eClinicalWorks; Westborough, Massachusetts, USA) that was supported by an EHR service provider. Organization C oversaw the installation and maintenance of Epic Ambulatory (Epic Systems; Verona, Wisconsin, USA). The inclusion of three different healthcare organizations and EHRs provided a sample with maximum variability that enabled an investigation of physicians' felt needs59 that extended beyond any single software platform or organizational boundary and could generate themes based on a wider range of experiences, opinions, and insights. We did not collect clinic-level patient population data.

We received a minimum of 1 hour of training on each EHR to become familiar with screen layouts, available CDS, and workflows for documenting a patient encounter. The purpose was to inform our observations so that we would know when a physician was not using available CDS functions. We refrained from extensive system training so as to not become immersed in system functionalities but rather remain focused on users.

Participant recruitment

Recruitment centered on the predominant primary care specialties in the US: (1) family practice, (2) internal medicine, (3) obstetrics-gynecology, and (4) pediatrics.60 61 We sought study participants with exposure to CDS within their EHR system (including active and passive alerts and reminders) to ensure that participants were able to discuss CDS-related issues. We also sought 30 subjects (10 from each organization) to achieve thematic saturation so that there would be sufficient data to identify recurring themes. We recruited participants with the assistance of organizational sponsors, participant referrals, and staff from various clinics. Contact with potential participants was made in person, by email, or over the phone. Rural or urban status was not a requirement for inclusion in the study, although we actively sought participants from both settings. Small tokens of appreciation were given to participants and staff for donating their time and effort.

Observation and interview strategies

We observed physicians to conduct a ‘contextual task analysis’54 of their decision-making in community-based settings. All participants were de-identified and no personally identifiable patient data were collected. Patients were provided with a study handout and agreed to any observations before an observer entered the patient room. The observer (JER) took field notes and noted participants' decision-making related to goals, tasks, and interactions with their environments. Observations were minimally obtrusive, and the observer only asked questions during opportune moments in clinical workflow.

Interviews probed participants' views on current CDS tools to gain insight into desired CDS functionalities. Participants provided signed consent prior to any digitally recorded interview and all interviews but one were conducted in private. The questions (see online appendix A) were designed to provide a deep understanding of participants' goals, motivations, and decision-making needs. We also probed and explored topics that arose during observations. The interview guide evolved as the study progressed and as subjects revealed topics we had not originally considered.

Analysis

We reviewed transcripts of interviews and observations, then coded content deemed significant or noteworthy using NVivo qualitative software version 8.x (QSR International; Doncaster, Australia). We then organized codes into broader themes related to CDS definitions and needs.
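The step of organizing codes into broader themes can be pictured with a minimal Python sketch. It is illustrative only and does not reproduce the NVivo workflow used in the study: the excerpt identifiers are hypothetical placeholders rather than study data, and the code-to-theme mapping is an assumed subset that borrows its labels from box 1.

```python
from collections import defaultdict

# Assumed, partial mapping from codes to broader themes (labels taken from box 1).
CODE_TO_THEME = {
    "Medication alerts": "The system",
    "Usability barriers and facilitators": "The system",
    "Patient relationships": "The users",
    "Cognitive work": "The work",
    "Workflow": "The work",
}


def group_by_theme(coded_excerpts):
    """Group (excerpt_id, code) pairs under the theme each code belongs to."""
    themes = defaultdict(lambda: defaultdict(list))
    for excerpt_id, code in coded_excerpts:
        # Codes without an assigned theme are kept visible rather than dropped.
        theme = CODE_TO_THEME.get(code, "Uncategorized")
        themes[theme][code].append(excerpt_id)
    # Convert to plain dicts so the result prints and compares cleanly.
    return {theme: dict(codes) for theme, codes in themes.items()}


# Hypothetical placeholder excerpts; these are not quotes from participants.
coded = [
    ("excerpt-01", "Medication alerts"),
    ("excerpt-02", "Cognitive work"),
    ("excerpt-03", "Medication alerts"),
    ("excerpt-04", "Workflow"),
]
themes = group_by_theme(coded)
```

In this sketch a code that has not yet been assigned to a theme lands under "Uncategorized", which loosely mirrors an evolving codebook in which new codes are reviewed before being placed.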

We also took three steps to improve the reliability of our results. First, we consulted with two medical anthropologists not associated with the study to compare codes. In these discussions, we addressed questions and competing interpretations of the data until each researcher determined that an appropriately descriptive codebook had been developed. Second, we conferred with health organizations to clarify if observed or discussed system limitations resulted from the EHR/CDS systems, limitations in participants' knowledge, or both. Third, we sought feedback on preliminary results from all 30 participants via email according to published best practices.62 We received feedback from seven respondents, all of whom approved the user-centered CDS definition and affirmed the user-centered distinction between CDS and non-CDS functions.

Results

Participating providers and clinics

We conducted interviews and observations in 15 urban and rural community-based clinics across three healthcare organizations over the course of 2 months in the spring of 2010 (table 1).

Table 1.

Clinic characteristics

| Clinics | Data |
| --- | --- |
| Number of clinics | 15 |
| Clinics with Epic Ambulatory | 6 |
| Clinics with eClinicalWorks | 5 |
| Clinics with NextGen | 4 |
| Average number of doctors/clinic | 8 (7.333) |
| Median number of doctors/clinic | 7 |
| Range of doctors/clinic | 1–21 |
| Rural clinics | 5 |
| Urban clinics | 10 |

We interviewed 30 primary care physicians (table 2). Twenty-four physicians consented to observations in patient rooms, two consented to observations in clinic work areas only (an obstetrics/gynecology clinic), and four declined observations. Semi-structured interviews averaged 26 min in length and ranged from 11 min to 38 min.

Table 2.

Subject demographics (includes all subjects except one non-respondent)

| Participants | Data |
| --- | --- |
| Males | 17 |
| Females | 13 |
| Average age in years (range) | 48 (31–63) |
| Family practitioners | 16 |
| General internists | 7 |
| Obstetrics/gynecology | 3 |
| Pediatricians | 4 |
| Years practicing medicine | 17 |
| Average years using the current EHR | 2.75 |
| Subjects who had used another EHR | 7 |
| Level of EHR proficiency, self-assessment (current EHR only) | 4 Novice, 15 Average, 10 Advanced |

EHR, electronic health record system.

Participants' definition of what is and is not CDS

Participants were familiar with CDS functions and adept at describing its impact based on their experiences. Participants described facets of CDS that informed the following definition: ‘Clinical decision support is made up of tools that are intended to inform clinical decisions by way of electronically delivered medication safety alerts, health maintenance alerts and prompts, best practice prompts, and access to accurate and timely reference materials.’

Participants distinguished clinical decisions directly related to patient care from other types of decisions that were tangential to patient care. For example, a family practitioner (FP) made the following distinction: ‘[Clinical] decision support … is … knowing what drug to prescribe … but I think [ensuring that lab test results are available prior to a patient visit] would just be a DOCTOR support, or care management support ….’ The distinction is salient because participants expressed a need for ‘clinical decision support’ as well as types of ‘care management support’ that buttressed administrative activities.

Participants saw subtle but clear distinctions between CDS and administrative support. An internist highlighted the distinction: ‘… answering phone calls [or] inputting medication in lists…[That is] just CLINICAL support … [Clinical] decision support is … the algorithms … The patient has a thyroid nodule. What do you do NEXT?’

Physicians from all four specialties described what did not constitute CDS. The need for EHR navigation, a common frustration among study participants, was not considered a form of CDS. A clear distinction was made between navigating the EHR to find information and ultimately deciding what to do. An FP noted, ‘I don't know [if EHR navigation] affects my CLINICAL decision … the ultimate outcome is the same. I just take a lot more steps to GET there.’ The quote conveys a sentiment commonly expressed among participants—that poor navigation merely caused ‘more steps’ to get to an inevitable clinical decision. Probing questions, however, revealed examples of when poor navigation impacted medical practice: ‘I have to go in and look at each different [medical] note until I find a foot exam … And so rather than DO that, I just do another foot exam.’

Community-based physicians' requirements of CDS

We grouped data into three themes: (1) provider characteristics and motivations that inform their goals; (2) system characteristics that support decision-making; and (3) work environment and characteristics of clinical tasks (see box 1 and supplemental table 4 in online appendix C for a detailed summary).

Box 1. Summary of themes.

- 1.0 The users

- 1.1 Personalities and perspectives

- 1.2 Patient relationships

- 2.0 The system

- 2.1 Medication alerts

- 2.2 Health maintenance alerts and prompts

- 2.3 Best practice alerts and flowsheets

- 2.4 Access to accurate and timely reference materials

- 2.5 Usability barriers and facilitators

- 2.6 Patient panel management tools

- 2.7 Data availability

- 3.0 The work

- 3.1 The community-based practice environment

- 3.2 Time

- 3.3 Cognitive work

- 3.4 Collaboration

- 3.5 Workflow

The users

User-centered design encourages developing an understanding of users' goals and motivations, whether professional or personal. Participants stated that they enjoyed the ‘variety’ and ‘spectrum’ of patients in primary care and that they would feel ‘bored’ by the uniformity of cases in specialty care. Participants saw themselves as ‘compulsive’ and ‘a little OCD [obsessive-compulsive disorder]’; some would prepare for patient visits the night before because preparation reduced ‘anxiety’ when seeing patients. More than one described primary care practitioners as a ‘tribe’ and some lamented that their specialty is not always professionally and economically valued.

Participants placed a high value on patient relationships, and the ‘trust’ engendered in relationships played a role in their decision-making. Relationships not only created trust but were also a source of providers' job satisfaction.

The system

Participants commented that the EHR systems were built for billing purposes rather than to support clinical decisions, and as such benefitted people other than themselves such as ‘lawyers’ and ‘billers.’

Participants stated that they rejected alerts, and were observed rejecting alerts, for three primary reasons: (1) ‘fatigue’ from the abundance of alerts; (2) alerts not reflecting perceived clinical importance; and (3) perceived inaccuracies of alerts given a patient's context. Participants reported an overall sense that alerts were not trustworthy, and users reportedly turned off CDS within hours of initial use.

We also observed health maintenance alerts and prompts being ignored. When we asked why, a common reason concerned perceived time shortages. Yet at the same time, physicians expressed a need for systems that prompted best practices, such as alerts or flowsheets. One FP recalled being unable to remember the procedure to follow after receiving a positive RPR (a test for syphilis) and stated that she had lacked access to recommended procedures.

Community-based physicians also placed a high premium on timely access to reference material (see online appendix B). Being able to access online material while in the patient room was considered a boon, despite the occasional awkwardness of searching in front of patients. Reference material often informed physicians' decision-making and care plans.

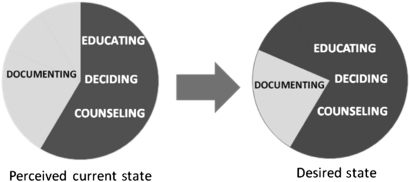

Participants' comments demonstrated that poor CDS usability affected their decision whether or not to take action. First, EHRs separated medications, conditions, diagnoses, and more across display tabs. Physicians reported that these compartmentalized displays slowed the ‘flow’ of patient visits and diminished patient–provider interactions. Second, slow data collection tools hindered participants' abilities to interact with patients and shortened the time for patient education and counseling (see figure 2). Participants appreciated built-in functions and third-party applications that made data collection quicker. Third, participants often took paper-based notes during patient visits to capture data for eventual entry into the system. Lastly, some participants appreciated improved documentation through templates and flowsheets, while others expressed concern that these tools promoted medicine that was not patient-centered.

Figure 2.

Perceived need for clinical decision support (CDS) to redirect work away from documentation.

Participants expressed a need for tools to help manage patient panels in order to identify groups of patients requiring particular follow-up. One organization's EHR system had an unused panel management capability, yet decision-makers were unaware that there was an expressed need for this function among users.

Participants were well aware of their inability to share data within and across practices, and bemoaned waste and inefficiency due to inconsistencies in medication lists, laboratory data, and patients' health maintenance data. One participant expressed frustration that different values from within a single basic metabolic panel arrived on different days.

The work

Participants idealized CDS tools that would enhance their decision-making and clinical efforts within community-based practice settings. Clinic environments placed physical and cognitive demands on participants who described challenges associated with clinical decision-making.

Participants were exceedingly busy. The pace of work in both urban and rural practices felt rushed. Observation notes indicated that participants frequently ran behind schedule. Lunch times were often abbreviated and food hastily eaten while the participants caught up with paperwork and charting. Within this context, physicians ignored health maintenance flags, reminders, and alerts due to perceived lack of time.

Participants reported day-to-day ‘cognitive work’ for which doctors do not get paid and receive little support. An internist described ‘cognitive work’ as the effort required to synthesize disparate data both within and outside the EHR. Participants reported needing tools that helped present an overall view of a patient or patients so that decisions about care plans and strategies could be implemented.

The observations identified four groups with whom participants often collaborated: patients, patients' families and friends, clinic staff, and entities within the larger healthcare system. Physicians often used EHR displays to facilitate communication and joint decision-making with patients and patients' families, and one provider envisioned a CDS that could shift ‘routine’ work to staff and ‘doctor stuff,’ like patient education, to providers.

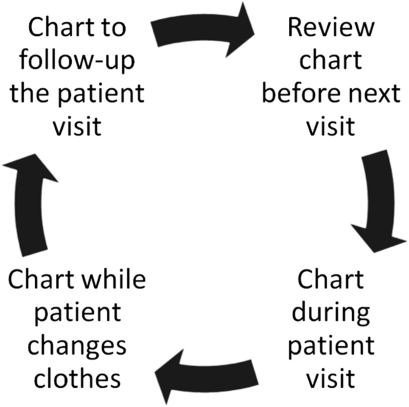

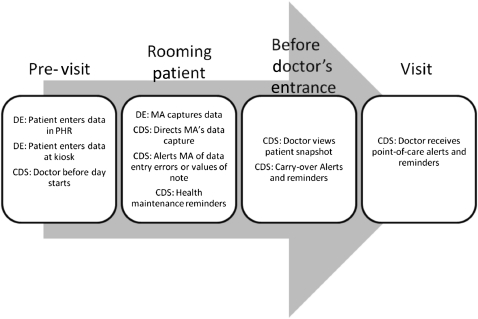

Participants noted that the workflow of meeting a patient, making initial notes, and then charting after the visit was time consuming and ‘energy draining.’ Although participants exhibited idiosyncrasies when charting, a cyclical pattern emerged that appeared to hold true regardless of site, physician role, or EHR system (see figure 3).

Figure 3.

Common instances of charting during patient visits.

Discussion

How participants viewed CDS

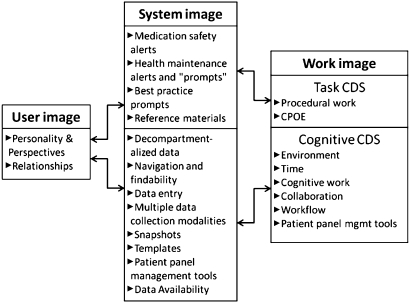

Participants distinguished clinical decisions from clinic decisions. Clinical decisions dealt with procedures such as ordering medications and administering evidence-based treatments. Participants wanted improved alerts, reminders, and algorithms to help with clinical decisions. However, participants also described a class of clinic decisions that entailed collaboration, workflow, and time management. Based on participants' perspectives, we distinguish CDS meant to support clinical decisions from ‘cognitive decision support’ meant to support clinic decisions (see figure 4). We found this distinction to be noteworthy and it supports the view that a spectrum of decision supports may optimize EHRs intended to support clinical performance.22 24 Further investigation into the nature of clinical decision-making and cognitive decision-making as it relates to CDS design is therefore required.

Figure 4.

A proposed model for using a system to connect users with larger goals. CDS, clinical decision support; CPOE, computerized provider order entry.

What participants needed from CDS

We present our proposed model for using a system to connect users with larger goals in figure 4.

The users

CDS designers could utilize this understanding of participants' personalities and perspectives as well as their desire to maintain bonds with patients. The physicians we studied wanted CDS features that support immediate tasks as well as enhance patient communication, such as informative yet brief patient summaries. This would help to provide physicians with a greater sense of control over an EHR that facilitates information and knowledge exchange with patients, and engender greater trust between patients and physicians, giving physicians another ‘tool’ with which to foster patient interaction.

The system

The incorporation of snapshots into EHRs could support decision-making by providing a tool that gathers data into high-level patient views. Snapshots would be akin to web-based maps that enable users to zoom in and out of a particular location, genograms that visually display familial relationships (see figure 5), and data maps.63 Snapshots would also address a number of requirements raised in this study: the need to establish or re-establish a patient's identity in a provider's mind, the need to promote relationships with patients, the need to view data within a model of the patient rather than a model of a paper record, and the need to provide patient data pertinent to a physician's role. We encourage further research in this area.
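One way to picture the snapshot idea is as a small data structure that gathers entries otherwise spread across separate display tabs and offers both a zoomed-out and a zoomed-in view. The sketch below is a hypothetical illustration, not a design proposed or tested in this study; the class name, method names, and entries are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class PatientSnapshot:
    """Hypothetical high-level view gathering data otherwise split across tabs."""

    patient_label: str
    sections: dict = field(default_factory=dict)  # section name -> list of entries

    def add(self, section, entry):
        """File one entry under a named section, creating the section if needed."""
        self.sections.setdefault(section, []).append(entry)

    def overview(self):
        """Zoomed-out view: the number of entries in each section."""
        return {name: len(entries) for name, entries in self.sections.items()}

    def detail(self, section):
        """Zoomed-in view: a copy of one section's entries (empty if absent)."""
        return list(self.sections.get(section, []))


# Placeholder entries for illustration only; not patient data.
snapshot = PatientSnapshot("example patient")
snapshot.add("medications", "placeholder medication A")
snapshot.add("medications", "placeholder medication B")
snapshot.add("conditions", "HTN")
```

Calling `snapshot.overview()` gives the map-like summary, while `snapshot.detail("medications")` corresponds to zooming in on one area of the record.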

Figure 5.

A genogram showing medical conditions, familial relations, and occupational information.66 HTN, hypertension.

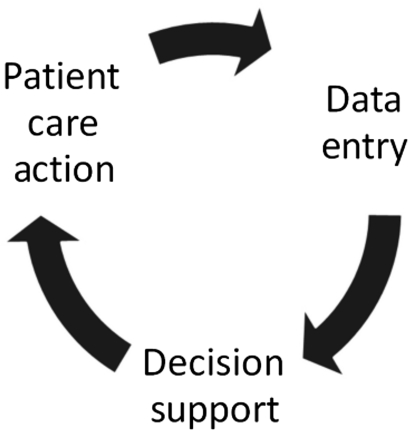

Observations in the field revealed the difficulty participants had in capturing clinical data. Our time spent observing and interviewing participants highlighted a need for efficient tools that populate data in EHRs. This shortcoming is critical since a CDS system can only be as good as the data entered into it, and if the data are suspect, then so too is the CDS. First, we suggest that patient encounters require minimal typing by the physician. Second, we propose a model that views data entry as one step in a CDS lifecycle whereby clinical data drive CDS, CDS drives actions, and those actions require follow-up data entry (see figure 6).

Figure 6.

Clinical decision support (CDS) as one stage in a cycle.
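The cycle described above, in which clinical data drive CDS, CDS drives actions, and actions require follow-up data entry, can be sketched as a simple transition table. This is a minimal illustration under the assumption that the cycle has exactly these three stages; the stage names and the `walk` helper are hypothetical and are not taken from figure 6.

```python
from enum import Enum


class Stage(Enum):
    DATA_ENTRY = "data entry"
    CDS = "clinical decision support"
    ACTION = "clinical action"


# Each stage feeds the next; follow-up data entry closes the loop.
NEXT_STAGE = {
    Stage.DATA_ENTRY: Stage.CDS,
    Stage.CDS: Stage.ACTION,
    Stage.ACTION: Stage.DATA_ENTRY,
}


def walk(start, steps):
    """Return the stages visited, starting at `start` and advancing `steps` times."""
    path = [start]
    for _ in range(steps):
        path.append(NEXT_STAGE[path[-1]])
    return path


path = walk(Stage.DATA_ENTRY, 3)
```

Three steps from data entry return to data entry, which is the point of treating CDS as one stage in a loop rather than as an endpoint.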

The work

Decision-making occurred within demanding and tiring work environments where interruptions were frequent. Our findings concerning the work fit into three key categories: (1) the use of CDS to redirect work among clinical staff; (2) cognitive work; and (3) workflow.

Redirecting work

CDS could be designed in ways that shift ‘data work’ tasks to lower level staff members who (guided by flowsheets, reminders, and alerts) could collect data required for routine health maintenance. Shifting these tasks would allow physicians to carry out ‘doctor stuff’ such as educating, counseling, and motivating patients to meet a variety of individual needs. Doctors often described these activities as the enjoyable aspects of their work, underpinning the reasons why they chose to work in primary care settings. Given this perspective, differently designed CDS would enable physicians to offer more to patients by freeing them from simple but time-consuming tasks that can be done by others.

Cognitive work

Participants' use of the term ‘cognitive work’ supports Hoff's findings.47 Cognitive work was described as the unpaid work of communicating and coordinating care as opposed to the procedural work for which providers are paid. Cognitive work consists of myriad tasks such as reviewing laboratory results from disparate sources, communicating with providers in hospitals and other practices, contacting patients, and synthesizing notes. Participants asserted the need for cognitive support to better meet patients' needs because they felt burdened by inefficient methods of documentation, communication, and coordination; they felt that new forms of ‘decision support’ (not CDS) may help with their cognitive work. These issues may become more relevant as an increasing number of providers in the US attempt to create practices that are modeled on the patient-centered medical home.64 65

Workflow

Finally, we found that users may respond more positively to CDS that is presented during opportune moments within workflow rather than primarily at the point-of-care (see figure 7).

Figure 7.

Opportunities for CDS display in community-based practices. DE, data entry opportunities; CDS, clinical decision support opportunities.

Limitations

This study focused more on users and their tasks and less on the systems they used (see figure 1). However, we received training in each EHR system and consulted participating organizations when we had questions about EHR or CDS functionality and design. Participating organizations were all based in Oregon, but we sought participants from clinics across healthcare organizations who practiced in a range of communities, including urban and rural settings, and who used different EHR systems. Finally, the first author coded the data but did so in consultation with the second author and unaffiliated medical anthropologists, and with feedback from a subset of participants.

Conclusion

Our user-centered approach generated an understanding of participants' motivations, goals, and insights into decision-making in community-based practices. Participants delineated between clinical and clinic-related decisions and maintained that the two are quite different, even though the lines sometimes blurred upon further questioning. This finding may inform future discussions as researchers attempt to develop models of different decision support systems.

Participants described the requirements of clinical decision tools such as effective medication alerts and cognitive decision supports that address ‘cognitive work’ and improve connectedness. First, participants wanted tools that supported their personal satisfaction in developing and promoting relationships with a wide spectrum of patients who present with a variety of conditions. Second, participants wanted CDS that offered patient-specific alerts and reminders as well as decision supports that aggregate data into patient snapshots and data maps. Third, participants needed tools that support collaboration with patients as well as staff. Future research into the various aspects and types of decision-making can broaden discussion to better define decision support and how it can be designed to better meet users' needs.

Acknowledgments

The first author would like to thank the following for their input and guidance: Aaron M Cohen, MD, MS; David A Dorr, MD, MS; Justin Fletcher, PhD; Gregory Fraser, MD, MBI; and Thomas R Yackel, MD, MPH, MS. We would also like to thank Carmit McMullen, PhD, and Jill A Pope for their editorial assistance. Finally, we would like to thank the participating healthcare organizations, clinics, and providers who gave time and effort to this project.

Footnotes

Funding: This study was supported by Training Grant 2-T15-LM007088 and NLM Research Grant 563 R56-LM006942 from the National Library of Medicine.

Competing interests: None.

Ethics approval: Ethics approval was provided by OHSU.

Provenance and peer review: Not commissioned; externally peer reviewed.
