Abstract

Visual expertise is usually defined as the superior ability to distinguish between exemplars of a homogeneous category. Here, we ask how real-world expertise manifests at basic-level categorization and assess the contribution of stimulus-driven and top-down knowledge-based factors to this manifestation. Car experts and novices categorized computer-selected image fragments of cars, airplanes, and faces. Within each category, the fragments varied in their mutual information (MI), an objective, quantifiable measure of feature diagnosticity. Categorization of face and airplane fragments was similar within and between groups, with performance improving as MI level increased. Novices categorized car fragments more slowly than face and airplane fragments, whereas experts categorized car fragments as fast as face and airplane fragments. The experts’ advantage with car fragments was similar across MI levels, and the functions relating RT to MI level were similar for both groups. Accuracy did not differ between groups for cars, faces, or airplanes, but experts’ response criteria were biased toward cars. These findings suggest that expertise entails more than specific perceptual strategies. Rather, at the basic level, expertise manifests as a general processing advantage, arguably involving top-down mechanisms such as knowledge and attention, that helps experts distinguish between object categories.

Keywords: object recognition, categorization, visual cognition, expertise

Introduction

Visual expertise is traditionally defined as a superior skill in discriminating between similar members of a homogeneous category (Diamond & Carey, 1986; Tarr & Gauthier, 2000). Usually, expertise encompasses a very specific object category. For example, car experts can easily distinguish between similar models of modern cars, but not between similar models of antique cars (Bukach, Philips, & Gauthier, 2010). Several accounts of the acquisition of expertise have been suggested (for a review, see Palmeri, Wong, & Gauthier, 2004). For example, a widely cited account suggests that attaining expertise involves a shift from part-based perceptual object representations in novices to holistic/global representations in experts, as well as a shift in processing strategy from focusing on features to computing the spatial relations between features, that is, configural processing (Bukach, Gauthier, & Tarr, 2006; Gauthier & Tarr, 2002). According to this view, holistic representations and configural processing allow experts to overcome the inherent difficulty of discriminating similar objects and eventually lead to the automatic categorization of objects of expertise at the subordinate level. For example, Gauthier and Tarr (2002) suggested that learning to distinguish among individual exemplars of an artificial object category (“Greebles”) is associated with increased sensitivity to configural information as well as with a greater difficulty in processing one part of an individual Greeble without processing its other parts (but see McKone, Kanwisher, & Duchaine, 2007, for a critical reevaluation of the findings of these studies).

Although the hallmark of visual expertise is fast and accurate categorization at the subordinate level (Tarr & Gauthier, 2000), prior studies report that expertise might also be consequential for basic-level categorization (Harel & Bentin, 2009; Hershler, Golan, Bentin, & Hochstein, 2010; Hershler & Hochstein, 2009; Johnson & Mervis, 1997; Scott, Tanaka, Sheinberg, & Curran, 2006, 2008; Wong, Palmeri, & Gauthier, 2010). However, whereas much is known about the information-processing strategies characteristic of expert subordinate categorization, less is known about the information that is diagnostic for expert categorization of objects at the basic level. Does expert object recognition at the basic level depend on the automatic application of configural processing strategies, as is presumed for expert subordinate categorization? One approach to this question is to explore whether perceptual expertise is consequential in situations that do not allow holistic processing. For example, almost all people are experts with faces. Indeed, studies have shown that faces are perceived holistically, at least initially (e.g., Bentin, Golland, Flevaris, Robertson, & Moscovitch, 2006; Tanaka & Farah, 1993), and that configural processes are needed to recognize faces at the individual level (for a review, see Maurer, Le Grand, & Mondloch, 2002). Yet, in a recent study, we showed that face expertise is also evident when typical participants categorize individual face fragments at the basic level, even though such fragments cannot be processed holistically (Harel, Ullman, Epshtein, & Bentin, 2007).

According to the fragment-based model of object recognition (Ullman, 2007; Ullman, Vidal-Naquet, & Sali, 2002), objects are represented in the visual cortex by a combination of image-based, category-specific pictorial features called “fragments.” Simply put, fragments are image patches of different sizes extracted from a large number of images from different object categories by maximizing the amount of information they deliver for categorization. This information can be formally expressed by the equation I(C, F) = H(C) − H(C|F), where I(C, F) denotes the mutual information (MI; Cover & Thomas, 1991) between the fragment F and the category C of images, and H denotes entropy. C and F in this scheme are binary variables: F denotes whether a certain fragment is found in the image, and C denotes whether the image belongs to the target category. Thus, the usefulness of a fragment for representing a category is measured by how much knowing whether fragment F is present in an image reduces the uncertainty about whether the image contains an object of category C. This value is evaluated for a large number of candidate fragments, and the most informative ones are selected. Note that in contrast to models suggesting that either local features (Mel, 1997; Wiskott, Fellous, Krüger, & von der Malsburg, 1997) or global features (Turk & Pentland, 1991) are optimal for object categorization, the fragment-based model posits that the optimal features for different categorization tasks are typically of intermediate complexity (IC), that is, patches of intermediate size at high resolution or of larger size at intermediate resolution (for further details about the fragment extraction process, see Ullman et al., 2002).
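To make the MI definition concrete, the following is a minimal Python sketch. It assumes that each candidate fragment has already been detected (or not) in every training image, so that its detections can be summarized as a 2 × 2 table of counts (class or non-class image, fragment present or absent). The function names and the example counts are hypothetical illustrations; the detection step and the per-fragment threshold selection of Ullman et al. (2002) are not reproduced here.

```python
import math

def entropy(probs):
    """Shannon entropy (bits) of a discrete distribution; zero-probability terms are skipped."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def mutual_information(n_c_f, n_c_notf, n_notc_f, n_notc_notf):
    """I(C;F) = H(C) - H(C|F) for binary C (class image?) and F (fragment detected?).

    Arguments are counts of training images in each cell of the 2 x 2 table.
    """
    total = n_c_f + n_c_notf + n_notc_f + n_notc_notf
    p_c = (n_c_f + n_c_notf) / total
    h_c = entropy([p_c, 1 - p_c])
    # H(C|F) = sum over f of P(F = f) * H(C | F = f)
    h_c_given_f = 0.0
    for n_c, n_notc in ((n_c_f, n_notc_f), (n_c_notf, n_notc_notf)):
        n_f = n_c + n_notc
        if n_f:
            h_c_given_f += (n_f / total) * entropy([n_c / n_f, n_notc / n_f])
    return h_c - h_c_given_f

def select_most_informative(candidates, k):
    """Return the names of the k candidates with the highest MI (hypothetical helper)."""
    ranked = sorted(candidates, key=lambda name: mutual_information(*candidates[name]), reverse=True)
    return ranked[:k]

# Hypothetical counts: (class & detected, class & missed, non-class & detected, non-class & missed)
candidates = {
    "frag_a": (80, 20, 5, 95),   # frequent in class images, rare elsewhere
    "frag_b": (50, 50, 45, 55),  # nearly independent of the category
    "frag_c": (95, 5, 70, 30),   # common in both class and non-class images
}
best = select_most_informative(candidates, k=1)
```

A fragment that is present in most class images but rarely in non-class images (`frag_a`) carries the most information, whereas one that appears about equally often in both (`frag_b`) carries almost none.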

In a previous study, we demonstrated that the seemingly abstract computational measure of MI is psychologically real and has neurophysiological consequences (Harel et al., 2007). The level of MI contained in fragments of car and face images correlated with the accuracy and speed of human categorization of these fragments, as well as with the amplitude of a negative occipitotemporal ERP component peaking 270 ms after stimulus onset (N270). Two findings of this study are relevant here. First, the relation between MI and N270 amplitude was not modulated by task demands, as shown by very similar patterns in explicit and implicit categorization paradigms. This outcome was taken as evidence that MI utilization is the default mode of the visual system, operating even in the absence of explicit intention. Second, both RT and ERP data revealed different categorization patterns for car and face fragments as a function of MI. Across all levels, faces were recognized more accurately than cars, putatively reflecting the participants’ natural face expertise. In addition, whereas face fragments showed a monotonic relation between MI level and RT (as well as between MI and N270 amplitude), for car fragments this relation took the shape of a step function, dividing the fragments into “low” and “high” in terms of their informativeness.

What drives the between-category differences in the utilization of MI? Several factors may account for the differences in MI utilization for the two fragment categories. First, any performance difference between face and car fragments may be attributed to different levels of visual experience that people have with the two categories. Since most people are experts with faces but not with cars, the difference in the utilization of information between the car and face fragments might be attributed to the different levels of expertise that our participants had with faces and cars. Specifically, typical people may have learned to extract more information from faces, utilizing in the process a broader spectrum of features. Conversely, cars may necessitate only general distinctions, sufficiently captured by “low” and “high” level features. However, the difference in MI utilization between the two categories might also reflect inherent patterns of face specificity, which, critically, are found also for single face parts (Bentin, Allison, Puce, Perez, & McCarthy, 1996; Zion-Golumbic & Bentin, 2007). To disentangle the above two accounts, another type of expertise should be included in the design. Lastly, since in our previous study we presented only fragments from the car and face categories, it is also possible that different RT curves reflect the utilization of different kinds of diagnostic features (cf. Harel & Bentin, 2009), serving as unique “psychometric signatures” for these object categories. Put differently, it is quite possible that categorization of fragments from a third category of objects may show an altogether different pattern of response.

The goal of the present study was twofold. First, it aimed to determine which of the above alternative accounts of the between-category differences reported in Harel et al. (2007) is correct. Second, more generally, it examined how visual expertise affects basic-level categorization of IC fragments, which do not provide a holistic image of the object and cannot be processed configurally. It is important to stress that we treat expertise in the current work as a psychological, observer-dependent variable rather than a computational measure. That is, we do not assume a priori that expertise is associated with qualitatively different fragments (although it might be; see Discussion section), and we do not try to simulate their derivation and usage. Instead, we ask how real-world experts, who were selected independently of the current task, categorize the same car fragments that we used previously. Thus, even though expertise is usually considered a superior skill in the subordinate categorization of highly similar objects that involves holistic processing, we ask here whether expertise is consequential also for basic-level categorization, even when holistic processing is not possible.

To achieve these goals, we conducted an experiment that was almost identical to the explicit categorization experiment in our previous study (Harel et al., 2007, Experiment 1). However, two critical additions distinguished the current experiment from the previous one. First, to test the influence of acquired expertise on fragment categorization, a group of car experts participated in the experiment in addition to a new control group of novices. Second, fragments of a third object category with which neither group had expertise (passenger airplanes) replaced the “non-class” fragments used as a baseline in Harel et al.’s Experiment 1. Thus, both groups were experts with faces, only one group was expert with cars, and neither group was expert with airplanes. Using these fragment categories, we were able to manipulate expertise both within subjects and between groups.

We hypothesized that if car expertise leads to changes in visual processing strategies, as reflected in the utilization of the MI contained in the car fragments, several outcomes are possible. One is that the RT curve as a function of MI should be steeper in experts than in novices, that is, experts should reach a plateau at a lower MI level than novices. This would be the case if the MI in each car fragment is more informative for experts than for novices (suggesting that experts are more efficient in their MI utilization). For example, if this assumption is correct, the diagnostic information that an expert can obtain from a fragment with little MI should parallel what a novice might be able to obtain only from a fragment with higher MI. Hence, relative to novices, experts should show a leftward shift of the graph presenting RT as a function of MI level and a rightward shift in accuracy as a function of MI. Further, whereas novices and car experts might show equivalent performance when categorizing car fragments with high MI, a difference between the groups might emerge at low MI levels, implying that experts are better at extracting the full available information from low-MI features. Such a result would imply that although MI is an observer-independent measure of feature diagnosticity, its utility for categorization might differ with the observer’s level of experience. Alternatively, expertise might influence basic-level categorization in ways other than changing processing strategies. If so, categorization of car fragments should be faster and more accurate overall for experts than for novices, but this difference should be similar across all MI levels. 
Such an outcome might suggest that the effect of expertise on basic-level categorization (at least for image fragments) is manifested not as changes in representations but rather as an enhancement or facilitation of existing categorization mechanisms, potentially involving top-down processes (such as expectations, prior knowledge, or attention). In line with this conjecture, a recent fMRI study demonstrated that expertise effects stem primarily from the enhanced engagement of experts with objects from their domain of expertise (Harel, Gilaie-Dotan, Malach, & Bentin, 2010). Finally, a third possibility is that there will be no difference between car experts and novices in the categorization of car fragments. This might imply that the advantage provided by expertise depends on the ability to apply expert-specific processing strategies, or that it is not expressed as the extraction of mutual information from image fragments. In either case, this outcome would suggest that the fragment-based approach may fail to capture the dynamics of expert object recognition.

Methods

Participants

Twelve male car experts (21–42 years, M = 26.4) and sixteen male undergraduate students from the Hebrew University of Jerusalem who were not car experts (19–31 years, M = 25.2) participated in the study. Novices were matched to the experts in age, gender, and education.1 Both groups received monetary compensation for their participation. Experts were identified in a previous, independent study (Harel et al., 2010) based on their significantly superior performance relative to novices in a perceptual discrimination task with whole car images. The expert selection procedure was inspired by a previous study (Gauthier, Skudlarski, Gore, & Anderson, 2000) and required subordinate categorization: on each trial, candidates had to determine whether two cars presented sequentially (for 500 ms each, separated by a 500-ms interstimulus interval) were of the same model (e.g., “Honda Civic”) or not. The two cars in each trial were always of the same make (e.g., Honda) but differed in year of production, color, angle, and direction of presentation. Overall, the task consisted of 80 pairs of cars (half same model, half different models), and all car images were of frequently encountered models from recent years. Expertise was defined as at least 83% accuracy on this task, whereas the average accuracy of novices was at chance (~50%). To ensure that the car experts’ expertise was category specific, all participants performed an analogous task with passenger airplanes. Car experts and novices were equally able to discriminate between airplanes (see further details in Harel et al., 2010). All participants had normal or corrected-to-normal visual acuity and no history of psychiatric or neurological disorders. All participants gave written informed consent according to the guidelines of the Institutional Review Board of the Hebrew University.
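The 83% criterion can be related to chance performance with a simple binomial calculation. The sketch below is an illustration only, not an analysis reported in this study: it assumes that the 80 same/different trials are independent and that a guessing observer is correct with probability 0.5 on each, and it computes how likely such an observer would be to reach the criterion. `binomial_tail` is a hypothetical helper name.

```python
from math import ceil, comb

def binomial_tail(k, n, p=0.5):
    """Probability of at least k successes in n independent trials with success probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

N_PAIRS = 80                                 # same/different trials in the screening task
criterion_correct = ceil(0.83 * N_PAIRS)     # smallest count reaching 83% accuracy (67 of 80)
p_by_guessing = binomial_tail(criterion_correct, N_PAIRS)  # chance of passing by guessing
```

Under these assumptions the probability of passing the criterion by guessing is far below one in a million, so the 83% cutoff cleanly separates the expert group from the chance-level performance of novices.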

Stimuli selection

Informative fragments were extracted from training images using the algorithm described by Ullman et al. (2002), which is briefly summarized below. The face and car fragments were the same as those used in Harel et al. (2007). The airplane fragments were extracted from a total of 470 airplane images downloaded from the web, with image sizes ranging from 150 × 200 to 200 × 250 pixels. The fragment selection process first extracts a large number of candidate fragments at multiple positions, sizes, and scales from the object images. The information supplied by each candidate fragment is then estimated by detecting it in the training images (for full details of the fragment selection procedure, see Harel et al., 2007; Ullman et al., 2002).

The most informative fragments found for different categorization tasks are typically of intermediate complexity (IC), including intermediate size at high resolution and larger size at intermediate resolution. The reason is that to be informative, a feature should be present with high likelihood in category examples, and low likelihood in non-category examples. These requirements are optimized by IC features: A large and complex object fragment is unlikely to be present in non-category images, but its detection likelihood in different category examples also decreases; conversely, simple local fragments are often found in both category and non-category images.
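The trade-off described above can be illustrated numerically. In this Python sketch, a fragment is summarized by its detection rate in category images, P(F|C), and in non-category images, P(F|¬C); with an assumed category prior of 0.5, I(C;F) is computed as H(F) − H(F|C), which equals H(C) − H(C|F) because mutual information is symmetric. The three sets of rates are invented for illustration and are not taken from the stimulus set.

```python
import math

def hb(p):
    """Binary entropy in bits; 0 at p = 0 or p = 1."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def fragment_mi(hit_rate, fa_rate, prior=0.5):
    """I(C;F) from P(F|C), P(F|not C) and P(C), via I = H(F) - H(F|C)."""
    p_f = prior * hit_rate + (1 - prior) * fa_rate
    h_f_given_c = prior * hb(hit_rate) + (1 - prior) * hb(fa_rate)
    return hb(p_f) - h_f_given_c

# Hypothetical (P(F|C), P(F|not C)) pairs chosen only to illustrate the trade-off.
features = {
    "large, complex": (0.20, 0.001),  # almost never in non-category images, but rarely found at all
    "intermediate": (0.70, 0.05),     # often in category images, rarely elsewhere
    "small, local": (0.95, 0.80),     # found almost everywhere
}
scores = {name: fragment_mi(*rates) for name, rates in features.items()}
```

With these made-up rates, the intermediate feature scores highest (about 0.37 bits), ahead of both the large and the small feature, which is the pattern the IC argument predicts.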

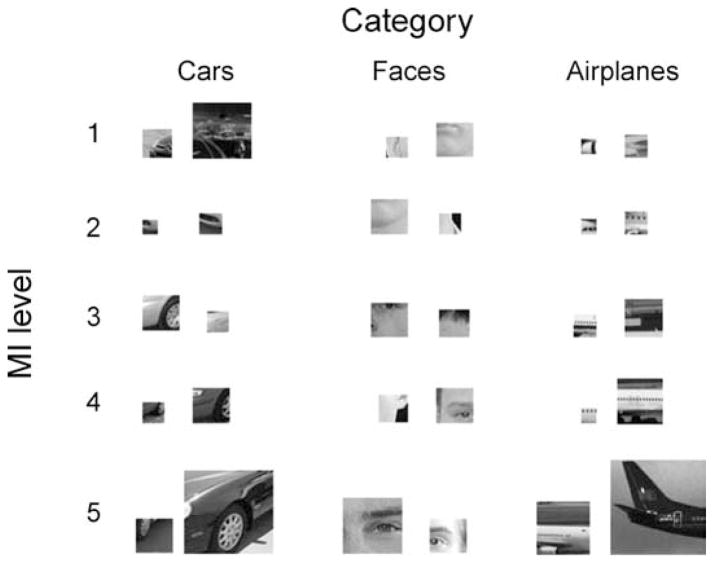

The stimuli used in the experiment were 500 car fragments, 500 face fragments, and 500 airplane fragments (see Figure 1 for examples of stimuli). The MI range was 0.05–0.65 for face fragments, 0.04–0.35 for car fragments, and 0.01–0.45 for airplane fragments. This difference in MI range probably reflects intrinsic differences between face, car, and airplane images, most notably in their within-category variation. Therefore, to allow valid comparison among categories, we divided the continuous MI range of each fragment category into five consecutive discrete levels of equal size, ranging from 1 (lowest MI level) to 5 (highest MI level), with 100 different fragments at each level. This ordinal (rather than continuous) scale allowed direct comparison between categories with different MI ranges. In the remainder of the text, we refer to the discrete values as MI level and to the continuous values as MI proper.

Figure 1.

Examples of intermediate complexity fragments used in the experiment. Fragments from the car, face, and airplane categories are ordered according to their level of MI, ranging from 1 (lowest MI level) to 5 (highest MI level), with two examples for each combination of category and MI level.
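The division of each category’s MI values into five levels with 100 fragments each can be sketched as a rank-based (equal-count) binning. This is one possible reading of “levels of equal size,” assumed here because each level contained 100 fragments; `mi_levels` is a hypothetical helper, ties are broken by sort order, and the MI values in the usage example are simulated rather than the real stimulus values.

```python
import random

def mi_levels(mi_values, n_levels=5):
    """Map each fragment's continuous MI to an ordinal level 1..n_levels.

    Fragments are ranked by MI and split into n_levels groups of equal size,
    so level 1 holds the lowest-MI fragments and level n_levels the highest.
    """
    n = len(mi_values)
    if n == 0 or n % n_levels:
        raise ValueError("number of fragments must be a positive multiple of n_levels")
    per_level = n // n_levels
    order = sorted(range(n), key=lambda i: mi_values[i])
    levels = [0] * n
    for rank, idx in enumerate(order):
        levels[idx] = rank // per_level + 1
    return levels

# Usage with simulated MI values spanning the car range (0.04-0.35); not the real data.
rng = random.Random(0)
car_mi = [rng.uniform(0.04, 0.35) for _ in range(500)]
car_levels = mi_levels(car_mi)
```

Because levels are defined by rank within each category, level 3 means “middle fifth of that category’s MI values” regardless of whether the category’s MI range is narrow (cars) or wide (faces).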

Experimental design and procedure

The 1500 fragments (100 × 5 MI levels per category) were presented sequentially in a fully randomized order, and participants were instructed to categorize each fragment as part of a face, part of a car, or part of an airplane by pressing one of three pre-designated buttons. The sequence was presented in 10 blocks of 150 trials each, with a short break between blocks. At the end of each block, participants received feedback about their accuracy in that block. Each stimulus was presented for 100 ms, and the next trial began only after a response was made. Stimuli were presented at fixation and viewed from a distance of approximately 60 cm.
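The trial structure (1500 fragments in a fully randomized order, split into 10 blocks of 150 with accuracy feedback after each block) can be sketched as follows. This is an assumed illustration: the study does not describe its presentation software, and the `(category, mi_level, index)` tuples and the `build_blocks` and `block_accuracy` helpers are hypothetical. Because the whole sequence is shuffled before it is split, the number of trials per category varies from block to block.

```python
import random

CATEGORIES = ("face", "car", "airplane")

def build_blocks(n_per_level=100, n_levels=5, n_blocks=10, seed=None):
    """Return n_blocks lists of (category, mi_level, index) trials cut from one shuffled sequence."""
    trials = [
        (category, level, index)
        for category in CATEGORIES
        for level in range(1, n_levels + 1)
        for index in range(n_per_level)
    ]
    random.Random(seed).shuffle(trials)  # fully randomized order across categories and MI levels
    if len(trials) % n_blocks:
        raise ValueError("trials must split evenly into blocks")
    size = len(trials) // n_blocks
    return [trials[b * size:(b + 1) * size] for b in range(n_blocks)]

def block_accuracy(responses, block):
    """Proportion of responses matching the true category (the end-of-block feedback)."""
    correct = sum(resp == trial[0] for resp, trial in zip(responses, block))
    return correct / len(block)

blocks = build_blocks(seed=1)
```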

Results

Reaction times

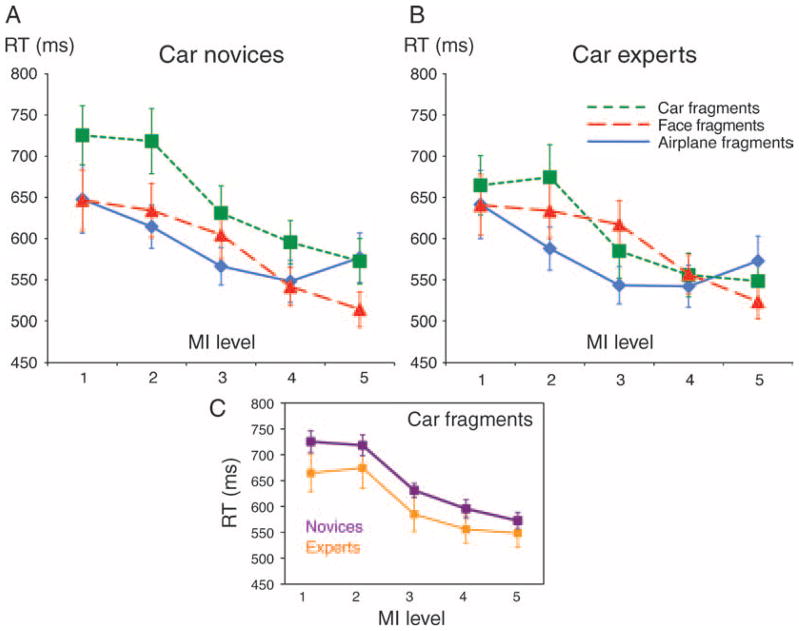

Mean reaction times (RTs) for the different experimental conditions are presented in Figure 2. Mean RTs were calculated from correct responses only; trials with RTs more than 2 standard deviations above or below the mean of the respective condition were also excluded. A mixed-model ANOVA with Group (car experts/novices) as a between-subjects factor and Category (faces/cars/airplanes) and MI level (levels 1–5) as within-subject factors showed no main effect of Group (F(1,26) < 1.00) but a significant Group × Category interaction (F(2,52) = 3.56, MSE = 5862, p < 0.03). Across groups, there was a significant main effect of MI level (F(4,104) = 97.73, MSE = 2096, p < 0.001), which was similar for the two groups (MI level × Group interaction, F(4,104) < 1.00). Post-hoc analysis revealed that participants were faster with increasing levels of MI: RT decreased significantly between every two successive MI levels (all pairwise comparisons, p < 0.05, Bonferroni corrected) except between MI 4 and MI 5 (p = 0.90). Importantly, the second-order MI level × Category × Group interaction was not significant (F(8,208) < 1.00), indicating that the expertise effect (i.e., the Group × Category interaction) was similar across all MI levels.

Figure 2.

Mean reaction times (RTs) of categorization of car, face, and airplane fragments (green, red, and blue lines, respectively) as a function of MI level for (A) novices and (B) car experts. MI levels are in ascending order, 1 representing the lowest level and 5 representing the highest level. Error bars indicate SEM. Note the similarity in shapes of the RT curves for the categorization of car fragments by car experts and novices, highlighted in (C).
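The RT preprocessing described above (correct trials only, then exclusion of RTs beyond the condition mean ± 2 SD) can be sketched in Python as follows. The sketch assumes a single trimming pass per participant and condition, with the cutoff computed from correct trials using the sample standard deviation; these details are not specified in the text, and `condition_mean_rt` is a hypothetical name.

```python
from statistics import mean, stdev

def condition_mean_rt(trials, n_sd=2.0):
    """Mean RT for one participant and condition after the exclusions described in the text.

    trials: sequence of (rt_ms, is_correct) pairs.
    """
    rts = [rt for rt, is_correct in trials if is_correct]  # correct responses only
    if not rts:
        return float("nan")
    if len(rts) < 2:
        return float(rts[0])  # SD undefined with one trial; nothing to trim
    m, s = mean(rts), stdev(rts)
    kept = [rt for rt in rts if abs(rt - m) <= n_sd * s]  # drop RTs beyond mean +/- n_sd SD
    return mean(kept)

# Usage: ten typical correct trials, one slow outlier, and one error trial.
example = [(450, True)] * 10 + [(2000, True), (300, False)]
trimmed = condition_mean_rt(example)
```

In the example, the error trial is ignored and the 2000-ms outlier lies about 3 SD above the mean, so only the ten 450-ms trials contribute. With very few trials a single outlier inflates the SD it is judged against, which is one reason such cutoffs are usually applied per condition with many trials.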

To explore the source of the Group × Category interaction, one-way ANOVAs with Category as an independent variable were conducted separately for each group of participants. A significant main effect of Category was evident in novices (F(2,30) = 16.61, MSE = 1120, p < 0.001). Pairwise comparisons showed that face and airplane fragments were categorized equally fast (p ~ 1.00) and that both were categorized significantly faster than car fragments (Figure 2A; p < 0.0001 and p < 0.002, respectively). In contrast, for car experts there was no significant effect of Category (Figure 2B; F(2,22) = 1.94, MSE = 1242, p > 0.16). Thus, unlike novices, car experts categorized car fragments as fast as they categorized face and airplane fragments.2 As can be seen in Figure 2C, this difference was expressed as an overall shift in RTs across all MI levels, reflected in the similar shape of the two groups’ RT curves for car fragments.

Finally, a significant MI level × Category interaction (F(8,208) = 17.12, MSE = 1068, p < 0.001) revealed slightly different patterns of MI utilization for each category of fragments. A separate one-way ANOVA with MI level as an independent variable was conducted for each category. The effect of MI level was significant for all three categories (airplanes: F(4,108) = 35.14, MSE = 1273, p < 0.001; cars: F(4,108) = 61.67, MSE = 1957, p < 0.001; faces: F(4,108) = 90.56, MSE = 938, p < 0.001). Post-hoc analyses showed that for airplane fragments, RTs decreased steadily with MI level up to the fourth level (pairwise comparisons, p < 0.05, Bonferroni corrected) and then increased by 30 ms from the fourth to the fifth MI level (p < 0.001). For face fragments, there was no significant difference between the first and second MI levels (p = 0.60), but thereafter RTs decreased steadily (pairwise comparisons between successive MI levels, p < 0.05). A similar pattern of decreasing RTs from the second MI level onward was also found for car fragments. Additional analyses of within-session learning effects on RT are reported in the Supplementary materials.

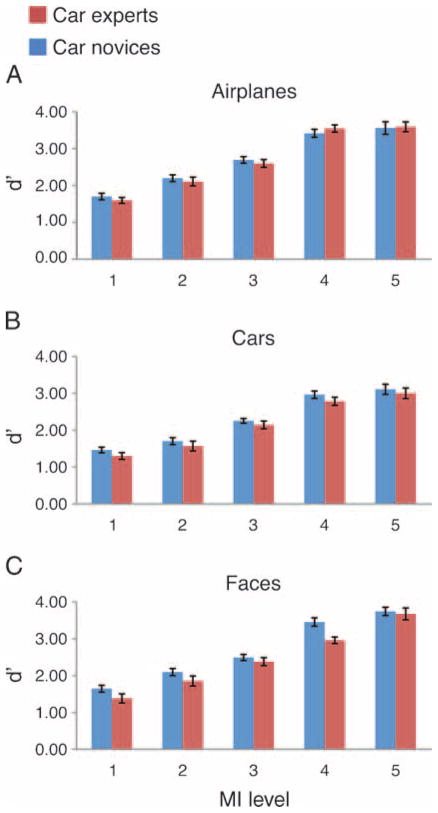

Signal detection analysis—d′

To determine the ability of the participants to distinguish each category from the other two, we calculated d′ separately for each category, collapsing across the other two categories. Mean d′ values for the different experimental conditions are presented in Figure 3. A mixed-model ANOVA with Group (car experts/novices) as a between-subjects factor and Category (faces/cars/airplanes) and MI level (levels 1–5) as within-subject factors showed a significant main effect of MI level (F(4,104) = 447.88, MSE = 0.12, p < 0.001), which was similar for both groups (MI level × Group interaction, F(4,104) < 1.00), with sensitivity increasing monotonically with MI level (all pairwise comparisons, p < 0.01, Bonferroni corrected). There was a main effect of Category (F(2,52) = 113.44, MSE = 0.07, p < 0.001), with face fragments categorized better than airplane fragments, which, in turn, were categorized better than car fragments (all pairwise comparisons, p < 0.01, Bonferroni corrected). There was no main effect of Group (F(1,26) = 1.21, MSE = 1.52, p = 0.28), but the Group × Category interaction was significant (F(2,52) = 5.30, MSE = 0.07, p < 0.01). Follow-up analyses of this interaction showed no significant differences between the two groups for any of the categories, although there was a trend toward a novice advantage for face fragments (airplane fragments: t(26) = 0.20, p > 0.80; car fragments: t(26) = 1.12, p > 0.25; face fragments: t(26) = 1.85, p > 0.07). Note that the RT data also failed to show any significant differences between groups (Footnote 2 above). Finally, in addition to the Group × Category interaction, the d′ analysis revealed a significant MI level × Category × Group interaction (F(8,208) = 2.17, MSE = 0.04, p < 0.04).

Figure 3.

Mean perceptual categorization sensitivity (d′) for (A) airplane fragments, (B) car fragments, and (C) face fragments as a function of MI level for novices (blue bars) and car experts (red bars). MI levels are in ascending order, 1 representing the lowest level and 5 representing the highest level. Error bars indicate SEM.
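The one-versus-rest d′ computation can be sketched as follows: for a target category, hits are fragments of that category labelled as such, false alarms are fragments of the other two categories given the target label, and d′ = z(H) − z(FA). The log-linear correction (adding 0.5 to each count and 1 to each total) is an assumption made here to avoid infinite z-scores at rates of 0 or 1; the text does not state how extreme rates were handled. The function names are hypothetical.

```python
from statistics import NormalDist

def one_vs_rest_rates(true_labels, responses, target):
    """Hit and false-alarm rates treating `target` as signal and the other categories as noise."""
    signal = [resp for true, resp in zip(true_labels, responses) if true == target]
    noise = [resp for true, resp in zip(true_labels, responses) if true != target]
    hits = sum(resp == target for resp in signal)
    false_alarms = sum(resp == target for resp in noise)
    # Assumed log-linear correction keeps both rates strictly between 0 and 1.
    hit_rate = (hits + 0.5) / (len(signal) + 1)
    fa_rate = (false_alarms + 0.5) / (len(noise) + 1)
    return hit_rate, fa_rate

def d_prime(hit_rate, fa_rate):
    """Sensitivity d' = z(H) - z(FA), with z the inverse standard normal CDF."""
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)

# Usage on a toy set of four trials, scoring sensitivity for "car".
truth = ["car", "car", "face", "airplane"]
answers = ["car", "face", "car", "airplane"]
h, f = one_vs_rest_rates(truth, answers, "car")
```

In the toy example, one of two car fragments is called “car” and one of two non-car fragments is also called “car,” so the corrected hit and false-alarm rates are equal and d′ is zero.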

To explore the three-way interaction, we conducted separate two-way ANOVAs with Category and Group as independent variables for each MI level. Interestingly, the Category × Group interaction was significant only at the fourth MI level (MI 1: F(2,52) = 1.46, MSE = 0.03, p > 0.20; MI 2: F(2,52) = 1.70, MSE = 0.02, p > 0.19; MI 3: F(2,52) < 1.00; MI 4: F(2,52) = 12.26, MSE = 0.05, p < 0.001; MI 5: F(2,52) < 1.00). To compare formally how experts and novices differed in their categorization of fragments from different object categories at the fourth MI level, we conducted separate t-tests for each category. Notably, experts and novices did not differ in their categorization sensitivity for car fragments at the fourth MI level (t(26) = 1.21, p > 0.20). There was a novice advantage for face fragments (t(26) = 3.30, p < 0.003) but no difference between the two groups for airplane fragments (t(26) = −0.86, p > 0.35). Finally, at each of the MI levels, there was no main effect of Group (p > 0.15 for all five comparisons). In sum, perceptual categorization sensitivity to variations in MI level did not differ between car experts and car novices, either for car fragments or for airplane and face fragments. Experts and novices showed a very similar pattern of increasing sensitivity with increasing MI level for all categories (with some interactions reflecting minor differences in performance between the two groups). The next step was to assess whether the faster RTs of car experts relative to novices for car fragments might have reflected a response bias.

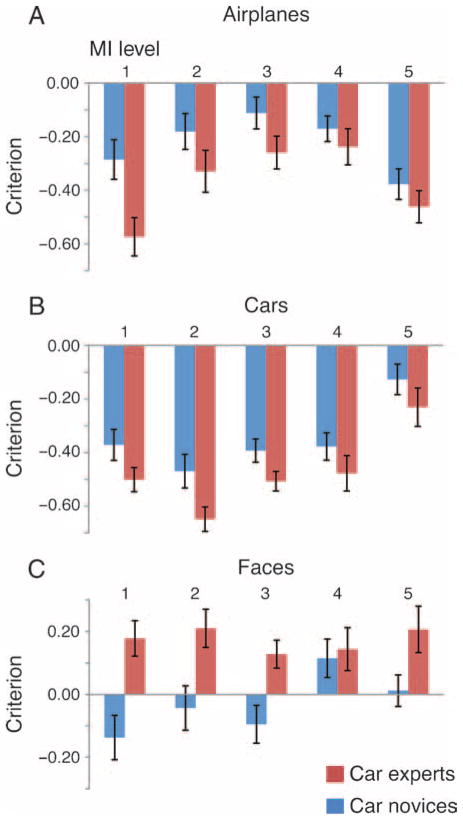

Signal detection analysis—Response criterion

Like d′, the criterion (c) for each experimental condition was calculated separately for each category, collapsing across the other two categories. In this procedure, a negative c value reflects a bias toward categorizing a fragment as belonging to that particular category rather than to the other two. The mean criterion values for the different experimental conditions are presented in Figure 4. A mixed-model ANOVA with Group (car experts/novices) as a between-subjects factor and Category (faces/cars/airplanes) and MI level (levels 1–5) as within-subject factors showed that across the two groups, there were significant main effects of MI level (F(4,104) = 84.00, MSE = 0.01, p < 0.001) and Category (F(2,52) = 34.36, MSE = 0.25, p < 0.001) and a trend toward a Group effect (F(1,26) = 3.53, MSE = 0.06, p = 0.07). These main effects were qualified by interactions with Group, all of which were significant (MI level × Group: F(4,104) = 2.86, MSE = 0.01, p < 0.03; Category × Group: F(2,52) = 5.20, MSE = 0.25, p < 0.01; MI level × Category × Group: F(8,208) = 2.05, MSE = 0.03, p < 0.05). To evaluate the source of these interactions, separate ANOVAs were conducted for each category of fragments with Group and MI level as independent variables.

Figure 4.

Mean response criteria of (A) airplane fragments, (B) car fragments, and (C) face fragments as a function of MI level for novices (blue bars) and car experts (red bars). MI levels are in ascending order, 1 representing the lowest level and 5 representing the highest level. Error bars indicate SEM.
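The response criterion follows the standard signal detection definition c = −[z(H) + z(FA)]/2, computed from the same one-versus-rest hit and false-alarm rates as d′. Below is a minimal sketch, assuming both rates are strictly between 0 and 1 (the inverse normal CDF is undefined at 0 and 1); `criterion` is a hypothetical name. Negative values arise when a category label is given liberally, that is, with high hit and false-alarm rates, which matches the sign convention described above.

```python
from statistics import NormalDist

def criterion(hit_rate, fa_rate):
    """Signal detection criterion c = -(z(H) + z(FA)) / 2; requires 0 < rates < 1."""
    z = NormalDist().inv_cdf
    return -(z(hit_rate) + z(fa_rate)) / 2

liberal = criterion(0.90, 0.40)       # label given often: negative c (bias toward the category)
conservative = criterion(0.60, 0.10)  # label given rarely: positive c
```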

For car fragments, the MI level × Group interaction was not significant (F(4,104) < 1.00). Both main effects of MI level and Group were significant (F(4,104) = 27.76, MSE = 0.02, p < 0.001; F(1,26) = 3.92, MSE = 0.13, p = 0.05, respectively). In both groups, the bias to respond “car” was statistically significant (relative to c = 0; experts: t(11) = −12.56, p < 0.001; novices: t(15) = −7.40, p < 0.001). However, car experts had a larger bias to identify fragments as cars, as indicated by an overall more negative criterion than that of the novice group (experts: M = −0.47, SE = 0.04; novices: M = −0.34, SE = 0.04).

For airplane fragments, the MI level × Group interaction was also not significant (F(4,104) = 2.10, MSE = 0.02, p > 0.08). A significant MI level effect was evident (F(4,104) = 15.40, MSE = 0.02, p < 0.001), and, as for cars, there was a trend toward a Group effect (F(1,26) = 3.81, MSE = 0.19, p = 0.06), with experts showing more negative criteria than novices (experts: M = −0.37, SE = 0.05; novices: M = −0.22, SE = 0.04). Response criteria differed significantly from zero in both groups (experts: t(11) = −6.84, p < 0.001; novices: t(15) = −4.42, p < 0.001).

Finally, for face fragments, the MI level × Group interaction was significant (F(4,104) = 3.57, MSE = 0.02, p < 0.01), as were the main effects of MI level (F(4,104) = 3.29, MSE = 0.02, p < 0.02) and Group (F(1,26) = 7.40, MSE = 0.19, p < 0.02). In contrast to the previous two categories, for which the mean criterion of the experts was more negative than that of the novices (i.e., a bias toward identifying fragments as cars or airplanes), for face fragments the car experts were more conservative, as reflected in their positive mean criterion (M = 0.17, SE = 0.05), which differed significantly from zero (t(11) = 4.00, p < 0.001). Interestingly, the novices did not show a response bias, as their mean criterion did not differ significantly from zero (t(15) = −0.53, p > 0.30). To investigate the significant MI level × Group interaction, we conducted separate one-way ANOVAs for each group of participants with MI level as an independent variable. Car experts did not show a significant effect of MI (F(4,44) < 1.00). The MI effect was significant for novices (F(4,60) = 9.05, MSE = 0.01, p < 0.01), but post-hoc pairwise comparisons (Bonferroni corrected) revealed that this effect was driven primarily by the difference between the fourth MI level (M = 0.11, SD = 0.24) and the other MI levels (all comparisons, p < 0.01), which did not differ significantly among themselves (all pairwise comparisons, p > 0.05).

Discussion

The main question addressed in the present study was how experts utilize diagnostic visual information for basic-level object recognition. Using the fragment-based approach, we were able to assess the categorization performance of experts and novices as a function of a quantifiable measure (MI) known to have psychological and neural underpinnings (Harel et al., 2007; Hegdé, Bart, & Kersten, 2008; Lerner, Epshtein, Ullman, & Malach, 2008; Nestor, Vettel, & Tarr, 2008). Operationally, we asked how the effects of MI and fragment category on categorization are modulated by the participants’ expertise. In line with the traditional view, car expertise was assessed by asking participants to make fine distinctions between different cars (i.e., subordinate categorization). In the fragment categorization task, by contrast, participants had to recognize the fragments by generalizing across exemplars of the same category and contrasting them with exemplars of other categories (i.e., basic-level categorization). Car experts categorized car fragments as fast as fragments of the other categories, whereas novices were much slower to categorize car fragments than face and airplane fragments. This pattern demonstrates an advantage of expertise even at basic-level categorization. Critically, the experts’ RT advantage was limited to cars, suggesting that it was specific to expertise rather than stemming from other group-related artifacts, such as greater motivation in the expert group than in the novice group. However, the experts’ RT advantage relative to novices was similar across all MI levels, and the functions relating RT to MI level were roughly similar in shape for experts and novices. Moreover, whereas the perceptual sensitivity of categorization (d′) was positively correlated with MI level, this effect was not modulated by expertise. In fact, both car experts and novices were less accurate with car fragments than with either face or airplane fragments.

If the car experts’ advantage over novices in recognizing car fragments reflected higher sensitivity to the informational content of objects of expertise, the advantage should have been most conspicuous when recognition was difficult, that is, at low MI levels. This should have manifested as larger differences between experts and novices at low than at high MI levels of car fragments, because the novices’ RTs to car fragments would increase more steeply as MI decreased. Hence, the similar shape of the RT functions across MI levels for experts and novices, together with the absence of an expertise effect on d′, suggests that the amount of information used at each MI level to categorize a fragment as a car was equal for car experts and car novices. In other words, while the present data demonstrate that expertise affects basic-level categorization, this effect does not seem to be related to enhanced sensitivity to the category information contained in image fragments from the category of expertise. Instead, we interpret the car experts’ faster RTs in basic-level categorization of car fragments across all MI levels as reflecting a general interest in cars, perhaps mediated by attention, rather than a change in the perceptual representations or visual processing of objects of expertise. The signal detection analysis in the current study provides further support for this interpretation. Whereas the sensitivity (d′) analysis showed that car experts did not distinguish cars from other categories more accurately than novices, the experts showed their strongest response bias toward car fragments (which might putatively explain the RT advantage). In other words, car experts were more inclined than novices to categorize insufficiently defined fragments as parts of a car rather than as parts of faces or airplanes.
Thus, the current pattern of data leads to the conclusion that the use of information in expert basic-level object recognition is not qualitatively different from that in ordinary object recognition (for a similar view, see Biederman, Subramaniam, Bar, Kalocsai, & Fiser, 1999). Rather, it suggests that top-down mechanisms (e.g., attention or faster access to knowledge) might influence experts’ basic-level categorization of IC fragments. Specifically, we suggest that the involvement of top-down mechanisms led to faster categorization of fragments from the category of expertise even though the fragments themselves were as informative for the experts as for the novices.
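The prediction discussed above can be made concrete with made-up numbers. If expertise only added a constant speed advantage, the novice-minus-expert RT difference would be the same at every MI level; if it reflected greater sensitivity to fragment information, the difference would shrink as MI rises. The Python sketch below computes these differences for two hypothetical expert profiles; the RT values and function names are illustrative assumptions, not the study’s data. In the actual analysis this question corresponds to the MI level × Group interaction, for which the range of differences computed here is only a descriptive stand-in.

```python
def group_differences(novice_rt, expert_rt):
    """Novice minus expert mean RT (ms) at each MI level."""
    return [n - e for n, e in zip(novice_rt, expert_rt)]


def interaction_spread(novice_rt, expert_rt):
    """Range of the group difference across MI levels.

    A spread of 0 means the expertise effect is purely additive (a constant
    shift); a positive spread means the effect changes with MI level.
    """
    diffs = group_differences(novice_rt, expert_rt)
    return max(diffs) - min(diffs)


# Hypothetical mean RTs (ms), ordered from lowest to highest MI level.
novices = [700, 660, 630, 610, 600]
experts_additive = [660, 620, 590, 570, 560]     # constant advantage
experts_sensitivity = [620, 600, 585, 570, 560]  # advantage shrinks as MI rises

print(group_differences(novices, experts_additive))     # [40, 40, 40, 40, 40]
print(group_differences(novices, experts_sensitivity))  # [80, 60, 45, 40, 40]
```

The observed data resembled the first, additive profile rather than the second.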

A recent fMRI study provides neural evidence pointing to the same conclusion (Harel et al., 2010). That study showed that the neural expressions of expertise are modulated top-down by the experts’ engagement with objects from their domain of expertise. When car experts were highly engaged in recognizing cars, a widespread pattern of BOLD activation emerged across multiple cortical areas, encompassing early visual areas as well as frontoparietal attentional networks. In contrast, when the experts were instructed to ignore their objects of expertise, their unique pattern of BOLD activation diminished, becoming very similar to that of novices. These findings further indicate that, at least for basic-level categorization, expertise is not manifested in an automatic, stimulus-driven fashion but is instead modulated by top-down factors such as attention and knowledge.

The current study extends the findings of our previous fragment categorization study (Harel et al., 2007). One of the main findings of that study was the difference in the shapes of the RT curves for car and face fragments. A question left open by this result was whether the difference between fragments of faces and of objects (e.g., cars) generalizes to other object categories. By adding a third object category and comparing the performance of car experts and car novices, we were able to address this question. If the different patterns of MI utilization for different fragment categories reflected face-selectivity patterns (found for whole objects as well as for parts; see Bentin et al., 1996; Zion-Golumbic & Bentin, 2007), one might expect a similar difference between face and airplane fragments. However, in the present study, face and airplane fragments were categorized in a very similar fashion, as reflected both in the shape of the RT change with MI and in the range of absolute RTs. Moreover, car novices categorized car fragments differently from airplane fragments. This pattern was also evident in the categorization sensitivity data, suggesting that the differences in MI utilization between face and car fragments found by Harel et al. (2007) stemmed from differences in task difficulty (reflected in their accuracy data; see Experiments 1 and 2) rather than from visual experience. This conclusion is strengthened by the fact that, if car novices’ experience with cars and airplanes differed at all, it should have favored cars, which are usually seen more frequently than airplanes. Yet the novices in the present study categorized airplanes faster than cars.
Presumably, this category effect reflects the additional processing time required by novices to evaluate the perceptual evidence provided by car fragments relative to airplane fragments and then reach a decision.

An implicit assumption concerning object recognition is that diagnostic information is reducible to isolated perceptual units, namely, the parts of the object. Parts are usually defined as “divisible, local components of an object that are segmented at points of discontinuity, are perceptually salient, and are usually identified by a linguistic label” (Tanaka & Gauthier, 1997, p. 5). Indeed, a variety of studies have shown that object parts are important and useful for everyday object recognition (Biederman, 1987; Borghi, 2004; Cave & Kosslyn, 1993; De Winter & Wagemans, 2006; Tversky, 1989; Tversky & Hemenway, 1984). However, it is quite possible that the critical features for object recognition are not nameable parts. Peterson (2003) has proposed the term “partial configuration” to describe object representations that are smaller than an entire region or surface but that specify a configuration of features rather than a single feature. According to this proposal, objects are represented by a number of overlapping partial configurations in the posterior parts of the ventral visual pathway (Peterson, 2003; Peterson & Skow, 2008). Support for this hypothesis comes from the finding that certain cells in the inferotemporal cortex respond selectively to features of intermediate complexity (Tanaka, 1996, 2003). Features of intermediate complexity are more complex than simple features such as orientation, size, color, or texture, which are known to be extracted and represented by cells in V1, but they are not complex enough to represent whole natural objects, or even complete object parts. Considered “less than an object, more than a feature,” intermediate-level representations break away from the traditional dichotomy between parts, on the one hand, and their holistic configuration, on the other.
Still, one may ask: if object-diagnostic information is not captured by parts or configurations of parts, how can it be quantified? The current study adopted the fragment-based approach to object recognition because it answers this question by providing an objective, experimenter-independent measure of feature diagnosticity (Ullman, 2007; Ullman & Sali, 2000; Ullman et al., 2002). In that respect, the fragment-based approach is particularly well suited for describing stimulus information in basic-level expertise. First, the fragment-based model is not theoretically committed to local parts, configurations of parts, or global shapes, since the selection process begins by extracting candidate fragments at multiple positions, sizes, and scales (see Footnote 3). This is a major advantage for studying basic-level expertise, where the actual diagnostic information is not known in advance. Moreover, the diagnostic information is not only unavailable to the experimenter but is often also unavailable for explicit verbalization by the experts themselves (Palmeri et al., 2004). Thus, the choice of MI as a key measure minimizes biases in the selection and manipulation of specific stimulus dimensions. Second, the model explicitly quantifies the diagnostic value of each fragment for categorization as the mutual information between fragment and category. This provides a direct and objective measure of the informativeness of different object parts for expert recognition. For example, one may directly compare two different parts of a car and predict their usefulness for car categorization.
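For a binary fragment-detection variable F and a binary category variable C, the mutual information is I(F;C) = Σ p(f,c) log2[p(f,c) / (p(f) p(c))], summed over the four combinations of f and c (Cover & Thomas, 1991). The Python sketch below estimates this quantity from paired samples and, loosely following the idea in Ullman et al. (2002) of binarizing fragment detection with a threshold, picks the threshold that maximizes MI. The similarity scores (for example, a normalized cross-correlation between fragment and image), the exhaustive threshold search, and all function names are assumptions for illustration; this is not the authors’ fragment-selection pipeline.

```python
from collections import Counter
from math import log2


def mutual_information(detected: list[bool], in_class: list[bool]) -> float:
    """I(F;C) in bits between binary detection F and binary class C.

    Probabilities are relative frequencies over the paired samples. Empty
    cells never appear in the joint counts, which implements 0 * log 0 = 0.
    """
    n = len(in_class)
    joint = Counter(zip(detected, in_class))  # counts of each (f, c) pair
    n_f = Counter(detected)                   # marginal counts of F
    n_c = Counter(in_class)                   # marginal counts of C
    mi = 0.0
    for (f, c), count in joint.items():
        p_fc = count / n
        mi += p_fc * log2(p_fc / ((n_f[f] / n) * (n_c[c] / n)))
    return mi


def best_threshold(similarities: list[float], in_class: list[bool]) -> tuple[float, float]:
    """Try every observed similarity as a detection threshold.

    Returns (threshold, MI) for the threshold whose binarized detections
    (similarity >= threshold) carry the most information about the class.
    """
    best_t, best_mi = float("nan"), -1.0
    for t in sorted(set(similarities)):
        detected = [s >= t for s in similarities]
        mi = mutual_information(detected, in_class)
        if mi > best_mi:
            best_t, best_mi = t, mi
    return best_t, best_mi


# Toy data: the fragment matches class images well and other images poorly.
print(best_threshold([0.9, 0.8, 0.3, 0.2], [True, True, False, False]))  # (0.8, 1.0)
```

With a balanced two-class sample, a perfectly diagnostic fragment reaches the ceiling of 1 bit, whereas a fragment detected equally often in both classes carries 0 bits; intermediate values order fragments by diagnosticity.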

Expertise in object recognition has mainly been characterized as a superior skill in the subordinate categorization of objects (for a recent review, see Curby & Gauthier, 2010). In contrast, the current study investigated expertise using a computational approach that emphasizes basic-level rather than subordinate categorization. It is conceivable that expertise effects manifest differently at the basic and subordinate levels. For example, whereas the diagnostic cues needed to distinguish between two models from the same manufacturer might require expert knowledge, the distinction between cars, faces, and airplanes relies on knowledge shared by experts and novices. Yet it is now widely acknowledged that expertise in object recognition is manifested at both basic and subordinate levels of categorization (Hershler & Hochstein, 2009; Johnson & Mervis, 1997; Scott et al., 2006; Wong et al., 2010; Wong, Palmeri, Rogers, Gore, & Gauthier, 2009). From this perspective, a general top-down bias, rather than enhanced perceptual sensitivity, may be characteristic primarily of expert object recognition at the basic level. It remains to be seen, however, whether top-down effects are also manifested in subordinate-level expertise. While some authors argue strongly that subordinate expertise is an automatic perceptual skill (Richler, Cheung, Wong, & Gauthier, 2009; Richler, Wong, & Gauthier, 2011), others suggest that subordinate expertise involves applying strategic knowledge of the diagnostic features rather than qualitative changes in perception (Amir, Xu, & Biederman, 2011; Biederman & Shiffrar, 1987; Biederman et al., 1999; Harel et al., 2010; Robbins & McKone, 2007). Future studies of real-world experts (cf. Wong et al., 2009) will be needed to address this issue by teasing apart the roles of knowledge and perceptual strategies in subordinate expertise.

It also remains an open question what role intermediate complexity fragments play in expert subordinate categorization and how fragments may support the fine discriminations made by experts. One possibility is that experts learn to use a larger and richer set of fragments, including lower MI ones. Experts may become very familiar with highly distinctive parts that consequently have lower MI (e.g., the grille of a particular Rolls-Royce model or the taillights of a Ford Mustang). Because these features are so specific, they are not representative of the car category as a whole (and therefore do not carry high MI), but they may be needed for identification. Support for this conjecture comes from recent computational work showing that the optimal features for making subordinate distinctions are “sparse,” that is, highly specific distinctive features that are nevertheless tolerant to variability in image appearance (Akselrod-Ballin & Ullman, 2008). Critically, this work employed the same principle of information maximization used here, but for subordinate rather than basic-level categorization.

Finally, two recent studies using single-trial EEG analysis (Philiastides, Ratcliff, & Sajda, 2006; Philiastides & Sajda, 2006) shed additional light on the mechanisms that may underlie expert categorization of intermediate complexity fragments. These studies investigated two stimulus-locked EEG components in a face vs. car categorization task: an early component (~170 ms after stimulus onset) and a late component (~300 ms after stimulus onset). Both components were correlated with performance, but whereas the timing of the early component did not change with task difficulty (manipulated via the level of phase coherence of the image), the late component was increasingly delayed as task difficulty increased and was a better predictor of the subject’s decision. The late component was taken to reflect perceptual decision-making stages driven by the accumulation of stimulus evidence, whereas the early component was taken to reflect early perceptual processing. Interestingly, the time window of the late component in these studies coincides with the peak latency of the N270, the ERP component found to be sensitive both to the MI level of the fragments and to their category (Harel et al., 2007). Although ERPs were not recorded in the current study, the finding that MI level modulated the “late” N270 could be considered additional evidence that expertise operates at a post-sensory stage reflecting the accumulation of stimulus evidence, namely, the MI contained in the fragment. Of course, this hypothesis concerning the time window in which expertise operates should be investigated further in future research.

In summary, the current study shows that expertise in basic-level object recognition is not manifested as a distinct pattern of diagnostic-information use for the category of expertise. Instead, we propose that expertise involves the application of top-down mechanisms (such as experience-based knowledge and attention) that allow the expert to overcome the inherent difficulty of the categorization task. Our findings show that car experts and novices differed not in the way they categorized intermediate complexity car fragments but in the experts’ overall faster RTs. Although the fragments themselves were as informative to the experts as to the novices, the experts did not need the additional processing time that novices required to evaluate the perceptual evidence and reach a decision. We reason that this might be achieved by a greater allocation of resources to fragments from the category of expertise, leading to their faster categorization.

Supplementary Material

Acknowledgments

The authors thank Tal Golan for help with data collection and analysis.

Footnotes

1. While all car experts were male, visual expertise is clearly not gender specific. Prior studies report both male and female experts in a variety of other domains of expertise, including radiologists (Harley et al., 2009), dog show judges (Robbins & McKone, 2007), and bird experts (Tanaka & Curran, 2001).

2. A different and equally valid way of breaking down the Group × Category interaction is to compare the speed of categorization between the two groups for each category separately. Using this comparison, we found that although the experts descriptively showed a substantial RT advantage for car fragments (~40 ms), the difference between the groups was not significant (t(26) = −1.35, p > 0.18). Nor did the two groups differ significantly in the speed of categorizing airplane and face fragments (t(26) = −0.42, p > 0.65; t(26) = 0.21, p > 0.80, respectively).

3. Hence, a “fragment,” being an informative image patch, may contain only a portion of an object part or even a conjunction of object parts.

Commercial relationships: none.

Contributor Information

Assaf Harel, Laboratory of Brain and Cognition, National Institute of Mental Health, Bethesda, MD, USA.

Shimon Ullman, Department of Computer Science and Applied Mathematics, Weizmann Institute of Science, Rehovot, Israel.

Danny Harari, Department of Computer Science and Applied Mathematics, Weizmann Institute of Science, Rehovot, Israel.

Shlomo Bentin, Department of Psychology, Hebrew University of Jerusalem, Jerusalem, Israel, & Center of Neural Computation, Hebrew University of Jerusalem, Jerusalem, Israel.

References

- Akselrod-Ballin A, Ullman S. Distinctive and compact features. Image and Vision Computing. 2008;26:1269–1276.

- Amir O, Xu X, Biederman I. The spontaneous appeal by naïve subjects to nonaccidental properties when distinguishing among highly similar members of subspecies of birds closely resembles descriptions produced by experts. Paper presented at the Vision Sciences Society Annual Meeting; 2011.

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8:551–565. doi: 10.1162/jocn.1996.8.6.551.

- Bentin S, Golland Y, Flevaris A, Robertson LC, Moscovitch M. Processing the trees and the forest during initial stages of face perception: Electrophysiological evidence. Journal of Cognitive Neuroscience. 2006;18:1406–1421. doi: 10.1162/jocn.2006.18.8.1406.

- Biederman I. Recognition-by-components: A theory of human image understanding. Psychological Review. 1987;94:115–147. doi: 10.1037/0033-295X.94.2.115.

- Biederman I, Shiffrar MM. Sexing day-old chicks: A case study and expert systems analysis of a difficult perceptual learning task. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1987;13:640–645.

- Biederman I, Subramaniam S, Bar M, Kalocsai P, Fiser J. Subordinate-level object classification re-examined. Psychological Research. 1999;62:131–153. doi: 10.1007/s004260050047.

- Borghi AM. Object concepts and action: Extracting affordances from objects parts. Acta Psychologica. 2004;115:69–96. doi: 10.1016/j.actpsy.2003.11.004.

- Bukach CM, Gauthier I, Tarr MJ. Beyond faces and modularity: The power of an expertise framework. Trends in Cognitive Sciences. 2006;10:159–166. doi: 10.1016/j.tics.2006.02.004.

- Bukach CM, Philips SW, Gauthier I. Limits of generalization between categories and implications for theories of category specificity. Attention, Perception, & Psychophysics. 2010;72:1865–1874. doi: 10.3758/APP.72.7.1865.

- Cave CB, Kosslyn SM. The role of parts and spatial relations in object identification. Perception. 1993;22:229–248. doi: 10.1068/p220229.

- Cover TM, Thomas JA. Elements of information theory. New York: Wiley; 1991.

- Curby KM, Gauthier I. To the trained eye: Perceptual expertise alters visual processing. Topics in Cognitive Science. 2010;2:189–201. doi: 10.1111/j.1756-8765.2009.01058.x.

- De Winter J, Wagemans J. Segmentation of object outlines into parts: A large-scale integrative study. Cognition. 2006;99:275–325. doi: 10.1016/j.cognition.2005.03.004.

- Diamond R, Carey S. Why faces are and are not special: An effect of expertise. Journal of Experimental Psychology: General. 1986;115:107–117. doi: 10.1037//0096-3445.115.2.107.

- Gauthier I, Skudlarski P, Gore JC, Anderson AW. Expertise for cars and birds recruits brain areas involved in face recognition. Nature Neuroscience. 2000;3:191–197. doi: 10.1038/72140.

- Gauthier I, Tarr MJ. Unraveling mechanisms for expert object recognition: Bridging brain activity and behavior. Journal of Experimental Psychology: Human Perception and Performance. 2002;28:431–446. doi: 10.1037//0096-1523.28.2.431.

- Harel A, Bentin S. Stimulus type, level of categorization, and spatial-frequencies utilization: Implications for perceptual categorization hierarchies. Journal of Experimental Psychology: Human Perception and Performance. 2009;35:1264–1273. doi: 10.1037/a0013621.

- Harel A, Gilaie-Dotan S, Malach R, Bentin S. Top-down engagement modulates the neural expressions of visual expertise. Cerebral Cortex. 2010;20:2304–2318. doi: 10.1093/cercor/bhp316.

- Harel A, Ullman S, Epshtein B, Bentin S. Mutual information of image fragments predicts categorization in humans: Electrophysiological and behavioral evidence. Vision Research. 2007;47:2010–2020. doi: 10.1016/j.visres.2007.04.004.

- Harley EM, Pope WB, Villablanca JP, Mumford J, Suh R, Mazziotta JC, et al. Engagement of fusiform cortex and disengagement of lateral occipital cortex in the acquisition of radiological expertise. Cerebral Cortex. 2009;19:2746–2754. doi: 10.1093/cercor/bhp051.

- Hegdé J, Bart E, Kersten D. Fragment-based learning of visual object categories. Current Biology. 2008;18:597–601. doi: 10.1016/j.cub.2008.03.058.

- Hershler O, Golan T, Bentin S, Hochstein S. The wide window of face detection. Journal of Vision. 2010;10(10):21, 1–14. doi: 10.1167/10.10.21. http://www.journalofvision.org/content/10/10/21.

- Hershler O, Hochstein S. The importance of being expert: Top-down attentional control in visual search with photographs. Attention, Perception, & Psychophysics. 2009;71:1478–1486. doi: 10.3758/APP.71.7.1478.

- Johnson KE, Mervis CB. Effects of varying levels of expertise on the basic level of categorization. Journal of Experimental Psychology: General. 1997;126:248–277. doi: 10.1037//0096-3445.126.3.248.

- Lerner Y, Epshtein B, Ullman S, Malach R. Class information predicts activation by object fragments in human object areas. Journal of Cognitive Neuroscience. 2008;20:1189–1206. doi: 10.1162/jocn.2008.20082.

- Maurer D, Le Grand RL, Mondloch CJ. The many faces of configural processing. Trends in Cognitive Sciences. 2002;6:255–260. doi: 10.1016/s1364-6613(02)01903-4.

- McKone E, Kanwisher N, Duchaine BC. Can generic expertise explain special processing for faces? Trends in Cognitive Sciences. 2007;11:8–15. doi: 10.1016/j.tics.2006.11.002.

- Mel BW. SEEMORE: Combining color, shape, and texture histogramming in a neurally inspired approach to visual object recognition. Neural Computation. 1997;9:777–804. doi: 10.1162/neco.1997.9.4.777.

- Nestor A, Vettel JM, Tarr MJ. Task-specific codes for face recognition: How they shape the neural representation of features for detection and individuation. PLoS ONE. 2008;3:e3978. doi: 10.1371/journal.pone.0003978.

- Palmeri TJ, Wong AC, Gauthier I. Computational approaches to the development of perceptual expertise. Trends in Cognitive Sciences. 2004;8:378–386. doi: 10.1016/j.tics.2004.06.001.

- Peterson MA. Overlapping partial configurations in object memory: An alternative solution to classic problems in perception and recognition. In: Peterson MA, Rhodes G, editors. Perception of faces, objects and scenes: Analytic and holistic processes. New York: Oxford University Press; 2003. pp. 269–294.

- Peterson MA, Skow E. Inhibitory competition between shape properties in figure–ground perception. Journal of Experimental Psychology: Human Perception and Performance. 2008;34:251–267. doi: 10.1037/0096-1523.34.2.251.

- Philiastides MG, Ratcliff R, Sajda P. Neural representation of task difficulty and decision making during perceptual categorization: A timing diagram. Journal of Neuroscience. 2006;26:8965–8975. doi: 10.1523/JNEUROSCI.1655-06.2006.

- Philiastides MG, Sajda P. Temporal characterization of the neural correlates of perceptual decision making in the human brain. Cerebral Cortex. 2006;16:509–518. doi: 10.1093/cercor/bhi130.

- Richler JJ, Cheung OS, Wong ACN, Gauthier I. Does response interference contribute to face composite effects? Psychonomic Bulletin & Review. 2009;16:258–263. doi: 10.3758/PBR.16.2.258.

- Richler JJ, Wong YK, Gauthier I. Perceptual expertise as a shift from strategic interference to automatic holistic processing. Current Directions in Psychological Science. 2011;20:129–134. doi: 10.1177/0963721411402472.

- Robbins R, McKone E. No face-like processing for objects-of-expertise in three behavioural tasks. Cognition. 2007;103:34–79. doi: 10.1016/j.cognition.2006.02.008.

- Scott LS, Tanaka JW, Sheinberg DL, Curran T. A reevaluation of the electrophysiological correlates of expert object processing. Journal of Cognitive Neuroscience. 2006;18:1453–1465. doi: 10.1162/jocn.2006.18.9.1453.

- Scott LS, Tanaka JW, Sheinberg DL, Curran T. The role of category learning in the acquisition and retention of perceptual expertise: A behavioral and neurophysiological study. Brain Research. 2008;1210:204–215. doi: 10.1016/j.brainres.2008.02.054.

- Tanaka JW, Curran T. A neural basis for expert object recognition. Psychological Science. 2001;12:43–47. doi: 10.1111/1467-9280.00308.

- Tanaka JW, Farah MJ. Parts and wholes in face recognition. Quarterly Journal of Experimental Psychology A: Human Experimental Psychology. 1993;46:225–245. doi: 10.1080/14640749308401045.

- Tanaka JW, Gauthier I. Expertise in object and face recognition. In: Goldstone RL, Medin DL, Schyns PG, editors. Psychology of learning and motivation. Vol. 36. San Diego, CA: Academic Press; 1997. pp. 83–125.

- Tanaka K. Inferotemporal cortex and object vision. Annual Review of Neuroscience. 1996;19:109–139. doi: 10.1146/annurev.ne.19.030196.000545.

- Tanaka K. Columns for complex visual object features in the inferotemporal cortex: Clustering of cells with similar but slightly different stimulus selectivities. Cerebral Cortex. 2003;13:90–99. doi: 10.1093/cercor/13.1.90.

- Tarr MJ, Gauthier I. FFA: A flexible fusiform area for subordinate-level visual processing automatized by expertise. Nature Neuroscience. 2000;3:764–770. doi: 10.1038/77666.

- Turk MA, Pentland A. Eigenfaces for recognition. Journal of Cognitive Neuroscience. 1991;3:71–86. doi: 10.1162/jocn.1991.3.1.71.

- Tversky B. Parts, partonomies, and taxonomies. Developmental Psychology. 1989;25:983–995.

- Tversky B, Hemenway K. Objects, parts, and categories. Journal of Experimental Psychology: General. 1984;113:169–193.

- Ullman S. Object recognition and segmentation by a fragment-based hierarchy. Trends in Cognitive Sciences. 2007;11:58–64. doi: 10.1016/j.tics.2006.11.009.

- Ullman S, Sali E. Object classification using a fragment-based representation. Biologically Motivated Computer Vision, Proceedings. 2000;1811:73–87.

- Ullman S, Vidal-Naquet M, Sali E. Visual features of intermediate complexity and their use in classification. Nature Neuroscience. 2002;5:682–687. doi: 10.1038/nn870.

- Wiskott L, Fellous JM, Krüger N, von der Malsburg C. Face recognition by elastic bunch graph matching. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1997;19:775–779.

- Wong ACN, Palmeri TJ, Gauthier I. Conditions for face-like expertise with objects: Becoming a Ziggerin expert—But which type? Psychological Science. 2010;21:1108–1117. doi: 10.1111/j.1467-9280.2009.02430.x.

- Wong ACN, Palmeri TJ, Rogers BP, Gore JC, Gauthier I. Beyond shape: How you learn about objects affects how they are represented in visual cortex. PLoS ONE. 2009;4:e8405. doi: 10.1371/journal.pone.0008405.

- Zion-Golumbic E, Bentin S. Dissociated neural mechanisms for face detection and configural encoding: Evidence from N170 and induced gamma-band oscillation effects. Cerebral Cortex. 2007;17:1741–1749. doi: 10.1093/cercor/bhl100.
