Abstract

Background

To improve endoscopic surgical skills, an increasing number of surgical residents practice on box or virtual reality (VR) trainers. Current training is focused mainly on hand–eye coordination. Training methods that focus on applying the right amount of force are not yet available.

Methods

The aim of this project is to develop a low-cost training system that measures the interaction force between tissue and instruments and displays a visual representation of the applied forces inside the camera image. This visual representation continuously informs the subject about the magnitude and the direction of applied forces. To show the potential of the developed training system, a pilot study was conducted in which six novices performed a needle-driving task in a box trainer with visual feedback of the force, and six novices performed the same task without visual feedback of the force. All subjects performed the training task five times and were subsequently tested in a post-test without visual feedback.

Results

The subjects who received visual feedback during training exerted on average 1.3 N (STD 0.6 N) to drive the needle through the tissue during the post-test. This value was considerably higher for the group that received no feedback (2.6 N, STD 0.9 N). The maximum interaction force during the post-test was noticeably lower for the feedback group (4.1 N, STD 1.1 N) compared with that of the control group (8.0 N, STD 3.3 N).

Conclusions

The force-sensing training system provides us with the unique possibility to objectively assess tissue-handling skills in a laboratory setting. The real-time visualization of applied forces during training may facilitate acquisition of tissue-handling skills in complex laparoscopic tasks and could steepen the proficiency gain curves of trainees. However, larger randomized trials that also include other tasks are necessary to determine whether training with visual feedback about forces reduces the interaction force during laparoscopic surgery.

Keywords: Augmented reality, Visual force feedback, Training/courses, Box trainers, Laparoscopy, Endoscopy

In endoscopic surgery, trocar friction, scaling, and mirror effects make it difficult to estimate the forces exerted at the tips of the instruments during tissue manipulation. Because of this distorted haptic feedback, surgeons must rely on other sources of information (e.g., tissue deformation or color changes) to prevent tissue damage. In training, the role of force feedback is still debated. Some manufacturers incorporate some form of haptic feedback in their virtual reality (VR) trainers [1, 2], while others state that haptic feedback in VR is not essential for simple training tasks. For more complex tasks that are often used for skills assessment (e.g., suturing), many studies suggest that force feedback is essential [3–5]. Previous studies show that the interaction forces between tissue and needle during needle-driving are related to suture depth and quality, while the forces applied to the suture thread during knot-tying are related to the quality of the knot [4, 5]. Unfortunately, the force feedback provided by most commercial VR trainers is far from optimal and does not yet mimic the feedback experienced during real laparoscopic surgery [5, 6]. The box trainer is a good alternative: in this physical model, the haptic feedback at the instrument handles is as real as in live surgery. If the interaction force at the tip is fed back to the trainee in a clear and intuitive way, the trainee can learn how the distorted haptic feedback at the instrument handle and the color or shape changes of the tissue relate to the actual force applied at the tip. One option is to provide continuous feedback about the actual forces in the form of a visual representation integrated into the camera image. A potential drawback of visually displayed forces, however, is that the computations needed to integrate the measured forces into a modified camera image introduce time delays.
Many studies suggest that time delays can distract the trainee because of unnatural visualization during fast instrument movements [7–10]. For realistic instrument movements, the total time delay should be kept as small as possible. Further, the screen update frequency should be at least 30 Hz [11, 12].

The present research consists of two parts. The first objective is to develop a low-cost training system that continuously informs the trainee about the force applied on tissue. The second objective is to investigate the potential benefits of such visual force feedback. In the experiment, the performance of six novices who received visual feedback about the interaction force during training is compared with that of six novices who received no feedback during training. A lower magnitude of applied force in the post-test for the first group would indicate that novices can learn to reduce forces based on visual feedback.

Materials and methods

Hardware

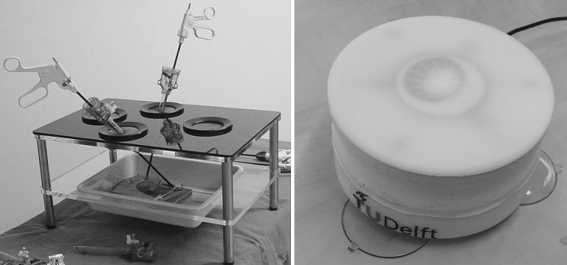

The Force Platform, a force sensor specially developed for force measurements in laparoscopic box trainers, can measure forces from 0 to 10 N in three dimensions with an accuracy of 0.1 N and a measurement frequency of 60 Hz [4]. A webcam (Logitech Webcam C600) was used to capture images of the instrument workspace. Figure 1 shows the latest version of the Force Platform and a standard box trainer that is commonly used in laparoscopic training. Figure 2 shows how the webcam and the Force Platform are mounted inside the modified box trainer. Eight white LEDs were placed around the camera lens to create a narrow light beam. As with real laparoscopic camera systems, this adjustable light beam gives a more realistic view inside the box trainer.

Fig. 1.

Left: standard box trainer for laparoscopic training. Right: new, waterproof version of the Force Platform

Fig. 2.

A webcam, light source, and new Force Platform equipped with artificial tissue are fixed inside the custom-made box trainer

Artificial tissue, imitating the skin and fat layers (Professional Skin Pad, Mk 2, Limbs & Things, Bristol, UK), was fixed on the Force Platform. On top of the artificial tissue, the point of insertion and direction were marked by two lines. The line thickness was 2 mm and the distance between the two lines was 9 mm.

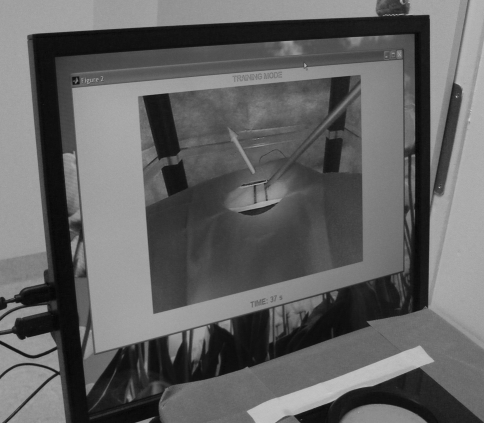

Software

A user interface was built in Matlab® (MathWorks, Natick, MA) to display the camera image on a separate screen while data were recorded from the Force Platform at a rate of 30 Hz. The data are saved in arbitrary units together with a time vector. Because the relationship between the sensor output and the applied force in newtons (N) is known from calibration, the output is converted to newtons [4]. In addition, the user interface can display an arrow inside the camera image that represents the magnitude and direction of the force exerted on the training task and the Force Platform. As Fig. 3 shows, the offset between the arrow’s point of origin and the lower end of the arrow prevents the arrow itself from obstructing the work field. The linear relationship between offset distance and force magnitude makes the visual force feedback more intuitive.
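The conversion and overlay steps described above can be sketched as follows. This is a minimal illustration, not the authors' Matlab implementation: it assumes a linear calibration of the form F = G(raw − z) with a hypothetical 3×3 gain matrix `gain` and zero-load reading `zero`, and a hypothetical arrow geometry in which both the tail offset and the arrow length scale linearly with the force magnitude. The platform x/y axes are assumed to be aligned with the image axes; a real overlay would need a camera model.

```python
import numpy as np


def raw_to_newtons(raw, gain, zero):
    """Linear calibration: force [N] = gain @ (raw - zero).

    raw  : one sample with shape (3,) or n samples with shape (n, 3),
           in the sensor's arbitrary units.
    gain : hypothetical 3x3 calibration matrix (N per raw unit).
    zero : hypothetical raw reading at zero load, shape (3,).
    Returns an (n, 3) array of forces in newtons.
    """
    raw = np.atleast_2d(np.asarray(raw, dtype=float))
    return (raw - np.asarray(zero, dtype=float)) @ np.asarray(gain, dtype=float).T


def arrow_geometry(force_n, origin_px, px_per_newton=20.0, offset_px_per_newton=10.0):
    """Return (tail, head) pixel coordinates of the overlay arrow.

    Hypothetical geometry: the arrow points along the in-plane (x, y)
    force direction; the gap between the insertion point and the arrow
    tail, and the arrow length, both grow linearly with |F|.
    """
    force_n = np.asarray(force_n, dtype=float)
    origin = np.asarray(origin_px, dtype=float)
    magnitude = np.linalg.norm(force_n)        # full 3D force magnitude
    planar = np.linalg.norm(force_n[:2])       # in-plane component only
    if planar == 0.0:
        # Zero or purely vertical force: no in-plane direction to draw.
        return origin, origin
    direction = force_n[:2] / planar
    tail = origin + offset_px_per_newton * magnitude * direction
    head = tail + px_per_newton * magnitude * direction
    return tail, head


# Usage with made-up numbers: 0.01 N per raw unit, zero reading of 100 units.
gain = np.eye(3) * 0.01
zero = np.array([100.0, 100.0, 100.0])
force = raw_to_newtons([400.0, 100.0, 100.0], gain, zero)[0]   # -> [3, 0, 0] N
tail, head = arrow_geometry(force, origin_px=(320.0, 240.0))
print(force, tail, head)
```

With these illustrative values, a reading 300 units above zero on the x axis maps to 3 N, and the arrow tail is drawn 30 px away from the insertion point, so the arrow never covers the point of needle insertion.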

Fig. 3.

Arrow representation of the force magnitude and direction. The arrow is displayed as an overlay inside the laparoscopic image. An offset between point of needle insertion and arrow prevents blockage of the view of interest

If available, information about the maximum allowable interaction force for a particular task can be stored in the user interface. If 75% of the maximum interaction force is reached, the arrow turns from green to yellow. If the maximal interaction force is exceeded, the arrow turns red.
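The color rule above can be expressed as a small function. This is a sketch under stated assumptions: the function name `arrow_color` is hypothetical, the arrow is assumed to be green by default, and it is assumed to stay green when no maximum is stored for the task (the text only says the maximum is used "if available").

```python
def arrow_color(force_n, max_force_n=None):
    """Return the overlay color for a force magnitude (in N).

    green : below 75% of the stored maximum (or no maximum stored)
    yellow: 75% of the maximum reached, maximum not exceeded
    red   : maximum exceeded
    """
    if max_force_n is None:
        # Assumption: without a task-specific maximum the arrow stays green.
        return "green"
    if force_n > max_force_n:
        return "red"
    if force_n >= 0.75 * max_force_n:
        return "yellow"
    return "green"


for f in (1.0, 3.0, 4.0, 4.5):
    print(f, arrow_color(f, max_force_n=4.0))
```

Note that a force exactly equal to the maximum is shown in yellow, because the text switches to red only when the maximum is exceeded.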

Time delays

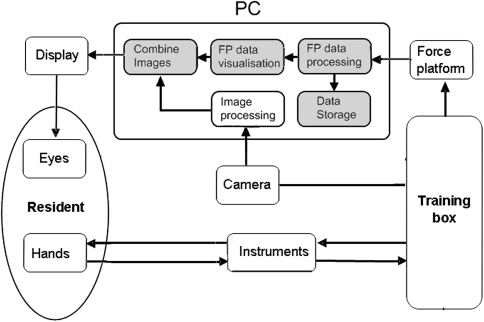

To investigate whether the processing time of the video and Force Platform data is within the defined specifications, additional tests were performed. Since the process consists of multiple computational steps, multiple time delays are expected. The blue/gray blocks in Fig. 4 illustrate where processing time is lost between the moment an image is captured by the camera and the moment it is displayed on the screen. In addition, some time is lost before the data from the Force Platform are interpreted and visualized inside the recorded camera image.

Fig. 4.

Time delays in the training system. The colored blocks show where noticeable processing time is lost during training. The total time delay is determined by a summation of the delays in each individual colored block in the representation

An additional video camera was placed on a tripod in front of the training setup. To determine the delay between image capture by the webcam and image presentation on the monitor, an instrument handle was moved as fast as possible toward a marked bar (point A) above the box trainer. The video recorder captured both the movements of the instrument handle above the box and the corresponding instrument motions displayed on the webcam monitor. Figure 5 shows a picture from the video recorder of the training setup with the two marked points. The number of frames between the moment the instrument handle reaches point A and the moment the displayed instrument reaches the corresponding point B on the monitor determines the delay of the video system. This test was repeated six times while the complete setup, i.e., box, screen, and hand, was recorded at 30 frames per second. The first three tests were conducted 10 s after the system was started, and the last three tests 5 min after the system was started. During these tests, no feedback was generated from the Force Platform data. Since the force feedback option will not always be used, the delays found in these tests indicate the system’s processing time when force feedback is switched off.
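Converting counted frames into a delay is simple arithmetic: at 30 frames per second, each frame spans 1/30 s (about 33 ms), which is also the resolution of this measurement. Because the recording covers the whole chain from capture to display, the counted interval already contains the sum of the block delays in Fig. 4. A minimal sketch; the frame counts below are hypothetical and are not the study's data.

```python
import statistics

RECORDING_FPS = 30.0  # frame rate of the external recording camera


def frames_to_delay_s(n_frames, fps=RECORDING_FPS):
    """Delay in seconds for a counted number of recorded frames."""
    return n_frames / fps


def summarize_delays(frame_counts, fps=RECORDING_FPS):
    """Mean and sample standard deviation of the delays, in seconds."""
    delays = [frames_to_delay_s(n, fps) for n in frame_counts]
    return statistics.mean(delays), statistics.stdev(delays)


# Hypothetical counts for six trials (three after 10 s, three after 5 min).
counts = [1, 2, 1, 2, 1, 2]
mean_s, std_s = summarize_delays(counts)
print(f"resolution {1 / RECORDING_FPS:.3f} s, mean {mean_s:.3f} s, STD {std_s:.3f} s")
```

Because each count is an integer number of frames, individual delays can only take values in steps of about 33 ms; averaging over repeated trials is what yields a finer estimate.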

Fig. 5.

Determination of the total time delay. An additional camera (not in photo) is placed in front of the system and records the instrument’s movements and monitor simultaneously. After recording, the number of frames can be counted between the moment the real instrument reaches point A and the moment that the displayed instrument reaches the corresponding point B at the screen

Besides the delay in the display of the instruments, it is important to determine the time between sensor loading and the moment the force feedback is displayed on the monitor. This delay is caused by the time required to process all video and sensor data before they are visualized on the monitor. To determine this delay, an instrument was placed in a trocar and pressed on the artificial tissue with a small constant load of 200 g. The experimenter then manually tapped the instrument handle with a fast downward motion. As a result, the instrument shaft was pressed against the Force Platform and the registered load increased. This test was repeated six times. Again, the first three tests were conducted 10 s after the system was started and the last three tests 5 min after the system was started. Afterward, the number of recorded frames between the moment the instrument handle was tapped and the moment the arrow was displayed on the screen was taken as the total time delay of the system.

Because the processing time may depend on the processor speed and the capabilities of the display adapter, all tests were performed on two different, commonly used computer systems to get an impression of the variance in time delays. The first system (PC-1) was a Dell Dual Core E6600 desktop computer that runs at 2.4 GHz and has 2 GB of DDR2 RAM; it used an Intel Q965/963 Express chipset family as the display adapter. The second system (PC-2) was an HP laptop with an Intel Core 2 Duo T7700 processor that runs at 2.4 GHz and has 3 GB of DDR3 RAM; it is equipped with an ATI Mobility Radeon HD 2600 display adapter.

Finally, six experienced surgeons were asked to perform a complete suture task on the training system to determine whether the system delay affected their performance. The knot type was not prescribed, so each surgeon could produce a suture similar to the one they would use in surgery. Four of the surgeons performed the task on the setup with PC-1 and two on the setup with PC-2. All surgeons were asked to rate the quality of their own suture.

Pilot study: needle-driving task

A pilot study was performed to investigate the potential benefits of visual feedback during a needle-driving task. During the task, the participant was asked to pick up a needle (Vicryl 3-0 SH plus 26 mm, Ethicon, Somerville, NJ) with the needle drivers and insert it at the right line on the tissue (Fig. 3). Next, the participant was asked to drive the needle through the tissue in the desired direction and pull it out completely at the left line. This needle-driving task was performed during the pretest, the training session, and the post-test.

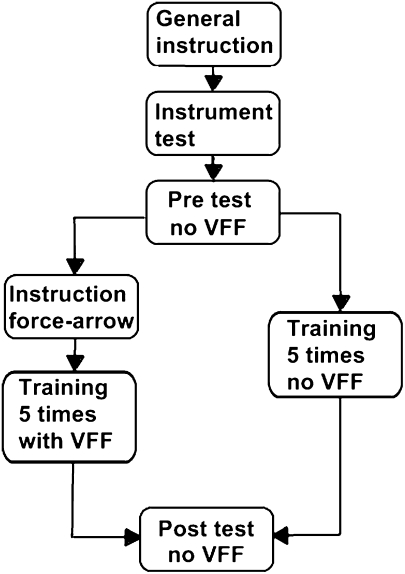

Figure 6 illustrates the setup of this pilot study and how the participants were divided into two groups. The study population consisted of 12 first-year medical students without hands-on experience in laparoscopic surgery or training, who were randomly assigned to one of the two groups. During training, the participants in the first group received real-time visual feedback about the interaction force until they completed the task. The participants in the second group received no visual feedback and thus performed the same task as in the pre- and post-tests. During the training session, all participants performed the needle-driving task five times.

Fig. 6.

Setup of this pilot study. This illustration shows how the participants were divided into two groups. One group received visual feedback about the interaction forces (VFF) during the training session and one group received no visual feedback

Each participant performed the pretest, training session, and post-test in chronological order. Before the pretest, both groups received general instructions about the needle-driving task, and all participants were allowed to handle and test the instruments. In addition, both groups were told that the artificial tissue is delicate and should be handled with care. After the pretest, the first group was instructed on how the size and direction of the visualized arrow were related to the exerted force. The second group received no extra instructions.

After all participants completed the tests, any differences in maximum and mean nonzero force between the groups during the pre- and post-tests were determined with Student’s t-test (SPSS v16, SPSS, Inc., Chicago, IL). A p value < 0.05 was taken as a significant difference. Finally, all participants from the group that received feedback were asked if they understood the given feedback and whether it helped them to minimize the applied force.
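For readers who want to see how the between-group comparison is formed, the sketch below computes Student's two-sample t statistic (pooled variance) from group summary statistics. The inputs are the rounded post-test means and STDs reported in the Abstract with n = 6 per group; the study does not report t statistics, so the output only illustrates the calculation and is not a reproduction of the SPSS analysis. The function name `student_t_from_stats` is hypothetical.

```python
import math


def student_t_from_stats(mean1, sd1, n1, mean2, sd2, n2):
    """Two-sample Student's t statistic with pooled (equal) variances.

    Returns (t, degrees_of_freedom).
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    return (mean1 - mean2) / standard_error, df


# Post-test means/STDs from the Abstract, n = 6 per group (values are rounded).
t_mean, df = student_t_from_stats(1.3, 0.6, 6, 2.6, 0.9, 6)   # mean nonzero force
t_max, _ = student_t_from_stats(4.1, 1.1, 6, 8.0, 3.3, 6)     # maximum force
print(f"df = {df}, t(mean force) = {t_mean:.2f}, t(max force) = {t_max:.2f}")
```

For orientation, the two-sided critical value at the 5% level with 10 degrees of freedom is about 2.23. `scipy.stats.ttest_ind_from_stats` performs the same pooled-variance calculation and also returns a p value.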

Results

Time delays

The delays measured in all tests on both computers remained almost constant during the test session. The average delay across all tests was 0.05 (STD 0.02) s for PC-1 and 0.04 (STD 0.01) s for PC-2. One of the expert surgeons indicated that he noticed some delay during fast movements on PC-1. However, he also explained that this delay had no effect on the task itself, since suturing requires slow motions. The other five experts did not mention any delays during or after the suture task. After completing the task, all surgeons described the quality of their own suture as “good.”

Pilot study: needle-driving task

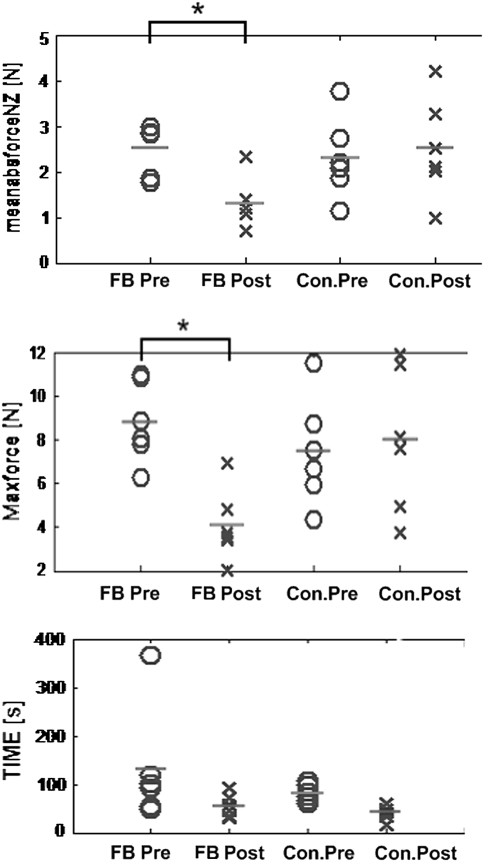

Figure 7 shows the results of the pre- and post-tests of both groups. The left column represents the group that received visual feedback about the interaction force during training; the right column represents the group that received no such feedback. The mean absolute nonzero interaction force and the maximum interaction force during the post-test are noticeably lower for the feedback group (1.3 N, STD 0.6 N and 4.1 N, STD 1.1 N) than the same parameters measured during the post-test of the control group (2.6 N, STD 0.9 N and 8.0 N, STD 3.3 N). With mean values of 55.4 (STD 24) s and 51.2 (STD 15) s, the time to completion in the post-test is comparable for the two groups. All participants in the feedback group reported that they understood what the arrow represented and how its properties related to the exerted interaction force. Four of the six participants reported that the arrow helped them to minimize the interaction force during needle-driving. Two of those four explained that the force arrow taught them that extracting the curved needle while rotating it results in lower forces.

Fig. 7.

Results of the pilot study. FB Pre, pretest of the group that received visual feedback; FB post, post-test of the group that received visual feedback; Con.Pre, pretest of the control group that received no visual feedback; Con.Post, post-test of the control group that received no visual feedback. The “*” indicates that the difference between pre- and post-test is significant

Discussion

The results of our study show a significant improvement in tissue-handling force after training with visual feedback of the force. The group that received visual feedback of the force during training applied on average 50% less force during the post-test than the control group. The maximum force applied during the post-test was on average 48% lower for the group that received visual feedback than for the control group. These results, together with the subjective judgments of the six expert surgeons regarding the system delay, suggest that training systems with visual feedback about applied forces have added value for the training of residents.
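The relative reductions follow from the post-test means reported in the Abstract (mean nonzero force 1.3 N vs 2.6 N; maximum force 4.1 N vs 8.0 N). A minimal check of that arithmetic; the helper name `percent_reduction` is hypothetical, and the reduction for the maximum force comes out at 48.75% before rounding.

```python
def percent_reduction(feedback_value, control_value):
    """Reduction of the feedback group relative to the control group, in %."""
    return 100.0 * (1.0 - feedback_value / control_value)


print(f"mean force: {percent_reduction(1.3, 2.6):.2f}% lower")   # post-test means
print(f"max force:  {percent_reduction(4.1, 8.0):.2f}% lower")   # post-test maxima
```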

The results of the pilot study suggest that visual feedback of the force reduces the force exerted on the tissue during the needle-driving phase of a suture task. In addition, the visual force feedback had an immediate effect on the needle-driving strategy of two of the six participants: based on the feedback, they learned to use the curvature of the needle during extraction to minimize the exerted forces. Furthermore, the improvement in task completion time was nearly the same for the two groups. This could indicate that visualizing the interaction force as an arrow does not influence the complexity of the suture task. This result corresponds with the work of Reiley et al. [13], who concluded that visual force feedback during robot-assisted surgery reduced forces and decreased force inconsistencies among novice robotic surgeons, although elapsed time and knot quality were unaffected.

The current study was limited to investigating the effect of visual feedback about interaction forces during the needle-driving phase of a suture task. Further studies are necessary to determine whether it is possible to teach participants to minimize the interaction forces on tissue during the knot-tying phase of the suture task. Also, studies with larger groups of subjects and longer time periods between post-test and training session are needed to determine whether the reduction of force is temporary or permanent. Furthermore, more research is required to identify other training tasks that can benefit from this type of training.

It is important to minimize time delays when providing feedback during training. Time delays cause unnatural visualization of motions and may disrupt the motor behavior of the trainee. In the experiment, only one experienced surgeon made a remark about a delay in the display of images at the start of the trial. However, this delay was noticed only for the first 2 s after the system was started. Further investigation of the software confirmed that in the first 2 s, frames are buffered by the camera software. During this initialization process, the delay time increased up to 0.2 s. To solve this minor problem, we modified the software to force the application to finish initialization before the task started.

Regarding the time delay of the developed training system, we found that the delay was comparable to or lower than the delays of existing simulators. For professional simulators, these delays are between 45 and 141 ms [8–10]. In the current study, the average delay was 50 ms for PC-1 and 40 ms for PC-2. Since voluntary human movements reach frequencies of at most about 10 Hz, the computers used in this study appear fast enough to generate intuitive feedback [7]. With faster, newer computers in combination with faster camera systems, delays of less than 0.04 s could be reached.

Conclusion

The force-sensing training system provides us with the unique possibility to objectively assess tissue-handling skills in a laboratory setting. The real-time visualization of applied forces during training may facilitate acquisition of tissue-handling skills for complex laparoscopic tasks and could steepen the proficiency gain curves of trainees. However, larger randomized trials that also include other tasks are necessary to determine whether training with visual feedback about forces reduces the interaction force during laparoscopic surgery.

Acknowledgment

The authors thank all students, surgeons, and gynecologists for participating in this study. They thank all surgeons and gynecologists for providing practical information about surgical suture tasks in box trainers.

Conflicts of interest

Tim Horeman, Sharon P. Rodrigues, Frank-Willem Jansen, Jenny Dankelman, and John J. van den Dobbelsteen have no conflicts of interest or financial ties to disclose.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Footnotes

T. Horeman and S. P. Rodrigues contributed equally to this work.

References

- 1. Schijven M, Jakimowicz J. Virtual reality surgical laparoscopic simulators. Surg Endosc. 2003;17(12):1943–1950. doi: 10.1007/s00464-003-9052-6.

- 2. Aggarwal R, Moorthy K, Darzi A. Laparoscopic skills training and assessment. Br J Surg. 2004;91(12):1549–1558. doi: 10.1002/bjs.4816.

- 3. Tholey G, Desai JP, Castellanos AE. Force feedback plays a significant role in minimally invasive surgery: results and analysis. Ann Surg. 2005;241(1):102–109. doi: 10.1097/01.sla.0000149301.60553.1e.

- 4. Horeman T, Rodrigues SP, Jansen F-W, Dankelman J, van den Dobbelsteen JJ. Force measurement platform for training and assessment of laparoscopic skills. Surg Endosc. 2010;24(12):3102–3108. doi: 10.1007/s00464-010-1096-9.

- 5. Botden SMBI, Torab F, Buzink SN, Jakimowicz JJ. The importance of haptic feedback in laparoscopic suturing training and the additive value of virtual reality simulation. Surg Endosc. 2008;22(5):1214–1222. doi: 10.1007/s00464-007-9589-x.

- 6. Chmarra MK, Dankelman J, van den Dobbelsteen JJ, Jansen FW. Force feedback and basic laparoscopic skills. Surg Endosc. 2008;22(10):2140–2148. doi: 10.1007/s00464-008-9937-5.

- 7. Williams LEP, Loftin RB, Aldridge HA, Leiss EL, Bluethmann WJ (2002) Kinesthetic and visual force display for telerobotics. In: Proceedings 2002 IEEE international conference on robotics and automation (Cat. No. 02CH37292), May 2002, pp 1249–1254

- 8. Berkouwer WR, Stroosma O, Paassen MMRV, Mulder M, Mulder JAB (2005) Measuring the performance of the SIMONA research. Channels (August):15–18

- 9. Stroosma O, Mulder M, Postema FN (2007) Measuring time delays in simulator displays. In: AIAA modeling and simulation technologies conference and exhibit, 20–23 August, 2007, pp 1–9

- 10. Kijima R, Yamada E, Ojika T (2001) A development of reflex HMD - HMD with time delay compensation capability. In: Proceedings of international symposium on mixed reality, March 2001, pp 1–8

- 11. Cannys J (2000) Haptic interaction with global deformations. In: Proceedings of the 2000 IEEE international conference on robotics & automation 2000 (April), pp 2428–2433

- 12. Mendoza CA, Laugier C (2001) Realistic haptic rendering for highly deformable virtual objects. In: IEEE virtual reality conference 2001 (VR 2001), p 264. http://www.computer.org/portal/web/csdl/doi/10.1109/VR.2001.913795

- 13. Reiley CE, Akinbiyi T, Burschka D, et al. Effects of visual force feedback on robot-assisted surgical task performance. J Thorac Cardiovasc Surg. 2008;135(1):196–202. doi: 10.1016/j.jtcvs.2007.08.043.