Abstract

Introduction:

Communication of critical imaging findings is an important component of medical quality and safety. A fundamental challenge is the retrieval of radiology reports that contain these findings. This study describes the expressiveness and coverage of existing medical terminologies for critical imaging findings and evaluates radiology report retrieval using each terminology.

Methods:

Four terminologies were evaluated: National Cancer Institute Thesaurus (NCIT), Radiology Lexicon (RadLex), Systematized Nomenclature of Medicine (SNOMED-CT), and International Classification of Diseases (ICD-9-CM). Concepts in each terminology were identified for 10 critical imaging findings. Three findings were subsequently selected to evaluate document retrieval.

Results:

SNOMED-CT had the highest mean number of terms (mean=21.5) across the ten critical findings. However, retrieval rate and precision varied between terminologies for the three findings evaluated.

Conclusion:

No single terminology is optimal for retrieving radiology reports with critical findings. The expressiveness of a terminology does not consistently correlate with radiology report retrieval.

Introduction

Communication of critical test results between caregivers is an important component of medical quality and safety and is one of the Joint Commission’s National Patient Safety Goals for 2011.(1) Monitoring critical imaging result communication is essential in achieving this goal. A fundamental challenge, however, is the retrieval of radiology reports that contain critical results. Quality assurance programs have historically relied on manual searches of small numbers of radiology reports, which are in the form of narrative text and are typically unstructured.(2) The use of natural language processing (NLP) applications, specifically information retrieval (IR) applications, is a viable alternative to overcome the limitations of manual analysis of narrative radiology reports.

A significant problem with using NLP to retrieve these reports is formulating the necessary search term lists for each potentially critical radiology finding. Term lists are usually generated manually by subject matter experts, an exercise that is time-intensive and often produces variable results. Leveraging existing medical terminologies could potentially generate term lists in a more timely and consistent manner. However, there are numerous medical terminologies, developed for a variety of purposes, making it difficult to select the most appropriate terminology for a given clinical finding.

The goal of this study is to evaluate the expressiveness and coverage of each of four existing terminologies for generating term lists for ten potentially critical radiology findings.(3;4) In addition, retrieval of radiology reports that contain three critical imaging findings will be assessed using the corresponding term lists generated from the four terminologies. The four terminologies utilized for this study are the National Cancer Institute Thesaurus (NCIT), the Radiology Lexicon (RadLex), the Systematized Nomenclature of Medicine (SNOMED-CT), and the International Classification of Diseases, 9th edition (ICD-9-CM).(5–8) These terminologies are readily available and have been used extensively in the medical domain.

Terminologies

A short description of the four terminologies evaluated in this study follows.

NCIT

NCIT has approximately 34,000 concepts.(6) It contains a broad range of clinical terminology with extensive cancer-related concepts (e.g., cancer subtypes, cancer-specific treatments such as chemotherapy). It is updated monthly by the National Cancer Institute (NCI). The logical relationship definitions are moderately extensive. In addition, the thesaurus has been augmented with a substantial number of terms that are not cancer-related.

RadLex

RadLex contains approximately 10,000 concepts.(7) It is maintained by the Radiological Society of North America (RSNA) and was developed by an expert panel of radiologists and specialists in the medical imaging field. First released in 2006, it contains many radiology-specific concepts, including concepts relating to imaging examination types, imaging findings, imaging devices, and techniques for performing imaging examinations. It has the least extensively developed logical relationship definitions among the terminologies evaluated.

SNOMED-CT

SNOMED-CT contains approximately 308,000 concepts.(5) It originated from a merger and expansion of the College of American Pathologists’ SNOMED Reference Terminology (SNOMED-RT) and the United Kingdom’s National Health Service (NHS) Clinical Terminology, otherwise known as Read codes, in 2002. It was then acquired by the International Health Terminology Standards Development Organization (IHTSDO) in 2007. The logical relationship definitions are more extensive than those of the other three terminologies evaluated.

ICD-9-CM

The ICD-9-CM contains approximately 13,000 concepts.(8) Originally based upon Dr. Bertillon’s cause of death list in 1893, it has been extensively updated and modified to code and classify morbidity data from inpatient and outpatient records and physician offices.(8) It is used extensively for billing. The version evaluated is based on the World Health Organization 9th revision in 1977, which has been further modified for clinical use by the National Center for Health Statistics and Centers for Medicare and Medicaid Services (CMS) and is updated annually.

Methods

In order to evaluate term lists generated from each terminology, ten potentially critical imaging findings were randomly selected from a starter set of 29 highest urgency category critical results. This set contains examples of potentially critical imaging findings that may be utilized for critical result communication initiatives (Table 1).(9)

Table 1.

Potentially critical imaging findings used to evaluate expressiveness and coverage

| Aortic dissection | Ovarian torsion |
| Appendicitis | Pneumothorax |
| Ectopic pregnancy* | Pulmonary embolism* |
| Intracranial hemorrhage* | Testicular torsion |
| Intrauterine fetal demise | Volvulus (of gastrointestinal tract) |

Findings with asterisks were also used to evaluate report retrieval.

For each critical imaging finding, the National Cancer Institute (NCI) Metathesaurus Term Browser was used to search each terminology and record all synonyms for each parent concept corresponding to each potentially critical imaging finding.(10) The relationships marked as “synonym” by the NCI Metathesaurus browser were utilized. Synonyms include all the unique text descriptions of the concept as well as lexical variants, e.g., for the concept of intracranial hemorrhage NCIT had the following synonyms: “bleeding, intracranial”, “hemorrhage, intracranial”, “intracranial bleeding”, and “intracranial hemorrhage”. Synonyms of any immediate children concepts, defined by the “child” relationship, were likewise added to the list. For example, for the concept of intracranial hemorrhage NCIT had the following synonyms for the two second order (children) terms of “cerebral hemorrhage” and “intracranial tumor hemorrhage”: “extradural hemorrhage”, “hemorrhage, extradural”, “non-traumatic extradural hemorrhage”, “pituitary hemorrhage”, “hemorrhage, subarachnoid”, “subarachnoid hemorrhage”, “hemorrhage, subdural”, “subdural hemorrhage”, “bleeding, cerebral”, “cerebral bleeding”, “cerebral hemorrhage”, “hemorrhage, cerebral”, “intracerebral hemorrhage”, “parenchymatous hemorrhage”, and “intracranial tumor hemorrhage.”
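The term list construction described above can be sketched as follows. This is a minimal illustration, not the procedure actually run in the NCI Term Browser: the `TERMINOLOGY` dictionary layout and the `build_term_list` function are hypothetical, only a subset of the NCIT synonyms quoted above is included, and the assignment of synonyms to each child concept is an assumption.

```python
# Hypothetical in-memory fragment of a terminology. The layout is an
# assumption; the synonyms are a subset of those quoted in the text for NCIT,
# and their grouping under each child concept is assumed for illustration.
TERMINOLOGY = {
    "intracranial hemorrhage": {
        "synonyms": [
            "bleeding, intracranial",
            "hemorrhage, intracranial",
            "intracranial bleeding",
            "intracranial hemorrhage",
        ],
        "children": ["cerebral hemorrhage", "intracranial tumor hemorrhage"],
    },
    "cerebral hemorrhage": {
        "synonyms": [
            "bleeding, cerebral",
            "cerebral bleeding",
            "cerebral hemorrhage",
            "hemorrhage, cerebral",
            "intracerebral hemorrhage",
            "parenchymatous hemorrhage",
        ],
        "children": [],
    },
    "intracranial tumor hemorrhage": {
        "synonyms": ["intracranial tumor hemorrhage"],
        "children": [],
    },
}


def build_term_list(terminology, parent):
    """Collect synonyms of the parent concept and of its immediate children."""
    entry = terminology.get(parent)
    if entry is None:
        return []  # the terminology does not cover this concept

    terms = list(entry["synonyms"])
    for child in entry["children"]:
        terms.extend(terminology.get(child, {}).get("synonyms", []))

    # Remove case-insensitive duplicates while preserving order.
    seen = set()
    unique_terms = []
    for term in terms:
        key = term.lower()
        if key not in seen:
            seen.add(key)
            unique_terms.append(term)
    return unique_terms


term_list = build_term_list(TERMINOLOGY, "intracranial hemorrhage")
print(len(term_list))  # 4 first order + 7 second order terms in this fragment
```

Only immediate children are traversed, mirroring the restriction to first and second order terms; deeper descendants are deliberately ignored.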

The term lists generated for each critical imaging finding using each of the four terminologies were evaluated using coverage and expressiveness. We measure expressiveness as the number of search terms in the terminology for a given concept, which includes all relevant synonyms of the parent concept and of any immediate children concepts, in order to roughly estimate the expressive power of each terminology.(4) Coverage is a binary measure that reflects whether a terminology contains a given concept.(3)

Subsequently, these term lists were utilized for radiology report retrieval, and further evaluation was performed using retrieval rate and document retrieval precision. For this evaluation, three critical imaging findings were selected (ectopic pregnancy, intracranial hemorrhage, and pulmonary embolism), and the gold standard of whether or not the radiology report contained a positive mention of the concept was determined by manual review as detailed below.

Data Sources and Setting

This retrospective study was performed at a 750-bed urban adult tertiary referral academic medical center, and affiliated sites including a cancer center, a community hospital, and six outpatient imaging facilities. Institutional review board approval was obtained for this HIPAA-compliant study, with waiver of informed consent for performing retrospective medical record review.

Findings consistent with three critical imaging results were queried from three corresponding examination types: chest CT examinations with contrast for pulmonary embolism, head CT and brain MRI examinations for intracranial hemorrhage, and first trimester pelvic ultrasounds for ectopic pregnancy. All reports from January through December 2009 were analyzed.

Radiology Report Retrieval

We utilized a customized toolkit developed at our institution, iSCOUT, to perform automated report retrieval for unstructured radiology reports. iSCOUT comprises a core set of tools, utilized in series, that execute a query and retrieve a list of relevant documents, rendered as a list of accession numbers corresponding to unstructured radiology reports. The components include the Data Loader, Header Extractor, Terminology Interface, Reviewer and Analyzer. The matching algorithm proceeds by parsing the text document into individual reports, each identified by a unique accession number. It then parses each report into individual words and sentences. A match is determined for a radiology report when a sentence contains all of the tokens within the search term, even when the tokens are not adjacent to each other. The toolkit also includes a negator that excludes reports when the query terms are negated. Further, an interface for expanding query terms to include related terms or synonyms was enabled. The Terminology Interface component, in particular, allows query expansion based on synonymous or other related terms that are derived from an expert or a terminology. This interface enables comparison of retrieval performance utilizing term lists derived from multiple sources. All iSCOUT components are written in the Java programming language.
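The matching rule described above (a sentence matches when it contains every token of a search term, in any position, and negated mentions are excluded) can be illustrated with a short sketch. This is not the iSCOUT implementation, which is written in Java and is not reproduced here; the function names, the sentence splitter, the negation cue list, and the sample reports are all assumptions made for illustration.

```python
import re

# Assumed, deliberately minimal list of negation cues.
NEGATION_CUES = {"no", "not", "without", "negative"}


def split_sentences(report_text):
    """Naive sentence splitter: break after '.', '?' or '!' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.?!])\s+", report_text) if s.strip()]


def tokenize(text):
    """Lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def sentence_matches(sentence, term):
    """True when every token of the term occurs somewhere in the sentence."""
    term_tokens = set(tokenize(term))
    return bool(term_tokens) and term_tokens <= set(tokenize(sentence))


def is_negated(sentence, term):
    """Crude negator: a cue word appears before the first matching term token."""
    tokens = tokenize(sentence)
    term_tokens = set(tokenize(term))
    first_hit = min(i for i, tok in enumerate(tokens) if tok in term_tokens)
    return any(tok in NEGATION_CUES for tok in tokens[:first_hit])


def retrieve(reports, term_list):
    """Return accession numbers of reports with at least one non-negated match."""
    hits = []
    for accession, text in reports.items():
        for sentence in split_sentences(text):
            if any(
                sentence_matches(sentence, term) and not is_negated(sentence, term)
                for term in term_list
            ):
                hits.append(accession)
                break  # one matching sentence is enough for this report
    return hits


# Invented sample reports keyed by hypothetical accession numbers.
reports = {
    "A001": "There is a filling defect in the right lower lobe. "
            "Findings are consistent with acute embolism of the pulmonary artery.",
    "A002": "No evidence of pulmonary embolism.",
    "A003": "Intracranial, predominantly subdural, hemorrhage is seen along the left convexity.",
}

print(retrieve(reports, ["pulmonary embolism", "intracranial hemorrhage"]))
# ['A001', 'A003']
```

Report A001 is retrieved even though "embolism" and "pulmonary" are not adjacent, while A002 is excluded by the negation check. A cue-before-term heuristic of this kind is far simpler than a production negator and will miss many constructions.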

Relevant Report Retrieval and Precision

The number of reports retrieved utilizing term lists generated from each terminology was used to estimate retrieval performance. Precision was calculated to evaluate whether retrieved reports were relevant to the potentially critical imaging finding. Precision is defined as the proportion of true positive reports among all reports retrieved. Precision was determined by manual review of a random subset of retrieved reports to identify true positives. Ten percent of retrieved reports for ectopic pregnancy and pulmonary embolism and 5% of retrieved reports for intracranial hemorrhage were reviewed by two independent physicians. Kappa agreement was measured for reports retrieved with each terminology.(11) Disagreements between the two reviewers were subsequently settled by consultation until both reviewers agreed on a final adjudication. Using this gold standard, a retrieved report was classified as a true positive if the potentially critical finding was present in the report, or as a false positive if the finding was not present. For all false positive results, the reason for false positive classification was manually determined and classified as either failure of negation or other.
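The two measures used in this evaluation can be written out explicitly. The sketch below assumes that the kappa statistic is Cohen's kappa for two raters and uses the agreement labels of Landis and Koch;(11) the reviewer labels are invented for illustration and are not study data.

```python
def precision(adjudicated):
    """Proportion of reviewed retrieved reports that are true positives.

    adjudicated: list of booleans, True when the finding is present.
    """
    return sum(adjudicated) / len(adjudicated)


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    categories = set(rater_a) | set(rater_b)
    expected = sum(
        (rater_a.count(c) / n) * (rater_b.count(c) / n) for c in categories
    )
    if expected == 1.0:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1 - expected)


def landis_koch_label(kappa):
    """Agreement label following the Landis and Koch benchmarks."""
    if kappa < 0:
        return "poor"
    for upper, label in [
        (0.20, "slight"),
        (0.40, "fair"),
        (0.60, "moderate"),
        (0.80, "substantial"),
    ]:
        if kappa <= upper:
            return label
    return "almost perfect"


# Invented labels for ten reviewed reports (1 = finding present).
reviewer_1 = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]
reviewer_2 = [1, 1, 1, 1, 1, 0, 0, 0, 0, 1]

kappa = cohen_kappa(reviewer_1, reviewer_2)
print(round(kappa, 2), landis_koch_label(kappa))  # 0.58 moderate

# Precision after adjudication, again with invented labels.
print(precision([True] * 9 + [False]))  # 0.9
```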

Results

The expressiveness of each terminology for each of the ten critical imaging findings is shown in Table 2.

Table 2.

Total term count for each critical imaging finding by terminology

| Potentially Critical Finding | NCIT | RadLex | SNOMED-CT | ICD-9-CM |
|---|---|---|---|---|
| Aortic dissection | 2 | 1 | 4 | 7 |
| Appendicitis | 4 | 1 | 20 | 56 |
| Ectopic pregnancy | 2 | 1 | 21 | 15 |
| Intracranial Hemorrhage | 19 | 3 | 80 | 37 |
| Intrauterine Fetal Demise | 4 | 0 | 14 | 11 |
| Ovarian torsion | 0 | 0 | 4 | 6 |
| Pneumothorax | 1 | 1 | 24 | 8 |
| Pulmonary Embolism | 2 | 1 | 26 | 21 |
| Testicular torsion | 1 | 0 | 7 | 10 |
| Volvulus | 0 | 1 | 15 | 6 |
| Mean Expressiveness | 3.5 | 0.9 | 21.5 | 17.7 |
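The mean expressiveness row of Table 2 and the coverage percentages can be recomputed directly from the term counts. In this sketch the counts are copied from Table 2, and a finding is treated as covered when its term count is greater than zero, which is an assumption about how the binary coverage measure maps onto the table.

```python
# Term counts from Table 2, in row order (aortic dissection ... volvulus).
TABLE_2 = {
    "NCIT":      [2, 4, 2, 19, 4, 0, 1, 2, 1, 0],
    "RadLex":    [1, 1, 1, 3, 0, 0, 1, 1, 0, 1],
    "SNOMED-CT": [4, 20, 21, 80, 14, 4, 24, 26, 7, 15],
    "ICD-9-CM":  [7, 56, 15, 37, 11, 6, 8, 21, 10, 6],
}


def mean_expressiveness(counts):
    """Average number of terms per finding."""
    return sum(counts) / len(counts)


def coverage_percent(counts):
    """Percentage of findings with at least one term (assumed to mean covered)."""
    return 100 * sum(1 for c in counts if c > 0) / len(counts)


summary = {
    name: (round(mean_expressiveness(counts), 1), coverage_percent(counts))
    for name, counts in TABLE_2.items()
}

for name, (mean_terms, coverage) in summary.items():
    print(f"{name}: mean expressiveness {mean_terms}, coverage {coverage:.0f}%")
```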

SNOMED-CT and ICD-9-CM had full coverage of the critical imaging findings (100%) compared to 80% for NCIT and 70% for RadLex. SNOMED-CT was the most expressive of the four terminologies (mean expressiveness=21.5), and RadLex was the least expressive (mean expressiveness=0.9). Using a two-tailed paired homoscedastic t-test, both SNOMED-CT and ICD-9-CM had significantly more expressive power than NCIT and RadLex (SNOMED-CT vs. NCIT, p=0.008; RadLex vs. ICD-9-CM, p=0.009; NCIT vs. ICD-9-CM, p=0.012; RadLex vs. SNOMED-CT, p=0.013). There was no significant difference in expressiveness between RadLex and NCIT (p=0.13) or between SNOMED-CT and ICD-9-CM (p=0.53). The Simes-Hochberg method was used to verify that the first four comparisons remained significant after correction for the six pairwise comparisons performed.
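The multiple-comparison check can be reproduced from the six reported p-values. The sketch below applies the Hochberg step-up procedure and assumes a family-wise significance level of 0.05, which is not stated in the text; the function name and dictionary labels are illustrative.

```python
# p-values as reported for the six pairwise comparisons.
P_VALUES = {
    "SNOMED-CT vs. NCIT": 0.008,
    "RadLex vs. ICD-9-CM": 0.009,
    "NCIT vs. ICD-9-CM": 0.012,
    "RadLex vs. SNOMED-CT": 0.013,
    "RadLex vs. NCIT": 0.13,
    "SNOMED-CT vs. ICD-9-CM": 0.53,
}


def hochberg_reject(p_values, alpha=0.05):
    """Hochberg step-up procedure.

    Walk from the largest p-value down; the rank-th largest p-value is
    compared with alpha / rank. The first one that passes is rejected
    together with every smaller p-value.
    """
    ordered = sorted(p_values.items(), key=lambda item: item[1], reverse=True)
    rejected = {label: False for label in p_values}
    for rank, (_, p) in enumerate(ordered, start=1):
        if p <= alpha / rank:
            for label, _ in ordered[rank - 1:]:
                rejected[label] = True
            break
    return rejected


result = hochberg_reject(P_VALUES)
for label, is_significant in result.items():
    print(f"{label}: {'significant' if is_significant else 'not significant'}")
```

Under this assumption the procedure stops at p=0.013, which is compared with 0.05/3, so the four smallest p-values remain significant and the two largest do not.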

Table 3 shows the expressiveness of each terminology when the term lists are further augmented by utilizing second order terms.

Table 3.

Second order term count for each critical imaging finding by terminology

| Potentially Critical Finding | NCIT | RadLex | SNOMED-CT | ICD-9-CM |
|---|---|---|---|---|
| Aortic dissection | 0 | 0 | 2 | 6 |
| Appendicitis | 2 | 0 | 19 | 50 |
| Ectopic pregnancy | 0 | 0 | 19 | 14 |
| Intracranial Hemorrhage | 15 | 0 | 78 | 31 |
| Intrauterine Fetal Demise | 0 | 0 | 8 | 6 |
| Ovarian torsion | 0 | 0 | 2 | 0 |
| Pneumothorax | 0 | 0 | 23 | 7 |
| Pulmonary Embolism | 0 | 0 | 24 | 5 |
| Testicular torsion | 0 | 0 | 4 | 9 |
| Volvulus | 0 | 0 | 11 | 0 |
| Mean Expressiveness | 1.7 | 0 | 19 | 12.8 |

Radiology Report Retrieval

Three distinct data sets were utilized to evaluate radiology report retrieval. All 10,557 chest CT scan reports, 25,441 head CT and MRI scan reports, and 6,147 first trimester pelvic ultrasound reports finalized in 2009 were included for analysis. The total number of radiology reports retrieved containing pulmonary embolism, intracranial hemorrhage, and ectopic pregnancy, utilizing each terminology, is shown in Table 4. The term list generated from SNOMED-CT retrieved the most reports with pulmonary embolism (2.3%). However, it retrieved the fewest reports with intracranial hemorrhage (5.9%).

Table 4.

Number of reports retrieved for each potentially critical imaging finding by terminology.

| Potentially Critical Finding | NCIT | RadLex | SNOMED-CT | ICD-9-CM |
|---|---|---|---|---|
| Ectopic Pregnancy (n=6,147) | 308 (5.0%) | 308 (5.0%) | 309 (5.0%) | 309 (5.0%) |
| Pulmonary Embolism (n=10,557) | 151 (1.4%) | 151 (1.4%) | 239 (2.3%) | 170 (1.6%) |
| Intracranial Hemorrhage (n=25,441) | 1866 (7.3%) | 1600 (6.3%) | 1496 (5.9%) | 1842 (7.2%) |
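The retrieval rates in Table 4 are the number of reports retrieved divided by the number of reports in the corresponding data set. A short sketch of that arithmetic follows, with counts copied from Table 4; the variable and function names are illustrative.

```python
# Data set sizes and retrieved-report counts from Table 4.
TOTALS = {
    "Ectopic Pregnancy": 6147,
    "Pulmonary Embolism": 10557,
    "Intracranial Hemorrhage": 25441,
}
RETRIEVED = {
    "Ectopic Pregnancy": {"NCIT": 308, "RadLex": 308, "SNOMED-CT": 309, "ICD-9-CM": 309},
    "Pulmonary Embolism": {"NCIT": 151, "RadLex": 151, "SNOMED-CT": 239, "ICD-9-CM": 170},
    "Intracranial Hemorrhage": {"NCIT": 1866, "RadLex": 1600, "SNOMED-CT": 1496, "ICD-9-CM": 1842},
}


def retrieval_rate(retrieved, total):
    """Percentage of reports in the data set that were retrieved."""
    return 100 * retrieved / total


rates = {
    finding: {
        terminology: retrieval_rate(count, TOTALS[finding])
        for terminology, count in by_terminology.items()
    }
    for finding, by_terminology in RETRIEVED.items()
}

for finding, by_terminology in rates.items():
    best = max(by_terminology, key=by_terminology.get)
    worst = min(by_terminology, key=by_terminology.get)
    print(f"{finding}: highest {best} ({by_terminology[best]:.1f}%), "
          f"lowest {worst} ({by_terminology[worst]:.1f}%)")
```

Where two terminologies tie, as for ectopic pregnancy, `max` and `min` report the first one in column order.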

The gold standard for radiology reports that had the three critical findings was based on manual review. Kappa statistics for the two reviewers showed perfect agreement for reports that had findings of ectopic pregnancy. Kappa statistics were 0.44 – 0.82 for reports that had findings of pulmonary embolism, corresponding to “moderate” to “almost perfect” agreement. Kappa statistics were 0.76 – 0.86 for reports that had findings of intracranial hemorrhage, corresponding to “substantial” to “almost perfect” agreement.(11)

Precision of report retrieval was 1 for all three critical findings and terminologies, if negation failures are excluded. An example of negation failure is the following statement regarding intracranial hemorrhage: “There is no mass, edema, or intracranial hemorrhage present.” Table 5 shows precision of report retrieval when negation failures are classified as false positives.

Table 5.

Report retrieval precision, classifying negation failures as false positives

| Potentially Critical Finding | NCIT | RadLex | SNOMED-CT | ICD-9-CM |
|---|---|---|---|---|
| Ectopic Pregnancy | 1 | 1 | 1 | 1 |
| Pulmonary Embolism | 0.93 | 0.93 | 0.87 | 0.88 |
| Intracranial Hemorrhage | 0.72 | 0.98 | 0.60 | 0.62 |

The term list generated utilizing RadLex had the highest precision, or tied for the highest, for each of the three critical imaging findings. Notably, precision was perfect for retrieving radiology reports indicating the presence of ectopic pregnancy.

Discussion

This study has two phases of evaluation – intrinsic and extrinsic.(12;13) The first phase, the intrinsic phase, evaluates expressiveness and coverage of each terminology for ten potentially critical imaging findings. Following this evaluation, a second, task-based evaluation (i.e. extrinsic phase) further illustrates how well the terminologies perform in a document retrieval task.

SNOMED-CT and ICD-9-CM were the most expressive and showed the highest coverage among the four terminologies evaluated. This is not surprising, especially for SNOMED-CT, given that it has the greatest number of concepts.(5) ICD-9-CM, on the other hand, is often utilized for billing purposes, and its representation of critical results is expectedly excellent in spite of its smaller number of concepts. Several reasons may account for the incomplete coverage of critical imaging findings by RadLex and NCIT. These include the smaller terminology size (i.e., fewer concepts) and the specialized nature of these terminologies. RadLex was developed specifically for use in radiology, while NCIT was originally developed for the cancer domain.

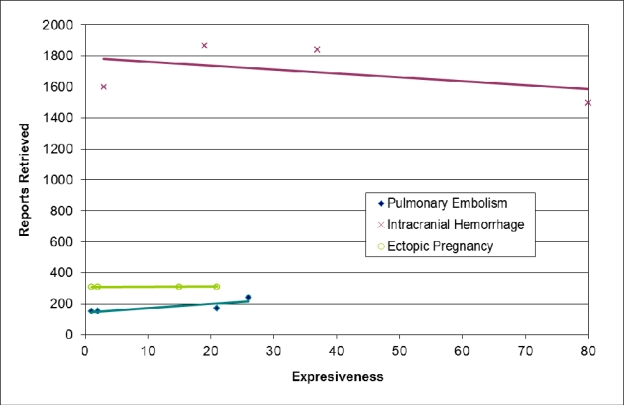

Notwithstanding the greater expressiveness of SNOMED-CT, retrieval rates were inconsistent across the potentially critical imaging findings. Figure 1 illustrates the lack of a consistent correlation between expressiveness and report retrieval rate.

Figure 1.

Number of reports retrieved for each potentially critical imaging finding: pulmonary embolism (blue), intracranial hemorrhage (purple), and ectopic pregnancy (green). Data points represent reports retrieved by term lists generated from the 4 terminologies for each potentially critical finding. Linear regression trend lines are also shown for each finding.

For pulmonary embolism, the number of reports retrieved increased with expressiveness, and the greatest number of reports was retrieved utilizing the term list generated from SNOMED-CT. For intracranial hemorrhage, in contrast, the number of reports retrieved decreased with increasing expressiveness. For this finding, the greatest number of reports was retrieved utilizing the term list generated from NCIT, which had only 19 terms including first and second order terms, compared to 80 terms for SNOMED-CT, the most expressive terminology.

There are several reasons why increased expressiveness did not translate to a higher retrieval rate. First, some terms were simply not used in the radiology reports, e.g., pulmonary apoplexy, grumbling appendix, hydatid of Morgagni torsion, clicking pneumothorax, and intraligamentous pregnancy. The degree of granularity in representing concepts within a terminology may not correspond with actual term usage by radiologists. Second, some terms were overly specific, e.g., “intrauterine death, affecting management of mother, antepartum” and “acute obstructive appendicitis with generalized peritonitis.” These overly specific terms add to expressiveness, but do not necessarily add to retrieval. For instance, although the more specific term “intrauterine death, affecting management of mother, antepartum” may retrieve a relevant report, that report will already be retrieved by the less specific term “intrauterine death”, so the more specific term will not add to the retrieval rate. Finally, some concepts were overly verbose, e.g., “extradural hemorrhage following injury without open intracranial wound and with prolonged loss of consciousness (more than 24 hours) and return to pre-existing conscious level.” A radiology report will rarely be worded in the exact way that this concept is described, so such a term will almost certainly never retrieve a report.
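The redundancy of overly specific terms follows from the matching rule used for retrieval: if a sentence must contain every token of a term, then any sentence matched by a longer term is also matched by a shorter term whose tokens are a subset of it. A minimal sketch under that assumption is shown below; the `prune_redundant` helper is hypothetical and was not part of the study.

```python
import re


def tokens(term):
    """Lowercase alphanumeric tokens of a term, as an unordered set."""
    return frozenset(re.findall(r"[a-z0-9]+", term.lower()))


def prune_redundant(term_list):
    """Drop terms whose token set strictly contains another term's token set.

    Under all-tokens-in-one-sentence matching, such a term can only match
    sentences that the shorter term already matches.
    """
    token_sets = {term: tokens(term) for term in term_list}
    kept = []
    for term, term_tokens in token_sets.items():
        subsumed = any(
            other != term and other_tokens and other_tokens < term_tokens
            for other, other_tokens in token_sets.items()
        )
        if not subsumed:
            kept.append(term)
    return kept


terms = [
    "intrauterine death",
    "intrauterine death, affecting management of mother, antepartum",
    "acute obstructive appendicitis with generalized peritonitis",
]
print(prune_redundant(terms))
# ['intrauterine death', 'acute obstructive appendicitis with generalized peritonitis']
```

Terms that are word-order variants of each other, such as “hemorrhage, intracranial” and “intracranial hemorrhage”, have identical token sets and are both kept by this helper, although they too retrieve the same reports.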

Retrieval rate was utilized in this study in lieu of recall (i.e., sensitivity) because of the expectedly high precision. With perfect precision, comparing the retrieval rate between terminologies is akin to comparing recall because the prevalence of the finding is constant with respect to the terminology used for retrieval. The precision measure was consistently 1.0 for all terminologies, except for negation errors. Negation error was more pronounced for intracranial hemorrhage, for which the number of terms in three of the terminologies (NCIT=19, SNOMED-CT=80 and ICD-9-CM=37) was among the greatest. It remains a challenge to perform negation on complex terms, especially when they are composed of numerous words. Disregarding negation, retrieval rate (i.e., locating the critical imaging finding within the report) did not consistently correlate with expressiveness. Likewise, precision did not correlate with expressiveness.
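The relationship between retrieval rate and recall can be made explicit. The symbols here are introduced only for illustration: let $N$ be the number of reports in a data set, $P$ the number that truly contain the finding, and $R_t$ and $TP_t$ the numbers of retrieved and true positive reports for terminology $t$. If precision is 1, then $TP_t = R_t$, and

$$
\mathrm{recall}_t = \frac{TP_t}{P} = \frac{R_t}{P} = \frac{R_t / N}{P / N} = \frac{\text{retrieval rate}_t}{\text{prevalence}} .
$$

Because the prevalence $P/N$ does not depend on the terminology, the ratio of recall between two terminologies equals the ratio of their retrieval rates under this assumption. When precision falls below 1, as it does once negation failures are counted, the equality holds only approximately.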

It remains unclear how a terminology can be utilized for generating term lists for critical result findings that are not covered by a single concept. For instance, the critical result “new or unexpected pneumothorax” can be represented by utilizing term composition (i.e., concatenating several concepts).(14) However, using term composition to automatically generate term lists is still a challenging task.

Conclusion

No single terminology is optimal for retrieving radiology reports that contain potentially critical imaging findings. The expressiveness and coverage of a terminology are not consistently associated with (improved) retrieval rate and precision for automated radiology report retrieval.

Acknowledgments

This work was funded in part by Grant R18HS019635 from the Agency for Healthcare Research and Quality and Grant UC4EB012952 from the National Institute of Biomedical Imaging and Bioengineering, NIH.

Reference List

- (1). The Joint Commission National Patient Safety Goals. 2011. http://www.jointcommission.org/standards_information/npsgs.aspx.

- (2). Andriole KP, Prevedello LM, Dufault A, Pezeshk P, Bransfield R, Hanson R, et al. Augmenting the impact of technology adoption with financial incentive to improve radiology report signature times. J Am Coll Radiol. 2010;7(3):198–204. doi: 10.1016/j.jacr.2009.11.011.

- (3). Aronson AR. Effective mapping of biomedical text to the UMLS Metathesaurus: the MetaMap program. Proc AMIA Symp. 2001:17–21.

- (4). Andrews JE, Patrick TB, Richesson RL, Brown H, Krischer JP. Comparing heterogeneous SNOMED CT coding of clinical research concepts by examining normalized expressions. J Biomed Inform. 2008;41(6):1062–1069. doi: 10.1016/j.jbi.2008.01.010.

- (5). Cote RA, Robboy S. Progress in medical information management. Systematized nomenclature of medicine (SNOMED). JAMA. 1980;243(8):756–762. doi: 10.1001/jama.1980.03300340032015.

- (6). de Coronado S, Haber MW, Sioutos N, Tuttle MS, Wright LW. NCI Thesaurus: using science-based terminology to integrate cancer research results. Stud Health Technol Inform. 2004;107(Pt 1):33–37.

- (7). Langlotz CP. RadLex: a new method for indexing online educational materials. Radiographics. 2006;26(6):1595–1597. doi: 10.1148/rg.266065168.

- (8). Loy P. International Classification of Diseases--9th revision. Med Rec Health Care Inf J. 1978;19(2):390–396.

- (9). Poon EG. Critical Radiology Result Starter Set. 2011. http://www.macoalition.org/Initiatives/docs/CTRstarterSet.xls.

- (10). NCI Term Browser. 2011. http://nciterms.nci.nih.gov/ncitbrowser/pages/multiple_search.jsf.

- (11). Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33(1):159–174.

- (12). Jones KS, Galliers JR. Evaluating natural language processing systems: an analysis and review. New York: Springer; 1996.

- (13). Lacson R, Barzilay R, Long W. Automatic analysis of medical dialogue in the home hemodialysis domain: structure induction and summarization. J Biomed Inform. 2006;39(5):541–555. doi: 10.1016/j.jbi.2005.12.009.

- (14). de Keizer NF, Abu-Hanna A, Cornet R, Zwetsloot-Schonk JH, Stoutenbeek CP. Analysis and design of an ontology for intensive care diagnoses. Methods Inf Med. 1999;38(2):102–112.