Abstract

A clinical diagnosis is a decision-making process that consists of not only the final diagnostic decision but also a series of information seeking decisions. Members of a patient-care team such as nurses, residents, and attending physicians play different roles but work collaboratively during this process. To better support these roles and their collaboration, we need to understand how different users interact with decision support systems. We developed SRCAST-Diagnosis to test how nurses, residents, and attending physicians use a decision support system to improve diagnosis accuracy and resource efficiency. Nurses seemed more willing to take recommendations and therefore saved a greater amount of lab resources, but made fewer improvements in diagnosis accuracy. Attending physicians appeared more cautious in accepting SRCAST-Diagnosis recommendations. These findings provide useful information for future CDSS designs that aim to better support collaboration among team members.

Introduction

A clinical diagnosis is “the act or process of identifying or determining the nature and cause of a disease or injury through evaluation of patient history, examination, and review of laboratory data” 1. As implied by this definition, a clinical diagnosis is not a single step of knowledge inference that maps patient symptoms to their causes, but rather a process consisting of information seeking activities, such as physical examinations and lab tests, which finally lead to a clinical conclusion. A diagnostic decision-making process usually involves a team of healthcare providers instead of an individual decision-maker. Team members such as nurses, residents, and attending physicians (i.e., attendings) all play specific roles in this process. For instance, attendings make a final diagnosis for patients, while nurses are typically involved in information seeking and gathering activities. Therefore, a clinical diagnosis is essentially a process in which healthcare providers work collaboratively to identify the appropriate diagnosis.

Clinical decision support systems (CDSSs) were developed to assist healthcare providers with their diagnostic decision-making. However, the focus of most CDSSs has been on providing diagnosis support to a single type of decision-maker, physicians 2, and not on supporting the needs of other members of a patient-care team, such as nurses, who are involved in the diagnosis process, particularly in collecting the information necessary for the final diagnosis. Therefore, to better support the process of clinical diagnosis, we designed a CDSS prototype, SRCAST-Diagnosis, to support a team of healthcare providers and their collaboration during this process. It not only supports diagnostic decision-making, but also provides recommendations on information seeking decisions (i.e., lab tests). The information seeking support provides a basis for understanding how different members of a patient-care team may benefit from such systems. Hence, the purpose of this study is to investigate how different types of users, such as nurses, residents, and attendings, interact with SRCAST-Diagnosis with regard to diagnosis decisions and information seeking decisions such as ordering labs. We recruited nurses, residents, and attending physicians from the emergency department of a large academic hospital for a set of experiments involving SRCAST-Diagnosis. After the experiment, we also conducted a brief survey to ascertain users’ perceptions of the features of SRCAST-Diagnosis.

The paper is organized as follows. The next section presents a brief background on CDSSs. Section 3 introduces the key features of SRCAST-Diagnosis. Section 4 describes the experimental design. Sections 5 and 6 present the results from the experiment and the survey. Section 7 discusses the implications and limitations of the study. Finally, we conclude with some thoughts on collaboration and clinical decision support.

Background

CDSSs are active knowledge systems which generate case-specific advice using two or more items of patient data 3. The target areas of a CDSS include preventive care, diagnosis, planning or implementing treatment, follow-up management, hospital and provider efficiency, cost reduction, and improved patient convenience 4, 5. The general goals of a CDSS are to improve patient safety, care quality, and efficiency in healthcare delivery 6.

Providing diagnostic support to healthcare providers is an important focus of CDSSs. However, studies of CDSSs have revealed that the improvements in diagnosis are very limited 7–9. Kawamoto et al. identified four features of a CDSS as independent predictors of improved clinical practice: providing automatic decision support as part of clinician workflow, providing recommendations rather than just assessments, providing support at the time and location of decision-making, and providing computer-based decision support 10.

These findings are important for a CDSS to be better accepted by clinical decision-makers. Although they did not explicitly mention supporting patient-care team collaboration, they did mention the importance of supporting clinician workflow, which involves the collaboration of nurses, residents, physicians, and other healthcare providers. A diagnosis has long been considered a collaborative activity of a patient-care team whose members have distributed knowledge and responsibilities but need to collaborate on the diagnosis 11, 12. Healthcare providers such as nurses are actively involved in the diagnostic decision-making process 13–15, but have not been supported by clinical decision support systems 2. Systems for nurses have focused on features such as data input and document management. For example, in a study that examined how nurses respond to documentation alerts, the researchers found that nurses could reasonably distinguish true positive alerts from false positive alerts and had an acceptable way of dealing with them 16. Supporting nurses with such features is important. However, supporting nurses and other healthcare providers during information seeking activities can also improve diagnosis accuracy and efficiency. Consequently, to better support different roles during the information seeking and diagnosis processes, we need to better understand how they would interact with a CDSS.

SRCAST-Diagnosis: A Prototype to Support Diagnostic Decision-Making

SRCAST-Diagnosis is a web-based prototype designed to provide diagnostic decision support. It is based on Multi-Layer Bayesian Network (MLBN)17 and Hypothesis Driven Story Building (HDSB)18. MLBN was used to calculate the probabilities for differential diagnoses. HDSB was used to describe the key steps, such as hypothesis generation, hypothesis evaluation, information seeking, and hypothesis revision during a diagnosis process.

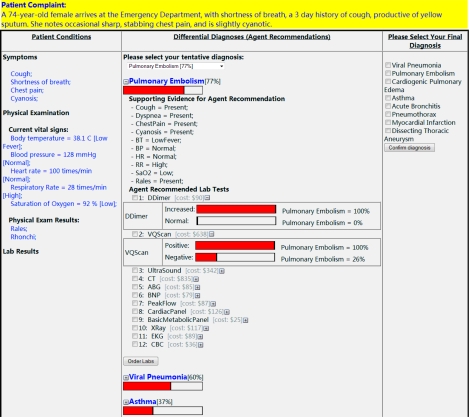

Figure 1 highlights the main features of SRCAST-Diagnosis. The top of the screen shows the patient’s initial complaints. The major component of the screen is a table that consists of patient conditions, differential diagnoses recommended by the system, and a list of diagnoses for the user to choose from. The first column shows the patient conditions, which include symptoms, physical examinations, and lab test results. The second column shows the differential diagnoses for a particular patient case, ranked from highest to lowest probability. If a user clicks a particular diagnosis, more information is displayed, including the supporting evidence and a list of lab tests that could be ordered. The lab tests are ranked by their usefulness in further differentiating the differential diagnoses. Clicking on a lab test explains how useful the test will be: the system compares the posterior probabilities of the suspected diagnosis under the assumption that each possible value of the test has been returned. For example, as shown in Figure 1, DDimer is very useful because its result can definitively tell whether pulmonary embolism exists or not, while VQScan is not as useful as DDimer in this case, since a negative result cannot exclude pulmonary embolism, whose likelihood remains at 26%. A user can therefore select and order lab tests based on their usefulness. Once the lab results are returned, the system updates the rankings to reflect the most recent information.

Figure 1: SRCAST-Diagnosis Interface
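The comparison of posterior probabilities described above can be illustrated with a small sketch. It applies Bayes' rule to one suspected diagnosis and one binary test at a time. The test names, prior, sensitivities, and specificities are hypothetical placeholders rather than values from the SRCAST-Diagnosis knowledge base, and the gap-based usefulness score is an assumption made for illustration; the prototype itself reasons over a Multi-Layer Bayesian Network, not this single-node calculation.

```python
def posterior(prior, sensitivity, specificity, positive):
    """Posterior probability of a disease after one binary test result (Bayes' rule)."""
    if positive:
        true_pos = sensitivity * prior
        false_pos = (1.0 - specificity) * (1.0 - prior)
        return true_pos / (true_pos + false_pos)
    false_neg = (1.0 - sensitivity) * prior
    true_neg = specificity * (1.0 - prior)
    return false_neg / (false_neg + true_neg)


def usefulness(prior, sensitivity, specificity):
    """Gap between the posteriors after a positive and after a negative result.

    A wider gap means the test separates "disease present" from
    "disease absent" more sharply. This score is an illustrative
    assumption, not the ranking function used by SRCAST-Diagnosis.
    """
    return (posterior(prior, sensitivity, specificity, True)
            - posterior(prior, sensitivity, specificity, False))


# Hypothetical test characteristics: name -> (sensitivity, specificity).
HYPOTHETICAL_TESTS = {
    "test_a": (0.95, 0.90),
    "test_b": (0.70, 0.80),
}


def rank_tests(prior, tests):
    """Return test names sorted from most to least useful."""
    return sorted(tests, key=lambda name: usefulness(prior, *tests[name]), reverse=True)


if __name__ == "__main__":
    prior = 0.40  # hypothetical pre-test probability of the suspected diagnosis
    for name in rank_tests(prior, HYPOTHETICAL_TESTS):
        sens, spec = HYPOTHETICAL_TESTS[name]
        print(name,
              round(posterior(prior, sens, spec, True), 3),
              round(posterior(prior, sens, spec, False), 3))
```

A test whose negative result still leaves a sizeable posterior, as with VQScan in the example above, would receive a narrower gap and therefore a lower rank under this score.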

For the purposes of the experiment, we identified 12 diseases that are all related to shortness of breath. The probabilities that reflect the disease-symptom causal relationships were obtained by searching the literature and interviewing experts. The data were then cross-verified by experts and represented in the MLBN.

Experimental Design

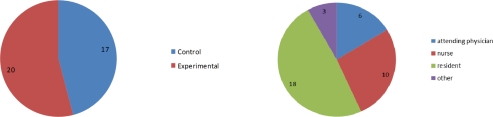

To investigate how nurses, residents, and attendings use SRCAST-Diagnosis, we designed a controlled experiment. Participants were randomly divided into an experimental group and a control group, with similar numbers of each role in the two groups.
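One way to obtain similar numbers of each role in both groups is to randomize within each role. The sketch below is an assumption about how such an assignment could be done, not a description of the procedure actually used in the study; the function name and the participant identifiers are hypothetical.

```python
import random
from collections import defaultdict


def assign_groups(participants, seed=None):
    """Randomly split participants into two groups, balancing each role.

    `participants` is a list of (participant_id, role) pairs. Within each
    role, members are shuffled and dealt alternately to the two groups, so
    the group sizes for a role differ by at most one.
    """
    rng = random.Random(seed)
    by_role = defaultdict(list)
    for pid, role in participants:
        by_role[role].append(pid)

    groups = {"experimental": [], "control": []}
    for role in sorted(by_role):
        members = by_role[role]
        rng.shuffle(members)
        for i, pid in enumerate(members):
            target = "experimental" if i % 2 == 0 else "control"
            groups[target].append((pid, role))
    return groups


if __name__ == "__main__":
    # Hypothetical roster, for illustration only.
    roster = [("n1", "nurse"), ("n2", "nurse"),
              ("r1", "resident"), ("r2", "resident"), ("r3", "resident"),
              ("a1", "attending"), ("a2", "attending")]
    for group, members in assign_groups(roster, seed=42).items():
        print(group, members)
```

Dealing alternately after a shuffle keeps the assignment random while preventing one group from receiving most members of a given role.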

Participants

We recruited nurses, residents, and attending physicians to participate in the experiments because of their different but collaborative roles in patient care. Thirty-seven participants were recruited from the Emergency Department at Penn State Hershey Medical Center. Of the 37 participants, 20 were assigned to the experimental group and 17 to the control group. There were 18 residents (11 in the experimental group, 7 in the control group), 10 nurses (5 in the experimental group, 5 in the control group), and 6 attendings (3 in the experimental group, 3 in the control group).

Procedure

Participants in both groups were presented with 10 patient scenarios. Each scenario described a patient arriving at the emergency department with initial symptoms and physical examination results. The results of a given set of labs were not presented to the participant until the labs were explicitly ordered. The patient could have one actual diagnosis or two co-existing diagnoses. The participants were not told the number of diagnoses in each scenario. They were asked to identify the appropriate diagnoses based on the patient conditions and the ordered lab results.

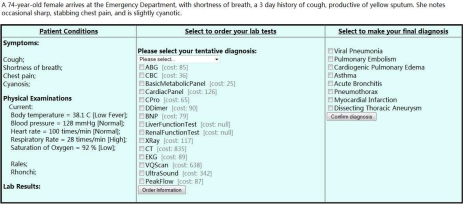

A participant in the experimental group could use the information and recommendations offered by SRCAST-Diagnosis to make the decision. SRCAST-Diagnosis was able to: (1) rank the differential diagnoses based on their probabilities; (2) rank the lab tests based on their usefulness in differentiating the differential diagnoses; and (3) adjust those rankings automatically when new information arrived. In contrast, a participant in the control group was asked to make diagnostic decisions for the same scenarios based on their own judgment, without any support from SRCAST-Diagnosis (Figure 3). The participants from both groups were asked to select a final diagnosis from the list of given choices. They were free to select more than one potential diagnosis.

Figure 3: Interface for Control Group Users

After completing the experiment, participants in the experimental group were given a survey about their experiences using SRCAST-Diagnosis.

Experimental Results

Two measures were used to evaluate the outcomes of different types of users using SRCAST-Diagnosis. Diagnosis accuracy measures the correctness of a participant’s final diagnosis. Diagnosis efficiency measures the total cost of the lab resources that a user spent in each scenario. Both measures were compared between the experimental group and the control group for nurses, residents, and attendings. P-values were used to assess the significance of the improvements.

Measure 1: Diagnosis Accuracy

Of the 10 scenarios, 6 had one actual diagnosis and 4 had two co-existing diagnoses. Therefore, to measure the accuracy of diagnosis, we used two criteria. A scenario is considered partially accurate if at least one actual diagnosis is correctly identified. Thus, a single-diagnosis scenario is considered partially accurate if its true diagnosis is identified together with an extra diagnosis, and a dual-diagnosis scenario is considered partially accurate if only one of the two diagnoses is correctly identified. A scenario is considered exactly accurate if all of its diagnoses, and no others, are identified. For each scenario, we then used a percentage to measure its average accuracy (Eq. 1).

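$$\text{Accuracy}(s) = \frac{\text{number of participants who diagnosed scenario } s \text{ accurately}}{\text{total number of participants who completed scenario } s} \times 100\% \qquad \text{(Eq. 1)}$$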
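The two criteria can be sketched as set comparisons. This is a minimal illustration under two assumptions: an exactly accurate answer also counts as partially accurate (consistent with partial accuracy never falling below exact accuracy in the reported results), and the per-scenario percentage is the share of participants whose answer meets the criterion. The function names and the sample answers are hypothetical.

```python
def is_partially_accurate(selected, actual):
    """At least one actual diagnosis appears among the selected ones."""
    return len(set(selected) & set(actual)) > 0


def is_exactly_accurate(selected, actual):
    """The selected diagnoses match the actual diagnoses exactly."""
    return set(selected) == set(actual)


def scenario_accuracy(responses, actual, criterion):
    """Percentage of responses to one scenario that satisfy `criterion`."""
    if not responses:
        raise ValueError("no responses for this scenario")
    hits = sum(1 for selected in responses if criterion(selected, actual))
    return 100.0 * hits / len(responses)


if __name__ == "__main__":
    # Hypothetical single-diagnosis scenario answered by three participants.
    actual = {"pulmonary embolism"}
    responses = [
        {"pulmonary embolism"},               # exact (and therefore partial)
        {"pulmonary embolism", "pneumonia"},  # partial only: one extra diagnosis
        {"asthma"},                           # neither
    ]
    print(scenario_accuracy(responses, actual, is_partially_accurate))
    print(scenario_accuracy(responses, actual, is_exactly_accurate))
```

Under these definitions, selecting an extra diagnosis in a single-diagnosis scenario preserves partial accuracy but forfeits exact accuracy, which is the pattern discussed for attendings in the results.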

A MANOVA test revealed a significant difference in diagnosis accuracy (under either criterion) between the control group and the experimental group (p = 0.0), as well as among the different roles (p = 0.013). However, the MANOVA test did not show a significant difference among nurses, residents, and attendings in their level of improvement, so this question needs to be investigated further by scenario type. Table 1 lists the p-values from two-sample t-tests comparing the experimental group and the control group for each type of scenario, each type of user, and both criteria.
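For readers unfamiliar with the two-sample comparison, the sketch below computes a two-sample t statistic using only the Python standard library. The text does not state whether a pooled-variance or an unequal-variance test was used, so the Welch (unequal-variance) form here is an assumption, and the accuracy values are hypothetical. Turning the statistic into a p-value requires the Student t distribution, which a statistics package such as SciPy provides through `scipy.stats.ttest_ind(a, b, equal_var=False)`.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Welch's two-sample t statistic and approximate degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each sample needs at least two observations")
    se_a = variance(sample_a) / n_a  # squared standard error of each mean
    se_b = variance(sample_b) / n_b
    if se_a + se_b == 0:
        raise ValueError("both samples have zero variance")
    t = (mean(sample_a) - mean(sample_b)) / sqrt(se_a + se_b)
    # Welch-Satterthwaite approximation of the degrees of freedom
    df = (se_a + se_b) ** 2 / (se_a ** 2 / (n_a - 1) + se_b ** 2 / (n_b - 1))
    return t, df


if __name__ == "__main__":
    # Hypothetical per-participant accuracies (fraction of scenarios correct).
    experimental = [1.0, 0.75, 1.0, 1.0]
    control = [0.5, 0.75, 0.5, 0.75]
    t, df = welch_t(experimental, control)
    print(round(t, 3), round(df, 2))
```

With groups as small as three to eleven participants per role, the degrees of freedom are low, so a fairly large t statistic is needed before a difference reaches conventional significance.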

Table 1: Accuracies and P-Values of Scenarios and Roles

| Role | Criterion of Accuracy | Single-Diagnosis Scenarios (s0–s5): mean accuracy, control vs. experimental (p-value) | Dual-Diagnosis Scenarios (s6–s9): mean accuracy, control vs. experimental (p-value) |
|---|---|---|---|
| Nurses | Partial Accuracy | 83% vs. 83% (p = 1) | 75% vs. 95% (p = 0.092) |
| Nurses | Exact Accuracy | 73% vs. 73% (p = 1) | 15% vs. 15% (p = 1) |
| Residents | Partial Accuracy | 87% vs. 97% (p = 0.344) | 87.5% vs. 95% (p = 0.075) |
| Residents | Exact Accuracy | 78% vs. 89% (p = 0.275) | 9.4% vs. 29% (p = 0.042) |
| Attendings | Partial Accuracy | 67% vs. 100% (p = 0.012) | 67% vs. 75% (p = 0.761) |
| Attendings | Exact Accuracy | 67% vs. 67% (p = 1) | 8% vs. 17% (p = 0.391) |

As shown in Table 1, nurses showed no improvement in exact accuracy for either single-diagnosis or dual-diagnosis scenarios. However, their partial accuracy on dual-diagnosis scenarios rose from 75% to 95% (p = 0.092), which suggests that SRCAST-Diagnosis helped nurses identify at least one of the two correct diagnoses. For residents, the improvements in single-diagnosis scenarios were not significant (p = 0.344 and 0.275), whereas the improvements in dual-diagnosis scenarios were more pronounced (p = 0.075 for partial accuracy and p = 0.042 for exact accuracy), indicating that SRCAST-Diagnosis was more useful to them in diagnosing complex patient situations. For attendings, the improvement in single-diagnosis scenarios was significant under the partial accuracy criterion (p = 0.012) but absent under the exact accuracy criterion (p = 1). In these scenarios, attendings selected two diagnoses in order not to miss the true one, which reflected their cautiousness in accepting recommendations from SRCAST-Diagnosis.

Measure 2: Lab Resource Cost

Nurses, residents, and attendings not only made different levels of improvement in diagnosis accuracy, but also differed in how much they reduced lab resource cost. Table 2 shows the average cost a participant spent for each type of scenario. The percentages indicate the cost savings achieved by using SRCAST-Diagnosis, relative to the control group. The nurses had the greatest proportional savings (57% in single-diagnosis scenarios and 41% in dual-diagnosis scenarios).

Table 2: Means of Resource Cost

| Role | Group | Single-Diagnosis Scenarios ($) | Dual-Diagnosis Scenarios ($) |
|---|---|---|---|
| All Participants | Control | 781 | 721 |
| All Participants | Experimental | 383 (−51%) | 547 (−24%) |
| Nurses | Control | 744 | 736 |
| Nurses | Experimental | 322 (−57%) | 432 (−41%) |
| Residents | Control | 773 | 763 |
| Residents | Experimental | 385 (−50%) | 582 (−24%) |
| Attendings | Control | 990 | 719 |
| Attendings | Experimental | 467 (−53%) | 651 (−9%) |
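The percentages in Table 2 are the relative change of the experimental mean against the control mean. The short sketch below recomputes them from the reported means as a consistency check; the variable and function names are illustrative.

```python
# Mean lab cost per scenario in dollars, copied from Table 2: (control, experimental).
MEAN_COST = {
    "All Participants": {"single": (781, 383), "dual": (721, 547)},
    "Nurses": {"single": (744, 322), "dual": (736, 432)},
    "Residents": {"single": (773, 385), "dual": (763, 582)},
    "Attendings": {"single": (990, 467), "dual": (719, 651)},
}


def percent_change(control, experimental):
    """Relative change of the experimental mean against the control mean, in percent."""
    return 100.0 * (experimental - control) / control


def savings_table(costs):
    """Rounded percent change for every role and scenario type."""
    return {
        role: {kind: round(percent_change(*pair)) for kind, pair in kinds.items()}
        for role, kinds in costs.items()
    }


if __name__ == "__main__":
    for role, kinds in savings_table(MEAN_COST).items():
        print(role, kinds)
```

For example, nurses in single-diagnosis scenarios give (322 − 744) / 744 ≈ −0.567, which rounds to the −57% shown in the table.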

Table 3 lists the p-values comparing the resource costs between the control group and the experimental group for the three roles and the two types of scenarios. The decreases in resource cost in single-diagnosis scenarios are significant for all three roles (p = 0.001, 0.001, and 0.007 for nurses, residents, and attendings), while the decreases in dual-diagnosis scenarios are not significant (p = 0.222, 0.227, and 0.751).

Table 3: P-Values for Resource Cost Comparison

| Role | All Scenarios | Single-Diagnosis Scenarios | Dual-Diagnosis Scenarios |
|---|---|---|---|
| All Participants | 0 | 0 | 0.178 |
| Nurses | 0.001 | 0.001 | 0.222 |
| Residents | 0.001 | 0.001 | 0.227 |
| Attendings | 0.023 | 0.007 | 0.751 |

Combining the findings on lab resource cost and diagnosis accuracy, we found that nurses were more willing to take the recommended lab tests, which saved them a greater portion of lab resource cost. However, the lab tests they ordered were not sufficient to make a correct diagnosis, so their improvements in diagnosis accuracy were poor. Attendings, on the other hand, were very cautious in accepting recommendations. They selected two diagnoses for single-diagnosis scenarios in order not to miss the actual one, which made them the only group with a significant improvement on single-diagnosis scenarios. However, they spent more resources on lab tests to make sure the information was sufficient to diagnose the patient correctly.

Survey Results

After the experiment, each participant in the experimental group was asked to complete a survey about their experiences using SRCAST-Diagnosis.

Each participant was first asked to rate the general usefulness of the tool. Table 4 lists the percentage of participants in each group who gave each answer. Nurses tended to rate it more favorably than residents and attendings, while attendings tended to give a noncommittal answer. Residents seemed more skeptical than nurses, but less skeptical than attendings.

Table 4: General Ratings of the Usefulness

| Answers | Nurses (5) | Residents (9) | Attendings (3) |
|---|---|---|---|
| Yes | 80% | 44% | 0% |
| No | 0% | 33% | 0% |
| Hard to say | 20% | 22% | 100% |

Participants were also asked to specify the reasons for their choices. This feedback suggested that nurses and residents/attendings had very different perspectives. Nurses focused more on the usability of the tool. For example, one nurse wrote: “(T)he tool was quite helpful. The rapid lab results and notification of changes in patient’s condition aided in making a diagnosis.” Residents and attendings, however, focused more on the content of the recommendations. One attending said that he needed more of the clinical picture or history of the patient. One resident wrote: “I think it makes you tunnel in a diagnosis which narrows your thinking and this could be dangerous.” These differences suggested that nurses, residents, and attendings pay attention to different aspects of a decision support system, which possibly reflects their different levels of knowledge and experience.

Table 5 lists three general statements about the capabilities of SRCAST-Diagnosis and the average ratings (0: most disagreed, 10: most agreed) from each group. These statements cover diagnosis accuracy, resource efficiency, and time efficiency, respectively. The ordering of the average ratings was consistent across the statements: nurses always gave a higher rating than residents, and residents gave a rating at least as high as attendings.

Table 5: The final outcome of diagnosis brought by SRCAST-Diagnosis

| Statements | Nurses (5) | Residents (9) | Attendings (3) |
|---|---|---|---|
| The ranking of the differential diagnosis were useful for me to quickly identify true diagnosis. | 7.6 | 5.78 | 4 |
| It reduced redundant resource use and improved resource efficiency in lab ordering activities. | 8 | 4.89 | 4 |
| It reduced the time needed to make a diagnosis. | 7.4 | 6 | 3.33 |

Participants were also asked to rate their level of trust in SRCAST-Diagnosis. Nurses, residents, and attendings gave average trust levels of 7.0, 4.44, and 3.67, respectively, which indicated that it was easier for nurses to accept the recommendations and more difficult for attendings.

Discussion

Both the experimental results and the survey results showed differences among nurses, residents, and attendings in how they used SRCAST-Diagnosis for their decision-making. The findings are summarized here:

- Nurses were more willing to accept the recommended lab tests and had the largest savings on lab resource cost. However, they stopped ordering lab tests too early in the information seeking process, which affected their ability to make an accurate diagnosis (experimental results).

- Attendings were more cautious in accepting recommendations. On one hand, they tended to select two diagnoses for single-diagnosis scenarios, which improved their partial accuracy significantly but had a negative effect on their exact accuracy. On the other hand, for dual-diagnosis scenarios, they tended to spend more lab resources to make the diagnosis (experimental results).

- Nurses accepted the usefulness of the prototype, while residents and attendings were more skeptical (survey results).

These different outcomes reflected the participants’ different levels of training and different responsibilities in clinical diagnosis. Nurses, residents, and attendings are all involved in diagnostic decision-making processes. However, their roles differ significantly. Attendings and residents typically order labs and make the final diagnostic decision, while nurses normally focus on providing information for attendings and residents. Nurses are not trained in the comprehensive medical knowledge that is often necessary to make a diagnostic decision. Therefore, they are more likely to follow recommendations given by SRCAST-Diagnosis. They saved on lab resources, but their diagnostic decision-making was, unsurprisingly, not as good as the physicians’. Residents and attendings, on the other hand, made substantial improvements in diagnosis accuracy, but they used more resources than nurses.

The different performance of nurses and attendings/residents suggested that an accurate diagnosis relies not only on the accuracy of the decision support system itself but, more importantly, on the capability of a user to use it effectively. Therefore, from a design perspective, a decision support system should satisfy the needs of different users. For example, for nurses, a decision support system should focus more on supporting their information seeking and gathering activities. We propose several design strategies for a nurse-oriented decision support system:

- It should be convenient for nurses to input patient-related information, since a great amount of patient-related information is collected by nurses from their observations and conversations with patients and their families.

- The feature of recommending useful preliminary lab tests instead of differential diagnosis may be useful for nurses, so that they can order the necessary preliminary lab tests quickly and efficiently.

- It should be designed with efficient communication capabilities, so that requests and information can be effectively exchanged between nurses and physicians and therefore their collaboration can be better supported.

There was also a notable difference between residents and attendings. The survey indicated that attendings were more conservative and cautious in accepting recommendations. They not only hesitated to give a definite answer on the usefulness of SRCAST-Diagnosis (all selecting “hard to say”), but also gave the lowest rating for their trust in the tool (3.67). Attendings’ conservativeness and cautiousness could also be observed in their choices in the experiment. Their improvements in diagnosis accuracy were consistently lower than residents’, with the one exception of partial accuracy for single-diagnosis scenarios (p = 0.012 vs. 0.344 for residents), because they selected one more suspected diagnosis than the actual one. At the same time, they had the lowest resource savings (only 9%) on the dual-diagnosis scenarios, for which they ordered more labs to make sure the information was sufficient for the right diagnosis.

Attendings’ choices reflected their cautiousness, which results from their greater responsibility for patient safety. By comparing residents and attendings, we learned that cautiousness in using decision support systems may grow with clinical experience. Their cautiousness partly came from their doubts about the reliability of the probabilities used by SRCAST-Diagnosis for inferring differential diagnoses. Although these probabilities were extracted from the literature or verified by domain experts and are therefore reliable to some degree, a more reliable set of probabilities is always desirable. It is therefore important in the future to connect a decision support system with EMR systems. By doing so, the probabilities can be based on a large amount of patient data and become much more reliable. Moreover, real-time learning will also become possible, so that recommendations can always reflect the most recent information and knowledge.

Study limitations

Both the experimental results and the survey results presented interesting findings regarding the different outcomes of nurses’, residents’, and attendings’ interactions with SRCAST-Diagnosis. However, there were some limitations. First, the number of participants recruited for the experiment was relatively small because of time and resource constraints, although the participants did show differences when interacting with SRCAST-Diagnosis. An experiment with a larger number of participants is needed to make the results more reliable. Second, the scenarios used in the experiment may have looked artificial to some participants. Although these scenarios were designed and verified by medical experts and highlighted the key features we wanted to test, future scenarios should include a fuller clinical picture and patient history to reflect realistic patient situations.

Conclusion

Diagnostic decision-making is a process that involves a team of healthcare providers collaborating to identify the health problem of a patient. It involves not only the final diagnostic decision, but also a series of information seeking decisions, such as ordering lab tests. Various team members such as attendings, residents, and nurses are responsible for different types of decisions during this process. SRCAST-Diagnosis is an attempt to support the process, which involves not only the diagnostic decision, but also the information seeking decisions. It is a first step in an attempt to support team decision-making.

Through this study, we found that attendings, residents, and nurses have different perceptions of SRCAST-Diagnosis and used it in different ways. In future versions of SRCAST-Diagnosis, we hope to design features that more effectively support the needs of the different users.

Figure 2: Number of Participants

Acknowledgments

We would like to thank the nurses, residents, and attendings who participated in this study. This research was supported in part by a grant from Lockheed Martin Corporation.

References

- 1. Diagnosis. The American Heritage Dictionary of the English Language. 4th Edition. Houghton Mifflin Company; 2003.

- 2. Kaplan B. Evaluating Informatics Applications -- Clinical Decision Support Systems Literature Review. International Journal of Medical Informatics. 2001;64:15–37. doi: 10.1016/s1386-5056(01)00183-6.

- 3. Wyatt J, Spiegelhalter D. Field trials of medical decision-aids: potential problems and solutions. In: Clayton PD, editor. Proceedings of the Fifteenth Annual Symposium on Computer Applications in Medical Care; Washington, DC: American Medical Informatics Association; 1991. pp. 3–7.

- 4. Perreault LE, Metzger JB. A pragmatic framework for understanding clinical decision support. The Journal of the Healthcare Information and Management Systems Society. 1999;13(2):5–21.

- 5. Berner ES. Clinical Decision Support Systems: State of the Art. Rockville, Maryland: Agency for Healthcare Research and Quality; 2009.

- 6. Coiera E. Clinical Decision Support Systems Guide to Health Informatics. 2nd ed. A Hodder Arnold Publication; 2003.

- 7. Johnston ME, Langton KB, Haynes RB, Mathieu A. Effects of Computer-based Clinical Decision Support Systems on Clinician Performance and Patient Outcome: A Critical Appraisal of Research. Annals of Internal Medicine. 1994;120(2):135–42. doi: 10.7326/0003-4819-120-2-199401150-00007.

- 8. Garg AX, Adhikari NKJ, McDonald H, Rosas-Arellano MP, Devereaux PJ, Beyene J, et al. Effects of Computerized Clinical Decision Support Systems on Practitioner Performance and Patient Outcomes. Journal of the American Medical Association. 2005;293(10):1223–38. doi: 10.1001/jama.293.10.1223.

- 9. Hunt DL, Haynes RB, Hanna SE, Smith K. Effects of Computer-Based Clinical Decision Support Systems on Physician Performance and Patient Outcomes: A Systematic Review. Journal of the American Medical Association. 1998;280(15):1339–47. doi: 10.1001/jama.280.15.1339.

- 10. Kawamoto K, Houlihan LA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005;330(7494):765–73. doi: 10.1136/bmj.38398.500764.8F.

- 11. Baggs JG, Schmitt MH, Mushlin AI, Eldredge DH, Oakes D, Hutson AD. Nurse-physician collaboration and satisfaction with the decision-making process in three critical care units. Am J Crit Care. 1997;6(5):393–9.

- 12. Cicourel AV. Intellectual Teamwork. Hillsdale, NJ: L. Erlbaum Associates Inc; 1990. The Integration of Distributed Knowledge in Collaborative Medical Diagnosis.

- 13. Hamers JPH, Abu-Saad HH, Hout MAvd, Halfens RJG, Kester ADM. The influence of children’s vocal expressions, age, medical diagnosis and information obtained from parents on nurses’ pain assessments and decisions regarding interventions. Pain. 1996;65(1):53–61. doi: 10.1016/0304-3959(95)00147-6.

- 14. Marsden J. Cataract: the role of nurses in diagnosis, surgery and aftercare. Nurs Times. 2004;100(7):36–40.

- 15. Carpenito-Moyet LJ. Nursing Diagnosis: Application to Clinical Practice. 11 ed. Philadelphia, PA: Lippincott Williams & Wilkins; 2005.

- 16. Cimino JJ, Farnum L, Cochran K, Moore SD, Sengstack PP, McKeeby JW, editors. Interpreting Nurses’ Responses to Clinical Documentation Alerts. AMIA Annual Symposium; Washington, D. C.: 2010.

- 17. Zhu S, Chen P-C, Yen J, editors. Multi-Layer Bayesian Network for Variable Bound Inference. IEEE/WIC/ACM International Conference on Web Intelligence and Intelligent Agent Technology 2008 (WI-IAT’08); 2008; Sydney, Australia.

- 18. Zhu S, Reddy M, Yen J, editors. Hypothesis-Driven Story Building Framework: Enhancing Iterative Process Support in Clinical Diagnostic Decision Support Systems. AMIA Annual Symposium; 2009; San Francisco, CA.