Abstract

The Multi-source Integrated Platform for Answering Clinical Questions (MiPACQ) is a QA pipeline that integrates a variety of information retrieval and natural language processing systems into an extensible question answering system. We present the system’s architecture and an evaluation of MiPACQ on a human-annotated evaluation dataset based on the Medpedia health and medical encyclopedia. Compared with our baseline information retrieval system, the MiPACQ rule-based system demonstrates 84% improvement in Precision at One and the MiPACQ machine-learning-based system demonstrates 134% improvement. Other performance metrics including mean reciprocal rank and area under the precision/recall curves also showed significant improvement, validating the effectiveness of the MiPACQ design and implementation.

Introduction

The increasing requirement to deliver higher quality care with increased speed and reduced cost has forced clinicians to adopt new approaches to how diagnoses are made and care is administered. One approach, evidence-based medicine, focuses on the integration of the current best clinical expertise and research into the ongoing practice of medicine 1.

One of the positive side effects of this requirement for clinicians to make better use of medical evidence with less time and effort has been practice-enhancing technological solutions. Medical citation databases such as PubMed have enabled immediate access to a wide array of research over the Internet. In many cases, medical information retrieval systems allow clinicians to quickly locate articles that are specific to their information need. However, given that medical information retrieval systems generally return results only at a coarse level, and given that clinicians have a minimal amount of time to search for answers – often as little as two minutes – there is substantial room for improvement 2. The adoption of natural language processing (NLP) techniques allows for the creation of clinical question answering systems that better understand the clinician’s query and can more precisely serve the user’s information need. Such systems typically accept clinical questions in free-text and produce a fine-grained list of potential answers to the question. The result is an improvement in retrieval performance and a reduction in the effort required by the clinician.

The Multi-source Integrated Platform for Answering Clinical Questions (MiPACQ) is an integrated framework for semantic-based question processing and information extraction. Using NLP and information retrieval (IR) techniques, MiPACQ accepts free-text clinical questions and presents the user with succinct answers from a variety of sources 3 such as general medical encyclopedic resources and the patient’s data residing in the Electronic Medical Record (EMR). We present a design and implementation overview of the MiPACQ system along with an evaluation dataset that includes clinical questions and human-annotated answers taken from the Clinques 4 question set and Medpedia 5 online medical encyclopedia. We demonstrate that the MiPACQ system shows significant improvement over the existing Medpedia search engine and a Lucene 6 baseline information retrieval system on standard question answering evaluation metrics such as Precision at One and mean reciprocal rank (MRR).

Prior Work

The use of readily available Internet search engines such as Google has become commonplace in clinical contexts: Schwartz et al. 7 surveyed emergency medicine residents and found that they considered Internet searches to be highly reliable and routinely used them to answer clinical questions in the emergency department. Unfortunately, the same study found that the residents had difficulty distinguishing between reliable and unreliable answers. Worse, while residents in the study expressed a high degree of confidence in the reliability of answers found through Internet searches, when tested the residents provided incorrect answers 33% of the time. When Athenikos and Han 8 surveyed biomedical QA systems, they asserted that “current medical QA approaches have limitations in terms of the types and formats of questions they can process.”

Some projects have attacked the question answering problem using syntactic information and text-based analysis. Yu et al. created such a system 9 based on Google definitions of terms from the UMLS 10. The system can provide summarized answers to definitional questions and provides context by citing relevant MEDLINE articles. In subjective evaluations with physicians, Yu’s system did well on a variety of satisfaction metrics; however, only definitional questions were tested.

There are a number of clinical question answering systems that make use of semantic information in clinical documents. Demner-Fushman et al. 11 developed a system of feature extractors that identify subject populations, interventions, and outcomes in medical studies. Demner-Fushman et al. later extended this research to create a general-purpose QA system 12. This system is similar in principle to the MiPACQ architecture, but smaller in scope as it retrieves answers only at the document level and targets a more restricted answer corpus (MiPACQ returns answers at the paragraph level and targets arbitrary information sources). Wang et al. 13 experimented with UMLS semantic relationships and created a system that extracts exact answers from the output of a Lucene-based information retrieval system; however, their approach requires an exact semantic match, which limits performance on more complex and non-factoid questions. Athenikos et al. 14 propose a rule-based system based on human-developed question/answer UMLS semantic patterns; such an approach is likely to perform well on specific question types but might have difficulty generalizing to new questions. AskHermes 15 finds answers based on keyword searching.

The MiPACQ system is distinguished from existing clinical QA systems in several ways. MiPACQ is broader in scope because it integrates numerous NLP components to enable deeper semantic understanding of medical questions and resources and it is designed to allow integration with a wide range of information sources and NLP systems. Additionally, unlike most medical QA research, MiPACQ was designed from the start to provide an extensible framework for the integration of new system components. Finally, MiPACQ uses machine learning (ML) based re-ranking rather than the fixed rule-based re-ranking used in most other research.

System Architecture

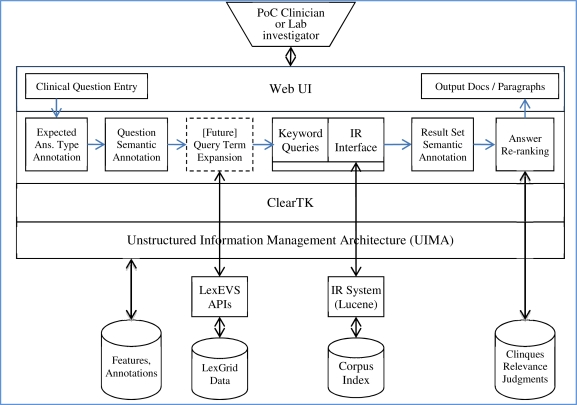

The MiPACQ system integrates multiple NLP tools into a single system that provides query formulation, automatic question and candidate answer annotation, answer re-ranking, and training for the various components. The system is based on ClearTK 16, a UIMA-based 17 toolkit developed by the computational semantics research group 18 at the University of Colorado at Boulder. ClearTK provides common data formats and interfaces for many popular NLP and ML systems.

Figure 1 provides an overview of the MiPACQ architecture (for further details see Nielsen et al., 2010 3). Questions are submitted by a clinician or investigator using the web-based user interface. The question is then processed by the annotation pipeline which adds semantic annotations. MiPACQ then interfaces with the information retrieval (IR) system which uses term overlap to retrieve candidate answer paragraphs. Candidate answer paragraphs are annotated using the annotation pipeline. Finally, the paragraphs are re-ordered by the answer re-ranking system based on the semantic annotations, and the results are presented to the user.

Figure 1:

MiPACQ System Architecture

Corpus

Medpedia

Because the MiPACQ system is designed to be used with a wide variety of medical resources, we wanted to test its performance against a medical article database with a broad focus. Medpedia is a Wiki-style collaborative medical database that targets both clinicians and the general public. Unlike some other Wiki projects (such as Wikipedia), Medpedia restricts editing to approved physicians and doctoral-degreed biomedical scientists 19. Despite this restricted authorship, Medpedia’s volunteer-based collaborative editing model results in a broader range of writing styles and article quality than traditional medical databases or journals. This variability provides a good test of the MiPACQ system’s ability to generalize to noisy and diverse data. Additionally, because Medpedia’s text is freely available under a Creative Commons license, we will be able to distribute our full-text index, annotation guidelines, and annotations to other researchers late in 2011.

Because Medpedia is a continually evolving corpus, we took a snapshot of Medpedia’s full text on April 26th, 2010. This snapshot has served as our Medpedia corpus throughout the MiPACQ project. Articles were broken into paragraphs based on HTML “p” tag boundaries, and all other HTML tags (including formatting, images, and hyperlinks) were stripped.
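The paragraph segmentation described above can be sketched with Python's standard `html.parser` module. This is only an illustration under stated assumptions, not the MiPACQ implementation: the class name `ParagraphExtractor` and the function `split_paragraphs` are hypothetical, and the whitespace normalization and the handling of an unclosed “p” tag are our own choices.

```python
from html.parser import HTMLParser


class ParagraphExtractor(HTMLParser):
    """Collect the plain text of each <p> element, dropping all other markup."""

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.paragraphs = []
        self._in_paragraph = False
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self.flush()              # an unclosed <p> ends where the next one starts
            self._in_paragraph = True

    def handle_endtag(self, tag):
        if tag == "p":
            self.flush()
            self._in_paragraph = False

    def handle_data(self, data):
        # Text outside <p> (headings, captions, navigation) is ignored.
        if self._in_paragraph:
            self._chunks.append(data)

    def flush(self):
        # Join the text fragments and collapse runs of whitespace (an assumption).
        text = " ".join("".join(self._chunks).split())
        if text:
            self.paragraphs.append(text)
        self._chunks = []


def split_paragraphs(html):
    """Return the list of paragraph strings found in an HTML article."""
    parser = ParagraphExtractor()
    parser.feed(html)
    parser.close()
    parser.flush()                    # capture a trailing unclosed <p>
    return parser.paragraphs
```

Inline tags such as bold text or hyperlinks contribute only their text content, and images contribute nothing, which matches the stripping behavior described in the text.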

Clinical Questions

The clinical question data is a set of full text questions and Medpedia article and paragraph relevance judgments. We started with 4654 clinical questions from the Clinques corpus collected by Ely et al. from interviews with physicians 4. During the interviews, which were conducted between patient visits, the physicians were asked what non-patient-specific questions came up during the preceding visit, including “vague fleeting uncertainties”.

Given the variability in quality in the Clinques corpus and our annotation resources, we narrowed the question set to 353 questions that were likely to be answered by the Medpedia medical encyclopedia. The exclusions consisted of questions that were incomprehensible or incomplete, required temporal logic to answer, required qualitative judgments, or required patient-specific knowledge that was not present in Medpedia 5. The remaining questions were then randomly divided into training and evaluation sets. A medical information retrieval and annotation specialist manually formulated and ran queries against the Medpedia corpus and annotated all of the paragraphs in articles that appeared relevant and in a few of the other top ranked articles.

The human annotator found individual paragraphs in Medpedia that answered 177 of the questions. We discarded the remaining questions because Medpedia lacked the answer content. The training set contains 80 questions with at least one paragraph answering the question, and the evaluation set contains 81 questions with at least one answer paragraph. The annotated questions will also be released in late 2011.

Annotation Pipeline

The main NLP component of the MiPACQ question answering system is the annotation pipeline. The pipeline incorporates multiple rule-based and ML-based systems that build on each other to produce question and answer annotations that facilitate re-ranking candidate answers based on semantics (e.g., raising the rank of candidates containing the expected answer type or that include similar UMLS entities). Although the pipeline stages are the same for questions and candidate answers (excluding the expected answer type system, which operates only on questions), all of the ML-based pipeline components use separate models for questions and answers.

cTAKES

The first stage in the annotation pipeline processes the question (or candidate answer) with the cTAKES clinical text analysis system. cTAKES is a multi-function system 20 that incorporates sentence detection, tokenization, stemming, part of speech detection, named entity recognition, and dependency parsing. Close collaboration between the MiPACQ project and the cTAKES project resulted in the addition of several features to cTAKES that are directly useful in the MiPACQ system. Because MiPACQ builds upon the unified type system used throughout cTAKES, annotations added by the cTAKES components (such as part-of-speech tags) are readily utilized by later stages in the MiPACQ annotation pipeline.

cTAKES is designed as a pipeline of connected systems that work together to create useful textual annotations 21. The pipeline first locates sentences in the document or question using the OpenNLP 22 sentence detector. Individual sentences are then annotated with token boundaries by a custom rule-based tokenizer that handles details such as punctuation and hyphenated words. The next pipeline stage wraps the National Library of Medicine (NLM) SPECIALIST tools 23, providing term canonicalization and lemmatization.

The cTAKES pipeline then performs part-of-speech tagging using a custom UIMA wrapper around the OpenNLP part of speech tagger. The wrapper adapts OpenNLP’s data format to match the cTAKES unified type system and provides functionality for building the tag dictionary and tagging model. cTAKES provides a pre-generated standard tagging model trained on a combination of Penn Treebank 24 and clinical data.

Syntactic parsing is provided in cTAKES using the CLEAR dependency parser 25, a state-of-the-art transition-based parser developed by the CLEAR computational semantics group at the University of Colorado at Boulder.

Named entity detection in cTAKES is done using a series of dictionary lookup annotators, which identify drug mentions, anatomical sites, procedures, signs/symptoms, and diseases/disorders using a series of dictionaries based on the UMLS Metathesaurus. Taken together, these annotators provide good coverage for a variety of medical named entity types.

The final stage in the cTAKES pipeline annotates the discovered mentions with negations (e.g. “no chest pain”) and status information (such as “history of myocardial infarction”). These annotations are generated using the finite state machine based NegEx algorithm 26.

Expected Answer Type

The expected answer type system annotates questions with the UMLS entity type or types of the expected answer. We anticipate that paragraphs containing UMLS entities of the expected answer type will be more likely to be correct answers. The expected answer type system is an ML-based classification system built using ClearTK and the SVM-Light training system. Our expected answer type system uses bag-of-words and bigram data to generate feature vectors for each question. We use a series of one-vs.-many binary classifiers to implement multi-way classification. The output of the system is a UMLS entity type category that is expected to match the key UMLS entity contained in the correct answer. For example, in the question “How do you diagnose reactive hypoglycemia?” the expected answer type is “Diagnostic Procedure”.
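As a rough illustration of the feature generation and one-vs.-many decision described above, the following Python sketch builds unigram and bigram counts for a question and selects the label whose binary scorer returns the highest value. The function names, the regular-expression tokenizer, and the feature-name prefixes are assumptions made for the example; the actual system uses ClearTK feature extractors and SVM-Light models, which are not reproduced here.

```python
import re
from collections import Counter


def question_features(question):
    """Bag-of-words and bigram counts for a question (simple lowercase tokenizer)."""
    tokens = re.findall(r"[a-z0-9]+", question.lower())
    features = Counter(f"w={t}" for t in tokens)
    features.update(f"b={a}_{b}" for a, b in zip(tokens, tokens[1:]))
    return features


def one_vs_rest_predict(features, scorers):
    """Pick the label whose binary scorer gives the highest score.

    `scorers` maps each candidate label to a function from features to a
    real-valued score; in practice each would be a trained binary classifier.
    """
    return max(scorers, key=lambda label: scorers[label](features))
```

For example, with two hand-written stand-in scorers, one keyed on the token “diagnose” and one on the token “treat”, the question “How do you diagnose reactive hypoglycemia?” is assigned the label of the first scorer.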

Question Answering Systems

Medpedia Search

Like many other Wiki sites, Medpedia has built-in full-text search capabilities. Medpedia is based on the MediaWiki content engine and utilizes the MediaWiki search engine, which is based on MySQL’s underlying full-text search index. Search in Medpedia is done at the article (document) level and is therefore directly comparable to the MiPACQ document-level baseline system described in the next section.

The Medpedia search data was obtained using HTTP from the live Medpedia server on November 24, 2010 by running the default Medpedia search engine on the unmodified clinical questions and collecting the top 50 results (or fewer, when Medpedia did not return at least 50 results). These articles were filtered to include just those that were present in the original April 26th download. The results were recorded in a database for later analysis.

Document-level baselines were developed just as a means of ensuring that our initial article filter provided reasonable results. The emphasis of this work is on performing paragraph-level question answering.

MiPACQ Document-Level Baseline

Because of the deficiencies in Medpedia’s default search, we decided to create a baseline information retrieval system that operates at the document level. The document-level baseline is based on the Lucene full-text search index, which by default (and in our application) uses the vector space model 27 with normalized tf-idf parameters to rank the documents being queried.

The document-level index was built from Medpedia articles as represented in the MiPACQ database. The entire document is indexed as a single text string. Each indexed document also includes the article title and a unique document ID. There was no specialized processing performed to improve results based on any specific structure or characteristics of Medpedia; hence, the final QA results should transfer without significant differences to other medical resources with similar content.

As is common with information retrieval systems, the MiPACQ document-level baseline makes extensive use of stemming and stop-listing to improve both the precision and recall of the results. The question and document were processed with the Snowball 28 stemmer. Stop-listing was initially limited to Lucene’s StandardAnalyzer, but we were later able to improve performance by switching to a domain-specific stop list generated by removing medical terms from the 50 most frequent words in the Medpedia article corpus. The final stop list contained the following words: to, they, but, other, can, for, no, by, been, has, who, was, of, were, are, if, when, on, do, these, be, may, with, is, it, such, how, or, a, at, into, as, you, the, should, in, and, not, that, which, an, then, there, will, their, this.
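The stop-list construction described above (take the most frequent corpus words, then remove medical terms) can be sketched as follows. The function name `build_stop_list`, the whitespace tokenization, and the `medical_terms` input are assumptions for illustration; the source does not say how medical terms were identified.

```python
from collections import Counter


def build_stop_list(documents, medical_terms, top_n=50):
    """Return the top_n most frequent words in the corpus, minus medical terms.

    documents     -- iterable of article texts
    medical_terms -- set of words to keep searchable (hypothetical input)
    """
    counts = Counter(
        token for doc in documents for token in doc.lower().split()
    )
    frequent = [word for word, _ in counts.most_common(top_n)]
    return [word for word in frequent if word not in medical_terms]
```

Removing the medical terms before stop-listing keeps frequent but informative words (for example, a word such as "patient" in a medical corpus) available for matching.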

To improve recall, the document-level baseline uses OR as the default conjunction operator. Although OR has a detrimental effect on precision, the fact that the input to the system is full questions means that a substantial fraction of the test questions return no results whatsoever with AND as the default operator, even with stemming and stop-listing.

As with the Medpedia search baseline, the MiPACQ document-level system is configured to return only the top 50 results. Results beyond this point have minimal effect on the evaluation metrics and in practice are rarely reviewed by a user.

MiPACQ Paragraph-Level Baseline

Similar to the document-level baseline system, the MiPACQ paragraph-level baseline system is a traditional information retrieval system based on Lucene. The paragraph-level baseline system uses two indices: the document index described in the previous section and a paragraph index, which uses the same Snowball stemming and stop list.

Question answering in the paragraph-level baseline system requires two steps. First, the top n (currently, 20) documents are retrieved using the document-level index. The paragraph index is then queried, but only paragraphs in the previous document set are considered. The final paragraph scores are the product of the document-level scores and the paragraph scores. This composite score was found to be more reliable in training data experiments than simply querying the paragraph index directly because it is more sensitive to context from the document. To produce the final answer list, the paragraph-level system ranks the candidate answer paragraphs by composite score in descending order.
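The two-step retrieval and composite scoring can be sketched as below. The dictionaries stand in for the results of the two Lucene queries; the function and parameter names are hypothetical, and only the product-of-scores rule and the top-n document filter come from the description above.

```python
def rank_paragraphs(doc_scores, para_scores, para_to_doc, top_docs=20):
    """Rank paragraphs by (document score x paragraph score).

    doc_scores  -- {doc_id: score} from the document-level index
    para_scores -- {para_id: score} from the paragraph-level index
    para_to_doc -- {para_id: doc_id} linking each paragraph to its article
    """
    # Step 1: keep only the top-scoring documents.
    kept = set(sorted(doc_scores, key=doc_scores.get, reverse=True)[:top_docs])

    # Step 2: score paragraphs from those documents with the composite score.
    composite = {
        para_id: doc_scores[para_to_doc[para_id]] * score
        for para_id, score in para_scores.items()
        if para_to_doc[para_id] in kept
    }

    # Final answer list: descending composite score.
    return sorted(composite.items(), key=lambda item: item[1], reverse=True)
```

Note that a paragraph with a high paragraph-level score is still excluded when its parent document falls outside the top n, which is the context sensitivity the composite score is intended to provide.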

As with the document-level baseline system, the paragraph-level baseline makes no use of the annotation pipeline. Answer ranking is dependent entirely on the overlap between the set of question tokens and the set of tokens in the candidate answer paragraph and document.

MiPACQ Rule-Based Re-ranking

The rule-based re-ranking system first uses the paragraph-level baseline system to retrieve the top n (currently 100) candidate answer paragraphs. The question and the top answer paragraphs are then annotated using the MiPACQ annotation pipeline. Candidate answer paragraphs are then re-ranked (re-ordered) using a fixed formula based, in part, on the semantic annotations produced by the annotation pipeline. This method is used as a baseline to demonstrate performance based on a few simple informative features.

There are three components to the rule-based scoring function as described in Equation 1. The first component, S, is the original score from the paragraph-level baseline system (which is itself the product of document- and paragraph-level scores from Lucene). This score is then multiplied by the sum of two other components: a bag-of-words component and a UMLS entity component.

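Equation 1: Rule Based Scoring Function

$$\mathrm{Score} = S \times \left( \frac{|W_Q \cap W_A| + 1}{|W_Q| + 1} + \frac{|A_Q \cap A_A| + 1}{|A_Q| + 1} \right)$$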

The UMLS entity component compares the UMLS entity types in the question and answer paragraph. The number of matching UMLS features (AQ ∩ AA) is divided by the number of UMLS entity annotations found in the question (AQ). Add-one smoothing is used to prevent division by zero and scores of zero. For questions with no UMLS entities (and by extension no possible UMLS entity intersections), the smoothing results in a UMLS entity component of 1 for every candidate answer paragraph.

The bag-of-words component is structured similarly to the UMLS component, but it considers matches between individual word tokens (WQ ∩ WA) rather than UMLS entities. Although the Lucene scoring algorithm used by the baseline system already examines word overlap between the question and answer, based on our experience with the training set we determined that adding the bag-of-words component (with uniform versus tf-idf term weighting, no stop words, and no stemming) improved overall QA performance slightly.
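The scoring function described in this section can be sketched in Python as follows. This is a reading of the prose rather than the authors' code: we assume add-one smoothing is applied to both the numerator and the denominator of each component (which yields the stated value of 1 when the question has no UMLS entities), and we assume matches are counted over sets of distinct items. The function names are hypothetical.

```python
def overlap_component(question_items, answer_items):
    """Smoothed fraction of question items that also appear in the answer."""
    q, a = set(question_items), set(answer_items)
    return (len(q & a) + 1) / (len(q) + 1)


def rule_based_score(baseline_score, q_tokens, a_tokens, q_entities, a_entities):
    """Baseline score S multiplied by (bag-of-words + UMLS entity) components."""
    bow = overlap_component(q_tokens, a_tokens)
    umls = overlap_component(q_entities, a_entities)
    return baseline_score * (bow + umls)
```

For a question with three tokens, two of which appear in the candidate paragraph, and no UMLS entities, the components are 0.75 and 1.0, so a baseline score of 2.0 becomes 3.5.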

MiPACQ ML-Based Re-ranking

Increasingly, information retrieval and question answering systems are turning to machine-learning based ranking systems (sometimes called “learning-to-rank”). The most common approaches are point-wise methods (which use supervised learning to predict the absolute scores of individual results), pair-wise methods (which attempt to classify pairs of documents as correctly or incorrectly ordered), and list-wise methods (which consider the entire rank list as a whole) 29. However, all of these methods are excessive for question answering tasks because QA systems prioritize ranking at least one answer highly rather than ranking all of the documents in the correct order. As a result, our ML based re-ranking system uses a method similar to that described by Moschitti and Quarteroni 30 in which question/answer pairs are classified as “valid” or “invalid”.

As with the rule-based QA system, the ML-based system first uses the paragraph baseline system to retrieve the top 100 paragraphs for the question, then the question and each answer paragraph are run through the annotation system. The annotated questions and answers are then used to generate feature vectors for training and classification.

For each UMLS entity in the question and each UMLS entity in the candidate answer paragraph, the system generates a feature in the vector equal to the frequency of that entity (typically 0 or 1, although some questions and answers contain more than one UMLS entity of the same type). The system also generates features for the token frequencies in the question and answer, for the original baseline score and for the expected answer type.

For each question/paragraph pair in the training dataset (for the top 100 paragraphs, as noted above, for each question), a training instance is generated using the computed feature vector and a binary value indicating whether the question/paragraph pair is valid (the gold standard annotation indicates that the paragraph answers the question). The SVM-Light training system is then used to create a corresponding binary classifier.
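A minimal sketch of how such training instances might be assembled is shown below. The feature-name prefixes, the function names, and the +1/-1 label encoding are assumptions for illustration; the actual system builds its vectors through ClearTK.

```python
from collections import Counter


def pair_features(q_tokens, a_tokens, q_entities, a_entities,
                  baseline_score, expected_answer_type):
    """Build a sparse feature dict for one question/paragraph pair."""
    features = Counter()
    features.update(f"q_ent={e}" for e in q_entities)   # UMLS entity frequencies
    features.update(f"a_ent={e}" for e in a_entities)
    features.update(f"q_tok={t}" for t in q_tokens)     # token frequencies
    features.update(f"a_tok={t}" for t in a_tokens)
    features["baseline_score"] = baseline_score         # original IR score
    features[f"expected_type={expected_answer_type}"] = 1
    return dict(features)


def training_instance(features, paragraph_id, gold_answer_ids):
    """Attach a binary label: +1 if the gold standard marks the paragraph as an answer."""
    label = 1 if paragraph_id in gold_answer_ids else -1
    return label, features
```

Keeping question-side and answer-side features under separate prefixes lets the classifier weight the same token or entity differently depending on where it occurs.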

During the QA process, the re-ranking system multiplies the baseline scores by the answer probability from the answer classifier. This probability is obtained by fitting a sigmoid function to the classifier output (over the training dataset) using an improved variant of Platt calibration 31 as described by Lin, Lin, and Weng 32. In experiments with the training dataset, we found that this produced better results than ranking all paragraphs classified as “answer” above those classified as “not answer”.
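The re-ranking step can be illustrated with the sketch below, which applies a Platt-style sigmoid with already-fitted parameters and multiplies the result by the baseline score. Fitting the parameters `a` and `b` (the part addressed by Lin, Lin, and Weng) is not shown; the values would come from the training data, and the function names are hypothetical.

```python
import math


def platt_probability(decision_value, a, b):
    """Map a raw classifier output f to a probability 1 / (1 + exp(a*f + b))."""
    z = a * decision_value + b
    # Evaluate in a numerically stable form depending on the sign of z.
    if z >= 0:
        e = math.exp(-z)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(z))


def rerank(candidates, a, b):
    """Re-rank candidates by baseline score x calibrated answer probability.

    candidates -- list of (paragraph_id, baseline_score, decision_value)
    """
    scored = [
        (pid, base * platt_probability(value, a, b))
        for pid, base, value in candidates
    ]
    return sorted(scored, key=lambda item: item[1], reverse=True)
```

With a negative `a`, larger classifier outputs map to higher probabilities, so a paragraph with a modest baseline score but a confident “valid” classification can overtake one that ranked higher on term overlap alone.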

Results

Evaluation metrics

Equation 2: Mean Reciprocal Rank

$$\mathrm{MRR} = \frac{1}{|Q|} \sum_{i=1}^{|Q|} \frac{1}{\mathrm{top\_rank}(Q_i)}$$

The three evaluation metrics we selected were average Precision at One (P@1), mean reciprocal rank (MRR), and area under the precision/recall curve (AUC). Average Precision at One is the fraction of the questions where a correct answer was returned as the first result. The formula for MRR is presented in Equation 2, where Q represents the set of questions being evaluated, and top_rank(Qi) represents the index of the most highly ranked answer for the question being evaluated.

Precision at One and MRR emphasize ranking at least one answer highly, whereas obtaining high AUC depends on the system highly ranking all correct answers. The AUC values were generated by finding the area under a step-function interpolation of the precision/recall curve. In the interpolation, the precision at a given recall is the maximum precision with the same or better recall. All of the presented results are averages across all of the evaluation set questions.
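The three per-question metrics can be sketched as follows; averaging each over the evaluation questions gives the reported values. Two points are assumptions rather than statements from the text: a question with no retrieved answer contributes a reciprocal rank of 0, and recall is computed against a caller-supplied count of gold-standard answer paragraphs. Function names are hypothetical.

```python
def precision_at_one(ranked_labels):
    """1.0 if the first result is a correct answer, else 0.0."""
    return 1.0 if ranked_labels and ranked_labels[0] else 0.0


def reciprocal_rank(ranked_labels):
    """1 / rank of the highest-ranked correct answer (0.0 if none was returned)."""
    for rank, is_answer in enumerate(ranked_labels, start=1):
        if is_answer:
            return 1.0 / rank
    return 0.0


def interpolated_auc(ranked_labels, total_relevant):
    """Area under the step-interpolated precision/recall curve."""
    points = []                       # (recall, precision) at each correct answer
    hits = 0
    for rank, is_answer in enumerate(ranked_labels, start=1):
        if is_answer:
            hits += 1
            points.append((hits / total_relevant, hits / rank))

    area, previous_recall = 0.0, 0.0
    for i, (recall, _) in enumerate(points):
        # Interpolated precision: best precision at this recall or higher.
        best = max(precision for _, precision in points[i:])
        area += (recall - previous_recall) * best
        previous_recall = recall
    return area
```

For a ranked list whose second and fourth results are the only two correct answers, Precision at One is 0, the reciprocal rank is 0.5, and the interpolated area is 0.5.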

Document Level

The document-level systems (Medpedia search and the MiPACQ document-level baseline system) were evaluated against the 81 questions in the evaluation set. For the purpose of this evaluation, documents containing at least one paragraph annotated “answer” were considered valid answer documents.

The document-level results are presented in Table 1. Given that both the MiPACQ and Medpedia systems are based on shallow textual features, the performance difference is dramatic (Precision at One increased by 302%). Despite the fact that Lucene is not intended to be a question answering system, the document-level results are acceptable as a first step in the question answering process. This is in contrast to the Medpedia search system: while it might be acceptable as a search system, as a question answering system it fails to locate relevant documents at an acceptable rate.

Paragraph Level

The paragraph-level QA system results are presented in Table 2. These results were generated by running the evaluation system against the same question test set as in the document-level evaluation.

Table 2:

Performance of Paragraph Level QA Systems

| System | P@1 | MRR | AUC |
|---|---|---|---|
| Baseline | 0.074 | 0.140 | 0.105 |
| Rule-based Re-ranking | 0.136 | 0.212 | 0.149 |
| ML-based Re-ranking | 0.173 | 0.266 | 0.141 |

Several interesting facts emerge from the data. Although the document-level and paragraph-level results are not directly comparable, the paragraph-level results indicate (as would be expected) that finding the answer within the document is considerably more difficult than simply finding the relevant document. Whereas the Lucene-based MiPACQ document-level baseline system might be considered acceptable as a general-purpose QA system (an “answer” result was ranked first for 49% of the questions), the paragraph-level comparisons show that traditional information retrieval systems are inadequate for the more fine-grained paragraph level question answering task (only 7% of the questions resulted in an answer paragraph being returned as the first result).

Additionally, we can see that even the simple rule-based re-ranking algorithm using UMLS and BOW features provides a substantial performance boost. The rule-based system returned a correct answer in the first position 13.6% of the time (an 84% improvement relative to the baseline system). Because the rule-based system considers only UMLS entity matches and word matches between the question and answer, we can infer that these matches are strong predictors of correct answers. Additionally, despite the fact that the cTAKES system was not trained on the Medpedia corpus (at the time of this writing, we had not yet created gold-standard syntactic or semantic annotations for the Medpedia paragraphs) the generated UMLS entities were sufficiently valid to result in a significant performance improvement, providing additional evidence that the system should work well with new medical resources.

The ML-based re-ranking results showed further improvement over the rule-based system. The ML based system did significantly better at ranking answers in the first position (P@1): a correct answer was ranked highest 17.3% of the time (134% better than the IR baseline system and 27% better than the rule-based system). ML-based re-ranking also showed improvement on MRR compared to both the IR baseline (90% better) and the rule-based system (26% better). AUC showed significant improvement relative to the IR baseline, but was slightly lower than in the rule-based approach.

For 21 questions (26% of the evaluation set) the paragraph-level baseline system returned no answer paragraphs in the top 100. The re-ranking systems (both rule and ML based) cannot improve performance on these questions because paragraphs outside of the top 100 are not currently considered for re-ranking. We expect that adding keyword expansion will help to mitigate this problem by improving the baseline IR system’s recall. The accompanying precision loss should be mitigated by the filtering effect of the ML based re-ranking.

Future Work

To improve document/paragraph recall, one strategy is term expansion. Each question is expanded into a series of queries with different versions of key terms. A standard information retrieval system is used to query the corpus with each query, and then the ranked results are combined into a single result set. We plan to implement term expansion using the LexEVS 33 terminology server and the UMLS entity features provided by the cTAKES annotator.

Moschitti and Quarteroni demonstrated that semantic role labeling (SRL) of questions and answers with PropBank-style predicate argument structures 34 can provide significant improvements in question answering performance 30. Predicate-argument annotations describe the semantic meanings of predicates and identify their arguments and argument roles. To investigate the effectiveness of adding predicate argument structures, we created a custom SRL system trained on a mixture of the Clinques corpus and PropBank data 34. Evaluation of our SRL system with the CoNLL-2009 shared task development dataset 35 indicates that our system is competitive with other state-of-the-art SRL systems.

Features extracted from UMLS semantic relations may also improve the answer re-ranking and we are completing a system to perform this classification. Full evaluation of the system will be completed once we have integrated the predicate argument structures and UMLS relations into the re-ranking systems.

MiPACQ will be released under an Apache v2 open-source license in late 2011. Associated lexical resources including gold standard annotations will also be made available to the research community.

Conclusion

The increased desire to incorporate medical databases into the clinical practice of medicine has resulted in increased demand for clinical question answering systems. Traditional information retrieval systems have proven inadequate for the task. While the baseline systems performed acceptably at retrieving documents pertinent to the question, physicians do not have time to scour an entire document to find the answer, and performance was substantially worse when we applied IR technologies to obtain answers at the paragraph level.

This is the first evaluation of MiPACQ. It demonstrates that the application of NLP and machine learning to clinical question answering can provide substantial performance improvements. By annotating both questions and candidate answers with a variety of syntactic and semantic features and incorporating both existing and new NLP systems into a comprehensive annotation and re-ranking pipeline, we were able to improve question answering performance significantly (an improvement of 134% in Precision at One relative to the baseline IR system). This is particularly significant considering that we have not yet incorporated query re-formulation (keyword expansion). When MiPACQ is run against other corpora, the extent to which the re-ranking is boosted by the current machine-learning classifiers remains to be seen. And while the expected answer type classifier is dependent upon the training data, the classifier is only one component of the re-ranking. If needed, the classifiers can be retrained easily due to our use of the ClearTK framework. Our approach demonstrates the effectiveness of NLP for clinical question answering tasks as well as the utility of integrating multiple layers of annotation with machine-learning based re-ranking systems.

Table 1: Performance of Document Level QA Systems

| System | P@1 | MRR | AUC |
|---|---|---|---|
| Medpedia | 0.123 | 0.134 | 0.062 |
| MiPACQ | 0.494 | 0.593 | 0.530 |
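
As an illustration of how the metrics in Table 1 are usually defined, the following minimal sketch computes Precision at One (P@1) and mean reciprocal rank (MRR) from per-question ranks, and derives the relative improvement of MiPACQ over the Medpedia baseline from the Table 1 values. The function names and the toy rank list are hypothetical and are not part of the MiPACQ code base; the sketch assumes each question is summarized by the rank of its first correct result, with `None` when no correct result was returned. The relative improvements computed here use only the document-level figures in Table 1.

```python
from typing import List, Optional


def precision_at_one(first_correct_ranks: List[Optional[int]]) -> float:
    """Fraction of questions whose top-ranked result is correct."""
    hits = sum(1 for rank in first_correct_ranks if rank == 1)
    return hits / len(first_correct_ranks)


def mean_reciprocal_rank(first_correct_ranks: List[Optional[int]]) -> float:
    """Average of 1/rank of the first correct result (0 if none was found)."""
    total = sum(1.0 / rank for rank in first_correct_ranks if rank is not None)
    return total / len(first_correct_ranks)


def relative_improvement(baseline: float, system: float) -> float:
    """Percentage improvement of `system` over `baseline`."""
    return 100.0 * (system - baseline) / baseline


# Hypothetical toy data: rank of the first correct answer for four questions.
toy_ranks = [1, 3, None, 2]
print(f"toy P@1 = {precision_at_one(toy_ranks):.3f}")
print(f"toy MRR = {mean_reciprocal_rank(toy_ranks):.3f}")

# Document-level scores copied from Table 1: (Medpedia baseline, MiPACQ).
table_1 = {"P@1": (0.123, 0.494), "MRR": (0.134, 0.593), "AUC": (0.062, 0.530)}
for metric, (baseline, mipacq) in table_1.items():
    gain = relative_improvement(baseline, mipacq)
    print(f"{metric}: {gain:.1f}% improvement over baseline")
```

Reporting relative rather than absolute improvement makes gains comparable across metrics with different scales, but it is sensitive to small baseline values, as the AUC row shows.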

Acknowledgments

The project described was supported by award number NLM RC1LM010608. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NLM/NIH.

References

- 1. Sackett DL, Rosenberg WMC, Gray JAM, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn’t. BMJ. 1996 Jan;312(7023):71–72. doi: 10.1136/bmj.312.7023.71.

- 2. Zweigenbaum P. Question Answering in Biomedicine. Proceedings of EACL 03 Workshop: Natural Language Processing for Question Answering; Budapest: 2003. pp. 1–4.

- 3. Nielsen RD, Masanz J, Ogren P, et al. An Architecture for Complex Clinical Question Answering. The 1st ACM International Health Informatics Symposium; 2010. pp. 395–399.

- 4. Ely J, Osheroff J, Chambliss M, Ebell M, Rosenbaum M. Answering Physicians’ Clinical Questions: Obstacles and Potential Solutions. J Am Med Inform Assoc. 2005 Mar-Apr;12(2):217–224. doi: 10.1197/jamia.M1608.

- 5. Medpedia. Available at: http://www.medpedia.com/. Last accessed: May 16, 2011.

- 6. Apache Lucene. Available at: http://lucene.apache.org/java/docs/index.html. Last accessed: May 8, 2011.

- 7. Schwartz DG, Abbas J, Krause R, Moscati R, Halpern S. Are Internet Searches a Reliable Source of Information for Answering Residents’ Clinical Questions in the Emergency Room. Proceedings of the 1st ACM International Health Informatics Symposium; New York: 2010. pp. 391–394.

- 8. Athenikos SJ, Han H. Biomedical question answering: a survey. Comput Methods Programs Biomed. 2010 Jul;99(1):1–24. doi: 10.1016/j.cmpb.2009.10.003.

- 9. Yu H, Lee M, Kaufman D, et al. Development, implementation, and a cognitive evaluation of a definitional question answering system for physicians. J Biomed Inform. 2007 Jun;40(3):236–251. doi: 10.1016/j.jbi.2007.03.002.

- 10. Lindberg D, Humphreys B, McCray A. The Unified Medical Language System. Meth Inform Med. 1993 Aug;32(4):281–291. doi: 10.1055/s-0038-1634945.

- 11. Demner-Fushman D, Lin J. Knowledge Extraction for Clinical Question Answering: Preliminary Results. AAAI-05 Workshop on Question Answering; Pittsburgh: 2005. pp. 1–9.

- 12. Demner-Fushman D, Lin J. Answering Clinical Questions with Knowledge-Based and Statistical Techniques. Computational Linguistics. 2007;33(1).

- 13. Wang W, Hu D, Feng M, Liu W. Automatic Clinical Question Answering Based on UMLS Relations. SKG ’07 Proceedings of the Third International Conference on Semantics, Knowledge and Grid; Xi’an: 2007. pp. 495–498.

- 14. Athenikos SJ, Han H, Brooks AD. A framework of a logic-based question-answering system for the medical domain (LOQAS-Med). Proceedings of the 2009 ACM symposium on Applied Computing; New York: 2009. pp. 847–851.

- 15. Yu H, Cao YG. Automatically extracting information needs from ad-hoc clinical questions. American Medical Informatics Association (AMIA) Fall Symposium; San Francisco: 2008. pp. 96–100.

- 16. Ogren PV, Wetzler PG, Bethard SJ. ClearTK: A UIMA Toolkit for Statistical Natural Language Processing. LREC; Marrakech: 2008. pp. 865–869.

- 17. Ferrucci D, Lally A. UIMA: an architectural approach to unstructured information processing in the corporate research environment. Natural Language Engineering. 2004 Sep;10(3–4):327–348.

- 18. CLEAR (Computational Language and EducAtion Research). Available at: http://clear.colorado.edu/start/index.html. Last accessed: May 10, 2011.

- 19. Rethlefsen ML. Medpedia. J Med Libr Assoc. 2009;97(4). doi: 10.3163/1536-5050.97.4.008.

- 20. Savova GK, Masanz JJ, Ogren PV, et al. Mayo clinical Text Analysis and Knowledge Extraction System (cTAKES): architecture, component evaluation and applications. J Am Med Inform Assoc. 2010;17:568–574. doi: 10.1136/jamia.2009.001560.

- 21. Clinical Text Analysis and Knowledge Extraction System User Guide. Available at: http://ohnlp.sourceforge.net/cTAKES/#_document_preprocessor. Last accessed: May 10, 2011.

- 22. Apache OpenNLP. Available at: http://incubator.apache.org/opennlp/. Last accessed: May 12, 2011.

- 23. Lexical Tools. Available at: http://lexsrv2.nlm.nih.gov/LexSysGroup/Projects/lvg/current/web/index.html.

- 24. Marcus MP, Marcinkiewicz MA, Santorini B. Building a Large Annotated Corpus of English: The Penn Treebank. Computational Linguistics. 1993 Jun;19(2):313–330.

- 25. Choi JD, Nicolov N. K-best, Locally Pruned, Transition-based Dependency Parsing Using Robust Risk Minimization. Collections of Recent Advances in Natural Language Processing. 5:205–216.

- 26. Negex. Available at: http://code.google.com/p/negex/. Last accessed: May 16, 2011.

- 27. Manning CD, Raghavan P, Schütze H. Introduction to Information Retrieval. Cambridge University Press; 2008. pp. 118–125.

- 28. Porter M. The Porter Stemming Algorithm. Available at: http://snowball.tartarus.org/algorithms/porter/stemmer.html. Last accessed: March 6, 2011.

- 29. Liu TY. Learning to Rank for Information Retrieval. Foundations and Trends in Information Retrieval. 2009;3(3):225–331.

- 30. Moschitti A, Quarteroni S. Linguistic kernels for answer re-ranking in question answering systems. Information Processing & Management. 2010. In press.

- 31. Platt JC. Probabilistic Outputs for Support Vector Machines and Comparisons to Regularized Likelihood Methods. Advances in Large Margin Classifiers. MIT Press; 1999. pp. 61–74.

- 32. Lin HT, Lin CJ, Weng RC. A note on Platt’s probabilistic outputs for support vector machines. Machine Learning. 2007;68(3):267–276.

- 33. LexBig and LexEVS. Available at: https://cabig-kc.nci.nih.gov/Vocab/KC/index.php/LexBig_and_LexEVS. Last accessed: May 16, 2011.

- 34. Kingsbury P, Palmer M. From TreeBank to PropBank. Language Resources and Evaluation; Las Palmas: 2002. pp. 29–31.

- 35. Hajič J, Ciaramita M, Johansson R, et al. The CoNLL-2009 Shared Task: Syntactic and Semantic Dependencies in Multiple Languages. Proceedings of the 13th Conference on Computational Natural Language Learning (CoNLL-2009); Boulder: 2009. pp. 1–18.